Abstract

The issue of food waste is more relevant than ever for both emerging and developed economies. Information technologies can help reduce this problem, and our research presents a viable prototype that uses on-cloud image classification and dedicated OCR techniques. The result of our study is a low-cost, high-performance mobile application prototype that paves the way for further research. We used advanced application-integration concepts, including mobile architectures, Firebase machine learning components, and OCR techniques, to highlight how close food products are to their expiration date. When no printed date is available, the system computes an indicative shelf-life estimate from conservative category priors. These estimates are not safety judgments and do not replace manufacturer date labels or national food-safety guidance. These results constitute an original contribution to the field and have the potential to improve the economic efficiency of warehouses and food stores. The implications of our study are technical, economic, and social.

1. Introduction

Food waste remains a significant sustainability challenge in Europe, affecting not only the environment but also society and the economy. According to figures published by Eurostat in 2024 for the reference year 2022 [1], food waste across the European Union’s supply chain reached approximately 59.2 million tonnes. This equates to roughly 132 kg per inhabitant, a figure that underscores the scale of the problem. Households are the largest contributor, accounting for 54% of the total, or approximately 72 kg of discarded food per person. Addressing the issue can therefore start at the individual level, where people can make meaningful changes to their daily consumption and storage habits.

In response to growing concerns about food waste, several digital solutions have emerged to promote more sustainable consumption. Too Good To Go [2] and Bonapp by Munch [3] are marketplace-driven platforms that partner with restaurants, bakeries, and grocery stores to offer significant discounts on items nearing their expiration date, encouraging last-minute rescue purchases. In contrast, Olio promotes a peer-to-peer sharing model in which individuals and local businesses donate surplus food to nearby users at no cost, strengthening community-based redistribution [4]. NoWaste takes a different approach, focusing on household-level inventory tracking: users register food items and automatically receive reminders before they expire [5].

While these tools contribute meaningfully to awareness and behavioral change, many of them still depend heavily on manual input or partner participation. More importantly, they primarily concentrate on prelabelled expiration dates without the ability to visually recognize the food item itself or to predict a consumption window when the expiration label is missing or unreadable. As a result, there is an ongoing need for an integrated, consumer-friendly solution that can automatically identify food items, extract any visible dates, and estimate a plausible consumption window when labels are not available.

The literature on food waste management is extensive, and previous studies have focused on consumer education [6], laws and regulations [7], environmental impact [8], political aspects [9], specific drivers and barriers [10], integrated strategies [11], and economic consequences [12].

Many modern food management applications use optical character recognition (OCR) to read expiration dates printed on product packaging. While this approach is practical for clearly printed labels, it often falls short in real-world scenarios where dates are worn, smudged, faded, or partially missing due to poor print quality or handling [13]. Certain food items, such as fresh fruit, vegetables, or baked goods, often carry no printed date, particularly when sold loose or intended for quick consumption. In these cases, the system must rely on visual recognition of the product rather than on text recognition [14].

Current technology remains fragmented: mobile OCR can extract expiration dates only when they are intact, while lightweight CNNs (Convolutional Neural Networks) can recognize unlabeled foods [15]. However, very few consumer-level systems combine these two capabilities to estimate a plausible consumption period when the date is missing or unreadable. Although platforms such as Firebase ML Kit already integrate on-cloud text recognition and image-labeling APIs, to our knowledge neither academic prototypes nor commercial applications have evaluated a complete pipeline that identifies the product, verifies any detected dates, and infers a consumption window in real time before sending alerts to a cloud-synced inventory. Our study seeks to close this gap by integrating these components into a streamlined, smartphone-compatible workflow.

This study aims to design and empirically evaluate an Android application that reduces the manual effort associated with household food tracking. The proposed system automatically recognizes products through lightweight, on-cloud image classification, extracts any visible expiration dates using the OCR engine in Firebase ML Kit, and estimates a reasonable shelf life in cases where no printed date is available, relying on category-specific patterns stored in a local knowledge base. It also sends real-time alerts while synchronizing inventory with the cloud via Firebase Firestore and Cloud Messaging. The primary objective is to provide a smartphone-friendly workflow that delivers instant feedback, minimizes user input, and could potentially support household food-waste reduction by facilitating awareness and timely consumption. The contribution of this paper is system-level rather than algorithmic. We introduce an integrated mobile workflow that combines cloud-based OCR, cloud-based image labeling, and a rule-based shelf-life fallback to reduce user input while preserving real-time responsiveness. When the system predicts an expiration date in the absence of a readable label, the result is intended as an orientation value. The actual safe consumption window depends on user storage conditions, temperature control, package integrity, and brand-specific formulations. Because packaging practices and manufacturer standards vary widely, we do not claim that the predicted date is exact or universally applicable.

To evaluate the feasibility of our mobile solution, this study addresses five research questions:

- Question 1: How accurately can a lightweight, on-cloud image-classification model identify food items in natural, user-captured photos?

- Question 2: How effective is Firebase ML Kit at extracting expiration dates from packaging under diverse real-world conditions such as glare, curvature, and worn print?

- Question 3: How reliably can the system estimate a plausible shelf life when no printed date is detected, using the recognized product category as a proxy?

- Question 4: What is the end-to-end performance of the overall approach, including latency, resource usage, and perceived usability, on typical mid-range smartphones operating in real time?

- Question 5: To what extent can an automated solution maintain higher user engagement and show potential to encourage more sustainable food-consumption practices compared with manual or barcode-based tools?

The remainder of this article is structured as follows: Section 2 reviews related work and develops the research hypotheses; Section 3 explains the proposed methodology, including data collection, model design, and evaluation protocols; and Section 4 presents the empirical results, discusses them in relation to previous studies, highlights the technical contributions, and outlines potential social and economic implications. Finally, Section 5 summarizes the key findings, limitations, and directions for future research.

2. Related Work

Digitalization has emerged as an effective route for reducing household food waste, and smartphone applications, now the most accessible digital channel, lead individual-level interventions by enabling peer-to-peer sharing of surplus food, real-time discounts on near-expiration items, and on-device scanning of expiration dates for effortless inventory tracking [16].

2.1. Mobile Food-Waste Applications

In this context, this section reviews existing apps focused on household food waste and discusses the machine-learning techniques, such as on-device OCR, lightweight CNNs, and Firebase ML Kit APIs, that support a seamless, fully automated food-tracking process.

To contextualize the technological discussion, Table 1 synthesizes the defining characteristics of four mobile applications at the forefront of household-level food-waste reduction: Too Good To Go [2], Olio [4], Bonapp by Munch [3], and NoWaste [5]. The comparison distills each platform’s normative waste-reduction logic, main user-facing workflow, and enabling mobile technologies, illustrating both the diversity of current digital strategies and their common reliance on core smartphone capabilities that our OCR- and CNN-based solution intends to extend.

Table 1.

Comparative features of mobile applications for household-level food-waste reduction.

Each platform intervenes at a specific stage of the food-waste chain, relying on distinct smartphone capabilities such as geolocation with in-app payments, peer-to-peer messaging, or barcode and OCR scanning. The comparison highlights where current solutions are practical and where key functions remain undeveloped, especially automatic extraction of printed expiration dates and CNN-based recognition of unpackaged foods, emphasizing the need for the integrated approach proposed in this study.

In addition to third-party platforms, major supermarket chains across Europe have also embedded food waste reduction strategies into their proprietary mobile applications. Chains such as Lidl [20], Kaufland [21], Carrefour [22], and PENNY [23] use their apps to offer digital discount coupons, notify users about “last-minute” price reductions for products nearing expiration, and provide access to surplus items through automated markdown systems. In some cases, like Carrefour and PENNY, these efforts are strengthened through partnerships with specialized platforms such as Too Good To Go or Bonapp.eco, which distribute unsold food using real-time, location-based notifications [24,25]. These integrated approaches rely on mobile technologies, including barcode scanning, loyalty card usage, and inventory-linked pricing algorithms to improve stock rotation and reduce waste directly at the store level.

2.2. Technologies Used in Food-Waste Reduction Applications

Modern mobile applications that aim to reduce food waste leverage a combination of advanced technologies to automate inventory tracking and minimize spoilage. These apps commonly incorporate visual recognition and scanning tools so that users can log food items effortlessly by simply capturing images or scanning codes.

These technologies streamline the process of monitoring a household’s food inventory and provide timely alerts before perishable items spoil. By replacing tedious manual data entry with automated scanning, such apps help ensure that food is used on time, significantly reducing the likelihood of forgotten products going to waste [26].

Optical Character Recognition (OCR) technology is increasingly relevant in mobile applications aimed at reducing food waste because of its ability to automate the extraction of key information from product packaging, particularly expiration dates. By minimizing the need for manual data entry, OCR significantly reduces user effort while improving the accuracy and efficiency of food inventory tracking at the household level [27]. OCR is the process of translating rasterized text images into machine-readable strings through a multi-stage pipeline comprising image acquisition, pre-processing (e.g., binarization, noise removal, and skew correction), segmentation of individual glyphs, feature extraction, and final character classification [28].
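As an illustration of the noise-removal step in this pipeline, the JavaScript sketch below applies a 3×3 median filter to a grayscale image stored as an array of rows with intensities from 0 to 255. The function name `medianFilter3x3` and the array representation are assumptions for illustration; this is a generic textbook filter, not the pre-processing of Tesseract, ML Kit, or any other specific engine.

```javascript
// Generic 3x3 median filter for removing isolated specks (salt-and-pepper noise).
// Input: grayscale image as an array of rows, each row an array of 0..255 values.
// Border pixels use only the neighbors that fall inside the image.
function medianFilter3x3(img) {
  const h = img.length;
  const w = h > 0 ? img[0].length : 0;
  const out = [];
  for (let y = 0; y < h; y++) {
    const row = [];
    for (let x = 0; x < w; x++) {
      const neighborhood = [];
      for (let dy = -1; dy <= 1; dy++) {
        for (let dx = -1; dx <= 1; dx++) {
          const yy = y + dy;
          const xx = x + dx;
          if (yy >= 0 && yy < h && xx >= 0 && xx < w) neighborhood.push(img[yy][xx]);
        }
      }
      neighborhood.sort((a, b) => a - b);
      // For even-sized border windows, take the lower of the two middle values.
      row.push(neighborhood[Math.floor((neighborhood.length - 1) / 2)]);
    }
    out.push(row);
  }
  return out;
}
```

A median filter is a common choice at this stage because it removes isolated specks while preserving the sharp stroke edges that the later segmentation step depends on.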

Smartphone solutions integrate OCR engines such as Tesseract or Google ML Kit Text Recognition to scan product labels for expiration dates, a process that can quickly capture date information even from small or faint text and update a user’s pantry inventory with minimal effort [27].

Research prototypes have demonstrated very high accuracy for automated expiration-date reading: one study achieved over 99% recognition accuracy on beverage packages with a specialized OCR pipeline that combines image preprocessing and deep learning [29], and another reported about 97.8% accuracy by enhancing an OCR model with a convolutional neural network attention module [27]. Such results indicate that OCR, especially when combined with deep learning, can reliably detect expiration dates, at least under the imaging conditions evaluated in those studies.

A practical constraint is that OCR accuracy deteriorates when image quality is compromised by poor lighting, low contrast, or smudged characters, conditions that hinder reliable text segmentation and recognition [30]. Subsequent experimental work confirms that targeted pre-processing, such as adaptive binarization and contrast enhancement, can mitigate these errors, yet residual sensitivity remains, especially in field images captured on mobile phones [31]. Even so, embedding OCR in food-waste-reduction applications remains highly beneficial: the automated capture of expiration dates keeps household inventories up to date and triggers timely reminders that prompt users to consume items before they spoil, all without requiring manual data entry [32].
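Adaptive binarization can be sketched with a local-mean threshold: each pixel is compared with the mean of its own neighborhood instead of one global value, so a shadow across a curved package does not turn a whole region black. The JavaScript below is a minimal version under stated assumptions (grayscale array of rows, window radius `r`, offset `c`); the defaults are illustrative rather than tuned, and the function name is hypothetical. A summed-area table makes each local mean a constant-time lookup.

```javascript
// Local-mean (adaptive) binarization of a grayscale image (array of rows, values 0..255).
// A pixel becomes ink (0) when it is darker than its neighborhood mean minus `c`,
// otherwise background (255). Defaults for `r` and `c` are illustrative, not tuned.
function adaptiveMeanThreshold(img, r = 7, c = 10) {
  const h = img.length;
  const w = h > 0 ? img[0].length : 0;
  // Summed-area table with a zero row/column of padding: S[y][x] = sum of img[0..y-1][0..x-1].
  const S = Array.from({ length: h + 1 }, () => new Array(w + 1).fill(0));
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      S[y + 1][x + 1] = img[y][x] + S[y][x + 1] + S[y + 1][x] - S[y][x];
    }
  }
  const out = [];
  for (let y = 0; y < h; y++) {
    const y0 = Math.max(0, y - r);
    const y1 = Math.min(h - 1, y + r);
    const row = [];
    for (let x = 0; x < w; x++) {
      const x0 = Math.max(0, x - r);
      const x1 = Math.min(w - 1, x + r);
      // Window sum in O(1); windows are clipped at the image border.
      const sum = S[y1 + 1][x1 + 1] - S[y0][x1 + 1] - S[y1 + 1][x0] + S[y0][x0];
      const mean = sum / ((y1 - y0 + 1) * (x1 - x0 + 1));
      row.push(img[y][x] < mean - c ? 0 : 255);
    }
    out.push(row);
  }
  return out;
}
```

On a uniformly dark but blank region the output stays white, because each pixel equals its local mean; a global threshold set above that region's brightness would instead mark the whole shadowed area as ink.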

While OCR enables text-based recognition of expiration dates, it does not address the challenge of identifying unpackaged or label-free food items, such as fruits or leftovers. For this, visual classification using Convolutional Neural Networks (CNNs) offers a complementary solution, capable of recognizing food types directly from images [33].

A convolutional neural network (CNN) is a deep feed-forward architecture that processes an input image as a multi-channel tensor and iteratively transforms it through stacks of learned convolutions, nonlinear activations, and down-sampling operations, thereby producing hierarchical feature maps that progress from local edge detectors to high-level object representations [34]. Recent evaluations have shown that mobile-optimized CNNs achieve top-1 accuracies above 90% on fine-grained food recognition benchmarks, even under uncontrolled lighting and background conditions [35].
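The convolution, nonlinearity, and down-sampling sequence described above can be sketched for a single-channel input in plain JavaScript. As in most deep-learning frameworks, the convolution is implemented as cross-correlation. The hand-picked 2×2 vertical-edge kernel stands in for learned weights and is an assumption for illustration; real mobile CNNs stack many multi-channel layers of this kind.

```javascript
// "Valid" 2D convolution (cross-correlation) of a single-channel input with one kernel.
function conv2dValid(input, kernel, bias = 0) {
  const kh = kernel.length;
  const kw = kernel[0].length;
  const oh = input.length - kh + 1;
  const ow = input[0].length - kw + 1;
  const out = [];
  for (let y = 0; y < oh; y++) {
    const row = [];
    for (let x = 0; x < ow; x++) {
      let acc = bias;
      for (let i = 0; i < kh; i++) {
        for (let j = 0; j < kw; j++) acc += input[y + i][x + j] * kernel[i][j];
      }
      row.push(acc);
    }
    out.push(row);
  }
  return out;
}

// Element-wise ReLU nonlinearity.
const relu = (fm) => fm.map((row) => row.map((v) => Math.max(0, v)));

// Non-overlapping 2x2 max-pooling (stride 2); a trailing odd row/column is dropped.
function maxPool2x2(fm) {
  const out = [];
  for (let y = 0; y + 1 < fm.length; y += 2) {
    const row = [];
    for (let x = 0; x + 1 < fm[0].length; x += 2) {
      row.push(Math.max(fm[y][x], fm[y][x + 1], fm[y + 1][x], fm[y + 1][x + 1]));
    }
    out.push(row);
  }
  return out;
}
```

On a patch whose left columns are dark and right columns bright, the kernel responds only along the boundary, and pooling keeps that response while halving the spatial resolution, which is the local-to-global progression described in the text.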

These technologies, OCR for text extraction and CNNs for image classification, now execute efficiently on smartphones thanks to hardware acceleration and compact model architectures. Despite these advances, developers often struggle with fragmented toolchains and steep learning curves when they attempt to combine multiple vision modules in production.

Firebase ML Kit provides a unified framework that supports both OCR and CNN-based functionalities. Through its on-device and cloud modules, it enables real-time text recognition and image labeling for identifying items, all while optimizing performance for mobile environments. Firebase ML Kit is a cross-platform mobile SDK developed by Google that packages lightweight [36], pre-trained computer-vision and natural-language models together with a TensorFlow Lite deployment pipeline and remote-model-update mechanism, thereby enabling developers to embed deep-learning inference that runs offline with millisecond-level latency and minimal code in Android and iOS applications [37].

These capabilities can power key app features for managing household food inventories, monitoring expirations, and identifying unpackaged foods. Recent studies emphasize that such features (e.g., automatic expiration date reminders and inventory monitoring) are crucial for curbing household food waste [38].

Firebase ML Kit’s Text Recognition API enables real-time extraction of printed text from images directly on mobile devices, using a deep learning-based pipeline that includes image preprocessing, character segmentation, and language-specific decoding. Unlike traditional OCR engines, such as Tesseract, ML Kit is optimized for mobile environments and supports seamless model updates through Firebase, eliminating the need for complete application redeployment, making it more adaptable and resource-efficient [39].

Another relevant technology is Firebase ML Kit’s image labeling API, which enables on-device inference using a general-purpose model that recognizes over 400 entities, allowing applications to automatically classify a photograph, such as identifying food items like fruits or vegetables, without supplying additional context. This capability is especially beneficial for recognizing unpackaged items in real time [40]. Firebase also exposes a cloud image labeling variant (backed by Google Cloud Vision) with broader functionality: coverage of 10,000+ labels, per-label Knowledge Graph entity IDs for language-independent disambiguation, and a usage-based pricing model that includes a limited no-cost tier (first 1000 calls/month). This cloud path is suitable when higher label coverage or canonical entity identifiers are required, accepting the trade-offs of network latency and per-request cost [41].
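The practical value of confidence scores and entity IDs can be shown with a small selection routine. The JavaScript sketch below assumes label annotations shaped like Cloud Vision `EntityAnnotation` objects, using their `mid`, `description`, and `score` fields; the function name, the 0.7 threshold, and the optional allow-list of entity IDs are hypothetical choices rather than part of any documented pipeline.

```javascript
// Returns the most confident label at or above `minScore`. When `allowedMids` is given,
// only labels whose Knowledge Graph entity ID (mid) is in that set are considered.
// Threshold and allow-list are illustrative assumptions.
function pickLabel(labelAnnotations, minScore = 0.7, allowedMids = null) {
  let best = null;
  for (const label of labelAnnotations || []) {
    if (typeof label.score !== "number" || label.score < minScore) continue;
    if (allowedMids && !allowedMids.has(label.mid)) continue;
    if (!best || label.score > best.score) best = label;
  }
  return best ? { mid: best.mid, name: best.description, score: best.score } : null;
}
```

Filtering on an allow-list of entity IDs, rather than on localized label text, keeps the choice language-independent and stops a generic label such as “Food” from outranking the specific product.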

A further essential component is barcode scanning, which allows users to log packaged food items by decoding UPC, EAN, or QR codes directly from the product. ML Kit’s barcode scanning API supports real-time, on-device recognition of these formats and returns the encoded value (for example, a GTIN), which the application can then resolve to a product identity, such as an SKU or product name, through a product database [42].
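Because a decoded barcode is only useful as a lookup key when it is intact, an application may verify the GS1 check digit before querying a product database. The JavaScript sketch below validates an EAN-13 code; the function name is hypothetical, and the database lookup that would follow is not specified in this paper.

```javascript
// Validates an EAN-13 code with the GS1 mod-10 check digit:
// weights 1, 3, 1, 3, ... are applied left to right to the first 12 digits.
function isValidEan13(code) {
  if (!/^\d{13}$/.test(code)) return false;
  let sum = 0;
  for (let i = 0; i < 12; i++) {
    sum += Number(code[i]) * (i % 2 === 0 ? 1 : 3);
  }
  const check = (10 - (sum % 10)) % 10;
  return check === Number(code[12]);
}
```

The check detects any single-digit error, so a damaged scan can be rejected before it produces a wrong product match.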

Together, these integrated vision technologies—text recognition, image labeling, and barcode scanning—position Firebase ML Kit as a powerful enabler for building intelligent mobile solutions. Abstracting complex deep-learning tasks into accessible APIs allows developers to focus on designing meaningful user experiences rather than implementing low-level vision pipelines. This integration proves especially valuable in applications targeting food waste reduction, where automated logging, classification, and expiration tracking are essential to driving behavioral change at the household level.

Based on the gaps identified in the current landscape of mobile applications addressing household food waste, this study proposes the development of an integrated mobile solution that leverages the combined power of Optical Character Recognition (OCR) and Convolutional Neural Networks (CNNs), implemented via Firebase ML Kit. While several existing applications rely on partial implementations of either barcode scanning, manual input, or limited OCR functionalities, very few offer a cohesive system that automates the entire process of food inventory management in a user-friendly and efficient way.

The core research hypothesis guiding this study is that the development of a mobile application that integrates OCR-based text recognition and CNN-based image classification through Firebase ML Kit will significantly improve the automation, accuracy, and usability of household food inventory tracking compared to currently available mobile applications that only partially address these functionalities.

This hypothesis assumes that by embedding both OCR and CNN capabilities within a single application, users will be able to log both packaged and unpackaged food items with minimal effort. OCR will facilitate the automatic extraction of expiration dates and product names from labels. At the same time, CNN-based image classification will enable the recognition of fruits, vegetables, and leftovers that typically lack barcodes or printed text. Firebase ML Kit serves as the technological enabler, providing developers with a unified platform that supports both of these machine learning models in an optimized, mobile-friendly environment, ensuring smooth performance and low-latency inference directly on the user’s device.

The integration of these technologies is expected to reduce manual input requirements, increase the reliability of food tracking, and provide more timely and accurate notifications regarding food expiration. This, in turn, has the potential to empower users to make more informed decisions about their food consumption and reduce unnecessary household waste. The hypothesis will serve as the conceptual basis for the system’s architecture, development, and eventual user testing phases.

3. Methodology

The methodology outlines the technical and procedural framework used to design and implement the mobile application. It documents the use cases, the technology stack, the architecture, and the decision flows that underpinned the UI/UX design, and it highlights the coherence between the functional requirements and the proposed solutions. This approach iteratively guided the transition from prototypes to the final implementation, with the aim of ensuring scalability, reliability, and a consistent user experience.

3.1. Use Cases

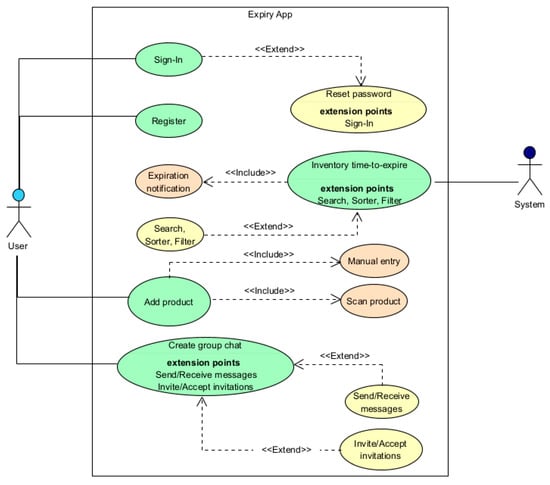

To proceed with the next development steps, we clarified what the application must do from the user’s perspective. Formalizing the use cases delineated the functional scope and the acceptance criteria, thereby establishing the foundation for the software architecture and for the design of the user interface and the user experience. The application covers six principal use cases, as shown in Figure 1:

Figure 1.

Use Case Diagram for the Expiry Application. Source: Authors’ own elaboration (processed diagram based on the defined use cases).

- Account creation and sign in: Users create accounts, authenticate with email and password, maintain secure sessions, sign out, and reset passwords.

- Add product by image capture and automatic identification: The user takes a photo of the product, then the system identifies the product and extracts the expiration date from the packaging. If the date is missing or unclear, the system estimates a provisional date based on the product category. The user reviews and may edit the values, and after confirmation, the system saves a standardized record with name, category, expiration date, and computed time to expiration.

- Add product through manual entry: The user enters the name, selects the category and quantity, and chooses the expiration date by means of a date picker. All inputs are validated and stored in a unified data format consistent with the scanning flow.

- Inventory with “time to expiration”: The application presents the current inventory and displays a countdown of remaining days for each item. It supports sorting by proximity to expiration and highlights status indicators such as “near expiration” to help users prioritize consumption.

- Search and filtering: The inventory can be filtered and sorted by category and name, and full-text search is available. The list updates in real time to enable efficient navigation within large inventories [43].

- Group messaging: Members of the household can exchange messages in dedicated channels with persistent storage and chronological ordering that support coordination without leaving the application [44].

The six use cases provide a complete description of user goals and observable outcomes. They delimit the system boundary, clarify the responsibilities of each actor, and translate user needs into verifiable acceptance criteria. They also establish traceability from requirements to architectural components, data structures, and test scenarios.

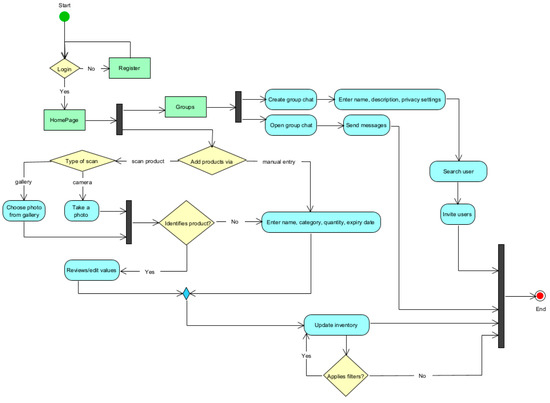

3.2. Application Flowchart

This section describes the end-to-end flows that operationalize the six use cases. Each flow specifies its trigger, the sequence of actions, the data written to persistent storage, and the observable outcomes in the user interface and the user experience. The flows are expressed at a technology-agnostic level to preserve generality while remaining verifiable against the acceptance criteria.

The account lifecycle begins when the user opens the application and chooses to register or sign in. During registration, the user provides identity attributes, and the system validates them, creates the user record, and establishes a secure session. During sign-in, the user submits credentials, and the system validates them, restores the session, and loads the profile. These steps are shown in Figure 2.

Figure 2.

Application Flowchart for the Expiry Management System. Source: Authors’ own elaboration, based on the defined use cases.

Product intake follows two alternative paths that converge on one data model. In the image-capture path, the user selects scan, captures a photograph, the system identifies the product, and extracts or estimates the expiration date, then the user reviews and may adjust the values. In the manual path, the user enters name, category, quantity, and expiration date. In both cases, the system validates inputs, writes a standardized item with computed time to expiration, and updates the inventory.

Inventory computation runs continuously for the authenticated user or household. The system calculates time to expiration for each item, assigns status flags according to thresholds, and renders the list with countdown values, indicators, and ordering by proximity to expiration.
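The inventory computation described above can be sketched as follows, assuming each item carries an ISO `expiresAt` date. The field names, the three-day “near expiration” threshold, and the status labels are illustrative assumptions rather than the authors’ configuration. Days are counted between calendar dates at UTC midnight, which keeps the arithmetic exact; a production app would more likely use the user’s local time zone.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Whole calendar days from `from` to `to`, measured between UTC midnights.
function daysBetween(from, to) {
  const a = Date.UTC(from.getUTCFullYear(), from.getUTCMonth(), from.getUTCDate());
  const b = Date.UTC(to.getUTCFullYear(), to.getUTCMonth(), to.getUTCDate());
  return Math.round((b - a) / MS_PER_DAY);
}

// Illustrative thresholds: negative = expired, 0..nearDays = near expiration.
function statusFor(daysLeft, nearDays = 3) {
  if (daysLeft < 0) return "expired";
  if (daysLeft <= nearDays) return "near_expiration";
  return "ok";
}

// Annotates each item with daysLeft and status, then orders by proximity to expiration,
// breaking ties alphabetically so the list order is stable across renders.
function buildInventoryView(items, now = new Date()) {
  return items
    .map((item) => {
      const daysLeft = daysBetween(now, new Date(item.expiresAt));
      return { ...item, daysLeft, status: statusFor(daysLeft) };
    })
    .sort((a, b) => a.daysLeft - b.daysLeft || a.name.localeCompare(b.name));
}
```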

Search and filtering enable focused exploration: the user applies category or name filters and may enter a free-text query. The system evaluates the criteria over the current inventory, returns a filtered and sorted list, and updates the view in real time; clearing the controls restores the full inventory.
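A minimal client-side version of this search and filtering step is sketched below in JavaScript. The matching rules (exact category match, every query term must occur in the item’s name or category, diacritics ignored) and the function names are assumptions, since the paper does not specify them; the result is ordered by days left, and empty controls return the full inventory.

```javascript
// Lowercases, trims, and strips diacritics so that "Crème" matches "creme".
function foldText(s) {
  return String(s || "")
    .normalize("NFD")
    .replace(/[\u0300-\u036f]/g, "")
    .toLowerCase()
    .trim();
}

// Filters by exact category (if given) and by free-text terms, all of which must occur
// in the item's name or category; results are ordered by days left.
// Empty controls return the whole inventory.
function filterInventory(items, { category = "", query = "" } = {}) {
  const cat = foldText(category);
  const terms = foldText(query).split(/\s+/).filter(Boolean);
  return items
    .filter((it) => !cat || foldText(it.category) === cat)
    .filter((it) => {
      const haystack = foldText(`${it.name} ${it.category}`);
      return terms.every((t) => haystack.includes(t));
    })
    .sort((a, b) => a.daysLeft - b.daysLeft);
}
```

Folding diacritics lets a query typed without accents, such as “creme”, still match an item saved as “Crème fraîche”.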

Collaboration relies on group messaging and reminders. Users join household channels, compose messages, and receive chronologically ordered threads that remain accessible from the inventory context. In parallel, the system evaluates items against reminder thresholds, issues notifications that link to item details, and records delivery to avoid duplicates.
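The reminder evaluation with duplicate suppression can be sketched as a pure function that returns the reminders to send and records their keys. The thresholds (3, 1, and 0 days), the `itemId:threshold` key format, and the function name are illustrative assumptions; the actual delivery through Firebase Cloud Messaging is outside the sketch.

```javascript
// Selects reminders that are due, de-duplicating by (item, threshold) so that a
// notification is issued at most once per threshold. Thresholds in days and the
// key format are illustrative assumptions; delivery itself is not shown.
function dueReminders(items, deliveredKeys, thresholds = [3, 1, 0]) {
  const due = [];
  for (const item of items) {
    if (item.daysLeft < 0) continue; // already expired: shown via status, not reminded
    const reached = thresholds.filter((t) => item.daysLeft <= t);
    if (reached.length === 0) continue;
    const threshold = Math.min(...reached); // tightest threshold reached, avoids bursts
    const key = `${item.id}:${threshold}`;
    if (deliveredKeys.has(key)) continue;
    // Marked here for simplicity; a real system would record the key after a successful send.
    deliveredKeys.add(key);
    due.push({ itemId: item.id, threshold, daysLeft: item.daysLeft });
  }
  return due;
}
```

Firing only the tightest threshold an item has reached means that an item first seen with zero days left produces one alert rather than three.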

Data integrity and consistency are preserved throughout: all operations are validated before commitment, client views synchronize with the data store across devices, and audit attributes record the actor, timestamp, and action type. Conflicting edits are resolved by a deterministic policy, and the resulting state is presented to the user.
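The paper does not state which deterministic policy is used. One common choice is last-writer-wins with a total-order tie-break, sketched below under that assumption: edits are compared by the audit timestamp, then by actor ID, then by a hypothetical unique `editId`, so every device selects the same winner regardless of the order in which edits arrive.

```javascript
// Orders two edits by (timestamp, actor, editId). Because every field is compared
// deterministically, all devices pick the same winner whatever order edits arrive in.
function compareEdits(a, b) {
  if (a.updatedAt !== b.updatedAt) return a.updatedAt - b.updatedAt;
  if (a.actor !== b.actor) return a.actor < b.actor ? -1 : 1;
  if (a.editId !== b.editId) return a.editId < b.editId ? -1 : 1;
  return 0;
}

// Last-writer-wins: returns the greatest edit under compareEdits, or null if there are none.
function resolveConflict(edits) {
  if (edits.length === 0) return null;
  return edits.reduce((winner, e) => (compareEdits(e, winner) > 0 ? e : winner));
}
```

Because client clocks can drift, such a policy is usually paired with server-assigned timestamps.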

In conclusion, these flows map user goals to stable state transitions and observable feedback, ensuring consistent computation of derived information and reliable collaboration, while supporting testing, monitoring, and extensibility.

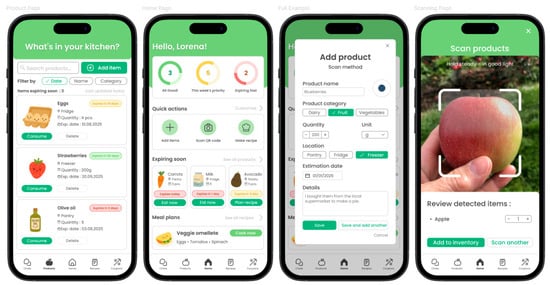

3.3. User Interface and User Experience Design in Figma

Grounded in the previously formalized use cases and in the end-to-end flowchart, a complete user interface and user experience design was developed in Figma [45]. The objective was to translate the functional requirements into clear screens, consistent interaction patterns, and a reusable design system, so that the implementation can proceed with reduced ambiguity and measurable usability targets.

The interface adopts a calm, content-first aesthetic with soft cards, rounded shapes, and a restrained palette in which a primary green conveys brand identity while semantic chips communicate urgency for expiring items through progressive coloration. Information hierarchy prioritizes current tasks and upcoming priorities, while secondary details are revealed through progressive disclosure to minimize cognitive load.

Navigation follows a stable bottom bar that surfaces the principal areas of the application, with a home-centric entry that aggregates status, quick actions, and the most relevant items. Forms employ inline validation, clear labels, and contextual help, while potentially destructive operations require explicit confirmation. System feedback is delivered through concise toasts, modals, and status chips, and micro-interactions provide immediate acknowledgement without distracting motion, as shown in Figure 3.

Figure 3.

Representative application screens designed in Figma. Source: Authors’ own elaboration, available in the Supplementary Material (mockups derived from the use cases and the application flowchart).

The Figma design consolidates the use cases and the application flowchart into a coherent interface that is consistent, accessible, and easy to extend. The token-based system and reusable components ensure visual and behavioral uniformity across screens, while the prototypes demonstrate clear task flows from entry to outcome. By specifying hierarchy, feedback, and states in detail, the artifact reduces ambiguity for implementation and shortens iteration cycles during testing. The result is a stable foundation for development, usability evaluation, and future enhancements that preserve traceability from requirements to shipped features.

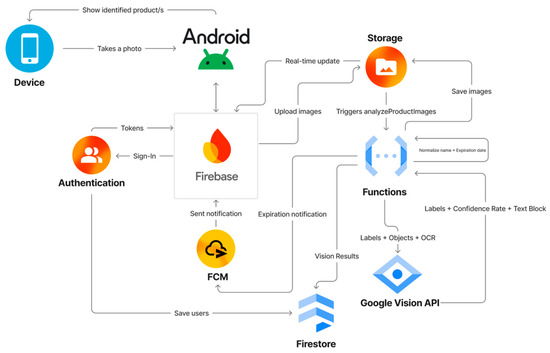

3.4. System Architecture

The system adopts a client–serverless, event-driven architecture, as shown in Figure 4. Interactive logic runs on the Android client, written in Java and integrated with the Firebase Android SDK. Persistence, identity management, image analysis, and notification delivery are provided by managed Firebase and Google Cloud services [46].

Figure 4.

System architecture and end-to-end data flow. Source: Authors’ own elaboration.

End-to-end execution begins with user sign-in through Firebase Authentication [47], after which the Android app captures a photo and uploads it to Cloud Storage. The object-finalize event triggers the analyzeProductImages Cloud Function, which calls Cloud Vision, receives labels with confidence scores and OCR text blocks, and performs normalization and enrichment.

The function then persists a canonical item document in Firestore containing the standardized name, category, quantity, expiration date or category-based estimate, computed days-left, status, and source. The client maintains an active onSnapshot subscription, so Firestore pushes a real-time update that renders the identified product and its countdown in the inventory view. When a threshold is reached or an event occurs, such as a near-expiration condition or a new message, the backend sends a Firebase Cloud Messaging notification to the user’s devices.
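The canonical item document can be illustrated with a small builder that validates the enriched fields and returns the Firestore path together with the document body. The field names, the set of `source` values, the `isEstimate` flag, and the function name are assumptions for illustration, not the schema of the authors’ Listing A1; the write itself (for example through the Firebase Admin SDK) is deliberately left out.

```javascript
// Sources an item's expiration value can come from (illustrative labels).
const ITEM_SOURCES = new Set(["ocr", "category_estimate", "manual"]);

// Validates the enriched fields and returns the Firestore path plus document body.
// Persisting the document is intentionally outside this sketch.
function buildItemDocument(uid, imgId, fields) {
  const { name, category, quantity = 1, expiresAt, daysLeft, status, source } = fields;
  if (!uid || !imgId) throw new Error("uid and imgId are required");
  if (!name || !category) throw new Error("name and category are required");
  if (!ITEM_SOURCES.has(source)) throw new Error(`unknown source: ${source}`);
  if (!Number.isInteger(quantity) || quantity < 1) throw new Error("quantity must be a positive integer");
  const expiry = new Date(expiresAt);
  if (Number.isNaN(expiry.getTime())) throw new Error("expiresAt is not a valid date");
  return {
    path: `users/${uid}/items/${imgId}`,
    data: {
      name: name.trim().toLowerCase(), // standardized name used for display and search
      category,
      quantity,
      expiresAt: expiry.toISOString(),
      daysLeft,
      status,
      source,
      // Lets the UI label category-based dates as indicative rather than printed.
      isEstimate: source === "category_estimate",
    },
  };
}
```

An explicit `isEstimate` flag lets the client mark category-based dates as indicative, in line with the caveat that predicted dates are orientation values only.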

In summary, the proposed serverless architecture centralizes recognition and validation in managed back-end services while keeping the Android client lightweight and responsive. The design enables elastic scaling, consistent model quality across devices, and real-time feedback through Firestore listeners and FCM. It is also maintainable and extensible, since new detectors, business rules, or notification policies can be deployed in Cloud Functions without re-shipping the mobile app.

3.5. Application Implementation

The application is built in Android Studio using Java and pairs the client app with a serverless Firebase backend. Firebase Authentication handles account creation and sign-in, providing secure session persistence, password reset, and token-based access that integrates with Firestore security rules and FCM targeting. Scanning uses Firebase ML Kit image labeling for rapid product identification and Text Recognition (OCR) to extract expiration dates; when a date is missing or unreadable, the app estimates one from the product category.

The workflow also supports manual item entry. Cloud Firestore handles persistence and real-time synchronization of the product inventory and group chats, enabling filtering and sorting by category, name, and time-to-expiration through indexed queries and real-time listeners. Firebase Cloud Functions perform the server-side logic (payload validation and normalization, days-left computation, reminder scheduling, and fan-out of group messages), while Firebase Cloud Messaging delivers push notifications for items nearing expiration and for new chat activity. This combination of on-device inference and a managed backend aligns with the intended functionality and eliminates the need to operate dedicated servers.

In what follows, we present the code-level implementation of the scenario in which the expiration date is missing or unreadable and the application predicts a plausible date from the product category. The backend falls back from OCR parsing to a rule-based category-to-shelf-life mapping and then computes daysLeft and a status flag before persisting a canonical item to Firestore. The Android client maintains a real-time subscription and renders the enriched item as soon as it is available. The subsequent listings provide the key fragments in Cloud Functions (JavaScript) and Android (Java) that implement this behavior.

First, Appendix A, Listing A1 (Cloud-Function Orchestration for Image Analysis and Persistence) provides the event-driven entry point, which consumes a newly uploaded image, invokes Google Cloud Vision for label detection and text recognition, performs normalization, and persists canonical documents in Firestore.

The function reacts to a Storage finalize event, downloads the image, requests labels, objects, and OCR from Cloud Vision, normalizes the candidate product name, extracts or estimates the expiration date, derives daysLeft and status, and upserts a canonical item in users/{uid}/items/{imgId}. Next, we detail the OCR parser that scans domain-specific patterns, resolves multiple date formats into an ISO timestamp, and discards implausible candidates.
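The routing rule at the top of Listing A1 can be isolated as a small pure function. The sketch below, with the hypothetical name `itemDocPathFor`, uses one regular expression with capture groups so that the upload filter and the derivation of the target document path cannot drift apart.

```javascript
// Accept only raw uploads of the form users/{uid}/raw/{imgId}.jpg (or .jpeg),
// mirroring the guard in Listing A1, and return the matching item document path.
const RAW_UPLOAD = /^users\/([^/]+)\/raw\/([^/]+)\.jpe?g$/i;

function itemDocPathFor(storagePath) {
  const m = RAW_UPLOAD.exec(storagePath ?? "");
  if (!m) return null; // not a raw upload: the function should ignore the event
  const [, uid, imgId] = m;
  return `users/${uid}/items/${imgId}`;
}
```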

The procedure for extracting expiration dates from OCR output appears in Appendix A, Listing A2: OCR Expiration-Date Extraction and Validation. The routine aligns with packaging conventions by combining numeric formats and cue phrases such as “best before” and “use by,” then validates candidates by requiring a parsable and future-dated value. The pipeline applies lowercasing and token normalization, scans multiple date patterns, resolves ambiguous formats by preferring unambiguous four-digit years when available, and rejects implausible or past-dated values.
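The four-digit-year preference can be made explicit by collecting every numeric candidate before choosing one. The following sketch assumes day-first ordering, which matches Romanian and most European labels, reads two-digit years as 20YY, and rejects calendar roll-overs such as 31/02 as well as past dates; `pickExpiryDate` and `toValidDate` are illustrative names, not functions from the application.

```javascript
// Day-first numeric date with a four- or two-digit year (e.g., 12.09.2025, 12/09/25).
const NUMERIC_DATE = /\b(\d{1,2})[./-](\d{1,2})[./-](\d{4}|\d{2})\b/g;

// Build a local calendar date and reject roll-overs such as 31/02 becoming 3 March.
function toValidDate(d, m, y) {
  const date = new Date(y, m - 1, d);
  const ok = date.getFullYear() === y && date.getMonth() === m - 1 && date.getDate() === d;
  return ok ? date : null;
}

// Collect all candidates, drop invalid or past dates, and prefer four-digit years.
function pickExpiryDate(text, today = new Date()) {
  const start = new Date(today.getFullYear(), today.getMonth(), today.getDate());
  const candidates = [];
  for (const [, dd, mm, yy] of text.matchAll(NUMERIC_DATE)) {
    const year = yy.length === 2 ? 2000 + Number(yy) : Number(yy);
    const date = toValidDate(Number(dd), Number(mm), year);
    if (date && date >= start) candidates.push({ date, fourDigit: yy.length === 4 });
  }
  // Stable sort: four-digit-year candidates first, otherwise keep reading order.
  candidates.sort((a, b) => Number(b.fourDigit) - Number(a.fourDigit));
  return candidates.length ? candidates[0].date : null;
}
```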

Appendix A, Listing A3 (Category-based shelf-life estimation and derived status fields) presents the fallback estimator, which maps food categories to typical shelf-life windows, imputes a provisional expiration date, and derives daysLeft and a status indicator, thereby enabling consistent downstream rendering and alerts.

The mapping encodes pragmatic priors for household products and produces a deterministic estimate when OCR cannot recover an explicit date. The derived daysLeft and status fields standardize UI ordering and notification thresholds across devices. The category-based estimator provides orientation values only. The application always prefers an OCR date over an estimate, allows the user to edit any value before saving, and flags items as near expiry conservatively. Predicted dates do not constitute food-safety advice and must not be used in place of the manufacturer’s use-by or best-before label.
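Since Listing A3 is not reproduced in this section, the following sketch only illustrates the shape of such an estimator. The shelf-life values are placeholders chosen to match the orders of magnitude discussed in the following paragraph (days for raw meat and fish, about a week for pasteurized dairy, months to years for canned goods), and the three-day near-expiry threshold is an assumption; none of these numbers are the values used by the application, and the function names are hypothetical.

```javascript
// Placeholder shelf-life priors in days (illustrative only, not the application's values).
const SHELF_LIFE_DAYS = { meatFish: 2, dairy: 7, produce: 5, bakery: 3, canned: 365 };
const DEFAULT_DAYS = 3;     // conservative fallback for unmapped categories (assumption)
const NEAR_EXPIRY_DAYS = 3; // hypothetical threshold for the "near-expiry" status
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Provisional expiry: local midnight `days` calendar days after `now`.
function estimateExpiry(category, now = new Date()) {
  const days = SHELF_LIFE_DAYS[category] ?? DEFAULT_DAYS;
  return new Date(now.getFullYear(), now.getMonth(), now.getDate() + days);
}

// Whole calendar days between today and the expiry date (negative once expired).
// Comparing UTC timestamps built from local date parts avoids daylight-saving drift.
function daysLeftUntil(expiry, now = new Date()) {
  const today = Date.UTC(now.getFullYear(), now.getMonth(), now.getDate());
  const end = Date.UTC(expiry.getFullYear(), expiry.getMonth(), expiry.getDate());
  return Math.round((end - today) / MS_PER_DAY);
}

// Status flag used for ordering and notification thresholds.
function statusFor(days) {
  if (days < 0) return "expired";
  if (days <= NEAR_EXPIRY_DAYS) return "near-expiry";
  return "ok";
}
```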

To avoid arbitrary constants, our shelf-life priors were parameterized from EU guidance and assume correct household storage (refrigerator ≤ 5 °C, freezer ≈ −18 °C) [48]. In line with EFSA and the European Commission's date-marking framework, any detected "use by" date (being a safety limit) always overrides estimates, whereas "best before" concerns quality, and the food can remain acceptable after that date if storage conditions were respected and it shows no spoilage cues [49]. Accordingly, for highly perishable categories (e.g., raw meat and fish) the estimator applies very short refrigerated windows (on the order of days), and for pasteurized dairy it applies horizons of about a week. For shelf-stable canned goods it uses long quality windows (months to years) while still deferring to the printed date. These values are conservative orientation ranges intended to trigger reminders, not food-safety judgments, and the app prominently prioritizes any manufacturer date it can read [50].
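The override rules in this paragraph amount to a fixed order of precedence. A minimal sketch follows, assuming the candidate dates have already been extracted and labeled by source (the field names `user`, `useBy`, `bestBefore`, and `estimate` and the function `resolveExpiry` are hypothetical), and treating a value the user edited before saving as final, consistent with the editing step described in the previous paragraph.

```javascript
// Sources in decreasing order of precedence (hypothetical field names):
// a value the user confirmed or edited before saving, a printed "use by" date
// (safety limit), a printed "best before" date (quality), then the category estimate.
const PRECEDENCE = ["user", "useBy", "bestBefore", "estimate"];

// Return the highest-precedence date that is present, together with its source.
function resolveExpiry(sources) {
  for (const kind of PRECEDENCE) {
    if (sources[kind]) return { date: sources[kind], method: kind };
  }
  return { date: null, method: "none" };
}
```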

To mitigate OCR failures caused by motion blur, low light, or defocus, the mobile client employs a capture-assist loop that evaluates frame quality in real time and triggers the shutter only when minimum conditions are satisfied. Using CameraX, the system computes three indicators per frame, namely luminance as the average Y on a subsampled grid, sharpness as the variance of a Laplacian on Y, and motion as an exponential moving average of the gyroscope magnitude. The interface presents these as light, focus, and stability indicators, and the capture routine defers the shot until all three exceed their thresholds, specifically luminance at least 70, Laplacian variance at least 18, and gyroscope magnitude at most 0.5 radians per second, or until a short timeout of approximately 2.5 s elapses. Before capture, the client requests center autofocus and autoexposure using a metering point and waits for focus to complete, then retries if the saved JPEG size suggests a failed capture.
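The per-frame quality gate can be sketched as follows. The production client is written in Java with CameraX; the sketch uses JavaScript only for consistency with Appendix A. It assumes a row-major 8-bit Y plane without row padding (real CameraX image planes expose a row stride that must be honored), and the grid step of 8 pixels and the EMA factor of 0.2 are hypothetical. The numeric thresholds are those quoted above; note that the scale of a Laplacian variance depends on resolution and preprocessing, so the value of 18 is only meaningful for the authors' setup.

```javascript
// Thresholds quoted in the text; the timeout is the ~2.5 s fallback.
const MIN_LUMA = 70;
const MIN_LAPLACIAN_VAR = 18;
const MAX_GYRO_RAD_S = 0.5;
const TIMEOUT_MS = 2500;

// Average luminance over a subsampled grid of the Y plane (row-major, 0..255).
function meanLuma(y, width, height, step = 8) {
  let sum = 0;
  let n = 0;
  for (let r = 0; r < height; r += step) {
    for (let c = 0; c < width; c += step) { sum += y[r * width + c]; n++; }
  }
  return n ? sum / n : 0;
}

// Variance of the 4-neighbour Laplacian over interior pixels; low values indicate blur.
function laplacianVariance(y, width, height) {
  let sum = 0, sumSq = 0, n = 0;
  for (let r = 1; r < height - 1; r++) {
    for (let c = 1; c < width - 1; c++) {
      const i = r * width + c;
      const lap = y[i - width] + y[i + width] + y[i - 1] + y[i + 1] - 4 * y[i];
      sum += lap; sumSq += lap * lap; n++;
    }
  }
  if (n === 0) return 0;
  const mean = sum / n;
  return sumSq / n - mean * mean;
}

// Exponential moving average of the gyroscope magnitude in rad/s (alpha is an assumption).
function updateMotion(prevEma, gx, gy, gz, alpha = 0.2) {
  return alpha * Math.hypot(gx, gy, gz) + (1 - alpha) * prevEma;
}

// Fire the shutter once all three indicators pass, or when the timeout elapses.
function shouldCapture({ luma, lapVar, motion, elapsedMs }) {
  const ready = luma >= MIN_LUMA && lapVar >= MIN_LAPLACIAN_VAR && motion <= MAX_GYRO_RAD_S;
  return ready || elapsedMs >= TIMEOUT_MS;
}
```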

Upon upload, images are normalized through auto-orientation, mild sharpening, and contrast normalization, then processed with a two-stage detector that combines a global pass with a tiled pass to improve recall for small targets. OCR is attempted on each crop first, and when no valid date is parsed the system falls back to a conservative shelf-life estimate that is aware of product category and container type.
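For the tiled pass, one common approach is to cover the image with overlapping square tiles and shift each tile's detections back into full-image coordinates before merging them with the global pass (for instance, by non-maximum suppression). The sketch below only generates the tile grid; `makeTiles`, the 640-pixel tile size, and the 20% overlap are assumptions, not parameters reported for the system.

```javascript
// Cover a width x height image with overlapping square tiles. The last tile in each
// direction is placed flush with the border so no pixels are missed.
function makeTiles(width, height, tile = 640, overlap = 0.2) {
  const stride = Math.max(1, Math.floor(tile * (1 - overlap)));
  const starts = (len) => {
    if (len <= tile) return [0]; // a single tile covers this dimension
    const s = [];
    for (let p = 0; p + tile < len; p += stride) s.push(p);
    s.push(len - tile);
    return s;
  };
  const tiles = [];
  for (const y of starts(height)) {
    for (const x of starts(width)) {
      tiles.push({ x, y, w: Math.min(tile, width - x), h: Math.min(tile, height - y) });
    }
  }
  return tiles;
}
```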

The resulting implementation delivers capture, cloud recognition, normalization, and real-time presentation with a thin Android client and a managed backend. The code structure is modular and testable, which supports extensions such as refined priors, additional detectors, and alternative notification policies without changing the mobile client.

4. Application Testing and Empirical Evaluation

We conducted an empirical evaluation of the application to measure two capabilities: accurate product identification and accurate determination of an expiration date. When the printed expiration date was absent or not legible on the packaging, the system invoked a predefined, category-based shelf-life mapping to generate an estimated expiration date. Each prediction paired the product label with either a parsed date from OCR or an estimated date from the fallback rule.

4.1. Experimental Setup and Dataset Construction

Testing took place on 24 September 2025 in a supermarket, using a Samsung Galaxy A54. Data were collected under everyday conditions (indoor ambient lighting, handheld capture). The dataset comprised standard single-product scenes and multi-instance scenes in which multiple units of the same product appeared within a single frame on shelves or in baskets. Packaging reflected the Romanian retail context, with labels predominantly in Romanian and English. Evaluation was performed per product type per scene: a frame containing many identical units generated one product-level observation, and performance was assessed on whether the application correctly identified the product and obtained an expiration date (via OCR or, when no readable date was present, via the category-based fallback).

To mirror realistic household purchasing, we assembled a diverse set of grocery items spanning fresh produce, dairy, meat and fish, bread/bakery, beverages, snacks, canned/preserved goods, frozen items, cereals, and oils/condiments. This selection is aligned with market evidence showing that dairy (~82%), fresh produce (~80%), snack foods (~76%), bread and pastries (~71%), frozen goods (~70%), canned goods (~65%), pasta and rice (~65%), butcher meats (~62%), and seafood (~38%) are among the most frequently purchased grocery categories; we therefore structured our test list to reflect the composition of typical supermarket baskets while also covering challenging OCR and classification cases [51].

The evaluation dataset comprised 59 distinct products distributed across twelve categories: fresh produce, citrus fruit, vegetables, culinary herbs and plants, meat and fish, dairy products, eggs, beverages, canned and preserved goods, condiments and edible oils, cereals and bakery items, and sweets and confectionery. For each image, the unit of observation was the product type within that scene. Images that contained a single product type contributed to one observation. Images that displayed multiple units of the same product type (for example, many identical packages on a shelf) also contributed to one observation, because the research task was to determine whether the system correctly recognized the product type and produced an expiration date for that type (additional identical units did not introduce new information).
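The unit-of-observation rule can be stated as a grouping step. The sketch below assumes hypothetical per-detection records with `sceneId` and `productType` fields and collapses them to one observation per scene and product type, keeping the unit count only for reference; `toObservations` is an illustrative name.

```javascript
// Collapse per-detection records into one observation per (scene, product type):
// additional identical units in the same frame do not add new observations.
function toObservations(detections) {
  const byKey = new Map();
  for (const d of detections) {
    const key = `${d.sceneId}::${d.productType}`;
    if (!byKey.has(key)) {
      byKey.set(key, { sceneId: d.sceneId, productType: d.productType, units: 0 });
    }
    byKey.get(key).units += 1; // kept for reference only; not used in scoring
  }
  return [...byKey.values()]; // Map preserves first-seen order
}
```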

For every product we documented in a standardized manner the single most probable predicted label and its confidence score. We also recorded whether an expiration or best-before date label was present on the packaging or absent. When a label existed, we noted its visibility as fully visible, partially visible, or not visible. We logged the outcome of the optical character recognition process and coded it as success, misread, or failure. When available, we stored the expiration date parsed from the label. We then specified the method by which the final expiration date was obtained, either optical character recognition or estimation via a category-based shelf-life rule.

For evaluation, the ground truth was the date printed on the packaging when a label was present, and when no printed date existed the ground truth was an agreed category-specific shelf-life mapping. Product identification was scored as correct or misclassified. The expiration-date output was scored as correct or incorrect relative to the ground truth. For dates produced through the shelf-life estimation rule we allowed a tolerance of plus or minus one calendar day to accommodate day-level rounding. In the absence of a legible on-pack date, the system provides an estimated expiry based on category-level, orientation-only ranges derived from general storage guidance. These values are intended solely to prioritize consumption and do not constitute food-safety judgments. The actual safe window depends on household storage conditions, package integrity, and product-specific characteristics. Whenever a manufacturer-printed "use by" date is detected, it supersedes any estimate. Accordingly, throughout our analyses all estimated dates are treated as indicative placeholders that support countdown displays and notifications and may be revised by users in light of storage context and any observable signs of spoilage.
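The scoring rule can be written down precisely. The sketch below assumes dates encoded as `YYYY-MM-DD` strings and that OCR-derived dates were scored as exact matches, while estimated dates received the stated tolerance of one calendar day; `scoreDate` and `dayDiff` are illustrative names.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Whole-day difference between two "YYYY-MM-DD" dates (both parse as UTC midnight).
function dayDiff(a, b) {
  return Math.round((Date.parse(a) - Date.parse(b)) / DAY_MS);
}

// OCR dates must match the printed date exactly; estimated dates may differ
// from the category-based ground truth by at most one calendar day.
function scoreDate(predicted, truth, method) {
  if (!predicted || !truth) return false;
  const tolerance = method === "estimate" ? 1 : 0;
  return Math.abs(dayDiff(predicted, truth)) <= tolerance;
}
```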

4.2. Representative Test Cases

During the empirical evaluation, we observed representative scenarios that characterize the system’s real-world behavior: (i) complete successes (correct product and OCR date), (ii) cases without a readable date where category-based estimation was applied, (iii) instances with a correct OCR date but a misclassified product, (iv) confusions among visually similar items, and (v) correct product predictions at very low confidence. The examples below include images and metadata (ground-truth label, predicted label, confidence score, date method, parsed/estimated date, and outcome).

- (a)

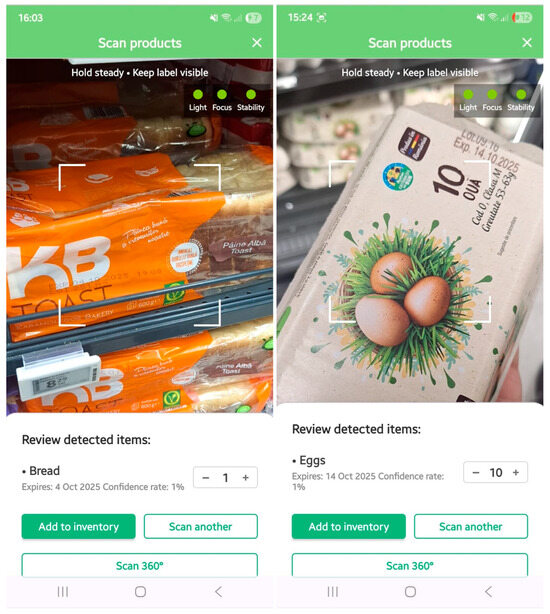

- Case A—Correct product and OCR date (packaged item)

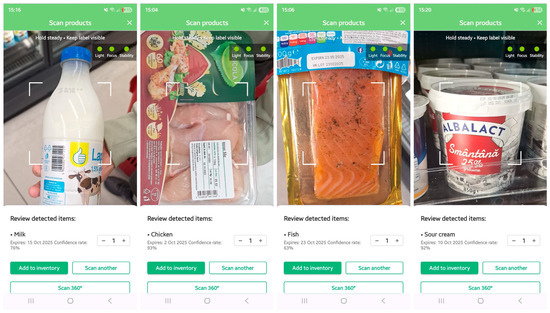

The system correctly recognized the packaged item and successfully parsed the printed expiration date from the label. The prediction paired the product label with an OCR-extracted date that matched the ground truth, as shown in Figure 5.

Figure 5.

Packaged items with correct product ID and correct OCR date. Source: Authors’ supermarket testing, 24 September 2025.

These examples illustrate “happy-path” outcomes: the system correctly identified the product and extracted a valid expiration date from a single image. In each case, the predicted label matched the ground truth and the OCR-parsed date matched the printed date, yielding a correct product–date pair.

- (b)

- Case B—Correct product, no readable date, category-based estimation (unlabelled produce)

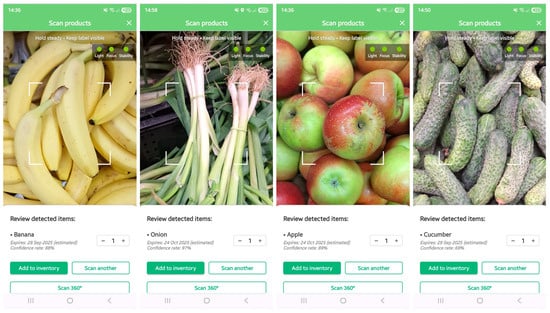

For a fresh produce item without a printed or readable date, the system returned the correct product label and issued a category-based expiration estimate consistent with the shelf-life mapping, as shown in Figure 6.

Figure 6.

Fresh produce with no readable printed date, expiration estimated via category-based shelf-life rule. Source: Authors’ supermarket testing, 24 September 2025.

Most fruits and vegetables were correctly identified, typically with high confidence, and because these items usually lack printed dates the application returned category-based shelf-life estimates consistent with the produce mapping.

- (c)

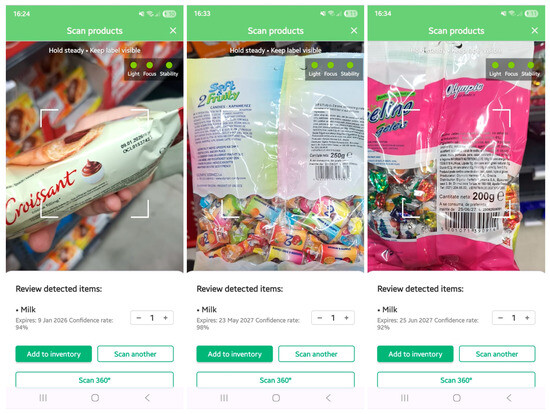

- Case C—Correct OCR date, product misclassified (packaged goods with misleading cues)

The system parsed the printed expiration date correctly from the packaging, but the product label was assigned incorrectly, likely due to distracting visual cues (e.g., large ingredient words such as "milk," graphic elements, or cross-category imagery) that resemble other items on the shelf. The result is a correct date paired with an incorrect product, as shown in Figure 7.

Figure 7.

Correct OCR date but misclassified product (packaged goods with misleading cues). Source: Authors’ supermarket testing, 24 September 2025.

Despite challenges such as glossy, reflective packaging surfaces and a small, low-contrast date stamp, the system was still able to successfully detect and parse the expiration date through OCR. This outcome illustrates the robustness of the recognition pipeline when faced with adverse visual conditions that typically reduce legibility for both humans and machines.

- (d)

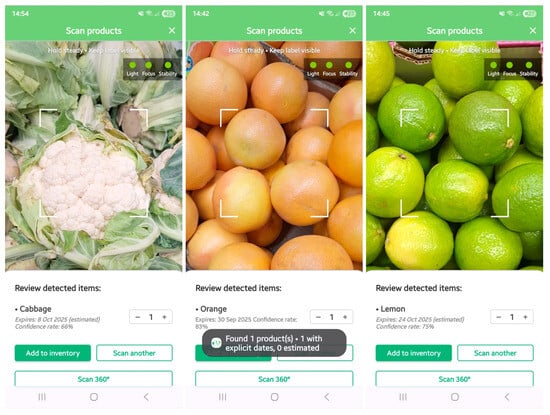

- Case D—Product confused within a visually similar family

In scenes with look-alike produce, the classifier occasionally assigned a closely related label within the same broader category. These items lacked readable dates, so the system returned category-based shelf-life estimates, as shown in Figure 8.

Figure 8.

Product confusions within visually similar families of fresh produce. Source: Authors’ supermarket testing, 24 September 2025.

These examples illustrate a typical limitation of fine-grained recognition in fresh produce: labels remain within the correct category but may drift at variety level. The date output stays reasonable because the fallback rule is shared.

- (e)

- Case E—Correct product with extremely low confidence

The classifier returned the correct product label in both scenes despite a ~1% confidence score, as shown in Figure 9. This unusually low confidence is plausibly explained by scene clutter, off-center framing, and other non-target objects surrounding the item.

Figure 9.

Correct product predictions at extremely low confidence levels. Source: Authors’ supermarket testing, 24 September 2025.

These examples show that correctness can coexist with low confidence. Practical mitigation includes calibrated thresholds and a brief “confirm product?” prompt for low-confidence predictions.

Taken together, these representative test cases demonstrate both the strengths and the limitations of the system under real-world supermarket conditions. The application proved capable of accurately pairing products with valid expiration dates in straightforward situations, while also showing resilience in challenging contexts such as reflective packaging, low-contrast date stamps, or visually cluttered scenes. At the same time, cases of product misclassification and low-confidence outputs highlight areas where fine-grained recognition and confidence calibration require further refinement. Overall, the evaluation indicates that the system can support everyday grocery management while also pointing to clear directions for future improvements in robustness and user interaction.

4.3. Evaluation Summary

This section synthesizes the aggregate results reported in Table 2 and interprets system performance across all 59 products and 12 categories. We summarize overall accuracy for (i) product identification and (ii) expiration-date identification (OCR), and we relate these outcomes to the representative cases in Section 4.2.

Table 2.

Consolidated evaluation results by product category.

Across the full dataset, the application correctly identified 43/59 products (72.9%). For items that had a printed date on the package, the OCR pipeline correctly read the date for 25/30 items (83.3%). For items without a visible printed date (29/59), the fallback rule produced estimated dates for all cases (29/29, full coverage). Consequently, every observation yielded a usable expiration date, either parsed or estimated.
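The headline figures follow directly from the counts; the 30 items with a printed date and the 29 without together account for all 59 observations:

$$
\frac{43}{59} \approx 0.729 = 72.9\%, \qquad \frac{25}{30} \approx 0.833 = 83.3\%, \qquad 30 + 29 = 59, \qquad \frac{29}{29} = 100\%.
$$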

Performance was strongest in categories with unambiguous packaging and clearly printed dates, such as Meat and Fish, Dairy and Eggs, and Beverages, where both product identification and OCR reached or approached 100%. Condiments and Oils achieved perfect product recognition, with OCR at 75%, consistent with occasional low-contrast stamps. Cereals and Bakery recorded 83.3% for product identification and 80% for OCR. Canned and Preserved goods and Sweets and Confectionery showed lower product identification (50% and 42.9%, respectively), in line with the misclassification behavior illustrated in Case C. Fresh-produce categories rarely carried printed dates; their product identification was moderate (approximately 50% to 70%), and their expiration outputs relied on the category-based shelf-life estimates described in Cases B and D.

Misclassifications concentrated in visually dense scenes, in packages with cross-category visual cues such as prominent ingredient words, and in fine-grained families like citrus. OCR errors were linked to glossy or curved surfaces and small, low-contrast stamps, yet the pipeline often succeeded in such settings, as shown in Case C. Very low confidence scores near 1% sometimes coexisted with correct labels in cluttered frames, as shown in Case E. These findings motivate calibrated confidence thresholds, short confirmation prompts for low-confidence outputs, and multi-frame aggregation or targeted data augmentation to reduce confusion.

In everyday supermarket conditions, the system consistently returns a usable expiration date (parsed when available, estimated otherwise) and achieves strong product recognition on packaged goods with clear labels and printed dates, such as meat and fish, dairy, and beverages. The results also point to clear opportunities for improvement: augmenting training for fine-grained produce classes, increasing the OCR's tolerance to label glare and curvature, applying multi-frame (Scan 360°) aggregation for stability, and tuning confidence-aware UI flows.

The external validity of our findings is constrained by the sample size (59 items) and the single-site, single-device design. As such, the reported accuracies should be interpreted as feasibility evidence rather than population estimates. Broader sampling across retailers, packaging materials, lighting conditions, languages, and handset optics would introduce the variability necessary to test generalizability and reduce site- or device-specific bias. We anticipate that enlarging the dataset and diversifying locations would yield performance estimates that more faithfully reflect the system’s capabilities in routine use. Accordingly, a planned multi-site, multi-device replication with stratified sampling by category will be conducted in follow-up work.

5. Conclusions and Future Directions

This study advanced a practical and scalable approach to household food management by combining product recognition with robust extraction or estimation of the expiration date. The proposed pipeline demonstrates that a lightweight mobile client paired with a serverless backend can deliver real-time feedback while keeping user effort low and trust high.

In a supermarket evaluation, the system identified 43 of 59 distinct products and correctly read the printed expiration date for 25 of 30 labeled items. When no printed date was visible, the rule-based fallback produced a usable estimate for every case in that subset. These results show dependable date coverage across varied categories and conditions and indicate that an everyday smartphone can support the target tasks, although responsiveness was not instrumented in this study.

These outcomes address the first three research questions: (RQ1) the classifier performs reasonably in natural, user-captured scenes (72.9% correct identification), (RQ2) the OCR component reads printed dates reliably across packaging and lighting variations (83.3% of labeled items), and (RQ3) the category-based fallback ensures complete coverage whenever text is absent or unreadable, with absolute accuracy still to be calibrated against independent sources.

This paper should be read as a proof-of-concept and technical implementation study rather than a behavioral evaluation of food-waste outcomes. Our contribution is system-level: we demonstrate feasibility and performance characteristics of an integrated mobile pipeline (image classification, OCR, and rule-based estimation) under realistic capture conditions. We did not design or power the study to test changes in household behavior, purchasing, or measured waste reduction, nor did we run longitudinal or randomized interventions. External validity is constrained by a single-site, single-device dataset and by context-specific priors; thus, the reported accuracies are feasibility indicators, not population estimates. Future work will require multi-site deployments with diverse users and controlled study designs to quantify engagement, compliance, and net waste-reduction effects.

The application benefits consumers through faster intake, fewer missed items, and clear countdowns that guide daily decisions. The client captures a photo and receives an immediate product label with an expiration date or a defensible estimate, which reduces manual entry and improves adherence to reminders. Store staff can use the same workflow to triage shelves with minimal training, since the interface highlights items near expiration and groups them by urgency. Warehouse teams can monitor lots with near-term risk and act on aggregated alerts. Across these roles the combination of instant recognition, consistent normalization, and real-time updates increases confidence in the information displayed and shortens the time from observation to action.

The system unifies three capabilities that are often siloed in existing solutions. It recognizes the product, parses an expiration date when visible, imputes a plausible date when text is absent or unreadable, and then exposes a single canonical item with computed days left and status. The design runs on inexpensive, widely available devices and relies on managed cloud services, which keeps total cost of ownership low for both households and organizations. Precision is strong on packaged goods that carry clear labels, and usability remains high because the application requires only a brief capture followed by automatic processing. The workflow maintains a consistent data model across capture modes, which simplifies sorting, filtering, and notification policies on device and in the backend.

The evaluation reveals predictable limits that inform future work. Fine-grained classes inside visually similar families produce occasional confusion. Low-contrast date stamps printed on glossy or curved surfaces can still defeat OCR under ambient lighting. Confidence scores near the decision threshold sometimes coincide with correct labels, which complicates a simple cutoff strategy. Fresh produce seldom carries a printed expiration date, so the system relies on category priors that are deliberately conservative. These constraints did not prevent the system from yielding a usable expiration date for every observation in the dataset, yet they motivate targeted improvements in acquisition and inference.

Regarding the remaining research questions, we did not measure end-to-end latency, CPU and memory usage, or battery consumption on devices, nor did we run a longitudinal comparison to quantify engagement or household waste reduction; these will be evaluated in a subsequent study (RQ4–RQ5).

With explicit user consent and privacy controls, we will retain capture images to build a supervised machine-learning corpus for a convolutional neural network (CNN) that estimates product condition (fresh/aging/spoiled). The dataset will be annotated under a documented labeling protocol, split into train/validation/test folds, and used to train a lightweight CNN suitable for on-device inference (e.g., MobileNet/EfficientNet distilled to TensorFlow Lite). We will fuse the CNN output with OCR-extracted dates and category priors via a multimodal model (early or late fusion) and calibrate probabilities (isotonic or Platt scaling) to produce a consumption-window estimate with quantified uncertainty. Evaluation will report AUROC, F1, calibration error (ECE), and ablations for each signal (CNN, OCR, category). A multi-site field trial will then measure generalizability and operational impact, instrumenting latency, CPU/memory/battery, task time, confirmation rates for low-confidence cases, and proxy metrics for waste reduction. This program directly addresses RQ4–RQ5 and yields the evidence needed to tune thresholds, prompts, and deployment policies.
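Expected calibration error admits several variants. One common definition, the equal-width binned form $\mathrm{ECE} = \sum_b \frac{|B_b|}{N}\,\lvert \mathrm{acc}(B_b) - \mathrm{conf}(B_b) \rvert$, is sketched below; the choice of ten bins and the function name are assumptions rather than commitments of the planned study.

```javascript
// Equal-width binned expected calibration error:
// ECE = sum over bins of (|B| / N) * |accuracy(B) - mean confidence(B)|.
function expectedCalibrationError(confidences, correct, numBins = 10) {
  const n = confidences.length;
  if (n === 0 || n !== correct.length) throw new Error("inputs must be non-empty and aligned");
  const bins = Array.from({ length: numBins }, () => ({ count: 0, conf: 0, hits: 0 }));
  confidences.forEach((p, i) => {
    // A confidence of exactly 1.0 is placed in the last bin.
    const b = Math.min(numBins - 1, Math.floor(p * numBins));
    bins[b].count += 1;
    bins[b].conf += p;
    bins[b].hits += correct[i] ? 1 : 0;
  });
  return bins.reduce(
    (ece, { count, conf, hits }) =>
      count === 0 ? ece : ece + (count / n) * Math.abs(hits / count - conf / count),
    0,
  );
}
```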

Model and data improvements provide another tractable path to higher reliability in everyday conditions. The category prior table can expand to variety-level granularity for produce and bakery, where shelf life varies meaningfully. Training data can be augmented with reflective foil packages and small embossed stamps that often challenge OCR, while capture can incorporate adaptive exposure and glare rejection to handle curved or glossy surfaces. Text-line detection tuned for date patterns can further reduce false positives that originate from ingredient strings and promotional text.

Field observations confirm that the expiration date is rarely printed on the front of packages. It frequently appears on a side panel or the back and sometimes on the cap or a crimped edge. This placement interacts with glare and curvature in ways that reduce OCR reliability during a single front-facing capture. A structured 360-degree scanning mode can mitigate these issues. The application can guide the user to rotate the product in equal steps, capture short bursts at each angle, and fuse detections across frames. Multi-view OCR increases the chance that at least one frame presents the date stamp with favorable lighting and geometry.

Multi-frame classification stabilizes the product label through temporal voting and reduces sensitivity to clutter in any one frame. A practical policy is to request eight views around the object with a minimum time gap that prevents motion blur and to stop early when the system reaches a confidence threshold for both product and date. This addition fits the current architecture since the client already orchestrates capture and the backend already aggregates normalized fields into a canonical item.
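A possible realization of this policy is sketched below. It accumulates confidence-weighted votes for the product label and counts agreeing date reads across views, stopping early once the leading label's average support reaches a target and at least two views agree on the same date. The eight-view cap comes from the text; the 0.7 target, the two-read rule, the frame record fields (`label`, `confidence`, `date`), and the function names are assumptions.

```javascript
// Return the [key, value] pair with the largest value, or [null, 0] for an empty map.
// Ties keep the first-seen key.
function argmax(map) {
  let bestKey = null;
  let bestVal = 0;
  for (const [k, v] of map) if (v > bestVal) { bestKey = k; bestVal = v; }
  return [bestKey, bestVal];
}

function aggregateViews(frames, { maxViews = 8, labelTarget = 0.7, minDateReads = 2 } = {}) {
  const labelScore = new Map(); // label -> summed confidence
  const dateReads = new Map();  // ISO date -> number of views that read it
  let used = 0;
  for (const f of frames.slice(0, maxViews)) {
    used += 1;
    labelScore.set(f.label, (labelScore.get(f.label) ?? 0) + f.confidence);
    if (f.date) dateReads.set(f.date, (dateReads.get(f.date) ?? 0) + 1);
    const [label, score] = argmax(labelScore);
    const [date, reads] = argmax(dateReads);
    // Average support for the leading label across all views seen so far.
    if (score / used >= labelTarget && reads >= minDateReads) {
      return { label, date, views: used, stoppedEarly: used < Math.min(maxViews, frames.length) };
    }
  }
  const [label] = argmax(labelScore);
  const [date] = argmax(dateReads);
  return { label, date, views: used, stoppedEarly: false };
}
```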

Several extensions follow naturally from the results and from stakeholder needs. The first avenue concerns warehouse and store alerts, implemented as role-based dashboards that surface near-expiration items by location, lot, and supplier. Alerts can be batched for morning and afternoon checks to limit notification fatigue while preserving timely action. The same signals enable pick-face rotation and targeted back-room pulls that keep shelves aligned with first-expired, first-out (FEFO) practice, which improves compliance and reduces waste through earlier intervention.
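The grouping behind such a dashboard can be sketched as follows, assuming hypothetical item records with `location`, `lot`, and `daysLeft` fields and a three-day horizon. A scheduled Cloud Function (for example, one declared with `onSchedule` from `firebase-functions/v2/scheduler`) could run this twice a day to produce the morning and afternoon batches; `buildAlertBatch` is an illustrative name.

```javascript
// Group near-expiry items by location and order each group first-expired, first-out,
// so a scheduled check sends one batch per location instead of one alert per item.
function buildAlertBatch(items, { horizonDays = 3 } = {}) {
  const byLocation = new Map();
  for (const it of items) {
    if (it.daysLeft > horizonDays) continue; // not yet urgent
    if (!byLocation.has(it.location)) byLocation.set(it.location, []);
    byLocation.get(it.location).push(it);
  }
  for (const group of byLocation.values()) {
    // FEFO: soonest expiry first; ties broken by lot id for a stable pick order.
    group.sort((a, b) => a.daysLeft - b.daysLeft || String(a.lot).localeCompare(String(b.lot)));
  }
  return byLocation;
}
```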

A complementary direction targets automatic replenishment triggers that connect near-expiration telemetry with classical reorder points for fast-moving goods. When remaining shelf time and on-hand quantity cross a jointly defined risk threshold, the system can propose a replenishment action with preferred substitutes already ranked. This mechanism is particularly valuable for goods that retain sellable quality under a secondary classification after the initial expiration window, and it creates a bridge between recognition outputs and inventory execution.
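One way to combine the two signals is to count only stock that is still expected to be sellable and compare it with a classical reorder point (expected demand over the lead time plus safety stock). The sketch below follows that idea; the two-day sellability cutoff, the order-up-to rule, the function names, and all field names are illustrative assumptions, and substitute ranking is left out.

```javascript
// Classical reorder point: expected demand over the lead time plus safety stock.
function reorderPoint({ dailyDemand, leadTimeDays, safetyStock }) {
  return dailyDemand * leadTimeDays + safetyStock;
}

// Count only lots expected to remain sellable for at least `minSellableDays`, and propose
// a replenishment when that effective stock has fallen to the reorder point or below.
function replenishmentProposal(lots, params, minSellableDays = 2) {
  const effective = lots
    .filter((lot) => lot.daysLeft >= minSellableDays)
    .reduce((sum, lot) => sum + lot.quantity, 0);
  const rop = reorderPoint(params);
  if (effective > rop) return null;
  // Illustrative order-up-to rule: restore the reorder point plus one lead time of demand.
  const quantity = Math.ceil(rop - effective + params.dailyDemand * params.leadTimeDays);
  return { effectiveOnHand: effective, reorderPoint: rop, quantity };
}
```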

Price management for quality transitions follows naturally from these signals and supports retail policies that discount products as they approach the expiration date or when they shift into a secondary quality tier. The application can publish a daily candidate file with recommended markdown percentages tied to days left and historical sell-through, which gives managers a concrete starting point. A closed loop can then learn discount elasticity at the category level and refine the recommendations over time without manual tuning, which stabilizes outcomes across stores with different traffic patterns.
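A markdown recommendation of this kind can start from a simple ladder keyed by days left. In the sketch below, the tier boundaries, discount levels, and the damping applied when recent sell-through is already high are hypothetical placeholders that a closed-loop learner would later replace; expired items are deliberately excluded, since their handling depends on whether the printed date is a use-by or a best-before date. `recommendedMarkdown` is an illustrative name.

```javascript
// Hypothetical markdown ladder: [maximum days left, base discount fraction].
const MARKDOWN_LADDER = [
  [0, 0.5],
  [1, 0.3],
  [3, 0.15],
];

// Recommended discount in percent. Strong recent sell-through (0..1) damps the
// discount by up to half; both the ladder and the damping rule are assumptions.
function recommendedMarkdown(daysLeft, sellThrough = 0) {
  if (daysLeft < 0) return null; // expired items need a separate, date-type-specific policy
  const tier = MARKDOWN_LADDER.find(([maxDays]) => daysLeft <= maxDays);
  if (!tier) return 0; // too far from expiry to discount
  const damping = 1 - 0.5 * Math.min(Math.max(sellThrough, 0), 1);
  return Math.round(tier[1] * damping * 100);
}
```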

User interaction can also become confidence-aware so that human attention is requested only when it adds measurable value. A brief confirmation prompt can appear when the classifier confidence is low or when OCR returns multiple plausible dates, which keeps the default path fast while preserving accuracy in the hard cases observed during evaluation. If the first single-view capture fails to yield a clean date, the client can automatically switch to multi-view capture and fuse the additional evidence without requiring the user to restart the task.
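The decision logic can be summarized in a few lines. This sketch assumes a hypothetical minimum confidence of 0.5, a list of dates returned by OCR, and a flag indicating whether the product category normally carries a printed date, so that unlabeled produce falls through to the category estimate instead of triggering multi-view capture; `nextStep` and its field names are illustrative.

```javascript
// Decide the next interaction step after a single-view capture.
function nextStep({ confidence, ocrDates, expectsPrintedDate }, { minConfidence = 0.5 } = {}) {
  const distinct = new Set(ocrDates).size;
  // No date read where one is expected: gather more views before asking anything.
  if (distinct === 0 && expectsPrintedDate) return "multi-view-capture";
  // Low classifier confidence or conflicting dates: ask the user to confirm.
  if (confidence < minConfidence || distinct > 1) return "confirm";
  return "accept";
}
```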

Finally, household engagement features can turn accurate recognition into sustained behavior change with minimal friction. Shared goals, streaks, and waste-reduction badges can reflect items consumed before the expiration date and make progress visible to all members of a household. Reminders can pair with simple meal suggestions derived from items that are nearing the end of their window, which increases adherence without additional data entry. Together these enhancements keep the system user-friendly and low-cost while raising precision and trust across both consumer and operational settings.

Supplementary Materials

The supporting information can be downloaded at: https://www.figma.com/design/Ysm1cMH2C3IfnDfVrPnX3z/Expiry?node-id=0-1&t=biC8hViNmFrfXm6f-1 (accessed on 24 July 2025).

Author Contributions

Conceptualization, O.D., G.-L.G. and B.-I.L.; methodology, O.D., G.-L.G. and B.-I.L.; software, O.D., G.-L.G. and B.-I.L.; validation, G.-L.G. and B.-I.L.; formal analysis, O.D., G.-L.G. and B.-I.L.; investigation, O.D., G.-L.G. and B.-I.L.; resources, O.D., G.-L.G. and B.-I.L.; data curation, G.-L.G. and B.-I.L.; writing—original draft preparation, O.D., G.-L.G. and B.-I.L.; writing—review and editing, O.D., G.-L.G. and B.-I.L.; supervision, O.D.; project administration, O.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Implementation Excerpts

Appendix A provides concise implementation excerpts that support reproducibility. It includes three listings covering cloud-function orchestration for image analysis and persistence, the OCR expiry-date parser with validation, and the category-based shelf-life estimator with derived status fields, together with brief notes on configuration and environment settings.

| Listing A1. Cloud-Function Orchestration for Image Analysis and Persistence. |

```javascript
// functions/index.js
import { onObjectFinalized } from "firebase-functions/v2/storage";
import { initializeApp } from "firebase-admin/app";
import { getFirestore } from "firebase-admin/firestore";
import { getStorage } from "firebase-admin/storage";
import vision from "@google-cloud/vision";

// Helpers defined in Listings A2 and A3
import { extractExpiryDate } from "./lib/expiryFromOcr.js";
import { estimateExpiryDate, guessCategory, computeDaysLeft, statusFlag } from "./lib/categoryEta.js";

initializeApp();
const db = getFirestore();
const storage = getStorage();
const visionClient = new vision.ImageAnnotatorClient();

export const analyzeProductImages = onObjectFinalized({ region: "us-central1" }, async (event) => {
  const file = event.data;
  const path = file?.name ?? "";

  // Only process raw uploads at users/{uid}/raw/{imgId}.jpg (or .jpeg)
  if (!/^users\/[^/]+\/raw\/[^/]+\.jpe?g$/i.test(path)) return;

  // Load the image bytes from Cloud Storage
  const [bytes] = await storage.bucket(file.bucket).file(path).download();

  // Single Vision API request covering labels, objects, and OCR
  const [annot] = await visionClient.annotateImage({
    image: { content: bytes },
    features: [
      { type: "LABEL_DETECTION", maxResults: 30 },
      { type: "OBJECT_LOCALIZATION" },
      { type: "TEXT_DETECTION" },
    ],
  });

  // Normalize primary fields
  const labelAnn = annot.labelAnnotations ?? [];
  const ocrText = annot.fullTextAnnotation?.text ?? "";
  const category = guessCategory(labelAnn);
  const name = normalizeName(labelAnn, category); // simple heuristic: first non-empty label

  // Prefer a printed date; fall back to the category estimate when OCR yields no reliable date
  let expiry = extractExpiryDate(ocrText);
  if (!expiry) expiry = estimateExpiryDate(category);
  const daysLeft = computeDaysLeft(expiry);
  const status = statusFlag(daysLeft);

  // Derive the Firestore document path users/{uid}/items/{imgId}
  const [, uid, , filename] = path.split("/"); // ["users", uid, "raw", "{imgId}.jpg"]
  const imgId = filename.replace(/\.[^.]+$/, "");

  // Template literal (backticks) so uid and imgId are interpolated
  await db.doc(`users/${uid}/items/${imgId}`).set(
    { name, category, quantity: 1, expiryDate: expiry, daysLeft, status, source: "photo", updatedAt: Date.now() },
    { merge: true },
  );
});

// Simple name normalizer (kept inline for clarity)
function normalizeName(labels, fallbackCategory) {
  const top = labels.find((l) => (l.description || "").length > 0);
  return top?.description ?? fallbackCategory ?? "Item";
}
```
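The storage-path convention used in Listing A1 can be isolated as a pure function, which makes both the upload filter and the users/{uid}/raw/{imgId}.jpg to users/{uid}/items/{imgId} mapping testable without deploying the function. The following is a minimal sketch under that assumption; the helper name `parseRawUploadPath` is hypothetical and does not appear in the prototype.

```javascript
// Hypothetical helper: mirrors the path filter and document-path derivation
// of Listing A1 as a pure function that can be unit-tested locally.
const RAW_UPLOAD_RE = /^users\/([^/]+)\/raw\/([^/]+)\.jpe?g$/i;

function parseRawUploadPath(path) {
  // Returns null for anything that is not a raw JPEG upload, so a caller
  // can exit early in the same way the Cloud Function does.
  const m = RAW_UPLOAD_RE.exec(path ?? "");
  if (!m) return null;
  const [, uid, imgId] = m;
  return { uid, imgId, docPath: `users/${uid}/items/${imgId}` };
}
```

For example, `parseRawUploadPath("users/u42/raw/IMG_001.jpg")` yields the document path `users/u42/items/IMG_001`, while thumbnails, PNG files, or nested folders return `null`. As in Listing A1, only the final extension is stripped, so `photo.v2.jpeg` maps to the identifier `photo.v2`.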

Listing A2. OCR Expiration-Date Extraction and Validation.

```javascript
// functions/lib/expiryFromOcr.js
// Returns an ISO 8601 timestamp for the first valid, non-past date found in
// the OCR text, or null if none is found.
export function extractExpiryDate(textRaw) {
  const text = (textRaw || "").toLowerCase(); // lowercased, so no /i flag is needed

  // Numeric date patterns first, then cue phrases. Each pattern has exactly
  // one capturing group, which holds the date candidate.
  const patterns = [
    /\b(\d{1,2}[./-]\d{1,2}[./-]\d{4})\b/g, // 12/09/2025
    /\b(\d{1,2}[./-]\d{1,2}[./-]\d{2})\b/g, // 12-09-25
    /\b(20\d{2}-\d{2}-\d{2})\b/g,           // ISO-like YYYY-MM-DD
    /best\s*before[:\s]*(\S+)/g,
    /use\s*by[:\s]*(\S+)/g,
    /exp(?:iry)?\s*date[:\s]*(\S+)/g,       // (?:iry) is non-capturing, so m[1] is the date
  ];

  for (const rx of patterns) {
    let m;
    while ((m = rx.exec(text)) !== null) {
      const d = tryParseDate(m[1]);
      if (d && isFuture(d)) return d.toISOString();
    }
  }
  return null;
}

function tryParseDate(s) {
  // Day-first DD/MM/YYYY, DD.MM.YYYY, or DD-MM-YYYY; two-digit years map to 20YY
  let m = s.match(/^(\d{1,2})[./-](\d{1,2})[./-](\d{2}|\d{4})$/);
  if (m) return validDate(m[3].length === 2 ? 2000 + +m[3] : +m[3], +m[2], +m[1]);

  // ISO-like YYYY-MM-DD. Other strings are rejected rather than passed to
  // Date.parse, whose handling of non-ISO input is implementation-specific.
  m = s.match(/^(\d{4})-(\d{2})-(\d{2})$/);
  if (m) return validDate(+m[1], +m[2], +m[3]);
  return null;
}

function validDate(y, mo, d) {
  // Local-midnight date; reject rollovers such as 31/02 silently becoming 3 March
  const date = new Date(y, mo - 1, d);
  const ok = date.getFullYear() === y && date.getMonth() === mo - 1 && date.getDate() === d;
  return ok ? date : null;
}

function isFuture(d) {
  // Discard past dates (e.g., production dates or stale misreads)
  const today = new Date();
  today.setHours(0, 0, 0, 0);
  return d.getTime() >= today.getTime();
}
```
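The validation step matters because the JavaScript `Date` constructor silently normalizes out-of-range components: `new Date(2025, 1, 31)` yields 3 March 2025 rather than an error, so an OCR misread such as "31/02/2025" would otherwise become a plausible-looking expiry date. The sketch below shows the day-first parse with a round-trip check in isolation. It assumes EU-style day-first labels, and the function name `parseDayFirstDate` and its calendar-date string output are illustrative choices rather than part of the prototype.

```javascript
// Hypothetical sketch: parse DD/MM/YYYY, DD.MM.YY, or DD-MM-YYYY (day-first)
// and reject impossible dates such as 31/02/2025 or 12/13/2025.
// Works in UTC so the result does not depend on the server's time zone.
function parseDayFirstDate(s) {
  const m = /^(\d{1,2})[./-](\d{1,2})[./-](\d{2}|\d{4})$/.exec(String(s).trim());
  if (!m) return null;
  const day = Number(m[1]);
  const month = Number(m[2]);
  // Two-digit years are assumed to fall in 2000-2099.
  const year = m[3].length === 2 ? 2000 + Number(m[3]) : Number(m[3]);
  const d = new Date(Date.UTC(year, month - 1, day));
  // Round-trip check: Date.UTC rolls over out-of-range values, so the
  // components must read back unchanged for the input to be a real date.
  if (d.getUTCFullYear() !== year || d.getUTCMonth() !== month - 1 || d.getUTCDate() !== day) {
    return null;
  }
  return d.toISOString().slice(0, 10); // calendar date as "YYYY-MM-DD"
}
```

Returning a calendar-date string instead of a `Date` instant is one way to avoid the off-by-one-day shift that appears when a local-midnight date is serialized with `toISOString()` on a machine whose time zone is ahead of UTC.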

Listing A3. Category-Based Shelf-Life Estimation and Derived Status Fields.

```javascript
// functions/lib/categoryEta.js

// Indicative expiry from conservative per-category shelf-life priors (days from today)
export function estimateExpiryDate(category) {
  const shelf = { Dairy: 7, Meat: 3, Fish: 2, Bread: 3, Fruit: 5, Vegetable: 5, Snack: 30, Beverage: 180, Canned: 730, Frozen: 90, Other: 30 };
  const days = shelf[category] ?? shelf.Other;
  const d = new Date();
  d.setDate(d.getDate() + days);
  return d.toISOString();
}

// Keyword rules over Vision label descriptions; the first matching rule wins
export function guessCategory(labelAnnotations) {
  const names = (labelAnnotations || []).map((l) => (l.description || "").toLowerCase());
  const has = (rx) => names.some((n) => rx.test(n));
  if (has(/milk|yogurt|cheese/)) return "Dairy";
  if (has(/chicken|beef|pork|meat/)) return "Meat";
  if (has(/fish|salmon|tuna/)) return "Fish";
  if (has(/bread|bakery/)) return "Bread";
  if (has(/apple|banana|fruit/)) return "Fruit";
  if (has(/lettuce|carrot|vegetable/)) return "Vegetable";
  if (has(/\bcan(ned)?\b/)) return "Canned"; // word boundaries: "candy" or "pecan" must not match
  if (has(/frozen/)) return "Frozen";
  if (has(/juice|\bwater\b|soda|beverage/)) return "Beverage"; // "\bwater\b" excludes "watermelon"
  if (has(/snack|chips|cracker/)) return "Snack";
  return "Other";
}

// Whole days until the date, rounded up; null if the date is missing or invalid
export function computeDaysLeft(isoDate) {
  const t = isoDate ? new Date(isoDate).getTime() : NaN;
  if (Number.isNaN(t)) return null;
  return Math.ceil((t - Date.now()) / (1000 * 60 * 60 * 24));
}

export function statusFlag(daysLeft) {
  if (daysLeft == null) return "unknown";
  if (daysLeft < 0) return "expired";
  if (daysLeft <= 2) return "near-expiry";
  return "ok";
}
```
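Because `computeDaysLeft` in Listing A3 reads `Date.now()` internally, its output changes from run to run, which makes the status thresholds awkward to test. A common remedy is to pass the reference time explicitly. The sketch below assumes that convention; `daysLeftAt` and `statusAt` are hypothetical names, and the two-day near-expiry threshold is copied from `statusFlag`.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Hypothetical variant of computeDaysLeft with an explicit clock (nowMs).
// Returns null when the date is missing or unparseable.
function daysLeftAt(isoDate, nowMs) {
  if (!isoDate) return null;
  const t = new Date(isoDate).getTime();
  if (Number.isNaN(t)) return null;
  return Math.ceil((t - nowMs) / MS_PER_DAY);
}

// Same thresholds as statusFlag in Listing A3, returned together with daysLeft.
function statusAt(isoDate, nowMs) {
  const daysLeft = daysLeftAt(isoDate, nowMs);
  if (daysLeft == null) return { daysLeft, status: "unknown" };
  if (daysLeft < 0) return { daysLeft, status: "expired" };
  if (daysLeft <= 2) return { daysLeft, status: "near-expiry" };
  return { daysLeft, status: "ok" };
}
```

With this shape, production code could pass `Date.now()` while tests pass a fixed timestamp, so boundary cases such as exactly two versus three days remaining stay reproducible.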

References

- Eurostat. Food Waste and Food Waste Prevention—Estimates. Eurostat Statistics Explained; European Commission: Brussels, Belgium, 2024.

- Too Good To Go. Available online: https://toogoodtogo.com/en (accessed on 24 July 2025).

- Munch. Bonapp. Available online: https://bonapp.eco/ (accessed on 24 July 2025).

- Olio. OlioApp. Available online: https://olioapp.com/ (accessed on 24 July 2025).

- NoWaste. Available online: https://www.nowasteapp.com/ (accessed on 24 July 2025).

- Al-Obadi, M.; Ayad, H.; Pokharel, S.; Ayari, M. Perspectives on food waste management: Prevention and social innovations. Sustain. Prod. Consum. 2022, 31, 190–208.

- Suprapto, A. Laws and Regulations Regarding Food Waste Management as a Function of Environmental Protection in a Developing Nation. Int. J. Crim. Justice Sci. 2022, 17, 223–237.

- Batool, F.; Kurniawan, T.; Mohyuddin, A.; Othman, M.; Aziz, F.; Al-Hazmi, H.; Goh, H.; Anouzla, A. Environmental impacts of food waste management technologies: A critical review of life cycle assessment (LCA) studies. Trends Food Sci. Technol. 2024, 143, 104287.

- Johansson, N. Why is biogas production and not food donation the Swedish political priority for food waste management? Environ. Sci. Policy 2021, 126, 60–64.

- Chia, D.; Yap, C.; Wu, S.; Berezina, E.; Aroua, M.; Gew, L. A systematic review of country-specific drivers and barriers to household food waste reduction and prevention. Waste Manag. Res. 2023, 42, 459–475.

- Bunditsakulchai, P.; Liu, C. Integrated Strategies for Household Food Waste Reduction in Bangkok. Sustainability 2021, 13, 7651.

- Parsa, A.; Van De Wiel, M.; Schmutz, U.; Taylor, I.; Fried, J. Balancing people, planet, and profit in urban food waste management. Sustain. Prod. Consum. 2024, 45, 203–215.

- Gong, L.; Thota, M.; Yu, M.; Duan, W.; Swainson, M.; Ye, X.; Kollias, S. A novel unified deep neural networks methodology for use by date recognition in retail food package image. Signal Image Video Process. 2020, 15, 449–457.

- Newsome, R.; Balestrini, C.; Baum, M.; Corby, J.; Fisher, W.; Goodburn, K.; Labuza, T.; Prince, G.; Thesmar, H.; Yiannas, F. Applications and Perceptions of Date Labeling of Food. Compr. Rev. Food Sci. Food Saf. 2014, 13, 745–769.

- Florea, V.; Rebedea, T. Expiry date recognition using deep neural networks. Int. J. User-Syst. Interact. 2020, 13, 1–17.

- UNEP DTU Partnership; United Nations Environment. Reducing Consumer Food Waste Using Green and Digital Technologies; UNEP DTU Partnership: New York, NY, USA, 2022.

- Too Good To Go. Impact Report; Too Good To Go: Copenhagen, Denmark, 2023.

- Olio. OlioApp. Available online: https://olioapp.com/en/our-impact/ (accessed on 12 September 2025).

- BonApp. BonApp Eco. Available online: https://bonapp.eco/intrebari-generale/ (accessed on 13 September 2025).

- Lidl Plus. Available online: https://www.lidl.ro/c/lidl-plus/s10019652 (accessed on 15 September 2025).

- Kaufland. Aplicația Kaufland. Available online: https://www.kaufland.ro/utile/aplicatia-kaufland.html (accessed on 15 September 2025).

- Carrefour. Act for Good. Available online: https://carrefour.ro/corporate/actforgood (accessed on 15 September 2025).

- Penny Romania. Aplicația Penny. Available online: https://www.penny.ro/descarca-aplicatia-penny?gad_campaignid=19605674399 (accessed on 15 September 2025).

- Carrefour Group. Tackling Food Waste: Carrefour Joins Forces with Too Good To Go; Carrefour Group: Bucharest, Romania, 2023.

- Penny Romania. PENNY Extinde Parteneriatul cu Bonapp Pentru Reducerea Risipei Alimentare; Penny Romania: Stefanestii de Jos, Romania, 2024.

- Mathisen, T.; Johansen, F. The impact of smartphone apps designed to reduce food waste on improving healthy eating, financial expenses, and personal food waste: Crossover pilot intervention trial studying students’ user experiences. JMIR Form. Res. 2022, 6, e38520.

- Peng, W.; Xu, J.; Wang, H.; Wang, Y. An efficient on-device OCR system for expiry date detection using FCOS and CRNN. Algorithms 2025, 18, 286.

- Wang, X.-F.; He, Z.-H.; Wang, K.; Wang, Y.-F.; Zou, L.; Wu, Z.-Z. A survey of text detection and recognition algorithms based on deep learning. Neurocomputing 2023, 556, 126702.

- Takeuchi, Y.; Suzuki, K. Expiration Date Recognition System Using Spatial Transformer Network for Visually Impaired. In Computers Helping People with Special Needs. ICCHP 2024, Linz, Austria, 8–12 July 2024. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2024.

- Gupta, M.; Jacobson, N.; Garcia, E. OCR binarization and image pre-processing for searching historical documents. Pattern Recognit. 2007, 40, 389–397.

- Maliński, K.; Okarma, K. Analysis of Image Preprocessing and Binarization Methods for OCR-Based Detection and Classification of Electronic Integrated Circuit Labeling. Electronics 2023, 12, 2449.

- Fraccascia, L.; Nastasi, A. Mobile apps against food waste: Are consumers willing to use them? A survey research on Italian consumers. Resour. Conserv. Recycl. Adv. 2023, 18, 200150.

- Lubura, J.; Pezo, L.; Sandu, M.; Voronova, V.; Donsì, F.; Žlabur, J.Š.; Ribić, B.; Peter, A.; Šurić, J.; Brandić, I.; et al. Food Recognition and Food Waste Estimation Using Convolutional Neural Network. Electronics 2022, 11, 3746.

- Liu, D.; Zuo, E.; Wang, D.; He, L.; Dong, L.; Lu, X. Deep Learning in Food Image Recognition: A Comprehensive Review. Appl. Sci. 2025, 15, 7626.

- Dai, X. Robust deep-learning based refrigerator food recognition. Front. Artif. Intell. 2024, 7, 1442948.

- Google LLC. Firebase. Available online: https://firebase.google.com/ (accessed on 7 August 2025).

- Google LLC. Use a TensorFlow Lite Model for Inference with ML Kit on Android. Available online: https://firebase.google.com/docs/ml/android/use-custom-models (accessed on 15 September 2025).

- Clark, Q.; Kanavikar, D.; Clark, J.; Donnelly, P. Exploring the potential of AI-driven food waste management strategies used in the hospitality industry for application in household settings. Front. Artif. Intell. 2025, 7, 1429477.

- Google LLC. Text Recognition. Available online: https://firebase.google.com/docs/ml-kit/recognize-text (accessed on 6 August 2025).

- Google LLC. Image Labeling. Available online: https://firebase.google.com/docs/ml-kit/label-images (accessed on 7 August 2025).

- Google LLC. Image Labeling. Available online: https://firebase.google.com/docs/ml/label-images?authuser=0 (accessed on 15 September 2025).

- Google LLC. Barcode Scanning. Available online: https://developers.google.com/ml-kit/vision/barcode-scanning (accessed on 6 August 2025).

- Mastorakis, G.; Kopanakis, I.; Makridis, J.; Chroni, C.; Synani, K.; Lasaridi, K.; Abeliotis, K.; Louloudakis, I.; Daliakopoulos, I.N.; Manios, T. Managing Household Food Waste with the FoodSaveShare Mobile Application. Sustainability 2024, 16, 2800.

- Lim, V.; Funk, M.; Marcenaro, L.; Regazzoni, C.; Rauterberg, M. Designing for action: An evaluation of Social Recipes in reducing food waste. Int. J. Hum.-Comput. Stud. 2017, 100, 18–32.

- Grigorcea, G.-L.; Lefter, B.-I. Expiry App Interface Prototype. Available online: https://www.figma.com/design/Ysm1cMH2C3IfnDfVrPnX3z/Expiry?node-id=0-1&t=biC8hViNmFrfXm6f-1 (accessed on 1 October 2025).

- Firebase. Cloud Functions for Firebase. Available online: https://firebase.google.com/docs/functions (accessed on 15 September 2025).

- Firebase. Firebase Authentication. Available online: https://firebase.google.com/docs/auth (accessed on 15 September 2025).

- European Food Safety Authority (EFSA). Listeria Monocytogenes. Available online: https://www.efsa.europa.eu/en/topics/topic/listeria (accessed on 10 September 2025).

- European Commission. Date Marking. Directorate-General for Health and Food Safety. Available online: https://food.ec.europa.eu/food-safety/food-waste/eu-actions-against-food-waste/date-marking-and-food-waste-prevention_en (accessed on 10 September 2025).

- European Commission. Commission Notice on the Application of Regulation (EU) No 1169/2011 on the Provision of Food Information to Consumers. Official Journal of the European Union; European Union: Brussels, Belgium, 2017.

- Drive Research. The State of Grocery Shopping; Drive Research: Syracuse, NY, USA, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).