This study focuses on high-speed road surface information acquisition technology driven by binocular vision, developing a low-latency embedded system. Based on demand analysis, the selection of core components was completed, including the OV2710 binocular CMOS image sensor, DDR3 SDRAM image caching module, HDMI image transmission interface, and Pro FPGA core control system. In this study, methods for enhancing image clarity during high-speed acquisition were also explored. The designed system was stably installed on an acquisition vehicle to validate its high-speed image acquisition performance. Additionally, a pixel-level image fusion algorithm was designed to process the acquired images, and the results were evaluated and analyzed using objective quality assessment methods.

2.1. Design of a High-Speed Road Surface Image Acquisition System

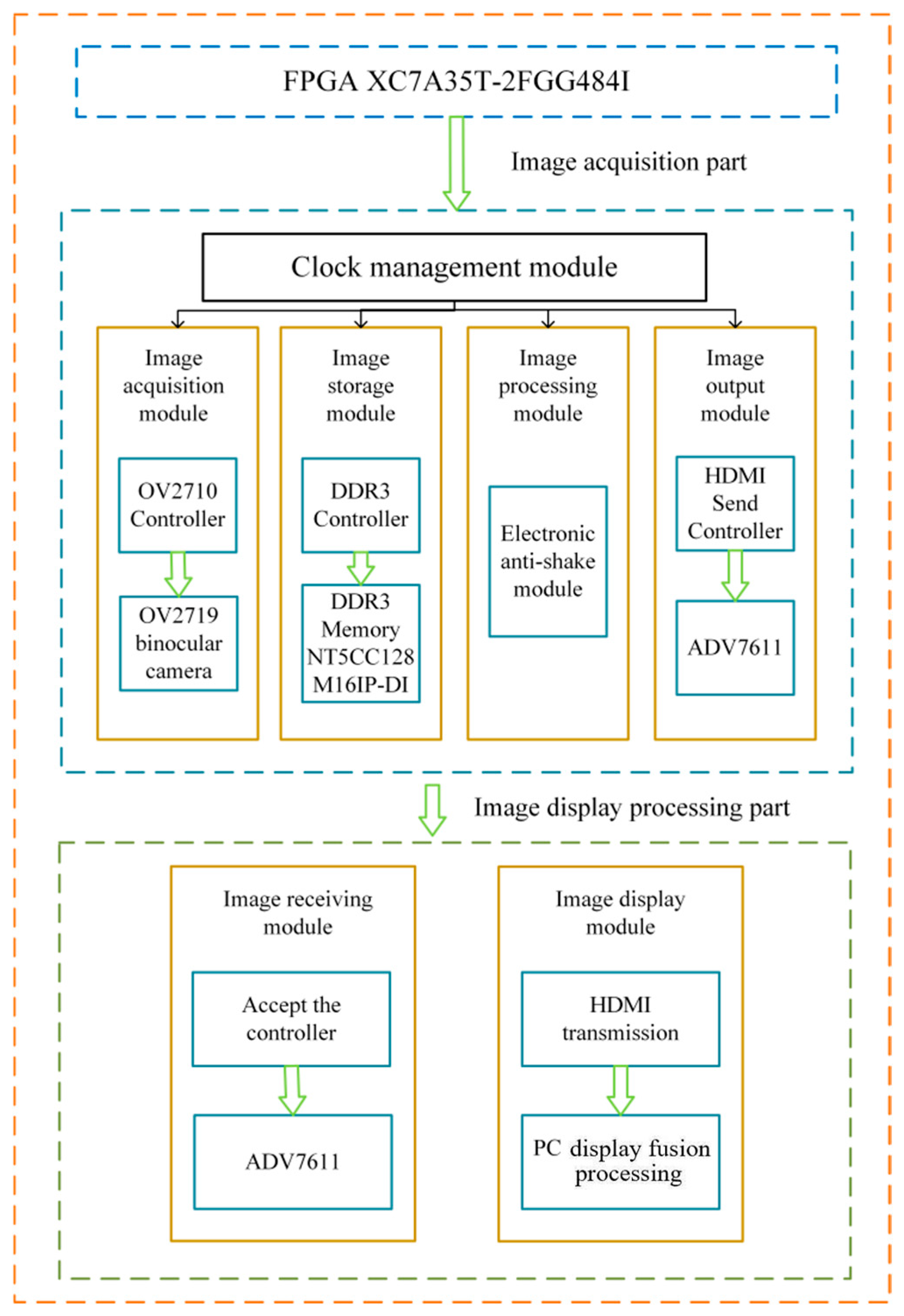

This system utilizes an FPGA hardware development platform to acquire, process, transmit, and store road surface images while the acquisition vehicle operates at high speeds and then transmits the images in real time to a PC for fusion processing. The entire system mainly consists of two parts: the road surface image acquisition module, which includes the FPGA hardware processing platform and binocular image sensors, and the image display and processing module. The acquisition module is responsible for high-speed image capture, whereas the display and processing module comprises a computer that handles real-time image transmission, reception, and fusion processing. The two modules are connected via an HDMI interface.

Figure 1 shows the overall architectural design of the system [14].

- (1)

Image Acquisition Module: Initializes the binocular OV2710 image sensors and configures their registers.

- (2)

Image Storage Module: Caches the high-speed road surface images in real time.

- (3)

Image Processing Module: Deblurs the road surface images in real time.

- (4)

Image Transmission Module: Packages and transmits the processed image data.

- (5)

Image Reception Module: Extracts the road surface image data.

- (6)

Image Display Module: Displays the acquired road surface images on a PC and performs image fusion processing.

The image acquisition module of this system is built around an image sensor, whose selection directly affects the functionality of the high-speed road surface image acquisition system. The OV2710 chosen for this system is a high-performance CMOS image sensor with low power consumption, compact size, and high resolution. It also supports the MIPI interface, which offers significant advantages for high-speed data transmission.

The selected binocular OV2710 module consists of two OV2710 sensors with different device addresses and a binocular adapter board, connected to the FPGA via the MIPI CSI-2 interface. As Table 1, which compares the parameters of common cameras, shows, the MIPI CSI-2 interface used in the system significantly outperforms DVP and USB 3.0 for high-speed road surface image data transmission. It supports both high-speed and low-power modes, with transmission rates of 80–1000 Mbps, enabling faster and more efficient transfer of high-quality image data.
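To make the interface comparison concrete, the raw pixel data rate of one sensor can be compared with the upper end of the 80–1000 Mbps range quoted above. The sketch below assumes 1920 × 1080 output at 30 fps with 10-bit RAW pixels (a full-HD mode of the OV2710; the operating mode actually used is not stated in this section) and ignores CSI-2 packet headers and blanking, so real link usage is somewhat higher.

```python
def raw_data_rate_mbps(width, height, fps, bits_per_pixel):
    """Payload pixel data rate of one sensor in Mbps (no blanking or protocol overhead)."""
    return width * height * fps * bits_per_pixel / 1e6

# Assumed operating point (hypothetical for this sketch): 1080p, 30 fps, RAW10.
per_sensor = raw_data_rate_mbps(1920, 1080, 30, 10)
binocular_total = 2 * per_sensor  # two OV2710 sensors

MIPI_MAX_MBPS = 1000  # upper end of the 80–1000 Mbps range cited in the text

print(f"per sensor:   {per_sensor:.2f} Mbps")
print(f"both sensors: {binocular_total:.2f} Mbps")
print(f"one sensor fits within {MIPI_MAX_MBPS} Mbps: {per_sensor <= MIPI_MAX_MBPS}")
```

Under these assumptions each sensor needs about 622 Mbps of payload, which sits inside the quoted MIPI range, whereas the combined stream of both sensors would not fit on a single link of that rate.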

In this system, the image storage module is mainly responsible for real-time caching of road surface images. DDR3 SDRAM [15] has a more complex and refined internal structure and operating mode than its predecessors. It performs two data transfers per clock cycle and therefore achieves a higher data transfer rate than traditional SDRAM. The system accordingly employs the NT5CC128M16IP-DI DDR3 SDRAM chip, which operates at up to 800 MHz and provides a maximum bandwidth of 25.6 Gbps at a supply voltage of 1.35 V. This chip meets the high-capacity and high-bandwidth memory requirements of high-speed acquisition. The physical system is shown in Figure 2.
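The 25.6 Gbps figure follows from the DDR3 transfer scheme: an 800 MHz clock, two transfers per cycle, and the 16-bit data bus of this x16 part. The sketch below reproduces that arithmetic and then estimates how much of the peak a frame buffer would use, under the hypothetical load of two 1920 × 1080, 30 fps streams stored at 16 bits per pixel and each written once and read once. Refresh, bank conflicts and controller efficiency are ignored, so real headroom is smaller.

```python
def ddr_peak_gbps(clock_mhz, transfers_per_cycle, bus_width_bits):
    """Theoretical peak bandwidth of a DDR interface in Gbps."""
    return clock_mhz * 1e6 * transfers_per_cycle * bus_width_bits / 1e9

peak = ddr_peak_gbps(800, 2, 16)  # NT5CC128M16IP-DI figures from the text

# Hypothetical load: 1080p at 30 fps per sensor, each pixel stored as 16 bits.
stream_gbps = 1920 * 1080 * 30 * 16 / 1e9
load = 2 * stream_gbps * 2        # 2 sensors x (one write + one read per frame)
utilization = load / peak

print(f"peak bandwidth: {peak:.1f} Gbps")
print(f"frame-buffer load: {load:.2f} Gbps ({utilization:.1%} of peak)")
```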

2.2. Methods for Enhancing Clarity in High-Speed Road Surface Acquisition Systems

Because the system acquires images while the vehicle travels at high speed, the acquired images require clarity enhancement, and the primary issue to address is anti-shake stabilization. Therefore, in this study, a hardware mounting device was used to install the system on the acquisition vehicle. Hardware fixation stabilizes equipment by physical means to reduce shaking and vibration. In high-speed image acquisition, it is commonly used to keep the imaging device stable during capture so that clear, stable images or videos are obtained. Common hardware fixation methods include tripods, stabilizers, and gimbals. Although these traditional methods effectively reduce the shaking and vibration of image acquisition devices, they cannot shield the system from external environmental interference, and they add mechanical components that increase system weight and cost. They are therefore unsuitable for high-speed road surface image acquisition systems.

Considering the system’s appearance and dimensions, this study designed a novel protective casing that shields the system from external interference and, through adhesive fixation, also reduces shaking and vibration. To avoid increasing the weight and cost of the system, the casing material must be durable, damage-resistant, lightweight, and inexpensive. Acrylic sheets are common transparent plastics that offer high transparency, wear resistance, and weather resistance; with special treatment, they can withstand erosion by ultraviolet rays and other natural environmental factors. Acrylic sheets also have excellent processing properties and can be both machined and thermoformed, as shown in Figure 3.

Therefore, the hardware stabilization shell of the system was constructed from acrylic. The acrylic shell has excellent weather resistance, withstanding ultraviolet radiation, rain, and temperature fluctuations, and it resists yellowing and aging during long-term outdoor use. Its high surface hardness provides scratch resistance and preserves its gloss and transparency over extended periods. Acrylic maintains stable performance over a wide temperature range, from −40 °C to 90 °C, making it suitable for various climatic conditions. It also has high mechanical strength and can withstand moderate pressures and loads without deforming or cracking, which suits it to harsh operating environments. The shell adopts a curved (arched) design that distributes external forces along the arc so that they are spread evenly across the entire structure. A thin-walled structure reduces weight while maintaining sufficient strength, and reinforcing ribs inside the shell increase structural rigidity and stability, improving its resistance to deformation and fracture. To connect the shell securely to the main system structure without damaging it, the shell dimensions were designed to closely match those of the system so that the two form a single unit. For safety and stability, the shell was firmly bonded to the rear of the acquisition vehicle with adhesive, which joins the two surfaces through intermolecular forces and provides good sealing and adequate strength.
This bonding adds a margin of safety and, through the tight connection, keeps the system stable while the acquisition vehicle travels at high speed, reducing the motion blur caused by vibrations and bumps. The mounting position of the system is shown in Figure 4.

In the design of the hardware stabilization device of the system, the shielding of external environmental interference during high-speed image acquisition was considered. Encapsulating the system in an acrylic shell effectively prevents interference from dust, noise, moisture, and other factors in complex external environments that can affect image quality. This not only enhances the accuracy and reliability of high-speed image acquisition and improves the overall performance of the system but also provides a solid foundation for the system’s long-term stability and service life.

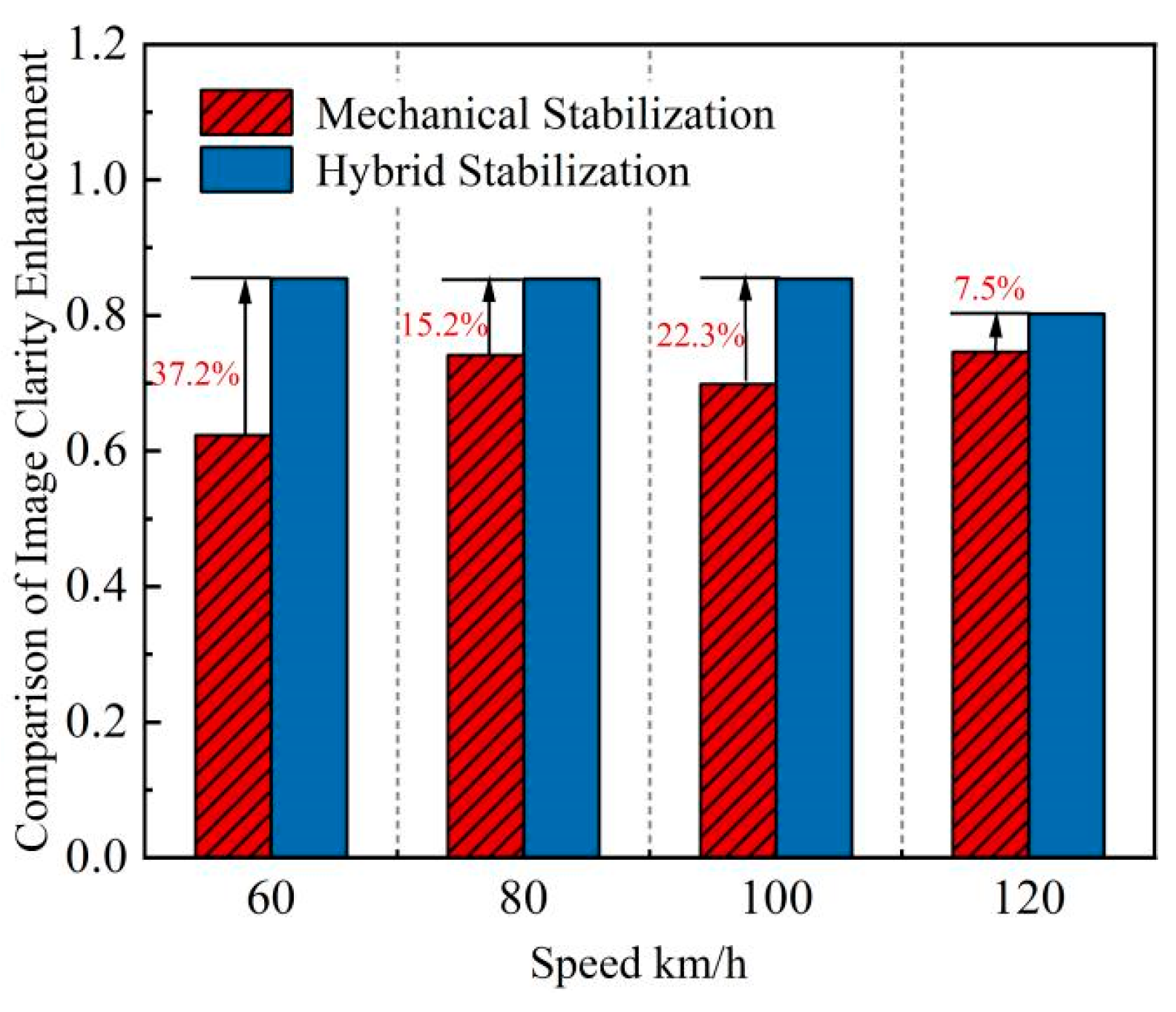

However, external mechanical stabilization alone ensures stability only during the image acquisition phase and cannot guarantee the accuracy of high-speed acquisition. An internal stabilization technique is therefore required that adds no weight or cost and stabilizes the images during the processing phase. For this reason, the system combines electronic image stabilization (EIS) with mechanical hardware stabilization. This hybrid approach provides a more comprehensive and robust anti-shake effect, achieving stabilization in both the acquisition and processing phases.

Electronic image stabilization (EIS) technology utilizes digital image processing to detect and compensate for shifts in the image sequences. It can compensate for not only optical system movements but also various other types of motion. Compared with traditional optical and mechanical stabilization methods, EIS offers advantages such as simplicity of operation, precise results, compact size, and low cost. By detecting and analyzing the motion in image sequences, it isolates global motion vectors and compensates for them during image display, achieving image stabilization and producing clear and stable images.

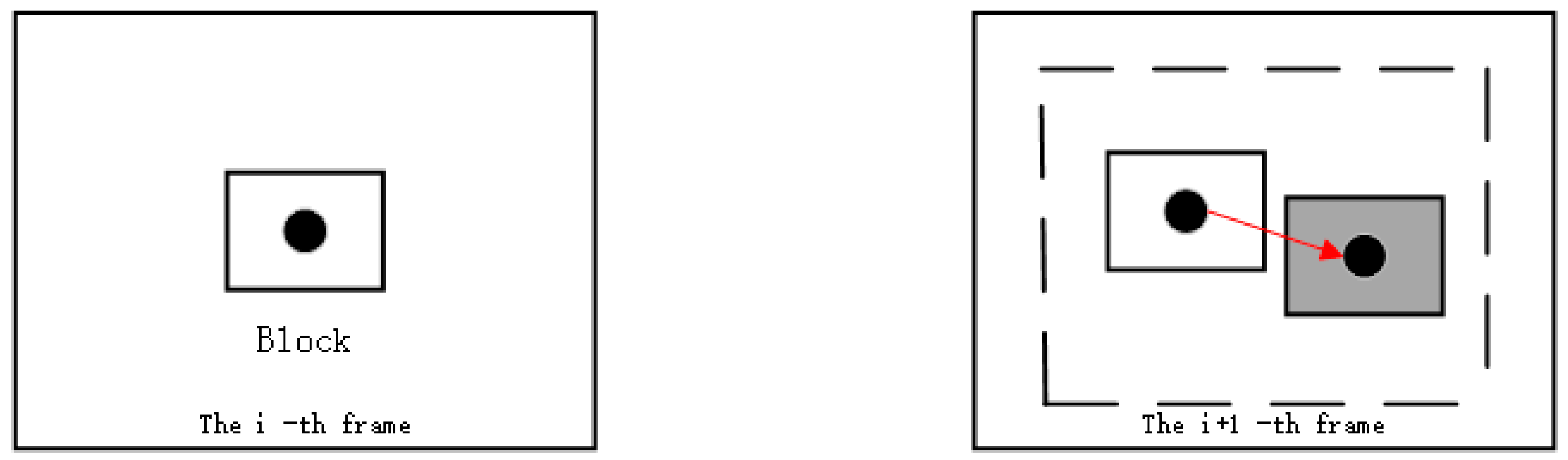

EIS technology mainly consists of three modules: a motion estimation module, a motion decision module, and a motion compensation module. The key technologies involved are global motion estimation, motion vector filtering, and image compensation correction. The motion estimation module mathematically estimates the global motion of the image sensor from the image sequence. The interframe difference method was adopted for this purpose, computing the interframe motion vectors by block matching. In Figure 5, the arrows represent the motion vectors from the ith frame to the (i + 1)th frame.

The motion decision module analyzes the motion vectors to distinguish the intended scanning motion of the system from abnormal motion during high-speed acquisition. Motion vector filtering extracts the abnormal jitter components while retaining the stable scanning components. A fixed block shape was then selected, with a block size of 57 pixels and a sequence length of 57 frames. Optimal block matching is achieved by minimizing an objective function expressed as

In the equation, i is the index of the pixels within the block, j is the index of the candidate (matchable) blocks, (Mx, My) are the centroid coordinates of the block to be matched, and $f_i(x, y)$ represents the pixel values within the block, where x and y are the pixel coordinates. For a given reference block, $\min\{d_j\}$ corresponds to the best-matched block.
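Because the objective function itself is not reproduced in this section, the sketch below assumes the common sum-of-absolute-differences (SAD) form, $d_j = \sum_i |f_i^{k}(x, y) - f_i^{k+1}(x + \Delta x_j, y + \Delta y_j)|$, evaluated for every candidate displacement j in a search window; the candidate with the smallest $d_j$ gives the block's motion vector. Block size, search range and function names are illustrative assumptions, not the system's settings.

```python
import numpy as np

def block_match_sad(prev, curr, top, left, block=16, search=7):
    """Full-search block matching: return the (dy, dx) displacement of the block
    at (top, left) in `prev` that minimises the SAD cost d_j in `curr`."""
    ref = prev[top:top + block, left:left + block].astype(np.int64)
    h, w = curr.shape
    best, best_cost = (0, 0), None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + block > h or x + block > w:
                continue  # candidate block would leave the frame
            cand = curr[y:y + block, x:x + block].astype(np.int64)
            cost = np.abs(ref - cand).sum()  # d_j for this candidate
            if best_cost is None or cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best, best_cost

# Usage: simulate a jittered frame by shifting a random frame 3 px down, 2 px left.
rng = np.random.default_rng(0)
frame0 = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
frame1 = np.roll(frame0, shift=(3, -2), axis=(0, 1))
mv, cost = block_match_sad(frame0, frame1, top=24, left=24)
print(mv, cost)  # (3, -2) 0
```

In practice several blocks would be matched per frame and a robust statistic such as their median taken as the global motion vector; that aggregation step is also an assumption here.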

The motion compensation module conducts a detailed analysis of the system’s global motion vectors, effectively extracting the jitter components from the global motion vectors and compensating for them in real time. Electronic image stabilization detects the relative motion between two frames, thereby isolating translational and rotational movements of the image sensor. Based on the motion vectors, the position of the current frame was adjusted to cancel out the jitter.
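The decision and compensation stages can be illustrated with a translation-only sketch. Here the intended scanning motion is assumed to be the low-frequency part of the accumulated trajectory, estimated with a moving-average filter (a stand-in for the unspecified motion vector filter); the jitter is the residual, and each frame is shifted back by it. Rotation, the window length and the integer-pixel shift are simplifying assumptions.

```python
import numpy as np

def jitter_from_motion(dx, window=5):
    """Split per-frame motion vectors (one axis) into smooth scanning motion and jitter.
    `window` must be odd so the filtered trajectory keeps its length."""
    trajectory = np.cumsum(dx)                         # accumulated global motion
    pad = window // 2
    padded = np.pad(trajectory, pad, mode="edge")      # avoid shrinking at the ends
    kernel = np.ones(window) / window
    smooth = np.convolve(padded, kernel, mode="valid")  # low-pass: intended motion
    return trajectory - smooth                         # high-frequency jitter

def compensate(frame, jitter_x, jitter_y):
    """Shift a frame back by the rounded jitter (integer-pixel, circular shift)."""
    return np.roll(frame, shift=(-int(round(jitter_y)), -int(round(jitter_x))), axis=(0, 1))

# Usage: steady 2 px/frame scan with a single 3 px jolt at frame 10.
dx = np.full(20, 2.0)
dx[10] += 3.0
j = jitter_from_motion(dx)
print(np.round(j, 2))  # near zero away from frame 10, non-zero around the jolt
```

A real implementation would warp with sub-pixel interpolation and handle borders instead of wrapping them, but the split into "keep the smooth path, cancel the residual" is the same.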

Real-time compensation of high-speed road surface images is crucial while the electronic image stabilization module is running. To coordinate this module with the image acquisition, image caching, and image output modules, the system includes a 20 ms delay counter. When acquisition begins, the image acquisition module is activated first and performs high-speed continuous acquisition of multiple frames. Meanwhile, the sensor input module receives data from the image sensor, and the image caching module stores the acquired image data in real time. The fixed delay gives each module sufficient time to finish processing the image data and prevents data conflicts or losses caused by excessively fast processing.
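A fixed delay like this is typically realized as a counter clocked by the FPGA system clock. The clock frequency is not stated in this section, so the sketch assumes 50 MHz purely for illustration and computes the terminal count and the minimum register width; the same arithmetic applies to whatever clock the design actually uses.

```python
def delay_counter(clock_hz, delay_s):
    """Clock cycles needed for a fixed delay, and the register width required
    to count from 0 up to (cycles - 1)."""
    cycles = round(clock_hz * delay_s)
    width = (cycles - 1).bit_length()
    return cycles, width

cycles, width = delay_counter(50_000_000, 0.020)  # assumed 50 MHz clock, 20 ms delay
print(cycles, width)  # 1000000 20
```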

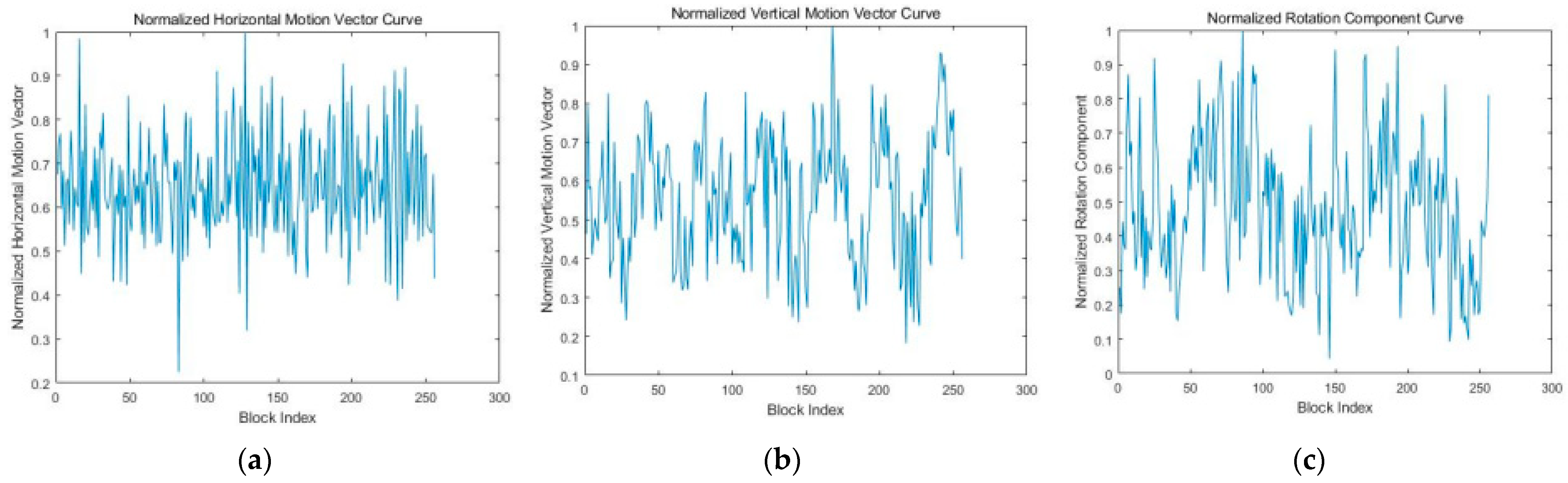

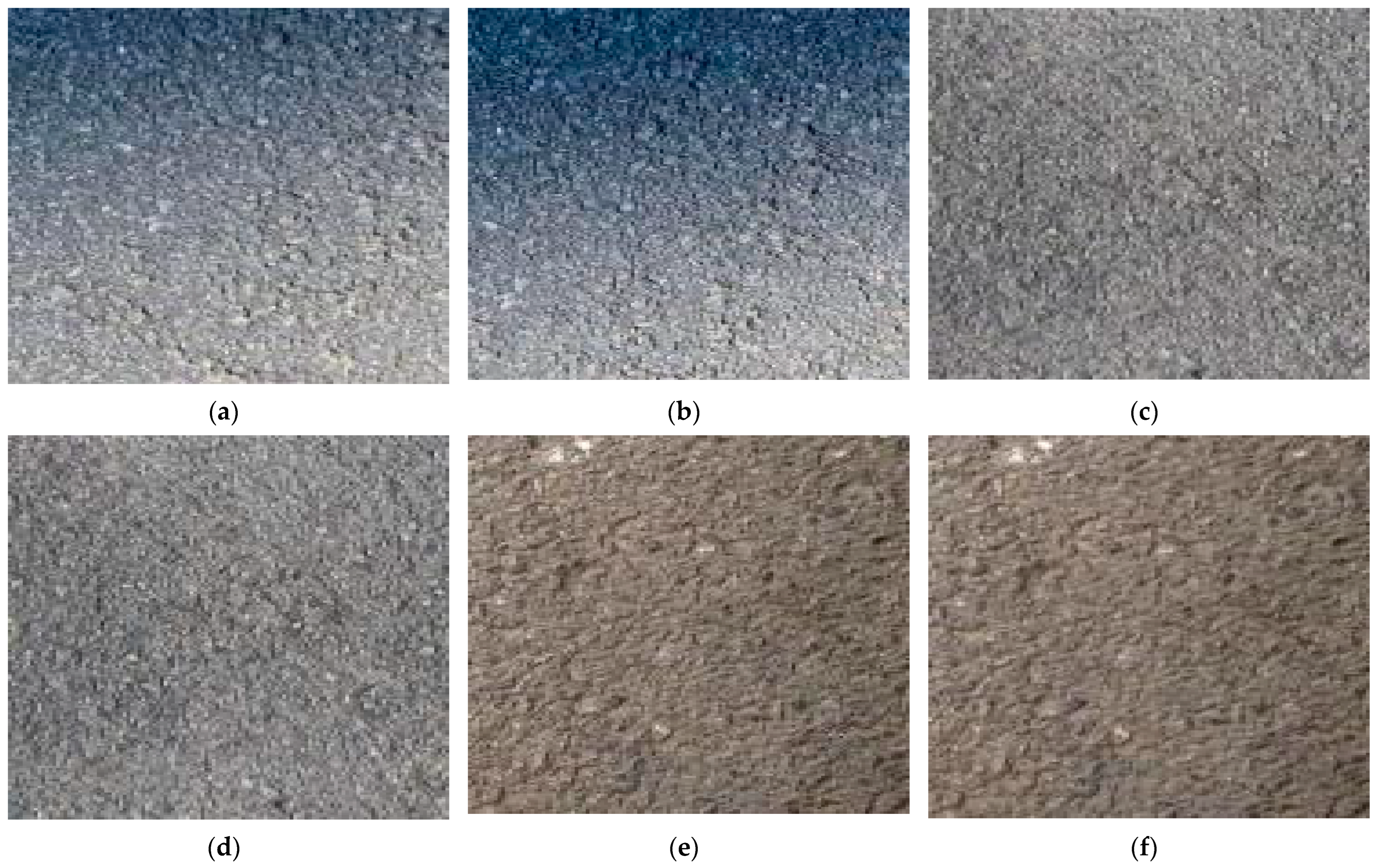

As shown in Figure 6a, the road surface image without anti-shake processing is blurred overall. Figure 7 shows that the high-speed road surface image contains horizontal, vertical, and rotational jitter components with large-amplitude, high-frequency fluctuations.

Figure 6b shows that image clarity improves and no visible blur remains after electronic image stabilization compensates for the jitter components in all directions. These results indicate that electronic image stabilization can effectively compensate for the horizontal, vertical, and rotational motion of the image in real time, and they confirm the feasibility of adopting this technique in the clarity enhancement module of the system.

To evaluate the quality of the images acquired by the system with hybrid anti-shake technology, the image clarity metric S was introduced. Objective methods for evaluating image clarity are classified as full-reference, reduced-reference, or no-reference. Because the road surface images are acquired at high speed and no distortion-free original image is available, a no-reference objective method is used to analyze their clarity. Following reblur theory, a reference image is generated by blurring the image under evaluation, and a full-reference comparison then measures how much information the reblurring removes: the greater the information loss, the sharper the original image [16]. By combining the no-reference structural clarity evaluation based on reblur theory with the structural similarity method, a clarity evaluation method suitable for high-speed road surface images was developed in the following steps:

- (1)

The image was converted to grayscale using the weighted average method, which retains its detail and structural information and facilitates subsequent analysis and processing:

$$I_{\text{gray}}(x, y) = 0.30\,r(x, y) + 0.59\,g(x, y) + 0.11\,b(x, y)$$

In the equation, $I_{\text{gray}}$ represents the output grayscale image; r, g, and b denote the red, green, and blue channel images of the original image, respectively, weighted by 0.30, 0.59, and 0.11; and (x, y) are the pixel coordinates of the image.

- (2)

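A 7 × 7 Gaussian filter with variance σ² = 6 was applied to the image under evaluation to produce the reblurred reference image. The Gaussian convolution kernel G is defined as

$$G(x, y) = \frac{1}{2\pi\sigma^2}\exp\!\left(-\frac{x^2 + y^2}{2\sigma^2}\right), \qquad x, y \in \{-3, \dots, 3\}$$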

- (3)

When extracting the gradient images of the evaluated and reference images using the Canny operator, noise removal was performed to reduce noise interference and ensure accurate edge information extraction.

- (4)

The structural similarity index (SSIM) between the evaluated image X and the reference image Y (using the gradient images from step (3)) was calculated as

$$\mathrm{SSIM}(X, Y) = \frac{(2\mu_X\mu_Y + C_1)(2\sigma_{XY} + C_2)}{(\mu_X^2 + \mu_Y^2 + C_1)(\sigma_X^2 + \sigma_Y^2 + C_2)}$$

SSIM, which typically takes values between 0 and 1, measures the similarity between two images. Here, a higher value means that reblurring changed the image little, which indicates that the evaluated image is blurrier.

In the equation, µX and µY represent the pixel means of the two images, σX and σY denote their pixel standard deviations (σX² and σY² are the variances), σXY is the pixel covariance of the images, and C1 and C2 are small constants that stabilize the division when the denominators approach zero. C1, used in the luminance term, is typically defined as C1 = (k1 × L)² with k1 = 0.01, where L is the dynamic range of the pixel values (255 for 8-bit images). Similarly, C2, used in the contrast term, is defined as C2 = (k2 × L)², where k2 is generally set to 0.03.

- (5)

The clarity S of the high-speed acquired image was then derived from the SSIM result. S ranges from 0 to 1, and a value closer to 1 indicates higher image clarity.
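The five steps can be chained as in the sketch below. Two parts are assumptions rather than the method described above: a Sobel gradient magnitude stands in for the Canny-based gradient extraction, and S is taken as 1 − SSIM between the gradient images of the evaluated and reblurred images, computed as a single global window. That form is consistent with the stated 0 to 1 range but is not given explicitly in the text. L = 255 assumes 8-bit input.

```python
import numpy as np

def to_gray(rgb):
    """Step (1): weighted-average grayscale with weights 0.30, 0.59, 0.11."""
    rgb = np.asarray(rgb, dtype=float)
    return 0.30 * rgb[..., 0] + 0.59 * rgb[..., 1] + 0.11 * rgb[..., 2]

def gaussian_kernel(size=7, variance=6.0):
    """Step (2): 7 x 7 Gaussian kernel with variance 6, normalised to sum to 1."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * variance))
    return k / k.sum()

def filter2d(img, kernel):
    """'Same'-size 2-D correlation with edge padding (NumPy only)."""
    kh, kw = kernel.shape
    p = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * p[i:i + img.shape[0], j:j + img.shape[1]]
    return out

def gradient_magnitude(img):
    """Step (3) stand-in: Sobel gradient magnitude (Canny is not used here)."""
    sx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    return np.hypot(filter2d(img, sx), filter2d(img, sx.T))

def ssim_global(x, y, L=255.0, k1=0.01, k2=0.03):
    """Step (4): SSIM over the whole image treated as one window."""
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2))

def clarity_s(rgb):
    """Step (5), assumed form: S = 1 - SSIM of the two gradient images."""
    gray = to_gray(rgb)
    reblurred = filter2d(gray, gaussian_kernel())
    ssim = ssim_global(gradient_magnitude(gray), gradient_magnitude(reblurred))
    return float(np.clip(1.0 - ssim, 0.0, 1.0))

# Usage: a sharp random image should score higher than a blurred copy of it.
rng = np.random.default_rng(1)
sharp = rng.integers(0, 256, size=(64, 64, 3)).astype(float)
blurred = np.stack([filter2d(sharp[..., c], gaussian_kernel()) for c in range(3)], axis=-1)
print(clarity_s(sharp), clarity_s(blurred))
```

Published reblur-based metrics usually compute SSIM over local blocks and average only the most textured ones; the single global window here keeps the sketch short.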

2.3. Design of Image Fusion Algorithm for High-Speed Road Surface Acquisition

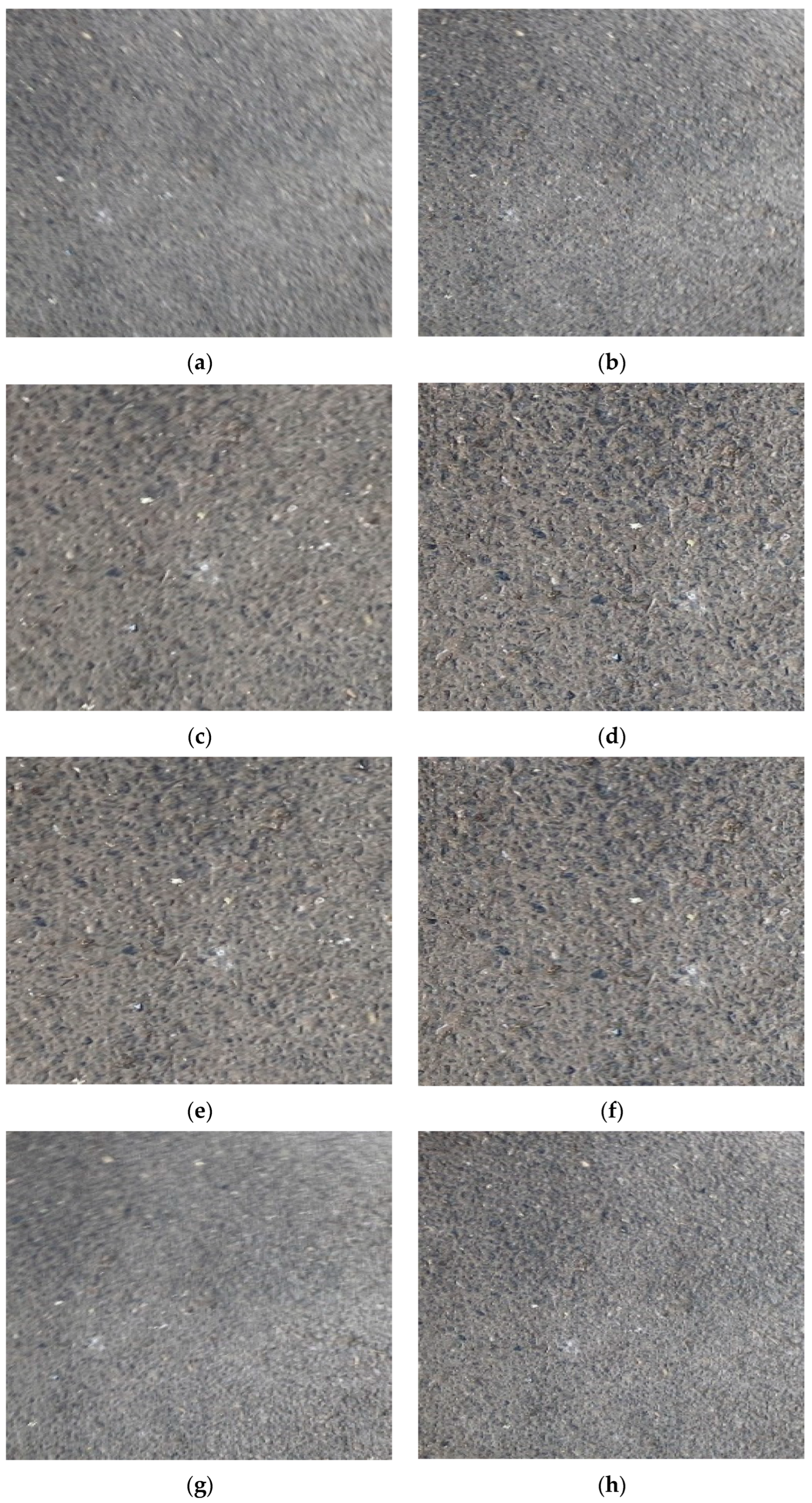

After the acquisition system obtains clear road surface images while the acquisition vehicle travels at high speed, the images are transmitted in real time to the PC via HDMI. To obtain road surface images with richer information and higher clarity, the images first undergo registration preprocessing. A transform-domain image fusion algorithm is then applied, and objective quality evaluation methods are used to assess and analyze the fusion results.

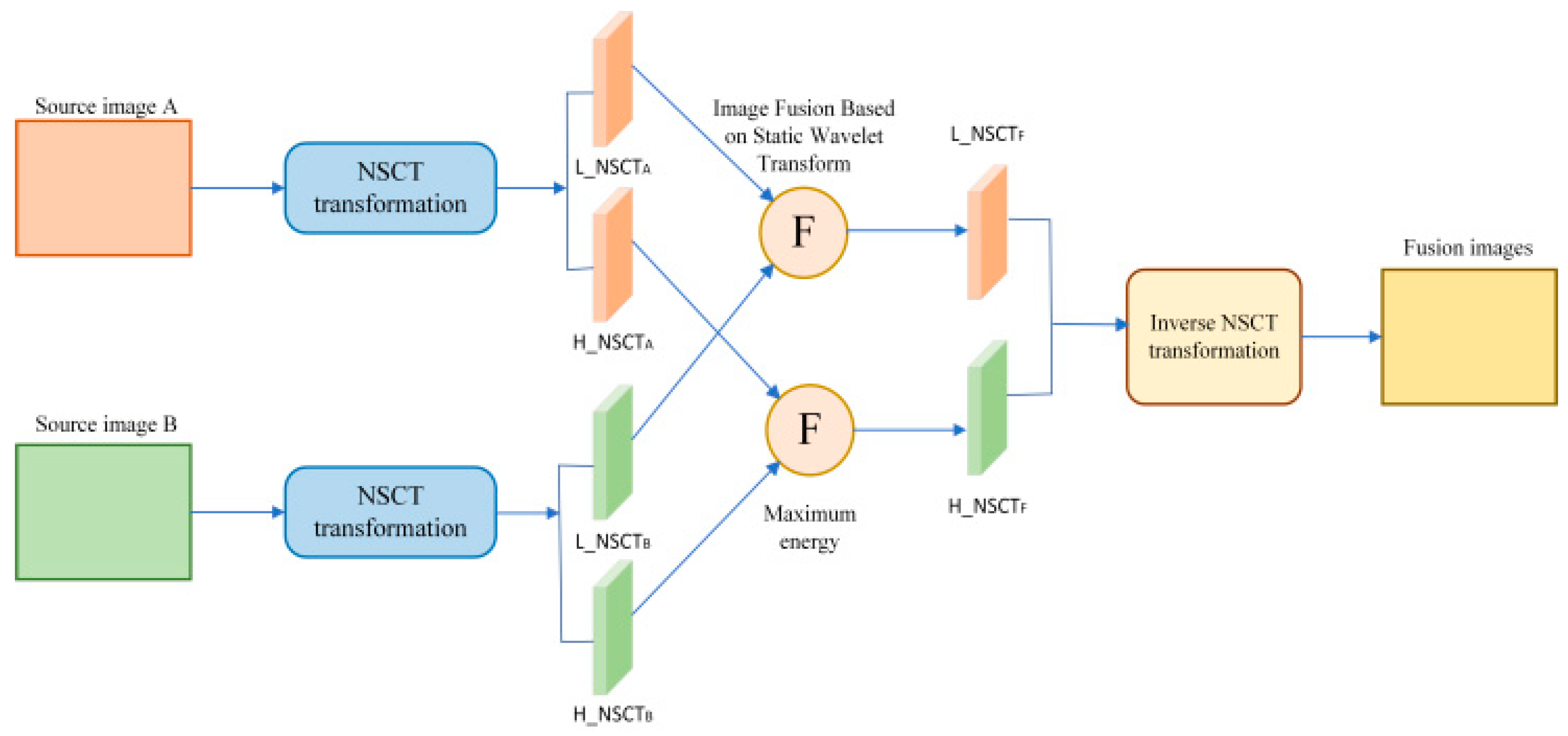

The SWT is a classic shift-invariant wavelet transform that captures detailed image information [17]; however, it describes edge information poorly. The NSCT, by contrast, is a flexible multi-scale and multidirectional image decomposition method [18] that can extract the geometric structural features of images but is limited in capturing fine details. This system therefore exploits the complementary characteristics of SWT and NSCT for road surface image fusion, as illustrated in Figure 8. This approach enriches the fused images with more detailed textural features, improving image quality, and also enhances edge and shape features, ultimately increasing the clarity of the fused images.

When the wavelet transform is applied to image processing, let φ(x) and φ(y) be one-dimensional scaling functions. The two-dimensional scaling function φ(x, y) ∈ L²(ℝ²) is expressed as φ(x, y) = φ(x)φ(y). Let ψ(x) and ψ(y) be the corresponding one-dimensional wavelet functions; the two-dimensional dyadic wavelets are then expressed as

$$\psi^{1}(x, y) = \varphi(x)\,\psi(y), \qquad \psi^{2}(x, y) = \psi(x)\,\varphi(y), \qquad \psi^{3}(x, y) = \psi(x)\,\psi(y)$$

The three expressions above show that each two-dimensional wavelet function factors into the product of two one-dimensional functions; such functions are called two-dimensional separable wavelets. In practice, high-pass and low-pass filters matched to the wavelet function extract the required frequency components from the input signal. In the stationary wavelet transform, downsampling is eliminated and the filters are instead upsampled at each level, which gives the wavelet coefficients translation invariance and also improves the extraction of image information.
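The effect of removing downsampling can be seen in a one-level, two-dimensional undecimated Haar transform built from separable one-dimensional filters. Haar is only a simple stand-in, since this section does not name the wavelet actually used, and the filter normalization (1/2 rather than 1/√2) and circular boundary handling are choices made for the sketch. Every sub-band keeps the input size, a shifted input yields shifted coefficients, and the four sub-bands sum back to the image.

```python
import numpy as np

def _lo(a, axis):
    # Haar low-pass (x[n] + x[n+1]) / 2, circular boundary, no downsampling
    return (a + np.roll(a, -1, axis=axis)) / 2.0

def _hi(a, axis):
    # Haar high-pass (x[n] - x[n+1]) / 2, circular boundary, no downsampling
    return (a - np.roll(a, -1, axis=axis)) / 2.0

def swt2_haar_level1(img):
    """One-level 2-D undecimated Haar decomposition from separable 1-D filters."""
    img = np.asarray(img, dtype=float)
    low_x, high_x = _lo(img, 1), _hi(img, 1)       # filter along the x direction
    return (_lo(low_x, 0), _hi(low_x, 0),           # approximation, detail 1
            _lo(high_x, 0), _hi(high_x, 0))         # detail 2, detail 3

def iswt2_haar_level1(bands):
    """Inverse: with these filters lo + hi is the identity along each axis,
    so summing the four sub-bands restores the image."""
    return sum(bands)

# Usage: perfect reconstruction and shift invariance on a random image.
rng = np.random.default_rng(0)
img = rng.random((16, 16))
bands = swt2_haar_level1(img)
print(np.allclose(iswt2_haar_level1(bands), img))
shifted = swt2_haar_level1(np.roll(img, (2, 3), axis=(0, 1)))
print(all(np.allclose(s, np.roll(b, (2, 3), axis=(0, 1))) for s, b in zip(shifted, bands)))
```

A decimated transform would keep only every second coefficient, and a one-pixel shift of the input would then change the coefficients rather than merely shift them.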

The contourlet transform is a multi-resolution and multidirectional transform that allows for a different number of directions at each scale. Due to the downsampling operations performed in the two stages of Laplacian Pyramid (LP) and Directional Filter Bank (DFB), the redundancy of image Contourlet coefficients is significantly reduced. However, this also results in the transform lacking translation invariance, leading to image distortion. In contrast, the nonsubsampled contourlet transform (NSCT) adopts a nonsubsampled pyramid decomposition and a directional filter bank, which solves problems such as the lack of translation invariance and the Gibbs phenomenon.

The NSCT consists of two main steps. First, multi-scale decomposition of the image is achieved by removing downsampling and upsampling the filters instead. With J decomposition levels, the nonsubsampled pyramid decomposition has a redundancy of J + 1, and its filters satisfy the Bezout identity for perfect reconstruction:

$$H_0(z)G_0(z) + H_1(z)G_1(z) = 1$$

Here, H0(z) and G0(z) are the low-pass decomposition and synthesis filters, respectively, while H1(z) and G1(z) are the high-pass decomposition and synthesis filters, respectively.
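The perfect-reconstruction condition H0(z)G0(z) + H1(z)G1(z) = 1 can be checked numerically by multiplying the filter polynomials (coefficients in powers of z⁻¹) and adding them. The filters below are a deliberately simple one-dimensional example, a binomial low-pass with its complement as the high-pass and trivial synthesis filters; they are hypothetical and are not the two-dimensional pyramid filters actually used by the NSCT, which are designed for better frequency selectivity.

```python
import numpy as np

def poly_add(a, b):
    """Add two polynomials given as coefficient arrays in powers of z^-1."""
    n = max(len(a), len(b))
    return np.pad(a, (0, n - len(a))) + np.pad(b, (0, n - len(b)))

def bezout_residual(h0, g0, h1, g1):
    """Coefficients of H0(z)G0(z) + H1(z)G1(z) - 1; all zero means the identity holds."""
    total = poly_add(np.convolve(h0, g0), np.convolve(h1, g1)).astype(float)
    total[0] -= 1.0
    return total

h0 = np.array([0.25, 0.5, 0.25])     # low-pass analysis filter (hypothetical)
h1 = np.array([1.0, 0.0, 0.0]) - h0  # high-pass chosen as the complement 1 - H0
g0 = g1 = np.array([1.0])            # trivial synthesis filters
residual = bezout_residual(h0, g0, h1, g1)
print(residual)
```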

Subsequently, all filters of the nonsubsampled directional filter bank are derived from a single fan filter, and the filter bank satisfies the Bezout identity:

$$U_0(z)V_0(z) + U_1(z)V_1(z) = 1$$

Here, U0(z) and V0(z) are the low-pass decomposition and synthesis filters of the directional filter bank, respectively, while U1(z) and V1(z) are its high-pass decomposition and synthesis filters, respectively.

The specific steps of the road surface image fusion algorithm are as follows:

- Step 1

Perform NSCT decomposition on the two preprocessed images, A and B, denoting their sub-band coefficients as $\mathrm{NSCT}_A\{s,d\}$ and $\mathrm{NSCT}_B\{s,d\}$, respectively, where s = 0, 1, …, S and d = 1, 2, …, $2^n$. Here, S is the number of decomposition scales and $2^n$ is the total number of directions in each sub-band. $\mathrm{NSCT}_A\{0,1\}$ and $\mathrm{NSCT}_B\{0,1\}$ correspond to the low-frequency sub-bands.

- Step 2

For the high-frequency sub-bands, select the coefficients of the pixels with higher energy for fusion. The energy is computed over a 5 × 5 neighborhood window W.

The calculation formula for pixel energy is

where S represents the total number of scales and D the total number of directions. For each high-frequency sub-band, the fused coefficient at each location is taken from whichever source image has the larger pixel energy there.

- Step 3

For the low-frequency coefficients $\mathrm{NSCT}_A\{0,1\}$ and $\mathrm{NSCT}_B\{0,1\}$, fusion is performed using the stationary wavelet transform (SWT) as follows:

- (a)

Apply a three-level stationary wavelet decomposition to the two low-frequency coefficients to obtain the corresponding wavelet coefficients.

- (b)

Fuse the low-frequency wavelet coefficients with a weighted-average operator in which the weight w is set to 0.5, so that both source images contribute equally.

- (c)

The high-frequency transform coefficients are fused using the pixel energy maximum method. The regional characteristics of the pixels are captured through the high-frequency sub-band window W within each scale, and the significance of the coefficients is comprehensively evaluated. The calculation formula is as follows:

where S is the total number of scales and D is the total number of orientations. The coefficients in the transform domain are selected according to the following principles.

- (d)

Perform an inverse stationary wavelet transform on the fused multi-resolution coefficients to obtain the fused low-frequency sub-band $\mathrm{NSCT}_F\{0,1\}$. Finally, perform an inverse NSCT on the fused coefficients $\mathrm{NSCT}_F\{s,d\}$ to generate the final fused road surface image.
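The two fusion rules used in Steps 2 and 3 can be sketched independently of the transforms themselves, which are not implemented here. The sketch assumes that pixel energy is the sum of squared coefficients over the 5 × 5 window (the exact energy formula is not reproduced in this section); high-frequency sub-bands take the coefficient with the larger local energy, and low-frequency sub-bands use the weighted average with w = 0.5. The same two functions would be applied to each NSCT or SWT sub-band pair.

```python
import numpy as np

def local_energy(c, win=5):
    """Assumed energy: sum of squared coefficients over a win x win window (edge-padded)."""
    pad = win // 2
    sq = np.pad(np.asarray(c, dtype=float) ** 2, pad, mode="edge")
    h, w = np.shape(c)
    out = np.zeros((h, w))
    for dy in range(win):
        for dx in range(win):
            out += sq[dy:dy + h, dx:dx + w]
    return out

def fuse_high(ca, cb, win=5):
    """Maximum-local-energy rule for high-frequency (detail) sub-bands."""
    return np.where(local_energy(ca, win) >= local_energy(cb, win), ca, cb)

def fuse_low(ca, cb, w=0.5):
    """Weighted-average rule for low-frequency (approximation) sub-bands."""
    return w * np.asarray(ca, dtype=float) + (1.0 - w) * np.asarray(cb, dtype=float)

# Usage: A carries detail on the left, B on the right; the fused band keeps both.
a = np.zeros((10, 10)); a[:, :3] = 5.0
b = np.zeros((10, 10)); b[:, 7:] = 5.0
fused = fuse_high(a, b)
print(fused[0])
```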

Image registration aligns images by matching the feature correspondences between them and is a core step in image fusion. Typically, a feature-rich image is selected as the reference, and the target image is projected onto the reference image plane so that both lie in the same coordinate system. Intermediate images are often used as references during registration to reduce error accumulation. Registration difficulties mainly arise from differences in imaging conditions. Most current registration algorithms are feature-based, and point-feature-based registration offers high accuracy and effectiveness, so the choice of point features is crucial. The SIFT (scale-invariant feature transform) [19] algorithm, known for its invariance to scale and rotation, adapts to images captured under varying exposure and acquisition conditions, and its rich feature point information makes it suitable for fast and accurate registration. This system therefore uses a SIFT-based point-feature registration algorithm to align the images.
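Once SIFT keypoints have been detected and matched (for example with OpenCV's SIFT implementation, not shown here), the target image can be projected onto the reference plane. A common model for that projection, assumed here because the section does not specify one, is a planar homography estimated from the matched points with the direct linear transform (DLT). The sketch uses NumPy only and omits coordinate normalization and outlier rejection (such as RANSAC), both of which a real pipeline would need.

```python
import numpy as np

def estimate_homography(src, dst):
    """DLT estimate of H (3 x 3) with dst ~ H @ src, from >= 4 point pairs (N x 2)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)   # right singular vector for the smallest singular value
    return H / H[2, 2]         # fix the projective scale

def project(H, pts):
    """Apply H to N x 2 points, including the homogeneous division."""
    pts = np.asarray(pts, dtype=float)
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]

# Usage with synthetic correspondences standing in for matched SIFT keypoints.
H_true = np.array([[1.02, 0.01, 5.0], [-0.02, 0.98, -3.0], [1e-4, 2e-4, 1.0]])
src = np.array([[0, 0], [100, 0], [0, 100], [100, 100], [50, 30], [20, 80]], dtype=float)
dst = project(H_true, src)
H_est = estimate_homography(src, dst)
print(np.round(H_est, 4))
```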

Subjective quality evaluation of image fusion includes absolute and relative evaluation. In absolute evaluation, observers directly rate the quality of the fused images; in relative evaluation, observers classify and compare fused images generated by different methods before scoring them. Although subjective evaluation is simple and intuitive, it is susceptible to observer bias and often lacks precision in practical applications. Objective quality evaluation methods for image fusion are therefore required.

Objective quality evaluation metrics, such as spatial frequency (SF), mutual information (MI), root mean square error (RMSE), and QAB/F, are employed to effectively and comprehensively evaluate the performance of image fusion algorithms in road-surface image fusion. Higher values of SF, MI, and QAB/F indicate greater clarity, richer information, and better fusion performance in the fused images. Conversely, a lower RMSE value indicates a better quality of the fused images.

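The definition of SF is given by

$$SF = \sqrt{RF^2 + CF^2}, \qquad RF = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\left[F(i, j) - F(i, j-1)\right]^2}, \qquad CF = \sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\left[F(i, j) - F(i-1, j)\right]^2}$$

In the equation, RF represents the row frequency and CF the column frequency of the M × N fused image F. A higher value indicates greater clarity of the fused image.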
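A direct implementation of spatial frequency, assuming the usual form SF = √(RF² + CF²) in which RF and CF are the root-mean-square differences between horizontally and vertically adjacent pixels, normalized by the image size M × N:

```python
import numpy as np

def spatial_frequency(f):
    """SF of a grayscale image: sqrt(RF^2 + CF^2)."""
    f = np.asarray(f, dtype=float)
    m, n = f.shape
    rf = np.sqrt(np.sum(np.diff(f, axis=1) ** 2) / (m * n))  # row frequency
    cf = np.sqrt(np.sum(np.diff(f, axis=0) ** 2) / (m * n))  # column frequency
    return float(np.sqrt(rf ** 2 + cf ** 2))

print(spatial_frequency([[0, 1], [1, 0]]))  # a 2 x 2 checkerboard
print(spatial_frequency(np.ones((4, 4))))   # a flat image has no detail
```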

MI measures the amount of information transferred from the source images to the fused image. The mutual information between the source image A and the fused image F is given by

$$MI_{A,F} = \sum_{i=0}^{L-1}\sum_{j=0}^{L-1} h_{A,F}(i, j)\,\log_2\frac{h_{A,F}(i, j)}{h_A(i)\,h_F(j)}$$

In the equation, $h_{A,F}(i, j)$ represents the normalized joint gray-level histogram of images A and F, $h_A(i)$ and $h_F(j)$ are the normalized histograms of the two images, and L is the number of gray levels. The mutual information between the source image B and the fused image F is denoted analogously as $MI_{B,F}$. The image fusion result is thus expressed as

$$MI = MI_{A,F} + MI_{B,F}$$

A higher MI value indicates a better quality of the fused image.
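The sketch below computes MI from the normalized joint histogram, assuming 8-bit images (L = 256 gray levels) and a base-2 logarithm so that the result is in bits; terms where the joint histogram is zero contribute nothing. The fusion score is the sum of the two source-to-fused values.

```python
import numpy as np

def mutual_information(a, f, levels=256):
    """MI (bits) between two images with integer gray levels in [0, levels)."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(f), bins=levels,
                                 range=[[0, levels], [0, levels]])
    h_af = joint / joint.sum()        # normalised joint histogram
    h_a = h_af.sum(axis=1)            # marginal histogram of A
    h_f = h_af.sum(axis=0)            # marginal histogram of F
    nz = h_af > 0                     # 0 * log 0 is taken as 0
    return float(np.sum(h_af[nz] * np.log2(h_af[nz] / np.outer(h_a, h_f)[nz])))

def fusion_mi(a, b, f, levels=256):
    """MI = MI_{A,F} + MI_{B,F}."""
    return mutual_information(a, f, levels) + mutual_information(b, f, levels)

# Usage: an image shares all of its information with itself and none with a flat image.
rng = np.random.default_rng(0)
a = rng.integers(0, 256, size=(128, 128))
print(mutual_information(a, a), mutual_information(a, np.zeros_like(a)))
```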

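The RMSE between the actual fused image F and the ideal fused image R, both of size M × N, is defined as

$$RMSE = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left[R(i, j) - F(i, j)\right]^2}$$

A smaller value indicates a better quality of the fused image.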
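RMSE is the square root of the mean squared pixel difference between the ideal fused image R and the actual fused image F. Note that it can only be computed when such an ideal reference exists.

```python
import numpy as np

def rmse(reference, fused):
    """Root mean square error between the ideal image R and the fused image F."""
    r = np.asarray(reference, dtype=float)
    f = np.asarray(fused, dtype=float)
    return float(np.sqrt(np.mean((r - f) ** 2)))

print(rmse([[0, 0], [0, 0]], [[1, 1], [1, 1]]))  # every pixel off by one gray level
```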

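The $Q^{AB/F}$ metric uses the Sobel edge detection operator to compute the edge strength $g(n, m)$ and orientation $\alpha(n, m)$ of the source images A and B and of the fused image F. For image A,

$$g_A(n, m) = \sqrt{s_A^x(n, m)^2 + s_A^y(n, m)^2}, \qquad \alpha_A(n, m) = \arctan\!\left(\frac{s_A^y(n, m)}{s_A^x(n, m)}\right)$$

where $s_A^x(n, m)$ and $s_A^y(n, m)$ are the outputs of the vertical and horizontal Sobel templates, respectively, convolved with the source image centered at pixel $P_A(n, m)$. The relative strength $G^{AF}(n, m)$ and relative orientation $A^{AF}(n, m)$ between the source image A and the fused image F are

$$G^{AF}(n, m) = \begin{cases} \dfrac{g_F(n, m)}{g_A(n, m)}, & g_A(n, m) > g_F(n, m) \\[2mm] \dfrac{g_A(n, m)}{g_F(n, m)}, & \text{otherwise} \end{cases} \qquad A^{AF}(n, m) = 1 - \frac{\lvert \alpha_A(n, m) - \alpha_F(n, m) \rvert}{\pi / 2}$$

These quantities are mapped to the edge preservation value $Q^{AF}(n, m)$ (and, in the same way, $Q^{BF}(n, m)$ for image B), which are combined as

$$Q^{AB/F} = \frac{\sum_{n}\sum_{m}\left[Q^{AF}(n, m)\,w^A(n, m) + Q^{BF}(n, m)\,w^B(n, m)\right]}{\sum_{n}\sum_{m}\left[w^A(n, m) + w^B(n, m)\right]}$$

where the weights $w^A(n, m) = [g_A(n, m)]^L$ and $w^B(n, m) = [g_B(n, m)]^L$, with L being a constant set to 1.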

A higher QAB/F value indicates that the fused image retains richer edge information from the source images.
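The metric can be sketched end to end as below. Two parts are assumptions because this section does not give them: the sigmoid mapping from the relative strength G and orientation A to $Q^{AF}$ uses the constants commonly quoted in published implementations of this metric (0.9994, −15, 0.5 for strength; 0.9879, −22, 0.8 for orientation), and the orientation difference is folded modulo π so that A stays in [0, 1]. The weights follow the text, $w = g^L$ with L = 1.

```python
import numpy as np

def sobel_responses(img):
    """Return (s_x, s_y): responses of the two 3 x 3 Sobel templates (edge-padded)."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    h, w = p.shape[0] - 2, p.shape[1] - 2

    def at(dy, dx):  # neighbour at offset (dy, dx) for every pixel
        return p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]

    s_x = (at(-1, 1) + 2 * at(0, 1) + at(1, 1)) - (at(-1, -1) + 2 * at(0, -1) + at(1, -1))
    s_y = (at(1, -1) + 2 * at(1, 0) + at(1, 1)) - (at(-1, -1) + 2 * at(-1, 0) + at(-1, 1))
    return s_x, s_y

def strength_orientation(img):
    s_x, s_y = sobel_responses(img)
    return np.hypot(s_x, s_y), np.arctan2(s_y, s_x)

def edge_preservation(g_x, a_x, g_f, a_f, eps=1e-12):
    """Q^{XF} = Q_g * Q_alpha for one source image X (sigmoid constants assumed)."""
    G = np.where(g_x > g_f, g_f / (g_x + eps), g_x / (g_f + eps))  # relative strength
    G = np.where((g_x == 0) & (g_f == 0), 1.0, G)
    d = np.abs(a_x - a_f) % np.pi
    d = np.minimum(d, np.pi - d)                 # orientation difference in [0, pi/2]
    A = 1.0 - d / (np.pi / 2)                    # relative orientation in [0, 1]
    q_g = 0.9994 / (1.0 + np.exp(-15.0 * (G - 0.5)))
    q_a = 0.9879 / (1.0 + np.exp(-22.0 * (A - 0.8)))
    return q_g * q_a

def q_abf(a, b, f, L=1.0):
    g_a, a_a = strength_orientation(a)
    g_b, a_b = strength_orientation(b)
    g_f, a_f = strength_orientation(f)
    q_af = edge_preservation(g_a, a_a, g_f, a_f)
    q_bf = edge_preservation(g_b, a_b, g_f, a_f)
    w_a, w_b = g_a ** L, g_b ** L                # w^A = g_A^L, w^B = g_B^L
    return float(np.sum(q_af * w_a + q_bf * w_b) / np.sum(w_a + w_b))

# Usage: a fused image identical to the sources keeps their edges; a flat one loses them.
rng = np.random.default_rng(0)
a = rng.integers(0, 256, size=(32, 32)).astype(float)
b = rng.integers(0, 256, size=(32, 32)).astype(float)
print(q_abf(a, a, a), q_abf(a, b, np.zeros_like(a)))
```

With these constants even a perfect copy scores slightly below 1, because the sigmoids saturate below their Γ values.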