1. Introduction and Motivation

The motivation of this research is based on [1], where the AI-based DARIA (Data-driven Risk Analysis in the Construction Industry) approach to identify financial risks in the execution phase of transportation infrastructure construction projects has been introduced [2]. To be more precise, this research represents the second part of [2] and was presented visually at the Innovate 2025—World AI, Automation, and Technology Forum conference [3].

“DARIA shows exceptional project risk identification results at an early stage of the execution of transportation infrastructure construction projects and has already been deployed into enterprise-wide production in all transportation infrastructure construction units of the STRABAG group” [2].

However, to justify its practical application, DARIA must offer trustworthy, i.e., reproducible, valid, and capable, features.

Calibrated trust [4] aims to ensure that the level of trust placed in a system accurately reflects its capabilities. Users need to have calibrated trust in the results because of, e.g., business rules of due diligence. In this way, regulatory compliance is achieved. However, trust is a subtle psychological concept that cannot be based on regulatory compliance alone. If users doubt, e.g., the AI capability, they are unlikely to utilize it. Therefore, DARIA has to offer suitable indicators to convey its trustworthiness.

Due to DARIA’s deployment into enterprise-wide production [2], “concern and doubts about the trustworthiness of the DARIA machine learning (ML) algorithm are certainly possible, especially if and when DARIA identifies risky projects at a time when all conventional metrics within the STRABAG controlling system do not identify any problems” [2] (primary motivation).

“If artificial intelligence (AI) systems do not prove to be worthy of trust, their widespread acceptance and adoption will be hindered, and the potentially vast societal and economic benefits will not be fully realized” [5]. Moreover, “DARIA aims not to replace human workers” [2].

“Instead, and because intelligent people and intelligent computers have complementary abilities” [6], “people and computers can realize opportunities together that neither can realize alone” [7]. Based on the results of [2], “the observation of the human–computer interaction to identify potential concern and open questions about the trustworthiness of the DARIA AI application during its successful deployment into enterprise-wide production” [2], with the aim of defining an approach for DARIA and for future deployments of trustworthy DARIA-like approaches [6], can be identified as the secondary motivation [6].

Whereas “AI is a foundational catalyst for digital business” [8], in reality most organizations struggle to scale their AI pilots into enterprise-wide production, which limits their ability to realize AI’s potential business value [9]. To be more precise, organizations “are discovering ‘AI pilot paradox’, where launching pilots is deceptively easy but deploying them into production is notoriously challenging” [9]. Apart from these challenges, which also apply to DARIA, trust is a human emotion that depends on many factors that are hard to address from a technical point of view. Trust in DARIA can be instilled by demonstrating its trustworthiness. However, there are hardly any agreed-upon metrics of trust in AI systems that are applicable to DARIA (first problem statement). So, how can the trustworthiness of DARIA be qualified?

In order to be reproducible, an AI system’s complete internal state must be known. This state or behavior is determined by the inputs, training data, and hyperparameters. Initially, the training data and hyperparameters as well as the selected ML model of DARIA were not persistently accessible. Reproducibility serves as an indirect proxy measure of reliability by demonstrating consistency across repeated experiments or analyses. It also supports validity by confirming that findings are robust and not artifacts of methodological flaws or random chance. Therefore, reproducibility is a key factor for establishing trust in both the validity and reliability of a system like DARIA. To provide reproducible outputs of DARIA during its risk prediction, the information necessary to reproduce the system’s internal state needs to be collected, managed, archived, and preserved. Unfortunately, DARIA initially lacked such functionality (second problem statement). So, how can reproducibility be guaranteed?
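To make the reproducibility requirement above more concrete, the following minimal Python sketch records the inputs that determine a model’s internal state (training data, hyperparameters, random seed, library version) in a manifest and compares fingerprints of two runs. It is an illustration under stated assumptions, not DARIA’s implementation: the class `RunManifest`, its fields, and the helper functions are hypothetical names chosen for this example.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def sha256_of_bytes(data: bytes) -> str:
    """Content hash that pins down exactly which training data were used."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class RunManifest:
    """Hypothetical record of the inputs that determine a model's internal state."""
    model_type: str            # e.g. "xgboost" (illustrative)
    library_version: str       # version of the ML library used for training
    training_data_sha256: str  # hash of the exact training data snapshot
    hyperparameters: dict      # all hyperparameters, including defaults
    random_seed: int           # seed for any random element in training

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys, also in nested dicts) so that equal
        # manifests always produce the same hash
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def can_reproduce(archived: RunManifest, current: RunManifest) -> bool:
    """A rerun can only be expected to reproduce the archived model if
    every recorded input is identical."""
    return archived.fingerprint() == current.fingerprint()
```

Archiving such a manifest alongside each trained model would allow a later rerun to be checked with `can_reproduce(archived, current)` before its outputs are compared.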

This research on DARIA follows Nunamaker’s approach to systems development [10] with the phases observation, theory building, implementation, and evaluation. Based on the derived problem statements, this paper focuses on the identification of suitable criteria to establish the trustworthiness of the DARIA ML algorithm in the interaction between individuals and systems (RQ1) as well as on modeling the reproducibility of the internal state of DARIA’s ML model (RQ2). To this end, after a review of the relevant state of the art in science and technology, the results of the implemented DARIA ML model (cf. [2]) are outlined and validated before DARIA’s deployment process, including a user study to evaluate trustworthiness in the interaction between individuals and systems, is described and discussed.

2. Selected Relevant State of the Art in Science and Technology

Related to the first goal of this paper, namely to derive suitable criteria by which the trustworthiness of the DARIA ML algorithm in the interaction between individuals and systems can be qualified (RQ1), the review of the relevant state of the art first focuses on the definition of trust and trustworthiness. In order to model the reproducibility of DARIA’s internal state (RQ2), relevant related work is then outlined and discussed.

Although it has been shown that trust is a crucial factor in the adoption of innovative technologies, “surprisingly, trust literature offers very little guidance for systematically integrating the vast amount of behavioral trust results into the development of computing systems” [11]. Trust is, however, a highly ambivalent concept [12].

“This becomes clear when the term is approached philosophically” [6]. David Easton highlights “that trust is based on experience with the outputs of decision-makers” [13].

“In contrast to specific support, however, it does not refer to the daily outputs of concrete decision-makers, but to ongoing positive experiences with outputs of various decision-makers over a longer period of time” [13]. In this way, Easton defines trust based on reliability and competence as well as on the output of an individual or system instead of an individual or system itself [6].

“This becomes even clearer when combined with Annette Baier’s statements” [6]. Baier emphasizes that “trust is much easier to maintain than it is to get started and is never hard to destroy” [14].

“Trust is often discussed when it becomes fragile or when it ceases to be perceived as a given background and starts being questioned” [12]. Therefore, and as discussed in [6], trust can be defined based on the honesty of an individual and plays a significant role where there is no security or certainty [12].

“Especially in the context of AI, where the analytics process is turned into what is commonly referred to as a ‘black box’ that is impossible to interpret, and therefore, humans are challenged to comprehend and retrace how the algorithm produces a result” [6].

Summarizing the discussion about trust and according to [6], “between individuals, trust is, on the one hand, based on their competence, and, on the other, based on their honesty” [12]. Therefore, trust and trustworthiness both have at least a knowledge component and a moral component [15].

“With regard to the initial rational definition of trust (reliability, truth, and ability), truth and ability can be replaced by honesty and competence, whereas reliability remains as an important third attribute, according to the interpretation of Easton’s argumentation” [6].

“Even if these dimensions of trust are suitable for individuals, they are inappropriate for describing a system” [6]. Therefore, Ref. [6] introduced and discussed the corresponding dimensions that are able “to define trustworthiness based on the output of an individual or system instead of an individual or system itself” [6,13].

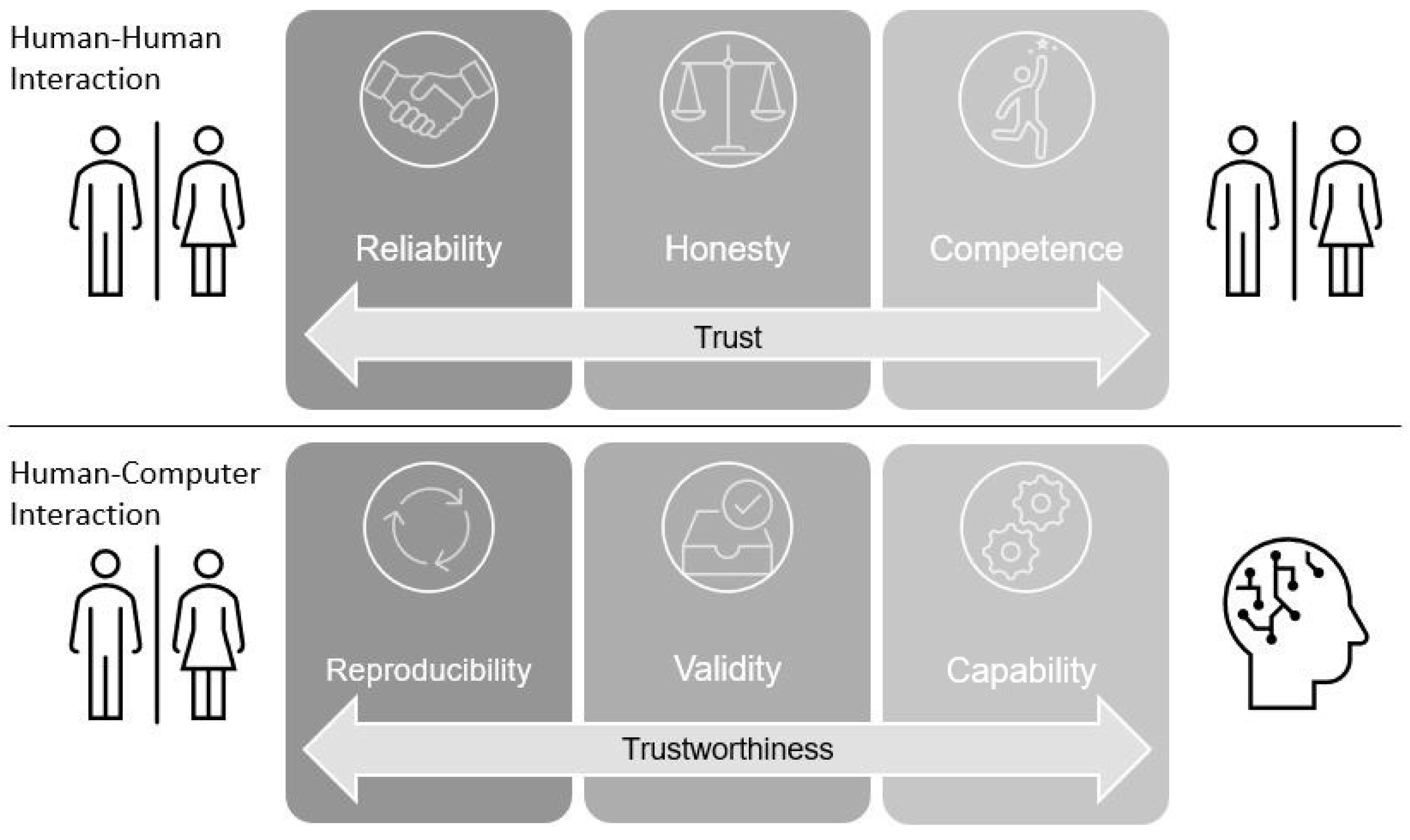

Figure 1 illustrates the derived “dimensions of trust and trustworthiness in the interaction between individuals as well as between individuals and systems” [6].

As outlined within [6], “the first trust dimension reliability, which describes the quality of individuals to perform consistently, can be represented by reproducibility in the context of a system”, which describes the ability “of obtaining consistent computational results using the same input data, computational steps, methods, code, and conditions of analysis” [16].

“The next dimension honesty, which can be defined as adherence to the facts between individuals, can be represented by second dimension of trust in the context of a system validity, which describes the degree to which data or results are correct” [6]. Finally, “the dimension competence, which characterizes the ability of a person to do something well, can be represented by the term capability, which describes the general potential of a system to accomplish something” [6].

“Following the definition of trustworthiness in the interaction between individuals and systems that addresses the modeling of trustworthiness in AI-based big data analysis” [6], the Trustworthy AI-based Big Data Management (TAI-BDM) Reference Model, a combination of Kaufmann’s refined BDM Reference Model [17] with the AIGO’s (AI Group of Experts at the OECD) high-level structure of a generic AI system [18], was introduced in 2024 [6].

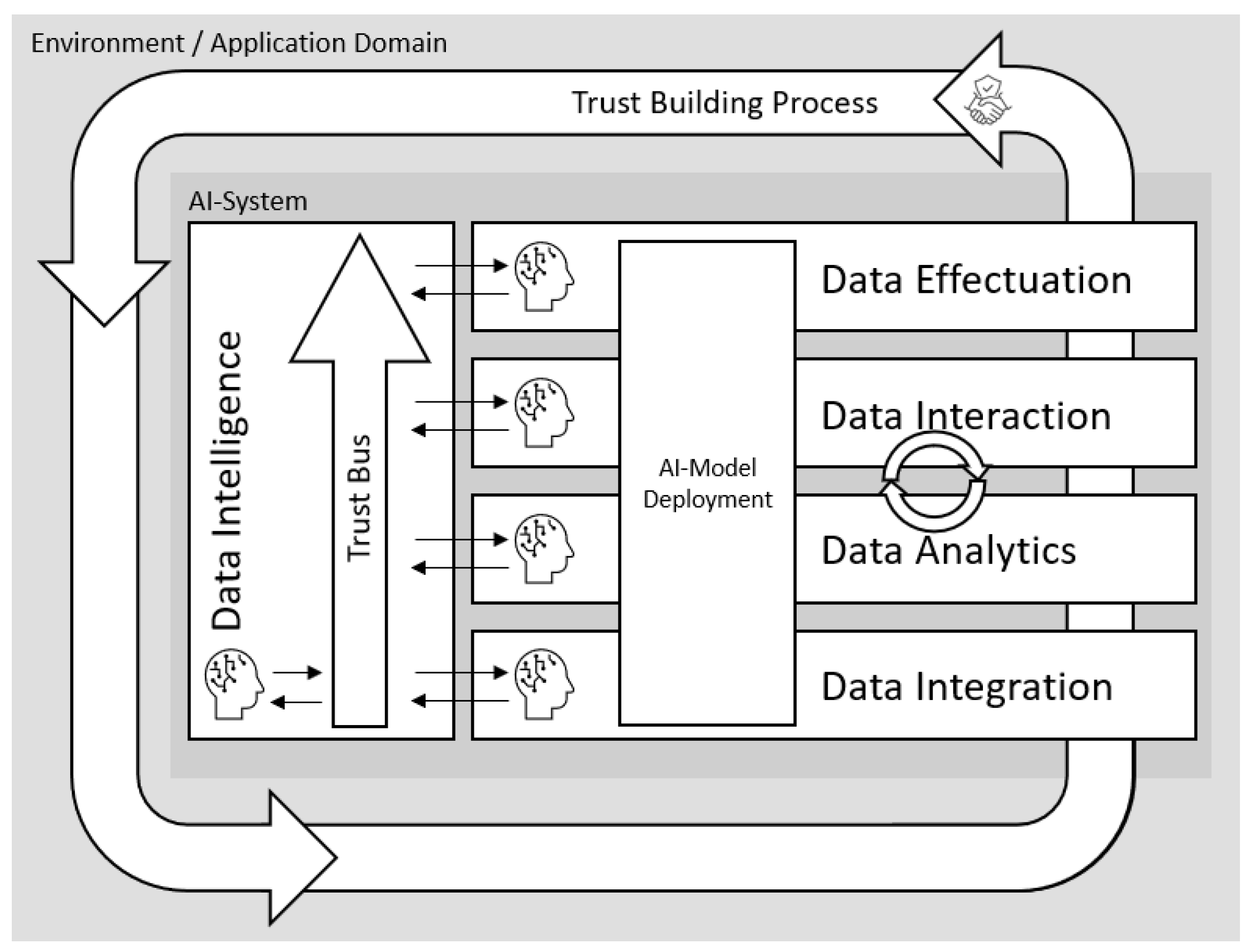

Figure 2 illustrates the Trustworthy AI-based Big Data Management Reference Model that integrates trustworthy AI into the whole big data analysis process [6].

As introduced and outlined in [6] and with a focus on the right side of the conceptual TAI-BDM Reference Model, “technical aspects of AI within the whole big data analysis process are only located within the first three layers data integration, data analytics, and data interaction” [6]. The fourth data effectuation layer focuses “on the utilization of the data analysis results to create value in products, services, and operations of the organization” [6,17]. “Therefore, AI does not play a role in this layer. The second data analytics layer represents the most significant layer in the context of AI at first glance. Nevertheless, AI also plays an important role within both the data integration and the data interaction layers” [6].

Regarding the left side, data intelligence refers “to the ability of the organization to acquire knowledge and skills in the context of big data management” [6]. “Revisiting Easton’s definition that trust is based on the output of an individual or system instead of an individual or system itself [13] in combination with the integration of AI within the right-sided layers of the conceptual reference model for trustworthiness in AI-based big data analysis” [6], each layer with AI support needs an individual trust component based on its output within the data intelligence layer [6].

“Even then, the overall trustworthiness of a system depends on the trustworthiness of the outcome of the entire process” [6]. Thus, “the individual trustworthiness of the AI-supported layers needs to be integrated into an overall trust building process” [6]. In this process, “the dimensions of trustworthiness in the interaction between individuals and AI components within certain layers as well as information about past interactions between individuals and the overall AI system” [6] in “the emergent knowledge process of higher order” [17], symbolized by the outer loop of the conceptual reference model for trustworthiness in AI-based big data analysis, need to be stored to gauge the trustworthiness of the overall AI-based big data analysis and thereby enable trustworthy AI [6,19].

Regarding TAI-BDM’s trust bus component, Ref. [20] introduced the concept of Abbass’ trust bus as a reference architecture designed to enhance trustability analytics [6]. As illustrated in Figure 3, “the trust bus is based on a Belief-Desire-Intention-Trust-Motivation (BDI-TM) framework, which involves several components such as actors and entities memory, trust production, identity management, intent management, emotion management, risk management, and complexity management” [20]. “This architecture aims to facilitate interactions within AI systems and between AI systems and users by synthesizing qualified trust through a rule-based expert system” [6]. “The trust bus processes metadata to recommend analytics, preprocessing, and visualization methods that maximize user trust in different scenarios” [20]. Initially, actual domain experts can substitute for such an expert system [6].

This section has reviewed the relevant state of the art in order to derive suitable criteria for evaluating the trustworthiness of the DARIA ML algorithm in the interaction between individuals and systems, and it has outlined and discussed relevant related work. The following section outlines the process of establishing trustworthiness in DARIA based on the TAI-BDM Reference Model.

3. Establishment of Trustworthiness in DARIA

As described in detail in [2], “using big data for risk analysis of construction projects is a largely unexplored area”. “In this traditional industry, risk identification is often based either on so-called domain expert knowledge, in other words on experience, or on different statistical and quantitative analysis of individual of projects that have already been completed” [2,21]. “Tools, commonly used among construction managers and quantity surveyors, include but are not limited to checklists, cause analysis, SWOT-analysis [22], decision trees [23] and fault tree analysis [23], singular surveys, or multi-stage survey procedures (e.g., Delphi method)” [2]. “At best, such manual methods can only reveal the reasons for the success or failure of individual projects after they have been completed, whereas the main goal must be to reliably identify high-risk projects during their execution in order to mitigate risks and minimize financial losses” [2].

“When examining numerous construction projects, statistical patterns emerge. Using, e.g., STRABAG’s internal construction business data from its various infrastructure construction business units, it has already been proven that empirical project margins always follow an asymmetrical Laplace distribution [24], which is only determined by three parameters” [2]. According to [24], “these three parameters allow analysis, comparison, benchmarking and even trend analysis of the distribution” [2].

Probabilistic applications like the Monte Carlo simulation are interesting approaches in risk analysis because large amounts of data can be processed [25], “but they are more useful for analyzing input parameters that are not clearly defined” [6]. “While Monte Carlo simulations are well suited for dealing with uncertainty in individual input parameters, ML methods are the method of choice for classifying projects through pattern recognition” [1].

“Nowadays the utilization of ML is a promising approach to assist in decision making for risk assessment” [6,26].

“However, there are only a few approaches that are relevant for the construction industry” [6]. As outlined within [2], Gondia et al. [27] “developed two ML models: Decision trees and naive Bayes classifiers, which predict schedule risks in a construction project with an accuracy of 74.5% and 78.4%, respectively” [2]. Another ML approach is proposed by Yaseen et al. [23], “where a hybrid model is presented, which consists of a combination of a random forest and a genetic algorithm” [2]. “This model predicts the delay in a construction project in Iraq with an accuracy of 91.67%” [6]. Egwim et al. [28] use Ensemble Machine Learning Algorithms (EMLA) for the first time in the literature to predict time delays in construction projects and “prove that the use of ensemble algorithms for predicting delays in construction projects has greater predictive power compared to a single algorithm” [2].

“Currently, ML models are mainly being developed for predicting time delays, i.e. for schedule risks” [6]. “These continue to be a major influencing factor in construction projects, having a significant negative impact on both the industry and the global economy” [1].

As a part of transforming STRABAG into a data-driven organization, DARIA was brought to life with the idea to utilize ML algorithms to automatically predict the financial outcome based on commercial controlling data without using domain expert knowledge in order to potentially assist business domain experts in the mitigation of financial losses with an objective and data-driven approach. At that time, company-wide AI applications had not yet been applied. Accordingly, potential business domain experts of DARIA were not familiar with the usage of AI applications. Thus, the initial DARIA experiments were conducted without domain expert knowledge. Until this point, the first stable DARIA ML approach utilized financial project data of around 10,000 completed transportation infrastructure construction projects to train an XGBoost [

29] ML model. With a particular focus on the impact, the defined and implemented data-driven and AI2VIS4BigData-based [

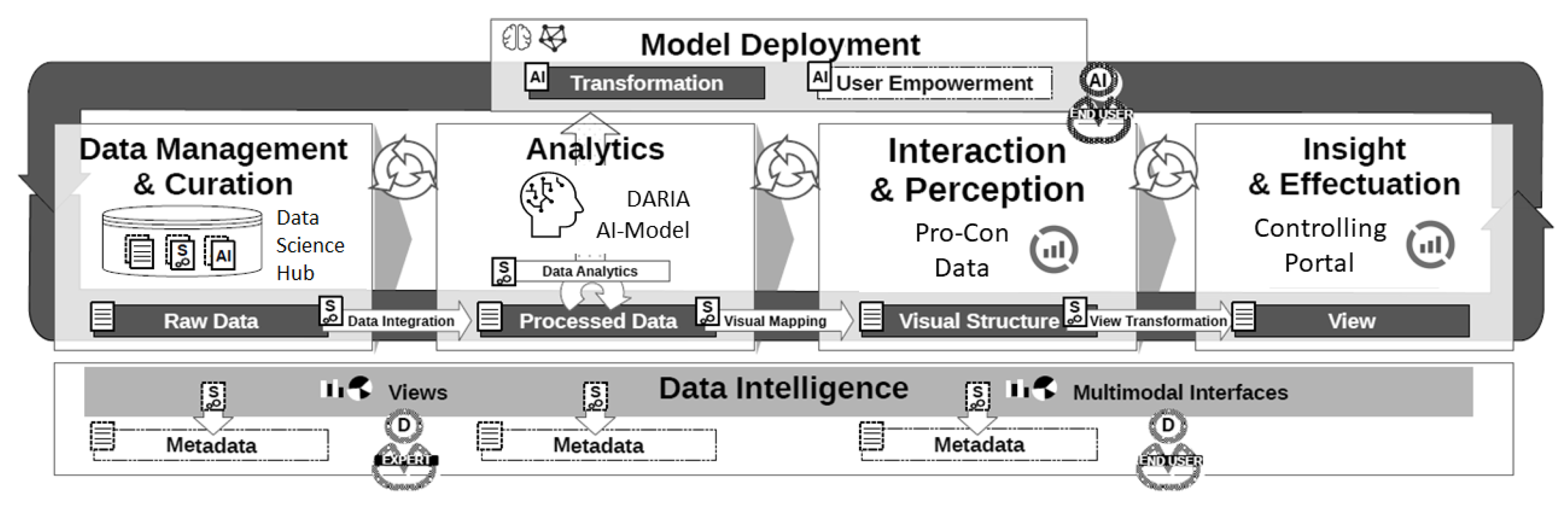

30] DARIA approach (c.f.

Figure 4) has initially been evaluated by means of a quantitative evaluation [

6].

DARIA has been integrated into an existing productive industrial organization system architecture [2]. However, “there is no direct interchange between the business domain experts and DARIA because the resulting risk predictions have been integrated into the existing standardized group-wide controlling applications as additional risk indicators” [2]. In this way, “the DARIA results could be easily evaluated by the aid of state-of-the-art statistical (AI-)algorithm performance metrics such as precision and recall” [2].

From a global perspective, DARIA’s ML model predicts the project outcome based on four classes (flop, negative, positive, top) [2]. “These classes are in alignment with STRABAG internally defined thresholds for project performance, which are based on financial results in relation to contract value” [2]. Regarding the aforementioned evaluation performance metrics and as outlined within [2], recall measures how well the model identifies true positives, i.e., out of all flop projects, how many were correctly identified, whilst precision answers the question “out of all projects predicted as flop, how many are actually flop, thus, providing insights about the quality of the positive predictions” [2].

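Precision and recall are defined as follows within [2]:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

where, for the flop class, TP denotes flop projects correctly predicted as flop, FP denotes projects of other classes predicted as flop, and FN denotes flop projects predicted as one of the other classes.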

“The earlier project risks can be identified, the greater the potential for successful mitigation” [2]. “Considering this as well as the relatively short duration of transportation infrastructure construction projects, DARIA focuses on the first 12 months of project execution” [2]. Therefore, and as outlined in Table 1, 3-, 6-, 9-, and 12-month ML models were implemented, trained, and discussed in [2].

During model development, the most crucial part of generating the ML model was intensive feature engineering. After hyperparameter tuning, a recall of 64.29% was achieved for the flop class with the 3-month ML model. The recall increased up to 89.29% with the 12-month ML model.

After this initial quantitative evaluation of the algorithm itself (cf. [6]), the trust building process of DARIA is described in detail. This includes the three dimensions of trustworthiness reproducibility, validity, and capability (cf. Figure 1), which had to be consistently considered during DARIA’s development and especially for its deployment into enterprise-wide production.

The first step in the development of the DARIA ML approach was to conduct various statistical experiments, e.g., in-depth time-series analysis, to identify the most promising variables for distinguishing construction projects with a high financial risk from non-risky projects. This led to a selection of variables that could be used for training a state-of-the-art neural network. The direct choice of a single algorithm was based on the consideration that an abundance of different algorithms in the first phase of DARIA would probably have overwhelmed the users and hence damaged trust, whereas the selection and disclosure of a single reliable state-of-the-art algorithm fosters trust. As is typical for the development and deployment of any AI solution, communicating the DARIA results to end users was one of the main project challenges. As humans respond much better to visualizations of complex facts than to a single metric describing them, visualizations were created and used to enable an initial trust building process.

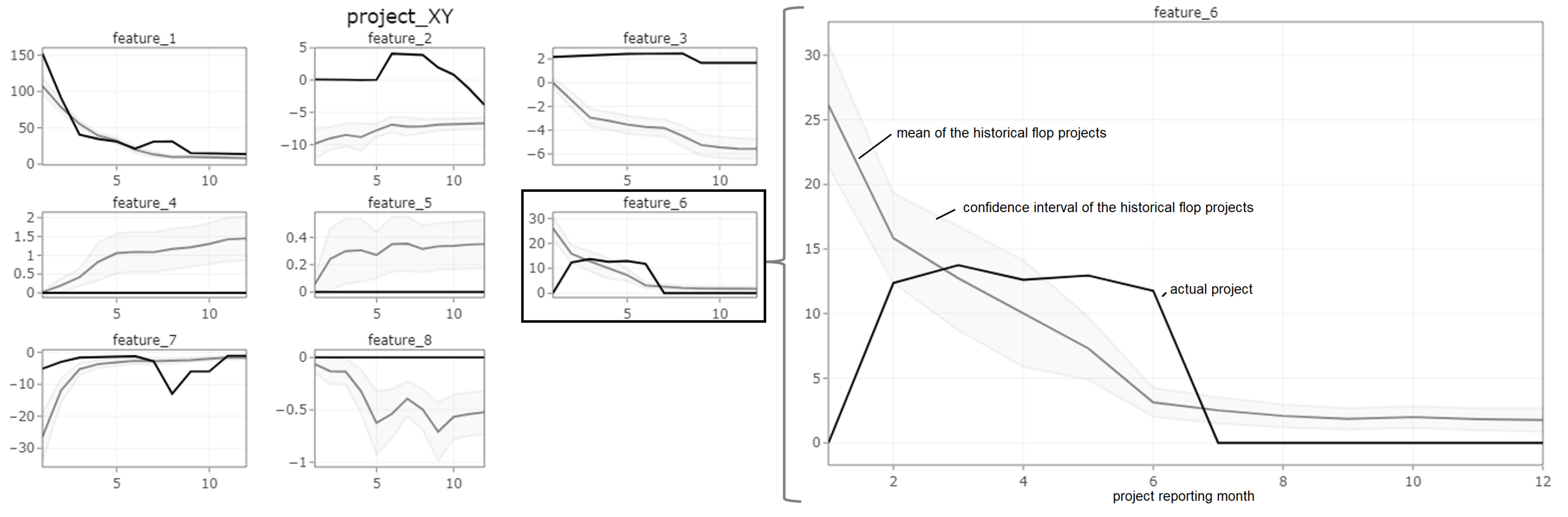

The first visualization is based on the fact that most of the end users of the solution are project controllers who regularly work with controlling data. This allows them to interpret typical time series of these data and to derive insights based on their experience. Therefore, a user-centered system design approach guided by the domain experts’ visual habits was chosen. As a result, a visualization depicting trends of relevant key figures (Figure 5) has been created.

To generate the overall data basis for this plot, the data need to be grouped by the following variables: variable_name, month_ongoing, and label, where variable_name represents the name of the respective KPI of the controlling data, month_ongoing represents the ongoing month of the construction project, and label represents a categorical variable with the possible values top, positive, negative, or flop in alignment with the STRABAG classes for project performance. Subsequently, the mean and the confidence interval are calculated for each group. All values are normalized as percentage values to make them comparable across different project sizes. This illustration enables the comparison of typical project controlling data (relevant key figures) of a specific project over time with the typical progression of the predicted class (flop in this illustration). In this way, business domain experts are empowered to gain insights based on their domain experience without understanding DARIA’s ML algorithm in detail, and the validity (cf. Figure 1) of DARIA can be attested.

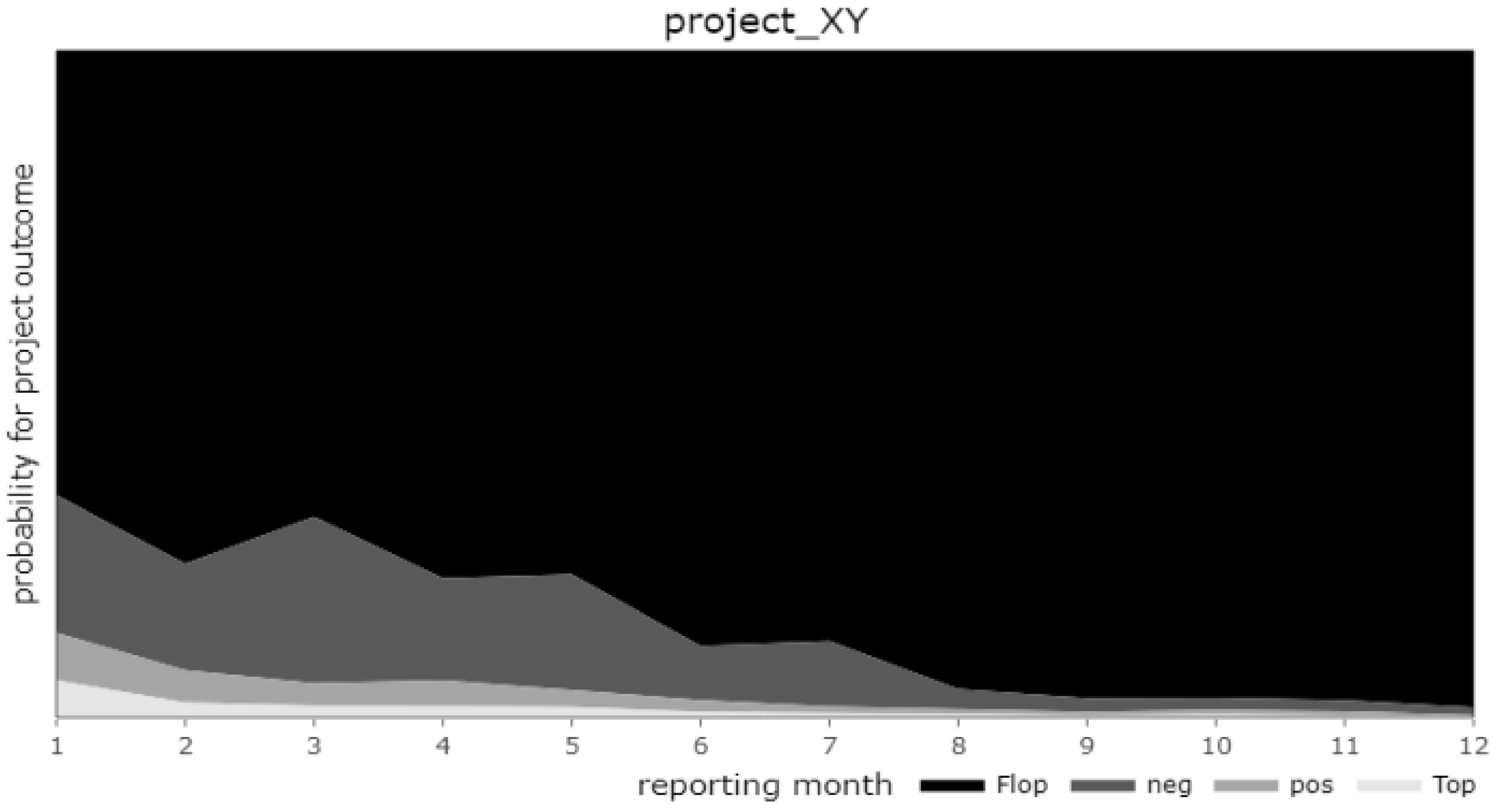

The second visualization (Figure 6) illustrates the development of the probabilities of the four classes top, positive, negative, and flop over the ongoing months of a construction project. This enables a concise interpretation of the results.

In this exemplary visualization, it can be clearly observed that the ML model already predicts the financial outcome as flop in the first month of project execution. Over time, DARIA’s predictions become more precise as the amount of available project data increases. This example illustrates typical patterns of the flop class.
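Reading a chart of monthly class probabilities can be sketched as follows: given hypothetical monthly probabilities for one project, the predicted class per month is taken as the most probable one, and the first month in which flop is predicted is reported. The argmax decision rule, the function names, and the data layout are assumptions made for this illustration.

```python
CLASSES = ("top", "positive", "negative", "flop")


def predicted_class(probs: dict) -> str:
    """Most probable class for one month (argmax decision rule, assumed)."""
    return max(CLASSES, key=lambda c: probs[c])


def first_flop_month(monthly_probs: dict):
    """Return the first ongoing month in which flop is the most probable
    class, or None if flop is never predicted."""
    for month in sorted(monthly_probs):
        probs = monthly_probs[month]
        # Sanity check: the four class probabilities should sum to one
        if abs(sum(probs[c] for c in CLASSES) - 1.0) > 1e-6:
            raise ValueError(f"probabilities for month {month} do not sum to 1")
        if predicted_class(probs) == "flop":
            return month
    return None
```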

These results also had to be presented to domain experts. In order to show the capability (cf. Figure 1) of DARIA’s ML model, both visualizations were subsequently included in a web-based dashboard, which allows dynamic use and evaluation. These visualizations enabled an intensive dialog with domain experts and contributed to a more comprehensive selection of feature variables based on specific domain knowledge. Considering the domain experts’ suggestions not only improved the ML model but also enhanced the trust of the business domain experts.

After the successful verification of validity and capability, and in alignment with the identified dimensions of trust and trustworthiness in the interaction between individuals and systems (cf. Figure 1), relevant business domain users highlighted DARIA’s reproducibility as a crucial factor for creating sustainable trustworthiness (RQ2). End users expect to receive identical results when using identical input data. As a consequence, DARIA was switched from neural networks to XGBoost to obtain reproducible training results [6]. Neural networks are often referred to as a “black box” that imitates the human brain and include many random processes during training, such as random weight initialization or dropout layers. XGBoost, on the other hand, is an ensemble of decision trees built sequentially, where each tree corrects the errors of its predecessors; it also supports randomization, e.g., by subsampling rows and columns. However, XGBoost offers the option of eliminating these random elements, hence making training results completely reproducible. Moreover, “results show that tree-based models remain state-of-the-art on medium-sized data (approx. 10k samples) even without accounting for their superior speed” [31]. In this way, the reproducibility (cf. Figure 1) of DARIA can be attested.

After DARIA’s ML model had been improved with the feedback of business domain experts (risk managers), a sufficient degree of trust had been obtained to provide this solution to selected end users (project controllers) within an initial, supervised test phase. In this test phase, the overall trust building process between end users and DARIA depended strongly on how accurately DARIA predicted the financial outcome of transportation infrastructure construction projects.

For the project controllers who tested DARIA, the number of false positives generated by DARIA’s ML algorithm was of significant importance. The introduced precision metric is generally a good indicator for this question. However, during the ML model development phase with the participation of domain experts, it became clear that there is a significant difference between false positives in which a negative project is predicted as a flop project and false positives in which a positive or even a top project is predicted as a flop project. Predicting a negative project as a flop project was deemed acceptable by the domain experts. Therefore, an adjusted precision metric that takes this distinction into account has been defined in [2].

Recall is generally suitable to answer the question, how many of all

flop projects could be detected at all. This was always a very important metric in DARIA, but it must be considered in relation to

precision, as these two metrics are in trade-off. Training an ML model with extraordinary

recall usually leads to poor

precision, and vice versa. As already outlined within [

2],

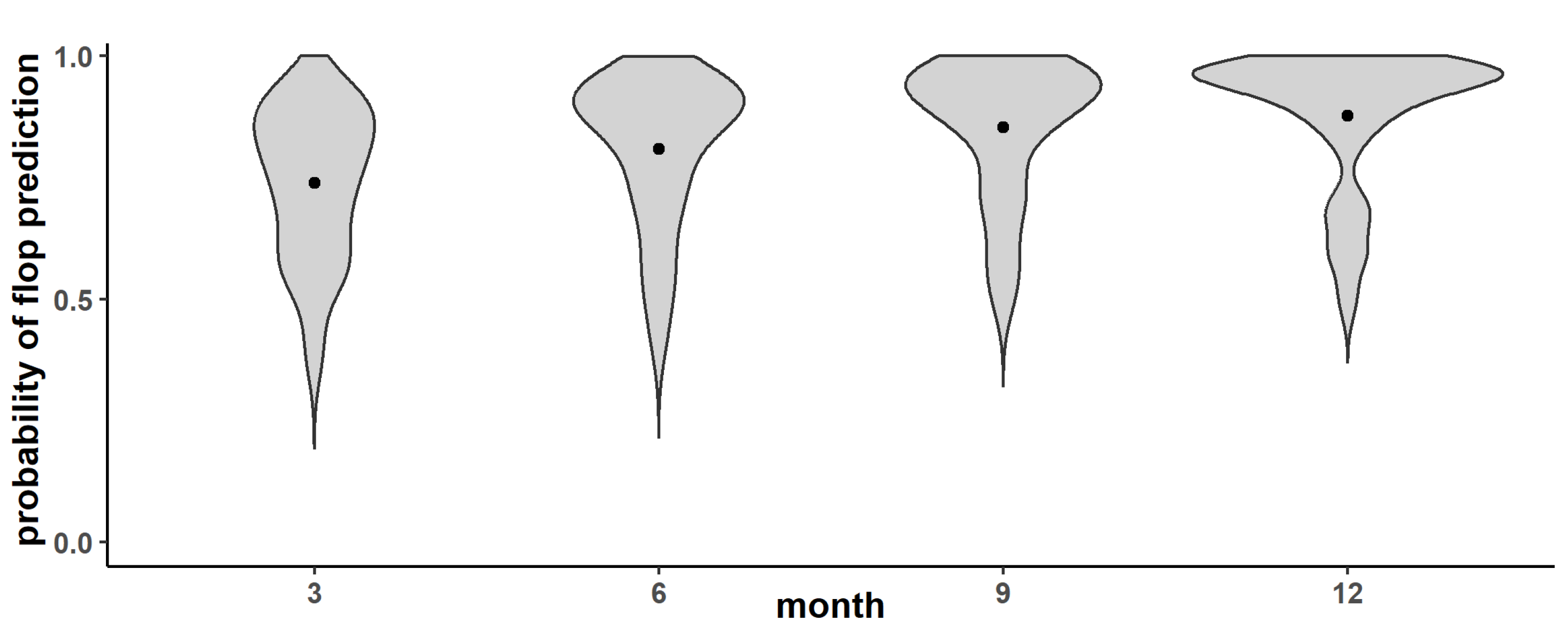

Table 2 shows the detailed

flop classifier results for the

adjusted precision for the 3-, 6-, 9-, and 12-month ML model and

Figure 7 shows the distribution of the adjusted precision (flop probability) for projects correctly predicted as flops for the months 3, 6, 9, and 12 [

2].

“It can be observed that in each violin plot the mean (represented by a black dot) is clearly above 0.5” [2]. “Moreover, shape of all four violin plots indicates that the data distribution is negatively skewed, meaning that the majority of values are above the mean” [2]. In conclusion, “it can be stated that correct flop predictions are made with a high degree of certainty, which increases for each later model in the time series” [2].

Because the defined and implemented DARIA approach (cf. Figure 4) “has been integrated into an existing productive industrial organization system architecture” [2], “there is no direct interchange between the business domain experts and DARIA” [2]. “The resulting risk predictions have been integrated into the existing standardized group-wide controlling applications as additional indicators” [2]. This decision supports the overall trust building process, as the STRABAG Controlling Portal is an existing and trusted system [2]. Moreover, “this strategy kickstarted the trust qualification process from the very beginning” [2].

Even though standardized, enterprise-wide software components within the STRABAG Group (Data Science Hub, Controlling Portal) are utilized to implement the data management & curation, insight & effectuation, and interaction & perception process stages, the decision for the (most important) AI modeling (analytics) process stage remained open [2]. In addition to the technical algorithm-related capabilities, the capability of easily deploying an endpoint, which can be used by the Controlling Portal to provide live predictions, is crucial for deploying DARIA into enterprise-wide production [2]. Accordingly, Databricks [32] on Microsoft Azure serves as the basis of the DARIA MLOps technology stack, whereas the endpoint deployment is realized via a deep integration of MLflow [33] into Databricks [2].

“MLflow covers all major parts of the ML life cycle, from automatic logging of the training of ML models to the deployment of an application programming interface (API) endpoint” [33]. MLflow also considers security and scalability, “which are crucial factors when deploying AI pilots into enterprise-wide production and hence contributes to the overall trustworthiness” [2].

In conclusion, suitable criteria to evaluate the trustworthiness of DARIA’s ML algorithm have been derived in this section, in line with the first goal of this paper (RQ1). Regarding the reproducibility of the internal state of DARIA’s ML model (RQ2), DARIA is based on the TAI-BDM Reference Model and therefore implements the concept of a trust bus; however, its current software architecture is only a step towards a full implementation of the trust bus, which can be identified as a remaining challenge for further research.

4. Evaluation

After an initial quantitative evaluation of the algorithm itself and an intensive qualitative evaluation based on the feedback of the business domain experts to demonstrate DARIA’s trustworthiness according to the derived dimensions validity, capability, and reproducibility, DARIA was exposed to selected test users (project controllers) within a supervised test phase. The overall trust building process between end users and DARIA was evaluated for the first time in this phase.

However, as there were no company-wide AI applications at that point in time, concerns and doubts about the trustworthiness of DARIA’s ML algorithm are certainly reasonable from an end user perspective, especially in cases where DARIA identifies risky projects while all conventional metrics within the STRABAG controlling system do not identify any problems. Therefore, in order to evaluate and further boost DARIA’s trustworthiness prior to its enterprise-wide deployment, an extensive pilot phase was conducted with the input of 30 business domain users (commercial division managers, project controllers, and construction managers) across 10 countries of the STRABAG Group (relevant countries with transportation infrastructure construction projects: DE, AT, PL, CZ, SK, HU, RS, HR, RO, and BG).

All participants evaluated DARIA’s prediction performance based on more than one hundred completed and ongoing transportation infrastructure construction projects (10 per country) by means of cognitive walkthrough sessions. A combination of structured questionnaires and several feedback rounds engaged DARIA’s business domain users, not only to gather insights on the ML model’s accuracy but also to foster a sense of collaborative development and thereby establish the overall trustworthiness of DARIA, even though DARIA has been integrated into the existing productive industrial organization system architecture without direct interchange with its users.

The questionnaire results show a strong alignment between DARIA’s predictions and the pilot users’ assessments. A total of 75% of the business domain users confirmed that DARIA’s predictions match their assessment of the tested projects. Moreover, they highlighted that the additional visualizations (cf. Figure 5 and Figure 6) supported the trust-building process. As outlined in Section 3, these visualizations facilitated dynamic use and evaluation. For example, model input variables such as financial result and working capital were frequently cited as accurate markers that reinforced the pilot users’ trust in DARIA’s predictions. The business domain users consistently reported that this alignment boosted their confidence, as the visualizations showed how DARIA reflects the quantitative criteria well known from their roles. Most importantly, the pilot users unanimously stated that they found the overall trends in the predictions correct and valuable. This underlines their belief in the validity, capability, and reproducibility of DARIA.

During this pilot phase, the business domain users also highlighted potential areas for improvement. Country-specific details, such as project contract and payment structures, could lead to incorrect predictions due to a misinterpretation of the working capital indicator. Moreover, construction managers discovered that winter pauses in project activity are not weighted sufficiently in the ML model, which leads to overly optimistic projections during inactive months. Thus, the optimization of the ML model (e.g., consideration of contract type and winter pauses) can be identified as a remaining challenge for further research.

Regardless of these areas for improvement, the business domain users unanimously acknowledged that no data-driven ML model can make completely accurate predictions from poor or manipulated data. This indicates that the pilot users developed calibrated trust in the model based on prior knowledge from their domain of expertise. In summary, both the feedback loop and the early involvement of business domain users during the pilot phase contributed greatly to the trust-building process of DARIA.

5. Conclusions and Outlook

As outlined in Section 1, the motivation of this research is based on [1], where the AI-based DARIA approach to identify financial risks in the execution phase of transportation infrastructure construction projects (cf. [2]) “has been introduced, presented, and discussed” [2]. To be more precise, this research represents the second part of [2] and was presented visually at the Innovate 2025—World AI, Automation, and Technology Forum conference [3].

“Although DARIA shows exceptional project risk identification results at an early project execution stage and has already been deployed into enterprise-wide production in all transportation infrastructure construction units of the STRABAG group” [2], “concern and doubts about the trustworthiness of DARIA’s ML algorithm are certainly possible, especially if DARIA identifies risky projects at a time when all other metrics within the STRABAG controlling system are still unremarkable” [2].

“If AI systems do not prove to be worthy of trust, their widespread acceptance and adoption will be hindered, and the potentially [...] economic benefits will not be fully realized” [5]. Regarding the first goal of this paper, the establishment of trustworthiness in DARIA, suitable criteria to qualify trustworthiness (validity, capability, and reproducibility) have been derived and evaluated (RQ1). With a particular focus on the reproducibility of the internal state of DARIA’s ML model (RQ2), “even though DARIA is based on TAI-BDM and therefore, implements the concept of a trust bus, its software architecture is a step towards a full implementation of the trust bus” [6], which can be identified as a first remaining challenge for further research.

“Even though DARIA has been integrated into the existing productive industrial organization system architecture” [2], the integration of DARIA’s ML model into the company’s existing processes took significantly longer than initially anticipated [2]. Because DARIA is the first company-wide, self-developed AI framework, a robust MLOps framework, a new data governance standard, and security protocols had to be established before DARIA could be deployed into enterprise-wide production.

Business domain experts during model development and the test phase, as well as further users during the pilot phase, developed calibrated trust in DARIA’s ML model based on prior knowledge from their domain of expertise; at the same time, they recognized potential areas for improvement. Country-specific details, such as project contract and payment structures, can lead to incorrect predictions due to a misinterpretation of the working capital indicator. Moreover, construction managers among the business domain users discovered that winter pauses in project activity are not adequately considered in the ML model, which leads to overly optimistic projections during inactive months. Thus, the optimization of the ML model (e.g., consideration of contract type and winter pauses) can be identified as a second remaining challenge for further research.

Moreover, “identifying risks for the financial outcome of a construction project as early as possible is crucial for successful mitigation and reduction in potential financial losses” [2]. “In the current version, DARIA is able to identify risks after three months of project execution on a trustworthy base” [2]. “Unfortunately, even if risky projects are identified at an early execution stage, this detection only enables a reduction in the potential loss (damage limitation)” [2]. Therefore, DARIA needs to be adjusted accordingly to be able “to identify risks prior to project start or even during the selection phase of potential construction projects” [2]. However, as already discussed in [2], “during both selection and tender phases of construction projects, no extensive financial reporting exists” [2]. Hence, the extension of DARIA’s ML model to both selection and tender phases can be identified as a third remaining challenge for further research.