Integrating Large Language Model and Logic Programming for Tracing Renewable Energy Use Across Supply Chain Networks

Abstract

1. Introduction

- Proposing a functional framework to support tracing renewable energy use across the supply chain network from the original equipment manufacturer perspective. Compared to consumer-oriented solutions, such as green energy trading, the proposed framework supports manufacturers in analyzing and managing diverse information (e.g., renewable energy use) within the supply chain context, thereby reducing reliance on blockchain-based solutions.

- Combining the LLM with domain knowledge to manage and analyze extensive unstructured information, providing a generic solution for tracing renewable energy across supply chain networks. Compared to existing works that rely on smart contracts with strictly pre-defined models, the LLM enables semantic and syntactic analysis of unstructured information and facilitates downstream tasks by generating structured responses.

- Using logic programming to support the traceability analysis of renewable energy across supply chain networks by formulating specifications in terms of rules and facts. Compared to formal verification, which involves exhaustive recursion in elements of smart contracts, logic programming offers a more flexible solution that allows end-users to refine and update specifications regarding the analyzed information from domain knowledge.

2. Related Work

2.1. Modeling and Managing Supply Chain Information

- (1) Knowledge-enabled approaches are heuristic-based solutions that analyze the input data via deductive learning (reasoning) over a set of defined rules [15]. These rules can be formulated as clauses based on expert experience and industrial standards. Each clause is composed of literals, which are atomic elements representing objects related to the key concepts of the supply chain. These clauses and literals can represent the ontology of a set of relational models. Within the ontology, the Terminology Box (TBox) defines concepts and their properties, and the Assertion Box (ABox) contains the statements (e.g., relationships) about the concepts defined in the TBox. A knowledge base, consisting of a TBox and an ABox, is a typical way to instantiate such an ontology [16,17]. To materialize the knowledge base, one common solution is to adopt knowledge graphs (KGs), which are graph-based models that represent relational data as entities and their interactions. For example, a heuristic-based solution is proposed in [18] to search for keywords and model the supply chain information. Specifically, the entities and interactions related to the keywords are structured as nodes and edges to construct the KG. Additionally, a decision-focused framework derived from a set of logic rules and specifications is used in lean supply chain management [19]. However, these heuristic-based approaches are often less flexible due to the strict specifications of their rules. Specifically, given the variety of input data representations, these specifications become obstacles to accurately interpreting the input data. While most existing tools use different query languages (e.g., SQL and CQL) to model and manage knowledge, they are often inefficient and inflexible at reasoning over relational data for tailored use cases [20].

- (2) Data-driven approaches are usually learning-enabled methods that learn patterns from extensive input data via inductive learning. In particular, Deep Neural Networks (DNNs) with extensive parameters can model spatial and temporal patterns of supply chain information by analyzing and extracting features from multivariate data in supply chains. For example, by analyzing numeric data related to the supply chain, Long Short-Term Memory (LSTM) is used to predict time-series data and detect anomalies across the supply chain [21]. Additionally, because of the implicit interactions within multivariate supply chain data, DNNs also make it possible to identify underlying relationships by analyzing complicated unstructured data (e.g., natural language). For example, combining the LSTM and an autoencoder supports the identification and extraction of entities and their relationships related to supply chain information [22]. Although data-driven methods are promising for supply chain management because they handle various data representations, their training-intensive nature usually requires extensive data, and collecting sufficient and balanced data is challenging in the field of supply chain management. Moreover, given the multiple rules needed to model supply chain networks, relying solely on data-driven solutions is insufficient to further exploit the intricate relationships.
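The TBox/ABox split and its materialization as a KG can be illustrated with a minimal Python sketch. The concept and relation names are borrowed from the example prompt in Section 4.2; the schema, the `Edge` class, and the `build_kg` helper are assumptions made for illustration and are not the implementation used in this work.

```python
from dataclasses import dataclass

# TBox (assumed schema): concepts and the relations allowed between them.
TBOX_CONCEPTS = {"Manufacturer", "Material", "Certification"}
TBOX_RELATIONS = {
    # relation name: (domain concept, range concept)
    "Process": ("Manufacturer", "Material"),
    "Certify_award": ("Manufacturer", "Certification"),
}


@dataclass(frozen=True)
class Edge:
    relation: str
    source: str
    target: str


def build_kg(instances, assertions):
    """Check ABox statements against the TBox and return (nodes, edges)."""
    for name, concept in instances.items():
        if concept not in TBOX_CONCEPTS:
            raise ValueError(f"unknown concept {concept!r} for {name!r}")
    edges = []
    for relation, source, target in assertions:
        if relation not in TBOX_RELATIONS:
            raise ValueError(f"unknown relation {relation!r}")
        domain, range_ = TBOX_RELATIONS[relation]
        if instances.get(source) != domain or instances.get(target) != range_:
            raise ValueError(f"{relation}({source}, {target}) violates the TBox")
        edges.append(Edge(relation, source, target))
    return dict(instances), edges


# ABox: concrete instances and the statements that relate them.
instances = {
    "149401-us.all.biz": "Manufacturer",
    "rubber": "Material",
    "ISO 9001": "Certification",
}
assertions = [
    ("Process", "149401-us.all.biz", "rubber"),
    ("Certify_award", "149401-us.all.biz", "ISO 9001"),
]
nodes, edges = build_kg(instances, assertions)
```

Rejecting statements that violate the schema is one way to picture the strictness discussed above: input that does not fit the predefined rules cannot be interpreted at all.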

2.2. Using LLMs for Traceability Analysis Across Applications

- (1) Across unstructured and structured data. This kind of traceability analysis usually requires analyzing unstructured data, such as natural language representations, and tracing their potential links to structured models that contain a set of specifications (e.g., domain-specific models). For example, an LLM-based framework is proposed for the automotive industry to generate functional safety traceability by analyzing requirements [30]. Given the extensive safety requirements provided by domain experts, LLMs enable traceability analysis by linking these natural language requirements to potential functional specifications defined within system architectural models.

- (2) Within structured data. For example, an LLM-based approach is used to recover traceability between functional requirements and goals, both represented in structured data formats (e.g., JSON files), within software engineering to mitigate potential threats [31]. Specifically, the LLMs trace the requirements to the goals by analyzing graph-based models of virtual interior designs for software systems.

- (3) Within unstructured data. This approach involves a conversation-based agent that processes queries and responds in natural language. In the field of blockchain networks, an LLM-based interface is designed for achieving farm-to-fork traceability [32]. Specifically, this work proposes the use of retrieval-augmented generation (RAG) to enhance the traceability generated by the LLMs by drawing on a knowledge base that contains domain knowledge. As a result, the LLM-based agent provides human-understandable traceability analysis in response to user queries.

2.3. Using Formal Logic to Support LLM Inference

3. Methodology

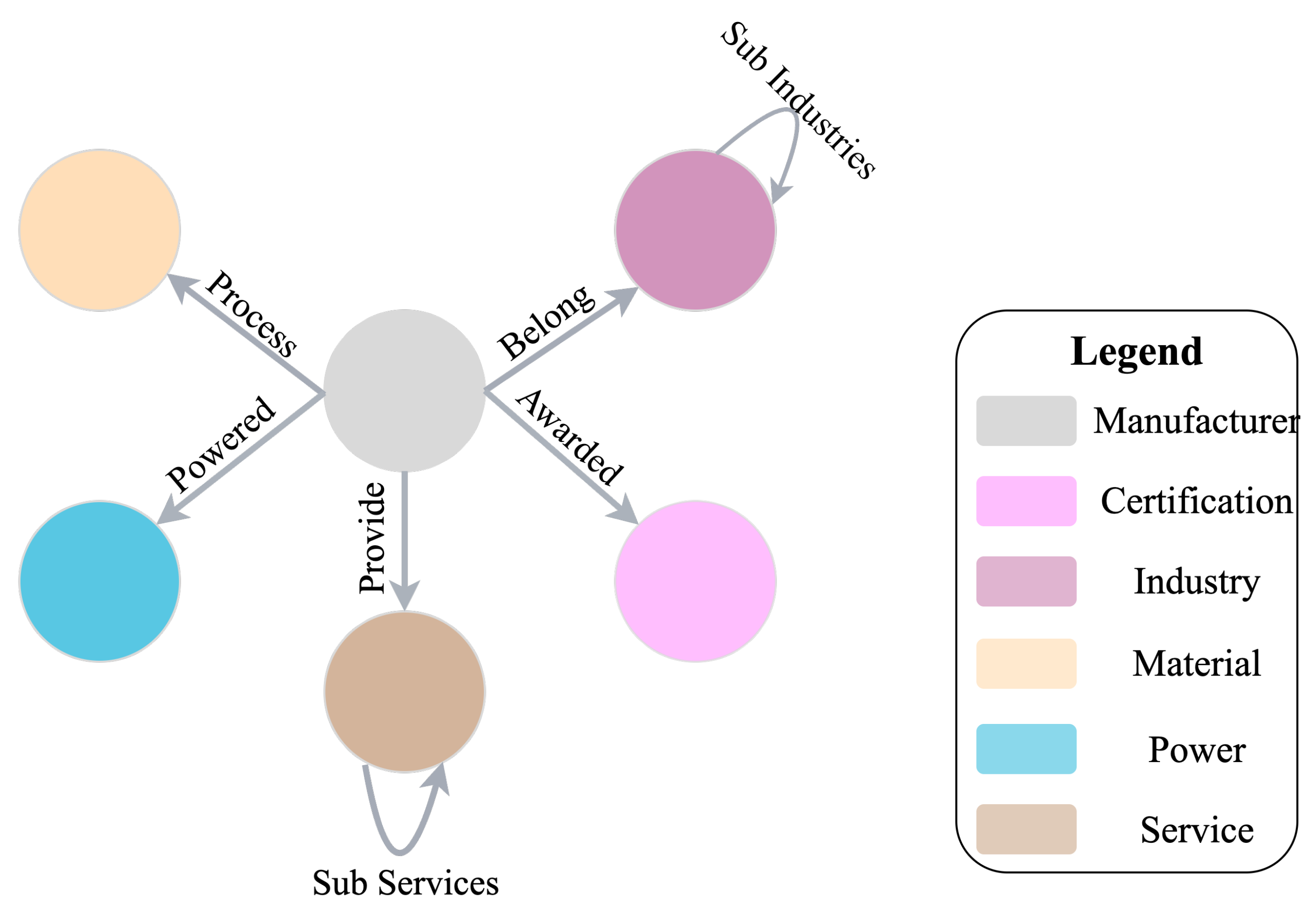

3.1. Modeling the KG via Knowledge-Enabled Methods

3.2. Generating Responses via LLMs by Retrieving from the Knowledge Base

3.3. Tracing the Use of Renewable Energy via Logic Programming

4. Case Study

4.1. Creation of the Graph-Based Knowledge Base

4.2. Structured Response Generation via RAG-Based LLMs

- You are an expert AI assistant specializing in supply chain management. Your task is to give responses based on the retrieved graph-based models.

- The retrieved graph-based models should not more than 2-hops depth. If the retrieved models cannot directly match the queries, we need to infer the possible results based on the known situations.

- After generating the responses regarding the retrieved graph-based models, convert the generated responses following this format: Stereotype(Node Name), Relationship(Node Name, Node Name).

- For example, the manufacturer 149401-us.all.biz enables us to process rubber, and it certifies ISO 9001. The expected output is Manufacturer (149401-us.all.biz). Certification (ISO 9001). Material (rubber). Certify_award (149401-us.all.biz, ISO 9001). Process (149401-us.all.biz, rubber).

- Given specific services, which manufacturers can provide this service?
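The output format requested in the prompt, Stereotype(Node Name) and Relationship(Node Name, Node Name), is regular enough to be parsed mechanically before it is passed to logic programming. The following is a minimal sketch of such a step, not the authors' implementation: the regular expression, the `parse_response` and `to_prolog` helpers, and the choice to lowercase predicate names and quote every argument as a Prolog atom are all assumptions.

```python
import re

# One fact looks like: Name (arg) or Name (arg1, arg2), optionally followed by "."
FACT_PATTERN = re.compile(r"\s*([A-Za-z_]\w*)\s*\(([^()]*)\)\s*\.?")

EXAMPLE = (
    "Manufacturer (149401-us.all.biz). Certification (ISO 9001). "
    "Material (rubber). Certify_award (149401-us.all.biz, ISO 9001). "
    "Process (149401-us.all.biz, rubber)."
)


def parse_response(text):
    """Split a structured LLM response into (name, args) tuples."""
    facts = []
    for match in FACT_PATTERN.finditer(text):
        name = match.group(1)
        args = tuple(arg.strip() for arg in match.group(2).split(","))
        facts.append((name, args))
    return facts


def to_prolog(fact):
    """Render one fact as a Prolog clause with quoted atoms (assumed convention)."""
    name, args = fact
    quoted = ", ".join(
        "'" + arg.replace("\\", "\\\\").replace("'", "\\'") + "'" for arg in args
    )
    # Prolog predicate names must start with a lowercase letter.
    return f"{name.lower()}({quoted})."


facts = parse_response(EXAMPLE)
clauses = [to_prolog(fact) for fact in facts]
for clause in clauses:
    print(clause)
```

Quoting every argument keeps names such as `149401-us.all.biz` or `ISO 9001` from being read as arithmetic expressions or variables by a Prolog parser.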

4.3. Traceability Analysis via Logic Programming

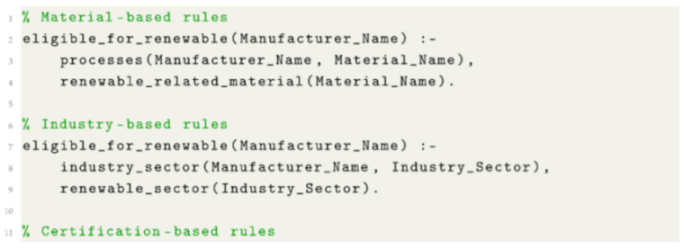

Listing 1. Prolog rules to define the rules.

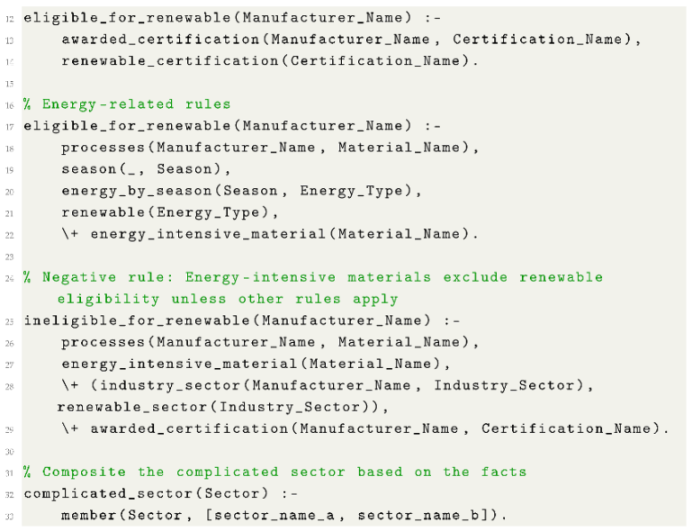

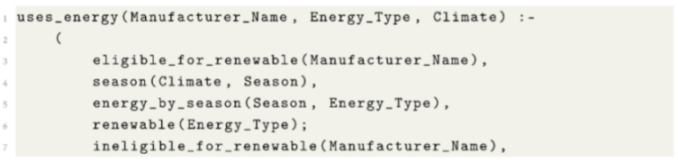

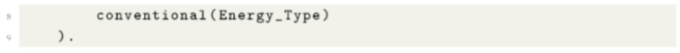

Listing 2. Synthesized logics to support the traceability of using renewable energy.
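The rules of Listings 1 and 2 are not reproduced in the sketch below. It is a hypothetical illustration of the kind of multi-step inference that logic programming supports: a recursive "upstream supplier" rule evaluated by fixpoint iteration, followed by a filter on declared renewable energy use. The predicate names, the supplier identifiers, and the facts are all invented for the example.

```python
# Hypothetical facts: (supplier, buyer) pairs and suppliers with declared
# renewable energy use. None of these come from the case-study data.
SUPPLIES = {
    ("tier2_steel", "tier1_parts"),
    ("tier1_parts", "oem"),
    ("tier1_paint", "oem"),
}
USES_RENEWABLE = {"tier2_steel", "tier1_paint"}


def upstream_suppliers(supplies, buyer):
    """Return all direct and indirect suppliers of `buyer`.

    Mirrors the recursive Prolog clauses
        upstream(S, B) :- supplies(S, B).
        upstream(S, B) :- supplies(S, T), upstream(T, B).
    but iterates to a fixpoint, so cyclic supply relations terminate.
    """
    reached = set()
    frontier = {buyer}
    while frontier:
        # Suppliers of the current frontier that have not been seen yet.
        frontier = {s for (s, b) in supplies if b in frontier} - reached
        reached |= frontier
    return reached


# Suppliers in the OEM's upstream network with declared renewable energy use.
traced = upstream_suppliers(SUPPLIES, "oem") & USES_RENEWABLE
print(sorted(traced))
```

Because end-users can add or replace such rules without retraining any model, this style of specification is where the flexibility claimed for logic programming comes from.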

4.4. Comparative Studies

5. Conclusion and Future Work

- While the proposed framework demonstrates the use of logic programming with rules derived from commonsense knowledge, refining these rules with expert knowledge of the renewable energy domain could further improve the efficiency of traceability analysis. For example, contract-based green power certifications often incorporate formal logic rules, whose definition and specification could be integrated into logic programming to enhance the proposed framework with sound and complete logical inference. In addition, to ensure the completeness and rigor of the facts generated by the LLM, their alignment still needs to be verified using syntax checkers (e.g., data validators or compilers) in future work.

- While we present several types of rules to support logical reasoning, future work could extend these rules by incorporating additional collected information and applying various techniques to interpret this information into Prolog-supported logical representations. Additionally, the formulation of the rules can further optimize the efficiency of Prolog. With more clearly defined rules, Prolog compilation can mitigate redundant iterations.

- While Prolog provides deterministic logical inference for traceability analysis, renewable energy usage often exhibits probabilistic and statistical characteristics, making Prolog less effective for certain cases. For example, geographical information, including the location and country of suppliers, can provide a statistical perspective on renewable energy usage. ProbLog, which extends Prolog with probabilistic reasoning, supports incorporating these features.

- While the proposed framework follows a sequential pipeline from question analysis to logic programming, the results inferred by Prolog can further enrich and augment the graph-based models in the knowledge base because logical reasoning is sound and precise. For example, defining rules through logic programming provides a flexible way to investigate novel relationship types that were not initially present or specified in the knowledge base.

- While the proposed framework shows promising performance on the current dataset, further optimization could focus on cloud-based or distributed deployment of the knowledge base to reduce computational cost as the size of the knowledge base increases.
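The probabilistic extension mentioned above can be illustrated with a small worked example. In ProbLog, when a query has several proofs that each rest on a different independent probabilistic fact, the query succeeds with the probability that at least one of those facts holds, i.e., a noisy-or. The Python sketch below computes that combination; the two evidence probabilities are invented for illustration and are not estimates from this work.

```python
import math


def noisy_or(probabilities):
    """Probability that at least one of several independent facts holds."""
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"not a probability: {p}")
    return 1.0 - math.prod(1.0 - p for p in probabilities)


# Hypothetical evidence for one supplier: the share of renewables in the
# national grid of its country, and a regional certificate scheme.
evidence = [0.6, 0.5]
p_renewable = noisy_or(evidence)  # 1 - (1 - 0.6) * (1 - 0.5)
print(round(p_renewable, 2))
```

A deterministic Prolog rule would have to treat each piece of evidence as either true or false, whereas this combination keeps the uncertainty of location-based indicators visible in the result.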

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Pasgaard, M.; Strange, N. A quantitative analysis of the causes of the global climate change research distribution. Glob. Environ. Chang. 2013, 23, 1684–1693.

- International Energy Agency. Global Energy Review 2025; International Energy Agency: Paris, France, 2025; Available online: https://www.iea.org/reports/global-energy-review-2025 (accessed on 9 September 2025).

- Wintergreen, J.; Delaney, T. ISO 14064, international standard for GHG emissions inventories and verification. In Proceedings of the 16th Annual International Emissions Inventory Conference, Raleigh, NC, USA, 26 April 2007.

- Aristizábal-Alzate, C.E.; González-Manosalva, J.L. Application of NTC-ISO 14064 standard to calculate the Greenhouse Gas emissions and Carbon Footprint of ITM’s Robledo campus. Dyna 2021, 88, 88–94.

- Panwar, N.L.; Kaushik, S.C.; Kothari, S. Role of renewable energy sources in environmental protection: A review. Renew. Sustain. Energy Rev. 2011, 15, 1513–1524.

- Siddi, M. The European Green Deal: Assessing its current state and future implementation. In Upi Report; United Press International: Washington, DC, USA, 1 January 2020; Volume 114.

- Belchior, R.; Vasconcelos, A.; Guerreiro, S.; Correia, M. A survey on blockchain interoperability: Past, present, and future trends. ACM Comput. Surv. (CSUR) 2021, 54, 1–41.

- Tolmach, P.; Li, Y.; Lin, S.W.; Liu, Y.; Li, Z. A survey of smart contract formal specification and verification. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.

- Liu, Q.; Fang, D. Deceptive greenwashing by retail electricity providers under renewable portfolio standards: The impact of market transparency. Energy Policy 2025, 202, 114591.

- Liu, D.; Jiang, Y.; Peng, C.; Jian, J.; Zheng, J. Can green certificates substitute for renewable electricity subsidies? A Chinese experience. Renew. Energy 2024, 222, 119861.

- Li, A.; Choi, J.A.; Long, F. Securing smart contract with runtime validation. In Proceedings of the 41st ACM SIGPLAN Conference on Programming Language Design and Implementation, London, UK, 15–20 June 2020; pp. 438–453.

- Jia, R.; Li, Y.; Zhou, Y.; Gao, M.; Sun, H.; Jiang, W. Design and implementation of smart contracts for power networked command system. In Proceedings of the 2024 8th International Conference on Electrical, Mechanical and Computer Engineering (ICEMCE), Xi’an, China, 25–27 October 2024; pp. 881–886.

- Abdirad, M.; Krishnan, K. Industry 4.0 in logistics and supply chain management: A systematic literature review. Eng. Manag. J. 2021, 33, 187–201.

- Sheth, A.; Kusiak, A. Resiliency of smart manufacturing enterprises via information integration. J. Ind. Inf. Integr. 2022, 28, 100370.

- Xiao, F.; Li, C.M.; Luo, M.; Manya, F.; Lü, Z.; Li, Y. A branching heuristic for SAT solvers based on complete implication graphs. Sci. China Inf. Sci. 2019, 62, 72103.

- Tiddi, I.; Schlobach, S. Knowledge graphs as tools for explainable machine learning: A survey. Artif. Intell. 2022, 302, 103627.

- Su, P.; Kang, S.; Tahmasebi, K.N.; Chen, D. Enhancing safety assurance for automated driving systems by supporting operation simulation and data analysis. In Proceedings of the ESREL 2023, 33rd European Safety and Reliability Conference, Southampton, UK, 3–8 September 2023.

- Guo, L.; Yan, F.; Li, T.; Yang, T.; Lu, Y. An automatic method for constructing machining process knowledge base from knowledge graph. Robot. Comput.-Integr. Manuf. 2022, 73, 102222.

- Liu, S.; Leat, M.; Moizer, J.; Megicks, P.; Kasturiratne, D. A decision-focused knowledge management framework to support collaborative decision making for lean supply chain management. Int. J. Prod. Res. 2013, 51, 2123–2137.

- Junghanns, M.; Kießling, M.; Averbuch, A.; Petermann, A.; Rahm, E. Cypher-based graph pattern matching in Gradoop. In Proceedings of the Fifth International Workshop on Graph Data-management Experiences & Systems, Chicago, IL, USA, 19 May 2017; pp. 1–8.

- Nguyen, H.D.; Tran, K.P.; Thomassey, S.; Hamad, M. Forecasting and Anomaly Detection approaches using LSTM and LSTM Autoencoder techniques with the applications in supply chain management. Int. J. Inf. Manag. 2021, 57, 102282.

- Kumar, A.; Starly, B. “FabNER”: Information extraction from manufacturing process science domain literature using named entity recognition. J. Intell. Manuf. 2022, 33, 2393–2407.

- Su, P.; Xu, R.; Quan, Y.; Chen, D. Leveraging large language models for health management in cyber-physical systems. In IET Conference Proceedings CP927; IET: Stevenage, UK, 2025; Volume 2025, pp. 91–97.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. DeepSeek-V3 technical report. arXiv 2024, arXiv:2412.19437.

- Wu, T.; He, S.; Liu, J.; Sun, S.; Liu, K.; Han, Q.L.; Tang, Y. A brief overview of ChatGPT: The history, status quo and potential future development. IEEE/CAA J. Autom. Sin. 2023, 10, 1122–1136.

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223.

- Shazeer, N.; Mirhoseini, A.; Maziarz, K.; Davis, A.; Le, Q.; Hinton, G.; Dean, J. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. arXiv 2017, arXiv:1701.06538.

- Pope, R.; Douglas, S.; Chowdhery, A.; Devlin, J.; Bradbury, J.; Heek, J.; Xiao, K.; Agrawal, S.; Dean, J. Efficiently scaling transformer inference. Proc. Mach. Learn. Syst. 2023, 5, 606–624.

- Bonner, M.; Zeller, M.; Schulz, G.; Savu, A. LLM-based Approach to Automatically Establish Traceability between Requirements and MBSE. In Proceedings of the INCOSE International Symposium, Dublin, Ireland, 2–6 July 2024; Wiley Online Library: Hoboken, NJ, USA, 2024; Volume 34, pp. 2542–2560.

- Hassine, J. An LLM-based approach to recover traceability links between security requirements and goal models. In Proceedings of the 28th International Conference on Evaluation and Assessment in Software Engineering, Salerno, Italy, 18–21 June 2024; pp. 643–651.

- Benzinho, J.; Ferreira, J.; Batista, J.; Pereira, L.; Maximiano, M.; Távora, V.; Gomes, R.; Remédios, O. LLM Based Chatbot for Farm-to-Fork Blockchain Traceability Platform. Appl. Sci. 2024, 14, 8856.

- Xie, Y.; Xu, Z.; Kankanhalli, M.S.; Meel, K.S.; Soh, H. Embedding symbolic knowledge into deep networks. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Xie, Y.; Zhou, F.; Soh, H. Embedding symbolic temporal knowledge into deep sequential models. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 4267–4273.

- Yu, D.; Yang, B.; Liu, D.; Wang, H.; Pan, S. A survey on neural-symbolic learning systems. Neural Netw. 2023, 166, 105–126.

- Di, Z.; Zhang, C.; Lv, H.; Cui, L.; Liu, L. LoRP: LLM-based Logical Reasoning via Prolog. Knowl.-Based Syst. 2025, 327, 114140.

- Bashir, A.; Peng, R.; Ding, Y. Logic-infused knowledge graph QA: Enhancing large language models for specialized domains through Prolog integration. Data Knowl. Eng. 2025, 157, 102406.

- Peng, B.; Zhu, Y.; Liu, Y.; Bo, X.; Shi, H.; Hong, C.; Zhang, Y.; Tang, S. Graph retrieval-augmented generation: A survey. arXiv 2024, arXiv:2408.08921.

- Su, P.; Chen, D. Designing a knowledge-enhanced framework to support supply chain information management. J. Ind. Inf. Integr. 2025, 47, 100874.

- Kosasih, E.E.; Margaroli, F.; Gelli, S.; Aziz, A.; Wildgoose, N.; Brintrup, A. Towards knowledge graph reasoning for supply chain risk management using graph neural networks. Int. J. Prod. Res. 2024, 62, 5596–5612.

- Li, Y.; Liu, X.; Starly, B. Manufacturing service capability prediction with Graph Neural Networks. J. Manuf. Syst. 2024, 74, 291–301.

- International Organization for Standardization (ISO). ISO 9001:2015; Quality Management Systems–Requirements. ISO: Geneva, Switzerland, 2015. Available online: https://www.iso.org/standard/62085.html (accessed on 17 September 2025).

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Bahr, L.; Wehner, C.; Wewerka, J.; Bittencourt, J.; Schmid, U.; Daub, R. Knowledge graph enhanced retrieval-augmented generation for failure mode and effects analysis. J. Ind. Inf. Integr. 2025, 45, 100807.

- Shu, Y.; Yu, Z.; Li, Y.; Karlsson, B.F.; Ma, T.; Qu, Y.; Lin, C.Y. Tiara: Multi-grained retrieval for robust question answering over large knowledge bases. arXiv 2022, arXiv:2210.12925.

- Dong, Q.; Li, L.; Dai, D.; Zheng, C.; Ma, J.; Li, R.; Xia, H.; Xu, J.; Wu, Z.; Liu, T.; et al. A survey on in-context learning. arXiv 2022, arXiv:2301.00234.

- International Energy Agency. Steel and Aluminium; International Energy Agency: Paris, France, 2023; Available online: https://www.iea.org/reports/steel-and-aluminium (accessed on 9 September 2025).

- Capodaglio, A.G. Developments and Issues in Renewable Ecofuels and Feedstocks. Energies 2024, 17, 3560.

- Tang, J.; Xiao, X.; Han, M.; Shan, R.; Gu, D.; Hu, T.; Li, G.; Rao, P.; Zhang, N.; Lu, J. China’s sustainable energy transition path to low-carbon renewable infrastructure manufacturing under green trade barriers. Sustainability 2024, 16, 3387.

- International Organization for Standardization (ISO). ISO 14001:2015; Environmental Management Systems–Requirements with Guidance for Use. ISO: Geneva, Switzerland, 2015. Available online: https://www.iso.org/standard/60857.html (accessed on 16 October 2025).

- Ikram, M.; Zhang, Q.; Sroufe, R.; Shah, S.Z.A. Towards a sustainable environment: The nexus between ISO 14001, renewable energy consumption, access to electricity, agriculture and CO2 emissions in SAARC countries. Sustain. Prod. Consum. 2020, 22, 218–230.

- Jiang, H.; Yao, L.; Qin, J.; Bai, Y.; Brandt, M.; Lian, X.; Davis, S.J.; Lu, N.; Zhao, W.; Liu, T.; et al. Globally interconnected solar-wind system addresses future electricity demands. Nat. Commun. 2025, 16, 4523.

- Yan, H.; Yang, J.; Wan, J. KnowIME: A system to construct a knowledge graph for intelligent manufacturing equipment. IEEE Access 2020, 8, 41805–41813.

- Supply Chain Data Set for Data Analytics Project Portfolio. Available online: https://www.kaggle.com/datasets/shivaiyer129/supply-chain-data-set (accessed on 17 September 2025).

- DataCo Smart Supply Chain for Big Data Analysis. Available online: https://www.kaggle.com/datasets/shashwatwork/dataco-smart-supply-chain-for-big-data-analysis (accessed on 17 September 2025).

- Supply Chain Management for Car. Available online: https://www.kaggle.com/datasets/prashantk93/supply-chain-management-for-car/data (accessed on 17 September 2025).

- Lu, Y.; Shen, M.; Wang, H.; Wang, X.; van Rechem, C.; Fu, T.; Wei, W. Machine learning for synthetic data generation: A review. arXiv 2023, arXiv:2302.04062.

- Su, P. Supporting Self-Management in Cyber-Physical Systems by Combining Data-Driven and Knowledge-Enabled Methods. Ph.D. Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, 2025.

- Miller, J.J. Graph database applications and concepts with Neo4j. In Proceedings of the Southern Association for Information Systems Conference, Atlanta, GA, USA, 23–24 March 2013; Volume 2324, pp. 141–147.

- Green, A.; Guagliardo, P.; Libkin, L.; Lindaaker, T.; Marsault, V.; Plantikow, S.; Schuster, M.; Selmer, P.; Voigt, H. Updating graph databases with Cypher. In Proceedings of the 45th International Conference on Very Large Data Bases (VLDB), Los Angeles, CA, USA, 26–30 August 2019; Volume 12, pp. 2242–2254.

- Francis, N.; Green, A.; Guagliardo, P.; Libkin, L.; Lindaaker, T.; Marsault, V.; Plantikow, S.; Rydberg, M.; Selmer, P.; Taylor, A. Cypher: An evolving query language for property graphs. In Proceedings of the 2018 International Conference on Management of Data, Houston, TX, USA, 10–15 June 2018; pp. 1433–1445.

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997.

- Luo, L.; Zhao, Z.; Haffari, G.; Li, Y.F.; Gong, C.; Pan, S. Graph-constrained reasoning: Faithful reasoning on knowledge graphs with large language models. arXiv 2024, arXiv:2410.13080.

- Bast, H.; Haussmann, E. More accurate question answering on Freebase. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, Melbourne, Australia, 18–23 October 2015; pp. 1431–1440.

- Wielemaker, J.; Schrijvers, T.; Triska, M.; Lager, T. SWI-Prolog. Theory Pract. Log. Program. 2012, 12, 67–96.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Zhang, J. Graph-toolformer: To empower LLMs with graph reasoning ability via prompt augmented by ChatGPT. arXiv 2023, arXiv:2304.11116.

- Pan, L.; Albalak, A.; Wang, X.; Wang, W.Y. Logic-LM: Empowering large language models with symbolic solvers for faithful logical reasoning. arXiv 2023, arXiv:2305.12295.

| Components | Advantages |
|---|---|
| Graph-Based Knowledge Base | (1) Graph-based models support the relational data within supply chain networks (2) Knowledge base with graph-based models provides an interpretable solution |
| RAG-Based LLM | (1) Flexible analysis of input queries with natural language representations (2) Mitigation of hallucination via retrieving domain knowledge |
| Logic Programming | (1) A flexible solution to analyze traceability regarding each output from LLM (2) Support of multiple step reasoning via user-specific rules |

| Metric | Our Framework | RAG-Based LLM | LLM with CoT |
|---|---|---|---|
| Precision | 0.73 | 0.52 | 0.59 |
| Recall | 1.00 | 0.55 | 0.65 |
| F1-score | 0.84 | 0.53 | 0.57 |
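For readers who want to relate the three metrics in the comparison table, the F1-score is conventionally the harmonic mean of precision and recall. The following minimal sketch applies that formula to the first results column (precision 0.73, recall 1.00); the `f1_score` helper is written for this illustration and is not taken from the evaluation code of this work.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall from the first results column of the table.
print(round(f1_score(0.73, 1.00), 2))
```

An F1-score that is averaged per query need not equal the harmonic mean of the averaged precision and recall. For instance, the same formula applied to the last results column (0.59, 0.65) gives about 0.62 rather than the reported 0.57, and the table does not state how the scores were aggregated.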

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, P.; Xu, R.; Wu, W.; Chen, D. Integrating Large Language Model and Logic Programming for Tracing Renewable Energy Use Across Supply Chain Networks. Appl. Syst. Innov. 2025, 8, 160. https://doi.org/10.3390/asi8060160