A QR-Enabled Multi-Participant Quiz System for Educational Settings with Configurable Timing

Abstract

1. Introduction

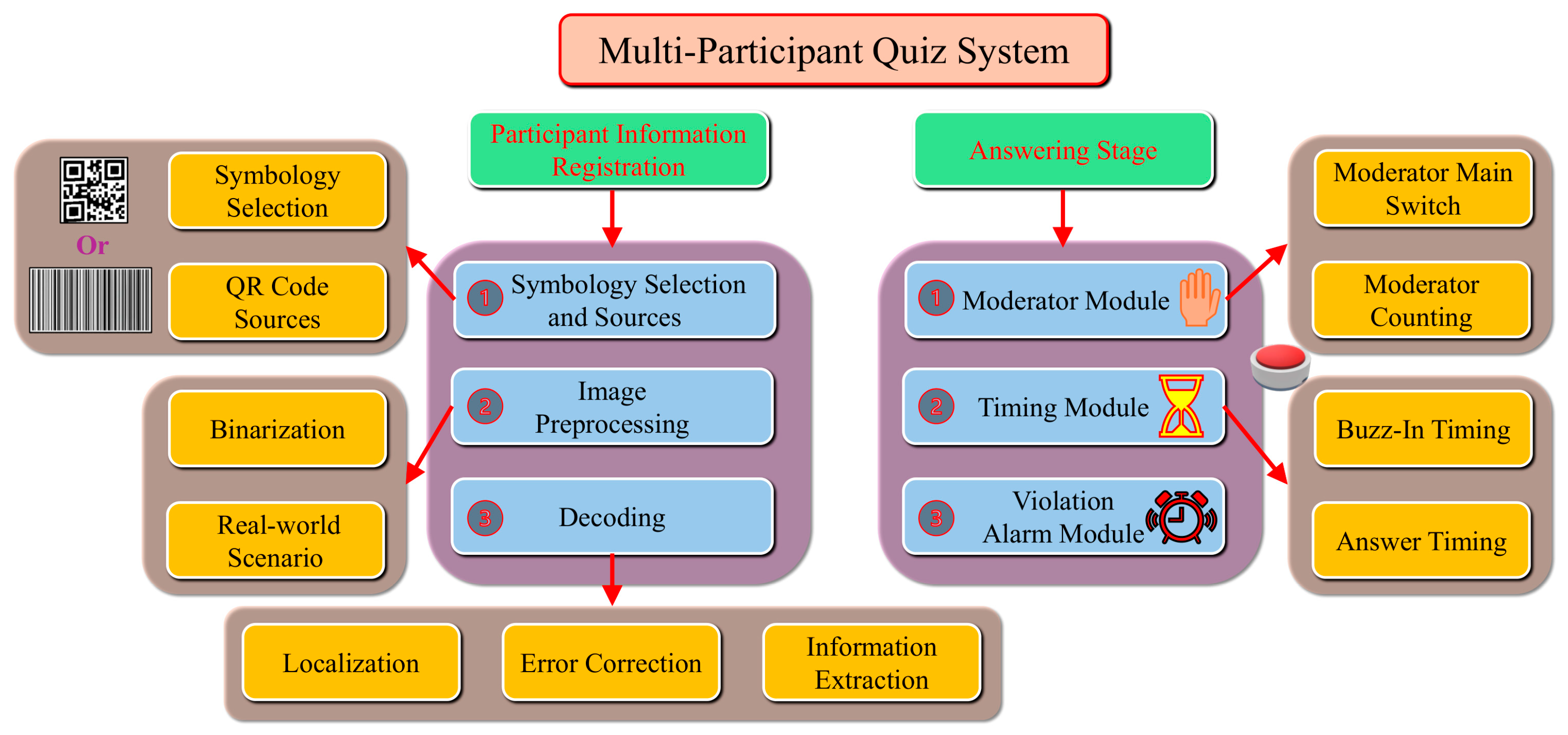

2. System Overview

3. Participant Information Registration

3.1. Symbology Selection and Sources

3.1.1. Symbology Selection

3.1.2. QR Code Sources

3.2. Image Preprocessing

3.2.1. Binarization
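This text does not say which thresholding rule the system uses. The sketch below assumes a single global threshold chosen by Otsu's method, a common preprocessing step before QR decoding. It picks the gray level that maximizes the between-class variance of an 8-bit grayscale image stored as nested lists. The names `otsu_threshold` and `binarize` are hypothetical, and a production pipeline would more likely call an image-processing library.

```python
from typing import List

Image = List[List[int]]


def otsu_threshold(gray: Image) -> int:
    """Return the gray level t (0..255) that maximizes between-class variance.

    Pixels <= t form the dark class, pixels > t the light class.
    """
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_dark = 0      # number of pixels with value <= t
    sum_dark = 0    # sum of their intensities
    for t in range(256):
        w_dark += hist[t]
        sum_dark += t * hist[t]
        w_light = total - w_dark
        if w_dark == 0:
            continue
        if w_light == 0:
            break   # every pixel is already in the dark class
        mean_dark = sum_dark / w_dark
        mean_light = (sum_all - sum_dark) / w_light
        # Unnormalized between-class variance; dividing by total**2 would not change the argmax.
        var_between = w_dark * w_light * (mean_dark - mean_light) ** 2
        if var_between > best_var:   # strict '>' keeps the lowest maximizing t
            best_var, best_t = var_between, t
    return best_t


def binarize(gray: Image, t: int) -> Image:
    """Map dark pixels (<= t) to 1, like QR dark modules, and light pixels to 0."""
    return [[1 if v <= t else 0 for v in row] for row in gray]
```

For the tiny image `[[20, 30, 200], [25, 210, 220]]`, the threshold is 30 and the binarized result is `[[1, 1, 0], [1, 0, 0]]`. Under uneven lighting, such as the shadowing case in the robustness table, a locally adaptive threshold is often the safer choice.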

3.2.2. Real-World Scenario Simulation
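The perturbation table in this document lists salt-and-pepper noise and Gaussian noise among the simulated real-world conditions. The sketch below shows one way to inject such perturbations into a grayscale test image. It assumes pixel values in 0..255, a per-pixel corruption probability `density`, and a noise standard deviation `sigma`; the parameters actually used are not given here. Both helper names are hypothetical.

```python
import random
from typing import List

Image = List[List[int]]


def add_salt_and_pepper(gray: Image, density: float, rng: random.Random) -> Image:
    """Replace each pixel with 0 or 255 (equal odds) with probability `density`."""
    out: Image = []
    for row in gray:
        new_row = []
        for v in row:
            if rng.random() < density:
                new_row.append(0 if rng.random() < 0.5 else 255)
            else:
                new_row.append(v)
        out.append(new_row)
    return out


def add_gaussian(gray: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise with std `sigma`, then round and clip to 0..255."""
    return [[min(255, max(0, round(v + rng.gauss(0.0, sigma)))) for v in row] for row in gray]
```

Passing a seeded `random.Random` instance makes each perturbed test set reproducible, which matters when several decoders are compared on the same inputs.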

3.3. Decoding

3.3.1. Localization
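This text does not describe the localization method. A widely used approach, found in open-source decoders such as ZXing, scans binarized rows for the dark:light:dark:light:dark run-length ratio 1:1:3:1:1 that every QR finder pattern produces. The sketch below assumes that 1 marks a dark module. It also assumes a tolerance of half a module per unit of expected width. The function names are hypothetical, and a complete locator would confirm each candidate with vertical and diagonal scans.

```python
from typing import List, Tuple


def run_lengths(row: List[int]) -> List[Tuple[int, int]]:
    """Collapse a binary row into (value, length) runs."""
    runs: List[Tuple[int, int]] = []
    for v in row:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


def looks_like_finder(counts: List[int], tol: float = 0.5) -> bool:
    """True if five run lengths match 1:1:3:1:1 within tol * expected width."""
    total = sum(counts)
    if len(counts) != 5 or total < 7:
        return False
    module = total / 7.0   # estimated module size in pixels
    expected = (1, 1, 3, 1, 1)
    return all(abs(c - e * module) < tol * e * module for c, e in zip(counts, expected))


def find_finder_centers(row: List[int]) -> List[float]:
    """Return x-positions (in pixels) of candidate finder-pattern centers in one row."""
    runs = run_lengths(row)
    starts, pos = [], 0
    for _, n in runs:
        starts.append(pos)
        pos += n
    centers: List[float] = []
    for i in range(len(runs) - 4):
        if runs[i][0] != 1:   # runs alternate, so a dark first run fixes the whole pattern
            continue
        counts = [n for _, n in runs[i:i + 5]]
        if looks_like_finder(counts):
            centers.append(starts[i + 2] + counts[2] / 2.0)
    return centers
```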

3.3.2. Error Correction
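QR codes (ISO/IEC 18004) protect their data with Reed-Solomon codes over GF(2^8). These codes use the primitive polynomial x^8 + x^4 + x^3 + x^2 + 1 (0x11D) and a generator polynomial with roots α^0, ..., α^(n-1). Error-correction levels L, M, Q and H can restore roughly 7%, 15%, 25% and 30% of the codewords. This text does not detail how the system implements this step. The following generic sketch covers the first stage of any Reed-Solomon decoder: it encodes a block and computes its syndromes, which are all zero for an intact codeword. Locating and correcting errors, for example with Berlekamp-Massey and Forney, is omitted. All function names are hypothetical.

```python
from typing import List

# Exponent/log tables for GF(2^8) with the QR primitive polynomial 0x11D.
EXP = [0] * 512
LOG = [0] * 256
_value = 1
for _i in range(255):
    EXP[_i] = _value
    LOG[_value] = _i
    _value <<= 1
    if _value & 0x100:
        _value ^= 0x11D
for _i in range(255, 512):
    EXP[_i] = EXP[_i - 255]   # duplicate so LOG[a] + LOG[b] never needs a modulo


def gf_mul(a: int, b: int) -> int:
    """Multiply two field elements."""
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def poly_mul(p: List[int], q: List[int]) -> List[int]:
    """Multiply polynomials over GF(2^8); coefficients are highest degree first."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] ^= gf_mul(a, b)
    return out


def generator(n_ec: int) -> List[int]:
    """g(x) = (x + a^0)(x + a^1)...(x + a^(n_ec-1)); '+' equals '-' in characteristic 2."""
    g = [1]
    for i in range(n_ec):
        g = poly_mul(g, [1, EXP[i]])
    return g


def rs_encode(msg: List[int], n_ec: int) -> List[int]:
    """Append n_ec parity bytes: the remainder of msg(x) * x^n_ec divided by g(x)."""
    g = generator(n_ec)
    rem = list(msg) + [0] * n_ec
    for i in range(len(msg)):
        coef = rem[i]
        if coef != 0:
            for j in range(1, len(g)):   # g is monic, so j = 0 would only clear rem[i]
                rem[i + j] ^= gf_mul(g[j], coef)
    return list(msg) + rem[len(msg):]


def syndromes(codeword: List[int], n_ec: int) -> List[int]:
    """Evaluate the received polynomial at a^0..a^(n_ec-1) using Horner's rule."""
    result = []
    for i in range(n_ec):
        s = 0
        for c in codeword:
            s = gf_mul(s, EXP[i]) ^ c
        result.append(s)
    return result
```

Corrupting any single byte of a codeword makes at least the first syndrome nonzero. That nonzero result is the signal for the decoder to attempt correction.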

3.3.3. Information Extraction

4. Answering Stage

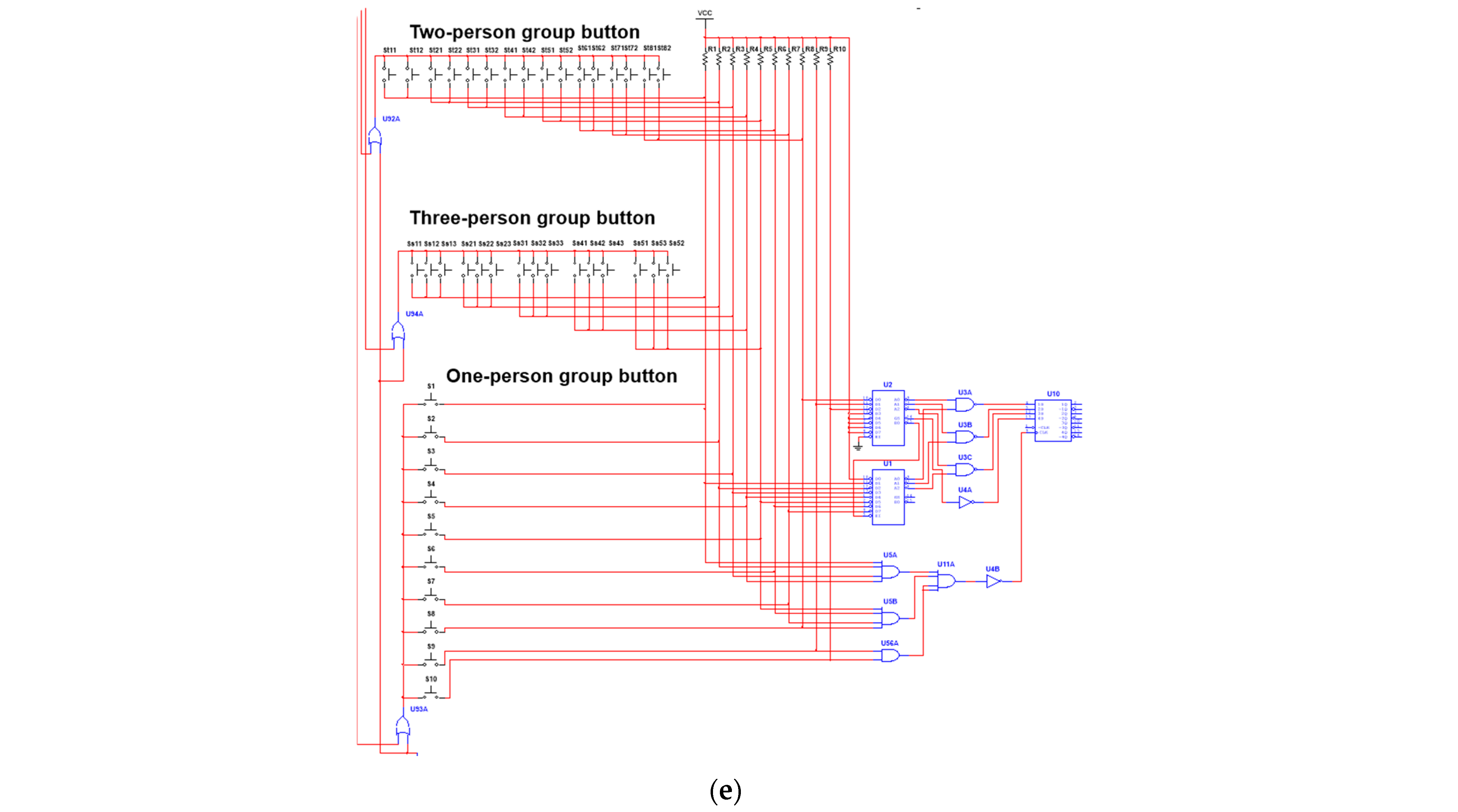

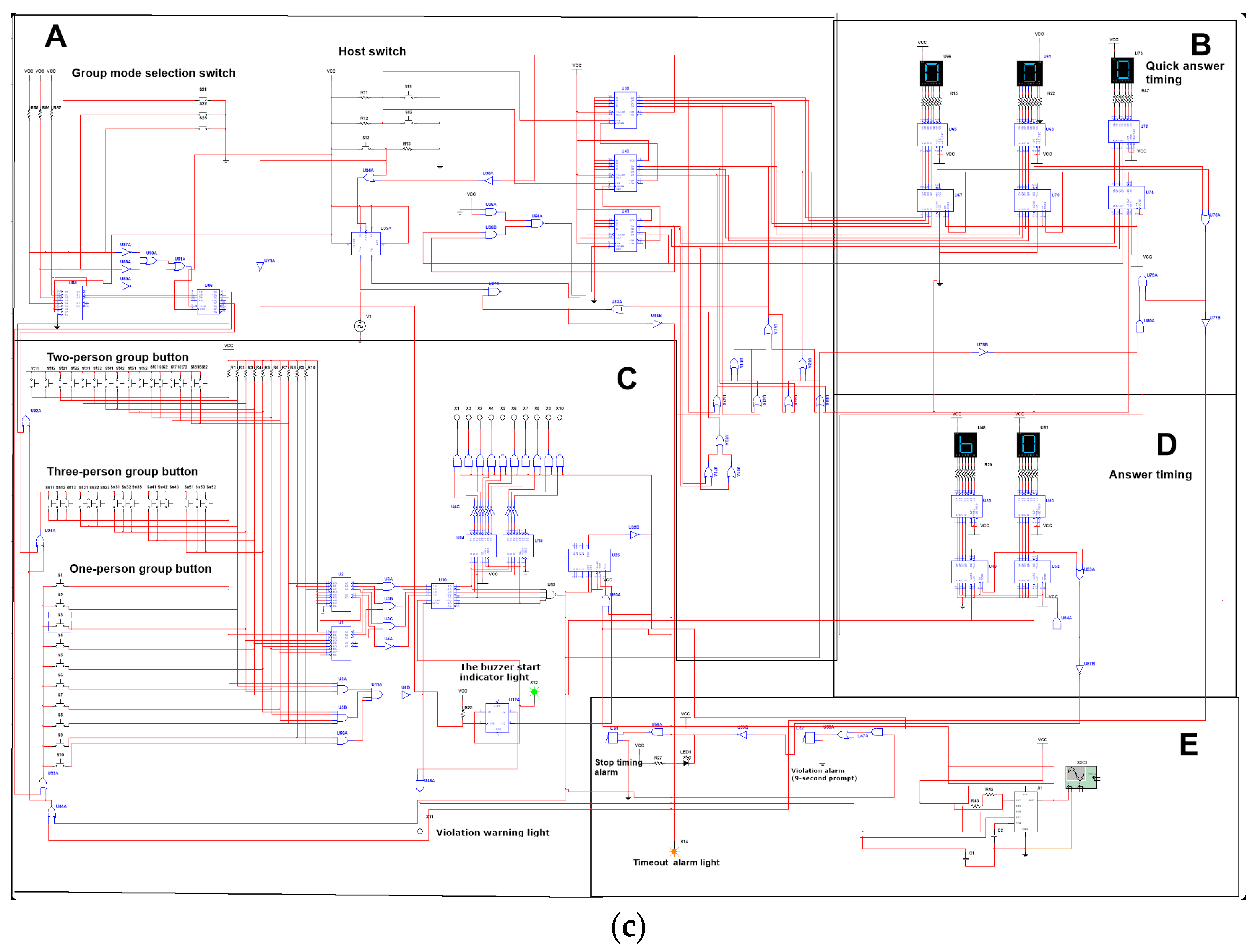

4.1. Moderator Module

4.1.1. Moderator Main Switch
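This text names the moderator main switch but does not describe its behavior. The sketch below assumes the conventional quiz-buzzer logic: the moderator's switch opens a round and the first press is latched. Every later press is then ignored until the moderator resets. `BuzzerLatch` and its methods are hypothetical names, not the authors' firmware.

```python
from typing import Optional


class BuzzerLatch:
    """First-press-wins latch gated by a moderator switch (hypothetical model)."""

    def __init__(self, num_participants: int) -> None:
        self.num_participants = num_participants
        self.open = False                 # True while buzz-in is allowed
        self.winner: Optional[int] = None

    def moderator_start(self) -> None:
        """Switch on: clear the previous winner and open buzz-in."""
        self.winner = None
        self.open = True

    def moderator_reset(self) -> None:
        """Switch off: close buzz-in and clear any latched winner."""
        self.open = False
        self.winner = None

    def press(self, participant: int) -> bool:
        """Register a press; return True only for the first valid press of the round."""
        if not 0 <= participant < self.num_participants:
            raise ValueError("unknown participant")
        if not self.open or self.winner is not None:
            return False
        self.winner = participant
        self.open = False                 # lock out every other participant
        return True
```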

4.1.2. Moderator Counting

4.2. Timing Module

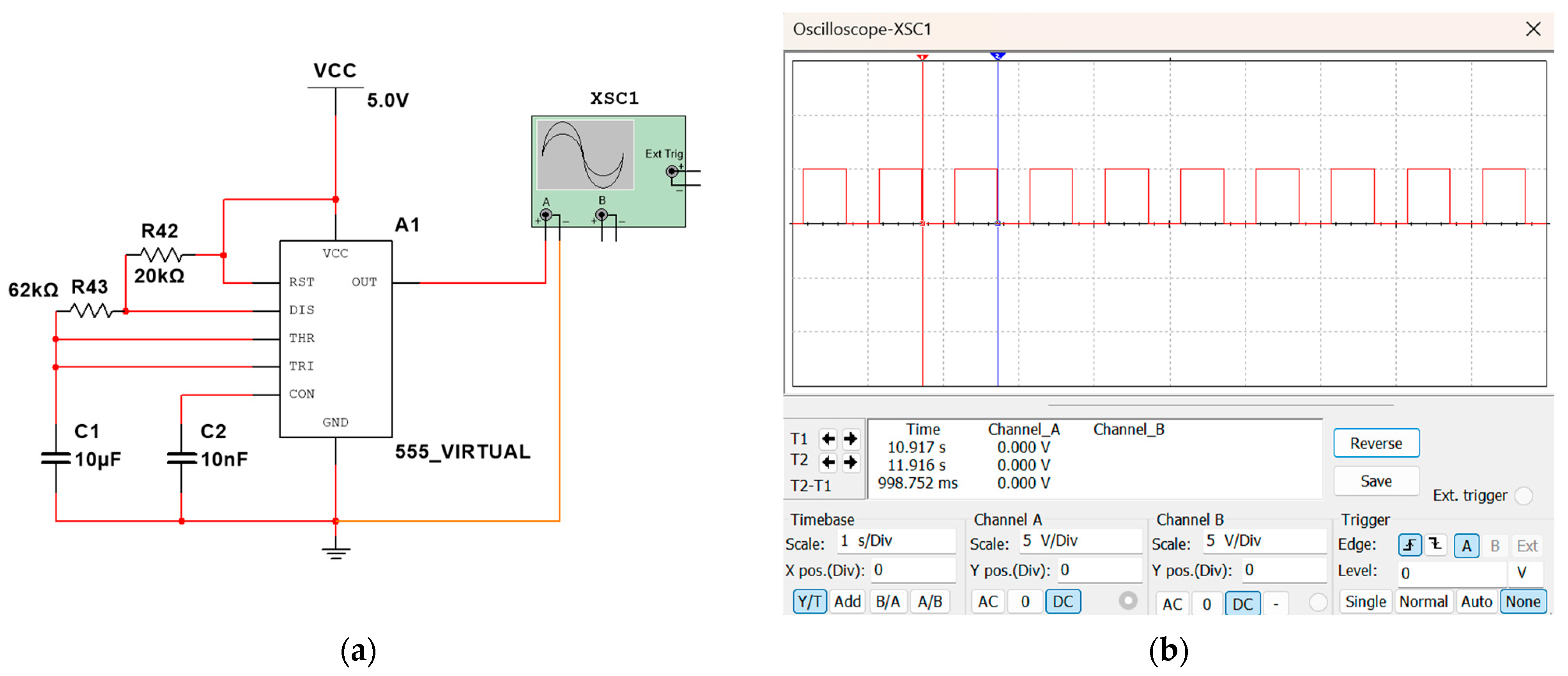
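The configurable timing in the title suggests separate, adjustable limits for the buzz-in and answer phases. The sketch below is a tick-driven countdown that keeps the logic testable without real clocks. It assumes one `tick()` call per elapsed second, driven by a hardware or software timer. `Countdown` is a hypothetical name, and the durations in the note that follows are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Countdown:
    """Tick-driven countdown; each tick() call stands for one elapsed second (assumption)."""
    duration_s: int
    remaining: int = 0
    running: bool = False

    def __post_init__(self) -> None:
        if self.duration_s <= 0:
            raise ValueError("duration must be positive")

    def start(self) -> None:
        """Reload the configured duration and begin counting down."""
        self.remaining = self.duration_s
        self.running = True

    def stop(self) -> None:
        """Freeze the count, e.g. when a participant buzzes in before time runs out."""
        self.running = False

    def tick(self) -> bool:
        """Advance one second; return True exactly once, on the tick that reaches zero."""
        if not self.running:
            return False
        self.remaining -= 1
        if self.remaining == 0:
            self.running = False
            return True
        return False
```

Using one instance per phase, such as `Countdown(duration_s=10)` for buzz-in and `Countdown(duration_s=30)` for answering, lets each limit be changed independently. The single `True` returned by `tick()` is a natural hook for a timeout buzzer.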

4.2.1. Buzz-In Timing

4.2.2. Answer Timing

4.3. Violation Alarm Module

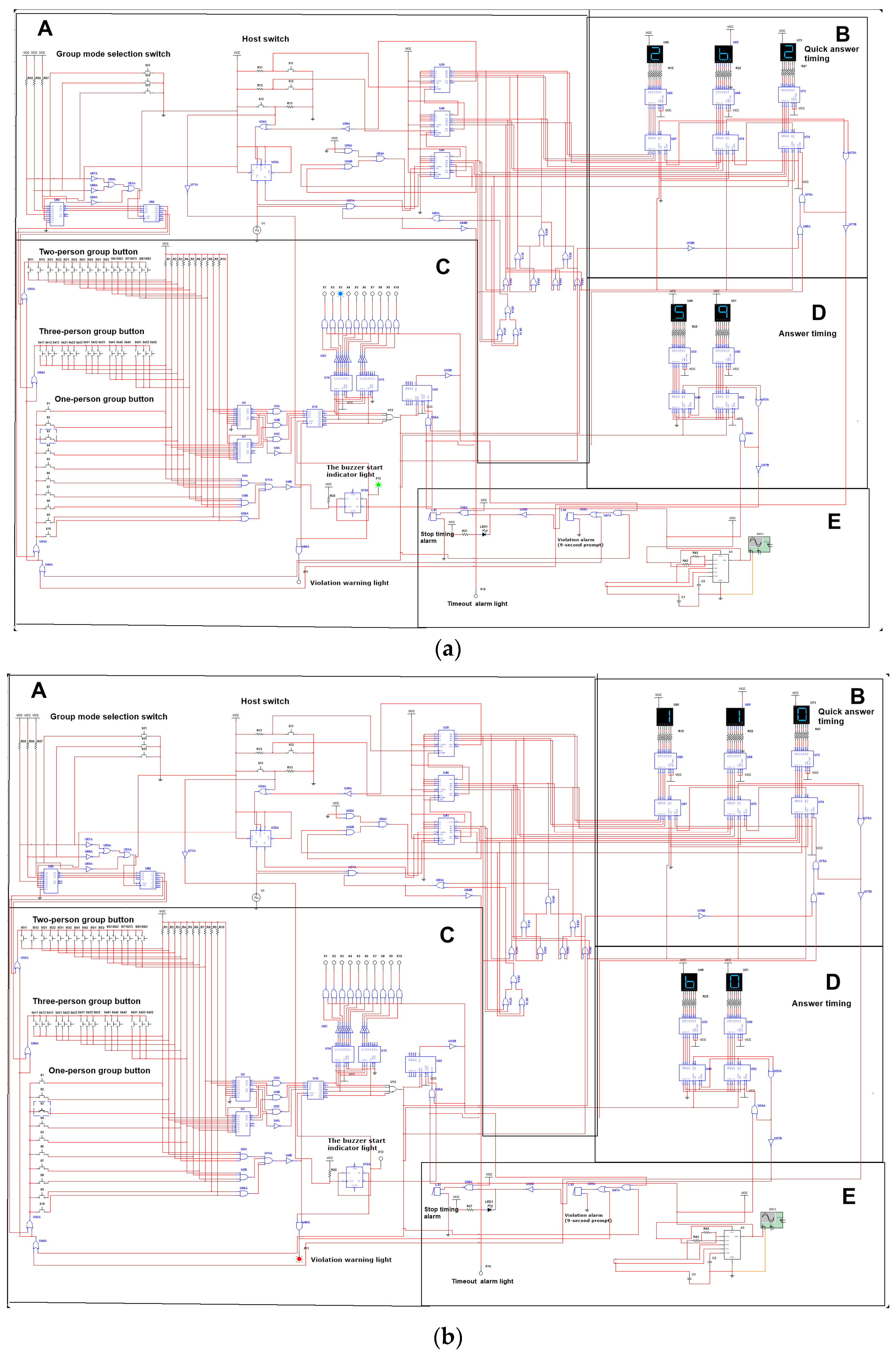
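In quiz buzzers, a violation alarm typically fires when a participant presses before the moderator opens the round. The sketch below implements that rule. It assumes presses arrive as `(timestamp_s, participant_id)` pairs. As a policy assumption, a participant who pressed early cannot win the same round. `judge_round` is a hypothetical name.

```python
from typing import List, Optional, Tuple


def judge_round(presses: List[Tuple[float, int]],
                start_time: float) -> Tuple[List[int], Optional[int]]:
    """Split presses into early violations and the first valid press.

    presses: (timestamp_s, participant_id) pairs in any order.
    Returns (violators in order of pressing, first valid participant or None).
    """
    violators: List[int] = []
    winner: Optional[int] = None
    for t, pid in sorted(presses):
        if t < start_time:
            if pid not in violators:
                violators.append(pid)     # early press: raise the alarm for this participant
        elif winner is None and pid not in violators:
            winner = pid                  # assumed policy: violators are excluded this round
    return violators, winner
```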

5. System Integration and Performance Testing

5.1. System-Level Evaluation

5.1.1. Functional Testing Results

5.1.2. Performance Comparison

5.2. Usability and Ethical Considerations

5.3. Portability and Runtime Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1

Appendix A.2

Appendix A.3


| Perturbation | Accuracy (%) | Consistency (%) | Time (ms) |
|---|---|---|---|
| Salt-and-Pepper Noise | 90.0 | 72.2 | 14 |
| Gaussian Noise | 90.0 | 77.8 | 14 |
| Shadowing | 83.3 | 68.0 | 14 |
| Skew | 93.3 | 86.0 | 12 |
| Surface Creasing | 86.7 | 74.0 | 14 |
| Missing Regions | 78.3 | 61.7 | 15 |
| Smudging | 85.0 | 72.5 | 14 |
| Average | 86.7 | 73.6 | 14 |
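
The snippet below is a quick arithmetic check of the Average row. It assumes that row is the unweighted mean over the seven perturbation types. Under that assumption, the accuracy (86.7%) and time (about 14 ms) columns are reproduced. The consistency values, however, average to about 73.2% rather than the reported 73.6%, so that entry was presumably aggregated differently, for instance over individual trials.

```python
# (accuracy %, consistency %, time ms) per perturbation, copied from the table above.
results = {
    "Salt-and-Pepper Noise": (90.0, 72.2, 14),
    "Gaussian Noise": (90.0, 77.8, 14),
    "Shadowing": (83.3, 68.0, 14),
    "Skew": (93.3, 86.0, 12),
    "Surface Creasing": (86.7, 74.0, 14),
    "Missing Regions": (78.3, 61.7, 15),
    "Smudging": (85.0, 72.5, 14),
}

n = len(results)
mean_acc = sum(r[0] for r in results.values()) / n
mean_consis = sum(r[1] for r in results.values()) / n
mean_time = sum(r[2] for r in results.values()) / n
print(f"accuracy {mean_acc:.1f}%, consistency {mean_consis:.1f}%, time {mean_time:.1f} ms")
# prints: accuracy 86.7%, consistency 73.2%, time 13.9 ms
```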

| Technical Scheme | System-Level Power Consumption (W) | Cost (USD) | Deployment Complexity |
|---|---|---|---|
| FPGA | 5–10 | 80–200 | Challenging |
| STM32 | 0.7–1.5 | 30–50 | Difficult |
| Arduino Mega 2560 | 0.7–1.2 | 23–40 | Moderate |
| ESP32 | 0.6–1.0 | 16–30 | Moderate |
| This system | 1.5–3.0 | 25–40 | Moderate |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

MDPI and ACS Style: Li, J.; Bian, W.; Diao, Y.; Zou, T.; Yang, X.; Kang, B. A QR-Enabled Multi-Participant Quiz System for Educational Settings with Configurable Timing. Appl. Syst. Innov. 2025, 8, 158. https://doi.org/10.3390/asi8060158

AMA Style: Li J, Bian W, Diao Y, Zou T, Yang X, Kang B. A QR-Enabled Multi-Participant Quiz System for Educational Settings with Configurable Timing. Applied System Innovation. 2025; 8(6):158. https://doi.org/10.3390/asi8060158

Chicago/Turabian Style: Li, Junjie, Wenyuan Bian, Yuan Diao, Tianji Zou, Xinqing Yang, and Boqi Kang. 2025. "A QR-Enabled Multi-Participant Quiz System for Educational Settings with Configurable Timing" Applied System Innovation 8, no. 6: 158. https://doi.org/10.3390/asi8060158

APA Style: Li, J., Bian, W., Diao, Y., Zou, T., Yang, X., & Kang, B. (2025). A QR-Enabled Multi-Participant Quiz System for Educational Settings with Configurable Timing. Applied System Innovation, 8(6), 158. https://doi.org/10.3390/asi8060158