Abstract

In recent years, artificial intelligence (AI) technology has advanced rapidly and gradually permeated fields such as healthcare, the Internet of Things, and industrial production, and the dance field is no exception. Various aspects of dance, including choreography, teaching, and performance, have begun to explore integration with AI technology. This paper focuses on the research and application of AI technology in the dance field. It expounds on the core technical system and application scenarios of AI, analyzes the issues that currently restrict the development of the dance field, summarizes specific research and application cases of AI technology in this domain, and presents the practical achievements of technology–art integration. Finally, it identifies the problems to be addressed in the future application of AI technology in the dance field.

1. Introduction

Dance is an art form that employs the body as a brush and time–space as paper. Throughout history, it has carried human beings’ rich emotions and the cultural imprints of eras, as well as society’s collective memories and value consensus, thus occupying an irreplaceable position in the evolution of human civilization. As an ancient art form, dance has roots dating back to the Paleolithic Age tens of thousands of years ago. It served as a symbol of power and an instrument of order in ancient civilizations and as a tool for dialogue amid class mobility and social change in the modern and contemporary era. In the present age, it has further evolved into a diversified carrier of cross-cultural communication, group identity, and social care.

The progress of material civilization and the rise of aesthetic aspirations have driven continuous innovation and evolution in both the expressive forms and content of dance, while its traditional essence has been preserved. Empowered by science and technology, dance has been deeply integrated with emerging technologies, injecting new vitality into choreography, teaching, and performance and elevating the art of dance to new heights. Among these technologies, AI is a discipline that simulates human intelligent behaviors via computational algorithms. It was first formally proposed at the 1956 Dartmouth Conference [1]. The core principle of AI is to endow machines with capabilities such as perception, learning, reasoning, and decision making. Its objectives are to enhance efficiency, improve decision-making accuracy, reduce costs, and deliver personalized services as required. Currently, AI technologies have been widely applied in numerous fields, including the Internet of Things, healthcare, finance, manufacturing, and agriculture. Notably, the innovative application of AI in dance is driving multiple transformations. For example, the open-source AI platform DeepSeek can rapidly generate a comprehensive dance creative framework that includes a story core and stage design based on input thematic requirements. It also enables the transfer of dance movements to virtual characters, supporting personalized choreography. X-Dancer, developed by ByteDance, achieves zero-shot, music-driven dance-video generation via Transformer-diffusion technology. It can generate diverse dance movements with fine synchronization to musical rhythms and convert them into high-resolution realistic videos. As shown in Figure 1, via data and algorithms, AI can expand dance’s boundaries across dimensions like choreography, teaching, and performance, making dance more efficient, diverse, and innovative [2,3,4].

In this paper, we present an overview of key AI technologies and analyze the current challenges in the dance domain, particularly those pertaining to choreography, teaching, and performance. The paper makes four contributions. First, it systematically constructs a technology-application framework for AI-enabled dance, helping to fill the gap in comprehensive panoramic reviews. Second, it provides an in-depth diagnosis of the critical bottlenecks currently hindering the development of the field, offering a clear list of issues that must be resolved to overcome the barriers between technology and artistic integration. Third, it demonstrates the feasibility and value of integrating technology and art through detailed case studies. Fourth, it proposes forward-looking development recommendations, providing a roadmap for future research and practice. Furthermore, we elaborate on existing research endeavors in which AI technologies are applied to tackle these challenges.

Figure 1.

The application of AI technology in the dance field.

2. Artificial Intelligence Technology

AI is essentially a key branch of computer science that aims to enable machines to simulate human thinking processes and achieve intelligent behaviors. Leveraging massive data, AI technologies process complex information and generate intelligent responses by virtue of algorithmic models such as machine learning and deep learning, along with the computing power provided by dedicated chips like GPUs. Currently, AI technologies have reached mature application in multiple fields [5].

The core technical system of AI mainly includes machine learning, deep learning, natural language processing, computer vision, knowledge graphs, reinforcement learning, and generative AI. These technologies collectively serve the goal of enabling machines to simulate human intelligent behaviors and form the foundation for AI to achieve perception, understanding, decision making, learning, and interaction.

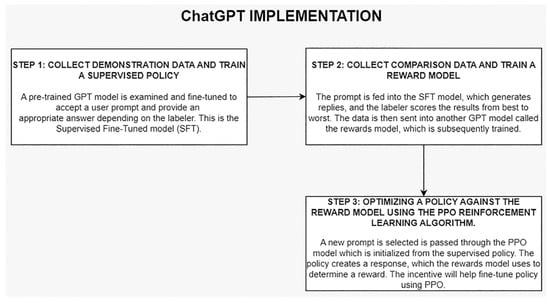

The first is natural language processing, which mainly enables machines to recognize, process, understand, and generate human language [6,7]. Its core lies in converting signals such as text or speech into digital signals that can be directly processed by machines and enabling machines to master language rules through model learning. Typical applications of natural language processing mainly include Google Translate, the initial ChatGPT (as shown in Figure 2), DeepSeek, and other intelligent dialogue systems [8].

Figure 2.

Diagram of the initial GPT implementation process [8]. Copyright © 2025 Association for Information Science and Technology.

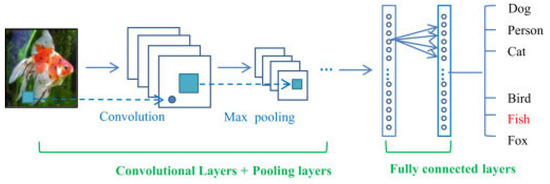

The second field is computer vision, which primarily enables machines to “understand” image and video information. Its core is to empower machines to acquire, analyze, and comprehend semantic features from pixel data, thereby achieving the perception and interpretation of visual information [9,10,11,12]. As shown in Figure 3, computer vision mainly involves technologies such as convolutional neural networks, object detection, and image segmentation. Typical applications include face recognition, medical image diagnosis, and visual perception in autonomous driving [13].

Figure 3.

The pipeline of the general CNN architecture [13]. Copyright © 2025 Elsevier B.V. All rights reserved.

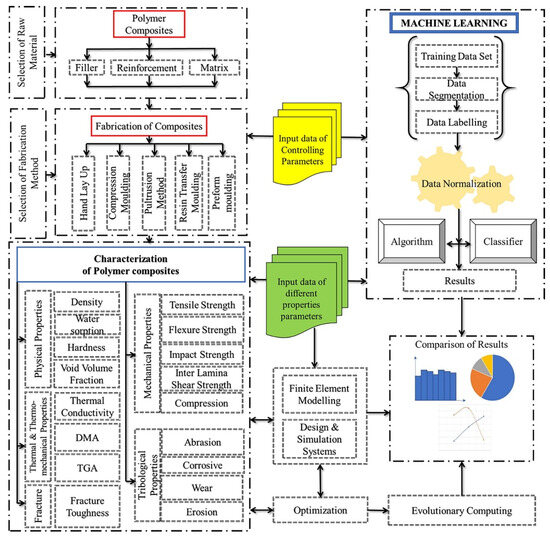

The third is data analysis based on pattern recognition and machine learning algorithms, which primarily enables machines to learn autonomously from massive data and capture the underlying patterns [14,15,16]. Currently, mainstream machine learning algorithms can be categorized into unsupervised learning, supervised learning, and semi-supervised learning, which differ in data annotation requirements, learning objectives, and application scenarios. For instance, personalized content recommendation for users mainly relies on unsupervised learning algorithms, while medical disease risk prediction is primarily based on supervised learning algorithms. Figure 4 illustrates the application of machine learning for predicting the overall behavior of fiber-reinforced polymer composites [17].

Figure 4.

Role of machine learning in fiber-reinforced polymer composites [17]. Copyright © 2025 Elsevier Ltd. All rights reserved. Selection and peer-review under the responsibility of the scientific committee of the International Conference on Advances in Materials Processing & Manufacturing Applications.

Fourth is robotics technology, which integrates mechanical engineering, electronic information, computer science, sensor technology, and AI algorithms [18,19]. Robots can adapt to complex working environments and accomplish diverse instructions and tasks. Robotics technology primarily involves core technologies such as environmental perception, motion control, and task planning, and has currently reached a certain scale of production and application. For instance, collaborative robots on production lines enable the automatic assembly of mobile phone motherboards; restaurant delivery robots can navigate around customers to deliver meals to designated locations; and robotic systems in medical surgeries can precisely execute surgical plans [20].

Fifth is intelligent decision making and planning, a pivotal application field of AI technology. It utilizes algorithmic models and data-driven approaches to autonomously analyze complex problems, mitigate risks, and, ultimately, provide optimal solutions or action strategies [21]. This technology is driving the transformation of decision-making models across various industries from experience-oriented to scientifically efficient and intelligence-oriented. Examples include supply-chain warehousing and distribution schemes and risk assessment and asset allocation in the financial and investment sectors (Figure 5) [22].

Figure 5.

Cultural and creative-industry-related venture project recommendation and resource optimization model based on a neural network algorithm [22]. Copyright © 2025 Elsevier Ltd. All rights reserved.

In fact, the core mechanism of these technologies is the combination of data and algorithms. They are not entirely independent but intersect and integrate in various application scenarios, jointly achieving the goal of assisting or replacing humans to complete tasks with higher efficiency and lower cost. The significant advantages of AI technologies have continuously expanded their application boundaries, benefiting fields including choreography, teaching, and performance.

3. Traditional Constraints in Dance

A complete dance expression, in addition to the indispensable and most fundamental core element of human movement, relies on the synergistic interaction of musical rhythm, stage space, emotional expression, and style and form design. As an art form integrating physical expression, emotional communication, and cultural connotation, the further development of dance has long been constrained by factors such as technology, resources, and creative logic.

First is the field of choreography. Pluralistic integration is a current development trend across various domains, and dance is no exception. Combining multiple dance genres (e.g., hip-hop, classical dance, and ethnic dance) or various artistic performance forms (such as dance and drama) is expected to create more creative, novel, and appealing artistic works. Traditional choreography is highly dependent on choreographers’ personal styles, experience, and knowledge of dance techniques, which makes choreographers prone to rigidified thinking, stylistic limitations, and creative bottlenecks. This leads to issues in their works, such as homogenization, awkward juxtaposition, or lack of innovation. Moreover, for large-scale group dance works, it is necessary to coordinate dancers’ physical conditions, such as appearance, height, and flexibility, along with repeated rehearsals and adjustments, rendering the process costly and inefficient.

Second is the field of teaching. The rapid development of internet technology and cultural industries has enabled more people to understand and appreciate dance, awakening their awareness of self-expression and emotional release through dance. However, traditional dance teaching is mainly conducted offline, which subjects it to temporal and spatial constraints, particularly for office workers. Furthermore, disparities exist in teaching resources and styles across different regions, which may fail to meet learners’ needs. This hinders the development, dissemination, and integration of dance. In addition, dance learning involves imitating teachers’ movements, but students vary in physical coordination, comprehension, and memory. As a result, in most one-to-many dance classes, students progress at different paces, yet teachers have to proceed according to the average level. This leads to some students having surplus learning capacity while others fall behind, resulting in low teaching efficiency. More importantly, teachers can only assess the accuracy of students’ movements through visual observation, making it difficult to quantify and analyze details. They cannot precisely correct errors related to joint angles, force application sequences, deviations in center-of-gravity shifts, or positioning, nor can they simultaneously monitor everyone’s performance.

Third is the field of performance. For a long time, dance performances have been confined to the stage, separating dancers and audiences into two areas. During performances, interactions among dancers, lighting, sound effects, and audiences are mostly preprogrammed, with fixed forms that cannot be flexibly adjusted based on the audience’s real-time reactions. Moreover, most dance performances are limited to the traditional stage framework and lack sufficient integration with emerging technologies, such as the metaverse, virtual reality (VR), and augmented reality (AR). As a result, for some audiences, especially young audiences, these performances lack real-time interactivity, with room for improvement in the sense of enjoyment and immersive experience. Additionally, dance includes long-duration, high-difficulty movements, such as lifts, aerial flips, and continuous spins. These movements often serve as key highlights and are indispensable in performances. However, they place high demands on dancers’ physical stamina and interpersonal rapport. If dancers make mistakes or experience fatigue, they are prone to injury, which significantly impacts the performance quality. Therefore, from choreography to performance, it is necessary to balance performance quality and risk. This balance, to some extent, limits the creativity and possibilities of dance performances. Furthermore, existing stage designs are constrained by high costs of procuring and maintaining stage props, as well as venue specifications and load-bearing capacity constraints. This also prevents some planned dance performances from achieving their intended effects.

4. Applications of Artificial Intelligence Technology in the Dance Domain

The development of dance serves as a crucial pillar for cultural inheritance, artistic innovation, and the connection between social and individual values. From the perspective of cultural inheritance, dance records ethnic customs, historical evolution, and regional characteristics, acting as a medium for intergenerational and cross-cultural dialogue. From the perspective of artistic expression, dance conveys emotions and reflections through physical rhythms, transcending linguistic barriers to allow more people to directly perceive the essence of beauty and, thus, driving the continuous exploration of human body expressiveness. From the perspective of linking social and individual values, as an integral part of public cultural life, dance unites independent individuals and communities, transmits positive energy through performances, fosters a healthy social atmosphere, and promotes the development of personal abilities. Therefore, advancing the development of the dance field is both important and necessary.

The current constraints on dance development urgently require resolution through advanced scientific and technological means. A variety of advanced technologies have been applied in the dance field, such as AI technology, motion capture technology, augmented/virtual reality (AR/VR) technology, and holographic projection technology [23]. Among these, AI technology has broken the boundaries of traditional dance art from multiple dimensions, injecting possibilities of efficiency, personalization, and innovation into dance choreography, teaching, and performance through technological empowerment [24]. Furthermore, the integration of AI technology with other technologies can break temporal and spatial limitations, enabling dance to align deeply with the aesthetics and interaction modes of the current digital era while preserving its spiritual core. This expands the audience base of the dance field and drives its prosperous development.

4.1. Applications of AI Technology in Dance Choreography

Dance choreography is a creative process in which choreographers integrate their ideas, emotions, and cultural connotations into the design of physical movements, formation arrangements, and rhythmical structures, ultimately creating an expressive dance work. It is not merely a simple arrangement and combination of dancers’ various physical movements but a crucial link that endows dance with soul through conceptual design in terms of space, dynamics, and narrative logic [25,26]. Against this backdrop, AI technology offers significant value for choreography. Based on existing dance databases, it can provide references for choreographers, inspire creativity, and preview choreographic effects through intelligent algorithms. Its application will lower the threshold for cross-genre and cross-domain integration, injecting new vitality into dance choreography [27,28,29].

4.1.1. AI-Driven Choreographic Innovation

In the dance field, computer programming was first applied to capture dance notation systems using “interactive and graphic movement systems”, serving as supportive tools for choreographers and dancers. In 1964, at the University of Pittsburgh, Jeanne Beaman and Paul Le Vasseur initiated the use of computers for composing dance movements from the perspectives of time, space, and movement types [30]. Through random permutation of these movements, they generated performable dance sequences. In 1986, Simon Fraser University developed Lifeforms, an early computer tool for assisting choreography, which enabled visual design of movement sequences through three interactive windows (sequence editor, spatial window, and timeline window) [31]. In his 1991 work “Trackers”, choreographer Merce Cunningham utilized this program to generate one-third of the movements. Its core lies in transforming choreographic concepts into digital arrangements across spatial and temporal dimensions, facilitating exploration of the possibilities of human movement and expanding the physical limitations of traditional choreography.
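The chance-based procedure attributed to Beaman and Le Vasseur can be illustrated with a minimal sketch. The instruction pools and the function name `generate_sequence` are hypothetical and are not taken from the 1964 program. The sketch also uses independent random draws rather than a strict permutation, assuming only that each instruction combines a duration, a spatial direction, and a movement type.

```python
import random

# Hypothetical instruction pools; the original 1964 lists are not reproduced here.
DURATIONS = ["2 counts", "4 counts", "8 counts"]
DIRECTIONS = ["forward", "backward", "diagonal left", "diagonal right", "in place"]
MOVEMENTS = ["walk", "turn", "jump", "fall", "reach", "pause"]


def generate_sequence(length, seed=None):
    """Draw `length` instructions, each a random (duration, direction, movement) triple."""
    rng = random.Random(seed)  # a fixed seed makes the sequence reproducible
    return [
        (rng.choice(DURATIONS), rng.choice(DIRECTIONS), rng.choice(MOVEMENTS))
        for _ in range(length)
    ]


if __name__ == "__main__":
    for duration, direction, movement in generate_sequence(4, seed=1964):
        print(f"{movement} {direction} for {duration}")
```

Each run with a different seed yields a different performable sequence, which is the sense in which chance operations can widen the space of movement combinations a choreographer considers.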

Now, with the popularization and application of motion capture technology and AI technology, it has become possible to generate unprecedented dance movements based on massive dance data, providing choreographers with some non-traditional choreographic ideas.

4.1.2. Style-Specific AI Choreography Systems

In 2019, British choreographer Wayne McGregor collaborated with Memo Akten from Google Arts & Culture Lab. They fed the AI with an interactive atlas containing tens of thousands of movements from McGregor’s 25-year creative archive, as well as the dance movements of ten solo dancers. This collection is referred to as the “Life Archive” [32]. In the project, a recurrent neural network, a type of deep learning model, was trained on the Life Archive video database, enabling the AI to learn McGregor’s choreographic style. During the choreographic process, the dancers’ movements were captured via webcam, and after 5–10 s, the AI would predict the most probable subsequent skeletal movement sequences that fit the dance sequence. Eventually, based on these AI-generated movement sequences, they staged a 30 min dance work entitled “Life Archive: An AI Experiment” at the Los Angeles Music Center.

In 2020, Pontus Lidberg, Artistic Director of the Danish Dance Theatre, collaborated with computer artist Cecilie Wagner Falkenstrøm to develop David, an AI application for choreography [33]. In the project, they fed David a range of material, including Lidberg’s dances, which was analyzed by a series of machine learning algorithms. For instance, long short-term memory networks were used to generate dance structures and elements; swarm intelligence was employed to create unconscious group interactions among dancers; reinforcement learning combined with genetic algorithms was applied to build structures according to specific goals; Transformer models for natural language processing were utilized to reconstruct dramatic literature into narratives; and speech synthesis technology enabled David to issue real-time instructions to dancers. These technologies were not only used in creation but also played a role in real time on stage. In the performance of the AI-choreographed work “Centaurus”, nine dancers interacted with a screen equipped with AI. David guided the dancers’ movements through voice instructions and, after capturing and analyzing the dancers’ reactions in real time, issued subsequent instructions, forming a dynamic feedback process. Due to the complexity of the algorithms, David’s outputs were unpredictable, and the dancers’ understanding of and responses to the instructions also varied. As a result, each performance of “Centaurus” was different, making it a dance work that combines AI with human wisdom.

Pat et al. developed Text2Tradition, a system that connects modern language processing with traditional dance knowledge through AI technology, converting user-generated text prompts into Thai classical dance movement sequences [34]. The system takes the 59 foundational movements “Mae Bot Yai” of Thai classical dance as the core vocabulary. It integrates the “Six Elements” (energy, circles and curves, pivot points, synchronized limbs, external space, and transitional relationships) as the choreographic framework. It parses user prompts via a large language model (GPT-4o) to generate dance sequences that conform to traditional styles, which are ultimately presented through 3D virtual characters on a web interface. They also transformed the Six Elements into algorithmic programs and used TypeScript to write computational models for dynamically adjusting the movements of virtual characters. Based on encoded choreographic principles, the virtual characters can respond in real time to the movements of human dancers, forming improvisational interactions. Moreover, human dancers, choreographers, and even audiences are allowed to adjust the parameters of virtual characters via voice [35]. The outcome is embodied in the dance work “Cyber Subin”, demonstrating the potential of human–AI co-dancing in inheriting traditional dance knowledge and expanding the possibilities of choreography.

4.1.3. Multi-Style Compatible AI Choreography Systems

Gao et al. utilized an advanced computer-aided 3D system for dance creation [36]. The core of this system lies in constructing a model integrating principal component analysis (PCA) and neural networks, which is applied to dance movement prediction, classification, and creation assistance. In the research, PCA was used to reduce the dimensionality of 3D dance movement data and extract key features (such as movement trajectory, velocity, and acceleration). Combined with tools like Mayavi and Plotly, the movement data processed by PCA can be converted into 3D visual models, intuitively displaying movement trajectories and spatial relationships. Furthermore, neural networks were integrated to classify and model the features, enabling the analysis and prediction of movement dynamics, patterns, and stylistic elements of different dance genres. The research results indicate that this system can effectively evaluate auxiliary points of dance movements, generate dance contour sketches by referring to videos, and create or assist in creating dance movements, thereby providing references and targeted suggestions for choreographers.
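To make the dimensionality-reduction step concrete, the following is a minimal sketch of PCA on a short 3D trajectory. It is not the implementation used by Gao et al.: it recovers only the first principal component, and it substitutes power iteration on the sample covariance matrix for a full PCA solver so that the example needs nothing beyond the standard library. The function names and the sample wrist trajectory are hypothetical.

```python
import math


def first_principal_component(frames, iterations=100):
    """Return (mean, direction) of the dominant axis of variation in `frames`.

    `frames` is a list of equal-length feature vectors, for example the
    (x, y, z) position of one joint per frame. Power iteration stands in
    for a full PCA solver here.
    """
    n, d = len(frames), len(frames[0])
    mean = [sum(f[j] for f in frames) / n for j in range(d)]
    centered = [[f[j] - mean[j] for j in range(d)] for f in frames]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    v = [1.0 / math.sqrt(d)] * d  # arbitrary non-zero starting vector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # no variance along v: keep the current direction
            break
        v = [x / norm for x in w]
    return mean, v


def project(frame, mean, direction):
    """One-dimensional PCA score of a frame along `direction`."""
    return sum((x - m) * u for x, m, u in zip(frame, mean, direction))


if __name__ == "__main__":
    # Hypothetical wrist trajectory that moves along a single diagonal.
    wrist = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (2.0, 4.0, 0.0), (3.0, 6.0, 0.0)]
    mean, axis = first_principal_component(wrist)
    print([round(project(p, mean, axis), 3) for p in wrist])
```

The projected scores compress each three-dimensional frame into a single number along the direction of greatest movement. Power iteration assumes the starting vector is not orthogonal to that direction; a production pipeline would more likely rely on an established PCA implementation and keep several components.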

Zeng proposed DanceMoveAI, an AI choreography tool based on the multilayer perceptron (MLP) model [4]. It automatically generates adaptive dance movement suggestions for dancers of varying skill levels. Through the MLP model, DanceMoveAI analyzes musical features, including tempo (beats per minute, BPM), rhythm patterns (e.g., waltz and tango), and rhythm complexity, dynamically adapting to different music genres and dancers’ needs. It provides efficient and innovative technical support for the choreographic process, enhancing the efficiency and diversity of dance creation. For instance, for the same piece of music, the model can output five movement combinations of different styles (such as versions emphasizing power, softness, or improvisation), helping choreographers break through mental constraints and enrich choreographic schemes.

While AI technology offers significant advantages in choreography, the ownership of AI-related choreographic works remains highly contentious in both legal and ethical contexts. The copyright systems within most countries’ existing legal frameworks still center on human creativity, rendering purely AI-generated choreographic works ineligible for copyright protection. Practically, human–AI collaborative creation dominates current AI-driven practices: choreographers typically provide conceptual inputs and materials, with AI undertaking creative tasks through learning and reasoning. In such cases, the ownership of dance works is highly ambiguous and cannot be simply ascribed to a single party. Furthermore, the material databases used for AI training are critical to dance work generation, and the copyright issues surrounding the works contained in these databases further complicate ownership determination. These issues are pivotal and urgently require unified consensus and standardization to advance the development and application of AI technology in the dance field.

4.2. Applications of AI Technology in Dance Teaching

Breaking through the temporal and spatial constraints and personalized limitations of traditional dance teaching, AI technology is reshaping conventional teaching models and improving the efficiency of dance instruction [37,38,39]. On the one hand, by integrating with motion capture technology, AI can collect dancers’ characteristic parameters in real time, such as joint angles and movement trajectories. Combined with deep learning algorithms and compared with standard movement databases, AI can accurately identify deviations in dancers’ movements, such as insufficient toe point angles in ballet dancers, and then generate reports with professional recommendations [40]. On the other hand, by analyzing students’ data, including learning progress, interests and hobbies, and ability levels, AI can recommend the most suitable learning resources and paths for students. This not only breaks down the temporal and spatial barriers of high-quality dance teaching resources but also better stimulates learners’ potential and enhances their confidence [41].
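The deviation check described above can be sketched as follows. This is an illustrative example rather than any cited system's method: it assumes that keypoints are already available as coordinate tuples (for example, from a motion capture or pose estimation step), and the function names, joint names, and 10-degree tolerance are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint `b`, formed by the segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("coincident keypoints: angle is undefined")
    cos_theta = sum(x * y for x, y in zip(v1, v2)) / (n1 * n2)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding error
    return math.degrees(math.acos(cos_theta))


def flag_deviations(student, reference, tolerance_deg=10.0):
    """Return {joint: signed error in degrees} for joints outside the tolerance."""
    return {
        joint: student[joint] - reference[joint]
        for joint in reference
        if joint in student and abs(student[joint] - reference[joint]) > tolerance_deg
    }


if __name__ == "__main__":
    # Hypothetical 2D keypoints (knee, ankle, toe) for a pointed foot.
    knee, ankle, toe = (0.0, 1.0), (0.0, 0.0), (0.3, -0.4)
    student = {"ankle": joint_angle(knee, ankle, toe)}
    reference = {"ankle": 170.0}  # assumed target angle from a standard movement
    print(flag_deviations(student, reference))
```

A negative error for the ankle indicates that the student's angle falls short of the reference, which corresponds to the kind of insufficient toe point a report could highlight.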

4.2.1. Core Technical Models

Core technical models refer to the fundamental algorithms and model innovations that underpin AI-driven dance teaching, serving as the technical prerequisite for precise movement recognition and assessment. They typically demonstrate a high degree of versatility and can empower various AI applications in dance. However, their use usually requires a certain technical background, making them difficult for ordinary dancers to apply directly. Accurate motion recognition and evaluation models form the foundation for the application of AI in the field of dance teaching [42,43]. Xie et al. proposed a GCN-SNN model that combines graph convolutional networks (GCNs) and Siamese neural networks (SNNs). This model aims to accurately identify the characteristics of sports dance movements and provide technical support for personalized teaching [44]. In the research, a human skeleton graph is constructed. A GCN is used to extract the spatial features of joint nodes and bone connections, as well as temporal dynamics. Then, an SNN is employed to compare the feature similarity of different skeleton sequences, thereby achieving high-precision recognition of different dance styles.
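The combination of a graph convolution over the skeleton and a Siamese comparison can be sketched in a few lines. The example below is a simplified illustration, not the GCN-SNN model of Xie et al.: it processes a single frame and ignores temporal dynamics, uses one graph-convolution layer with arbitrary fixed weights in place of trained parameters, and compares embeddings with cosine similarity. The five-joint skeleton, the weights, and all function names are hypothetical.

```python
import math

# Hypothetical 5-joint skeleton: 0 hip, 1 chest, 2 head, 3 left hand, 4 right hand.
EDGES = [(0, 1), (1, 2), (1, 3), (1, 4)]
NUM_JOINTS = 5
# Arbitrary fixed 3x4 weights standing in for trained parameters.
WEIGHTS = [
    [0.5, -0.2, 0.1, 0.3],
    [0.4, 0.6, -0.3, 0.2],
    [-0.1, 0.2, 0.7, 0.1],
]


def normalized_adjacency(edges, n):
    """Return D^-1/2 (A + I) D^-1/2 as a nested list."""
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Plain nested-list matrix product."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def embed(joints, a_hat, weights):
    """Shared encoder: ReLU(A_hat @ X @ W), then mean pooling over joints."""
    hidden = [[max(0.0, v) for v in row] for row in matmul(matmul(a_hat, joints), weights)]
    return [sum(row[c] for row in hidden) / len(hidden) for c in range(len(hidden[0]))]


def similarity(pose_a, pose_b):
    """Siamese comparison: both poses pass through the same encoder."""
    a_hat = normalized_adjacency(EDGES, NUM_JOINTS)
    u, v = embed(pose_a, a_hat, WEIGHTS), embed(pose_b, a_hat, WEIGHTS)
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    return sum(x * y for x, y in zip(u, v)) / norm if norm else 0.0


if __name__ == "__main__":
    arms_out = [[0.0, 1.0, 0.0], [0.0, 1.4, 0.0], [0.0, 1.7, 0.0],
                [-0.6, 1.4, 0.1], [0.6, 1.4, 0.1]]
    arms_up = [[0.0, 1.0, 0.0], [0.0, 1.4, 0.0], [0.0, 1.7, 0.0],
               [-0.2, 2.0, 0.0], [0.2, 2.0, 0.0]]
    print(round(similarity(arms_out, arms_up), 4))
```

The key design point is weight sharing: because both skeletons pass through the same encoder, the similarity score reflects differences in the poses rather than differences between two separately parameterized networks.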

Li et al. developed a dance action recognition and feedback model, 3D-ResNet-BiGRU, that combines a 3D-ResNet, a bidirectional gated recurrent unit (BiGRU), and a graph attention mechanism (GA) [45]. The model first extracts spatial features of video sequences (such as body posture and spatial layout) through 3D-ResNet, then uses BiGRU to capture time-series features (such as movement coherence and rhythm changes), and finally dynamically adjusts node weights via GA to optimize motion recognition performance. Experimental results show that the model is efficient in terms of testing time, training time, and CPU occupancy, enabling real-time analysis of students’ movements and provision of targeted feedback.

Zhen et al. proposed a 3D-ResNet ethnic dance movement recognition model that integrates 3D Convolutional Neural Networks (3D-CNN) and Residual Networks (ResNet) [46]. This model can optimize feature extraction for the unique styles of different ethnic dances. The research constructed a dataset containing dance movements of the following six ethnic groups: Miao, Dai, Tibetan, Uyghur, Mongolian, and Yi. Through model training and testing, it was found that its recognition accuracy exceeded 95% both on the self-built dataset and the NTU-RGBD60 database, demonstrating strong adaptability and generalization ability to different ethnic dances. The model can accurately capture the spatiotemporal features of dance movements, providing technical support for ethnic dance teaching. It particularly shows application potential in cross-cultural dance teaching, while emphasizing the need to balance cultural sensitivity and data diversity in technical applications.

4.2.2. Integrated Application Systems

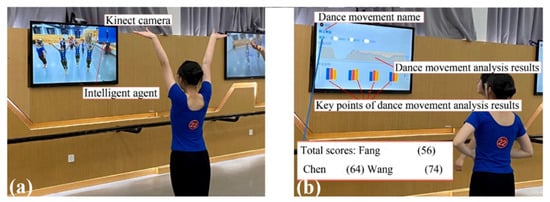

Integrated application systems are comprehensive teaching platforms built upon multiple core technologies, representing fully realized applications of AI technology. They can customize functions according to the specific needs of dance and teaching scenarios, thus fitting practical contexts well. Moreover, they are usually equipped with complete client terminals, featuring low usage thresholds and enhanced user experience and interactivity. However, because they integrate multiple technologies and modules, such systems are difficult and costly to maintain. Xu et al. developed an AI-based Dance Skills Teaching, Evaluation, and Visual Feedback (DSTEVF) system [47]. The system includes an AI tutor module, which captures students’ movements via Kinect devices and compares them with the standard movement database of professional teachers based on the Dynamic Time Warping (DTW) algorithm, scoring along dimensions such as movement matching degree, fluency, and synchronization with music rhythm. As shown in Figure 6, the AI-generated report records the practice times, score changes, and improvement suggestions for different movements, and it provides demonstration videos of standard movements by the AI tutor for reference. The authors randomly divided 40 dance students into an experimental group (using the DSTEVF system) and a control group (receiving traditional teaching). After 8 weeks of teaching experiments, they found that the DSTEVF system significantly improved students’ dance skills and self-efficacy.

Figure 6.

Students practice dancing in the classroom with DSTEVF: (a) students practicing; (b) visual evaluation results [47]. Copyright © 2025 Elsevier Ltd. All rights are reserved, including those for text and data mining, AI training, and similar technologies.
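The DTW comparison used by the DSTEVF tutor module can be illustrated with the textbook dynamic-programming formulation. The sketch below is not the system's actual implementation: the choice of per-frame features, the Euclidean frame distance, and the function name `dtw_distance` are assumptions, and the mapping from distance to a score is left out because the source does not specify it.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Dynamic Time Warping distance between two sequences of feature vectors.

    Each element is a tuple of numbers (for example, joint angles in one frame).
    Frames are compared with the Euclidean distance.
    """
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            frame_cost = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = frame_cost + min(
                cost[i - 1][j],      # seq_a advances, seq_b frame repeats
                cost[i][j - 1],      # seq_b advances, seq_a frame repeats
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


if __name__ == "__main__":
    # Hypothetical one-dimensional feature (e.g., an elbow angle in radians).
    teacher = [(0.0,), (1.0,), (2.0,), (1.0,), (0.0,)]
    slow_student = [(0.0,), (0.0,), (1.0,), (1.0,), (2.0,), (2.0,), (1.0,), (1.0,), (0.0,), (0.0,)]
    offset_student = [(1.0,), (2.0,), (3.0,), (2.0,), (1.0,)]
    print(dtw_distance(teacher, slow_student))
    print(dtw_distance(teacher, offset_student))
```

Because DTW allows frames to repeat, a student who performs the correct movement at half speed still aligns perfectly with the teacher, whereas a student whose joint positions are consistently off accumulates cost. This tolerance to timing differences is why DTW suits comparisons between learners and a reference performance.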

A mature AI dance teaching system can only be constructed by integrating a high-precision motion recognition model with a real-time feedback and multimodal interaction system, a personalized learning path planning module, and a human–machine collaborative auxiliary teaching module, thereby forming a complete closed loop of recognition–feedback–teaching–evolution. Liu et al. designed an AI-based teaching system for adolescent health Latin dance, whose core lies in improving teaching effectiveness by optimizing motion recognition models [48]. The system adopts computer vision technology and posture recognition algorithms, locates human targets through two-stage or single-stage object detection algorithms, extracts global features (such as overall movement trajectory) and local features (such as joint angles), and judges the correctness of Latin dance movements using deep learning models. It provides motion data analysis together with posture monitoring and correction, helping students adjust and correct dance postures accurately, prevent sports injuries, and improve dance skills. In addition, the system implements personalized recommendation and virtual coach functions, recommending suitable course content and training plans through machine learning algorithms. This not only increases the fun and motivation of learning but also helps students better understand and master dance movements.

Kumar et al. proposed an AI-based automatic dance evaluation system called Smart Steps, which addresses the strong subjectivity and inconsistent standards of traditional dance training [43]. The system uses MediaPipe to extract key human joints (such as shoulder joints, hip joints, and knee joints) and generate direction vectors of limbs. It processes video frames through OpenCV, calculates the cosine similarity of limb direction vectors, aggregates them to obtain the overall motion matching degree (as a percentage), and presents the results with real-time feedback in a visual interface. The interface synchronously displays the skeleton models of the reference posture and the learner’s posture, showing the alignment through visual markers and matching percentages and providing immediate feedback for posture improvement.
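The limb-vector comparison can be sketched in a few lines of Python. This is an illustrative approximation rather than the Smart Steps code: it assumes the joint keypoints have already been extracted (for example by a pose estimator) into a dictionary of 2D coordinates, and the joint names, the `LIMBS` list, and the aggregation rule (mean of per-limb similarities, with negative values clamped to zero) are hypothetical choices.

```python
from math import sqrt

# Hypothetical limb definitions: (start joint, end joint) pairs.
LIMBS = [("shoulder", "elbow"), ("elbow", "wrist"), ("hip", "knee"), ("knee", "ankle")]


def limb_vector(pose, start, end):
    """Direction vector of a limb, from its start joint to its end joint."""
    (x0, y0), (x1, y1) = pose[start], pose[end]
    return (x1 - x0, y1 - y0)


def cosine_similarity(u, v):
    """Cosine of the angle between two 2D vectors (0.0 if either is zero-length)."""
    dot = u[0] * v[0] + u[1] * v[1]
    norm = sqrt(u[0] ** 2 + u[1] ** 2) * sqrt(v[0] ** 2 + v[1] ** 2)
    return dot / norm if norm else 0.0


def match_percentage(learner, reference, limbs=LIMBS):
    """Aggregate per-limb cosine similarities into one matching percentage."""
    similarities = [
        cosine_similarity(limb_vector(learner, a, b), limb_vector(reference, a, b))
        for a, b in limbs
    ]
    # Assumed rule: a limb pointing the opposite way counts as no match (0),
    # and the overall score is the plain mean over limbs.
    return 100.0 * sum(max(0.0, s) for s in similarities) / len(similarities)
```

Because cosine similarity depends only on direction, the score is unaffected by where the learner stands in the frame or by body size, which is one plausible reason for comparing limb directions rather than raw joint positions.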

The integration of AI and VR technologies can empower dance teaching in two ways, through data-driven precision and through immersive experience, breaking through the temporal-spatial constraints and sensory limitations of traditional education. Zhang et al. constructed a VR-based digital sports dance teaching platform [49]. The platform is divided into a server (including a database and web services) and a client (supporting functions such as action preview, simulation training, and virtual interaction). The system captures motion data via Kinect somatosensory devices, constructs a 3D human motion model, and realizes motion comparison and error correction by combining multi-feature fusion algorithms (using features such as distance and angle). In the project, two motion sequence segmentation techniques were adopted, and an adaptive module was used to optimize the topological structure of spatial-temporal graph convolutional networks (ST-GCNs) to enhance the recognition of dance features. The introduction of an attention module into the ST-GCN enables model training to focus on key motion features (such as the hip lifting and waist tucking details of the “three bends” in Dai dance). Transfer learning further improves the recognition accuracy of the system. Together, these techniques address the problem of “vague error correction” in traditional teaching (e.g., “lift your arm a bit higher”) by providing quantitative feedback (e.g., “the knee angle should be opened by another 15°”), which can be used to generate personalized training plans.
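To make the idea of quantitative angle feedback concrete, the following Python sketch computes a joint angle from three 3D keypoints and turns the deviation from a target angle into a corrective message. It is a simplified illustration, not the platform described in [49]: the function names, the 5° tolerance, and the message wording are assumptions, and the three points are assumed to be distinct.

```python
from math import acos, degrees, sqrt


def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the segments b->a and b->c."""
    u = tuple(p - q for p, q in zip(a, b))
    v = tuple(p - q for p, q in zip(c, b))
    dot = sum(x * y for x, y in zip(u, v))
    norm = sqrt(sum(x * x for x in u)) * sqrt(sum(x * x for x in v))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return degrees(acos(cos_theta))


def angle_feedback(joint_name, measured, target, tolerance=5.0):
    """Turn an angle deviation into a quantitative correction (hypothetical wording)."""
    difference = target - measured
    if abs(difference) <= tolerance:
        return f"{joint_name} angle is within tolerance"
    verb = "opened" if difference > 0 else "closed"
    return f"the {joint_name} angle should be {verb} by another {abs(difference):.0f}°"


# Hip, knee, and ankle positions for a knee bent at a right angle.
hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
print(angle_feedback("knee", joint_angle(hip, knee, ankle), target=105.0))
```

With a measured knee angle of 90° and an assumed target of 105°, the sketch produces feedback of the same form as the example quoted above.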

Shen et al. designed a virtual teaching robot system, exploring the application of gamified learning experiences involving digital media and virtual teaching robots in dance teaching [50]. The system integrates technologies such as 3D motion capture, posture calculation, loss-function constraints, and similarity matching. It presents dance movements through methods like skeleton orientation, audio–visual synchronization of digital media, and analysis of robot movement effects, and it integrates these into the gamified learning process. The virtual teaching robot can dynamically adjust the speed of movement demonstration according to music beats, helping students understand the correlation between movements and rhythms. With the support of VR technology, students can intuitively learn dance by following the virtual teaching robot. In addition, the system generates gamified elements, such as customized tasks, challenges, and rewards, based on students’ learning data, stimulating students’ interest and participation and improving learning outcomes.

The application of AI technology in dance teaching has substantially reshaped the ecosystem of dance education. For students, the introduction of AI technology has lowered the threshold for dance learning, giving more people the opportunity to learn dance. With the help of AI technology, they can receive one-on-one professional guidance and adjust their dance learning plans in real time according to their progress. This effectively improves the efficiency of dance learning and helps enhance their confidence. For teachers, AI can efficiently identify and assess whether students’ dance movements meet the standard, which frees teachers from mechanical error correction and enables them to devote more energy to guiding students in emotional expression, style shaping, and similar areas. Based on the student training reports provided by AI, teachers can adjust curriculum plans and learning intensity in a targeted manner, realizing tiered teaching. In the future, AI will further develop toward in-depth collaboration. By combining motion capture technology of higher recognition accuracy with algorithms capable of emotional interaction, it will achieve comprehensive optimization of dance teaching, making dance an art that anyone interested has the opportunity to learn.

However, the application of AI technology also presents challenges to the inheritance of traditional culture and the development of creative professions. On the one hand, the inheritance of traditional cultural dance involves not only movements and techniques but also historical contexts, cultural connotations, and emotional cores. AI-driven standardized teaching relying on motion capture and data modeling transforms meaningful dance movements into mechanical, digital parameters. This may cause dancers to overly focus on movement precision, neglecting the emotional expression and intrinsic meaning of dance gestures and, thus, reducing dance to a mere accumulation of movements. On the other hand, creative professionals such as dance teachers and choreographers base their core professional value on personalized teaching design, original movement choreography, and emotional interaction guidance. The application of AI teaching technology will significantly impact their employment environment and limit their livelihoods. Therefore, the application models and scenarios of AI technology in the dance domain call for further examination and refinement.

4.3. Applications of AI Technology in Dance Performances

AI is profoundly reconstructing the expressive dimensions of stage art, creating performance paradigms that transcend traditional limitations through multiple pathways, such as generating virtual dancers, enabling real-time interactions, and analyzing audience emotions. From music-driven digital human dance generation via Transformer-based diffusion models to non-human dance subjects trained by motion capture systems and further to dynamic creative loops constructed through emotion recognition and narrative engines, AI not only expands the physical boundaries of dance but also establishes an intelligent ecosystem of real-time human–machine–environment responses within the stage space, making performances organic living entities of multidimensional linkage. A growing number of dance scholars argue that using AI to shape the aesthetic preferences of perceivers and artificially generate choreography and dance performances will become an important research direction in dance art [51].

4.3.1. Human–AI Collaborative Dance Performances

Ryo Ishii et al. developed a new dance generation model based on a Transformer-diffusion architecture, enabling AI virtual characters to generate dances according to any music, audio, and dance style selected by users [51]. This makes it more feasible for digital character dances to be applied in stage performances. Discrete Figures (2018), by Rhizomatiks Research and Kyle McDonald, reconstructed the virtual–real interaction paradigm of dance performances through multilayer AI technologies [52]. Its “dance2dance” neural network, adapted from Google’s seq2seq architecture, adopted a hybrid RNN model to process time-series data. The core of the work lies in the dynamic dialogue between dancers and pre-recorded digital images on projection screens. These virtual entities, which include 2D line animations and 3D anthropomorphic characters, derive their motion data from original performances recorded by real dancers wearing Vicon motion capture systems, covering improvisational interpretations of 11 emotional themes (such as anger, sensuality, and robotics).

Furthermore, Diego Marin-Bucio et al. created the “Dancing Embryo” AI dance system, aiming to break through the dependence of traditional dance on the human body and expand the definition of dance to non-human subjects [53]. The project was developed through collaboration between dancer–choreographers and computational scientists, with its core being an AI system capable of generating and converting dance movements in real time and interacting with human dancers. Trained on motion capture data from 30 contemporary dancers, the AI model can generate responsive dance sequences based on the movements of human dancers and achieve rhythm synchronization through visual-motor communication. The research adopts frameworks such as posthumanism philosophy and 4E cognitive theory, emphasizing that dance, as a “sensorimotor phenomenon”, can be accomplished through human–AI interaction even if AI lacks consciousness. The project verified the feasibility of human–AI co-dancing through global tours (such as its premiere in Cambridge and tour in Mexico), explored the potential of AI as a “creative collaborator” rather than a tool, and reflected on the impact of technical limitations (such as equipment dependence and network stability) on performances.

4.3.2. AI-Driven Dynamic Adjustment of Dance Performances

In “DoPPioGioco”, an interactive play by Rossana Damiano’s team, the AI system constructs a dynamic creative loop driven by audience emotions [52]. The core architecture of the work is rooted in the pre-trained GEMEP multidimensional emotion model, which establishes a cognitive framework by analyzing over 7000 samples of 18 emotional categories performed by professional actors. During the performance, cameras facing the audience capture the group’s facial expressions at the end of each act; after algorithmic cluster analysis, abstract emotional dimensions (such as pleasure/arousal) are extracted. These data are converted into four narrative tendency options (e.g., “strengthen emotional resonance” or “create dramatic conflict”), from which the performers choose via tablet computers. The selection immediately triggers a narrative engine written in PHP, which, as a web service, calls prefabricated modules in a MySQL database to generate two outputs in real time: script texts matching the emotional logic (read live by performers) and algorithmically choreographed images projected on the background canvas. This mechanism of “audience emotional input → AI semantic translation → performer decision making → multimodal content generation” positions AI as an emotional-narrative converter that subverts the power structure of traditional theater. Technology no longer passively responds to instructions but reconstructs the audience–performer relationship through an intermediated creative process, turning the audience into implicit co-creators and the performers into living media connecting human emotions and machine logic.

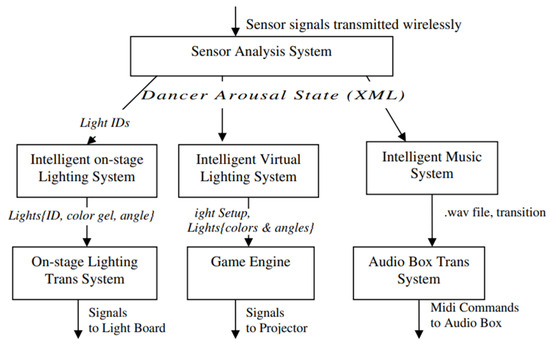

Beyond enabling AI-driven virtual characters to perform complicated dance moves or having digital humans mimic the postures of authentic dancers, AI can generate real-time dynamic visual effects (such as projections and lighting changes) based on live music, audience reactions, or dancers’ movements. These effects synchronize and resonate with the dance, enhancing the immersive experience. Magy Seif El-Nasr et al. created an intelligent environment for dance spaces, with the core being AI-driven real-time dynamic changes in stage lighting and projections, which serve as an extended medium for dancers to express themselves [54]. The framework of the AI intelligent system is shown in Figure 7. Its key innovation lies in using AI to enable lighting (color, direction, and brightness) and projections to directly respond to and map the dancer’s arousal state (physiological excitement) rather than complex emotional states that are difficult to capture accurately. This design choice is based on two considerations: first, current AI technology for recognizing mixed emotions is immature and susceptible to environmental interference; second, the project draws inspiration from film lighting techniques, using light and shadow to shape atmosphere (such as tension) rather than specific emotions. Therefore, the system focuses on converting the dancer’s arousal level into a visual “digital signature” projected into the actual performance space. In terms of technical implementation, the AI system specifically avoids vision-based motion capture and, instead, uses pressure sensors to track the dancer’s position and movement intensity reliably. The integration of AI processing of arousal states, sensor inputs, and dynamic lighting control not only overcomes the limitations of traditional visual technologies but also accurately establishes spatial mapping between the dancer’s position and lighting responses. 
Ultimately, as an intelligent intermediary, AI seamlessly converts the dancer’s physiological state and movement energy into immediate, adaptive changes in environmental light and shadow, significantly expanding the expressive dimensions and immersion of dance, and turning the entire stage into a dynamic canvas for the dancer’s emotions and energy.

Figure 7. Architecture of the system [54]. Copyright © 2025 Elsevier Inc. All rights reserved.
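The mapping from a dancer’s arousal level and stage position to lighting parameters can be illustrated with a small Python sketch. It does not reproduce the system in [54]: the normalized arousal value, the two endpoint colors, the brightness and pan ranges, and the smoothing factor are all hypothetical values chosen only to show how such a mapping might be structured.

```python
# Hypothetical endpoint colors: a cool tone for calm, a warm tone for high arousal.
CALM_RGB = (40, 80, 255)
EXCITED_RGB = (255, 60, 30)


def lerp(start, end, t):
    """Linear interpolation between start and end for t in [0, 1]."""
    return start + (end - start) * t


def smooth(previous, sample, alpha=0.2):
    """Exponential moving average, used here to avoid flicker between readings."""
    return (1 - alpha) * previous + alpha * sample


def lighting_for(arousal, position_x, stage_width):
    """Map arousal (assumed normalized to 0..1) and position to lighting settings."""
    t = min(1.0, max(0.0, arousal))  # clamp out-of-range sensor estimates
    color = tuple(round(lerp(c, e, t)) for c, e in zip(CALM_RGB, EXCITED_RGB))
    return {
        "rgb": color,
        "brightness": lerp(0.2, 1.0, t),
        # Pan the light so it follows the dancer across the stage (assumed +/-45 degrees).
        "pan_deg": lerp(-45.0, 45.0, position_x / stage_width),
    }


# Feed a few raw arousal readings through the smoother before driving the lights.
level = 0.0
for reading in (0.9, 0.9, 0.9):
    level = smooth(level, reading)
    print(lighting_for(level, position_x=2.5, stage_width=10.0))
```

Driving the lights from a single smoothed scalar keeps the response stable and reflects the design choice described above of mapping arousal rather than attempting to classify specific emotions.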

Innovation in AI-based stage presentation focuses on the following three core directions: virtual character generation, human–machine collaboration mechanisms, and environmental intelligent response. Through sensor fusion, affective computing, and reinforcement learning frameworks, these technologies transform the stage into a dynamic perceptual network, enabling a paradigm shift from one-way performance to multi-agent co-creation. Future research should further enhance technical stability, deepen the autonomy of AI as a “creative collaborator”, and establish an artistic evaluation system for human–AI co-performance, ultimately propelling stage art into a new era driven by perceptual intelligence.

5. Summary and Prospect

Overall, in the current context, the application of AI technology in the dance field presents both opportunities and challenges for dancers. Regarding opportunities, AI can serve as an empowering tool for creation and teaching; it can assist dancers in digitizing improvisational movements, generate multiple choreographic schemes on demand to overcome creative bottlenecks, or provide real-time training feedback via motion recognition systems. Additionally, it facilitates the transition of dancers from “movement executors” to “AI creation guides”, enhancing their creative subjectivity by enabling them to lead artistic directions. As for challenges, technology may reshape the professional ecosystem of the dance industry. The efficiency of AI in generating standardized movements risks squeezing the space available to human dancers in scenarios such as commercial dance performances. Furthermore, over-reliance on AI might weaken dancers’ physical cognition and independent creative capacities. Notably, in terms of cultural expression, AI tends to strip movements of their cultural connotations; long-term use of such “de-culturalized” generated movements by dancers could undermine their understanding and inheritance of dance cultural traditions. The balance between opportunities and challenges warrants thorough consideration.

5.1. Achievements

As an art form that has flourished over a long course of time, dance is deeply beloved by people. It carries historical culture and ethnic customs and bears the distinct characteristics of its times. The in-depth application of AI technology in the dance field will provide a strong impetus for its development. In terms of choreography, AI technology can draw on massive amounts of data to offer inspiration and references to choreographers, enabling them to design dance works with novel styles. Simulating the physical conditions of different dancers, such as their appearance and physical strength, can help preview and optimize the arrangement of large-scale dance performances, effectively reducing costs and improving efficiency. In terms of teaching, AI technology can free high-quality dance learning resources from temporal and spatial constraints. Furthermore, by combining AI technology with motion capture technology, customized and personalized teaching can be realized. For students with different interests, learning foundations, and rates of progress, AI technology can effectively enhance the learning experience and efficiency. In terms of performance, the integration of AI technology with AR/VR technologies can break the limitations of traditional performances, creating immersive dance performances and giving the audience a better viewing experience and a greater sense of participation.

5.2. Problems

It is foreseeable that the iterative development and application of AI technology in the future will boost the prosperity and widespread dissemination of the dance field. However, the current application of AI technology in the dance field faces several issues. Firstly, dance is an art form embodying profound connotations, including humanity, history, and emotions. Currently, AI-generated choreographic movements primarily depend on existing data models, which may lead to dance works with overly prominent technical characteristics and certain limitations. Secondly, the current costs of AI technology and supporting technologies, such as motion capture and AR/VR, remain relatively high, and their technical maturity requires further improvement. For instance, motion capture technology still suffers from insufficient accuracy and portability. This hinders their popularization and application in the dance field. Additionally, there remains ambiguity in copyright ownership regarding aspects such as the collection of dance movement data and AI-generated dance works, which may give rise to data and ethical risks. These issues must be resolved in the future promotion and application of AI technology in the dance field.

5.3. Future Directions

Looking ahead, the integration of AI in the field of dance promises not only to enhance existing practices but also to redefine artistic and educational paradigms. To fully realize this potential, it is essential to establish robust quantitative evaluation metrics that can objectively measure the impact of AI technologies across choreography, teaching, and performance. The development and adoption of metrics covering dimensions such as efficiency, quality of learning, and immersiveness will be critical in guiding future research, optimizing applications, and ensuring meaningful human–AI collaboration. In conclusion, the future of AI in dance holds immense promise, but its success will depend on our ability to measure its contributions objectively. By defining and tracking relevant performance indicators, the dance community can ensure that AI not only advances technical capabilities but also enriches artistic expression and learning outcomes. This metrics-driven approach will pave the way for deeper, more responsible, and more impactful innovation at the intersection of dance and technology.

Author Contributions

Conceptualization, Y.Z.; formal analysis, H.N. and R.Y.; investigation, X.F. and Z.L.; writing—original draft preparation, X.F. and Z.L.; writing—review and editing, H.N. and Q.C.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

This work was supported by the Guangdong Philosophy and Social Sciences Planning Project (GD25LN40) and the Educational Commission of Guangdong Province (grant no. 2022ZDZX1002).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cordeschi, R. AI Turns Fifty: Revisiting Its Origins. Appl. Artif. Intell. 2007, 21, 259–279. [Google Scholar] [CrossRef]

- Li, X. The Art of Dance from the Perspective of Artificial Intelligence. J. Phys. Conf. Ser. 2021, 1948, 042011. [Google Scholar] [CrossRef]

- Wallace, B.; Hilton, C.; Nymoen, K.; Torresen, J.; Martin, C.P.; Fiebrink, R. Embodying an Interactive AI for Dance through Movement Ideation. In Proceedings of the 15th Conference on Creativity and Cognition, New York, NY, USA, 19–21 June 2023; pp. 454–464. [Google Scholar]

- Zeng, D. AI-Powered Choreography Using a Multilayer Perceptron Model for Music-Driven Dance Generation. Informatica 2025, 49, 137–148. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, L. Artificial Intelligence for Remote Sensing Data Analysis: A Review of Challenges and Opportunities. IEEE Geosci. Remote Sens. Mag. 2022, 10, 270–294. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, H.; Yu, K.; Zhou, R. Artificial Intelligence Methods in Natural Language Processing: A Comprehensive Review. Highlights Sci. Eng. Technol. 2024, 85, 545–550. [Google Scholar] [CrossRef]

- Lauriola, I.; Lavelli, A.; Aiolli, F. An Introduction to Deep Learning in Natural Language Processing: Models, Techniquexcs, and Tools. Neurocomputing 2022, 470, 443–456. [Google Scholar] [CrossRef]

- Lund, B.D.; Wang, T.; Mannuru, N.R.; Nie, B.; Shimray, S.; Wang, Z. ChatGPT and a New Academic Reality: Artificial Intelligence-Written Research Papers and the Ethics of the Large Language Models in Scholarly Publishing. J. Assoc. Inf. Sci. Technol. 2023, 74, 570–581. [Google Scholar] [CrossRef]

- Kakani, V.; Nguyen, V.H.; Kumar, B.P.; Kim, H.; Pasupuleti, V.R. A Critical Review on Computer Vision and Artificial Intelligence in Food Industry. J. Agric. Food Res. 2020, 2, 100033. [Google Scholar] [CrossRef]

- Patrício, D.I.; Rieder, R. Computer Vision and Artificial Intelligence in Precision Agriculture for Grain Crops: A Systematic Review. Comput. Electron. Agric. 2018, 153, 69–81. [Google Scholar] [CrossRef]

- Gollapudi, S. Artificial Intelligence and Computer Vision. In Learn Computer Vision Using OpenCV: With Deep Learning CNNs and RNNs; Springer: Cham, Switzerland, 2019; pp. 1–29. [Google Scholar]

- Mishra, B.K.; Kumar, R. Natural Language Processing in Artificial Intelligence; CRC Press: Boca Raton, FL, USA, 2020. [Google Scholar]

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep Learning for Visual Understanding: A Review. Neurocomputing 2016, 187, 27–48. [Google Scholar] [CrossRef]

- Zhang, J.; Li, S.L.; Zhou, X.L. Application and Analysis of Image Recognition Technology Based on Artificial Intelligence—Machine Learning Algorithm as an Example. In Proceedings of the 2020 International Conference on Computer Vision, Image and Deep Learning (CVIDL), Chongqing, China, 10–12 July 2020; pp. 173–176. [Google Scholar]

- Saba, T.; Rehman, A. Effects of Artificially Intelligent Tools on Pattern Recognition. Int. J. Mach. Learn. Cybern. 2013, 4, 155–162. [Google Scholar] [CrossRef]

- Tyagi, A.K.; Chahal, P. Artificial Intelligence and Machine Learning Algorithms. In Challenges and Applications for Implementing Machine Learning in Computer Vision; IGI Global: Hershey, PA, USA, 2020; pp. 188–219. [Google Scholar]

- Pattnaik, P.; Sharma, A.; Choudhary, M.; Singh, V.; Agarwal, P.; Kukshal, V. Role of Machine Learning in the Field of Fiber Reinforced Polymer Composites: A Preliminary Discussion. Mater. Today Proc. 2021, 44, 4703–4708. [Google Scholar] [CrossRef]

- Vrontis, D.; Christofi, M.; Pereira, V.; Tarba, S.; Makrides, A.; Trichina, E. Artificial Intelligence, Robotics, Advanced Technologies and Human Resource Management: A Systematic Review. Artif. Intell. Int. HRM 2022, 6, 1237–1266. [Google Scholar] [CrossRef]

- Goel, P.; Kaushik, N.; Sivathanu, B.; Pillai, R.; Vikas, J. Consumers’ Adoption of Artificial Intelligence and Robotics in Hospitality and Tourism Sector: Literature Review and Future Research Agenda. Tour. Rev. 2022, 77, 1081–1096. [Google Scholar] [CrossRef]

- Kim, S.S.; Kim, J.; Badu-Baiden, F.; Giroux, M.; Choi, Y. Preference for Robot Service or Human Service in Hotels? Impacts of the COVID-19 Pandemic. Int. J. Hosp. Manag. 2021, 93, 102795. [Google Scholar] [CrossRef]

- Zhou, G.; Zhang, C.; Li, Z.; Ding, K.; Wang, C. Knowledge-Driven Digital Twin Manufacturing Cell towards Intelligent Manufacturing. Int. J. Prod. Res. 2020, 58, 1034–1051. [Google Scholar] [CrossRef]

- Wang, Z.; Deng, Y.; Zhou, S.; Wu, Z. Achieving Sustainable Development Goal 9: A Study of Enterprise Resource Optimization Based on Artificial Intelligence Algorithms. Resour. Policy 2023, 80, 103212. [Google Scholar] [CrossRef]

- Wang, Z. Artificial Intelligence in Dance Education: Using Immersive Technologies for Teaching Dance Skills. Technol. Soc. 2024, 77, 102579. [Google Scholar] [CrossRef]

- Sumi, M. Simulation of Artificial Intelligence Robots in Dance Action Recognition and Interaction Process Based on Machine Vision. Entertain. Comput. 2025, 52, 100773. [Google Scholar] [CrossRef]

- Wallace, B.; Nymoen, K.; Torresen, J.; Martin, C.P. Breaking from Realism: Exploring the Potential of Glitch in AI-Generated Dance. Digit. Creat. 2024, 35, 125–142. [Google Scholar] [CrossRef]

- Yang, L. Influence of Human–Computer Interaction-Based Intelligent Dancing Robot and Psychological Construct on Choreography. Front. Neurorobot. 2022, 16, 819550. [Google Scholar] [CrossRef]

- Braccini, M.; De Filippo, A.; Lombardi, M.; Milano, M. Dance Choreography Driven by Swarm Intelligence in Extended Reality Scenarios: Perspectives and Implications. In Proceedings of the 2025 IEEE International. Conference on Artificial Intelligence and extended and Virtual Reality (AIxVR 2025), Lisbon, Portugal, 27–29 January 2025; pp. 348–354. [Google Scholar]

- Sanders, C.D., Jr. An Exploration into Digital Technology and Applications for the Advancement of Dance Education. Master’s Thesis, University of California, Irvine, CA, USA, 2021. [Google Scholar]

- Feng, H.; Zhao, X.; Zhang, X. Automatic Arrangement of Sports Dance Movement Based on Deep Learning. Comput. Intell. Neurosci. 2022, 2022, 9722558. [Google Scholar] [CrossRef]

- Baía Reis, A.; Vašků, P.; Solmošiová, S. Artificial Intelligence in Dance Choreography: A Practice-as-Research Exploration of Human–AI Co-Creation Using ChatGPT-4. Int. J. Perform. Arts Digit. Media 2025, 1–21. [Google Scholar] [CrossRef]

- Copeland, R. Merce Cunningham: The Modernizing of Modern Dance; Routledge: London, UK, 2004. [Google Scholar]

- Jordan, J. AI as a Tool in the Arts, Arts Manage; Technology Laboratory, Carnegie Mellon University: Pittsburgh, PA, USA, 2020. [Google Scholar]

- Curtis, G. Dances with Robots, and Other Tales from the Outer Limits. International New York Times, 5 November 2020. [Google Scholar]

- Pataranutaporn, P.; Archiwaranguprok, C.; Bhongse-tong, P.; Maes, P.; Klunchun, P. Bridging Tradition and Technology: Human–AI Interface for Exploration and Co-Creation of Classical Dance Heritage. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Yokohama Japan, 26 April 2025; pp. 1–6. [Google Scholar]

- Pataranutaporn, P.; Mano, P.; Bhongse-Tong, P.; Chongchadklang, T.; Archiwaranguprok, C.; Hantrakul, L.; Eaimsa-ard, J.; Maes, P.; Klunchun, P. Human–AI Co-Dancing: Evolving Cultural Heritage through Collaborative Choreography with Generative Virtual Characters. In Proceedings of the 9th International Conference on Movement and Computing, Utrecht, The Netherlands, 30 May–2 June 2024; pp. 1–10. [Google Scholar]

- Gao, L. Dance Creation Based on the Development and Application of a Computer Three-Dimensional Auxiliary System. Int. J. Maritime Eng. 2024, 1, 347–358. [Google Scholar]

- Zhang, B.; Gang, Y. Evaluation and Improvement of College Dance Course Based on Multiple Intelligences Theory. Res. Adv. Educ. 2024, 3, 10–19. [Google Scholar] [CrossRef]

- Li, J.; Ahmad, M.A. Evolution and Trends in Online Dance Instruction: A Comprehensive Literature Analysis. Front. Educ. 2025, 10, 1523766. [Google Scholar] [CrossRef]

- Zhang, J. The Practice of AI Technology Empowering the Reform of Higher Dance Education Management Research. Educ. Rev. 2024, 8, 1097–1101. [Google Scholar] [CrossRef]

- Zejing, M.; Luen, L.C. A Comprehensive Method in Dance Quality Education Assessment for Higher Education. Int. J. Acad. Res. Bus. Soc. Sci. 2024, 14, 298–306. [Google Scholar] [CrossRef] [PubMed]

- Du, W.; Chen, J.; Xu, S. Exploring the Current Landscape and Future Directions of Information Technology in Dance Education. In Proceedings of the 26th IEEE/ACIS International Winter Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD-Winter), Taichung, Taiwan, 6–8 December 2023; pp. 120–126. [Google Scholar]

- Yue, Z. Investigating the Application of Computer VR Technology and AI in the Examination of Dance Movement Patterns. J. Ambient Intell. Humaniz. Comput. 2025, 16, 629–640. [Google Scholar] [CrossRef]

- SureshKumar, M.; Sukhresswarun, R.; Raviraaj, S.V.; Shanmugapriya, P. Smart Steps: Enhancing Dance Through AI Driven Analysis. In Proceedings of the 2024 International Conference on Power, Energy, Control and Transmission Systems. (ICPECTS 2024), Chennai, India, 8–9 October 2024; pp. 1–5. [Google Scholar]

- Xie, Y.; Yan, Y.; Li, Y. The Use of Artificial Intelligence-Based Siamese Neural Network in Personalized Guidance for Sports Dance Teaching. Sci. Rep. 2025, 15, 12112. [Google Scholar] [CrossRef] [PubMed]

- Li, M. The Analysis of Dance Teaching System in Deep Residual Network Fusing Gated Recurrent Unit Based on Artificial Intelligence. Sci. Rep. 2025, 15, 1305. [Google Scholar] [CrossRef]

- Zhen, N.; Keun, P.J. Ethnic Dance Movement Instruction Guided by Artificial Intelligence and 3D Convolutional Neural Networks. Sci. Rep. 2025, 15, 16856. [Google Scholar] [CrossRef]

- Xu, L.-J.; Wu, J.; Zhu, J.-D.; Chen, L. Effects of AI-Assisted Dance Skills Teaching, Evaluation and Visual Feedback on Dance Students’ Learning Performance, Motivation and Self-Efficacy. Int. J. Hum.-Comput. Stud. 2025, 195, 103410. [Google Scholar] [CrossRef]

- Liu, X.; Soh, K.G.; Dev Omar Dev, R.; Li, W.; Yi, Q. Design and Implementation of Adolescent Health Latin Dance Teaching System under Artificial Intelligence Technology. PLoS ONE 2023, 18, e0293313. [Google Scholar] [CrossRef]

- Zhang, X. The Application of Artificial Intelligence Technology in Dance Teaching. In Proceedings of the First International Conference on Real Time Intelligent Systems, Luton, UK, 9–11 October 2023; pp. 23–30.

- Zhenyu, S. Gamified Learning Experience Based on Digital Media and the Application of Virtual Teaching Robots in Dance Teaching. Entertain. Comput. 2025, 52, 100785.

- Ishii, R.; Eitoku, S.; Matsuo, S.; Makiguchi, M.; Hoshi, A.; Morency, L.-P. Let’s Dance Together! AI Dancers Can Dance to Your Favorite Music and Style. In Proceedings of the Companion 26th International Conference on Multimodal Interaction, San Jose, Costa Rica, 4–8 November 2024; pp. 88–90.

- Befera, L.; Bioglio, L. Classifying Contemporary AI Applications in Intermedia Theatre: Overview and Analysis of Some Cases. In Proceedings of the CEUR Workshop Proceedings, Virtual Conference, 23–27 October 2022; pp. 42–54.

- Marin-Bucio, D. Dancing Embryo: Enacting Dance Experience Through Human–AI Kinematic Collaboration. Documenta 2025, 42, 247–277.

- El-Nasr, M.S.; Vasilakos, A.V. DigitalBeing–Using the Environment as an Expressive Medium for Dance. Inf. Sci. 2008, 178, 663–678.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).