FPH-DEIM: A Lightweight Underwater Biological Object Detection Algorithm Based on Improved DEIM

Abstract

1. Introduction

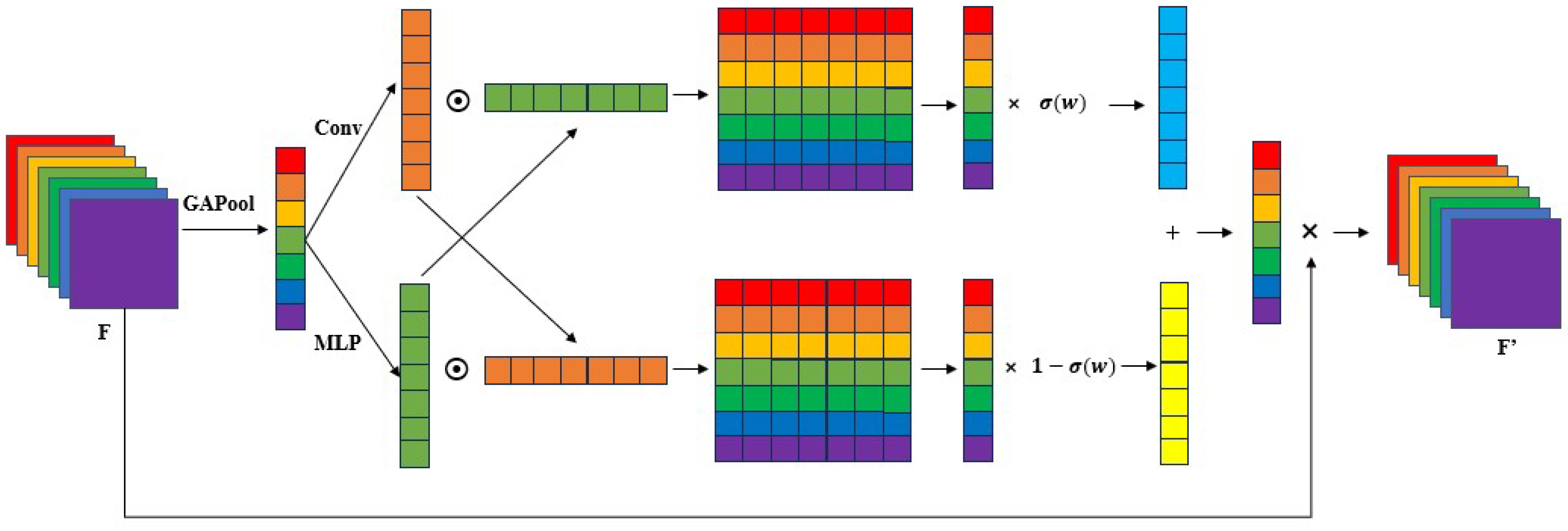

- Introduction of the Fine-grained Channel Attention (FCA) mechanism. This module models the interaction between globally pooled (average) channel features and local cross-channel context to generate channel-wise weights dynamically. Compared with the conventional Squeeze-and-Excitation (SE) module [23], FCA is better suited to underwater images, in which local noise and low contrast are prominent. It suppresses redundant background information, enhances salient biological features, and improves robustness in complex environments [12].

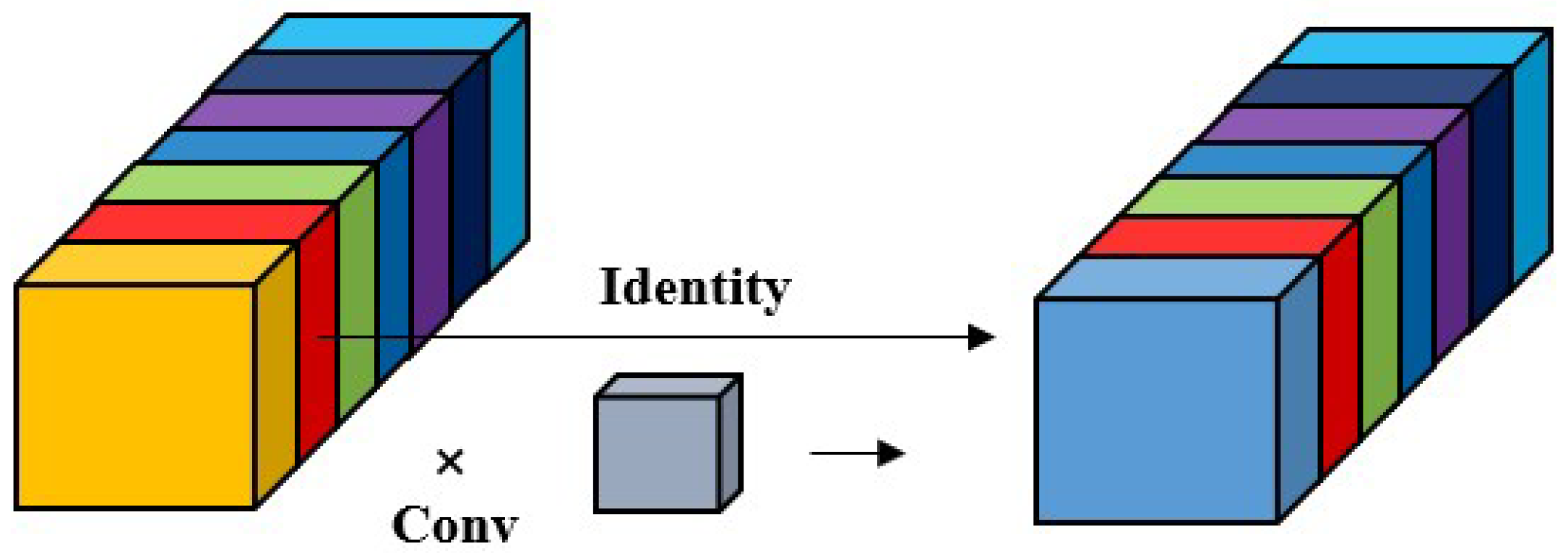

- Adoption of the efficient Partial Convolution (PConv) operator to replace standard convolution modules. PConv applies a regular convolution to only a subset of the input channels and passes the remaining channels through unchanged, which substantially reduces memory access and computational redundancy with little loss of recognition accuracy. This makes it well suited to deployment on embedded underwater platforms and mobile autonomous underwater vehicles (AUVs) with limited computing capacity [13].

- Integration of the Haar Wavelet Downsampling (HWDown) module to better preserve small objects. Traditional downsampling methods, such as max pooling or strided convolution, discard information while reducing spatial resolution, which is especially harmful when objects are close to the smallest scale the network can resolve. The HWDown module applies a Haar wavelet transform that splits each feature map into one low-frequency and three high-frequency sub-bands at half resolution and stacks them along the channel dimension, so the resolution reduction itself loses no information before a convolution compresses the channels. This strengthens the model’s semantic representation and discriminative power for small targets such as jellyfish, fish larvae, and micro-crustaceans [14].

2. Related Work

2.1. Overview of Underwater Object Detection Methods

- Standard YOLO models use a one-to-many (O2M) label-assignment strategy, which produces dense, redundant prediction boxes that must be filtered by non-maximum suppression (NMS) and makes it difficult to learn stable representations for low-contrast, occluded, or small targets;

- Its feature extraction backbone is not specifically designed for the unique characteristics of underwater imagery, resulting in limited ability to perceive fine-grained semantic information.
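To make the matching contrast concrete, the sketch below compares a simplified one-to-many assignment (each ground-truth box takes its top-k predictions by IoU, loosely in the spirit of YOLO-style assigners) with one-to-one bipartite matching as used by DETR-style detectors. The boxes, `k`, and the brute-force search are illustrative assumptions: real assigners also weigh classification scores, and real DETR matchers use a Hungarian solver (for example `scipy.optimize.linear_sum_assignment`) over a cost that combines class, L1, and GIoU terms.

```python
from itertools import permutations

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    def area(r: Box) -> float:
        return max(0.0, r[2] - r[0]) * max(0.0, r[3] - r[1])
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def one_to_many(gts: list[Box], preds: list[Box], k: int = 2) -> dict[int, list[int]]:
    """Illustrative O2M: every ground truth takes its k highest-IoU predictions as positives."""
    return {g: sorted(range(len(preds)), key=lambda p: -iou(gt, preds[p]))[:k]
            for g, gt in enumerate(gts)}


def one_to_one(gts: list[Box], preds: list[Box]) -> dict[int, int]:
    """Illustrative O2O: exhaustive search for the assignment that maximizes total IoU.
    Only feasible for tiny inputs; a Hungarian solver does this in polynomial time."""
    if len(preds) < len(gts):
        raise ValueError("need at least as many predictions as ground truths")
    best = min(permutations(range(len(preds)), len(gts)),
               key=lambda perm: sum(1.0 - iou(gts[g], preds[p]) for g, p in enumerate(perm)))
    return dict(enumerate(best))
```

With two ground-truth boxes and two near-duplicate predictions around each, `one_to_many` marks all four predictions as positives, while `one_to_one` keeps exactly one per object, so no NMS step is needed to remove duplicates.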

2.2. Transformer-Based Object Detection

2.3. The DEIM Framework

- Dense One-to-One Matching: DEIM increases the density of detectable objects within a single image via data augmentation techniques such as Mosaic and MixUp, thereby improving positive sample utilization without altering the core DETR structure.

- Matchability-Aware Loss (MAL): This loss uses the IoU of each matched prediction to set the target for its classification confidence, so that low-quality matches still receive useful supervision and overall detection robustness improves.
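MAL can be made concrete for a single prediction. As we read the DEIM paper [10], a matched query with predicted confidence p and box IoU q is trained with binary cross-entropy against the soft target q^γ, while an unmatched query is penalized by −p^γ·log(1 − p). The sketch below encodes that reading; the default γ = 1.5 and the clamping constant are assumptions for illustration, so the exact formulation and settings should be checked against [10].

```python
import math


def mal(p: float, q: float, y: int, gamma: float = 1.5, eps: float = 1e-7) -> float:
    """Matchability-Aware Loss for one prediction (sketch of our reading of DEIM).

    p: predicted confidence in (0, 1)
    q: IoU between the matched prediction and its ground truth (ignored when y == 0)
    y: 1 for a matched (positive) query, 0 for an unmatched one
    gamma: focusing exponent; 1.5 is an assumed example value
    """
    p = min(max(p, eps), 1.0 - eps)  # clamp so that log() stays finite
    if y == 1:
        t = q ** gamma  # soft target derived from matching quality
        return -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    # Unmatched queries: down-weight easy negatives (small p) by p**gamma.
    return -(p ** gamma) * math.log(1.0 - p)
```

Because binary cross-entropy with target t is minimized at p = t, a poorly localized match (small q) is pushed toward low confidence rather than toward 1, which is how the loss ties classification confidence to localization quality.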

2.4. Model Lightweighting and Perception Enhancement Techniques

3. Materials and Methods

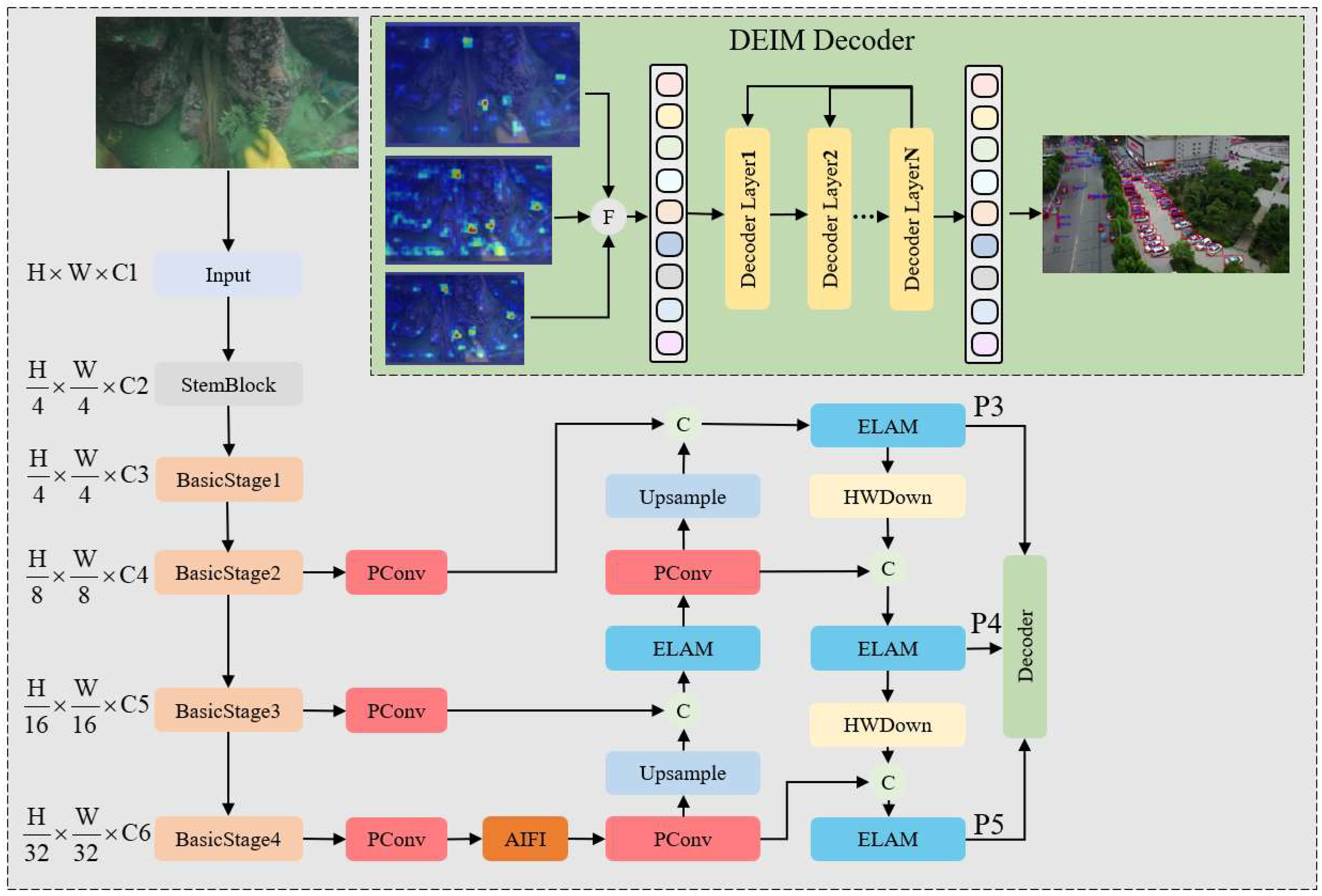

3.1. FPH-DEIM: An Improved DEIM-Based Lightweight Approach for Underwater Object Detection

- Image input and preprocessing.

- Lightweight backbone for feature extraction, integrating FCA and PConv.

- Multi-scale feature downsampling and fusion, including HWDown.

- Encoder–decoder with one-to-one target matching, keeping DEIM’s MAL.

- Output module for bounding box regression and object classification.
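The multi-scale stages of this pipeline can be illustrated by tracing the spatial size of each pyramid level for the 640 × 640 input listed in the training configuration. The strides (8, 16, 32) are an assumption borrowed from common DETR-style detectors rather than a stated FPH-DEIM setting, and `trace_pyramid` is a hypothetical helper; each stride-2 stage, whether a strided convolution or HWDown, halves the height and width.

```python
def trace_pyramid(img_size: int = 640, strides: tuple[int, ...] = (8, 16, 32)):
    """Return (stride, height, width, positions) for each pyramid level.

    Assumes a square input whose side is divisible by every stride.
    The default strides are an illustrative assumption, not an FPH-DEIM setting.
    """
    levels = []
    for s in strides:
        if img_size % s:
            raise ValueError(f"input size {img_size} is not divisible by stride {s}")
        side = img_size // s
        levels.append((s, side, side, side * side))
    return levels


for stride, h, w, n in trace_pyramid():
    print(f"stride {stride:>2}: {h} x {w} = {n} positions")
```

Under these assumptions the three levels hold 6400 + 1600 + 400 = 8400 positions, one reason hybrid encoders in the RT-DETR family restrict full self-attention to the coarsest level.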

3.2. Fine-Grained Channel Attention Module
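The FCA mechanism described in the Introduction combines a global channel descriptor with local cross-channel context. The sketch below illustrates that idea in plain Python under stated assumptions: local context is a 1-D convolution over the pooled channel descriptors (as in ECA-style attention), global context is a full C × C linear map, and the two are blended with a fixed factor `alpha` before a sigmoid. It is a simplified global-plus-local channel attention, not the exact FCA of [12], whose way of combining the two branches differs; all function names, kernels, and weights here are hypothetical.

```python
import math


def gap(fmap):
    """Global average pooling: one descriptor per channel of a C x H x W nested list."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def conv1d_same(x, kernel):
    """Local cross-channel interaction: 1-D convolution over channel descriptors, zero padding."""
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")
    r, n = len(kernel) // 2, len(x)
    return [sum(kernel[j] * x[i + j - r] for j in range(len(kernel)) if 0 <= i + j - r < n)
            for i in range(n)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def global_local_channel_attention(fmap, local_kernel, global_matrix, alpha=0.5):
    """Simplified global + local channel attention (illustrative, not the exact FCA of [12]).

    local_kernel: odd-length 1-D kernel applied across neighbouring channels
    global_matrix: hypothetical C x C weights mixing all channel descriptors
    alpha: fixed blend between the local and global branches
    Returns the re-weighted feature map and the per-channel weights.
    """
    u = gap(fmap)
    local = conv1d_same(u, local_kernel)
    glob = [sum(w * v for w, v in zip(row, u)) for row in global_matrix]
    weights = [sigmoid(alpha * l + (1 - alpha) * g) for l, g in zip(local, glob)]
    # Scale every spatial position of channel c by that channel's weight.
    out = [[[weights[c] * v for v in row] for row in fmap[c]] for c in range(len(fmap))]
    return out, weights
```

For example, a bright channel and an all-zero channel with an identity local kernel `[0, 1, 0]` and an identity global matrix receive weights sigmoid(1) ≈ 0.73 and 0.5, so the informative channel is emphasized relative to the empty one.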

3.3. Partial Convolution Module
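The channel-splitting idea behind PConv, as described for FasterNet [13], can be sketched in plain Python: a 3 × 3 convolution is applied to the first c_p channels only, and the remaining channels are copied through. With c_p = c/4, the ratio used in [13], the multiply-accumulate count falls to (1/4)² = 1/16 of a regular convolution of the same width. The nested-list tensors and helper names are assumptions for illustration; a practical implementation would call a framework convolution.

```python
def conv3x3_same(channels, weights):
    """Regular 3x3 convolution, stride 1, zero padding.

    channels: list of C_in maps, each H x W
    weights[o][i]: 3x3 kernel from input channel i to output channel o
    """
    h, w = len(channels[0]), len(channels[0][0])
    out = []
    for w_o in weights:
        o_map = [[0.0] * w for _ in range(h)]
        for x_map, k in zip(channels, w_o):
            for y in range(h):
                for x in range(w):
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            yy, xx = y + dy, x + dx
                            if 0 <= yy < h and 0 <= xx < w:
                                o_map[y][x] += k[dy + 1][dx + 1] * x_map[yy][xx]
        out.append(o_map)
    return out


def partial_conv(fmap, weights, cp):
    """FasterNet-style PConv sketch: convolve the first cp channels (cp -> cp),
    pass the other channels through unchanged."""
    assert len(weights) == cp and all(len(w_o) == cp for w_o in weights)
    return conv3x3_same(fmap[:cp], weights) + list(fmap[cp:])


def conv_flops(h, w, k, c_in, c_out):
    """Multiply-accumulate count of a k x k convolution (bias ignored)."""
    return h * w * k * k * c_in * c_out


# PConv on 16 of 64 channels versus a full 64 -> 64 convolution on an 80 x 80 map.
ratio = conv_flops(80, 80, 3, 16, 16) / conv_flops(80, 80, 3, 64, 64)
```

Here `ratio` evaluates to 0.0625, i.e. 1/16. In FasterNet the untouched channels are mixed back in by the pointwise convolutions that follow PConv, so information in those channels is not simply discarded.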

3.4. HWDown Downsampling Module
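The lossless nature of the Haar step in HWDown [14] can be checked directly. The sketch below performs one level of the orthonormal 2-D Haar transform on a single channel, producing an approximation band and three detail bands at half resolution, and then inverts it exactly. In the design of [14], the four bands of every channel are concatenated (C to 4C channels) and passed to a 1 × 1 convolution with batch normalization and ReLU; that learned compression step is omitted here, and the band names A/H/V/D are our own labels, since conventions differ between libraries.

```python
def haar_downsample(x):
    """One-level orthonormal 2-D Haar transform of an H x W map (H and W even).

    Returns four (H/2) x (W/2) bands. Stacking them along the channel axis gives
    the 4C-channel tensor that an HWD-style module would feed to a 1x1 convolution.
    """
    h, w = len(x), len(x[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    A, H, V, D = ([[0.0] * (w // 2) for _ in range(h // 2)] for _ in range(4))
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]
            c, d = x[i + 1][j], x[i + 1][j + 1]
            A[i // 2][j // 2] = (a + b + c + d) / 2  # low-frequency approximation
            H[i // 2][j // 2] = (a - b + c - d) / 2  # left-right differences
            V[i // 2][j // 2] = (a + b - c - d) / 2  # top-bottom differences
            D[i // 2][j // 2] = (a - b - c + d) / 2  # diagonal differences
    return A, H, V, D


def haar_upsample(A, H, V, D):
    """Exact inverse of haar_downsample, showing that no information was lost."""
    h2, w2 = len(A), len(A[0])
    x = [[0.0] * (2 * w2) for _ in range(2 * h2)]
    for i in range(h2):
        for j in range(w2):
            s0, s1, s2, s3 = A[i][j], H[i][j], V[i][j], D[i][j]
            x[2 * i][2 * j] = (s0 + s1 + s2 + s3) / 2
            x[2 * i][2 * j + 1] = (s0 - s1 + s2 - s3) / 2
            x[2 * i + 1][2 * j] = (s0 + s1 - s2 - s3) / 2
            x[2 * i + 1][2 * j + 1] = (s0 - s1 - s2 + s3) / 2
    return x
```

Because the transform is orthonormal, the sum of squared values (the signal energy) is identical before and after decomposition, whereas 2 × 2 max pooling keeps one value per block and cannot be inverted.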

4. Experiments

4.1. Experimental Setup

4.2. Experimental Dataset

4.3. Performance Metrics
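The metrics reported in the result tables (P, R, and mAP@0.5) follow the standard detection definitions: P = TP / (TP + FP) and R = TP / (TP + FN), where a detection is a true positive if it matches a not-yet-matched ground-truth box at IoU ≥ 0.5, and mAP@0.5 is the mean over classes of the per-class average precision. The sketch below computes these quantities; the all-point interpolation (as in PASCAL VOC 2010 and later) is an assumption, since the exact evaluation protocol is not stated in this section.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision and recall from match counts (0.0 when undefined)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores: list[float], is_tp: list[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection
    is_tp: whether each detection matched an unmatched ground truth at IoU >= 0.5
    num_gt: number of ground-truth boxes of this class
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolate: make precision non-increasing when read from high to low recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p  # area of each recall step under the PR curve
        prev_r = r
    return ap
```

For instance, three ranked detections flagged true, false, true against two ground-truth boxes give AP = 0.5 × 1 + 0.5 × 2/3 = 5/6; averaging such values over all classes yields mAP@0.5.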

4.4. Experimental Results

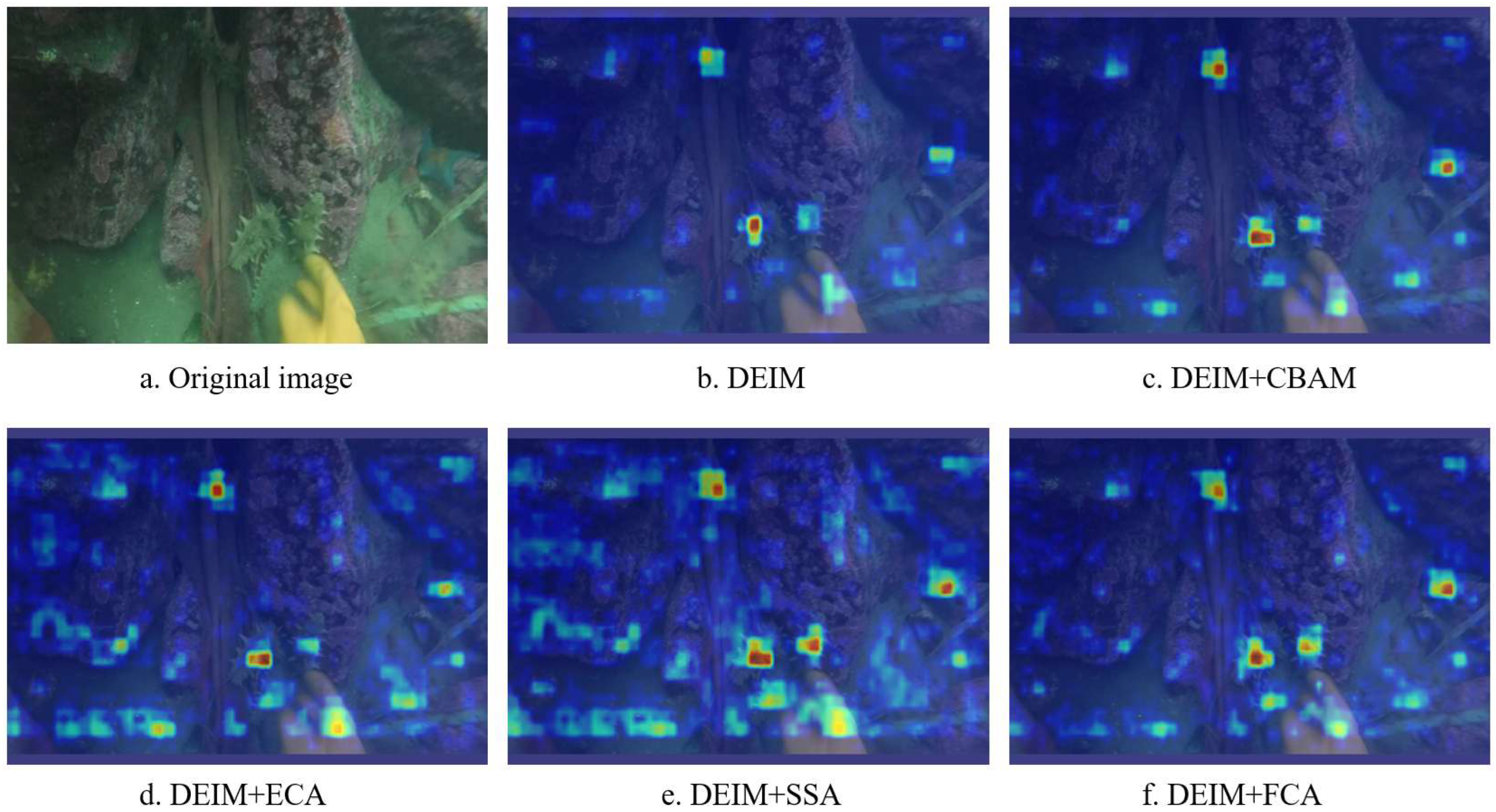

4.4.1. Comparison of Attention Modules

4.4.2. Ablation Study

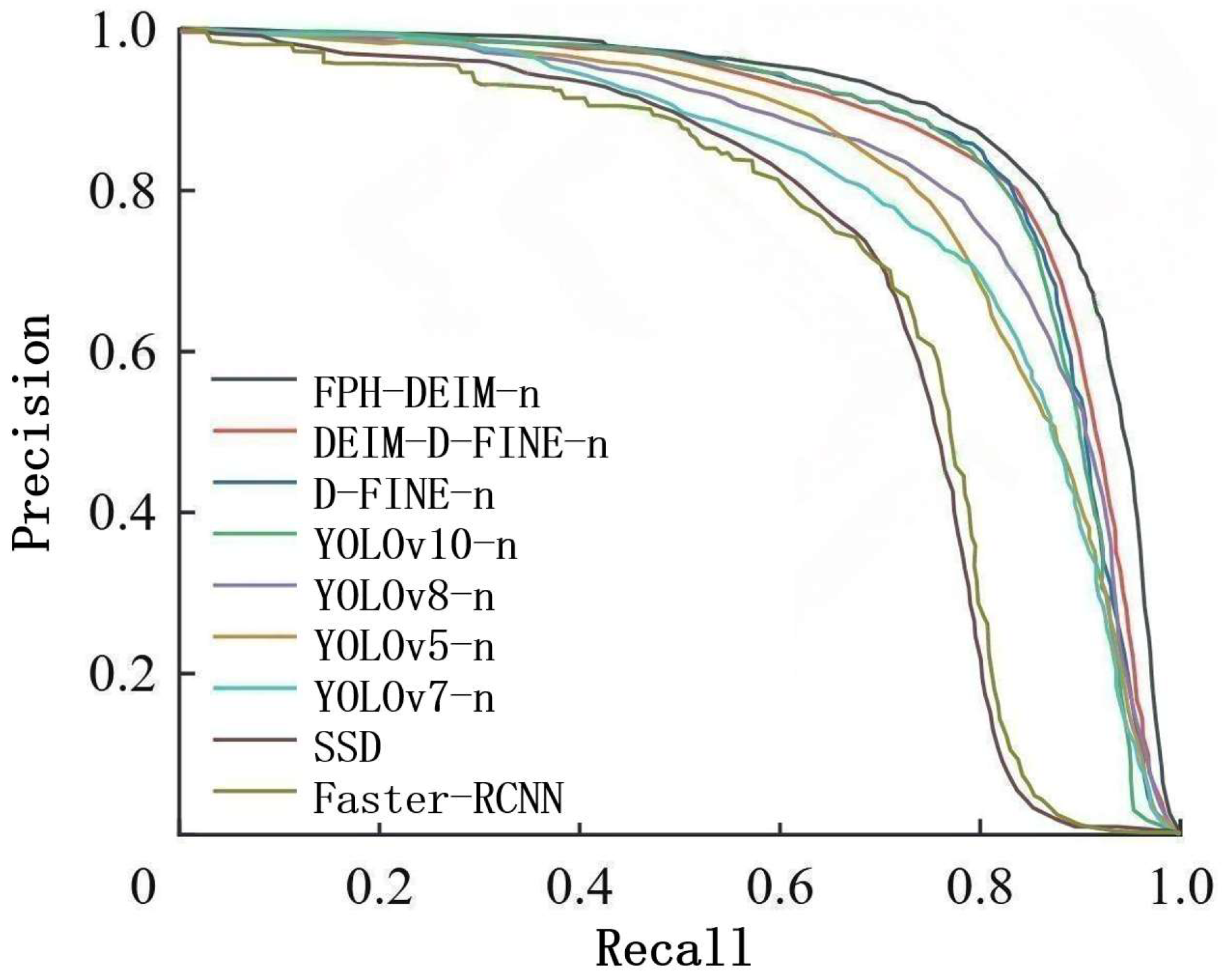

4.4.3. Performance Comparison Among Detection Models

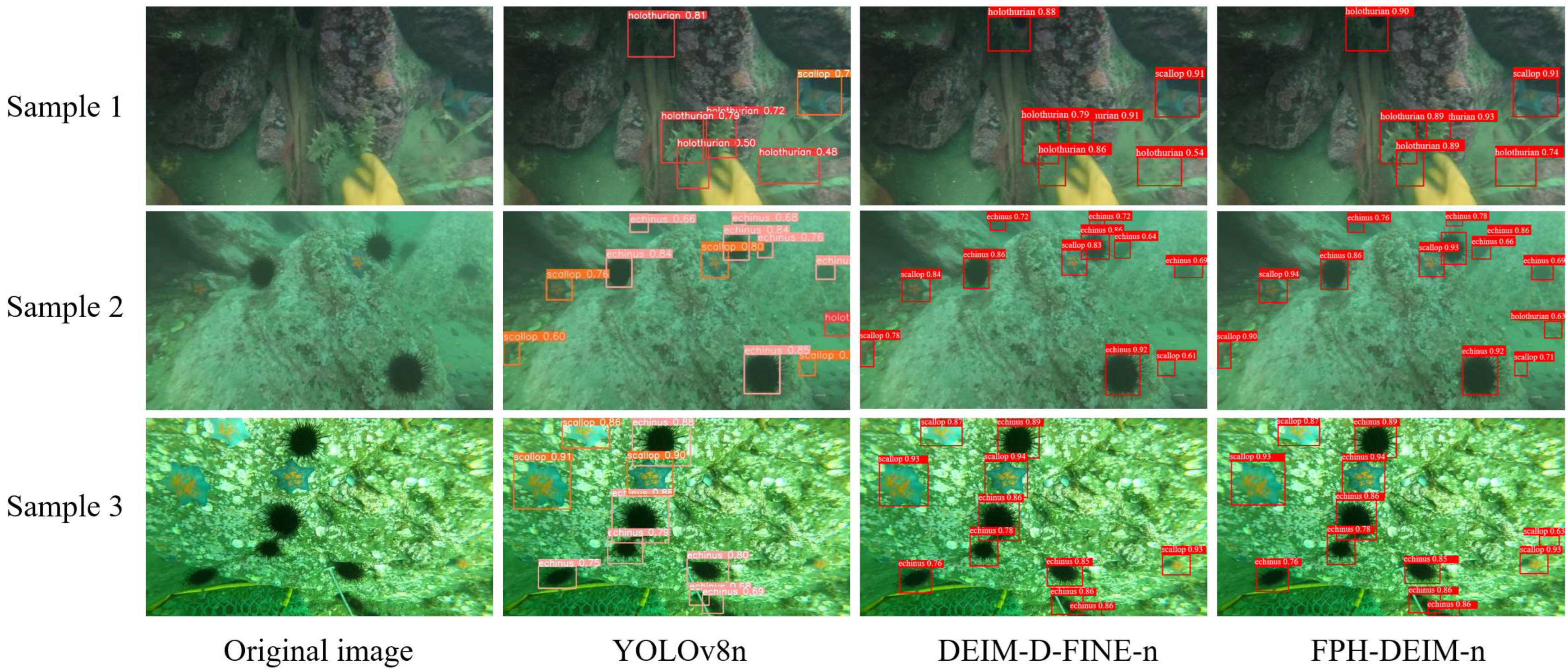

4.5. Real-World Monitoring Experiment of the Improved Model

5. Discussion

6. Conclusions

- A feature-aware mechanism based on FCA is introduced to enhance the representational contrast between blurred targets and noisy backgrounds in underwater images; added alone to the DEIM baseline, it raises mAP@0.5 from 86.2% to 88.5%.

- The efficient PConv operator is adopted to reduce computational redundancy, lowering model latency and memory access load.

- The HWDown module, based on Haar wavelets, is integrated to preserve spatial detail during downsampling and strengthen the model’s ability to identify small objects.

- Extensive experiments on public underwater datasets and real underwater robotic platforms demonstrate that FPH-DEIM outperforms mainstream lightweight detectors in terms of accuracy, model size, and inference speed, showing strong potential for practical deployment.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, C.; Guo, J.; Cong, R.; Pang, Y.; Wang, B. Underwater Image Enhancement via Medium Transmission-Guided Multi-Color Space Embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

2. Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

3. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

4. Li, J.; Yang, W.; Qiao, S.; Gu, Z.; Zheng, B.; Zheng, H. Self-Supervised Marine Organism Detection from Underwater Images. IEEE J. Ocean. Eng. 2025, 50, 120–135.

5. Li, Y.; Zhang, L.; Wang, K.; Xu, L.; Gulliver, T.A. Underwater Acoustic Intelligent Spectrum Sensing with Multimodal Data Fusion: A Mul-YOLO Approach. Future Gener. Comput. Syst. 2025, 173, 107880.

6. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

7. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the 16th European Conference on Computer Vision (ECCV 2020), Glasgow, UK, 23–28 August 2020; pp. 213–229.

8. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Conference, 3–7 May 2021; Available online: https://openreview.net/forum?id=gZ9hCDWe6ke (accessed on 21 August 2025).

9. Chen, Q.; Wang, Y.; Yang, T.; Zhang, X.; Cheng, J.; Sun, J. You Only Look One-Level Feature. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 13039–13048.

10. Huang, S.; Lu, Z.; Cun, X.; Yu, Y.; Zhou, X.; Shen, X. DEIM: DETR with Improved Matching for Fast Convergence. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025; pp. 15162–15171.

11. Musa, U.I.; Roy, A. Marine Robotics: An Improved Algorithm for Object Detection Underwater. Int. J. Comput. Graph. Multimed. 2022, 2, 1–8.

12. Sun, H.; Wen, Y.; Feng, H.; Zheng, Y.; Mei, Q.; Ren, D.; Yu, M. Unsupervised Bidirectional Contrastive Reconstruction and Adaptive Fine-Grained Channel Attention Networks for Image Dehazing. Neural Netw. 2024, 176, 106314.

13. Chen, J.; Kao, S.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 12021–12031.

14. Xu, G.; Liao, W.; Zhang, X.; Li, C.; He, X.; Wu, X. Haar Wavelet Downsampling: A Simple but Effective Downsampling Module for Semantic Segmentation. Pattern Recognit. 2023, 143, 109819.

15. Yu, H.; Yin, Y.; Huang, T.; Liu, C. U-YOLO: An Improved YOLOv3 Model for Underwater Object Detection. Appl. Sci. 2022, 12, 3481.

16. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

17. Er, M.J.; Chen, J.; Zhang, Y.; Gao, W. Research Challenges, Recent Advances, and Popular Datasets in Deep Learning-Based Underwater Marine Object Detection: A Review. Sensors 2023, 23, 1990.

18. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems, Proceedings of the NIPS 2015; MIT Press: Cambridge, MA, USA, 2015; Volume 1, pp. 91–99.

19. Zhang, Y.; Cui, Y.; Wu, X.; Wang, Y. Underwater Object Detection Based on Improved YOLOv3 Model. J. Mar. Sci. Eng. 2021, 9, 311.

20. Meng, D.; Chen, X.; Fan, Z.; Zeng, G.; Li, H.; Yuan, Y.; Sun, L.; Wang, J. Conditional DETR for Fast Training Convergence. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3651–3660.

21. Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023; Available online: https://openreview.net/forum?id=3mRwyG5one (accessed on 21 August 2025).

22. Li, F.; Zhang, H.; Liu, S.; Guo, J.; Ni, L.M.; Zhang, L. DN-DETR: Accelerate DETR Training by Introducing Query DeNoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 13619–13628.

23. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

24. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; pp. 3–19.

25. Liu, C.; Li, H.; Wang, S.; Zhu, M.; Wang, D.; Fan, X.; Wang, Z. A Dataset and Benchmark of Underwater Object Detection for Robot Picking. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021; pp. 1–6.

| Parameter | Configuration |
|---|---|
| Learning rate | 0.0002 |
| Weight decay | 0.0001 |
| Batch size | 8 |
| Optimizer | AdamW |
| Image size | 640 × 640 |
| Epochs | 300 |
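The settings in the table above can be captured as a small, typed configuration object. The sketch below is an assumption about how one might organize them, not the authors' code; `total_steps` is a hypothetical helper, and the dataset size passed to it is a placeholder because the number of training images is not given in this table. Learning-rate schedule, warm-up, and augmentation settings are not listed and are therefore omitted.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training hyperparameters as listed in the setup table."""
    lr: float = 2e-4
    weight_decay: float = 1e-4
    batch_size: int = 8
    optimizer: str = "AdamW"
    img_size: tuple[int, int] = (640, 640)
    epochs: int = 300

    def optimizer_kwargs(self) -> dict:
        # Keyword names accepted by torch.optim.AdamW(params, lr=..., weight_decay=...).
        return {"lr": self.lr, "weight_decay": self.weight_decay}


def total_steps(cfg: TrainConfig, num_images: int) -> int:
    """Hypothetical helper: optimizer steps when the last partial batch is kept."""
    return math.ceil(num_images / cfg.batch_size) * cfg.epochs


cfg = TrainConfig()
```

With a placeholder dataset of 1000 images, `total_steps(cfg, 1000)` gives 125 × 300 = 37,500 optimizer steps.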

| Method | P (%) | R (%) | mAP@0.5 (%) | Parameters (M) | GFLOPs |
|---|---|---|---|---|---|
| DEIM | 86.3 | 85.6 | 86.2 | 8.1 | 7.5 |
| DEIM + CBAM | 81.7 | 79.8 | 82.2 | 9.1 | 8.6 |
| DEIM + ECA | 83.7 | 81.4 | 86.3 | 10.7 | 9.7 |
| DEIM + SSA | 83.9 | 80.5 | 85.2 | 8.1 | 7.6 |
| DEIM + FCA | 88.9 | 87.6 | 88.6 | 10.4 | 9.5 |

| ID | FCA | PConv | HWDown | P (%) | R (%) | mAP@0.5 (%) | Parameters (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| 1 | × | × | × | 86.3 | 85.6 | 86.2 | 8.1 | 7.5 |
| 2 | √ | × | × | 87.9 | 87.8 | 88.5 | 9.4 | 10.2 |
| 3 | × | √ | × | 85.8 | 84.7 | 85.4 | 4.6 | 4.3 |
| 4 | × | × | √ | 87.8 | 87.7 | 87.4 | 8.6 | 9.2 |
| 5 | √ | √ | × | 88.6 | 89.7 | 88.9 | 8.2 | 10.5 |
| 6 | √ | × | √ | 86.5 | 88.8 | 88.9 | 10.4 | 11.2 |
| 7 | × | √ | √ | 89.2 | 88.1 | 88.2 | 5.2 | 4.9 |
| 8 | √ | √ | √ | 89.8 | 87.7 | 89.4 | 7.2 | 7.1 |
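The net effect of the full configuration (row 8) relative to the DEIM baseline (row 1) is simple arithmetic on the ablation table. The helper below computes absolute and relative changes using only values copied from the table; `relative_change` is an illustrative name.

```python
def relative_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`; negative values are reductions."""
    return (after - before) / before * 100.0


# Values copied from rows 1 (DEIM baseline) and 8 (FCA + PConv + HWDown) of the ablation table.
baseline = {"mAP": 86.2, "params_M": 8.1, "GFLOPs": 7.5}
full = {"mAP": 89.4, "params_M": 7.2, "GFLOPs": 7.1}

for metric in baseline:
    delta = full[metric] - baseline[metric]
    pct = relative_change(baseline[metric], full[metric])
    print(f"{metric}: {delta:+.1f} absolute, {pct:+.1f}% relative")
```

This yields +3.2 mAP points (+3.7% relative), 11.1% fewer parameters, and 5.3% fewer GFLOPs for the full model.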

| Method | P (%) | R (%) | mAP@0.5 (%) | Parameters (M) | GFLOPs |
|---|---|---|---|---|---|
| Faster R-CNN | 68.2 | 59.4 | 67.8 | 136.7 | 18.7 |
| SSD | 74.2 | 68.7 | 75.4 | 25.1 | 74.8 |
| YOLOv5-n | 83.1 | 76.0 | 86.1 | 8.2 | 16.5 |
| YOLOv7-n | 85.1 | 76.1 | 84.0 | 6.1 | 13.1 |
| YOLOv8-n | 83.8 | 76.7 | 86.2 | 11.2 | 28.6 |
| YOLOv10-n | 81.5 | 76.3 | 84.6 | 9.1 | 21.6 |
| D-FINE-n | 84.7 | 83.2 | 84.7 | 22.5 | 18.8 |
| DEIM-D-FINE-n | 86.3 | 85.6 | 86.2 | 8.1 | 7.5 |
| FPH-DEIM-n | 89.8 | 87.7 | 89.4 | 7.2 | 7.1 |

| Component | Description |
|---|---|
| Main Control Board | NVIDIA Jetson Xavier NX |
| Operating System | Ubuntu 20.04 + JetPack 5.0 |
| Camera | FLIR Blackfly S underwater industrial camera (1080p, 30 fps) |
| Communication | Acoustic modem + Wi-Fi feedback |
| Power System | 14.8 V lithium battery pack, rated at 10 Ah |
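The power-system entry bounds the platform's endurance: 14.8 V × 10 Ah = 148 Wh of nominal energy. The sketch below turns that into an idealized runtime estimate; the average power draw and the usable fraction of capacity are hypothetical inputs, since the measured consumption of the board, camera, and modem is not reported here.

```python
def runtime_hours(voltage_v: float, capacity_ah: float, avg_power_w: float,
                  usable_fraction: float = 1.0) -> float:
    """Idealized endurance: usable battery energy (Wh) divided by average draw (W).

    avg_power_w and usable_fraction are hypothetical inputs; conversion losses
    and temperature effects are ignored.
    """
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    energy_wh = voltage_v * capacity_ah * usable_fraction
    return energy_wh / avg_power_w
```

With a hypothetical average draw of 20 W, `runtime_hours(14.8, 10, 20)` gives about 7.4 h at full nominal capacity, or about 5.9 h if only 80% of the capacity is usable.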

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Q.; Song, W. FPH-DEIM: A Lightweight Underwater Biological Object Detection Algorithm Based on Improved DEIM. Appl. Syst. Innov. 2025, 8, 123. https://doi.org/10.3390/asi8050123