A Systematic Review of Responsible Artificial Intelligence Principles and Practice

Abstract

1. Introduction

2. Systematic Review Methodology

2.1. Search Strategy

2.2. Data Sources

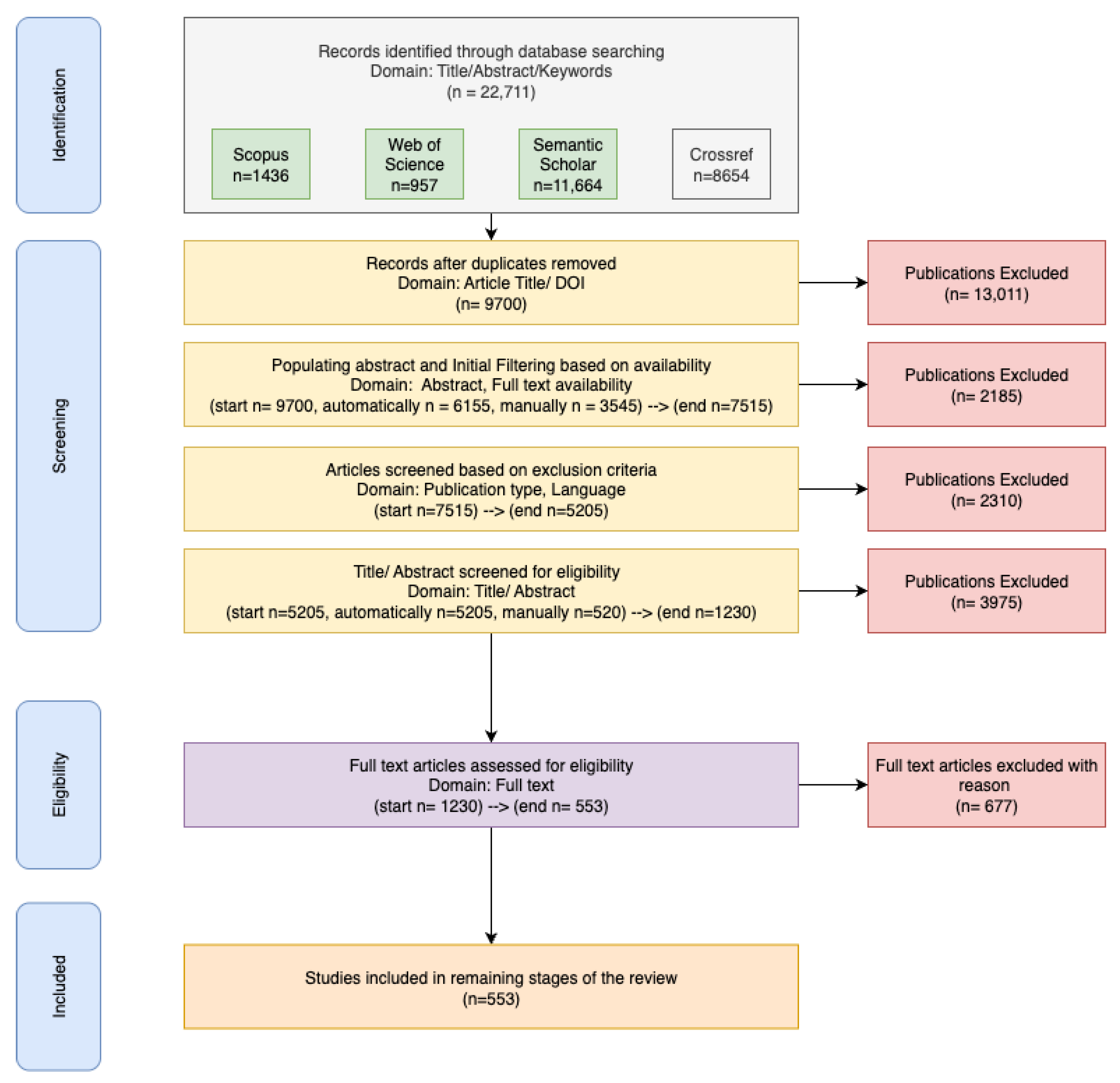

2.3. Study Selection

2.3.1. Stage 1: Initial Search and De-Duplication

2.3.2. Stage 2: Abstract Search and Screening

2.3.3. Stage 3: LLM-Assisted Semantic Screening

2.3.4. Stage 4: Full-Text Screening

2.4. Data Extraction and Analysis

- Publication details: Title, authorship, publication year, and source venue;

- Study characteristics: Publication format (journal article or conference proceeding), publishing house, and bibliometric data;

- Terminology and definitions: Explicit definitions of “responsible AI,” “AI responsibility,” and associated concepts where available;

- Framework identification: Recognition of responsible AI frameworks, principles, guidelines, or regulatory standards examined;

- Principle mapping: Identification of specific responsible AI principles discussed;

- Application context: Practical implementations, organizational barriers, evaluation methodologies, or governance mechanisms;

- Sectoral focus: Particular industries or application domains where responsible AI concepts are implemented.
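
The extraction fields listed above can be captured in a single record type, one instance per included study. The following is a minimal sketch, not the authors' actual extraction form: the class name `ExtractionRecord`, every field name, and the choice of `citation_count` as the bibliometric field are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """One row of a data-extraction sheet (hypothetical field names)."""

    # Publication details
    title: str
    authors: List[str]
    year: int
    venue: str
    # Study characteristics
    publication_format: str  # "journal article" or "conference proceeding"
    publisher: Optional[str] = None
    citation_count: Optional[int] = None  # assumed example of bibliometric data
    # Terminology and definitions (verbatim definitions, where available)
    definitions: List[str] = field(default_factory=list)
    # Framework identification
    frameworks: List[str] = field(default_factory=list)
    # Principle mapping
    principles: List[str] = field(default_factory=list)
    # Application context
    application_context: List[str] = field(default_factory=list)
    # Sectoral focus
    sectors: List[str] = field(default_factory=list)

    def has_explicit_definition(self) -> bool:
        """True when the study gives at least one explicit definition."""
        return bool(self.definitions)


# Placeholder values only; this is not a study from the review.
example = ExtractionRecord(
    title="Example title",
    authors=["A. Author"],
    year=2023,
    venue="Example venue",
    publication_format="journal article",
    principles=["transparency", "fairness"],
)
```

Keeping the list-valued fields empty by default reflects the "where available" wording of the list: a study that offers no explicit definition still yields a valid record.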

3. Finding 1: Topics and Themes in Review Results

4. Finding 2: Foundations of Responsibility

5. Finding 3: Responsibility in AI

6. Finding 4: The Need for Responsible AI

7. Finding 5: Principles of Responsible AI

7.1. Transparency and Explainability

7.2. Fairness and Algorithmic Bias

7.3. Privacy and Data Protection

7.4. Robustness and Reliability

7.5. Accountability

7.6. Human Agency and Oversight

7.7. Socially Beneficial

7.8. Other Principles

8. Finding 6: Responsible AI in Practice

8.1. Responsible AI in Healthcare

8.2. Responsible AI in Education

9. Discussion

10. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Component | Description |
|---|---|
| Research Questions | (1) What is the current state of responsible AI principles and practice? (2) What are the foundations of responsibility? (3) How do these foundations define responsibility in AI? (4) What factors drive the need for responsible AI? (5) What are the principles of responsible AI? and (6) How do these principles translate into the practice of responsible AI? |
| Databases | Scopus, Web of Science, Semantic Scholar, CrossRef (search conducted in March 2025) |
| Search Query | (“Responsible AI” OR “Responsible artificial intelligence” OR “Responsible machine learning” OR “Responsible ML” OR “AI responsibility” OR “Responsibility in AI”) AND (Principles OR Application OR Frameworks OR Guidelines OR Implementations OR Challenges OR Assessment OR Governance OR “Regulatory compliance” OR “Future directions”). |
| Search Strategy | Peer-reviewed journal articles and conference proceedings; published between 2020 and 2025; search terms in title, abstract, and keywords; English language only. |
| Inclusion Criteria | (1) Peer-reviewed journal articles and conference proceedings published in English; (2) studies published between 2020 and 2025 (inclusive); (3) studies that explicitly address responsible AI concepts, frameworks, principles, or implementations; (4) studies providing theoretical frameworks, empirical evidence, practical guidelines, or case studies related to responsible AI; (5) studies focusing on AI ethics, fairness, transparency, accountability, or related responsible AI principles; (6) studies with accessible full-text content. |
| Exclusion Criteria | (1) Duplicate publications across databases; (2) non-peer-reviewed publications (blog posts, white papers, thesis submissions); (3) studies without available abstracts or full-text access; (4) studies that only mention responsible AI tangentially without substantial focus; (5) studies in languages other than English; (6) studies published before 2020. |
| Quality Assessment | (1) Only peer-reviewed publications indexed in major academic databases; (2) studies with adequate academic rigor and clear methodology; (3) LLM-assisted semantic filtering with high inter-rater reliability. |
| Analysis Method | BERTopic modeling for thematic analysis; narrative synthesis of findings. |
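
The search query and the metadata-level inclusion criteria in the table above lend themselves to a short sketch. The code below rebuilds the Boolean query from its two term lists and applies the criteria that can be checked from metadata alone (year range, language, publication type, abstract availability). It is an illustration, not the authors' pipeline: the function names and the record keys (`year`, `language`, `type`, `abstract`) are hypothetical, and database-specific field tags for title, abstract, and keyword search are left out because each database uses its own syntax.

```python
from typing import Mapping, Sequence

# Term lists copied from the Search Query row of the table.
RAI_TERMS = [
    "Responsible AI",
    "Responsible artificial intelligence",
    "Responsible machine learning",
    "Responsible ML",
    "AI responsibility",
    "Responsibility in AI",
]
ASPECT_TERMS = [
    "Principles", "Application", "Frameworks", "Guidelines",
    "Implementations", "Challenges", "Assessment", "Governance",
    "Regulatory compliance", "Future directions",
]


def _fmt(term: str) -> str:
    """Quote multi-word phrases so they are searched as exact phrases."""
    return f'"{term}"' if " " in term else term


def build_query(left: Sequence[str] = RAI_TERMS,
                right: Sequence[str] = ASPECT_TERMS) -> str:
    """Join each list with OR and combine the two groups with AND."""
    left_block = " OR ".join(_fmt(t) for t in left)
    right_block = " OR ".join(_fmt(t) for t in right)
    return f"({left_block}) AND ({right_block})"


ALLOWED_TYPES = {"journal article", "conference proceeding"}


def passes_metadata_screen(record: Mapping[str, object]) -> bool:
    """Check the inclusion criteria that need no reading of the text."""
    year = record.get("year")
    return (
        isinstance(year, int)
        and 2020 <= year <= 2025
        and record.get("language") == "English"
        and record.get("type") in ALLOWED_TYPES
        and bool(record.get("abstract"))
    )
```

Criteria that depend on content, such as whether a study addresses responsible AI substantially or only tangentially, cannot be decided from metadata and are left to the later screening stages.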

| Topic | Keywords | Article Count | Percentage |
|---|---|---|---|
| Topic 0: AI in Healthcare and Digital Medicine | Healthcare, digital health, patient care, decision support, medical education, physicians | 120 | 22% |
| Topic 1: Responsible AI Principles and Stakeholder Governance | RAI principles, stakeholder engagement, governance, software engineering, capabilities, agents, RAI tools | 61 | 11.2% |
| Topic 2: ChatGPT and Academic Integrity in Education | ChatGPT education, academic integrity, student assessment, higher education, education, educators, academic, students | 53 | 9.7% |
| Topic 3: Transparency, Accountability, and Human Rights in AI | Accountability, transparency, intelligibility, human rights, privacy, governance, decision making | 47 | 8.6% |
| Topic 4: AI-driven Finance, Regulation, and Corporate Accountability | Financial regulation, corporate digital, auditing, compliance, accountability | 44 | 8.1% |
| Topic 5: Generative AI, Creativity, and Intellectual Property | Generative applications, adversarial, creativity, natural language, infringement, copyright | 41 | 7.5% |
| Topic 6: Moral Agency, Accountability, and AI | Moral judgments, judgement, moral agency, human agents, accountability, robots | 36 | 6.6% |
| Topic 7: Explainable and Interpretable AI (XAI) | Explainable XAI, interpretability, algorithmic, explanations | 24 | 4.4% |
| Topic 8: National AI Strategies and Policy Governance | Governance policy, national strategies, national policies, governments | 23 | 4.2% |
| Topic 9: Sustainable AI for Agriculture and the Environment | Smart farming, environmental sustainability, IoT, precision agriculture, environmental conservation, sustainable business, sustainable goals, renewable energy | 21 | 3.9% |
| Topic 10: Legal and Judicial Frameworks for AI | Law, International law, legal liability, judicial, predictive justice, legal operation | 13 | 2.4% |
| Topic 11: Algorithmic Bias, Privacy, and Human–AI Collaboration | Algorithmic bias, human AI collaboration, trust software, humanitarian, human ai, humanitarian actors | 12 | 2.2% |
| Topic 12: AI-Driven Cybersecurity and the Metaverse | Cybersecurity, semantic metaverse, cyber threats, virtual reality | 9 | 1.7% |
| Topic 13: Designing for Trust and Trustworthiness in AI | Trustworthiness, trust design, trust judgments, evaluate trust | 6 | 1.1% |
| Topic 14: Federated and Privacy Preserving AI | Privacy federated, privacy preserving, federated learning, data privacy | 4 | 0.7% |
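
A reader who wants to check the Percentage column against the Article Count column can do so with a little arithmetic. The sketch below sums the listed counts and back-solves the denominator implied by each count and percentage pair. The table does not state which denominator was used, so the value computed here is an inference from the reported figures, not a number given by the authors. The variable names are illustrative.

```python
from statistics import median

# (topic id, article count, reported percentage), copied from the table.
TOPICS = [
    (0, 120, 22.0), (1, 61, 11.2), (2, 53, 9.7), (3, 47, 8.6),
    (4, 44, 8.1), (5, 41, 7.5), (6, 36, 6.6), (7, 24, 4.4),
    (8, 23, 4.2), (9, 21, 3.9), (10, 13, 2.4), (11, 12, 2.2),
    (12, 9, 1.7), (13, 6, 1.1), (14, 4, 0.7),
]

# Number of articles assigned to the fifteen listed topics.
assigned_total = sum(count for _, count, _ in TOPICS)

# Each row implies a denominator: count / (percentage / 100).
# Rounding in the reported percentages makes small topics noisy,
# so the median is used as a robust summary.
implied_totals = [count / (pct / 100) for _, count, pct in TOPICS]
implied_total = round(median(implied_totals))

# Recompute every percentage with the implied denominator and compare.
recomputed = [round(100 * count / implied_total, 1) for _, count, _ in TOPICS]
all_match = all(r == pct for r, (_, _, pct) in zip(recomputed, TOPICS))

print(assigned_total, implied_total, all_match)
```

If the implied denominator turns out larger than the sum of the listed counts, one possible reading (an assumption, not something the table confirms) is that the percentages were computed over a corpus that also contains articles not assigned to any of the fifteen topics.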


© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gunasekara, L.; El-Haber, N.; Nagpal, S.; Moraliyage, H.; Issadeen, Z.; Manic, M.; De Silva, D. A Systematic Review of Responsible Artificial Intelligence Principles and Practice. Appl. Syst. Innov. 2025, 8, 97. https://doi.org/10.3390/asi8040097
