A Privacy-Preserving Record Linkage Method Based on Secret Sharing and Blockchain

Abstract

1. Introduction

- (1) We apply secret sharing techniques to data encoded with Bloom filters, introducing masking and random permutation to decompose similarity computation into basic operations that each computation node can perform locally and independently. Nodes need only a single round of interaction, in which they share masked differences. This significantly reduces communication and computational overhead compared with computationally intensive approaches such as those based on homomorphic encryption, and it strengthens resilience against collusion attacks.

- (2)

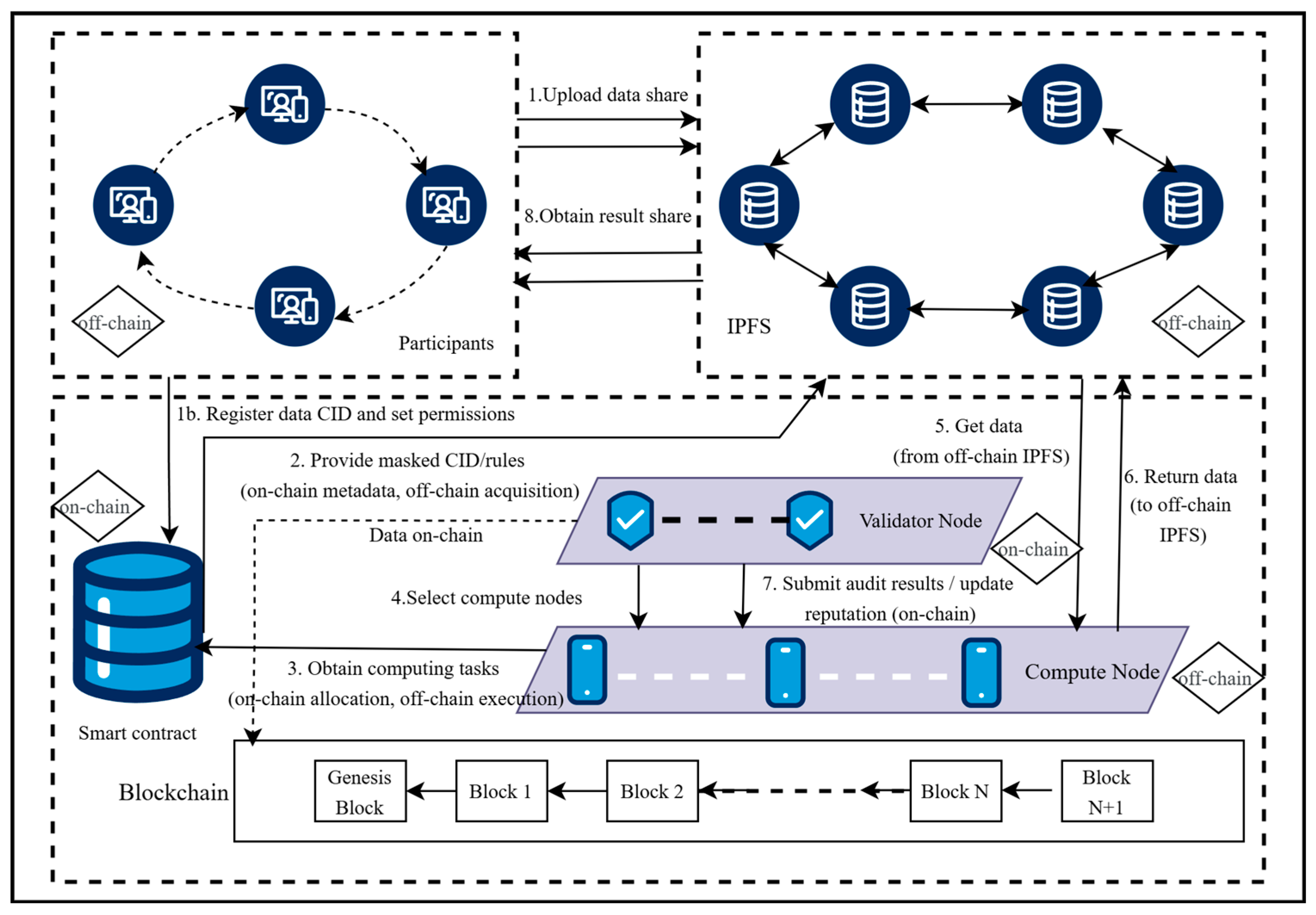

- Addressing the trust issues of third parties and participants in existing PPRL methods, we utilize blockchain smart contracts to manage the entire PPRL process (including verifiable mask generation, task allocation, and node management) and enable trusted auditing. Combined with IPFS for efficient data storage and distribution, the use of tamper-proof records and automated rule execution enhances process transparency and the trustworthiness of computation nodes.

- (3) Through theoretical analysis and experimental validation, we demonstrate that this method ensures data privacy and security while achieving high linkage quality and good scalability, making it suitable for large-scale multi-party collaboration scenarios.

2. Related Works

3. Preliminaries and Background

3.1. Blockchain

3.2. Smart Contracts

3.3. InterPlanetary File System

3.4. Bloom Filters

3.5. Secret Sharing

3.6. Hamming Distance

4. Methods

4.1. System Architecture

4.2. Data Preparation and Generation Module

4.2.1. Data Encoding

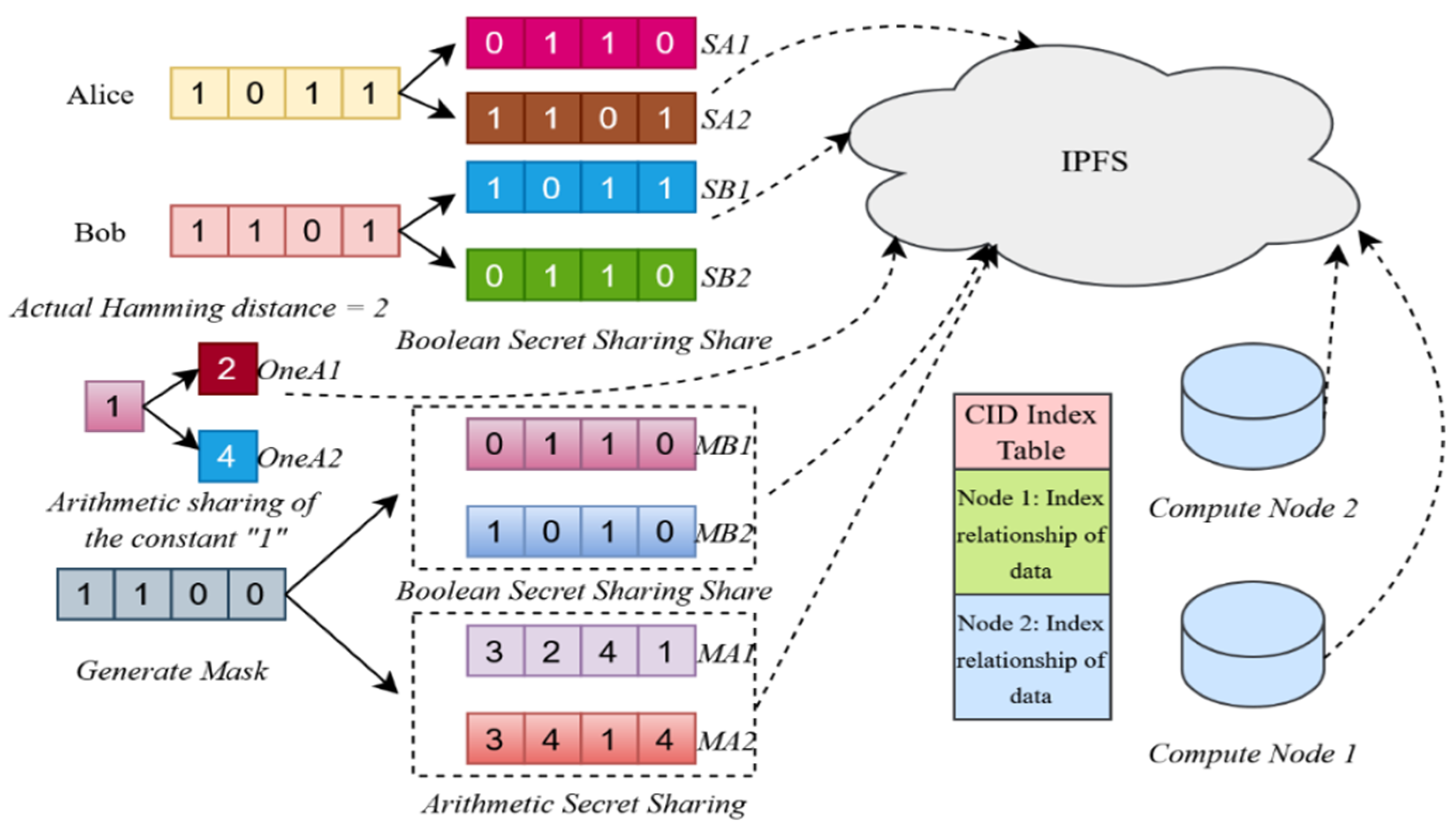

4.2.2. Share Generation of Boolean Secret Sharing

- Determine the number of shares $t$ (a system parameter), randomly generating the first $t-1$ shares and adjusting the last to satisfy the XOR condition.

- For $i = 1$ to $t-1$, randomly generate a uniformly distributed share, introducing randomness that conceals frequency patterns.

- For Alice, set the last share to the XOR of her Bloom filter with the first $t-1$ shares (the same applies to Bob), ensuring that the XOR of all $t$ shares yields the original Bloom filter.

- The generated shares form the share sets of Alice and Bob, which are uploaded to IPFS, with the resulting CIDs stored on the blockchain.
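The share generation steps above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names `xor_share` and `xor_reconstruct` are hypothetical, the Bloom filter is modeled as a plain list of bits, and the IPFS upload and on-chain CID storage are omitted.

```python
import secrets
from functools import reduce


def xor_share(bits, t):
    """Split a bit vector into t Boolean shares whose XOR equals the input."""
    if t < 2:
        raise ValueError("at least two shares are required")
    m = len(bits)
    # The first t-1 shares are uniformly random bit vectors.
    shares = [[secrets.randbelow(2) for _ in range(m)] for _ in range(t - 1)]
    # The last share is fixed so that all t shares XOR back to the input.
    last = list(bits)
    for share in shares:
        last = [a ^ b for a, b in zip(last, share)]
    shares.append(last)
    return shares


def xor_reconstruct(shares):
    """XOR the shares position by position to recover the original bits."""
    return [reduce(lambda a, b: a ^ b, column) for column in zip(*shares)]


# Toy Bloom filter for one attribute (assumed values, for illustration only).
alice_bf = [1, 0, 1, 1, 0, 0, 1, 0]
alice_shares = xor_share(alice_bf, t=3)
recovered = xor_reconstruct(alice_shares)
```

Any proper subset of the shares is a set of uniformly random bit vectors, so the original filter only becomes visible once all shares are combined.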

4.2.3. Mask Generation and Data Distribution

- Record Pair Grouping: The record pairs are divided into $k$ groups using deterministic hashing, where $k$ is either derived from the number of record pairs or set to a fixed value, balancing security and efficiency.

- Mask Generation: For each group and attribute, a mask of length $m$ (matching the Bloom filter length) is generated using a Verifiable Random Function (VRF) keyed to that group and attribute, ensuring randomness and fairness.

- Share Decomposition:
  - Boolean shares: the mask is decomposed into $t$ shares by generating $t-1$ random Boolean shares and computing the last so that the XOR of all $t$ shares equals the mask.
  - Arithmetic shares: the mask is decomposed into $t$ shares by generating $t-1$ random arithmetic shares and computing the last so that all $t$ shares sum to the mask.
  - Constant-1 shares: to support Boolean-to-arithmetic (B2A) conversion, $t$ arithmetic shares of the constant 1 are produced by randomly generating $t-1$ shares and computing the last so that all $t$ shares sum to 1.

- Storage and Management: The generated Boolean shares, arithmetic shares, and constant-1 shares are uploaded to IPFS, obtaining CIDs. These CIDs, along with the CIDs of the secret shares uploaded during the data preparation phase, are recorded and managed by smart contracts (e.g., mask management or data distribution contracts) to ensure efficient and secure data distribution.
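The three kinds of mask shares described above can be illustrated with the sketch below. It is written under stated assumptions: the ring size `MODULUS` for arithmetic shares is an assumed value that the text does not fix, the helper names are hypothetical, and a hard-coded mask stands in for the VRF output.

```python
import secrets

MODULUS = 2**32  # assumed ring for arithmetic shares; not specified in the text


def boolean_shares(mask, t):
    """t bit-vector shares whose position-wise XOR equals the mask."""
    if t < 2:
        raise ValueError("at least two shares are required")
    shares = [[secrets.randbelow(2) for _ in mask] for _ in range(t - 1)]
    last = list(mask)
    for share in shares:
        last = [a ^ b for a, b in zip(last, share)]
    return shares + [last]


def arithmetic_shares(values, t, modulus=MODULUS):
    """t integer-vector shares whose position-wise sum (mod modulus) equals values."""
    if t < 2:
        raise ValueError("at least two shares are required")
    shares = [[secrets.randbelow(modulus) for _ in values] for _ in range(t - 1)]
    last = [(v - sum(col)) % modulus for v, col in zip(values, zip(*shares))]
    return shares + [last]


mask = [1, 0, 1, 1]                    # stand-in for a VRF-generated mask
mask_bool = boolean_shares(mask, t=3)  # Boolean shares of the mask
mask_arith = arithmetic_shares(mask, t=3)              # arithmetic shares of the mask
const_one = arithmetic_shares([1] * len(mask), t=3)    # shares of the constant 1
```

Sharing the same mask in both Boolean and arithmetic form is what later allows a publicly reconstructed masked bit to be converted into an arithmetic share without further interaction.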

4.2.4. Compute Node Selection

- Selection Process:

- Weighted Scoring: The task requester specifies the number of nodes required and a security level (high, medium, or low). Validation nodes compute each node's weighted score as a weighted combination of its reputation, computational power, and task completion rate. The three weights satisfy a joint constraint and can be adjusted through participant negotiation.

- Random Adjustment: To ensure that new nodes have a chance to participate, a Verifiable Random Function (VRF) adds a random offset to each score. The VRF input is collectively generated by validation nodes to ensure fairness, and a scaling parameter controls the magnitude of the random offset.

- Grouping and Selection: Nodes are divided into four groups based on reputation:
  - high reputation;
  - medium reputation;
  - low reputation;
  - new nodes.

  The proportion of nodes drawn from each group is set according to the security level, and the group sizes are calculated from these proportions and the requested number of nodes.

- If the group sizes total less than the requested number of nodes, a group size is increased to make up the difference. After verifying node availability, nodes are selected from each group in descending order of adjusted score, ensuring that the total number of selected nodes equals the requested number.
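A rough sketch of the scoring and quota logic is given below. Every numeric value in it (weights, offset scale, group proportions) is an illustrative assumption, not a value from the paper, and the SHA-256-based helper only imitates the determinism of a VRF without providing its verifiability. Topping up the first group on a shortfall is likewise an assumption.

```python
import hashlib
import math

# Illustrative assumptions only; the paper's actual values are not reproduced here.
WEIGHTS = {"reputation": 0.5, "power": 0.3, "completion": 0.2}
OFFSET_SCALE = 0.05


def weighted_score(node):
    """Weighted combination of reputation, computing power, and completion rate."""
    return sum(WEIGHTS[key] * node[key] for key in WEIGHTS)


def pseudo_random_unit(seed, node_id):
    """Deterministic value in [0, 1); a hash-based stand-in, NOT a real VRF."""
    digest = hashlib.sha256(f"{seed}:{node_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def adjusted_score(node, seed):
    """Score plus a small seeded offset so that new nodes can still be picked."""
    return weighted_score(node) + OFFSET_SCALE * pseudo_random_unit(seed, node["id"])


def group_sizes(n_nodes, proportions):
    """Per-group quotas; any rounding shortfall is added to the first group."""
    sizes = [math.floor(n_nodes * p) for p in proportions]
    sizes[0] += n_nodes - sum(sizes)
    return sizes


def select(group, quota, seed):
    """Pick the top `quota` nodes of one group by adjusted score."""
    ranked = sorted(group, key=lambda node: adjusted_score(node, seed), reverse=True)
    return ranked[:quota]
```

Because the offset is derived from a shared seed, every validation node that runs the same selection obtains the same ranking.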

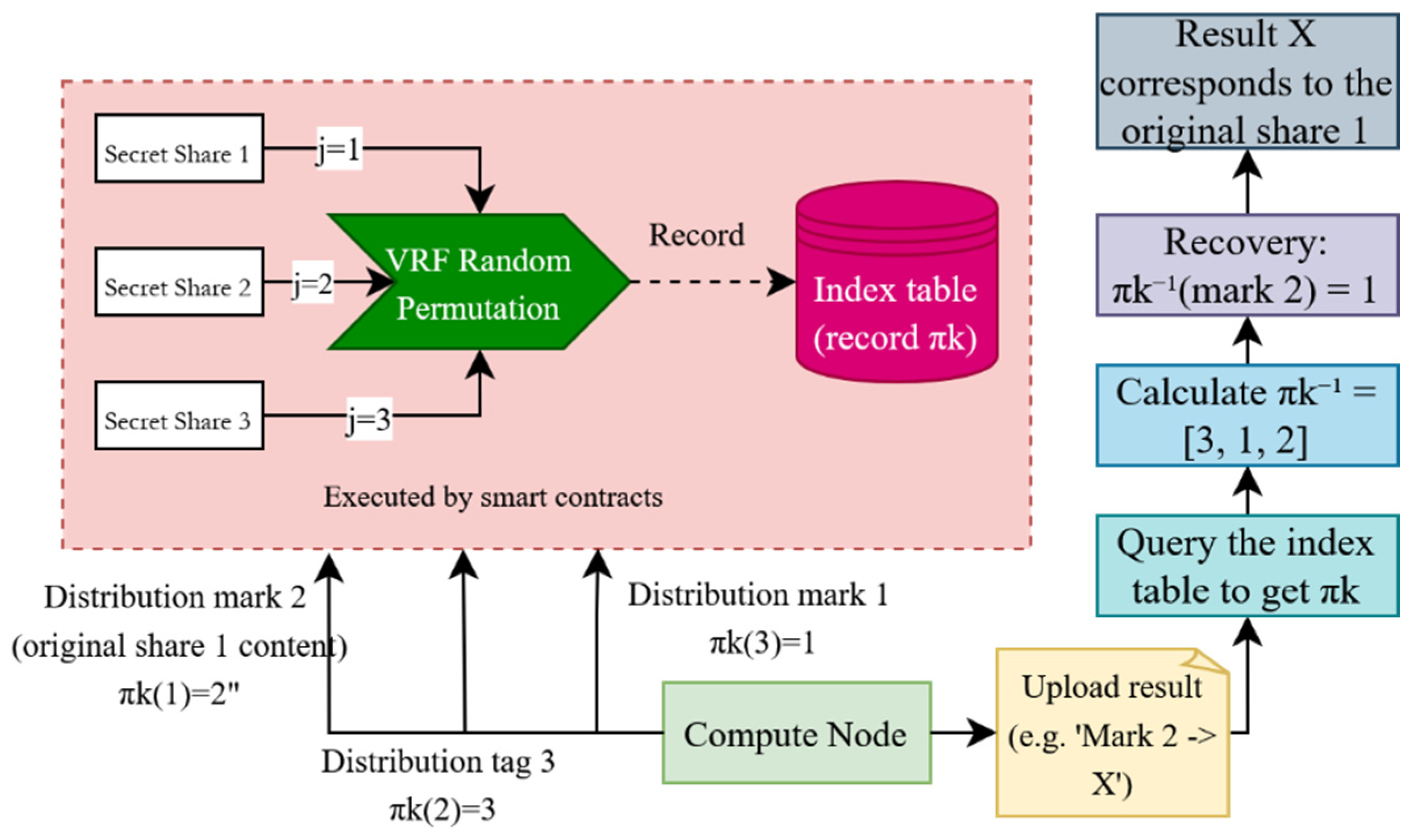

4.2.5. Data Distribution

| Algorithm 1 Data Distribution Algorithm |
|---|
| Input: record_pairs: List of record pairs to be processed; k: Number of mask groups; n: Number of computation nodes per record pair; total_nodes: Total selected computation nodes |
| Output: index_table: Mapping of tasks to computation nodes with CIDs and permutations |
| 1: for each record_pair in record_pairs do |
| 2: group_id ← DeterministicHash(record_pair) mod k |
| 3: selected_nodes ← VRFSelectNodes(total_nodes, n) |
| 4: for each node in selected_nodes do |
| 5: perm ← VRFGeneratePermutation(n, seed = (record_pair, node)) |
| 6: physical_share ← perm(1) |
| 7: task_key ← (group_id, record_pair, node, 1) |
| 8: task_value ← (GetCIDs(record_pair, group_id, physical_share), perm) |
| 9: index_table[task_key] ← task_value |
| 10: end for |
| 11: end for |
| 12: StoreIndexTableToBlockchain(index_table) |
| 13: return index_table |
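The control flow of Algorithm 1 can be approximated in Python as shown below. This is a sketch under assumptions: a SHA-256-seeded `random.Random` replaces the VRF (so nothing here is verifiable), CID lookup and the blockchain write are left out, and the helper names are hypothetical.

```python
import hashlib
import random


def _seed(*parts):
    """Derive a deterministic integer seed from the given parts."""
    data = "|".join(map(str, parts)).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def build_index_table(record_pairs, k, n, nodes):
    """Map each (group, record pair, node) task to a share permutation."""
    index_table = {}
    for pair in record_pairs:
        # Deterministic hashing assigns the pair to one of k mask groups.
        group_id = _seed("group", pair) % k
        # Seeded sampling stands in for VRF-based node selection.
        selected = random.Random(_seed("select", pair)).sample(nodes, n)
        for node in selected:
            # Seeded shuffle stands in for the VRF-generated permutation.
            perm = list(range(n))
            random.Random(_seed("perm", pair, node)).shuffle(perm)
            index_table[(group_id, pair, node)] = {
                "physical_share": perm[0],
                "perm": perm,
            }
    return index_table
```

Since every step is derived from the record pair and node identifiers, any party holding the same inputs can rebuild the table and check it against the stored copy.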

4.3. Approximate Matching Module

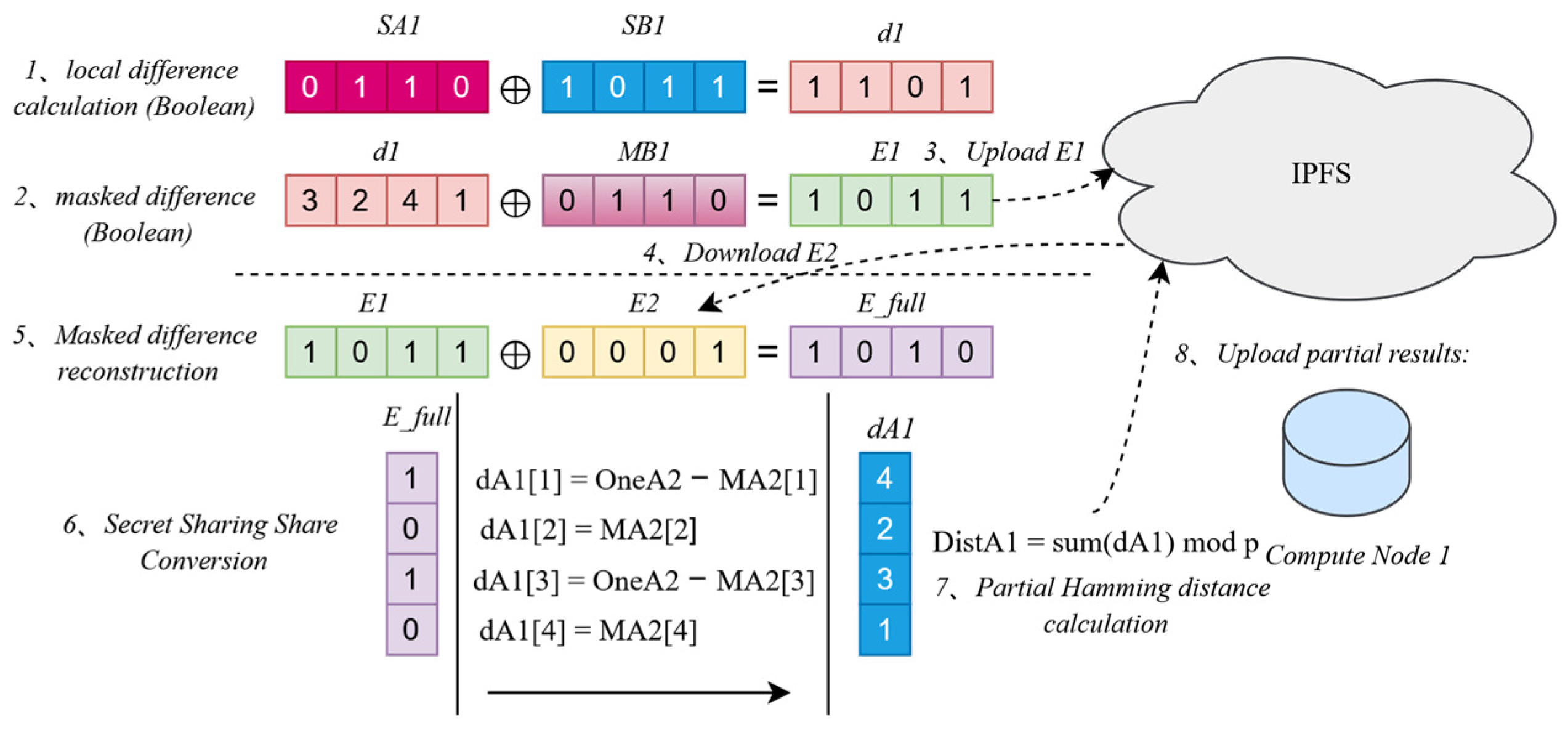

4.3.1. Local Difference Calculation and Masked Difference Vector Generation

4.3.2. Batch Interaction and Data Sharing

4.3.3. Masked Difference Reconstruction and Conversion

| Algorithm 2 Share And Reconstruct Masked Diff Algorithm |
|---|
| Input: masked_diff: Masked difference vector; n: Number of computation nodes; perm: Permutation function for data reordering |
| Output: full_masked_diff: Fully reconstructed masked difference |
| 1: cid_masked_diff ← UploadToIPFS(masked_diff) |
| 2: SubmitToSmartContract(task_id, node_id, cid_masked_diff) |
| 3: WaitForAllNodes(task_id, n) |
| 4: other_cids ← GetOtherNodesCIDs(task_id, n) |
| 5: other_masked_diffs ← [DownloadFromIPFS(cid) for cid in other_cids] |
| 6: Reorder(other_masked_diffs, perm) |
| 7: full_masked_diff ← masked_diff |
| 8: for j ← 1 to n − 1 do |
| 9: for i ← 1 to m do |
| 10: full_masked_diff[i] ← full_masked_diff[i] XOR other_masked_diffs[j][i] |
| 11: end for |
| 12: end for |
| 13: return full_masked_diff |
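The XOR reconstruction at the core of Algorithm 2 can be checked on a toy example. The sketch below assumes that each node forms its masked difference share by XOR-ing its share of Alice's filter, its share of Bob's filter, and its Boolean mask share; the hard-coded shares are illustrative, and IPFS, smart-contract calls, and reordering are omitted.

```python
from functools import reduce


def local_masked_diff(a_share, b_share, mask_share):
    """One node's share of (Alice XOR Bob XOR mask), computed locally."""
    return [a ^ b ^ r for a, b, r in zip(a_share, b_share, mask_share)]


def reconstruct(masked_diffs):
    """XOR all nodes' masked difference shares position by position."""
    return [reduce(lambda x, y: x ^ y, column) for column in zip(*masked_diffs)]


# Hand-picked shares for three nodes (toy values, for illustration only).
alice_shares = [[1, 1, 0, 0], [0, 1, 1, 0], [0, 0, 0, 1]]  # XOR = [1, 0, 1, 1]
bob_shares = [[0, 1, 1, 1], [1, 1, 1, 0], [0, 1, 0, 0]]    # XOR = [1, 1, 0, 1]
mask_shares = [[1, 0, 0, 1], [1, 1, 0, 1], [1, 0, 0, 0]]   # XOR = [1, 1, 0, 0]

per_node = [
    local_masked_diff(a, b, r)
    for a, b, r in zip(alice_shares, bob_shares, mask_shares)
]
full_masked_diff = reconstruct(per_node)  # equals (Alice XOR Bob) XOR mask
```

The reconstructed vector is the true difference `[0, 1, 1, 0]` hidden under the mask `[1, 1, 0, 0]`, so publishing it does not by itself reveal where the two filters differ.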

4.3.4. Hamming Distance Calculation and Result Upload

| Algorithm 3 B2A Conversion And Hamming Distance Algorithm |
|---|
| Input: full_masked_diff: Fully reconstructed masked difference; mask_arith_share: Arithmetic mask share; const_one_share: Arithmetic share of constant one; m: Length of Bloom filter |
| Output: partial_hamming_share: Partial Hamming distance share |
| 1: arith_diff ← [] |
| 2: for i ← 1 to m do |
| 3: if full_masked_diff[i] = 0 then |
| 4: arith_diff.append(mask_arith_share[i]) |
| 5: else |
| 6: arith_diff.append(const_one_share[i] - mask_arith_share[i]) |
| 7: end if |
| 8: end for |
| 9: partial_hamming_share ← 0 |
| 10: for i ← 1 to m do |
| 11: partial_hamming_share ← partial_hamming_share + arith_diff[i] |
| 12: end for |
| 13: return partial_hamming_share |
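Algorithm 3 relies on the identity that a difference bit equals the mask bit when the masked bit is 0 and equals one minus the mask bit when the masked bit is 1. The sketch below works this through for three nodes; the ring size `MODULUS` and all share values are assumptions chosen for illustration.

```python
MODULUS = 2**32  # assumed ring for arithmetic shares; not specified in the text


def partial_hamming_share(full_masked_diff, mask_arith_share, const_one_share,
                          modulus=MODULUS):
    """One node's additive share of the Hamming distance (Algorithm 3)."""
    total = 0
    for e, r, one in zip(full_masked_diff, mask_arith_share, const_one_share):
        # e = d XOR mask, so d = mask if e == 0, else d = 1 - mask.
        total += r if e == 0 else one - r
    return total % modulus


# Toy setup: true difference [0, 1, 1, 0], mask [1, 1, 0, 0], masked [1, 0, 1, 0].
full_masked_diff = [1, 0, 1, 0]
mask_arith = [
    [5, 7, 2, 9],
    [10, 3, 4, 1],
    [(-14) % MODULUS, (-9) % MODULUS, (-6) % MODULUS, (-10) % MODULUS],
]  # column sums are [1, 1, 0, 0] modulo MODULUS
const_one = [[3] * 4, [4] * 4, [(-6) % MODULUS] * 4]  # column sums are 1

partials = [
    partial_hamming_share(full_masked_diff, r, one)
    for r, one in zip(mask_arith, const_one)
]
hamming_distance = sum(partials) % MODULUS  # 2 for this toy input
```

No single partial share reveals the distance; only the sum over all nodes yields the Hamming distance of 2 between the two toy filters.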

4.4. Output and Audit Module

4.4.1. Result Aggregation and Output

4.4.2. Audit and Verification

- Trigger and Sampling: Upon activation, validation nodes use a Verifiable Random Function to randomly select a portion of the result shares for verification.

- Data Retrieval: For each sampled share, validation nodes query the blockchain's index table to obtain the relevant CIDs and download the following from IPFS: the sampled share itself, the corresponding Bloom filter Boolean shares of both parties, the mask Boolean shares, the mask arithmetic shares, the constant-1 arithmetic shares, and the permutation data.

- Recomputation: Using the retrieved input and mask shares, validation nodes independently recompute the core steps of the approximate matching module to generate a reference value.

- Verification: Validation nodes compare the uploaded share with the recomputed reference value. If the two are equal, the share passes verification; otherwise, it is marked as incorrect. The trustworthiness of a computing node's task execution is determined from the verification results of all its sampled shares [31].
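The sample-recompute-compare loop described above might look like the following sketch. It makes several assumptions: a SHA-256 ranking replaces the Verifiable Random Function, `recompute` is a hypothetical callback standing for the validation node's own re-execution of the matching steps, and data retrieval from IPFS is not modeled.

```python
import hashlib


def sample_for_audit(share_ids, sample_rate, seed):
    """Deterministically pick a fraction of share ids (hash stand-in for a VRF)."""
    ranked = sorted(
        share_ids,
        key=lambda sid: hashlib.sha256(f"{seed}:{sid}".encode()).hexdigest(),
    )
    count = max(1, round(len(ranked) * sample_rate))
    return ranked[:count]


def audit(uploaded, recompute, sample_rate, seed):
    """Compare each sampled uploaded share with its recomputed reference value."""
    results = {}
    for sid in sample_for_audit(list(uploaded), sample_rate, seed):
        reference = recompute(sid)
        results[sid] = "Verified" if reference == uploaded[sid] else "Failed"
    return results


# Toy data: share "s3" was tampered with by its computing node.
uploaded = {"s1": 15, "s2": 7, "s3": 99}
reference_values = {"s1": 15, "s2": 7, "s3": 42}
results = audit(uploaded, reference_values.__getitem__, sample_rate=1.0, seed="round-1")
```

With a sample rate below 1.0 only a subset is checked, so the chance of catching a single faulty share is proportional to the rate.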

4.4.3. Reputation Management

- Reputation Score Update: The reputation score, initialized at 0, is updated based on task outcomes. It increases for a correct task, decreases for an incorrect task, and is penalized more heavily for severe malicious behavior (e.g., data tampering). Consistency is also taken into account: three consecutive correct tasks earn an additional bonus, and two consecutive incorrect tasks incur an additional penalty.

- Computing Power Update: The computing power score, initialized at 0, is periodically updated via off-chain tests (e.g., benchmarks) and increases with hardware performance.

- Task Completion Rate Update: The task completion rate, initialized at 0, reflects historical reliability and is calculated from the node's task history.
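The update rules above can be sketched as a small state machine. All magnitudes below are hypothetical placeholders, since the concrete increments are not reproduced in this text, and defining the completion rate as completed over assigned tasks is likewise an assumption.

```python
from dataclasses import dataclass

# Hypothetical magnitudes, chosen only to make the example concrete.
REWARD, PENALTY, SEVERE_PENALTY = 1.0, 2.0, 10.0
STREAK_BONUS, STREAK_PENALTY = 1.0, 2.0


@dataclass
class NodeRecord:
    reputation: float = 0.0
    correct_streak: int = 0
    incorrect_streak: int = 0
    completed: int = 0
    assigned: int = 0

    def record(self, outcome):
        """Apply one task outcome: 'correct', 'incorrect', or 'malicious'."""
        self.assigned += 1
        if outcome == "correct":
            self.completed += 1
            self.reputation += REWARD
            self.correct_streak += 1
            self.incorrect_streak = 0
            if self.correct_streak % 3 == 0:  # three consecutive correct tasks
                self.reputation += STREAK_BONUS
        elif outcome == "incorrect":
            self.reputation -= PENALTY
            self.incorrect_streak += 1
            self.correct_streak = 0
            if self.incorrect_streak % 2 == 0:  # two consecutive incorrect tasks
                self.reputation -= STREAK_PENALTY
        elif outcome == "malicious":
            self.reputation -= SEVERE_PENALTY
            self.correct_streak = 0
            self.incorrect_streak = 0
        else:
            raise ValueError(f"unknown outcome: {outcome}")

    @property
    def completion_rate(self):
        """Assumed definition: completed tasks divided by assigned tasks."""
        return self.completed / self.assigned if self.assigned else 0.0
```

For example, three `"correct"` outcomes in a row raise the reputation by three rewards plus one streak bonus under these placeholder values.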

4.4.4. Record Keeping and Provenance

| Algorithm 4 Audit And Reputation Management Algorithm |
|---|
| Input: task_id: Task identifier; sample_rate: Proportion of results to audit; index_table: Index table with CIDs; node_performance: Node historical performance data |
| Output: audit_results: Audit results with verification outcomes; updated_reputation: Updated reputation scores; blockchain_records: Stored records on blockchain |
| 1: sampled_shares ← RandomSample(partial_hamming_shares, sample_rate) |
| 2: for each share in sampled_shares do |
| 3: task_data ← GetTaskData(index_table, share) |
| 4: reference_share ← RecomputeShare(task_data) |
| 5: if reference_share == share then |
| 6: audit_results[share] ← “Verified” |
| 7: UpdateNodeReputation(node, outcome = “Success”) |
| 8: else |
| 9: audit_results[share] ← “Failed” |
| 10: UpdateNodeReputation(node, outcome = “Failure”) |
| 11: end if |
| 12: end for |
| 13: cid_audit ← UploadToIPFS(audit_results) |
| 14: cid_reputation ← UploadToIPFS(updated_reputation) |
| 15: return audit_results, updated_reputation, StoreToBlockchain(cid_audit, cid_reputation) |

5. Method Analysis

5.1. Complexity Analysis

5.2. Privacy Analysis

- If a majority of computation nodes are compromised: In terms of privacy, an attacker becomes more likely to collect all $t$ shares of a record, but must still overcome the $t!$ possible orderings introduced by random permutation. In terms of result integrity, if most computation nodes assigned to a specific task are malicious, they could collude to submit erroneous results, leaving the validation nodes' sampling audit as the main safeguard.

- If a majority of validation nodes are compromised: This would destroy the system’s core trust mechanism, as the audit and reputation systems (maintained by the audit verification and reputation management smart contracts) would fail.

- If both types of nodes are compromised on a large scale: This is a catastrophic scenario. Attackers could submit malicious data and have it “legitimized” by compromised validation nodes, causing the defense system built by various smart contracts and IPFS to face systemic failure. The immutability of the blockchain would only preserve a trace for post-mortem analysis.

5.3. Linkage Quality Analysis

6. Experimental Results

6.1. Experiment Preparation

6.2. Experimental Results and Analysis

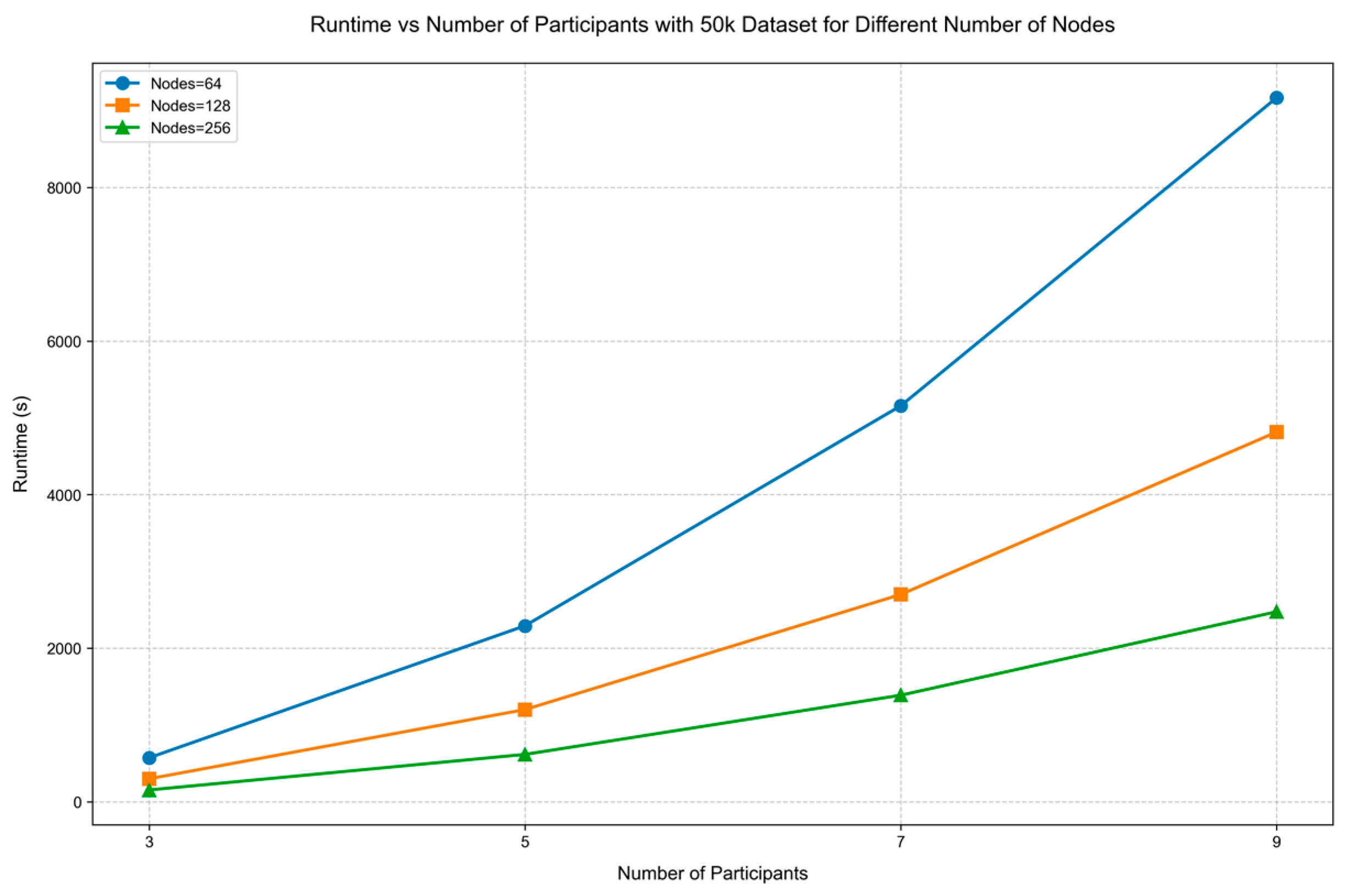

6.2.1. Scalability Assessment

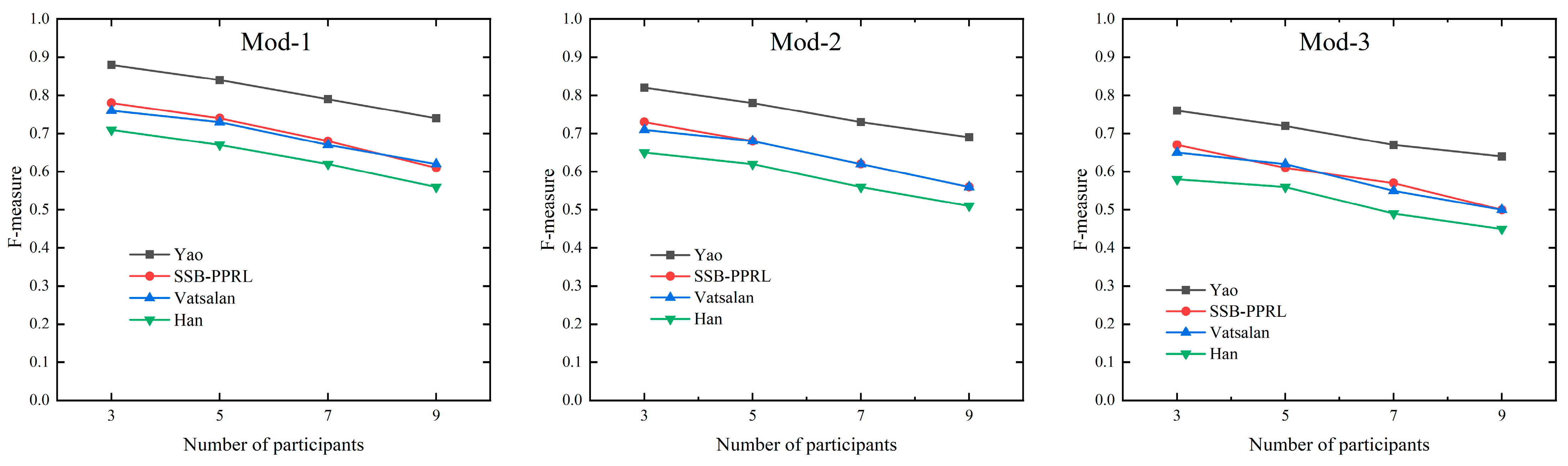

6.2.2. Method Performance Evaluation

6.2.3. Security Evaluation

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Sayers, A.; Ben-Shlomo, Y.; Blom, A.W.; Steele, F. Probabilistic Record Linkage. Int. J. Epidemiol. 2016, 45, 954–964.
- Li, T.; Gu, Y.; Zhou, X.; Ma, Q.; Yu, G. An Effective and Efficient Truth Discovery Framework over Data Streams. In Proceedings of the EDBT, Venice, Italy, 21–24 March 2017; pp. 180–191.
- Vatsalan, D.; Christen, P.; Verykios, V.S. A Taxonomy of Privacy-Preserving Record Linkage Techniques. Inf. Syst. 2013, 38, 946–969.
- Pathak, A.; Serrer, L.; Zapata, D.; King, R.; Mirel, L.B.; Sukalac, T.; Srinivasan, A.; Baier, P.; Bhalla, M.; David-Ferdon, C.; et al. Privacy Preserving Record Linkage for Public Health Action: Opportunities and Challenges. J. Am. Med. Inf. Assoc. 2024, 31, 2605–2612.
- Gkoulalas-Divanis, A.; Vatsalan, D.; Karapiperis, D.; Kantarcioglu, M. Modern Privacy-Preserving Record Linkage Techniques: An Overview. IEEE Trans. Inform. Forensic Secur. 2021, 16, 4966–4987.
- Vidanage, A.; Ranbaduge, T.; Christen, P.; Schnell, R. A Taxonomy of Attacks on Privacy-Preserving Record Linkage. J. Priv. Confidentiality 2022, 12, 1.
- Nóbrega, T.; Pires, C.E.S.; Nascimento, D.C. Blockchain-Based Privacy-Preserving Record Linkage: Enhancing Data Privacy in an Untrusted Environment. Inf. Syst. 2021, 102, 101826.
- Christen, P.; Schnell, R.; Ranbaduge, T.; Vidanage, A. A Critique and Attack on “Blockchain-Based Privacy-Preserving Record Linkage”. Inf. Syst. 2022, 108, 101930.
- Randall, S.M.; Brown, A.P.; Ferrante, A.M.; Boyd, J.H.; Semmens, J.B. Privacy Preserving Record Linkage Using Homomorphic Encryption. In Proceedings of the First International Workshop on Population Informatics for Big Data, Sydney, Australia, 13 April 2015; Volume 10.
- Han, S.; Wang, Z.; Shen, D.; Wang, C. A Parallel Multi-Party Privacy-Preserving Record Linkage Method Based on a Consortium Blockchain. Mathematics 2024, 12, 1854.
- Schnell, R.; Bachteler, T.; Reiher, J. Privacy-Preserving Record Linkage Using Bloom Filters. BMC Med. Inf. Decis. Making 2009, 9, 41.
- Vatsalan, D.; Yu, J.; Henecka, W.; Thorne, B. Fairness-Aware Privacy-Preserving Record Linkage. In Proceedings of the International Workshop on Data Privacy Management, Surrey, UK, 17–18 September 2020; pp. 3–18.
- Laud, P.; Pankova, A. Privacy-Preserving Record Linkage in Large Databases Using Secure Multiparty Computation. BMC Med. Genomics 2018, 11, 84.
- Han, S.; Shen, D.; Nie, T.; Kou, Y.; Yu, G. Private Blocking Technique for Multi-Party Privacy-Preserving Record Linkage. Data Sci. Eng. 2017, 2, 187–196.
- Wu, J.; Li, T.; Chen, L.; Gao, Y.; Wei, Z. SEA: A Scalable Entity Alignment System. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 3175–3179.
- Karapiperis, D.; Verykios, V.S. A Distributed Framework for Scaling up LSH-Based Computations in Privacy Preserving Record Linkage. In Proceedings of the 6th Balkan Conference in Informatics, Thessaloniki, Greece, 19–21 September 2013; pp. 102–109.
- Vaiwsri, S.; Ranbaduge, T.; Christen, P.; Schnell, R. Accurate Privacy-Preserving Record Linkage for Databases with Missing Values. Inf. Syst. 2022, 106, 101959.
- Rohde, F.; Christen, V.; Franke, M.; Rahm, E. Multi-Layer Privacy-Preserving Record Linkage with Clerical Review Based on Gradual Information Disclosure. arXiv 2024, arXiv:2412.04178.
- Lu, K.; Zhang, C. Blockchain-Based Multiparty Computation System. In Proceedings of the 2020 IEEE 11th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 16 October 2020; pp. 28–31.
- Sanchez-Gomez, N.; Torres-Valderrama, J.; Mejias Risoto, M.; Garrido, A. Blockchain Smart Contract Meta-Modeling. J. Web Eng. 2021, 20, 2059–2079.
- Kumar, R.; Tripathi, R. Implementation of Distributed File Storage and Access Framework Using IPFS and Blockchain. In Proceedings of the 2019 Fifth International Conference on Image Information Processing (ICIIP), Shimla, India, 15–17 November 2019; pp. 246–251.
- Kang, P.; Yang, W.; Zheng, J. Blockchain Private File Storage-Sharing Method Based on IPFS. Sensors 2022, 22, 5100.
- Chattopadhyay, A.K.; Saha, S.; Nag, A.; Nandi, S. Secret Sharing: A Comprehensive Survey, Taxonomy and Applications. Comput. Sci. Rev. 2024, 51, 100608.
- Cheng, N.; Zhang, F.; Mitrokotsa, A. Efficient Three-Party Boolean-to-Arithmetic Share Conversion. In Proceedings of the 2023 20th Annual International Conference on Privacy, Security and Trust (PST), Copenhagen, Denmark, 21–23 August 2023; pp. 1–6.
- Dehkordi, M.H.; Mashhadi, S.; Farahi, S.T.; Noorallahzadeh, M.H. Changeable Essential Threshold Secret Image Sharing Scheme with Verifiability Using Bloom Filter. Multimed. Tools Appl. 2023, 83, 1–37.
- Maskey, S.R.; Badsha, S.; Sengupta, S.; Khalil, I. Reputation-Based Miner Node Selection in Blockchain-Based Vehicular Edge Computing. IEEE Consum. Electron. Mag. 2021, 10, 14–22.
- Zhang, X.; He, M. Collusion Attack Resistance and Practice-Oriented Threshold Changeable Secret Sharing Schemes. In Proceedings of the 2010 24th IEEE International Conference on Advanced Information Networking and Applications, Perth, Australia, 20–23 April 2010; pp. 745–752.
- Mukhedkar, M.; Kote, P.; Zonde, M.; Jadhav, O.; Bhasme, V.; Dawande, N.A. Advanced and Secure Data Sharing Scheme with Blockchain and IPFS: A Brief Review. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; pp. 1–5.
- Liu, S.; Li, Y.; Guan, P.; Li, T.; Yu, J.; Taherkordi, A.; Jensen, C.S. FedAGL: A Communication-Efficient Federated Vehicular Network. IEEE Trans. Intell. Veh. 2024, 9, 3704–3720.
- Davidson, A.; Snyder, P.; Quirk, E.B.; Genereux, J.; Livshits, B.; Haddadi, H. Star: Secret Sharing for Private Threshold Aggregation Reporting. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, Los Angeles, CA, USA, 7–11 November 2022; pp. 697–710.
- Chen, L.; Fu, Q.; Mu, Y.; Zeng, L.; Rezaeibagha, F.; Hwang, M.-S. Blockchain-Based Random Auditor Committee for Integrity Verification. Future Gener. Comput. Syst. 2022, 131, 183–193.
- Eren, H.; Karaduman, O.; Gencoglu, M.T. Security Challenges and Performance Trade-Offs in On-Chain and Off-Chain Blockchain Storage: A Comprehensive Review. Appl. Sci. 2025, 15, 3225.
- Wei, Y.; Trautwein, D.; Psaras, Y.; Castro, I.; Scott, W.; Raman, A.; Tyson, G. The Eternal Tussle: Exploring the Role of Centralization in IPFS. In Proceedings of the 21st USENIX Symposium on Networked Systems Design and Implementation (NSDI 24), Santa Clara, CA, USA, 16–18 April 2024; pp. 441–454.
- Järvinen, K.; Leppäkoski, H.; Lohan, E.-S.; Richter, P.; Schneider, T.; Tkachenko, O.; Yang, Z. PILOT: Practical Privacy-Preserving Indoor Localization Using Outsourcing. In Proceedings of the 2019 IEEE European Symposium on Security and Privacy (EuroS&P), Stockholm, Sweden, 17–19 June 2019; pp. 448–463.
- Vatsalan, D.; Christen, P. Multi-Party Privacy-Preserving Record Linkage Using Bloom Filters. arXiv 2016, arXiv:1612.08835.
- Yao, S.; Ren, Y.; Wang, D.; Wang, Y.; Yin, W.; Yuan, L. SNN-PPRL: A Secure Record Matching Scheme Based on Siamese Neural Network. J. Inf. Secur. Appl. 2023, 76, 103529.
- Luo, L.; Guo, D.; Ma, R.T.B.; Rottenstreich, O.; Luo, X. Optimizing Bloom Filter: Challenges, Solutions, and Comparisons. IEEE Commun. Surv. Tutor. 2019, 21, 1912–1949.
- Song, Y.; Gu, Y.; Li, T.; Qi, J.; Liu, Z.; Jensen, C.S.; Yu, G. CHGNN: A Semi-Supervised Contrastive Hypergraph Learning Network. IEEE Trans. Knowl. Data Eng. 2024, 36, 4515–4530.
- Ke, Y.; Liang, Y.; Sha, Z.; Shi, Z.; Song, Z. DPBloomfilter: Securing Bloom Filters with Differential Privacy. arXiv 2025, arXiv:2502.00693.
- Huang, C.; Yao, Y.; Zhang, X. Robust Privacy-Preserving Aggregation against Poisoning Attacks for Secure Distributed Data Fusion. Inf. Fusion 2025, 122, 103223.

| Dataset Size | Node Count = 64 | Node Count = 128 | Node Count = 256 |
|---|---|---|---|
| 5 K | 5.73 | 3.02 | 1.57 |
| 10 K | 22.92 | 12.13 | 6.28 |
| 50 K | 572.92 | 300.00 | 154.25 |
| 100 K | 2291.67 | 1201.00 | 617.34 |
| 500 K | 57,291.67 | 30,120.00 | 15,480.50 |

| Participants | Node Count = 64 | Node Count = 128 | Node Count = 256 |
|---|---|---|---|
| 3 | 572.92 | 300.00 | 154.25 |
| 5 | 2291.67 | 1201.00 | 617.34 |
| 7 | 5156.25 | 2700.00 | 1388.00 |
| 9 | 9166.67 | 4815.00 | 2475.30 |

| Method | Participants = 3 | Participants = 5 | Participants = 7 | Participants = 9 |
|---|---|---|---|---|
| SSB-PPRL | 0.78 (Mod-1) | 0.74 (Mod-1) | 0.68 (Mod-1) | 0.61 (Mod-1) |
| | 0.73 (Mod-2) | 0.68 (Mod-2) | 0.62 (Mod-2) | 0.56 (Mod-2) |
| | 0.67 (Mod-3) | 0.61 (Mod-3) | 0.57 (Mod-3) | 0.50 (Mod-3) |
| Han | 0.71 (Mod-1) | 0.67 (Mod-1) | 0.62 (Mod-1) | 0.56 (Mod-1) |
| | 0.65 (Mod-2) | 0.62 (Mod-2) | 0.56 (Mod-2) | 0.51 (Mod-2) |
| | 0.58 (Mod-3) | 0.56 (Mod-3) | 0.49 (Mod-3) | 0.45 (Mod-3) |
| Yao | 0.88 (Mod-1) | 0.84 (Mod-1) | 0.79 (Mod-1) | 0.74 (Mod-1) |
| | 0.82 (Mod-2) | 0.78 (Mod-2) | 0.73 (Mod-2) | 0.69 (Mod-2) |
| | 0.76 (Mod-3) | 0.72 (Mod-3) | 0.67 (Mod-3) | 0.64 (Mod-3) |
| Vatsalan | 0.76 (Mod-1) | 0.73 (Mod-1) | 0.67 (Mod-1) | 0.62 (Mod-1) |
| | 0.71 (Mod-2) | 0.68 (Mod-2) | 0.62 (Mod-2) | 0.56 (Mod-2) |
| | 0.65 (Mod-3) | 0.62 (Mod-3) | 0.55 (Mod-3) | 0.50 (Mod-3) |

| Method | Participants = 3 | Participants = 5 | Participants = 7 | Participants = 9 |
|---|---|---|---|---|
| SSB-PPRL | 0.76 (Mod-1) | 0.72 (Mod-1) | 0.66 (Mod-1) | 0.59 (Mod-1) |
| | 0.71 (Mod-2) | 0.66 (Mod-2) | 0.61 (Mod-2) | 0.53 (Mod-2) |
| | 0.65 (Mod-3) | 0.59 (Mod-3) | 0.56 (Mod-3) | 0.47 (Mod-3) |
| Han | 0.69 (Mod-1) | 0.65 (Mod-1) | 0.60 (Mod-1) | 0.54 (Mod-1) |
| | 0.63 (Mod-2) | 0.60 (Mod-2) | 0.54 (Mod-2) | 0.49 (Mod-2) |
| | 0.57 (Mod-3) | 0.55 (Mod-3) | 0.47 (Mod-3) | 0.43 (Mod-3) |
| Yao | 0.86 (Mod-1) | 0.83 (Mod-1) | 0.78 (Mod-1) | 0.73 (Mod-1) |
| | 0.80 (Mod-2) | 0.77 (Mod-2) | 0.72 (Mod-2) | 0.68 (Mod-2) |
| | 0.73 (Mod-3) | 0.71 (Mod-3) | 0.66 (Mod-3) | 0.63 (Mod-3) |
| Vatsalan | 0.75 (Mod-1) | 0.73 (Mod-1) | 0.65 (Mod-1) | 0.60 (Mod-1) |
| | 0.70 (Mod-2) | 0.67 (Mod-2) | 0.60 (Mod-2) | 0.54 (Mod-2) |
| | 0.65 (Mod-3) | 0.61 (Mod-3) | 0.54 (Mod-3) | 0.47 (Mod-3) |

| Method | Participants = 3 | Participants = 5 | Participants = 7 | Participants = 9 |
|---|---|---|---|---|
| SSB-PPRL | 0.77 (Mod-1) | 0.73 (Mod-1) | 0.67 (Mod-1) | 0.61 (Mod-1) |
| | 0.72 (Mod-2) | 0.67 (Mod-2) | 0.61 (Mod-2) | 0.54 (Mod-2) |
| | 0.66 (Mod-3) | 0.61 (Mod-3) | 0.56 (Mod-3) | 0.48 (Mod-3) |
| Han | 0.71 (Mod-1) | 0.66 (Mod-1) | 0.61 (Mod-1) | 0.55 (Mod-1) |
| | 0.64 (Mod-2) | 0.61 (Mod-2) | 0.56 (Mod-2) | 0.51 (Mod-2) |
| | 0.57 (Mod-3) | 0.55 (Mod-3) | 0.48 (Mod-3) | 0.44 (Mod-3) |
| Yao | 0.87 (Mod-1) | 0.83 (Mod-1) | 0.78 (Mod-1) | 0.73 (Mod-1) |
| | 0.81 (Mod-2) | 0.77 (Mod-2) | 0.72 (Mod-2) | 0.68 (Mod-2) |
| | 0.74 (Mod-3) | 0.71 (Mod-3) | 0.66 (Mod-3) | 0.63 (Mod-3) |
| Vatsalan | 0.75 (Mod-1) | 0.73 (Mod-1) | 0.66 (Mod-1) | 0.61 (Mod-1) |
| | 0.71 (Mod-2) | 0.67 (Mod-2) | 0.61 (Mod-2) | 0.55 (Mod-2) |
| | 0.65 (Mod-3) | 0.61 (Mod-3) | 0.54 (Mod-3) | 0.49 (Mod-3) |

| N | c | Theoretical Reconstruction Rate | Experimental Reconstruction Rate |
|---|---|---|---|
| 50 | 10 | 0 | |
| 50 | 20 | 0 | |
| 50 | 40 | 0.0000002 | |
| 100 | 20 | 0 | |
| 100 | 40 | 0 | |
| 100 | 80 | 0.0000001 | |
| 200 | 40 | 0 | |
| 200 | 80 | 0 | |
| 200 | 160 | 0.0000001 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, S.; Wang, Z.; Zhao, Q.; Shen, D.; Wang, C.; Xue, Y. A Privacy-Preserving Record Linkage Method Based on Secret Sharing and Blockchain. Appl. Syst. Innov. 2025, 8, 92. https://doi.org/10.3390/asi8040092
