1. Introduction

Entropy serves as a foundational concept in various scientific domains, including physics, chemistry, computer science, and information theory [1]. In physics, entropy functions as a metric for the degree of disorder or randomness within a system. The second law of thermodynamics posits that the entropy of an isolated system tends to increase over time, ultimately reaching a state of maximal disorder. In chemistry, entropy is associated with the distribution of energy among the microscopic states of particles within a system. During a chemical reaction, changes in entropy can signify a system’s inclination towards either a more disorganized or a more ordered state. In essence, entropy is closely linked to the dispersion of energy within a given system [2].

Moving to computer science, particularly information theory, entropy extends beyond its thermodynamic origins, serving as a measure of uncertainty or unpredictability related to a source of information [3]. For instance, measuring the uncertainty of a neural network output is relevant in AI applications such as deep learning-based image classification. In this context, the entropy of the output can serve as a reliable metric for gauging the level of uncertainty in the system [4].
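As a minimal sketch of this idea, assuming a classifier that exposes raw scores (logits) for each class, the entropy of its softmax output could be computed as follows. The function names `softmax` and `predictive_entropy` and the example logits are illustrative assumptions, not taken from the cited work.

```python
import math

def softmax(logits):
    """Convert raw class scores into a probability distribution."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predictive_entropy(probs):
    """Shannon entropy (in bits) of a class-probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical logits for a four-class image classifier.
uncertain = predictive_entropy(softmax([0.0, 0.0, 0.0, 0.0]))
confident = predictive_entropy(softmax([10.0, 0.0, 0.0, 0.0]))
print(uncertain, confident)
```

A uniform output over four classes reaches the maximum of 2 bits, while a sharply peaked output gives a value close to zero.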

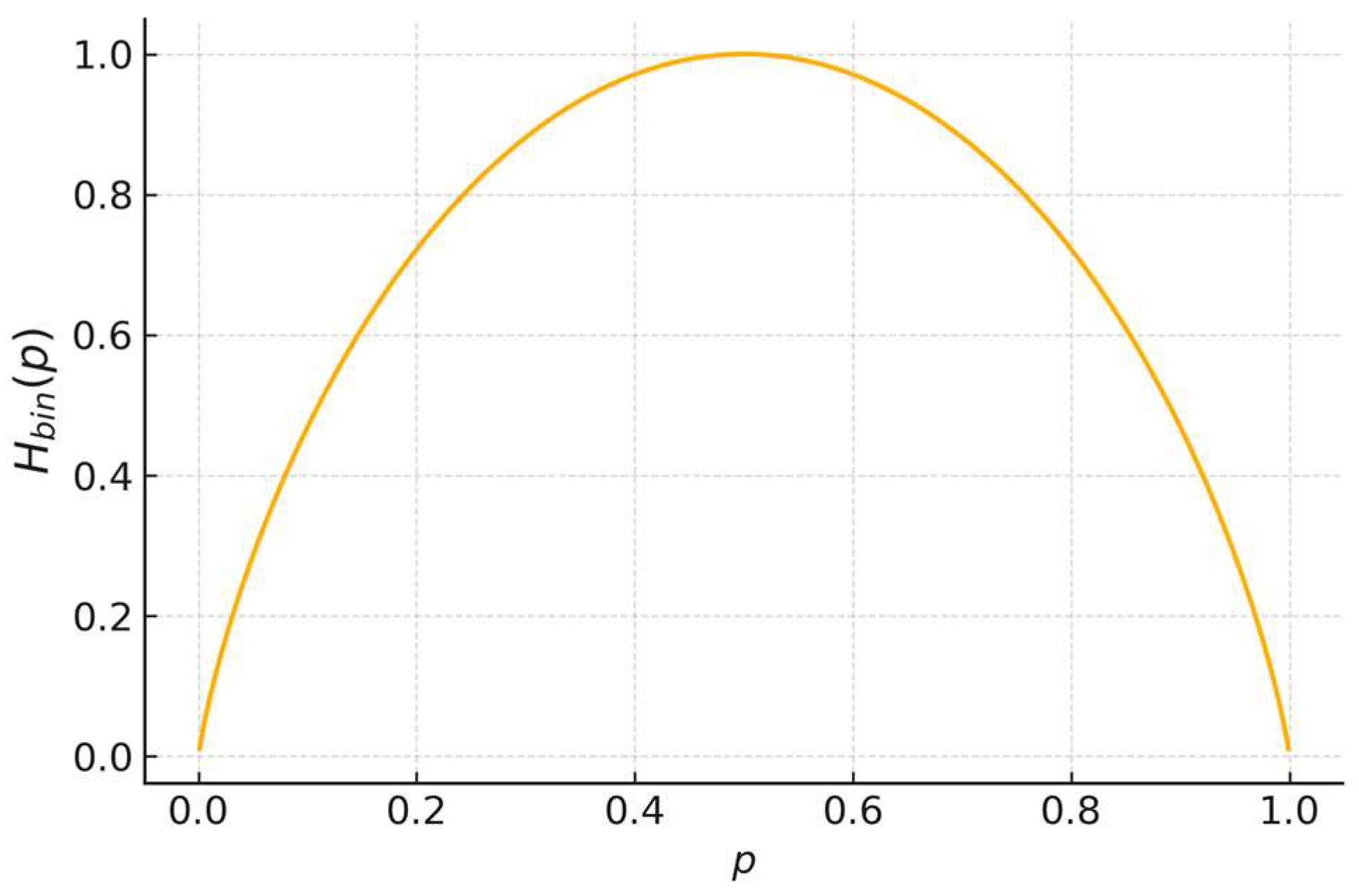

When considering a sequence of symbols in a message, an increase in entropy corresponds to a higher level of unexpectedness for the subsequent symbol. Beyond apparent disorder, entropy reveals the inherent uncertainty in a message represented by a sequence of data, essentially acting as a measure of outcome likelihoods for a random event. Claude Shannon’s seminal 1948 article [5] introduced information entropy, a metric designed to quantify the average information content within a message. A core tenet of Shannon’s theory posits a direct correlation between uncertainty and information: the greater the uncertainty surrounding an event’s outcome, such as the next symbol in a message, the more information it conveys when it occurs [6]. In short, Shannon entropy assigns a value based on the probability of each message outcome. Less probable outcomes carry more information when they occur, as their unexpected nature translates to more informational content, and the entropy is the probability-weighted average of that information [7].
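The relationship between probability and information can be illustrated with a short sketch. It assumes symbol probabilities are estimated from relative frequencies within the message itself; the names `surprisal` and `shannon_entropy` are illustrative.

```python
import math
from collections import Counter

def surprisal(p):
    """Information (in bits) conveyed by an outcome of probability p."""
    return -math.log2(p)

def shannon_entropy(message):
    """Average information per symbol (in bits), using the relative
    frequency of each symbol in the message as its probability."""
    counts = Counter(message)
    total = len(message)
    return sum((c / total) * surprisal(c / total) for c in counts.values())

# A rarer outcome conveys more information than a common one.
print(surprisal(0.5), surprisal(0.25))

# A fully predictable message has zero entropy; more varied messages have more.
print(shannon_entropy("aaaa"), shannon_entropy("abab"), shannon_entropy("abcd"))
```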

In industrial management, a significant challenge arises from the inherent complexity that emerges when multiple companies collaborate to form a supply chain (SC). At least one recent investigation [8] posits that SCs can be regarded as complex adaptive systems (CASs), often evolving autonomously and independently of a deliberate creation process. As with other types of CASs, it is beneficial to assess and manage the level of complexity that an SC accrues during its evolution [9], as demonstrated by previous related studies. Among many others, ref. [10] analyzed the underpinning structure of SCs, refs. [11,12] identified drivers that may boost the complexity level in SCs, and refs. [13,14] examined how complexity can influence business performance in SCs, while ref. [15] studied a dedicated tool conceived to measure complexity in SCs. Finally, ref. [16] presents an entropy-based algorithm tailored to measure complexity in SCs. These last two approaches share a similar gap, which this study aims to bridge.

Each company within the collaboration scenario depicted by the SC pursues distinct objectives, spanning areas such as raw material supply, engineering services, transportation, warehousing, manufacturing, and distribution. Despite having individual goals, they all have a shared overarching objective: collectively meeting the requirements of a specific customer type [17]. Beyond their unique operational strategies tailored to meet local objectives, companies operating within an SC must adhere to a meta-strategy that governs the entire system. This meta-strategy outlines global objectives, encompassing the collective goals of all members involved in activities [18]. The success of an SC operation hinges not only on each company achieving its partial or local objectives but also on the attainment of global objectives that entail satisfying customer requirements [19]. In short, only the achievement of global objectives ensures the overall success of the SC. This study focuses on applying entropy, as conceived in information theory, to the realm of administrative sciences, more specifically, supply chain management (SCM). It employs Shannon entropy to assess the required information exchanged between agents during SCM. The underlying assumption is that information entropy serves as a proxy measure for the complexity within the SC.

Complexity is not the same as complicatedness [20]. In scientific realms, complexity refers to the inherent nature of a system composed of many interconnected and mutually dependent parts, usually exhibiting emergent, non-linear properties that cannot be explained by individual, isolated components [21]. Replacing even a single component yields appreciable variation in outcomes, as the replacement influences internal mutually dependent relationships. Complicatedness refers to a system that is difficult to understand or deal with because it has a high number of parts, but these parts are mutually independent [22]. Replacing one part with another that is similar does not change outcomes. In essence, complexity is inherent to the nature of the system, while complicatedness arises from the number of parts. For example, an aircraft engine that entails thousands of parts may be complicated but not complex. A soccer team, even entailing only eleven agents, may be complex but is not complicated. In short, complex systems may show emergent behavior that is difficult to predict and control, while complicated systems, even when difficult to handle, can be predicted and scaled linearly [23].

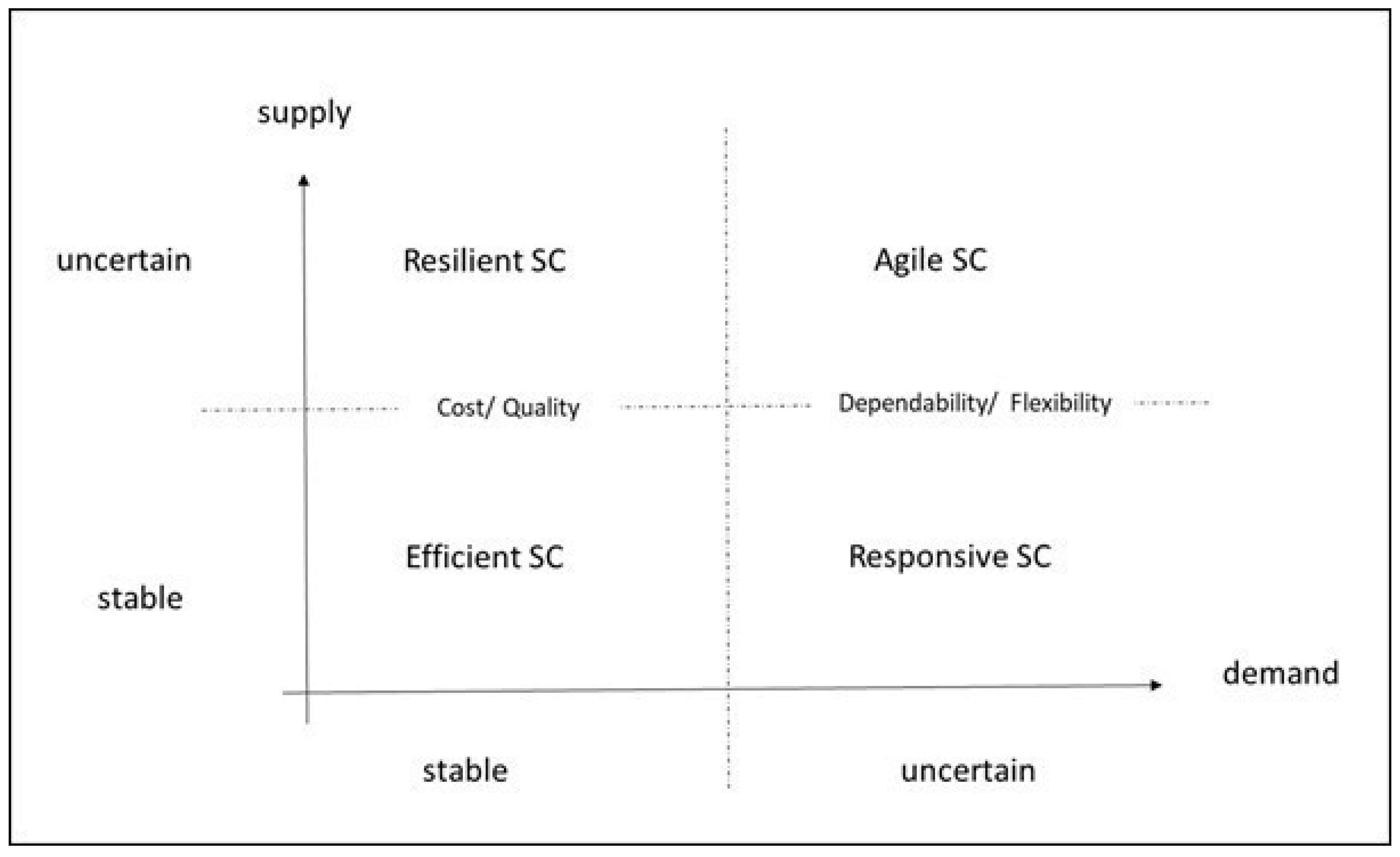

Given the uncertainties in SCM, it becomes important to not only assess but also manage the inherent complexity that arises from SC dynamics [24]. Controlling complexity does not solely imply reduction; in some instances, it may imply an increase. For example, in stable environments, reducing complexity can translate to cost reduction, making the SC more efficient. In unstable scenarios, in which innovation and variety are essential, an increase in complexity is often required, making the SC more flexible [25].

This study employs a measure of entropy that connects the concepts of uncertainty and complexity. According to this measure, as a system becomes more uncertain, it also becomes more complex [11]. Describing and monitoring a complex system requires access to a range of information to manage unpredictability, which underscores a fundamental relationship: the greater the complexity, the more information is required to understand the system’s behavior and anticipate its possible outcomes.

A search on Scopus from 2020 to 2025 using the keywords “Complexity” AND “Supply Chains” yielded 4468 results. In contrast, the search for “Complexity measurement” AND “Supply Chain” returned only three results, and there was just one article found for “Complexity” AND “Supply Chains” AND “Shannon entropy”. This indicates that while the topic of complexity in SCs is significant, there are gaps in the recent, high-impact literature concerning the measurement of complexity and, specifically, the application of Shannon entropy.

Therefore, the purpose of the study is to propose a quantitative modeling method, employing Shannon’s entropy model as a proxy to assess the complexity in SCs. The research method is quantitative modeling. The core principle is to use the theoretical framework of Shannon’s entropy to quantify informational flow entropy as a proxy variable of complexity. The model measures the uncertainty in the flow of information permeating the SC. The underlying assumption is that the complexity of the SC is directly proportional to the amount of information required to manage it effectively [26]. The research subject includes four focal companies of an industrial SC, two from the agrifood industry and two from the metalworking industry, all located in Southern Brazil. This study contributes to SCM studies by encompassing four SC meta-strategies, four competitive priorities, and an entropy-based measure in a single study.

The subsequent sections are structured to provide a comprehensive review of SC complexity, delineate the methodology, present and discuss the results, and draw conclusions from the findings. This systematic approach ensures a thorough exploration and understanding of the intricate dynamics surrounding complexity in industrial SCs.

3. Materials and Methods

According to [54], complexity can stem from three distinct sources: materials, finances, and information flows. This study acknowledges that when material and financial uncertainties arise, they can lead to unanticipated requirements for additional information exchange. Hence, by monitoring the information flow in relevant channels, mainly uncommon requests for further information, all three sources of complexity can be effectively managed. Therefore, the primary research assumption is that to evaluate complexity, the SCM must manage relevant flows of information to fulfill the manufacturing orders received by the focal company. A previous study [55] has established a list of twenty different types of information (j = 1, …, 20) that may or may not be necessary to complete a manufacturing order. Whether or not information j is required to fulfill an order is a binary outcome of a random event (i = 1 if the information is required; i = 2 otherwise). Future studies should consider intermediate levels in which information may be partially required (in this case, the outcome would be discrete with more than two levels, not binary). Moreover, the study has identified five relevant paths (k = 1, …, 5) that the 20 pieces of information can follow. For each SC, the study must compute one hundred probabilities of information j being required in path k to fulfill a manufacturing order. Equation (7) uses these probabilities to calculate Shannon entropy.
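Written out from the symbol definitions that follow, and assuming base-2 logarithms (consistent with the maximum entropy of 100 bits reported in Section 4.2) and the usual convention that a term with zero probability contributes zero, Equation (7) can be sketched as:

$$
H = -\sum_{i=1}^{2}\sum_{j=1}^{20}\sum_{k=1}^{5} p_{ijk}\,\log_{2} p_{ijk} \qquad (7)
$$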

where:

2 is the number of possible outcomes for a piece of information (required or not);

20 is the number of types of information of interest;

5 is the number of paths considered in the SC;

pijk is the probability that information j in path k (20 pieces and 5 paths) is required (i = 1) or not (i = 2).

Setting all probabilities to 0.5 results in the maximum possible entropy. Dividing the first result (Equation (7)) by this maximum provides the percentage entropy, which is more useful in managing the inherent complexity of the SC. Furthermore, p1jk + p2jk = 1, that is, the probability that information j in path k is required and the probability that it is not required must sum to 1, so the two probabilities cannot both exceed or both drop below 0.5. For example, if p1jk = 0.25, then p2jk = 0.75. Since only one value per cell needs to be reported, we chose the lower one, regardless of whether it corresponds to the information being required or not required. Swapping the two does not affect the uncertainty, because the entropy of a binary outcome is symmetric around 0.5.
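The calculation described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes base-2 logarithms, stores one probability per cell in a 20 × 5 nested list, and uses illustrative function names.

```python
import math

N_INFO, N_PATHS = 20, 5  # types of information and paths, as in the text

def binary_entropy(p):
    """Entropy (bits) of one cell with outcomes 'required' (p) and 'not required' (1 - p)."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain outcome carries no uncertainty
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def total_entropy(probs):
    """Sum of the cell entropies over all information types and paths."""
    return sum(binary_entropy(p) for row in probs for p in row)

def percentage_entropy(probs):
    """Total entropy as a percentage of the maximum (every cell at 0.5)."""
    n_cells = sum(len(row) for row in probs)
    max_entropy = n_cells * binary_entropy(0.5)
    return 100.0 * total_entropy(probs) / max_entropy

# Every probability at 0.5 gives the maximum entropy for a 20 x 5 dashboard.
uniform = [[0.5] * N_PATHS for _ in range(N_INFO)]
print(total_entropy(uniform), percentage_entropy(uniform))
```

Because `binary_entropy(0.25)` equals `binary_entropy(0.75)`, reporting only the lower probability of each pair loses no information about the uncertainty.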

The study followed a particular methodology, divided into the following steps:

Four focal companies were selected: two from the agrifood industry and two from the metalworking industry. In each industry, one company prioritizes competition according to cost/quality and the other according to dependability/flexibility;

At each company’s headquarters, two managers were interviewed together, one responsible for manufacturing and the other for logistics activities. The interviews lasted around two hours. The respondents answered two rounds of questions by consensus. In the first round, they prioritized the four competitive objectives. In the second round, they estimated the probabilities. To make the estimate, respondents had previously consulted the fifty most important recent manufacturing orders and counted how many times information j was requested in path k. Further information on orders from all respondents is confidential;

After the first meeting, managers had enough time to search the orders’ documentation to identify the number of times each piece of information was required. Managers had the chance to contact practitioners to clarify doubts. The number was rounded to facilitate communication. The experience of managers and practitioners, the accuracy of internal documents, and the triangulation during the interviews ensure the reliability of the measurement;

Equation (7), which expresses the Shannon entropy, was used to calculate maximum entropy and percentage entropy, a proxy variable that describes the relative complexity of the SC [16];

In a final meeting, the results and some avenues for future complexity control were discussed with managers.
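The probability estimation in the steps above can be sketched as a relative-frequency calculation. The counts below are hypothetical, since the order data are confidential, and the function names are illustrative. The sketch assumes each probability is the number of orders requiring the information divided by the fifty orders reviewed, then folded to the lower of the two complementary values.

```python
N_ORDERS = 50  # orders reviewed per focal company, as stated in the text

def reported_probability(times_required, n_orders=N_ORDERS):
    """Relative frequency of a request, folded to the lower of p and 1 - p."""
    if not 0 <= times_required <= n_orders:
        raise ValueError("count must lie between 0 and n_orders")
    return min(times_required, n_orders - times_required) / n_orders

def probability_matrix(counts, n_orders=N_ORDERS):
    """Map a table of counts (information j by path k) to reported probabilities."""
    return [[reported_probability(c, n_orders) for c in row] for row in counts]

# Hypothetical counts for two pieces of information across the five paths.
example_counts = [[10, 0, 25, 40, 50],
                  [5, 45, 0, 0, 20]]
example_probs = probability_matrix(example_counts)
print(example_probs)
```

Counts of 0 and 50 both map to a probability of 0, since information that is never or always required creates no uncertainty about whether it will be requested.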

Table 2 and Figure 3, respectively, present the twenty pieces of information and the five paths included in the research.

Regarding Table 2, there are five groups responsible for managing twenty pieces of information. These groups include planning and scheduling, which handles manufacturing capacity and deadlines; logistics, which handles raw materials and deliveries; workforce, which handles operators’ skills required to fulfill orders’ requirements; finance, which handles cash flows; and technology and innovation, which handles the technical demands and abilities necessary to satisfy customers’ needs.

Figure 3 outlines five essential pathways (R1, R2, R3, R11, and R21) for tracking information exchange. These include the R1 flow of stocked raw materials in continuous demand, the R2 flow of specific engineered materials utilized only as needed, and the R3 flow of engineering and design services engaged on an as-needed basis. Additionally, the manufacturing to distribution pathway R11 encompasses fabrication, maintenance, transportation, and warehousing. Finally, the customer service pathway R21 covers delivery and technical support services. Additional information or pathways should be explored in future studies.

4. Results and Discussion

4.1. The Companies

Company A is an agrifood industry player that specializes in producing soybean meal and oil. The company prioritizes highly standardized products originating from soy cultures. Supplier and customer markets are reasonably stable. Company A relies on large distributors and local distribution channels that serve retailers and companies specializing in agrifood products. Although the company does not frequently modify its product designs, any changes undergo rigorous scrutiny by technical and engineering services for certification before being approved for production and sale.

Company B is an agrifood industry player that specializes in producing fertilizers from the three main mineral groups: nitrogen, phosphate, and potassium, with production capacity across all three. The company incorporates technological advances in processes and products and must rely on specialized technical assistance extended to customers. The company is constantly innovating its product lines to offer its customers, mainly farmers, production enhancements and cost reductions in rural activity. The company operates primarily in markets in which raw material offers and prices are stable, while consumer markets can vary owing to climate events and seasonality. The company sells its products through large distributors and national and international distribution channels that supply large retailers. Any product design changes must undergo technical services that certify their quality before being released for production and sale.

Company C is a metalworking industry player that specializes in producing metallic components for large industrial items, specifically catering to the machinery and equipment industries, as well as the automotive industry. The company uses metallic plates received in large lots from the steelmaking industry, metal closures, safety devices, and, occasionally, wood packaging such as pallets. The company operates with stable raw material markets and moderately variable consumer markets. To distribute its products, it uses both local and national logistics operators that store and supply large quantities to buyers.

Company D is a metalworking industry player that specializes in engineered solutions of high complexity for specific markets. The company uses metallic, electric, and electronic parts received in medium and small lots from various suppliers. The raw material markets are somewhat unstable, and the consumer markets are highly variable. The company often receives manufacturing orders that require prior engineering designs. The company works with local and national logistics operators who store and transport large parts to buyers with stringent safety requirements. Innovation is continuous, and safety is a requirement, which means that any product design modifications undergo technical services that certify them before their release for production and sale.

Concerning the competitive priorities of focal companies A, B, C, and D, certain patterns emerge in their strategic emphasis.

Focal company A prioritizes compliance with quality standards, primarily driven by stringent food safety requirements. The next is cost reduction, given the intense competition in the sector, in which pricing plays a pivotal role in consumer choices. Flexibility and dependability assume diminished significance for this company, attributed to its management of substantial inventories that promptly cater to unforeseen demands. Among the four SC meta-strategies, the SC is closer to the efficient pattern.

Focal company B prioritizes flexibility due to the changing nature of agrifood activity and the inherent uncertainty in climate conditions. Dependability comes second, driven by the temporal commitments of farmers tied to seasonality and prices in the global market. Quality is third, particularly due to the difficulty customers have in handling manufacturing errors. Cost reduction (and consequently price reduction) occupies a relatively lower position on the priority scale, given that the competition in the sector is not too fierce. Among the four SC meta-strategies, the SC is closer to the responsive pattern.

Focal company C prioritizes cost reduction. This strategic choice is influenced by the standardization of its product, its relative ease of production, and the heightened competition within the sector. Quality comes second, due to the difficulty customers have in dealing with dimensional, structural, and appearance errors in raw material supplied to other industries. Flexibility and dependability assume less critical roles, given the company’s effective management of substantial inventories to promptly address unexpected demands. Among the four SC meta-strategies, the SC is closer to the efficient pattern.

Finally, focal company D prioritizes dependability. This emphasis is underscored by the industrial market’s stringent deadlines and narrow delivery windows, in which any lapse could result in a loss of business. Flexibility comes second, driven by the swift variations in project features and uncertainties surrounding the definition of standardized batches. Quality follows, as manufacturing errors are difficult to amend under the company’s delivery model. Cost reduction, though not irrelevant, is less central, as the packaging cost in industrial deliveries is less influential. Among the four SC meta-strategies, the SC is closer to the agile pattern.

4.2. Entropy Calculation

Table 3 and Table 4 synthesize the results of the evaluations conducted by managers and the entropies (the minus sign in the entropy was omitted for clarity), respectively. In the first row of Table 4, together with the name of the focal company, it is possible to find the percentage entropy of each SC. The maximum entropy for all applications is 100 bits.

Table 5 provides the relationship between the percentage entropy and priorities of the focal company, as well as the number of empty cells in each dashboard. An empty cell means no need to randomly exchange (it is not required or was never required) that kind of information and, consequently, an absence of uncertainty and related complexity.

The cases show that SCs focusing on cost and quality tend to be less complex than those emphasizing flexibility and dependability in the same industry. Since an empty cell denotes an absence of uncertainty, companies prioritizing cost and quality have more empty cells, indicating fewer complex aspects, than those prioritizing flexibility and dependability.
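An empty cell contributes zero entropy, so, all else being equal, a dashboard with more empty cells has a lower total entropy. The sketch below illustrates this with two small hypothetical dashboards. The values, the function names, and the choice to represent an empty cell as a probability of 0 are assumptions for illustration.

```python
import math

def binary_entropy(p):
    """Entropy (bits) of one cell; certain outcomes contribute zero."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def count_empty_cells(probs):
    """Number of cells in which the information was never randomly requested."""
    return sum(1 for row in probs for p in row if p == 0)

def total_entropy(probs):
    """Sum of the cell entropies over the whole dashboard."""
    return sum(binary_entropy(p) for row in probs for p in row)

# Hypothetical dashboards: x has more empty cells than y.
dashboard_x = [[0.0, 0.0, 0.5], [0.0, 0.25, 0.0]]
dashboard_y = [[0.5, 0.0, 0.5], [0.25, 0.25, 0.0]]

for name, board in (("x", dashboard_x), ("y", dashboard_y)):
    print(name, count_empty_cells(board), round(total_entropy(board), 3))
```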

Table 6 shows the percentage entropy per group of information per company.

The table reveals that companies that prioritize flexibility and dependability have a greater value in planning and scheduling (company B), finance, and technology and innovation (company D). In contrast, one of the companies (company A) that prioritizes cost and quality has a greater value in logistics. The workforce is a less relevant source of uncertainty in the studied sample of companies. Therefore, company A should care about logistics while companies B and D should focus on planning and scheduling and technology and innovation as the main sources of uncertainty.

Table 7 shows the average entropy per path per company.

The table reveals that the most significant difference between the average entropies is observed in R2 and R11, corresponding to specially engineered products and deliveries from the manufacturer, respectively. In R2 and R11, companies A and C, prioritizing cost and quality, exhibit notably lower average entropies than companies B and D. This suggests that ensuring flexibility and dependability requires greater organizational complexity in SCM in relation to manufactured products and agile deliveries. Such drivers require greater coordination efforts, which increases complexity. Conversely, companies emphasizing cost reduction and quality assurance tend to employ standardized products and larger transfer batches, which reduce concerns regarding project and product development, as well as agile deliveries, variable batches, and changes in the mix. Such drivers require less coordination effort, which decreases complexity.
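A per-path comparison of this kind can be sketched as follows. The sketch assumes that the average entropy of a path is the mean of the cell entropies over the twenty pieces of information in that path. The probabilities are hypothetical and the function names illustrative; only the path labels come from the text.

```python
import math

PATHS = ("R1", "R2", "R3", "R11", "R21")  # path labels used in Figure 3

def binary_entropy(p):
    """Entropy (bits) of one cell; certain outcomes contribute zero."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def average_entropy_per_path(probs, paths=PATHS):
    """Mean cell entropy of each path (column) over all pieces of information (rows)."""
    n_info = len(probs)
    return {
        name: sum(binary_entropy(row[k]) for row in probs) / n_info
        for k, name in enumerate(paths)
    }

# Hypothetical dashboard: uncertainty concentrated in R1 and R3 only.
example = [[0.5, 0.0, 0.5, 0.0, 0.0] for _ in range(20)]
print(average_entropy_per_path(example))
```

Comparing these dictionaries across companies highlights the paths in which coordination effort, and hence complexity, is concentrated.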

This may not be a coincidence. Future studies should conduct surveys to test the following hypothesis: Companies that prioritize cost and quality generally exhibit an SCM system with lower relative complexity compared to companies that rely on flexibility and dependability for competition.

5. Final Remarks

The purpose of the study was to propose a quantitative modeling method, employing Shannon’s entropy model as a proxy to assess the complexity in SCs. This study’s object was four SCs in the agrifood and metalworking industries of Southern Brazil. The study’s findings suggest that companies that prioritize cost and quality tend to have lower SCM complexity than those that prioritize flexibility and dependability. The study observed significant differences in the average entropies of specific paths related to engineered products and deliveries. These observations indicate that organizational complexities vary based on competitive priorities.

The findings have implications for industrial practitioners. Companies that prioritize cost and quality may benefit from lower complexity, which leads to streamlined processes. In contrast, those who prioritize flexibility and dependability may need more complex organizational structures to meet dynamic market demands. In summary, the study provided valuable insights for industrial practitioners, including how to align competitive priorities with the inherent complexity of SCs. The study’s findings suggest that prioritizing cost and quality can lead to lower SCM complexity, while flexibility and dependability may lead to higher organizational complexities.

Looking ahead, future research can delve deeper into testing the hypothesis that companies prioritizing cost and quality exhibit lower SCM complexity. The study also suggests exploring the broader applicability of information entropy in assessing and controlling complexity across diverse industries, other types of systems, and global SCs. This exploration would contribute to the evolving field of SCM.

In terms of external validity, the current methodology demonstrates both generalizability and extensibility across various production system typologies. The core principle—quantifying informational flow entropy as an indicator of complexity—remains universally applicable, grounded in Shannon entropy’s theoretical framework for assessing informational complexity. When extending the methodology to other systems, several considerations should be included. (i) Information granularity and diversity: Future models could integrate a wider and more varied array of informational elements, such as sustainability metrics, regulatory compliance parameters, or cybersecurity data. Additionally, incorporating intermediate states (information partially required) could refine entropy measurement and address further nuances of uncertainty. (ii) Multitier interactions: For broader applicability, the mapping scope should include interactions beyond the focal company to encompass second- and third-tier agents. (iii) Large-scale data acquisition: While interview-based methodologies prove effective for detailed case studies, achieving broader generalizability requires collecting transactional data from enterprise resource planning (ERP) and SCM systems. Automated data collection may provide probability calculations with greater statistical significance.

Future research should also include the meta-strategy of the SC. One possibility is to conduct four similar surveys, each focusing on a different meta-strategy, to gather enough data to compare outcomes. Another possibility is the use of multicriteria methods to calculate weights in the case of multiple strategies and multiple priorities. At least one study [30] uses confidence intervals to address the inherent uncertainty observed in multicriteria studies focused on investigating the strategic aspects of and preferences in SCs.

One important topic that this study did not address, and which could be the focus of future research, is the relationship between complexity and performance in SCs. Among other approaches, performance can be measured using the framework provided by the SCOR (Supply Chain Operations Reference) model, as demonstrated in [56]. Among many others, some indicators that can be used to measure performance are raw material cost, process inventory, and design and sales cost (cost priority); percentage of rework, client satisfaction, and percentage of waste (quality priority); lead time to orders, back orders, and on-time deliveries (dependability priority); and time-to-market, setup time, and average mix of products (flexibility priority).

This study observed that the SCs that prioritize flexibility and dependability generate more uncertainty in planning and scheduling and technology and innovation. On the other hand, one of the SCs that prioritizes cost and quality generates more uncertainty in logistics. These findings can help practitioners identify key areas for future performance improvement actions. Furthermore, it remains to be seen whether there is an analytical relationship between complexity and performance. The need for more information is a result of the need for greater coordination of activities, regardless of the level of complexity. It is also reasonable to assume that too much coordination can lead to reduced performance due to an excess of intermediate activities. At the same time, too little coordination can lead to errors in operations and reduced performance. Therefore, a U-shaped relationship may exist between performance and complexity: an optimal level of complexity maximizes SC performance. This topic is worth exploring further in future research.