Biometric-Based Key Generation and User Authentication Using Voice Password Images and Neural Fuzzy Extractor

Abstract

1. Introduction

2. State of the Art

3. Materials and Methods

3.1. Voice Image Databases

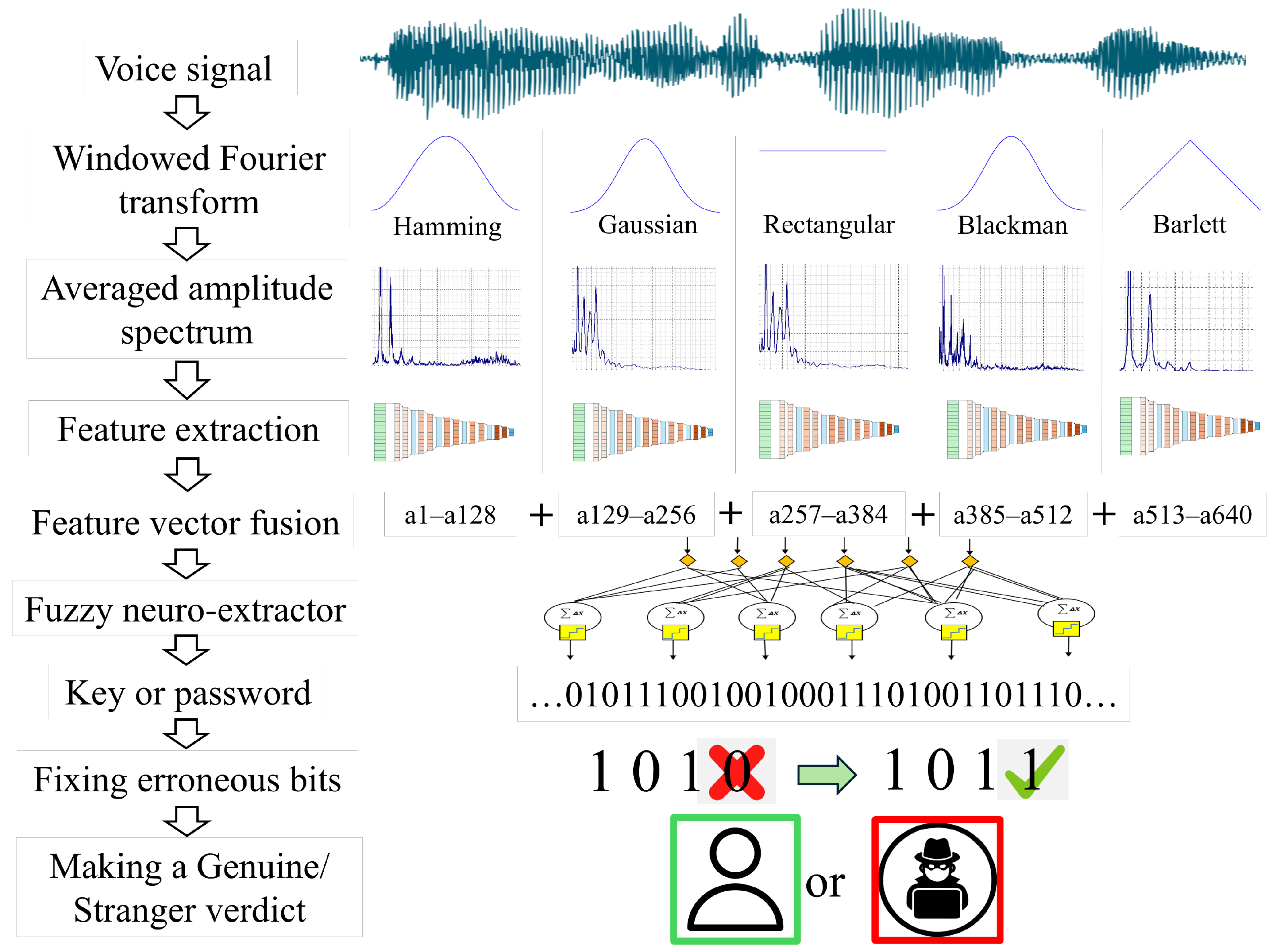

3.2. Extracting Features from a Voice Image

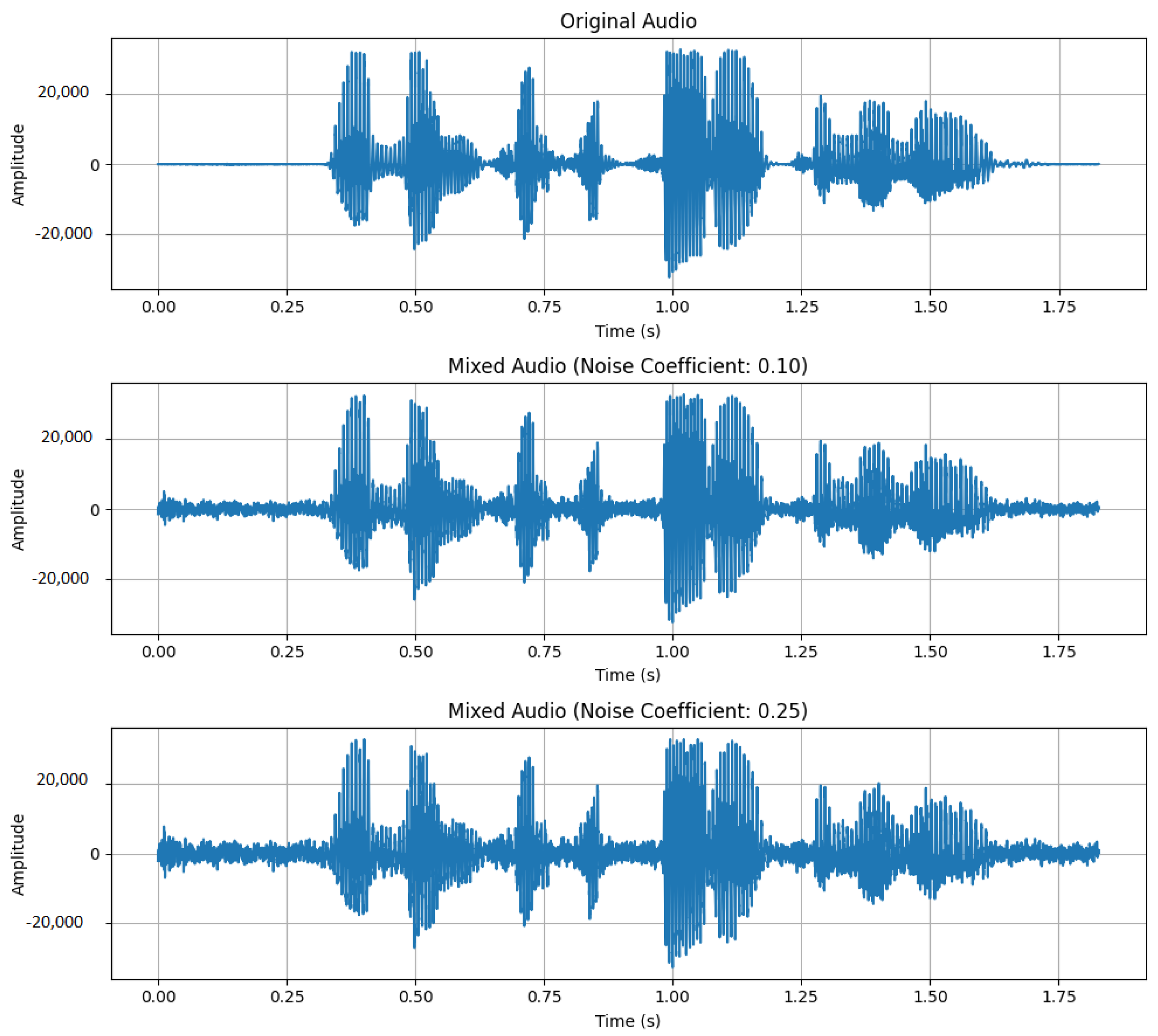

3.2.1. Preprocessing of Voice Recordings

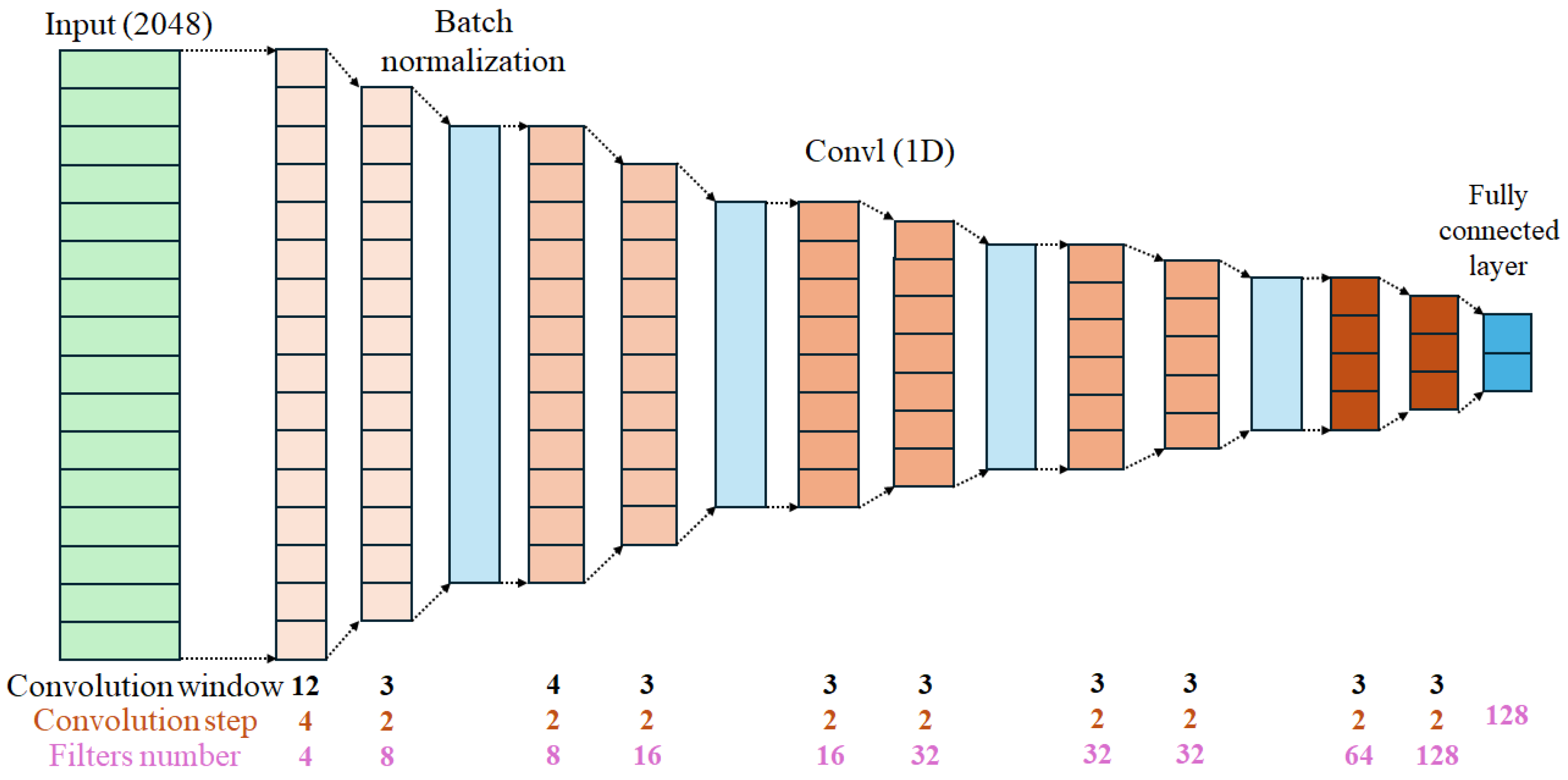

3.2.2. CNN Architecture for Voice Phrase Feature Extraction

3.2.3. Ensembling Pre-Trained CNNs

3.3. Fuzzy Neuro-Extractor

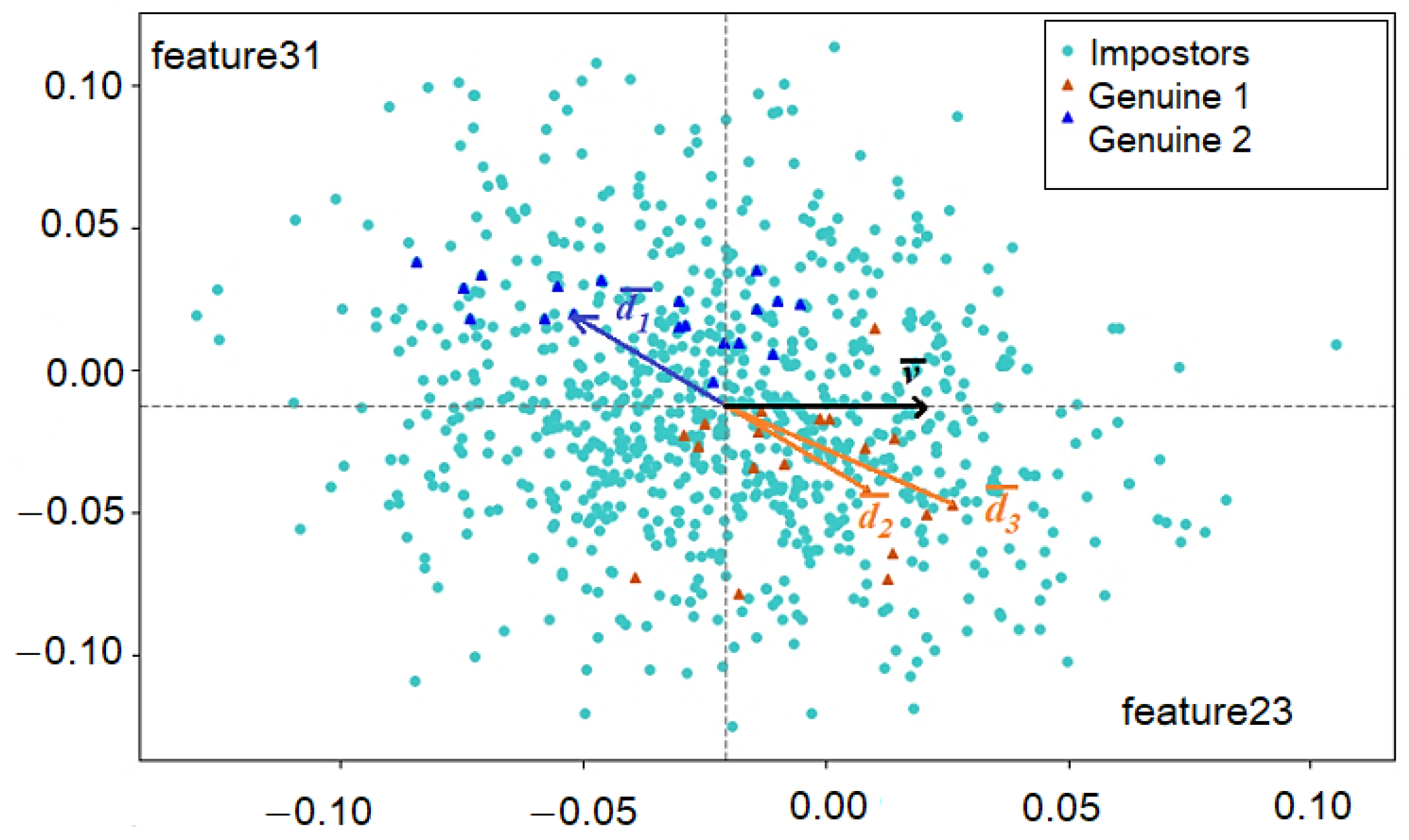

3.3.1. Neuron Model

- Each pair of features generates meta-features of one type: cosine or cotangent. A single neuron receives either only pairs whose features show a clearly expressed correlation in their subspace (cotangent meta-features) or only pairs whose correlation is absent or weak according to the Chaddock scale (cosine meta-features).

- Relative to its two thresholds in its subspace, each pair of features of every “Genuine” image falls into one of the three sectors. The two threshold values used in the activation function of a neuron are stored as the averages of the initial thresholds obtained for the subspaces of the feature pairs by the calibration algorithm described in Section 3.3.2. Each neuron threshold is calculated as the arithmetic mean of the corresponding pair thresholds over all synapses of the neuron, that is, the sum over z = 1, …, k divided by k, where k is the number of synapses (inputs) of the neuron, z is the index of a synapse (input), and the pair thresholds are those obtained, one after another, by the algorithm for the pair of features entering synapse z.
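The neuron model above can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not the authors' implementation: all function names are hypothetical; the cut-off between "weak" and "expressed" correlation is assumed to be 0.3, the upper bound of the "weak" band of the Chaddock scale; pairs left over after filling complete neurons are simply dropped; and the three sectors are assumed to be "below the lower threshold", "between the thresholds" and "above the upper threshold".

```python
from typing import Dict, List, Tuple

Pair = Tuple[int, int]  # indices of the two features forming a pair


def chaddock_label(r: float) -> str:
    """Qualitative strength of a correlation coefficient on the Chaddock scale."""
    a = abs(r)
    if a < 0.1:
        return "absent"
    if a < 0.3:
        return "weak"
    if a < 0.5:
        return "moderate"
    if a < 0.7:
        return "noticeable"
    if a < 0.9:
        return "high"
    return "very high"


def meta_feature_type(r: float) -> str:
    """Absent or weak correlation -> cosine meta-features, otherwise cotangent (assumed cut-off 0.3)."""
    return "cosine" if chaddock_label(r) in ("absent", "weak") else "cotangent"


def assign_pairs_to_neurons(pair_corrs: Dict[Pair, float], k: int) -> List[Tuple[str, List[Pair]]]:
    """Group pairs so that each neuron with k synapses receives pairs of one meta-feature type only.

    Pairs left over after filling complete neurons are dropped in this sketch.
    """
    groups: Dict[str, List[Pair]] = {"cosine": [], "cotangent": []}
    for pair, r in pair_corrs.items():
        groups[meta_feature_type(r)].append(pair)
    neurons = []
    for kind, pairs in groups.items():
        for i in range(0, len(pairs) - k + 1, k):
            neurons.append((kind, pairs[i:i + k]))
    return neurons


def neuron_thresholds(pair_thresholds: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Average the (lower, upper) pair thresholds over the k synapses z = 1..k."""
    k = len(pair_thresholds)
    lower = sum(t[0] for t in pair_thresholds) / k
    upper = sum(t[1] for t in pair_thresholds) / k
    return lower, upper


def sector(value: float, lower: float, upper: float) -> int:
    """Assumed sector layout: 0 below lower, 1 between the thresholds, 2 above upper."""
    if value < lower:
        return 0
    if value <= upper:
        return 1
    return 2
```

For example, with k = 2 the pairs {(0, 1): 0.1, (0, 2): 0.2, (1, 2): 0.8, (1, 3): 0.9} yield one cosine neuron fed by (0, 1) and (0, 2) and one cotangent neuron fed by (1, 2) and (1, 3).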

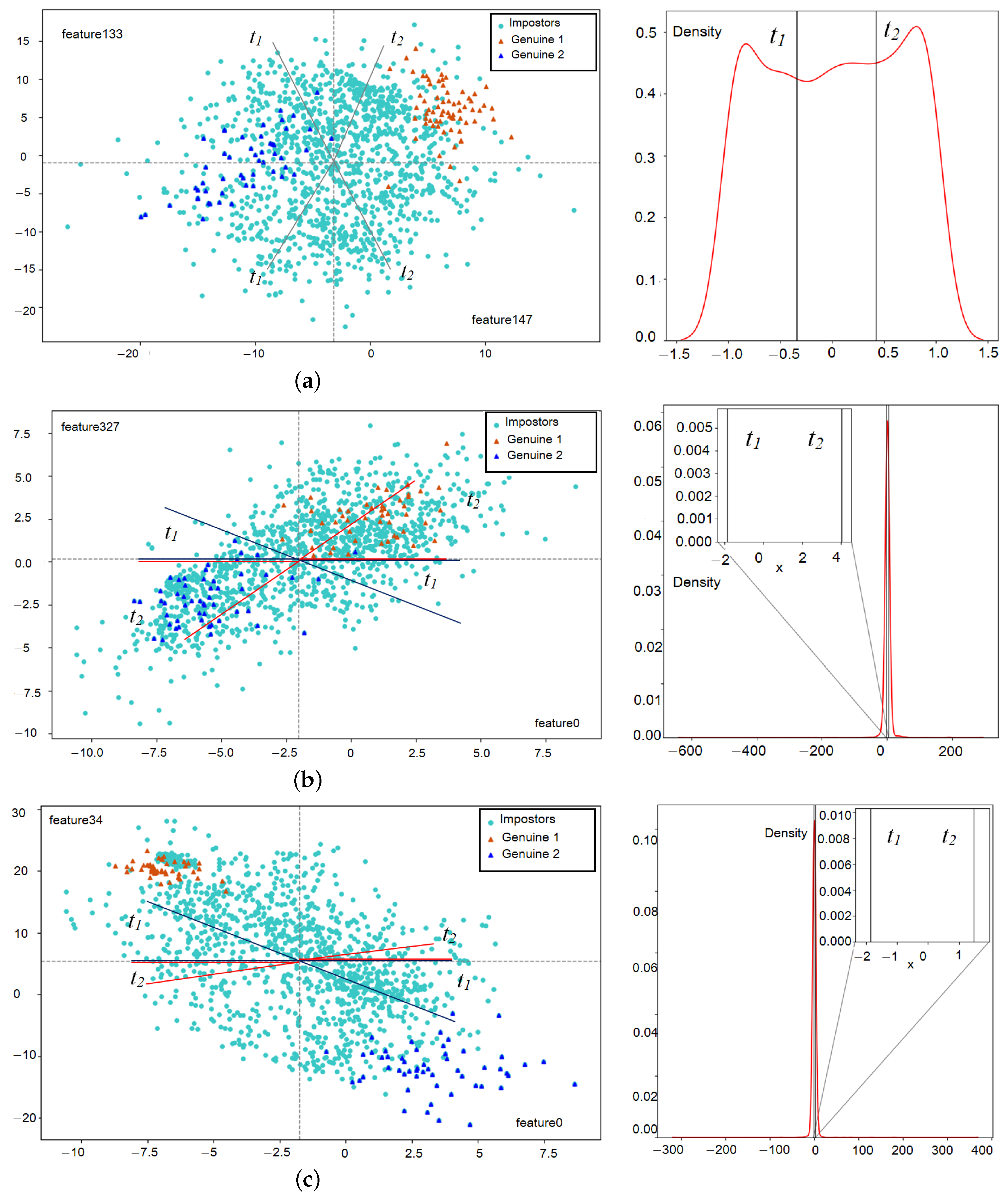

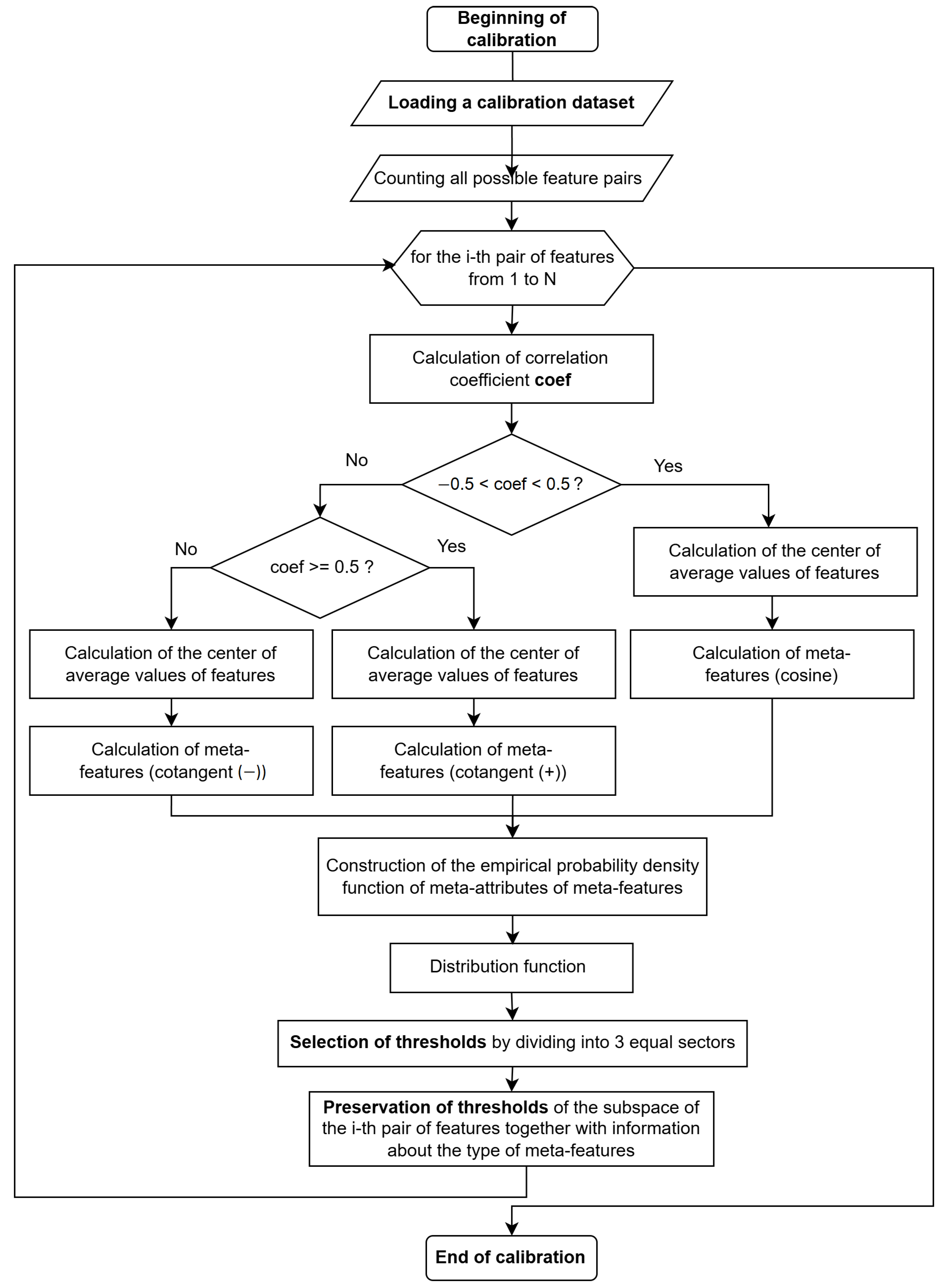

3.3.2. Calibration of the Biometric System

- The correlation coefficient (coef) of the pair of features is calculated;

- An empirical probability density function of the meta-features, each calculated with the formula corresponding to its type, is constructed;

- The density function is integrated to obtain the distribution function;

- The interval is divided into m equal sectors;
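The calibration steps above can be sketched for one pair of features with NumPy. This is an illustration under assumptions, not the authors' code: `calibrate_pair` is a hypothetical name, the meta-feature values are passed in precomputed (their cosine and cotangent formulas are not reproduced here), the correlation coefficient is taken to be Pearson's, the density is estimated with a histogram, and the divided interval is assumed to be the probability range [0, 1] of the distribution function, so that the m sectors are equally probable.

```python
import numpy as np


def calibrate_pair(x, y, meta, m=3, bins=50):
    """Hypothetical calibration sketch for one pair of features.

    x, y : values of the two features over the calibration images
    meta : precomputed meta-feature values for this pair
    m    : number of sectors
    Returns the correlation coefficient and the m - 1 inner sector boundaries.
    """
    # Step 1: correlation coefficient of the pair (Pearson's r assumed)
    r = np.corrcoef(x, y)[0, 1]

    # Step 2: empirical probability density of the meta-features (normalised histogram)
    pdf, edges = np.histogram(meta, bins=bins, density=True)

    # Step 3: integrate the density to obtain the distribution function at the bin edges
    cdf = np.concatenate(([0.0], np.cumsum(pdf * np.diff(edges))))

    # Step 4 (assumption): split the probability range [0, 1] into m equal parts
    # and map the inner levels back to meta-feature values by inverting the CDF
    levels = np.linspace(0.0, 1.0, m + 1)[1:-1]
    bounds = np.interp(levels, cdf, edges)
    return r, bounds
```

With m = 3 the sketch returns two boundaries, i.e. three equally probable sectors; a histogram is only one of several possible density estimators, and a kernel density estimate could be substituted in step 2.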

3.3.3. Training Neural Fuzzy Extractor

4. Experimental Results

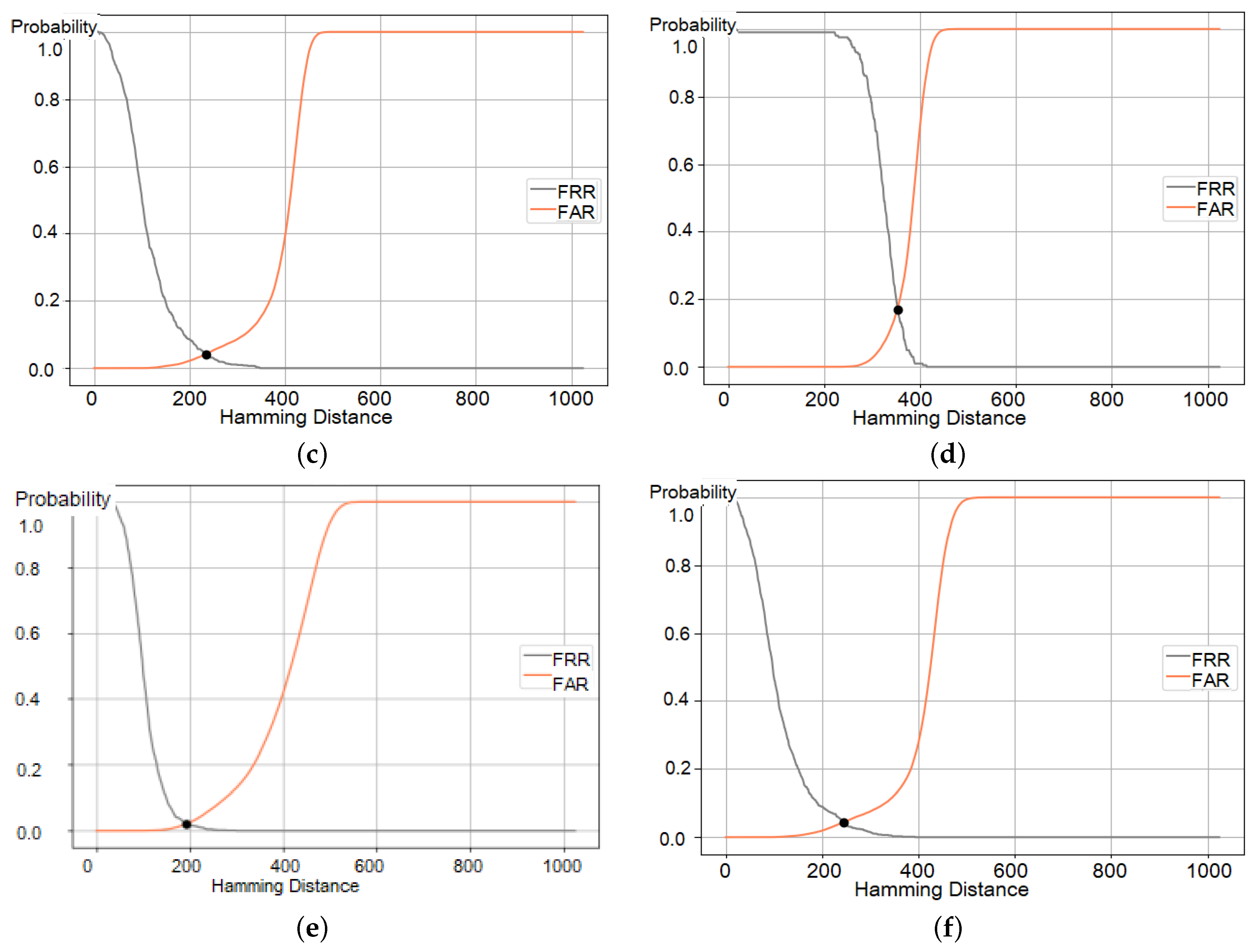

4.1. Metrics

4.2. Experimental Setup

4.3. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sulavko, A. Biometric-Based Key Generation and User Authentication Using Acoustic Characteristics of the Outer Ear and a Network of Correlation Neurons. Sensors 2022, 22, 9551.
2. Snyder, D.; Garcia-Romero, D.; Sell, G.; Povey, D.; Khudanpur, S. X-Vectors: Robust DNN Embeddings for Speaker Recognition. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5329–5333.
3. Li, Y.; Chang, S.; Wu, Q. A short utterance speaker recognition method with improved cepstrum–CNN. SN Appl. Sci. 2022, 4, 330.
4. Heo, H.S.; Lee, B.J.; Huh, J.; Chung, J.S. Clova Baseline System for the VoxCeleb Speaker Recognition Challenge 2020. arXiv 2020, arXiv:2009.14153.
5. Xiao, X.; Kanda, N.; Chen, Z.; Zhou, T.; Yoshioka, T.; Chen, S.; Zhao, Y.; Liu, G.; Wu, Y.; Wu, J.; et al. Microsoft Speaker Diarization System for the VoxCeleb Speaker Recognition Challenge 2020. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021.
6. Kanagasundaram, A.; Sridharan, S.; Sriram, G.; Prachi, S.; Fookes, C. A Study of x-Vector Based Speaker Recognition on Short Utterances. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019; pp. 2943–2947.
7. Liu, T.; Lee, K.A.; Wang, Q.; Li, H. Disentangling Voice and Content with Self-Supervision for Speaker Recognition. Adv. Neural Inf. Process. Syst. 2023, 36, 50221–50236.
8. Jin, Y.; Hu, G.; Chen, H.; Miao, D.; Hu, L.; Zhao, C. Cross-Modal Distillation for Speaker Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 12977–12985.
9. Sarma, K.; Pyrtuh, F.; Chakraborty, D. Speaker Verification System using Wavelet Transform and Neural Network for short utterances. Asian J. Converg. Technol. 2020, 6, 30–35.
10. Aronowitz, H. Speaker recognition using common passphrases in RedDots. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5405–5409.
11. Maghsoodi, N.; Sameti, H.; Zeinali, H.; Stafylakis, T. Speaker Recognition With Random Digit Strings Using Uncertainty Normalized HMM-Based i-Vectors. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1815–1825.
12. Jindal, A.K.; Shaik, I.; Vasudha, V.; Chalamala, S.R.; Ma, R.; Lodha, S. Secure and Privacy Preserving Method for Biometric Template Protection using Fully Homomorphic Encryption. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 1127–1134.
13. Zhang, K.; Cui, H.; Yu, Y. Facial Template Protection via Lattice-Based Fuzzy Extractors. Cryptology ePrint Archive, Paper 2021/1559, 2021. Available online: https://eprint.iacr.org/2021/1559.pdf (accessed on 8 December 2024).
14. Monrose, F.; Reiter, M.; Li, Q.; Wetzel, S. Cryptographic key generation from voice. In Proceedings of the 2001 IEEE Symposium on Security and Privacy, S&P 2001, Oakland, CA, USA, 14–16 May 2001; pp. 202–213.
15. Chee, K.Y.; Jin, Z.; Cai, D.; Li, M.; Yap, W.S.; Lai, Y.L.; Goi, B.M. Cancellable speech template via random binary orthogonal matrices projection hashing. Pattern Recognit. 2018, 76, 273–287.
16. Ghouzali, S.; Bousnina, N.; Mikram, M.; Lafkih, M.; Nafea, O.; Al-Razgan, M.; Abdul, W. Hybrid Multimodal Biometric Template Protection. Intell. Autom. Soft. Comput. 2021, 27, 35–51.
17. Sardar, A.; Umer, S.; Rout, R.K.; Sahoo, K.S.; Gandomi, A.H. Enhanced Biometric Template Protection Schemes for Securing Face Recognition in IoT Environment. IEEE Internet Things J. 2024, 11, 23196–23206.
18. Sulavko, A.; Inivatov, D.; Vasilyev, V.; Lozhnikov, P. Authentication based on voice passwords with the biometric template protection using correlation neurons. Inf. Control Syst. 2024, 21–38.
19. Alam, M.J.; Kenny, P.; Gupta, V. Tandem Features for Text-Dependent Speaker Verification on the RedDots Corpus. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016; pp. 420–424.
20. Sarkar, A.K.; Sarma, H.; Dwivedi, P.; Tan, Z.H. Data Augmentation Enhanced Speaker Enrollment for Text-dependent Speaker Verification. In Proceedings of the 2020 3rd International Conference on Energy, Power and Environment: Towards Clean Energy Technologies, Shillong, India, 5–7 March 2021; pp. 1–6.
21. Alaya, B.; Laouamer, L.; Msilini, N. Homomorphic encryption systems statement: Trends and challenges. Comput. Sci. Rev. 2020, 36, 100235.
22. Fuller, B.; Meng, X.; Reyzin, L. Computational fuzzy extractors. Inf. Comput. 2020, 275, 104602.
23. Rathgeb, C.; Kolberg, J.; Uhl, A.; Busch, C. Deep Learning in the Field of Biometric Template Protection: An Overview. arXiv 2023, arXiv:2303.02715.
24. Jindal, A.K.; Chalamala, S.; Jami, S.K. Face Template Protection Using Deep Convolutional Neural Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 575–5758.
25. Kuznetsov, O.; Zakharov, D.; Frontoni, E. Deep learning-based biometric cryptographic key generation with post-quantum security. Multimed. Tools Appl. 2024, 83, 56909–56938.
26. Sadjadi, O. NIST SRE CTS Superset: A large-scale dataset for telephony speaker recognition. arXiv 2021, arXiv:2108.07118.
27. Zue, V.; Seneff, S.; Glass, J. Speech database development at MIT: Timit and beyond. Speech Commun. 1990, 9, 351–356.
28. El-Moneim, S.A.; Nassar, M.A.; Dessouky, M.I.; Ismail, N.A.; El-Fishawy, A.S.; El-Samie, F.E.A. Cancellable template generation for speaker recognition based on spectrogram patch selection and deep convolutional neural networks. Int. J. Speech Technol. 2022, 25, 689–696.
29. Mahum, R.; Irtaza, A.; Javed, A. EDL-Det: A Robust TTS Synthesis Detector Using VGG19-Based YAMNet and Ensemble Learning Block. IEEE Access 2023, 11, 134701–134716.
30. Xia, P.; Zhang, L.; Li, F. Learning similarity with cosine similarity ensemble. Inf. Sci. 2015, 307, 39–52.
31. Akhmetov, B.; Ivanov, A.; Alimseitova, Z. Training of neural network biometry-code converters. News Natl. Acad. Sci. Repub. Kazakhstan Ser. Geol. Tech. Sci. 2018, 1, 61–68.
32. Marshalko, G.B. On the security of a neural network-based biometric authentication scheme. Math. Probl. Cryptogr. 2014, 5, 87–98.
33. Bogdanov, D.S.; Mironkin, V.O. Data recovery for a neural network-based biometric authentication scheme. Math. Probl. Cryptogr. 2019, 10, 61–74.
34. Malygin, A.; Seilova, N.; Boskebeev, K.; Alimseitova, Z. Application of artificial neural networks for handwritten biometric images recognition. Comput. Model. New Technol. 2017, 5, 31–38.

| Methods | Modality, Dataset | Obtained Results |
|---|---|---|
| i-vector, x-vector [2] | Voice, NIST SRE 2016, VoxCeleb, SWBD | EER = 9.23%, EER = 5.21% |
| 2D-CNN (MFCC) [3] | Voice, authors’ own archive | F1-score = 99.6% |
| ResNet (AM-Softmax, AAM-Softmax) [4] | Voice, VoxSRC 2020 | EER = 5.19% |
| Res2Net [5] | Voice, VoxCeleb | EER = 0.83% |
| PLDA + x-vector [6] | Voice | EER = 13.35% |
| RecXi [7] | Voice, VoxCeleb, SITW | EER reduced by 9.56% |
| VGSR [8] | Voice + Face, CN-Celeb, VoxCeleb1 | EER reduced by 10–15% |
| Wavelet-based classification [9] | Voice, RedDots | EER = 4.85% |
| GMM (MAP + NAP) [10] | Voice, RedDots | EER = 2.6% |
| HMM + i-vector [11] | Voice, RedDots | EER = 1.52% (men), 1.77% (women) |
| Homomorphic encryption [12] | Face | Matching time 2.38 ms, memory 32.8 KB |
| Lattice-based fuzzy extractors [13] | Face | Entropy of 45 bits, high security |
| Fuzzy vault + DTW [14] | Voice | Template inversion is impossible |
| RBOMP with i-vector and prime factorization [15] | Voice | EER = 3.43%, protection against ARM attacks |
| Hybrid method: homomorphic encryption + cancelable biometrics [16] | Face | Authentication time of about 7 s |
| Hybrid scheme: cancelable biometrics + biocryptography [17] | Face, CVL, FEI, FERET | Accuracy: CVL = 99.47%, FEI = 98.10%, FERET = 100% |
| Neural fuzzy extractor with correlation neurons [18] | Voice, RedDots | EER = 2.64% |
| Fusion of four systems and tandem features [19] | Voice, RedDots | EER = 1.96–2.28% for male, 2.7–3.48% for female |
| TD-SV based on GMM-UBM [20] | Voice, RedDots | EER = 3.06% with 5 dB noise, 2.7% with 10 dB noise |

| Measure | Number of Synapses, k | AIC-spkr-130 | RedDots |
|---|---|---|---|
| Cosine | 4 | 0.055 | 0.15 |
| | 6 | 0.061 | 0.147 |
| | 8 | 0.081 | 0.155 |
| Cotangent | 4 | 0.044 | 0.061 |
| | 6 | 0.049 | 0.117 |
| | 8 | 0.047 | 0.132 |
| Cosine + cotangent | 4 | 0.021 | 0.032 |
| | 6 | 0.061 | 0.069 |
| | 8 | 0.065 | 0.071 |

| Methods | Used Part of RedDots | EER, % |
|---|---|---|
| Wavelet transform, NN [9] | The first 8 standard phrases | 4.85 |
| GMM, MAP, NAP, Bagging [10] | 10 standard phrases (ImpostorCorrect) | 2.6 |
| HMM, i-vector, digit-specific models [11] | 33rd and 34th phrases (ImpostorCorrect) | 1.52–1.77 |
| Fusion of four systems and tandem features [19] | Part 1 (ImpostorCorrect) | 2.28 for male, 3.48 for female |
| Fusion of four systems and tandem features [19] | Part 4 (ImpostorCorrect) | 1.96 for male, 3.22 for female |
| TD-SV based on GMM-UBM [20] | Part 1 (TargetWrong, ImpostorCorrect, ImpostorWrong) | 3.06 with 5 dB noise, 2.7 with 10 dB noise |

| Methods | Key Length | EER, % (AIC-spkr-130) | EER, % (RedDots) |
|---|---|---|---|
| Neural fuzzy extractor trained in accordance with GOST R 52633.5 [34] | 160 bit | 2.7 | 3.5 |
| Proposed solution | 1024 bit | 2.1 | 3.2 |
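The comparison tables report the equal error rate (EER), the operating point at which the false acceptance rate (FAR) and the false rejection rate (FRR) coincide. As a hedged illustration, not the evaluation code used for these tables, the sketch below estimates EER from similarity scores by sweeping a decision threshold over every observed score and returning the mean of FAR and FRR where the two are closest. It assumes that a higher score means "more likely genuine" and that a score at or above the threshold is accepted; `equal_error_rate` is a hypothetical name.

```python
from typing import Sequence


def equal_error_rate(genuine: Sequence[float], impostor: Sequence[float]) -> float:
    """Estimate the EER from genuine and impostor similarity scores.

    Assumes higher scores mean "more likely genuine" and that a score
    at or above the threshold is accepted.
    """
    thresholds = sorted(set(genuine) | set(impostor))
    best_gap, eer = float("inf"), 1.0
    for t in thresholds:
        frr = sum(s < t for s in genuine) / len(genuine)     # genuine attempts rejected
        far = sum(s >= t for s in impostor) / len(impostor)  # impostor attempts accepted
        gap = abs(far - frr)
        if gap < best_gap:
            # keep the threshold at which FAR and FRR are closest
            best_gap, eer = gap, (far + frr) / 2
    return eer
```

For example, `equal_error_rate([0.9, 0.8, 0.7, 0.6], [0.1, 0.2, 0.3, 0.65])` returns 0.25, i.e. an EER of 25%; multiplying by 100 gives the percentage form used in the tables.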


© 2025 by the authors. Published by MDPI on behalf of the International Institute of Knowledge Innovation and Invention. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sulavko, A.; Panfilova, I.; Inivatov, D.; Lozhnikov, P.; Vulfin, A.; Samotuga, A. Biometric-Based Key Generation and User Authentication Using Voice Password Images and Neural Fuzzy Extractor. Appl. Syst. Innov. 2025, 8, 13. https://doi.org/10.3390/asi8010013
