A Systematic Approach for the Selection of Optimization Algorithms including End-User Requirements Applied to Box-Type Boom Crane Design

Abstract

1. Introduction

2. Related Work

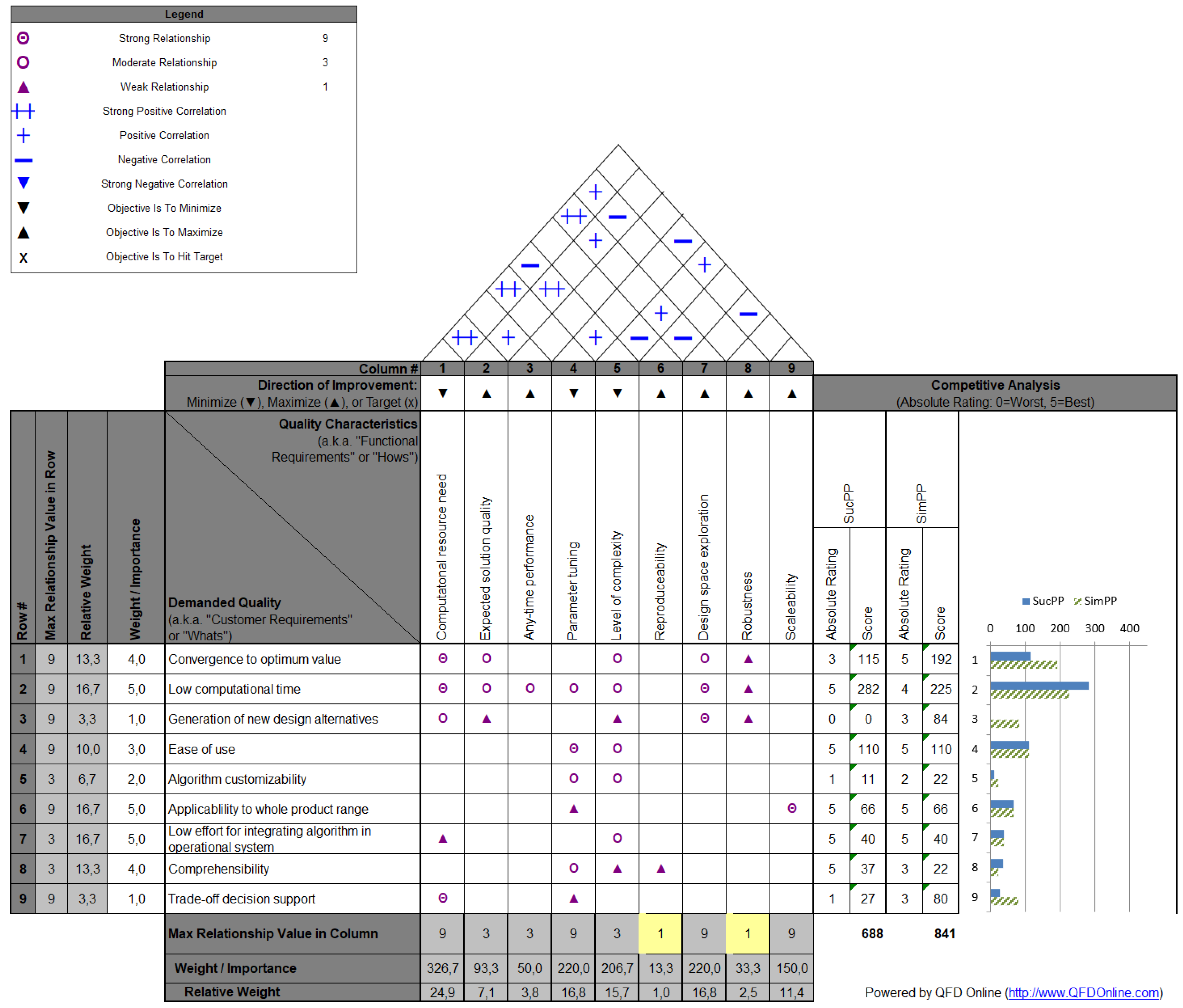

2.1. Quality Function Deployment

2.2. Optimization Algorithm Selection

3. Method

3.1. Methodology—Building a Decision Matrix

3.2. Resulting Decision Matrix for Algorithm Selection

3.2.1. Demanded Quality Criteria

- Convergence to optimum value: The capability to always converge towards near-optimal solutions.

- Low computational time: The capability to find high-quality solutions in a time that is acceptable to the end-user.

- Generation of new design alternatives: The capability to generate distinct design alternatives other than those obtained by small variations of the initial product configuration.

- Ease of use: The effort required to set up the algorithm for the problem. This includes, among others, the selection process for the algorithmic parameter values and how the user can interact with the algorithm.

- Algorithm customization: The possibility and the effort required to make significant changes to the algorithm to increase the application range or to change parts of the algorithm.

- Applicability to whole product range: The effort required to apply the algorithm to the whole product range, e.g., lower/higher dimensional variants of the same problem or problems with slightly changed constraints.

- Low effort for integrating algorithm in operational system: The effort required to integrate the algorithm into the system and to set up connections with the required external tools, e.g., CAD and CAE systems, databases, computing resources, and software libraries.

- Comprehensibility: The level of expert knowledge required to understand the solution creation process and the solution representation.

- Trade-off decision support: The capability to investigate different objectives and different constraint values with the same or a slightly modified algorithm.

3.2.2. Quality Characteristics Criteria

- Computational resource need: The computational resources, e.g., number of function evaluations or run times, required to obtain a high-quality solution. The need for computational resources is to be minimized.

- Expected solution quality: The expected quality of the solution obtained. Solution quality is directly related to the objective function value and the constraint satisfaction. It is to be maximized, regardless of whether the objective function is minimized or maximized.

- Any-time performance: The ability of an algorithm to provide a solution at any time and to continuously improve this solution when given more resources. Ideally, the solution improvement and the increase in resources exhibit a linear relationship. Any-time performance is to be maximized.

- Parameter tuning: The combination of computational resources and expert knowledge required to select well-performing values for the algorithmic parameters. Parameter tuning is to be minimized.

- Level of complexity: The increase in complexity of an algorithm compared with Random Search. Complexity is increased by using special operators or methods for the solution generation, but also by using surrogates or parallel and distributed computing approaches. The complexity increase should result in finding better solutions and/or getting solutions of the same quality in less time. The aim is to minimize the level of complexity.

- Reproducibility: The capability of an algorithm to provide the same, or nearly the same, solution when it is rerun with the same setup. Reproducibility is to be maximized.

- Design space exploration: The capability of an algorithm to create and evaluate vastly different solutions within the feasible design space. A maximum of design space exploration is preferred.

- Robustness: The capability to provide solutions which exhibit only small changes in their quality when the problem itself undergoes small changes. Robustness is to be maximized.

- Scalability: The capability to obtain high-quality solutions on problems of increased dimensionality within an acceptable time, changing at most the values of the algorithmic parameters. The aim is to maximize scalability.
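In QFD, the importance of each quality characteristic is commonly obtained by weighting every relationship strength with the importance of the corresponding demanded quality and summing per characteristic. The following is a minimal Python sketch of that calculation. It assumes that the codes SR, MR, and WR used in Appendix A stand for strong, moderate, and weak relationships and maps them to the 9-3-1 scale that is common in QFD; the scale actually used in this study and the end-user weights below are assumptions.

```python
# Assumed mapping of relationship codes (strong/moderate/weak) to the common
# QFD 9-3-1 scale; the scale used in the study may differ.
RELATIONSHIP_SCORE = {"SR": 9, "MR": 3, "WR": 1}


def characteristic_importance(weights, relationships):
    """Weighted column sums of a QFD relationship matrix.

    weights:       demanded quality -> end-user importance (hypothetical values)
    relationships: (demanded quality, quality characteristic) -> "SR" | "MR" | "WR"
    """
    importance = {}
    for (quality, characteristic), code in relationships.items():
        score = weights[quality] * RELATIONSHIP_SCORE[code]
        importance[characteristic] = importance.get(characteristic, 0) + score
    return importance


if __name__ == "__main__":
    # Hypothetical end-user weights on a 1-5 scale.
    weights = {"Convergence to optimum value": 5, "Low computational time": 3}
    # Two rows of the relationship matrix in Appendix A.
    relationships = {
        ("Convergence to optimum value", "Computational resource need"): "SR",
        ("Convergence to optimum value", "Expected solution quality"): "MR",
        ("Low computational time", "Computational resource need"): "SR",
        ("Low computational time", "Expected solution quality"): "MR",
        ("Low computational time", "Any-time performance"): "MR",
        ("Low computational time", "Parameter tuning"): "MR",
    }
    ranked = sorted(characteristic_importance(weights, relationships).items(), key=lambda kv: -kv[1])
    for name, value in ranked:
        print(f"{name}: {value}")
```

With these hypothetical weights, computational resource need dominates (5 x 9 + 3 x 9 = 72), which illustrates how strongly weighted demanded qualities propagate to the characteristics used for ranking algorithms.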

3.3. General Remarks on Criteria, Correlations, and Relationships

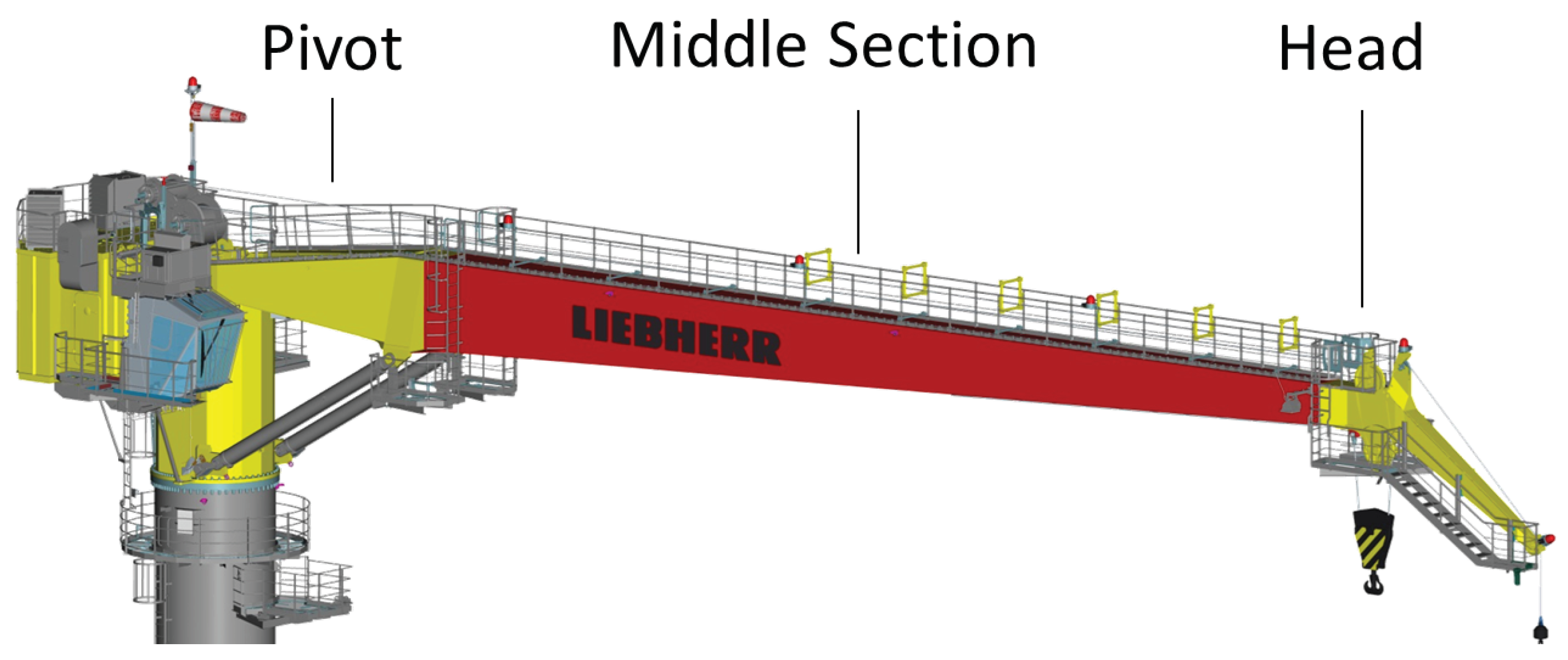

4. Box-Type Boom Design and Optimization

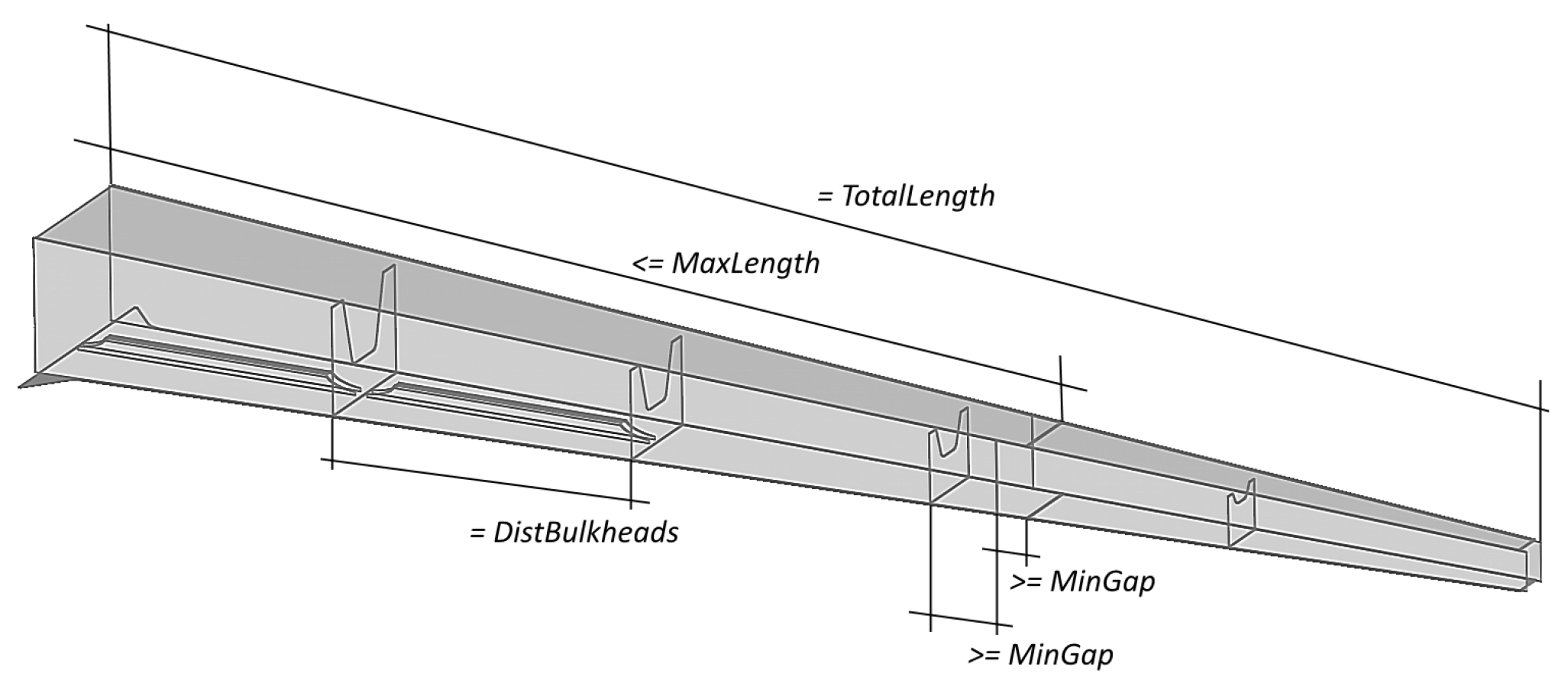

4.1. BTB Design Task

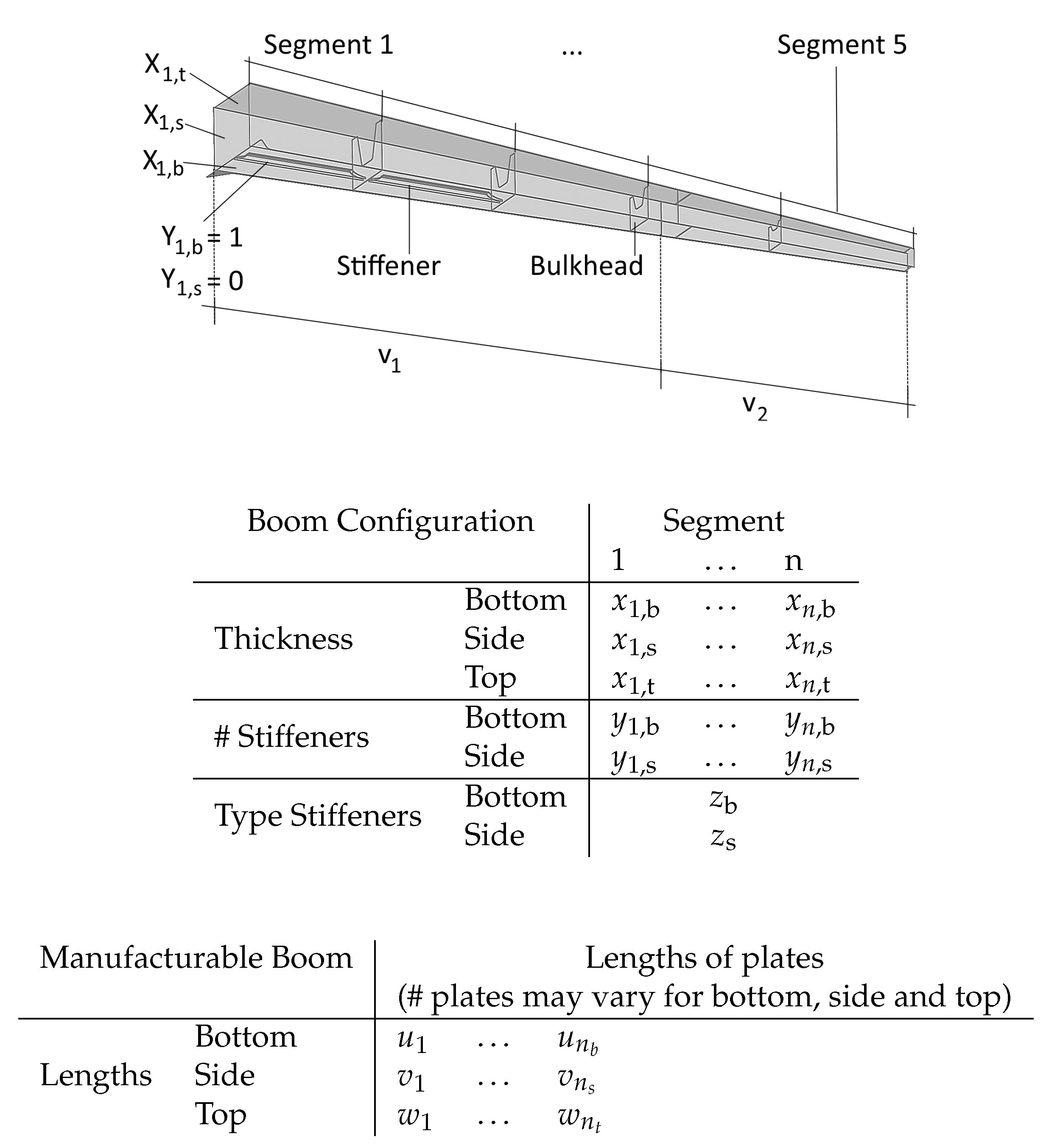

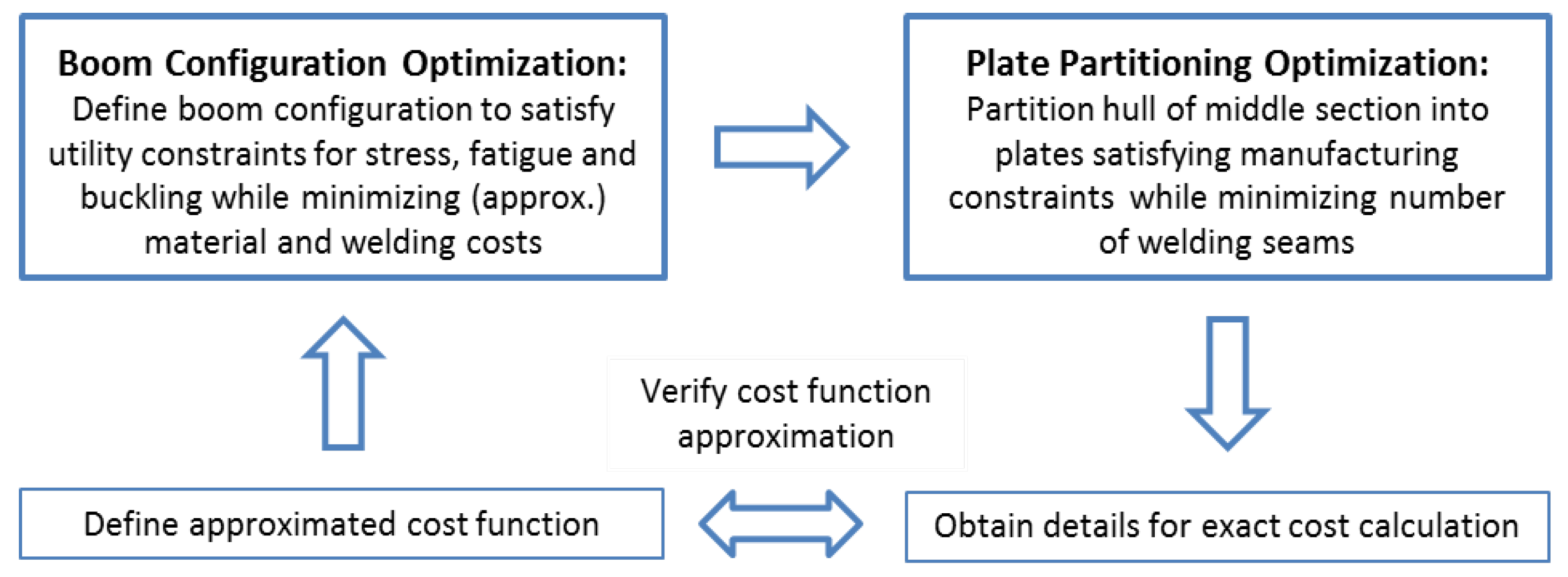

4.2. Optimization Potential of the Box-Type Boom Design

- Boom Configuration: The structural analysis defines the metal sheet thicknesses as well as the number and type of stiffeners in each segment (boom configuration), to define a BTB that can safely lift certain load cases at a given length. Currently, these parameters are determined by the engineer, through experience and a trial-and-error strategy, and evaluated using a structural analysis tool.

- Plate Partitioning: An appropriate partitioning of the BTB hull into single plates is required to manufacture the boom. Initially, the design engineer performed this task manually; later, in the course of implementing the KBE application, it was replaced by a heuristic approach (termed Successive Plate Partitioning) [42].

5. Boom Configuration Optimization

5.1. Problem Definition

- material costs of the metal, depending on the thickness;
- material costs of the stiffener, depending on the stiffener type;
- welding costs of the stiffener, depending on the stiffener type; and
- welding costs of the plates, depending on all thicknesses.

- two consecutive segments have different metal thicknesses; or

- two consecutive segments have the same metal thicknesses and the previous three segments were already combined (i.e., were also of the same thickness).
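The cost structure and the seam rule above can be sketched in a few lines of Python. The sketch rests on one reading of the rule (an assumption): a welding seam lies between two consecutive segments if their thicknesses differ, or if they are equal but the current plate already combines three segments. Each segment is simplified to a `(thickness, stiffener_type)` pair, and the four cost callables are hypothetical placeholders for the company-specific cost functions, which are not given here.

```python
def plate_seams(thicknesses, max_combined=3):
    """Indices i for which a plate welding seam lies between segment i and i + 1.

    Assumed reading of the rule: a seam is needed if the thicknesses differ, or if
    they are equal but the current plate already combines `max_combined` segments.
    """
    seams = []
    combined = 1  # number of segments merged into the current plate
    for i in range(len(thicknesses) - 1):
        if thicknesses[i] != thicknesses[i + 1] or combined >= max_combined:
            seams.append(i)
            combined = 1
        else:
            combined += 1
    return seams


def total_cost(segments, metal_cost, stiffener_cost, stiffener_weld_cost, plate_weld_cost):
    """Sum of the four cost terms for a list of (thickness, stiffener_type) segments.

    All four cost callables are hypothetical placeholders.
    """
    thicknesses = [t for t, _ in segments]
    cost = sum(metal_cost(t) + stiffener_cost(s) + stiffener_weld_cost(s) for t, s in segments)
    cost += sum(
        plate_weld_cost(thicknesses[i], thicknesses[i + 1]) for i in plate_seams(thicknesses)
    )
    return cost
```

For four segments of equal thickness followed by two thinner ones, `plate_seams([10, 10, 10, 10, 8, 8])` places seams after the third segment (plate limit reached) and after the fourth (thickness change).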

5.2. Optimization Approaches

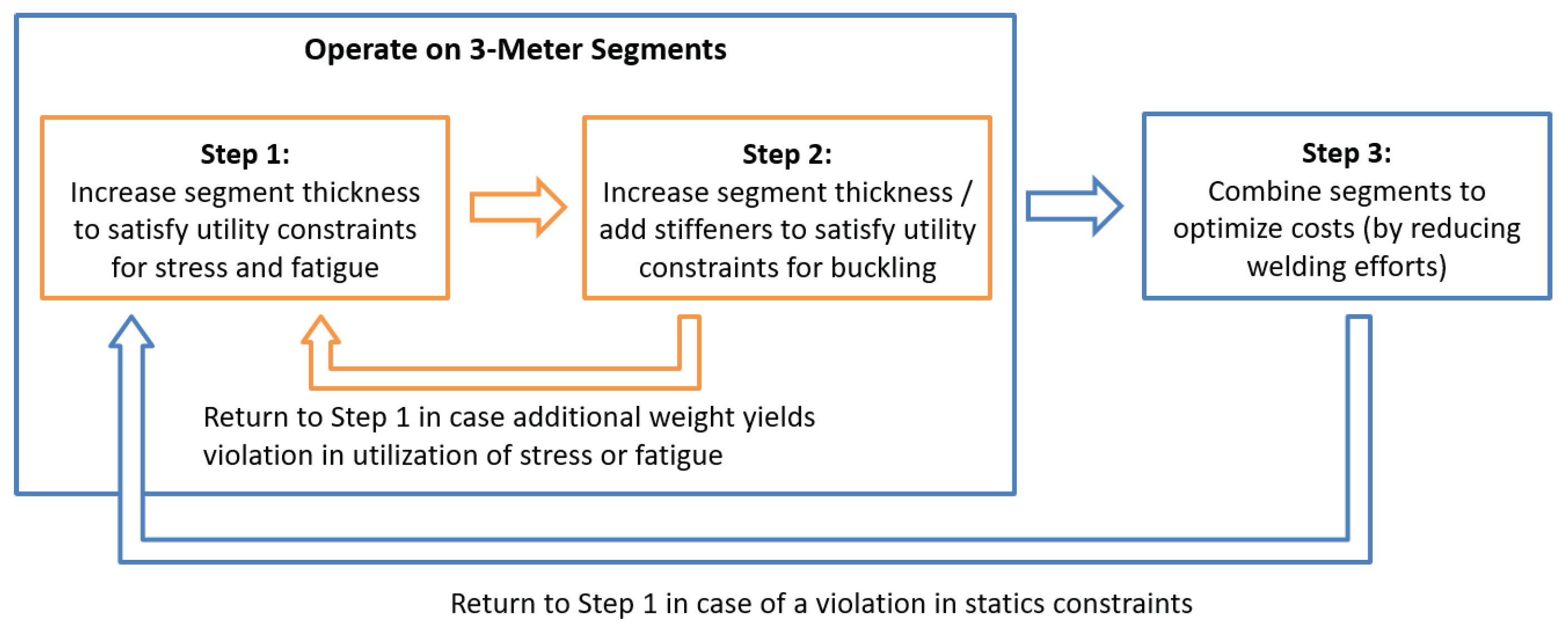

5.2.1. Heuristic Optimization Approach

(1). Fulfilling utilization of stress and fatigue (constraints in Equations (5) and (6))

(2). Fulfilling utilization of buckling (constraint in Equation (7))

- (a) Taking constraints of Equations (11) and (14) into account: Considering the boom configuration output of Step 1, if there are any violations of the buckling utilization, or of the non-increasing plate thicknesses or numbers of stiffeners, the boom configuration is updated as follows. For each segment (i.e., starting from the segment closest to the head end), it is verified whether the thickness and/or the number of stiffeners of bottom, side or top is higher than the previous segment i. If so, the respective thickness and/or the number of stiffeners of segment is set to that value of segment i. If any changes were made, the updated configuration is stored as the first suggestion for the next boom configuration to be evaluated. Then, all possible combinations of more stable segments for the bottom and side, respectively, are generated, and if a generated version is cheaper than the currently defined next configuration, the latter is replaced with the cheaper one. This is done to trade off segment thickness against the number of stiffeners with regard to costs. Once the update has been made for each segment at the bottom, side, and top, the boom configuration is evaluated with the structural analysis tool. This procedure is repeated until all buckling constraints as well as the constraints in Equations (11) and (14) are fulfilled. Afterward, the utilizations of stress and fatigue are checked again. If there are any violations, the procedure returns to Step 1; otherwise, it continues with Step 2b. As in Step 1, the simultaneous changes in this step may cause the global optimum to be missed; however, they are required to avoid run-time explosions.

- (b) Taking constraints of Equation (15) into account: Recall that, thus far, due to the independent consideration of all segments, each segment could have a different stiffener type. This is now corrected to fulfill the constraints requiring the same type of stiffeners for all bottom segments and for all side segments. First, the currently used types on the bottom and side, respectively, are extracted from the segment configurations. Restricting the stiffener types to the ones already used, all more stable configurations are generated (one at a time for bottom and side, respectively). For each of these boom configurations, the utilizations of stress, fatigue, and buckling are calculated and, if all statics constraints are fulfilled, the costs are calculated. The cheapest of these configurations is used. If none of them fulfills all statics constraints, the procedure returns to Step 1; otherwise, it continues with Step 3.
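Two building blocks of Step 2 can be sketched in Python: the monotonicity repair of Step 2a and the "cheapest configuration that fulfills all statics constraints" selection of Step 2b. This is a simplified illustration, not the authors' implementation. It assumes that segments are indexed from the pivot (index 0) to the head end, that values must not increase towards the head end, and that the repair only ever raises a value (i.e., makes the segment more stable). The cost-based trade-off between thickness and number of stiffeners is omitted, and `is_feasible` and `cost` stand in for the structural analysis tool and the cost function.

```python
def enforce_non_increasing(values):
    """Repair thicknesses or stiffener counts so that they do not increase from the
    pivot (index 0) towards the head end (last index).

    Traverses from the head end; a value is only ever raised, never lowered.
    """
    repaired = list(values)
    for i in range(len(repaired) - 1, 0, -1):
        if repaired[i] > repaired[i - 1]:
            repaired[i - 1] = repaired[i]
    return repaired


def cheapest_feasible(candidates, is_feasible, cost):
    """Cheapest candidate that satisfies all constraints, or None.

    In Step 2b, None corresponds to returning to Step 1.
    """
    feasible = [c for c in candidates if is_feasible(c)]
    return min(feasible, key=cost) if feasible else None
```

For example, `enforce_non_increasing([10, 8, 12, 6])` raises the first two segments to 12, giving `[12, 12, 12, 6]`.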

(3). Optimizing for costs

5.2.2. Metaheuristic Optimization Approach

- Convert the constrained optimization problem into an unconstrained one.

- Overcome run time issues due to expensive evaluations of the structural constraints by using surrogate-assisted optimization.

Unconstrained Optimization Problem

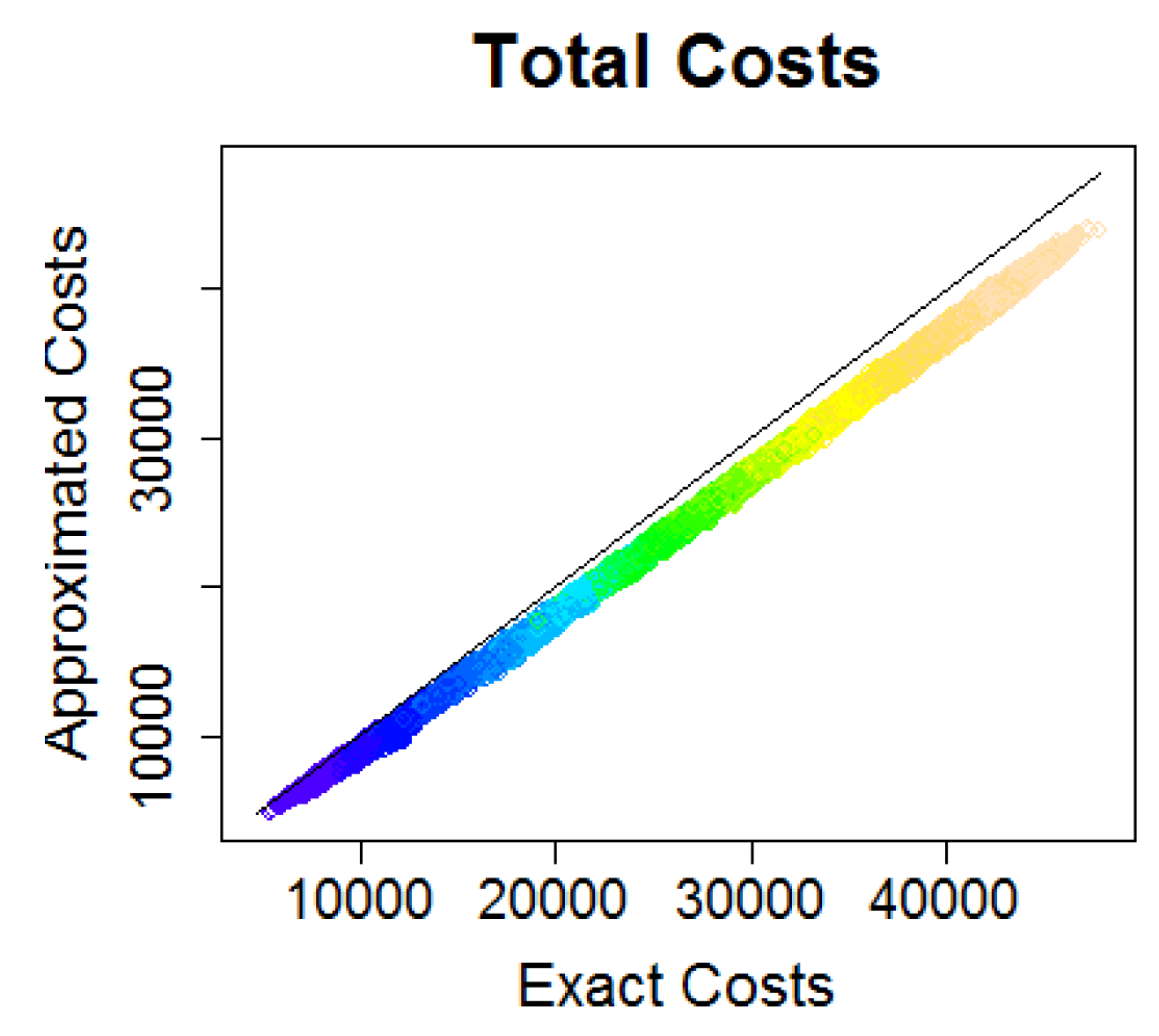
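A common way to convert a constrained problem into an unconstrained one is a static penalty: the objective is increased by a term that grows with the constraint violation. The following is a minimal Python sketch under two assumptions: the structural constraints are expressed as utilizations that must not exceed 1 (consistent with the utilization values reported for the optimized designs), and a quadratic penalty with a hypothetical weight is used. The penalty form actually used in the study is not given in this text, and the single-variable toy model is purely illustrative.

```python
def penalized_objective(cost, utilizations, x, weight=1e4):
    """cost(x) plus a quadratic penalty on every utilization above 1.

    utilizations(x) returns, e.g., the stress, fatigue and buckling utilizations;
    a design is assumed feasible when all of them are <= 1.
    """
    violation = sum(max(0.0, u - 1.0) ** 2 for u in utilizations(x))
    return cost(x) + weight * violation


# Hypothetical toy model: cost grows with plate thickness t, utilization falls with it.
def toy_cost(t):
    return t


def toy_utilizations(t):
    return [10.0 / t]


if __name__ == "__main__":
    print(penalized_objective(toy_cost, toy_utilizations, 12.0))  # feasible: 12.0
    print(penalized_objective(toy_cost, toy_utilizations, 8.0))   # 8 + 1e4 * 0.25**2 = 633.0
```

A large weight makes infeasible designs unattractive without forbidding them outright, so the optimizer can still traverse slightly infeasible regions.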

Run-Time Reduction By Surrogate-Assisted Optimization

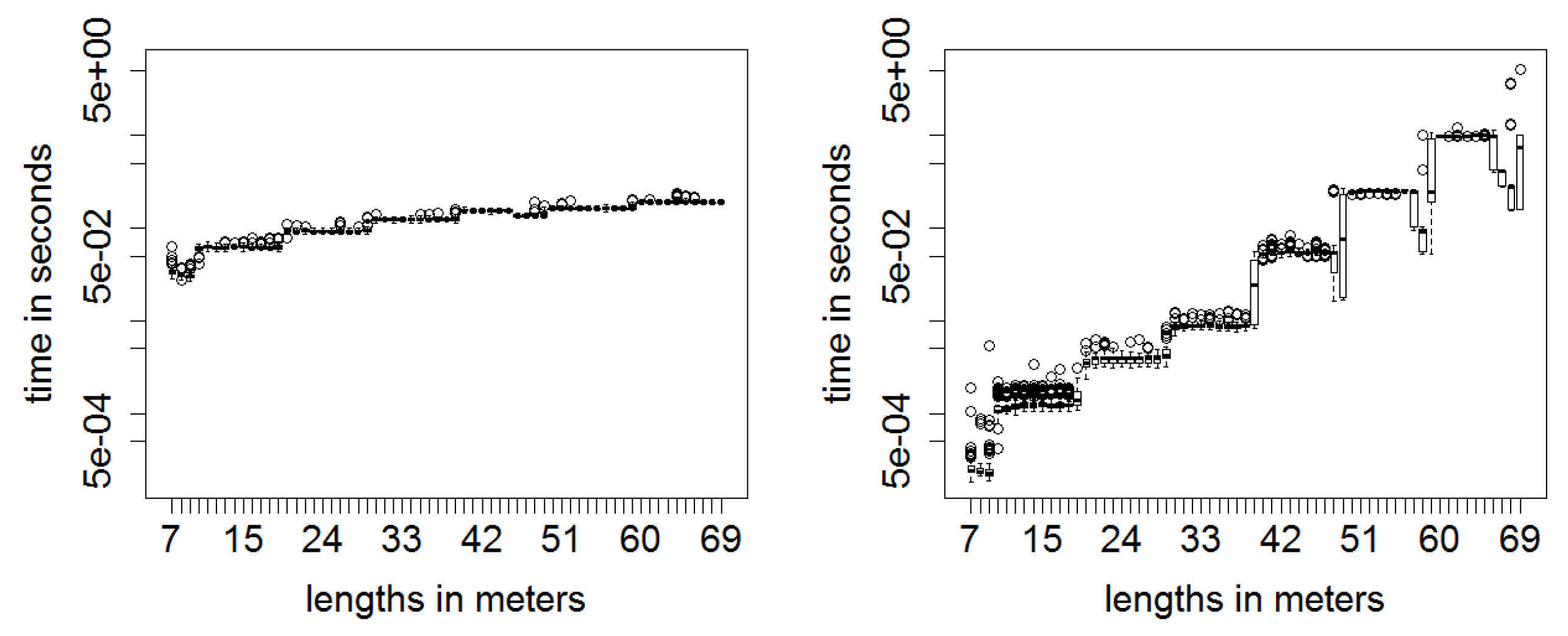
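Surrogate-assisted optimization replaces most calls of the expensive structural analysis with a cheap model trained on the designs evaluated so far. The idea can be sketched as an elitist evolution strategy that ranks its offspring on the surrogate and evaluates only the most promising one with the expensive function. This is a minimal Python sketch, not the study's setup: a k-nearest-neighbour average stands in for whatever regression model is used (the reference list includes random forests), and all parameter values are hypothetical.

```python
import random


def knn_predict(archive, x, k=3):
    """Surrogate: mean objective value of the k archived designs closest to x."""
    nearest = sorted(archive, key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], x)))[:k]
    return sum(f for _, f in nearest) / len(nearest)


def surrogate_assisted_es(expensive_f, x0, sigma=0.5, n_init=20, iterations=30, offspring=10, seed=1):
    """Elitist ES with surrogate pre-screening; returns (best_x, best_f, expensive_calls)."""
    rng = random.Random(seed)
    # Initial design: random samples around x0, all evaluated with the expensive model.
    archive = []
    for _ in range(n_init):
        x = [xi + rng.gauss(0.0, sigma) for xi in x0]
        archive.append((x, expensive_f(x)))
    best_x, best_f = min(archive, key=lambda item: item[1])
    calls = n_init
    for _ in range(iterations):
        candidates = [[xi + rng.gauss(0.0, sigma) for xi in best_x] for _ in range(offspring)]
        # Rank the offspring on the cheap surrogate and evaluate only the best one.
        chosen = min(candidates, key=lambda c: knn_predict(archive, c))
        f = expensive_f(chosen)
        calls += 1
        archive.append((chosen, f))  # the surrogate improves as the archive grows
        if f < best_f:
            best_x, best_f = chosen, f
    return best_x, best_f, calls


if __name__ == "__main__":
    def sphere(x):  # cheap stand-in for the structural analysis tool
        return sum(xi * xi for xi in x)

    print(surrogate_assisted_es(sphere, [2.0, 2.0]))
```

The number of expensive calls is fixed to `n_init + iterations`, whereas `offspring` only increases the number of cheap surrogate predictions.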

5.3. Results

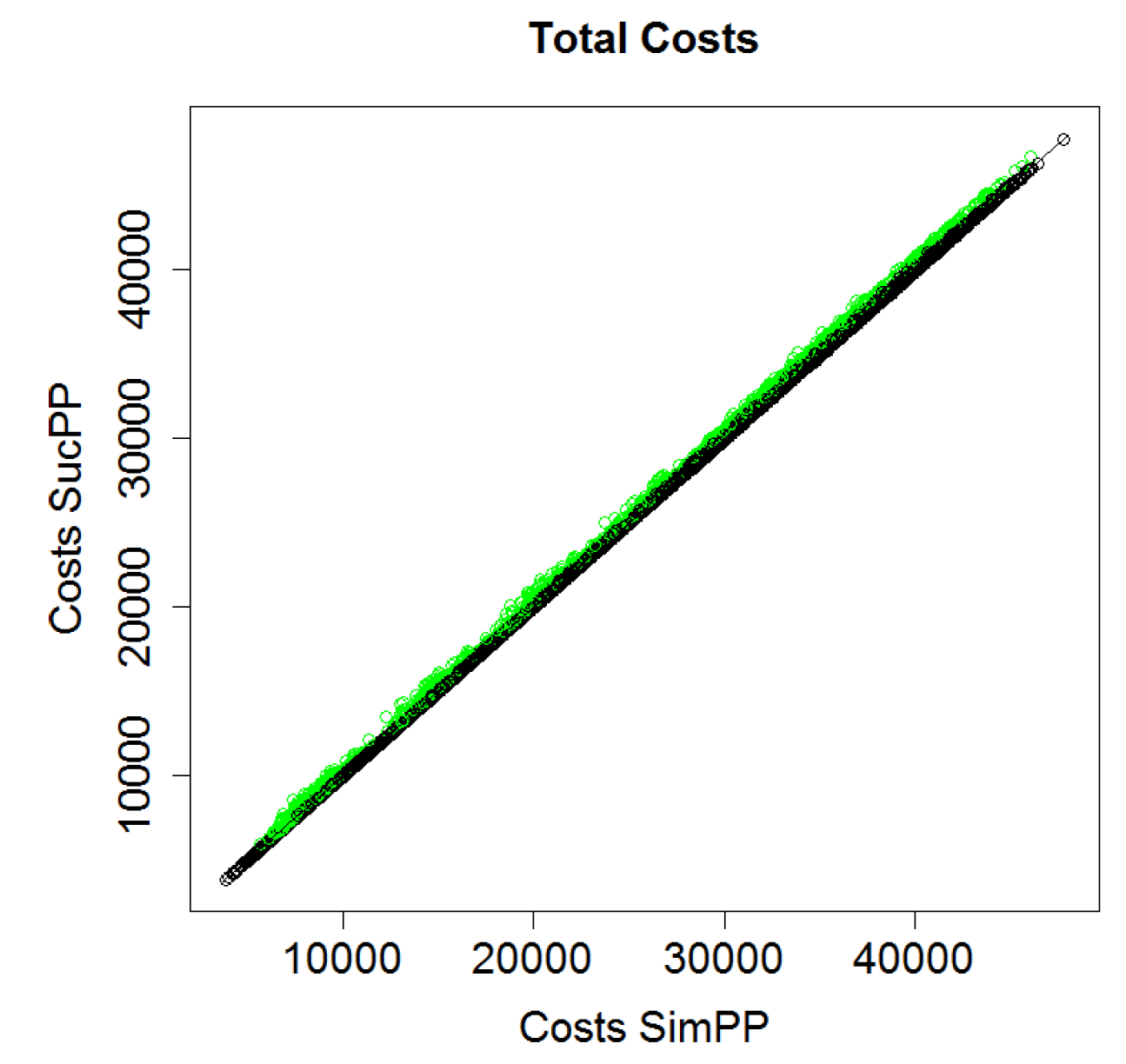

5.3.1. Cost Function Comparison

5.3.2. Optimization Comparison

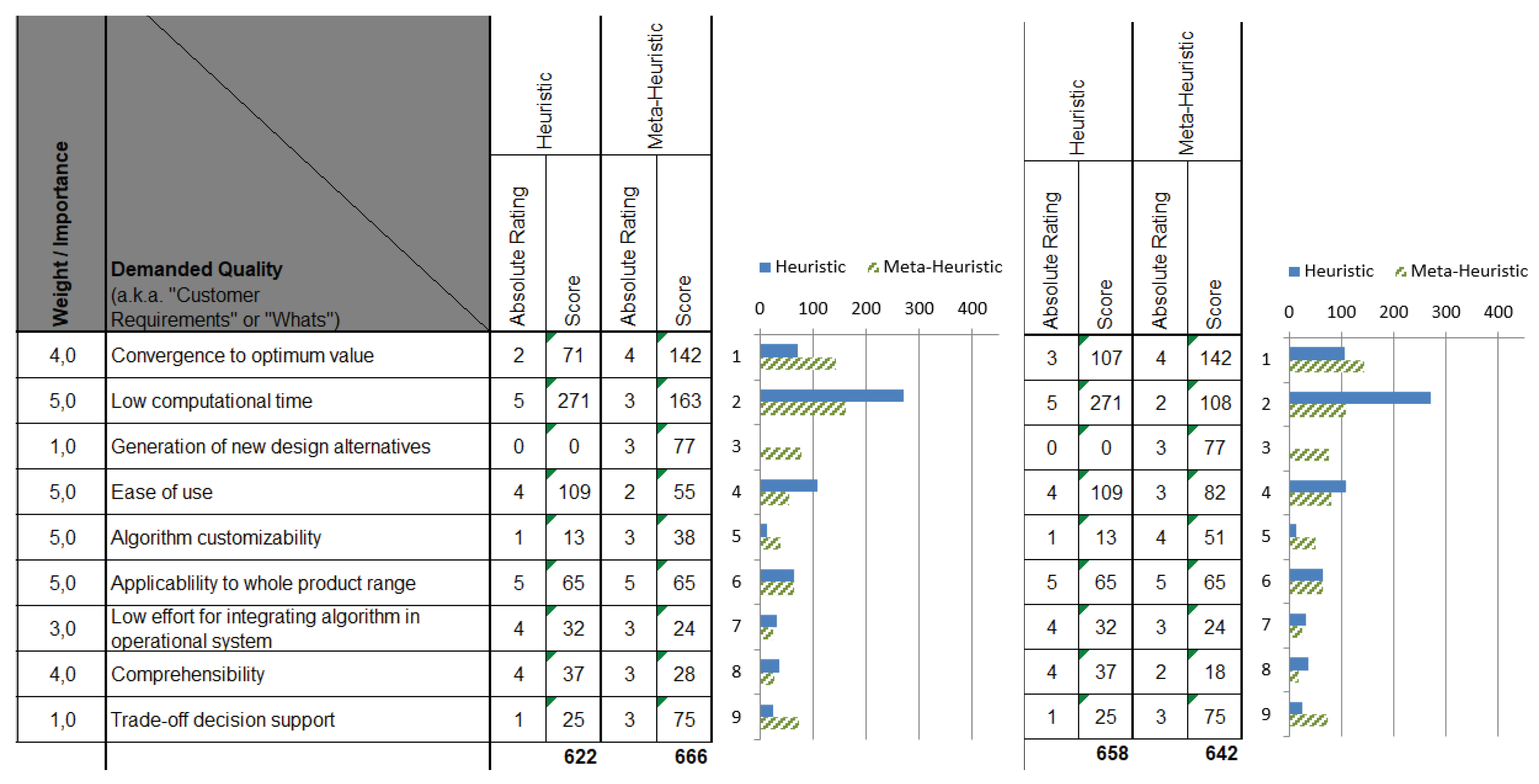

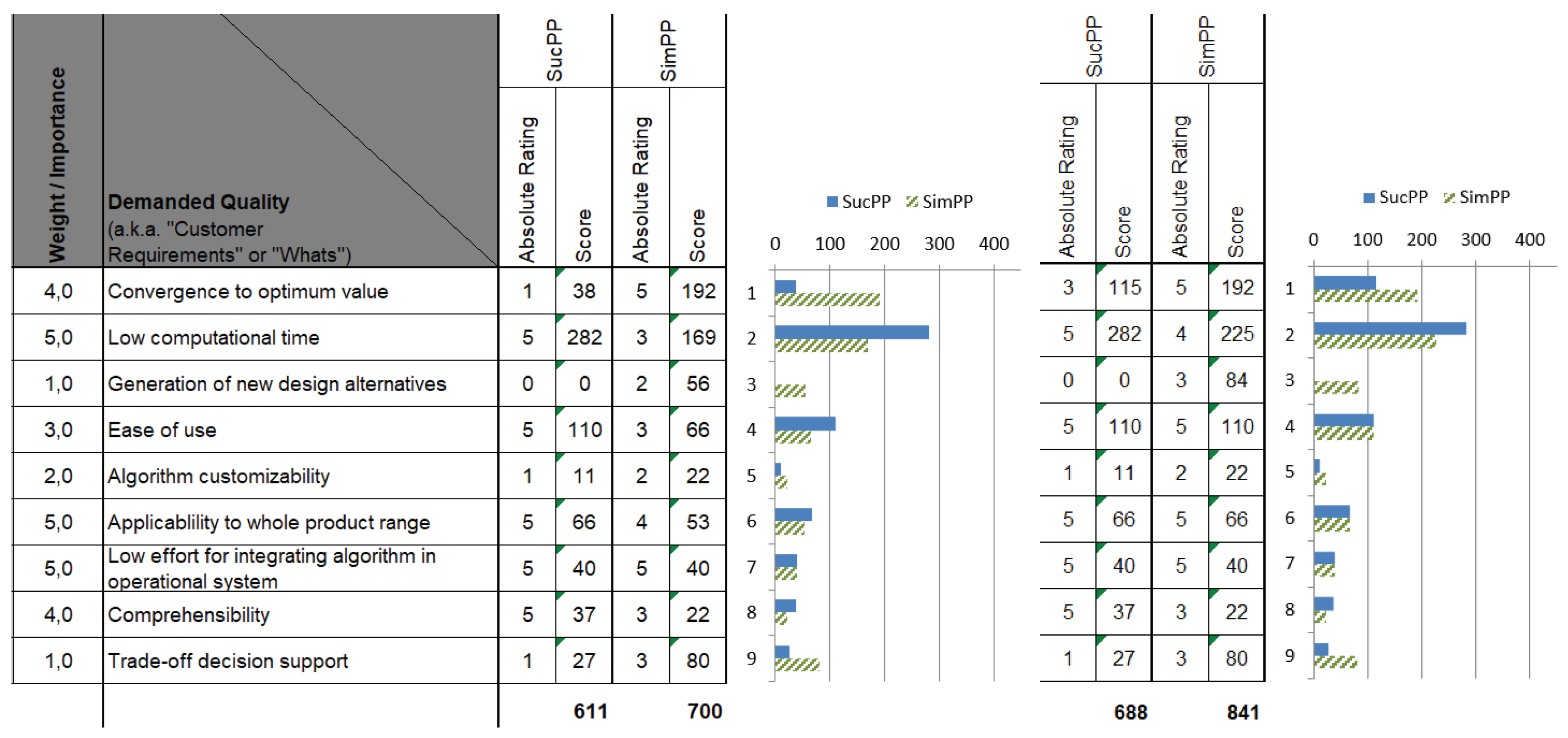

5.3.3. Decision Matrix for Boom Configuration Optimization

6. Plate Partitioning Optimization

6.1. Problem Definition

6.2. Optimization Approaches

6.2.1. Successive Plate Partitioning

- Determine the lengths of the top plates: Since no other lengths are set yet and the bulkheads are not welded to the top plates, the length of each top plate is chosen as long as possible, i.e., is set to , or, in the case of the last plate, is determined such that the sum of all plates is equal to , the length of the middle section.

- Determine the lengths of the bottom plates: The lengths of the bottom plates are restricted by the positions of the bulkheads. Since the lengths of the side plates are not determined yet, these constraints can be neglected at this point. Thus, the length of each bottom plate is determined to be as long as possible, yet avoiding conflicts with the bulkheads. In particular, if the gap between the welding seam of two bottom plates and the welding seam of a bottom plate and a bulkhead would be smaller than , the bottom plate is shortened to an appropriate length. As for the top plates, the last bottom plate is assigned the appropriate length to reach .

- Determine the lengths of the side plates: All previously determined parts have to be taken into account, i.e., the length of each side plate is restricted by the lengths of the top and bottom plates as well as by the positions of the bulkheads. As for the bottom plates, if any two welding seams are too close to each other, the side plate is shortened to an appropriate length. As above, the length of the last side plate is chosen to reach .
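The three steps above can be sketched as one greedy routine applied three times with a growing set of seam positions to avoid. This is a minimal Python sketch; the maximum plate length, the minimum seam gap, and the bulkhead positions are hypothetical inputs (their symbols are not given in this text), and the shortening rule (move the seam to exactly the minimum gap before the conflicting seam) is an assumption. As in the description, the last plate simply receives the remaining length, which can yield a very short plate, as in the 48,916 row of the plate-length table.

```python
from itertools import accumulate


def greedy_lengths(section_length, max_length, forbidden, min_gap):
    """Plates as long as possible; a seam closer than min_gap to any forbidden
    seam position is moved towards the pivot until the gap is respected."""
    lengths, pos = [], 0.0
    while section_length - pos > max_length:
        end = pos + max_length
        conflict = next((f for f in forbidden if abs(end - f) < min_gap), None)
        while conflict is not None:
            end = conflict - min_gap  # shorten the plate
            if end <= pos:
                raise ValueError("no admissible seam position")
            conflict = next((f for f in forbidden if abs(end - f) < min_gap), None)
        lengths.append(end - pos)
        pos = end
    lengths.append(section_length - pos)  # last plate fills the section
    return lengths


def seams(lengths):
    """Cumulative seam positions between consecutive plates."""
    return list(accumulate(lengths))[:-1]


def successive_partitioning(section_length, max_length, bulkheads, min_gap):
    # Top plates: bulkheads are not welded to them, so nothing to avoid.
    top = greedy_lengths(section_length, max_length, [], min_gap)
    # Bottom plates: avoid the bulkhead seams only.
    bottom = greedy_lengths(section_length, max_length, bulkheads, min_gap)
    # Side plates: avoid top, bottom and bulkhead seams.
    side = greedy_lengths(
        section_length, max_length, seams(top) + seams(bottom) + list(bulkheads), min_gap
    )
    return top, bottom, side
```

For a 25 m section with 10 m plates, a 1 m gap, and bulkheads at 10.5 m and 20 m, this yields top plates `[10, 10, 5]`, bottom plates `[9.5, 9.5, 6]`, and side plates `[8.5, 9.5, 7]`.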

6.2.2. Simultaneous Plate Partitioning

The Decision Variables

The Objective Function

The Constraints

- Minimum gap between the welding seams of plates and bulkheads (corresponding to Equation (21)): As mentioned in Section 4, the bulkheads are positioned at equal distances, starting from the pivot section. To keep the optimization problem linear, each LP can consider only one bulkhead position for each of the first to second-last bottom plates. Thus, a series of LPs is considered, differing in the selection of the bulkheads, and the best solution among them is chosen. As an example, assume that, for the current formulation, the third and seventh bulkheads are selected. The constraints are then set up such that the first and second bottom plates end between the third and fourth, and between the seventh and eighth bulkheads, respectively:

  The formulation for the welding seams between the side plates and the bulkheads is similar.

- Minimum gap between welding seams (corresponding to Equation (22)): Since all plates have the same length restrictions, the constraints on the welding seams between the bottom and side plates can be restricted such that the seam between the first and second bottom plates and the seam between the first and second side plates differ by at least . The same holds for the seams between the second and third bottom and side plates. Thus, we define the following constraints:

  A similar formulation is made for the side and top plates.
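The "series of LPs" can be illustrated by enumerating the bulkhead selections and deriving, for each selection, the interval in which every inner bottom-plate seam must lie. This is a minimal Python sketch under stated assumptions: bulkhead positions, minimum gap, and maximum plate length are hypothetical inputs; indices are 0-based, so the example in the text (third and seventh bulkheads) corresponds to `selection=(2, 6)`. Instead of calling an LP solver, `feasible_seams` only checks whether the interval and maximum-length constraints can be met. It does not reproduce the study's objective function or the offset constraints between bottom and side seams.

```python
from itertools import combinations


def seam_bounds(bulkheads, selection, min_gap):
    """Interval for each selected seam: between bulkhead k and k + 1 (0-based),
    keeping min_gap to both of them."""
    return [(bulkheads[k] + min_gap, bulkheads[k + 1] - min_gap) for k in selection]


def candidate_selections(n_bulkheads, n_inner_seams):
    """All strictly increasing bulkhead choices, one per inner seam (one LP each)."""
    return list(combinations(range(n_bulkheads - 1), n_inner_seams))


def feasible_seams(bounds, max_length, section_length):
    """Place each seam as far from the pivot as allowed; return None if infeasible.

    Stand-in for one LP: it checks feasibility only and optimizes no objective.
    """
    seams, prev = [], 0.0
    for lo, hi in bounds:
        s = min(hi, prev + max_length)
        if s < lo:
            return None
        seams.append(s)
        prev = s
    return seams if section_length - prev <= max_length else None
```

Placing each seam as far from the pivot as its interval and the maximum plate length allow never restricts the following plates more than any other choice would, so this greedy check decides feasibility for a given selection.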

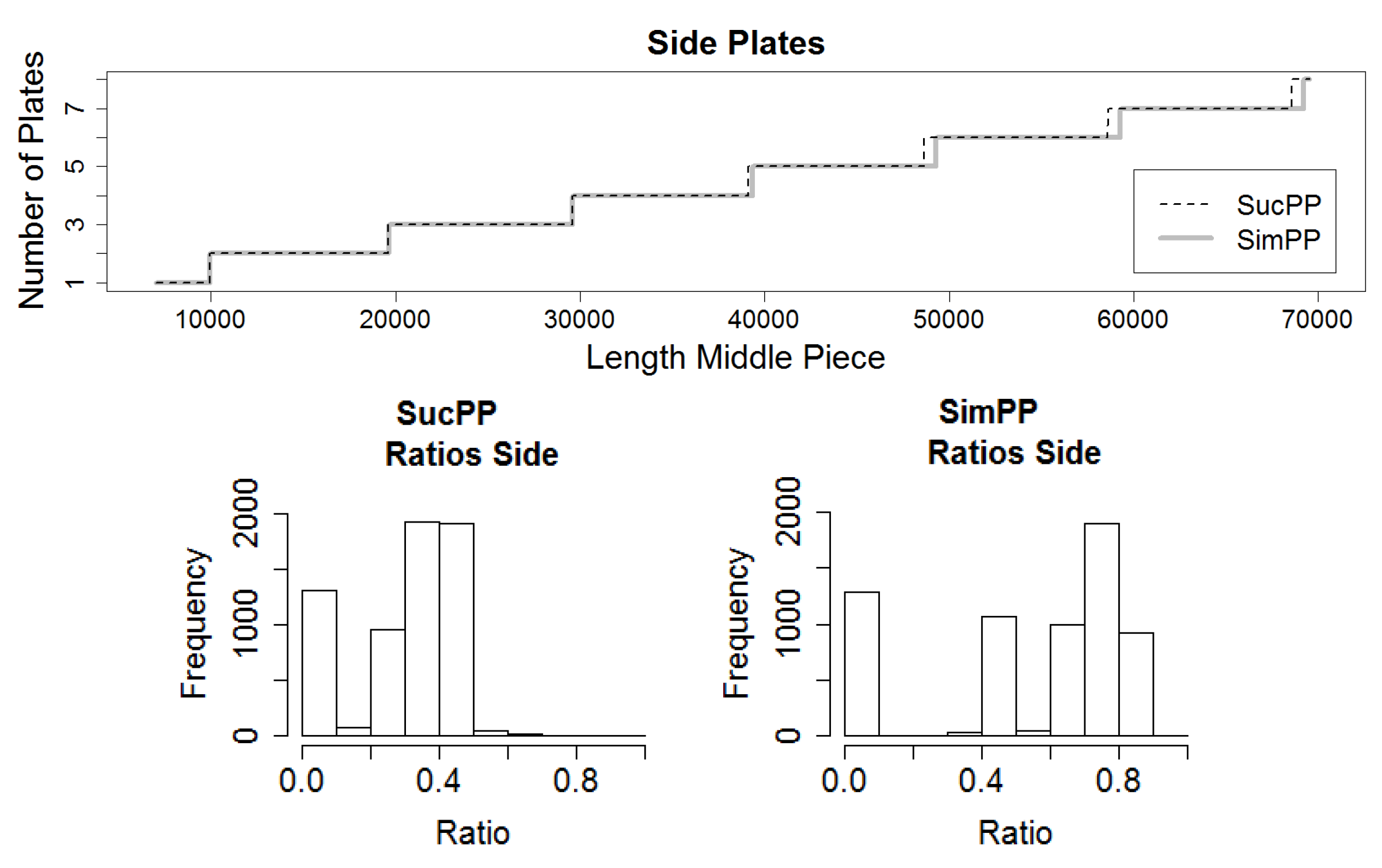

6.3. Results

Decision Matrix for Plate Partitioning

7. Discussion

7.1. Algorithm Selection

7.2. Optimization Case Studies

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Appendix A

|  | Expected Solution Quality | Any-Time Performance | Parameter Tuning | Level of Complexity |
|---|---|---|---|---|
| Computational resource need | SPC: finding better solutions requires resources |  | SPC: less parameter tuning requires less resources | NC: increasing complexity partly aims at finding solutions with less resources (e.g., use of surrogates) |
| Expected solution quality |  | PC: a good any-time performance improves the solution quality continuously |  | SPC: increasing complexity aims at finding improved solutions |
| Parameter tuning |  |  |  | PC: increased complexity requires more parameters to be selected |

|  | Reproducibility | Design Space Exploration | Robustness | Scalability |
|---|---|---|---|---|
| Computational resource need |  | SPC: exploring more solutions requires resources | PC: assessing robustness requires resources |  |
| Expected solution quality |  | PC: exploration is required to obtain different high quality solutions | NC: maximizing solution qualities can yield highly sensitive solutions |  |
| Parameter tuning |  |  |  | SNC: a large required effort for parameter selection, e.g., by tuning methods, decreases scalability |
| Level of complexity | NC: increase in complexity, e.g., use of stochastic operators, can reduce reproducibility | PC: increase in complexity, e.g., use of stochastic operators, can increase the design space exploration |  | PC: increase in complexity, e.g., surrogates, parallel approaches, aims at achieving scalability |
| Reproducibility |  | NC: high exploration has a high chance of producing different high quality solutions, i.e., a low reproducibility |  |  |
| Design space exploration |  |  |  | NC: high exploration can decrease the scalability (i.e., producing many low quality solutions in high dimensions or requiring an exponential increase in computational resources to obtain high quality solutions) |

|  | Computational Resource Need | Expected Solution Quality | Any-Time Performance | Parameter Tuning |
|---|---|---|---|---|
| Convergence to optimum value | SR: more resources are required to find optimum value with high accuracy | MR: some, but not necessarily all, high quality solutions are located close to the optimum value |  |  |
| Low computational time | SR: computational time depends on the used computational resources | MR: low computational time requirements can prevent algorithms from obtaining high quality solutions | MR: any-time algorithms can provide improved solutions, compared to the initial solution, in short time | MR: parameter tuning effort requires time |
| Generation of new design alternatives | MR: resources are required to create and evaluate (many) alternative designs | WR: new design alternatives can have an improved solution quality |  |  |
| Ease of use |  |  |  | SR: parameter selection involves additional methods or special knowledge |
| Algorithm customization |  |  |  | MR: expert knowledge/tuning effort is required to apply the algorithm to a different problem or to change part of the algorithm |
| Applicability to whole product range |  |  |  | WR: parameters may have to be changed for each product type |
| Low effort for integrating algorithm in operational system | WR: computationally demanding algorithms require resource allocation |  |  |  |
| Comprehensibility |  |  |  | MR: the effect of the choice of parameter values on the obtained solution quality is not always clear |
| Trade-off decision support | SR: investigations of several characteristics require more resources |  |  | WR: different characteristics may require different parameter choices (and respective tuning) |

|  | Level of Complexity | Reproducibility | Design Space Exploration | Robustness | Scalability |
|---|---|---|---|---|---|
| Convergence to optimum value | MR: an increase in complexity can aim at avoiding local extreme values |  | MR: exploration is required to move from initial point towards optimum value | WR: compromise optimality for stability |  |
| Low computational time | MR: some complexity (e.g., surrogates, use of parallel approaches) aims at finding solutions faster |  | SR: exploring many solutions increases run time | WR: checking for robustness requires time |  |
| Generation of new design alternatives | WR: complexity in the solution creation process aims at creating diverse solutions (e.g., mutation and recombination operators) |  | SR: exploration of the design space is required to find alternative designs | WR: having different high quality solutions increases the probability of obtaining a high quality robust solution |  |
| Ease of use | MR: increased complexity requires more effort in setting up the algorithms (e.g., more parameters have to be set) |  |  |  |  |
| Algorithm customization | MR: expert knowledge is required to decide how the algorithm's complexity transfers to different problems |  |  |  |  |
| Applicability to whole product range |  |  |  |  | SR: only scalable algorithms can be applied across the product range |
| Low effort for integrating algorithm in operational system | MR: some types of complexity, e.g., parallel resources, require additional resources, tools, and software |  |  |  |  |
| Comprehensibility | WR: some complexity, e.g., using approximate values, can hinder comprehensibility | WR: a low reproducibility hinders comprehensibility |  |  |  |

References

- Liebherr-Werk Nenzing GmbH. Available online: http://www.liebherr.com/en-GB/35267.wfw (accessed on 6 March 2017).

- Frank, G.; Entner, D.; Prante, T.; Khachatouri, V.; Schwarz, M. Towards a Generic Framework of Engineering Design Automation for Creating Complex CAD Models. Int. J. Adv. Syst. Meas. 2014, 7, 179–192. [Google Scholar]

- Fleck, P.; Entner, D.; Münzer, C.; Kommenda, M.; Prante, T.; Affenzeller, M.; Schwarz, M.; Hächl, M. A Box-Type Boom Optimization Use Case with Surrogate Modeling towards an Experimental Environment for Algorithm Comparison. In Proceedings of the EUROGEN 2017, Madrid, Spain, 13–15 September 2017. [Google Scholar]

- Hansen, N.; Auger, A.; Mersmann, O.; Tušar, T.; Brockhoff, D. COCO: A Platform for Comparing Continuous Optimizers in a Black-Box Setting. arXiv 2016, arXiv:1603.08785. [Google Scholar]

- Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real Parameter Optimization; Technical Report; Nanyang Technological University: Singapore, 2016. [Google Scholar]

- Ho, Y.C.; Pepyne, D.L. Simple Explanation of the No-Free-Lunch Theorem and Its Implications. J. Optim. Theory Appl. 2002, 115, 549–570. [Google Scholar] [CrossRef]

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef]

- Akao, Y. New product development and quality assurance: System of QFD, standardisation and quality control. Jpn. Stand. Assoc. 1972, 25, 9–14. [Google Scholar]

- Sullivan, L.P. Quality Function Deployment—A system to assure that customer needs drive the product design and production process. Qual. Prog. 1986, 19, 39–50. [Google Scholar]

- Chan, L.K.; Wu, M.L. Quality function deployment: A literature review. Eur. J. Oper. Res. 2002, 143, 463–497. [Google Scholar] [CrossRef]

- Bouchereau, V.; Rowlands, H. Methods and techniques to help quality function deployment (QFD). Benchmark. Int. J. 2000, 7, 8–20. [Google Scholar] [CrossRef]

- Hauser, J. The House of Quality. Harv. Bus. Rev. 1988, 34, 61–70. [Google Scholar]

- Akao, Y. Quality Function Deployment: Integrating Customer Requirements into Product Design; CRC Press: Boca Raton, FL, USA, 2004. [Google Scholar]

- Rice, J.R. The Algorithm Selection Problem. In Advances in Computers; Rubinoff, M., Yovits, M.C., Eds.; Elsevier: Amsterdam, The Netherlands, 1976; Volume 15, pp. 65–118. [Google Scholar]

- Roy, R.; Hinduja, S.; Teti, R. Recent advances in engineering design optimisation: Challenges and future trends. CIRP Ann.-Manuf. Technol. 2008, 57, 697–715. [Google Scholar] [CrossRef]

- Affenzeller, M.; Winkler, S.; Wagner, S. Evolutionary System Identification: New Algorithmic Concepts and Applications. In Advances in Evolutionary Algorithms; I-Tech Education and Publishing: Vienna, Austria, 2008; pp. 29–48. [Google Scholar]

- Dantzig, G.B. Linear Programming and Extensions, 11th ed.; Princeton University Press: Princeton, NJ, USA, 1998. [Google Scholar]

- Mehrotra, S. On the Implementation of a Primal-Dual Interior Point Method. SIAM J. Optim. 1992, 2, 575–601. [Google Scholar] [CrossRef]

- Bertsekas, D. Nonlinear Programming; Athena Scientific: Nashua, NH, USA, 1999. [Google Scholar]

- Fletcher, R. Practical Methods of Optimization, 2nd ed.; John Wiley & Sons: New York, NY, USA, 1987. [Google Scholar]

- Conn, A.R.; Scheinberg, K.; Vicente, L.N. Introduction to Derivative-Free Optimization; MPS-SIAM Series on Optimization; SIAM: Philadelphia, PA, USA, 2009. [Google Scholar]

- Gendreau, M.; Potvin, J. Handbook of Metaheuristics; International Series in Operations Research & Management Science; Springer International Publishing: New York, NY, USA, 2018. [Google Scholar]

- Talbi, E.G. Metaheuristics: From Design to Implementation; John Wiley & Sons: New York, NY, USA, 2009. [Google Scholar]

- Muñoz, M.A.; Sun, Y.; Kirley, M.; Halgamuge, S.K. Algorithm selection for black-box continuous optimization problems: A survey on methods and challenges. Inf. Sci. 2015, 317, 224–245. [Google Scholar] [CrossRef]

- Auger, A.; Doerr, B. Theory of Randomized Search Heuristics: Foundations and Recent Developments; Series on Theoretical Computer Science; World Scientific: Singapore, 2011. [Google Scholar]

- Borenstein, Y.; Moraglio, A. Theory and Principled Methods for the Design of Metaheuristics; Springer: Berlin/Heidelberg, Germany, 2014. [Google Scholar]

- Finck, S.; Beyer, H.G.; Melkozerov, A. Noisy Optimization: A Theoretical Strategy Comparison of ES, EGS, SPSA & IF on the Noisy Sphere. In Proceedings of the 13th Annual Conference on Genetic And Evolutionary Computation, Dublin, Ireland, 12–16 July 2011; pp. 813–820. [Google Scholar] [CrossRef]

- Beham, A.; Wagner, S.; Affenzeller, M. Algorithm Selection on Generalized Quadratic Assignment Problem Landscapes. In Proceedings of the Genetic and Evolutionary Computation Conference, Kyoto, Japan, 15–19 July 2018; pp. 253–260. [Google Scholar] [CrossRef]

- Jamil, M.; Yang, X.S. A Literature Survey of Benchmark Functions For Global Optimization Problems. Int. J. Math. Model. Numer. Optim. 2013, 4. [Google Scholar] [CrossRef]

- Himmelblau, D. Applied Nonlinear Programming; McGraw-Hill: New York, NY, USA, 1972. [Google Scholar]

- Garey, M.R.; Johnson, D.S. Computers and Intractability: A Guide to the Theory of NP-Completeness; W. H. Freeman & Co.: New York, NY, USA, 1979. [Google Scholar]

- Lemonge, A.; Nacional de Computação Científica, L.; Borges, C.; Silva, F.B. Constrained Optimization Problems in Mechanical Engineering Design Using a Real-Coded Steady-State Genetic Algorithm. Mecánica Computacional 2010, 29, 9287–9303. [Google Scholar]

- Ravindran, A.; Ragsdell, K.; Reklaitis, G. Engineering Optimization: Methods and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2006. [Google Scholar]

- Koziel, S.; Yang, X. Computational Optimization, Methods and Algorithms; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2011. [Google Scholar]

- Floudas, C.; Pardalos, P.; Adjiman, C.; Esposito, W.; Gümüs, Z.; Harding, S.; Klepeis, J.; Meyer, C.; Schweiger, C. Handbook of Test Problems in Local and Global Optimization; Nonconvex Optimization and Its Applications; Springer: New York, NY, USA, 2013. [Google Scholar]

- Neumaier, A. Global Optimization Test Problems. Available online: http://www.mat.univie.ac.at/~neum/glopt/test.html#test_constr (accessed on 20 April 2018).

- Orban, D. CUTEr—A Constrained and Unconstrained Testing Environment, Revisited. Available online: http://www.cuter.rl.ac.uk/ (accessed on 20 April 2018).

- Branke, J.; Elomari, J.A. Meta-optimization for Parameter Tuning with a Flexible Computing Budget. In Proceedings of the 14th Annual Conference on Genetic and Evolutionary Computation, Philadelphia, PA, USA, 7–11 July 2012; pp. 1245–1252. [Google Scholar] [CrossRef]

- Hutter, F.; Hoos, H.H.; Leyton-Brown, K. Sequential Model-based Optimization for General Algorithm Configuration. In Proceedings of the 5th International Conference on Learning and Intelligent Optimization; Springer: Berlin, Germany, 2011; pp. 507–523. [Google Scholar] [CrossRef]

- López-Ibáñez, M.; Dubois-Lacoste, J.; Pérez Cáceres, L.; Birattari, M.; Stützle, T. The irace package: Iterated racing for automatic algorithm configuration. Oper. Res. Perspect. 2016, 3, 43–58. [Google Scholar] [CrossRef]

- Liao, T.; Molina, D.; Stützle, T. Performance evaluation of automatically tuned continuous optimizers on different benchmark sets. Appl. Soft Comput. 2015, 27, 490–503. [Google Scholar] [CrossRef]

- Frank, G. An Expert System for Design-Process Automation in a CAD Environment. In Proceedings of the Eighth International Conference on Systems (ICONS), Seville, Spain, 27 January–1 February 2013; pp. 179–184. [Google Scholar]

- Rechenberg, I. Cybernetic Solution Path of an Experimental Problem; Library Translation No. 1122; Royal Aircraft Establishment, Farnborough: Hampshire, UK, 1965. [Google Scholar]

- Schwefel, H.P. Kybernetische Evolution als Strategie der Experimentellen Forschung in der Strömungstechnik. Master’s Thesis, Technische Universität Berlin, Berlin, Germany, 1965. [Google Scholar]

- Coello, C.A.C. Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: A survey of the state of the art. Comput. Methods Appl. Mech. Eng. 2002, 191, 1245–1287. [Google Scholar] [CrossRef]

- Carson, Y.; Maria, A. Simulation Optimization: Methods And Applications. In Proceedings of the 1997 Winter Simulation Conference, Atlanta, GA, USA, 7–10 December 1997; pp. 118–126. [Google Scholar]

- Fu, M.C.; Glover, F.; April, J. Simulation optimization: A review, new developments, and applications. In Proceedings of the 37th Winter Simulation Conference, Orlando, FL, USA, 4–7 December 2005; pp. 83–95. [Google Scholar]

- Forrester, A.I.; Keane, A.J. Recent advances in surrogate-based optimization. Prog. Aerosp. Sci. 2009, 45, 50–79. [Google Scholar] [CrossRef]

- Bishop, C. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006. [Google Scholar]

- Wang, G.G.; Shan, S. Review of metamodeling techniques in support of engineering design optimization. J. Mech. Des. 2007, 129, 370–380. [Google Scholar] [CrossRef]

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22. [Google Scholar]

- Affenzeller, M.; Wagner, S.; Winkler, S.; Beham, A. Genetic Algorithms and Genetic Programming: Modern Concepts And Practical Applications; Chapman and Hall/CRC: Boca Raton, FL, USA, 2009. [Google Scholar]

- Abdolshah, M.; Moradi, M. Fuzzy Quality Function Deployment: An Analytical Literature Review. J. Ind. Eng. 2013, 2013, 682532. [Google Scholar] [CrossRef]

| Case | Obj. Function Value (Ref.) | Obj. Function Value (Heu.) | Obj. Function Value (ES) | Analysis Tool Calls (Heu.) | Analysis Tool Calls (ES) | Stress (Ref.) | Stress (Heu.) | Stress (ES) | Fatigue (Ref.) | Fatigue (Heu.) | Fatigue (ES) | Buckling (Ref.) | Buckling (Heu.) | Buckling (ES) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 28,117 | 25,428 | 22,907 | 13 | 500 + 160 | 0.57 | 0.57 | 0.61 | 0.58 | 0.58 | 0.59 | 0.99 | 0.50 | 0.55 |
| 2 | 25,184 | 17,347 | 16,163 | 6 | 500 + 153 | 0.39 | 0.43 | 0.46 | 0.51 | 0.54 | 0.55 | 0.29 | 0.51 | 0.61 |
| 3 | 19,482 | 15,451 | 14,184 | 6 | 500 + 165 | 0.39 | 0.39 | 0.47 | 0.56 | 0.56 | 0.64 | 0.32 | 0.39 | 0.54 |
| 4 | 12,749 | 7986 | 9367 | 3 | 500 + 155 | 0.25 | 0.32 | 0.30 | 0.26 | 0.32 | 0.35 | 0.16 | 0.43 | 0.45 |
| 5 | 28,355 | 23,322 | 19,050 | 8 | 500 + 150 | 0.47 | 0.53 | 0.64 | 0.59 | 0.66 | 0.77 | 0.54 | 0.43 | 0.71 |
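The relative cost reduction of each optimized design with respect to the reference design follows directly from the objective-function values in the table above. The short Python snippet below computes it and reports which of the two approaches yields the cheaper design per case; it uses only the tabulated values.

```python
# Objective-function values (costs) per case from the table: (Ref., Heu., ES)
COSTS = {
    1: (28_117, 25_428, 22_907),
    2: (25_184, 17_347, 16_163),
    3: (19_482, 15_451, 14_184),
    4: (12_749, 7_986, 9_367),
    5: (28_355, 23_322, 19_050),
}


def relative_saving(reference, optimized):
    """Cost reduction in percent relative to the reference design."""
    return 100.0 * (reference - optimized) / reference


def summarize(costs):
    """Per case: (saving of Heu. in %, saving of ES in %, cheaper approach)."""
    summary = {}
    for case, (ref, heu, es) in costs.items():
        cheaper = "Heu." if heu < es else "ES"
        summary[case] = (relative_saving(ref, heu), relative_saving(ref, es), cheaper)
    return summary


if __name__ == "__main__":
    for case, (heu, es, cheaper) in summarize(COSTS).items():
        print(f"Case {case}: Heu. {heu:.1f} %, ES {es:.1f} %, cheaper: {cheaper}")
```

For example, in Case 1 the heuristic reduces the costs by about 9.6 % and the ES by about 18.5 %, whereas in Case 4 the heuristic design is the cheaper one.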

| Length of Section | SucPP—Lengths of Side Plates | SimPP—Lengths of Side Plates |
|---|---|---|
| 26,948 | 9683 9948 7317 ( ) | 9679 9952 7317 () |
| 43,036 | 9685 9950 9535 9532 4334 () | 9684 9952 9952 9952 3496 () |
| 48,916 | 9685 9951 9536 9530 9912 302 () | 9497 9952 9952 9952 9563 () |
| 52,606 | 9685 9951 9538 9527 9952 3953 () | 9497 9952 9952 9950 9952 3302 () |
| 61,915 | 9686 9951 9538 9526 9952 9952 3310 () | 9497 9952 9952 9950 9952 9952 2660 () |
| 69,405 | 9686 9951 9540 9524 9952 9952 9197 1603 () | 9497 9952 9952 9950 9952 9952 9447 703 () |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Entner, D.; Fleck, P.; Vosgien, T.; Münzer, C.; Finck, S.; Prante, T.; Schwarz, M. A Systematic Approach for the Selection of Optimization Algorithms including End-User Requirements Applied to Box-Type Boom Crane Design. Appl. Syst. Innov. 2019, 2, 20. https://doi.org/10.3390/asi2030020

Entner D, Fleck P, Vosgien T, Münzer C, Finck S, Prante T, Schwarz M. A Systematic Approach for the Selection of Optimization Algorithms including End-User Requirements Applied to Box-Type Boom Crane Design. Applied System Innovation. 2019; 2(3):20. https://doi.org/10.3390/asi2030020

Chicago/Turabian Style: Entner, Doris, Philipp Fleck, Thomas Vosgien, Clemens Münzer, Steffen Finck, Thorsten Prante, and Martin Schwarz. 2019. "A Systematic Approach for the Selection of Optimization Algorithms including End-User Requirements Applied to Box-Type Boom Crane Design" Applied System Innovation 2, no. 3: 20. https://doi.org/10.3390/asi2030020

APA Style: Entner, D., Fleck, P., Vosgien, T., Münzer, C., Finck, S., Prante, T., & Schwarz, M. (2019). A Systematic Approach for the Selection of Optimization Algorithms including End-User Requirements Applied to Box-Type Boom Crane Design. Applied System Innovation, 2(3), 20. https://doi.org/10.3390/asi2030020