1. Introduction

As the world navigates a transformative artificial intelligence (AI) revolution, the full extent of its impact remains uncertain, though significant advancements are steadily introducing innovative applications across various industries, including medicine. As demands on healthcare systems continue to increase [1], it is imperative to integrate emerging AI tools safely to enhance workflow efficiency, elevate quality of care, and improve accessibility to services.

Generative AI, exemplified by tools like ChatGPT, has become widely influential due to its accessibility and versatility [2]. Despite significant developments, the integration of AI into clinical practice remains fragmented. Current literature supports the implementation of AI in areas with standardised inputs, such as diagnostic radiology, where numerous studies demonstrate its efficacy in aiding diagnosis [3,4]. However, evidence surrounding the use of generative AI in clinical settings remains limited. It is often confined to controlled environments where the quality of outputs is assessed, such as comparative analyses of ChatGPT responses against urology consultants in answering clinical case-based questions [5,6,7]. With the exponential growth of large language models (LLMs), research into various other uses such as patient education, clinical decision-making, and clinical documentation is emerging [8,9]. Examples include the use of ChatGPT as an educational tool for simulated prostate cancer patients, offering high-quality responses validated by established metrics and expert urologists in both explaining the disease and detailing potential surgical complications [10,11].

While the potential value of these tools is becoming evident through closed testing and pilot studies, there is currently no literature detailing interactions between patients and generative AI tools in live clinical settings. The hesitancy to initiate such studies is understandable, given the precautions required when employing generative AI in healthcare [12]. Several valid concerns persist, including the security of confidential data, the reliability of information, and issues such as AI hallucinations and biases. Ongoing research into mitigating these risks and exploring feasibility in healthcare is beginning to address gaps in understanding how these tools perform in real-world settings, and our study contributes by evaluating generative AI’s role in patient education. Using the common scenario of obtaining informed consent for a flexible cystoscopy to investigate haematuria, we aim to assess ChatGPT’s ability to deliver clinically appropriate, relevant, and accurate information. Unlike prior research confined to closed settings, our study examines real patient interactions with ChatGPT, offering insights into its practical utility, patient perceptions, limitations, and the complexities involved in integrating such tools into routine clinical practice.

2. Materials and Methods

A prospective feasibility study was conducted at a single institution, involving patients referred with either macroscopic or microscopic haematuria. Ethics approval was granted by the hospital’s Human Research Ethics Committee. The inclusion criteria comprised patients over 18 years of age presenting with haematuria who could understand English and were able to use a computer. Patients with language barriers or disabilities affecting comprehension were excluded. Eligibility was assessed through a telehealth screening process conducted by a research doctor.

Patients were selected based on their referral date and successful recruitment. Once recruited, patients attended an in-person clinic review, where they had access to ChatGPT-4o mini. Patients at no point disclosed their name, age, sex, or location to ChatGPT and were provided with an information booklet on data safety prior to giving written consent. The temporary chat feature, which ensures information is not saved or used to train future models, was active for each interaction. A list of questions related to their clinical presentation and necessary investigations was provided to the patients, including questions about flexible cystoscopy, its risks, and its purpose. Patients were required to ask six questions in total: what is haematuria; what are the causes of haematuria; why would I need to have a flexible cystoscopy for haematuria; what is the process involved in a flexible cystoscopy; what are the risks associated with a flexible cystoscopy; and are there any alternative treatments? Patients then had the opportunity to ask ChatGPT further questions. Upon completion, they underwent a normal consultation with a research doctor, who checked their understanding of their presentation and the procedure and obtained their consent for the procedure. At the end of the consultation, the doctor completed a clinician questionnaire (Supplementary File S3) that evaluated the accuracy and reproducibility of ChatGPT for education and surgical consent while also gathering insights into potential integration pitfalls. The study involved two research doctors to account for variations in assessing ChatGPT responses.

Basic patient parameters such as age, sex, primary language, and past medical history were collected for descriptive purposes. The ChatGPT responses from each patient were then independently assessed by three urologists using two validated instruments: the Patient Education Materials Assessment Tool (PEMAT), to assess the functionality and usability of ChatGPT, and DISCERN, to assess the quality of its written responses for patient education with a focus on treatment choices (see Supplementary Files S1 and S2). Readability was graded using the Flesch Reading Ease and Flesch–Kincaid Grade Level tests.

3. Results

A total of ten patients were recruited. One patient attended their consultation but declined to use ChatGPT due to language barriers, leaving nine participants. The median age was 47 years (range 26 to 70 years), with six female and three male participants. Several different professional backgrounds were represented, from nursing to manual labour (see Table 1).

All nine patients agreed or strongly agreed that ChatGPT provided a good overview of the details of a flexible cystoscopy. They all found ChatGPT to be informative, found the responses understandable and easy to follow, and found the programme easy to use. All participants (100%) found ChatGPT helpful in making an informed decision about their surgery, and 78% said they would use ChatGPT again for information about medical procedures. However, nearly half (44%) of the patients expressed varying degrees of difficulty in understanding the information given by ChatGPT.

Of the patients, 44% agreed or strongly agreed that the information provided by ChatGPT was sufficient and that they did not require additional explanations from the doctor. In contrast, 33% disagreed that ChatGPT’s information was sufficient, and 22% were neutral. Furthermore, 56% of patients felt confident enough in their understanding of their condition and the procedure that they considered it unnecessary for the doctor to verify their comprehension. Lastly, 56% of patients strongly disagreed that ChatGPT could replace doctors in obtaining informed consent for a medical procedure, 11% disagreed, 11% were neutral, and 22% agreed that ChatGPT could obtain consent independently.
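Because the cohort is small, each percentage in this section corresponds to a whole number of patients. The following minimal sketch assumes that every percentage is calculated out of the nine participants who used ChatGPT and rounded to the nearest integer; this is an inference from the reported figures rather than a stated method, and the name `percent_of_cohort` is illustrative.

```python
COHORT_SIZE = 9  # assumption: the nine participants who used ChatGPT


def percent_of_cohort(count: int, total: int = COHORT_SIZE) -> int:
    """Return count/total as a percentage rounded to the nearest integer."""
    return round(100 * count / total)


# Print the percentage that corresponds to each possible head count.
for count in range(COHORT_SIZE + 1):
    print(f"{count}/{COHORT_SIZE} patients = {percent_of_cohort(count)}%")
```

Under this assumption, 44%, 33%, and 22% correspond to four, three, and two patients, while 56%, 11%, and 78% correspond to five, one, and seven patients.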

ChatGPT gave detailed responses to the specified patient prompts. For example, it explained the steps in a flexible cystoscopy with “Sure! Here’s a simple overview of the flexible cystoscopy process: 1. Preparation—Before the Procedure: You might be asked to avoid food or drink for a few hours. You’ll also fill out some paperwork and provide your medical history. 2. Anesthesia—Local Anesthesia: Usually, a local anesthetic gel is applied to numb the urethra, making the procedure more comfortable. Sometimes, mild sedation may be offered. 3. Insertion of the Cystoscope—Flexible Cystoscope: The doctor gently inserts the thin, flexible tube (cystoscope) into your urethra and then into your bladder. The cystoscope has a camera and light at the end, allowing the doctor to see inside. 4. Examination—Viewing the Bladder: The doctor will examine the lining of the bladder and urethra for any abnormalities, such as lesions, stones, or signs of infection. 5. Additional Procedures (if needed)—Biopsy or Treatment: If necessary, the doctor can take small tissue samples (biopsies) or perform minor treatments, like removing small growths. 6. Completion—Finishing Up: Once the examination is complete, the cystoscope is carefully removed. 7. Recovery—Post-Procedure: You might experience mild discomfort or a burning sensation while urinating for a short time after the procedure. Drinking plenty of fluids can help. 8. Follow-Up—Results: Your doctor will discuss the findings with you and any next steps if necessary. The whole process typically takes about 15 to 30 min, and you can usually go home the same day.” Further ChatGPT responses can be viewed in Supplementary File S4.

There were minor variations between the ChatGPT responses given to each patient; for example, arteriovenous malformation or Alport syndrome was not mentioned as a cause of haematuria in some responses (Supplementary File S4). There were also minor spelling errors in some of the questions typed by the patients, but ChatGPT was able to understand the question and provide a response without issue. Additional questions asked by one patient included the following: “Is cystoscopy the only procedure used for diagnosing haematuria apart from ultrasound? Would the CT scan follow a cystoscopy or done after? How often are mistakes made in cystoscopy? Statistically, how rare are mistakes with cystoscopy?” A second patient asked “I’m bit worry if something happen after the procedure”.

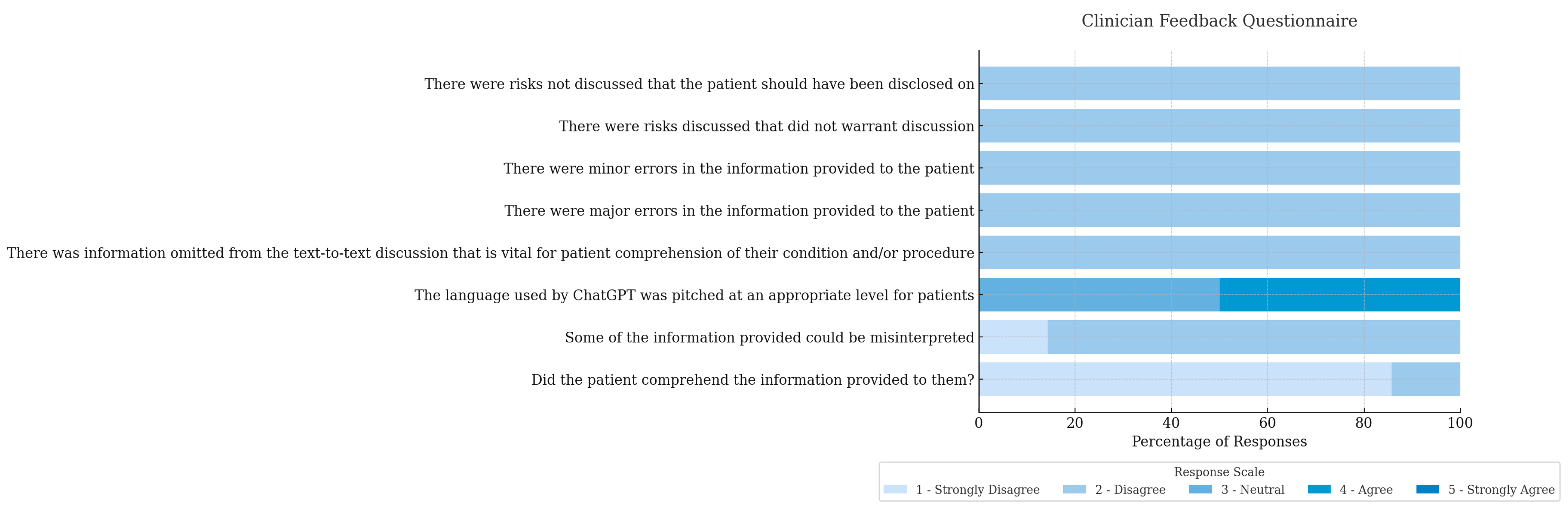

Results for the clinician questionnaire revealed no major or minor errors in the ChatGPT responses, with clear, informative content and appropriate explanation of risks (see Figure 1). All patients were able to understand the questions, and only two patients asked follow-up questions. No hallucinations were detected in the responses generated by ChatGPT.

Furthermore, the ChatGPT responses of each patient were graded with an overall score by three urologists using the PEMAT and DISCERN instruments. The mean PEMAT score for understandability was 77.8%, and the mean actionability score was lower, at 63.8%. The mean DISCERN score was 57.7 out of a total of 80 (72.1%), which indicates ‘good’ quality. In the subsections, the reliability score was 63.3%, which equated to ‘fair’ quality, and the treatment choice information score was 78.1%, or ‘good’ quality. The overall rating of the ChatGPT answers was ‘excellent’ quality, at 93.3%.
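The percentage reported for the mean DISCERN score can be reproduced with simple arithmetic. This sketch assumes the standard 16-item DISCERN instrument with each item rated from 1 to 5 (maximum total of 80) and expresses the total as a fraction of that maximum, which matches the figure above. The function name is illustrative, and the quality bands used by the authors are not modelled.

```python
DISCERN_ITEMS = 16   # assumption: standard 16-item DISCERN instrument
MAX_ITEM_SCORE = 5   # each item is rated on a 1 to 5 scale


def discern_percent(total_score: float) -> float:
    """Express a DISCERN total as a percentage of the maximum possible total."""
    max_total = DISCERN_ITEMS * MAX_ITEM_SCORE  # 80
    min_total = DISCERN_ITEMS                   # 16, if every item scores 1
    if not min_total <= total_score <= max_total:
        raise ValueError("DISCERN total must lie between 16 and 80")
    return 100 * total_score / max_total


# Mean DISCERN score reported in the text.
print(f"{discern_percent(57.7):.1f}%")  # 72.1%
```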

The Flesch Reading Ease and Flesch–Kincaid Grade Level scores were applied to the ChatGPT responses. The Flesch Reading Ease score was only 30.2, indicating that the responses were difficult to read, and the Flesch–Kincaid Grade Level was comparable to a US grade level of 13. Passive sentences accounted for 22.1% of the total.
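For readers unfamiliar with these two scores, both are simple functions of average sentence length and average syllables per word. The sketch below implements the standard published formulas; the helper `rough_counts` is a hypothetical, deliberately crude counter (vowel groups as syllables) included only to make the example self-contained, and it is not the tool used in this study.

```python
import re


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: approximate US school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def rough_counts(text: str) -> tuple[int, int, int]:
    """Return (words, sentences, syllables) using crude heuristics.

    Assumption: each run of vowels counts as one syllable, which
    overcounts words with a silent final 'e'.
    """
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    tokens = re.findall(r"[A-Za-z]+", text)
    syllables = sum(
        max(1, len(re.findall(r"[aeiouy]+", token.lower()))) for token in tokens
    )
    return len(tokens), sentences, syllables


sample = "The doctor will examine the bladder."
words, sentences, syllables = rough_counts(sample)
print(round(flesch_reading_ease(words, sentences, syllables), 1))
print(round(flesch_kincaid_grade(words, sentences, syllables), 1))
```

Longer sentences and more syllables per word both lower the Reading Ease score and raise the grade level, which is why jargon-heavy medical explanations tend to score as difficult.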

Overall, our results show that patients experienced a positive interaction with ChatGPT. Most patients found that ChatGPT was a useful resource in providing them with medical information about their presentation; however, most did not feel it could replace a clinician answering patient questions or providing informed consent for a procedure. An outline of the responses can be found in Figure 2.

4. Discussion

This study highlights the potential of ChatGPT as a valuable tool for augmenting patient education in urology. By leveraging generative AI, patients were provided with clear, accessible information about their condition and procedures, addressing key components of informed consent according to Australian standards [13]. Positive evaluations using the PEMAT and DISCERN tools, along with consensus from urologists on the content’s clarity, support the credibility and usefulness of the information, though its actionability was rated lower due to a lack of visual aids. Implementing such visuals would be challenging given that current LLMs require specific prompting and produce highly variable results; however, this may become easier with new iterations of generative AI. ChatGPT’s treatment pathway responses, while scoring nearly 80% on the DISCERN tool, lost points in ‘reliability’ due to omissions regarding the consequences of no treatment and missing source citations. Other AI chatbots, such as Perplexity, address these shortfalls by providing reference sources. These results show slight improvements over earlier studies using similar metrics [7], likely due to pre-determined prompts and the enhanced capabilities of the latest ChatGPT model. Evidence for the improved quality and accuracy of responses in later versions is also demonstrated in Gibson et al.’s paper reviewing prostate cancer patient information [10]. Continued research and expanded parameter analysis will enhance these tools’ healthcare applications, with the emergence of diverse models like Bard, Claude, and Gemini, alongside comparative studies [14,15], underscoring current innovation in the field.

The integration of ChatGPT into patient care has the potential to ease clinicians’ workloads by reducing the time required for patient education. Obtaining informed consent often involves extensive discussions with time-poor clinicians, which can lead to situations where patients are inadequately briefed. A 2021 systematic review on patient comprehension revealed that patients often lack understanding of the procedures they consent to, with few able to list more than one risk following a consultation with their doctor [16]. Feedback from participating clinicians highlighted that patients were often able to recall two or more risks associated with flexible cystoscopy following their interaction with ChatGPT. Study participants tend to be more diligent, which may account for their higher level of comprehension and knowledge retention. Tools like ChatGPT help patients build a foundational understanding of their medical condition, allowing them more time to seek further clarification from their doctor. Furthermore, dynamic AI-powered voice models operating in multiple languages hold significant potential to improve access to critical healthcare information and enhance decision-making for culturally and linguistically diverse populations, who often experience poorer health outcomes [17,18,19]. This enables clinicians to focus on acute issues and address specific queries during consultations.

In the era of information abundance, it is unsurprising that population surveys reveal 68% of individuals rely on the internet for healthcare-related information [20]. However, the reliability of much of this content is questionable, with many sources lacking scientific accuracy or presenting incomplete advice [21,22,23]. With most participants anticipating future use of ChatGPT for medical information, the growing reliance on generative AI raises concerns about misinformation, highlighting the need to understand how patients interact with these tools. Participants often highlighted the challenge of “not knowing what you don’t know,” reflecting difficulty in distinguishing accurate information from potential inaccuracies in LLM-generated text. Evaluating ChatGPT’s output solely through the lens of a medical practitioner overlooks the importance of patient comprehension, a common limitation of in vitro studies. Readability of information is another concern: the Flesch Reading Ease score of 30.2 indicates that the material is best understood by readers with a university-level education, potentially exceeding patient comprehension. The complexity of medical jargon is also not considered in this scoring system, which includes terms clinicians may commonly overlook, such as ‘haematuria’. Strategies such as prompting ChatGPT to “explain at an elementary school level” can produce more easily understood and accessible information. As appreciation for the nuances of prompt engineering grows, optimising inputs based on a deep understanding of LLMs has become essential to achieving desired results and is now a crucial consideration for future studies [24,25].

While this study demonstrates the potential of ChatGPT in augmenting patient education, several limitations warrant consideration. The small sample size of nine patients limits the generalisability of the results. There may also be selection bias, as patients were pre-screened, were English speakers, and possibly had higher levels of technological proficiency, which can constrain applicability to broader patient populations, particularly older adults or those with limited digital literacy.

The use of six predefined prompts does not encompass the full scope of real-life patient interactions. While these questions were selected to provide a structured framework for preliminary evaluation, future studies may incorporate a broader set of questions to more closely replicate real-world interactions. Expanding the scope would also facilitate a more robust evaluation of AI hallucinations.

Lastly, the qualitative assessment metrics, including PEMAT and DISCERN, are subject to bias and require further validation in the context of generative AI.

Our study intends to serve as a springboard for further studies with a larger and more diverse patient population that utilises generative AI in a real-world setting. The set of questions asked can be expanded for different urological presentations and presented to several different chatbots for comparative purposes. Requests for visual aids such as images of anatomy or procedures, especially as these technologies rapidly progress, can be included in prompting. Modifications can also be made to ask for the answers to be given at easier reading comprehension levels to improve understandability and be suitable for an even larger audience. Objective pre- and post-knowledge assessments can be used in future studies to assess retention of patient knowledge. A control group can also be included with brochure-based information to be used as a comparative arm.

Given generative AI’s reliance on input analysis for model improvement, robust data privacy and security safeguards are crucial, particularly in future AI studies involving sensitive patient data, to ensure ethical and safe healthcare deployment. By default, conversations with many chatbots are stored in the cloud and may be used to train their models. There has been an explosion in the number of LLMs created since ChatGPT, and the degree of privacy offered varies between models. When these tools are used in healthcare, there is a higher chance of sensitive data being included in the information given, and a risk of this data leaking through hacking and data breaches. As the public version of ChatGPT is not compliant with local or international privacy regulations (e.g., Health Insurance Portability and Accountability Act [HIPAA]), the use of identifiable or personal health information is inappropriate, and implementation within healthcare organisations is limited to enterprise or vendor-supported solutions that provide the necessary compliance frameworks.