The GS-PI-DeepONet framework is designed to tackle the difficulties of solving parametric PDEs in structural analysis, especially for problems that involve complex geometries and boundary conditions. The method combines GNNs with Physics-Informed Deep Operator Networks (PI-DeepONets), harnessing the advantages of both approaches to provide efficient and accurate solutions. Vanilla DeepONets typically sample their input functions at a fixed set of sensor locations, which is ill-suited to irregular domains; GS-PI-DeepONet overcomes this limitation by using graph-structured representations. In this setting, nodes encode physical quantities such as displacements and loads, while edges represent geometric or topological relationships. This allows the model to adapt to unstructured meshes or point clouds, which are common in FE analysis. Moreover, to enhance the efficiency and accuracy of the solution, physics-informed neural network (PINN) constraints are introduced: the governing PDEs are embedded in the loss as soft constraints, with the required derivatives obtained by automatic differentiation. The details of GS-PI-DeepONet are presented as follows.

3.1. Geometric (Graph Attention Networks) Embedding DeepONet

In structural analysis, inputs such as material distribution and boundary conditions, as well as outputs such as solution fields, are often defined on unstructured grids, typically represented as FE mesh node data or 3D point cloud topologies. Traditional data-driven methods face significant limitations in such problems: fully connected networks ignore geometric and topological information and fit input-output mappings through dense weight matrices, so the number of parameters grows rapidly with the number of mesh nodes. This not only drastically increases computational cost but also raises severe overfitting risks. Graph neural networks, in contrast, share weights across nodes, which significantly reduces the number of parameters while still capturing complex geometric relationships. Through message passing, they can also model local strain and global stress transmission paths, enabling efficient feature extraction from the data.

A graph represents a set of entities (nodes) and the relationships between them (edges); in finite element analysis these correspond to mesh nodes and their connectivity. A graph is generally expressed as $G = (V, E)$, where $V$ is the set of all vertices and $E$ is the set of all edges. Attributes can be attached to each node, to each edge, or to the graph as a whole to describe it further.

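Let $v_i \in V$ be a node in the graph, and let $e_{ij} = (v_i, v_j) \in E$ be an edge from node $v_i$ to node $v_j$. The neighborhood of node $v_i$ is $\mathcal{N}(v_i) = \{v_j \in V \mid (v_i, v_j) \in E\}$, the set of all nodes adjacent to $v_i$. The adjacency matrix $A$ of the graph is defined by $A_{ij} = 1$ if $e_{ij} \in E$ and $A_{ij} = 0$ otherwise; its size is $n \times n$, where $n = |V|$ is the number of nodes.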
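To make the notation concrete, the following minimal NumPy sketch builds the adjacency matrix and the neighborhood of a node for a small hypothetical undirected graph. The helper names `adjacency_from_edges` and `neighborhood` are illustrative and are not part of GS-PI-DeepONet.

```python
import numpy as np

def adjacency_from_edges(n, edges, undirected=True):
    """Return the n x n adjacency matrix with A[i, j] = 1 if (v_i, v_j) is an edge."""
    A = np.zeros((n, n), dtype=int)
    for i, j in edges:
        A[i, j] = 1
        if undirected:
            A[j, i] = 1  # mesh graphs are undirected, so mirror every edge
    return A

def neighborhood(A, i):
    """Return N(v_i): the indices of all nodes adjacent to node i."""
    return [int(j) for j in np.flatnonzero(A[i])]

# Hypothetical 4-node graph: a path 0-1-2-3 plus a chord 0-2
edges = [(0, 1), (1, 2), (2, 3), (0, 2)]
A = adjacency_from_edges(4, edges)
print(A.shape)              # (4, 4)
print(neighborhood(A, 2))   # [0, 1, 3]
```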

Commonly used graph neural network models include Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), and Gated Graph Neural Networks (GGNNs). GCNs draw inspiration from Convolutional Neural Networks (CNNs) and generalize the convolution operation to non-Euclidean domains, i.e., graph-structured data. Whereas CNNs extract features from local neighborhoods on regular grids (e.g., image pixels), GCNs rely on neighborhood aggregation: each node's representation is updated from its own features and those of its neighbors. A single layer therefore captures local dependencies in the graph structure, and stacking layers propagates information across progressively larger neighborhoods, capturing more global features.

Generally, graph-structured data can be described using the Laplacian matrix

$$L = D - A,$$

where $A$ is the adjacency matrix, representing connections between nodes, and $D$ is the degree matrix, a diagonal matrix whose elements $D_{ii} = \sum_j A_{ij}$ give the number of neighbors of each node.

For numerical stability, the symmetric normalized Laplacian is often used instead:

$$L_{\mathrm{sym}} = I - D^{-1/2} A D^{-1/2}.$$
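As an illustrative sketch (NumPy assumed, toy graph chosen arbitrarily), both Laplacians can be formed directly from the adjacency matrix. The inverse square root of the degree matrix assumes that no node is isolated.

```python
import numpy as np

# Adjacency matrix of a hypothetical 4-node undirected graph (edges 0-1, 0-2, 1-2, 2-3)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)

deg = A.sum(axis=1)               # D_ii = number of neighbors of node i
D = np.diag(deg)
L = D - A                         # combinatorial Laplacian L = D - A

D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))   # requires deg > 0 (no isolated nodes)
L_sym = np.eye(A.shape[0]) - D_inv_sqrt @ A @ D_inv_sqrt

print(deg)                                      # [2. 2. 3. 1.]
print(np.round(np.linalg.eigvalsh(L_sym), 3))   # spectrum lies in [0, 2]
```

Every row of $L$ sums to zero, and the eigenvalues of the normalized Laplacian are bounded in $[0, 2]$, which is one reason the normalized form behaves better as a propagation operator.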

Similar to traditional neural networks, GCNs propagate node information through forward propagation. Kipf and Welling proposed a simplified GCN that achieves efficient convolution through a first-order approximation [43]. The node feature update for each layer is

$$H^{(l+1)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)}\right),$$

where $\tilde{A} = A + I$ is the adjacency matrix with self-loops added, $\tilde{D}$ is the degree matrix of $\tilde{A}$, $H^{(l)}$ is the node feature matrix at layer $l$, $W^{(l)}$ is the learnable weight matrix, and $\sigma(\cdot)$ is the activation function.
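The layer-wise update can be sketched in a few lines of NumPy. This is a minimal illustration assuming a ReLU activation and random weights, not the configuration used in GS-PI-DeepONet; note that the weight matrix has the same shape regardless of the number of nodes, which is the weight sharing discussed earlier.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def gcn_layer(A, H, W, activation=relu):
    """One GCN layer: sigma(D~^{-1/2} A~ D~^{-1/2} H W), with A~ = A + I."""
    A_tilde = A + np.eye(A.shape[0])              # add self-loops
    d_tilde = A_tilde.sum(axis=1)                 # degrees of A~ (>= 1, so safe to invert)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d_tilde))
    A_hat = D_inv_sqrt @ A_tilde @ D_inv_sqrt     # symmetric normalization
    return activation(A_hat @ H @ W)

rng = np.random.default_rng(0)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H0 = rng.normal(size=(4, 3))     # 4 nodes with 3 input features each
W0 = rng.normal(size=(3, 8))     # weight matrix shared by all nodes
H1 = gcn_layer(A, H0, W0)
print(H1.shape)                  # (4, 8)
```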

Unlike GCNs, which assign fixed weights determined by the graph structure to all neighbors as shown in Figure 2, GATs use an attention mechanism to learn an adaptive weight for each node pair. This lets the model quantify how much each neighbor contributes to information propagation and makes graph data modeling considerably more flexible.

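For node $i$ and its neighbor $j \in \mathcal{N}(i)$, the attention coefficient $\alpha_{ij}$ is calculated using a shared attention mechanism and normalized via the softmax function as

$$\alpha_{ij} = \frac{\exp\left(\mathrm{LeakyReLU}\left(\mathbf{a}^{\top}\left[W\mathbf{h}_i \,\Vert\, W\mathbf{h}_j\right]\right)\right)}{\sum_{k \in \mathcal{N}(i)} \exp\left(\mathrm{LeakyReLU}\left(\mathbf{a}^{\top}\left[W\mathbf{h}_i \,\Vert\, W\mathbf{h}_k\right]\right)\right)},$$

where $\mathbf{h}_i$ and $\mathbf{h}_j$ are the feature vectors of nodes $i$ and $j$, respectively, $W$ is a learnable weight matrix, $\mathbf{a}$ is the parameter vector of the attention mechanism, $\Vert$ denotes concatenation, and LeakyReLU is the nonlinearity of the standard GAT formulation.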

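Similar to GCNs, the updated features of node $i$ are obtained by weighted aggregation of neighbor information, $\mathbf{h}_i' = \sigma\big(\sum_{j \in \mathcal{N}(i)} \alpha_{ij} W \mathbf{h}_j\big)$. To enhance robustness, GATs often employ multi-head attention:

$$\mathbf{h}_i' = \Big\Vert_{k=1}^{K} \sigma\left(\sum_{j \in \mathcal{N}(i)} \alpha_{ij}^{k} W^{k} \mathbf{h}_j\right),$$

where $K$ is the number of attention heads, $\alpha_{ij}^{k}$ and $W^{k}$ are the attention coefficients and weight matrix of the $k$-th head, and $\Vert$ denotes feature concatenation (replaced by averaging in the final layer).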

In summary, the implementation of GATs involves the following steps:

Linear Projection: Map input node features to a latent space using learnable weight matrices to enhance feature representation.

Attention Calculation: Dynamically compute adaptive weights between neighboring nodes using a shared attention mechanism.

Normalization: Apply softmax to normalize attention weights, ensuring they form a probability distribution.

Aggregation: Generate new node embeddings by weighted aggregation of neighbor features, incorporating local topological information.

Multi-Head Attention: Combine results from multiple independent attention heads to improve robustness and capture multi-dimensional interaction patterns.
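The five steps above can be sketched as a single dense NumPy implementation, suitable only for small graphs. It follows the standard GAT formulation (LeakyReLU slope 0.2, self-loops included in each neighborhood, ELU on concatenated hidden heads); these choices, the function names, and the random weights are assumptions for illustration rather than the settings of this study.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def elu(x):
    return np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))

def gat_head(A, H, W, a):
    """One attention head; returns the aggregated features and the attention matrix."""
    Z = H @ W                                    # 1) linear projection W h_i
    f = Z.shape[1]
    # 2) e_ij = LeakyReLU(a^T [W h_i || W h_j]); a is split into its two halves
    e = leaky_relu((Z @ a[:f])[:, None] + (Z @ a[f:])[None, :])
    mask = (A + np.eye(A.shape[0])) > 0          # neighbors, plus a self-loop
    e = np.where(mask, e, -np.inf)
    # 3) softmax over each node's neighborhood (row-wise, numerically stabilized)
    alpha = np.exp(e - e.max(axis=1, keepdims=True))
    alpha /= alpha.sum(axis=1, keepdims=True)
    # 4) weighted aggregation of the projected neighbor features
    return alpha @ Z, alpha

def gat_layer(A, H, Ws, attn_vecs, final_layer=False):
    """5) Multi-head attention: concatenate heads, or average them in the final layer."""
    heads = [gat_head(A, H, W, a)[0] for W, a in zip(Ws, attn_vecs)]
    if final_layer:
        return np.mean(heads, axis=0)            # activation left to the caller
    return np.concatenate([elu(h) for h in heads], axis=1)

rng = np.random.default_rng(1)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = rng.normal(size=(4, 3))
K, f_out = 2, 5                                   # two heads, 5 features per head
Ws = [rng.normal(size=(3, f_out)) for _ in range(K)]
attn_vecs = [rng.normal(size=2 * f_out) for _ in range(K)]
print(gat_layer(A, H, Ws, attn_vecs).shape)       # (4, 10)
```

Masking non-neighbors with $-\infty$ before the softmax gives them exactly zero attention, so each node only aggregates over its own neighborhood even though the computation is written densely.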

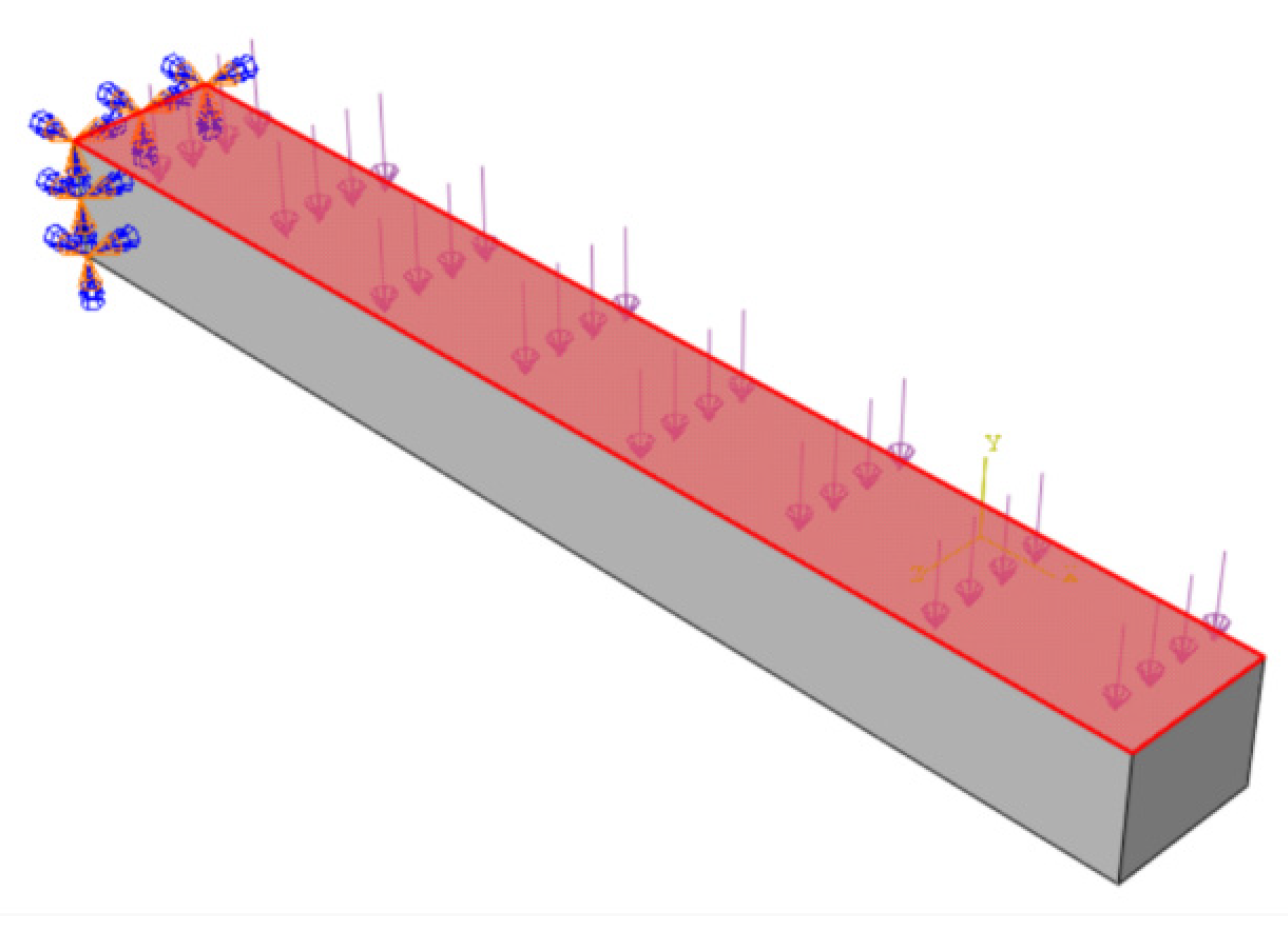

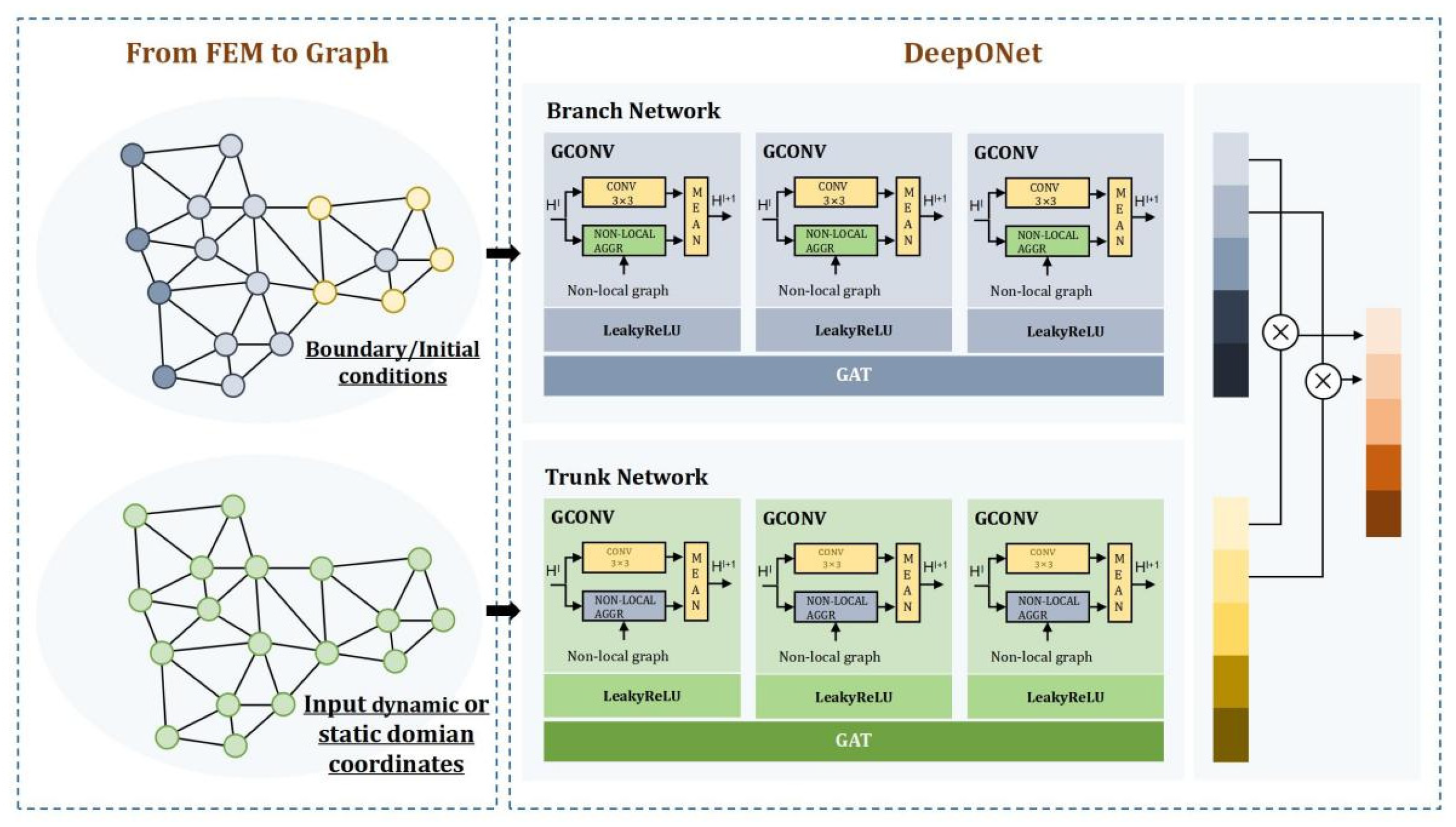

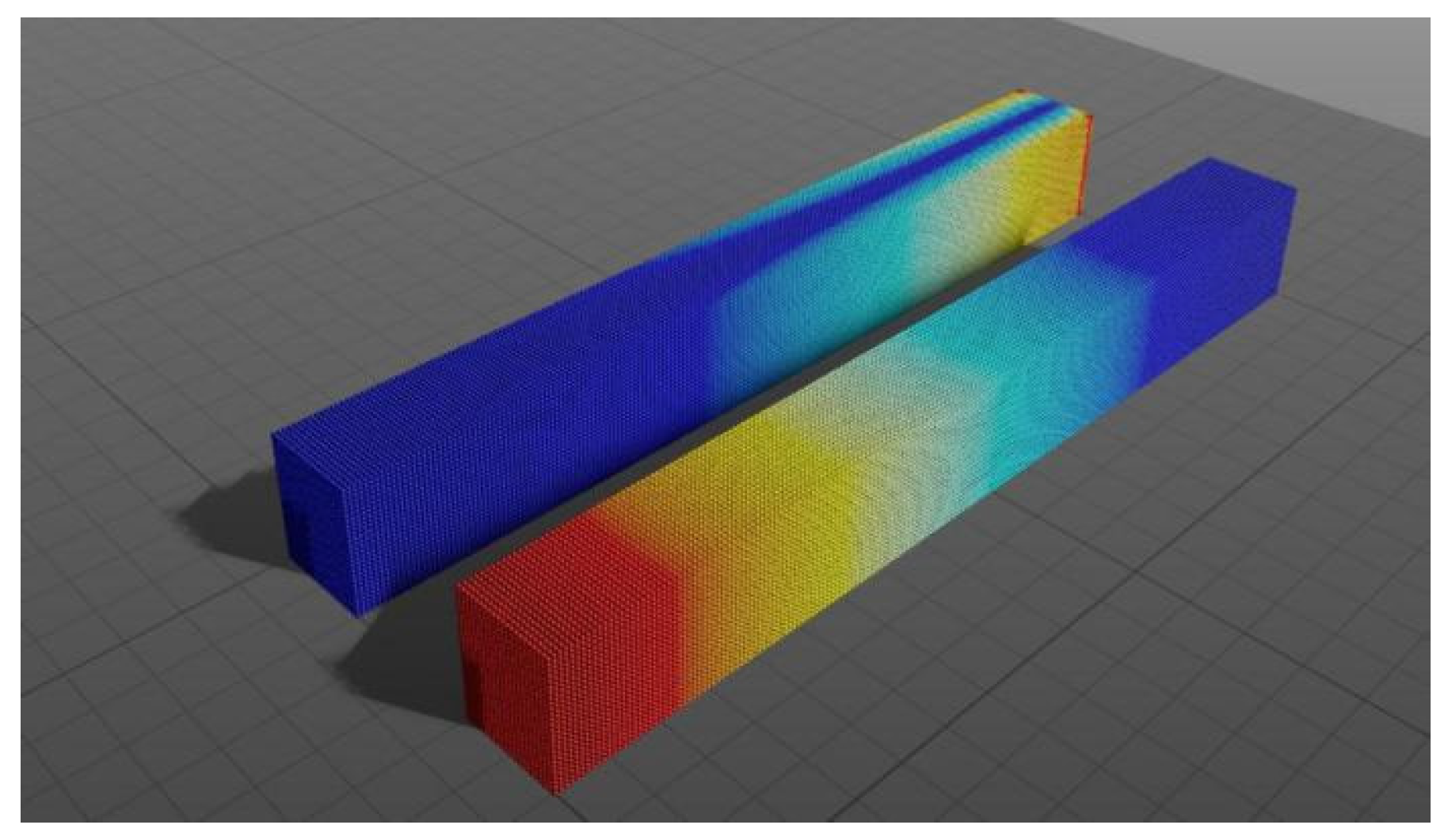

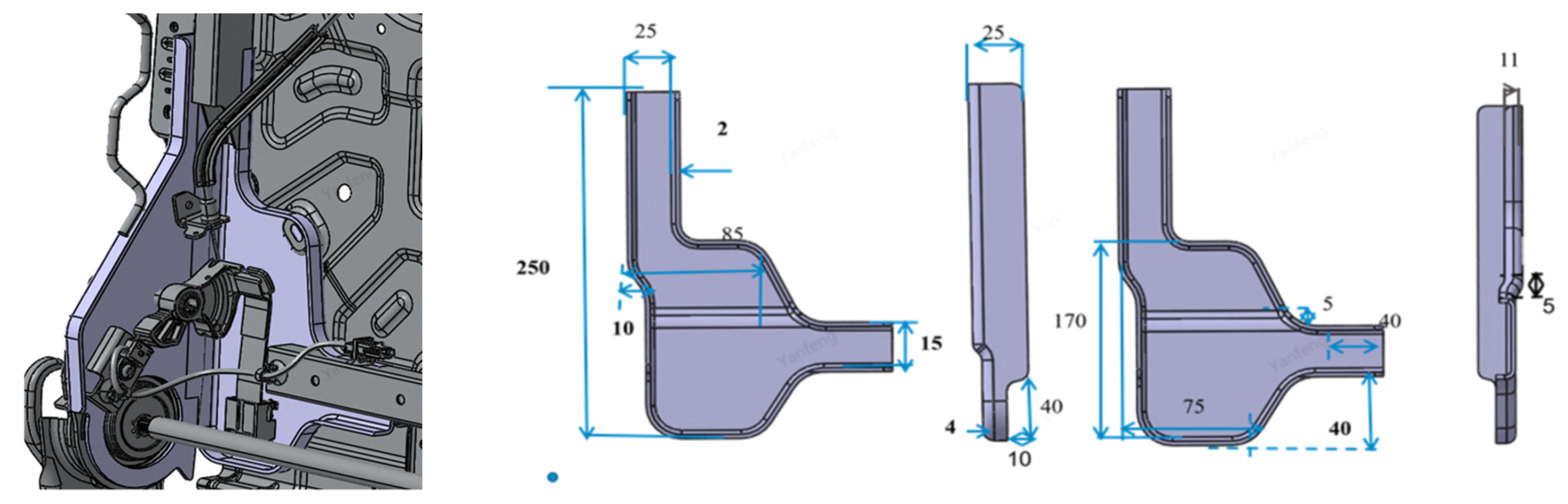

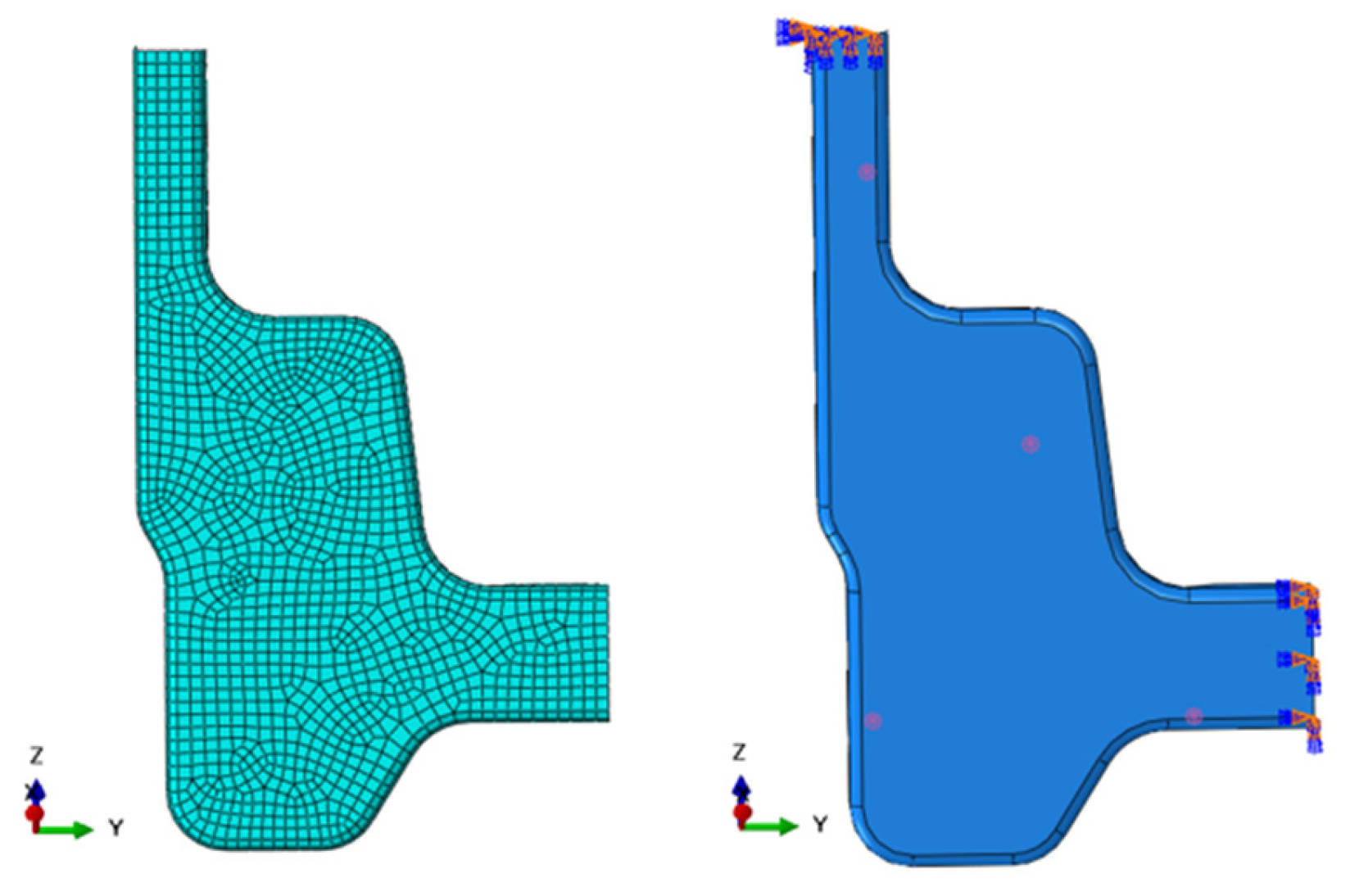

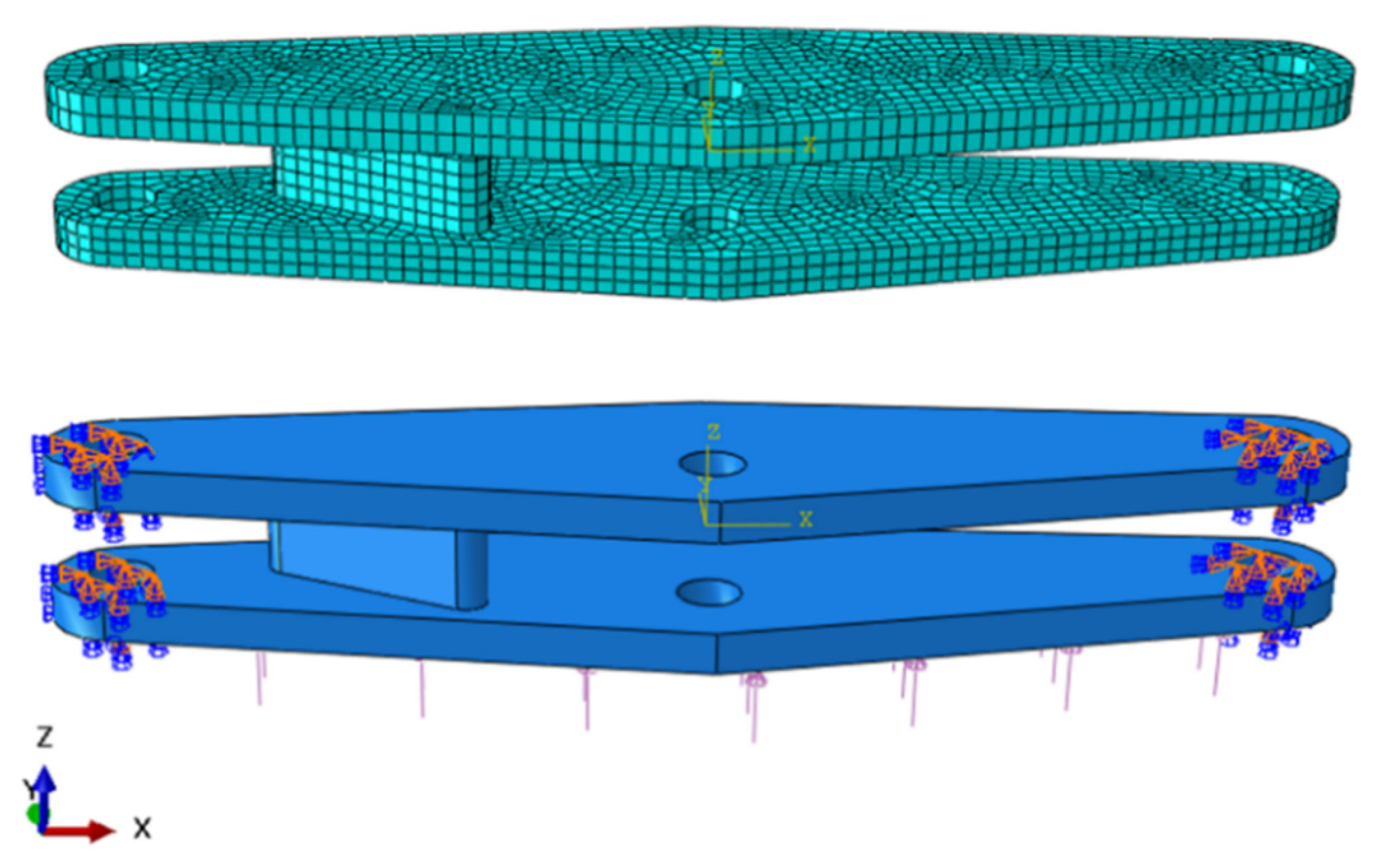

In structural strength analysis, high-fidelity numerical solutions, particularly for strongly nonlinear problems, typically require significant computational and storage resources. Graph-based simulation networks propagate information directly over discretized representations such as computational grids or particles (i.e., CAE models), so an efficient GAT is essential for rapid prediction in such problems. Building on the traditional GAT, this study introduces a differential-algebraic equation constraint mechanism that precisely embeds the initial and boundary conditions within the discretized input space of the deep operator, as shown in Figure 3. This approach not only provides structured input for data-driven neural network training but also lays a topology-adaptive foundation for fusing GATs with DeepONets.

In the original DeepONet framework, the architectures of the branch and trunk networks are open-ended, with no strict constraints on their specific implementations. This study therefore embeds a graph attention network as the core subnetwork within the DeepONet. The branch and trunk networks map the discrete representations of the initial/boundary conditions and of the spatial coordinates, respectively, into a shared graph-structured latent space. The implementation details are as follows:

Branch Network Input: Graph-structured representations of the boundary and initial conditions, in which non-boundary nodes carry zero features.

Trunk Network Input: Graph-structured representations of Euclidean spatial coordinates, where node features consist of the spatial coordinates $\mathbf{x}$ and edge features retain the topological connectivity information required for field discretization.

Operator Network Output: The physical field variable $u$. Its latent representation is a sequence of graph structures, with latent variables obtained through graph dot product operations between the branch and trunk outputs.

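Through graph attention network propagation, the operator network generates the prediction $\hat{u}$ for the field variable $u$. The trunk network $\mathcal{T}$ is expressed as

$$\mathbf{Z}_T = \mathcal{T}(G_x; \theta_T),$$

where $G_x$ is the coordinate graph, $\theta_T$ are the trunk parameters, and $\mathbf{Z}_T$ denotes latent variables encoding structural geometric features. The branch network $\mathcal{B}$ is expressed as

$$\mathbf{Z}_B = \mathcal{B}(G_{bc}; \theta_B),$$

where $G_{bc}$ is the boundary/initial-condition graph, $\theta_B$ are the branch parameters, and $\mathbf{Z}_B$ represents latent variables encoding the boundary/initial conditions. The latter part of the operator network takes the graph dot product of the trunk and branch latent variables as input:

$$\hat{u} = \mathcal{H}\left(\mathbf{Z}_B \odot \mathbf{Z}_T; \theta_H\right),$$

where $\odot$ denotes the graph dot product operation and $\mathcal{H}$ is the subsequent network with parameters $\theta_H$.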
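A minimal sketch of the fusion step follows. The section does not define the graph dot product precisely, so this assumes one $p$-dimensional latent vector per mesh node from each subnetwork and interprets the operation as a node-wise element-wise product followed by a hypothetical linear output head; the random latents stand in for the branch and trunk GAT outputs.

```python
import numpy as np

def graph_dot_product(Z_branch, Z_trunk):
    """Node-wise fusion of branch and trunk latents (assumed interpretation).

    Both inputs have shape (n_nodes, p): one p-dimensional latent per mesh node.
    The element-wise product keeps the graph structure, so the result can be
    passed on to a subsequent (graph) network.
    """
    if Z_branch.shape != Z_trunk.shape:
        raise ValueError("branch and trunk latents must have the same shape")
    return Z_branch * Z_trunk

def output_head(Z, W_out, b_out):
    """Hypothetical linear head mapping fused latents to nodal field values."""
    return Z @ W_out + b_out

rng = np.random.default_rng(2)
n_nodes, p = 6, 16
Z_b = rng.normal(size=(n_nodes, p))   # stand-in for branch (BC/IC graph) latents
Z_t = rng.normal(size=(n_nodes, p))   # stand-in for trunk (coordinate graph) latents
u_hat = output_head(graph_dot_product(Z_b, Z_t), rng.normal(size=(p, 1)), 0.0)
print(u_hat.shape)                    # (6, 1)
```

Setting `W_out` to a column of ones and `b_out` to zero reduces the head to the classical DeepONet inner product $\sum_k b_k t_k$, evaluated independently at each node.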

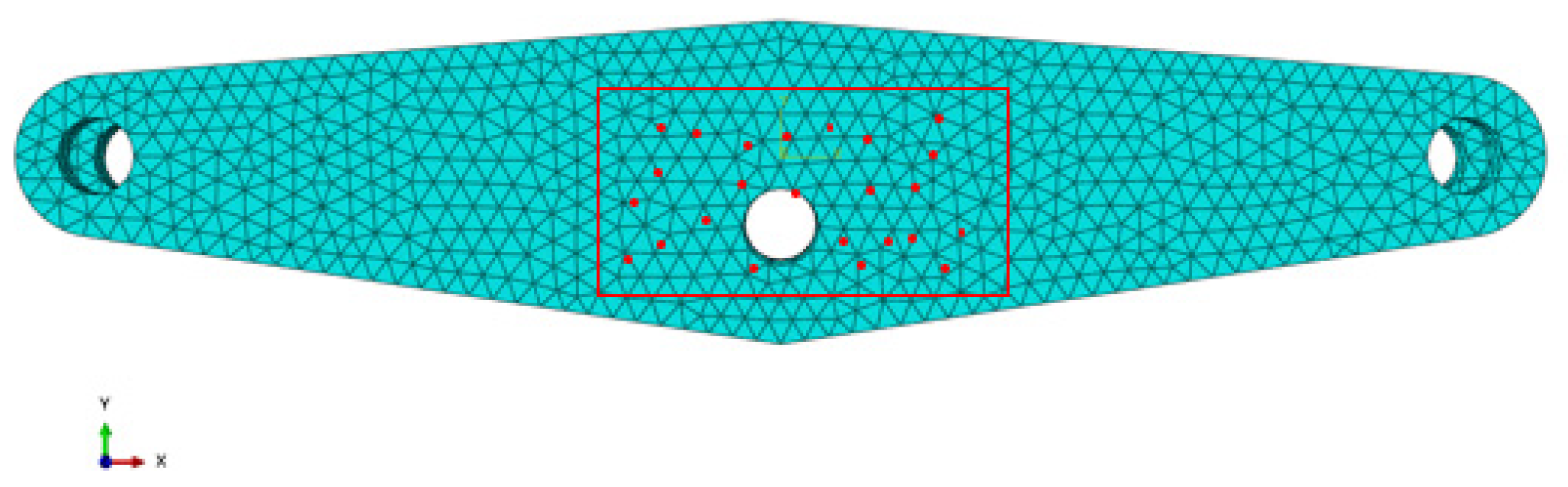

This study adopts the inherent mesh topology of the FEA model. For node feature extraction, an undirected graph $G = (V, E)$ is built whose node set $V$ corresponds to the FE mesh nodes and whose edge set $E$ connects nodes that share at least one element. Research shows that feature encoding in graph neural networks significantly affects model performance [44]. Therefore, initial conditions are not used directly as input features. Instead, for structural analysis problems, the input node features are derived from the initial load vector: a load vector field is constructed by interpolation and evaluated at the graph nodes. The resulting feature encoding of each node combines a radial basis function (e.g., a Gaussian kernel) that weights distance effects; the coordinate offset of the node relative to the load point; the magnitude of the load vector; a weighting coefficient that balances point-to-point and point-to-line distances; and a scaling hyperparameter for the line distance.

This encoding compresses high-dimensional load vector field information into low-dimensional node features through a geometry-physics coupled weighting strategy. It retains geometric information from the FE mesh (point-to-point distance, point-to-line distance, and direction) together with the initial load magnitude, and it adapts to different scenarios through its hyperparameters, thereby providing high-fidelity input representations for graph neural network-based physical field predictions.
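The graph construction and a load-based node encoding can be sketched as follows. Edges connect every pair of nodes that share an element, as described. The exact encoding formula is not reproduced in this section, so `load_features` is a hypothetical stand-in that only combines the listed ingredients (a Gaussian RBF of the point distance, an optional load line weighted by `lam` and scaled by `beta`, the coordinate offset, and the load magnitude and direction); the interpolation of the load field is omitted.

```python
import numpy as np
from itertools import combinations

def mesh_edges(elements):
    """Undirected edges (i, j), i < j, between nodes sharing at least one element."""
    edges = set()
    for elem in elements:
        edges.update(combinations(sorted(set(elem)), 2))
    return sorted(edges)

def distance_to_segment(X, p0, p1):
    """Euclidean distance from each node in X to the segment p0-p1."""
    d = p1 - p0
    t = np.clip((X - p0) @ d / (d @ d), 0.0, 1.0)
    return np.linalg.norm(X - (p0 + t[:, None] * d), axis=1)

def load_features(X, load_point, load_vec, load_line=None, sigma=1.0, lam=0.5, beta=1.0):
    """Hypothetical geometry-physics node encoding; NOT the paper's exact formula.

    Columns: [RBF-weighted load magnitude, RBF-weighted offset, load direction].
    """
    offset = X - load_point                                     # node offset from load point
    w = np.exp(-(np.linalg.norm(offset, axis=1) / sigma) ** 2)  # Gaussian RBF
    if load_line is not None:
        d_line = distance_to_segment(X, *load_line)
        w_line = np.exp(-(d_line / (beta * sigma)) ** 2)        # beta scales line distance
        w = lam * w + (1.0 - lam) * w_line                      # lam balances the two terms
    mag = np.linalg.norm(load_vec)
    direction = np.tile(load_vec / mag, (X.shape[0], 1))
    return np.column_stack([w * mag, w[:, None] * offset, direction])

# Two hypothetical 4-node quads sharing the edge between nodes 1 and 4
X = np.array([[0, 0], [1, 0], [2, 0], [0, 1], [1, 1], [2, 1]], dtype=float)
elements = [[0, 1, 4, 3], [1, 2, 5, 4]]
E = mesh_edges(elements)
feats = load_features(X, load_point=X[5], load_vec=np.array([0.0, -1.0]))
print(len(E), feats.shape)   # 11 (6, 5)
```

Because every node pair within an element is linked, the diagonals of each quad become edges as well, while nodes in non-adjacent elements (here 0 and 2) stay unconnected.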

3.2. Physics-Enriched Geometric Embedding DeepONet

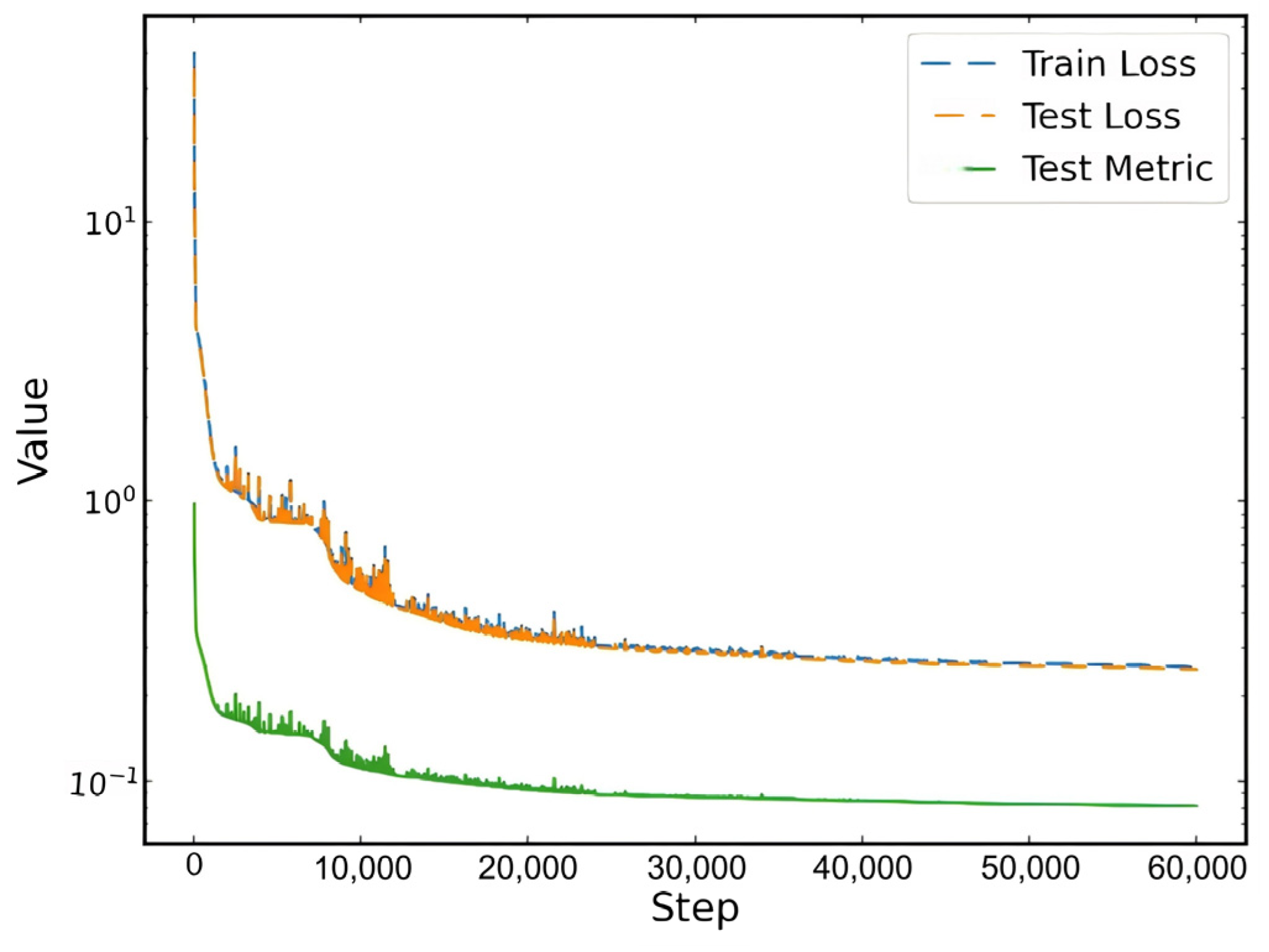

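Building on the Geometric Embedding DeepONet, physical information is incorporated to enrich structural analysis, and PINNs are therefore introduced. PINNs integrate observational data and PDE constraints into the loss function of a neural network. Using automatic differentiation (AD), the PDEs are embedded as soft constraints: for a scalar output, reverse-mode AD returns the partial derivatives with respect to all inputs with one forward pass and one backward pass, at a cost that does not grow with the input dimension (higher-order derivatives are obtained by repeated differentiation). Consider a parametric PDE:

$$\mathcal{F}\left[u(\mathbf{x}); \boldsymbol{\mu}\right] = 0, \quad \mathbf{x} \in \Omega,$$

where $u(\mathbf{x})$ is the physical field to be solved, $\Omega$ is the problem domain, $\boldsymbol{\mu}$ represents the equation parameters, and $\mathcal{F}$ is the differential operator.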
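The AD idea can be illustrated with a minimal, self-contained sketch. For brevity it uses forward-mode AD via dual numbers (one pass per input direction), which is a different mode from the reverse-mode backpropagation used by typical PINN frameworks; the one-hidden-layer network and its weights are hypothetical.

```python
import math

class Dual:
    """Dual number val + der*eps with eps**2 = 0; `der` carries an exact derivative."""
    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # product rule: (a + a' eps)(b + b' eps) = ab + (a'b + ab') eps
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)
    __rmul__ = __mul__

def tanh(x):
    t = math.tanh(x.val)
    return Dual(t, (1.0 - t * t) * x.der)      # chain rule for tanh

def tiny_net(x, params):
    """u(x) = sum_k c_k * tanh(w_k * x + b_k), a one-hidden-layer network."""
    out = Dual(0.0)
    for w, b, c in params:
        out = out + c * tanh(w * x + b)
    return out

params = [(1.5, 0.1, 0.7), (-0.8, 0.3, 1.2)]   # hypothetical weights (w, b, c)
u = tiny_net(Dual(0.4, 1.0), params)           # seed dx/dx = 1
print(u.val, u.der)                            # u(0.4) and du/dx at x = 0.4
```

The derivative is exact to machine precision rather than a finite-difference estimate; nesting dual numbers, or applying reverse mode repeatedly, yields the higher-order derivatives that a PDE residual requires.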

Sequentially, PI-DeepONets unify PINNs and DeepONets through data-physics co-optimization, efficiently solving parametric PDEs. By embedding physical constraints into operator learning, this approach enforces consistency between DeepONet outputs and governing laws, accelerating convergence and enhancing predictive accuracy. A neural network

is constructed, where

denotes trainable weights and biases, and

represents nonlinear activation functions. Given training data

and PDE residual points

, the total loss

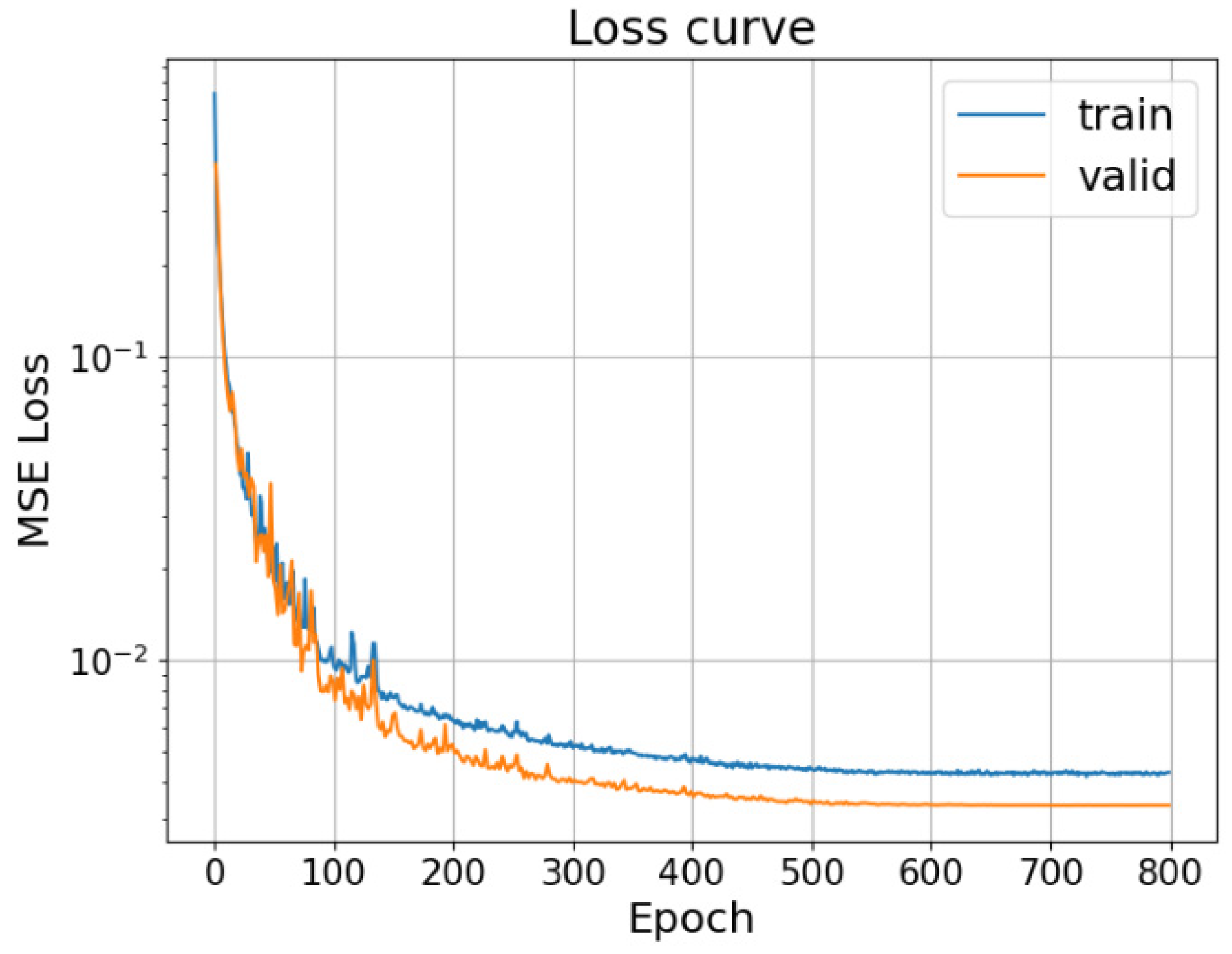

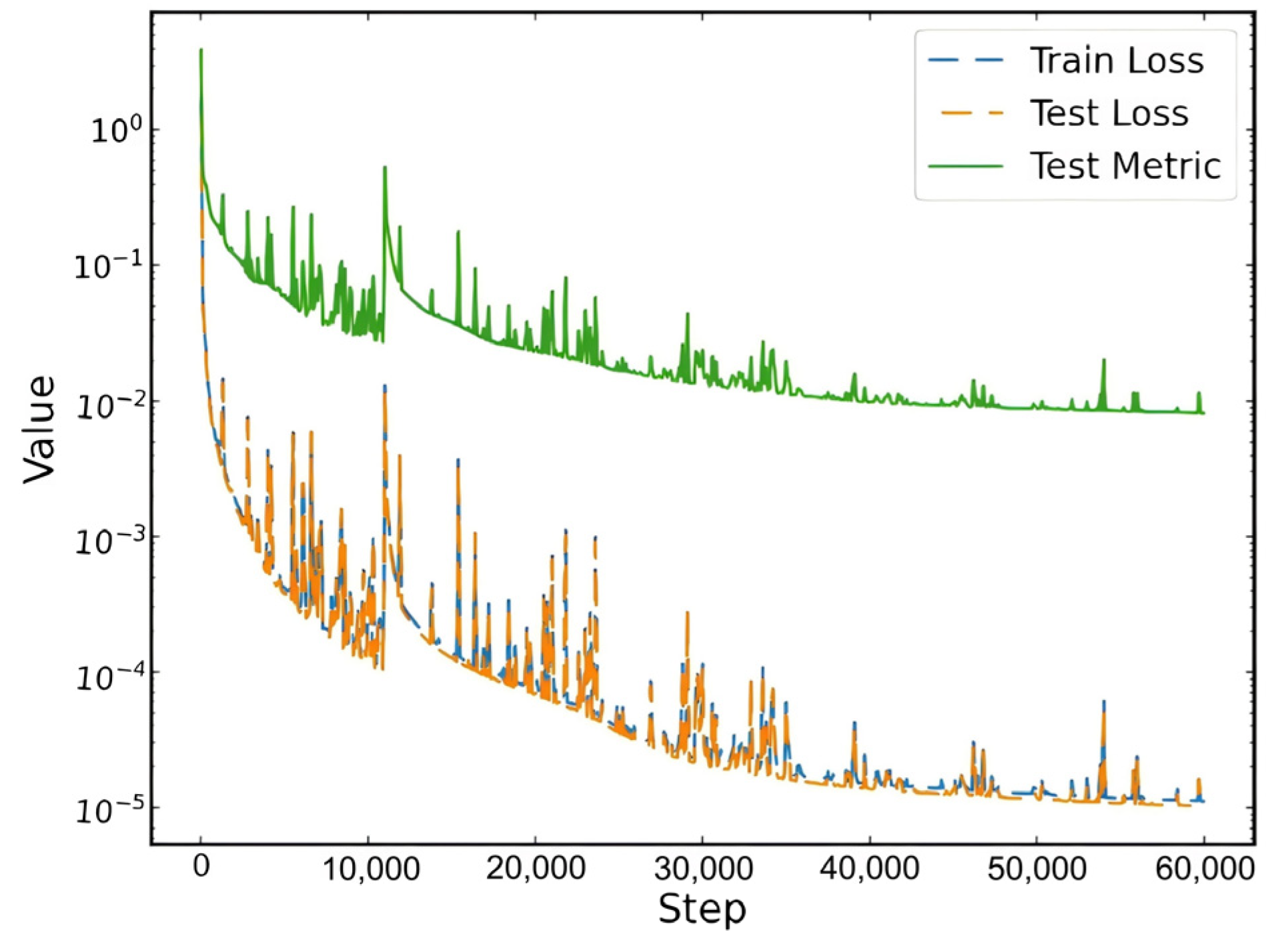

function combines weighted contributions from data and PDE constraints:

where

,

, and

are weighting coefficients balancing loss terms;

and

are point sets sampled from the domain;

and

are

point sets sampled at initial/boundary locations.
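Given residuals already evaluated at the sampled points, assembling the weighted loss is straightforward. In this sketch the network evaluations and the PDE operator are assumed to have been computed elsewhere, the IC and BC mismatches are pooled into a single mean-squared term for brevity, and the function name and weight values are illustrative.

```python
import numpy as np

def mse(r):
    return float(np.mean(np.square(r)))

def pi_deeponet_loss(u_pred_data, u_data, pde_residual, icbc_residual,
                     lam_d=1.0, lam_r=1.0, lam_b=1.0):
    """Total loss lam_d*L_data + lam_r*L_PDE + lam_b*L_IC/BC from precomputed residuals."""
    terms = {
        "data": mse(np.asarray(u_pred_data) - np.asarray(u_data)),
        "pde": mse(pde_residual),     # PDE operator applied to u_hat at residual points
        "icbc": mse(icbc_residual),   # IC and BC mismatches, concatenated
    }
    total = lam_d * terms["data"] + lam_r * terms["pde"] + lam_b * terms["icbc"]
    return total, terms

total, terms = pi_deeponet_loss(u_pred_data=[1.0, 2.0], u_data=[1.0, 1.0],
                                pde_residual=[0.1, -0.1, 0.2], icbc_residual=[0.0, 0.0],
                                lam_r=10.0)
print(round(total, 6), terms["data"])   # 0.7 0.5
```

Returning the individual terms alongside the total is convenient for monitoring, since badly balanced weights usually show up as one term stalling while the others decrease.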

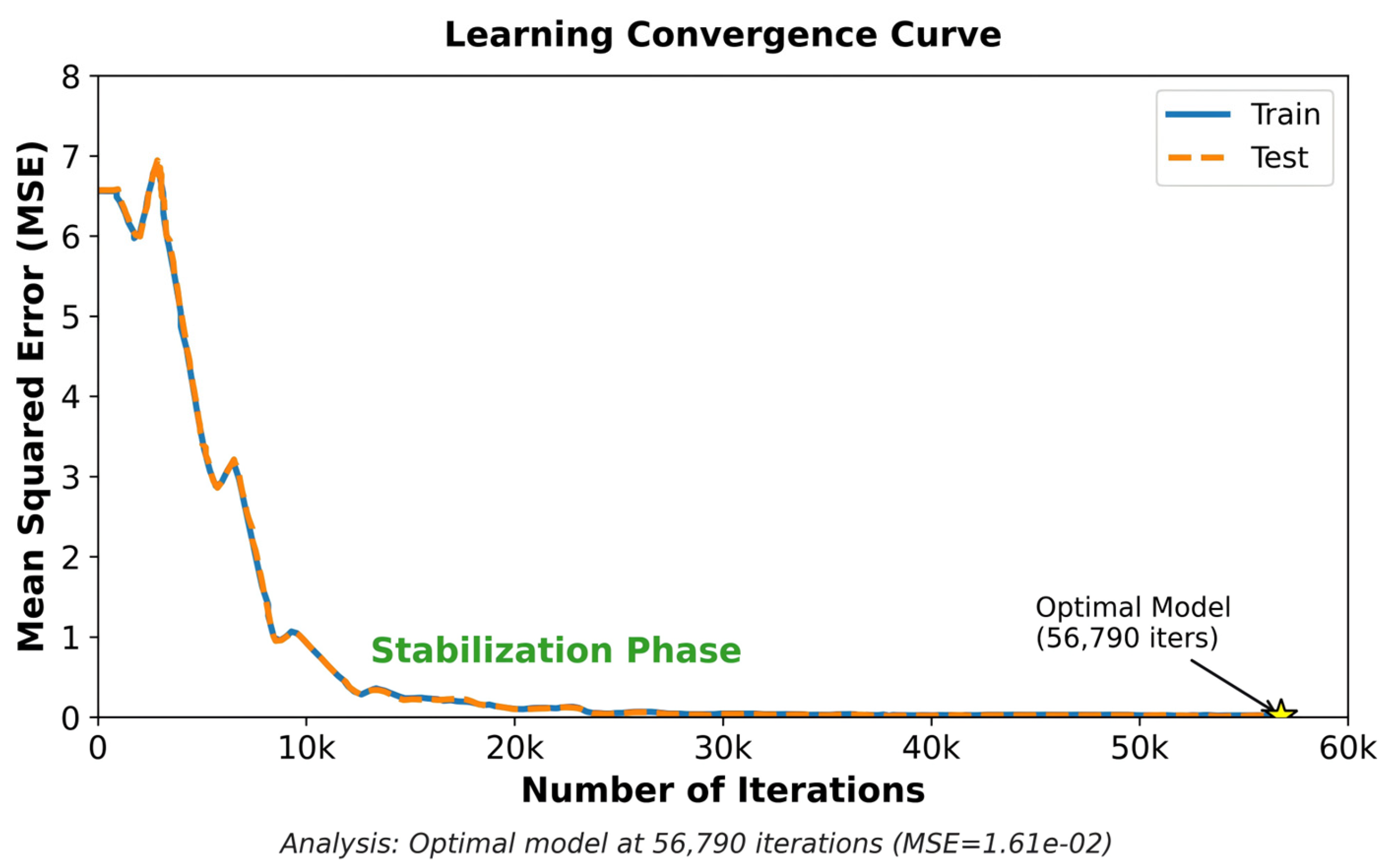

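The optimal parameters $\theta^{*} = \arg\min_{\theta} \mathcal{L}(\theta)$ are obtained by minimizing the total loss, so that $\hat{u}(\mathbf{x}; \theta^{*})$ approximates the true solution $u(\mathbf{x})$.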

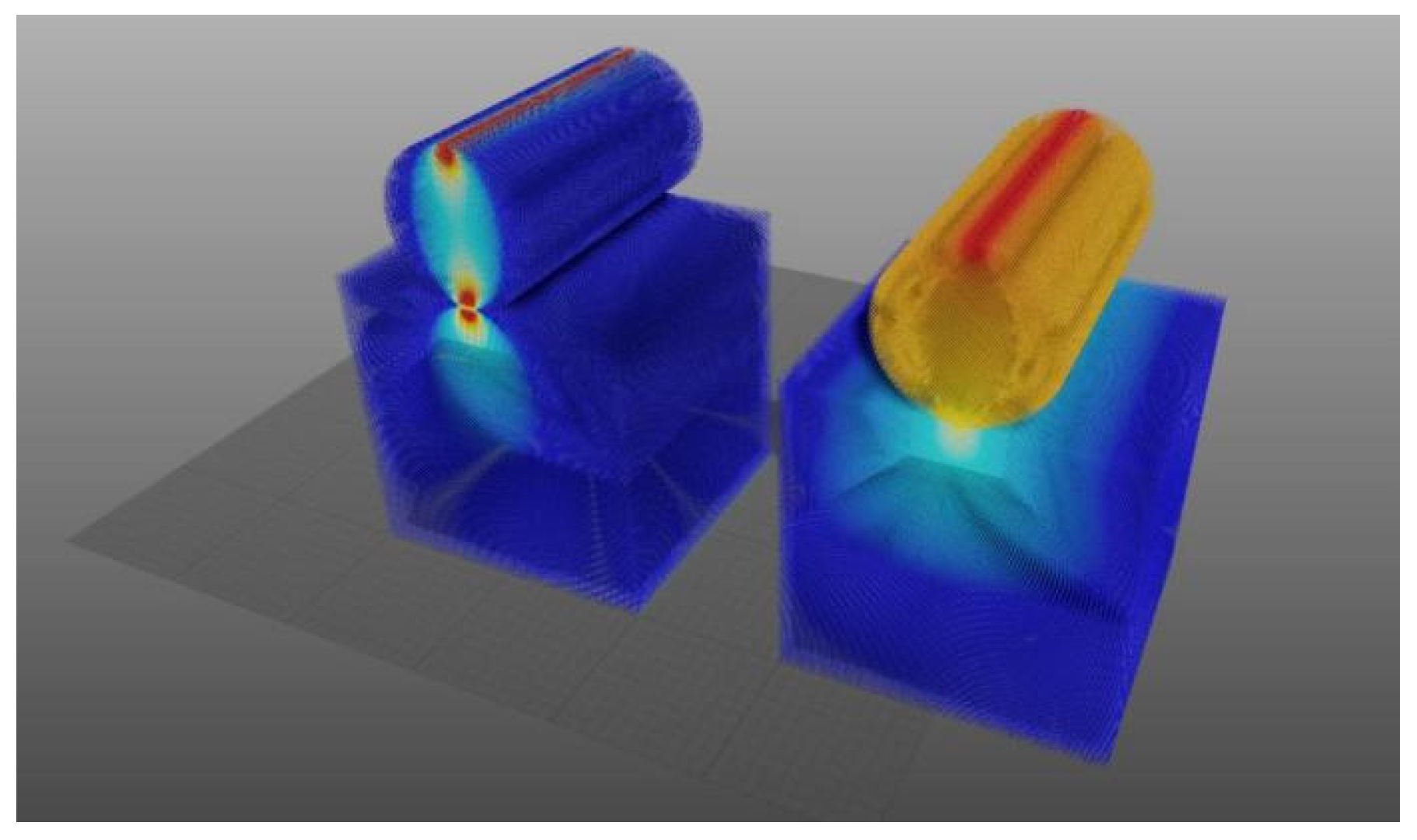

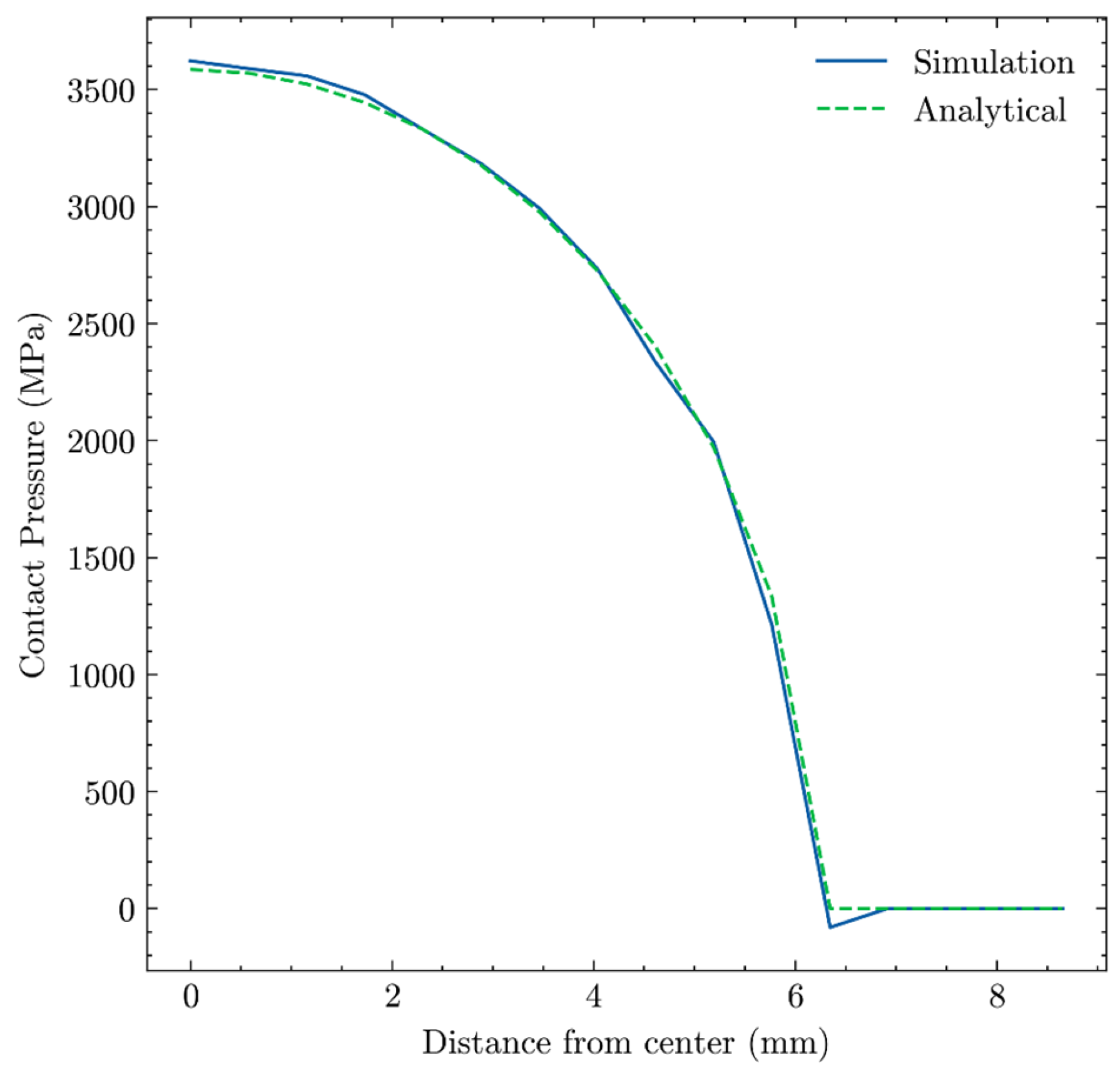

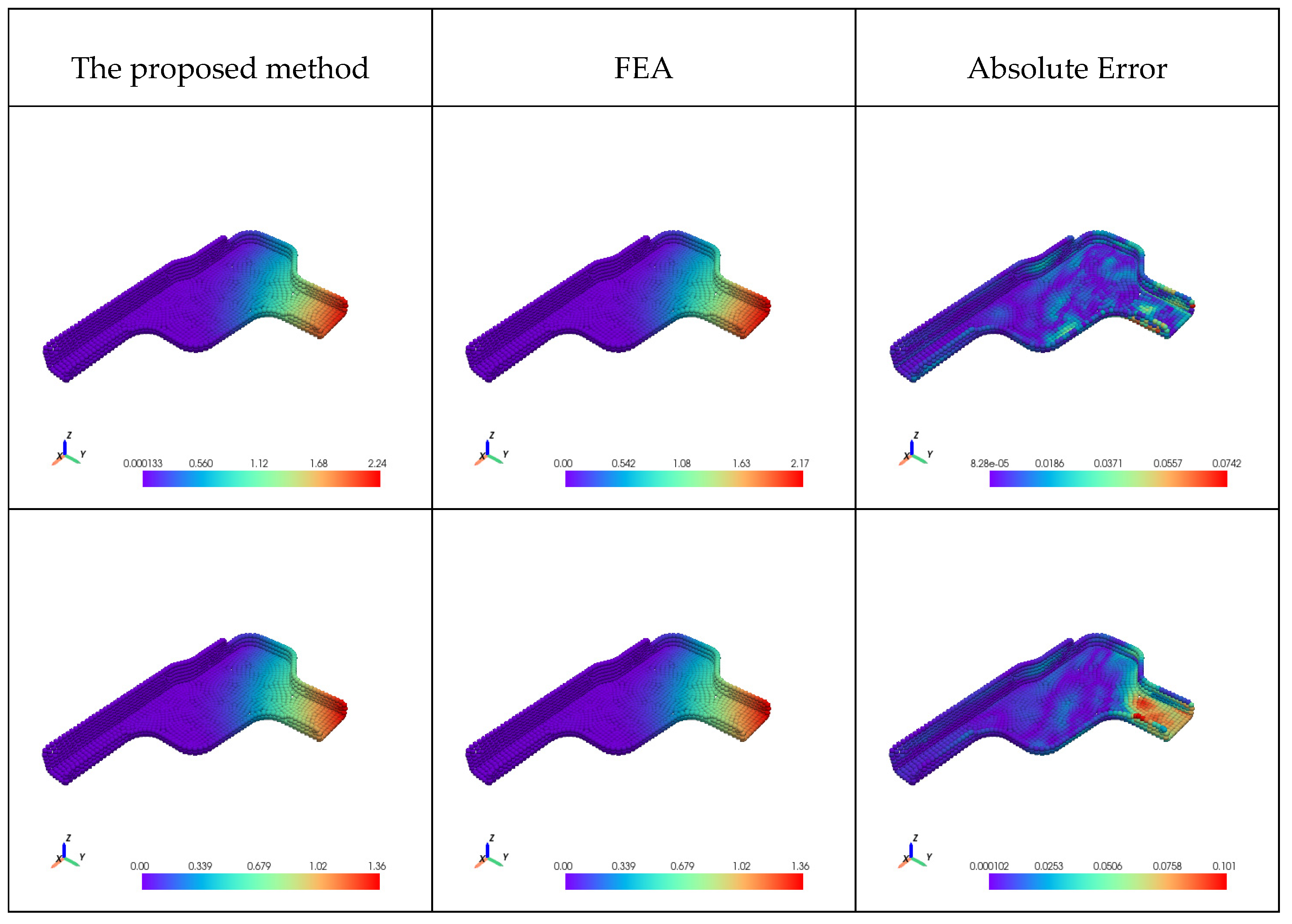

The Graph-Structured PI-DeepONet (GS-PI-DeepONet) integrates GNNs, DeepONets, and PINNs to address unstructured geometric problems in parametric structural analysis, as shown in Figure 4. Its core innovation lies in embedding physical fields and their governing equations into graph-structured feature learning, using non-Euclidean topology modeling to overcome the limitations of learning methods that require regular grids when dealing with complex geometries, nonlinearities, and multiphysics coupling.

3.3. Boundary Conditions Enforcement in GS-PI-DeepONet

In the GS-PI-DeepONet framework, boundary conditions are enforced through a combination of a hard-constraint ansatz integrated into the operator network’s output and soft-constraint penalties in the physics-informed loss function.

Hard Constraints via Solution Ansatz

The core idea is to structure the output of the operator network so that it automatically satisfies the essential (geometric) boundary conditions (BCs). The raw prediction of the DeepONet is not the final solution; it is transformed into a function that inherently fulfills the BCs.

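The final solution $u(\mathbf{x})$ is constructed as

$$u(\mathbf{x}) = g(\mathbf{x}) + \phi(\mathbf{x})\, \hat{u}_{\mathrm{NN}}(\mathbf{x}),$$

where $\hat{u}_{\mathrm{NN}}(\mathbf{x})$ is the output of the Graph-Structured DeepONet operator network and $g(\mathbf{x})$ is a function chosen to satisfy the non-homogeneous essential boundary conditions (for homogeneous BCs, $g(\mathbf{x}) = 0$). The smooth lifting function $\phi(\mathbf{x})$ vanishes at the locations where essential BCs are applied, ensuring that the network output $\hat{u}_{\mathrm{NN}}$ cannot violate them.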

For example, for the cantilever beam fixed at $x = 0$ considered in Section 6.1, the ansatz is constructed as follows.

The essential boundary conditions are

$$u(0) = 0, \qquad u'(0) = 0.$$

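A suitable lifting function that satisfies $\phi(0) = 0$ and $\phi'(0) = 0$ is

$$\phi(x) = x^2,$$

and, since the BCs are homogeneous, $g(x) = 0$. Therefore, the final solution ansatz used to calculate the derivatives and the loss is

$$u(x) = x^2\, \hat{u}_{\mathrm{NN}}(x).$$

This formulation guarantees that $u(0) = 0$ and $u'(0) = \left[2x\, \hat{u}_{\mathrm{NN}}(x) + x^2\, \hat{u}_{\mathrm{NN}}'(x)\right]_{x=0} = 0$, no matter what the network outputs for $\hat{u}_{\mathrm{NN}}$.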
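The exactness of the hard constraint can be checked numerically. In this sketch the DeepONet output is replaced by an arbitrary polynomial (a hypothetical stand-in), so that NumPy's `Polynomial` class gives exact derivatives; in the actual framework the derivatives of the network output would come from automatic differentiation.

```python
import numpy as np
from numpy.polynomial import Polynomial

def cantilever_ansatz(nn_output):
    """u(x) = g(x) + phi(x) * NN(x) with g = 0 and phi(x) = x**2 (fixed end at x = 0)."""
    phi = Polynomial([0.0, 0.0, 1.0])      # phi(0) = phi'(0) = 0
    return phi * nn_output

rng = np.random.default_rng(0)
for _ in range(3):
    nn = Polynomial(rng.normal(size=6))    # arbitrary stand-in for the network output
    u = cantilever_ansatz(nn)
    # the essential BCs hold exactly, whatever the "network" returns
    print(u(0.0) == 0.0, u.deriv()(0.0) == 0.0)   # True True
```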

Soft Constraints via Physics-Informed Loss

The natural (force) boundary conditions and the governing PDE itself are enforced as soft constraints by embedding them directly into the loss function, as defined in Equations (19)–(22).

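For the cantilever beam example (free at $x = L$), the natural boundary conditions are zero moment and zero shear:

$$EI\, u''(L) = 0, \qquad EI\, u'''(L) = 0.$$

These are incorporated into the loss term $\mathcal{L}_{\mathrm{BC}}$. The total physics-informed loss function becomes

$$\mathcal{L} = \lambda_d \mathcal{L}_{\mathrm{data}} + \lambda_r \mathcal{L}_{\mathrm{PDE}} + \lambda_b \mathcal{L}_{\mathrm{BC}},$$

where $\mathcal{L}_{\mathrm{PDE}}$ penalizes the violation of the Euler-Bernoulli equation $EI\, u''''(x) = q(x)$ (with flexural rigidity $EI$ and distributed load $q$) at collocation points inside the domain, and $\mathcal{L}_{\mathrm{BC}}$ now includes penalties for the natural BCs at $x = L$: $\mathcal{L}_{\mathrm{BC}} = |u''(L)|^2 + |u'''(L)|^2$. All derivatives $u''$, $u'''$, and $u''''$ are computed from the ansatz $u(x) = x^2\, \hat{u}_{\mathrm{NN}}(x)$ using automatic differentiation.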
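Under a similar stand-in assumption (a polynomial in place of the network output, and a uniform load $q$ chosen for illustration), the PDE and natural-BC loss terms for the cantilever can be evaluated as below. The closed-form cantilever deflection under uniform load, $u = q x^2 (6L^2 - 4Lx + x^2)/(24\,EI)$, drives both terms to zero, while an admissible but incorrect function such as $u = x^2$ does not.

```python
import numpy as np
from numpy.polynomial import Polynomial

def cantilever_losses(nn_output, EI, q, L, n_colloc=50):
    """PDE and natural-BC losses for EI u'''' = q on (0, L), fixed at 0 and free at L.

    Illustrative assumptions: uniform load q and a polynomial stand-in for the
    network output, so derivatives are exact via Polynomial.deriv.
    """
    u = Polynomial([0.0, 0.0, 1.0]) * nn_output     # hard-constrained ansatz u = x^2 * NN
    x = np.linspace(0.0, L, n_colloc + 2)[1:-1]     # interior collocation points
    r_pde = EI * u.deriv(4)(x) - q                  # Euler-Bernoulli residual
    loss_pde = float(np.mean(r_pde ** 2))
    # natural BCs at x = L: zero moment (u'' = 0) and zero shear (u''' = 0)
    loss_bc = float(u.deriv(2)(L) ** 2 + u.deriv(3)(L) ** 2)
    return loss_pde, loss_bc

EI, q, L = 2.0, 3.0, 1.5
# Closed-form deflection u = q x^2 (6L^2 - 4Lx + x^2) / (24 EI),
# written in ansatz form u = x^2 * NN with NN = q (6L^2 - 4Lx + x^2) / (24 EI)
nn_exact = Polynomial([6 * L**2, -4 * L, 1.0]) * (q / (24 * EI))
print(cantilever_losses(nn_exact, EI, q, L))            # both vanish (up to round-off)
print(cantilever_losses(Polynomial([1.0]), EI, q, L))   # (9.0, 4.0): u = x^2 is not a solution
```

Only the PDE and natural-BC terms need to be driven to zero by training; the essential BCs at $x = 0$ are already built into the ansatz.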

Generally, in the suggested framework:

Hard Constraints (Ansatz): Essential BCs are enforced exactly by defining the final solution as $u = g + \phi\, \hat{u}_{\mathrm{NN}}$.

Soft Constraints (Loss Function): The governing PDE and natural BCs are enforced by minimizing their residuals, which are calculated from the hard-constrained solution $u$.

This hybrid approach enforces the geometric constraints exactly while allowing the model to learn the physics and the force boundary conditions from data and residual minimization, which is a hallmark of the GS-PI-DeepONet method.