1. Introduction

Industrial operations involve dynamic environments where the safe use of machinery is essential for preventing accidents and ensuring worker well-being. Timely detection of safety risks, particularly those involving mobile machinery, plays a critical role in maintaining operational integrity. Traditional safety risk detection systems—often reliant on manual oversight or static, rule-based algorithms—struggle to adapt to changing conditions such as varying lighting, camera angles, or equipment configurations. This lack of adaptability limits their effectiveness in real-world deployments.

Recent advancements in deep learning have shown promise in automating video-based safety monitoring by learning complex spatial and temporal patterns from operational footage [1]. However, these models frequently face challenges in generalizing across different scenarios, especially when trained on data from a single operational context. A noticeable decline in performance, often reflected by F1-scores dropping below 0.85 in unfamiliar environments, highlights the need for more adaptable and data-efficient solutions.

To address this limitation, this work explores the integration of feature transfer learning into the safety risk detection pipeline. By leveraging pre-trained models and progressively retraining them with limited data from new scenarios (5–50%), the approach enables the creation of generalizable detection systems without the need for extensive retraining or large annotated datasets [2]. This is particularly beneficial in industrial safety applications, where certain risk events are rare and difficult to reproduce [3,4].

The proposed methodology focuses on forklift operations and evaluates detection performance across nine safety risk categories defined by OSHA 3949. A hybrid deep learning architecture is implemented, combining convolutional and temporal processing to capture both object features and movement patterns. The system’s adaptability is benchmarked against commercial tools—NVIDIA DeepStream SDK and Amazon Rekognition Custom Labels—using F1-score as a primary performance metric.

To ensure practical deployment, the methodology also examines model efficiency in embedded environments such as Raspberry Pi and Jetson Nano. Metrics such as inference time, model weight, and data acquisition latency are assessed to validate real-time applicability. Furthermore, model interpretability is addressed through SHAP (Shapley Additive Explanations) analysis, which demonstrates a post-transfer shift in feature relevance toward critical elements like equipment forks, load position, and workspace boundaries. This not only enhances transparency but also supports efficient annotation in future training iterations.

The paper is organized as follows:

Section 2 reviews related work in deep learning-based risk detection and transfer learning;

Section 3 details the proposed methodology;

Section 4 presents classification results, discusses interpretability and feature relevance, and benchmarks inference performance across platforms;

Section 5 offers concluding remarks and outlines future research directions.

3. Methodology

This study proposes a safety risk event detection system for forklift operations using a dual-stage deep learning architecture (Deep Risk Network, DRN), enhanced by progressive transfer learning and validated through a stratified scenario-based experimental design. In short, the proposed approach processes video data to identify safety-critical events. The methodology is structured into five sequential components: data acquisition, scenario structuring, model design, training protocol, and evaluation metrics.

3.1. Stratified Scenario Design and Data Processing

To ensure robust generalization under realistic deployment conditions, this study employs a scenario-based stratification strategy rather than conventional random or class-balanced stratified sampling. Each scenario reflects a distinct combination of operational conditions—such as camera angles, lighting environments, and workspace layouts—that are likely to influence model performance. The objective is to assess model generalization to unseen operational contexts.

The scenario design was informed by the following criteria: environmental variability (e.g., daylight vs. low light, reflective surfaces); camera positioning (e.g., front, lateral, and overhead perspectives); operational activity (e.g., routine operation vs. risk-prone behaviors); and layout constraints (e.g., open vs. cluttered zones and presence of blind spots).

The dataset includes video recordings from 9 stratified scenarios (see Table 1), each constructed to capture a unique combination of these attributes. Within each scenario, forklift operations were recorded using three synchronized RGB cameras at 15 frames per second.

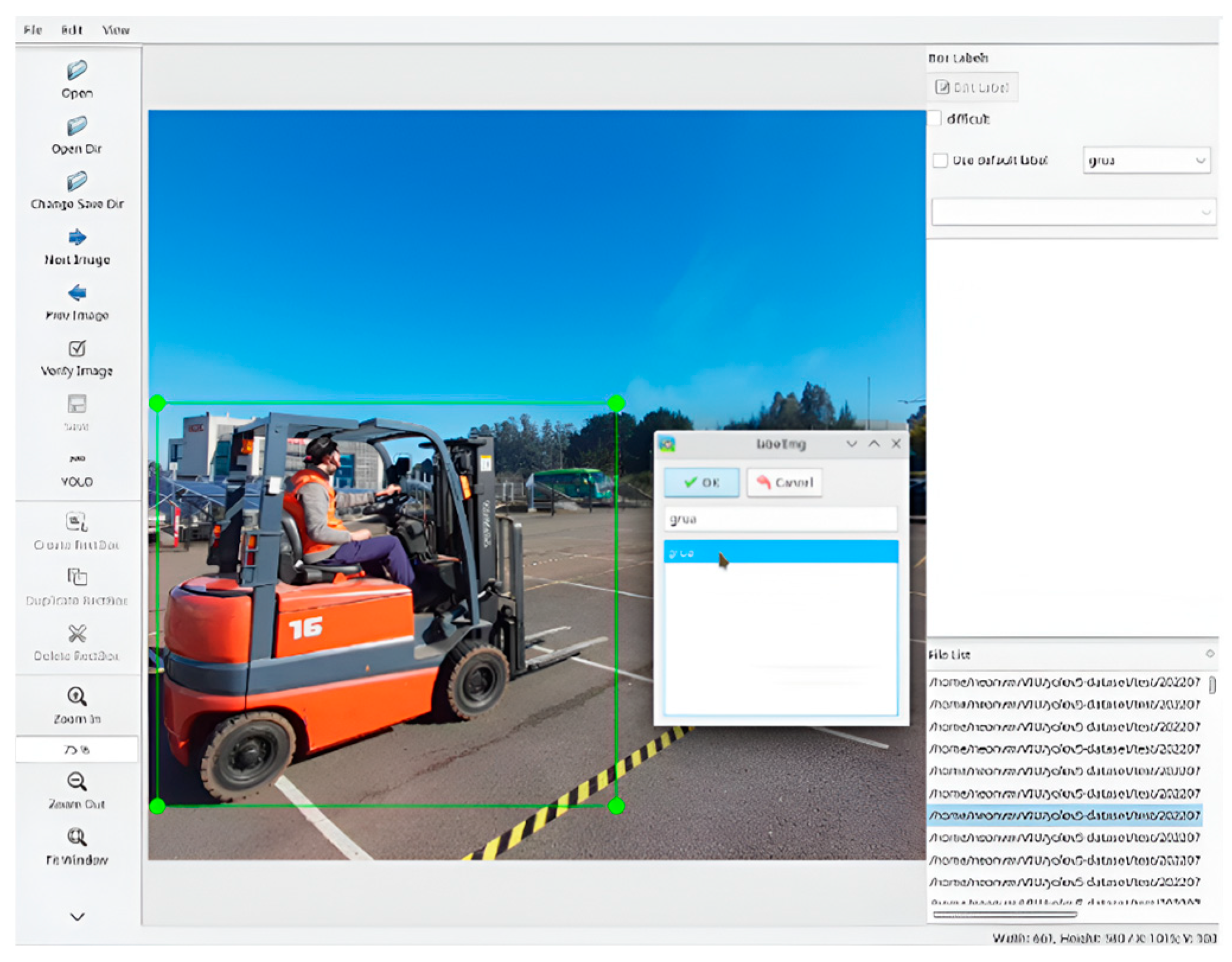

Annotation of safety-relevant elements (such as forklift forks, operator presence, and load position) was performed using the LabelImg tool. Labels correspond to OSHA 3949 safety risk categories, supporting both object-level and event-level classification tasks. To mitigate class imbalance and enhance robustness against visual variability, synthetic data augmentation techniques were applied during preprocessing.

Data Labeling Process: To train the DRN’s object detection component, annotations were generated using LabelImg, an open-source graphical labeling tool implemented in Python 3.9 with a Qt-based GUI. This tool enables precise frame-by-frame annotation of relevant objects, such as forklift chassis, fork arms, load positions, and operator locations, among others. Each annotation includes bounding boxes and corresponding class labels, stored in structured JSON files. These labels provide the spatial supervision required for effective object detection and allow the model to learn visual patterns associated with risk-relevant elements in the scene.

In parallel, annotations were prepared for two benchmark platforms used for performance comparison:

Amazon Rekognition Custom Labels: Requires bounding-box annotations for each frame, generated and managed using Amazon SageMaker. This method aligns with Rekognition’s object detection framework.

NVIDIA DeepStream SDK: Operates using video segments labeled with high-level activity classes (e.g., transporting elevated load, turning on a slope), rather than frame-level object annotations. This facilitates a more temporal labeling approach suited for event detection.

Synthetic Data Augmentation: To address the class imbalance inherent in industrial datasets—where hazardous events are significantly less frequent than normal operations—synthetic data augmentation techniques were applied to increase training diversity. These included image rotation (±15–30 degrees), salt-and-pepper noise injection to simulate sensor noise, saturation and brightness adjustments for lighting variability, and image composition to generate new scenes containing risk-related objects in novel arrangements.

These augmentations enhance the model’s robustness by exposing it to a wider distribution of visual patterns and conditions, thereby reducing overfitting and improving generalization in unseen environments.
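Two of the augmentations listed above, salt-and-pepper noise and brightness adjustment, can be sketched in a few lines. This is a minimal illustration assuming NumPy and 8-bit RGB frames; the function names, the noise fraction of 2%, and the brightness factor of 1.2 are illustrative assumptions, not values reported in this study.

```python
import numpy as np

def salt_and_pepper(image, amount=0.02, rng=None):
    """Set a random fraction `amount` of pixel locations to black or white."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = image.copy()
    # One random draw per pixel location, shared across color channels.
    draw = rng.random(image.shape[:2])
    noisy[draw < amount / 2] = 0          # pepper
    noisy[draw > 1 - amount / 2] = 255    # salt
    return noisy

def adjust_brightness(image, factor):
    """Scale pixel intensities and clip back to the valid 8-bit range."""
    scaled = image.astype(np.float32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)

# Placeholder mid-gray 720p frame standing in for a real video frame.
frame = np.full((720, 1280, 3), 128, dtype=np.uint8)
noisy = salt_and_pepper(frame, amount=0.02, rng=np.random.default_rng(0))
augmented = adjust_brightness(noisy, factor=1.2)
```

Fixing the random seed, as in the example, keeps the augmented set reproducible across training runs.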

3.2. Scenario-Based Data Splitting Strategy

Stratified sampling applies when a single dataset (usually an image dataset) is split into training, validation, and test sets. The purpose is to ensure that each split has the same distribution of key labels or classes. This approach is particularly useful in cases of class imbalance, during cross-validation, and for preventing biased training or evaluation results caused by skewed class distributions. However, this method accounts only for class distribution (or other stratifying features) and does not control for real-world variations such as lighting conditions, camera types, environmental settings, or user differences [33].

Stratified scenario design, by contrast, defines multiple experimental conditions (scenarios) whose strata are set by external, domain-specific factors, e.g., weather conditions, time of day, camera angle, or hardware device. Here, the purpose is to evaluate model robustness and generalization across distinct, meaningful real-world scenarios rather than across balanced class splits alone. This approach is particularly useful in computer vision applications with known sources of domain shift or operational variability (e.g., autonomous driving, medical imaging, or safety monitoring), especially when evaluating generalization across diverse environments or subpopulations to simulate real-world deployment scenarios [34]. However, this approach involves a more complex setup: it requires metadata annotations or scenario labels and often demands larger or specifically structured datasets.

Scenarios are defined as coherent groups of samples that share a specific combination of environmental and operational conditions. These scenarios serve as the primary unit of stratification. In this paper, the dataset is partitioned as follows:

Training Set: Includes samples from a subset of scenarios, capturing intra-scenario variability while limiting cross-scenario exposure.

Validation Set: Contains held-out identities or time windows from the same scenarios as the training set. These samples are disjoint from the training instances, which prevents overlap while preserving distributional consistency.

Testing Set: Comprises samples from entirely unseen scenarios, thereby simulating the domain shift encountered at deployment and providing a realistic evaluation of its impact.

This setup reflects a cross-domain generalization task and imposes a more rigorous evaluation protocol than conventional i.i.d. class-based sampling, as the model is assessed on distributions not observed during training. Thus, it enforces non-i.i.d. generalization and prevents leakage of scenario-specific features between splits, emulating real deployment where the model encounters unfamiliar site conditions [35].
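The partitioning rule described above can be sketched as follows. This is a minimal illustration, assuming each sample is identified by a hypothetical `(scenario_id, clip_id)` pair; the function name `scenario_split`, the 20% validation fraction, and the choice of held-out scenarios are assumptions made for the example, not details taken from this study.

```python
import random
from collections import defaultdict

def scenario_split(samples, test_scenarios, val_fraction=0.2, seed=0):
    """Split (scenario_id, clip_id) samples so test scenarios are never seen in training."""
    by_scenario = defaultdict(list)
    for scenario_id, clip_id in samples:
        by_scenario[scenario_id].append(clip_id)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for scenario_id, clips in sorted(by_scenario.items()):
        if scenario_id in test_scenarios:
            # The whole scenario is held out to simulate domain shift.
            test += [(scenario_id, c) for c in clips]
            continue
        clips = sorted(clips)
        rng.shuffle(clips)
        n_val = max(1, int(len(clips) * val_fraction))
        # Validation uses held-out clips from the same (seen) scenarios.
        val += [(scenario_id, c) for c in clips[:n_val]]
        train += [(scenario_id, c) for c in clips[n_val:]]
    return train, val, test

# Illustrative data: 9 scenarios with 10 clips each; scenarios 8 and 9 are held out.
samples = [(s, f"clip_{s}_{k}") for s in range(1, 10) for k in range(10)]
train, val, test = scenario_split(samples, test_scenarios={8, 9})
```

Because the split is made at the scenario level first and at the clip level second, no clip from a test scenario can leak into training or validation.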

3.3. Deep Risk Network Architecture Structural Overview

The Deep Risk Network (DRN) employed in this work is a dual-stage deep learning architecture designed for robust and accurate detection of safety risk events in industrial environments. Its structure integrates both spatial and temporal feature learning, making it particularly well-suited for analyzing complex video data from forklift operations. The architecture has demonstrated efficiency, robustness, and adaptability in experimental evaluations, with performance comparable to state-of-the-art time-series classification models [36].

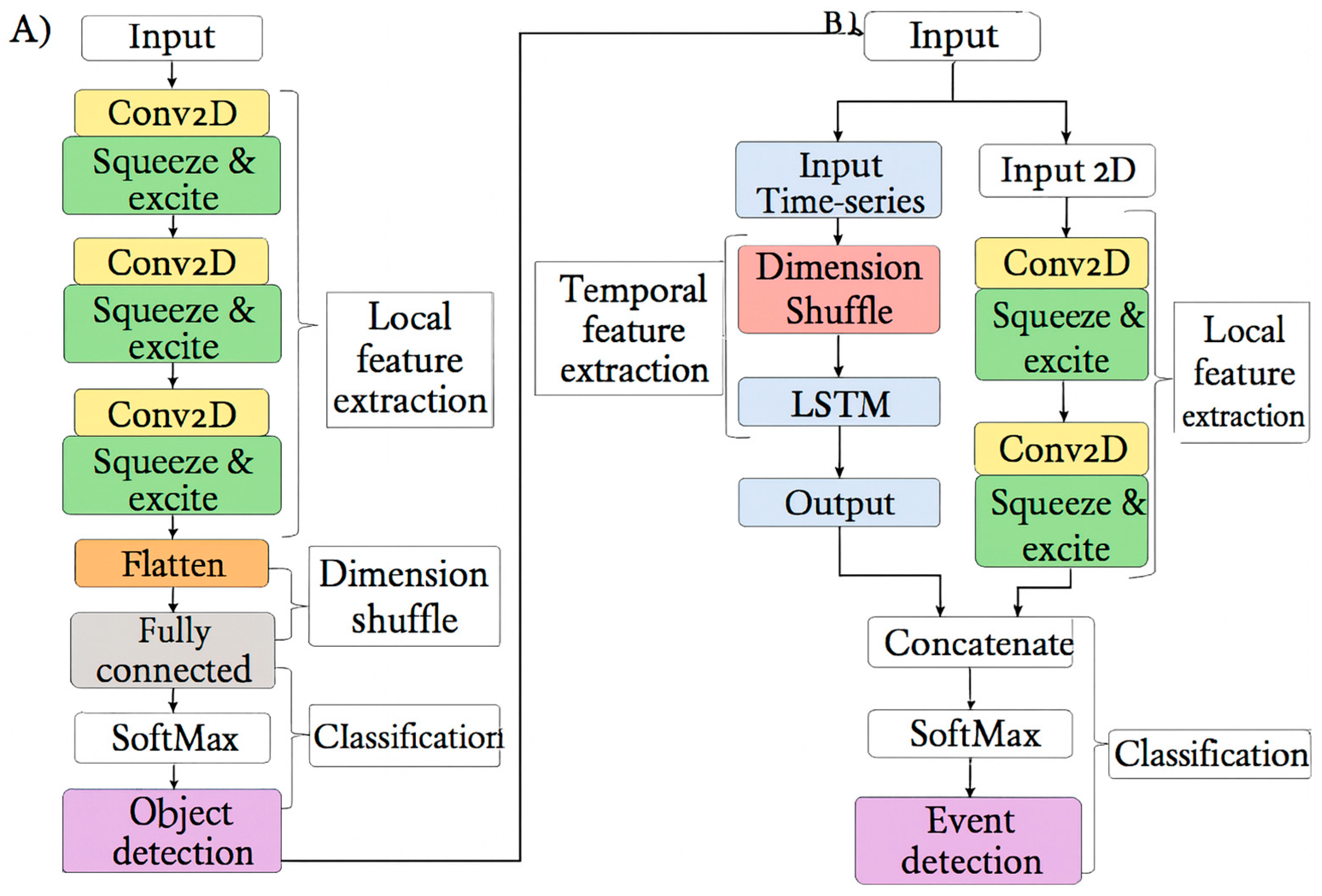

Figure 1 illustrates the dual-stage structure of the DRN architecture, composed of two main components:

Spatial Feature Extraction (Object Detection): (i) Input: single-frame or multimodal image (e.g., from three cameras); (ii) Conv2D + SE Blocks (x4): sequential convolution layers with Squeeze-and-Excitation for channel attention; (iii) Flatten: converts the spatial map into a vector representation; (iv) Fully Connected Layer + Softmax: performs object classification; and (v) Output: provides spatial labels for key visual elements (e.g., forks, loads, operators).

Temporal Feature Integration (Event Detection): (i) Input: combines 2D spatial features and temporal time-series data; (ii) Dimension Shuffle: rearranges data to fit the LSTM input format; (iii) LSTM Block: learns temporal relationships across frames; (iv) Additional Conv2D + SE Blocks: refines frame-level features; (v) Concatenation + Softmax: fuses spatial and temporal outputs for final classification; and (vi) Output: delivers event-level predictions (e.g., lifting high loads, turning on slopes, raising personnel) [37,38,39].
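The channel-attention step performed by each Squeeze-and-Excitation (SE) block can be illustrated with a forward pass in NumPy. This is a generic sketch of the standard SE operation (squeeze, excitation, scale), not the DRN implementation: the channel count, the reduction ratio of 4, and the random weights are assumptions chosen only to make the example runnable.

```python
import numpy as np

def squeeze_excite(feature_map, w_reduce, w_expand):
    """Forward pass of one SE block on a (channels, height, width) feature map."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = feature_map.mean(axis=(1, 2))                 # shape (C,)
    # Excitation: bottleneck MLP (ReLU, then sigmoid) yields weights in (0, 1).
    hidden = np.maximum(w_reduce @ squeezed, 0.0)            # shape (C // r,)
    weights = 1.0 / (1.0 + np.exp(-(w_expand @ hidden)))     # shape (C,)
    # Scale: reweight each channel of the input feature map.
    return feature_map * weights[:, None, None]

rng = np.random.default_rng(0)
channels, reduction = 16, 4          # illustrative sizes, not taken from the paper
features = rng.standard_normal((channels, 8, 8))
w_reduce = rng.standard_normal((channels // reduction, channels)) * 0.1
w_expand = rng.standard_normal((channels, channels // reduction)) * 0.1
recalibrated = squeeze_excite(features, w_reduce, w_expand)
```

In a trained network the two weight matrices are learned, so channels that carry risk-relevant cues can be amplified relative to the others.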

Key Strengths of the DRN Architecture:

Thus, the DRN architecture is a purpose-built, high-performance solution for intelligent video-based risk detection in industrial settings. By effectively combining spatial feature recognition and temporal sequence modeling, the DRN achieves strong generalization and accuracy even in variable conditions. Its modularity, explainability, and compatibility with transfer learning further position it as a scalable foundation for next-generation industrial safety systems.

3.4. Cloud and Edge Computing Platforms for Benchmarking

To benchmark the performance of the selected open-access, gold-standard DRN for prediction at the edge, this study incorporates two widely used commercial platforms: Amazon Rekognition Custom Labels and NVIDIA DeepStream SDK. These standardized tools provide industry-grade pipelines for training and deploying computer vision models, enabling meaningful comparisons with the proposed architecture under similar conditions [43,44].

Amazon Rekognition Custom Labels enables the rapid development of custom object detection and activity recognition models, leveraging pre-trained architectures developed from millions of annotated images across diverse categories. This platform allows efficient model training on smaller, domain-specific datasets, making it well-suited for industrial applications such as safety monitoring, process automation, and video analytics. The automated workflow consists of the following sequence:

A video file is uploaded to Amazon Simple Storage Service (S3).

This action triggers an AWS Lambda function, which in turn calls the Amazon Rekognition Custom Labels inference endpoint.

Detected results are processed and passed through the Amazon Simple Queue Service (SQS) for downstream actions or integration with dashboards.

This cloud-native architecture facilitates quick deployment, seamless scalability, and cost-effective model training for industrial video applications. It supports high-level abstraction, enabling users to train and deploy custom classifiers with minimal coding or infrastructure overhead.
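The S3, Lambda, Rekognition, and SQS sequence above can be sketched as a single handler function. This is a hedged illustration rather than the deployed code: `handle_upload`, `model_arn`, and `queue_url` are hypothetical names, the confidence threshold of 80 is an assumption, and because the `detect_custom_labels` call operates on single images, the sketch assumes the uploaded object is a frame image extracted from the video. The service clients are passed in as arguments so the logic can be exercised without AWS credentials.

```python
import json
from urllib.parse import unquote_plus

def handle_upload(event, rekognition, sqs, model_arn, queue_url, min_confidence=80.0):
    """Run Custom Labels inference on the uploaded S3 object and queue the result."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    # Object keys arrive URL-encoded in S3 event notifications.
    key = unquote_plus(record["object"]["key"])

    response = rekognition.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    labels = [
        {"name": item["Name"], "confidence": item["Confidence"]}
        for item in response["CustomLabels"]
    ]
    # Forward a compact summary for dashboards or downstream actions.
    message = {"bucket": bucket, "key": key, "labels": labels}
    sqs.send_message(QueueUrl=queue_url, MessageBody=json.dumps(message))
    return message
```

Inside an actual Lambda function, the two clients would typically be created once with `boto3.client("rekognition")` and `boto3.client("sqs")` and reused across invocations.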

For on-edge processing, the NVIDIA DeepStream SDK was implemented using the Jetson AGX Xavier development board, which offers high-performance computing suitable for real-time deep learning inference. Unlike Amazon Rekognition, which is cloud-based, DeepStream is designed for edge computing, enabling video analytics directly on local devices without dependence on cloud services [45,46]. In this case, the implementation process involved the following steps:

Videos are stored and labeled locally to serve as a dataset for training.

A Convolutional Neural Network (CNN) is trained and fine-tuned using DeepStream’s native support for accelerated model training and inference.

The development followed NVIDIA’s recommended pipeline, which integrates pre-trained detection and classification models for rapid prototyping and deployment.

After training, the model is evaluated using a separate set of test videos not included in the training set. The SDK generates outputs in JSON format, with frame-by-frame classification results that indicate detected operational states. Additionally, DeepStream supports real-time video rendering, enabling overlays that visualize model predictions either during live inference or in post-processing.

Both platforms offer complementary advantages. Amazon Rekognition excels in cloud-based scalability and rapid prototyping, whereas NVIDIA DeepStream SDK provides low-latency, on-device inference optimized for real-time monitoring in bandwidth-limited or disconnected environments. These characteristics make them ideal reference points for evaluating the adaptability, performance, and deployment efficiency of the proposed DRN model in diverse industrial contexts.

To assess model performance, we employed a set of standard classification metrics. F1-score was used as the primary measure given its robustness to class imbalance, which is common in safety event detection, where risk categories are significantly rarer than normal operations. The F1-score is defined as the harmonic mean of precision and recall, balancing both false positives and false negatives.

In addition, we report the area under the receiver operating characteristic curve (AUC-ROC), which evaluates the ability of the classifier to discriminate between positive and negative classes across varying decision thresholds. AUC-ROC values close to 1.0 indicate strong separability, while values near 0.5 correspond to random classification.

To better capture performance on imbalanced scenarios, particularly rare risk events, we also included the Precision–Recall AUC (PR-AUC). Unlike AUC-ROC, PR-AUC focuses on the trade-off between precision and recall, providing a more informative measure of classifier reliability when positive cases are scarce.

This combination of metrics (F1, AUC-ROC, and PR-AUC) enables a comprehensive evaluation of both balanced and imbalanced safety event detection tasks.
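The three metrics can be computed from scratch as shown in the sketch below, which uses only the Python standard library. The labels and scores are made-up toy values, the 0.5 decision threshold is an assumption, and PR-AUC is estimated here with average precision, one common step-wise estimator that assumes no tied scores; the evaluation code used in this study may differ.

```python
def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for binary labels (1 = risk event)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def pr_auc(y_true, scores):
    """Average precision: mean of the precision measured at each positive's rank."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank
    return total / hits

# Toy example: 3 risk events among 8 clips, with classifier scores in [0, 1].
y_true = [0, 0, 1, 0, 1, 0, 0, 1]
scores = [0.10, 0.40, 0.35, 0.20, 0.80, 0.05, 0.60, 0.70]
y_pred = [int(s >= 0.5) for s in scores]

f1 = f1_score(y_true, y_pred)
auc = roc_auc(y_true, scores)
ap = pr_auc(y_true, scores)
```

F1 depends on the chosen threshold, whereas the two AUC measures summarize ranking quality across all thresholds, which is why the metrics are reported together.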

3.5. Transfer Learning for Generalization

Transfer learning has proven to be an effective strategy to address the limited generalization of deep learning models, particularly in industrial contexts where labeled data are scarce or expensive to obtain. It enables the reuse of knowledge acquired from a source task or domain to enhance performance in a related target task, thereby improving the adaptability and scalability of video-based safety risk detection systems across heterogeneous operational environments [47]. Unlike conventional transfer learning approaches that rely on full model fine-tuning or one-shot adaptation to new domains, our method adopts an incremental forward-transfer strategy. In this approach, scenario-specific data are progressively integrated in small proportions (5–50%), while the model is evaluated only on previously unseen scenarios [48]. This differs fundamentally from existing industrial safety applications, which often perform a complete retraining step for each new deployment [49]. Our incremental design mitigates the degradation of previously acquired knowledge, reduces computational overhead, and enables rapid adaptation to heterogeneous environments where annotation resources are limited [50].

This study adopts a feature-based transfer learning approach, leveraging latent representations, i.e., intermediate features learned by convolutional and recurrent neural networks. These representations are assumed to encode high-level, domain-invariant properties, enabling recognition in new environments without requiring full model retraining [51]. This is especially advantageous in industrial applications, where collecting and annotating data for each new scenario can be impractical [52].

The proposed strategy is computationally efficient and particularly effective when source and target tasks are closely related, and the target dataset is limited. By reusing features from the final hidden layers, the model maintains reliable performance without retraining from scratch.

To evaluate generalization and adaptability, an incremental transfer learning strategy is implemented, based on scenario-driven data expansion. Training data from new operational contexts is progressively integrated, allowing the model to adapt to novel environments while preserving previously learned knowledge. This structured approach supports robust deployment in diverse and dynamic industrial settings [53].

The proposed experiment involves training a feature-based transfer learning model in one scenario and subsequently testing it in a different, previously unseen scenario [42]. This approach mitigates overfitting and enables the evaluation of transfer learning performance across 10 different scenarios. Typically, feature transfer is conducted by reusing between 50% and 100% of the available data from the source domain [54,55]. In contrast, our method retrains the model using only a small fraction of the data, aiming to evaluate its generalization capabilities under conditions of extremely limited data availability.

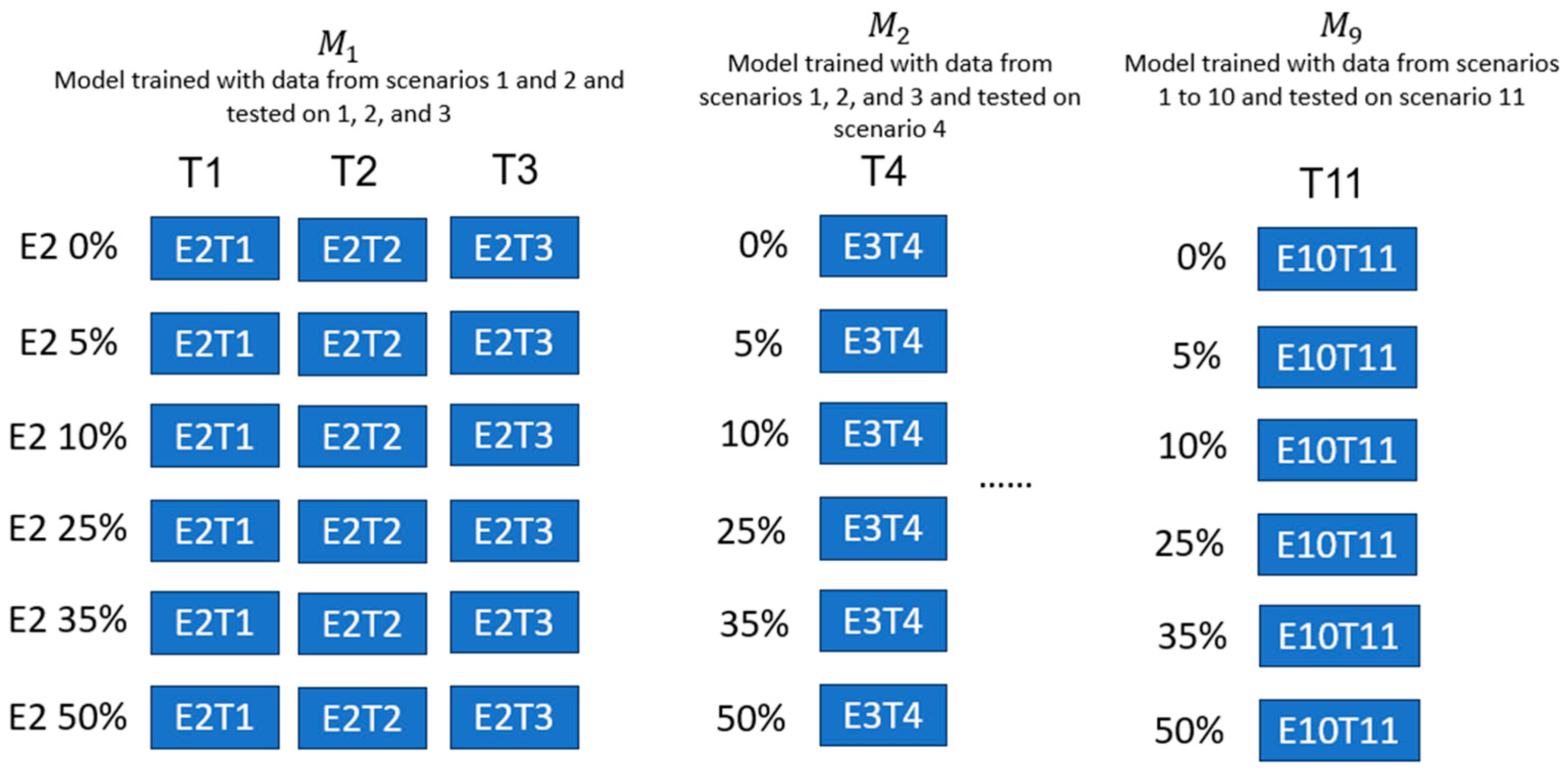

The initial model $M_1$ is trained using the complete training set from scenario $E_1$, along with a specified percentage $p\%$ of the training set from scenario $E_2$. The resulting model is evaluated on the test sets of scenarios $T_1$, $T_2$, and $T_3$. Formally, the training set for $M_1$ is defined in Equation (1):

$$\mathrm{Train}(M_1) = E_1 \cup p\% \cdot E_2 \tag{1}$$

where $p\% \cdot E_j$ denotes a $p\%$ subset of the training set of scenario $E_j$.

For subsequent models $M_i$, where $i \in [2, 9]$, the training set includes the full data from scenario $E_1$ and incremental portions $p\%$ from scenarios $E_2$ to $E_{i+1}$. These models are evaluated only on a single new test scenario $T_{i+2}$, distinct from the training inputs, to streamline responsiveness and focus evaluation on the most recently introduced conditions. This approach balances computational efficiency with adaptive performance tracking. The training set is defined as:

$$\mathrm{Train}(M_i) = E_1 \cup \bigcup_{j=2}^{i+1} p\% \cdot E_j$$

The evaluated values of $p\%$ include 0%, 5%, 10%, 25%, 35%, and 50%, representing varying levels of exposure to novel operating environments.

For each test scenario, all models $M_i$ trained with different percentages $p\%$ of additional scenario data are evaluated using a predefined performance metric (e.g., accuracy, F1-score). The model achieving the highest score is selected as the best-performing configuration. The corresponding training composition is then identified as the optimal percentage of new scenario data required to maximize performance under that scenario.

This selection procedure quantifies the trade-off between the amount of transferred knowledge and the performance gain achieved, while identifying the minimum data proportion necessary for effective generalization.

Only the first round of retraining (model $M_1$) is evaluated across multiple test scenarios ($T_1$, $T_2$, and $T_3$). All subsequent models are evaluated solely on a single, newly introduced test scenario $T_{i+2}$, in accordance with the forward-transfer protocol and responsiveness requirements.
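The incremental protocol can be sketched in two small functions: one that assembles the training set for a given model index and percentage, and one that picks the best percentage for a test scenario. This is an illustration under stated assumptions: the function names, the clip identifiers, and the F1 values in the example are placeholders invented for the sketch (not results from this study), and ties are resolved in favor of the smaller percentage as one possible reading of the minimum-data criterion.

```python
import random

def build_training_set(scenario_data, i, p, seed=0):
    """Training set for model M_i: all of E_1 plus a fraction p of E_2..E_{i+1}."""
    rng = random.Random(seed)
    training = list(scenario_data[1])
    for j in range(2, i + 2):
        clips = scenario_data[j]
        # Draw a random subset of the requested size from scenario E_j.
        training += rng.sample(clips, round(len(clips) * p))
    return training

def select_best_fraction(f1_by_fraction):
    """Return the fraction with the highest F1; ties go to the smaller fraction."""
    return max(sorted(f1_by_fraction), key=lambda p: f1_by_fraction[p])

# Illustrative data: four scenarios with 20 training clips each.
scenario_data = {j: [f"E{j}_clip{k}" for k in range(20)] for j in range(1, 5)}
train_m2 = build_training_set(scenario_data, i=2, p=0.25)

# Placeholder scores for one test scenario, keyed by the fraction p.
placeholder_f1 = {0.0: 0.60, 0.05: 0.72, 0.10: 0.81, 0.25: 0.88, 0.35: 0.88, 0.50: 0.87}
best_p = select_best_fraction(placeholder_f1)
```

With these placeholder scores, 25% and 35% tie for the highest F1, so the smaller fraction is returned.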

This design provides a scalable framework for evaluating adaptive learning in evolving environments. It is especially relevant for real-world applications requiring continual model updating with limited retraining and testing budgets—such as industrial monitoring, safety-critical systems, and predictive maintenance in non-stationary contexts.

The experimental methodology, illustrated in Figure 2, is applied to a dataset comprising 9 different operational scenarios, each simulating realistic industrial conditions aligned with OSHA 3949 safety categories. Additionally, the commercial standard solutions (Amazon Rekognition Custom Labels and NVIDIA DeepStream SDK) are included for comparative analysis under the same experimental protocol as a benchmark.

The main goal is to optimize the trade-off between training data volume and generalization performance, particularly in data-scarce industrial settings. The proposed approach evaluates the scalability and transferability of safety risk detection models by systematically analyzing how incremental data inclusion impacts metrics such as F1-score, inference time, and robustness to domain shifts.

4. Results

4.1. Data Sources and Testbed

To ensure the effectiveness of any deep learning algorithm, whether for classification or regression, it is essential that the training dataset is both diverse and sufficient in volume. In this study, the datasets were constructed from a combination of third-party video sources and data captured in a controlled testbed environment specifically designed to replicate industrial forklift operations, referred to as Scenario 1.

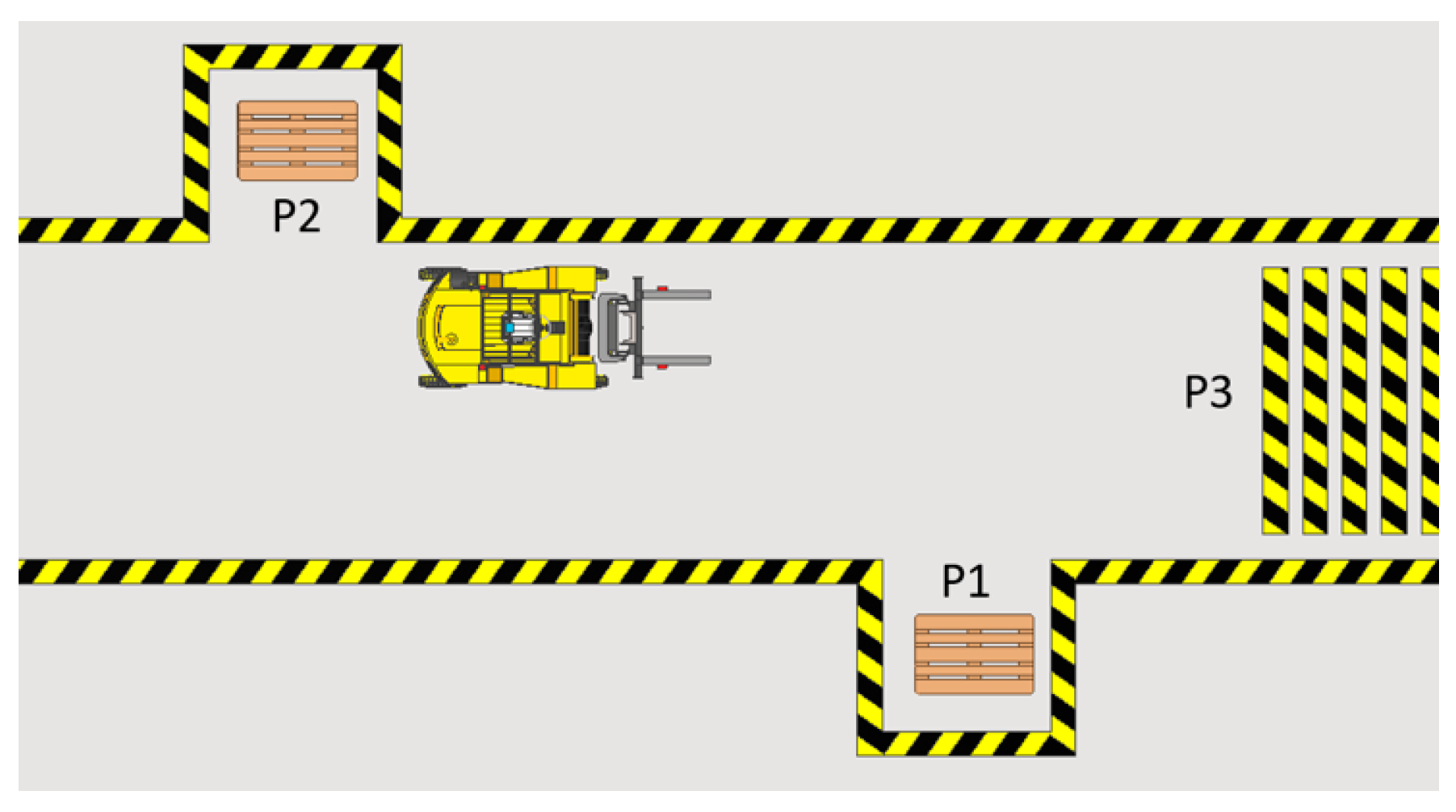

The testbed for Scenario 1 was engineered to simulate the key operational scenarios outlined in Table 1. It includes the following elements: (i) an operational area marked on the ground, (ii) pallets with varying load configurations, (iii) a ramp to simulate slope navigation, and (iv) a video acquisition system consisting of three strategically positioned cameras. As illustrated in Figure 3, the testbed layout is organized around three main reference points: Point 1 (P1) for initial loading, Point 2 (P2) for unloading, and Point 3 (P3), a zone for inclined maneuvers.

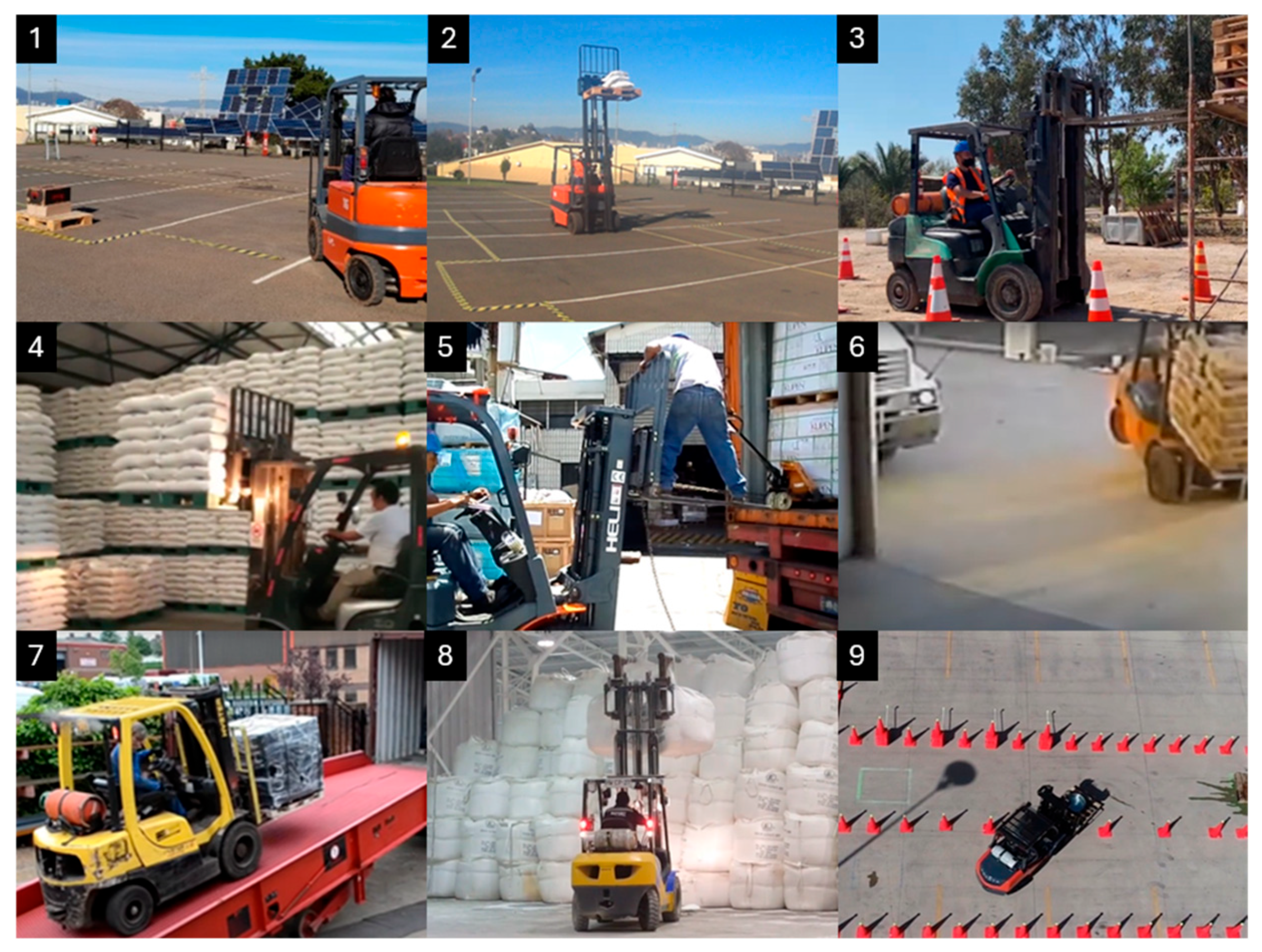

Figure 4 shows the actual implementation of the testbed. Additionally, a curated set of forklift operation videos was sourced from external environments, featuring variations in lighting, camera angles, and background context.

Table 1 provides a detailed overview of the external video sources and the testbed recordings used to enhance the dataset’s generalization capacity for Scenario 1. Representative examples of key events from the scenarios (or datasets) used for transfer learning experimentation are shown in Figure 4.

4.2. Dataset Composition

In this study, a scenario is defined as a collection of audiovisual recordings obtained under a consistent operational context. This includes the physical characteristics of the environment (e.g., indoor or outdoor settings), as well as factors such as operator behavior, machinery, the video capture system, and the frequency of safety risk-related maneuvers. For instance, Scenario 1 corresponds to recordings obtained in the controlled testbed environment; Scenario 2 includes videos captured with mobile phone cameras during outdoor training sessions; and Scenario 3 consists of footage from surveillance cameras installed in an industrial warehouse. The remaining scenarios were grouped based on their contextual and technical coherence.

Thus, each scenario represents a unique domain instance dataset, characterized by distinct variations in lighting, resolution, camera perspective, physical environment, and operating style. This diversity is essential for evaluating the model’s ability to generalize to previously unseen conditions. Within this framework, a key methodological requirement is that performance evaluation following the transfer learning process must be conducted using data from a different scenario than the one used for training. This strategy not only prevents data leakage but also enables a more rigorous and representative assessment of the model’s generalization capacity across varied operational contexts.

The datasets comprised 115 videos across nine activity classes; each operation was recorded in both exterior and interior environments, with a total duration of approximately 250 min of annotated footage.

The datasets used for training and evaluation in this study demonstrate strong adherence to essential data quality standards for machine learning, particularly in industrial safety applications. The datasets exhibit notable diversity, encompassing nine distinct forklift operation types—including both normal and OSHA 3949-defined risk events—captured across interior and exterior environments with multi-angle video recording. This diversity supports robust generalization to operational variability.

In terms of volume, the datasets include over 135,000 estimated frames for normal operations, with other classes contributing 9000–40,000 frames each, providing sufficient data to support deep learning architectures. While class balance is not strictly uniform, it is thoughtfully engineered: high-risk or visually complex activities are weighted more heavily (15%), while rarer events are represented by 5%, aligning the data with practical risk modeling needs. The use of F1-score ensures fair evaluation despite these imbalances.

The datasets benefit from high-quality and consistent labeling, guided by the OSHA taxonomy and implemented with standardized annotation tools (LabelImg with JSON output). Relevance is strong, as all data correspond directly to safety-critical operational contexts. The datasets are also complete, with no missing data and full frame coverage across all scenarios.

The datasets remain representative of their intended domain. Efforts to assess generalization through scenario-based testing and transfer learning further strengthen their applicability.

Finally, annotation granularity is well-matched to the task: labels are detailed enough to capture specific risk events without being overly fine-grained. Overall, the datasets are well-structured, balanced for industrial risk detection, and suitable for training high-performance, generalizable models. The datasets satisfy key machine learning data principles for robustness and generalization. They have sufficient volume and class coverage for supervised deep learning and provide a well-calibrated benchmark for transfer learning across scenarios.

4.3. Dataset Labeling

The proposed safety risk event recognition system was composed of two classification stages. The first classifier focused on object detection, identifying elements such as the forklift, its forks, the load, and safety boundary tape. This process required frame-level segmentation of video sequences. Each video was divided into individual frames, where objects of interest were annotated using bounding boxes and class labels. The conversion of videos into frames was performed using a GStreamer-based pipeline. For manual annotation, the open-source tool LabelImg was employed [56]. This tool produced two outputs per frame: the annotated image and a corresponding JSON file specifying the bounding box coordinates and class label.

Figure 5 shows the LabelImg interface used during the annotation workflow.

The second classification algorithm analyzed the detected objects and context to classify the specific type of safety risk event taking place. For this purpose, a training set was generated from labeled video segments. The previously annotated frames were re-rendered into video clips with overlaid object labels. These rendered videos were then categorized according to the action being executed, forming the input for training the event classifier. The original video recordings used in this process ranged from 60 to 120 frames per second and were captured in Full HD (1080 p). However, to reduce computational demands during processing, all videos were downsampled to 15 fps and HD resolution (720 p).
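The temporal downsampling step amounts to keeping every k-th frame, where k is the ratio between the source and target frame rates. The sketch below illustrates the arithmetic only; the function name `frames_to_keep` is hypothetical, it assumes the source rate is an integer multiple of 15 fps, and it is not the GStreamer-based pipeline used in this study.

```python
def frames_to_keep(n_frames, source_fps, target_fps=15):
    """Indices of the frames retained when decimating a clip to target_fps."""
    if source_fps % target_fps != 0:
        raise ValueError("this sketch handles integer decimation factors only")
    step = source_fps // target_fps   # e.g., 60 fps -> every 4th frame
    return list(range(0, n_frames, step))

# Two seconds of footage at 60 fps and at 120 fps both reduce to 30 frames.
kept_60 = frames_to_keep(120, source_fps=60)
kept_120 = frames_to_keep(240, source_fps=120)
```

Decimating by an integer factor preserves the original frame timing, so frame-level annotations on the retained frames remain valid after downsampling.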

In the standardized tools employed for deep learning model evaluation—such as the NVIDIA DeepStream SDK—the input comprised video segments labeled by activity rather than by individual objects and context.

Despite the availability of a diverse dataset, deep learning models typically require a large volume of training data to achieve optimal performance. To address this challenge, synthetic image generation techniques were employed to augment the dataset. Synthetic data generation was a fundamental practice in this study, as it enhanced the training dataset by introducing variability and compensating for data limitations. It allowed the model to learn from a broader range of examples and become more robust to environmental variations.

In addition to improving generalization, synthetic data helped mitigate class imbalance, a common issue in real-world datasets where safety risk events occurred significantly less frequently than normal operations [57,58]. Several augmentation techniques were applied, including image rotation, salt-and-pepper noise injection, saturation modification, and composite image generation with objects of interest [59,60].

Figure 6 presents examples of synthetic images derived from the testbed environment.

Accurate labeling and a balanced count of images per classification category were critical for training supervised deep learning models. These labels served as ground truth, guiding the model to learn meaningful patterns while reducing the risk of overfitting [61]. The quantity and distribution of labeled samples had a direct impact on classification performance, especially in multi-class scenarios.

To support this study, the videos referenced in Table 1 and Table 2 were fully annotated, and the number of labeled frames per event type was systematically organized and analyzed by category. It is important to clarify that the term “normal operation” refers to a standardized sequence of forklift maneuvers performed under safe and controlled conditions. This sequence includes the following steps:

Positioning the forklift in front of the pallet and adjusting the height and tilt of the forks.

Inserting the forks into the pallet.

Lifting the loaded pallet to a height of 10 to 20 cm above the ground.

Transporting the load along designated pathways to the delivery location.

Lowering the load to the ground, reversing, and disengaging the forks from the pallet.

4.4. Binary Classification Results Without Transfer Learning

The initial analysis involved training safety risk event detection algorithms to perform binary classification—distinguishing between risk and non-risk events.

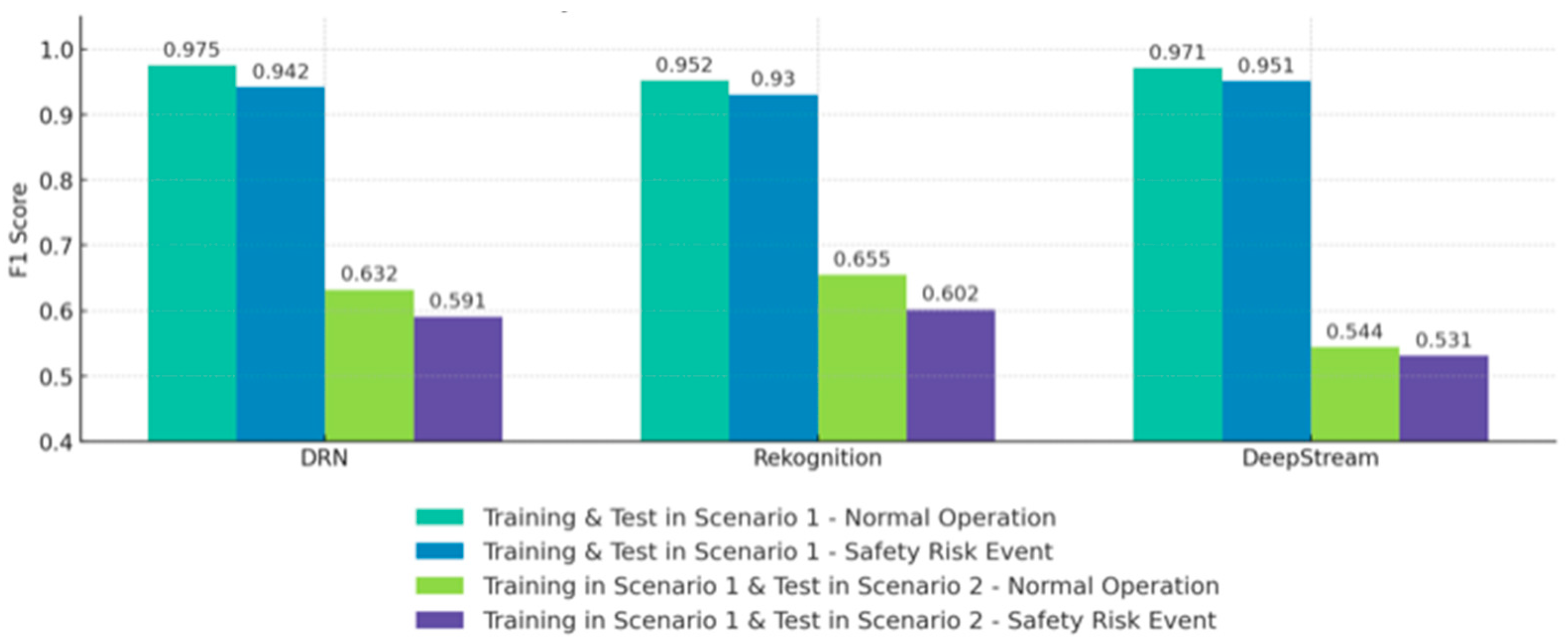

Figure 7 presents a comparison between the proposed DRN algorithm and two standardized tools: Amazon Rekognition Custom Labels and NVIDIA DeepStream SDK. The F1-score was used as the primary performance metric, as it is the standard evaluation criterion reported by both baseline tools.
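For reference, the F1-score is the harmonic mean of precision and recall. A minimal sketch of the computation from confusion counts follows; the function name and any counts passed to it are illustrative and are not taken from the experiments.

```python
def f1_score(tp, fp, fn):
    """F1 = 2PR / (P + R), with precision P and recall R from raw counts.

    tp, fp, fn are true positives, false positives, and false negatives.
    """
    if tp == 0:
        return 0.0                      # no correct detections: F1 is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

Because it combines both error types, F1 penalizes a detector that misses risk events as well as one that raises false alarms.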

In Scenario 1, where the inference data belong to the same context as the training data, all three models demonstrated high and consistent performance. The DRN outperformed AWS Rekognition and performed comparably to DeepStream, with all models requiring approximately the same amount of time for training and inference. The DRN achieved an average F1-score of 0.96, while AWS Rekognition and DeepStream reached average F1-scores of 0.94 and 0.96, respectively. All models exhibited low standard deviations (DRN: 0.023, AWS Rekognition: 0.016, DeepStream: 0.014), indicating stable performance and reliable classification under conditions similar to their training data. These results confirm the models’ ability to effectively identify both risk and non-risk events when operating within their trained domain.

In contrast, Figure 7 also presents the results of applying the models to Scenario 2, where the inference data originate from a completely different context than the training set. In this setting, all models experienced a significant decline in performance. The DRN’s F1-score dropped to an average of 0.61, while AWS Rekognition and DeepStream recorded averages of 0.63 and 0.54, respectively, representing relative declines of roughly 33% to 44% compared to Scenario 1.
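The relative decline can be checked directly from the reported averages. The short sketch below does this arithmetic; the helper name is an assumption, and the inputs are the Scenario 1 and Scenario 2 average F1-scores quoted above.

```python
def relative_decline(before, after):
    """Fractional drop from `before` to `after`."""
    return (before - after) / before

# (Scenario 1 average F1, Scenario 2 average F1) as reported in the text.
reported = {
    "DRN": (0.96, 0.61),
    "AWS Rekognition": (0.94, 0.63),
    "DeepStream": (0.96, 0.54),
}

declines = {name: relative_decline(s1, s2) for name, (s1, s2) in reported.items()}
for name, drop in declines.items():
    print(f"{name}: {drop:.1%}")
```

Running this prints the per-model drop as a percentage of the Scenario 1 score.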

This performance degradation highlights the models’ limited generalization capabilities across domains. Although AWS Rekognition slightly outperformed the others, the differences were marginal and insufficient to establish robustness against contextual variability. The substantial drop in F1-score is attributed to several factors, including overfitting to the specific characteristics of the training dataset, which prevents the models from generalizing to new conditions. Additionally, contextual variations (such as differences in camera angles, lighting conditions, and types of operations) introduced significant noise and complexity that further degraded performance.

The pre-trained architectures of AWS Rekognition and DeepStream, although effective within their domains, appear inadequately adapted for cross-domain applications in industrial settings. These findings underscore the importance of designing algorithms with improved generalization for deployment in real-world environments.

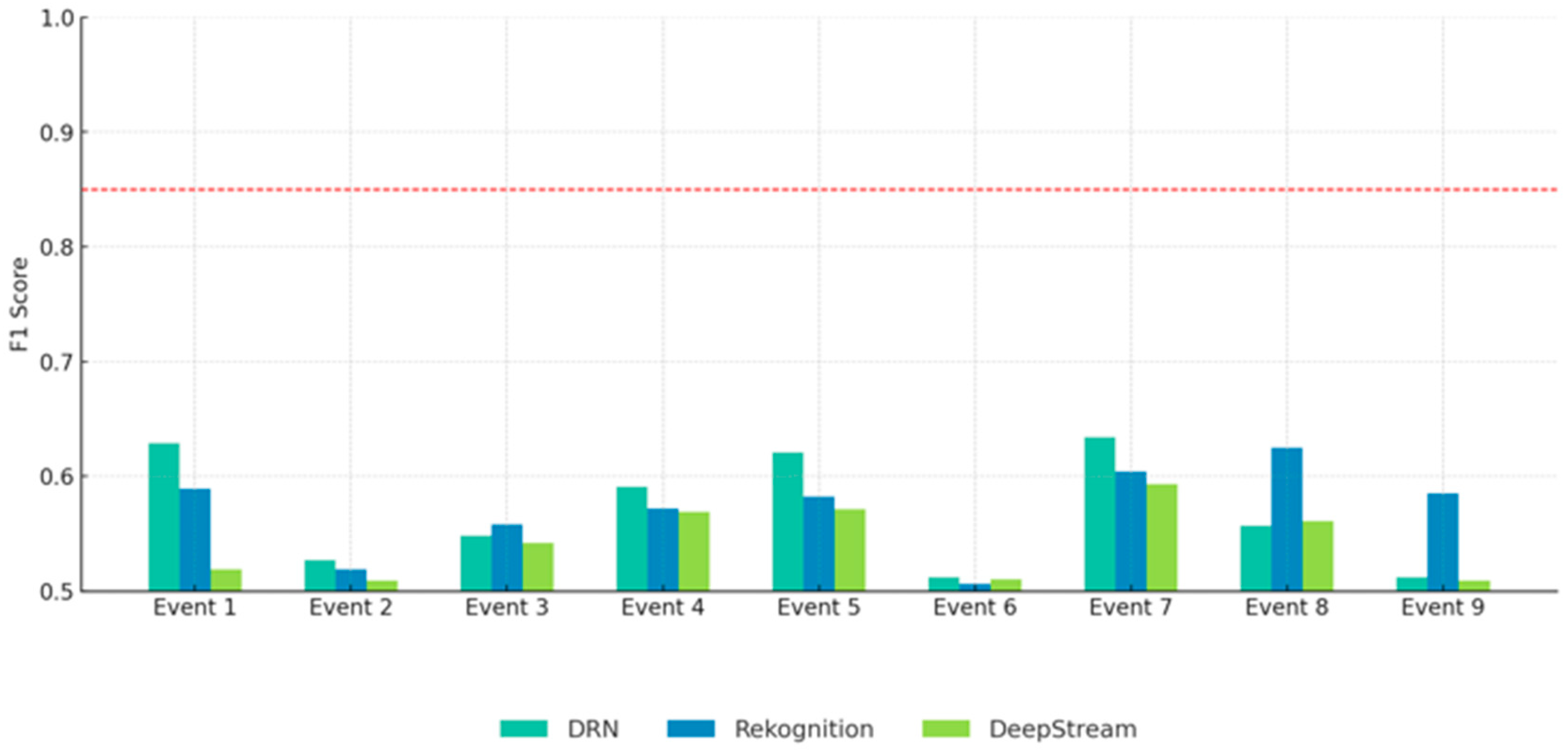

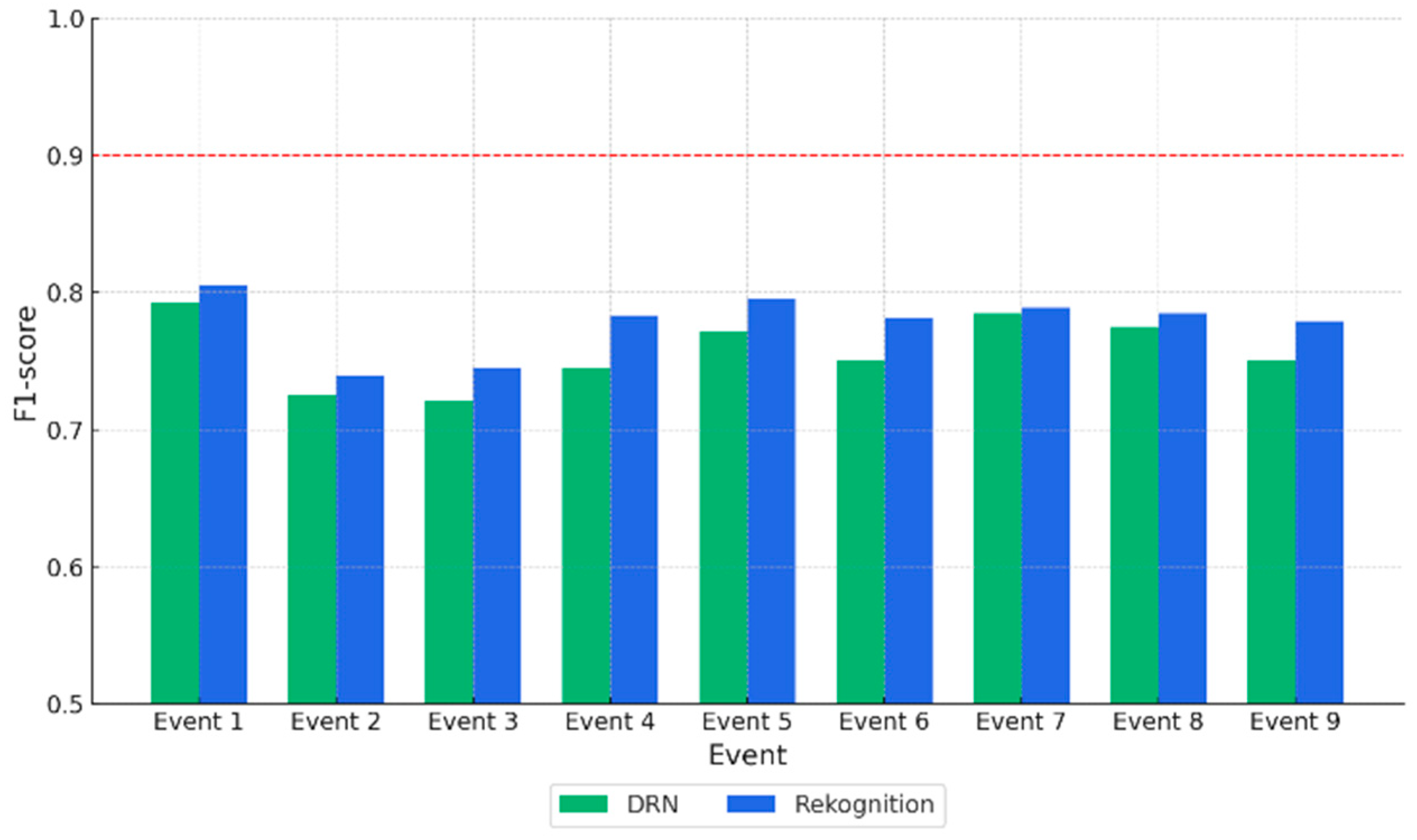

4.5. Multi-Class Classification Results Without Transfer Learning

The second analysis focused on training multi-state risk event detection algorithms to classify all activities listed in Table 1. As with the binary classification, models were first trained and tested using data from Scenario 1, and subsequently evaluated with data from Scenario 2. The results presented in Figure 8 show that all three models (DRN, AWS Rekognition Custom Labels, and NVIDIA DeepStream) achieve high F1-scores across all activity categories when both training and testing are conducted within the same context. In this controlled setting, AWS Rekognition and DeepStream exhibit slightly superior performance compared to DRN in most activity classes. Notably, DeepStream achieves the highest F1-scores in specific categories, such as “Raise or lower the load while transporting” (F1 = 0.970) and “Normal operation” (F1 = 0.966). While DRN generally performs slightly below the other two models, it still delivers competitive results, maintaining an average F1-score above 0.90 across all activities.

The activity “Turn on slopes” showed the lowest F1-scores among all categories (DRN: 0.85, Rekognition: 0.90, DeepStream: 0.91), suggesting it is more difficult to classify accurately. Despite these differences, the consistently low standard deviations across models suggest stable performance when operating under conditions similar to those seen during training. All models effectively distinguish between normal and risk activities in known operational contexts.

Figure 9 presents results when testing is conducted on data from Scenario 2, which differs significantly from the training context. The results reveal a notable decline in classification performance across all models.

Although DRN outperforms AWS Rekognition and DeepStream in most categories, all models suffer substantial performance drops—often exceeding 40% compared to Scenario 1. For example, the F1-score for “Normal operation” falls from 0.92 to 0.63 for DRN, 0.96 to 0.59 for AWS Rekognition, and 0.97 to 0.52 for DeepStream. Other complex activities, such as “Moving high loads with unbalanced loads” and “Turn on slopes”, also exhibit severe performance degradation, further highlighting the limited generalization capabilities of all models when faced with unseen environments. The noticeable performance decline when testing in Scenario 2 underscores the challenges these models face in generalizing to new industrial contexts. This limitation is critical for real-world applications, where operational conditions often vary significantly from those seen during training.

These findings underline the need for enhanced model architectures that can reduce domain dependency—particularly in industrial applications characterized by diverse and evolving operating conditions. Addressing this issue requires models capable of adapting to new contexts with minimal retraining. Integrating transfer learning techniques presents a promising direction for improving model adaptability. Developing deep learning architectures that can adapt to new conditions enhances both robustness and generalization, supporting reliable performance in dynamic and heterogeneous industrial environments.

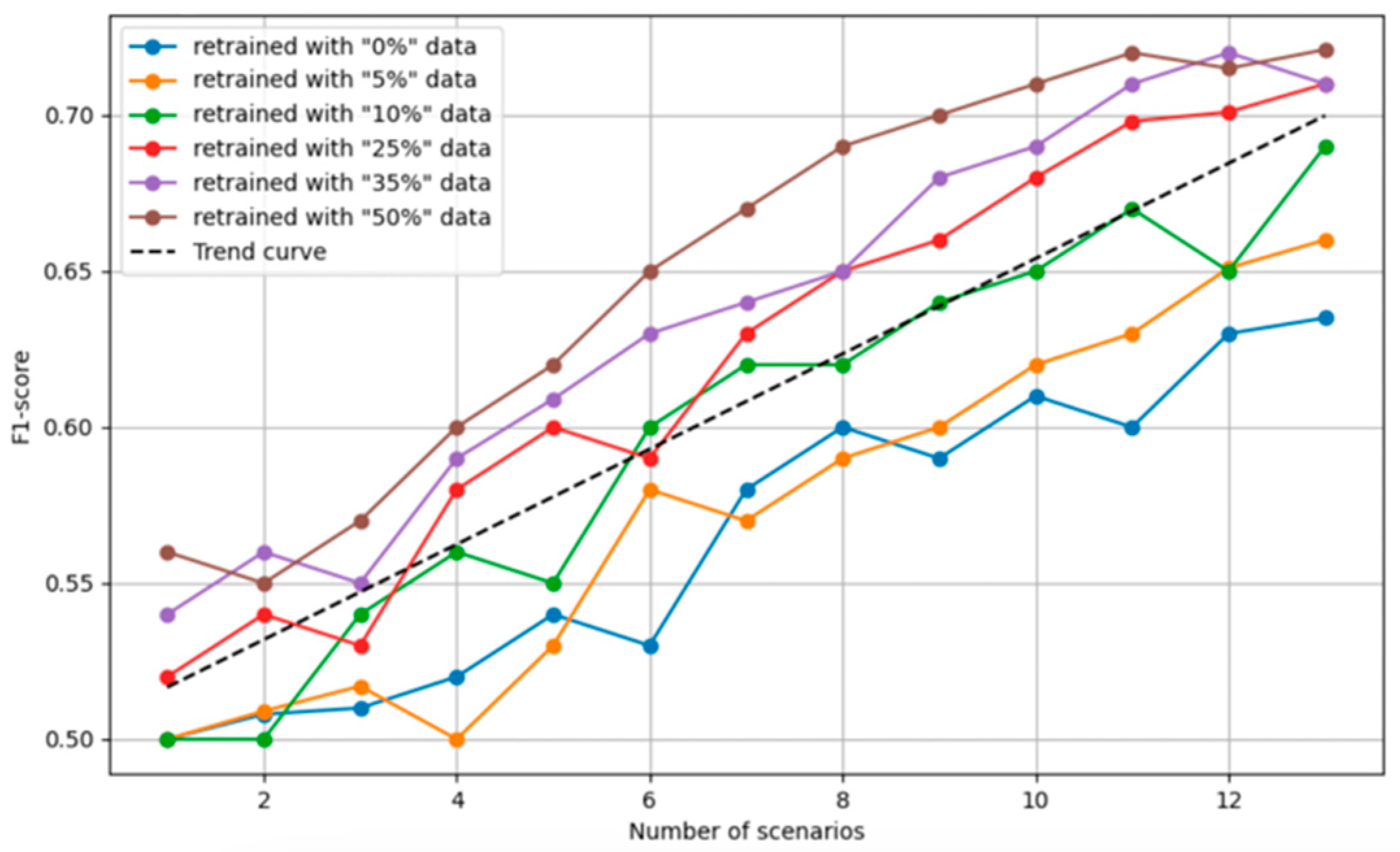

4.6. Binary Classification Results with Transfer Learning

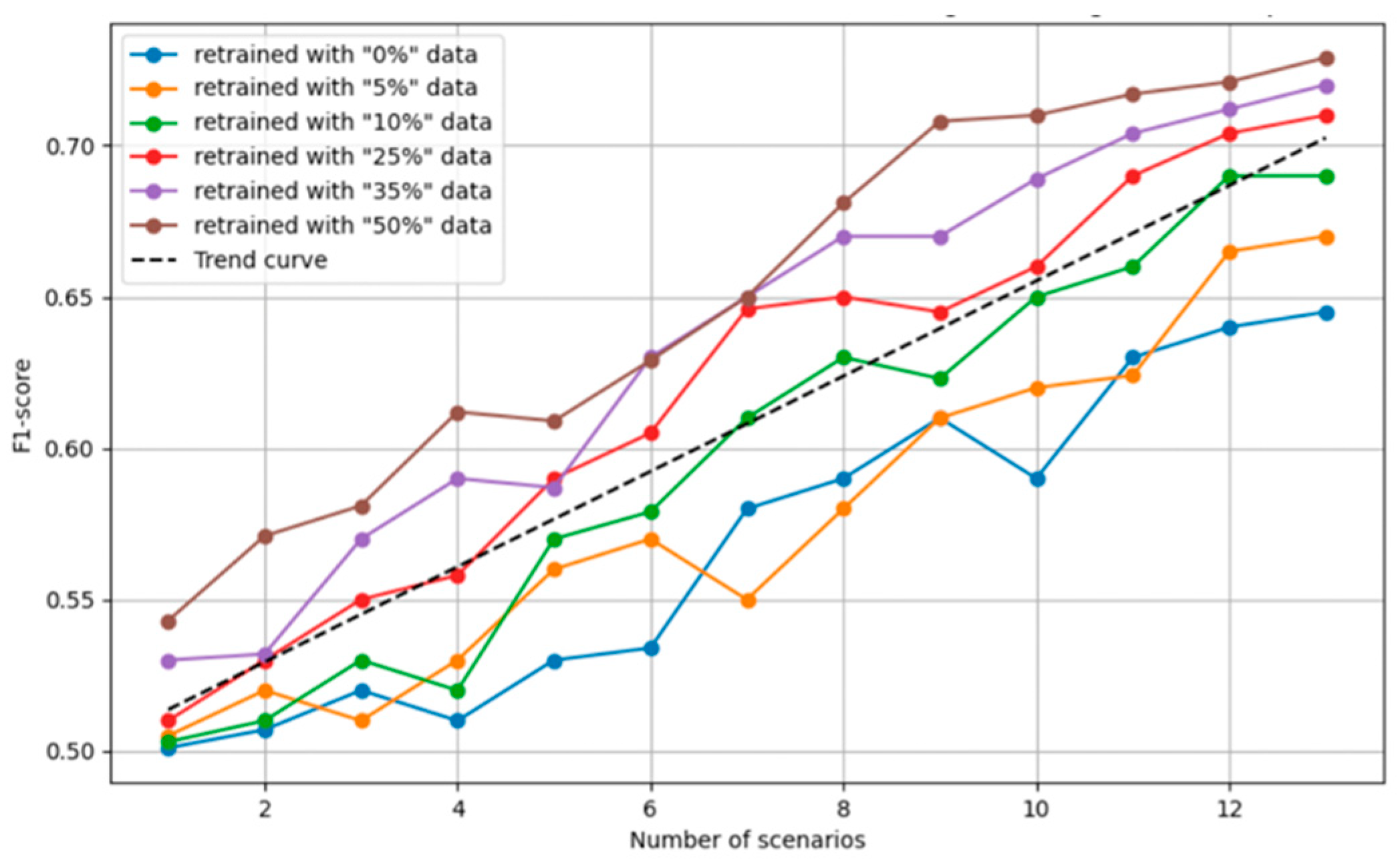

To evaluate the impact of transfer learning on model generalization, experiments were conducted using various retraining percentages (0%, 5%, 10%, 25%, 35%, and 50%) across multiple new scenarios. When analyzing the data presented in Table 1 and Table 2, the descriptive statistics of the selected video subsets (5% to 50% of the total number of videos and their corresponding durations) reveal considerable variability in both the quantity of videos and the time they represent across different events. On average, 5% of the videos correspond to 0.64 videos per event (SD = 0.11), while 50% equate to approximately 6.39 videos (SD = 1.08). Regarding viewing time, the mean duration for the 5% subset is 2.59 min (SD = 2.50), ranging from 0.55 to 7.5 min, whereas the 50% subset covers an average of 25.89 min (SD = 24.96), with a minimum of 5.5 min and a maximum of 75 min. These findings indicate substantial heterogeneity in content distribution, suggesting that a small percentage of videos may account for a disproportionately large (or small) portion of total viewing time, depending on the event.
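One way to draw such retraining subsets is to sample a fixed fraction of the videos available for each event. The sketch below is an assumption about how this could be done, not the procedure used in the study; the function name, the per-event dictionary layout, and the rule of keeping at least one video per event are all hypothetical.

```python
import math
import random

def sample_retraining_subset(videos_by_event, fraction, seed=0):
    """Pick roughly `fraction` of the videos for every event type.

    videos_by_event: dict mapping an event label to a list of video identifiers.
    Rounds up so that any non-zero fraction yields at least one video per event.
    """
    rng = random.Random(seed)                 # fixed seed for repeatable subsets
    subset = {}
    for event, videos in videos_by_event.items():
        if fraction <= 0 or not videos:
            subset[event] = []
            continue
        k = min(len(videos), max(1, math.ceil(fraction * len(videos))))
        subset[event] = rng.sample(videos, k)
    return subset
```

Rounding up matters at the low end: with the 5% setting averaging 0.64 videos per event, a plain floor would leave most events with no retraining video at all.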

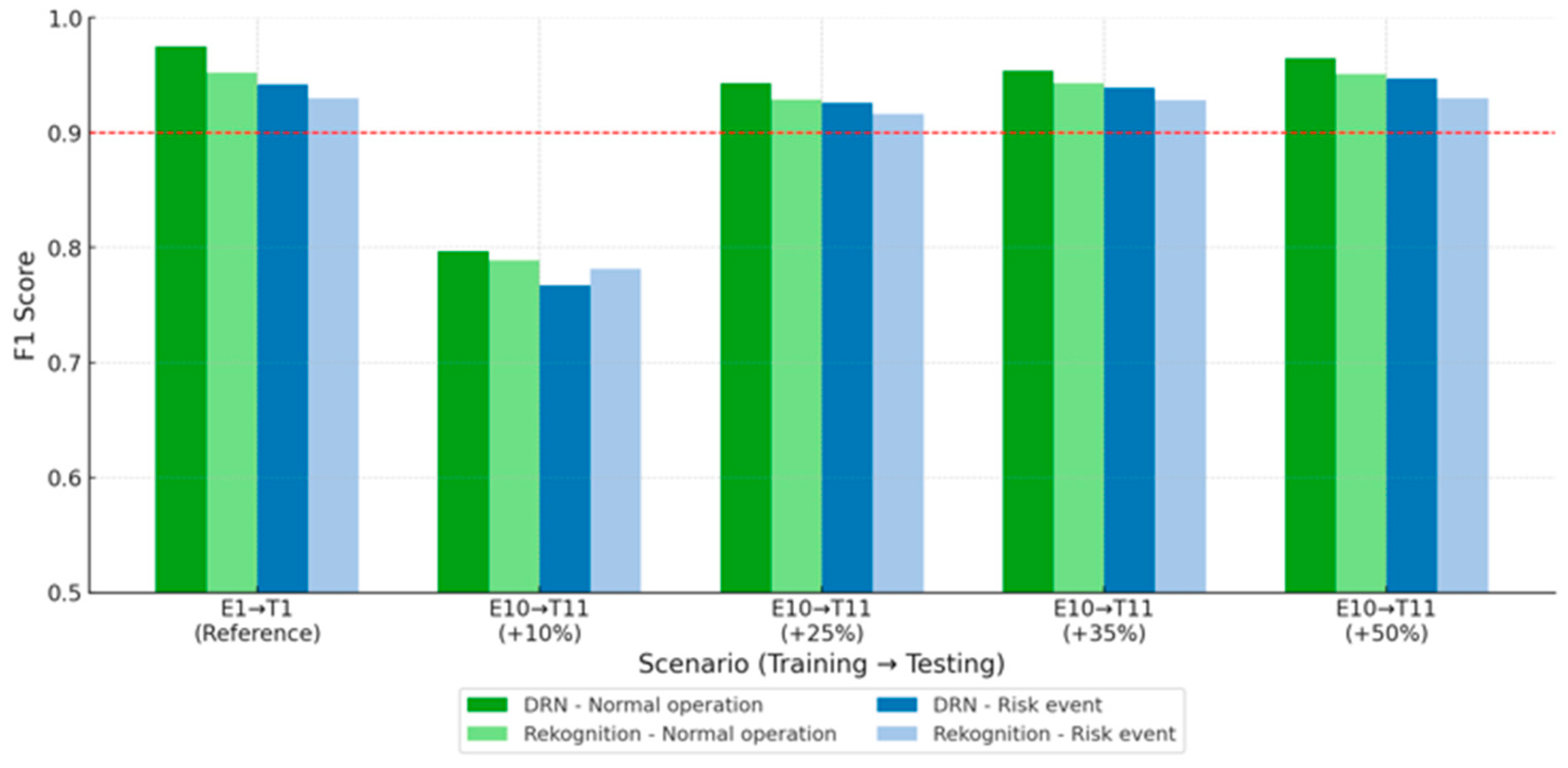

This variability underscores the need for scenario-specific sampling and sourcing strategies when selecting representative subsets for training and evaluation in video-based safety risk detection algorithms and models. Two primary models, DRN and AWS Rekognition, were selected for detailed comparison due to their consistent behavior under the same retraining methodology. Although NVIDIA DeepStream followed the same retraining pipeline, it did not exhibit comparable improvements and is discussed separately. As shown in Figure 10, both binary classifiers exhibited performance gains as the retraining percentage increased. The highest F1-score (0.962) was achieved by the DRN model at 50% retraining, particularly for normal operations, indicating enhanced classification accuracy and completeness. This trend suggests that retraining data from the same operational context positively influences model performance.

Retraining consistently improved F1-scores in safety risk event classification. DRN reached a maximum of 0.95, while AWS Rekognition attained 0.93 at 50% retraining. These results confirm that feature transfer learning effectively recovers model performance in previously unseen conditions. For normal operations, performance improvements were less pronounced, with DRN showing slightly better results than AWS Rekognition, suggesting stronger adaptability in routine tasks.

As expected, a positive correlation was observed between retraining percentage and generalization capability. The 50% configuration consistently yielded the highest scores. Even with 25% retraining, performance remained strong, making it a viable approach when labeled data are limited. Notably, DRN outperformed AWS Rekognition across all configurations, particularly in normal operation classification.

Performance gains, however, varied with the testing scenario. In Scenario 1, where training and testing conditions aligned, high F1-scores were achieved without retraining (e.g., 0.975 for DRN). In contrast, when a new Scenario 10 introduced domain shifts, transfer learning significantly improved results—from 0.80 to 0.97 with 50% retraining—demonstrating its effectiveness in adapting to new conditions.

Overall, DRN consistently outperformed AWS Rekognition across all retraining percentages, especially in high-variability contexts. These findings suggest that DRN is more resilient to operational variability, offering superior generalization capabilities. To further consolidate these findings, we conducted a detailed analysis of the minimum data requirements and video quality conditions that enable reliable adaptation. Our experiments revealed that reliable adaptation can be achieved with as little as 10–25% of new scenario data, equivalent to approximately 2.5–25 min of annotated video per activity class. Below this threshold, performance deteriorates rapidly, with F1-scores dropping below 0.80 and high variance across runs. Regarding video quality, downsampled inputs (<720p, <10 fps) or segments with strong compression noise produced a 15–20% decline in performance. Incomplete or corrupted sequences further disrupted temporal modeling in the LSTM stage, resulting in delayed or incorrect event recognition. These findings emphasize that, while the method is efficient, it requires a minimum standard of video quality and completeness to maintain robustness. This is further illustrated in Table 2.

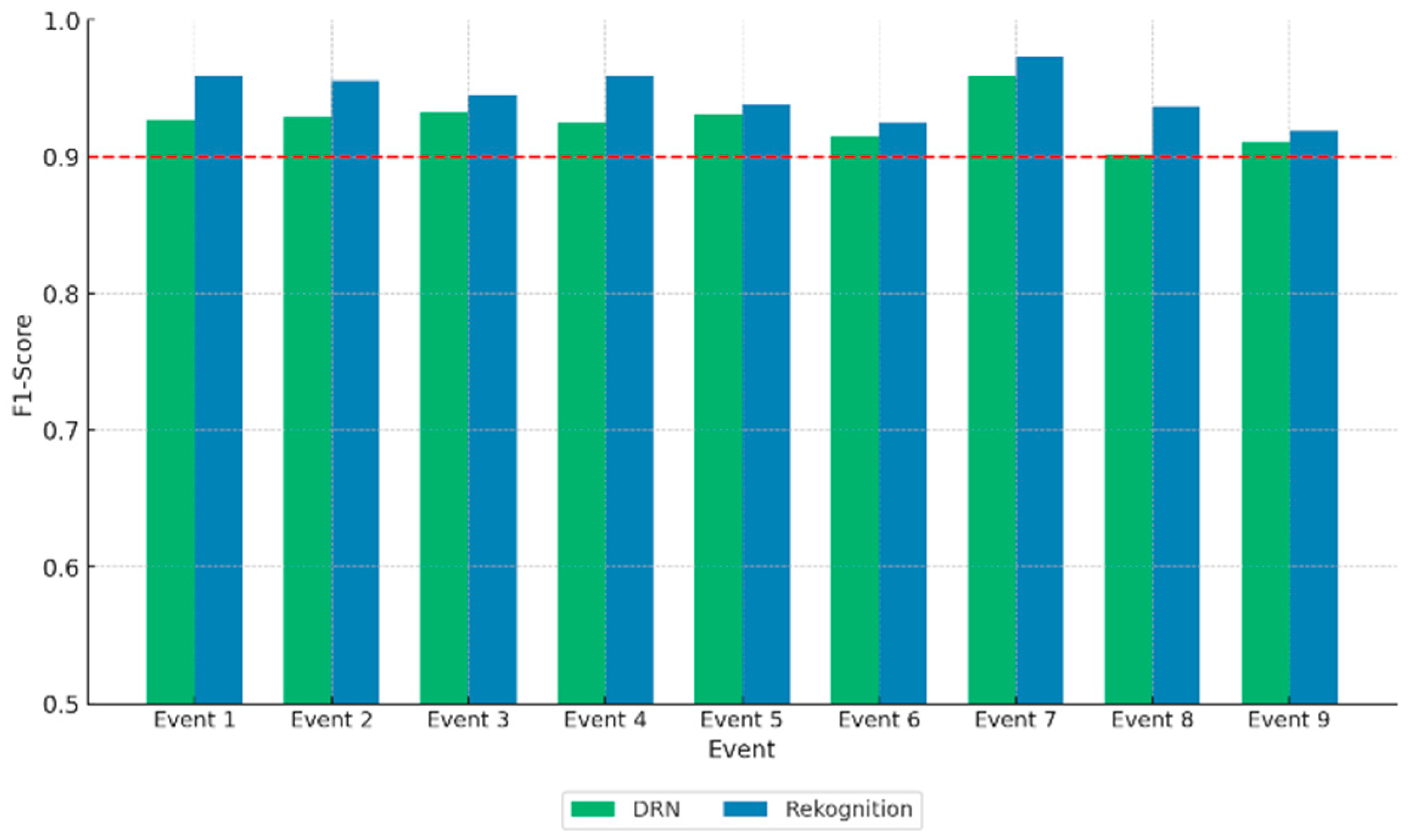

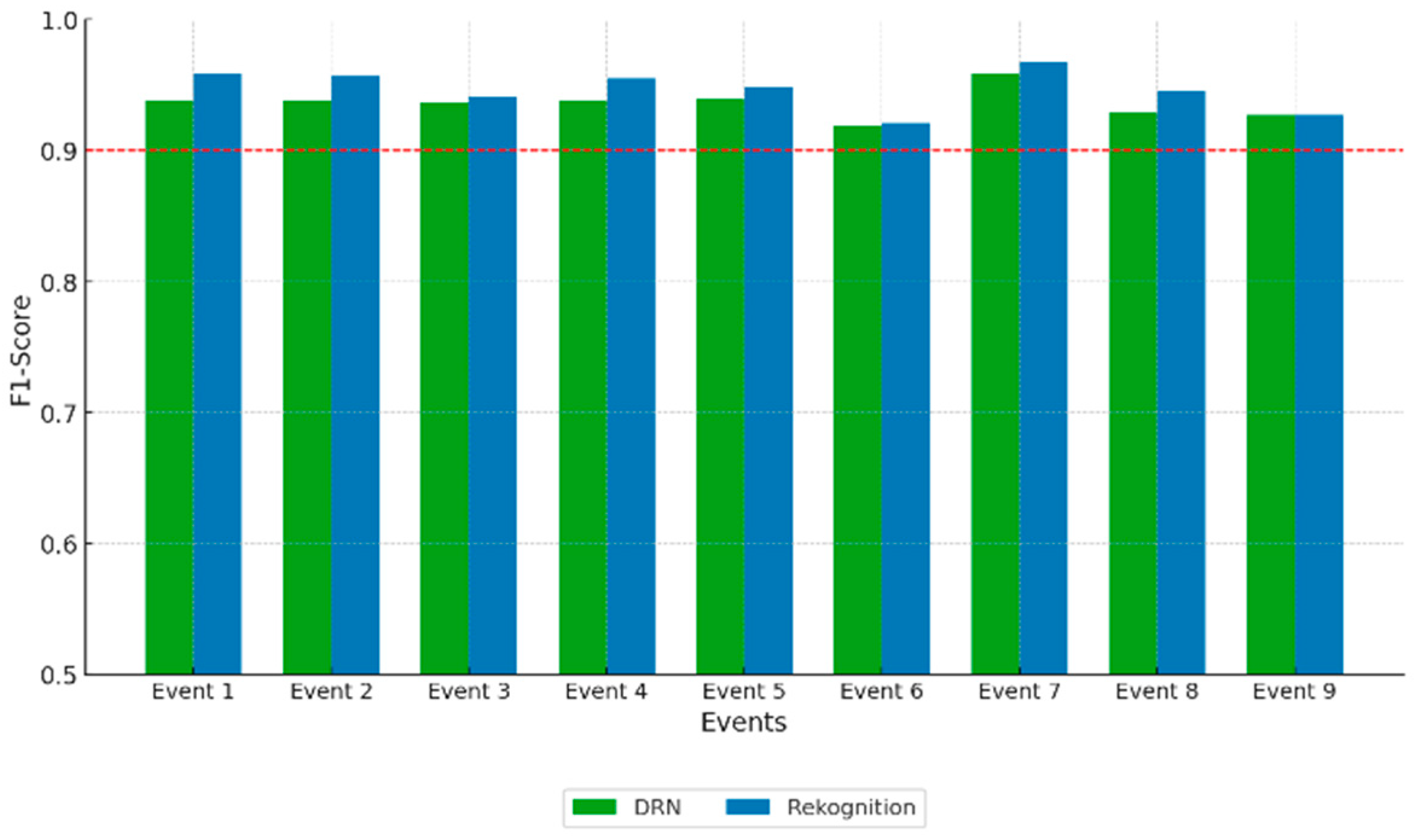

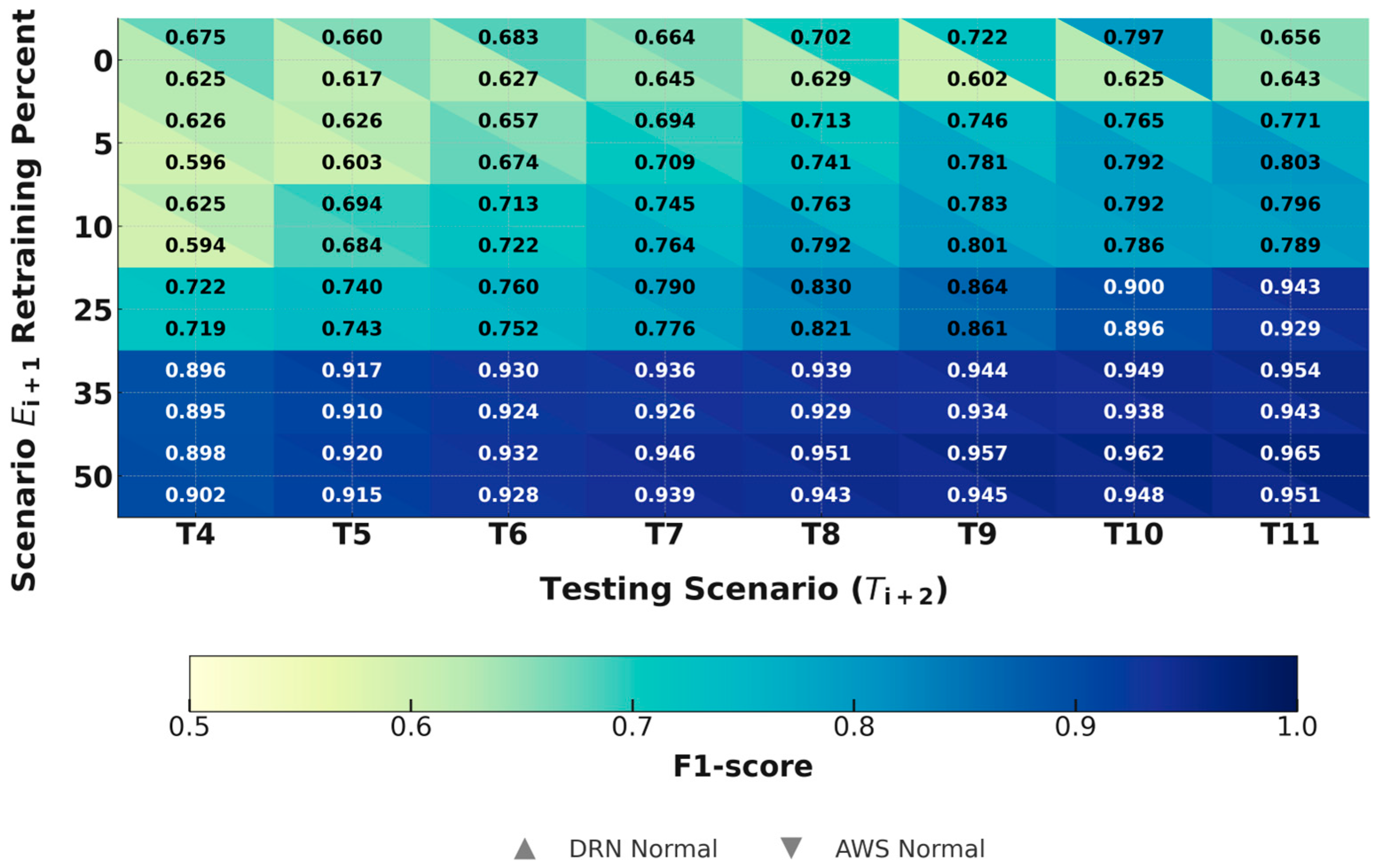

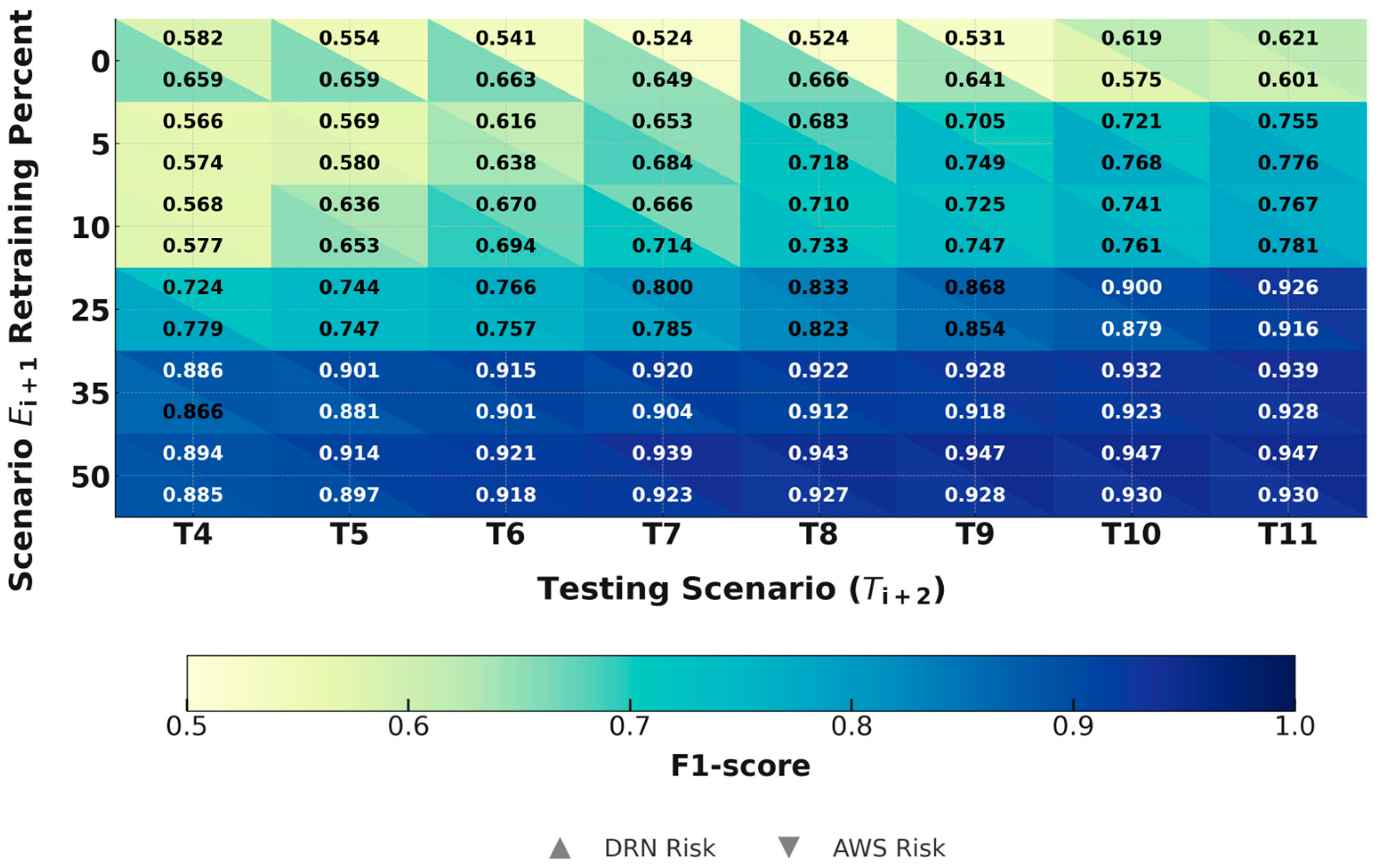

4.7. Multi-Class Classification Results with Transfer Learning

Following the methodology applied to binary classification models, multi-class classifiers were evaluated to assess the effectiveness of transfer learning under dynamic industrial conditions. The results, presented in Figure 11, Figure 12 and Figure 13, compare the performance of the DRN and AWS Rekognition models across multiple retraining configurations and scenarios. These results show how varying the percentage of new-scenario data used for retraining influences the generalization capacity of the models when faced with previously unseen operational contexts.

When trained and tested within the same scenario (Figure 10), both models demonstrated high F1-scores across all activity categories, with AWS Rekognition slightly outperforming DRN in most tasks. Notably, AWS Rekognition achieved higher F1-scores in structured activities, such as “Raise operators on the forks” (0.98 vs. 0.96) and “Raise or lower the load while transporting” (0.97 vs. 0.92). An equally large margin (0.05) was observed in “Turn on slopes”, where AWS Rekognition (0.90) outperformed DRN (0.85). These results suggest that AWS Rekognition benefits from its pre-trained architecture in familiar operational conditions, while DRN remains highly competitive across diverse tasks.

However, introducing a new scenario with only 10% retraining (Figure 11) led to a noticeable performance drop for both models, particularly in safety risk-related activities. For instance, the F1-score for “Moving high loads with unbalanced loads” decreased from 0.93 to 0.73 for DRN and from 0.96 to 0.74 for AWS Rekognition. Similarly, scores dropped in “Go down slopes head-on with the crane loaded” (DRN: 0.72; AWS: 0.75). Even in normal operations, performance declined (DRN: 0.80; AWS: 0.81), indicating that minimal retraining is insufficient for effective generalization when adapting to new scenarios.

Increasing the retraining percentage to 35% (see Figure 12) significantly improved classification performance, restoring F1-scores close to original levels. DRN reached 0.93 in normal operation classification, slightly above its baseline of 0.92, while AWS Rekognition recovered to 0.96. The performance gap between the two models narrowed, with DRN showing greater gains in safety risk-related tasks such as “Turn on slopes” (from 0.75 to 0.91) and “Moving high loads with unbalanced loads” (from 0.73 to 0.93). These findings emphasize DRN’s strong adaptability to previously unseen conditions.

At 50% retraining (see Figure 13), both models achieved their highest post-retraining scores. DRN reached 0.94 in normal operation classification and 0.93 in “Turn on slopes”, while AWS Rekognition achieved 0.96 and 0.93, respectively. The marginal differences at this stage indicate that with sufficient retraining, DRN achieves comparable performance to AWS Rekognition, even in complex multi-class scenarios.

Overall, the results confirm that increasing the retraining percentage substantially enhances model generalization. While AWS Rekognition initially shows an advantage in structured environments, DRN demonstrates superior adaptability to domain shifts, particularly in high-risk safety activities. These observations emphasize the importance of transfer learning in multi-class classification and underscore the necessity of a sufficiently high retraining percentage to ensure robust adaptation to dynamic operational environments for these models.

In contrast, models based on NVIDIA DeepStream showed limited gains in both binary and multi-class settings. Despite incorporating additional scenarios and retraining data, only a slight upward trend in F1-score was observed, with no significant improvements in distinguishing normal operations from safety risk events, as shown in Figure 14 and Figure 15 for the binary and multi-class classifiers. This limited responsiveness suggests that DeepStream models struggle to generalize effectively through the proposed feature transfer learning strategy compared to DRN and AWS Rekognition for the use case and application under study.

To further validate our approach, we compared it with state-of-the-art domain adaptation (DANN) and few-shot learning (Prototypical Networks) baselines reported in recent studies. DANN typically required 35–50% of the target domain data to achieve F1-scores > 0.85, while our DRN with incremental transfer learning reached similar performance with only 10–25% [62]. Few-shot approaches achieved acceptable results in stable domains but dropped below F1 = 0.70 under severe domain shifts, especially with strong lighting variability. By contrast, our method consistently maintained F1 > 0.85 with minimal adaptation [49]. These results indicate that the incremental feature transfer strategy offers a better balance between generalization and efficiency, particularly for deployment on edge devices where computational resources are constrained. This is further illustrated in Table 3.

4.8. Feature Significance and Model Interpretability

SHAP (SHapley Additive exPlanations) values are a widely used technique for interpreting machine learning models by quantifying the contribution of each input feature to the model’s output. This method provides insight into how specific variables influence predictions, enabling the identification of the most relevant features while minimizing the impact of non-informative or redundant inputs. In this study, SHAP values were applied to evaluate how transfer learning affects the relative importance of detected objects in image-based classification tasks for DRN.
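To make the idea concrete, the sketch below computes exact Shapley values for a toy model by averaging each feature's marginal contribution over all feature orderings. It does not use the `shap` library, and the feature names and the toy scoring function are invented for illustration; they are not the DRN or the values reported in this study.

```python
from itertools import permutations

def shapley_values(features, value_fn):
    """Exact Shapley values by enumerating every ordering of `features`.

    value_fn maps a frozenset of present features to a model score.
    Cost grows factorially, so this is only practical for a handful of features.
    """
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        present = frozenset()
        for f in order:
            with_f = present | {f}
            # Marginal contribution of f given the features already present.
            totals[f] += value_fn(with_f) - value_fn(present)
            present = with_f
    return {f: total / len(orderings) for f, total in totals.items()}

def toy_score(present):
    """Hypothetical score: two informative objects plus an interaction term."""
    score = 0.0
    if "forklift" in present:
        score += 0.4
    if "workspace" in present:
        score += 0.2
    if "forklift" in present and "workspace" in present:
        score += 0.2
    return score

values = shapley_values(["forklift", "workspace", "chassis"], toy_score)
```

In this toy case the interaction term is split evenly between the two informative features, the uninformative one receives zero, and the values sum to the score of the full feature set.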

The analysis demonstrated that transfer learning modifies the distribution of feature importance, resulting in a model that is more focused and generalizable. As shown in Table 4, features such as the forklift and workspace became significantly more influential after transfer learning, with SHAP values increasing from 0.53 to 0.88 and from 0.58 to 0.88, respectively. In contrast, the relevance of features such as the chassis and upper cover decreased notably (from 0.78 to 0.25 and from 0.35 to 0, respectively), indicating that the model deprioritized areas in video scenes contributing less to accurate classification.

This reallocation of feature importance towards semantically meaningful regions contributes to improved model performance, particularly in safety-critical applications. The trends observed in Figure 14 and Figure 15 reflect an overall improvement in F1-score for both normal and risk event classification across increasing retraining scenarios. By emphasizing the most representative visual features, the model becomes more robust and interpretable, while also reducing noise introduced by less discriminative elements.

Furthermore, the refined feature focus introduced by transfer learning can reduce the number of annotated samples required in training iterations, optimizing the annotation process and decreasing computational costs. This is particularly beneficial in industrial safety contexts, where manual labeling is time-consuming and costly. The improved interpretability also enhances transparency and trust in real-time decision-making systems.

SHAP-based analysis confirms that transfer learning not only improves classification performance but also contributes to a more efficient and interpretable model. These benefits support the scalability and operational viability of the proposed approach in dynamic industrial environments. By guiding annotators to concentrate solely on safety-critical regions (e.g., forks, load zones, safety boundaries), SHAP effectively eliminated the need to label non-informative areas such as background clutter [63]. This focus reduced redundant bounding boxes and labeling steps, resulting in a 20–30% decrease in average annotation time per frame [64]. Such savings are particularly relevant in industrial video datasets, where manual annotation is often the bottleneck in developing and adapting new models.

The evaluation of transfer learning across scenarios and retraining levels reveals distinctive patterns in model performance when comparing binary and multi-class classification tasks, particularly for the detection of normal versus safety risk events.

In the binary classification results (Figure 16), which include both normal and risk event detection, the DRN and AWS models demonstrate consistent improvements in F1-score with increasing retraining percentages. DRN generally outperforms AWS across most scenarios and retraining levels. For instance, in the T1 test scenario at 0% retraining, DRN achieves an F1-score of 0.95 for normal events and 0.97 for risk events, while AWS reaches slightly lower values. This trend continues across T2 and T3, indicating DRN’s superior generalization under minimal adaptation. As retraining increases to 50%, both models converge to high F1-scores (typically > 0.90), reflecting effective domain adaptation through incremental scenario inclusion.

Notably, binary classification performance is robust even with minimal retraining, particularly for DRN. This suggests that binary discrimination (normal vs. risk) is inherently simpler and benefits from the domain-invariant feature representations learned by the base models.

Figure 17 and Figure 18 disaggregate performance into multi-class classification tasks, allowing deeper insight into how the models handle increased label granularity. In Figure 17 (normal events only), a similar trend of performance improvement with retraining is observed. DRN consistently achieves higher F1-scores than AWS, especially in early retraining stages (0–25%), with scores improving sharply from ~0.67 at 0% retraining to ~0.94–0.96 at 50%. AWS shows more modest gains under low retraining levels, suggesting that DRN’s learned features are more transferable to unseen but related normal event classes.

However, the performance gap between DRN and AWS narrows at higher retraining levels, indicating that both models can effectively adapt given sufficient data from new scenarios. This convergence supports the feasibility of incremental retraining as a practical deployment strategy for evolving environments.

Figure 18 presents multi-class classification results for risk events, which are typically more variable and visually complex. Consequently, baseline performance at 0% retraining is lower compared to normal events, particularly for AWS (F1-scores < 0.55 across most test scenarios). DRN, while still affected, shows comparatively higher initial performance, with values around 0.58–0.66, reinforcing its generalization advantage. As retraining percentage increases, both models improve significantly, but the disparity remains evident. At 25% retraining, DRN surpasses 0.80 F1-score across several test scenarios, while AWS lags behind by a small margin in most cases. At 50% retraining, both models approach parity, reaching F1-scores above 0.92 in most scenarios.

Interestingly, the variance in performance across scenarios is higher for risk events than for normal events. This may be attributed to the increased contextual complexity and rarity of risk events, which require more training diversity to achieve stable classification performance. DRN exhibits higher transferability across unseen scenarios and minimal retraining conditions, particularly for risk-related tasks. AWS benefits more from higher retraining percentages. Incremental retraining yields substantial gains for both models, especially in multi-class risk classification, underscoring the necessity of scenario-specific data inclusion in training pipelines. Test scenarios T8–T10 show the most rapid improvement across retraining levels, suggesting favorable conditions for model generalization in these contexts (possibly due to event diversity or visual consistency). Binary classification is significantly easier and more stable than multi-class classification, especially for rare risk categories. This highlights the need for balanced dataset curation and tailored retraining strategies.

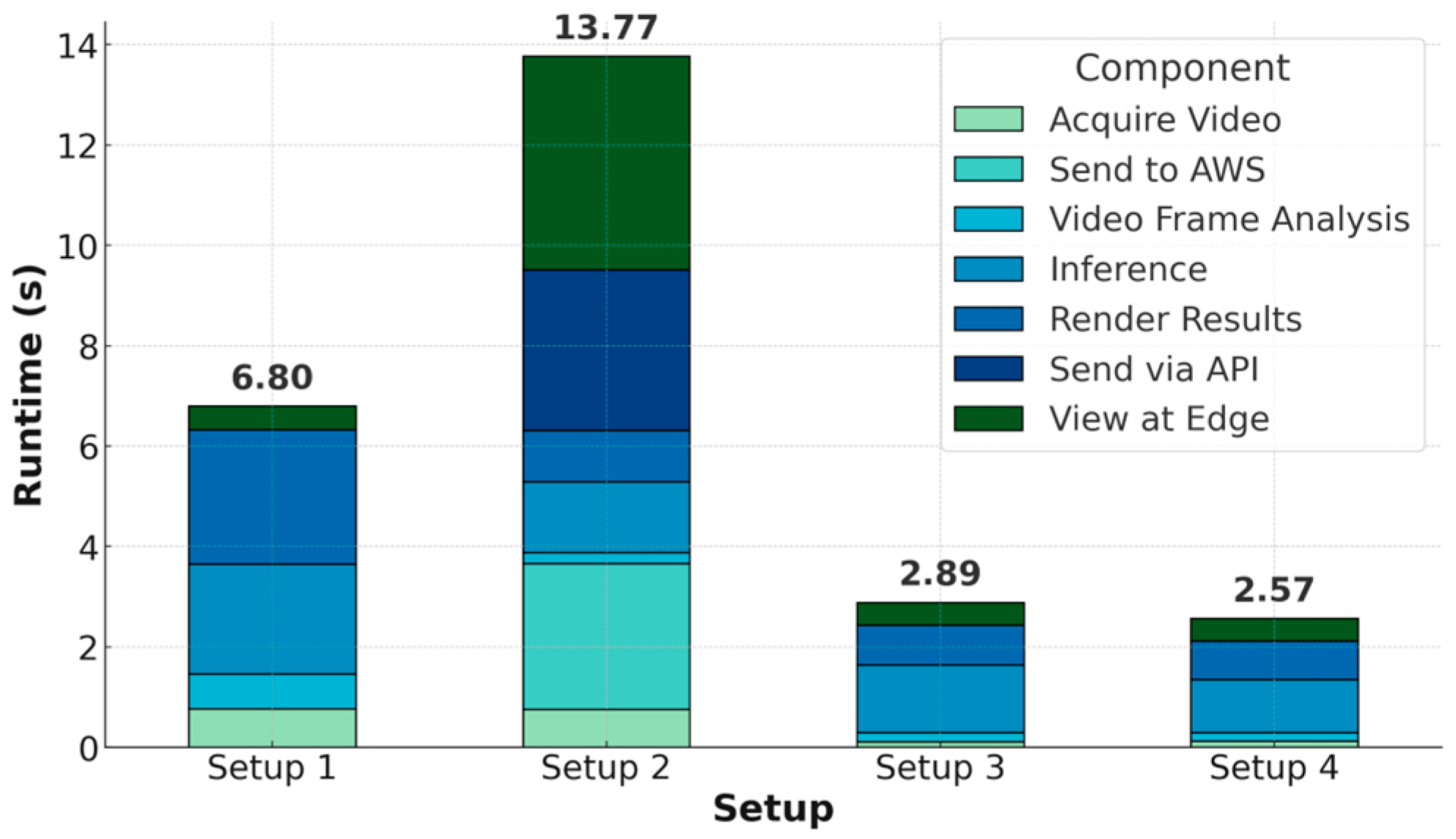

4.9. Inference Benchmark

To assess model performance under real-world operational conditions, where latency is critical for timely safety risk event detection, this section evaluates the end-to-end inference efficiency of trained models deployed across both edge and cloud platforms. Beyond conventional metrics such as F1-score, it is essential to consider the system’s ability to generate timely alerts. In industrial environments, where hazardous events can escalate rapidly, detection systems must provide not only accurate but also prompt warnings. Delays in detection and communication may significantly increase the risk of accidents and compromise the safety of operators and surrounding personnel. Therefore, analyzing the complete inference pipeline, from video acquisition to user notification, is fundamental for meeting real-time operational demands.

The literature emphasizes that acceptable response times vary according to industry and event severity. Critical systems in manufacturing and heavy machinery often require responses within milliseconds to a few seconds. To quantify these performance characteristics, three metrics are considered:

Input data read time: Duration required to acquire and preprocess video data before model inference.

Inference time: Time taken by the model to process inputs and generate predictions.

Delivery time: Time elapsed before the detection results reach the user or operator.
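The three metrics above can be collected by timestamping the boundaries between pipeline stages. The following is a minimal sketch under stated assumptions: the function name and the stand-in stages are hypothetical, and in a real deployment the three callables would wrap the camera reader, the model, and the notification or web interface.

```python
import time

def time_pipeline(read_fn, infer_fn, deliver_fn):
    """Run one read -> infer -> deliver cycle and return per-stage seconds."""
    t0 = time.perf_counter()
    frames = read_fn()                  # acquire and preprocess input
    t1 = time.perf_counter()
    prediction = infer_fn(frames)       # model forward pass
    t2 = time.perf_counter()
    deliver_fn(prediction)              # push the result to the operator
    t3 = time.perf_counter()
    return {
        "read_s": t1 - t0,
        "inference_s": t2 - t1,
        "delivery_s": t3 - t2,
        "total_s": t3 - t0,
    }

# Stand-in stages (hypothetical) so the sketch runs without hardware.
timings = time_pipeline(
    read_fn=lambda: [0] * 1000,
    infer_fn=lambda frames: sum(frames) > 0,
    deliver_fn=lambda prediction: None,
)
```

Using a monotonic clock such as `time.perf_counter` avoids distortions from system clock adjustments during a measurement.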

The benchmark replicates real-world operational flow, encompassing video capture, inference, and visualization. It includes Full HD camera acquisition, edge-based embedded processing, and both local and remote result presentation via a web interface. Four deployment configurations were analyzed:

Setup 1: DRN deployed on a Raspberry Pi 5, performing inference entirely on the edge.

Setup 2: Inference on a Raspberry Pi 5 with data transferred to AWS Rekognition for cloud-based processing and visualization.

Setup 3: DRN running on a Jetson Nano, utilizing onboard GPU acceleration.

Setup 4: DeepStream deployed on a Jetson Nano for comparative edge inference.

In cloud-based configurations, such as those involving AWS Rekognition, video segments are uploaded to an S3 bucket, triggering inference on EC2 instances. Results are then distributed through AWS Greengrass for visualization. In contrast, DeepStream—optimized for NVIDIA CUDA architectures using TensorRT—executes inference locally on Jetson Nano devices. On Raspberry Pi devices, TensorFlow Lite conversion is required due to limited hardware support, involving calibration, quantization, and export stages to ensure performance efficiency.
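The calibration and quantization stages can be pictured with a small NumPy sketch of affine 8-bit quantization. This is a conceptual illustration only and is not the TensorFlow Lite converter API; the function names and the idea of calibrating from a sample tensor's minimum and maximum are assumptions made for the example.

```python
import numpy as np

def calibrate(samples):
    """Derive an int8 scale and zero point from the observed value range."""
    lo = min(float(samples.min()), 0.0)      # range must include zero
    hi = max(float(samples.max()), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-128 - lo / scale))
    return scale, zero_point

def quantize(x, scale, zero_point):
    """Map float values to int8 using the calibrated parameters."""
    q = np.round(x / scale) + zero_point
    return np.clip(q, -128, 127).astype(np.int8)

def dequantize(q, scale, zero_point):
    """Recover approximate float values from int8 codes."""
    return (q.astype(np.float32) - zero_point) * scale
```

Storing values as int8 cuts memory to a quarter of float32 at the cost of a rounding error on the order of the scale, which is the trade-off that makes such conversion attractive on low-power boards.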

While all implementations follow a similar data processing structure, fundamental differences in deployment (edge versus cloud) significantly impact timing, bandwidth, and system responsiveness (see Figure 19 and Figure 20).

The comparative analysis of inference runtimes across binary and multi-class classification modes reveals significant differences in performance among the evaluated setups. Quantitative results show that the two Jetson Nano configurations achieve the lowest end-to-end latency: DRN in Setup 3 records total processing times of 2.25 to 2.89 s, and DeepStream in Setup 4 records 2.05 to 2.57 s. In contrast, AWS Rekognition in Setup 2 exhibits the highest latency, with total runtimes exceeding 13 s due to video upload and result retrieval overhead. DRN on Raspberry Pi in Setup 1 also demonstrates competitive latency (5.84 to 6.8 s), outperforming the cloud-based alternative by avoiding cloud communication delays.

In terms of per-frame inference performance, DeepStream in Setup 4 achieves the fastest execution, ranging from 0.56 to 1.05 s. This efficiency is attributable to its optimized GPU pipelines and use of TensorRT acceleration. DRN also performs efficiently on the Jetson Nano in Setup 3, with inference times between 0.76 and 1.35 s across binary and multi-class tasks. While AWS Rekognition in Setup 2 shows comparable inference speeds (0.86 to 1.41 s), the total latency is dominated by communication steps such as video upload (2.9 to 3.1 s), API transmission (3.1 to 3.2 s), and remote visualization (4.22 to 4.25 s).

These findings underscore the advantage of edge-deployed DRN models (Setups 1 and 3) in reducing communication overhead. DRN eliminates the need for extensive data transmission and enables faster delivery of results to local edge monitors. Notably, DRN on Jetson Nano in Setup 3 achieves remote visualization times as low as 0.43 to 0.45 s, compared to AWS Rekognition in Setup 2, which requires over four seconds for the same task. Additionally, DRN on Raspberry Pi in Setup 1 achieves moderate end-to-end runtimes, confirming its suitability for deployment on low-power devices when real-time processing is not critically constrained.

Model size also plays a critical role in deployment suitability. The DRN model has a compact footprint of 0.9 GB, compared to approximately 4 GB for AWS Rekognition and 2 GB for DeepStream. This smaller memory requirement enables efficient deployment in resource-constrained environments, reducing memory load and initialization time while enhancing system responsiveness.

Across both binary and multi-class classification modes, the relative ranking of total processing time remains consistent: the Jetson Nano configurations (DRN in Setup 3 and DeepStream in Setup 4) are fastest, followed by DRN on Raspberry Pi in Setup 1, with AWS Rekognition in Setup 2 slowest. Although multi-class classification introduces additional latency due to increased computational complexity, the DRN model maintains a consistent advantage in both classification types.

In summary, DRN demonstrates superior performance across multiple dimensions, including latency, inference speed, communication efficiency, and model compactness. These characteristics make it a highly suitable candidate for deployment in real-time industrial safety monitoring applications. In contrast, AWS Rekognition is best suited for scenarios where cloud infrastructure is available and latency is not critical. DeepStream offers fast inference but requires more computational resources and larger memory allocation. Overall, DRN provides a balanced trade-off between performance and deployability, making it an optimal solution for scalable, low-latency edge deployments.

The edge deployment experiments also revealed important trade-offs. On Raspberry Pi 5, end-to-end latency ranged between 5.8 and 6.8 s with low power consumption (~5 W), sufficient for periodic monitoring but inadequate for real-time alerting [65]. On Jetson Nano, latency dropped below 3 s with higher power draw (~10 W), making it suitable for real-time inference but less appropriate for battery-powered deployments. AWS Rekognition, although accurate, introduced delays exceeding 13 s due to communication overhead [66]. These results confirm that latency, energy, and model footprint must be balanced depending on application needs. In future work, we plan to incorporate quantization and pruning to reduce the DRN’s size below 500 MB and achieve sub-2 s inference on ultra-low-power devices [67]. The performance comparison across platforms is summarized in Table 5.

5. Conclusions

This study presented a transfer learning-based methodology to enhance the generalization capacity of video-driven safety risk detection systems in industrial environments. Addressing the limitations of conventional deep learning models—which often underperform in unseen operational contexts—the proposed approach enables incremental adaptation to new scenarios using only small subsets of annotated data. By integrating a dual-stage deep neural architecture (DRN) with scenario-driven retraining, the system demonstrated consistent improvements in both binary and multi-class classification tasks across diverse forklift operation scenarios defined by OSHA 3949.

Extensive experimental results confirmed that transfer learning significantly improves detection accuracy and robustness in cross-scenario evaluations. The DRN consistently outperformed existing solutions such as AWS Rekognition and NVIDIA DeepStream, particularly under limited data availability. The transfer learning approach enabled effective adaptation with as little as 10–25% of new scenario data, making it highly practical for industrial applications where labeled data are often scarce. Moreover, the DRN achieved state-of-the-art performance on embedded edge devices such as the Jetson Nano, with low inference latency, small memory footprint, and strong real-time response—validating its suitability for resource-constrained industrial settings.

Interpretability analysis using SHAP further revealed that transfer learning reorients the model’s focus toward semantically meaningful regions, enhancing transparency and reducing annotation requirements. This shift not only supports more efficient model retraining but also increases trust in automated safety monitoring systems.

Despite promising results, limitations must be acknowledged. The current validation is restricted to forklift operations, and generalization to machinery with different kinematic profiles (e.g., mining shovels, overhead cranes, or CAEX trucks) has not yet been demonstrated. Similarly, extreme lighting conditions such as nighttime operation, glare, or infrared-only imaging were not tested. Preliminary simulations with artificially darkened videos showed an average performance drop of ~25% in F1-score, highlighting that multimodal sensing (thermal, depth, or radar) may be necessary for robust deployment in such conditions.

The performance of our DRN with incremental transfer learning, reaching F1-scores above 0.90 and AUC values exceeding 0.95 under domain shift, is competitive with or superior to recently published frameworks for video anomaly detection. Weakly supervised methods based on multimodal and multiscale feature fusion report AUC values around 0.97 on standard benchmarks [68], while semantic keyframe extraction combined with pre-trained deep models has achieved accuracy close to 96% [30]. Spatiotemporal architectures leveraging multi-stream fusion have reported AUC ≈ 0.95 even in noisy environments [69], and other multimodal solutions, including transformer-based models for violence recognition [70] and UAV-based anomaly detection frameworks [71], also reach performance levels above 90%. However, unlike these approaches, our framework achieves high performance with only 10–25% of new scenario data and a significantly smaller computational footprint. This efficiency is critical for industrial deployments where annotation costs are high and computational resources are limited. By enabling adaptation with minimal scenario-specific data and supporting inference on edge devices such as the Jetson Nano, the proposed DRN framework provides a practical, scalable, and resource-efficient alternative to more complex multimodal or transformer-based methods.

Overall, the results demonstrate that the proposed feature-based transfer learning strategy provides a scalable, efficient, and interpretable solution for deploying safety risk detection systems in dynamic and heterogeneous industrial environments. Future work will explore domain adaptation under federated learning settings, active learning strategies to further reduce annotation costs, and the extension of the architecture to multimodal sensor fusion.