1. Introduction

Deep reinforcement learning (DRL) algorithms [1] enable agents to learn optimal policies through interaction and achieve superhuman performance in domains ranging from game play [2,3,4] to robotics [5,6,7]. Despite successes in various domains, policies learned by DRL algorithms are often brittle because of their neural network parameterization. As first demonstrated in image classification tasks [8], neural networks are vulnerable to adversarial perturbations. These perturbations, typically imperceptible to humans, can lead to erroneous predictions with high confidence [9]. Similarly, trained DRL agents can fail catastrophically when deployed in environments slightly different from their training settings or when subjected to noise or adversarial perturbations [10,11]. This lack of robustness limits the deployment of agents in real-world scenarios, where model misspecification, inherent environmental stochasticity, and the potential for malicious adversarial interventions are prevalent. Addressing this susceptibility to perturbations and modeling differences remains a critical challenge in the field.

Numerous techniques have been proposed to address the vulnerabilities of trained policies in DRL. By integrating safety constraints [12,13], domain randomization [14], and adversarial training frameworks [15], researchers have developed methods that improve agent robustness while maintaining performance in dynamic environments [16,17]. Among these techniques, adversarial reinforcement learning (ARL) enhances the standard training process by exposing the agent to worst-case (or near worst-case) perturbations during training, thereby improving its robustness [18]. In ARL, two agents typically compete with each other: an adversary and a protagonist. The adversary is trained alongside the protagonist rather than being held fixed. The core idea is to formulate a minimax optimization problem in which the protagonist aims to maximize the cumulative reward, while the adversary seeks to minimize it through perturbations [19].
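For concreteness, the minimax objective described above can be written in generic notation. The symbols are our own shorthand rather than necessarily those of the formal problem statement: $\pi$ is the protagonist policy, $\nu$ the adversary policy, $\gamma \in [0, 1)$ a discount factor, and $r(s_t, a_t)$ the reward at step $t$, with the adversary influencing the trajectory through its perturbations.

```latex
\max_{\pi} \; \min_{\nu} \; \mathbb{E}_{\pi, \nu}\left[ \sum_{t=0}^{\infty} \gamma^{t} \, r(s_t, a_t) \right]
```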

Adversarial attacks in DRL can be broadly categorized according to which aspect of the process they target [20]: the state observations received by the agent [21,22], the actions taken by the agent [23], the reward function that guides the learning process [24], or the dynamics of the environment [25]. Existing methods typically focus on a single attack type, neglecting the interactions and mutual effects between attack types [26]. Some studies have shown that adversarial training with an action-disrupting adversary improves the resilience of the agent to parametric variations during training, while the impact of such training on other factors remains largely unexplored [27]. In practical scenarios, however, multiple disruptions occur simultaneously and influence each other. For example, an autonomous vehicle uses sensors (e.g., cameras and LiDAR) for environmental perception and controls its steering and speed through actuation. Both the sensing and actuation processes are susceptible to noise and uncertainty at any time during operation [28]. There remains a significant gap in the literature regarding the extent to which an agent trained through adversarial training to be robust to a specific type of disturbance can generalize that robustness to novel and distinct forms of disruption.

In this study, we investigate the limitations of various adversarial training methods, focusing in particular on their inability to achieve robustness across mixed-attack scenarios. To address these shortcomings, we introduce the Adversarial Synthesis and Adaptation (ASA) framework, which integrates and balances mixed adversarial attack strategies to achieve robustness against observation-based and action-based attacks simultaneously. Our method adaptively learns to scale diverse attack types during training to balance the trade-offs between them. Results in MuJoCo Playground environments [29] demonstrate the ability of ASA to adaptively balance adversarial attacks, leading to improved performance over baseline methods in default settings, as well as under single-type and mixed adversarial attacks. In summary, our study offers the following key contributions:

- 1.

Empirical Evidence of Asymmetric Robustness: We are the first to empirically demonstrate that robustness to observation perturbations does not imply robustness to action perturbations and vice versa. This distinction is often overlooked in prior work, which typically focuses on single-type adversarial attacks in isolation. Our results highlight the critical need to address mixed perturbations that simultaneously target multiple components of the decision-making pipeline.

- 2.

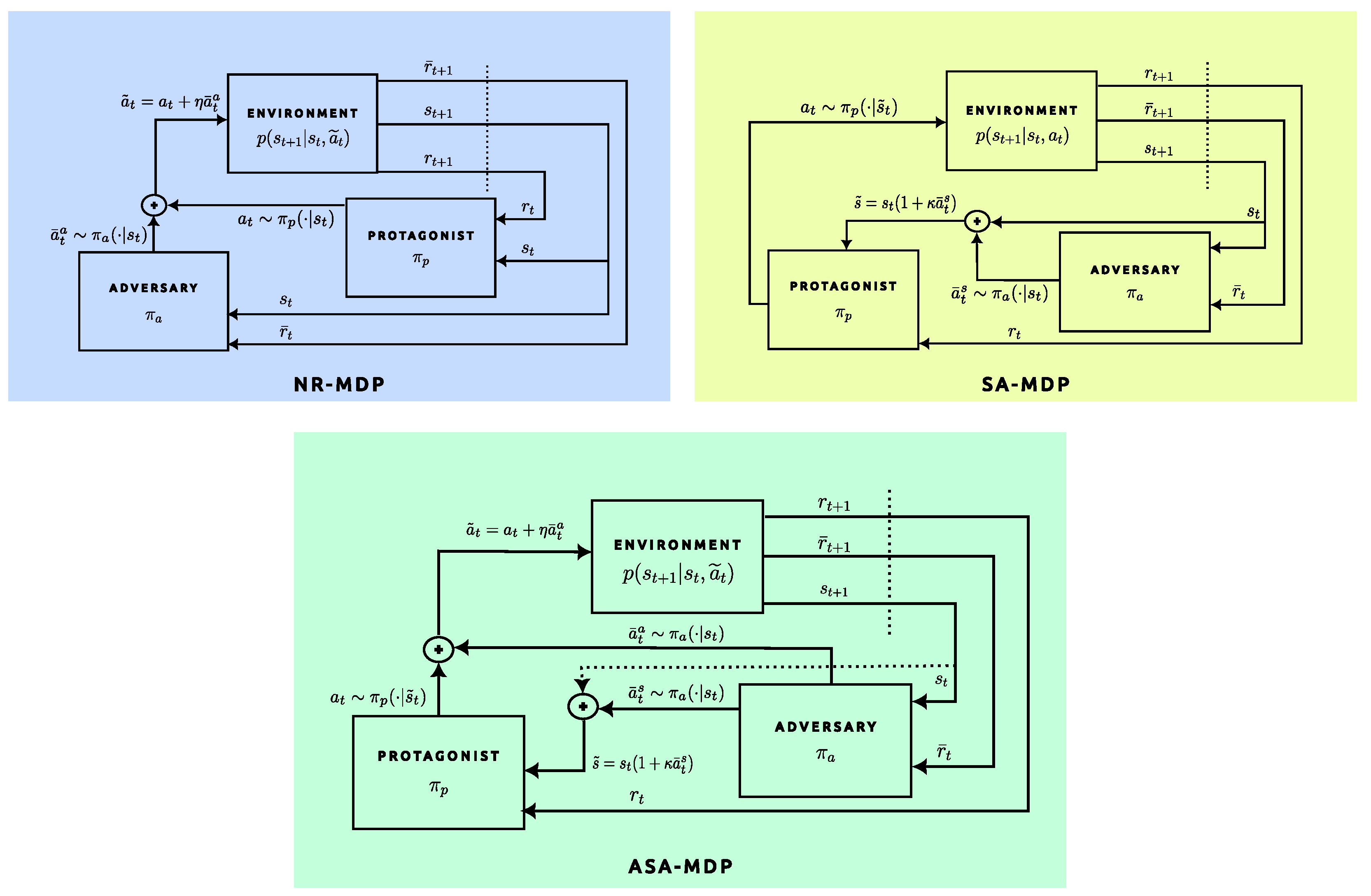

A Generalized Framework for Mixed Adversarial Attacks: We introduce Action and State-Adversarial Markov Decision Process (ASA-MDP), a novel game-theoretic extension of the standard MDP. Unlike prior formulations such as NR-MDP [

23] and SA-MDP [

21], ASA-MDP formally models mixed adversarial attacks involving simultaneous perturbations to both the actions and observations. In particular, ASA-MDP subsumes MDP, NR-MDP, and SA-MDP as special cases through the appropriate parameterization of adversarial strengths, providing a unified and flexible framework for studying adversarial robustness.

- 3.

Balanced Adversarial Training via ASA-PPO: We propose ASA-PPO, a hybrid adversarial training algorithm in which the adversary dynamically balances its perturbation budget in both the state and action spaces. Unlike naive unbalanced mixed-attack strategies, ASA-PPO promotes the learning of protagonist policies that are significantly more robust across a spectrum of attack modalities, including single-type and mixed perturbations. Extensive experiments validate the superiority of ASA-PPO over the existing baselines.

The remainder of this paper is organized as follows. Section 2 reviews related work, providing a comprehensive overview of existing research in the field. Section 3 introduces the notation and background necessary to understand this study. Section 4 presents the problem formulation and the proposed solution framework, detailing its components and methodology. Section 5 evaluates the framework through simulations and discusses the results, highlighting the key findings and implications. Finally, Section 6 concludes the paper by summarizing the contributions and outlining potential directions for future work.

2. Related Works

Adversarial learning originates from early findings in deep learning, which revealed that small perturbations to input data can cause models to produce incorrect classifications [8,9]. Building on these findings, a wide range of attack strategies has been proposed for different problem domains [30,31]. In DRL specifically, early studies by Huang et al. [10] and Kos et al. [15] demonstrated that minor perturbations to an agent's observed states can significantly degrade the performance of the learned policy, often resulting in failures in control tasks. Subsequent research has shown that trained agents are also vulnerable to action-space perturbations [32], reward poisoning [24,25], and model attacks [33], which cause similar performance failures. Together, these findings underscore the critical need for robust learning strategies in DRL [21], especially in safety-critical applications.

Beyond direct manipulation of MDP components, the concept of adversarial policies emerged, in which an adversary agent in a multi-agent setting learns to exploit the weaknesses of a DRL agent, leading it into undesirable states or forcing suboptimal actions even without directly altering the agent's sensory inputs [34]. Adversarial reinforcement learning (ARL) incorporates manipulated samples into the training process as a defense mechanism, preventing performance degradation in the presence of adversarial or natural noise [23,35]. Attacks in ARL can be classified into three settings based on the level of knowledge available to the attacker: white-box, gray-box, and black-box. In the white-box setting, gradient-based methods such as the Fast Gradient Sign Method (FGSM) [9] and Projected Gradient Descent (PGD) [36] exploit gradients of the loss function to perturb control policies, causing significant performance degradation [35,37]. In the gray-box setting, adversaries with limited knowledge of the target policy employ man-in-the-middle strategies [38]. In the black-box setting, attacks can reduce the performance of the agent without access to model parameters [33].
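As a minimal illustration of the white-box FGSM idea applied to observations, the sketch below assumes a toy policy whose preference for an action is a linear score $w \cdot s$ of the state. This is a hypothetical stand-in for a neural policy, whose gradient would instead come from automatic differentiation. Because the gradient of the score with respect to the state is simply $w$, one signed step of size $\epsilon$ lowers the score as much as possible within an $L_\infty$ ball. The names `score` and `fgsm_observation_attack` are illustrative only.

```python
from typing import List, Sequence


def sign(x: float) -> float:
    """Return -1.0, 0.0, or 1.0 according to the sign of x."""
    return float((x > 0) - (x < 0))


def score(state: Sequence[float], weights: Sequence[float]) -> float:
    """Toy linear policy score w . s (hypothetical stand-in for a network output)."""
    return sum(s * w for s, w in zip(state, weights))


def fgsm_observation_attack(
    state: Sequence[float], weights: Sequence[float], epsilon: float
) -> List[float]:
    """One FGSM step that decreases the toy score within an L-infinity ball.

    The gradient of w . s with respect to s is w, so each state component is
    moved by epsilon against the sign of the corresponding weight.
    """
    grad = weights
    return [s - epsilon * sign(g) for s, g in zip(state, grad)]


clean_state = [0.5, -0.2, 0.1]
w = [1.0, -2.0, 0.0]
adv_state = fgsm_observation_attack(clean_state, w, epsilon=0.1)
# The score drops by about epsilon * sum(|w|) = 0.3.
print(score(clean_state, w), score(adv_state, w))
```

Components with a zero gradient are left untouched, and no component moves by more than $\epsilon$, which is what makes FGSM a single-step attack inside the perturbation budget.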

Despite the initial successes of adversarial training, robustness evaluation often suffered from a static perspective. This led to the critical realization that robust evaluation requires considering adaptive attacks specifically designed to overcome particular defenses [39]. After all, a protagonist that is aware of a static attack mechanism can often devise strategies to bypass it, leading to a false sense of security. A foundational approach in this area is Robust Adversarial Reinforcement Learning (RARL), proposed by Pinto et al. [19]. RARL formalizes the problem as a zero-sum game between a protagonist agent and an adversarial agent, in which the adversary actively learns to apply destabilizing forces at predetermined joints. The protagonist, in turn, learns a policy that is robust to the optimal disruptive strategies employed by the adversary. Later, Pan et al. extended RARL with the Risk-Averse Robust Adversarial Reinforcement Learning (RARARL) algorithm, in which a risk-averse protagonist and a risk-seeking adversary alternate control of the executed actions [40]. Introducing risk-averse behavior significantly reduced test-time catastrophes for protagonist agents trained with a learned adversary compared to those trained without one. Building on their previous work [21], Zhang et al. use the same zero-sum formulation under the ARL framework to train an agent that is robust to perturbations in the observation space [41]. This co-adaptation process forces the agent to perform well even under the worst-case (or near worst-case) conditions generated during training, thereby improving its generalization to previously unseen disturbances.
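The alternating protagonist and adversary updates used in RARL-style training can be illustrated with a deliberately tiny example. The sketch below is a toy, not RARL itself: it assumes scalar parameters and a hand-picked objective $J(p, q) = -p^2 + pq + q^2$, which is concave in the protagonist parameter $p$ and convex in the adversary parameter $q$, so its unique saddle point is $(0, 0)$. The protagonist takes a gradient-ascent step, then the adversary takes a gradient-descent step against the updated protagonist.

```python
from typing import Tuple


def alternating_gda(
    p: float, q: float, lr: float = 0.1, steps: int = 200
) -> Tuple[float, float]:
    """Alternating gradient ascent (protagonist) / descent (adversary) on
    the toy zero-sum objective J(p, q) = -p**2 + p*q + q**2."""
    for _ in range(steps):
        # Protagonist maximizes J: dJ/dp = -2p + q.
        p = p + lr * (-2.0 * p + q)
        # Adversary minimizes J against the updated protagonist: dJ/dq = p + 2q.
        q = q - lr * (p + 2.0 * q)
    return p, q


p_star, q_star = alternating_gda(1.0, 1.0)
print(p_star, q_star)  # both approach the saddle point (0, 0)
```

With this step size the joint update is a contraction, so the iterates spiral into the saddle point. In adversarial RL the two gradients are replaced by policy-gradient estimates from rollouts, and such convergence is not guaranteed.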

Even though co-adaptation has advantages over static attacks, Gleave et al. [34] showed in multi-agent reinforcement learning (MARL) settings [42] that a protagonist trained with an adversary via DRL is not robust to replacement of the adversarial policy at test time. To improve robustness in this case, some researchers trained the protagonist in the min–max formulation against a population of adversaries, randomly sampling an adversary from the pool [43]. They also showed that training against a single adversary does not provide robustness against different adversaries. In a more theoretical study, He et al. proposed the robust multi-agent Q-learning (RMAQ) and robust multi-agent actor–critic (RMAAC) algorithms to provide robustness in MARL [44].
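The population-based variant can be sketched as a training loop that, at every iteration, samples one adversary uniformly from a fixed pool and performs one protagonist update against it. This is a hedged sketch: the adversaries and the `update_step` callback are hypothetical placeholders for learned adversary policies and a real RL update, and uniform sampling is our assumption about how the adversary is "randomly picked".

```python
import random
from typing import Callable, List, Sequence, TypeVar

A = TypeVar("A")


def train_against_population(
    adversaries: Sequence[A],
    update_step: Callable[[A], None],
    iterations: int,
    seed: int = 0,
) -> List[int]:
    """Run `iterations` protagonist updates, each against an adversary drawn
    uniformly at random from the pool. Returns the sampled pool indices."""
    rng = random.Random(seed)
    history: List[int] = []
    for _ in range(iterations):
        idx = rng.randrange(len(adversaries))  # uniform draw from the pool
        update_step(adversaries[idx])          # hypothetical protagonist update
        history.append(idx)
    return history


pool = ["adversary_a", "adversary_b", "adversary_c"]
used: List[str] = []
history = train_against_population(pool, used.append, iterations=300)
```

Because the protagonist never knows which adversary it will face next, it cannot overfit to the quirks of a single opponent, which is the motivation given for the population approach.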

As DRL agents are increasingly deployed in complex real-world scenarios, they often process information from multiple sources or modalities. This introduces the challenge of mixed attacks, in which adversaries craft perturbations across one or more input modalities simultaneously. Mandlekar et al. analyzed the individual effects of three types of perturbation on performance, namely model parameter uncertainty, process noise, and observation noise, using probabilistic and gradient-based attacks [45]. Rakhsha et al. investigated optimal reward- and transition-poisoning attacks that force the agent to execute a target policy [25]. However, these studies did not investigate the combined effects of the attacks on agents. More recently, Liu and Lai [46] analyzed attacks in MARL in which an exogenous adversary injects perturbations into the actions and rewards of the agents. They showed that combined action and reward poisoning can drive agents toward behaviors selected by the attacker, even without access to the environment. Although recent studies have explored various strategies for attacking DRL algorithms and improving their robustness, the impact of mixed attacks on the learned policy, as well as their potential use for enhancing robustness, remains an open problem, which this work addresses.

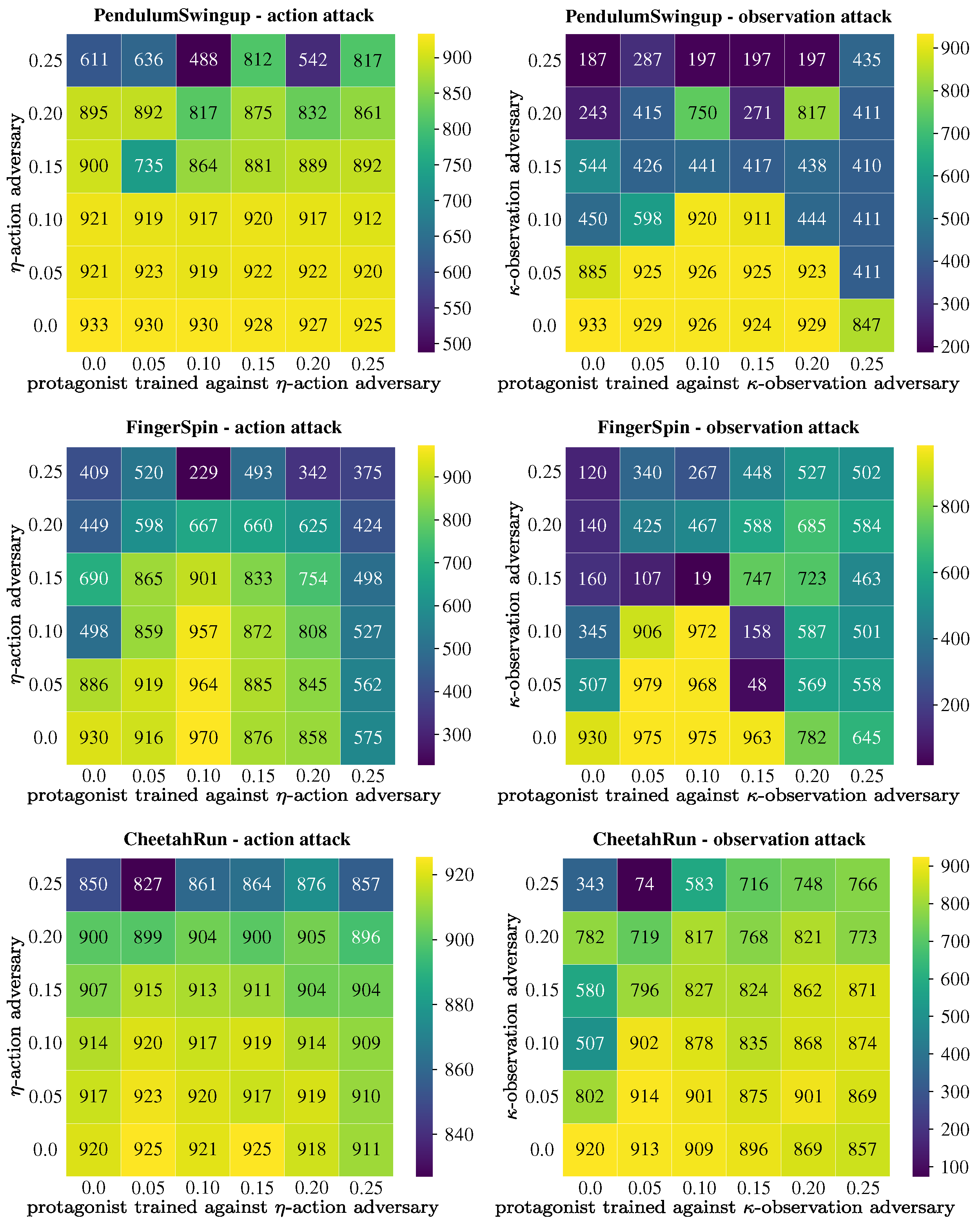

5. Experiments

In this section, we present experiments conducted on MuJoCo Playground [29], using environments from the DeepMind Control Suite [52] and the MuJoCo physics simulator [53]. As baselines, we selected the random hybrid noise-augmented PPO algorithm (NA-PPO), the PPO implementation of NR-MDP [23] (NR-PPO) for action-based adversarial attacks, and ATLA-PPO [41] for observation-based adversarial attacks. N-HYBRID-PPO, a naive version of our proposed algorithm ASA-PPO, is also included as a baseline for comparison. All baselines except NA-PPO employ learned perturbation strategies rather than fixed attack patterns, consistent with the approach used in our proposed framework. We train and compare all methods on three continuous control tasks: PendulumSwingup, FingerSpin, and CheetahRun. We normalize the environment's states and rewards to the range [−1.0, 1.0], and we clip the actions of both the adversarial and protagonist agents to the same range. Continuous perturbations are discretized into five levels with

. The PPO implementation is adapted from the codebase provided in [54], which we modified to integrate the baselines and our proposed algorithm. Our setup enables vectorized PPO training on GPU using JAX [55]. In the simulations, we optimized the hyperparameters of the PPO algorithm in a non-robust setting and applied the same values when training the adversarial methods.
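The text does not specify how states and rewards are mapped to [−1.0, 1.0]. One simple possibility, assumed here purely for illustration, is an affine rescaling from known per-dimension bounds followed by clipping, with the same clipping applied to protagonist and adversary actions. The bounds and function names below are hypothetical, and many implementations use running statistics instead of fixed bounds.

```python
from typing import List, Sequence


def clip(v: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Clamp a scalar to [lo, hi]."""
    return max(lo, min(hi, v))


def normalize_to_unit_range(
    x: Sequence[float], low: Sequence[float], high: Sequence[float]
) -> List[float]:
    """Affinely map each component from [low, high] to [-1, 1], then clip
    values that fall outside the assumed bounds."""
    return [
        clip(2.0 * (xi - lo_i) / (hi_i - lo_i) - 1.0)
        for xi, lo_i, hi_i in zip(x, low, high)
    ]


def clip_action(action: Sequence[float]) -> List[float]:
    """Clip protagonist or adversary actions to the shared [-1, 1] range."""
    return [clip(a) for a in action]


print(normalize_to_unit_range([0.0, 5.0, 12.0], [0.0] * 3, [10.0] * 3))  # [-1.0, 0.0, 1.0]
print(clip_action([1.5, -2.0, 0.3]))                                   # [1.0, -1.0, 0.3]
```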

Table 1 lists these parameters, some of which have environment-specific values. In all baseline methods and for all agents (both protagonist and adversary), the neural network architecture is identical: two hidden layers with 256 nodes each, followed by an output layer whose size matches the dimensionality of the corresponding output. The activation function used in all layers is tanh.
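The shared architecture can be written out as a plain forward pass. The sketch below uses only the Python standard library for portability (the paper's implementation uses JAX) and follows the text literally by applying tanh to every layer, including the output layer. The uniform fan-in initialization and the function names are our assumptions, not details taken from the paper.

```python
import math
import random
from typing import List, Sequence, Tuple

# One dense layer: (weights[out_dim][in_dim], biases[out_dim]).
Layer = Tuple[List[List[float]], List[float]]


def init_mlp(
    in_dim: int, out_dim: int, hidden: Sequence[int] = (256, 256), seed: int = 0
) -> List[Layer]:
    """Create dense layers in_dim -> 256 -> 256 -> out_dim with small random weights."""
    rng = random.Random(seed)
    sizes = [in_dim, *hidden, out_dim]
    layers: List[Layer] = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        bound = 1.0 / math.sqrt(fan_in)  # assumed initialization scale
        weights = [[rng.uniform(-bound, bound) for _ in range(fan_in)] for _ in range(fan_out)]
        layers.append((weights, [0.0] * fan_out))
    return layers


def forward(layers: Sequence[Layer], x: Sequence[float]) -> List[float]:
    """Apply each dense layer followed by tanh, as described for all layers."""
    h = list(x)
    for weights, biases in layers:
        h = [math.tanh(sum(w * v for w, v in zip(row, h)) + b) for row, b in zip(weights, biases)]
    return h


net = init_mlp(in_dim=4, out_dim=2)
out = forward(net, [0.1, -0.2, 0.3, 0.0])
```

For a PPO agent the output size would typically be the action dimension for the policy head or 1 for a value head; the text only states that it matches the dimensionality of the corresponding output.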

We investigate a diverse set of adversarial attacks and their effectiveness in training robust policies. Specifically, our aim is to address the following research questions:

Question 1: Does robustness to action-perturbation attacks imply robustness to observation-perturbation attacks, and vice versa?

Question 2: Which type of adversarial attack leads the reinforcement learning agent to (near) worst-case performance?

Question 3: Can training with a mixed adversarial attack enable the protagonist agent to develop simultaneous robustness against action, observation, and mixed perturbations?

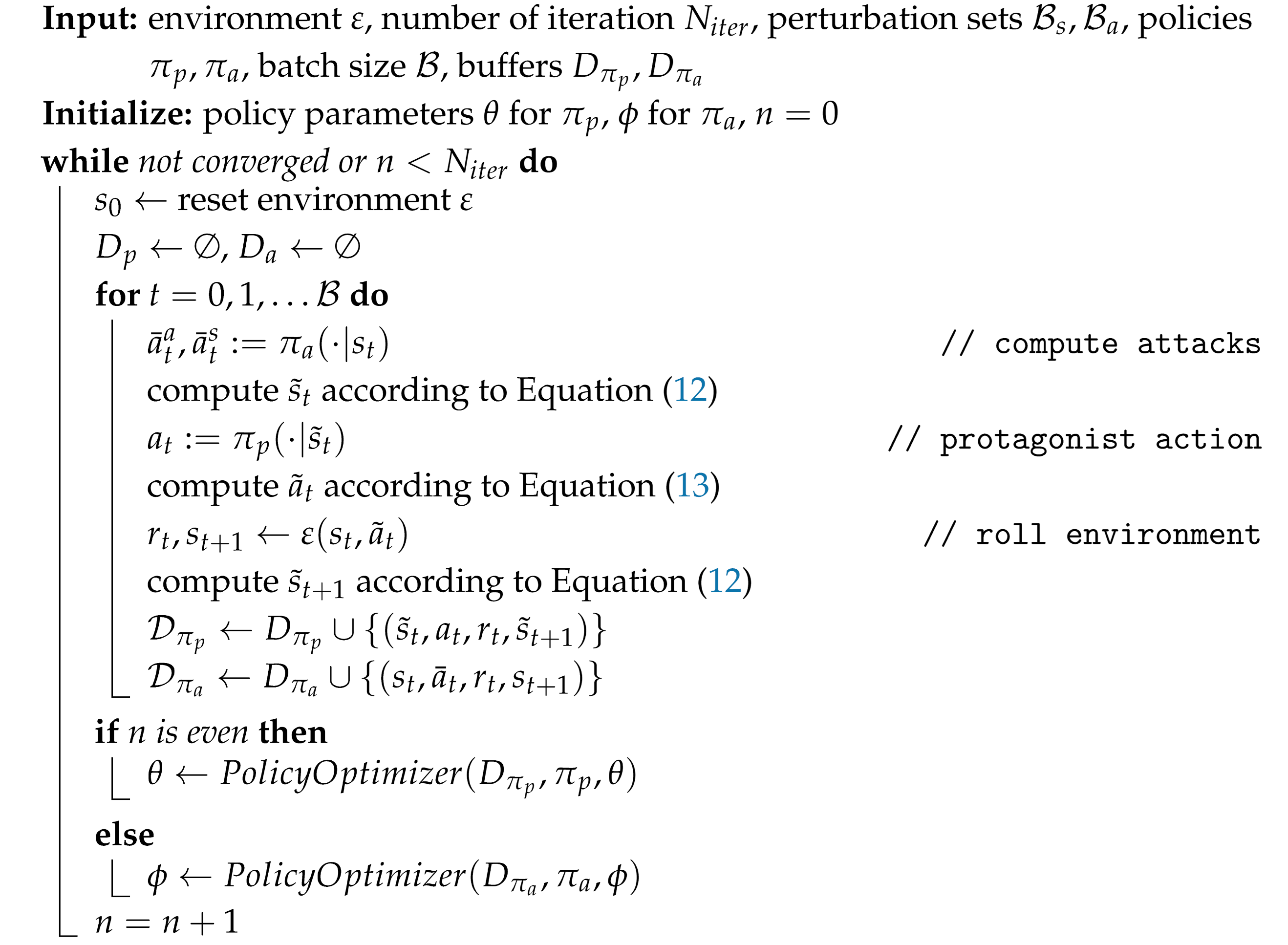

We first trained the baseline action- and state-adversarial agents alongside a non-robust agent for each environment and compared their performance. Each training run was repeated with five random seeds. Figure 3 illustrates the training curves, where the solid lines represent the mean performance across seeds and the shaded areas indicate the standard deviations. We repeated the training for all perturbation levels of the adversaries. The lower performance variability observed in CheetahRun is due to its more stable dynamics, as reported in previous studies [22,56]. Its high-dimensional state–action space and complex multi-joint dynamics provide natural robustness, allowing the agent to compensate for perturbations through alternative movement patterns. In contrast, PendulumSwingup and FingerSpin require precise control and timing, resulting in greater variability under different adversarial attacks.
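The shaded training curves summarize per-step statistics across the five seeds. A minimal sketch of that aggregation follows. It assumes every seed is evaluated at the same steps and uses the sample standard deviation, since the text does not say which estimator was used; `aggregate_curves` is a hypothetical helper name.

```python
import statistics
from typing import List, Sequence, Tuple


def aggregate_curves(
    curves: Sequence[Sequence[float]],
) -> Tuple[List[float], List[float]]:
    """curves[i][t] is the return of seed i at evaluation step t.

    Returns the per-step mean (solid line) and sample standard deviation
    (half-width of the shaded band) across seeds.
    """
    if len({len(c) for c in curves}) != 1:
        raise ValueError("all seeds must be evaluated at the same steps")
    means, stds = [], []
    for values_at_step in zip(*curves):
        means.append(statistics.mean(values_at_step))
        stds.append(statistics.stdev(values_at_step))  # needs at least two seeds
    return means, stds


mean_curve, std_curve = aggregate_curves([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
print(mean_curve, std_curve)  # [3.0, 4.0] [2.0, 2.0]
```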

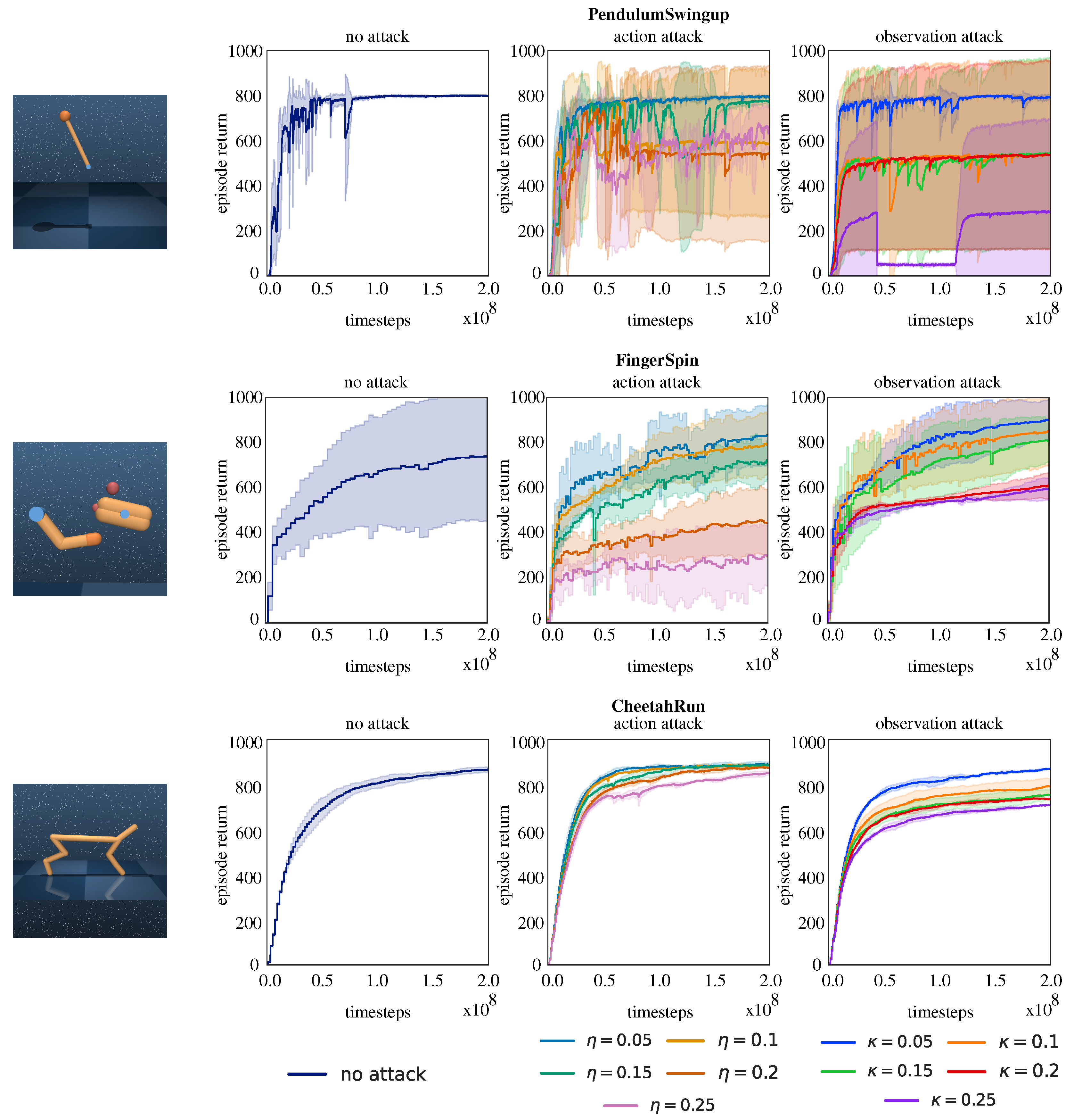

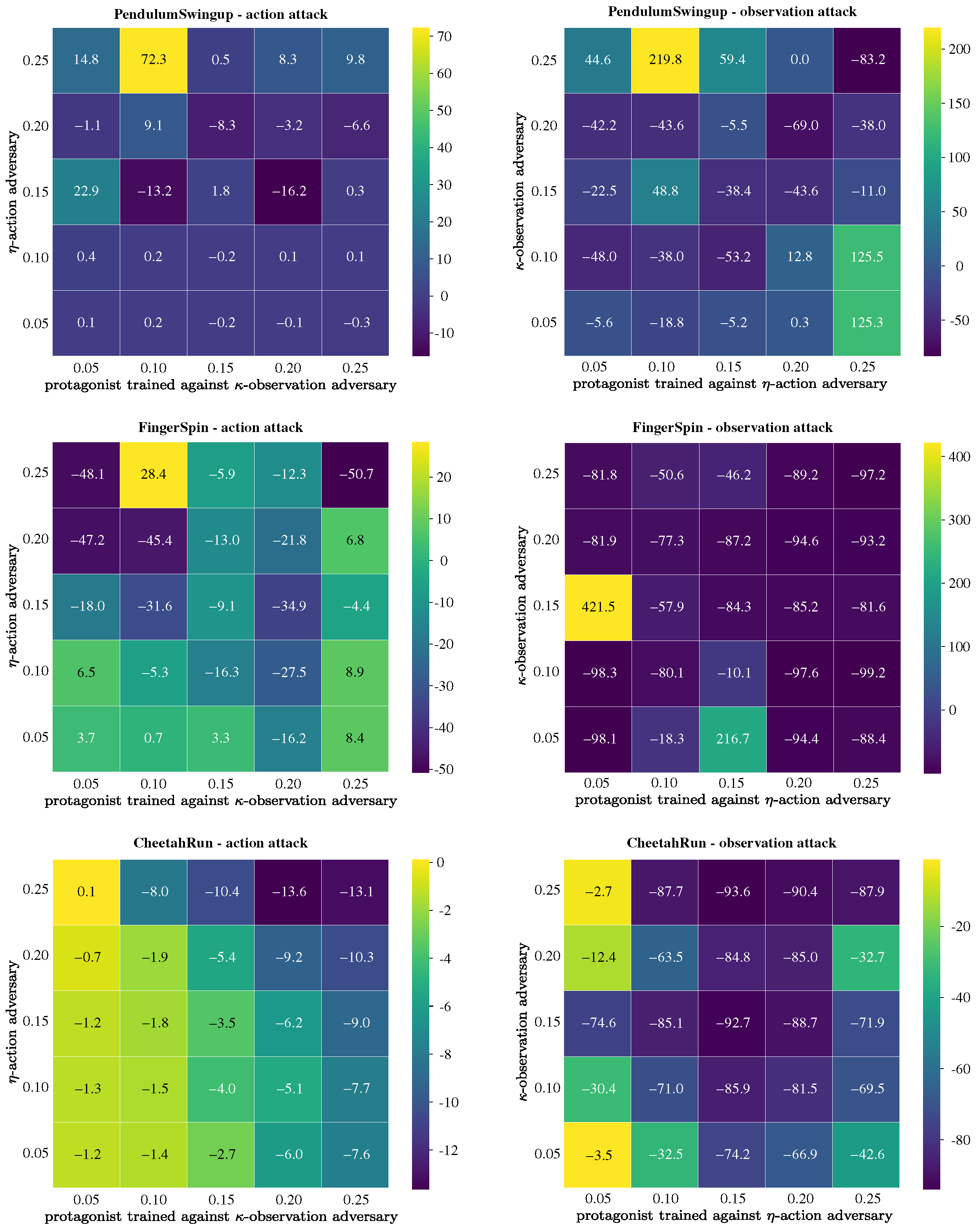

After training was completed, we evaluated the robustified protagonist agents under various perturbation levels, including levels that differed from those used by the adversary during training. Additionally, to evaluate the transferability of robustness, we tested action-robust agents against observation-based adversaries and vice versa. In all cases, the reported scores correspond to the performance of the median-performing agent, evaluated over 20 episodes. These results are presented in Figure 4 and Figure 5, where the horizontal axis indicates the perturbation strength of the adversary used during the training of the protagonist and the vertical axis represents the perturbation strength of the adversary used during evaluation. The values in the grid represent the test performance of the protagonist when evaluated against the corresponding adversary.
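The text does not spell out how the median-performing agent is chosen. One plausible reading, assumed here, is to rank the per-seed agents by their mean return over the 20 evaluation episodes and report the middle one; with five seeds this picks exactly one agent. The helper name and the toy returns in the example are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def median_agent(returns_by_seed: Dict[int, Sequence[float]]) -> int:
    """Return the seed whose mean evaluation return ranks in the middle.

    For an odd number of seeds this is the exact median agent; for an even
    number it returns the upper of the two middle agents.
    """
    ranked = sorted(returns_by_seed, key=lambda seed: statistics.mean(returns_by_seed[seed]))
    return ranked[len(ranked) // 2]


episodes = 20
evaluation = {  # toy per-episode returns for five seeds
    0: [1.0] * episodes,
    1: [5.0] * episodes,
    2: [3.0] * episodes,
    3: [2.0] * episodes,
    4: [4.0] * episodes,
}
print(median_agent(evaluation))  # 2
```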

The existing literature on ARL highlights the importance of perturbation magnitude in training robust agents. Excessive perturbation can destabilize the training process, although it may lead to better performance in highly challenging environments. On the other hand, very small perturbations may fail to yield any significant improvement in robustness. An analysis of Figure 4 supports this observation: the average performance of the protagonist tends to deteriorate when facing adversaries stronger than those it was trained against, although the resulting performance gap does not follow a strictly monotonic pattern. The results also indicate that average performance on certain tasks, such as FingerSpin, degrades more significantly under strong perturbations. In contrast, methods trained with weaker perturbations better preserve performance levels comparable to those achieved under unperturbed conditions.

To address the first research question, we evaluate the protagonist agents against adversaries of a different type from the one they were trained to be robust against. Figure 5 presents the percentage change in test performance relative to the performance against the original adversary type encountered during training. With a few exceptions, performance declines in most test cases, indicating that robustness to action attacks does not necessarily translate into robustness against observation attacks. This highlights the necessity of training robust policies against both types of attack, particularly in real-world scenarios where uncertainties and perturbations simultaneously affect both the action and observation spaces.
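The relative change reported for cross-adversary evaluation can be computed as a percentage of the return against the training-time adversary type. The sketch divides by the absolute value of the baseline return, an assumption that keeps the sign meaningful if returns are negative; the function name is hypothetical.

```python
def relative_change_pct(cross_return: float, original_return: float) -> float:
    """Percentage change of the test return against a different adversary type,
    relative to the return against the adversary type used during training."""
    if original_return == 0:
        raise ValueError("relative change is undefined for a zero baseline return")
    return 100.0 * (cross_return - original_return) / abs(original_return)


print(relative_change_pct(600.0, 800.0))  # -25.0
```

A negative value means the protagonist performs worse against the unfamiliar attack type than against the one it was trained on.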

To address the second research question, we evaluated our adversarial attacks on trained non-robust PPO protagonist agents; the results are shown in Figure 6. Across all environments, non-robust agents are most vulnerable to hybrid attacks, especially under large perturbation budgets, and least sensitive to random and action-based attacks. This indicates that both naive and balanced hybrid attacks are more effective than single-type attacks, highlighting the importance of accounting for such threats when training robust agents.

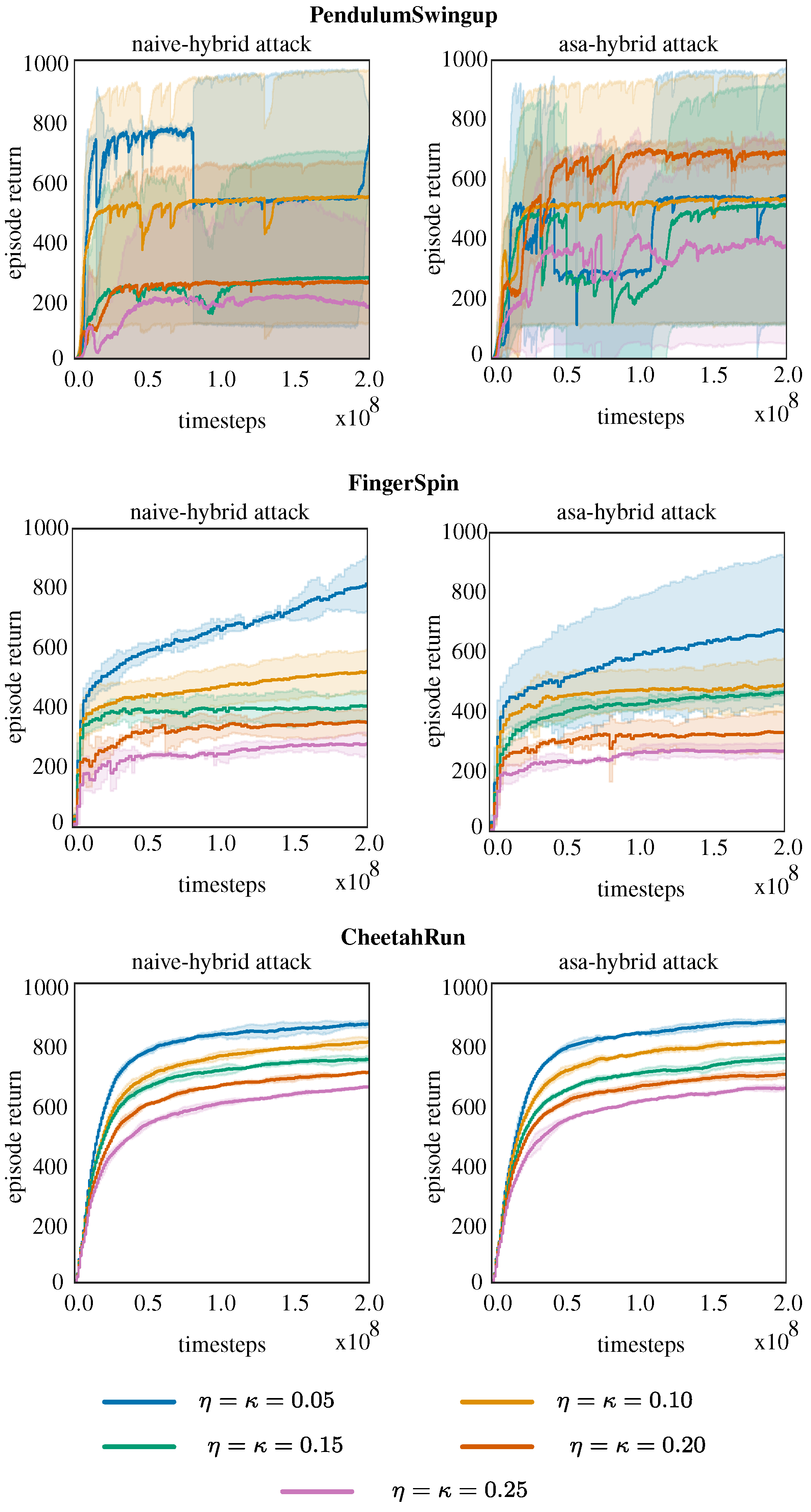

To address the final question, we trained hybrid agents. Figure 7 presents the training curves of the protagonist agents when trained against either a learned naive hybrid (N-HYBRID) adversary or an ASA-HYBRID adversary. Without loss of generality, we selected specific perturbation levels for each environment to compare the robustness of the baseline methods with that of their hybrid counterparts. The perturbation levels selected for the experiments are as follows:

Before evaluating robustness against hybrid attacks, we examined the performance trade-off associated with robustness, as shown in Table 2. Although N-HYBRID-PPO and ASA-PPO were trained under more severe perturbations than the other baselines, their performance in non-adversarial environments remains competitive.

To evaluate robustness against hybrid perturbations, we replaced the adversaries used during training with hybrid attackers. The results in Table 3 and Table 4 demonstrate that protagonists trained with NR-PPO and ATLA-PPO remain vulnerable to hybrid attacks, underscoring the necessity of a dedicated framework for training against such adversaries. Note also that the N-HYBRID-PPO algorithm applies unbalanced attacks that primarily target observations, which causes the agent trained with NR-PPO to perform worse than the agent trained with ATLA-PPO when evaluated against these attacks. Against balanced hybrid attacks, the performance of NR-PPO and ATLA-PPO is more comparable than under unbalanced attacks, yet both remain inferior to that of ASA-PPO.

Finally, we evaluated the protagonist trained against hybrid attacks by testing it against adversaries that perturb only actions or only observations. As shown in Table 5, across all environments and attack scenarios, the protagonist trained against a balanced adversary consistently outperforms the one trained against a naive hybrid (N-HYBRID) adversary.

The N-HYBRID-PPO adversary applies strong perturbations to observations, causing the protagonist agent trained against it to underperform when evaluated against adversaries that perturb only actions or only observations. In contrast, the ASA-PPO adversary learns to balance and diversify its attacks across both modalities, resulting in a protagonist that is more resilient to individual perturbation types. This highlights the importance of balancing mixed adversarial attacks to achieve a realistic and comprehensive measure of robustness. Furthermore, despite being trained under hybrid and stronger adversarial conditions, the proposed ASA-PPO method maintains robust performance across natural (unperturbed), action-only, and observation-only attack scenarios.

As a complementary experiment, we evaluate the robustness of the trained agents under parametric variations in the environment. Figure 8 shows the performance of the agents, including our proposed ASA-HYBRID and N-HYBRID approaches, under changes in environment dynamics, specifically mass and damping scales, in the CheetahRun, PendulumSwingup, and FingerSpin tasks. At the nominal scale of 1.0, all methods attain comparably high episode returns, reflecting effective baseline performance. As the scales move away from nominal across the tested range of 0.25 to 2.0, returns generally degrade. Our hybrid methods, designed to counter both action and observation perturbations, show moderate resilience at mild deviations but experience sharper declines at extreme values than baselines such as NR-PPO and ATLA-PPO. This limited transfer of robustness likely arises from the distinction between adversarial perturbations, which our methods are trained to mitigate, and parametric changes, which introduce out-of-distribution dynamics not explicitly addressed in the framework.

Notably, the adversarial attack budgets used during training were randomly predetermined to enhance the robustness of our agents. Because the hybrid agents were trained against a stronger adversary, with larger budgets for mixed action and observation perturbations, this focus on defending against targeted adversarial threats may reduce their effectiveness in handling parametric variations, since it prioritizes adversarial robustness over adaptation to changes in environmental dynamics. While ASA-HYBRID and N-HYBRID excel against malicious attacks, their sensitivity to environment parameter shifts highlights the orthogonality of these robustness dimensions and motivates future work combining adversarial training with domain randomization or explicit parameter adaptation to achieve broader resilience.
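A parametric-robustness sweep of this kind amounts to re-evaluating each trained policy on copies of the environment whose physical parameters are rescaled. The sketch below assumes a plain dictionary of nominal masses and damping coefficients and a hypothetical grid built from the scale values mentioned in the text (0.25, 1.0, 2.0); the actual grid, and whether mass and damping were varied jointly or independently, are not specified here. In MuJoCo, the corresponding model fields are arrays such as `body_mass` and `dof_damping`.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Params = Dict[str, List[float]]


def scale_dynamics(nominal: Params, mass_scale: float, damping_scale: float) -> Params:
    """Return a copy of the nominal parameters with masses and damping rescaled."""
    return {
        "mass": [m * mass_scale for m in nominal["mass"]],
        "damping": [d * damping_scale for d in nominal["damping"]],
    }


def dynamics_grid(
    nominal: Params, scales: Sequence[float]
) -> Dict[Tuple[float, float], Params]:
    """All (mass_scale, damping_scale) combinations drawn from `scales`."""
    return {(ms, ds): scale_dynamics(nominal, ms, ds) for ms, ds in product(scales, repeat=2)}


nominal = {"mass": [1.0, 2.0], "damping": [0.5]}  # hypothetical values
grid = dynamics_grid(nominal, [0.25, 1.0, 2.0])
print(len(grid), grid[(2.0, 0.25)])  # 9 {'mass': [2.0, 4.0], 'damping': [0.125]}
```

Each variant is then loaded into the simulator and the already-trained policies are evaluated without further training, so any drop in return reflects sensitivity to the dynamics shift alone.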

6. Conclusions and Future Works

This work addresses the open problem of how mixed adversarial attacks affect the performance of a learned policy and how they can be used to enhance robustness. The proposed ASA-PPO algorithm was trained and evaluated on three distinct tasks, demonstrating its effectiveness compared with algorithms designed for single-type attacks. The results indicate that while methods trained with weaker perturbations maintain performance levels comparable to unperturbed conditions, they falter against stronger adversaries. We also demonstrated that robustness to action perturbations does not guarantee robustness to observation attacks and vice versa, highlighting the necessity of training against mixed attacks. Non-robust agents were found to be most vulnerable to hybrid attacks, underscoring the effectiveness of the balanced hybrid attack models. Despite being trained against more severe hybrid attacks, the ASA-PPO method showed robust performance in non-adversarial settings as well as in action-only and observation-only attack scenarios.

In this work, we employ multiplicative perturbations for states and additive perturbations for actions. Although this separation provides stable and interpretable adversarial settings, aligning perturbation metrics across states and actions would enable more direct comparisons and fairer benchmarking. We view the development of such a unified metric as a valuable avenue for future research. Beyond this, several broader limitations merit discussion, highlighting the areas for further improvement. For instance, the observed sensitivity to parameter variations likely arises because adversarial training against action and observation perturbations does not inherently confer robustness to changes in the underlying system dynamics, highlighting the need for complementary approaches that explicitly account for parametric uncertainty during training.
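The two perturbation types can be made concrete with a short sketch. For illustration it assumes that the adversary outputs bounded directions $\delta_s, \delta_a \in [-1, 1]$ per dimension, that the observed state is $s_i (1 + \epsilon_s \delta_{s,i})$ (multiplicative), and that the executed action is $a_i + \epsilon_a \delta_{a,i}$ clipped to $[-1, 1]$ (additive). The symbols $\epsilon_s$ and $\epsilon_a$ and the exact functional forms are our assumptions and may differ from those used in the paper. Setting $\epsilon_s = 0$ leaves only the action attack, $\epsilon_a = 0$ leaves only the observation attack, and setting both to zero recovers the unperturbed setting, mirroring how ASA-MDP is described as subsuming NR-MDP, SA-MDP, and MDP.

```python
from typing import List, Sequence


def clip(v: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Clamp a scalar to [lo, hi]."""
    return max(lo, min(hi, v))


def perturb_observation(
    state: Sequence[float], delta_s: Sequence[float], eps_s: float
) -> List[float]:
    """Multiplicative state perturbation: s_i * (1 + eps_s * d_i), with d_i clipped to [-1, 1]."""
    return [s * (1.0 + eps_s * clip(d)) for s, d in zip(state, delta_s)]


def perturb_action(
    action: Sequence[float], delta_a: Sequence[float], eps_a: float
) -> List[float]:
    """Additive action perturbation: a_i + eps_a * d_i, clipped to the [-1, 1] action range."""
    return [clip(a + eps_a * clip(d)) for a, d in zip(action, delta_a)]


s, a = [0.5, -0.4], [0.9, -0.5]
print(perturb_observation(s, [1.0, -1.0], eps_s=0.2))  # approx. [0.6, -0.32]
print(perturb_action(a, [1.0, 1.0], eps_a=0.3))        # approx. [1.0, -0.2]
```

The multiplicative form scales the perturbation with the magnitude of each state component, whereas the additive form perturbs every action dimension by at most $\epsilon_a$ regardless of its value, which is one reason the two budgets are not directly comparable.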

While the approach is effective, it relies on access to a simulator and currently lacks theoretical guarantees. The framework assumes that the adversary can observe the true state, which may not be feasible in partially observable settings. Future work could extend this framework to Partially Observable Markov Decision Processes (POMDPs) [57] by incorporating history-based policies. Furthermore, balancing the strengths of the state perturbation and the action perturbation requires task-specific hyperparameter tuning, as their relative impacts vary significantly. The increased computational load from dual perturbations necessitates efficient implementations, such as parallel rollouts. Another direction is the development of an adaptive adversarial training framework that dynamically adjusts perturbation strength based on the agent's progress, which could better balance robustness and task performance, as proposed in [27].