Saliency-Guided Local Semantic Mixing for Long-Tailed Image Classification

Abstract

1. Introduction

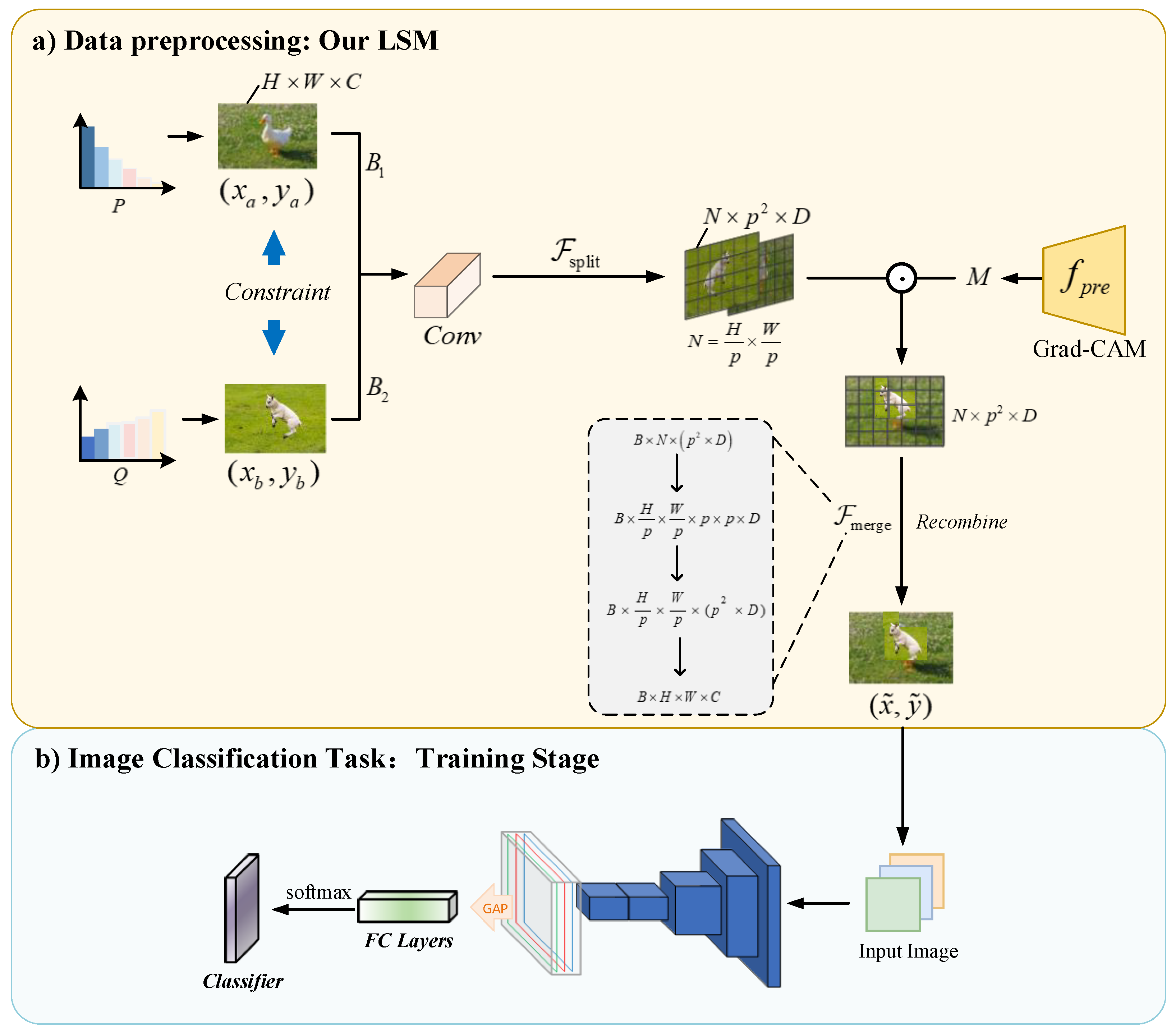

- We propose a differentiable local feature decoupling framework that maps each input image into N independent semantic units through a parameterized patch operator, enabling pixel-level feature control. This design avoids the destructive interference that traditional global mixing causes on fine-grained features.

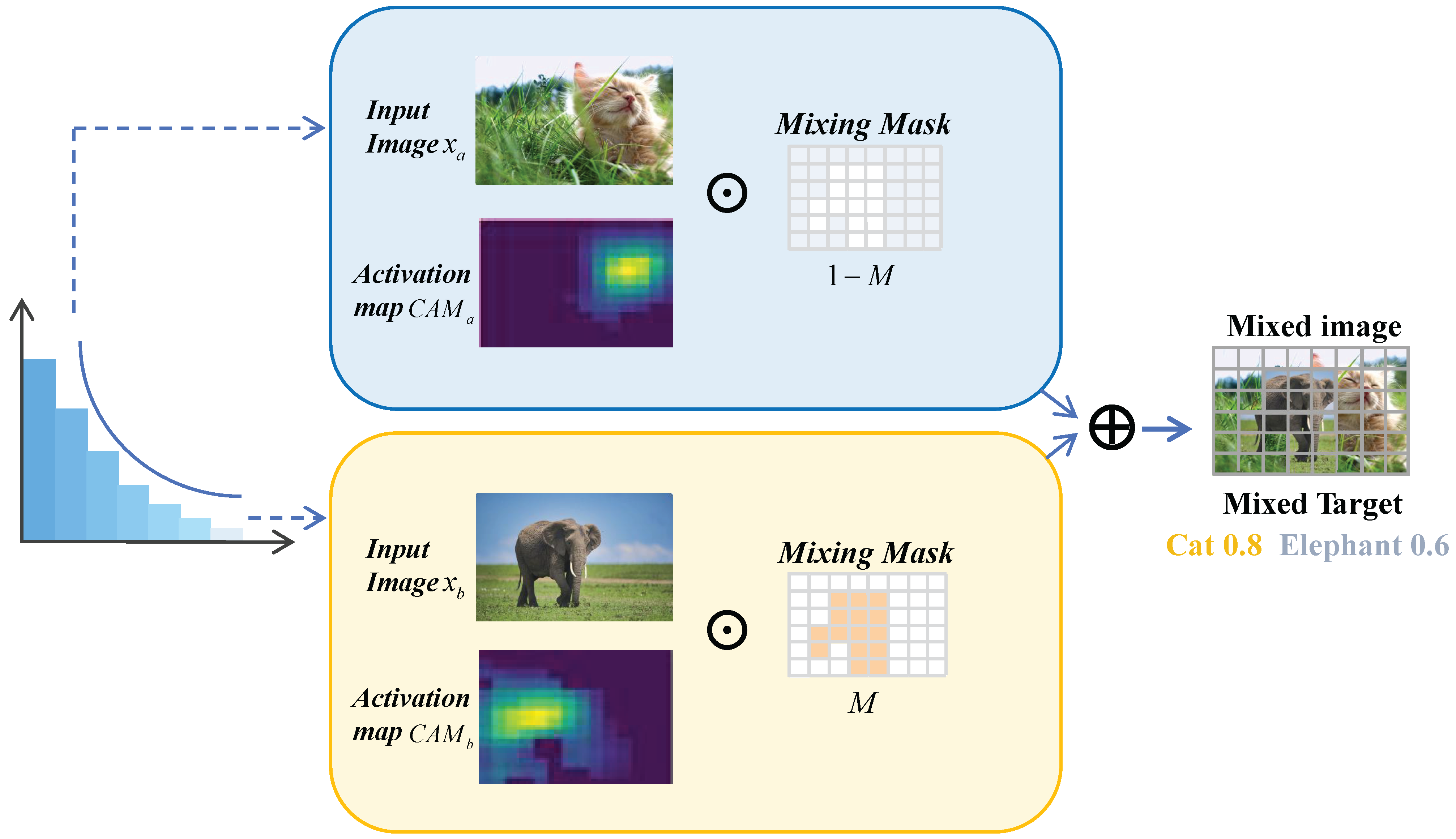

- We develop a local semantic mixing and dynamic label fusion strategy that obtains regional saliency weights by spatially aligning gradient-weighted class activation maps (Grad-CAMs) and averaging them over patches. This enables semantic-aware blending of discriminative tail-class regions with head-class backgrounds while dynamically generating soft labels, effectively augmenting tail-class data.

- Extensive experiments on three standard long-tailed benchmarks (CIFAR-100-LT, ImageNet-LT, and iNaturalist 2018) show that the proposed local semantic mixing (LSM) improves both classification performance and training stability.
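The contributions above describe averaging a saliency map over patches, blending salient tail-class patches into a head-class image, and producing a soft label. The following is a minimal NumPy sketch of that idea, not the authors' implementation. It rests on several assumptions that the text here does not confirm: the saliency map is passed in directly rather than computed with Grad-CAM, the k most salient patches are selected (a hypothetical top-k rule), and the label weight is the area fraction of pasted patches. All function names are illustrative.

```python
import numpy as np


def patch_average(saliency, p):
    """Average an (H, W) saliency map over non-overlapping p x p patches."""
    h, w = saliency.shape
    if h % p or w % p:
        raise ValueError("image size must be divisible by the patch size")
    return saliency.reshape(h // p, p, w // p, p).mean(axis=(1, 3))


def top_k_patch_mask(patch_scores, k):
    """Binary patch-grid mask marking the k highest-scoring patches (assumed rule)."""
    flat = patch_scores.ravel()
    mask = np.zeros(flat.shape, dtype=float)
    mask[np.argsort(flat)[-k:]] = 1.0
    return mask.reshape(patch_scores.shape)


def local_semantic_mix(tail_img, head_img, tail_saliency, p, k):
    """Paste the k most salient tail patches onto the head image.

    Returns the mixed (H, W, C) image and lam, the fraction of the image
    area taken from the tail image (assumed label weight).
    """
    patch_mask = top_k_patch_mask(patch_average(tail_saliency, p), k)
    # Expand each patch-grid entry to a p x p block of pixels.
    pixel_mask = np.kron(patch_mask, np.ones((p, p)))[..., None]
    mixed = pixel_mask * tail_img + (1.0 - pixel_mask) * head_img
    return mixed, float(patch_mask.mean())


def soft_label(lam, tail_cls, head_cls, num_classes):
    """Soft label that gives weight lam to the tail class and 1 - lam to the head class."""
    y = np.zeros(num_classes)
    y[tail_cls] += lam
    y[head_cls] += 1.0 - lam
    return y


# Toy usage with random data standing in for real images and a real Grad-CAM map.
rng = np.random.default_rng(0)
tail_image = rng.random((32, 32, 3))
head_image = rng.random((32, 32, 3))
saliency_map = rng.random((32, 32))
mixed_image, lam_value = local_semantic_mix(tail_image, head_image, saliency_map, p=4, k=16)
label = soft_label(lam_value, tail_cls=95, head_cls=3, num_classes=100)
```

Because the mask is built on the patch grid and only then expanded to pixels, every patch comes entirely from one source image, which is what separates this kind of local mixing from a global pixel-wise blend.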

2. Related Works

2.1. Rebalancing Strategies

2.2. Augmentation-Based Methods

3. Method

3.1. Preliminaries

3.2. Dual-Branch Balanced Sampling Mechanism

3.3. Feature Decoupling

3.4. Patch-Level Saliency-Guided Mask Generation

3.4.1. Gradient-Weighted Class Activation Map Generation

3.4.2. Patch-Level Mask Generation

3.5. Semantic-Aware Mixed Image Generation and Label Assignment

4. Experiments

4.1. Datasets

4.2. Experimental Setup

4.3. Comparison with State-of-the-Art Methods

4.4. Discussion

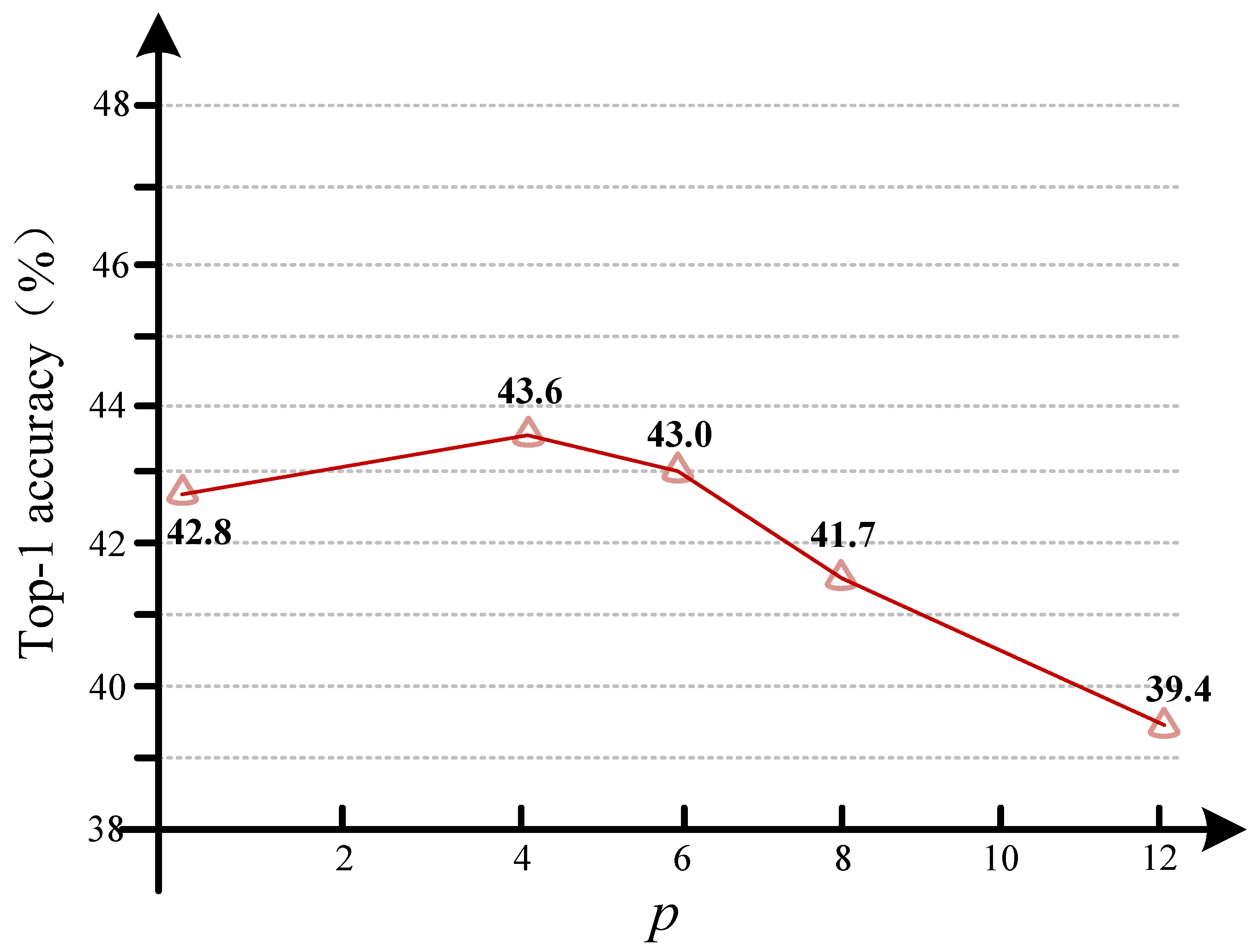

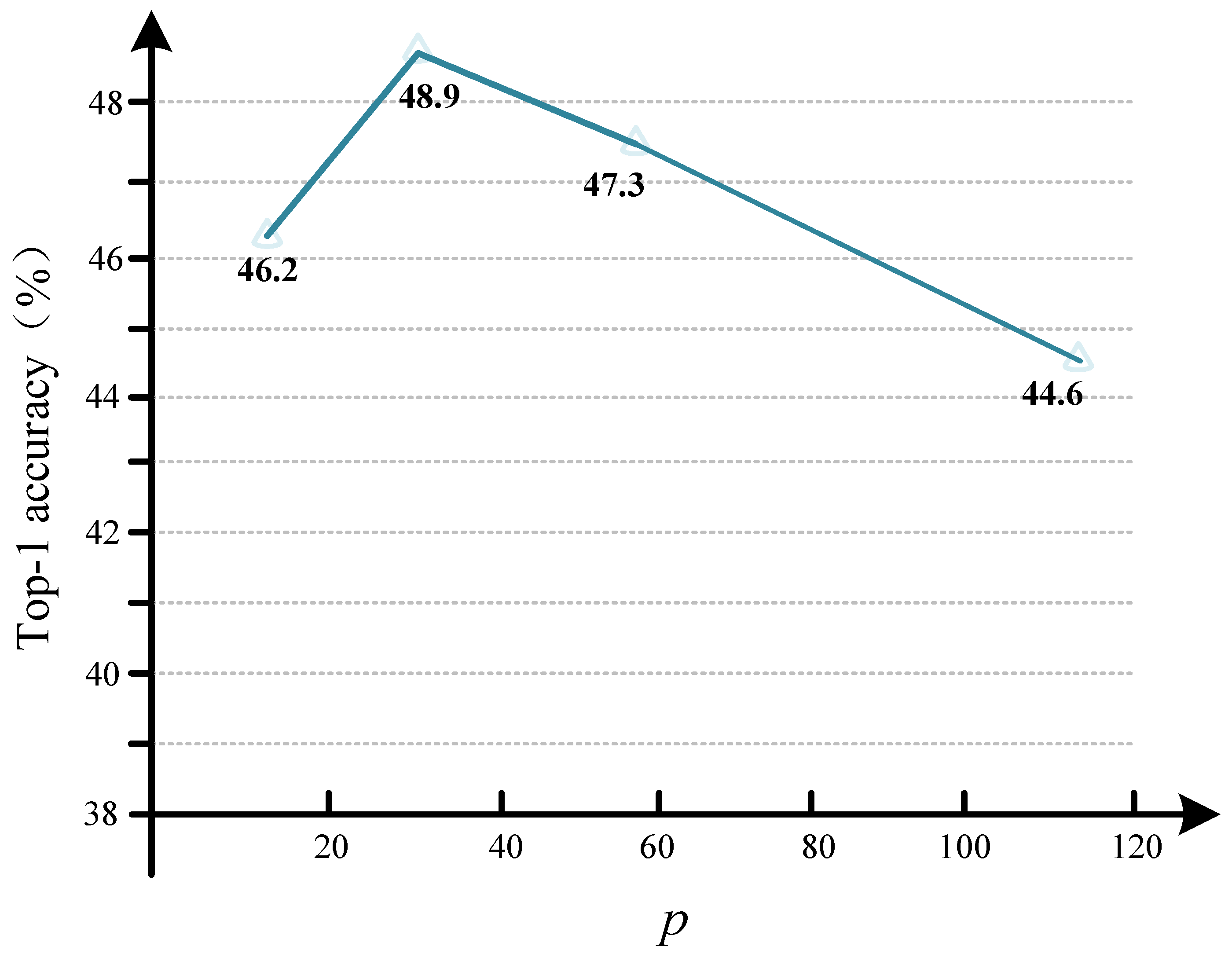

4.5. Hyperparameter Sensitivity Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.

- Vailaya, A.; Jain, A.; Zhang, H.J. On image classification: City images vs. landscapes. Pattern Recognit. 1998, 31, 1921–1935.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; Volume 13, pp. 740–755.

- Liu, Z.; Miao, Z.; Zhan, X.; Wang, J.; Gong, B.; Yu, S.X. Large-scale long-tailed recognition in an open world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2537–2546.

- Cao, K.; Wei, C.; Gaidon, A.; Arechiga, N.; Ma, T. Learning imbalanced datasets with label-distribution-aware margin loss. Adv. Neural Inf. Process. Syst. 2019, 32, 1567–1578.

- Li, T.; Cao, P.; Yuan, Y.; Fan, L.; Yang, Y.; Feris, R.S.; Indyk, P.; Katabi, D. Targeted supervised contrastive learning for long-tailed recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6918–6928.

- Tan, J.; Wang, C.; Li, B.; Li, Q.; Ouyang, W.; Yin, C.; Yan, J. Equalization loss for long-tailed object recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11662–11671.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Rezvani, S.; Wang, X. A broad review on class imbalance learning techniques. Appl. Soft Comput. 2023, 143, 110415.

- Miao, W.; Pang, G.; Bai, X.; Li, T.; Zheng, J. Out-of-distribution detection in long-tailed recognition with calibrated outlier class learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 4216–4224.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Zhou, B.; Cui, Q.; Wei, X.S.; Chen, Z.M. BBN: Bilateral-branch network with cumulative learning for long-tailed visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9719–9728.

- Kang, B.; Xie, S.; Rohrbach, M.; Yan, Z.; Gordo, A.; Feng, J.; Kalantidis, Y. Decoupling representation and classifier for long-tailed recognition. arXiv 2019, arXiv:1910.09217.

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.

- Ren, J.; Yu, C.; Ma, X.; Zhao, H.; Yi, S. Balanced meta-softmax for long-tailed visual recognition. Adv. Neural Inf. Process. Syst. 2020, 33, 4175–4186.

- Vu, D.Q.; Thu, M.T.H. Smooth Balance Softmax for Long-Tailed Image Classification. In Proceedings of the International Conference on Advances in Information and Communication Technology, Phu Tho, Vietnam, 16–17 November 2024; Springer Nature: Cham, Switzerland, 2024; pp. 323–331.

- Zang, Y.; Huang, C.; Loy, C.C. FASA: Feature augmentation and sampling adaptation for long-tailed instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3457–3466.

- Wang, T.; Li, Y.; Kang, B.; Li, J.; Liew, J.; Tang, S.; Hoi, S.; Feng, J. The devil is in classification: A simple framework for long-tail instance segmentation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 728–744.

- Van Horn, G.; Mac Aodha, O.; Song, Y.; Cui, Y.; Sun, C.; Shepard, A.; Adam, H.; Perona, P.; Belongie, S. The iNaturalist species classification and detection dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8769–8778.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6023–6032.

- Cao, C.; Zhou, F.; Dai, Y.; Wang, J.; Zhang, K. A survey of mix-based data augmentation: Taxonomy, methods, applications, and explainability. ACM Comput. Surv. 2024, 57, 1–38.

- Qin, H.; Jin, X.; Zhu, H.; Liao, H.; El-Yacoubi, M.A.; Gao, X. SUMix: Mixup with semantic and uncertain information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 70–88.

- Zhang, Y.; Kang, B.; Hooi, B.; Yan, S.; Feng, J. Deep long-tailed learning: A survey. arXiv 2021, arXiv:2110.04596.

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259.

- Guo, H.; Wang, S. Long-tailed multi-label visual recognition by collaborative training on uniform and re-balanced samplings. In Proceedings of the IEEE/CVF Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15089–15098.

- Drummond, C.; Holte, R.C. C4.5, class imbalance, and cost sensitivity: Why under-sampling beats over-sampling. In Imbalanced Datasets II; ICML: Washington, DC, USA, 2003; Volume 11, pp. 1–8.

- Byrd, J.; Lipton, Z. What is the effect of importance weighting in deep learning? In International Conference on Machine Learning; PMLR: New York, NY, USA, 2019; pp. 872–881.

- Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9268–9277.

- Menon, A.K.; Jayasumana, S.; Rawat, A.S.; Jain, H.; Veit, A.; Kumar, S. Long-tail learning via logit adjustment. arXiv 2020, arXiv:2007.07314.

- Alexandridis, K.P.; Luo, S.; Nguyen, A.; Deng, J.; Zafeiriou, S. Inverse image frequency for long-tailed image recognition. IEEE Trans. Image Process. 2023, 32, 5721–5736.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Shu, J.; Xie, Q.; Yi, L.; Zhao, Q.; Zhou, S.; Xu, Z.; Meng, D. Meta-weight-net: Learning an explicit mapping for sample weighting. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 1919–1930.

- Zhu, J.; Wang, Z.; Chen, J.; Chen, Y.P.; Jiang, Y.G. Balanced contrastive learning for long-tailed visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6908–6917.

- Iqbal, F.; Abbasi, A.; Javed, A.R.; Almadhor, A.; Jalil, Z.; Anwar, S.; Rida, I. Data augmentation-based novel deep learning method for deepfaked images detection. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 1–15.

- Wang, Z.; Wang, P.; Liu, K.; Wang, P.; Fu, Y.; Lu, C.T.; Aggarwal, C.C.; Pei, J.; Zhou, Y. A comprehensive survey on data augmentation. arXiv 2024, arXiv:2405.09591.

- DeVries, T.; Taylor, G.W. Improved regularization of convolutional neural networks with cutout. arXiv 2017, arXiv:1708.04552.

- Salehin, I.; Kang, D.K. A review on dropout regularization approaches for deep neural networks within the scholarly domain. Electronics 2023, 12, 3106.

- Li, L.; Li, A. A2-aug: Adaptive automated data augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2267–2274.

- Park, S.; Hong, Y.; Heo, B.; Yun, S.; Choi, J.Y. The majority can help the minority: Context-rich minority oversampling for long-tailed classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6887–6896.

- Zhong, Z.; Cui, J.; Liu, S.; Jia, J. Improving calibration for long-tailed recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16489–16498.

- Chou, H.P.; Chang, S.C.; Pan, J.Y.; Wei, W.; Juan, D.C. Remix: Rebalanced mixup. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 95–110.

- Li, J.; Yang, Z.; Hu, L.; Liu, J.; Tao, D. CRmix: A regularization by clipping images and replacing mixed samples for imbalanced classification. Digit. Signal Process. 2023, 135, 103951.

- Doane, D.P.; Seward, L.E. Measuring skewness: A forgotten statistic? J. Stat. Educ. 2011, 19, n2.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Hong, Y.; Han, S.; Choi, K.; Seo, S.; Kim, B.; Chang, B. Disentangling label distribution for long-tailed visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6626–6636.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised contrastive learning. Adv. Neural Inf. Process. Syst. 2020, 33, 18661–18673.

- Wang, P.; Han, K.; Wei, X.S.; Zhang, L.; Wang, L. Contrastive learning based hybrid networks for long-tailed image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 943–952.

- Hou, C.; Zhang, J.; Wang, H.; Zhou, T. Subclass-balancing contrastive learning for long-tailed recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 5395–5407.

- Zhou, Z.; Li, L.; Zhao, P.; Heng, P.A.; Gong, W. Class-conditional sharpness-aware minimization for deep long-tailed recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3499–3509.

- Mahajan, D.; Girshick, R.; Ramanathan, V.; He, K.; Paluri, M.; Li, Y.; Bharambe, A.; Van Der Maaten, L. Exploring the limits of weakly supervised pretraining. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 181–196.

- Wang, X.; Lian, L.; Miao, Z.; Liu, Z.; Yu, S.X. Long-tailed recognition by routing diverse distribution-aware experts. arXiv 2020, arXiv:2010.01809.

- Sharma, S.; Xian, Y.; Yu, N.; Singh, A. Learning prototype classifiers for long-tailed recognition. arXiv 2023, arXiv:2302.00491.

| Datasets | Number of Classes | Training/Test Samples | Imbalance Factor |
|---|---|---|---|
| CIFAR-100-LT | 100 | 10.8 K/10 K | {100, 50, 10} |
| ImageNet-LT | 1000 | 115.8 K/50 K | 256 |
| iNaturalist 2018 | 8142 | 437.5 K/24.4 K | 500 |
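The training-set size in the CIFAR row of the table above can be sanity-checked with a short calculation. The sketch below assumes the exponentially decaying class-size profile commonly used to build long-tailed CIFAR splits, where the imbalance factor is the ratio between the largest and smallest class sizes. The function name `long_tailed_counts` and the starting point of 500 training images per class (the balanced CIFAR-100 figure) are illustrative assumptions, not details stated in this paper.

```python
def long_tailed_counts(num_classes=100, max_per_class=500, imbalance_factor=100):
    """Per-class training counts under an assumed exponential decay profile.

    Class 0 keeps max_per_class images; the last class keeps roughly
    max_per_class / imbalance_factor images.
    """
    ratio = 1.0 / imbalance_factor
    return [
        int(max_per_class * ratio ** (i / (num_classes - 1)))
        for i in range(num_classes)
    ]


for factor in (100, 50, 10):
    counts = long_tailed_counts(imbalance_factor=factor)
    print(f"IF={factor}: largest={counts[0]}, smallest={counts[-1]}, total={sum(counts)}")
```

Under these assumptions, an imbalance factor of 100 yields a total close to the 10.8 K training samples listed for CIFAR, and smaller imbalance factors yield larger training sets.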

| Datasets | CIFAR-100-LT | ImageNet-LT | iNaturalist 2018 |
|---|---|---|---|
| Backbone | ResNet-32 | ResNet-50 | ResNet-50 |
| Epochs | 200 | 100 | 200 |
| Batch size | 128 | 256 | 512 |
| Initial learning rate | 0.01 | 0.1 | 0.1 |
| SGD momentum | 0.9 | 0.9 | 0.9 |
| Block size p | 4 | 28 | 28 |
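To relate the block size p in the table above to the number of patches per image, the following sketch computes the patch grid for each dataset. The input resolutions are assumptions: 32×32 is the native CIFAR size, while 224×224 is the usual ResNet-50 crop and is not stated in the material here. The helper name `patch_grid` is hypothetical.

```python
def patch_grid(image_size, p):
    """Return (patches per side, total patches) for a square image split into p x p blocks."""
    if image_size % p != 0:
        raise ValueError("image_size must be divisible by the block size p")
    side = image_size // p
    return side, side * side


# (dataset, assumed input resolution, block size p from the table)
settings = [
    ("CIFAR-100-LT", 32, 4),
    ("ImageNet-LT", 224, 28),
    ("iNaturalist 2018", 224, 28),
]
for name, size, p in settings:
    side, total = patch_grid(size, p)
    print(f"{name}: {side} x {side} grid, N = {total} patches")
```

Under these assumed resolutions, both block sizes divide the image into the same 8 × 8 grid, which would make the mixing granularity comparable across datasets.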

| Imbalance Factor | 100 | 50 | 10 |
|---|---|---|---|
| Cross Entropy (CE) | 38.6 | 44.0 | 56.4 |
| CE-DRW | 41.1 | 45.6 | 57.9 |
| LDAM-DRW [7] | 41.7 | 47.9 | 57.3 |
| BBN [14] | 42.6 | 47.1 | 59.2 |
| CMO [42] | 43.9 | 48.3 | 59.5 |
| BalancedSoftmax [17] | 45.1 | 49.9 | 61.6 |
| LADE [50] | 45.4 | 50.5 | 61.7 |
| SupCon [51] | 45.8 | 52.0 | 64.4 |
| Hybrid-SC [52] | 46.7 | 51.8 | 63.0 |
| Remix [44] | 45.8 | 49.5 | 59.2 |
| SBCL [53] | 44.9 | 48.7 | 57.9 |
| CC-SAM [54] | 49.2 | 51.9 | 62.0 |
| CE + LSM | 43.6 | 47.9 | 58.9 |
| CE-DRW + LSM | 46.8 | 51.6 | 61.0 |
| LDAM-DRW + LSM | 46.9 | 52.2 | 58.6 |

| Methods | All | Many | Medium | Few |
|---|---|---|---|---|
| CE | 41.6 | 64.0 | 33.8 | 5.8 |
| Focal Loss [34] | 43.7 | 64.3 | 37.1 | 8.2 |
| Decouple-cRT [15] | 47.3 | 58.8 | 44.0 | 26.1 |
| Decouple-LWS [15] | 47.7 | 57.1 | 45.2 | 29.3 |
| LWS [55] | 49.9 | 60.2 | 47.2 | 30.3 |
| Remix [44] | 48.6 | 60.4 | 46.9 | 30.7 |
| CMO [42] | 49.1 | 67.0 | 42.3 | 20.5 |
| LDAM-DRW [7] | 49.8 | 60.4 | 46.9 | 30.7 |
| CE-DRW [7] | 50.1 | 61.7 | 47.3 | 28.8 |
| BalancedSoftmax [17] | 51.0 | 60.9 | 48.8 | 32.1 |
| LADE [50] | 51.9 | 62.3 | 49.3 | 31.2 |
| CE + LSM | 48.9 | 58.6 | 45.8 | 35.8 |
| CE-DRW + LSM | 50.9 | 60.2 | 47.3 | 36.4 |
| LDAM-DRW + LSM | 50.7 | 60.0 | 47.4 | 34.3 |

| Methods | All | Many | Medium | Few |
|---|---|---|---|---|
| CE | 61.0 | 73.9 | 63.5 | 55.5 |
| IB Loss | 65.4 | - | - | - |
| LDAM-DRW [7] | 66.1 | - | - | - |
| Decouple-cRT [15] | 68.2 | 73.2 | 68.8 | 66.1 |
| Decouple-LWS [15] | 69.5 | 71.0 | 69.8 | 68.8 |
| BBN [14] | 69.6 | - | - | - |
| BalancedSoftmax [17] | 70.0 | 70.0 | 70.2 | 69.9 |
| LADE [50] | 70.0 | - | - | - |
| Remix [44] | 70.5 | - | - | - |
| MiSLAS [43] | 71.6 | 73.2 | 72.4 | 72.7 |
| RIDE (3E) [56] | 72.2 | 70.2 | 72.2 | 72.7 |
| TSC [8] | 69.7 | 72.6 | 70.6 | 67.8 |
| PC [57] | 70.6 | 71.6 | 70.6 | 70.2 |
| CE + LSM | 67.1 | 76.5 | 69.0 | 66.9 |
| CE-DRW + LSM | 70.5 | 67.8 | 70.0 | 72.5 |
| LDAM-DRW + LSM | 68.9 | 75.3 | 69.1 | 67.2 |
| RIDE (3E) + LSM | 72.6 | 69.0 | 72.2 | 73.0 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lv, J.; Lei, J.; Zhang, J.; Chen, C.; Li, S. Saliency-Guided Local Semantic Mixing for Long-Tailed Image Classification. Mach. Learn. Knowl. Extr. 2025, 7, 107. https://doi.org/10.3390/make7030107
