A Review of Large Language Models for Automated Test Case Generation

Abstract

1. Introduction

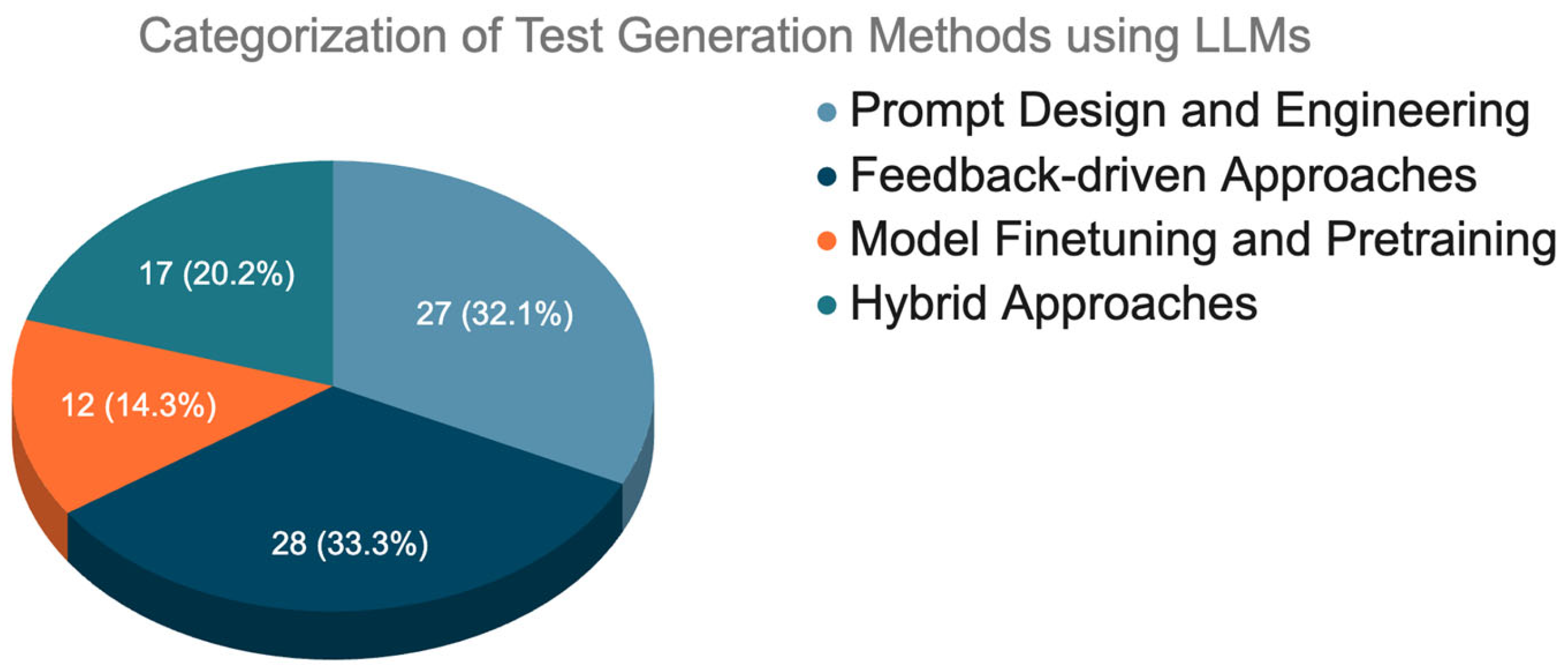

- Analysis of proposed methods: Methods for using LLMs in test case generation are categorized into prompt design and engineering, feedback-driven approaches, model fine-tuning and pre-training, and hybrid approaches, with the strengths and limitations of each category discussed.

- Evaluation of effectiveness: The effectiveness of LLMs is assessed relative to current testing tools, highlighting where LLMs excel and where they fall short.

- Future research directions: Key areas for improvement are identified, including expanding the applicability of LLMs to multiple programming languages, integrating domain-specific knowledge, and leveraging hybrid techniques to address current challenges.

2. Background

2.1. Large Language Models (LLMs)

2.2. Software Testing

2.3. Applications of LLMs in Test Case Generation

3. Methodology

3.1. Research Questions

- RQ-1: What methods have been proposed for using LLMs in automated test case generation?

- RQ-2: How effective are LLMs in improving the quality and efficiency of test case generation compared to traditional methods?

- RQ-3: What future directions have been identified for using LLMs in automated test case generation?

3.2. Information Sources

3.3. Eligibility Criteria

3.3.1. Inclusion Criteria

- Publication type: Accepted publications included conference papers, journal articles, and preprint papers.

- Language: Only studies written in English were considered.

- Relevance: Studies had to focus explicitly on the application of LLMs in test case generation.

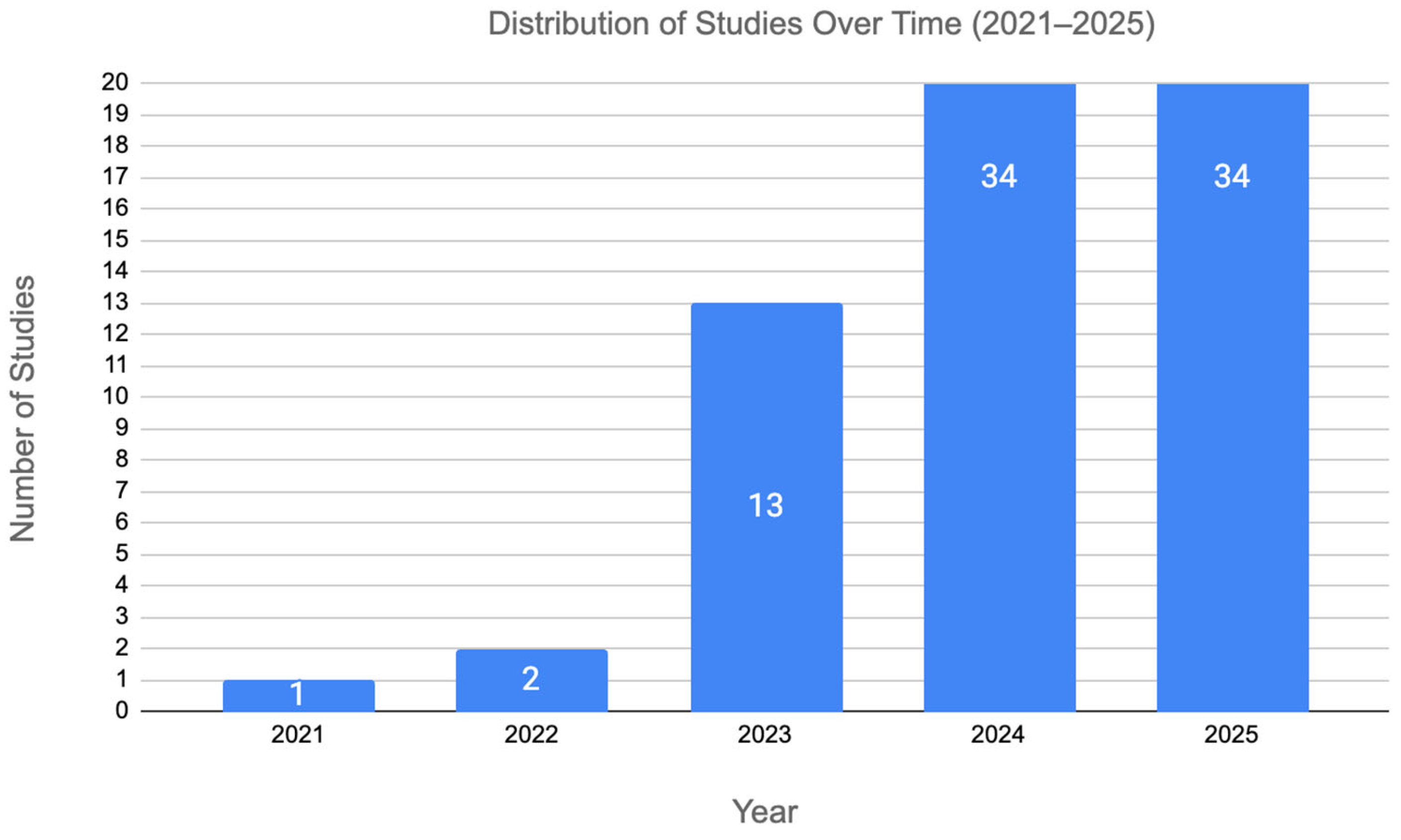

- Time frame: The review was restricted to studies published between 2022 and 2025. One study from 2021 [28] was also included because of its particular relevance to the topic.

3.3.2. Exclusion Criteria

- Irrelevance: Studies that did not focus primarily on test case generation using LLMs were excluded.
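
The eligibility criteria above can be read as a simple screening predicate. The following is a minimal sketch under stated assumptions: the `Study` record, its field names, and the `exceptions` parameter are hypothetical and only illustrate how the stated criteria combine; they do not describe the tooling actually used in the review.

```python
from dataclasses import dataclass


@dataclass
class Study:
    # Hypothetical record for one candidate study; field names are assumptions.
    title: str
    year: int
    language: str
    pub_type: str  # e.g. "conference", "journal", "preprint"
    focuses_on_llm_test_generation: bool


# Publication types accepted by the inclusion criteria.
ACCEPTED_TYPES = {"conference", "journal", "preprint"}


def is_eligible(study: Study, exceptions: frozenset = frozenset()) -> bool:
    """Return True if the study passes the inclusion and exclusion criteria."""
    if study.pub_type not in ACCEPTED_TYPES:
        return False
    if study.language != "English":
        return False
    # Relevance (inclusion) and irrelevance (exclusion) are the same check.
    if not study.focuses_on_llm_test_generation:
        return False
    in_window = 2022 <= study.year <= 2025
    # Titles listed in `exceptions` bypass the time frame only.
    return in_window or study.title in exceptions


# Illustrative, made-up candidates (not studies from the review).
recent = Study("Example A", 2024, "English", "preprint", True)
early = Study("Example B", 2021, "English", "journal", True)
off_topic = Study("Example C", 2023, "English", "journal", False)
```

Passing `exceptions=frozenset({"Example B"})` mirrors the single 2021 study that was admitted despite falling outside the time frame.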

3.4. Data Collection Procedure

3.5. Results

- Prompt Engineering: Studies in this category explore techniques for constructing and refining LLM prompts to improve test generation outcomes.

- Feedback-driven Approaches: This category includes research that uses iterative interaction and feedback mechanisms to refine the relevance and quality of the generated test cases.

- Model Fine-tuning and Pre-training: Studies in this group investigate fine-tuning and pre-training approaches aimed at optimizing LLM performance for test generation tasks.

- Hybrid Approaches: Research in this category examines how LLM-based test generation can be combined with traditional testing tools and methodologies.

4. Findings

4.1. RQ-1: What Methods Have Been Proposed for Using LLMs in Automated Test Case Generation?

4.1.1. Prompt Design and Engineering

4.1.2. Feedback-Driven Approaches

4.1.3. Model Fine-Tuning and Pre-Training

4.1.4. Hybrid Approaches

4.2. RQ-2: How Effective Are LLMs in Improving the Quality and Efficiency of Test Case Generation Compared to Traditional Methods?

4.2.1. Improvement over Existing Tools

4.2.2. No Clear Improvement over Existing Tools

4.2.3. Mixed/Context-Dependent Outcomes

4.3. RQ-3: What Future Directions Have Been Identified for Using LLMs in Automated Test Case Generation?

4.3.1. Hybrid Approaches and Integration with Existing Tools

4.3.2. Prompt Engineering

4.3.3. Project-Specific Knowledge

4.3.4. Scalability and Performance

4.3.5. Expanding Applicability: Languages, Models, Metrics and Benchmarks

5. Discussion

5.1. Synergistic Potential of Combined Approaches

5.2. Fragmentation in Evaluation Practices

5.3. The Role and Risks of Contextual Information

5.4. Real-World Applications and Industrial Evaluation

5.5. Gaps in Tool Ecosystem and Language Coverage

5.6. Beyond Functional Correctness: Addressing Non-Functional Requirements

6. Threats to Validity

6.1. Threats to Internal Validity

6.2. Threats to External Validity

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Putra, S.J.; Sugiarti, Y.; Prayoga, B.Y.; Samudera, D.W.; Khairani, D. Analysis of Strengths and Weaknesses of Software Testing Strategies: Systematic Literature Review. In Proceedings of the 2023 11th International Conference on Cyber and IT Service Management (CITSM), Makassar, Indonesia, 10–11 November 2023; pp. 1–5.

2. Gurcan, F.; Dalveren, G.G.M.; Cagiltay, N.E.; Roman, D.; Soylu, A. Evolution of Software Testing Strategies and Trends: Semantic Content Analysis of Software Research Corpus of the Last 40 Years. IEEE Access 2022, 10, 106093–106109.

3. Pudlitz, F.; Brokhausen, F.; Vogelsang, A. What Am I Testing and Where? Comparing Testing Procedures Based on Lightweight Requirements Annotations. Empir. Softw. Eng. 2020, 25, 2809–2843.

4. Kassab, M.; Laplante, P.; Defranco, J.; Neto, V.V.G.; Destefanis, G. Exploring the Profiles of Software Testing Jobs in the United States. IEEE Access 2021, 9, 68905–68916.

5. De Silva, D.; Hewawasam, L. The Impact of Software Testing on Serverless Applications. IEEE Access 2024, 12, 51086–51099.

6. Alshahwan, N.; Harman, M.; Marginean, A. Software Testing Research Challenges: An Industrial Perspective. In Proceedings of the 2023 IEEE Conference on Software Testing, Verification and Validation (ICST), Dublin, Ireland, 16–20 April 2023; pp. 1–10.

7. Aniche, M.; Treude, C.; Zaidman, A. How Developers Engineer Test Cases: An Observational Study. IEEE Trans. Softw. Eng. 2021, 48, 4925–4946.

8. Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2024, arXiv:2303.18223.

9. Wang, J.; Huang, Y.; Chen, C.; Liu, Z.; Wang, S.; Wang, Q. Software Testing With Large Language Models: Survey, Landscape, and Vision. IEEE Trans. Softw. Eng. 2024, 50, 911–936.

10. Chen, L.; Guo, Q.; Jia, H.; Zeng, Z.; Wang, X.; Xu, Y.; Wu, J.; Wang, Y.; Gao, Q.; Wang, J.; et al. A Survey on Evaluating Large Language Models in Code Generation Tasks. arXiv 2024, arXiv:2408.16498.

11. Raiaan, M.A.K.; Mukta, M.d.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J.; Ahmad, J.; Ali, M.E.; Azam, S. A Review on Large Language Models: Architectures, Applications, Taxonomies, Open Issues and Challenges. IEEE Access 2024, 12, 26839–26874.

12. Fan, A.; Gokkaya, B.; Harman, M.; Lyubarskiy, M.; Sengupta, S.; Yoo, S.; Zhang, J.M. Large Language Models for Software Engineering: Survey and Open Problems. In Proceedings of the 2023 IEEE/ACM International Conference on Software Engineering: Future of Software Engineering (ICSE-FoSE), Melbourne, Australia, 14–20 May 2023; pp. 31–53.

13. ISO/IEC/IEEE 24765:2017(E); ISO/IEC/IEEE International Standard - Systems and Software Engineering - Vocabulary. IEEE: New York, NY, USA, 2017; pp. 1–541.

14. Mayeda, M.; Andrews, A. Evaluating Software Testing Techniques: A Systematic Mapping Study. In Advances in Computers; Missouri University of Science and Technology: Rolla, MO, USA, 2021; ISBN 978-0-12-824121-9.

15. Lonetti, F.; Marchetti, E. Emerging Software Testing Technologies. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2018; Volume 108, pp. 91–143. ISBN 978-0-12-815119-8.

16. Clark, A.G.; Walkinshaw, N.; Hierons, R.M. Test Case Generation for Agent-Based Models: A Systematic Literature Review. Inf. Softw. Technol. 2021, 135, 106567.

17. Hou, X.; Zhao, Y.; Liu, Y.; Yang, Z.; Wang, K.; Li, L.; Luo, X.; Lo, D.; Grundy, J.; Wang, H. Large Language Models for Software Engineering: A Systematic Literature Review. arXiv 2024, arXiv:2308.10620.

18. Tang, Y.; Liu, Z.; Zhou, Z.; Luo, X. ChatGPT vs. SBST: A Comparative Assessment of Unit Test Suite Generation. IEEE Trans. Softw. Eng. 2024, 50, 1340–1359.

19. Chen, Y.; Hu, Z.; Zhi, C.; Han, J.; Deng, S.; Yin, J. ChatUniTest: A Framework for LLM-Based Test Generation. arXiv 2024, arXiv:2305.04764.

20. Rao, N.; Jain, K.; Alon, U.; Le Goues, C.; Hellendoorn, V.J. CAT-LM: Training Language Models on Aligned Code And Tests. In Proceedings of the 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Luxembourg, 11–15 September 2023; pp. 409–420.

21. Lemieux, C.; Inala, J.P.; Lahiri, S.K.; Sen, S. CodaMosa: Escaping Coverage Plateaus in Test Generation with Pre-trained Large Language Models. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, Australia, 14–20 May 2023; pp. 919–931.

22. Zhang, Q.; Fang, C.; Gu, S.; Shang, Y.; Chen, Z.; Xiao, L. Large Language Models for Unit Testing: A Systematic Literature Review. arXiv 2025, arXiv:2506.15227.

23. Yi, G.; Chen, Z.; Chen, Z.; Wong, W.E.; Chau, N. Exploring the Capability of ChatGPT in Test Generation. In Proceedings of the 2023 IEEE 23rd International Conference on Software Quality, Reliability, and Security Companion (QRS-C), Chiang Mai, Thailand, 22–26 October 2023; pp. 72–80.

24. Elvira, T.; Procko, T.T.; Couder, J.O.; Ochoa, O. Digital Rubber Duck: Leveraging Large Language Models for Extreme Programming. In Proceedings of the 2023 Congress in Computer Science, Computer Engineering, & Applied Computing (CSCE), Las Vegas, NV, USA, 24–27 July 2023; pp. 295–304.

25. Chen, B.; Zhang, F.; Nguyen, A.; Zan, D.; Lin, Z.; Lou, J.-G.; Chen, W. CodeT: Code Generation with Generated Tests. arXiv 2022, arXiv:2207.10397.

26. Yuan, Z.; Lou, Y.; Liu, M.; Ding, S.; Wang, K.; Chen, Y.; Peng, X. No More Manual Tests? Evaluating and Improving ChatGPT for Unit Test Generation. arXiv 2024, arXiv:2305.04207.

27. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; EBSE: Keele, UK, 2007.

28. Tufano, M.; Drain, D.; Svyatkovskiy, A.; Deng, S.K.; Sundaresan, N. Unit Test Case Generation with Transformers and Focal Context. arXiv 2021, arXiv:2009.05617.

29. Li, V.; Doiron, N. Prompting Code Interpreter to Write Better Unit Tests on Quixbugs Functions. arXiv 2023, arXiv:2310.00483.

30. Zhang, Y.; Song, W.; Ji, Z.; Yao, D.; Meng, N. How well does LLM generate security tests? arXiv 2023, arXiv:2310.00710.

31. Guilherme, V.; Vincenzi, A. An Initial Investigation of ChatGPT Unit Test Generation Capability. In Proceedings of the 8th Brazilian Symposium on Systematic and Automated Software Testing, Campo Grande, MS, Brazil, 25–29 September 2023; pp. 15–24.

32. Siddiq, M.L.; Da Silva Santos, J.C.; Tanvir, R.H.; Ulfat, N.; Al Rifat, F.; Lopes, V.C. Using Large Language Models to Generate JUnit Tests: An Empirical Study. In Proceedings of the 28th International Conference on Evaluation and Assessment in Software Engineering, Salerno, Italy, 18–21 June 2024; pp. 313–322.

33. Yang, L.; Yang, C.; Gao, S.; Wang, W.; Wang, B.; Zhu, Q.; Chu, X.; Zhou, J.; Liang, G.; Wang, Q.; et al. On the Evaluation of Large Language Models in Unit Test Generation. In Proceedings of the 39th IEEE/ACM International Conference on Automated Software Engineering, Sacramento, CA, USA, 27 October–1 November 2024; pp. 1607–1619.

34. Chang, H.-F.; Shirazi, M.S. A Systematic Approach for Assessing Large Language Models’ Test Case Generation Capability. arXiv 2025, arXiv:2502.02866.

35. Xu, J.; Pang, B.; Qu, J.; Hayashi, H.; Xiong, C.; Zhou, Y. CLOVER: A Test Case Generation Benchmark with Coverage, Long-Context, and Verification. arXiv 2025, arXiv:2502.08806.

36. Wang, Y.; Xia, C.; Zhao, W.; Du, J.; Miao, C.; Deng, Z.; Yu, P.S.; Xing, C. ProjectTest: A Project-level LLM Unit Test Generation Benchmark and Impact of Error Fixing Mechanisms. arXiv 2025, arXiv:2502.06556.

37. Bayrı, V.; Demirel, E. AI-Powered Software Testing: The Impact of Large Language Models on Testing Methodologies. In Proceedings of the 2023 4th International Informatics and Software Engineering Conference (IISEC), Ankara, Türkiye, 21–22 December 2023; pp. 1–4.

38. Plein, L.; Ouédraogo, W.C.; Klein, J.; Bissyandé, T.F. Automatic Generation of Test Cases based on Bug Reports: A Feasibility Study with Large Language Models. In Proceedings of the 2024 IEEE/ACM 46th International Conference on Software Engineering: Companion Proceedings, Lisbon, Portugal, 14–20 April 2024; pp. 360–361.

39. Koziolek, H.; Ashiwal, V.; Bandyopadhyay, S.; Chandrika, K.R. Automated Control Logic Test Case Generation using Large Language Models. arXiv 2024, arXiv:2405.01874.

40. Yin, H.; Mohammed, H.; Boyapati, S. Leveraging Pre-Trained Large Language Models (LLMs) for On-Premises Comprehensive Automated Test Case Generation: An Empirical Study. In Proceedings of the 2024 9th International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 21–23 November 2024; pp. 597–607.

41. Rao, N.; Gilbert, E.; Green, H.; Ramananandro, T.; Swamy, N.; Le Goues, C.; Fakhoury, S. DiffSpec: Differential Testing with LLMs using Natural Language Specifications and Code Artifacts. arXiv 2025, arXiv:2410.04249.

42. Zhang, Q.; Shang, Y.; Fang, C.; Gu, S.; Zhou, J.; Chen, Z. TestBench: Evaluating Class-Level Test Case Generation Capability of Large Language Models. arXiv 2024, arXiv:2409.17561.

43. Jiri, M.; Emese, B.; Medlen, P. Leveraging Large Language Models for Python Unit Test. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence Testing (AITest), Shanghai, China, 15–18 July 2024; pp. 95–100.

44. Ryan, G.; Jain, S.; Shang, M.; Wang, S.; Ma, X.; Ramanathan, M.K.; Ray, B. Code-Aware Prompting: A study of Coverage Guided Test Generation in Regression Setting using LLM. arXiv 2024, arXiv:2402.00097.

45. Gao, S.; Wang, C.; Gao, C.; Jiao, X.; Chong, C.Y.; Gao, S.; Lyu, M. The Prompt Alchemist: Automated LLM-Tailored Prompt Optimization for Test Case Generation. arXiv 2025, arXiv:2501.01329.

46. Wang, W.; Yang, C.; Wang, Z.; Huang, Y.; Chu, Z.; Song, D.; Zhang, L.; Chen, A.R.; Ma, L. TESTEVAL: Benchmarking Large Language Models for Test Case Generation. arXiv 2025, arXiv:2406.04531.

47. Ouédraogo, W.C.; Kaboré, K.; Li, Y.; Tian, H.; Koyuncu, A.; Klein, J.; Lo, D.; Bissyandé, T.F. Large-scale, Independent and Comprehensive study of the power of LLMs for test case generation. arXiv 2024, arXiv:2407.00225.

48. Khelladi, D.E.; Reux, C.; Acher, M. Unify and Triumph: Polyglot, Diverse, and Self-Consistent Generation of Unit Tests with LLMs. arXiv 2025, arXiv:2503.16144.

49. Sharma, R.K.; Halleux, J.D.; Barke, S.; Zorn, B. PromptPex: Automatic Test Generation for Language Model Prompts. arXiv 2025, arXiv:2503.05070.

50. Li, Y.; Liu, P.; Wang, H.; Chu, J.; Wong, W.E. Evaluating large language models for software testing. Comput. Stand. Interfaces 2025, 93, 103942.

51. Godage, T.; Nimishan, S.; Vasanthapriyan, S.; Palanisamy, V.; Joseph, C.; Thuseethan, S. Evaluating the Effectiveness of Large Language Models in Automated Unit Test Generation. In Proceedings of the 2025 5th International Conference on Advanced Research in Computing (ICARC), Belihuloya, Sri Lanka, 19–20 February 2025; pp. 1–6.

52. Roy Chowdhury, S.; Sridhara, G.; Raghavan, A.K.; Bose, J.; Mazumdar, S.; Singh, H.; Sugumaran, S.B.; Britto, R. Static Program Analysis Guided LLM Based Unit Test Generation. In Proceedings of the 8th International Conference on Data Science and Management of Data (12th ACM IKDD CODS and 30th COMAD), Jodhpur, India, 18–21 December 2024; pp. 279–283.

53. Kang, S.; Yoon, J.; Yoo, S. Large Language Models are Few-shot Testers: Exploring LLM-based General Bug Reproduction. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, Australia, 14–20 May 2023; pp. 2312–2323.

54. Lahiri, S.K.; Fakhoury, S.; Naik, A.; Sakkas, G.; Chakraborty, S.; Musuvathi, M.; Choudhury, P.; von Veh, C.; Inala, J.P.; Wang, C.; et al. Interactive Code Generation via Test-Driven User-Intent Formalization. arXiv 2023, arXiv:2208.05950.

55. Yu, S.; Fang, C.; Ling, Y.; Wu, C.; Chen, Z. LLM for Test Script Generation and Migration: Challenges, Capabilities, and Opportunities. In Proceedings of the 2023 IEEE 23rd International Conference on Software Quality, Reliability, and Security (QRS), Chiang Mai, Thailand, 22–26 October 2023; pp. 206–217.

56. Nashid, N.; Bouzenia, I.; Pradel, M.; Mesbah, A. Issue2Test: Generating Reproducing Test Cases from Issue Reports. arXiv 2025, arXiv:2503.16320.

57. Chen, M.; Liu, Z.; Tao, H.; Hong, Y.; Lo, D.; Xia, X.; Sun, J. B4: Towards Optimal Assessment of Plausible Code Solutions with Plausible Tests. In Proceedings of the 39th IEEE/ACM International Conference on Automated Software Engineering, Sacramento, CA, USA, 27 October–1 November 2024; pp. 1693–1705.

58. Ni, C.; Wang, X.; Chen, L.; Zhao, D.; Cai, Z.; Wang, S.; Yang, X. CasModaTest: A Cascaded and Model-agnostic Self-directed Framework for Unit Test Generation. arXiv 2024, arXiv:2406.15743.

59. Liu, R.; Zhang, Z.; Hu, Y.; Lin, Y.; Gao, X.; Sun, H. LLM-based Unit Test Generation for Dynamically-Typed Programs. arXiv 2025, arXiv:2503.14000.

60. Bhatia, S.; Gandhi, T.; Kumar, D.; Jalote, P. Unit Test Generation using Generative AI: A Comparative Performance Analysis of Autogeneration Tools. In Proceedings of the 1st International Workshop on Large Language Models for Code, Lisbon, Portugal, 20 April 2024; pp. 54–61.

61. Schäfer, M.; Nadi, S.; Eghbali, A.; Tip, F. An Empirical Evaluation of Using Large Language Models for Automated Unit Test Generation. IEEE Trans. Softw. Eng. 2024, 50, 85–105.

62. Kumar, N.A.; Lan, A. Using Large Language Models for Student-Code Guided Test Case Generation in Computer Science Education. arXiv 2024, arXiv:2402.07081.

63. Wang, Z.; Liu, K.; Li, G.; Jin, Z. HITS: High-coverage LLM-based Unit Test Generation via Method Slicing. In Proceedings of the 39th IEEE/ACM International Conference on Automated Software Engineering, Sacramento, CA, USA, 27 October–1 November 2024; pp. 1258–1268.

64. Etemadi, K.; Mohammadi, B.; Su, Z.; Monperrus, M. Mokav: Execution-driven Differential Testing with LLMs. arXiv 2024, arXiv:2406.10375.

65. Yang, C.; Chen, J.; Lin, B.; Zhou, J.; Wang, Z. Enhancing LLM-based Test Generation for Hard-to-Cover Branches via Program Analysis. arXiv 2024, arXiv:2404.04966.

66. Alshahwan, N.; Chheda, J.; Finegenova, A.; Gokkaya, B.; Harman, M.; Harper, I.; Marginean, A.; Sengupta, S.; Wang, E. Automated Unit Test Improvement using Large Language Models at Meta. arXiv 2024, arXiv:2402.09171.

67. Pizzorno, J.A.; Berger, E.D. CoverUp: Effective High Coverage Test Generation for Python. Proc. ACM Softw. Eng. 2025, 2, 2897–2919.

68. Jain, K.; Le Goues, C. TestForge: Feedback-Driven, Agentic Test Suite Generation. arXiv 2025, arXiv:2503.14713.

69. Gu, S.; Nashid, N.; Mesbah, A. LLM Test Generation via Iterative Hybrid Program Analysis. arXiv 2025, arXiv:2503.13580.

70. Straubinger, P.; Kreis, M.; Lukasczyk, S.; Fraser, G. Mutation Testing via Iterative Large Language Model-Driven Scientific Debugging. arXiv 2025, arXiv:2503.08182.

71. Zhang, Z.; Liu, X.; Lin, Y.; Gao, X.; Sun, H.; Yuan, Y. LLM-based Unit Test Generation via Property Retrieval. arXiv 2024, arXiv:2410.13542.

72. Zhong, Z.; Wang, S.; Wang, H.; Wen, S.; Guan, H.; Tao, Y.; Liu, Y. Advancing Bug Detection in Fastjson2 with Large Language Models Driven Unit Test Generation. arXiv 2024, arXiv:2410.09414.

73. Pan, R.; Kim, M.; Krishna, R.; Pavuluri, R.; Sinha, S. ASTER: Natural and Multi-language Unit Test Generation with LLMs. arXiv 2025, arXiv:2409.03093.

74. Gu, S.; Zhang, Q.; Li, K.; Fang, C.; Tian, F.; Zhu, L.; Zhou, J.; Chen, Z. TestART: Improving LLM-based Unit Testing via Co-evolution of Automated Generation and Repair Iteration. arXiv 2025, arXiv:2408.03095.

75. Li, K.; Yu, H.; Guo, T.; Cao, S.; Yuan, Y. CoCoEvo: Co-Evolution of Programs and Test Cases to Enhance Code Generation. arXiv 2025, arXiv:2502.10802.

76. Cheng, R.; Tufano, M.; Cito, J.; Cambronero, J.; Rondon, P.; Wei, R.; Sun, A.; Chandra, S. Agentic Bug Reproduction for Effective Automated Program Repair at Google. arXiv 2025, arXiv:2502.01821.

77. Liu, J.; Li, C.; Chen, R.; Li, S.; Gu, B.; Yang, M. STRUT: Structured Seed Case Guided Unit Test Generation for C Programs using LLMs. Proc. ACM Softw. Eng. 2025, 2, 2113–2135.

78. Alagarsamy, S.; Tantithamthavorn, C.; Aleti, A. A3Test: Assertion-Augmented Automated Test Case Generation. arXiv 2023, arXiv:2302.10352.

79. Shin, J.; Hashtroudi, S.; Hemmati, H.; Wang, S. Domain Adaptation for Code Model-Based Unit Test Case Generation. In Proceedings of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis, Vienna, Austria, 16–20 September 2024; pp. 1211–1222.

80. Rehan, S.; Al-Bander, B.; Ahmad, A.A.-S. Harnessing Large Language Models for Automated Software Testing: A Leap Towards Scalable Test Case Generation. Electronics 2025, 14, 1463.

81. He, Y.; Huang, J.; Rong, Y.; Guo, Y.; Wang, E.; Chen, H. UniTSyn: A Large-Scale Dataset Capable of Enhancing the Prowess of Large Language Models for Program Testing. In Proceedings of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis, Vienna, Austria, 16–20 September 2024; pp. 1061–1072.

82. Tufano, M.; Drain, D.; Svyatkovskiy, A.; Sundaresan, N. Generating accurate assert statements for unit test cases using pretrained transformers. In Proceedings of the 3rd ACM/IEEE International Conference on Automation of Software Test, Pittsburgh, PA, USA, 17–18 May 2022; pp. 54–64.

83. Zhang, Q.; Fang, C.; Zheng, Y.; Zhang, Y.; Zhao, Y.; Huang, R.; Zhou, J.; Yang, Y.; Zheng, T.; Chen, Z. Improving Deep Assertion Generation via Fine-Tuning Retrieval-Augmented Pre-trained Language Models. ACM Trans. Softw. Eng. Methodol. 2025, 34, 3721128.

84. Primbs, S.; Fein, B.; Fraser, G. AsserT5: Test Assertion Generation Using a Fine-Tuned Code Language Model. In Proceedings of the 2025 IEEE/ACM International Conference on Automation of Software Test (AST), Ottawa, ON, Canada, 28–29 April 2025.

85. Storhaug, A.; Li, J. Parameter-Efficient Fine-Tuning of Large Language Models for Unit Test Generation: An Empirical Study. arXiv 2024, arXiv:2411.02462.

86. Alagarsamy, S.; Tantithamthavorn, C.; Takerngsaksiri, W.; Arora, C.; Aleti, A. Enhancing Large Language Models for Text-to-Testcase Generation. arXiv 2025, arXiv:2402.11910.

87. Shang, Y.; Zhang, Q.; Fang, C.; Gu, S.; Zhou, J.; Chen, Z. A Large-Scale Empirical Study on Fine-Tuning Large Language Models for Unit Testing. Proc. ACM Softw. Eng. 2025, 2, 1678–1700.

88. Dakhel, A.M.; Nikanjam, A.; Majdinasab, V.; Khomh, F.; Desmarais, M.C. Effective test generation using pre-trained Large Language Models and mutation testing. Inf. Softw. Technol. 2024, 171, 107468.

89. Steenhoek, B.; Tufano, M.; Sundaresan, N.; Svyatkovskiy, A. Reinforcement Learning from Automatic Feedback for High-Quality Unit Test Generation. arXiv 2025, arXiv:2310.02368.

90. Li, T.-O.; Zong, W.; Wang, Y.; Tian, H.; Cheung, S.-C. Nuances are the Key: Unlocking ChatGPT to Find Failure-Inducing Tests with Differential Prompting. In Proceedings of the 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Luxembourg, 11–15 September 2023; pp. 14–26.

91. Liu, K.; Chen, Z.; Liu, Y.; Zhang, J.M.; Harman, M.; Han, Y.; Ma, Y.; Dong, Y.; Li, G.; Huang, G. LLM-Powered Test Case Generation for Detecting Tricky Bugs. arXiv 2024, arXiv:2404.10304.

92. Zhang, J.; Hu, X.; Xia, X.; Cheung, S.-C.; Li, S. Automated Unit Test Generation via Chain of Thought Prompt and Reinforcement Learning from Coverage Feedback. ACM Trans. Softw. Eng. Methodol. 2025.

93. Sapozhnikov, A.; Olsthoorn, M.; Panichella, A.; Kovalenko, V.; Derakhshanfar, P. TestSpark: IntelliJ IDEA’s Ultimate Test Generation Companion. In Proceedings of the 2024 IEEE/ACM 46th International Conference on Software Engineering: Companion Proceedings, Lisbon, Portugal, 14–20 April 2024; pp. 30–34.

94. Li, J.; Shen, J.; Su, Y.; Lyu, M.R. LLM-assisted Mutation for Whitebox API Testing. arXiv 2025, arXiv:2504.05738.

95. Li, K.; Yuan, Y. Large Language Models as Test Case Generators: Performance Evaluation and Enhancement. arXiv 2024, arXiv:2404.13340.

96. Huang, D.; Zhang, J.M.; Luck, M.; Bu, Q.; Qing, Y.; Cui, H. AgentCoder: Multi-Agent-based Code Generation with Iterative Testing and Optimisation. arXiv 2024, arXiv:2312.13010.

97. Mündler, N.; Müller, M.N.; He, J.; Vechev, M. SWT-Bench: Testing and Validating Real-World Bug-Fixes with Code Agents. arXiv 2025, arXiv:2406.12952.

98. Taherkhani, H.; Hemmati, H. VALTEST: Automated Validation of Language Model Generated Test Cases. arXiv 2024, arXiv:2411.08254.

99. Lops, A.; Narducci, F.; Ragone, A.; Trizio, M.; Bartolini, C. A System for Automated Unit Test Generation Using Large Language Models and Assessment of Generated Test Suites. arXiv 2024, arXiv:2408.07846.

100. Yang, R.; Xu, X.; Wang, R. LLM-enhanced evolutionary test generation for untyped languages. Autom. Softw. Eng. 2025, 32, 20.

101. Xu, J.; Xu, J.; Chen, T.; Ma, X. Symbolic Execution with Test Cases Generated by Large Language Models. In Proceedings of the 2024 IEEE 24th International Conference on Software Quality, Reliability and Security (QRS), Cambridge, UK, 1–5 July 2024; pp. 228–237.

102. Zhang, Y.; Lu, Q.; Liu, K.; Dou, W.; Zhu, J.; Qian, L.; Zhang, C.; Lin, Z.; Wei, J. CITYWALK: Enhancing LLM-Based C++ Unit Test Generation via Project-Dependency Awareness and Language-Specific Knowledge. arXiv 2025, arXiv:2501.16155.

103. Ouédraogo, W.C.; Plein, L.; Kaboré, K.; Habib, A.; Klein, J.; Lo, D.; Bissyandé, T.F. Enriching automatic test case generation by extracting relevant test inputs from bug reports. Empir. Softw. Eng. 2025, 30, 85.

104. Xu, W.; Pei, H.; Yang, J.; Shi, Y.; Zhang, Y.; Zhao, Q. Exploring Critical Testing Scenarios for Decision-Making Policies: An LLM Approach. arXiv 2024, arXiv:2412.06684.

105. Duvvuru, V.S.A.; Zhang, B.; Vierhauser, M.; Agrawal, A. LLM-Agents Driven Automated Simulation Testing and Analysis of small Uncrewed Aerial Systems. arXiv 2025, arXiv:2501.11864.

106. Petrovic, N.; Lebioda, K.; Zolfaghari, V.; Schamschurko, A.; Kirchner, S.; Purschke, N. LLM-Driven Testing for Autonomous Driving Scenarios. In Proceedings of the 2024 2nd International Conference on Foundation and Large Language Models (FLLM), Dubai, United Arab Emirates, 26–29 November 2024; pp. 173–178.

| Study | Year | Finding | Limitation |

|---|---|---|---|

| Tang, Y. [18] | 2023 | Language models such as GPT-3.5-Turbo, GPT-4, and their variants generate unit tests that are readable and syntactically valid. Some models achieve competitive mutation scores under prompt tuning. | Many tests generated by these models fail due to compilation errors, hallucinated symbols, and incorrect logic. Performance drops on arithmetic, loops, and long-context tasks. Token constraints further reduce effectiveness in large-scale or complex systems. |

| Yi, G. [23] | 2023 | ||

| Guilherme, V. [31] | 2023 | ||

| Siddiq, M. [32] | 2023 | ||

| Yang, L. [33] | 2024 | ||

| Chang, H. [34] | 2025 | ||

| Xu, J. [35] | 2025 | ||

| Wang, Y. [36] | 2025 | ||

| Elvira, T. [24] | 2023 | Providing examples, basic context, or integrating LLMs into workflows enables the generation of effective and human-readable tests. Some models support collaboration through logic explanations and bug reproduction. | The use of single-shot prompting, small datasets, or simplified workflows limits real-world applicability. Incorrect or incomplete assertions often require manual adjustments. Generalization to more complex systems remains limited. |

| Li, V. [29] | 2024 | ||

| Zhang, Y. [30] | 2023 | ||

| Bayri, V. [37] | 2023 | ||

| Plein, L. [38] | 2023 | ||

| Koziolek, H. [39] | 2024 | ||

| Yin, H. [40] | 2024 | Structured prompting techniques such as few-shot chaining and diversity-guided optimization, boost test quality and coverage. Enhanced performance is observed when prompt inputs are rich in context or aligned with domain-specific expectations. | Test generation quality varies depending on model size and context window. Studies often assume accurate focal methods or omit dynamic refinement steps. Manual evaluation introduces subjectivity and reduces scalability. |

| Rao, N. [41] | 2024 | ||

| Zhang, Q. [42] | 2024 | ||

| Jiri, M. [43] | 2024 | ||

| Ryan, G. [44] | 2024 | ||

| Gao, S. [45] | 2025 | ||

| Wang, W. [46] | 2024 | Language models can produce highly executable and diverse tests, but face limitations in reasoning over code paths and logic. | Evaluations are based on Python LeetCode problems, which may not reflect the complexity or diversity of real-world systems. |

| Ouédraogo, W. [47] | 2024 | Tree-of-Thought and Chain-of-Thought strategies enhance the readability and maintainability of test cases while increasing partial coverage. | Despite syntactic correctness, most tests fail to compile successfully. Low compilability rates and test smells persist across generated outputs. |

| Khelladi, D. [48] | 2025 | By combining cross-language input and high-temperature sampling, LLM-generated test cases can be unified into comprehensive test suites without execution-based feedback. | Experiments focus on self-contained problems. Generalization is difficult for codebases with interdependent inputs or methods. |

| Sharma, R. [49] | 2025 | Extracting concrete input and output specifications from prompts enables the detection of specification-violating outputs across multiple models more effectively. | The method does not support multi-turn interactions or structured prompts, limiting its application in more complex testing workflows. |

| Li, Y. [50] | 2025 | Prompting LLMs with follow-up questions enhances bug localization by triggering self-correction and uncovering previously missed errors. This iterative engagement mirrors human review processes and increases detection beyond initial outputs. | Hallucinations occur across test generation, error tracing, and bug localization, including non-executable outputs and incorrect diagnoses. Performance varies widely across models, with some (e.g., New Bing) failing to detect any bugs. Low overall test quality and model inconsistency limit standalone applicability in real-world testing workflows. |

| Godage, T. [51] | 2025 | Claude 3.5 achieved the highest performance with a 93.33% success rate, 98.01% statement coverage, and an 89.23% mutation score, outperforming GPT-4o and others. | Results are limited to JavaScript, and generalizability to other languages remains untested. |

| Chowdhury, S. [52] | 2025 | Static analysis-guided prompts enabled LLaMA 7B to generate tests for 99% of 103 methods, resulting in a 175% improvement. Similar gains were observed for LLaMA 70B and CodeLLaMA 34B. | The study only evaluated whether a test was generated, without assessing its correctness, coverage, or runtime behavior. It also lacks dynamic context, which limits test accuracy. |

| Study | Year | Finding | Limitation |
|---|---|---|---|
| Kang, S. [53] | 2022 | Incorporating natural language prompts, issue reports, or scenario understanding enables LLMs to generate test cases tailored to real-world failures or UI contexts. | Effectiveness declines in scenarios involving external resources, project-specific configurations, or undocumented dependencies, often necessitating manual intervention. |
| Yu, S. [55] | 2023 | | |
| Nashid, N. [56] | 2025 | | |
| Chen, Y. [19] | 2023 | Breaking down test generation into structured subtasks such as focal context handling, intention inference, oracle refinement, and type correction leads to improved test quality, correctness, and coverage. | Multi-stage generation strategies encounter scalability issues due to LLM token limits, dependence on curated demonstration examples, and difficulties in retrieving relevant context within large codebases. |
| Yuan, Z. [26] | 2023 | | |
| Chen, M. [57] | 2024 | | |
| Ni, C. [58] | 2024 | | |
| Liu, R. [59] | 2025 | | |
| Bhatia, S. [60] | 2023 | Iterative prompting and feedback loops significantly improve test coverage and assertion quality, often rivaling or exceeding the quality of traditional tools. | Performance is constrained when dealing with ambiguous documentation, less modular code, or limited and fixed code examples that reduce generalizability. |
| Schäfer, M. [61] | 2023 | | |
| Kumar, N. [62] | 2024 | | |
| Wang, Z. [63] | 2024 | | |
| Lahiri, S. [54] | 2022 | Execution feedback, control flow analysis, and hypothesis-driven iteration guide LLMs to generate more accurate, high-coverage, and fault-detecting tests. | Limitations include invalid test cases, misclassified outputs, and reduced performance on complex features such as inheritance, behavioral equivalence, or low-confidence assertions. |
| Etemadi, K. [64] | 2024 | | |
| Yang, C. [65] | 2024 | | |
| Alshahwan, N. [66] | 2024 | | |
| Pizzorno, J. [67] | 2025 | | |
| Jain, K. [68] | 2025 | | |
| Gu, S. [69] | 2025 | | |
| Straubinger, P. [70] | 2025 | | |
| Chen, B. [25] | 2022 | Combining LLM-based generation with dual execution strategies, property-based retrieval, or static analysis improves correctness and adaptability across applications. | Large-scale executions, dependency on existing test-rich repositories, and the high cost of repeated LLM invocations pose significant barriers to scaling and full automation. |
| Zhang, Z. [71] | 2024 | | |
| Zhong, Z. [72] | 2024 | | |
| Pan, R. [73] | 2025 | | |
| Gu, S. [74] | 2025 | Employing evolutionary algorithms, template-guided repair, and task-planning strategies powered by LLMs improves the reliability and executability of generated test cases across both open-source and industrial software systems. | Generalizability remains limited due to dataset constraints, potential accuracy decline in long evolution loops, and dependence on high-quality training signals. |
| Li, K. [75] | 2025 | | |
| Cheng, R. [76] | 2025 | | |
| Liu, J. [77] | 2025 | STRUT improves test generation for C programs by using structured seed-case prompts with feedback optimization. It achieves a 96.01% test execution pass rate, 51.83% oracle correctness, 77.67% line coverage, and 63.60% branch coverage. Compared to GPT-4o, it improves line coverage by 37.34%, branch coverage by 33.02%, test pass rate by 42.81%, and oracle correctness by 32.90%. | STRUT struggles with complex conditional branches and suffers from test redundancy. Despite generating 21% more test cases than SunwiseAUnit, it offers only ~6% coverage improvement, indicating inefficiencies in pruning duplicates and handling nuanced logic. |

| Study | Year | Finding | Limitation |
|---|---|---|---|
| Tufano, M. [28] | 2021 | Fine-tuning or adapting LLMs with project-specific or focal context information significantly improves test case quality, including accuracy, readability, and coverage. For example, Rehan et al. [80] fine-tuned LLaMA-2 using QLoRA and achieved an F1 score of 33.95%, with human validation confirming clarity and edge case handling. | Reliance on contextual signals or heuristics such as focal function matching or the availability of developer-written tests can undermine output reliability when these elements are missing, incomplete, or inconsistent across projects. |
| Shin, J. [79] | 2023 | | |
| He, Y. [81] | 2024 | | |
| Rehan, S. [80] | 2025 | | |
| Tufano, M. [82] | 2022 | Pre-training followed by fine-tuning, particularly for assertion generation, improves syntactic and semantic quality of test cases and assertions. | Common challenges include limited generalizability due to restricted datasets, difficulties in reproducing results, and integration issues that arise from incomplete context, missing helper functions, or truncated outputs. |
| Zhang, Q. [83] | 2025 | | |
| Primbs, S. [84] | 2025 | | |
| Rao, N. [20] | 2023 | Incorporating domain knowledge or verification strategies into fine-tuned models yields more readable, accurate, and coverage-enhancing test cases. | Limitations include redundancy in generated outputs or compatibility issues due to deprecated APIs or private access restrictions. |
| Alagarsamy, S. [78] | 2023 | | |
| Storhaug, A. [85] | 2024 | Parameter-efficient or large-scale fine-tuning with effective prompts can match or surpass full fine-tuning in generating high-quality unit tests with reduced cost. | Limitations stem from high variance in model performance and underexplored tuning configurations, which may affect consistency and full potential. |
| Alagarsamy, S. [86] | 2025 | | |
| Shang, Y. [87] | 2025 | Fine-tuned LLMs outperformed traditional tools across tasks. DeepSeek-Coder-6b achieved a 107.77% improvement over AthenaTest (33.68% vs. 16.21%) in test generation. CodeT5p-220m reached a CodeBLEU score of 88.25%, outperforming ATLAS (63.60%). | Bug detection remains limited. DeepSeek-Coder-6b found only 8/163 bugs with 0.74% precision. Over 90% of test failures were build errors, showing weak alignment between syntactic and semantic correctness. |
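
As a reading aid, the 107.77% figure in the Shang, Y. [87] row above appears to be a relative improvement rather than a percentage-point difference. The check below assumes that the two values in parentheses are the DeepSeek-Coder-6b and AthenaTest scores, in that order; the table does not name the metric, so none is assumed here:

$$
\frac{33.68 - 16.21}{16.21} \times 100\% = \frac{17.47}{16.21} \times 100\% \approx 107.77\%
$$

Read as a percentage-point difference, the same gap would be 17.47 points, which is why the two readings should not be mixed when comparing rows.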

| Study | Year | Finding | Limitation |
|---|---|---|---|
| Dakhel, A. [88] | 2023 | Reinforcement learning, mutation-based prompting, and differential testing strategies that target semantic bugs and promote behavioral diversity improve fault detection and test robustness. | Effectiveness relies on subtle indicators such as surviving mutants or inferred program intentions, but these signals can become unreliable in complex or noisy codebases, leading to redundant test cases or false positives. |
| Steenhoek, B. [89] | 2023 | | |
| Li, T. [90] | 2023 | | |
| Liu, K. [91] | 2024 | | |
| Zhang, J. [92] | 2025 | | |
| Lemieux, C. [21] | 2023 | When integrated into search-based or hybrid testing workflows such as those used within development environments or automated service testing, LLMs enhance usability and increase test coverage by enabling guided exploration alongside feedback from code execution. | Key challenges include slow response times during LLM queries, limited large-scale empirical validation, and restricted applicability to environments such as open-source systems or narrowly scoped testing domains. |
| Sapozhnikov, A. [93] | 2025 | | |
| Li, J. [94] | 2024 | | |
| Li, K. [95] | 2024 | Employing multi-agent systems or execution-aware test generation pipelines significantly improves test accuracy, reliability, and fail-to-pass effectiveness by structuring test and code generation workflows. | The dependency on powerful language models and customized toolchains restricts the adaptability of these approaches across different model architectures, programming languages, and real-world software development settings. |
| Huang, D. [96] | 2025 | | |
| Mündler, N. [97] | 2025 | | |
| Taherkhani, H. [98] | 2024 | Techniques such as chain-of-thought prompting, input repair, and guided mutation enhance test executability and coverage in zero-shot or low-supervision settings. | Syntactic inconsistencies, ambiguous or incomplete documentation, and unstructured datasets continue to result in invalid test inputs, ultimately undermining test coverage and the effectiveness of automated testing pipelines. |
| Lops, A. [99] | 2025 | | |
| Yang, R. [100] | 2024 | | |
| Xu, J. [101] | 2024 | Incorporating symbolic execution, context awareness across multiple files, and specialized domain knowledge enables LLMs to generate high-quality unit tests in complex ecosystems such as formal verification tools or statically typed languages. | Limitations stem from scalability, path explosion, and difficulty modeling sophisticated code features or environmental interactions accurately. |
| Zhang, Y. [102] | 2025 | | |
| Ouédraogo, W. [103] | 2025 | Mining concrete inputs from bug reports before seeding EvoSuite or Randoop boosts both effectiveness and efficiency: BRMiner exposes 58 extra bugs across 13 Defects4J projects (24 beyond default EvoSuite) and raises branch/instruction coverage by ≈13 pp/12 pp while producing about 21% fewer test cases. | Effectiveness relies on high-quality, version-aligned bug reports; stale or noisy reports can seed irrelevant inputs, and the GPT-3.5-turbo filtering step lacks semantic validation, occasionally admitting plausible yet ineffective test data. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Celik, A.; Mahmoud, Q.H. A Review of Large Language Models for Automated Test Case Generation. Mach. Learn. Knowl. Extr. 2025, 7, 97. https://doi.org/10.3390/make7030097
