Harnessing Language Models for Studying the Ancient Greek Language: A Systematic Review

Abstract

1. Introduction

- We synthesize fragmented literature across Natural Language Processing (NLP), digital humanities, and pedagogy to provide a unified and critical overview;

- We explicitly distinguish between general-purpose foundation LMs and domain-specific models trained for Ancient Greek, analyzing their strengths, limitations, and areas of application;

- We critically assess both technical advances and persistent challenges, and propose concrete future directions for integrating research and education.

- In which domains have LMs been applied for the study of the Ancient Greek language?

- How effective are current LMs in the analysis and processing of Ancient Greek?

- What challenges and limitations have been identified in the application of LMs to Ancient Greek?

2. Materials and Methods

2.1. Study Design

2.2. Eligibility Criteria

- IC-1: The study applies transformer-based LMs such as ChatGPT (3.5/4/4o), Bidirectional Encoder Representations from Transformers (BERT), LLaMA (2/3), Generative Pre-trained Transformer (GPT)-3/4, or similar to the analysis or teaching of Ancient Greek;

- IC-2: The study is a peer-reviewed journal article or conference proceeding;

- IC-3: The full text is available, and the article is written in English.

- EC-1: The study does not apply transformer-based LMs;

- EC-2: The focus is on languages other than Ancient Greek, with no meaningful reference to Ancient Greek;

- EC-3: The study is not peer-reviewed, or the full text is not accessible;

- EC-4: The article is written in a language other than English;

- EC-5: The study is purely theoretical or conceptual, without empirical application of an LM.
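The criteria above can be read as a simple decision rule applied to each candidate record. The following is a minimal sketch of that rule, not the screening tool used in the review; the `Record` class and all of its field names are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    """Hypothetical screening record; every field name is an assumption."""
    title: str
    uses_transformer_lm: bool        # IC-1 / EC-1
    addresses_ancient_greek: bool    # IC-1 / EC-2
    peer_reviewed: bool              # IC-2 / EC-3
    full_text_available: bool        # IC-3 / EC-3
    in_english: bool                 # IC-3 / EC-4
    has_empirical_application: bool  # EC-5


def exclusion_reasons(record: Record) -> List[str]:
    """Return the exclusion criteria (EC-1 to EC-5) that a record triggers."""
    reasons = []
    if not record.uses_transformer_lm:
        reasons.append("EC-1")
    if not record.addresses_ancient_greek:
        reasons.append("EC-2")
    if not (record.peer_reviewed and record.full_text_available):
        reasons.append("EC-3")
    if not record.in_english:
        reasons.append("EC-4")
    if not record.has_empirical_application:
        reasons.append("EC-5")
    return reasons


def is_eligible(record: Record) -> bool:
    """A record is retained only if no exclusion criterion applies."""
    return not exclusion_reasons(record)
```

Returning the list of triggered criteria, rather than a bare yes/no, makes it possible to report the reason for each exclusion in a flow diagram.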

2.3. Search Strategy

- Transformer-based LMs;

- Education, pedagogy, and digital learning;

- The study and teaching of Ancient Greek.

- LLM-related terms included: “large language models”, “generative AI”, “foundation models”, “ChatGPT”, “BERT”, “LLaMA”, “autoregressive models”, and similar.

- Education-related terms included: “pedagogy”, “education”, “digital humanities”, “instructional technology”, “e-learning”, and others.

- Ancient Greek-related terms included: “Ancient Greek”, “Classical Greek”, “Koine Greek”, “Homeric Greek”, and related historical variants.
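The three term groups are combined with OR inside each group and AND across groups. A minimal sketch of how such a Boolean query string could be assembled is given below; the helper names `or_group` and `build_query` are hypothetical, the term lists are abbreviated to the examples quoted above, and the `TITLE-ABS-KEY` field prefix follows the Scopus query reported in this review.

```python
def or_group(terms):
    """Join terms with OR, quoting multi-word or hyphenated phrases."""
    quoted = [f'"{t}"' if (" " in t or "-" in t) else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups, field=None):
    """AND together one OR-group per concept, optionally prefixed by a field code."""
    parts = [or_group(g) for g in groups]
    if field:
        parts = [f"{field} {p}" for p in parts]
    return " AND ".join(parts)


# Abbreviated term lists taken from the examples listed above.
lm_terms = ["large language models", "generative AI", "foundation models",
            "ChatGPT", "BERT", "LLaMA", "autoregressive models"]
education_terms = ["pedagogy", "education", "digital humanities",
                   "instructional technology", "e-learning"]
greek_terms = ["Ancient Greek", "Classical Greek", "Koine Greek", "Homeric Greek"]

query = build_query(lm_terms, education_terms, greek_terms, field="TITLE-ABS-KEY")
print(query)
```

Each database uses its own field syntax, so in practice the same term groups would be rendered once per database rather than reused verbatim.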

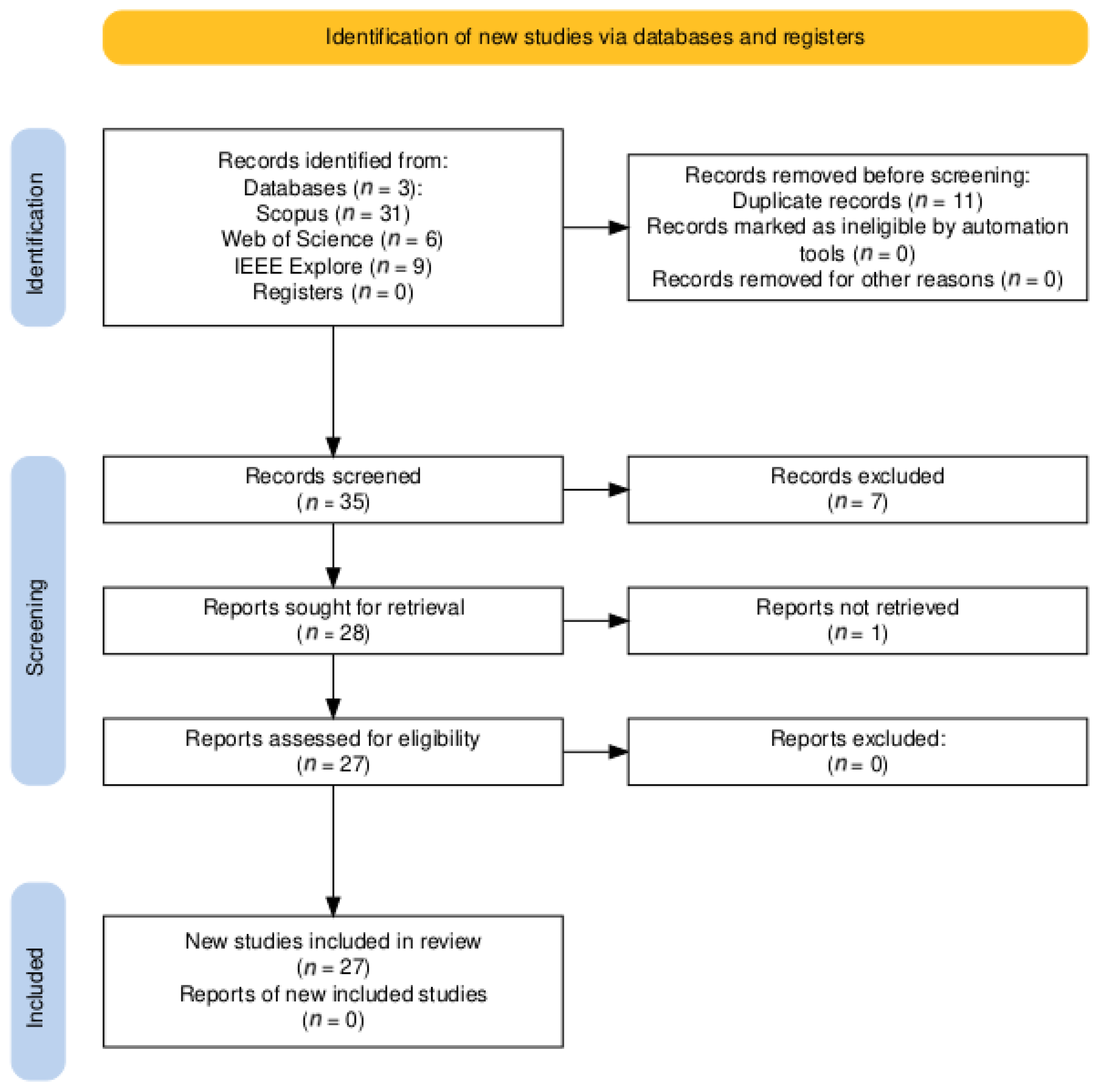

2.4. Study Selection Process

2.5. Data Collection Process and Categorization

- The year of publication;

- The corpus or dataset used;

- The type of language model (foundation or domain-specific);

- The primary NLP technique or approach employed;

- The specific application domain (e.g., translation, Named Entity Recognition (NER), etc.).
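The extracted fields listed above map naturally onto a small record type. The sketch below is illustrative only: the class `ExtractedStudy` and its field names are assumptions, the four rows are paraphrased from Appendix A, and the technique labels are simplified stand-ins rather than the coding actually used in the review.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractedStudy:
    """One row of a hypothetical extraction sheet (field names are assumptions)."""
    year: int
    corpus: str
    model_type: str  # "foundation" or "domain-specific"
    technique: str
    domain: str


# Illustrative rows paraphrased from Appendix A; labels are simplified.
studies = [
    ExtractedStudy(2023, "Ancient Greek GCSE materials", "foundation",
                   "ChatGPT 3.5", "pedagogical support"),
    ExtractedStudy(2023, "Premodern Greek corpora", "domain-specific",
                   "BERT-based contextual model", "error detection and correction"),
    ExtractedStudy(2023, "UD Perseus and PROIEL treebanks", "domain-specific",
                   "fine-tuned transformer pipeline", "POS tagging"),
    ExtractedStudy(2024, "Ancient Greek ostraca", "foundation",
                   "general-purpose LLMs", "machine translation"),
]

# Simple descriptive tallies of the kind reported in a descriptive overview.
by_model_type = Counter(s.model_type for s in studies)
by_year = Counter(s.year for s in studies)
print(by_model_type, by_year)
```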

2.6. Risk of Bias

3. Results

3.1. Identification and Inclusion of Studies

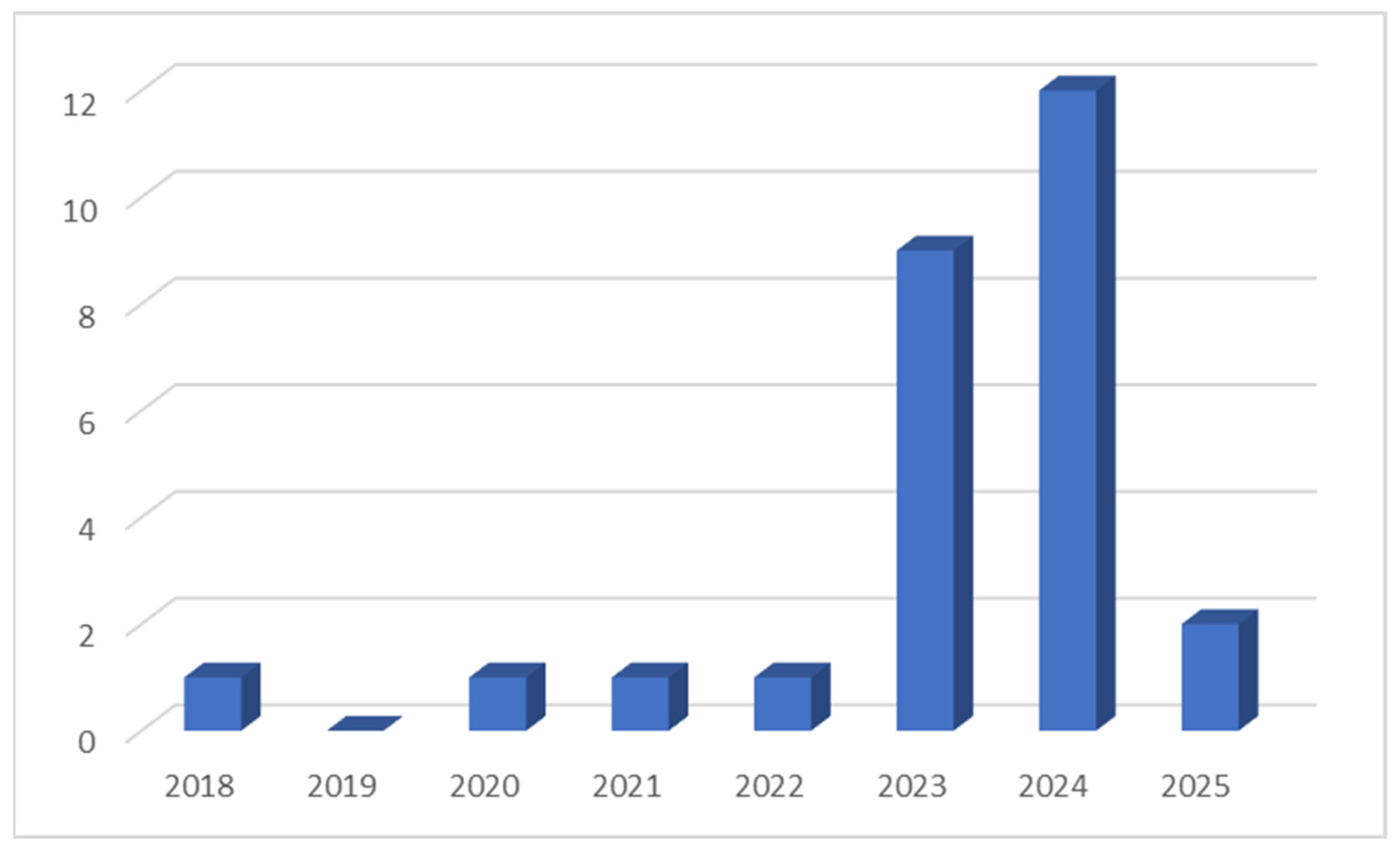

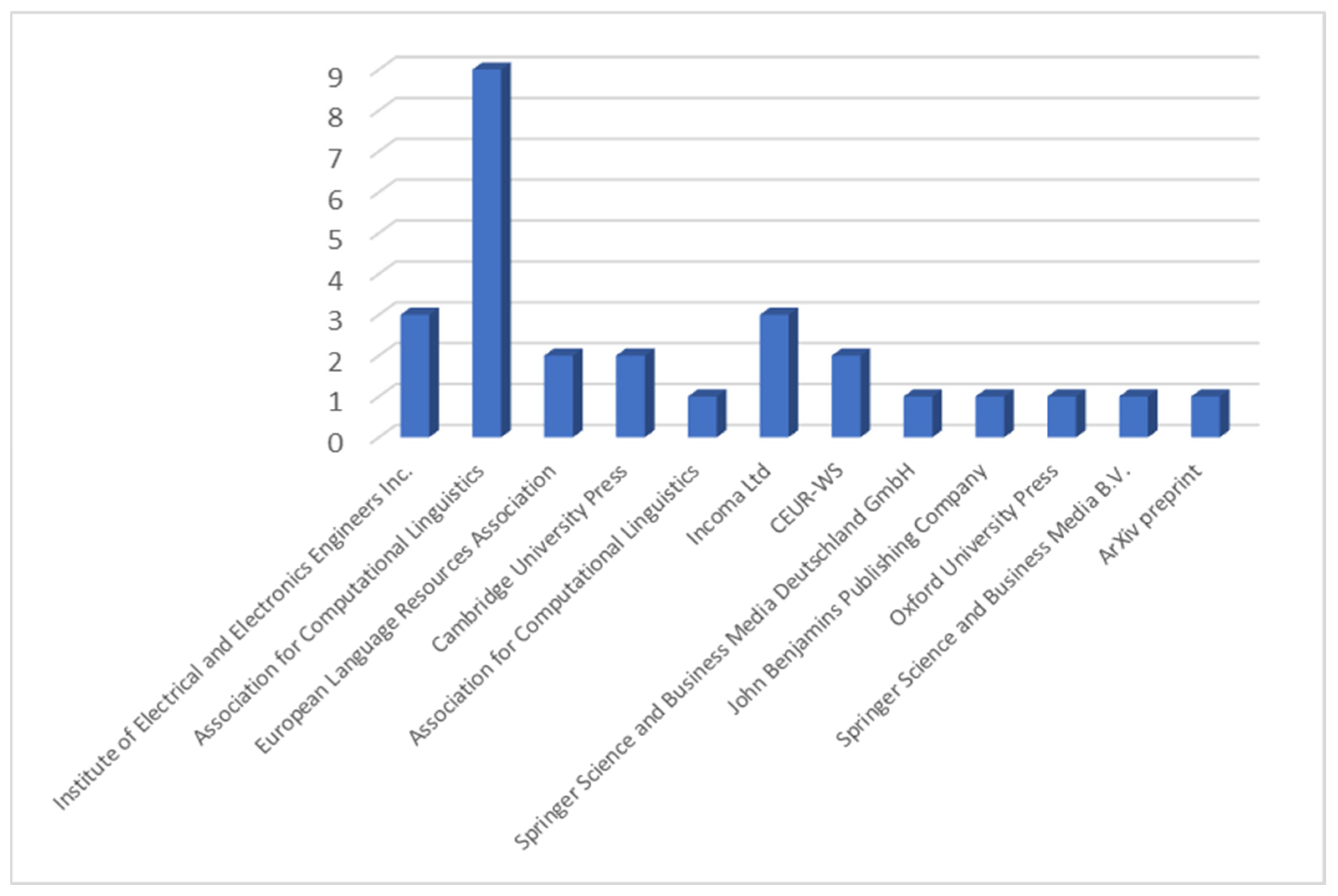

3.2. Descriptive Overview of Included Studies

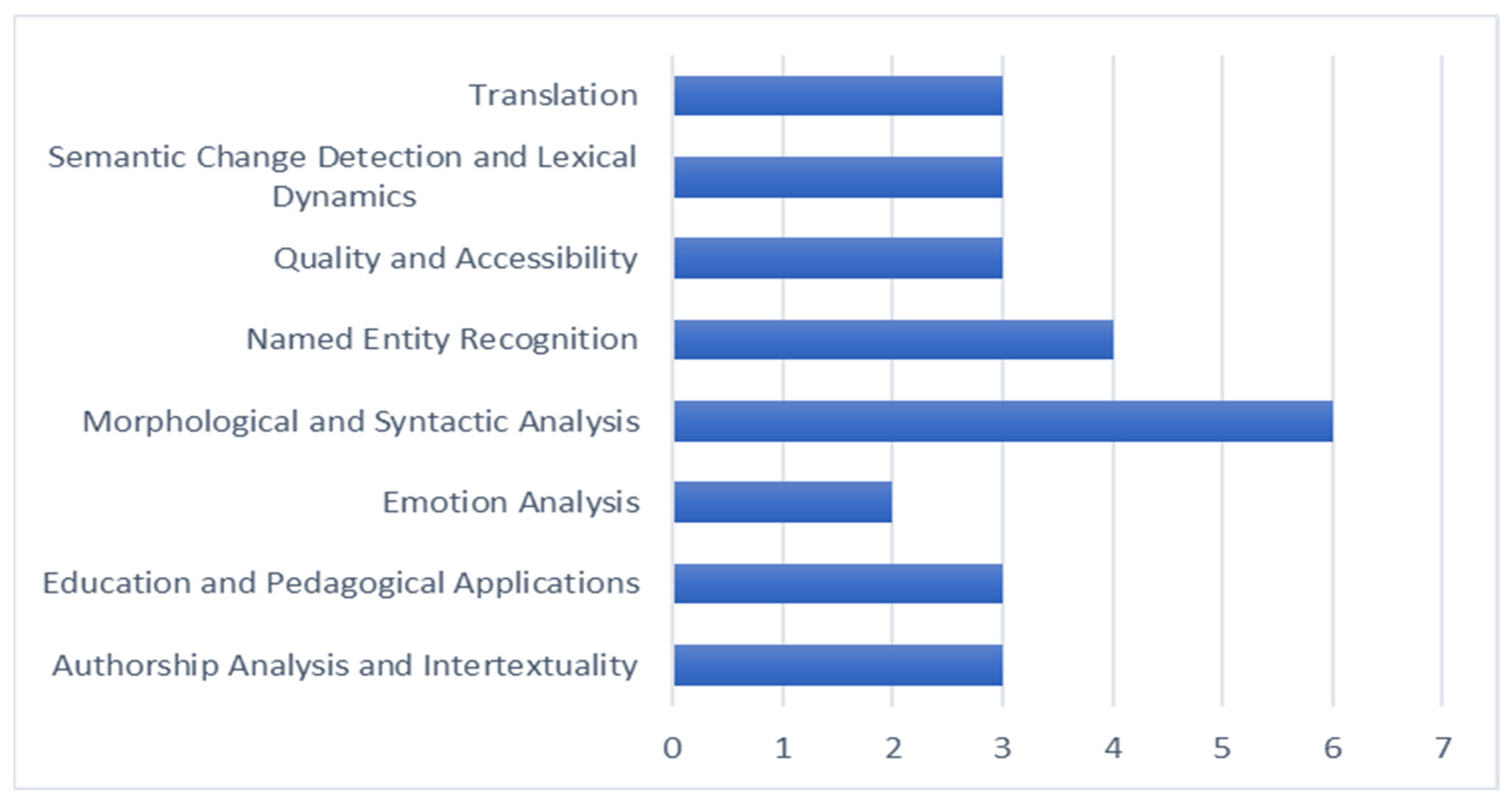

3.3. Thematic Analysis of Applications of LMs in Ancient Greek

3.3.1. Morphological and Syntactic Processing of Ancient Greek Using LMs

3.3.2. NER in Ancient Greek with Language Model Technology

3.3.3. Machine Translation of Ancient Greek Using LMs

3.3.4. LMs for Authorship Attribution and Intertextuality in Ancient Greek

3.3.5. Emotion Analysis in Ancient Greek Literature Using LMs

3.3.6. LMs for Semantic Change and Lexical Dynamics in Ancient Greek

3.3.7. Improving Quality and Accessibility of Ancient Greek Texts with LMs

3.3.8. Educational and Pedagogical Applications of LMs in Ancient Greek

4. Discussion

4.1. Critical Analysis of Key Findings

4.2. Practical Implications

4.3. Synthesis and Future Research Directions

4.3.1. Data Resources and Benchmarks

4.3.2. Modeling Approaches and Methodological Innovation

4.3.3. Pedagogical Integration and Ethical Evaluation

4.4. Ethical Considerations and Bias in Language Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AGDT | Ancient Greek Dependency Treebank |
| AGREE | Ancient Greek Relatedness Evaluation benchmark |
| AUC | Area Under the Curve |
| BLEU | Bilingual Evaluation Understudy |
| BERT | Bidirectional Encoder Representations from Transformers |
| CBOW | Continuous Bag of Words |
| CER | Character Error Rate |
| CLTK | Classical Language Toolkit |
| CoNLL | Conference on Computational Natural Language Learning |
| DBBE | Database of Byzantine Book Epigrams |
| ELMo | Embeddings from Language Models |
| F1-score | Harmonic mean of precision and recall |
| FEEL | French Expanded Emotion Lexicon |
| GenAI | Generative Artificial Intelligence |
| GLAUx | Greek and Latin Annotated Universal Corpus |
| GPT | Generative Pre-trained Transformer |
| GCSE | General Certificate of Secondary Education |
| HTR | Handwritten Text Recognition |
| ILCS | Immersive Learning Case Sheet |
| LAS | Labeled Attachment Score |
| LLM | Large Language Model |
| LM | Language Model |
| LOGION | Language model for Greek Philology |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| METEOR | Metric for Evaluation of Translation with Explicit Ordering |
| MSE | Mean Squared Error |
| MT5 | Multilingual T5 Transformer Model |
| NER | Named Entity Recognition |
| NLP | Natural Language Processing |
| NMT | Neural Machine Translation |
| OCR | Oxford, Cambridge and RSA |
| OPUS | Open Parallel Corpus |
| POS tagging | Part-of-Speech tagging |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PROIEL | Pragmatic Resources in Old Indo-European Languages |
| RNN | Recurrent Neural Network |
| RoBERTa | Robustly Optimized BERT Pretraining Approach |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| SG | Skip-Gram |
| SimCSE | Simple Contrastive Sentence Embeddings |
| SOTA | State of the Art |
| T5 | Text-To-Text Transfer Transformer |
| TER | Translation Edit Rate |
| TLG | Thesaurus Linguae Graecae |
| UD | Universal Dependencies |

Appendix A

| # | Authors (Year) | Model(s) Used | Type of Model | Corpus/Dataset | NLP Task/Application | Main Contribution/Focus | Evaluation Method |

|---|---|---|---|---|---|---|---|

| 1 | Lamar and Chambers [28] | Encoder–decoder RNNs (OpenNMT-py, LSTM) | Task-specific NMT (custom-trained) | 16k Socratic + 15k non-Socratic Greek lines; 11k Cicero sentences (pivot via English) | Machine Translation (Ancient Greek → Latin) | Style-preserving NMT using pivot language in low-resource classical texts | BLEU scores, rhetorical feature retention |

| 2 | Lim and Park [11] | ELMo, BERT, Multi-view character-based models | Deep contextualized LMs and character-based representations | Universal Dependencies (UD) CoNLL 2018 Shared Task dataset | POS tagging | Introduced joint multiview learning combining sentence- and word-based character embeddings for better tagging in morphologically rich languages, including Ancient Greek | Accuracy metrics across multiple languages including Ancient Greek; ablation studies and model comparison |

| 3 | Singh et al. [21] | Expanded Ancient Greek BERT | Domain-specific (fine-tuned BERT) | AGDT, PROIEL, Gorman treebanks, Perseus, First1KGreek, DBBE | Morphological analysis, PoS tagging | Development of BERT-based model for Ancient/Byzantine Greek; fine-grained PoS tagging | Accuracy on validation/test sets; comparison with RNNTagger; Gold standard for DBBE |

| 4 | Pavlopoulos et al. [39] | GreekBERT (fine-tuned) | Pretrained Transformer (fine-tuned on emotion annotation) | Modern Greek translation of Book 1 of the Iliad | Sentiment analysis (perceived emotion labeling) | Annotated dataset + sentiment modeling for Homeric text using deep learning; multivariate emotion time-series | MSE, MAE, inter-annotator agreement |

| 5 | Ross [9] | ChatGPT 3.5 | Foundation model (LLM) | Ancient Greek GCSE materials; OCR curriculum texts | Translation, grammar explanation, pedagogical support | Evaluated ChatGPT’s ability to parse, translate, and explain Ancient Greek, Latin, and Sanskrit for pedagogical use in secondary and university-level education | Manual comparison with Perseus and Whitaker’s Words; qualitative analysis; practical classroom use cases |

| 6 | Kostkan et al. [22] | Ancient-Greek-BERT (odyCy) | Domain-specific (fine-tuned transformer) | UD Perseus Treebank and UD PROIEL Treebank | POS tagging, morphological analysis, lemmatization, syntactic dependency parsing | Developed a modular spaCy-based NLP pipeline for Ancient Greek with improved generalizability and performance across dialects | Accuracy on individual tasks; comparison with CLTK, greCy, Stanza, UDPipe; joint and per-treebank models |

| 7 | Riemenschneider and Frank [18] | GRεBERTA, GRεTA, PHILBERTA, PHILTA | Encoder-only (RoBERTa), Encoder–decoder (T5), Monolingual and Multilingual | Open Greek and Latin, Greek Medieval Texts, Patrologia Graeca, Internet Archive (~185.1M tokens) | PoS tagging, lemmatization, dependency parsing, semantic probing | Created and benchmarked 4 LMs for Ancient Greek; evaluated architectural and multilingual impacts | Benchmarks on Perseus and PROIEL treebanks, semantic/world knowledge probing tasks |

| 8 | Pavlopoulos et al. [34] | GreekBERT:M, GreekBERT:M+A, MT5 | Pretrained LLMs, Transformer, Encoder–Decoder | HTREC2022 (Byzantine Greek manuscripts, 10th–16th c.) | Error detection and correction in HTR | Detecting and mitigating adversarial errors in post-correction of HTR outputs | Average Precision, AUC, F1 score, Century-based and CER-based analysis |

| 9 | Krahn et al. [36] | GRCmBERT, GRCXLM-R, SimCSE | Multilingual sentence embeddings via knowledge distillation and contrastive learning | 380k Ancient Greek–English parallel sentence pairs; Perseus, First1KGreek, OPUS | Semantic similarity, translation search, semantic retrieval | Developed sentence embedding models for Ancient Greek using cross-lingual knowledge distillation; created evaluation datasets | Translation similarity accuracy, semantic textual similarity (Spearman ρ), semantic retrieval (Recall@10, mAP@20), MRR for translation bias |

| 10 | Cowen-Breen et al. [12] | LOGION (BERT-based contextual model) | Domain-specific transformer model | ~70M words of premodern Greek (Perseus, First1KGreek, TLG, etc.) | Scribal error detection and correction in Ancient Greek | Introduced LOGION for automated error detection in philological texts using confidence-based statistical metrics | Top-1 accuracy (90.5%) on artificial errors; 98.1% correction accuracy; expert validation on real data |

| 11 | Riemenschneider and Frank [31] | SPHILBERTA | Multilingual Sentence Transformer | Parallel corpora from Bible, Perseus, OPUS, Rosenthal; English-Greek-Latin trilingual texts | Cross-lingual sentence embeddings; Intertextuality detection | Developed SPHILBERTA, a trilingual sentence transformer for Ancient Greek, Latin, and English to detect intertextual parallels | Translation accuracy using cosine similarity across test corpora; case study on Aeneid–Odyssey allusions |

| 12 | Gessler and Schneider [24] | SynCLM, SLA, MicroBERT | Transformer-based LMs with syntactic inductive bias | Ancient Greek Wikipedia (9M tokens), UD treebanks, WikiAnn, PrOnto benchmark | Syntactic parsing, NER, sequence classification | Assessed whether syntax-guided inductive biases improve performance of LMs for low-resource languages like Ancient Greek | LAS for parsing, F1 for NER, accuracy for PrOnto tasks; comparison across model variants |

| 13 | González-Gallardo et al. [27] | ChatGPT-3.5 | General-purpose instruction-tuned LLM | AJMC (historical commentaries on Sophocles’ Ajax, 19th c.); multilingual NER corpus | NER in historical and code-switched documents | Zero-shot NER performance evaluation of ChatGPT on historical texts involving Ancient Greek; discussed challenges of LLMs with multilingual and ancient code-switching | Token-level F1 score (strict and fuzzy); qualitative analysis of NER failures |

| 14 | Beersmans et al. [25] | AG_BERT, ELECTRA, GrεBerta, UGARIT | Transformer-based NER models with domain adaptation | OD, DEIPN, SB, PH, GLAUx corpus | NER for Ancient Greek, focus on personal names | Comparison of transformer models for NER; domain knowledge and syntactic information integration; focus on improving NER for personal names | F1-score on Held-out and GLAUx TEST sets; qualitative error analysis; comparison of rule-based vs. mask-based gazetteer methods |

| 15 | Picca and Pavlopoulos [35] | Multilingual-BERT; FEEL lexicon | Pretrained transformer model and lexicon-based classifier | 468 speech excerpts from Iliad (Books 1, 16–24) annotated in French | Emotion recognition in Ancient Greek literature | Introduced a French-annotated dataset of Iliad for emotion detection; evaluated lexicon-based vs. deep learning approaches | MSE, MAE; Inter-annotator agreement; qualitative comparison via confusion matrix |

| 16 | Wannaz and Miyagawa [29] | Claude Opus, GPT-4o, GPT-4, GPT-3.5, Claude Sonnet, Claude Haiku, Gemini, Gemini Advanced | General-purpose LLMs | 4 Ancient Greek ostraca from papyri.info (TM 817897, 89219, 89224, 42504) | Machine Translation (Ancient Greek → English) | Evaluation of 8 LLMs for Ancient Greek-English machine translation using 6 NLP metrics | Quantitative metrics: BLEU, TER, Levenshtein, METEOR, ROUGE, custom ‘school’ metric |

| 17 | Umphrey et al. [33] | Claude Opus | Foundation Model (LLM) | Biblical Koine Greek texts (e.g., Matthew, Clement, Sirach, Acts) | Intertextual analysis (quotations, allusions, echoes) | Evaluating LLMs in detecting intertextual patterns using an expert-in-the-loop method | Expert evaluation using Hays’ intertextuality criteria (thematic, lexical, structural alignment) |

| 18 | Abbondanza [41] | ChatGPT 3.5 | Foundation Model (LLM) | Educational prompts in Latin and Ancient Greek used by Italian high school students | Interactive educational support, translation, grammar and syntax evaluation | Exploration of ChatGPT’s role as a supportive tool in high school Latin and Greek education, including prompt-based evaluations | Qualitative assessment of ChatGPT’s responses to student-style questions; case-based analysis |

| 19 | Schmidt et al. [32] | GreBerta, Ancient Greek BERT, Modern Greek BERT | Fine-tuned transformer models (BERT/RoBERTa variants) | Greek rhetorical texts from the Second Sophistic period, including Pseudo-Dionysius’s Ars Rhetorica | Authorship attribution | Analysis of authorial identity in the Ars Rhetorica; identification of internal textual structure | F1 score, accuracy, comparison of attribution profiles, majority voting across text chunks |

| 20 | González-Gallardo et al. [26] | Llama-2-70B, Llama-3-70B, Mixtral-8x7B, Zephyr-7B | Open instruction-tuned LLMs | AJMC, HIPE, NewsEye historical corpora (19th–20th c.), multilingual (with Ancient Greek code-switching) | NER | Assessed performance of open instruction-tuned LLMs for NER in historical documents using few-shot prompting | Precision, Recall, F1-score under strict and fuzzy boundary matching; comparison with SOTA benchmarks |

| 21 | Keersmaekers and Mercelis [10] | ELECTRA (electra-grc), LaBERTa (RoBERTa) | Transformer-based, domain-specific | GLAUx corpus (Greek, 1.46M tokens), PROIEL treebank (Latin, 205K tokens) | Morphological tagging | Systematic comparison of adaptations for transformer-based tagging in Ancient Greek and Latin | Accuracy scores; statistical tests (McNemar’s); error analysis (by feature, text type, lexicon use) |

| 22 | Ross and Baines [42] | ChatGPT 3.5/4, Bard AI, Claude-2, Bing Chat, custom GPTs | General-purpose LLMs (instruction-tuned) | Not applicable (evaluated user interaction in educational context) | Ethical education on AI; student attitudes toward AI in Ancient Greek pedagogy | Impact of AI ethics sessions on student perceptions and potential educational use of LLMs | Surveys before and after information sessions, qualitative analysis |

| 23 | Kasapakis and Morgado [40] | Custom ChatGPT Assistant (ILCS Assistant) | Customized LLM assistant for qualitative research | VRChat-based immersive learning case on Ancient Greek technology (Phyctories, Aeolosphere) | Standardization of case reporting; educational case support | Application of co-intelligent LLM to improve consistency and structure in immersive learning case documentation using ILCS methodology | Iterative human–AI co-authoring; framework alignment analysis |

| 24 | Stopponi et al. [37] | Word2Vec (SG and CBOW) | Distributional semantic embeddings | Diorisis Ancient Greek Corpus | Semantic similarity and relatedness | Developed AGREE, a benchmark for evaluating semantic models of Ancient Greek using expert-annotated word pairs | Expert judgment surveys, inter-rater agreement analysis, qualitative and quantitative validation of semantic scores |

| 25 | Stopponi et al. [38] | Count-based, Word2vec, Graph-based (Node2vec with syntax) | Count-based, Word Embeddings, Syntactic Embeddings | Diorisis Corpus, Treebanks (PROIEL, PapyGreek, etc.) | Semantic representation, semantic change detection | Comparison of model architectures for Ancient Greek semantic analysis; evaluation with AGREE benchmark | AGREE benchmark; cosine similarity; nearest neighbors; precision scores |

| 26 | Rapacz and Smywiński-Pohl [30] | GreTa, PhilTa, mT5-base, mT5-large | Transformer-based (T5 variants) | Greek New Testament (BibleHub and Oblubienica interlinear corpora) | Interlinear translation (Ancient Greek → English/Polish) | Introduced morphology-enhanced neural models for interlinear translation; showed large BLEU gains using morphological embeddings. | BLEU, SemScore (semantic similarity); 144 experimental setups; statistical significance via Mann–Whitney U tests |

| 27 | Swaelens et al. [23] | BERT, ELECTRA, RoBERTa | Transformer-based LMs (custom-trained) | DBBE, First1KGreek, Perseus, Trismegistos, Modern Greek Wikipedia, Rhoby epigrams | Fine-grained Part-of-Speech Tagging (Morphological Analysis) | Development of a POS tagger for unnormalized Byzantine Greek using newly trained LMs; evaluation across multiple architectures and datasets | Accuracy, Precision, Recall, F1-score; Inter-annotator agreement; Comparison with Morpheus and RNN Tagger |

References

1. Carter, D. The influence of Ancient Greece: A historical and cultural analysis. Int. J. Sci. Soc. 2023, 5, 257–265.

2. Peraki, M.; Vougiouklaki, C. How Has Greek Influenced the English Language. British Council. Available online: https://www.britishcouncil.org/voices-magazine/how-has-greek-influenced-english-language (accessed on 25 April 2025).

3. Di Gioia, I. ‘I think learning ancient Greek via video game is…’: An online survey to understand perceptions of digital game-based learning for ancient Greek. J. Class. Teach. 2024, 25, 173–180.

4. Reggiani, N. Multicultural education in the ancient world: Dimensions of diversity in the first contacts between Greeks and Egyptians. In Multicultural Education: From Theory to Practice; Cambridge Scholars Publishing: Cambridge, UK, 2013.

5. World Stock Market. France: Latin and Ancient Greek No Longer Excite Students. Available online: https://www.worldstockmarket.net/france-latin-and-ancient-greek-no-longer-excite-students/ (accessed on 10 March 2025).

6. Hunt, S. Classical studies trends: Teaching classics in secondary schools in the UK. J. Class. Teach. 2024, 25, 198–214.

7. Le Hur, C. A new classical Greek qualification. J. Class. Teach. 2022, 23, 79–80.

8. Tzotzi, A. Ancient Greek and Language Policy 1830–2020, Reasons–Goals–Results. Master’s Thesis, Hellenic Open University, Patras, Greece, 2020. Available online: https://apothesis.eap.gr/archive/download/df8d7eb2-c928-402a-84d8-694485d2562c.pdf (accessed on 3 May 2025).

9. Ross, E.A.S. A new frontier: AI and ancient language pedagogy. J. Class. Teach. 2023, 24, 143–161.

10. Keersmaekers, A.; Mercelis, W. Adapting transformer models to morphological tagging of two highly inflectional languages: A case study on Ancient Greek and Latin. In Proceedings of the 1st Workshop on Machine Learning for Ancient Languages (ML4AL 2024), Bangkok, Thailand, 15 August 2024; pp. 165–176.

11. Lim, K.; Park, J. Part-of-speech tagging using multiview learning. IEEE Access 2020, 8, 195184–195196.

12. Cowen-Breen, C.; Brooks, C.; Graziosi, B.; Haubold, J. Logion: Machine-learning based detection and correction of textual errors in Greek philology. In Proceedings of the Ancient Language Processing Workshop (RANLP-ALP 2023), Varna, Bulgaria, 8 September 2023; pp. 170–178. Available online: https://aclanthology.org/2023.alp-1.20.pdf (accessed on 3 May 2025).

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

14. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258.

15. Lampinen, A. Can language models handle recursively nested grammatical structures? A case study on comparing models and humans. Comput. Linguist. 2024, 50, 1441–1476.

16. Berti, M. (Ed.) Digital Classical Philology: Ancient Greek and Latin in the Digital Revolution; Walter de Gruyter GmbH & Co KG: Berlin, Germany, 2019; Volume 10.

17. Sommerschield, T.; Assael, Y.; Pavlopoulos, J.; Stefanak, V.; Senior, A.; Dyer, C.; Bodel, J.; Prag, J.; Androutsopoulos, I.; De Freitas, N. Machine learning for ancient languages: A survey. Comput. Linguist. 2023, 49, 703–747.

18. Riemenschneider, F.; Frank, A. Exploring large language models for classical philology. arXiv 2023, arXiv:2305.13698.

19. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

20. Sohrabi, C.; Franchi, T.; Mathew, G.; Kerwan, A.; Nicola, M.; Griffin, M.; Agha, M.; Agha, R. PRISMA 2020 statement: What’s new and the importance of reporting guidelines. Int. J. Surg. 2021, 88, 105918.

21. Singh, P.; Rutten, G.; Lefever, E. A pilot study for BERT language modelling and morphological analysis for ancient and medieval Greek. In Proceedings of the 5th Joint SIGHUM Workshop on Computational Linguistics for Cultural Heritage, Social Sciences, Humanities and Literature, Punta Cana, Dominican Republic, 11 November 2021; pp. 128–137.

22. Kostkan, J.; Kardos, M.; Mortensen, J.P.B.; Nielbo, K.L. OdyCy–A general-purpose NLP pipeline for Ancient Greek. In Proceedings of the 7th Joint SIGHUM Workshop on Computational Linguistics for Cultural Heritage, Social Sciences, Humanities and Literature (EACL 2023), Dubrovnik, Croatia, 5 May 2023; pp. 128–134.

23. Swaelens, C.; De Vos, I.; Lefever, E. Linguistic annotation of Byzantine book epigrams. Lang. Resour. Eval. 2025, 1, 1–26.

24. Gessler, L.; Schneider, N. Syntactic inductive bias in transformer language models: Especially helpful for low-resource languages? arXiv 2023, arXiv:2311.00268.

25. Beersmans, M.; Keersmaekers, A.; Graaf, E.; Van de Cruys, T.; Depauw, M.; Fantoli, M. “Gotta catch ‘em all!”: Retrieving people in Ancient Greek texts combining transformer models and domain knowledge. In Proceedings of the 1st Workshop on Machine Learning for Ancient Languages (ML4AL 2024), Bangkok, Thailand, 15 August 2024; pp. 152–164.

26. González-Gallardo, C.E.; Tran, H.T.H.; Hamdi, A.; Doucet, A. Leveraging open large language models for historical named entity recognition. In Proceedings of the 28th International Conference on Theory and Practice of Digital Libraries (TPDL 2024), Ljubljana, Slovenia, 24–27 September 2024; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 379–395.

27. González-Gallardo, C.E.; Boros, E.; Girdhar, N.; Hamdi, A.; Moreno, J.G.; Doucet, A. Yes but… can ChatGPT identify entities in historical documents? In Proceedings of the 2023 ACM/IEEE Joint Conference on Digital Libraries (JCDL 2023), Santa Fe, NM, USA, 26–30 June 2023; IEEE: New York, NY, USA, 2023; pp. 184–189.

28. Lamar, A.K.; Chambers, A. Preserving personality through a pivot language low-resource NMT of ancient languages. In Proceedings of the 2018 IEEE MIT Undergraduate Research Technology Conference (URTC 2018), Cambridge, MA, USA, 5–7 October 2018; IEEE: New York, NY, USA, 2018; pp. 1–4.

29. Wannaz, A.C.; Miyagawa, S. Assessing large language models in translating Coptic and Ancient Greek ostraca. In Proceedings of the 4th International Conference on Natural Language Processing for Digital Humanities (NLP4DH 2024), Miami, FL, USA, 16 November 2024; pp. 463–471.

30. Rapacz, M.; Smywiński-Pohl, A. Low-resource interlinear translation: Morphology-enhanced neural models for Ancient Greek. In Proceedings of the First Workshop on Language Models for Low-Resource Languages, Abu Dhabi, United Arab Emirates, 20 January 2025; pp. 145–165. Available online: https://aclanthology.org/2025.loreslm-1.11.pdf (accessed on 3 May 2025).

31. Riemenschneider, F.; Frank, A. Graecia capta ferum victorem cepit: Detecting Latin allusions to ancient Greek literature. arXiv 2023, arXiv:2308.12008.

32. Schmidt, G.; Vybornaya, V.; Yamshchikov, I.P. Fine-tuning pre-trained language models for authorship attribution of the Pseudo-Dionysian Ars Rhetorica. In Proceedings of the Computational Humanities Research Conference (CHR 2024), Aarhus, Denmark, 4–6 December 2024. Available online: https://ceur-ws.org/Vol-3834/paper139.pdf (accessed on 3 May 2025).

33. Umphrey, R.; Roberts, J.; Roberts, L. Investigating expert-in-the-loop LLM discourse patterns for ancient intertextual analysis. arXiv 2024, arXiv:2409.01882.

34. Pavlopoulos, J.; Kougia, V.; Platanou, P.; Essler, H. Detecting erroneous handwritten Byzantine text recognition. In Findings of the Conference on Empirical Methods in Natural Language Processing (EMNLP 2023); Association for Computational Linguistics: Stroudsburg, PA, USA, 2023. Available online: https://ipavlopoulos.github.io/files/pavlopoulos_etal_2023_htrec.pdf (accessed on 3 May 2025).

35. Picca, D.; Pavlopoulos, J. Deciphering emotional landscapes in the Iliad: A novel French-annotated dataset for emotion recognition. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; pp. 4462–4467. Available online: https://aclanthology.org/2024.lrec-main.399.pdf (accessed on 3 May 2025).

36. Krahn, K.; Tate, D.; Lamicela, A.C. Sentence embedding models for Ancient Greek using multilingual knowledge distillation. arXiv 2023, arXiv:2308.13116.

37. Stopponi, S.; Peels-Matthey, S.; Nissim, M. AGREE: A new benchmark for the evaluation of distributional semantic models of ancient Greek. Digit. Scholarsh. Humanit. 2024, 39, 373–392.

38. Stopponi, S.; Pedrazzini, N.; Peels-Matthey, S.; McGillivray, B.; Nissim, M. Natural language processing for Ancient Greek: Design, advantages and challenges of language models. Diachronica 2024, 41, 414–435.

39. Pavlopoulos, J.; Xenos, A.; Picca, D. Sentiment analysis of Homeric text: The 1st Book of the Iliad. In Proceedings of the Thirteenth Language Resources and Evaluation Conference (LREC 2022), Marseille, France, 20–25 June 2022; pp. 7071–7077. Available online: https://aclanthology.org/2022.lrec-1.765.pdf (accessed on 3 May 2025).

40. Kasapakis, V.; Morgado, L. Ancient Greek technology: An immersive learning use case described using a co-intelligent custom ChatGPT assistant. In Proceedings of the 2024 IEEE 3rd International Conference on Intelligent Reality (ICIR 2024), Coimbra, Portugal, 5–6 December 2024; IEEE: New York, NY, USA, 2024; pp. 1–5.

41. Abbondanza, C. Generative AI for teaching Latin and Greek in high school. In Proceedings of the Second International Workshop on Artificial Intelligence Systems in Education, Co-Located with the 23rd International Conference of the Italian Association for Artificial Intelligence (AIxIA 2024), Bolzano, Italy, 25–28 November 2024; CEUR Workshop Proceedings. Available online: https://ceur-ws.org/Vol-3879/AIxEDU2024_paper_11.pdf (accessed on 3 May 2025).

42. Ross, E.A.S.; Baines, J. Treading water: New data on the impact of AI ethics information sessions in classics and ancient language pedagogy. J. Class. Teach. 2024, 25, 181–190.

| Database | Search Query |

|---|---|

| Scopus | TITLE-ABS-KEY (ChatGPT OR bert OR llama OR “Transformer Models” OR “Generative AI” OR “Large Language Models” OR “LLMs” OR “Neural Language Models” OR “Autoregressive Models” OR “Foundation Models” OR “Deep Learning for NLP” OR “AI-generated Text” OR “Neural Machine Translation” OR “Language Models”) AND TITLE-ABS-KEY (pedagogy OR education OR learning OR teaching OR study OR school OR universities OR training OR “academic institutions” OR classroom OR “computer-assisted learning” OR “digital humanities” OR “intelligent tutoring systems” OR “AI in education” OR “e-learning” OR “distance learning” OR “curriculum development” OR “didactics” OR “instructional technology”) AND TITLE-ABS-KEY (“Ancient Greek” OR “Classical Greek” OR “Attic Greek” OR “Archaic Greek” OR “Koine Greek” OR “Homeric Greek” OR “Old Greek” OR “Ancient Hellenic” OR “Pre-Modern Greek” OR “Historical Greek”) |

| Web of Science | TS = (“ChatGPT” OR “bert” OR “llama” OR “Transformer Models” OR “Generative AI” OR “Large Language Models” OR “LLMs” OR “Neural Language Models” OR “Autoregressive Models” OR “Foundation Models” OR “Deep Learning for NLP” OR “AI-generated Text” OR “Neural Machine Translation” OR “Language Models”) AND TS = (“pedagogy” OR “education” OR “learning” OR “teaching” OR “study” OR “school” OR “universities” OR “training” OR “academic institutions” OR “classroom” OR “computer-assisted learning” OR “digital humanities” OR “intelligent tutoring systems” OR “AI in education” OR “e-learning” OR “distance learning” OR “curriculum development” OR “didactics” OR “instructional technology”) AND TS = (“Ancient greek” OR “Classical Greek” OR “Attic Greek” OR “Archaic Greek” OR “Koine Greek” OR “Homeric Greek” OR “Old Greek” OR “Ancient Hellenic” OR “Pre-Modern Greek” OR “Historical Greek”) |

| IEEE Xplore | (“ChatGPT” OR “BERT” OR “LLaMA” OR “Transformer Models” OR “Generative AI” OR “Large Language Models” OR “LLMs” OR “Neural Language Models” OR “Autoregressive Models” OR “Foundation Models” OR “Deep Learning for NLP” OR “AI-generated Text” OR “Neural Machine Translation” OR “Language Models”) AND (pedagogy OR education OR learning OR teaching OR study OR school OR universities OR training OR “academic institutions” OR classroom OR “computer-assisted learning” OR “digital humanities” OR “intelligent tutoring systems” OR “AI in education” OR “e-learning” OR “distance learning” OR “curriculum development” OR didactics OR “instructional technology”) AND (“Ancient Greek” OR “Classical Greek” OR “Attic Greek” OR “Archaic Greek” OR “Koine Greek” OR “Homeric Greek” OR “Old Greek” OR “Ancient Hellenic” OR “Pre-Modern Greek” OR “Historical Greek”) |

| Study | Model/Approach | Task | Corpus/Dataset | Performance Metrics | Notes |
|---|---|---|---|---|---|
| Exploratory Studies | | | | | |
| Singh et al. [21] | BERT | Morphological Tagging | AGDT, PROIEL, Gorman | Accuracy ≈ 0.87–0.92 (Fine-grained), 0.97 (Coarse POS) | Exploratory |
| Keersmaekers and Mercelis [10] | Transformer | Morphological Tagging | GLAUx | Accuracy ≈ 0.96–0.97 | Exploratory |
| Swaelens et al. [23] | BERT/RoBERTa/ELECTRA | Morphological Tagging | Byzantine Epigrams | Accuracy ≈ 0.69 | Exploratory |
| Kostkan et al. [22] | OdyCy | POS Tagging, Lemmatization | UD Perseus, UD PROIEL | Accuracy ≈ 0.95–0.98 (POS), 0.83–0.94 (Lemma) | Exploratory |
| Lim and Park [11] | Multiview Learning | POS Tagging | UD CoNLL | Accuracy ≈ 0.98 | Exploratory |
| Beersmans et al. [25] | Transformer | NER | Ancient Greek texts | F1 ≈ 0.80 (Macro), F1 ≈ 0.87 (Person) | Exploratory |
| Gonzalez-Gallardo et al. [26,27] | ChatGPT | NER | Historical documents | F1 < 0.40 | Exploratory |
| Wannaz and Miyagawa [29] | GPT/Claude | Machine Translation | Ostraca (Coptic and Greek) | BLEU up to ≈ 39.6 | Exploratory |
| Rapacz and Smywiński-Pohl [30] | PhilTa/mT5 | Interlinear Translation | Ancient Greek corpus | BLEU up to ≈ 60 | Exploratory |
| Krahn et al. [36] | Distillation Model | Sentence Embeddings | Ancient Greek–English parallel corpora | Retrieval Acc. ≈ 0.92–0.97; STS Spearman ≈ 0.86 | Exploratory |
| Riemenschneider and Frank [31] | SPHILBERTA | Sentence Embeddings | Parallel corpora | Retrieval Acc. ≳ 0.96 | Exploratory |
| Picca and Pavlopoulos [35] | Multilingual BERT | Sentiment Analysis | Iliad dataset | MSE ≈ 0.10; MAE ≈ 0.19 | Exploratory |
| Pavlopoulos et al. [39] | GreekBERT | Sentiment Analysis | Iliad Book 1 | MSE ≈ 0.06; MAE ≈ 0.19 | Exploratory |
| Pavlopoulos et al. [35] | GreekBERT | Error Detection | Byzantine manuscripts | Average Precision ≈ 0.97 | Exploratory |
| Cowen-Breen et al. [12] | LOGION | Error Correction | Premodern Greek corpora | Top-1 correction accuracy ≈ 0.91 | Exploratory |
| Schmidt et al. [32] | GreBerta | Authorship Attribution | Greek rhetorical texts | F1 ≈ 0.90; Accuracy ≈ 0.90 | Exploratory |
| Umphrey et al. [33] | Claude Opus | Intertextuality Detection | Biblical Koine Greek texts | Expert qualitative evaluation | Exploratory |
| Kasapakis and Morgado [40] | Custom ChatGPT | Pedagogical Support | VR learning scenarios | Qualitative improvement in standardization | Exploratory |
| Partial Validation Studies | | | | | |
| Abbondanza [41] | Generative AI | Teaching Latin and Greek | High school courses | Surveys (positive outcomes) | Partial Validation |
| Ross [9] | ChatGPT | Pedagogical Applications | Secondary/University Latin and Greek curricula | Surveys, manual error analysis; mixed performance for Ancient Greek | Partial Validation |
| Ross and Baines [42] | AI Ethics Module | Pedagogy and Ethics | Undergraduate courses | Surveys (positive outcomes) | Partial Validation |
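
Several of the scores in the table above (accuracy, macro-averaged F1, MSE, MAE) follow standard definitions, which the sketch below spells out in plain Python. It is illustrative only: the function names and toy data are assumptions of this example and come from none of the reviewed studies. The F1 here is computed over per-item labels, whereas NER studies often score whole entity spans, so the sketch should not be read as any study's exact evaluation protocol.

```python
def accuracy(gold, pred):
    """Fraction of items whose predicted label equals the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred):
    """Unweighted mean of per-label F1 scores (each label counts equally)."""
    labels = sorted(set(gold) | set(pred))
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def mse(gold, pred):
    """Mean squared error between gold and predicted scores."""
    return sum((g - p) ** 2 for g, p in zip(gold, pred)) / len(gold)


def mae(gold, pred):
    """Mean absolute error between gold and predicted scores."""
    return sum(abs(g - p) for g, p in zip(gold, pred)) / len(gold)


# Toy data, invented for illustration only.
gold_tags = ["NOUN", "VERB", "NOUN", "ADJ"]
pred_tags = ["NOUN", "VERB", "ADJ", "ADJ"]
gold_scores = [0.5, 1.0]
pred_scores = [0.0, 1.0]

tag_accuracy = accuracy(gold_tags, pred_tags)
tag_macro_f1 = macro_f1(gold_tags, pred_tags)
score_mse = mse(gold_scores, pred_scores)
score_mae = mae(gold_scores, pred_scores)
```

Macro averaging weights rare labels as heavily as frequent ones, which is one reason a macro F1 can sit well below the score for a single frequent class such as Person.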

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tzanoulinou, D.; Triantafyllopoulos, L.; Verykios, V.S. Harnessing Language Models for Studying the Ancient Greek Language: A Systematic Review. Mach. Learn. Knowl. Extr. 2025, 7, 71. https://doi.org/10.3390/make7030071
