A Review of Methods for Unobtrusive Measurement of Work-Related Well-Being

Abstract

1. Introduction

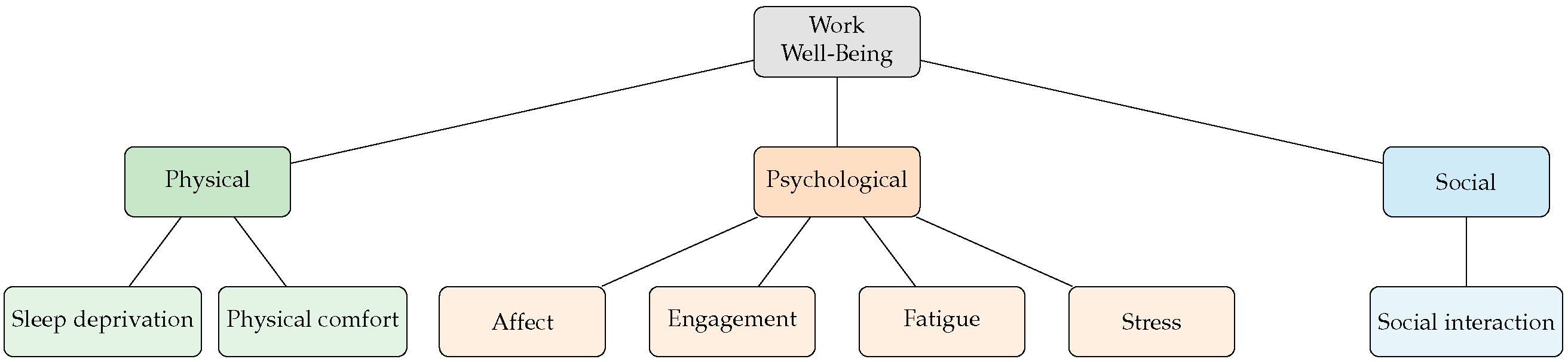

2. Conceptualizing Well-Being

2.1. Taxonomies of Well-Being

2.2. Proposed Concept of Well-Being

- Psychological well-being: As per Ryff [19], psychological well-being includes happiness (the experience of pleasure) and eudaimonic well-being (referring to flourishing, engagement, feeling a sense of purpose):

  - Affect: Affect is defined as the experiences of negative and positive affect. As per the circumplex model [20], each affect can be described as a combination of two independent dimensions: pleasure and arousal. Negative affect is a negative well-being indicator, whereas positive affect is a positive well-being indicator.

  - Engagement: Engagement is defined as a state where an employee has a high level of energy, is enthusiastic, and is immersed in their work. Engagement is composed of vigor, dedication, and absorption. Vigor refers to high energy and resilience levels, dedication refers to being strongly involved in one’s work, and absorption refers to being focused on and immersed in one’s work [21]. Engagement is a positive well-being indicator.

  - Fatigue: Fatigue is defined as a state that happens as a consequence of long periods of demanding cognitive activity [20]. Fatigue is a negative well-being indicator.

  - Stress: As per the APA dictionary [22], stress is recognized as the physiological or psychological response to stressors, which can be either internal or external. The physiological response can manifest in sweating, a dry mouth, accelerated speech, etc., and influences how people behave and feel. Stress is a negative well-being indicator.

- Physical well-being: As per Seligman [23], physical well-being extends beyond the absence of sickness, capturing an individual’s capability to realize their fullest wellness potential:

  - Physical comfort: As defined by Kölsch et al. [24], the physical comfort zone is composed of postures and motions that are voluntarily adopted, as opposed to those that are avoided. Physical comfort positively impacts well-being.

  - Sleep deprivation: Sleep deprivation occurs when there is either a total absence of sleep or a reduction in sleep duration [25]. Sleep deprivation negatively impacts well-being.

- Social well-being: As per Pressman [26], social well-being is experienced when various social needs, such as the feeling of support and belonging, are met:

  - Social interactions: A social interaction is defined as a process that entails mutual interaction or responses between two or more individuals [27]. There is evidence of the link between frequent and deeper social interactions and well-being [28], indicating that enriching interactions and social support can contribute to increased well-being.
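The concept above can be written down as a small data structure, which may help when tagging sensing studies by sub-dimension. The following is only a minimal sketch: the class name `SubDimension`, the helper functions, and the polarity labels (`"positive"`, `"negative"`, `"both"`) are illustrative assumptions, with the polarity of each entry taken from the definitions in the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubDimension:
    """One sub-dimension of well-being (names and labels are illustrative)."""
    name: str
    dimension: str  # "psychological", "physical", or "social"
    polarity: str   # "positive", "negative", or "both" (assumed label set)


# Polarity follows the definitions in the list above; affect is "both"
# because positive and negative affect point in opposite directions.
TAXONOMY = [
    SubDimension("affect", "psychological", "both"),
    SubDimension("engagement", "psychological", "positive"),
    SubDimension("fatigue", "psychological", "negative"),
    SubDimension("stress", "psychological", "negative"),
    SubDimension("physical comfort", "physical", "positive"),
    SubDimension("sleep deprivation", "physical", "negative"),
    SubDimension("social interactions", "social", "positive"),
]


def by_dimension(dimension):
    """Return the sub-dimension names that belong to one dimension."""
    return [s.name for s in TAXONOMY if s.dimension == dimension]


def with_polarity(polarity):
    """Return the sub-dimension names that carry the given polarity label."""
    return [s.name for s in TAXONOMY if s.polarity == polarity]


if __name__ == "__main__":
    print(by_dimension("psychological"))
    print(with_polarity("negative"))
```

Keeping polarity as an explicit field makes it harder to accidentally aggregate a negative indicator (such as fatigue) with a positive one (such as engagement) without first flipping its sign.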

3. Paper Selection Method

- Focus on one of the identified sub-dimensions of well-being.

- Focus on unobtrusive sensing methods.

4. Psychological Well-Being

4.1. Affect

4.2. Engagement

4.3. Fatigue

4.4. Stress

5. Physical Well-Being

5.1. Physical Comfort

5.2. Sleep Deprivation

6. Social Well-Being

Social Interactions

7. Privacy-Aware Sensing

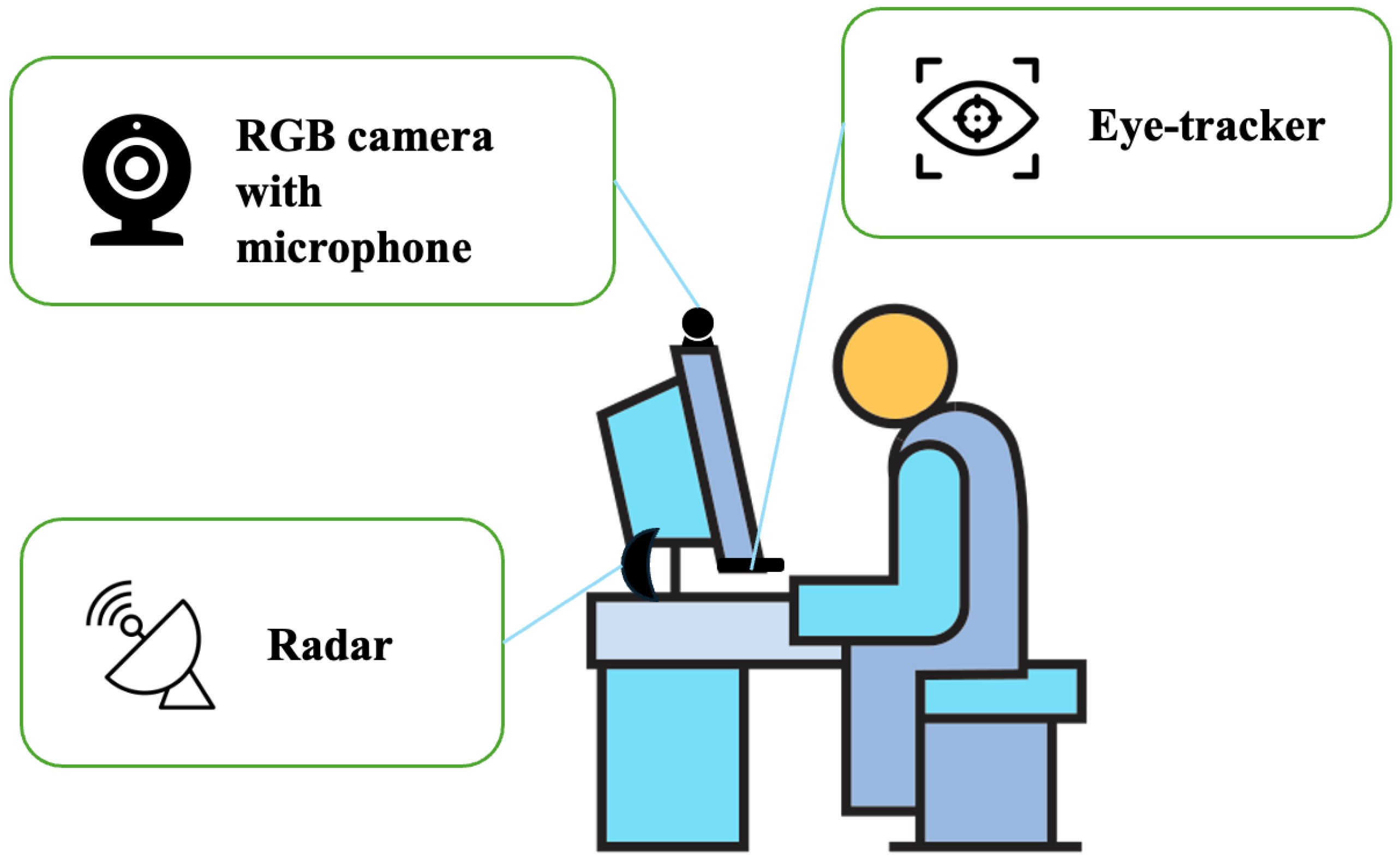

8. Proposed Setup

9. Discussion

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| APA | American Psychological Association |
| ML | machine learning |
| PERCLOS | percentage of eyelid closure |
| RGB | red, green, and blue |
| WHO | World Health Organization |

Appendix A

| Sub-Dimension | Keywords Used |
|---|---|
| Physical comfort | (“comfort” OR “physical comfort”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) |
| Sleep deprivation | (“sleepiness” OR “sleep deprivation”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) |
| Engagement | (“engagement”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) |
| Affect | (“emotions” OR “emotion” OR “affect” OR “affects”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) AND (“review” OR “literature review” OR “survey”) |
| Fatigue | (“fatigue”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) |
| Stress | (“stress”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) AND (“review” OR “literature review” OR “survey”) |
| Social interactions | (“social interaction” OR “social relations” OR “relationships”) AND ((“unobtrusive” OR “non-contact” OR “contact-free” OR “contact free”) AND (“sensors” OR “sensing”)) |
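All search strings in the table share the same structure: a topic clause, a fixed unobtrusive-sensing clause, and, for affect and stress only, an additional review filter. The sketch below shows how such strings could be assembled programmatically. The function names `any_of` and `build_query` are hypothetical, straight quotes replace the typographic quotes of the table, and no particular database syntax is implied.

```python
# Term lists copied from the table above; everything else is illustrative.
UNOBTRUSIVE_TERMS = ["unobtrusive", "non-contact", "contact-free", "contact free"]
SENSING_TERMS = ["sensors", "sensing"]
REVIEW_TERMS = ["review", "literature review", "survey"]


def any_of(terms):
    """Join quoted terms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(topic_terms, reviews_only=False):
    """Assemble one search string in the pattern used in the table."""
    sensing_clause = f"({any_of(UNOBTRUSIVE_TERMS)} AND {any_of(SENSING_TERMS)})"
    query = f"{any_of(topic_terms)} AND {sensing_clause}"
    if reviews_only:
        # The table applies this extra filter to affect and stress only.
        query += f" AND {any_of(REVIEW_TERMS)}"
    return query


if __name__ == "__main__":
    print(build_query(["fatigue"]))
    print(build_query(["stress"], reviews_only=True))
```

Generating the strings from shared term lists keeps the sensing clause identical across sub-dimensions, so any difference in hit counts can be attributed to the topic terms or the review filter.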

References

1. American Psychological Association. Well-being. In APA Dictionary of Psychology; American Psychological Association: Washington, DC, USA, 2018.
2. Wijngaards, I.; King, O.C.; Burger, M.J.; van Exel, J. Worker well-being: What it is, and how it should be measured. Appl. Res. Qual. Life 2021, 17, 795–832.
3. Grawitch, M.; Gottschalk, M.; Munz, D. The Path to a Healthy Workplace A Critical Review Linking Healthy Workplace Practices, Employee Well-being, and Organizational Improvements. Consult. Psychol. J. Pract. Res. 2006, 58, 129–147.
4. Isham, A.; Mair, S.; Jackson, T. Worker wellbeing and productivity in advanced economies: Re-examining the link. Ecol. Econ. 2021, 184, 106989.
5. Pradhan, R.K.; Hati, L. The Measurement of Employee Well-being: Development and Validation of a Scale. Glob. Bus. Rev. 2022, 23, 385–407.
6. Czerw, A. Diagnosing Well-Being in Work Context—Eudemonic Well-Being in the Workplace Questionnaire. Curr. Psychol. 2019, 38, 331–346.
7. Chari, R.; Sauter, S.L.; Petrun Sayers, E.L.; Huang, W.; Fisher, G.G.; Chang, C.C. Development of the National Institute for Occupational Safety and Health Worker Well-Being Questionnaire. J. Occup. Environ. Med. 2022, 64, 707–717.
8. Guo, Y.; Liu, X.; Peng, S.; Jiang, X.; Xu, K.; Chen, C.; Wang, Z.; Dai, C.; Chen, W. A review of wearable and unobtrusive sensing technologies for chronic disease management. Comput. Biol. Med. 2021, 129, 104163.
9. Slapničar, G.; Wang, W.; Luštrek, M. Feasibility of Remote Blood Pressure Estimation via Narrow-band Multi-wavelength Pulse Transit Time. ACM Trans. Sen. Netw. 2024, 20, 77.
10. Carucci, K.; Toyama, K. Making Well-being: Exploring the Role of Makerspaces in Long Term Care Facilities. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; CHI ’19. pp. 1–12.
11. Ola, O.; Harrington, B. Exploring Lightweight Practices to Support Students’ Well-being. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 2, Providence, RI, USA, 3–5 March 2022; SIGCSE 2022. pp. 1070–1071.
12. Wallace, B.; Larivière-Chartier, J.; Liu, H.; Sloan, T.; Goubran, R.; Knoefel, F. Frequency Response of a Novel IR Based Pressure Sensitive Mat for Well-being Assessment. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020; pp. 481–486.
13. World Health Organization. Constitution of the World Health Organization; World Health Organization: Geneva, Switzerland, 1995.
14. Dodge, R.; Daly, A.; Huyton, J.; Sanders, L. The challenge of defining wellbeing. Int. J. Wellbeing 2012, 2, 222–235.
15. Diener, E. Subjective well-being. Psychol. Bull. 1984, 95, 542.
16. Fisher, C. Conceptualizing and Measuring Wellbeing at Work. In Work and Wellbeing; Wiley Blackwell: Hoboken, NJ, USA, 2014; pp. 1–25.
17. Sonnentag, S. Dynamics of well-being. Annu. Rev. Organ. Psychol. Organ. Behav. 2015, 2, 261–293.
18. Ryff, C.D.; Keyes, C.L.M. The structure of psychological well-being revisited. J. Personal. Soc. Psychol. 1995, 69, 719.
19. Ryff, C.D. Happiness is everything, or is it? Explorations on the meaning of psychological well-being. J. Personal. Soc. Psychol. 1989, 57, 1069.
20. Russell, J. A Circumplex Model of Affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.
21. Bakker, A.B.; Demerouti, E. Towards a model of work engagement. Career Dev. Int. 2008, 13, 209–223.
22. American Psychological Association. Stress. In APA Dictionary of Psychology; American Psychological Association: Washington, DC, USA, 2018.
23. Seligman, M.E. Positive health. Appl. Psychol. 2008, 57, 3–18.
24. Kölsch, M.; Beall, A.C.; Turk, M. An objective measure for postural comfort. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications Sage CA: Los Angeles, CA, USA, 2003; Volume 47, pp. 725–728.
25. Orzeł-Gryglewska, J. Consequences of sleep deprivation. Int. J. Occup. Med. Environ. Health 2010, 23, 95–114.
26. Pressman, S.D.; Kraft, T.; Bowlin, S. Well-Being: Physical, Psychological, Social. In Encyclopedia of Behavioral Medicine; Gellman, M.D., Turner, J.R., Eds.; Springer: New York, NY, USA, 2013; pp. 2047–2052.
27. American Psychological Association. Social interaction. In APA Dictionary of Psychology; American Psychological Association: Washington, DC, USA, 2018.
28. Sun, J.; Harris, K.; Vazire, S. Is well-being associated with the quantity and quality of social interactions? J. Personal. Soc. Psychol. 2020, 119, 1478.
29. Adão Martins, N.R.; Annaheim, S.; Spengler, C.M.; Rossi, R.M. Fatigue Monitoring Through Wearables: A State-of-the-Art Review. Front. Physiol. 2021, 12, 790292.
30. Salama, W.; Abdou, A.H.; Mohamed, S.A.K.; Shehata, H.S. Impact of Work Stress and Job Burnout on Turnover Intentions among Hotel Employees. Int. J. Environ. Res. Public Health 2022, 19, 9724.
31. Chen, C.W.; Määttä, T.; Wong, K.B.Y.; Aghajan, H. A collaborative framework for ergonomic feedback using smart cameras. In Proceedings of the 2012 Sixth International Conference on Distributed Smart Cameras (ICDSC), Hong Kong, China, 30 October–2 November 2012; pp. 1–6.
32. Forrester, N. How better sleep can improve productivity. Nature 2023, 619, 659–661.
33. Dutton, J.E. Energize Your Workplace: How to Create and Sustain High-Quality Connections at Work; John Wiley & Sons: Hoboken, NJ, USA, 2003; Volume 5.
34. Braun, M.; Weber, F.; Alt, F. Affective Automotive User Interfaces–Reviewing the State of Driver Affect Research and Emotion Regulation in the Car. ACM Comput. Surv. 2021, 54, 137.
35. Gong, J.; Zhang, X.; Huang, Y.; Ren, J.; Zhang, Y. Robust Inertial Motion Tracking through Deep Sensor Fusion across Smart Earbuds and Smartphone. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 62.
36. Röddiger, T.; Clarke, C.; Breitling, P.; Schneegans, T.; Zhao, H.; Gellersen, H.; Beigl, M. Sensing with Earables: A Systematic Literature Review and Taxonomy of Phenomena. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 135.
37. Jones, C.; Jonsson, I.M. Using Paralinguistic Cues in Speech to Recognise Emotions in Older Car Drivers. In Affect and Emotion in Human-Computer Interaction: From Theory to Applications; Peter, C., Beale, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 229–240.
38. Huang, D.Y.; Seyed, T.; Li, L.; Gong, J.; Yao, Z.; Jiao, Y.; Chen, X.A.; Yang, X.D. Orecchio: Extending Body-Language through Actuated Static and Dynamic Auricular Postures. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, Berlin, Germany, 14 October 2018; UIST ’18. pp. 697–710.
39. Abdelrahman, Y.; Schmidt, A. Beyond the visible: Sensing with thermal imaging. Interactions 2018, 26, 76–78.
40. Nocera, A.; Senigagliesi, L.; Raimondi, M.; Ciattaglia, G.; Gambi, E. Machine learning in radar-based physiological signals sensing: A scoping review of the models, datasets and metrics. IEEE Access 2024, 12, 156082–156117.
41. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.
42. Stampf, A.; Colley, M.; Rukzio, E. Towards Implicit Interaction in Highly Automated Vehicles—A Systematic Literature Review. Proc. ACM Hum.-Comput. Interact. 2022, 6, 191.
43. Jones, C.; Sutherland, J. Acoustic Emotion Recognition for Affective Computer Gaming. In Affect and Emotion in Human-Computer Interaction: From Theory to Applications; Peter, C., Beale, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 209–219.
44. Dunne, R.; Morris, T.; Harper, S. A Survey of Ambient Intelligence. ACM Comput. Surv. 2021, 54, 73.
45. Soundariya, R.; Renuga, R. Eye movement based emotion recognition using electrooculography. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–5.
46. Zeng, K.; Liu, G. Emotion recognition based on millimeter wave radar. In Proceedings of the 2023 3rd International Conference on Bioinformatics and Intelligent Computing, Sanya, China, 10–12 February 2023; BIC ’23. pp. 232–236.
47. Ashwin, T.S.; Guddeti, R.M.R. Unobtrusive Behavioral Analysis of Students in Classroom Environment Using Non-Verbal Cues. IEEE Access 2019, 7, 150693–150709.
48. Gao, Y.; Jin, Y.; Choi, S.; Li, J.; Pan, J.; Shu, L.; Zhou, C.; Jin, Z. SonicFace: Tracking Facial Expressions Using a Commodity Microphone Array. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 156.
49. Whitehill, J.; Serpell, Z.; Lin, Y.C.; Foster, A.; Movellan, J.R. The Faces of Engagement: Automatic Recognition of Student Engagement from Facial Expressions. IEEE Trans. Affect. Comput. 2014, 5, 86–98.
50. Aslan, S.; Alyuz, N.; Tanriover, C.; Mete, S.E.; Okur, E.; D’Mello, S.K.; Arslan Esme, A. Investigating the Impact of a Real-time, Multimodal Student Engagement Analytics Technology in Authentic Classrooms. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; CHI ’19. pp. 1–12.
51. Verma, D.; Bhalla, S.; Sahnan, D.; Shukla, J.; Parnami, A. ExpressEar: Sensing Fine-Grained Facial Expressions with Earables. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 129.
52. Vedernikov, A.; Sun, Z.; Kykyri, V.L.; Pohjola, M.; Nokia, M.; Li, X. Analyzing Participants’ Engagement during Online Meetings Using Unsupervised Remote Photoplethysmography with Behavioral Features. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 17–18 June 2024; pp. 389–399.
53. Huynh, S.; Kim, S.; Ko, J.; Balan, R.K.; Lee, Y. EngageMon: Multi-Modal Engagement Sensing for Mobile Games. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 13.
54. Li, J.; Li, H.; Umer, W.; Wang, H.; Xing, X.; Zhao, S.; Hou, J. Identification and classification of construction equipment operators’ mental fatigue using wearable eye-tracking technology. Autom. Constr. 2020, 109, 103000.
55. Turetskaya, A.; Anishchenko, L.; Ivanisova, E. Non-Contact Detection of Respiratory Pattern Changes due to Mental Workload. In Proceedings of the 2020 Ural Symposium on Biomedical Engineering, Radioelectronics and Information Technology (USBEREIT), Yekaterinburg, Russia, 14–15 May 2020; pp. 125–127.
56. Zhou, L.; Fischer, E.; Brahms, C.M.; Granacher, U.; Arnrich, B. Using Transparent Neural Networks and Wearable Inertial Sensors to Generate Physiologically-Relevant Insights for Gait. In Proceedings of the 2022 21st IEEE International Conference on Machine Learning and Applications (ICMLA), Nassau, Bahamas, 12–14 December 2022; pp. 1274–1280.
57. Soleymanpour, R.; Shishavan, H.H.; Heo, J.S.; Kim, I. Novel Driver’s Drowsiness Detection System and its Evaluation in a Driving Simulator Environment. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 1204–1208.
58. Chen, H.; Han, X.; Hao, Z.; Yan, H.; Yang, J. Non-contact Monitoring of Fatigue Driving Using FMCW Millimeter Wave Radar. ACM Trans. Internet Things 2023, 5, 3.
59. Ice, G.H.; James, G.D. Conducting a field study of stress. In Measuring Stress in Humans; Ice, G.H., James, G.D., Eds.; Cambridge University Press: Cambridge, UK, 2006; Chapter 1; pp. 3–24.
60. Selye, H. The general adaptation syndrome and the diseases of adaptation. J. Clin. Endocrinol. Metab. 1946, 6, 117–230.
61. Alberdi, A.; Aztiria, A.; Basarab, A. Towards an automatic early stress recognition system for office environments based on multimodal measurements. J. Biomed. Inform. 2016, 59, 49–75.
62. Arsalan, A.; Anwar, S.M.; Majid, M. Mental Stress Detection using Data from Wearable and Non-wearable Sensors: A Review. arXiv 2023, arXiv:2202.03033.
63. Gedam, S.; Paul, S. A Review on Mental Stress Detection Using Wearable Sensors and Machine Learning Techniques. IEEE Access 2021, 9, 84045–84066.
64. Taskasaplidis, G.; Fotiadis, D.A.; Bamidis, P.D. Review of Stress Detection Methods Using Wearable Sensors. IEEE Access 2024, 12, 38219–38246.
65. Masri, G.; Al-Shargie, F.; Tariq, U.; Almughairbi, F.; Babiloni, F.; Al-Nashash, H. Mental Stress Assessment in the Workplace: A Review. IEEE Trans. Affect. Comput. 2024, 15, 958–976.
66. Wu, Y.; Ni, H.; Mao, C.; Han, J.; Xu, W. Non-intrusive Human Vital Sign Detection Using mmWave Sensing Technologies: A Review. ACM Trans. Sens. Netw. 2023, 20, 16.
67. Ha, U.; Madani, S.; Adib, F. WiStress: Contactless Stress Monitoring Using Wireless Signals. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 103.
68. Zakaria, C.; Balan, R.; Lee, Y. StressMon: Scalable Detection of Perceived Stress and Depression Using Passive Sensing of Changes in Work Routines and Group Interactions. Proc. ACM Hum.-Comput. Interact. 2019, 3, 37.
69. Nosakhare, E.; Picard, R. Probabilistic Latent Variable Modeling for Assessing Behavioral Influences on Well-Being. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019. KDD ’19.
70. Olsen, G.F.; Brilliant, S.S.; Primeaux, D.; Najarian, K. Signal processing and machine learning for real-time classification of ergonomic posture with unobtrusive on-body sensors; application in dental practice. In Proceedings of the 2009 ICME International Conference on Complex Medical Engineering, Tempe, AZ, USA, 9–11 April 2009; pp. 1–11.
71. Wac, M.; Kou, R.; Unlu, A.; Jenkinson, M.; Lin, W.; Roudaut, A. TAILOR: A Wearable Sleeve for Monitoring Repetitive Strain Injuries. In Proceedings of the CHI ’20: CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; CHI EA ’20. pp. 1–8.
72. Jan, M.T.; Hashemi, A.; Jang, J.; Yang, K.; Zhai, J.; Newman, D.; Tappen, R.; Furht, B. Non-intrusive Drowsiness Detection Techniques and Their Application in Detecting Early Dementia in Older Drivers. In Proceedings of the Future Technologies Conference (FTC) 2022, Volume 2; Arai, K., Ed.; Springer International Publishing: Berlin/Heidelberg, Germany, 2023; pp. 776–796.
73. Zhang, C.; Wu, X.; Zheng, X.; Yu, S. Driver drowsiness detection using multi-channel second order blind identifications. IEEE Access 2019, 7, 11829–11843.
74. Kundinger, T.; Sofra, N.; Riener, A. Assessment of the Potential of Wrist-Worn Wearable Sensors for Driver Drowsiness Detection. Sensors 2020, 20, 1029.
75. Yamamoto, K.; Toyoda, K.; Ohtsuki, T. Doppler Sensor-Based Blink Duration Estimation by Analysis of Eyelids Closing and Opening Behavior on Spectrogram. IEEE Access 2019, 7, 42726–42734.
76. Shah, D.; Upasini, A.; Sasidhar, K. Findings from an experimental study of student behavioral patterns using smartphone sensors. In Proceedings of the 2020 International Conference on COMmunication Systems & NETworkS (COMSNETS), Bengaluru, India, 7–11 January 2020; pp. 768–772.
77. Jo, E.; Bang, H.; Ryu, M.; Sung, E.J.; Leem, S.; Hong, H. MAMAS: Supporting Parent–Child Mealtime Interactions Using Automated Tracking and Speech Recognition. Proc. ACM Hum.-Comput. Interact. 2020, 4, 66.
78. Bi, C.; Xing, G.; Hao, T.; Huh-Yoo, J.; Peng, W.; Ma, M.; Chang, X. FamilyLog: Monitoring Family Mealtime Activities by Mobile Devices. IEEE Trans. Mob. Comput. 2019, 19, 1818–1830.
79. Tan, E.T.S.; Rogers, K.; Nacke, L.E.; Drachen, A.; Wade, A. Communication Sequences Indicate Team Cohesion: A Mixed-Methods Study of Ad Hoc League of Legends Teams. Proc. ACM Hum.-Comput. Interact. 2022, 6, 225.
80. Sefidgar, Y.S.; Seo, W.; Kuehn, K.S.; Althoff, T.; Browning, A.; Riskin, E.; Nurius, P.S.; Dey, A.K.; Mankoff, J. Passively-sensed Behavioral Correlates of Discrimination Events in College Students. Proc. ACM Hum.-Comput. Interact. 2019, 3, 114.
81. Maxhuni, A.; Hernandez-Leal, P.; Morales, E.F.; Sucar, L.E.; Osmani, V.; Mayora, O. Unobtrusive Stress Assessment Using Smartphones. IEEE Trans. Mob. Comput. 2020, 20, 2313–2325.
82. Langheinrich, M. Privacy by design—Principles of privacy-aware ubiquitous systems. In Proceedings of the International Conference on Ubiquitous Computing; Springer: Berlin/Heidelberg, Germany, 2001; pp. 273–291.
83. Du, W.; Li, A.; Zhou, P.; Niu, B.; Wu, D. Privacyeye: A privacy-preserving and computationally efficient deep learning-based mobile video analytics system. IEEE Trans. Mob. Comput. 2021, 21, 3263–3279.
84. Hoyle, R.; Templeman, R.; Anthony, D.; Crandall, D.; Kapadia, A. Sensitive lifelogs: A privacy analysis of photos from wearable cameras. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 1645–1648.
85. Korayem, M.; Templeman, R.; Chen, D.; Crandall, D.; Kapadia, A. Enhancing lifelogging privacy by detecting screens. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 4309–4314.
86. Templeman, R.; Korayem, M.; Crandall, D.J.; Kapadia, A. PlaceAvoider: Steering First-Person Cameras away from Sensitive Spaces. In Proceedings of the NDSS, Citeseer, 2014. San Diego, CA, USA, 23–26 February 2014; Volume 14, pp. 23–26.
87. Kumar, S.; Nguyen, L.T.; Zeng, M.; Liu, K.; Zhang, J. Sound shredding: Privacy preserved audio sensing. In Proceedings of the 16th International Workshop on Mobile Computing Systems and Applications, Santa Fe, NM, USA, 12–13 February 2015; pp. 135–140.
88. Dwork, C. Differential privacy. In Proceedings of the International Colloquium on Automata, Languages, and Programming; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–12.
89. Dedovic, K.; Rexroth, M.; Wolff, E.; Duchesne, A.; Scherling, C.; Beaudry, T.; Lue, S.D.; Lord, C.; Engert, V.; Pruessner, J.C. Neural correlates of processing stressful information: An event-related fMRI study. Brain Res. 2009, 1293, 49–60.
90. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.
91. Thieme, A.; Belgrave, D.; Doherty, G. Machine Learning in Mental Health: A Systematic Review of the HCI Literature to Support the Development of Effective and Implementable ML Systems. ACM Trans. Comput.-Hum. Interact. 2020, 27, 34.
92. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.
93. VanderWeele, T.J. On the promotion of human flourishing. Proc. Natl. Acad. Sci. USA 2017, 114, 8148–8156.
94. Menniti, M.; Laganà, F.; Oliva, G.; Bianco, M.; Fiorillo, A.S.; Pullano, S.A. Development of Non-Invasive Ventilator for Homecare and Patient Monitoring System. Electronics 2024, 13, 790.

| Sub-Dimension | No. of Articles After the Keyword Search | No. of Articles After the Screening |
|---|---|---|
| Affect | 55 | 6 |
| Engagement | 98 | 7 |
| Fatigue | 112 | 2 |
| Stress | 32 | 3 |
| Physical comfort | 176 | 3 |
| Sleep deprivation | 34 | 8 |
| Social interactions | 162 | 6 |
| Sum | 644 | 35 |
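The share of articles that survived screening differs considerably between sub-dimensions. The sketch below computes that share from the rows of the table above; the variable and function names are illustrative. It works from the individual rows only, because the keyword-search counts as printed add up to 669 rather than the 644 given in the Sum row, whereas the screening counts do add up to 35.

```python
# (articles after keyword search, articles after screening), copied per row.
COUNTS = {
    "Affect": (55, 6),
    "Engagement": (98, 7),
    "Fatigue": (112, 2),
    "Stress": (32, 3),
    "Physical comfort": (176, 3),
    "Sleep deprivation": (34, 8),
    "Social interactions": (162, 6),
}


def retention_rates(counts):
    """Fraction of keyword-search hits kept after screening, per sub-dimension."""
    return {name: kept / found for name, (found, kept) in counts.items()}


RATES = retention_rates(COUNTS)
TOTAL_SCREENED = sum(kept for _found, kept in COUNTS.values())

if __name__ == "__main__":
    for name, rate in sorted(RATES.items(), key=lambda item: item[1], reverse=True):
        print(f"{name:<20} {rate:6.1%}")
    print("Articles after screening:", TOTAL_SCREENED)
```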

| Sub-Dimension | Behavioral Marker | Sensors | Relevant Literature |
|---|---|---|---|
| Affect | Facial expressions | RGB camera, microphone | [34,35] |
| | Speech | Microphone | [34,37] |
| | Auricular positions | RGB camera | [38] |
| | Facial temperature changes | Thermal camera | [39] |
| | Eye movement and position | RGB camera, EOG signals | [45] |
| Engagement | Facial expressions | RGB camera, microphone array | [47,48,49,50,51,52] |
| | Posture | RGB camera, microphone array | [47,48] |
| | Hand gestures | RGB camera | [47] |
| | Upper-body motion | Depth camera | [53] |
| | Gaze tracking and direction | RGB camera | [52] |
| | Head rotation | RGB camera | [52] |
| Fatigue | Eye movement | Eye tracker | [54] |
| | Respiratory pattern | Radar | [55] |
| Stress | Body movements | Millimeter-wave sensor | [67] |
| | Activity information | WiFi-based localization system | [68] |
| | Communication patterns | Smartphone | [69] |
| | Phone usage | Smartphone | [69] |
| | Location | Smartphone | [69] |
| Physical comfort | Posture | Inclinometer, RGB camera | [31,70] |
| | Hand and elbow movement | Wearable sleeve | [71] |
| Sleep deprivation | Eye blinking | RGB camera | [72,73,74] |
| | Distraction | RGB camera | [72] |
| | Yawning | RGB camera | [57,72,73,74] |
| | Head movement | RGB camera | [57,72] |
| | Blink duration | Doppler sensor | [75] |
| | Micro-sleep events | RGB camera | [74] |
| | Nodding | RGB camera | [74] |
| Social interactions | Communication frequency | Microphone | [79] |
| | Category frequency | Microphone | [79] |
| | Spoken conversations | Microphone | [77,78] |
| | Duration of interaction | Microphone | [76] |
| | Number and duration of phone calls | Smartphone | [80,81] |
| | Number of SMSs | Smartphone | [81] |
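One way to read the table above is to invert it and ask which sub-dimensions each sensor can contribute to, which is useful when choosing a small sensor set. The following sketch does this for the rows as listed. The names `ROWS`, `sensor_coverage`, and `RANKED` are illustrative, sensor names are lower-cased so that "Microphone" and "microphone" are counted together, and "microphone array" is deliberately kept as a separate sensor.

```python
from collections import defaultdict

# (sub-dimension, behavioral marker, sensors) copied from the table above.
ROWS = [
    ("Affect", "Facial expressions", ["RGB camera", "microphone"]),
    ("Affect", "Speech", ["Microphone"]),
    ("Affect", "Auricular positions", ["RGB camera"]),
    ("Affect", "Facial temperature changes", ["Thermal camera"]),
    ("Affect", "Eye movement and position", ["RGB camera", "EOG signals"]),
    ("Engagement", "Facial expressions", ["RGB camera", "microphone array"]),
    ("Engagement", "Posture", ["RGB camera", "microphone array"]),
    ("Engagement", "Hand gestures", ["RGB camera"]),
    ("Engagement", "Upper-body motion", ["Depth camera"]),
    ("Engagement", "Gaze tracking and direction", ["RGB camera"]),
    ("Engagement", "Head rotation", ["RGB camera"]),
    ("Fatigue", "Eye movement", ["Eye tracker"]),
    ("Fatigue", "Respiratory pattern", ["Radar"]),
    ("Stress", "Body movements", ["Millimeter-wave sensor"]),
    ("Stress", "Activity information", ["WiFi-based localization system"]),
    ("Stress", "Communication patterns", ["Smartphone"]),
    ("Stress", "Phone usage", ["Smartphone"]),
    ("Stress", "Location", ["Smartphone"]),
    ("Physical comfort", "Posture", ["Inclinometer", "RGB camera"]),
    ("Physical comfort", "Hand and elbow movement", ["Wearable sleeve"]),
    ("Sleep deprivation", "Eye blinking", ["RGB camera"]),
    ("Sleep deprivation", "Distraction", ["RGB camera"]),
    ("Sleep deprivation", "Yawning", ["RGB camera"]),
    ("Sleep deprivation", "Head movement", ["RGB camera"]),
    ("Sleep deprivation", "Blink duration", ["Doppler sensor"]),
    ("Sleep deprivation", "Micro-sleep events", ["RGB camera"]),
    ("Sleep deprivation", "Nodding", ["RGB camera"]),
    ("Social interactions", "Communication frequency", ["Microphone"]),
    ("Social interactions", "Category frequency", ["Microphone"]),
    ("Social interactions", "Spoken conversations", ["Microphone"]),
    ("Social interactions", "Duration of interaction", ["Microphone"]),
    ("Social interactions", "Number and duration of phone calls", ["Smartphone"]),
    ("Social interactions", "Number of SMSs", ["Smartphone"]),
]


def sensor_coverage(rows):
    """Map each sensor (lower-cased) to the set of sub-dimensions it appears in."""
    coverage = defaultdict(set)
    for sub_dimension, _marker, sensors in rows:
        for sensor in sensors:
            coverage[sensor.lower()].add(sub_dimension)
    return dict(coverage)


COVERAGE = sensor_coverage(ROWS)
# Sensors ordered by how many sub-dimensions they cover, ties broken by name.
RANKED = sorted(COVERAGE.items(), key=lambda item: (-len(item[1]), item[0]))

if __name__ == "__main__":
    for sensor, sub_dimensions in RANKED:
        print(f"{sensor:<32} {len(sub_dimensions)}  {sorted(sub_dimensions)}")
```

Under these assumptions the RGB camera covers the most sub-dimensions in the table (affect, engagement, physical comfort, and sleep deprivation), followed by the microphone and the smartphone with two each.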

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anžur, Z.; Žinkovič, K.; Lukan, J.; Barbiero, P.; Slapničar, G.; Li, M.; Gjoreski, M.; Debus, M.E.; Trojer, S.; Luštrek, M.; et al. A Review of Methods for Unobtrusive Measurement of Work-Related Well-Being. Mach. Learn. Knowl. Extr. 2025, 7, 62. https://doi.org/10.3390/make7030062


Anžur, Z., Žinkovič, K., Lukan, J., Barbiero, P., Slapničar, G., Li, M., Gjoreski, M., Debus, M. E., Trojer, S., Luštrek, M., & Langheinrich, M. (2025). A Review of Methods for Unobtrusive Measurement of Work-Related Well-Being. Machine Learning and Knowledge Extraction, 7(3), 62. https://doi.org/10.3390/make7030062