Abstract

In vitro fertilization (IVF) is a well-established and efficient assisted reproductive technology (ART). However, it requires a series of costly and non-trivial procedures, and the success rate still needs improvement. Thus, increasing the success rate, simplifying the process, and reducing costs are all essential challenges of IVF. These can be addressed by integrating artificial intelligence techniques, like deep learning (DL), with several aspects of the IVF process. DL techniques can help extract important features from the data, support decision making, and perform several other tasks, as architectures can be adapted to different problems. The emergence of AI in the medical field has seen a rise in DL-supported tools for embryo selection. In this work, recent advances in the use of AI and DL-based embryo selection for IVF are reviewed. The different architectures that have been considered so far for each task are presented. Furthermore, future challenges for artificial intelligence-based ARTs are outlined.

1. Introduction

1.1. In Vitro Fertilization

Assisted reproductive technology (ART) is a term used for fertility treatments that handle eggs or embryos [1]. It encompasses techniques used to extract eggs from ovaries, inject them with sperm in a laboratory environment, and place them in the uterus of the treated woman for implantation to occur [2]. According to the CDC, about 2.3% of all children born in the U.S. every year are conceived using ART [3]. In the U.S., around 82% of the clinical pregnancies from ART cycles started in 2021 resulted in live birth delivery [4].

The most widely used ART technique is in vitro fertilization (IVF). It involves several steps: medication for ovarian stimulation, egg and sperm retrieval, egg fertilization, embryo transfer, and finally live birth delivery. IVF has a variable success rate, with many factors affecting the outcome, age being among the most important. Medical professionals are therefore constantly trying to improve every aspect of the IVF process, in a collective effort to raise the chances of success for patients and also to reduce costs, lower the number of visits required, and improve the overall patient experience. This is where artificial intelligence comes in as a valuable support tool, as discussed next.

1.2. Artificial Intelligence in ART

Artificial intelligence, through machine learning (ML) and deep learning (DL), has steadily evolved over the past 20 years to be an effective decision-support tool in the medical sciences. Many topics related to obstetrics and gynecology have benefited from the use of AI, such as fetal weight estimation [5], placental volume estimation [6], electronic fetal monitoring [7], and more [8]. In IVF, AI algorithms can be used as non-invasive tools [9] to evaluate embryo development and make predictions [10,11].

For the important task of embryo selection, AI algorithms take advantage of the developments in Computer Vision (CV) and image processing, to process embryo data gathered from patients, like time-lapse [12,13] or static images in grayscale or Red–Green–Blue (RGB) format, and perform tasks like embryo component segmentation, grading, live birth prediction, and more. Usually, the embryo data are combined with the patient’s clinical data to further improve predictions. In addition to the direct task of embryo selection, AI can also provide assistance for several aspects of the IVF cycle, like strategy selection, ovarian stimulation, and even quality assurance for the clinic. Of course, due to the interdisciplinarity of the topic, the development of such algorithms requires the cooperation of clinical personnel, medical practitioners, embryologists, biologists, physicists, and computer scientists.

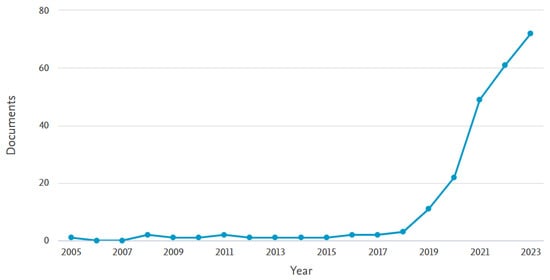

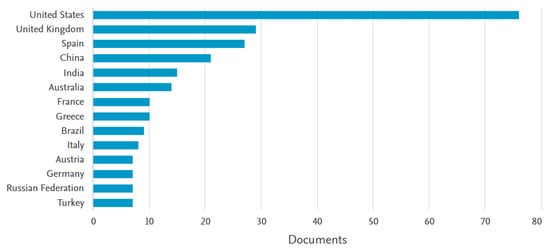

It is clear from recent developments in the field that AI can bring significant positive changes to IVF if developed properly. This is why a growing number of research groups are focusing on this topic, which has gained considerable attention in recent years. Indicatively, Figure 1 depicts the number of publications in the area of AI-assisted ART over the last 20 years. The data were extracted from Scopus, using the keyword search “artificial intelligence” AND “in-vitro fertilization” in the title, abstract, and keywords. There are 233 results published from 2005 to 2023 with both keywords. The graph shows a very clear upward trend: the earlier years saw few contributions to this field, followed by a very steep increase over the last 5 years. This is indicative of the emerging nature of the field of AI, and specifically DL, in IVF. As seen in Figure 2, the highest contributor among these publications is the United States with more than 70 articles, followed by the United Kingdom, Spain, China, India, and Australia; countries with six or fewer contributions are not shown.

Figure 1.

Scopus results for publications with the keyword search “artificial intelligence” AND “in-vitro fertilization” in the title, abstract, and keywords, for the years 2005–2023.

Figure 2.

Scopus results for contributing countries, with the keyword search “artificial intelligence” AND “in-vitro fertilization” in the title, abstract, and keywords, for the years 2005–2023.

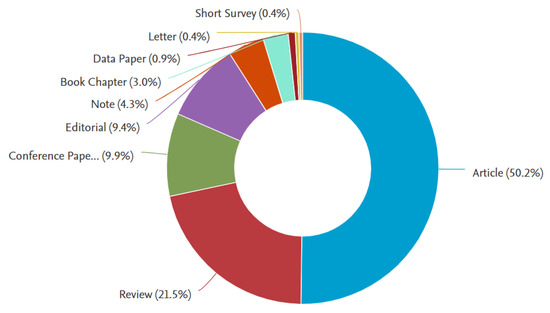

Regarding the types of contribution, Figure 3 shows that, as expected, journal articles account for the highest percentage (50%), with conference papers at 10%. Notably, there is also a high percentage of review articles (21%). This is understandable because the field is highly interdisciplinary, and review contributions serve as guidance for new researchers joining from different backgrounds.

Figure 3.

Scopus results for paper publication types, with the keyword search “artificial intelligence” AND “in-vitro fertilization” in the title, abstract, and keywords, for the years 2005–2023.

1.3. Motivation and Contributions

Motivated by the increase in the use of AI-assisted applications in ART, this work provides a review of the latest advancements in IVF. The purpose of this review is to serve as a guide for all researchers in the field, providing a roadmap of current developments and identifying future challenges. Specifically, its contributions can be identified as follows:

- The different DL architectures are briefly outlined first.

- Several tasks are reviewed that cover IVF applications which can be addressed using AI techniques.

- An emphasis is given to more recent works, from 2021 onward.

- Emphasis is also given to DL techniques, as they constitute the state of the art in AI and ML methodologies.

- Future research directions and challenges are discussed.

Thus, this work consists of three parts: a brief outline of ML techniques, an extensive review of recent developments in AI-assisted IVF, and a discussion on the future of the field.

2. Review Methodology

Starting this review, it is important to define the conditions for choosing the works to be covered. The works included in this review were selected following the criteria below:

- The work should be published in a peer-reviewed scientific journal, presented at an international conference and included in its proceedings, or published as a book chapter in a collected volume. This ensures that the works have already undergone peer review.

- The publications are in English.

- Publications should have a digital object identifier (DOI).

- The works should be listed on an indexing service like Google Scholar, Scopus, or Web of Science.

The literature search was performed on Google Scholar and Scopus, using the keywords "artificial intelligence" and "in-vitro fertilization". The search was then enriched by cross-checking the citations of the works found in this way.

Concerning notation and abbreviations, scalars are denoted by lowercase letters, matrices by bold capital letters, and vectors with lowercase bold letters. Math operators are denoted by capital letters. Abbreviations can be found in the Table at the end.

The rest of the work is structured as follows. Section 3 provides a brief overview of AI methodologies. Section 4 reviews the recent developments in AI-assisted IVF, covering different topics. Section 5 discusses the open challenges in the future of the field. Finally, Section 6 concludes the work, providing some final remarks.

3. Overview of AI Methodologies

In this section, the most common ML and DL architectures are briefly presented. Interested readers can find details in the respective references for each model. Readers can also refer to recent reviews in healthcare and medicine using reinforcement learning [14], federated learning [15], and self-supervised learning [16].

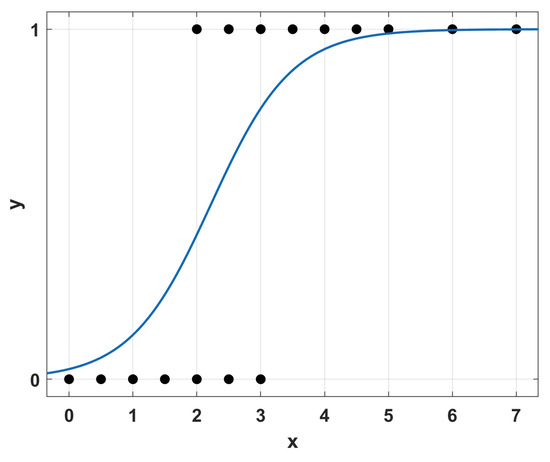

3.1. Regression Learning

Regression techniques encompass a class of statistical approaches to find the best rule that connects a series of input variables to one or more outputs; see Figure 4. Its simplest form is linear regression, where a linear rule between the input(s) and output(s) is considered, but several more exist, like logistic regression.

Figure 4.

Simple example of regression analysis.
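As a minimal sketch of the simplest case described above, the following fits a one-input linear rule by ordinary least squares. The data points are made up for illustration, and the function name `fit_line` is hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Illustrative data lying exactly on y = 2x + 1 (not taken from any study).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(xs, ys)
```

With noisy data the same formulas return the line that minimizes the sum of squared residuals.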

3.2. Decision Tree Learning

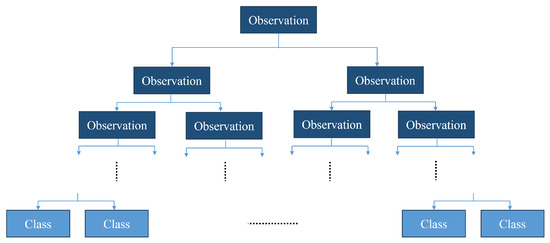

Decision trees are based on the building of hierarchical graphs to categorize data based on a series of observations; see Figure 5. A tree can consist of multiple nodes, each divided into child nodes, based on the result of an observation. The end nodes (leaves) represent the end classes.

Figure 5.

Structure of a decision tree.
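To illustrate how a single node can be divided into child nodes, the sketch below searches for the threshold on one feature that gives the purest children. The Gini impurity is assumed here as the splitting criterion (one common choice, not prescribed by the text), and the data and function names are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return the threshold on a single feature that minimizes the
    weighted Gini impurity of the two child nodes."""
    best_threshold, best_score = None, float("inf")
    for t in sorted(set(values)):
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        if not left or not right:
            continue  # a split must produce two non-empty children
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = t, score
    return best_threshold, best_score


# Illustrative one-feature data (made up): two classes separable at 3.
values = [1, 2, 3, 6, 7, 8]
labels = ["a", "a", "a", "b", "b", "b"]
threshold, impurity = best_split(values, labels)
```

A full tree repeats this search recursively on each child until the leaves are pure or a depth limit is reached.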

3.3. Artificial Neural Networks

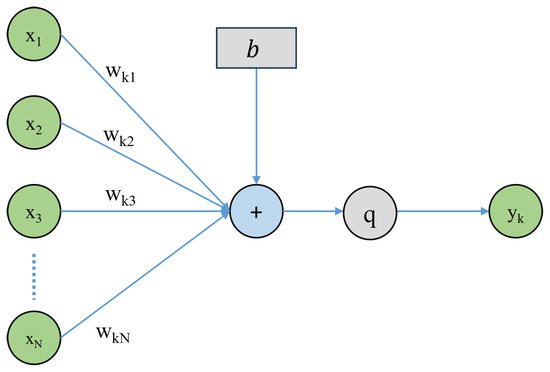

The central part of any neural network (NN) architecture is a simply structured computation unit, termed the artificial neuron. By combining multiple such artificial neurons in sequential groups, called layers, an NN is formed. In this network, the $k$-th neuron takes as an input an $N$-dimensional vector $\mathbf{x} = [x_1, x_2, \ldots, x_N]^T$, and computes an output $y_k$ as follows:

$$y_k = q\left(\sum_{i=1}^{N} w_{ki} x_i + b\right)$$

Here, the scalars $w_{ki}$ are the weights of the neuron, and $b$ is called bias. The weights of all neurons can be combined in a weight matrix $\mathbf{W}$. The function $q$ is called the activation function, as a reference to the activation of biological neurons. Figure 6 shows a visual representation of the above operations that form the structure of the neuron.

Figure 6.

Artificial neuron architecture.
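The weighted sum, bias, and activation that make up a single neuron can be sketched in a few lines. The logistic (sigmoid) function is used only as an example activation, and the input and weight values are illustrative assumptions.

```python
import math


def neuron_output(x, w, b, q):
    """Output of a single artificial neuron: q(sum_i w_i * x_i + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return q(z)


def sigmoid(z):
    """Logistic activation, used here only as an example choice of q."""
    return 1.0 / (1.0 + math.exp(-z))


x = [1.0, 2.0, 3.0]    # N = 3 inputs (illustrative values)
w = [0.5, -1.0, 0.5]   # weights of the neuron
b = 0.0                # bias
y = neuron_output(x, w, b, sigmoid)
```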

In ML, each model consists of multiple neurons and layers, connected in different ways based on the model type. Model training is performed by updating the weight values in each iteration. The mechanism implementing this process is stochastic gradient descent (SGD). The most efficient way to compute the required gradients is through error backpropagation, which is simply termed backpropagation. Backpropagation utilizes the principle of the chain rule of differentiation to compute the gradients through the entire network, starting from the end neuron and proceeding backwards toward the first neuron layer [17].
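The weight-update loop can be illustrated on the smallest possible case. The sketch below assumes a single linear neuron and a squared-error loss, so the chain rule has only one step; the learning rate, epoch count, and data are illustrative choices, not values from the literature.

```python
import random


def train_neuron(data, lr=0.05, epochs=500, seed=0):
    """Fit y = w * x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)       # "stochastic": one random sample at a time
        for x, target in samples:
            y = w * x + b          # forward pass
            error = y - target     # dL/dy for L = 0.5 * (y - target)^2
            # Chain rule: dL/dw = dL/dy * dy/dw and dL/db = dL/dy * dy/db
            grad_w = error * x
            grad_b = error * 1.0
            w -= lr * grad_w       # gradient descent update
            b -= lr * grad_b
    return w, b


# Illustrative noise-free data on y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(data)
```

In a multi-layer network the same chain-rule step is applied layer by layer, from the output back to the first layer.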

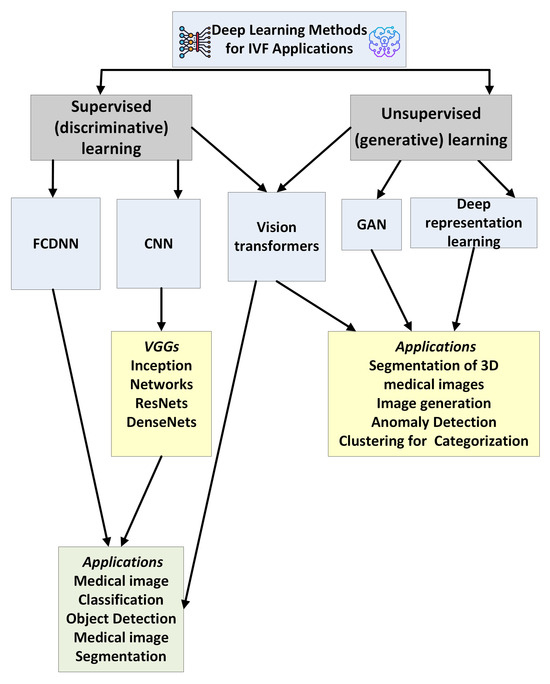

3.4. Deep Learning Methods

The DL techniques fall into two main types: DL networks for supervised or discriminative learning and DL networks for unsupervised or generative learning. Figure 7 provides a brief overview of DL methods for IVF applications. In the following, a brief description of the most common DL architectures is provided.

Figure 7.

A taxonomy of deep learning methods used in IVF applications.

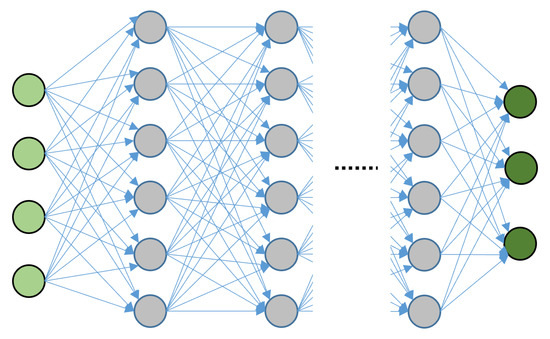

3.4.1. Fully Connected Deep Neural Networks

Fully Connected Deep Neural Networks (FCDNNs) constitute the most basic DL architecture. Here, the neurons in each layer are connected to all the neurons in the next layer, as shown in Figure 8. In FCDNN models, the information is processed in a forward manner, as there are no loops or recursion operations. Their simpler structure makes training more straightforward, but they still face challenges in Computer Vision (CV) problems. Due to the full connection between the layers, the number of parameters becomes high in deeper networks, resulting in inefficiencies and possibly overfitting. Moreover, FCDNNs are not suitable for exploiting the spatial correlation of image features. In other words, FCDNNs are not translation-invariant, which means that they can respond differently to a given input image and its shifted versions.

Figure 8.

Fully connected neural network. The light green nodes represent the inputs, the gray nodes are the hidden layers and the dark green nodes are the outputs.
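The growth in parameters caused by full connectivity can be made concrete with a short count. The layer sizes below are hypothetical and chosen only to show the order of magnitude for an image input.

```python
def fc_parameter_count(layer_sizes):
    """Number of trainable weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out   # weight matrix + one bias per neuron
    return total


# Hypothetical sizes: a flattened 224 x 224 grayscale image, one hidden
# layer of 1000 neurons, and 2 output classes.
sizes = [224 * 224, 1000, 2]
n_params = fc_parameter_count(sizes)
```

Under these assumptions a single hidden layer already needs about 50 million parameters, almost all of them in the first weight matrix.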

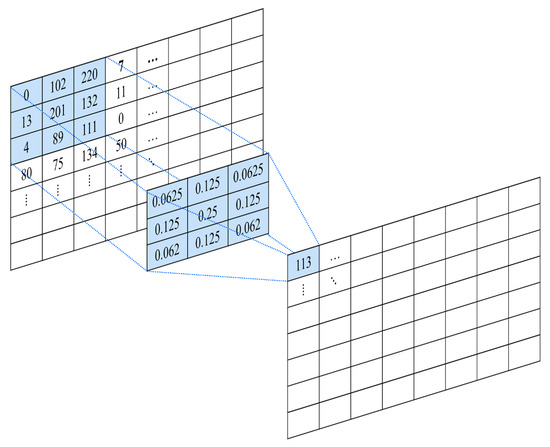

3.4.2. Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are especially suitable for CV applications due to their ability to process spatial data with a grid-like topology. As images and video frames are 2D data, where adjacent pixels are grouped to display information, CNNs are suitable for their processing. CNNs make use of the convolution operator instead of general matrix multiplication, which requires fewer trainable parameters. A visual representation of the convolution operation is shown in Figure 9. In contrast to FCDNNs, the layers are sparsely connected, allowing for deeper architectures.

Figure 9.

Example of convolution operation.
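A minimal sketch of the sliding-window operation is given below, assuming no padding and a stride of 1. As in most DL libraries, the kernel is not flipped, so strictly speaking this computes a cross-correlation. The image and kernel values are illustrative.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D sliding-window operation (no padding, stride 1).

    The kernel is applied without flipping, i.e. as a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            # Weighted sum over the receptive field anchored at (i, j)
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


image = [
    [1, 2, 3, 0],
    [4, 5, 6, 0],
    [7, 8, 9, 0],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d_valid(image, kernel)
```

The same four kernel weights are reused at every position, which is why the parameter count does not depend on the image size.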

A CNN model is built up by the following layers and operations:

- Input layer: The first layer of the network accepts the input data and, if required, transforms them in a format suitable for further processing. For example, RGB image data can be rearranged into multi-dimensional arrays.

- Convolution layers: The convolution layers are the distinct blocks of the CNN. They are used for feature extraction. In contrast to a fully connected layer, where each neuron receives input from all neurons in the previous layer, the neurons in the convolutional layer have a smaller receptive field. The receptive field indicates that every neuron receives input from only a restricted subset of the previous layer.

- Activation function: Most CNNs in the literature consider either a Rectified Linear Unit (ReLU) function or a variant of it. ReLU is defined as [17]: $\mathrm{ReLU}(x) = \max(0, x)$. A variant of ReLU that has been successfully considered in many CV problems is Leaky ReLU, defined as: $\mathrm{LeakyReLU}(x) = \max(\alpha x, x)$. The parameter $\alpha$ is usually taken as 0.01.

- Pooling layers: They are used to reduce the size of the incoming data by summarizing small groups of features using a computationally efficient method. For example, a max pooling layer will extract the maximum element from a feature region, like an image subregion, effectively reducing the feature data for the next processing step.

- Flattening: This operation reshapes the data into a 1D vector.

- Output layer: This is the end layer, which provides the model’s prediction.
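The activation, pooling, and flattening steps listed above can be sketched as follows. The sketch assumes a non-overlapping 2x2 max pooling window and uses the slope of 0.01 mentioned in the text for Leaky ReLU; the feature-map values are made up.

```python
def relu(x):
    """Rectified Linear Unit: passes positive values, zeroes the rest."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: a small slope alpha is kept for negative inputs."""
    return x if x > 0 else alpha * x


def max_pool_2x2(fmap):
    """Non-overlapping 2x2 max pooling; assumes even height and width."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]), 2)]
        for i in range(0, len(fmap), 2)
    ]


def flatten(fmap):
    """Reshape a 2D feature map into a 1D vector (row by row)."""
    return [v for row in fmap for v in row]


fmap = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.5, 0.0],
    [0.0, 1.0, -5.0, -6.0],
    [3.0, -1.0, -7.0, -8.0],
]
activated = [[relu(v) for v in row] for row in fmap]
pooled = max_pool_2x2(activated)
features = flatten(pooled)
```

Here the 4x4 feature map is reduced to four values, which would then be passed to the output layer.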

There are several different archetypes of CNN architectures that are used in practice. The most common are listed below. Of course, variations and, more importantly, combinations of these can be considered which combine layers from different architectures.

- Visual Geometry Group models (VGGs) have a depth ranging from 11 to 19 layers. They were initially proposed in order to demonstrate that deeper networks can outperform networks with fewer layers. Using a smaller size of convolutional kernels ($3 \times 3$), they can have fewer parameters and increased accuracy [18].

- Inception Networks mitigate the problem of overfitting by using modules consisting of multiple filters of varying sizes on the same level, effectively making the network ‘wider’. Here, fully connected layers are replaced by average pooling, and the problem of the vanishing gradient is mitigated by adding auxiliary classifiers to the intermediate layers. Several improvements have been developed, like InceptionV2, InceptionV3, and InceptionV4 [19].

- Xception is an architecture built on the InceptionV3 model. Specifically, it replaces the inception modules with depth-wise separable convolutions, that is, a 2D convolution that is independent for each channel, followed by a $1 \times 1$ point-wise convolution. This architecture outperforms InceptionV3 in several image recognition tasks. Its parameter set is also reduced, leading to a decrease in learning latency [20].

- Residual Networks (ResNets) resolve the problem of degradation that can appear in several deep CNN architectures. Their implementation allows deeper networks to be trained and perform better. This is possible through the residual learning technique. Here, instead of using parameter layers to learn the relation between inputs and outputs, similar to VGG, they are used to extract the residual between inputs and outputs [21].

- Densely Connected Convolutional Networks (DenseNets) are inspired by ResNets. They establish maximum flow of information between layers by directly connecting all layers that have matching feature-map sizes with each other. Therefore, DenseNets alleviate the vanishing-gradient problem and strengthen feature propagation and reuse. They also have a reduced set of parameters [22].
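The parameter savings mentioned for small kernels (VGG) and depth-wise separable convolutions (Xception) can be checked with simple arithmetic. The channel count of 64 is a hypothetical value, and biases are ignored for simplicity.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depth-wise k x k convolution per input channel, then 1 x 1 point-wise."""
    return k * k * c_in + c_in * c_out


C = 64  # hypothetical channel count, same for input and output

# VGG-style: three stacked 3x3 layers cover the same 7x7 receptive field
# as a single 7x7 layer, with fewer weights.
stacked_3x3 = 3 * conv_params(3, C, C)
single_7x7 = conv_params(7, C, C)

# Xception-style: depth-wise separable versus standard 3x3 convolution.
standard = conv_params(3, C, C)
separable = depthwise_separable_params(3, C, C)
```

Under these assumptions, the stacked 3x3 layers use roughly half the weights of the 7x7 layer, and the separable convolution uses roughly one eighth of the standard one.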

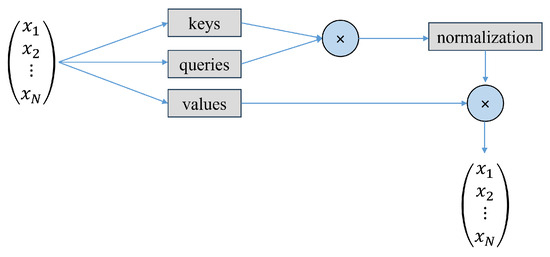

3.4.3. Attention-Based Models

Models based on attention only (Figure 10), that is, transformers [23], are widely used in the field of natural language processing (NLP) [24] and have been used effectively in CV problems [25]. In [25], emphasis is given to the analysis of the transformer model presented first, which is the basis for all the models developed afterwards.

Figure 10.

Self-attention mechanism.
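A minimal single-head sketch of the scaled dot-product self-attention introduced in [23] is given below. The projection matrices are set to the identity purely for illustration (in practice they are learned), and the token values and helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def softmax(row):
    m = max(row)                         # subtract max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: list of n token vectors; w_q, w_k, w_v: projection matrices.
    Returns the attended outputs and the n x n attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]   # each row sums to 1
    return matmul(weights, v), weights


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
```

Each output row is a weighted average of all value vectors, so every token can draw information from every other token in one step.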

The transformer architecture follows an encoder–decoder structure. The encoder block maps the sequence of inputs $(x_1, \ldots, x_n)$ to a sequence of vector representations $\mathbf{z} = (z_1, \ldots, z_n)$. The decoder then takes this vector representation, which is the output of the encoder, and computes a sequence of outputs $(y_1, \ldots, y_m)$.

Vision transformers (ViTs) use transformer modules for CV tasks. Each module consists of an encoder transformer block. The ViT divides the image into smaller subimages and transforms them linearly to obtain a patch embedding. The position embedding is then added to form the input to the transformer encoder. The accuracy of ViTs is similar to that of CNNs. Vision transformers can be used for both supervised and unsupervised tasks.
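The patch and position embedding steps can be sketched as follows. In an actual ViT the projection and position embeddings are learned; here they are filled with random numbers as stand-ins, and the image size, patch size, and embedding size are hypothetical.

```python
import random


def image_to_patches(image, patch):
    """Split an H x W image into non-overlapping patch x patch blocks,
    each flattened to a vector. Assumes H and W are multiples of `patch`."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[top + i][left + j]
                            for i in range(patch) for j in range(patch)])
    return patches


def embed(patches, projection, positions):
    """Linear projection of each patch plus its position embedding."""
    embedded = []
    for p, pos in zip(patches, positions):
        projected = [sum(pv * w for pv, w in zip(p, col))
                     for col in zip(*projection)]
        embedded.append([a + b for a, b in zip(projected, pos)])
    return embedded


rng = random.Random(0)
image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # toy 4x4 image
patches = image_to_patches(image, patch=2)   # 4 patches, each of length 4
d_model = 3                                  # hypothetical embedding size
# Random stand-ins for the learned projection and position embeddings.
projection = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(4)]
positions = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in patches]
tokens = embed(patches, projection, positions)
```

The resulting token sequence is what the transformer encoder receives as input.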

However, it must be noted that an important drawback of transformers is that they require large datasets to reach their maximum performance and compete with other state-of-the-art architectures. Moreover, current models can have more than a million parameters, making training and testing difficult and time-consuming.

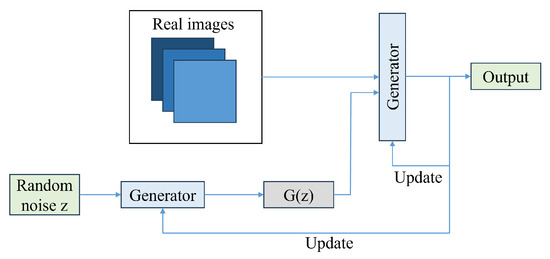

3.4.4. Generative Adversarial Networks

In the category of unsupervised DL models, generative models and specifically Generative Adversarial Networks (GANs) [26] (Figure 11) have found applications in medical imaging. Unsupervised methods try to identify patterns and structures in data that are not labeled [17]. For example, 3D-CNNs and K-means have been used in unsupervised segmentation of 3D medical images [27].

Figure 11.

GAN architecture.

To design a generative model, a dataset with samples drawn from a probability distribution P is required. The model must learn to represent an estimate of that distribution. The output can be an explicit estimation of a probability distribution Q, or the generation of samples from Q. GANs follow the second approach and employ the core idea of two adversarial entities, the generator block and the discriminator block. The input to the generator is random noise, and from this the generator tries to output sample data that appear to come from the original training data distribution. The discriminator then examines the data that are output from the generator and judges whether they are real or fake.
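The adversarial objective can be illustrated by computing the two losses for given discriminator outputs. The sketch assumes the binary cross-entropy formulation with the non-saturating generator loss from the original GAN work [26]; the probability values are hypothetical and no networks are actually trained.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated ones 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]
d_fake_early = [0.1, 0.2]   # generated samples are easily detected
d_fake_later = [0.5, 0.5]   # discriminator can no longer tell them apart

loss_d_early = discriminator_loss(d_real, d_fake_early)
loss_d_later = discriminator_loss(d_real, d_fake_later)
loss_g_early = generator_loss(d_fake_early)
loss_g_later = generator_loss(d_fake_later)
```

As the generated samples become harder to detect, the generator loss falls while the discriminator loss rises, which is the adversarial trade-off described above.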

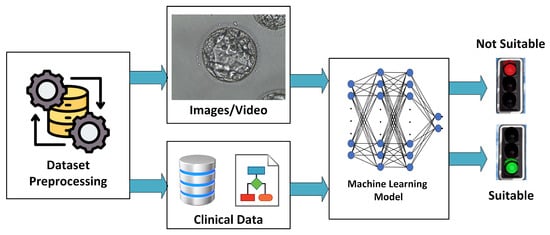

4. DL-Empowered Embryo Selection for IVF Application

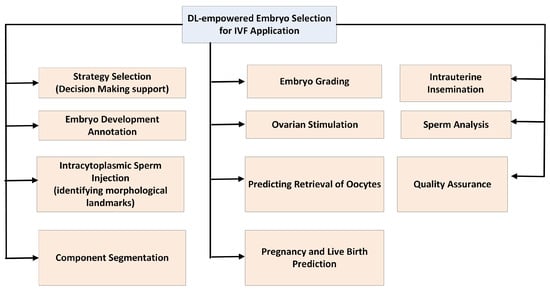

In this section, recent developments in several IVF tasks are reviewed. For each work, a brief outline is provided, with information on the models that were used, the main results, and the starting dataset information. Naturally, many of these works may include several more sub-problems and corresponding results, but reporting each of them would push this review to an unacceptable length. So, only the most important results are reported here. Interested readers are encouraged to look up each work for further information. Note also that some works may address problems that cover multiple tasks (for example, component segmentation and implantation prediction), but they are only reported in one subsection.

A general outline of an ML architecture used for an IVF task is shown in Figure 12. Starting from a given dataset, an initial preprocessing is performed to homogenize the data, discard unusable data, and possibly address any missing information in them. Data could be embryo images, clinical information, or both. These data are then used to train and validate an ML model, which can use either one or both of these types of data to make a decision. This decision could be a suggestion for an action to be taken, a grading, or a prediction. Figure 13 shows the IVF applications that we will discuss in the following sections. Brief descriptions of each are provided below.

- Strategy Selection: DL is used as a support tool in medical decision-making.

- Embryo Development Annotation: In this case, the researchers develop an automated annotation tool for human embryo development in time-lapse devices based on image analysis.

- Intracytoplasmic Sperm Injection: In this case, DL is being implemented in intracytoplasmic sperm injection (ICSI) procedures to improve the selection, analysis, and ultimately success rates of fertilization. This includes the creation of models that can be used to identify high-quality sperm, evaluate DNA fragmentation, and even monitor sperm movement during the procedure.

- Component Segmentation: Semantic segmentation of images in combination with an object detection technique can support the further processing of embryos for tasks like grading or outcome prediction.

- Embryo Grading: It involves a classification task in which embryo images are classified according to a specific grading system that evaluates the quality and developmental potential of embryos.

- Ovarian Stimulation: Ovarian stimulation is a critical stage in IVF technologies, requiring the formulation of numerous decisions regarding drug protocols, dosing, and timing that can be customized to the individual profile of each patient. DL has the potential to help fertility physicians recommend personalized treatment plans, optimize the number of retrieved oocytes, and improve patient outcomes by analyzing extensive datasets from previous IVF cycles.

- Predicting Retrieved Oocytes: It involves ML methods to predict the number of retrieved oocytes.

- Pregnancy and Live Birth Prediction: ML has been extensively employed to evaluate the prospective maternal risks during pregnancy and predict the mode of childbirth.

- Intrauterine Insemination (IUI): In this case, ML methods are applied for predicting clinical pregnancy outcomes from intrauterine insemination (IUI) and identifying significant factors affecting pregnancy.

- Sperm Analysis: ML has the potential to improve intracytoplasmic sperm injection by assisting clinicians in the objective selection of sperm. This is a classification task.

- Quality Assurance: DL is applied as an assistive quality assurance tool to identify perturbations in the embryo culture environment that may affect clinical outcomes.

Figure 12.

Outline of an ML architecture used in IVF.

Figure 13.

The IVF applications discussed in this review.
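The preprocessing and training/validation stages of the general outline in Figure 12 can be sketched as follows. This is a minimal illustration under stated assumptions: the record fields (`image_id`, `age`, `label`), the mean imputation strategy, and the 25% validation fraction are all hypothetical choices, and the records are toy values rather than patient data.

```python
import random


def preprocess(records, required=("image_id",)):
    """Discard records missing a required field and fill missing numeric
    fields with the mean of the observed values (simple mean imputation)."""
    usable = [dict(r) for r in records
              if all(r.get(k) is not None for k in required)]
    numeric_keys = {k for r in usable for k, v in r.items()
                    if isinstance(v, (int, float))}
    for key in sorted(numeric_keys):
        observed = [r[key] for r in usable if r.get(key) is not None]
        mean = sum(observed) / len(observed)
        for r in usable:
            if r.get(key) is None:
                r[key] = mean
    return usable


def train_validation_split(records, validation_fraction=0.25, seed=0):
    """Shuffle the records and hold out a fraction for validation."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Toy records with hypothetical fields (not real patient data).
records = [
    {"image_id": "e1", "age": 30, "label": 1},
    {"image_id": "e2", "age": None, "label": 0},   # missing clinical value
    {"image_id": None, "age": 41, "label": 1},     # unusable: no image
    {"image_id": "e3", "age": 36, "label": 0},
    {"image_id": "e4", "age": 33, "label": 1},
]
clean = preprocess(records)
train, validation = train_validation_split(clean)
```

The training subset would then be passed to the chosen ML model, with the validation subset held back to check its predictions.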

4.1. Reviews on the Topic of AI in IVF

Given the interdisciplinarity and complexity of the topic of AI in IVF, there have been several reviews, as well as discussion articles on the future promise of the field [28]. In each of these reviews, some common conclusions are drawn regarding the challenges in the field. In addition to these similarities, the views on which contributions of AI to IVF and which future challenges are the most important vary, depending on the backgrounds, experience, and specializations of the authors of each paper.

The work [29] reviews the use of AI in IVF and healthcare in general, giving examples of successful and unsuccessful commercial implementations.

The work [30] discusses several aspects of improving IVF outcomes from the introduction of new technologies into laboratories. With respect to the use of AI, the potential impacts include increased fertilization rates as a result of accurate identification of the most viable spermatozoa, decreased time to pregnancy, consistency in embryo grading, and improved laboratory quality management. For all tasks, embryologists will always be an integral part of the lab and help ensure the success of ART.

In [31], the authors review the progress made towards automation in ART. Some of the topics covered are data management, patient treatment pathways, trans-vaginal oocyte retrieval, oocyte selection, semen analysis and preparation, insemination and Intra-Cytoplasmic Sperm Injection (ICSI), embryo culture and selection, Preimplantation Genetic Testing (PGT) and Metabolomics, endometrial evaluation for personalized embryo transfer, cryopreservation, and cryostorage. For the future, the authors speculate whether the role of the embryologist will switch from repetitive manual tasks to precise critical thinking for decision-making.

In [32], the authors review the use of DL-based machine vision in IVF. Four important tasks are identified where DL can be of service. The first is embryo development annotation, which refers to identifying and annotating the development stage of an embryo. The second is embryonic cell detection and tracking, where the goal is to enable the automatic annotation of the embryo development stage through images and locate each cell in an image or video. The third is IVF cycle outcome prediction. The fourth is embryo grading and selection decisions. The authors note here an important issue in IVF, namely the lack of correlation between the predicted and actual outcome of a pregnancy. They also remark that clinical implementations of AI/DL techniques in the future are important to test their potential.

In [33], the authors review the developments of AI in IVF and embryology and identify three main topics. These are the automatic annotation of embryo development, embryo grading, and embryo selection for implantation. They also identify the potential in predicting ploidy, miscarriages, as well as other topics related to infertility, like measuring ovarian reserve by antral follicle count and the evaluation of the endometrium and contour of the uterus. The authors foresee that the use of AI in the future will encompass many more topics in reproductive medicine.

The work [34] reviews the developments in AI-assisted IVF in the years 2010–2023. The tasks covered include the assessment of oocyte quality, sperm selection, fertilization assessment, embryo assessment, ploidy prediction, embryo transfer selection, cell tracking, embryo witnessing, micromanipulation, and quality management. The importance of removing human subjectivity through AI is noted.

The work [35] discusses the use of AI technologies in IVF for 2023 and beyond. The topics covered include prestimulation testing, outcome prediction, initial dose of gonadotropin for ovarian stimulation, monitoring schedule and workflow during ovarian stimulation, assignment of the trigger day option, and prediction of the ovarian response. Three important topics are also identified for the future: first, the assessment of new tools, such as large language models (e.g., ChatGPT), in clinical care; second, the clinical culture for the adoption of new clinical tools; and third, the development of guidelines and criteria for publishing results of AI studies in the clinical literature.

In [36], the use of AI in time-lapse systems is reviewed. Some limitations identified include model interpretability, acquisition of standardized datasets, the problem of sharing data among clinics, and the necessity for external validation. Among the future challenges reported are the development of explainable AI algorithms to understand their decision making process, the study of algorithm robustness through large studies in many clinics with a diverse patient population, the use of collaborative federated learning [37] among clinics to allow data sharing without compromising privacy, and the participation of clinicians in the development and refinement of AI models.

The work [38] reviews the use of AI in ovarian stimulation. Some of the aspects covered include the development of decision support systems, outcome prediction, the selection of doses and protocol, and scheduling. The authors underline the importance of maintaining the contact between patients and healthcare practitioners, so the AI developments should not build a distance between the two groups. AI technologies have the prospect of democratizing access to ART care by increasing its capacity for clinical service.

The review [39] focuses on the problem of embryo selection. It was observed that AI techniques outperformed the clinical teams in all the studies that focused on embryo morphology and clinical outcome prediction during the embryo selection assessment. Of the articles reviewed, the median accuracy of AI systems was 75.5%, with a range of 59–94% on predicting the embryo morphology grade. When predicting clinical pregnancy through patient clinical treatment information, the median accuracy was 77.8%, with a range of 68–90%. When both image and clinical data were provided, the median accuracy rose to 81.5%, with a range of 67–98%. These results are very promising but have limitations. For example, the authors note that many works used their own datasets, which were gathered from the local population, thus creating subjectivity in the ground truth for each study.

The short review [40] underlines the significance of time-lapse imaging in embryo selection. Although there is not a single kinetic parameter that can provide predictions with absolute accuracy, the use of time-lapse imaging has helped in gathering large datasets and providing a basis for ML techniques to be developed.

The work [41] also reviews the use of time-lapse imaging systems, spent embryo culture media, and morphological criteria for the non-invasive evaluation of embryo quality and transfer selection. The fusion of complementary methodologies can be a key in enhancing their effectiveness.

The work [42] reviews the use of CNN models in embryo evaluation using time-lapse monitoring. Three tasks were considered: successful in vitro fertilization, blastocyst stage classification, and blastocyst quality. Of the articles reviewed, most reported an accuracy greater than 80%, and in certain works the models performed better than the practitioners. Observing the heterogeneity of the studies with respect to the DL architectures, reference standards, datasets used, and final results, the authors underscore the importance of sharing databases between laboratories, as well as standardizing the reporting.

In [43], the authors review DL approaches for blastocyst grading. Among the challenges, they identify the importance of having large datasets to train the models. They also highlight the importance of spatial and temporal information obtained from time-lapse imaging to improve the model’s efficacy. The authors note that integrating the patient’s clinical data and the outcome of IVF into the prediction model could help recognize scenarios of increased or decreased pregnancies.

The work [44] reviews AI techniques for embryo ploidy status non-invasive prediction. The importance of mosaicism inclusion for future studies is underlined. The integration of AI techniques into microscopy equipment and embryoscope platforms will be key for the wide use of non-invasive genetic testing. The predictive power of algorithms will be increased by optimizing the use of clinical considerations and incorporating the minimally necessary covariates.

The work [45] provides a review of DL applications for embryo classification and evaluation. The work outlines the problem tasks and architectures used and gives a short comparison between some recent works, pointing to the ones that yielded the highest accuracy, although each work was tested on different datasets.

The work [46] reviews the task of embryo selection and AI. The authors foresee that the use of AI methods will support successful IVF clinic business models, and its use will eventually become standardized in IVF laboratories. As the grading and ranking of the embryos is a time-consuming task, automating it in an objective and reproducible way through AI would allow researchers’ efforts to be reallocated to other tasks. It will also help reduce inter/intra-observer variability in grading, which is an important issue [47]. Moreover, it will further support data sharing between laboratories, a topic discussed in Section 5.3.

The short reflection [48] takes note of the importance of finding a balance between the use of AI techniques and human experts for the progression of the field. Also, when considering the use of a new algorithm, it is important to consider not only its performance but also its practical feasibility for being implemented in a clinical setting.

The work [49] reviews the developments of AI techniques in the prediction of the best embryo for transfer. The authors take note that despite many AI prediction models slightly outperforming the embryologists in the studies covered, they are still too early in their development stage to claim superior performance over the embryologists’ assessments.

The work [50] reviews the use of AI in the embryology laboratory. The topics covered include spermatozoa analysis, ovarian stimulation management, oocyte analysis, pronuclear-stage embryos, cleavage-stage embryos, blastocyst-stage embryos, time-lapse microscopy image analysis, static image analysis of blastocysts, automated annotation of blastocysts, implantation prediction, and non-invasive ploidy screening. The authors note that although the use of AI in the improvement of outcomes is not yet fully proven and established, it has the potential to help address many persistent problems in the field of reproductive medicine.

In [51], the developments of deeptech and femtech for IVF are reviewed. The DIY IVF cycle is discussed, with its potential leading to reduced treatment costs and, subsequently, democratized access to this service. However, the importance of human interaction with physicians for patients undergoing IVF is underlined, as well as the need for data security. The need for inclusive and diverse population datasets is also noted.

The work [52] reviews the use of AI in sperm selection, a task highly relevant to ART. The topics discussed are sperm morphology, DNA integrity, and motility. The authors note the importance of sample size and representation in the data used, as well as the ethical concerns that stem from automating the sperm selection process.

The work [53] reviews the use of quantitative models in clinical reproductive endocrinology. They identify examples where AI models can be used to support decision making in endocrinological interventions, like the selection of gonadotropin doses for ovarian stimulation, the prevention of premature ovulation, and the induction of oocyte maturation.

In [54], the use of predictive models for the initial dose of gonadotropin in controlled ovarian hyperstimulation is reviewed. For future research, the authors underscore the need for a common consensus on study parameters, for example, having the same range of the number of retrieved oocytes in patients with normal responses and considering MII oocyte number, MII oocyte rate, or follicle output rate as outcome indicators. In addition, they note the need to include potential hidden variables, such as a history of pelvic surgery or chronic pelvic inflammatory disease, that can affect the predictive model.

In [55], the use of AI in ultrasound is reviewed, with applications like monitoring follicles, assessing endometrial receptivity, and predicting the pregnancy outcome of IVF and embryo transfer. Current limitations are discussed, such as ethical and liability concerns; dataset issues such as sample size, quality, and diversity; and the need for systematic thinking that only clinicians can provide. It is suggested that proper use of AI should focus on early detection.

In [56], the authors provide an ethical assessment on the use of AI in IVF. They underline the importance of transparency in the algorithms being developed to avoid bias and understand their decision making.

Finally, as a topic generally related to ART technology, the work [57] reviews the use of deep technology to optimize cryostorage.

4.2. Strategy Selection

Prior to designing DL techniques for IVF, AI methods can also be used as a support tool in medical decision-making. For example, in [58], several machine learning models were considered to support the choice of treatment strategy by medical professionals. Seven strategies were considered: the long strategy, short strategy, antagonist strategy, ultralong strategy, ovulation induction with clomiphene citrate, letrozole microstimulation for ovulation, and ovulation induction in the luteal phase, with a focus on the first four strategies. Ten different algorithms were tested: logistic regression, random forest, k-nearest neighbor, NN, support vector machine (SVM), Bagging, AdaBoost [59], gradient boosting decision tree, extreme gradient boosting, and light gradient boosting machine [60]. These gave varying precisions for different treatment strategies and age groups. The dataset consisted of 95,868 records of couples who had gone through IVF-ET in the Reproductive Medicine Center of Tongji Hospital, Tongji Medical College, affiliated with Huazhong University of Science and Technology (2006–2019).

4.3. Embryo Development Annotation

Development annotation is a fundamental task for embryo image evaluation and can be a basis for further processing actions.

In [61], the problem of early stage development classification was considered using time-lapse videos. A multi-task deep learning with dynamic programming (MTDL-DP) architecture was developed. Initially, MTDL classifies each frame of the time-lapse video as a development stage. Then, the DP part optimizes the stage sequencing so that it is monotonically non-decreasing. The ResNet50 model [21] was considered as a basis, pretrained on ImageNet [62]. One-to-many, many-to-one, and many-to-many architectures were considered. The one-to-many and many-to-many models had the best results, with accuracies in the range of 85–86.9%, but the one-to-many model obtained the best balance between performance and computational cost. The dataset consisted of 170 time-lapse videos, obtained using an EmbryoScope+ microscope, with 59,500 frames overall, obtained from the Reproductive Medicine Center of Tongji Hospital, Huazhong University of Science and Technology, Wuhan, China.
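The idea of enforcing a monotonically non-decreasing stage sequence can be illustrated with a minimal sketch. This is not the implementation of [61]; it assumes hypothetical per-frame stage probabilities (`frame_probs`) and uses a standard Viterbi-style dynamic program in which a frame may keep the previous stage or move to a later one, but never go back.

```python
import math


def monotonic_stage_path(frame_probs):
    """Return the most likely non-decreasing stage sequence.

    frame_probs[t][s] is a hypothetical per-frame probability that
    frame t shows development stage s (illustrative input only).
    """
    n_stages = len(frame_probs[0])
    eps = 1e-12  # avoids log(0) for zero probabilities
    # score[s]: best log-likelihood of a valid path ending in stage s
    score = [math.log(p + eps) for p in frame_probs[0]]
    back = []  # back[t][s]: stage chosen at the previous frame
    for probs in frame_probs[1:]:
        new_score, pointers = [], []
        best_prev, best_idx = -math.inf, 0
        for s in range(n_stages):
            # running maximum over all previous stages <= s
            if score[s] > best_prev:
                best_prev, best_idx = score[s], s
            new_score.append(best_prev + math.log(probs[s] + eps))
            pointers.append(best_idx)
        score = new_score
        back.append(pointers)
    # backtrack from the best final stage
    stage = max(range(n_stages), key=lambda s: score[s])
    path = [stage]
    for pointers in reversed(back):
        stage = pointers[stage]
        path.append(stage)
    return path[::-1]


# Per-frame argmax would give 0, 1, 0, 1, 2 (a backward jump at frame 3).
probs = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.6, 0.3, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.2, 0.7],
]
print(monotonic_stage_path(probs))
```

In this toy input the third frame is misclassified in isolation, and the dynamic program replaces the backward jump with the most likely sequence that respects the ordering constraint.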

In [63], a model was developed for predicting blastocyst development from oocytes. The architecture considered was EfficientNet B-7 [64], pretrained on ImageNet [62], which performed a binary classification (positive/negative blastocyst prediction). The model achieved an AUC of 0.64 and 0.63 on the test and external data, respectively. The dataset consisted of 37,133 oocyte images from eight clinics in six countries (Canada, USA, UK, India, Spain, Czechia) between 2014 and 2022. An additional 12,357 images were also used for validation, from two clinics in two countries (2017–2022).
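Since many of the works in this section report the area under the ROC curve (AUC), a short sketch of how it can be computed may be helpful. The function below is an illustrative pairwise (Mann-Whitney) formulation, not code from any of the reviewed works; the labels and scores are made-up toy values.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive example is scored
    higher than a random negative one (ties count as one half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: 1 = blastocyst formed, 0 = did not form (made-up values).
print(roc_auc([1, 0, 1, 0], [0.9, 0.8, 0.4, 0.3]))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for values such as 0.64 reported above.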

In [65], the recognition of ploidy status was considered using time-lapse videos. The DL model distinguished between aneuploid embryos (group 1) and other types (group 2: euploid and mosaic). The model was the Two-Stream Inflated 3D ConvNet (I3D) [66], pretrained on the ImageNet [62] and Kinetics [67] datasets. The model used RGB and optical flow data in two I3D models, RGB-I3D and Optical flow-I3D, whose predictions are averaged into the final output. The AUC achieved was 0.74. The dataset was gathered from 108 patients undergoing 119 PGT-A cycles at the Lee Women’s Hospital, Taichung, Taiwan, and included 144,210 images from 690 videos.
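The averaging of the two stream outputs is a simple form of late fusion. The following sketch assumes each stream has already produced class probabilities; the function name and the example values are hypothetical and are not taken from [65].

```python
def fuse_two_streams(rgb_probs, flow_probs):
    """Average the class probabilities of two streams (late fusion)."""
    if len(rgb_probs) != len(flow_probs):
        raise ValueError("both streams must predict the same classes")
    return [(r + f) / 2.0 for r, f in zip(rgb_probs, flow_probs)]


# Made-up outputs over the classes [group 1, group 2].
fused = fuse_two_streams([0.6, 0.4], [0.2, 0.8])
predicted_group = 1 + max(range(len(fused)), key=lambda i: fused[i])
print(fused, predicted_group)
```

Here the two streams disagree, and the averaged probabilities favor group 2.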

In [68], oocyte classification was considered. The architecture consisted of two main parts, a DeepLabV3Plus [69] model for image analysis, used to extract oocyte regions, pretrained on ImageNet [62], and a network inspired by SqueezeNet [70], used for classification. This network was improved by a genetic algorithm in order to have a better generalization and reduce the number of learnable parameters, FLOPs, and inference time. The classification was performed in three classes, metaphase I meiotic division (MI), metaphase II meiotic division (MII), and prophase I meiotic division (PI). The mean accuracy coefficient achieved was 0.957 on the test set. The dataset consisted of 766 images, of which 44 were MI oocytes, 663 MII oocytes, and 59 PI oocytes, collected from 100 patients undergoing intracytoplasmic sperm injection (ICSI).

In [71], six pretrained models were studied for embryo development annotation in time-lapse videos, with 14 classes considered, based on their morphological differences. The highest accuracy achieved was 67.68%, from EfficientNet-B6 [64]. The dataset consisted of 163 embryo cycles and 15,831 embryo images, obtained from Morula IVF Jakarta Clinic, Jakarta, Indonesia.

In [72], a model was developed for anomaly detection in embryos using time-lapse images. The architecture designed consists of a local binary CNN [73] in series with an LSTM. The model can achieve an early (72 h) detection of abnormalities with a precision of 82.8%, outperforming other architectures. The dataset comprised 8 non-healthy embryos and 12 healthy embryos, with 120 monitoring hours, from Istanbul Aydin University, Turkey.

4.4. Intracytoplasmic Sperm Injection

In [74], the problem of identifying morphological landmarks in images of embryos in the cleavage stage was considered. Two problems were addressed. First, a CNN-ICSI model was developed to identify the optimal location for intracytoplasmic sperm injection through polar body identification. The model achieved a 98.9% accuracy. The second model, CNN-AH, was developed to identify the optimal location for assisted hatching on the zona pellucida, with a maximum distance from healthy blastomeres. This model achieved a 99.41% accuracy. The models classified images into 12 classes, with each class representing a location, similar to the 12 hour marks on a clock, equally spaced at 30-degree angles. The dataset consisted of over 19,000 oocyte images and 19,000 cleavage stage embryo images collected from the Massachusetts General Hospital (MGH) Fertility Center in Boston, Massachusetts.
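The clock-face encoding of locations can be sketched as follows. The convention used here (class 0 at the 12 o'clock position, angles increasing clockwise) is an assumption made for illustration and may differ from the labeling used in [74].

```python
def clock_class_to_angle(class_index, n_classes=12):
    """Angle in degrees for a class, assuming class 0 sits at 12 o'clock
    and classes increase clockwise (hypothetical convention)."""
    if not 0 <= class_index < n_classes:
        raise ValueError("class index out of range")
    return class_index * 360.0 / n_classes


def angle_to_clock_class(angle_degrees, n_classes=12):
    """Nearest class for an arbitrary angle, wrapping around at 360."""
    step = 360.0 / n_classes
    return int(round((angle_degrees % 360.0) / step)) % n_classes


print(clock_class_to_angle(3))    # 3 o'clock position
print(angle_to_clock_class(44))   # nearest 30-degree mark
```

Discretizing the angle in this way turns a localization problem into a 12-way classification problem, at the cost of a resolution limited to 30 degrees.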

In [75], a CNN model was developed to identify the stages of intracytoplasmic sperm injection from video sequences. Two classes were considered for each frame, the selection stage and the injection stage. The CNN architecture consisted of four convolution layers, one max-pooling layer, and a flattening layer. The accuracy was over 99%. The dataset consisted of 50 videos at 15 frames per second, with 2550 frames in total, obtained from 10 clinics (2021–2022).

4.5. Component Segmentation

Component segmentation in embryo images is a fundamental task, as it can support the further processing of embryos for tasks like grading or outcome prediction.

In [76], the segmentation of two blastocyst components, trophectoderm (TE) and inner cell mass (ICM), was considered using texture analysis. The algorithms utilized include k-means and watershed segmentation. The accuracy achieved is 86.6% for TE and 91.3% for ICM. The work also introduces a novel open-source dataset. The dataset consists of 211 Hoffman Modulation Contrast (HMC) blastocyst images from the Pacific Centre for Reproductive Medicine (2012–2016).

In [77], implantation outcome prediction was considered using single-blastocyst images. The design has two parts. The first part is a blastocyst component segmentation. This architecture uses Dense Progressive Sub-Pixel Upsampling (DPSU), inspired by [78], in combination with DeepLabV3 [79]. The second part is a multi-stream cross-modality classification utilizing a Compact–Contextualize–Calibrate (C3) feature extraction technique for predicting the outcome of implantation. The mean accuracy achieved was 70.9%. The C3 unit was also incorporated into the ResNet50 [21] and InceptionV3 [80] models and also improved their performance. The dataset consisted of 578 blastocyst images from the Pacific Centre for Reproductive Medicine (PCRM) (2012–2018).

In [81], blastocyst segmentation into blastocoel (BC), zona pellucida (ZP), inner cell mass (ICM), trophectoderm (TE), and background (BG) components is studied. A sprint semantic segmentation (SSS-Net) architecture is proposed, based on a fully convolutional semantic segmentation scheme. The model uses a sprint convolutional block (SCB) that uses asymmetric kernel and depth-wise separable convolutions. Residual and dense connectivity models are considered, with a mean Jaccard index of 85.93% and 86.34%, respectively. The model outperforms other architectures such as UNet-Baseline [82], TernausNet U-Net [83], PSP-Net [84], DeepLab V3 [79], and Blast-Net [85] and is also more computationally efficient, having 4.04 million parameters compared to the rest, which range from 10 to 40 million. The dataset was obtained from the work [76].
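The mean Jaccard index (intersection over union) used to compare these segmentation models can be computed per class and then averaged. The sketch below works on small label maps given as nested lists; the label values and the toy masks are illustrative only.

```python
def mean_jaccard(pred, truth, n_classes):
    """Mean per-class Jaccard index for two label maps of equal size.

    Classes absent from both maps are skipped so they do not distort
    the average.
    """
    scores = []
    for c in range(n_classes):
        inter = union = 0
        for pred_row, truth_row in zip(pred, truth):
            for p, t in zip(pred_row, truth_row):
                in_pred, in_truth = p == c, t == c
                inter += int(in_pred and in_truth)
                union += int(in_pred or in_truth)
        if union:
            scores.append(inter / union)
    if not scores:
        raise ValueError("no class present in either label map")
    return sum(scores) / len(scores)


# Toy 2x2 label maps with two classes (made-up values).
pred = [[0, 0], [1, 1]]
truth = [[0, 1], [1, 1]]
print(mean_jaccard(pred, truth, n_classes=2))
```

For the toy masks, class 0 scores 1/2 and class 1 scores 2/3, so the mean is 7/12.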

In [86], the same problem is considered for these five components: BC, ZP, ICM, TE, BG. The model is termed embryo component segmentation network (ECS-Net). It consists of two streams that use a convolutional block and a depth-wise separable convolutional block and also considers dense connectivities [22]. The model achieves a mean Jaccard index of 86.46% and outperforms other architectures such as UNet-Baseline [82], TernausNet [83], PSP-Net [84], DeepLab V3 [79], and Blast-Net [85] and is more computationally efficient, with 2.83 million parameters compared to the other models, which range from 10 to 40 million. The dataset used was [76], as in [81].
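The parameter savings associated with depth-wise separable convolutions can be seen from a simple count. The sketch below compares a standard convolution with its depth-wise separable counterpart, ignoring bias terms; the layer sizes are arbitrary illustrative values and are not taken from SSS-Net or ECS-Net.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights of a k x k depth-wise convolution (one filter per input
    channel) followed by a 1 x 1 point-wise convolution (bias ignored)."""
    return k * k * c_in + c_in * c_out


standard = standard_conv_params(64, 128, 3)
separable = depthwise_separable_params(64, 128, 3)
print(standard, separable, round(standard / separable, 1))
```

For this hypothetical layer, the separable version needs roughly one eighth of the weights, which is consistent with the lower overall parameter counts reported for such models.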

In [87], five-component segmentation is considered again. The architecture developed is termed the feature-supplementation-based blastocyst segmentation network (FSBS-Net). The architecture consists of convolutional layers, batch normalization layers, strided convolutional layers, transposed convolutional layers, ascending channel convolutional blocks, pixel classification layers, and feature supplementation mechanisms. The model achieved a mean intersection over union (IoU) value of 87.26%. It outperformed other architectures like UNet-Baseline [82], TernausNet U-Net [83], PSP-Net [84], DeepLab V3 [79], Blast-Net [85], SSS-Net Residual [81], SSS-Net Dense [81], and ECS-Net [86] with respect to segmentation accuracy and computational efficiency, as it consisted of 2.01 million trainable parameters, significantly fewer than the other models (2.83 million to 31.03 million). The dataset was obtained from the work [76], as in [81,86].

In [88], the segmentation of the blastocyst from its background was considered using U-Net models [82]. Two models were developed. In the first model, the original U-Net encoder or contraction section was replaced by a pretrained DenseNet121 architecture [22]. The second model was developed by replacing all convolutional blocks in the U-Net by dense blocks, keeping the symmetry unchanged. Both models outperformed the basic U-Net, and the second model, termed Densely U-Net, achieved the best accuracy of 99.8%. The dataset consists of 327 images from the Indonesia Medical Education and Research Institute (IMERI) [89]. Each image includes two to three embryos.

In [90], a clustering-based system was developed for localizing and counting blastomeres, from day-2 and day-3 images. The model has a preprocessing, a segmentation, and a hierarchical clustering-based module for the segmented blastomeres, using an agglomerative hierarchical clustering algorithm. This clustering module combines the results of different experiments to improve the overall performance. The average precision was 87.9%. The dataset consisted of 50 images from the Assisted Reproduction Technology (ART) Unit, International Islamic Center for Population Studies and Research, Al-Azhar University, Cairo, Egypt.

In [91], the segmentation of the oocytes was studied using deep NN. There were 14 areas considered, including the background, and 71 different deep neural networks were tested, based on DeepLab v3+ [69], Fully CNNs, SegNet [92], and U-Net [82]. The best performance was achieved by a DeepLab-v3-ResNet-18 variant, with 79% validation accuracy. The dataset consisted of 334 oocyte images from 60 patients.

4.6. Embryo Grading

The grading and selection of embryos is another fundamental task at the core of IVF [93]. This task can be assisted by AI, as reviewed in [46], and is greatly supported by image processing architectures.

On the topic of data utilization, DL models generally perform better when working with numerical data rather than categorical data. As some aspects of embryo characterization are categorical, the work [94] addressed the task of converting them to numerical values to facilitate statistical analysis and understanding of the contribution of embryo quality to the cycle outcome. The Gardner embryo grading scale was converted to the numerical embryo quality scoring index (NEQsi), with values of 2 to 11.

In [93], the KIDScore™ D5 v3 algorithm for grading was considered. The authors found the tool useful as a support tool for embryologists in selecting blastocysts for embryo transfer. It can improve consistency in embryo selection and also improve the embryologists’ workflow. The dataset consisted of 12,468 embryos from 1678 patients from IVI Valencia in Spain (2018–2020).

In [95], the problem of grading day-3 embryos was studied. Five grades were considered (A–D, and compacted). Two models were considered for this, ResNet50 [21] and Xception [20], pretrained on ImageNet [62], with some additional fully connected layers. The Xception model was the best, achieving an accuracy of up to 98%. The dataset consisted of 152 images from around 20 patients from the Vistana Fertility Center in Kedah, Malaysia.

In [96], the problem of blastocyst grading was studied. Four grades were considered, excellent, good, average, and poor. Two models were developed, a CNN, and a VGG-16 [18], with appropriate new classification layers. Both models achieve good results, with a testing accuracy of 90% for the CNN and 94% for the VGG-16. The dataset consisted of 110 blastocyst images, from Vistana Fertility Center, in Kedah, Malaysia.

In [97], a novel model for binary embryo classification (good/bad) was developed. The architecture combined InceptionV3 [98] and DenseNet201 [99] with a fusion of features from each model to obtain the classification. The models were pretrained on ImageNet [62]. The model achieved an accuracy of 95.83% and outperformed other architectures like ResNet50 [95], VGG16 [96], DenseNet201 [99], InceptionV3 [98], and InceptionResNetV2 [98]. The dataset consisted of 840 images from day 3 and day 5, from the fourth international competition of AI and data science on embryo classification on microscopic images by Hung Vuong Hospital [100].

In [101], a deep CNN with a morphology attention module (MAM) was applied to embryo grading. The design (LWMA-Net) consisted of six blocks and a classification head. Pre-training was performed using ImageNet [62]. The model achieved an AUC of 96.88% and 97.58% in four- and three-category gradings. More experiments showed that embryologists using LWMA-Net achieved an improved embryo grading performance on the four- and three-category grading tasks. The dataset used consisted of 4290 embryo images from 2639 couples (2016–2021).

In [102], the authors studied the sensitivity of automatic embryo grading to different focal planes, with the goal of ensuring consistency. They concluded that test-time augmentation and ensemble modeling can reduce this sensitivity. ResNet18 and EfficientNet-b1 models were considered. The dataset consisted of blastocyst images from 11 IVF clinics in the US (2015–2020).
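Test-time augmentation averages the predictions of a model over several transformed copies of the same input. The sketch below uses horizontal and vertical flips as the augmentations, which is an assumption made for illustration; the specific augmentations used in [102] are not described here, and `model` stands for any hypothetical function that maps an image to a score.

```python
def hflip(image):
    """Mirror an image (list of rows) left to right."""
    return [row[::-1] for row in image]


def vflip(image):
    """Mirror an image (list of rows) top to bottom."""
    return image[::-1]


def predict_with_tta(model, image):
    """Average the model score over the original and flipped views."""
    views = [image, hflip(image), vflip(image), hflip(vflip(image))]
    scores = [model(view) for view in views]
    return sum(scores) / len(scores)


def toy_model(image):
    """Hypothetical, deliberately position-sensitive scorer: it returns
    the top-left pixel value."""
    return image[0][0]


image = [[1.0, 2.0], [3.0, 4.0]]
print(toy_model(image), predict_with_tta(toy_model, image))
```

The toy scorer depends strongly on orientation, whereas the averaged prediction is identical for all flipped versions of the input, which illustrates how averaging can reduce sensitivity to nuisance variation.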

In [103], time-lapse images were used for blastocyst grading. The model predicts inner cell mass (ICM) and trophectoderm (TE) grades. The architecture consists of a CNN that extracts features from frames, coupled with an RNN that uses temporal information by combining image features over consecutive frames. The model performed on the same level as that of human embryologists. The dataset included 4032 treatments undergoing IVF (1169), intracytoplasmic sperm injection (ICSI) (2534), or a mixture of both (329). In total, 8664 embryos having reached the blastocyst stage were analyzed. Data were collected from four clinics.

The work [104] considered embryo grading following Gardner’s system, for blastocyst development (rank 3–6), ICM (A, B, C), and TE (a, b, c). The ResNet50 architecture [21] was considered, pre-trained on the ImageNet dataset [62]. It achieved a 96.24% accuracy for blastocyst development, 91.07% for ICM quality, and 84.42% for TE quality. The dataset consisted of 171,239 images of 16,201 embryos, from 4146 IVF cycles obtained from the Stork Fertility Center [105] (2014–2018).

In [106], the problem of embryo grading was addressed using a combination of CNN and LSTM networks. A CNN was used to extract features from embryo images, and then the features were used as input to an LSTM to perform the classification. Various pretrained CNN models were considered, many of which gave a 100% validation accuracy score, like VGG16-LSTM, VGG19-LSTM, and MobileNetV2-LSTM. Instead of the LSTM layer, a VGG16-GRU was also considered, which again gave 100% validation accuracy. The grading consisted of five classes (A: best, B: good, C: fair, D: poor, E: non-viable). The dataset was obtained from Nova IVF Fertility, Ahmedabad, Gujarat, India [107], and consisted of 803 labeled samples from 60 patients, with 5458 image frames used overall.

In [108], blastocyst grading was considered using multifocal images. Three models were considered, using VGG-16 as a basis, appended by average pooling and fully connected layers. The first model used an ensemble network structure inspired by [109]. The second model used a voting mechanism for the classification. The third model only used the three images with the highest sharpness out of the inputs. The classification was performed in two classes, good and poor. The highest AUC was 0.936, achieved by the third model. Moreover, using Grad-CAM [110], a color-graded version of the blastocyst images was generated, to highlight the important image parts. The dataset consisted of 1025 embryos from the Center for Reproductive Medicine of the Affiliated Drum Tower Hospital of Nanjing University Medical School in China, with 11,275 images overall (2017–2018).

In [111], CNNs were considered to judge the consistency of grading. Five classes are considered (1—poor, 2—fair, 3—good, 4—great, 5—excellent). Although embryologists had a high degree of variability in their gradings, the DL model had a consistency of 83.92% in selecting blastocysts for biopsy and cryopreservation. The training dataset consisted of 3469 embryo images recorded at 70 and 113 h post insemination from the Massachusetts General Hospital (MGH) Fertility Center in Boston, Massachusetts. The dataset used for evaluation consisted of 748 embryo images at 70 h post insemination and 742 at 113 h.

In [112], embryo classification was studied on the third day, the cleavage stage. A network of eight layers, five convolution and three fully connected, achieved 75.24% accuracy in predicting the embryo destiny (discard or transfer). Considering the batch context, where embryos are predicted with relation to other ones from the same batch, the accuracy increased to 84.69%. The predictions made by the model were also related to clinical implantation rates. The dataset consisted of 38,000 records from UZ Leuven Hospital, each having multiple images at various focal depths, for a single embryo.

In [98], the grading problem for embryo images at 113 hpi was studied. The model had five grades, for non-blastocysts (grades 1–2) and blastocysts (grades 3–5). A collection of architectures was considered, like Inception V3 [80], ResNet-50 [21], Inception-ResNet-V2 [19], NASNetLarge [113], ResNeXt-101 [114], ResNeXt-50 [114], and Xception [20], pretrained on ImageNet [62], with the Xception model outperforming the rest. Data were obtained from the Massachusetts General Hospital (MGH) Fertility Center in Boston, Massachusetts, and consisted of 3469 embryo videos from 543 patients. The evaluation dataset included 2440 images at 113 hpi.

In [115], the selection problem for embryos with single-timepoint images collected at 113 hpi was studied. The architecture consisted of an Xception network from [98] paired with a genetic algorithm for rank ordering embryos by generating unified scores. The CNN was pretrained using ImageNet [62]. The accuracy for choosing the best embryo reached 90%. In addition, on the problem of evaluation of implantation potential, on a set of 97 euploid embryos capable of implantation, a CNN model outperformed a group of embryologists. The dataset included 3469 videos from 543 patients, from the Massachusetts General Hospital (MGH) Fertility Center in Boston, Massachusetts. The evaluation dataset had 2440 static human embryo images at 113 hpi. The test dataset included 97 patient cohorts and 742 embryo images.

In [116], an experiment was designed to test the aid of AI in embryo selection. Embryologists were given a set of embryos from which to choose the best embryo to transfer. The embryo images were first given without any further notes, and then they were provided again, this time accompanied by a suggestion made by the AI system as to which embryo should be transferred. With the aid of the algorithm, embryologists could identify successfully implanted embryos in 73.6% of the cases, compared to 65.5% for the case in which no AI suggestion was provided. The model used was based on Xception described in [115], pretrained on ImageNet [62]. The dataset consisted of time-lapse images from 400 embryos and 160 patients, from the Massachusetts General Hospital Fertility Center (2014–2018).

In [117], the authors considered embryo grading at the blastocyst stage, under the scope of developing a model that can be equally applicable to all types of embryo data, regardless of microscope type, image capture day, and cycle type. Images were collected on days 5, 6, or 7 prior to transfer, biopsy, or freezing, using inverted microscopes, stereo zoom microscopes, or time-lapse incubation systems. A deep CNN, ResNet-18 [21] with dropout, was trained to rank images based on the possibility of reaching clinical pregnancy. In total, 5100 blastocysts from fresh, frozen, and frozen euploid transfers were considered and were matched to pregnancy outcomes, as well as 2900 blastocysts, corresponding to aneuploid PGT-A results. The AUC achieved was 0.72 for all embryos. The model also outperformed manual grading for euploid transfers. Data were gathered from 11 IVF clinics in the US (2015–2020).

In [118], emphasis is placed on applying image filters at the pre-processing stage for the grading of day-3 embryo images (excellent, moderate, poor). The images are passed through filters, and after a selection process, the most suitable are used as input to a CNN (VGG-Net [18]). The filters considered are Blur, Gaussian, Sharpening, Laplacian, Vertical-Sobel, Horizontal-Sobel, and Median. This model outperformed the models that used no filters, all the filters, or a random collection of them, by reducing the test error by over 8%. The dataset consisted of 1386 embryo images from 238 patients, which were obtained from an infertility clinic in Indonesia (2016–2018).
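Several of the filters listed above are linear filters defined by a small kernel that is slid over the image. The sketch below applies commonly used 3 x 3 kernels with a plain sliding-window sum; the exact kernels and the filter selection procedure of [118] are not reproduced here, and the nonlinear Median filter and the Gaussian filter are omitted for brevity.

```python
# Commonly used 3 x 3 kernels (illustrative, not taken from [118]).
KERNELS = {
    "blur": [[1 / 9] * 3 for _ in range(3)],
    "sharpen": [[0, -1, 0], [-1, 5, -1], [0, -1, 0]],
    "laplacian": [[0, 1, 0], [1, -4, 1], [0, 1, 0]],
    # responds to vertical edges (intensity change along the x axis)
    "sobel_vertical": [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]],
    # responds to horizontal edges (intensity change along the y axis)
    "sobel_horizontal": [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],
}


def apply_filter(image, kernel):
    """Slide the kernel over the image without padding, so the output
    is smaller than the input by (kernel size - 1) in each dimension."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


# Toy 4 x 4 image with a single vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1] for _ in range(4)]
print(apply_filter(image, KERNELS["sobel_vertical"]))
print(apply_filter(image, KERNELS["sobel_horizontal"]))
```

On the toy image, the vertical Sobel kernel responds strongly to the edge while the horizontal one gives zero, which illustrates how different filters emphasize different image structures before the CNN sees them.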

The authors of [99] considered the prediction of blastocyst formation and quality by time-lapse monitoring from the first three days. The model considered is termed the spatial–temporal ensemble model (STEM) and predicts blastocyst formation. The STEM model uses a weighted average between a temporal stream (LSTM) model and a spatial stream (gradient boosting classifier) model. DenseNet201 [119] was implemented in the design. The accuracy was 78.2% for blastocyst formation prediction. The STEM+ model also performed well in usable blastocyst prediction, with 71.9% accuracy. The initial dataset consisted of 26,113 embryos, from 2594 IVF and intracytoplasmic sperm injection (ICSI) cycles, cultured in TLM incubators (2014–2017).

In [120], the grading of blastocysts was considered using multi-focus images. A ResNet-50 [21] architecture is used in combination with an attention module to use the high level features from the images. It was observed that the use of multi-focus images improved performance over the single image case. The model also outperformed embryologists for all three grading categories (development stage, ICM, TE). The dataset consisted of images of 4997 blastocysts, cultured in a time-lapse incubator (EmbryoScope, Vitrolife, Sweden) at CReATe Fertility Centre, in Toronto, Canada.

In [121], a euploid prediction algorithm (EPA) was developed using time-lapse imaging, embryo data, and clinical data. The algorithm has three main modules, one for extracting features from the embryo image sequences, one for normalizing the embryo and clinical data features, and one for fusing these features and making the prediction. For processing the image sequences, a 3D-ResNet50 model [122] is utilized as a basis. A fully connected layer network is used at the fusion and prediction part. The AUC achieved was 0.80. The dataset consisted of 469 PGT cycles and 1803 blastocysts, from the Reproductive Medicine Center of Tongji Hospital, Huazhong University of Science and Technology, Wuhan, China (2018–2019). In total, 155 PGT cycles and 523 blastocysts (2019–2020) were also used for verification.

In [123], blastocyst grading was considered for time-lapse images. The developed architecture was termed STORK, which is based on Google’s Inception-V1 model [124], pre-trained on ImageNet [62]. The model predicted blastocyst quality with an AUC of over 0.98, and outperformed individual embryologists. It was also tested on datasets from two different clinics, from the Institute of Reproduction and Developmental Biology of Imperial College, London, UK, and Universidad de Valencia, Valencia, Spain, and achieved an AUC of 0.90 and 0.76, respectively, indicating its robustness. A decision tree was also designed that combined embryo quality with patient age for pregnancy likelihood prediction. The chances of pregnancy based on individual embryos range from 13.8% for poor quality embryos and ages over 41, up to 66.3% for good quality embryos and ages below 37. The dataset included 10,148 embryos, obtained from the Center for Reproductive Medicine at Weill Cornell Medicine (2012–2017). Images were collected using the EmbryoScope (Vitrolife, Sweden) time-lapse system. There were 50,392 images used.

In [125], a model termed STORK-A was developed for predicting embryo ploidy status. The model uses image data from time-lapse microscopy, in combination with clinical data, like maternal age, morphokinetic parameters, and morphological assessment. STORK-A is based on ResNet18 [21] pretrained on ImageNet [62]. Three classification problems were considered. The accuracy for predicting aneuploid/euploid was 69.3%. The second case predicted complex aneuploidy versus euploidy and single aneuploidy, with an accuracy of 74.0%. A third case was to predict complex aneuploidy versus euploidy, with an accuracy of 77.6%. The dataset consisted of 10,378 human blastocysts at 110 h after intracytoplasmic sperm injection, from 1385 patients at the Weill Cornell Medicine Center of Reproductive Medicine, New York (2012–2017). The model was also tested on two independent datasets for aneuploid versus euploid classification, one from the Weill Cornell Medicine Center using EmbryoScope+ machines, with 63.4% accuracy, and one from IVI Valencia, Health Research Institute la Fe, Valencia, Spain, with 65.7% accuracy, and thus showed generalizability.

In [126], a model was developed for embryo euploidy prediction from static images of day-5 blastocysts. The model was an ensemble one, following [127], and included ResNet [21] and DenseNet [22] architectures, along with techniques like data cleansing [128] and distillation [129]. The model’s accuracy was 65.3%, which increased to 77.4% once the dataset was cleaned of some poor and mislabeled images. The model can also be used for evaluating day-6 embryos. Using additional data from independent clinics, it was observed that the model was also generalizable to different patient demographics and it could be applied to images from multiple time-lapse systems. The initial dataset consisted of 15,192 images of embryos at the blastocyst stage from 10 clinics in the USA, India, Spain, and Malaysia.

In [130], two models were used to predict aneuploidy and mosaicism in IVF-conceived embryos. The data used for prediction were general, maternal, paternal, couple related, IVF cycle related, and embryo related. Of the many algorithms considered, random forest performed best in both cases, with an AUC of 0.792 for aneuploidy and 0.776 for mosaicism. The most important predictive variable for aneuploidy was maternal age, followed by paternal and maternal karyotype and embryo quality. For mosaicism, the most important variables were the technique used in preimplantation genetic testing for aneuploidies and the embryo quality, followed by maternal age and the day of biopsy. The dataset consisted of 6989 embryos taken from 2476 cycles, from Instituto Bernabeu (2013–2020).

The work [131] considered grading for blastocysts and cleavage stage embryos. The classification was binary, with discarded embryos labeled as poor, and transferred or frozen labeled as good. Four models are considered, an EfficientNet variant [64] termed EfficientNet-L2 with Noisy Student Training [132], Swin Transformer [133], STORK [123], and AlexNet [134]. The models were pretrained on ImageNet [62]. The best performance was achieved by the Swin Transformer for both day-3 and day-5 embryos, with an accuracy of around 99.5%. The dataset was obtained from the Xinan laboratory, using time-lapse incubators, and consisted of 4543 videos, from 1037 patients. Overall, 21,915 images for day-5 embryos and 24,489 images for day-3 embryos were used.

In [135], DL was considered for predicting blastocyst survival after thawing. The model, termed EmbryoNeXt, is an ensemble of ResNet-18, ResNet-34, ResNet-50 [21], and DenseNet-121 [22], pretrained on ImageNet [62]. The ensemble averages the rank predictions of these models. The model and a team of embryologists made predictions from images obtained at 0.5 h increments, from 0 h to 3 h post thaw. The model achieved an AUC of 0.869 at 2 h and 0.807 at 3 h, while the embryologists achieved an average of 0.829 at 2 h and 0.850 at 3 h. By combining predictions from both the model and the embryologists, however, an AUC of 0.880 at 2 h and 0.860 at 3 h was achieved. The dataset consisted of 652 time-lapse videos of freeze–thaw blastocysts from 119 patients from the Center for Reproductive Health at University of California, San Francisco (2019–2020).
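
Rank averaging, as used by the ensemble above, can be sketched as follows. Each model's raw scores are first converted to ranks, so that models with differently scaled outputs contribute equally, and the ranks are then averaged per sample. The function names, the tie handling (average rank), and the toy scores are assumptions made for illustration; the exact procedure in [135] may differ.

```python
def ranks(scores):
    """Return 1-based ranks, with higher scores receiving higher ranks.

    Tied scores share the average of the ranks they span.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    result = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of samples tied with position i.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def rank_average(per_model_scores):
    """Average the per-model ranks of each sample across all models."""
    per_model_ranks = [ranks(s) for s in per_model_scores]
    n_models = len(per_model_ranks)
    n_samples = len(per_model_scores[0])
    return [
        sum(r[i] for r in per_model_ranks) / n_models
        for i in range(n_samples)
    ]


# Two hypothetical models scoring three blastocysts.
ensemble_scores = rank_average([[0.1, 0.9, 0.5], [0.2, 0.3, 0.8]])
```

Because only the ordering of each model's outputs matters, the resulting ensemble score is suited to ranking metrics such as AUC rather than to calibrated probabilities.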

In [136], a model to predict ploidy was developed, termed the Embryo Ranking Intelligent Classification Algorithm (ERICA). The model uses fully connected layers for classification. The model achieved an accuracy of 0.70. It also ranked a euploid blastocyst first in 78.9% of the cases, and at least one euploid embryo in the top two positions in 94.7% of the cases. The dataset of static images consisted of 1231 blastocyst micrographs from three New Hope Fertility Centers in Mexico City, Guadalajara, and New York City (2015–2019). Images were gathered within 5 or 6 days after fertilization and prior to any other intervention.
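
The ranking results above can be read as top-k hit rates computed per embryo cohort. A minimal sketch of such a metric is given below, assuming each cohort is a list of (score, is_euploid) pairs; this data layout, the function name `top_k_hit_rate`, and the toy cohorts are hypothetical and not taken from [136].

```python
def top_k_hit_rate(cohorts, k):
    """Fraction of cohorts with at least one euploid embryo among the top k.

    cohorts: list of cohorts, each a list of (score, is_euploid) tuples.
    k: number of top-ranked embryos inspected in each cohort.
    """
    hits = 0
    for cohort in cohorts:
        # Rank embryos within the cohort from highest to lowest score.
        ranked = sorted(cohort, key=lambda embryo: embryo[0], reverse=True)
        if any(is_euploid for _, is_euploid in ranked[:k]):
            hits += 1
    return hits / len(cohorts)


# Two hypothetical cohorts (scores and ploidy labels are made up).
toy_cohorts = [
    [(0.9, True), (0.4, False)],
    [(0.8, False), (0.7, True), (0.1, False)],
]
top1 = top_k_hit_rate(toy_cohorts, k=1)
top2 = top_k_hit_rate(toy_cohorts, k=2)
```

With k = 1 this corresponds to the share of cases in which a euploid blastocyst is ranked first, and with k = 2 to the share with at least one euploid embryo in the top two positions.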

In [137], a model is developed for embryo grading. A Blast-Net segmentation model is used, and a CNN and VGG-16 are considered for classification. The VGG-16 model had an accuracy of 94%. This architecture is also implemented as a web application, for ease of use. The dataset was provided by the Vistana Fertility Center.

In [138], the authors considered blastocyst grading under a heavily imbalanced dataset, as only 1% of the samples belonged to the minority class. The authors made sure that these samples were included in training, validation, and test phases. The proposed model was VGG16-Multi-Label, which simultaneously grades ICM, TE, and ZP by having some shared convolution and pooling layers between the three labels. The model achieved an accuracy of 73.9% for ICM, 67.3% for TE, and 81.8% for ZP grading, outperforming ResNet50 [21], InceptionV3 [80], and VGG16 [18]. The models were pretrained on ImageNet [62]. The dataset consisted of 704 images provided by the Pacific Centre for Reproductive Medicine (PCRM) in Burnaby, BC, Canada (2012–2018), with some images being from online sources.

In [139], the iDAScore v1.0 was evaluated for time-lapse images. The AUC for euploidy was 0.60 and for live-birth prediction 0.66, which was almost on par with the embryologists. Also, iDAScore v1.0 placed euploid blastocysts as top quality in 63% of cases with one or more euploid and aneuploid blastocysts from the same cohort. When at least two euploid blastocysts were identified within each cohort, the embryologists and the model were both successful in prioritizing the euploid competent blastocyst for transfer in 52% of the cases. The dataset included 3,604 blastocysts and 808 euploid transfers from 1232 cycles, gathered from a private clinic Clinica Valle Giulia, GeneraLife IVF, Rome, Italy (2013–2022).

In [140], day-3 embryos were classified in four categories. An ensemble learning model was proposed (DenseNet169, Inception V3, ResNet50 and VGG19) and achieved an average 74.14% accuracy. When categories 1 and 2 were combined, the accuracy increased to 89.16%. The model outperformed DenseNet121 [141], DenseNet169 [141], InceptionV3 [142], ResNet50 [143], VGG16 [144], and VGG19 [144] in both cases. The models were pretrained on ImageNet [62]. The model also performed better than the embryologist average in both cases in an independent test cohort. The dataset consisted of 3601 images of day-3 embryos from 1800 couples (2016–2018). An independent test set of 699 images from 350 couples (2018) was also used to test the models.
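
The increase in accuracy after combining categories 1 and 2 is expected whenever a model mostly confuses those two categories with each other, since such errors no longer count once the classes are merged. The sketch below illustrates the effect; the function `accuracy`, its `merge` argument, and the toy labels are hypothetical and do not reproduce the evaluation in [140].

```python
def accuracy(y_true, y_pred, merge=None):
    """Classification accuracy, optionally after merging categories.

    merge: optional dict mapping a category to the category it is merged
    into, e.g. {2: 1} treats category 2 as category 1.
    """
    merge = merge or {}
    pairs = [
        (merge.get(t, t), merge.get(p, p))
        for t, p in zip(y_true, y_pred)
    ]
    return sum(t == p for t, p in pairs) / len(pairs)


# Toy labels in which categories 1 and 2 are confused with each other.
y_true = [1, 2, 3, 4]
y_pred = [2, 1, 3, 4]
four_class = accuracy(y_true, y_pred)
three_class = accuracy(y_true, y_pred, merge={2: 1})
```

In this toy case the four-class accuracy is 0.5, while the merged three-class accuracy is 1.0, because both errors were confusions between categories 1 and 2.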

The work [89] considers day-3 embryo grading after IVF. The grading was performed on five classes, based on the Veeck criteria [145]. Several DL architectures were considered, ResNet18, ResNet34, ResNet50, ResNet101 [21], DenseNet121, DenseNet169 [22], Xception [20], and MobileNetV2 [146], developed in fastai [147]. The ResNet50 model achieved the highest accuracy of 91.79%. The dataset included 1084 images from 1226 embryos of 246 IVF cycles captured at the third day after fertilization, obtained from the Yasmin IVF Clinic, Jakarta, Indonesia.

In [148], the authors developed two platforms for embryo image capture and evaluation. The first is a standalone device which can be controlled wirelessly through a smartphone, with a development cost of around USD 85. The second device is attachable to a smartphone and had a cost of around USD 3. The Xception architecture [20] was used, pretrained on ImageNet [62]. The accuracy for classifying between blastocysts and non-blastocysts captured from the standalone system was 96.69%, and for the smartphone it was 92.16%, so both systems performed well. The dataset consisted of over 2450 embryos collected using a commercial time-lapse imaging system, along with additional images captured with the devices and gathered from the Massachusetts General Hospital’s fertility center. Training was thus performed using both the high-quality images and the images captured from the devices separately.

The work [149] studied DL as an early detection system for possible adverse outcomes and to monitor performance of embryologists performing intracytoplasmic sperm injection. The model considered was Xception [20], pretrained on ImageNet [62]. The dataset consisted of EmbryoScope videos from 2366 embryos obtained from a fertility center in Boston, MA, USA.

In [150], a model was developed for predicting the euploidy of blastocysts and live birth in PGT-A treatments, combining morphokinetic and morphological characteristics of blastocysts, as well as the patient’s clinical parameters. The methodology combined t2, t3, t5, tB, KIDScore, Gardner grade, female age, and the number of embryonic frozen days with two-logistic regression to predict the euploidy of blastocysts. The AUC for euploidy prediction was 0.879, which was a positive outcome, but the model was not successful in predicting live birth after frozen embryo transfer. The study consisted of 403 patients who underwent PGT-A treatment at the Reproductive Medicine Centre of Xuzhou Maternal and Child Health Care Hospital (2019–2022).

In [151], several models were tested for ploidy prediction using morphokinetic, embryological, and clinical data. The models considered were mixed-effects multivariable logistic regression, random forest classifier, extreme gradient boosting, and a deep learning architecture. The logistic regression model with 22 predictors was the best performing one, with an AUC of 0.71. When only morphokinetic predictors were used, the AUC was 0.61. The dataset consisted of 8147 biopsied blastocysts from 1725 patients, gathered from nine IVF clinics in the UK (2012–2020).

The work [152] considered embryo ploidy status classification. Two cases were considered: the first was a classification between euploid/aneuploid/mosaic, while the second was between euploid/(aneuploid + mosaic). In the first case, the models considered achieved low accuracy scores. For the second case, a gradient boosting algorithm with histogram of oriented gradients and principal component analysis performed the best, with an accuracy of 0.74. The second best model was a decision tree with histogram of oriented gradients and principal component analysis, which achieved an accuracy of 0.70. Other models like DenseNet [22] had inferior results. The dataset consisted of 1123 embryo samples from 483 couples, obtained from the Morula IVF Jakarta Clinic, Jakarta, Indonesia.

The work [153] studied the prediction of oocyte maturation in the GnRH antagonist flexible IVF protocol. The algorithm considered was XGBoost [154], which achieved an accuracy of 75% for the prediction of a high oocyte maturation rate. The most important parameters for prediction were the peak estradiol level on trigger day, the estradiol level on antagonist initiation day, the average dose of gonadotropins per day, and the progesterone level on trigger day. The dataset consisted of 462 patients (2005–2015) of age less than or equal to 38 years on their first IVF-ICSI cycle using a flexible GnRH antagonist protocol.

In [155], the problem of blastocyst classification into two classes, suitable/unsuitable for transfer, was considered. A DL ensemble model was developed based on AlexNet [134], VGG11 [18], and a variant of VGG with no dropout layers, in contrast to the AlexNet and VGG11. The ensemble model has an accuracy of 78%, more than the individual models. By further implementing a voting classifier from five instances of the ensemble model, the accuracy reaches 81%. The dataset consisted of 2269 images.
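
A voting classifier over several model instances, as described above, can be sketched with simple majority (hard) voting. Whether [155] uses hard or soft voting is not stated here, so hard voting is an assumption, as are the function name `majority_vote` and the toy predictions.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine class predictions from several models by majority vote.

    predictions_per_model: list of equal-length prediction lists, one per
    model instance. With an odd number of binary voters no ties can occur.
    """
    n_samples = len(predictions_per_model[0])
    voted = []
    for i in range(n_samples):
        votes = Counter(model[i] for model in predictions_per_model)
        voted.append(votes.most_common(1)[0][0])
    return voted


# Five hypothetical instances predicting 1 (suitable) or 0 (unsuitable).
instance_predictions = [
    [1, 0, 1],
    [1, 0, 0],
    [0, 0, 1],
    [1, 1, 1],
    [1, 0, 0],
]
final = majority_vote(instance_predictions)
```

Voting of this kind tends to help when the instances make partly independent errors, which is one plausible reason for the reported gain from 78% to 81%.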

In [156], small non-coding RNAs were studied as biomarkers for the prediction of embryo quality. A prediction pipeline model was developed for this, utilizing an XGBoost model, a Lasso model, extra random trees, and a voting mechanism. Two miRNAs and five piRNAs could be used to predictively distinguish a high-quality embryo from a low-quality one, with an average accuracy of 86%. The dataset consisted of spent blastocyst medium samples from 60 patients with idiopathic infertility.

As a variation of the embryo grading problem, the work [157] considered patient identification from cleavage and blastocyst stage embryos. The model was initially fed embryo images on day 3 and day 5 from each patient. It could then evaluate embryos at different timepoints on day 3 and 5 and match them to the same patient. Such a task can be of high importance for specimen tracking in a lab. The CNN model was based on [98,158], in combination with a genetic algorithm [115], which computed the unique identification scores for the embryos. The model achieved 100% accuracy for patient identification when choosing from random pools of eight patient embryo cohorts. The dataset consisted of TLI data from 400 patients from the Massachusetts General Hospital (MGH) Fertility Center in Boston, MA.

In [159], a random forest algorithm was developed to predict blastocyst ploidy, using copy number variation patterns. The ensemble learner used multiple decision trees and a bagging strategy. The embryos were categorized in three grade classes, based on their euploidy probability. Overall, the A- and B-grade embryos had better outcomes compared to the C-grade ones. The initial dataset consisted of 345 blastocyst embryos, and the system was validated on 266 patients.

In [160], a model was considered for binary classification of embryos (good/poor). The model was based on Inception-V121, pretrained on ImageNet [62]. The accuracy achieved was 93.8%. A dataset of 68 images was used, obtained from GitHub.

In [161], a pipeline termed BlastAssist was developed for measuring features of embryos. The features considered included fertilization status, cell symmetry, developmental timing, pronuclei fade time, degree of fragmentation on day 2, and blastulation time. The pipeline consisted of six neural networks for different tasks, building upon results of previous works [162,163,164]. The model can perform on par with or outperform embryologists. The dataset consisted of time-lapse image data of 32,939 embryos, with 67,043,973 images overall, with clinical annotations, from the IVF unit of the Tel Aviv Sourasky Medical Center in Israel (2012–2017).