1. Introduction

Understanding cognitive load is essential in collaborative learning contexts, as it directly affects how individuals process, store, and apply new information [1, 2]. According to Cognitive Load Theory (CLT), learning becomes less effective when the cognitive demands of a task exceed the limited capacity of working memory [3, 4]. Managing cognitive load is particularly critical in complex learning settings, such as collaborative learning, where learners must juggle multiple streams of information, coordinate with peers, and make decisions under time constraints [5]. In such environments, cognitive overload can hinder deep learning and effective collaborative learning, while well-managed cognitive load can promote engagement and knowledge construction [6]. As a result, understanding and accurately measuring cognitive load is fundamental for optimizing learning performance and promoting meaningful learning experiences.

Cognitive load measurement in collaborative learning has traditionally relied on subjective measures, typically self-reports or rating scales in which participants assess their perceived mental effort and task difficulty [7]. Common tools, such as Paas’s cognitive load scale or the NASA Task Load Index [8, 9], are frequently used to measure these perceptions. These methods provide valuable insights into learners’ experiences of cognitive load across different tasks, but they can be prone to bias and inconsistency, as participants may struggle to accurately estimate their cognitive load. Moreover, subjective ratings are typically gathered after task completion, making it difficult to capture real-time cognitive load fluctuations during learning. These limitations may therefore reduce the reliability and validity of the findings.

In recent years, advancements in neurophysiological and behavioral measurement techniques have opened up new possibilities for more objective and precise assessments of cognitive load. Neuroimaging techniques are increasingly employed in the field of education to understand brain activity associated with learning, cognition, and instructional design. For instance, Friedman et al. demonstrated the potential of electroencephalography (EEG) combined with machine learning models like Random Forest and XGBoost to predict cognitive load during problem-solving tasks [10], highlighting EEG features such as power spectrum metrics and neural connectivity as strong predictors of cognitive load. Similarly, Misra et al. explored cognitive distraction detection using machine learning techniques, underscoring the effectiveness of combining multiple data sources, including eye-tracking and physiological measures, to improve the accuracy of cognitive load detection [11]. Karmakar et al. further illustrated the utility of functional near-infrared spectroscopy (fNIRS) for real-time cognitive load detection by leveraging deep learning models to classify cognitive states [12].

Despite the growing use of these techniques, it remains necessary to explore and refine the specific features of physiological variables that have been identified as predictive of learners’ cognitive load. This study addresses this gap by combining fNIRS and eye-tracking data to predict learners’ cognitive load during collaborative learning. Data were collected from participants engaged in a collaborative ideation task, and various machine learning algorithms, such as Random Forest, Decision Tree, and Support Vector Machine, were applied to classify cognitive load levels. By integrating fNIRS and eye-tracking data, this study aims to offer a more accurate, data-driven approach to cognitive load measurement, moving beyond traditional methods to deepen our understanding of cognitive dynamics in collaborative learning environments.

2. Literature Review

2.1. Cognitive Load Measurement in Collaborative Learning

Cognitive Load Theory (CLT), introduced by John Sweller in the late 1980s, provides a theoretical framework for optimizing instructional design in alignment with the cognitive architecture of learners [1]. The theory is grounded in the understanding that human working memory is limited in capacity, allowing individuals to process only a small amount of information at any given moment [8, 13]. When these limits are exceeded, cognitive overload occurs, impeding the learning process [14, 15].

CLT identifies three types of cognitive load: intrinsic, extraneous, and germane [16, 17, 18]. Intrinsic cognitive load is associated with the inherent complexity of the material being learned and directly impacts working memory [18]. In contrast, extraneous cognitive load arises from unnecessary or irrelevant elements in instructional design that detract from learning [19]. Germane cognitive load involves the mental effort required to process, organize, and integrate information in a meaningful way [1, 15]. The goal of instructional design, according to CLT, is to minimize extraneous load while effectively managing intrinsic and germane loads to enhance learning outcomes [2]. This study focuses on overall cognitive load, which encompasses the mental effort from all three types. This holistic perspective aligns with the use of both subjective and physiological indicators, such as fNIRS and eye-tracking, to capture real-time cognitive effort without attempting to disaggregate the subtypes of load.

Research demonstrates that collaborative learning often imposes a greater cognitive load on students compared to individual learning [20, 21, 22]. This increased load arises from the demands of multitasking, as students must simultaneously process information while managing interaction and communication within the group. Cognitive load has traditionally been assessed using subjective questionnaires [3]. Using these metrics, subjective measures such as multi-item scales have been moderately effective in measuring various aspects of the learning process [23]. However, questions remain about whether learners can reliably distinguish between different forms of cognitive load, particularly in situations involving multiple overlapping cognitive processes. Recently, physiological methodologies, such as fNIRS and eye-tracking, have been proposed as objective measures of cognitive load [24, 25]. For instance, Multu-Bayraktar et al. stated that physiological indicators such as fixation and EEG frequency bands provide valuable insights into cognitive load [26]. Their study found a positive correlation between fixation counts and EEG band power, and a negative correlation between fixation counts and retention performance, highlighting how physiological measures can reveal cognitive load effects not captured by self-reports. Similarly, İşbilir et al. showed that combining fNIRS and eye-tracking data can differentiate expert and novice responses during task execution [27]. Experts displayed increased dlPFC activation and longer fixations following unexpected events, while novices showed weaker responses, indicating differences in attention and cognitive control. These findings support the use of physiological measures for capturing real-time cognitive dynamics in complex tasks.

These methods provide significant advantages, including objectivity and real-time monitoring, which enhance our understanding of cognitive processes [28, 29]. However, their reliability and interpretation remain hindered by confounding factors, including the interplay between task complexity, learning activities, and stress [26, 30]. Based on these findings, this study seeks to validate fNIRS and eye-tracking indicators to develop a predictive model for measuring learners’ cognitive load in collaborative learning.

2.2. Cognitive Load Measures: fNIRS and Eye-Tracking

While traditional self-report measures and behavioral measurement remain fundamental in educational research, the integration of neuroimaging techniques provides an objective and biologically grounded approach to understanding cognitive load and neural function across diverse learning tasks [31]. These methods enable a more precise evaluation of cognitive processes, thereby informing data-driven instructional strategies to optimize learning outcomes. Tommerdahl introduced the Levels of Development Model [32], which outlines how the gap between neuroscience and education can be bridged by examining neurobiological mechanisms underlying learning phenomena, such as collaboration. This framework emphasizes the importance of interdisciplinary translation (integrating insights from neuroscience, cognitive neuroscience, psychological mechanisms, and educational theory) before applying findings to classroom practices. Importantly, rather than viewing these stages as discrete, the model underscores the need for a dynamic feedback loop, ensuring that findings from different levels inform and refine each other for a more holistic understanding of learning.

In the field of cognitive neuroscience, a range of neuroimaging techniques enable the real-time measurement of neural activity during cognitive and learning tasks, including electroencephalography (EEG), functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy (fNIRS). Although each of these modalities provides distinct neural markers, all contribute to a more comprehensive understanding of the brain’s role in learning and cognition. Among these, fNIRS offers key advantages in spatial resolution and reduced susceptibility to motion artifacts, allowing measurements to be collected under freer and more naturalistic conditions. fNIRS uses near-infrared light to detect changes in oxygenated and deoxygenated hemoglobin concentrations, providing an indirect index of cortical activation through hemodynamic responses [33]. Its portability and ecological validity make it particularly well-suited for studying cognitive load in real-world educational settings, such as classrooms [34]. Working memory and executive function, both crucial for knowledge retrieval, idea generation, and discussion, are supported by the prefrontal cortex (PFC), which has led numerous studies to focus on its role in learning-related cognitive demands [35]. Additionally, regions associated with social cognition, such as the right temporoparietal junction (rTPJ), have been explored in the context of collaborative learning [36]. However, it is important to recognize that the brain functions as an interconnected network rather than a collection of isolated regions. Thus, a comprehensive investigation of neural activity must consider both the cognitive and social dimensions of learning, ensuring that instructional designs effectively support cognitive efficiency. Emerging research further highlights how neuroimaging data, such as that captured by fNIRS, can be integrated with machine learning models to build predictive frameworks of cognitive load. These data-driven models hold potential for developing adaptive learning systems that personalize instruction based on real-time cognitive state estimation.

Recent research also highlights the potential of eye-tracking technology in advancing multimedia learning theories by analyzing learners’ cognitive processes through visual behavior [37]. Eye-tracking data provide valuable insights into attention patterns, revealing areas of focus, overlooked elements, and potential distractions [38]. In cognitive load measurement, eye-tracking indicators such as fixation metrics and pupil diameter are critical for assessing cognitive activity and mental effort.

Fixations, where new information is processed, are essential for evaluating perceptual and cognitive activity, serving as direct indicators of cognitive engagement [37]. Studies consistently demonstrate a strong positive correlation between metrics like total fixation time and cognitive load, with longer fixation times often reflecting increased cognitive demand [39]. Pupil diameter, as established by Krejtz et al., is another reliable measure of cognitive load, with dilation corresponding to task difficulty [25]. This phenomenon, known as the Task-Evoked Pupillary Response (TEPR), reinforces the validity of pupil size as an indicator of cognitive demand [40, 41]. By analyzing these eye-tracking metrics, researchers gain comprehensive insights into learners’ cognitive processing, making eye-tracking an invaluable tool for understanding the cognitive demands of instructional materials and the strategies learners employ. Consequently, eye-tracking plays a pivotal role in assessing cognitive load in both individual and collaborative learning environments.

Previous research has demonstrated the utility of combining fNIRS and eye-tracking data to improve the accuracy of cognitive load prediction in individual learning or working settings. For instance, Yu et al. and Qu et al. successfully employed multimodal approaches in mental arithmetic and human–computer interaction tasks [42, 43], showing that data fusion can outperform unimodal models in classifying cognitive workload. Broadbent et al. explored cognitive load under dual-task driving conditions [44]. However, these studies have primarily focused on individual learning in controlled settings. There is hardly any research examining multimodal cognitive load prediction within a collaborative learning context, where social and cognitive processes interact in more complex ways. This study addresses this gap by applying fNIRS and eye-tracking data to model individual cognitive load during collaborative problem-solving, offering new insights into cognitive dynamics in collaborative learning environments.

2.3. Machine Learning in Cognitive Load Measurement

Traditional data analysis methods, such as correlations, analysis of variance, and linear regressions, have long been standard techniques for hypothesis testing in research. However, the increasing availability of multimodal data (MMD) necessitates the adoption of more advanced analytical techniques, such as machine learning algorithms, for more accurate data prediction and classification. Machine learning has been effectively utilized in multimodal learning analytics to classify and predict cognitive load, offering insights that traditional statistical methods may overlook. For example, logistic regression, a relatively simple yet powerful machine learning technique, has been used to predict student outcomes based on MMD, uncovering patterns that are less detectable with conventional approaches [45]. Additionally, more complex machine learning models, such as Random Forest and Support Vector Machines (SVMs), are well-suited for capturing non-linear relationships and feature interactions in educational data. This growing body of work falls under the broader field of multimodal data fusion, which involves integrating signals from multiple data streams (e.g., physiological, behavioral, neural) to enhance prediction performance. In the context of cognitive load research, physiological fusion of fNIRS and eye-tracking data has shown particular promise. For instance, Badarin et al. used this multimodal approach to investigate compensatory brain mechanisms during prolonged working memory tasks [33], revealing how changes in network-level neural efficiency and attention patterns reflect adaptation to mental fatigue. Similarly, Wang et al. applied Linear Discriminant Analysis to jointly analyze prefrontal oxygenation and gaze data during engineering design tasks [46], achieving over 91% classification accuracy between near- and far-transfer conditions. These studies highlight the strength of fusion approaches, in which features from multiple modalities are concatenated before classification, and demonstrate their effectiveness in improving the accuracy of cognitive state detection. Chakladar and Roy demonstrated that machine learning applied to multimodal physiological data (e.g., EEG, fNIRS, eye-tracking) outperforms unimodal models [47]. They argue that combining signals provides complementary information and enables robust detection of cognitive load variations in dynamic tasks.

Most existing studies have focused on individual task settings, such as arithmetic reasoning, human–computer interaction, or driving simulations. Very few have applied multimodal data fusion using machine learning in collaborative learning contexts, where cognitive demands are deeply intertwined with social interaction and coordination. This study contributes to the field by applying the fusion of fNIRS and eye-tracking data to predict individual cognitive load in a collaborative learning setting, extending multimodal analytics into socially interactive learning environments.

2.4. Research Questions

RQ1. To what extent do machine learning models, trained on features extracted from fNIRS and eye-tracking data, accurately predict individual cognitive load during collaborative learning?

RQ2. How do these features contribute to the accurate prediction of individual cognitive load during collaborative learning?

3. Materials and Methods

3.1. Participants

This study was conducted at a university in Singapore, where participants (30 male, 48 female) aged 21 to 40 years were recruited. The participants, recruited from diverse disciplines including education, engineering, and mathematics, were all right-handed and had normal or corrected vision. While no restrictions were imposed on gender, age, or academic background when forming dyads, the participants were paired with individuals they did not know to reduce the potential effects of interpersonal familiarity on collaboration outcomes. In total, 39 dyads were formed. Ethical approval was obtained from the university’s Institutional Review Board, and all participants provided written informed consent prior to this study in accordance with established ethical guidelines.

3.2. Experimental Setup and Tasks

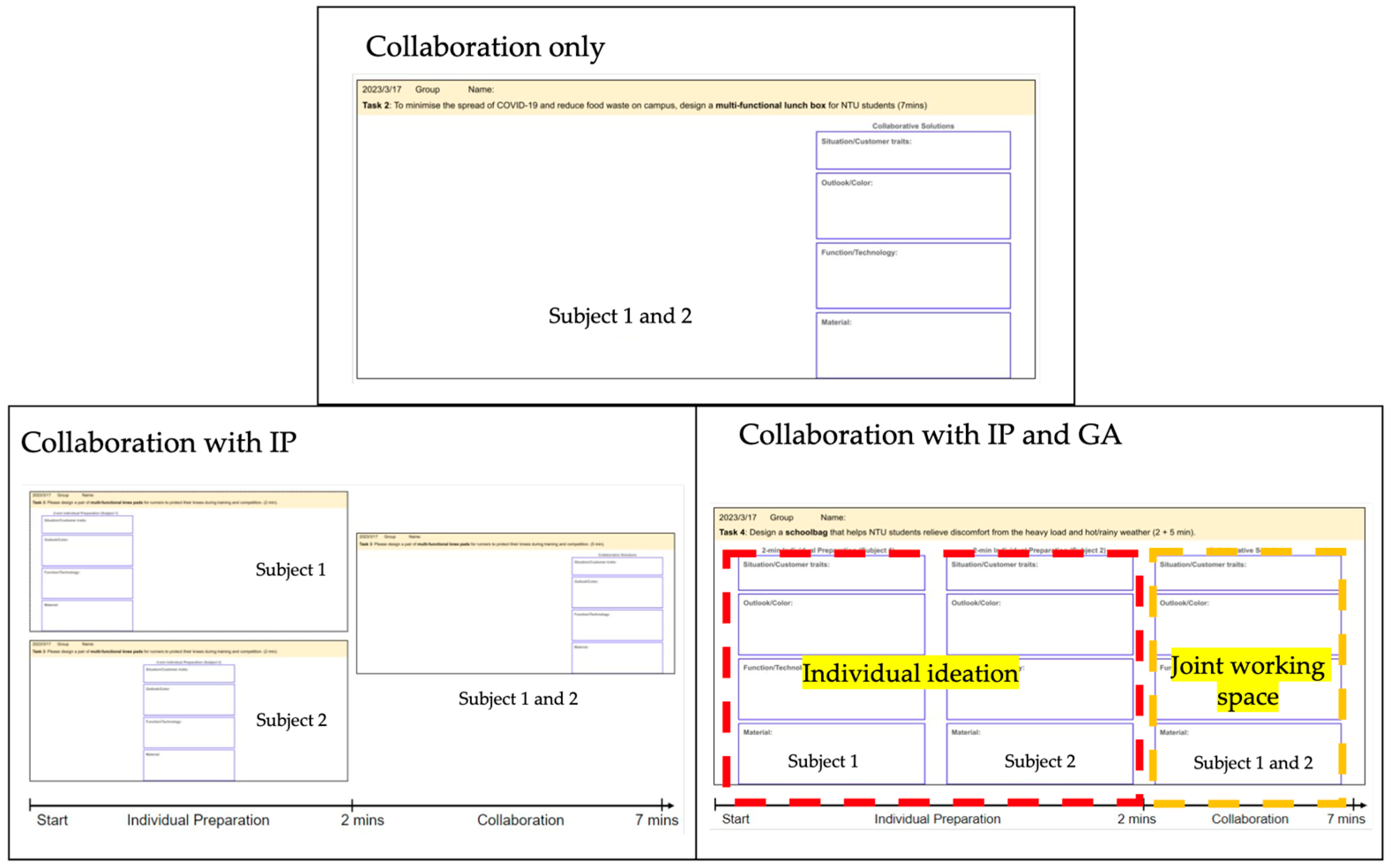

The experiment took place in a co-located, computer-supported collaborative learning (CSCL) environment, where participants worked side by side but interacted primarily through a shared online workspace. The setup consisted of two computers, two eye-tracking sets, and an fNIRS system for capturing neurophysiological responses from both participants in each dyad simultaneously (see Figure 1A). Participants sat at separate computers to access an online platform (Google Slides) designed to support real-time collaboration through synchronized updates. A crossover design was employed, with each dyad completing three randomly ordered, comparable product ideation tasks developed by the research team in a single session. Each task lasted 7 min, requiring dyads to design common everyday products based on given scenarios. Verbal discussions were permitted to allow participants to jointly generate, develop, and integrate their ideas. While working together, participants’ gaze behaviors and prefrontal brain activity were recorded to examine the physiological mechanisms of cognitive processing during collaboration (Figure 2).

3.3. Data Collection

Eye-tracking data were collected from each participant using a set of lightweight eye-tracking glasses (version 1.0.7.4., ETVision, Argus Science, Tyngsborough, MA, USA). These devices captured binocular gaze data at a sampling rate of 180 Hz. Before each trial, gaze calibration was performed using the ETVision software’s automatic calibration tool (version 1.0.7.4., ETVision, Argus Science, Tyngsborough, MA, USA). Participants were instructed to fixate on a target against the screen, allowing the system to automatically map gaze coordinates to the scene camera. The integrated eye and scene cameras continuously monitored the participant’s point of gaze in real time throughout the task (see Figure 1B).

To examine brain activation patterns, an fNIRS-based hyperscanning approach was employed to simultaneously record hemodynamic responses in the prefrontal cortex (PFC) and right temporoparietal junction (rTPJ) at a sampling rate of 10 Hz (Oxymon MkIII, Artinis Medical Systems, Elst, The Netherlands). For each dyad, twenty optodes (eight transmitters and twelve receivers) were used, operating at 760 nm and 850 nm wavelengths to detect changes in hemoglobin levels. The optodes were equally divided between participants, positioned according to the international 10-10 EEG placement system, and spaced 3 cm apart, yielding ten measurement channels per participant (Figure 2). Differential path-length factors (DPFs) were calculated individually using the system’s inbuilt formula to account for age-related variations.

Following each experimental condition, participants completed a post-task survey to self-report the cognitive load experienced during the activity. A 20-item questionnaire adapted from the cognitive load scale was used for retrospective assessment [40, 48], covering a total of five dimensions: (1) task demands (TDE), (2) mental effort (MEN), (3) perceived task difficulty (DIF), (4) self-evaluation (SEV), and (5) usability (USE). TDE assessed the level of physical effort exerted to complete the ideation task, while MEN measured the cognitive resources participants devoted to the task. The MEN dimension was aligned with the concept of germane load [48]. DIF captured individuals’ subjective ratings of task complexity, and SEV gauged participants’ confidence in their performance. Finally, USE assessed the ease of online interface usage, particularly regarding the inclusion of collaborative tools like individual preparation and group awareness support features. Each item was rated on a 7-point Likert scale (1 = “Not true at all” to 7 = “Extremely true”), with overall cognitive load scores calculated as the sum of all items.

To ensure stable cognitive load measurement at the individual level, we computed the average score for each participant across the three experimental conditions for each of the five dimensions. This was performed by summing all item responses within a dimension for each condition and then taking the mean of the three condition scores. The resulting participant-level average scores were used in subsequent analysis.
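The scoring procedure described above can be sketched as follows. This is a minimal illustration rather than the authors' analysis script: the function name, the data layout, and the use of four items per dimension (20 items spread evenly over five dimensions) are assumptions made for the example.

```python
from statistics import mean

DIMENSIONS = ("TDE", "MEN", "DIF", "SEV", "USE")


def participant_scores(responses):
    """Average summed item ratings per dimension over all conditions.

    responses: {condition: {dimension: [item ratings on the 1-7 scale]}}
    """
    scores = {}
    for dim in DIMENSIONS:
        # Sum the items of one dimension within each condition...
        per_condition = [sum(cond[dim]) for cond in responses.values()]
        # ...then take the mean of those sums across conditions.
        scores[dim] = mean(per_condition)
    return scores


# Hypothetical ratings for one participant (not study data).
# Assumption: four items per dimension, identical across dimensions for brevity.
example = {
    "task_1": {dim: [2, 3, 2, 3] for dim in DIMENSIONS},
    "task_2": {dim: [3, 3, 3, 3] for dim in DIMENSIONS},
    "task_3": {dim: [4, 3, 4, 3] for dim in DIMENSIONS},
}
scores = participant_scores(example)
overall = sum(scores.values())  # overall load as the sum over dimensions
```

Averaging over the three conditions trades within-session variation for a more stable participant-level estimate, which is the stated motivation in the text.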

3.4. Data Preprocessing

Using the Oxysoft software (version 3.2.72, Artinis Medical Systems, Elst, The Netherlands), raw fNIRS data were recorded throughout the entire session to capture all three collaboration-based activities. Data files were subsequently segmented into three 7 min recordings, one for each collaborative activity, using event markers to denote trial onset. While changes in oxygenated hemoglobin concentrations, deoxygenated hemoglobin concentrations, and total oxygenation levels were collected, the data analysis in this study focused on oxygenated hemoglobin (O2Hb) data, given its sensitivity to changes in cerebral blood flow [49]. The preprocessing of the fNIRS data involved denoising and motion correction to enhance signal quality, followed by calculating O2Hb values as an indicator of brain activation within targeted regions, thus serving as a measure of cognitive load. Key preprocessing steps included pruning channels with low signal-to-noise ratios or channels that did not meet optimal source–detector distances, applying a bandpass filter with cutoffs at 0.01 Hz and 0.1 Hz to remove high- and low-frequency noise, correcting for motion artifacts, and extracting specific timepoint values based on event markers to isolate O2Hb data for further analysis.

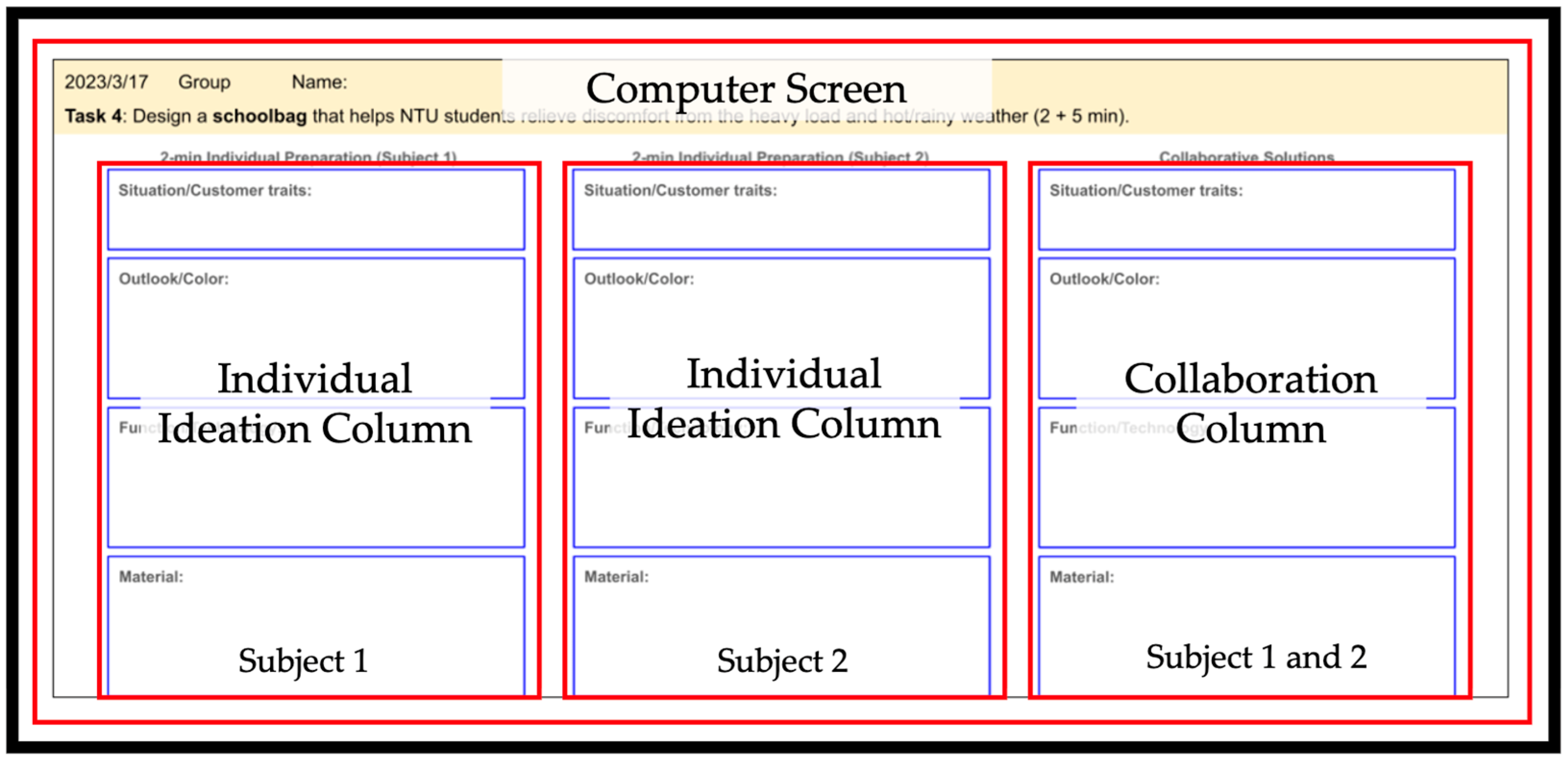

Eye-tracking data were collected and analyzed through area-of-interest (AOI) analysis to extract relevant features, including Fixation Duration, Fixation Counts, and Pupil Diameter. The analysis was performed with the software provided by the eye-tracking manufacturer (version 1.0.8.5, ETAnalysis, Argus Science, Tyngsborough, MA, USA). The AOIs were defined based on specific regions on the computer screen, including individual ideation columns and the collaborative working column (as illustrated in Figure 3). Each fixation on the collaboration columns was interpreted as an indication of participants’ cognitive engagement with the task. Additionally, metrics such as Fixation Duration and Counts provide insights into visual attention, while Pupil Diameter can serve as a physiological marker of cognitive load and arousal [50, 51]. Together, these metrics enabled a comprehensive understanding of participants’ visual behavior and cognitive processing during the collaborative task.
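To make the AOI-based metrics concrete, the sketch below derives fixation count, total and average fixation duration, and first fixation time for one AOI. It is illustrative only: the study used the manufacturer's ETAnalysis software, and the `Fixation` record, the AOI labels, and the sample values here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start_s: float     # fixation onset, in seconds from task start
    duration_s: float  # fixation duration, in seconds
    aoi: str           # hypothetical AOI label for the fixated region


def aoi_fixation_metrics(fixations, aoi):
    """Summarize the fixations that landed on a single AOI."""
    hits = [f for f in fixations if f.aoi == aoi]
    count = len(hits)
    total = sum(f.duration_s for f in hits)
    return {
        "fixation_count": count,
        "total_fixation_duration": total,
        "average_fixation_duration": total / count if count else 0.0,
        # Latency to the first fixation on this AOI (None if never fixated).
        "first_fixation_time": min((f.start_s for f in hits), default=None),
    }


# Hypothetical fixation sequence (not study data).
fixations = [
    Fixation(0.5, 0.25, "individual"),
    Fixation(1.0, 0.5, "collaboration"),
    Fixation(2.0, 0.25, "collaboration"),
    Fixation(3.0, 0.75, "collaboration"),
]
metrics = aoi_fixation_metrics(fixations, "collaboration")
```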

Finally, self-reported cognitive load level, gathered through survey questionnaires, was incorporated to measure perceived individual cognitive load. These scores were then used to categorize individuals into high- and low-cognitive load groups, based on a median split of the overall score distribution. By combining these three data sources, we aimed to achieve a comprehensive understanding of cognitive load dynamics during collaborative activities.

This study comprised three distinct collaborative ideation tasks, resulting in a total of 234 collaborative learning events (78 participants × 3 tasks). After excluding events with incomplete or unusable fNIRS, eye-tracking, or cognitive load assessment data, our final dataset comprised 188 collaborative learning events, each containing synchronized fNIRS signals, eye-tracking metrics, and corresponding cognitive load ratings. This dataset provided the foundation for training and evaluating machine learning models to predict individual cognitive load during collaborative activities.

3.5. Features Extraction

Feature extraction involved deriving relevant metrics from the fNIRS and eye-tracking data. Focusing on the prefrontal cortex (PFC) and right temporoparietal junction (rTPJ), the mean oxygenated hemoglobin (O2Hb) concentration was extracted from each region as a primary indicator of neural activity. From the eye-tracking data, several features were calculated that reflect visual attention and cognitive processing: Fixation Count, Total Fixation Duration, Average Fixation Duration, Average Inter-Fixation Duration, Average Inter-Fixation Degree (angular distance between consecutive fixations), Average Pupil Diameter, and First Fixation Time (latency to first fixation on the relevant stimulus). Cognitive load was categorized into two levels, “Low” and “High”, by applying a median split to participants’ mean cognitive load scores, which were calculated across the five dimensions of the cognitive load scale: scores below the median were designated “Low” and scores above the median were designated “High”.
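The median split used for labeling can be sketched as below. The participant IDs and scores are hypothetical, and the handling of scores exactly equal to the median (labeled “Low” here) is an assumption, since the text does not state a tie rule.

```python
from statistics import median


def median_split(scores):
    """Label each participant "Low" or "High" relative to the median.

    scores: {participant_id: mean cognitive load score}
    """
    cutoff = median(scores.values())
    # Assumption: ties with the median fall into the "Low" group.
    return {pid: ("High" if s > cutoff else "Low") for pid, s in scores.items()}


# Hypothetical participant-level scores (not study data).
scores = {"P01": 3.2, "P02": 4.8, "P03": 4.1, "P04": 5.5}
labels = median_split(scores)
```

A median split yields roughly balanced classes, which keeps accuracy and F1 comparable, at the cost of treating participants just above and just below the cutoff as different.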

3.6. Machine Learning Modeling

This study utilized machine learning to predict individual learners’ cognitive load during collaborative learning by analyzing features extracted from the fNIRS and eye-tracking data. We evaluated seven distinct models to capture a wide array of data characteristics: Logistic Regression, Decision Tree, Naive Bayes, Support Vector Machine (SVM), Multi-Layer Perceptron (MLP), XGBoost, and Random Forest. These models were chosen for their diverse strengths in handling various data types and in capturing complex relationships. The dataset consisted of 188 collaborative events, each described by nine features derived from the participant’s fNIRS and eye-tracking data. To assess the generalization ability of these models, a between-subject validation strategy was employed. Specifically, all participants were randomly allocated to distinct subsets, with 70% used for training, 20% for validation, and 10% for testing. Each participant’s data appeared in exactly one subset, which prevents data leakage and more accurately simulates the models’ ability to predict cognitive load for entirely new individuals. Every model was trained on the training set, while hyperparameter optimization was conducted using grid search cross-validation on the validation set. Model performance was then assessed on an independent test set using accuracy, precision, recall, F1 score, and Area Under the Receiver Operating Characteristic Curve (AUC). Furthermore, to understand the contribution of each feature to the cognitive load prediction, feature importance was computed. The computation of feature importance depended on the employed model, mainly utilizing the standard methods available in the scikit-learn library. For tree-based models (Decision Tree, XGBoost, and Random Forest), importance was assessed using criteria like Gini impurity. For linear models (Logistic Regression, SVM), importance was inferred from the absolute value of the coefficient or weight vectors.
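The participant-level allocation can be illustrated with the standard library alone. This is a sketch under assumptions, not the authors' code: the function names, the participant ID format, the rounding of subset sizes, and the fixed seed are all hypothetical choices.

```python
import random


def split_participants(participant_ids, train=0.7, val=0.2, seed=0):
    """Assign each participant to exactly one of train/val/test."""
    ids = sorted(set(participant_ids))
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_train = round(train * len(ids))
    n_val = round(val * len(ids))
    return {
        "train": set(ids[:n_train]),
        "val": set(ids[n_train:n_train + n_val]),
        "test": set(ids[n_train + n_val:]),  # the remaining participants
    }


def assign_events(events, splits):
    """Route every event to the subset that owns its participant.

    events: iterable of (participant_id, features, label) tuples.
    """
    owner = {pid: name for name, members in splits.items() for pid in members}
    routed = {name: [] for name in splits}
    for event in events:
        routed[owner[event[0]]].append(event)
    return routed


# Hypothetical IDs standing in for the 78 participants.
participants = [f"P{i:02d}" for i in range(1, 79)]
splits = split_participants(participants)
```

Because events are routed by participant rather than shuffled individually, no person contributes to both training and test data. In scikit-learn, `GroupShuffleSplit` or `GroupKFold` provide comparable grouping behavior; whether the authors used them is not stated.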

All data processing and analysis were conducted using the Python programming language (version 3.9.9). Key libraries utilized include pandas (version 2.0.3) for data manipulation, NumPy (version 1.23.4) for numerical computations, and scikit-learn (version 1.3.2) for machine learning tasks. The XGBoost model was implemented using the XGBoost library (version 2.1.1). The experiments were performed on a macOS Sequoia v. 15 system.

4. Results

4.1. Predictive Accuracy of Machine Learning Models

The test-set performance of the machine learning models in predicting individual cognitive load is summarized in Table 1. Among the models evaluated, Random Forest achieved the highest F1 score at 0.88, followed by XGBoost at 0.83 and Decision Tree at 0.82. SVM, MLP, and Naive Bayes demonstrated similar F1 scores of 0.76, 0.75, and 0.74, respectively. In contrast, Logistic Regression demonstrated a lower F1 score of 0.67. All models except Logistic Regression reached an F1 score of at least 0.74, highlighting the efficacy of machine learning in leveraging fNIRS and eye-tracking data for cognitive load prediction.
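For reference, the F1 score reported here is the harmonic mean of precision and recall. The sketch below computes all three for a binary “Low”/“High” task; the labels and predictions are made up for illustration, and treating “High” as the positive class is an assumption.

```python
def binary_metrics(y_true, y_pred, positive="High"):
    """Return (precision, recall, f1) for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical test-set labels and predictions (not study data).
y_true = ["High", "High", "High", "High", "Low", "Low", "Low", "Low"]
y_pred = ["High", "High", "High", "Low", "High", "Low", "Low", "Low"]
precision, recall, f1 = binary_metrics(y_true, y_pred)
```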

Comparing the F1 scores of models utilizing different data modalities, the Random Forest model revealed a clear advantage in bimodal integration. As shown in Table 2, the Random Forest model incorporating both fNIRS and eye-tracking data achieved the highest F1 score (0.87), substantially outperforming models based on either modality alone. Specifically, the model using only eye-tracking data achieved an F1 score of 0.79, while the model using only fNIRS data achieved an F1 score of 0.68. Consistent with the Random Forest findings, Naive Bayes, SVM, and MLP also demonstrated superior performance when integrating both fNIRS and eye-tracking data. These findings underscore the complementary nature of fNIRS and eye-tracking data in capturing distinct facets of cognitive load during collaborative problem-solving. The superior performance of the bimodal models suggests that integrating these modalities provides a more comprehensive and nuanced assessment of cognitive load than relying on a single modality. However, not all models derived equivalent benefits from data fusion. Notably, for Logistic Regression, the model utilizing only fNIRS data achieved the highest performance, surpassing both the bimodal model and the eye-tracking-only model. Similarly, the Decision Tree and XGBoost models yielded slightly better or comparable performance using single modalities compared to the bimodal approach. This pattern may be partly attributed to the relatively limited sample size (188 samples), which could constrain the ability of more complex models or those with increased feature dimensionality to fully leverage multimodal fusion and may lead to a higher risk of overfitting.
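The modality comparison amounts to training the same model on three views of the nine features listed in Section 3.5. The sketch below shows one way to build those views; the dictionary layout and function name are hypothetical, and building the bimodal view by simple concatenation of the two feature sets is an assumption about how fusion was done.

```python
FNIRS_FEATURES = ("PFC", "rTPJ")
EYE_FEATURES = (
    "Fixation Count",
    "Total Fixation Duration",
    "Average Fixation Duration",
    "Average Inter-Fixation Duration",
    "Average Inter-Fixation Degree",
    "Average Pupil Diameter",
    "First Fixation Time",
)
MODALITIES = {
    "fnirs": FNIRS_FEATURES,
    "eye": EYE_FEATURES,
    # Assumed feature-level fusion: concatenate both feature sets.
    "bimodal": FNIRS_FEATURES + EYE_FEATURES,
}


def feature_vector(event, modality):
    """Return the ordered feature values of one event for a modality."""
    return [event[name] for name in MODALITIES[modality]]


# Hypothetical event with placeholder values (not study data).
event = {name: float(i) for i, name in enumerate(MODALITIES["bimodal"])}
```

Keeping the feature order fixed per modality means unimodal and bimodal models differ only in their inputs, so any performance gap can be attributed to the added modality rather than to preprocessing differences.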

4.2. Key Features Contributing to Cognitive Load Prediction

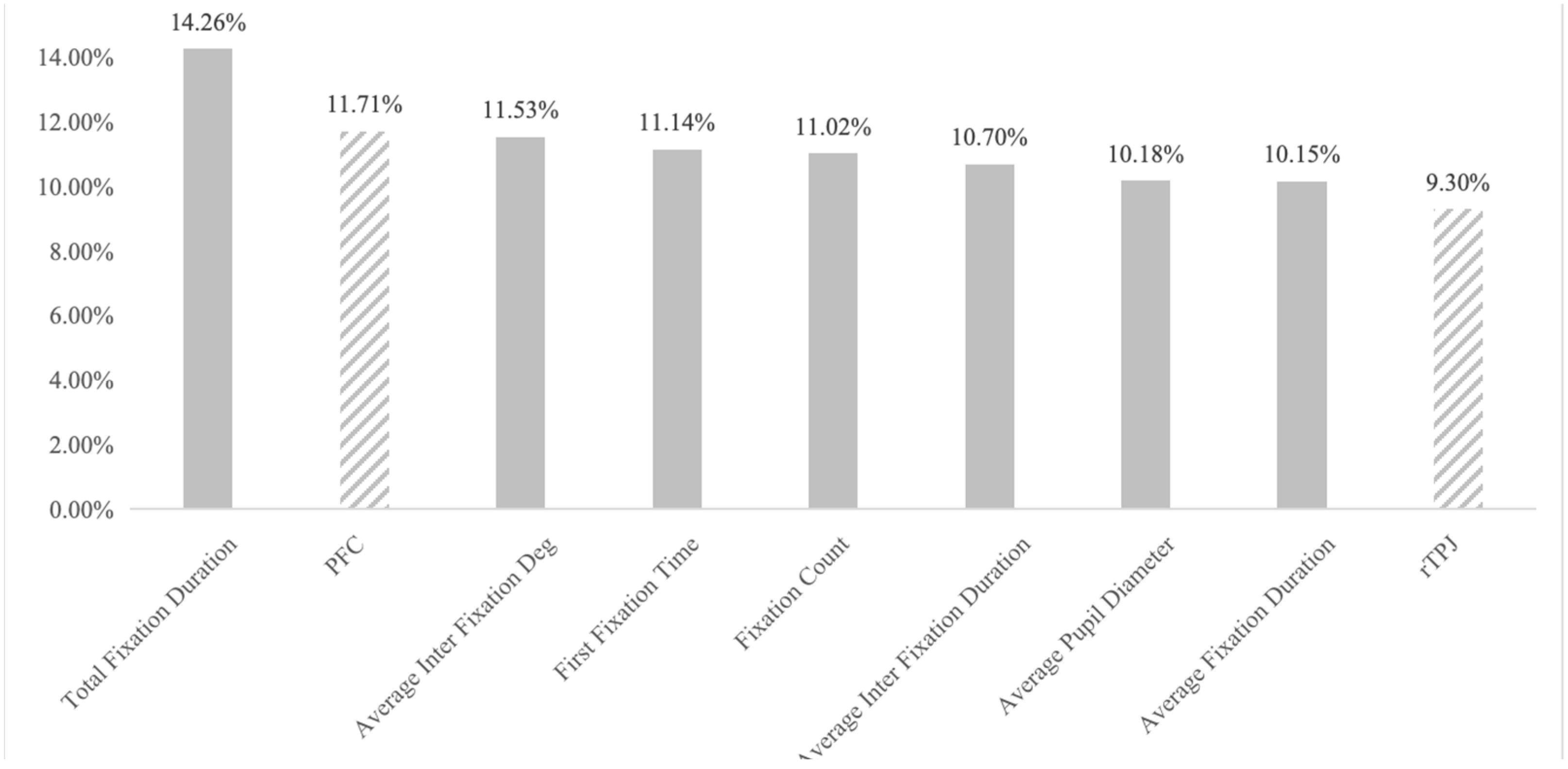

Analysis of feature importance within the best-performing Random Forest model revealed the relative contributions of individual features to cognitive load prediction. As shown in Figure 4, “Total Fixation Duration” emerged as the most influential feature, accounting for 14.26% of the total feature importance in the Random Forest model. This value indicates the proportion of the model’s prediction ability attributed to this feature relative to all others, with the total importance across all features normalized to 100%. The results suggest that individuals experiencing higher cognitive load tended to exhibit longer overall fixation durations on elements relevant to the collaborative problem-solving task. This may reflect increased attentional focus, deeper processing of visual information, or difficulty in disengaging attention from complex stimuli. Following “Total Fixation Duration”, “PFC” activity (11.71%) and “Average Inter-Fixation Degree” (11.53%) were identified as strong contributors to prediction accuracy. The prominence of PFC activity aligns with its well-established role in executive functions, working memory, and cognitive control, which are heavily taxed during demanding collaborative tasks. “Average Inter-Fixation Degree”, representing the angular distance between consecutive fixations, likely reflects an individual’s visual search strategy and the spatial distribution of attention. A larger Average Inter-Fixation Degree may indicate broader visual exploration or greater difficulty in locating relevant information.

Other influential features included “First Fixation Time” (11.14%), “Fixation Count” (11.02%), “Average Inter-Fixation Duration” (10.70%), “Average Pupil Diameter” (10.18%), “Average Fixation Duration” (10.15%), and “rTPJ” activity (9.30%). The contribution of rTPJ activity, although relatively smaller, suggests that social cognition and perspective-taking processes may also be modulated by cognitive load during collaborative interactions. While differences in feature importance were observed, the overall contribution of each feature was relatively balanced, suggesting that cognitive load is a multifaceted construct reflected in a constellation of neural and behavioral indicators.
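As a quick arithmetic check on the percentages reported in this section, the sketch below sums them overall and per modality. The importance values are copied from the text. The assignment of each feature to a modality (fixation and pupil metrics to eye-tracking, PFC and rTPJ to fNIRS) and all variable and function names are illustrative assumptions, not part of the authors' analysis code.

```python
# Feature importances (%) as reported in the text for the Random Forest model.
# The modality label attached to each feature is an assumption for illustration.
importances = {
    "Total Fixation Duration": ("eye-tracking", 14.26),
    "PFC": ("fNIRS", 11.71),
    "Average Inter-Fixation Degree": ("eye-tracking", 11.53),
    "First Fixation Time": ("eye-tracking", 11.14),
    "Fixation Count": ("eye-tracking", 11.02),
    "Average Inter-Fixation Duration": ("eye-tracking", 10.70),
    "Average Pupil Diameter": ("eye-tracking", 10.18),
    "Average Fixation Duration": ("eye-tracking", 10.15),
    "rTPJ": ("fNIRS", 9.30),
}


def share_by_modality(table):
    """Sum the importance percentages within each modality, rounded to 2 decimals."""
    totals = {}
    for modality, pct in table.values():
        totals[modality] = totals.get(modality, 0.0) + pct
    return {modality: round(value, 2) for modality, value in totals.items()}


total = round(sum(pct for _, pct in importances.values()), 2)
by_modality = share_by_modality(importances)
uniform_share = round(100 / len(importances), 2)

print(total)          # 99.99 (the reported values are rounded to two decimals)
print(by_modality)    # {'eye-tracking': 78.98, 'fNIRS': 21.01}
print(uniform_share)  # 11.11
```

With nine features, a perfectly uniform split would give each about 11.11%, so the reported range of 9.30% to 14.26% is consistent with the description of a relatively balanced contribution. The two fNIRS features together account for roughly 21% of the total importance.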

5. Discussion

This study investigated the feasibility of predicting individual cognitive load during collaborative problem-solving using a combination of fNIRS and eye-tracking data. Our results address both research questions, offering insights into the nature of cognitive load within collaborative contexts.

Firstly, the findings from this study demonstrate that machine learning models can indeed accurately predict individual cognitive load during collaborative problem-solving. The findings from the first research question highlight the potential of machine learning in decoding complex neurophysiological and behavioral patterns associated with cognitive load. They support a growing body of research advocating for multimodal approaches to cognitive load measurement [37]. Notably, the integration of fNIRS and eye-tracking data in the current study yielded a higher F1 score (0.87) than either modality alone, confirming that each modality captures distinct but complementary dimensions of cognitive processing. This pattern aligns with prior work by Wang et al. [46], who used fNIRS and eye-tracking data to classify near- and far-transfer cognitive states, achieving over 91% accuracy. Similarly, Badarin et al. found that fNIRS and eye-tracking provided distinct insights into attention regulation and neural adaptation during prolonged working memory tasks [33]. However, unlike these studies, which focused on individual cognitive tasks, our study extends these findings to collaborative learning, where both executive and social–cognitive demands contribute to overall cognitive load.

Moreover, while prior studies have often identified a dominant modality depending on task context (e.g., fNIRS for neural efficiency or eye-tracking for attention), our results revealed a relatively balanced contribution of features across modalities, including both attentional (e.g., fixation metrics) and neural (e.g., PFC, rTPJ activity) indicators. This suggests that in collaborative learning contexts, cognitive load is distributed across attentional, executive, and social processes, reinforcing the value of multimodal fusion for a more holistic assessment.

Importantly, our analysis also revealed a notable interaction between model selection and multimodal data fusion effectiveness. While the integration of fNIRS and eye-tracking data generally improved predictive performance, the benefits were not equally realized across all classifiers. The observed underperformance of Logistic Regression, a model inherently limited to linear decision boundaries, compared to its non-linear counterparts, underscores the presence of complex, non-linear patterns within the dataset. This discrepancy indicates that more sophisticated models, such as ensemble methods like Random Forest and XGBoost, are better equipped to capture the intricate relationships between bimodal features and cognitive load. These findings suggest that the success of multimodal fusion depends not only on the inclusion of rich, complementary data streams but also on the model’s ability to effectively leverage the combined feature space. In other words, multimodal advantages may only be fully realized when paired with models capable of capturing higher-order interactions between neural and behavioral indicators. Ensemble-based models, in particular, demonstrated superior capacity in modeling the nuanced associations across modalities, thereby providing a more accurate representation of cognitive states.
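The argument about linear decision boundaries can be illustrated with a toy example that is unrelated to the study data. In the sketch below, the label depends on an interaction between two binary features (an XOR pattern). A brute-force search over a grid of linear classifiers never exceeds 75% accuracy, whereas a rule that uses the interaction classifies every point correctly. The data, the weight grid, and all names are hypothetical.

```python
from itertools import product

# XOR-style toy data: the label is 1 only when exactly one feature is "high".
points = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 0]


def accuracy(predict):
    """Fraction of toy points that the given prediction rule labels correctly."""
    hits = sum(1 for (x1, x2), y in zip(points, labels) if predict(x1, x2) == y)
    return hits / len(labels)


def linear_rule(w1, w2, b):
    """Build a linear classifier: predict 1 when w1*x1 + w2*x2 + b > 0."""
    return lambda x1, x2: 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# Try every weight/bias combination on a coarse grid from -2.0 to 2.0.
grid = [i / 4 for i in range(-8, 9)]
best_linear = max(
    accuracy(linear_rule(w1, w2, b)) for w1, w2, b in product(grid, repeat=3)
)

# A rule that looks at the two features jointly (their interaction).
interaction_accuracy = accuracy(lambda x1, x2: 1 if x1 != x2 else 0)

print(best_linear)           # 0.75: no linear boundary separates the XOR pattern
print(interaction_accuracy)  # 1.0
```

Tree ensembles can represent this kind of rule by splitting on one feature and then on the other, which is the sense in which they can exploit interactions between features from different modalities. Whether such interactions are present in a given dataset is an empirical question.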

Secondly, the feature importance analysis sheds light on the specific features that drive accurate prediction, addressing the second research question. The relatively high F1 score (0.79) achieved with eye-tracking data alone highlights the sensitivity of eye-movement patterns to cognitive load fluctuations. This finding corroborates previous research demonstrating the value of eye-tracking in assessing cognitive effort during various tasks [38]. Specifically, metrics such as “Total Fixation Duration” and “Average Inter-Fixation Degree” emerged as strong predictors, suggesting that individuals experiencing higher cognitive load tend to exhibit longer fixations and more dispersed visual exploration patterns on task-relevant stimuli. This may reflect increased attentional focus, deeper processing of visual information, or difficulty in disengaging attention from complex or ambiguous elements [52]. In addition, the significant contribution of PFC activity to cognitive load prediction is consistent with its well-established role in executive functions, working memory, and cognitive control [53]. As cognitive load increases, the PFC is increasingly recruited to manage the demands of the task, allocate attentional resources, and maintain task performance [44]. A particularly noteworthy finding is the involvement of the right temporoparietal junction (rTPJ), which is often associated with social cognition and perspective-taking [54]. While less dominant than other features, its contribution suggests that cognitive load in collaborative contexts is not purely individual but also socially modulated. This extends existing cognitive load models by highlighting the integration of social cognitive processes during collaborative learning.

6. Implications and Limitations

This study provides compelling evidence that cognitive load is a multifaceted construct reflected in both neural and behavioral measures and that bimodal approaches offer a more comprehensive assessment compared to unimodal approaches. These findings have important implications for both theory and practice. Theoretically, this research extends current understanding of the cognitive processes involved in collaborative problem-solving, a context in which cognitive load has been less studied using physiological data. While multimodal measurement techniques have been applied in individual, high-stakes settings such as aviation and driving (e.g., pilot training, skill acquisition), the integration of fNIRS and eye-tracking in a collaborative learning context represents a novel contribution. This work highlights the need to consider how cognitive load emerges and evolves within social and interactive settings, where coordination and joint attention play critical roles.

Practically, this research demonstrates the feasibility of using real-time neural and behavioral indicators to monitor cognitive load during collaborative learning. Such data could inform adaptive learning systems that dynamically adjust task difficulty, provide targeted feedback, or support more effective communication strategies in educational and workplace environments. Moreover, although this study did not apply full hyperscanning analyses (e.g., inter-brain coherence or synchronization), the methodological setup involving simultaneous recording across participants paves the way for future work exploring intersubject neural dynamics. As hyperscanning continues to emerge in educational neuroscience, this study contributes to building the foundation for more socially aware, brain-based assessments of collaborative learning and cognition.

While this study provides valuable insights into the prediction of cognitive load during collaborative problem-solving, several limitations should be acknowledged. First, the sample was relatively small, which may limit the generalizability of the findings to broader and more diverse populations. Second, the study focused on three specific collaborative ideation tasks, which may not fully represent the variety of collaborative contexts in real-world educational or workplace settings. Third, individual differences—such as cognitive styles, prior experience with collaboration, and group interaction dynamics—were not explicitly controlled for, yet these factors may significantly influence cognitive load and associated physiological responses. Future research should consider incorporating these variables to better understand their moderating effects. Fourth, this study employed shallow machine learning classifiers (e.g., Random Forest, Decision Trees, SVM), rather than deep learning models. While this may be seen as a methodological limitation, deep learning approaches were not adopted because the relatively small sample size was not well-suited for training high-dimensional models. Finally, although this study integrated fNIRS and eye-tracking data, future investigations could benefit from including additional modalities, such as electroencephalography (EEG) or galvanic skin response (GSR), to capture a more comprehensive profile of learners’ cognitive, emotional, and physiological states and improve predictive accuracy through multimodal fusion.

7. Conclusions

This study demonstrates the feasibility of accurately predicting individual cognitive load during collaborative problem-solving using a combination of fNIRS and eye-tracking data. Machine learning models, particularly Random Forest, effectively decode neural and behavioral patterns associated with fluctuations in cognitive load. The integration of fNIRS and eye-tracking provides a more comprehensive assessment compared to using either modality alone, highlighting the multifaceted nature of cognitive load. Key features contributing to the prediction include “Total Fixation Duration”, PFC activity, and “Average Inter-Fixation Degree”, emphasizing the importance of visual attention and executive control processes. While most models benefited from multimodal integration, Logistic Regression performed best with fNIRS data alone, and Decision Tree and XGBoost performed comparably or slightly better with single-modality data, possibly due to the limited sample size, which may increase overfitting risk with more complex feature sets. These findings have implications for understanding cognitive load dynamics, designing effective collaborative learning environments, and improving human–computer interfaces. Future research should explore the generalizability of these results across different tasks and populations, incorporate additional physiological measures, and investigate the impact of individual differences and social dynamics on cognitive load during collaboration.

Author Contributions

Conceptualization, W.C., Z.L. and L.Z.; Data curation, L.Z. and M.-Y.M.H.; Formal analysis, Z.L. and L.Z.; Funding acquisition, W.C.; Investigation, Z.L., L.Z. and M.-Y.M.H.; Methodology, W.C., Z.L. and L.Z.; Project administration, W.C.; Resources, W.C. and M.-Y.M.H.; Software, Z.L.; Supervision, W.C., F.A. and W.P.T.; Validation, Z.L., L.Z. and M.-Y.M.H.; Visualization, Z.L. and M.-Y.M.H.; Writing—original draft, Z.L., L.Z. and M.-Y.M.H.; Writing—review and editing, W.C., Z.L., L.Z., M.-Y.M.H., F.A. and W.P.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Institute of Education Research Support for Senior Academic Administrator (RS-SAA) Grant (Grant number: RS 1/22 CWL) and administered by National Institute of Education (NIE), Nanyang Technological University (NTU), Singapore. The study was approved by the NTU IRB (IRB-2021-210). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of NIE and NTU.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to student privacy concerns.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| fNIRS | functional near-infrared spectroscopy |
| CLT | Cognitive Load Theory |
| EEG | electroencephalography |
| fMRI | functional magnetic resonance imaging |
| PFC | prefrontal cortex |
| rTPJ | right temporoparietal junction |
| TEPR | Task-Evoked Pupillary Response |
| MMD | multimodal data |
| SVMs | Support Vector Machines |
| DPF | differential path-length factors |
| TDE | task demands |
| MEN | mental effort |
| DIF | perceived task difficulty |
| SEV | self-evaluation |
| USE | usability |
| O2Hb | oxygenated hemoglobin |
| AOI | area of interest |
| MLP | Multi-Layer Perceptron |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| GSR | galvanic skin response |

References

1. Sweller, J. Cognitive Load During Problem Solving: Effects on Learning. Cogn. Sci. 1988, 12, 257–285.

2. Sweller, J.; van Merriënboer, J.J.G.; Paas, F. Cognitive Architecture and Instructional Design: 20 Years Later. Educ. Psychol. Rev. 2019, 31, 261–292.

3. Paas, F.; Renkl, A.; Sweller, J. Cognitive Load Theory and Instructional Design: Recent Developments. Educ. Psychol. 2003, 38, 1–4.

4. van Merriënboer, J.J.G.; Sweller, J. Cognitive Load Theory and Complex Learning: Recent Developments and Future Directions. Educ. Psychol. Rev. 2005, 17, 147–177.

5. Hawlitschek, A.; Joeckel, S. Increasing the Effectiveness of Digital Educational Games: The Effects of a Learning Instruction on Students’ Learning, Motivation and Cognitive Load. Comput. Hum. Behav. 2017, 72, 79–86.

6. Moreno, R.; Mayer, R. Interactive Multimodal Learning Environments. Educ. Psychol. Rev. 2007, 19, 309–326.

7. Sweller, J. Measuring Cognitive Load. Perspect. Med. Educ. 2018, 7, 1–2.

8. Paas, F.G.W.C.; van Merriënboer, J.J.G.; Adam, J.J. Measurement of Cognitive Load in Instructional Research. Percept. Mot. Ski. 1994, 79, 419–430.

9. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Advances in Psychology; Hancock, P.A., Meshkati, N., Eds.; Human Mental Workload: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

10. Friedman, N.; Fekete, T.; Gal, K.; Shriki, O. EEG-Based Prediction of Cognitive Load in Intelligence Tests. Front. Hum. Neurosci. 2019, 13, 191.

11. Misra, A.; Samuel, S.; Cao, S.; Shariatmadari, K. Detection of Driver Cognitive Distraction Using Machine Learning Methods. IEEE Access 2023, 11, 18000–18012.

12. Karmakar, S.; Kamilya, S.; Dey, P.; Guhathakurta, P.K.; Dalui, M.; Bera, T.K.; Halder, S.; Koley, C.; Pal, T.; Basu, A. Real Time Detection of Cognitive Load Using fNIRS: A Deep Learning Approach. Biomed. Signal Process. Control 2023, 80, 104227.

13. Paas, F.; van Merriënboer, J.J.G. Cognitive-Load Theory: Methods to Manage Working Memory Load in the Learning of Complex Tasks. Curr. Dir. Psychol. Sci. 2020, 29, 394–398.

14. Kalyuga, S.; Renkl, A.; Paas, F. Facilitating Flexible Problem Solving: A Cognitive Load Perspective. Educ. Psychol. Rev. 2010, 22, 175–186.

15. Sweller, J.; van Merriënboer, J.J.G.; Paas, F.G.W.C. Cognitive Architecture and Instructional Design. Educ. Psychol. Rev. 1998, 10, 251–296.

16. Kirschner, P.A. Cognitive Load Theory: Implications of Cognitive Load Theory on the Design of Learning. Learn. Instr. 2002, 12, 1–10.

17. Klepsch, M.; Schmitz, F.; Seufert, T. Development and Validation of Two Instruments Measuring Intrinsic, Extraneous, and Germane Cognitive Load. Front. Psychol. 2017, 8, 1997.

18. Klepsch, M.; Seufert, T. Understanding Instructional Design Effects by Differentiated Measurement of Intrinsic, Extraneous, and Germane Cognitive Load. Instr. Sci. 2020, 48, 45–77.

19. Leahy, W.; Sweller, J. Cognitive Load Theory and the Effects of Transient Information on the Modality Effect. Instr. Sci. 2016, 44, 107–123.

20. Andrade, J. What Does Doodling Do? Appl. Cogn. Psychol. 2010, 24, 100–106.

21. Du, X.; Dai, M.; Tang, H.; Hung, J.-L.; Li, H.; Zheng, J. A Multimodal Analysis of College Students’ Collaborative Problem Solving in Virtual Experimentation Activities: A Perspective of Cognitive Load. J. Comput. High. Educ. 2023, 35, 272–295.

22. Ophir, E.; Nass, C.; Wagner, A.D. Cognitive Control in Media Multitaskers. Proc. Natl. Acad. Sci. USA 2009, 106, 15583–15587.

23. Leppink, J.; Paas, F.; Van der Vleuten, C.P.M.; Van Gog, T.; Van Merriënboer, J.J.G. Development of an Instrument for Measuring Different Types of Cognitive Load. Behav. Res. Methods 2013, 45, 1058–1072.

24. Kastaun, M.; Meier, M.; Küchemann, S.; Kuhn, J. Validation of Cognitive Load During Inquiry-Based Learning with Multimedia Scaffolds Using Subjective Measurement and Eye Movements. Front. Psychol. 2021, 12, 703857.

25. Krejtz, K.; Duchowski, A.T.; Niedzielska, A.; Biele, C.; Krejtz, I. Eye Tracking Cognitive Load Using Pupil Diameter and Microsaccades with Fixed Gaze. PLoS ONE 2018, 13, e0203629.

26. Mutlu-Bayraktar, D.; Ozel, P.; Altindis, F.; Yilmaz, B. Relationship between Objective and Subjective Cognitive Load Measurements in Multimedia Learning. Interact. Learn. Environ. 2023, 31, 1322–1334.

27. Mayer, E.; Çakır, M.P.; Acartürk, C.; Tekerek, A.Ş. Towards a Multimodal Model of Cognitive Workload Through Synchronous Optical Brain Imaging and Eye Tracking Measures. Front. Hum. Neurosci. 2019, 13, 375.

28. Ahmad, M.I.; Keller, I.; Robb, D.A.; Lohan, K.S. A Framework to Estimate Cognitive Load Using Physiological Data. Pers. Ubiquitous Comput. 2023, 27, 2027–2041.

29. Korbach, A.; Brünken, R.; Park, B. Differentiating Different Types of Cognitive Load: A Comparison of Different Measures. Educ. Psychol. Rev. 2018, 30, 503–529.

30. Ayres, P.; Lee, J.Y.; Paas, F.; van Merriënboer, J.J.G. The Validity of Physiological Measures to Identify Differences in Intrinsic Cognitive Load. Front. Psychol. 2021, 12, 702538.

31. Mayer, R.E. How Can Brain Research Inform Academic Learning and Instruction? Educ. Psychol. Rev. 2017, 29, 835–846.

32. Tommerdahl, J. A Model for Bridging the Gap between Neuroscience and Education. Oxf. Rev. Educ. 2010, 36, 97–109.

33. Badarin, A.A.; Antipov, V.M.; Grubov, V.V.; Andreev, A.V.; Pitsik, E.N.; Kurkin, S.A.; Hramov, A.E. Brain Compensatory Mechanisms During the Prolonged Cognitive Task: fNIRS and Eye-Tracking Study. IEEE Trans. Cogn. Dev. Syst. 2025, 17, 303–314.

34. Tan, L.; Thomson, R.; Koh, J.H.L.; Chik, A. Teaching Multimodal Literacies with Digital Technologies and Augmented Reality: A Cluster Analysis of Australian Teachers’ TPACK. Sustainability 2023, 15, 10190.

35. Chai, W.J.; Abd Hamid, A.I.; Abdullah, J.M. Working Memory from the Psychological and Neurosciences Perspectives: A Review. Front. Psychol. 2018, 9, 401.

36. Tang, H.; Mai, X.; Wang, S.; Zhu, C.; Krueger, F.; Liu, C. Interpersonal Brain Synchronization in the Right Temporo-Parietal Junction during Face-to-Face Economic Exchange. Soc. Cogn. Affect. Neurosci. 2016, 11, 23–32.

37. Mayer, R.E. Unique Contributions of Eye-Tracking Research to the Study of Learning with Graphics. Learn. Instr. 2010, 20, 167–171.

38. Koć-Januchta, M.; Höffler, T.; Thoma, G.-B.; Prechtl, H.; Leutner, D. Visualizers versus Verbalizers: Effects of Cognitive Style on Learning with Texts and Pictures—An Eye-Tracking Study. Comput. Hum. Behav. 2017, 68, 170–179.

39. Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; Van de Weijer, J. Eye Tracking: A Comprehensive Guide to Methods and Measures; OUP Oxford: Oxford, UK, 2011.

40. Moon, J.; Ryu, J. The Effects of Social and Cognitive Cues on Learning Comprehension, Eye-Gaze Pattern, and Cognitive Load in Video Instruction. J. Comput. High. Educ. 2021, 33, 39–63.

41. Tong, S.; Nie, Y. Measuring Designers’ Cognitive Load for Timely Knowledge Push via Eye Tracking. Int. J. Hum. Comput. Interact. 2023, 39, 1230–1243.

42. Yu, K.; Chen, J.; Ding, X.; Zhang, D. Exploring Cognitive Load through Neuropsychological Features: An Analysis Using fNIRS-Eye Tracking. Med. Biol. Eng. Comput. 2025, 63, 45–57.

43. Qu, J.; Bu, L.; Zhao, L.; Wang, Y. A Training and Assessment System for Human-Computer Interaction Combining fNIRS and Eye-Tracking Data. Adv. Eng. Inform. 2024, 62, 102765.

44. Broadbent, D.P.; D’Innocenzo, G.; Ellmers, T.J.; Parsler, J.; Szameitat, A.J.; Bishop, D.T. Cognitive load, working memory capacity and driving performance: A preliminary fNIRS and eye tracking study. Transp. Res. Part F Traffic Psychol. Behav. 2023, 92, 121–132.

45. Blikstein, P. Multimodal Learning Analytics. In Proceedings of the Third International Conference on Learning Analytics and Knowledge, LAK ’13, Leuven, Belgium, 8–12 April 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 102–106.

46. Wang, F.; Jiang, Z.; Cheng, K.; Fan, G.; Zhou, H. A Multimodal Approach for Fostering Knowledge Transfer in Engineering Design Activity: An Eye-Tracking and fNIRS Study. J. Eng. Des. 2024, 35, 1518–1549.

47. Das Chakladar, D.; Roy, P.P. Cognitive Workload Estimation Using Physiological Measures: A Review. Cogn. Neurodyn. 2024, 18, 1445–1465.

48. Ryu, J.-H.; Yu, J.-H. The Effects of Pedagogical Agents Realism on Persona Effect and Cognitive Load Factors in Cross-Use of Printed Resources and Mobile Device. J. Korean Assoc. Comput. Educ. 2012, 15, 55–64.

49. Hoshi, Y. Functional Near-infrared Optical Imaging: Utility and Limitations in Human Brain Mapping. Psychophysiology 2003, 40, 511–520.

50. Kosel, C.; Michel, S.; Seidel, T.; Foerster, M. Exploring the Dynamic Interplay of Cognitive Load and Emotional Arousal by Using Multimodal Measurements: Correlation of Pupil Diameter and Emotional Arousal in Emotionally Engaging Tasks. arXiv 2024, arXiv:2403.00366.

51. Skaramagkas, V.; Giannakakis, G.; Ktistakis, E.; Manousos, D.; Karatzanis, I.; Tachos, N.; Tripoliti, E.; Marias, K.; Fotiadis, D.I.; Tsiknakis, M. Review of Eye Tracking Metrics Involved in Emotional and Cognitive Processes. IEEE Rev. Biomed. Eng. 2023, 16, 260–277.

52. Jamet, E. An Eye-Tracking Study of Cueing Effects in Multimedia Learning. Comput. Hum. Behav. 2014, 32, 47–53.

53. Duran, R.; Zavgorodniaia, A.; Sorva, J. Cognitive Load Theory in Computing Education Research: A Review. ACM Trans. Comput. Educ. 2022, 22, 1–27.

54. Zhou, S.; Yang, H.; Yang, H.; Liu, T. Bidirectional Understanding and Cooperation: Interbrain Neural Synchronization during Social Navigation. Soc. Cogn. Affect. Neurosci. 2023, 18, nsad031.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).