Automatic Detection of Equatorial Plasma Bubbles in Airglow Images Using Two-Dimensional Principal Component Analysis and Explainable Artificial Intelligence

Abstract

1. Introduction

2. Methodology

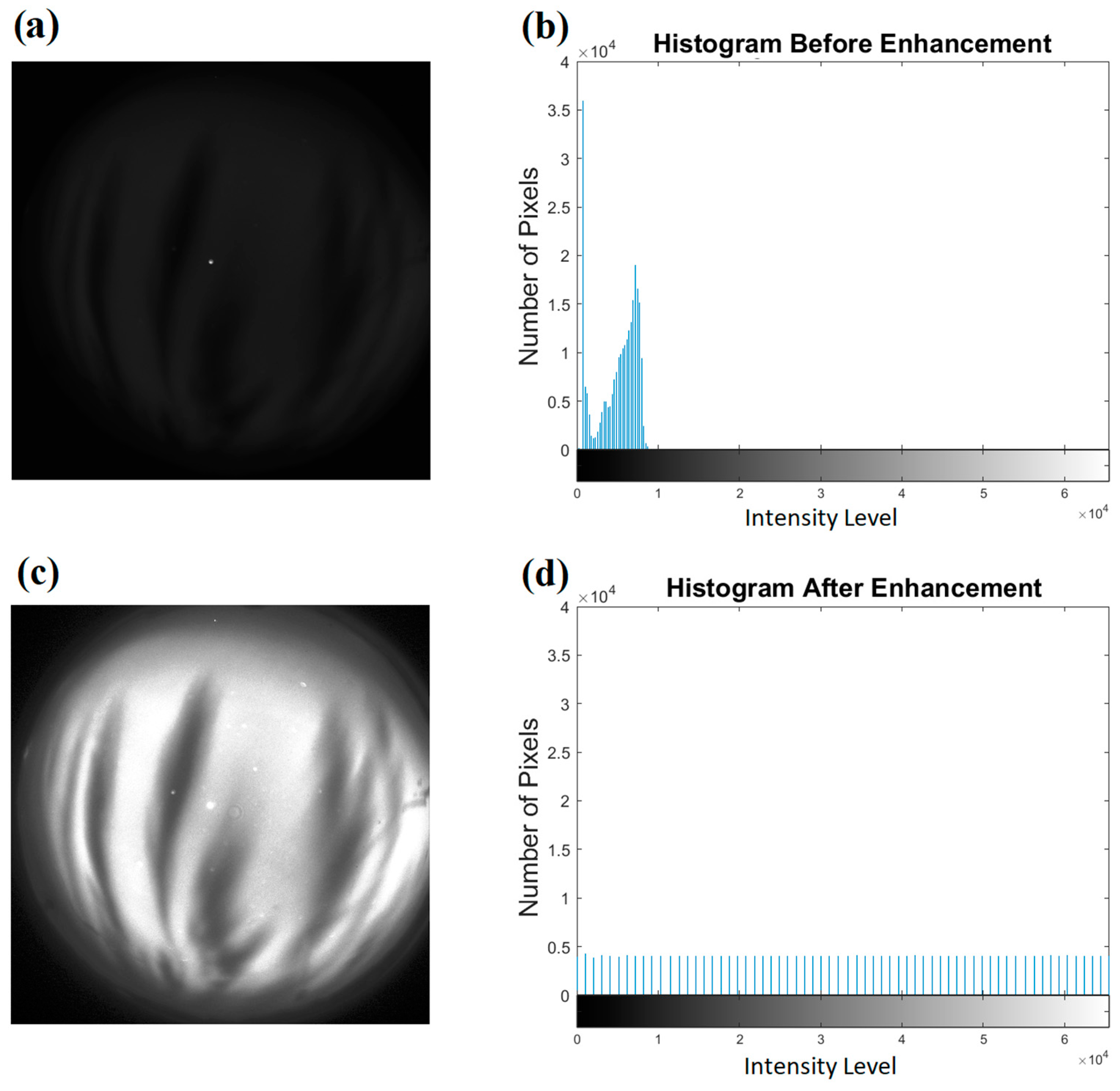

2.1. Image Pre-Processing

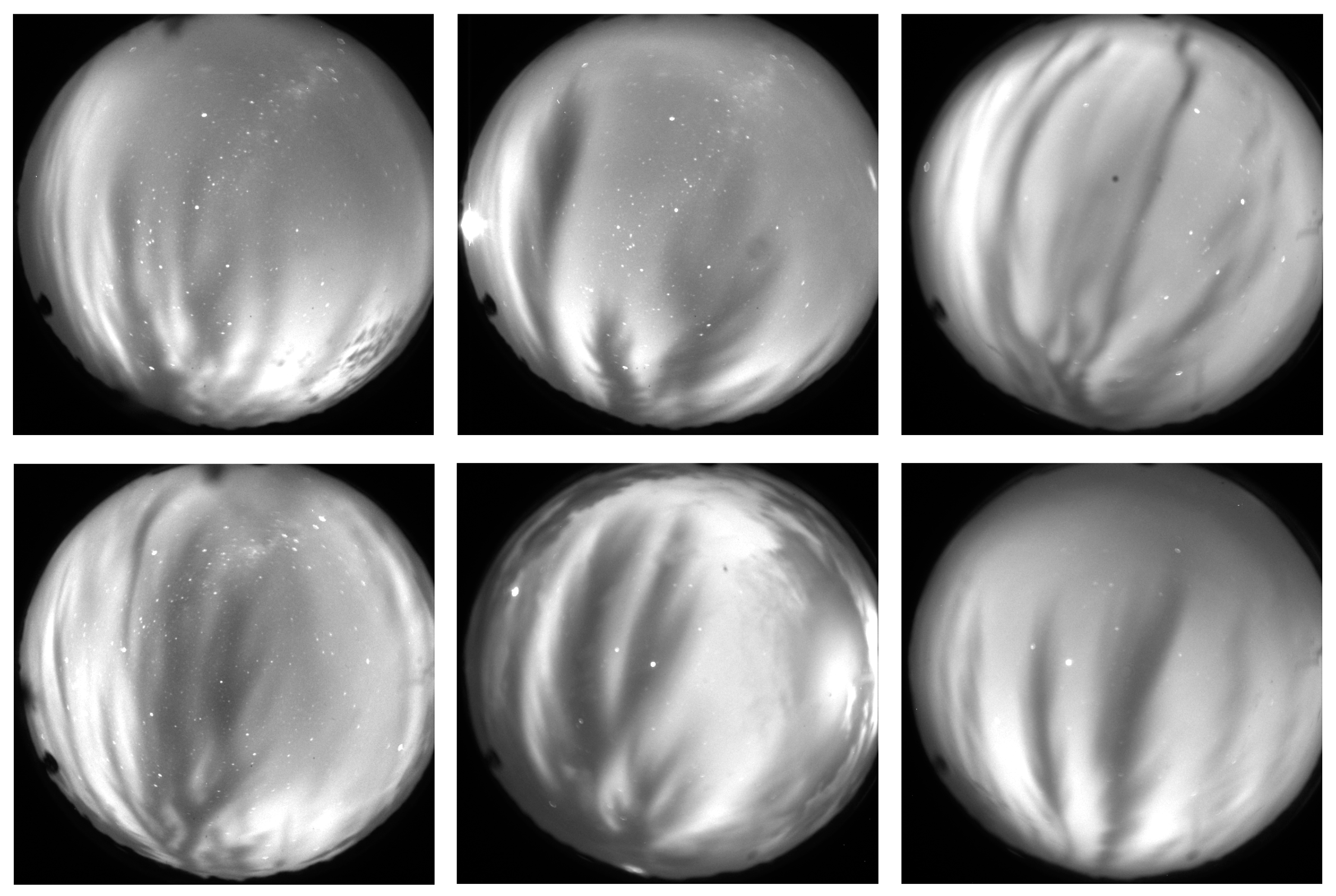

2.2. Dataset Preparation

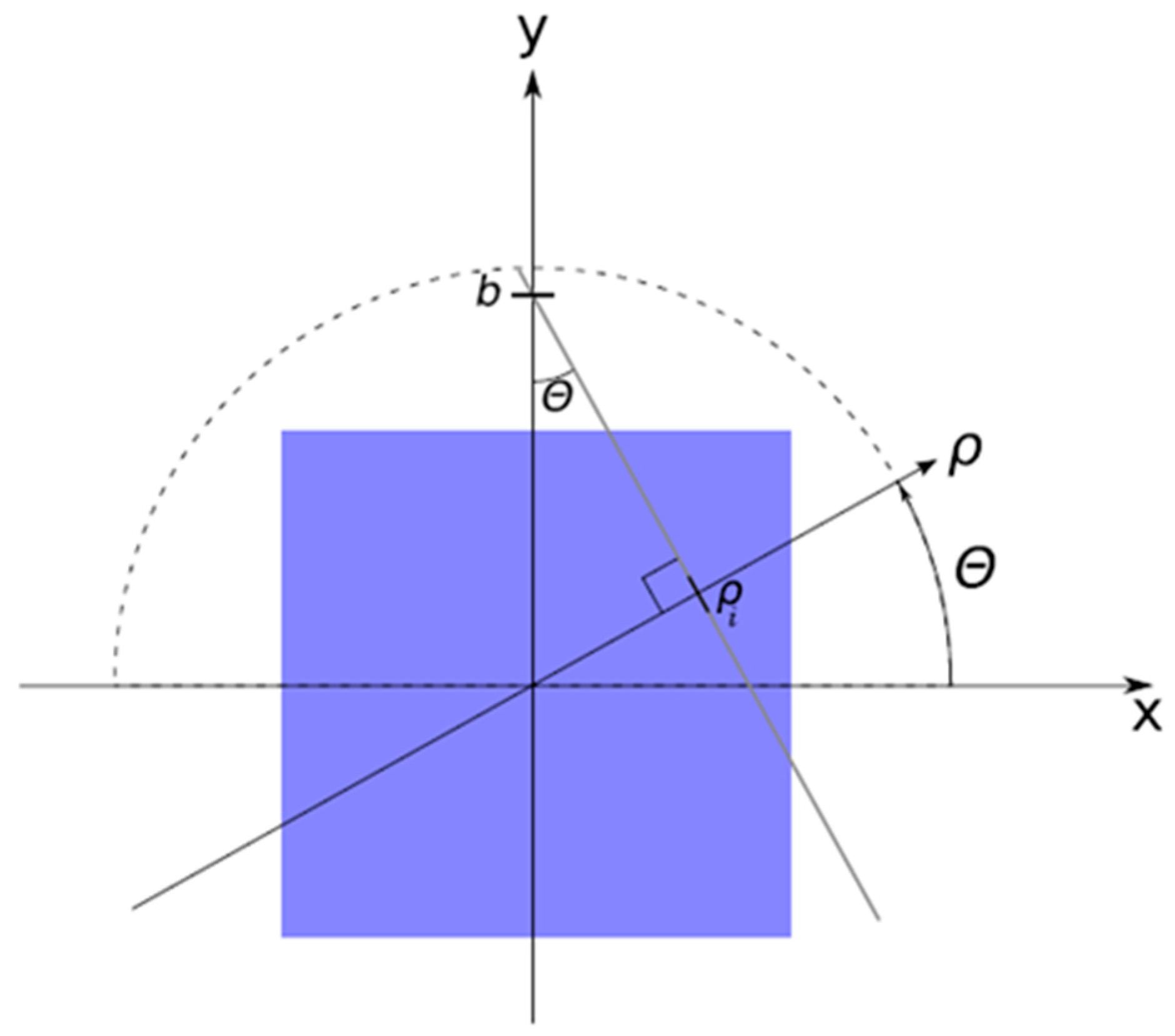

2.3. Radon Transform

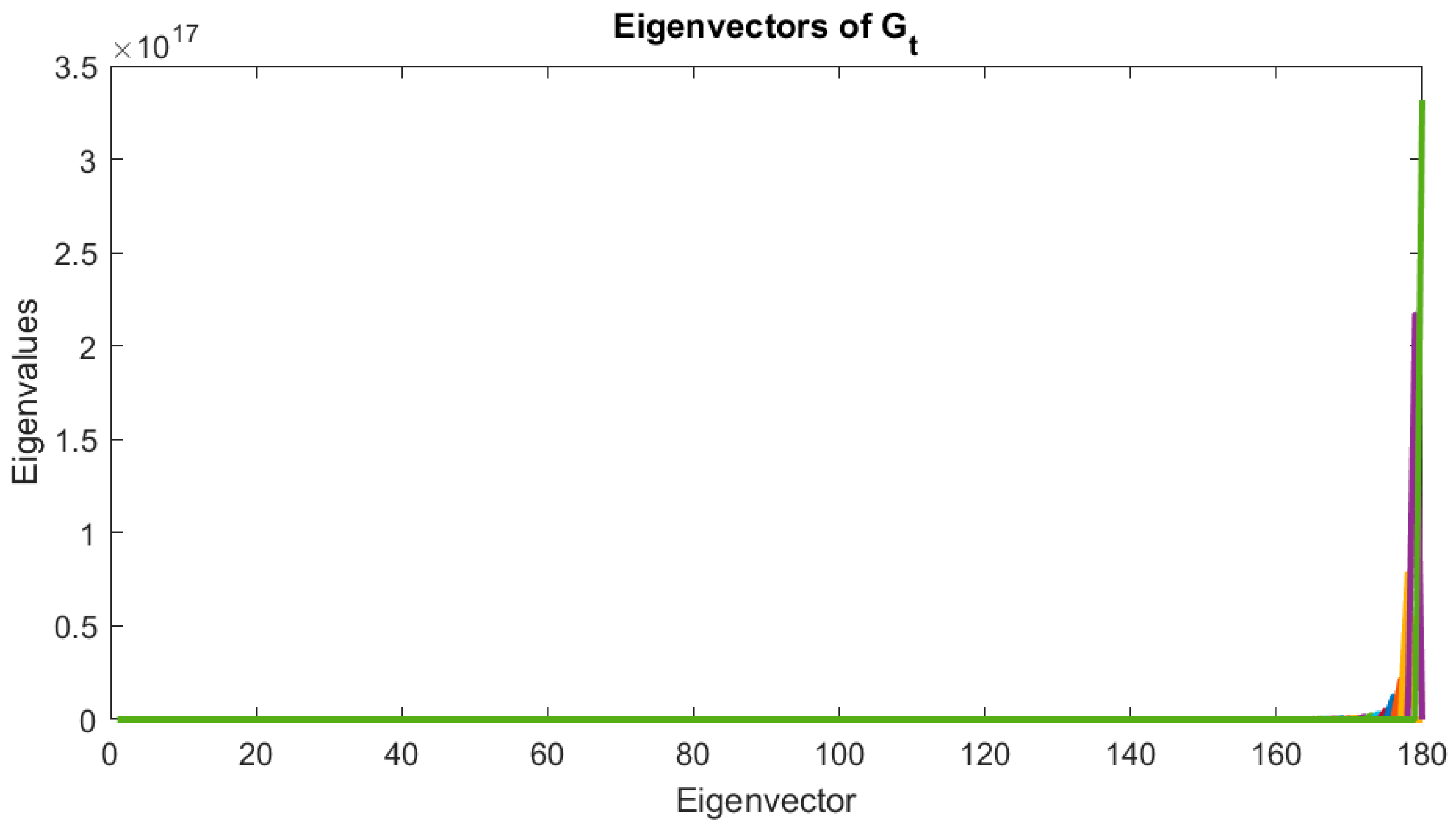
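The Radon transform integrates image intensity along parallel lines at a set of projection angles, producing a sinogram. A straight, elongated feature therefore yields a concentrated peak at the angle aligned with it. The following is a minimal NumPy sketch of a discrete rotate-and-sum Radon transform with nearest-neighbour sampling. The function name, the angle set and the interpolation scheme are illustrative assumptions, not the settings used in this work; `skimage.transform.radon` offers an interpolated library implementation.

```python
import numpy as np


def radon_transform(image, angles_deg):
    """Discrete Radon transform by rotate-and-sum (nearest-neighbour sampling).

    Returns a sinogram of shape (n_detectors, n_angles); column k holds the sums of
    `image` along parallel (vertical) rays after rotating it by angles_deg[k].
    """
    image = np.asarray(image, dtype=float)
    angles = np.asarray(angles_deg, dtype=float)
    n_rows, n_cols = image.shape
    # Square canvas at least as wide as the image diagonal, so no part of the
    # centred image leaves the canvas when it is rotated.
    size = int(np.ceil(np.hypot(n_rows, n_cols)))
    canvas = np.zeros((size, size))
    r0, c0 = (size - n_rows) // 2, (size - n_cols) // 2
    canvas[r0:r0 + n_rows, c0:c0 + n_cols] = image

    centre = (size - 1) / 2.0
    ys, xs = np.mgrid[0:size, 0:size]
    ys = ys - centre
    xs = xs - centre
    sinogram = np.zeros((size, angles.size))
    for k, theta in enumerate(np.deg2rad(angles)):
        cos_t, sin_t = np.cos(theta), np.sin(theta)
        # Map every output pixel back to its source pixel (rotation about the centre)
        src_x = np.rint(cos_t * xs - sin_t * ys + centre).astype(int)
        src_y = np.rint(sin_t * xs + cos_t * ys + centre).astype(int)
        valid = (src_x >= 0) & (src_x < size) & (src_y >= 0) & (src_y < size)
        rotated = np.zeros((size, size))
        rotated[valid] = canvas[src_y[valid], src_x[valid]]
        # Line integrals along vertical rays = column sums of the rotated image
        sinogram[:, k] = rotated.sum(axis=0)
    return sinogram
```

For an 8 × 8 image containing a single vertical line, the 0° projection concentrates all eight units of intensity in one detector bin, while the 90° projection spreads them over eight bins.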

2.4. Two-Dimensional Principal Component Analysis
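2DPCA, as formulated by Yang et al. (2004), works on image matrices directly rather than on flattened vectors. It builds an n × n image covariance matrix from the M training images and projects each image onto the leading eigenvectors of that matrix. Below is a minimal NumPy sketch; the function names are hypothetical. Subtracting the mean image before projection is a design choice here, since projecting the raw image only shifts every feature matrix by the same constant. The returned `retained` fraction is the share of the eigenvalue sum kept by the first d eigenvectors. Reading the "Eigenvalues Cut (%)" column of the results tables as this quantity is an assumption.

```python
import numpy as np


def fit_2dpca(images, n_components):
    """2DPCA on a stack of images of shape (M, m, n).

    Returns the projection matrix X (n x d), the mean image, and the fraction of
    the total eigenvalue sum retained by the first d eigenvectors.
    """
    images = np.asarray(images, dtype=float)
    mean_image = images.mean(axis=0)
    centred = images - mean_image
    # Image covariance matrix G = (1/M) * sum_j (A_j - mean)^T (A_j - mean), shape (n, n)
    G = np.einsum("kij,kil->jl", centred, centred) / images.shape[0]
    eigvals, eigvecs = np.linalg.eigh(G)  # G is symmetric; eigh returns ascending order
    order = np.argsort(eigvals)[::-1]     # sort eigenpairs by decreasing eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    X = eigvecs[:, :n_components]
    retained = eigvals[:n_components].sum() / eigvals.sum()
    return X, mean_image, retained


def project_2dpca(images, X, mean_image):
    """Feature matrices Y_j = (A_j - mean) X, returned with shape (M, m, d)."""
    return (np.asarray(images, dtype=float) - mean_image) @ X
```

Each m × d feature matrix can then be flattened, for example with `Y.reshape(len(Y), -1)`, before it is passed to a vector-based classifier.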

2.5. Explainable AI (XAI) Model

2.5.1. Random Forest
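A random forest (Breiman, 2001) is an ensemble of decision trees, each grown on a bootstrap sample with random feature subsets considered at each split. Its impurity-based feature importances are one common way of showing which input features drive the predictions. The sketch below uses scikit-learn's `RandomForestClassifier` on synthetic data standing in for flattened 2DPCA features. The data, `n_estimators=200` and `random_state` are illustrative assumptions, not the configuration used in this work.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Hypothetical stand-in for flattened 2DPCA feature vectors: 200 samples, 6 features;
# only feature 0 carries the class signal (1 = EPB present, 0 = EPB absent).
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 6))
labels = (features[:, 0] > 0).astype(int)

# Ensemble of bootstrap-trained decision trees with random feature subsets per split
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(features, labels)

# Impurity-based importances are normalised to sum to 1
importances = forest.feature_importances_
ranking = np.argsort(importances)[::-1]  # feature indices, most important first
print("most important feature:", ranking[0])
print("training accuracy:", forest.score(features, labels))
```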

2.5.2. Recursive Feature Elimination (RFE)

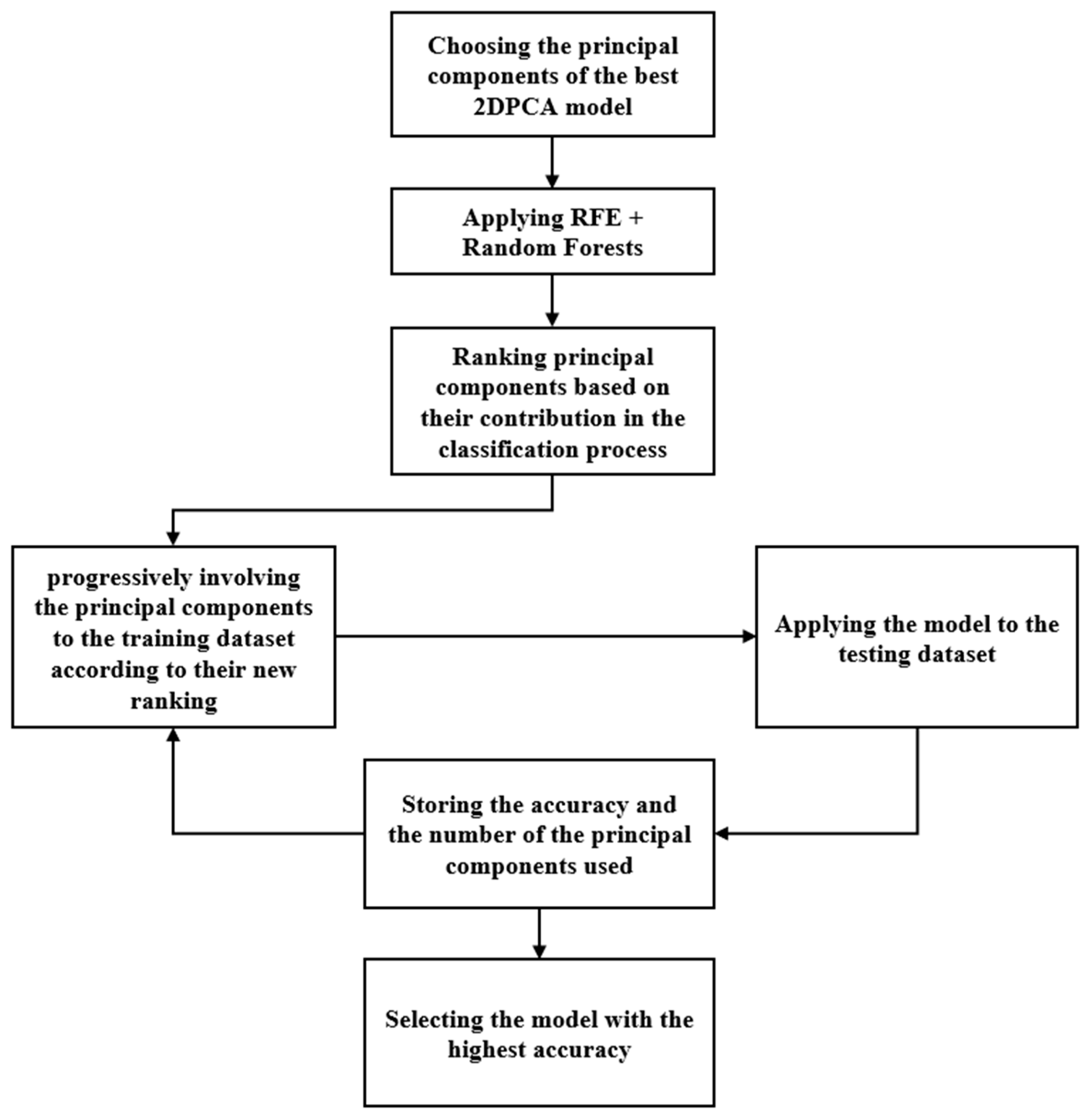
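Recursive Feature Elimination (Guyon et al., 2002) repeatedly fits an estimator, ranks the features by importance, and discards the weakest ones until the requested number remains. A minimal sketch using scikit-learn's `RFE` wrapper around a random forest follows. The synthetic data and `n_features_to_select=2` are assumptions chosen only to show the mechanics.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE

# Hypothetical toy data: features 0 and 1 are informative, features 2-7 are noise
rng = np.random.default_rng(1)
features = rng.normal(size=(300, 8))
labels = (features[:, 0] + features[:, 1] > 0).astype(int)

# RFE refits the forest after each round, dropping the least important
# feature (step=1) until n_features_to_select remain
selector = RFE(
    estimator=RandomForestClassifier(n_estimators=200, random_state=0),
    n_features_to_select=2,
    step=1,
)
selector.fit(features, labels)

kept = np.flatnonzero(selector.support_)  # indices of the retained features
print("kept features:", kept)
print("ranking:", selector.ranking_)      # 1 = kept; larger values were eliminated earlier
```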

2.5.3. Algorithm Breakdown

2.6. Evaluation Parameters

- Accuracy measures the overall performance of the model as the ratio of correct predictions to the total number of tested samples:

  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

  where $TP$ (True Positives) is the number of images in which the model correctly detected an EPB that was actually present, and $TN$ (True Negatives) is the number of images that the model correctly classified as not containing an EPB;

- Precision evaluates the reliability of positive predictions as the proportion of correctly detected EPBs ($TP$) among all images predicted to contain an EPB:

  $$\mathrm{Precision} = \frac{TP}{TP + FP},$$

  where $FP$ (False Positives) is the number of images in which the model detected an EPB when none was present;

- Recall (sensitivity) assesses the model’s ability to identify all positive instances as the ratio of $TP$ to the sum of $TP$ and $FN$:

  $$\mathrm{Recall} = \frac{TP}{TP + FN},$$

  where $FN$ (False Negatives) is the number of images in which the model failed to detect an EPB that was actually present (a missed detection);

- F1-score provides a balanced measure of the model’s performance by combining precision and recall into a single metric, their harmonic mean:

  $$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$
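The four definitions above can be computed directly from confusion-matrix counts, with an EPB being present treated as the positive class. A minimal plain-Python sketch follows; the function names and the counts used in the check are hypothetical.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (works for fractions or percentages)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 for binary EPB detection (EPB present = positive)."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard: no positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0     # guard: no positive samples
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1_from(precision, recall),
    }
```

As a consistency check, `f1_from(92.76, 91.36)` evaluates to about 92.05, which matches the F1-score reported for ResNet18 in the model comparison table given its precision and sensitivity.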

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2DPCA | Two-Dimensional Principal Component Analysis |
| ASI | All-Sky Imager |
| BJL | Bom Jesus da Lapa Observatory |
| CA | São João do Cariri Observatory |
| CCD | Charge-Coupled Device |
| CDF | Cumulative Distribution Function |
| CNN | Convolutional Neural Network |
| EPBs | Equatorial Plasma Bubbles |
| RF | Random Forest |
| RFE | Recursive Feature Elimination |

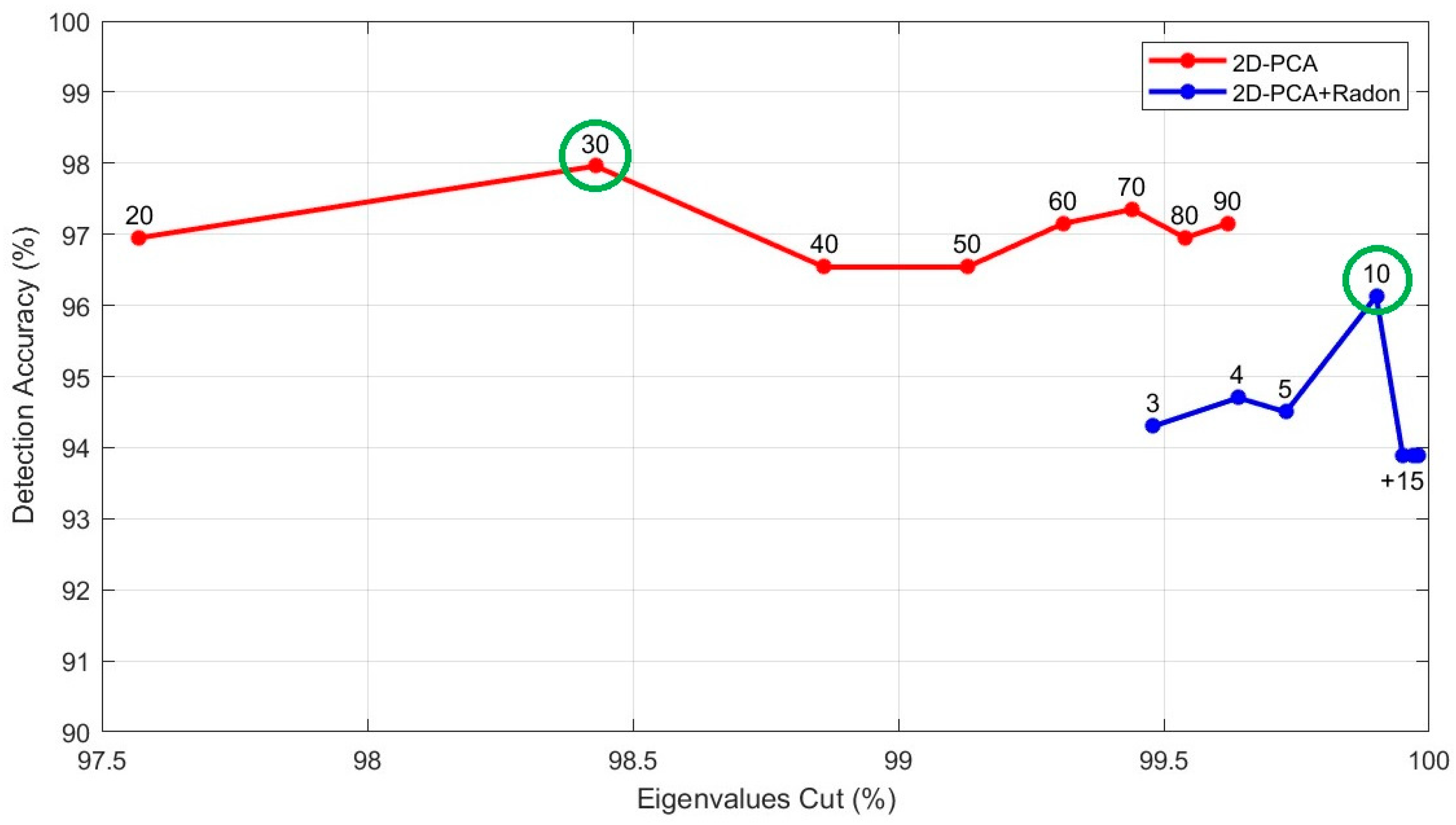

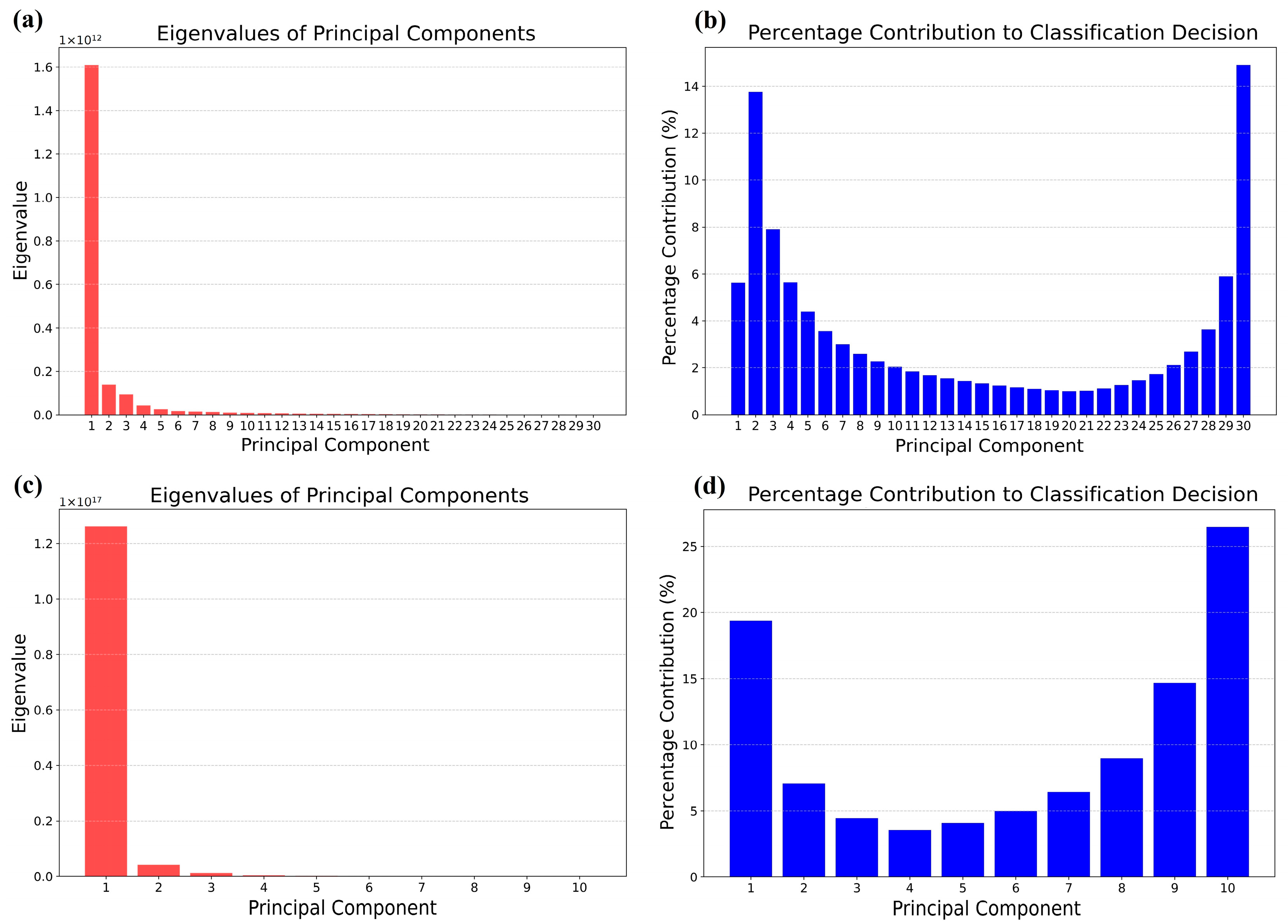

| Number of Principal Components | Eigenvalues Cut (%) | Accuracy (%) | Elapsed Time (s) |
|---|---|---|---|
| 20 | 97.57 | 96.95 | 301.692 |
| 30 | 98.43 | 97.96 | 301.748 |
| 40 | 98.86 | 96.54 | 301.705 |
| 50 | 99.13 | 96.54 | 301.896 |
| 60 | 99.31 | 97.15 | 301.766 |
| 70 | 99.44 | 97.35 | 301.918 |
| 80 | 99.54 | 96.95 | 301.916 |
| 90 | 99.62 | 97.15 | 301.879 |

| Number of Principal Components | Eigenvalues Cut (%) | Accuracy (%) | Elapsed Time (s) |
|---|---|---|---|
| 3 | 99.48 | 94.30 | 130.660 |
| 4 | 99.64 | 94.70 | 130.678 |
| 5 | 99.73 | 94.50 | 130.673 |
| 10 | 99.90 | 96.13 | 130.683 |
| 15 | 99.95 | 93.89 | 130.793 |
| 20 | 99.97 | 93.89 | 130.751 |
| 25 | 99.98 | 93.89 | 130.873 |

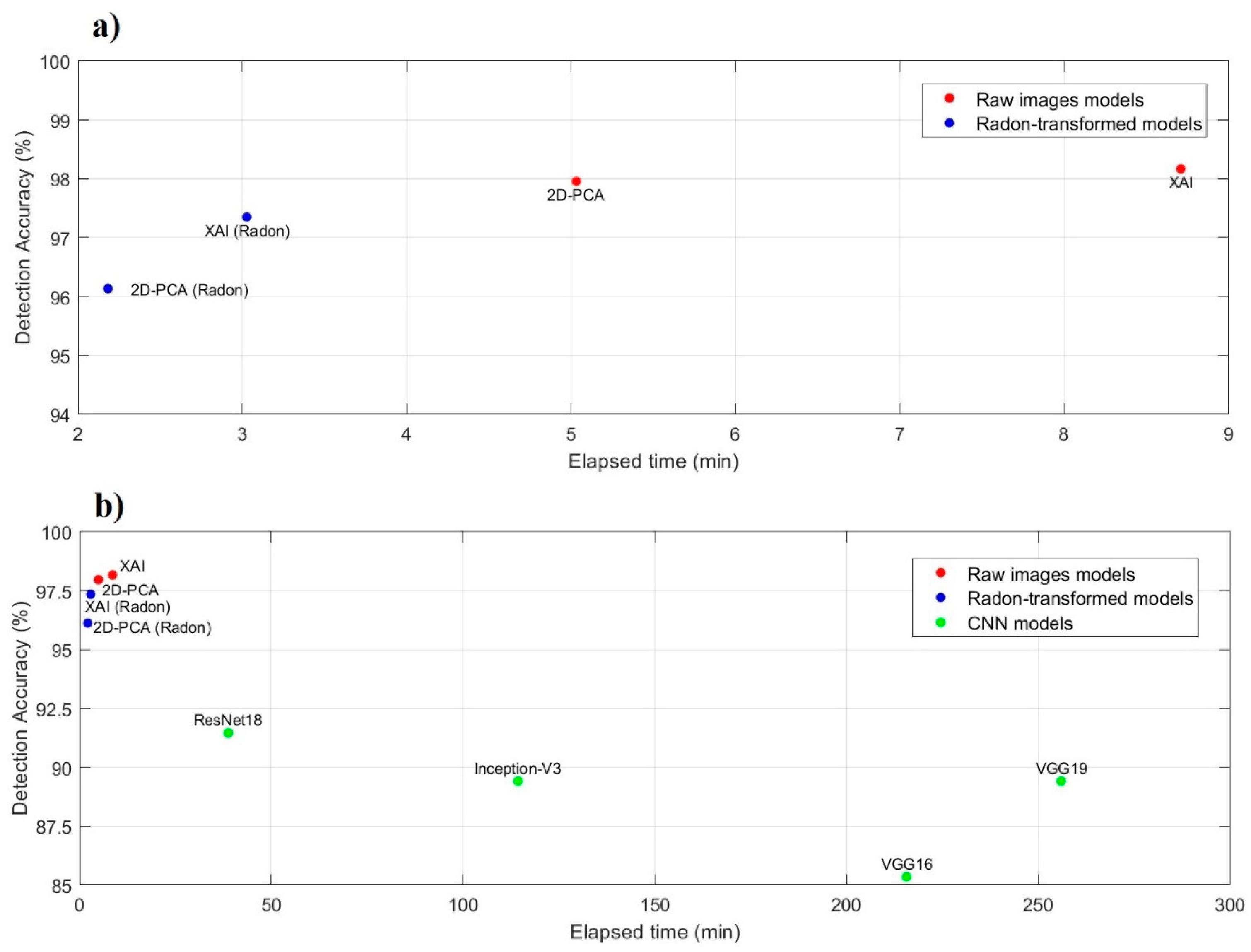

| Model | Accuracy (%) | Precision (%) | Sensitivity (%) | F1-Score (%) | Elapsed Time (min) |
|---|---|---|---|---|---|
| ResNet18 | 91.45 | 92.76 | 91.36 | 92.05 | 38.72 |
| Inception-V3 | 89.41 | 90.78 | 89.32 | 90.04 | 114.30 |
| VGG16 | 85.34 | 88.35 | 85.19 | 86.74 | 215.70 |
| VGG19 | 89.41 | 90.32 | 89.33 | 89.83 | 255.96 |
| 2DPCA | 97.96 | 98.03 | 97.95 | 97.99 | 5.03 |
| 2DPCA (Radon) | 96.13 | 96.37 | 96.17 | 96.27 | 2.18 |
| XAI | 98.17 | 98.18 | 98.16 | 98.17 | 8.71 |
| XAI (Radon) | 97.35 | 97.43 | 97.38 | 97.40 | 3.03 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yacoub, M.; Abdelwahab, M.; Shiokawa, K.; Mahrous, A. Automatic Detection of Equatorial Plasma Bubbles in Airglow Images Using Two-Dimensional Principal Component Analysis and Explainable Artificial Intelligence. Mach. Learn. Knowl. Extr. 2025, 7, 26. https://doi.org/10.3390/make7010026
