Long-Range Bird Species Identification Using Directional Microphones and CNNs

Abstract

1. Introduction

2. Materials and Methods

2.1. Directional Microphone Prototype

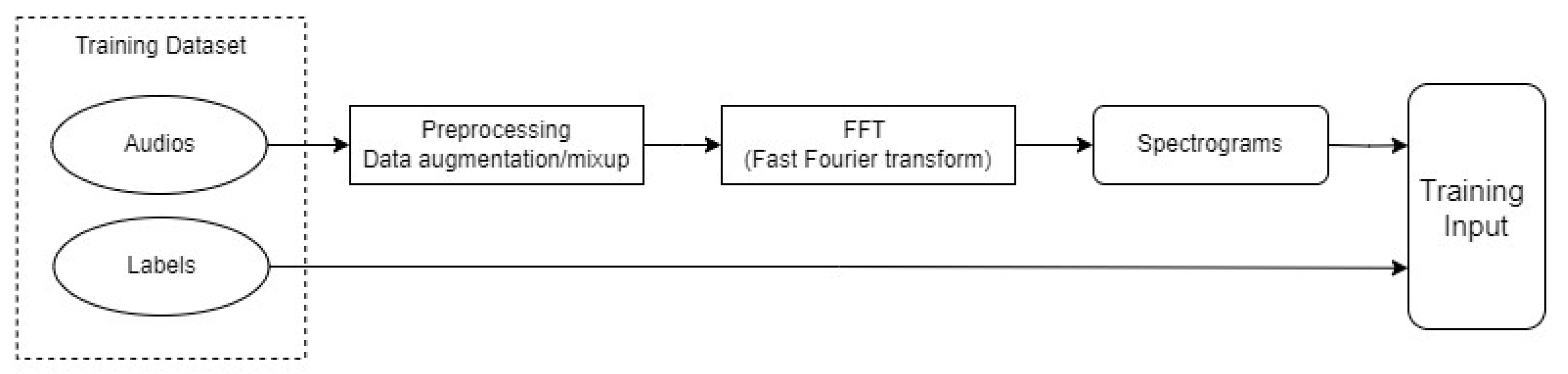

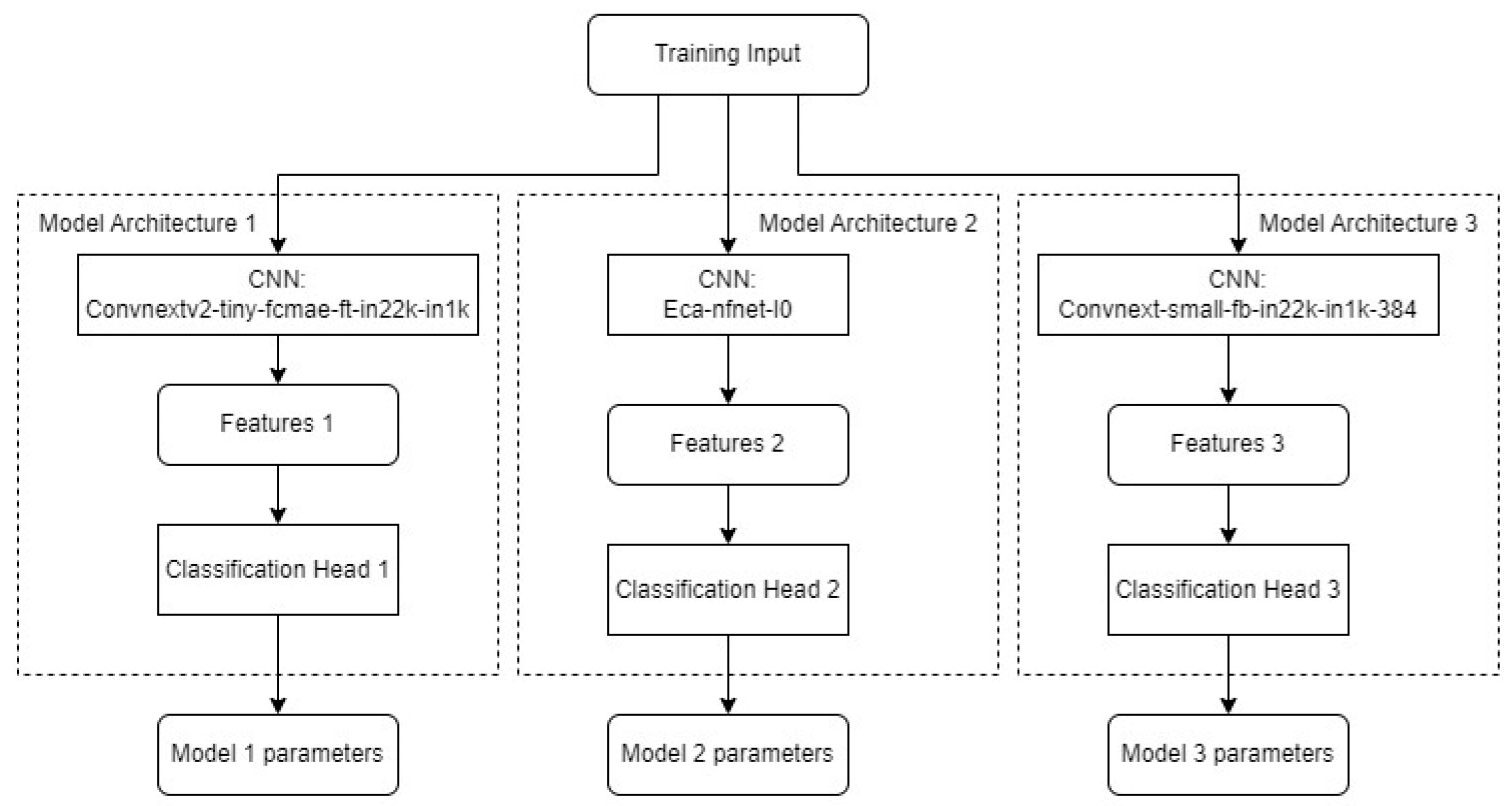

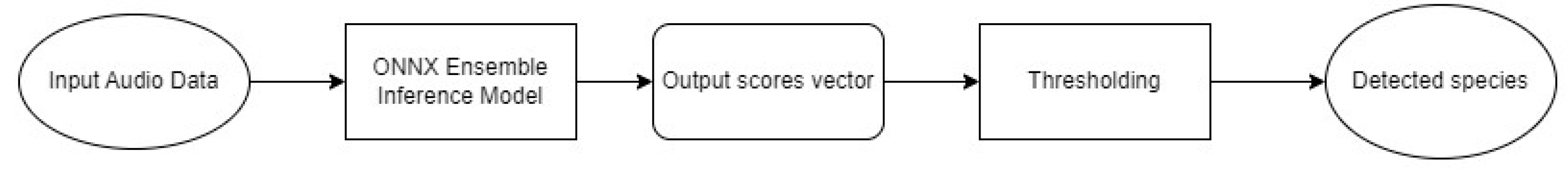

2.2. Deep Learning Model

2.2.1. Model Description

- Convnext_small: 10 h

- Convnextv2-tiny: 9.5 h

- Eca-nfnet: 5.5 h

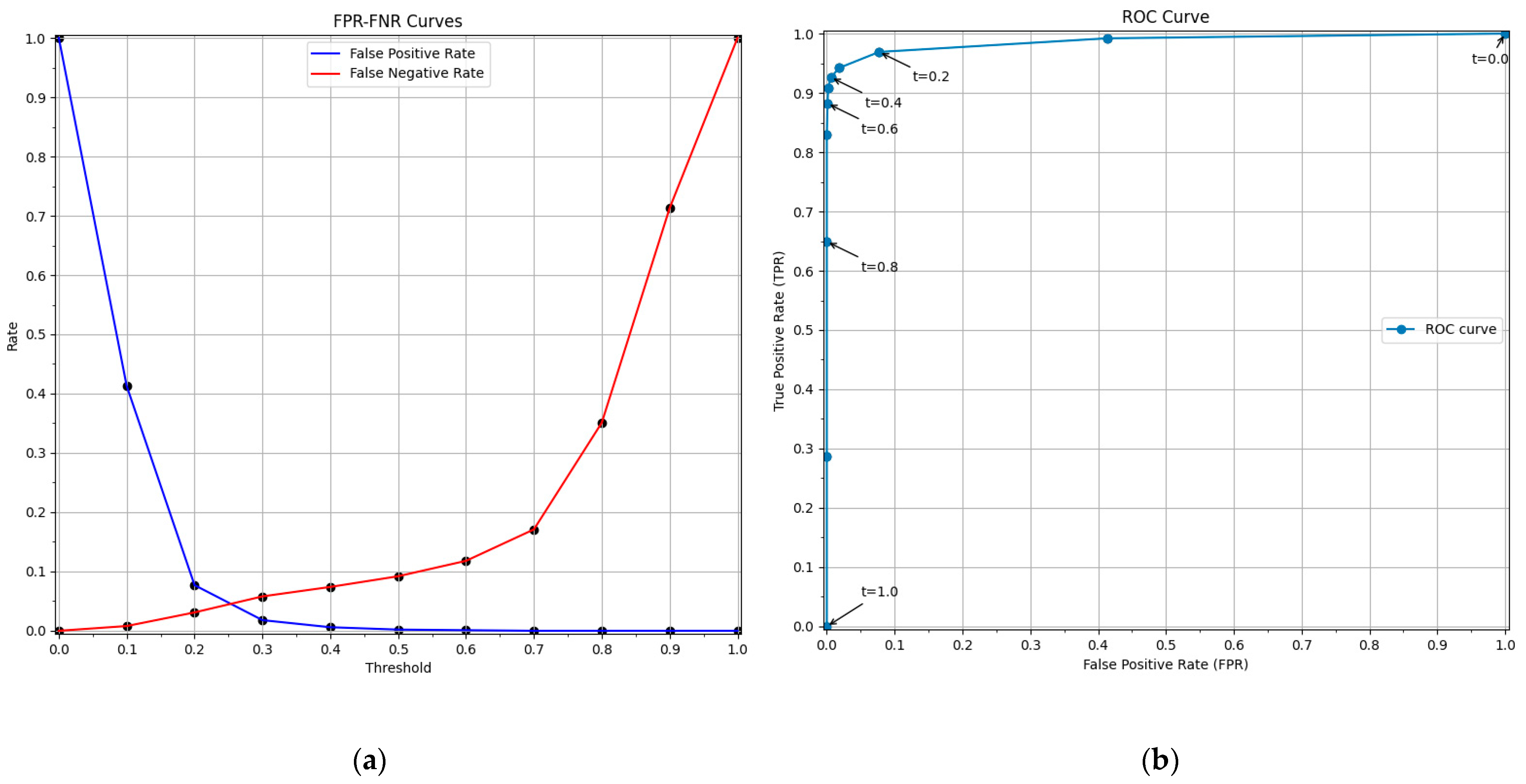

2.2.2. Model Validation

2.3. Field Testing Methodology

2.3.1. Field Testing Objectives

- The distance from the parabola, which determines the effective range of the prototype.

- The angle between the parabola axis and the sound source, which determines how sound amplification varies with angular deviation from the exact direction of the target. Small deviations are expected to significantly reduce the amplification provided by the parabola.

- The frequency of the signal, which determines the influence of sound frequency on the detection range and angle. Higher frequencies are expected to be absorbed and distorted by air more strongly than lower ones. Including signals at a range of frequencies makes it possible to determine the limit conditions under which a bird calling in a specific frequency range will be detected.
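
The distance and frequency effects listed above can be sketched numerically. The following is a minimal illustration, assuming free-field spherical spreading (the inverse-square law) plus a linear atmospheric absorption term. The function names, the 90 dB source level, and the absorption coefficients are placeholders chosen for illustration, not values measured in this study.

```python
import math


def spreading_loss_db(distance_m, ref_distance_m=1.0):
    """Level drop (dB) from spherical spreading relative to a reference distance."""
    return 20.0 * math.log10(distance_m / ref_distance_m)


def expected_level_db(source_level_db, distance_m, absorption_db_per_m,
                      ref_distance_m=1.0):
    """Received level (dB) after spreading loss and linear air absorption."""
    spreading = spreading_loss_db(distance_m, ref_distance_m)
    absorption = absorption_db_per_m * (distance_m - ref_distance_m)
    return source_level_db - spreading - absorption


# ASSUMPTION: illustrative absorption coefficients in dB per metre.
# They only encode "higher frequency, stronger absorption"; they are
# not taken from the field measurements.
ASSUMED_ABSORPTION_DB_PER_M = {500: 0.003, 8000: 0.1}

# ASSUMPTION: hypothetical source level at the 1 m reference distance.
SOURCE_LEVEL_DB = 90.0

for freq_hz, alpha in ASSUMED_ABSORPTION_DB_PER_M.items():
    for distance_m in (25.0, 280.0):
        level = expected_level_db(SOURCE_LEVEL_DB, distance_m, alpha)
        print(f"{freq_hz} Hz at {distance_m:.0f} m: {level:.1f} dB")
```

Under these assumptions the spreading term is identical for every frequency, so any difference in received level between the two tones at a given distance comes entirely from the absorption term, which grows linearly with distance.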

2.3.2. Testing Location and Conditions

2.3.3. Testing Data and Procedure

- Close-range test: At a distance of 25 m, signal power was measured at one-degree intervals across the range [−30°, 30°]. The objective was to obtain a detailed characterization of the prototype and to determine the critical angles at which the signal intensity dropped significantly.

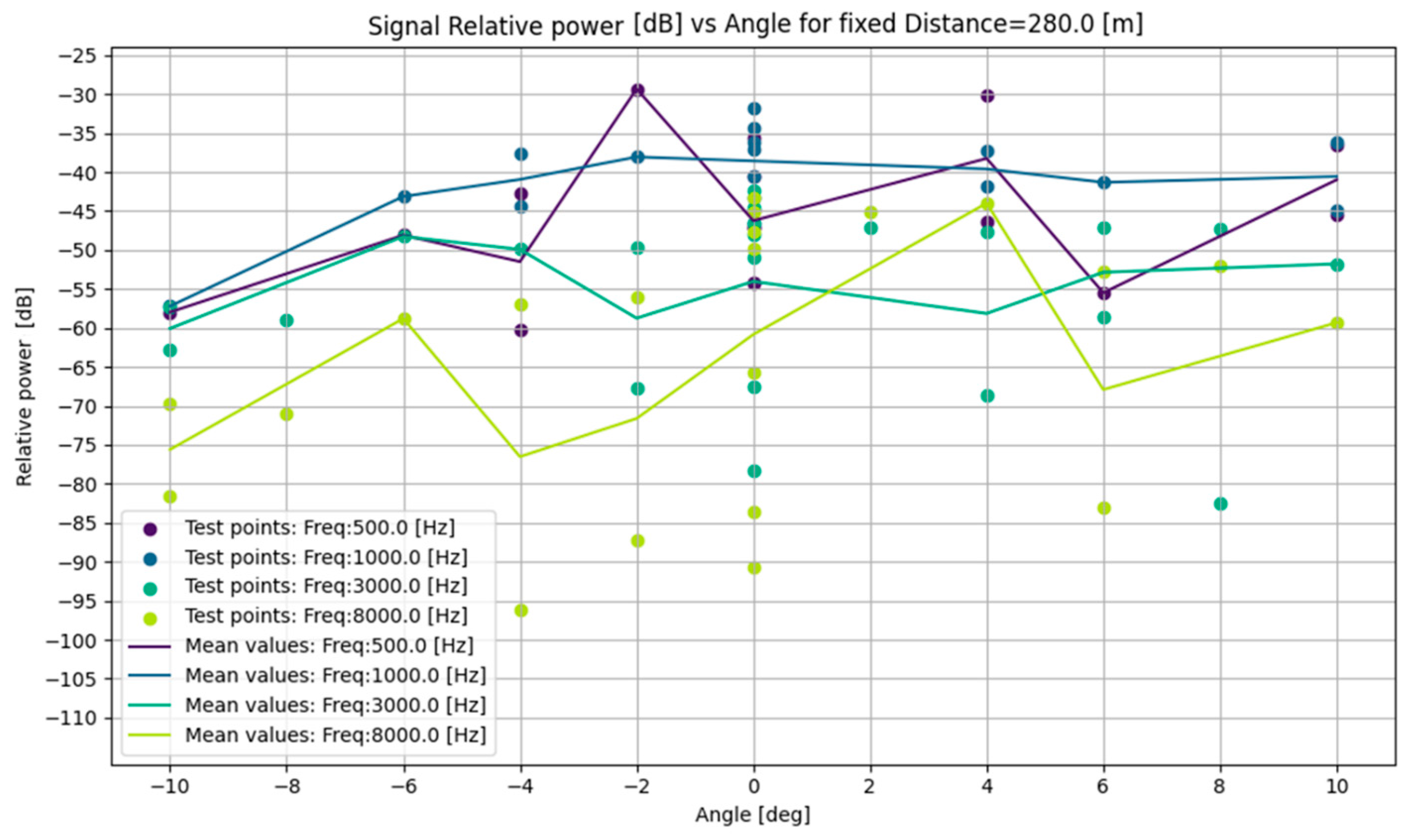

- Long-range test: At a distance of 280 m, signal power was measured at two-degree intervals across the range [−10°, 10°]. The objective was to estimate the angular precision needed to obtain a good-quality audio sample at approximately the effective range of the prototype, where all frequencies were still audible above a 20 dB signal-to-noise ratio. This distance was chosen during the distance characterization test through real-time analysis of the spectrograms.
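
As a rough sketch of how the two angular sweeps could be analysed, the snippet below builds the angle grids described above, converts a power ratio to a signal-to-noise ratio in decibels, and lists the angles at which the level falls more than a chosen amount below the peak. The helper names, the 3 dB drop threshold, and the Gaussian-shaped synthetic beam are assumptions for illustration only; they are not the prototype's measured response.

```python
import math


def angle_grid(limit_deg, step_deg):
    """Symmetric list of measurement angles in [-limit_deg, +limit_deg]."""
    return list(range(-limit_deg, limit_deg + 1, step_deg))


def snr_db(signal_power, noise_power):
    """Signal-to-noise ratio in dB from two linear power values."""
    return 10.0 * math.log10(signal_power / noise_power)


def critical_angles(power_by_angle, drop_db=3.0):
    """Angles whose power lies more than drop_db below the peak power."""
    peak = max(power_by_angle.values())
    return sorted(
        angle for angle, power in power_by_angle.items()
        if 10.0 * math.log10(peak / power) > drop_db
    )


def synthetic_beam_power(angle_deg, width_deg=8.0):
    """ASSUMPTION: Gaussian-shaped beam used only as stand-in data."""
    return math.exp(-((angle_deg / width_deg) ** 2))


CLOSE_RANGE_ANGLES = angle_grid(30, 1)  # 25 m test, one-degree steps
LONG_RANGE_ANGLES = angle_grid(10, 2)   # 280 m test, two-degree steps

# Stand-in measurements for the long-range sweep (synthetic, not field data).
long_range_power = {a: synthetic_beam_power(a) for a in LONG_RANGE_ANGLES}

print(len(CLOSE_RANGE_ANGLES), "close-range positions")
print(len(LONG_RANGE_ANGLES), "long-range positions")
print("Angles beyond the assumed 3 dB drop:", critical_angles(long_range_power))
```

With real measurements, `long_range_power` would be replaced by the recorded signal power at each angle, and `snr_db` could be used to check each sample against the 20 dB criterion mentioned above.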

2.3.4. Theoretical Sound Decay Function

3. Results

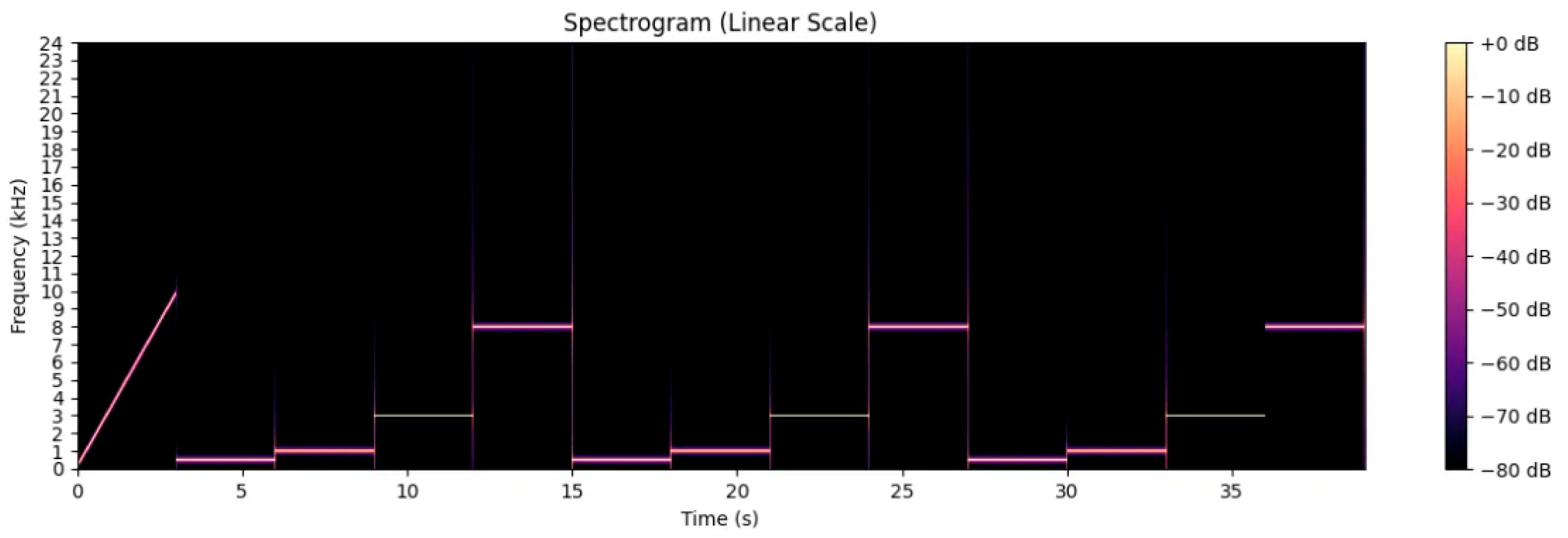

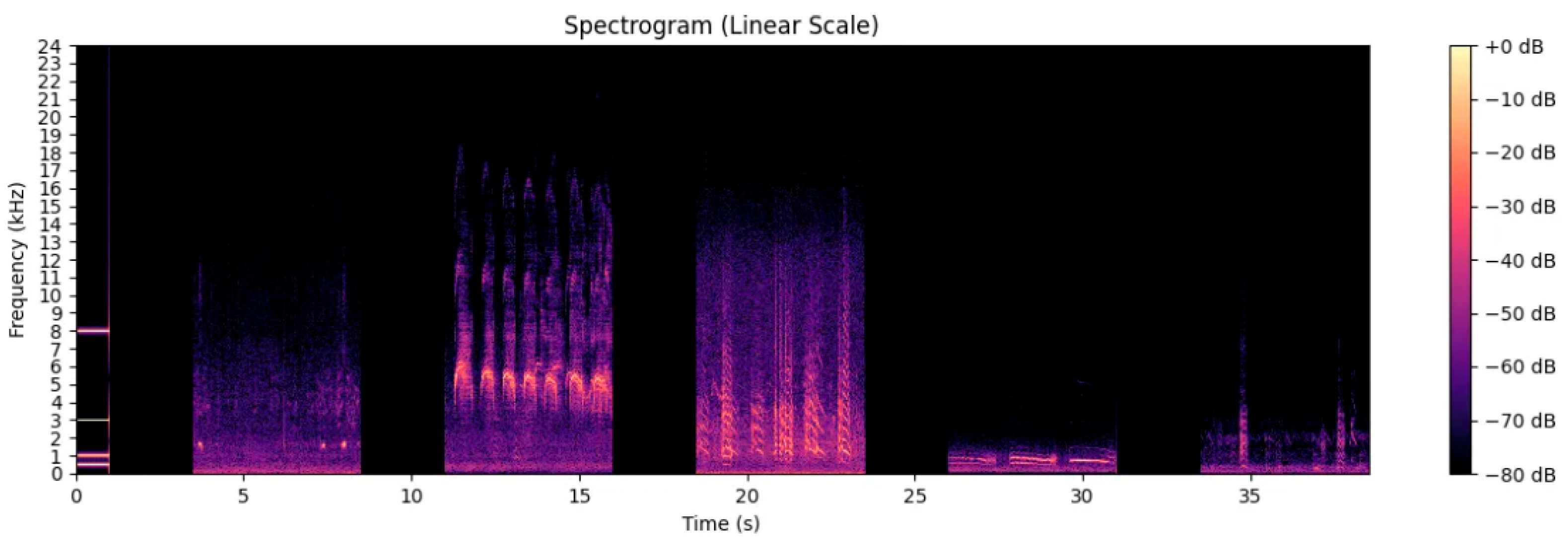

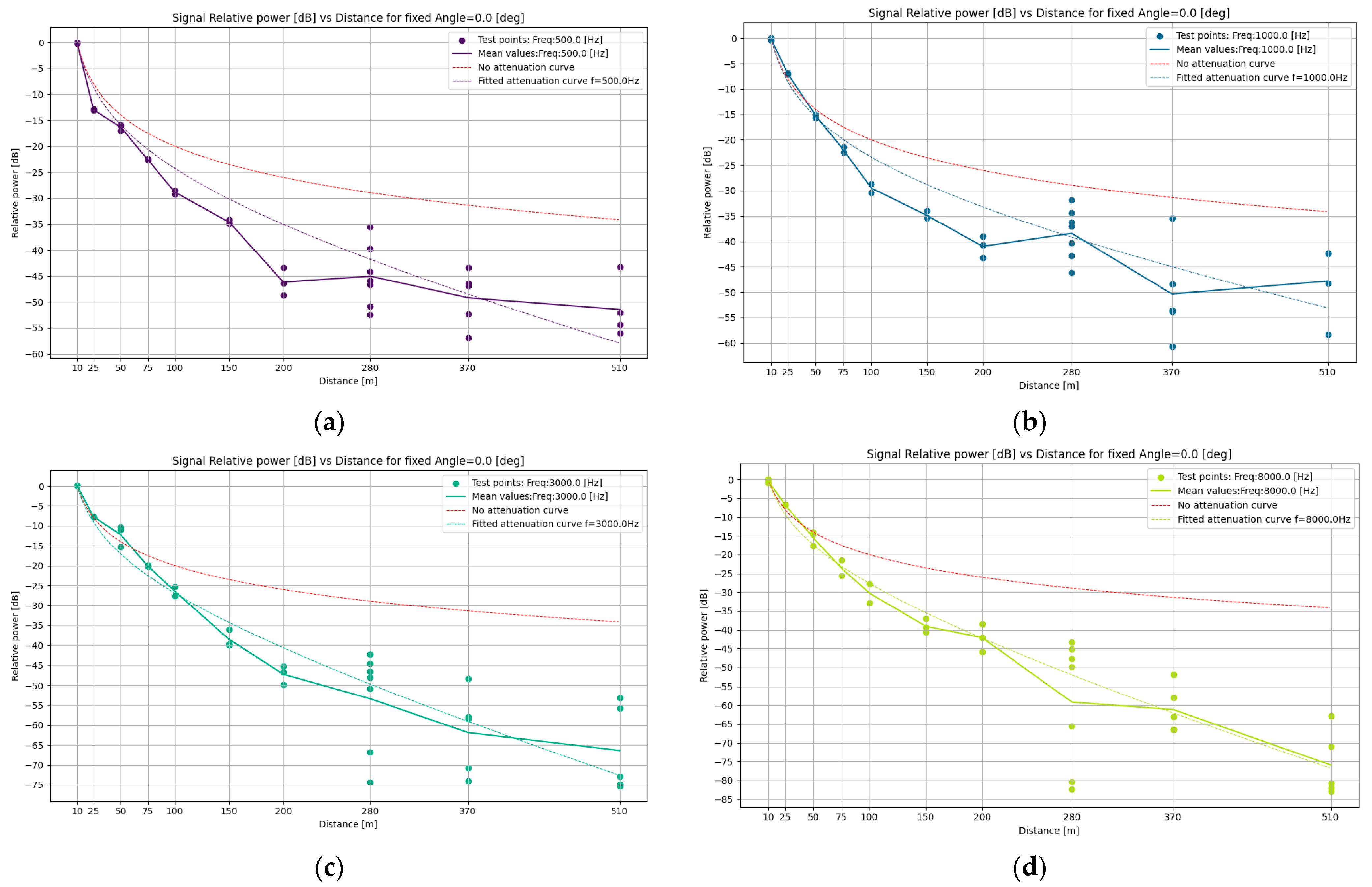

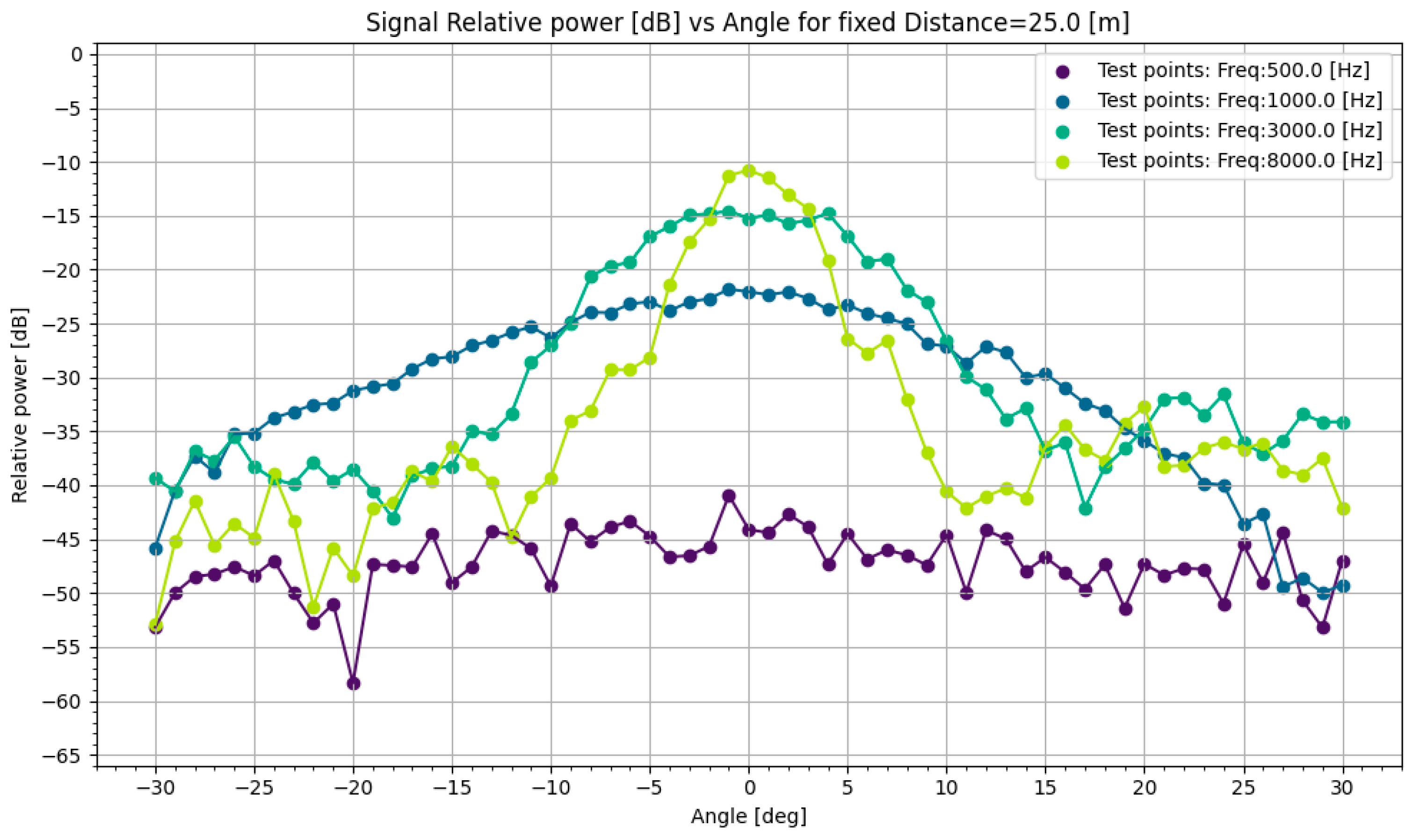

3.1. Simple-Frequency Audio Test Results

3.1.1. Distance Characterization with Frequency

3.1.2. Angle Characterization with Frequency and Distance

3.2. Species Detection with Distance Test Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Common Name | Scientific Name | Common Name | Scientific Name |
|---|---|---|---|
| Arctic Tern | Sterna paradisaea | Little Egret | Egretta garzetta |
| Atlantic Puffin | Fratercula arctica | Manx Shearwater | Puffinus puffinus |
| Balearic Shearwater | Puffinus mauretanicus | Meadow Pipit | Anthus pratensis |
| Bearded Bellbird | Procnias averano | Mediterranean Gull | Larus melanocephalus |
| Black-headed Gull | Larus ridibundus | Northern Gannet | Morus bassanus |
| Black-legged Kittiwake | Rissa tridactyla | Northern Lapwing | Vanellus vanellus |
| Common Blackbird | Turdus merula | Northern Pintail | Anas acuta |
| Common Moorhen | Gallinula chloropus | Pallid Swift | Apus pallidus |
| Common Murre | Uria aalge | Parasitic Jaeger | Stercorarius parasiticus |
| Common Ringed Plover | Charadrius hiaticula | Pied Avocet | Recurvirostra avosetta |
| Common Scoter | Melanitta nigra | Pomarine Jaeger | Stercorarius pomarinus |
| Common Tern | Sterna hirundo | Purple Heron | Ardea purpurea |
| Cory’s Shearwater | Calonectris borealis | Razorbill | Alca torda |
| Eurasian Coot | Fulica atra | Red Knot | Calidris canutus |
| Eurasian Whimbrel | Numenius phaeopus | Sandwich Tern | Thalasseus sandvicensis |
| Great Black-backed Gull | Larus marinus | Water Rail | Rallus aquaticus |
| Great Skua | Stercorarius skua | Yellow-legged Gull | Larus michahellis |
| Grey Plover | Pluvialis squatarola | Zino’s Petrel | Pterodroma madeira |
| Lesser Black-backed Gull | Larus fuscus | | |

| Scientific Name | Common Name | Vocalization Frequencies |
|---|---|---|
| Anas acuta | Northern Pintail | 1500–2000 Hz, harmonics up to 6000 Hz |
| Apus pallidus | Pallid Swift | 3000–6000 Hz, harmonics up to 15,000 Hz |
| Larus fuscus | Lesser Black-backed Gull | Harmonics from 300 Hz up to 13,000 Hz |
| Pterodroma madeira | Zino’s Petrel | 500–1000 Hz, harmonics up to 4000 Hz |
| Uria aalge | Common Murre | 250–4000 Hz, harmonics up to 8000 Hz |

| Frequency (Hz) | Value | |
|---|---|---|
| 500 | 5.478 × 10⁻³ | 0.910 |
| 1000 | 4.358 × 10⁻³ | 0.924 |
| 3000 | 8.857 × 10⁻³ | 0.968 |
| 8000 | 9.811 × 10⁻³ | 0.985 |

| Distance (m) | Anas acuta (High) | Anas acuta (Avg.) | Apus pallidus (High) | Apus pallidus (Avg.) | Larus fuscus (High) | Larus fuscus (Avg.) | Pterodroma madeira (High) | Pterodroma madeira (Avg.) | Uria aalge (High) | Uria aalge (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|
| Original Recording | 0.88 | 0.88 | 0.88 | 0.88 | 0.92 | 0.92 | 0.92 | 0.92 | 0.80 | 0.80 |
| 10 | 0.82 | 0.80 | 0.89 | 0.85 | 0.77 | 0.73 | 0.83 | 0.82 | 0.78 | 0.78 |
| 25 | 0.87 | 0.87 | 0.86 | 0.85 | 0.80 | 0.80 | 0.78 | 0.80 | 0.77 | 0.76 |
| 50 | 0.86 | 0.85 | 0.86 | 0.86 | 0.75 | 0.74 | 0.83 | 0.80 | 0.77 | 0.60 |
| 75 | 0.85 | 0.80 | 0.88 | 0.87 | 0.71 | 0.70 | 0.78 | 0.76 | 0.61 | 0.59 |
| 100 | 0.82 | 0.80 | 0.85 | 0.82 | 0.68 | 0.64 | 0.34 | 0.27 | 0.16 | 0.10 |
| 150 | 0.82 | 0.73 | 0.75 | 0.65 | 0.63 | 0.55 | 0.68 | 0.32 | 0.59 | 0.40 |
| 200 | 0.79 | 0.71 | 0.78 | 0.74 | 0.57 | 0.43 | 0.12 | 0.09 | 0.11 | 0.08 |
| 280 | 0.58 | 0.51 | 0.76 | 0.63 | 0.46 | 0.28 | 0.16 | 0.07 | 0.12 | 0.07 |
| 370 | 0.19 | 0.14 | 0.39 | 0.15 | NR | NR | NR | NR | NR | NR |
| 510 | 0.26 | 0.19 | 0.48 | 0.18 | NR | NR | NR | NR | NR | NR |

| Distance (m) | Anas acuta (High) | Anas acuta (Avg.) | Apus pallidus (High) | Apus pallidus (Avg.) | Larus fuscus (High) | Larus fuscus (Avg.) | Pterodroma madeira (High) | Pterodroma madeira (Avg.) | Uria aalge (High) | Uria aalge (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|
| Original Recording | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 10 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 25 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 50 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 |
| 75 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| 100 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 9 | 19 | 28.5 |
| 150 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 5 | 1 | 3.5 |
| 200 | 1 | 1 | 1 | 1 | 1 | 1.5 | 14 | 21.3 | 31 | 33 |
| 280 | 1 | 1 | 1 | 1 | 1 | 10.6 | 13 | 23.3 | 29 | 33.3 |
| 370 | 7 | 14.4 | 2 | 20 | NR | NR | NR | NR | NR | NR |
| 510 | 1 | 9.6 | 1 | 21 | NR | NR | NR | NR | NR | NR |

| Scientific Name | Effective Range | Maximum Detection Range |
|---|---|---|
| Anas acuta | 200 m | 280 m |
| Apus pallidus | 280 m | 510 m |
| Larus fuscus | 150 m | 280 m |
| Pterodroma madeira | 75 m | 150 m |
| Uria aalge | 75 m | 150 m |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Garcia, T.; Pina, L.; Robb, M.; Maria, J.; May, R.; Oliveira, R. Long-Range Bird Species Identification Using Directional Microphones and CNNs. Mach. Learn. Knowl. Extr. 2024, 6, 2336-2354. https://doi.org/10.3390/make6040115
