Capsule Network with Its Limitation, Modification, and Applications—A Survey

Abstract

1. Introduction

- To present state-of-the-art capsule models to motivate researchers.

- To explore possible future research areas.

- To present a comparative study of current state-of-the-art CapsNet architectures.

- To present a comparative study of current state-of-the-art CapsNet architecture routing algorithms.

- To explore the factors affecting the performance of capsule neural network architectures with their modifications and applications.

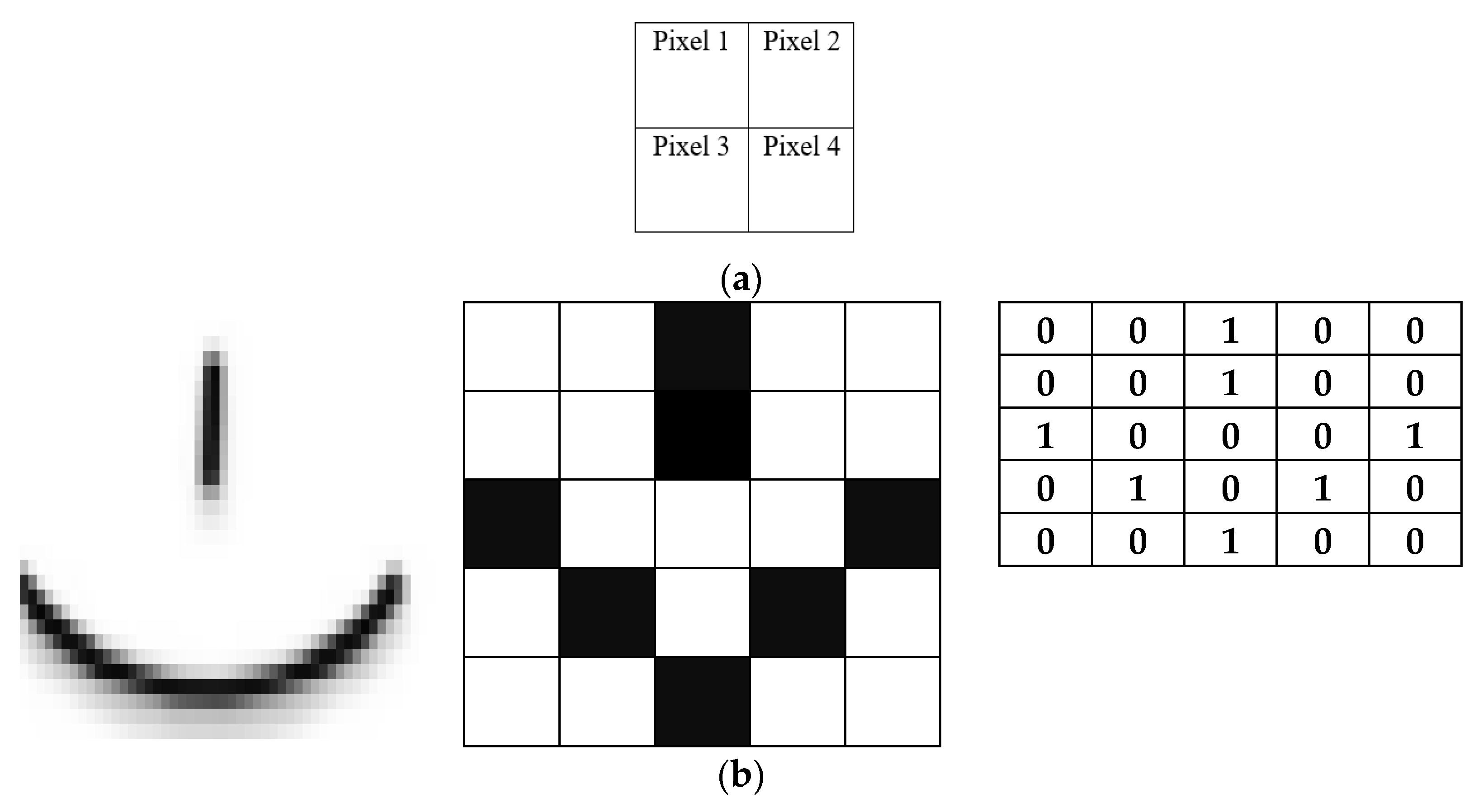

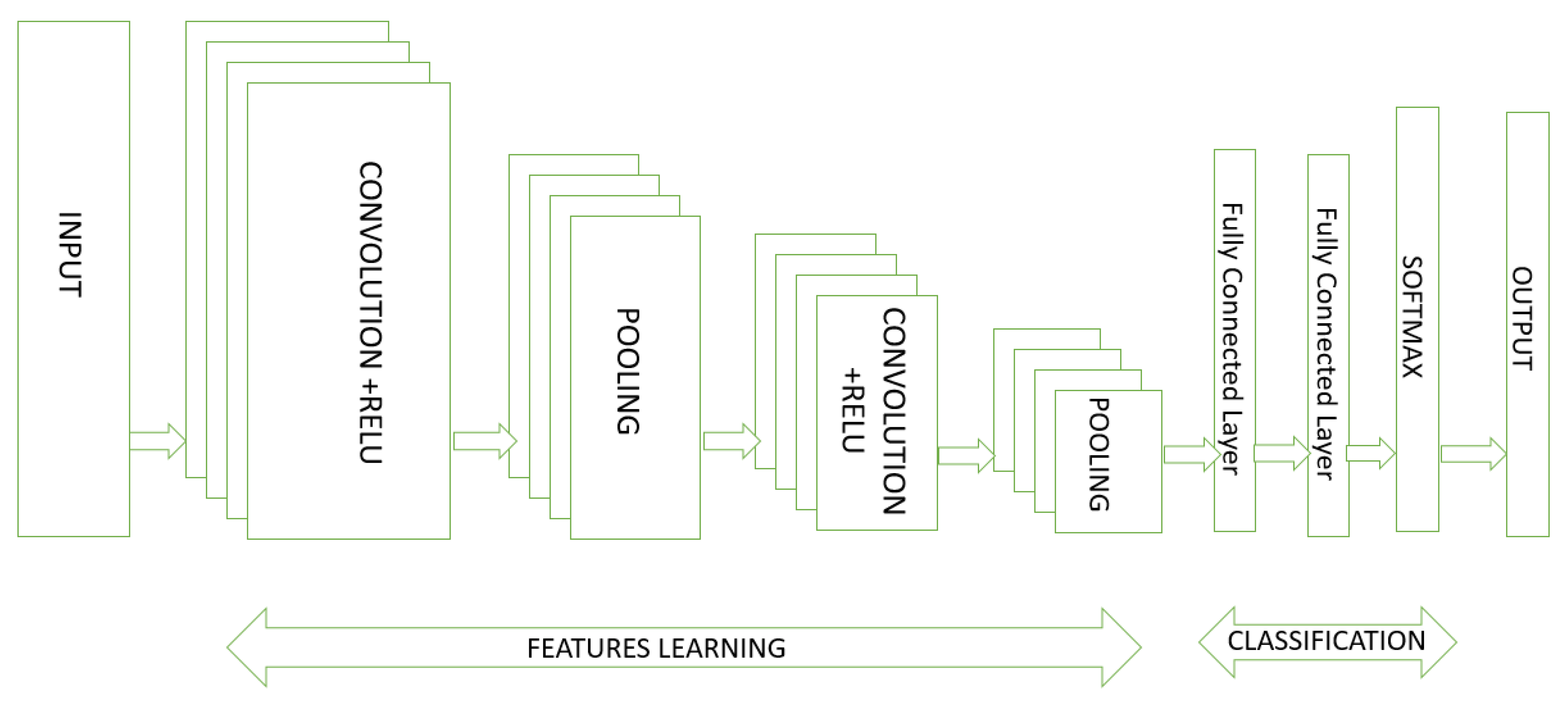

2. Convolutional Neural Network (CNN)

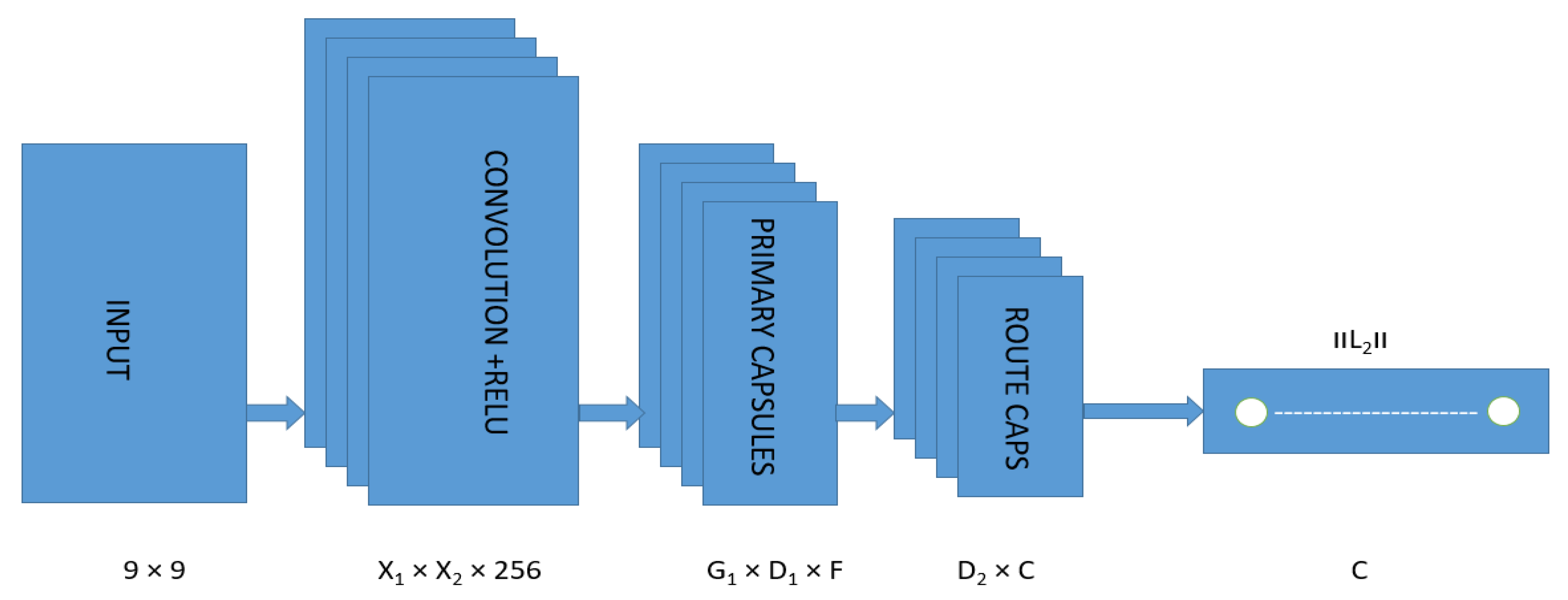

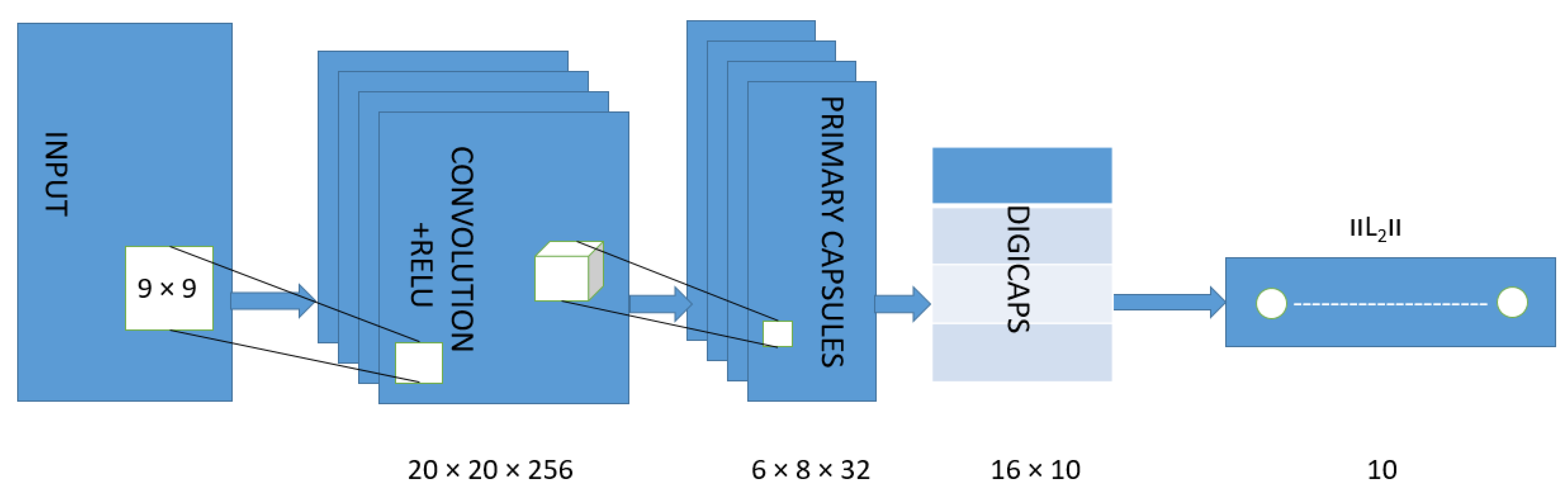

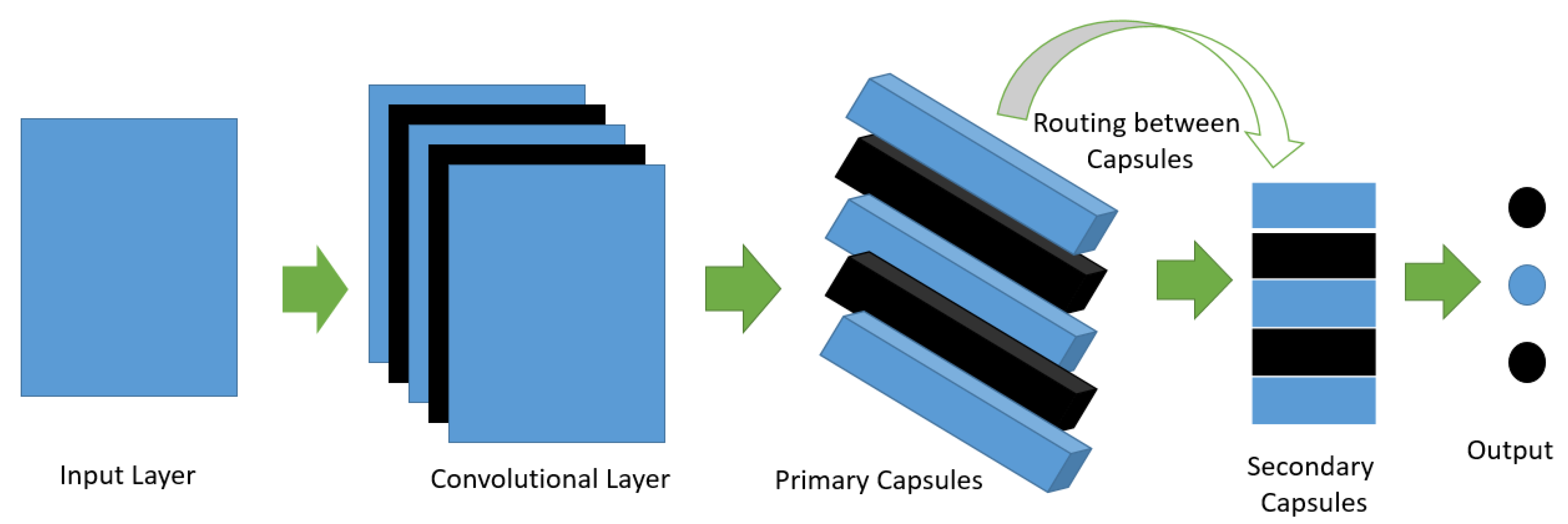

3. Capsule Network (CapsNet)

3.1. Mathematical Model of Capsule Network

3.2. Training and Inference Optimization Methods of CapsNet

3.3. NASCaps

4. Factors Affecting CapsNet’s Performance

4.1. Small Datasets

4.2. Large Dataset

4.3. Transfer Learning

4.4. Data Augmentation

4.5. CapsNet Architecture

4.6. Capsule Routing

4.7. Class Balancing

4.8. Model Complexity

5. Modification in CapsNet

6. Applications of CapsNet

6.1. Image Classification and Recognition

6.2. Object Pose Estimation

6.3. Image Generation

6.4. Medical Image Interpretation

6.5. Natural Language Processing (NLP)

6.6. Video Analysis

6.7. Few-Shot Learning

7. Why CapsNet Is Superior to CNN in Most Cases

7.1. Better Understanding of the Spatial Relationship between Features

7.2. Handling Variations

7.3. Better Generalization

7.4. Hierarchical Representation

7.5. Translation Invariance

7.6. Performance

8. Limitation/Challenges of Capsule Network

8.1. Limited Understanding

8.2. Computational Cost

8.3. Limited Real-World Applications

8.4. Model Complexity

8.5. Limited Interpretability

8.6. Sensitive to Small Variations

8.7. Limited Availability of Pre-Trained Models

9. Literature Survey

10. Routing Algorithms

11. Future Research Directions

11.1. Better Routing Mechanisms

11.2. Handling Big Data

11.3. Task Adaptation

11.4. CapsNets in Transfer Learning

11.5. Interpretability and Explainability

11.6. CapsNets for Few-Shot Learning

11.7. Dynamic Routing Improvements

11.8. CapsNets in Reinforcement Learning

11.9. CapsNets with Attention Mechanisms

11.10. Capsule Networks for 3D Data

11.11. CapsNets in Generative Models

12. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclatures

| Notation | Description |
|---|---|
| CapsNet | Capsule network |
| CNN | Convolutional neural network |
| MNIST dataset | Modified National Institute of Standards and Technology dataset |
| CIFAR | Canadian Institute for Advanced Research |
| RNN | Recurrent neural network |
| SVHN dataset | Street View House Number dataset |
| SVM | Support vector machine |
| ANN | Artificial neural network |
| KNN | K-nearest neighbor |
| CapsGAN | Capsule GAN |
| PT-CapsNet | Prediction-tuning capsule network |
| FC Layers | Fully connected layers |
| LSTM | Long short-term memory |
| GAN | Generative adversarial network |
| WOS | Web of Science |
| ResNet | Residual neural network |
| FR | Face recognition |
| NB | Naïve Bayesian |
| GRU | Gated recurrent unit |
| DirectCapsNet | Dual-directed capsule network model |
| NN | Neural network |
| VLR | Very low resolution |
| ReLU | Rectified linear unit |
| BGC | Blurb genre collection |
| LFW | Labeled faces in the wild |
| NAS | Neural architecture search |
| NASCaps | NAS capsule |

References

- Fahad, K.; Yu, X.; Yuan, Z.; Rehman, A.U. ECG classification using 1-D convolutional deep residual neural network. PLoS ONE 2023, 18, e0284791.

- Haq, M.U.; Shahzad, A.; Mahmood, Z.; Shah, A.A.; Muhammad, N.; Akram, T. Boosting the face recognition performance of ensemble based LDA for pose, non-uniform illuminations, and low-resolution images. KSII Trans. Internet Inf. Syst. 2019, 13, 3144–3164.

- Rehman, A.U.; Aasia, K.; Arslan, S. Hybrid feature selection and tumor identification in brain MRI using swarm intelligence. In Proceedings of the 2013 11th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 16–18 December 2013; pp. 49–54.

- Patel, C.I.; Garg, S.; Zaveri, T.; Banerjee, A. Top-down and bottom-up cues based moving object detection for varied background video sequences. Adv. Multimed. 2014, 2014, 879070.

- Zhang, L.; Leng, X.; Feng, S.; Ma, X.; Ji, K.; Kuang, G.; Liu, L. Azimuth-Aware Discriminative Representation Learning for Semi-Supervised Few-Shot SAR Vehicle Recognition. Remote Sens. 2023, 15, 331.

- Aamna, B.; Arif, A.; Khalid, W.; Khan, B.; Ali, A.; Khalid, S.; Rehman, A.U. Recognition and classification of handwritten urdu numerals using deep learning techniques. Appl. Sci. 2023, 13, 1624.

- Patrick, M.K.; Adekoya, A.F.; Mighty, A.A.; Edward, B.Y. Capsule networks—A survey. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1295–1310.

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; pp. 1–6.

- Zhang, D.; Wang, D. Relation classification via recurrent neural network. arXiv 2015, arXiv:1508.01006.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 777–780.

- Patel, C.; Bhatt, D.; Sharma, U.; Patel, R.; Pandya, S.; Modi, K.; Cholli, N.; Patel, A.; Bhatt, U.; Khan, M.A.; et al. DBGC: Dimension-based generic convolution block for object recognition. Sensors 2022, 22, 1780.

- Xi, E.; Bing, S.; Jin, Y. Capsule network performance on complex data. arXiv 2017, arXiv:1712.03480.

- Wang, Y.; Ning, D.; Feng, S. A novel capsule network based on wide convolution and multi-scale convolution for fault diagnosis. Appl. Sci. 2020, 10, 3659.

- Liu, S.; Wang, Z.; An, Y.; Zhao, J.; Zhao, Y.; Zhang, Y.-D. EEG emotion recognition based on the attention mechanism and pre-trained convolution capsule network. Knowl.-Based Syst. 2023, 265, 110372.

- Sreelakshmi, K.; Akarsh, S.; Vinayakumar, R.; Soman, K.P. Capsule neural networks and visualization for segregation of plastic and non-plastic wastes. In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; pp. 631–636.

- Li, Y.; Fu, K.; Sun, H.; Sun, X. An aircraft detection framework based on reinforcement learning and convolutional neural networks in remote sensing images. Remote Sens. 2018, 10, 243.

- Xu, T.B.; Cheng, G.L.; Yang, J.; Liu, C.L. Fast aircraft detection using end-to-end fully convolutional network. In Proceedings of the 2016 IEEE International Conference on Digital Signal Processing (DSP), Beijing, China, 16–18 October 2016; pp. 139–143.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Available online: https://en.wikipedia.org/wiki/Capsule_neural_network (accessed on 2 July 2023).

- Wu, J. Introduction to convolutional neural networks. Natl. Key Lab Nov. Softw. Technol. Nanjing Univ. China 2017, 5, 495.

- Kuo, C.C.J. Understanding convolutional neural networks with a mathematical model. J. Vis. Commun. Image Represent. 2016, 41, 406–413.

- Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN variants for computer vision: History, architecture, application, challenges and future scope. Electronics 2021, 10, 2470.

- Saha, S. A comprehensive guide to convolutional neural networks—The ELI5 way. Towards Data Sci. 2018, 15, 15.

- Shahroudnejad, A.; Afshar, P.; Plataniotis, K.N.; Mohammadi, A. Improved explainability of capsule networks: Relevance path by agreement. In Proceedings of the 2018 IEEE Global Conference on Signal and Information Processing (GLOBALSIP), Anaheim, CA, USA, 26–29 November 2018; pp. 549–553.

- Su, J.; Vargas, D.V.; Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Trans. Evol. Comput. 2019, 23, 828–841.

- Gu, J. Interpretable graph capsule networks for object recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, virtual, 2–9 February 2021; Volume 35, pp. 1469–1477.

- Gu, J.; Wu, B.; Tresp, V. Effective and efficient vote attack on capsule networks. arXiv 2021, arXiv:2102.10055.

- Hu, X.D.; Li, Z.H. Intrusion Detection Method Based on Capsule Network for Industrial Internet. Acta Electronica Sin. 2022, 50, 1457.

- Devi, K.; Muthusenthil, B. Intrusion detection framework for securing privacy attack in cloud computing environment using DCCGAN-RFOA. Trans. Emerg. Telecommun. Technol. 2022, 33, e4561.

- Marchisio, A.; Nanfa, G.; Khalid, F.; Hanif, M.A.; Martina, M.; Shafique, M. SeVuc: A study on the Security Vulnerabilities of Capsule Networks against adversarial attacks. Microprocess. Microsyst. 2023, 96, 104738.

- Wang, X.; Wang, Y.; Guo, S.; Kong, L.; Cui, G. Capsule Network with Multiscale Feature Fusion for Hidden Human Activity Classification. IEEE Trans. Instrum. Meas. 2023, 72, 2504712.

- Tokish, J.M.; Makovicka, J.L. The superior capsular reconstruction: Lessons learned and future directions. J. Am. Acad. Orthop. Surg. 2020, 28, 528–537.

- Xiang, C.; Zhang, L.; Tang, Y.; Zou, W.; Xu, C. MS-CapsNet: A novel multi-scale capsule network. IEEE Signal Process. Lett. 2018, 25, 1850–1854.

- Kang, J.S.; Kang, J.; Kim, J.J.; Jeon, K.W.; Chung, H.J.; Park, B.H. Neural Architecture Search Survey: A Computer Vision Perspective. Sensors 2023, 23, 1713.

- Marchisio, A.; Massa, A.; Mrazek, V.; Bussolino, B.; Martina, M.; Shafique, M. NASCaps: A framework for neural architecture search to optimize the accuracy and hardware efficiency of convolutional capsule networks. In Proceedings of the 39th International Conference on Computer-Aided Design, Virtual, 2–5 November 2020; pp. 1–9.

- Marchisio, A.; Mrazek, V.; Massa, A.; Bussolino, B.; Martina, M.; Shafique, M. RoHNAS: A Neural Architecture Search Framework with Conjoint Optimization for Adversarial Robustness and Hardware Efficiency of Convolutional and Capsule Networks. IEEE Access 2022, 10, 109043–109055.

- Haq, M.U.; Sethi, M.A.J.; Ullah, R.; Shazhad, A.; Hasan, L.; Karami, G.M. COMSATS Face: A Dataset of Face Images with Pose Variations, Its Design, and Aspects. Math. Probl. Eng. 2022, 2022, 4589057.

- Gordienko, N.; Kochura, Y.; Taran, V.; Peng, G.; Gordienko, Y.; Stirenko, S. Capsule deep neural network for recognition of historical Graffiti handwriting. arXiv 2018, arXiv:1809.06693.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading Digits in Natural Images with Unsupervised Feature Learning. 2011. Available online: https://www.researchgate.net/publication/266031774_Reading_Digits_in_Natural_Images_with_Unsupervised_Feature_Learning (accessed on 2 July 2023).

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: https://www.bibsonomy.org/bibtex/cc2d42f2b7ef6a4e76e47d1a50c8cd86 (accessed on 2 July 2023).

- Zhang, X. The AlexNet, LeNet-5 and VGG NET applied to CIFAR-10. In Proceedings of the 2021 2nd International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE), Zhuhai, China, 24–26 September 2021; pp. 414–419.

- Doon, R.; Rawat, T.K.; Gautam, S. Cifar-10 classification using deep convolutional neural network. In Proceedings of the 2018 IEEE Punecon, Pune, India, 30 November–2 December 2018; pp. 1–5.

- Jiang, X.; Wang, Y.; Liu, W.; Li, S.; Liu, J. Capsnet, cnn, fcn: Comparative performance evaluation for image classification. Int. J. Mach. Learn. Comput. 2019, 9, 840–848.

- Nair, P.; Doshi, R.; Keselj, S. Pushing the limits of capsule networks. arXiv 2021, arXiv:2103.08074.

- Gu, J.; Tresp, V.; Hu, H. Capsule network is not more robust than convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14309–14317.

- Amer, M.; Maul, T. Path capsule networks. Neural Process. Lett. 2020, 52, 545–559.

- Peer, D.; Stabinger, S.; Rodriguez-Sanchez, A. Training deep capsule networks. arXiv 2018, arXiv:1812.09707.

- Jaiswal, A.; AbdAlmageed, W.; Wu, Y.; Natarajan, P. Capsulegan: Generative adversarial capsule network. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Saqur, R.; Vivona, S. Capsgan: Using dynamic routing for generative adversarial networks. In Advances in Computer Vision: Proceedings of the 2019 Computer Vision Conference (CVC); Springer International Publishing: Berlin/Heidelberg, Germany, 2020; Volume 21, pp. 511–525.

- Pérez, E.; Ventura, S. Melanoma recognition by fusing convolutional blocks and dynamic routing between capsules. Cancers 2021, 13, 4974.

- Ramasinghe, S.; Athuraliya, C.D.; Khan, S.H. A context-aware capsule network for multi-label classification. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Zhang, L.; Edraki, M.; Qi, G.J. Cappronet: Deep feature learning via orthogonal projections onto capsule subspaces. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; Volume 31.

- Zhao, Z.; Kleinhans, A.; Sandhu, G.; Patel, I.; Unnikrishnan, K.P. Capsule networks with max-min normalization. arXiv 2019, arXiv:1903.09662.

- Phaye, S.; Samarth, R.; Sikka, A.; Dhall, A.; Bathula, D. Dense and diverse capsule networks: Making the capsules learn better. arXiv 2018, arXiv:1805.04001.

- Larsson, G.; Maire, M.; Shakhnarovich, G. Fractalnet: Ultra-deep neural networks without residuals. arXiv 2016, arXiv:1605.07648.

- Chen, Z.; Crandall, D. Generalized capsule networks with trainable routing procedure. arXiv 2018, arXiv:1808.08692.

- Nguyen, H.P.; Ribeiro, B. Advanced capsule networks via context awareness. In Artificial Neural Networks and Machine Learning—ICANN 2019: Theoretical Neural Computation, Proceedings of the 28th International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; Volume 28, Part I; pp. 166–177.

- Lenssen, J.E.; Fey, M.; Libuschewski, P. Group equivariant capsule networks. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; Volume 31.

- Wang, D.; Liu, Q. An Optimization View on Dynamic Routing between Capsules. 2018. Available online: https://openreview.net/forum?id=HJjtFYJDf (accessed on 2 July 2023).

- Kumar, A.D. Novel deep learning model for traffic sign detection using capsule networks. arXiv 2018, arXiv:1805.04424.

- Mandal, B.; Dubey, S.; Ghosh, S.; Sarkhel, R.; Das, N. Handwritten indic character recognition using capsule networks. In Proceedings of the 2018 IEEE Applied Signal Processing Conference (ASPCON), Kolkata, India, 7–9 December 2018; pp. 304–308.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Xia, C.; Zhang, C.; Yan, X.; Chang, Y.; Yu, P.S. Zero-shot user intent detection via capsule neural networks. arXiv 2018, arXiv:1809.00385.

- Kim, M.; Chi, S. Detection of centerline crossing in abnormal driving using CapsNet. J. Supercomput. 2019, 75, 189–196.

- Ma, X.; Dai, Z.; He, Z.; Ma, J.; Wang, Y.; Wang, Y. Learning traffic as images: A deep convolutional neural network for large-scale transportation network speed prediction. Sensors 2017, 17, 818.

- Fezza, S.A.; Bakhti, Y.; Hamidouche, W.; Déforges, O. Perceptual evaluation of adversarial attacks for CNN-based image classification. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–6.

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; De Souza, A.F. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816.

- Weng, Z.; Meng, F.; Liu, S.; Zhang, Y.; Zheng, Z.; Gong, C. Cattle face recognition based on a Two-Branch convolutional neural network. Comput. Electron. Agric. 2022, 196, 106871.

- Afshar, P.; Mohammadi, A.; Plataniotis, K.N. Brain tumor type classification via capsule networks. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3129–3133.

- Mukhometzianov, R.; Carrillo, J. CapsNet comparative performance evaluation for image classification. arXiv 2018, arXiv:1805.11195.

- Saqur, R.; Vivona, S. Capsgan: Using dynamic routing for generative adversarial networks. arXiv 2018, arXiv:1806.03968.

- Guarda, L.; Tapia, J.E.; Droguett, E.L.; Ramos, M. A novel Capsule Neural Network based model for drowsiness detection using electroencephalography signals. Expert Syst. Appl. 2022, 201, 116977.

- Chui, A.; Patnaik, A.; Ramesh, K.; Wang, L. Capsule Networks and Face Recognition. 2019. Available online: https://lindawangg.github.io/projects/capsnet.pdf (accessed on 2 July 2023).

- Teto, J.K.; Xie, Y. Automatically Identifying of animals in the wilderness: Comparative studies between CNN and C-Capsule Network. In Proceedings of the 2019 3rd International Conference on Compute and Data Analysis, Kahului, HI, USA, 6–8 March 2019; pp. 128–133.

- Mazzia, V.; Salvetti, F.; Chiaberge, M. Efficient-capsnet: Capsule network with self-attention routing. Sci. Rep. 2021, 11, 14634.

- Pan, C.; Velipasalar, S. PT-CapsNet: A novel prediction-tuning capsule network suitable for deeper architectures. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 11996–12005.

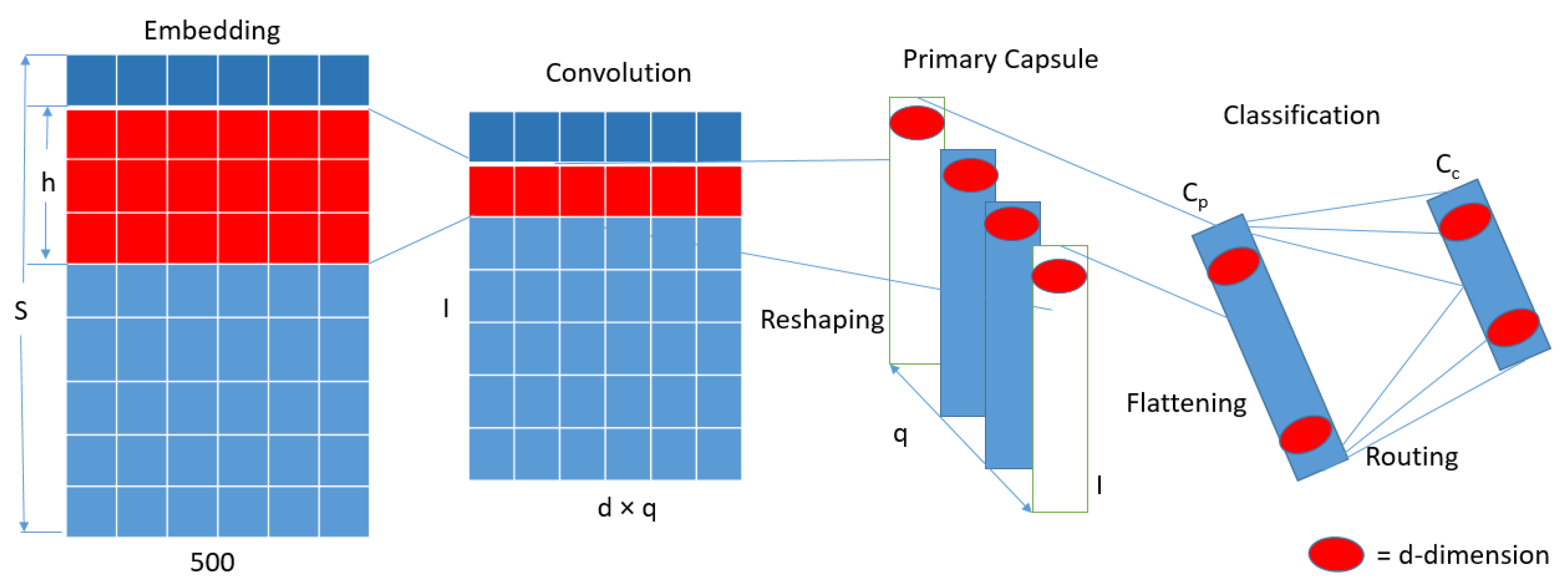

- Manoharan, J.S. Capsule Network Algorithm for Performance Optimization of Text Classification. J. Soft Comput. Paradig. 2021, 3, 1–9.

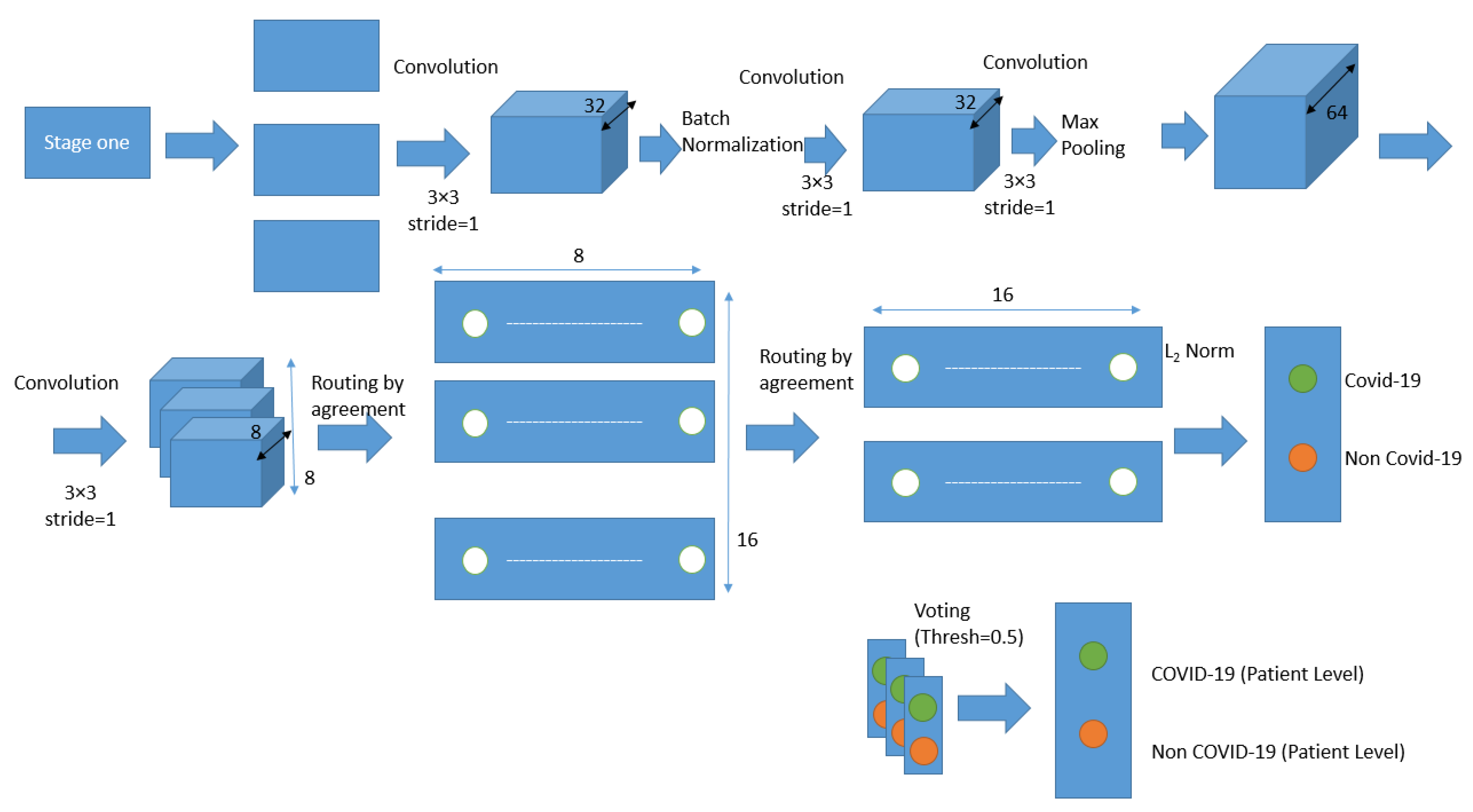

- Heidarian, S.; Afshar, P.; Enshaei, N.; Naderkhani, F.; Rafiee, M.J.; Fard, F.B.; Samimi, K.; Atashzar, S.F.; Oikonomou, A.; Plataniotis, K.N.; et al. Covid-fact: A fully-automated capsule network-based framework for identification of COVID-19 cases from chest ct scans. Front. Artif. Intell. 2021, 4, 8932.

- Kumar, A.; Sachdeva, N. Multimodal cyberbullying detection using capsule network with dynamic routing and deep convolutional neural network. Multimed. Syst. 2021, 28, 2043–2052.

- Tiwari, S.; Jain, A. Convolutional capsule network for COVID-19 detection using radiography images. Int. J. Imaging Syst. Technol. 2021, 31, 525–539.

- Afshar, P.; Naderkhani, F.; Oikonomou, A.; Rafiee, M.J.; Mohammadi, A.; Plataniotis, K.N. MIXCAPS: A capsule network-based mixture of experts for lung nodule malignancy prediction. Pattern Recognit. 2021, 116, 107942.

- Pöpperli, M.; Gulagundi, R.; Yogamani, S.; Milz, S. Capsule neural network-based height classification using low-cost automotive ultrasonic sensors. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 661–666.

- Yang, B.; Bao, W.; Wang, J. Active disease-related compound identification based on capsule network. Brief. Bioinform. 2022, 23, bbab462.

- Iqbal, T.; Ali, H.; Saad, M.M.; Khan, S.; Tanougast, C. Capsule-Net for Urdu Digits Recognition. In Proceedings of the 2019 10th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Metz, France, 18–21 September 2019; Volume 1, pp. 495–499.

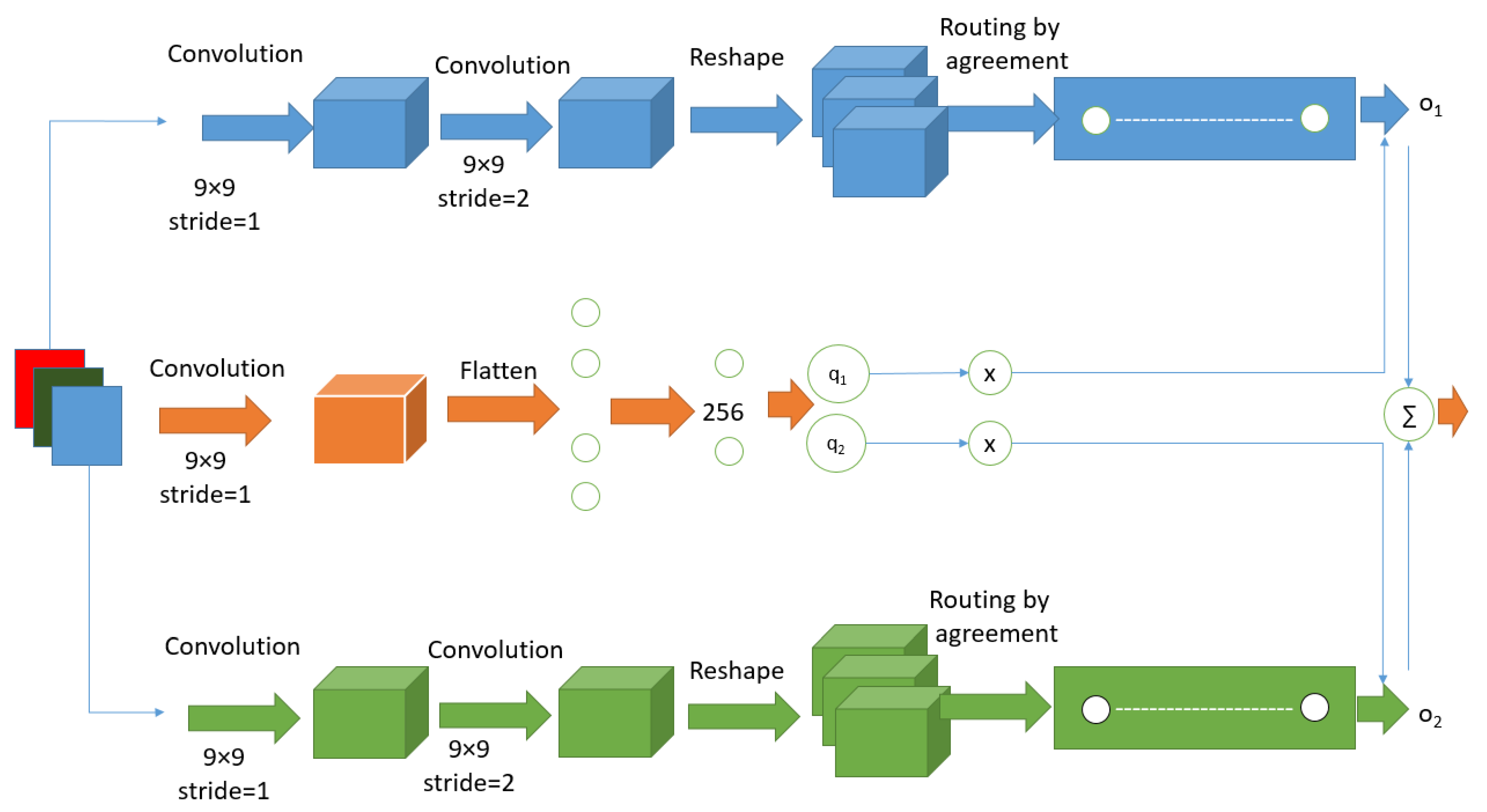

- Janakiramaiah, B.; Kalyani, G.; Karuna, A.; Prasad, L.V.; Krishna, M. Military object detection in defense using multi-level capsule networks. Soft Comput. 2021, 27, 1045–1059.

- Cheng, J.; Huang, W.; Cao, S.; Yang, R.; Yang, W.; Yun, Z.; Wang, Z.; Feng, Q. Enhanced performance of brain tumor classification via tumor region augmentation and partition. PLoS ONE 2015, 10, e0140381.

- Paul, J.S.; Plassard, A.J.; Landman, B.A.; Fabbri, D. Deep learning for brain tumor classification. In Medical Imaging 2017: Biomedical Applications in Molecular, Structural, and Functional Imaging; SPIE: Bellingham, WA, USA, 2017; Volume 10137, pp. 253–268.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Available online: https://www.kaggle.com/datasets/andrewmvd/convid19-x-rays (accessed on 2 July 2023).

- Armato, S.G., III; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Zhao, B.; Aberle, D.R.; Henschke, C.I.; Hoffman, E.A.; et al. The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Med. Phys. 2011, 38, 915–931.

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and operating a public information repository. J. Digit. Imaging 2013, 26, 1045–1057.

- Ali, H.; Ullah, A.; Iqbal, T.; Khattak, S. Pioneer dataset and automatic recognition of Urdu handwritten characters using a deep autoencoder and convolutional neural network. SN Appl. Sci. 2020, 2, 152.

- Chao, H.; Dong, L.; Liu, Y.; Lu, B. Emotion recognition from multiband EEG signals using CapsNet. Sensors 2019, 19, 2212.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. Deap: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- Rathnayaka, P.; Abeysinghe, S.; Samarajeewa, C.; Manchanayake, I.; Walpola, M. Sentylic at IEST 2018: Gated recurrent neural network and capsule network-based approach for implicit emotion detection. arXiv 2018, arXiv:1809.01452.

- Afshar, P.; Mohammadi, A.; Plataniotis, K. Brain tumor type classification via capsule networks. arXiv 2018, arXiv:1802.10200.

- Cheng, J.; Yang, W.; Huang, M.; Huang, W.; Jiang, J.; Zhou, Y.; Yang, R.; Zhao, J.; Feng, Y.; Feng, Q.; et al. Retrieval of brain tumors by adaptive spatial pooling and fisher vector representation. PLoS ONE 2016, 11, e0157112.

- Mobiny, A.; Nguyen, H.V. Fast capsnet for lung cancer screening. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Online, 26 September 2018; Springer: Cham, Switzerland, 2018; pp. 741–749.

- Jacob, I.J. Capsule network based biometric recognition system. J. Artif. Intell. 2019, 1, 83–94.

- Gunasekaran, K.; Raja, J.; Pitchai, R. Deep multimodal biometric recognition using contourlet derivative weighted rank fusion with human face, fingerprint and iris images. Autom. Časopis Autom. Mjer. Elektron. Računarstvo Komun. 2019, 60, 253–265.

- Zhao, T.; Liu, Y.; Huo, G.; Zhu, X. A deep learning iris recognition method based on capsule network architecture. IEEE Access 2019, 7, 49691–49701.

- Singh, M.; Nagpal, S.; Singh, R.; Vatsa, M. Dual directed capsule network for very low-resolution image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 340–349.

- Sim, T.; Baker, S.; Bsat, M. The CMU pose, illumination, and expression (PIE) database. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21 May 2002; pp. 53–58.

- Jain, R. Improving performance and inference on audio classification tasks using capsule networks. arXiv 2019, arXiv:1902.05069.

- Klinger, R.; De Clercq, O.; Mohammad, S.M.; Balahur, A. IEST: WASSA-2018 implicit emotions shared task. arXiv 2018, arXiv:1809.01083.

- Wang, W.; Yang, X.; Li, X.; Tang, J. Convolutional-capsule network for gastrointestinal endoscopy image classification. Int. J. Intell. Syst. 2022, 22, 815.

- Pogorelov, K.; Randel, K.R.; Griwodz, C.; Eskeland, S.L.; de Lange, T.; Johansen, D.; Spampinato, C.; Dang-Nguyen, D.T.; Lux, M.; Schmidt, P.T.; et al. Kvasir: A multi-class image dataset for computer aided gastrointestinal disease detection. In Proceedings of the 8th ACM on Multimedia Systems Conference, Taipei, Taiwan, 20–23 June 2017; pp. 164–169.

- Borgli, H.; Thambawita, V.; Smedsrud, P.H.; Hicks, S.; Jha, D.; Eskeland, S.L.; Randel, K.R.; Pogorelov, K.; Lux, M.; Nguyen, D.T.D.; et al. HyperKvasir, a comprehensive multi-class image and video dataset for gastrointestinal endoscopy. Sci. Data 2020, 7, 283.

- Khan, S.; Kamal, A.; Fazil, M.; Alshara, M.A.; Sejwal, V.K.; Alotaibi, R.M.; Baig, A.R.; Alqahtani, S. HCovBi-caps: Hate speech detection using convolutional and Bi-directional gated recurrent unit with Capsule network. IEEE Access 2022, 10, 7881–7894.

- Hinton, G.E.; Sabour, S.; Frosst, N. Matrix capsules with EM routing. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Viriyasaranon, T.; Choi, J.H. Object detectors involving a NAS-gate convolutional module and capsule attention module. Sci. Rep. 2022, 12, 3916.

- Sabour, S.; Frosst, N.; Hinton, G. Matrix capsules with EM routing. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 15 February 2018; Volume 115.

- Gomez, C.; Gilabert, F.; Gomez, M.E.; López, P.; Duato, J. Deterministic versus adaptive routing in fat-trees. In Proceedings of the 2007 IEEE International Parallel and Distributed Processing Symposium, Long Beach, CA, USA, 26–30 March 2007; pp. 1–8.

- Dou, Z.Y.; Tu, Z.; Wang, X.; Wang, L.; Shi, S.; Zhang, T. Dynamic layer aggregation for neural machine translation with routing-by-agreement. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 17 July 2019; Volume 33, pp. 86–93.

- Hinton, G. How to represent part-whole hierarchies in a neural network. Neural Comput. 2022, 7, 1–40.

- Wu, H.; Mao, J.; Sun, W.; Zheng, B.; Zhang, H.; Chen, Z.; Wang, W. Probabilistic robust route recovery with spatio-temporal dynamics. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1915–1924.

- Osama, M.; Wang, X.-Z. Localized routing in capsule networks. arXiv 2020, arXiv:2012.03012.

- Choi, J.; Seo, H.; Im, S.; Kang, M. Attention routing between capsules. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Huang, L.; Wang, J.; Cai, D. Graph capsule network for object recognition. IEEE Trans. Image Process. 2021, 30, 1948–1961.

- Dombetzki, L.A. An Overview over Capsule Networks. Network Architectures and Services. 2018. Available online: https://www.net.in.tum.de/fileadmin/TUM/NET/NET-2018-11-1/NET-2018-11-1_12.pdf (accessed on 2 July 2023).

- Tajik, M.N.; Rehman, A.U.; Khan, W.; Khan, B. Texture feature selection using GA for classification of human brain MRI scans. In Proceedings of the Advances in Swarm Intelligence: 7th International Conference, ICSI 2016, Bali, Indonesia, 25–30 June 2016; Proceedings, Part II 7. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 233–244.

- Ullah, H.; Haq, M.U.; Khattak, S.; Khan, G.Z.; Mahmood, Z. A robust face recognition method for occluded and low-resolution images. In Proceedings of the 2019 International Conference on Applied and Engineering Mathematics (ICAEM), Taxila, Pakistan, 27–29 August 2019; pp. 86–91.

- Munawar, F.; Khan, U.; Shahzad, A.; Haq, M.U.; Mahmood, Z.; Khattak, S.; Khan, G.Z. An empirical study of image resolution and pose on automatic face recognition. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 558–563.

| CapsNet Modification | Description | Impact on Robustness to Affine Transformations |

|---|---|---|

| Dynamic routing with RBA | Routing-by-agreement (RBA) variation of dynamic routing. | Enhances capsule consensus and adaptability to variations in position, rotation, and scale. |

| Aff-CapsNets | Affine CapsNets. | Significantly increases affine resilience with fewer parameters. |

| Transformation-aware capsules | Capsules explicitly designed to handle affine transformations. | Learns to detect and apply appropriate transformations to input features, improving invariance to affine transformations. |

| Capsule-capsule transformation (CCT) | Adaptive transformation between capsules. | Allows the network to handle varying degrees of affine transformations effectively. |

| Margin loss regularization | Adds margin loss terms during training. | Incentivizes larger margins between capsules, increasing resistance to affine transformations. |

| Capsule routing with EM routing | Utilizes an EM-like algorithm for capsule routing. | Improves capsule agreement process and feature learning for better robustness to affine transformations. |

| Self-routing | A supervised, non-iterative routing method. | Each capsule’s secondary routing network routes it in a separate manner. As a result, the agreement between capsules is no longer necessary, but upper-level capsule activations and postures are still obtained in a manner similar to mixture of experts (MoE). |

| Adversarial capsule networks | Combines CapsNet with adversarial training techniques. | Helps the network learn robust features by training against adversarial affine transformations. |

| Capsule dropouts | Applies dropouts to capsules during training. | Enhances generalization and robustness by reducing capsule co-adaptations. |

| Capsule reconstruction | Augments CapsNet with a reconstruction loss term. | Encourages the network to preserve spatial information, improving robustness to affine transformations. |

| Capsule attention mechanism | Incorporates attention mechanisms into capsules. | Improves focus on informative features, aiding robustness against affine transformations. |
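
The margin loss named in the table above can be made concrete with a short sketch. It assumes the formulation used with dynamic routing [18], $L_k = T_k \max(0, m^+ - \lVert v_k \rVert)^2 + \lambda (1 - T_k) \max(0, \lVert v_k \rVert - m^-)^2$, with the commonly used defaults $m^+ = 0.9$, $m^- = 0.1$, and $\lambda = 0.5$. The function names `capsule_lengths` and `margin_loss` are illustrative and do not come from any of the surveyed implementations.

```python
import math


def capsule_lengths(capsules):
    """Euclidean length of each class capsule's output vector."""
    return [math.sqrt(sum(x * x for x in v)) for v in capsules]


def margin_loss(capsules, target, m_pos=0.9, m_neg=0.1, lam=0.5):
    """Margin loss for one sample (assumed standard formulation).

    capsules: list of class-capsule output vectors, one per class.
    target:   index of the true class.
    """
    total = 0.0
    for k, length in enumerate(capsule_lengths(capsules)):
        if k == target:
            # Present class: penalize a capsule shorter than m_pos.
            total += max(0.0, m_pos - length) ** 2
        else:
            # Absent class: penalize a capsule longer than m_neg,
            # down-weighted by lam.
            total += lam * max(0.0, length - m_neg) ** 2
    return total


# A long capsule for the true class and a zero-length capsule elsewhere
# incurs no loss; swapping the target makes both terms contribute.
confident = [[1.0, 0.0], [0.0, 0.0]]
loss_correct = margin_loss(confident, target=0)
loss_wrong = margin_loss(confident, target=1)
```

Because the loss depends only on capsule lengths, it leaves the orientation of each capsule vector free to encode pose, which is one reason it is usually paired with a reconstruction term.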

| Ref. No. | Application/Problem Definition | Architecture and Parameters | Dataset | Compared with | Accuracy (%) | Recommendation/Limitation |

|---|---|---|---|---|---|---|

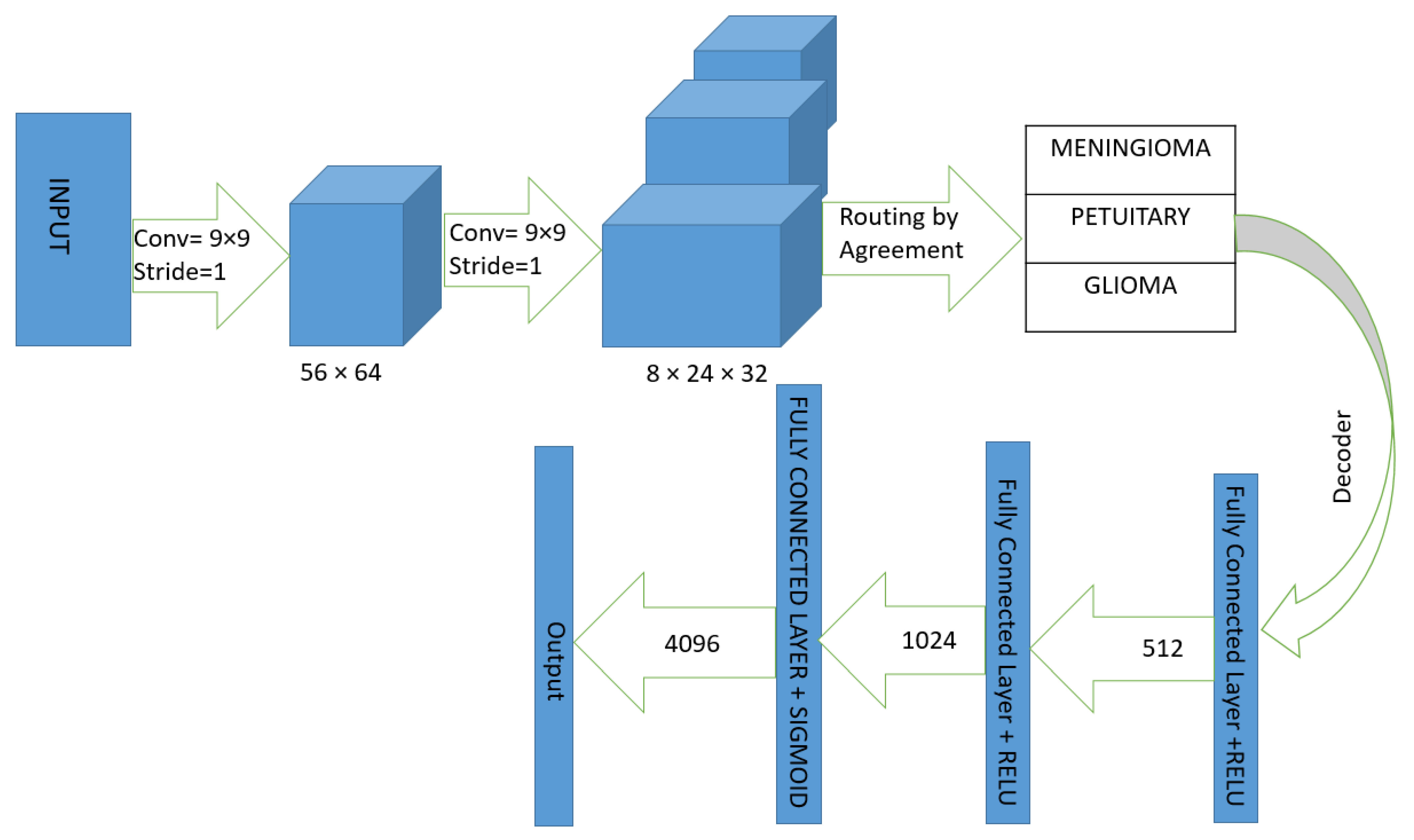

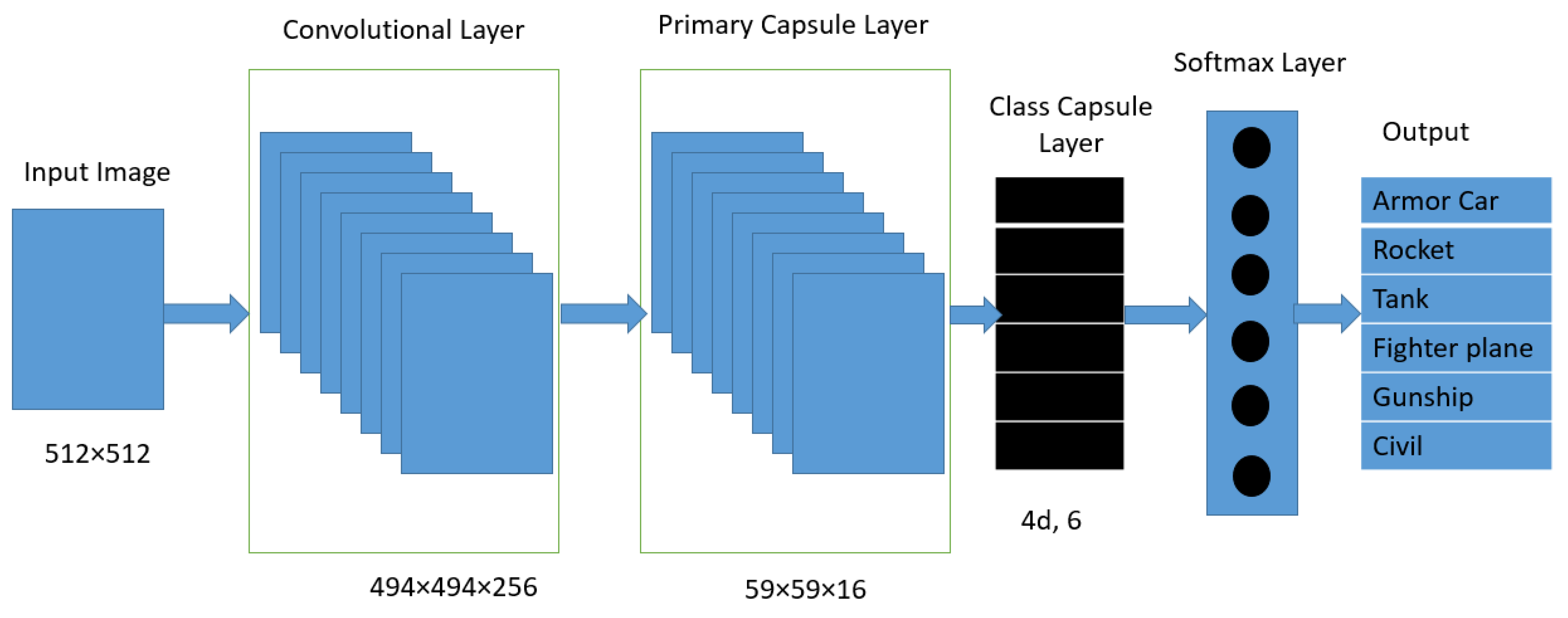

| [69] | Brain tumor type classification. | Primary layer: 64 × 9 × 9 convolution filters and stride of 1. Primary capsule layer: 256 × 9 × 9 convolutions with strides of 2. Decoder: Fully connected layers with 512 × 1024 × 4096 neurons. | Dataset used in [86]. | CNN presented by [87]. | 86.56. | Accuracy can be increased by varying the number of feature maps. |

| [70] | Comparative analysis of CapsNet with Fisherfaces, LeNet, and ResNet. | 256 feature maps, using a 9 × 9 kernel and valid padding. | Yale Face database B, MIT CBCL Face dataset, Belgium TS Traffic Sign dataset, and CIFAR-100. | Fisherfaces, LeNet, and ResNet. | 95.3% on Yale dataset, 99.87% on MIT CBCL, 92% on Belgium TS Traffic Sign dataset, and 18% on CIFAR-100. | With more training iterations, CapsNet may achieve better results. |

| [71] | 3D image generation to find improved discriminators for generative adversarial network (GAN). | Dynamic routing. Convolution 1 layer: 32 × 32 input, with 9 × 9 kernel of stride 1. Primary capsule layer: 32 channels, each 8 × 8 × 8, 8D. Output capsule layer: 16 × 1. | MNIST and Small NORB. | DCGAN [88]. | - | MNIST, as used in this paper, consists of simple images, and additional experiments on more complex datasets are needed. CapsGAN has the ability to capture geometric transformations. |

| [65] | To classify ultrasonic data for self-driving cars. | Dynamic routing by agreement. Convolution: 256 kernels of 6 × 6 size, Activation function: ReLU. Primary Capsule: 32 channels of 6 × 6 × 8. Digit Caps: 16 × 4. | A dataset of 21,600 measurements. | Complex CNN. | 99.6% using complex CapsNet as compared to CNN (98.9). | A lot of research is needed to make ultrasonic technology appropriate for self-driving vehicles. |

| [73] | Face recognition. | A 3-layer capsule network having two convolutional and one fully connected layer. Primary capsules: 32 channels of convolutional 8-dimensional capsules. Final layer: 16 dimensional capsules per class. | LFW dataset. | Deep CNN. | 93.7. | Due to their unique equivariance properties, CapsNets can perform better than CNN on unseen transformed data. Experimenting with larger training set size and fewer epochs could avoid overfitting and improve accuracy. |

| [74] | To identify the animals in the wilderness. | C-CapsNet. | Serengeti dataset. | CNN. | 96.48. | - |

| [75] | Capsule network with self-attention routing. | Non-iterative, parallelizable routing algorithm instead of dynamic routing. | MNIST, smallNORB, and MultiMNIST. | CNN. | - | Achieved higher accuracy with a considerably lower number of parameters. |

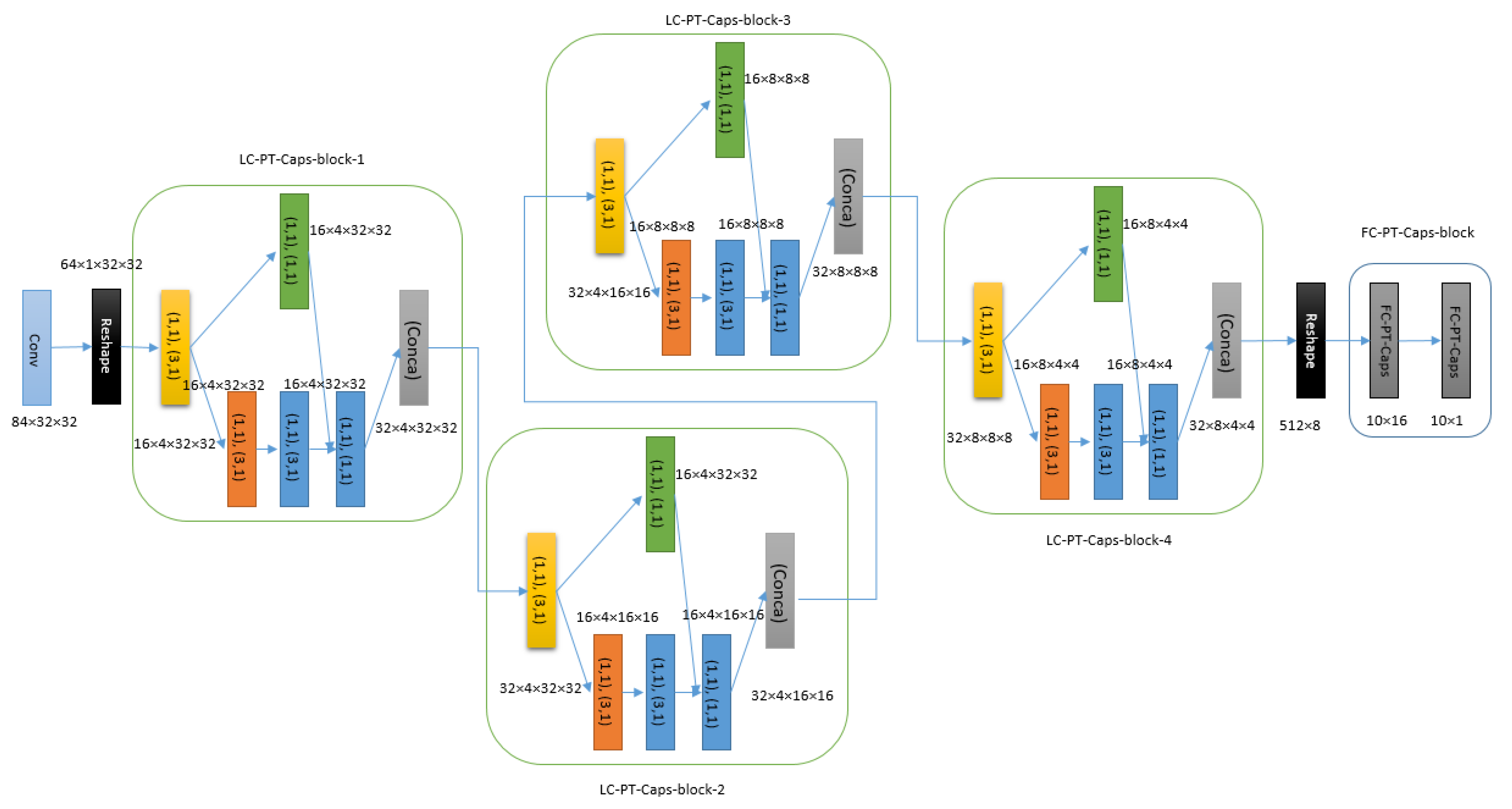

| [76] | PT-CapsNet for semantic segmentation, classification, and detection tasks. | Prediction-tuning capsule network (PT-CapsNet) with connected PT capsules and locally connected PT capsules. | CIFAR-10, CIFAR-100, Fashion-MNIST, DenseNet-100, ResNet-110. | DenseNet, ResNet, and CNN. | - | PT-CapsNet can outperform CNN-based models on challenging datasets and larger images while using fewer network parameters. |

| [77] | Hierarchical multi-label text classification. | The features encoded in capsules and routing algorithm are combined. | BGC and WOS datasets. | SVM, LSTM, ANN, and CNN architectures. | - | Proposed algorithm performs efficiently. Future work should involve cascading capsule layers. |

| [78] | COVID-19 patient detection through chest CT scan images. | U-net-based segmentation model, capsule network. | COVID-CT-MD. | - | 90.82. | Proposed algorithm has been tested using a simple dataset of 171 images of COVID-19 positive patients, 60 patients with pneumonia, and 76 normal patients. |

| [79] | Cyberbullying detection. | CapsNet with dynamic routing and CNN. | 10,000 comments taken from YouTube, Twitter, and Instagram. | KNN, SVM, and NB. | 97.05. | High-dimensional, skewed, cross-lingual, and heterogeneous data are the limitations of proposed approach. Future work can be directed toward detecting and recognizing wordplay, creative spellings, and slang words. |

| [80] | To detect COVID-19 disease by means of radiography images. | VGG-CapsNet. | 2905 radiography images in three classes (219, 1345, and 1341 images), including COVID-19 patients, available at [89]. | CNN-CapsNet. | 97% for classifying COVID-19 versus non-COVID samples, and 92% when pneumonia is classified as well. | Proposed approach can be used for clinical practices. |

| [81] | Lung nodule malignancy prediction. | MIXCAPS. | LIDC dataset [90] and IDRI dataset [91]. | - | 92.88%, with sensitivity of 93.2% and specificity of 92.3%. | The proposed approach is independent of pre-defined hand-shaped features and does not require fine annotation. |

| [82] | Drowsiness detection. | Generic CapsNet. | EEG signals. | CNN. | 86.44. | To improve drowsiness detection, a proper dataset will be required in the future. |

| [83] | To identify pneumonia-related compounds. | Five-layer capsule network. | 88 positive samples and 264 negative samples. | SVM, gcForest, RF, and forgeNet. | An improvement of 1.7–12.9% in terms of AU. | - |

| [84] | Urdu digit recognition. | Primary capsule layer: 8 convolutional units with two strides, 32 channels, and 9 × 9 kernel. Batch size = 100. Epochs = 50. | Own collected dataset of handwritten characters and digits of 900 people with 6086 training images and 1301 images for testing. | Autoencoder [92] and CNN. | 97.3. | The model relies on the assumption that each image location contains at most one instance of an entity type, so that a capsule can represent it accurately. Satisfying this assumption is challenging. |

| [85] | Military vehicle recognition. | Multi-level CapsNet with class capsule layer. | 3500 images collected from the Internet. | CNN. | 96.54%. | - |

| [93] | Emotion recognition. | Features: Feature matrix Convolution 1 layer: 16 × 16 × 256 channels. Primary capsule layer: 7 × 7 × 256D vector. Class capsule layer: 2 × 49 × 32D. Dynamic routing. | DEAP dataset [94]. | Compared with five versions of capsule networks, SVM, Bayes classifier and Gaussian naive Bayes. | Arousal = 0.6828 valence = 0.6673 dominance = 0.6725. | To verify its comprehensiveness, the proposed method will be tested on more datasets of emotion recognition. |

| [95] | Emotion of tweets prediction. | Embedding layer, Bi-GRU layer with capsule network. Flattening with SoftMax layer. Dynamic routing by agreement. | WASSA 2018. | GRU with hierarchical attention, GRU with CNN, and GRU with CapsNet. | F1 score = 0.692. | - |

| [96] | Brain tumor classification. | Same as the traditional CapsNet, but with 64 feature maps in the convolutional layer instead of 256. | Dataset used in [97]. | CNN. | - | - |

| [98] | Lung cancer screening. | Encoder: 32 × 32 image to 24 × 24 with 256 channels. Primary capsule: 8 × 8 NoduleCapsule: 64 × 256 × 16 each. Decoder: 8 × 8 × 16. | 226 unique CT scan images. | CNN. | Three times faster with the same accuracy. | In the future, unsupervised learning will be explored with CNN. |

| [99] | Biometric recognition system (face and iris). | Fuzzified image filter is used as a preprocessing step to remove background noise. Before the capsule network, Gabor wavelet transform is used to detect and extract the vascular pattern of eye retinal images. Max pooling enabled with dynamic routing. | Face 95 [100] and CASIA-Iris-Thousand [101]. | CNN. | 99% accuracy with an error rate of 0.3% to 0.5%. | The proposed method could be implemented in the public sector for biometric recognition. |

| [102] | Low-resolution image recognition. | DirectCapsNet with targeted reconstruction loss and HR-anchor loss. | CMU multi-PIE dataset [103], SVHN dataset, and UCCS dataset. | Robust partially coupled nets, LMSoftmax, L2Softmax, and Centerloss for VLR. | 95.81%. | In the future, VLR FR can be performed in the presence of aging, adversarial attacks, and spectral variations. |

| [104] | Audio classification. | Agreement-based dynamic routing, flattening capsule network, Bi-GRU layer, SoftMax function, and embedding layer. | WASSA implicit emotion shared task [105]. | GRU + CNN. GRU + hierarchical attention. | 50%. | - |

| [106] | Image classification of gastrointestinal endoscopy. | Combination of CapsNet and midlevel CNN features (L-DenseNetCaps). | HyperKvasir dataset [107] and Kvasir v2 dataset [108]. | VGG16, DenseNet121, DenseNetCaps, and L-VGG16Caps. | 94.83. | This model can also be applied to the diagnosis of skin cancer, etc. |

| [109] | Hate speech detection. | HCovBi-Caps (convolutional, BiGRU, and capsule network). | DS1 dataset and DS2. | DNN, BiGRU, GRU, CNN, LSTM, etc. | Training accuracy = 0.93, and validation accuracy = 0.90. | The proposed model detects hate propagation in speech only. |

| [110] | Object detection. | NASGC-CapANet. | MS COCO Val 2017 dataset. | Faster R-CNN. | 43.8% box mAP. | When compared to the present attention mechanism, performance is improved by incorporating the capsule attention module into the highest level of FPN. |

| Ref | Routing Algorithms | Description | Advantages | Applications | Limitation |

|---|---|---|---|---|---|

| [18] | Dynamic routing | A routing algorithm where the weighting of each capsule’s output depends on how well its prediction matches the output of the layer above. The network can learn to send information from lower-level capsules to higher-level capsules thanks to this weighting. | Better performance in complex tasks than static routing, ability to handle input transformations, translation equivariance, and viewpoint invariance. | Object recognition, speech recognition, natural language processing. | Computational complexity, sensitivity to initialization, and potential for overfitting. |

| [111] | EM routing | A routing technique that iteratively updates the coupling coefficients between capsules using the expectation-maximization (EM) algorithm. At each iteration, the coupling coefficients are updated by maximizing a lower bound on the log-likelihood of the data. | Better performance than dynamic routing, more stable convergence, and improved generalization. | Object recognition, speech recognition, natural language processing. | Computationally expensive, high memory requirements, and potential for getting stuck in local optima. |

| [112] | Sparse routing | A routing algorithm that selects a small subset of capsules to route information to the next layer based on a sparsity constraint. The selected capsules are those with the highest activations, and the remaining capsules are discarded. | Reduced computational complexity, improved scalability, and increased robustness to adversarial attacks. | Object recognition, speech recognition, natural language processing. | Information loss due to discarding capsules, reduced expressiveness, and potential for overfitting. |

| [113] | Deterministic routing | A routing algorithm where each capsule in the lower layer is deterministically assigned to a specific capsule in the layer above based on the maximum activation. | Simple and computationally efficient, easy to implement. | Object recognition, speech recognition, natural language processing. | Lack of robustness to input transformations and viewpoint changes. |

| [114] | Routing by agreement or competition (RAC) | A routing algorithm where each capsule sends its output to multiple capsules in the layer above, and the coupling coefficients are computed based on either the agreement or the competition between the outputs. | Increased flexibility and performance compared to other routing algorithms. | Object recognition, speech recognition, natural language processing. | Requires additional parameters to compute the agreement or competition, computationally expensive. |

| [115] | K-means routing | A routing algorithm where each capsule in the lower layer is assigned to the closest cluster center in the layer above, which is learned using K-means clustering. | Simple and computationally efficient, captures higher-level concepts in a more structured way. | Speech recognition, natural language processing, and object recognition. | Requires setting the number of cluster centers in advance, sensitive to the initialization of the cluster centers. |

| [116] | Routing with temporal dynamics | A routing algorithm that uses recurrent neural networks to capture the temporal dynamics of the capsule activations over time. | Ability to handle sequential data, improved performance in video recognition tasks. | Video recognition, action recognition, speech recognition. | Higher computational complexity, potential for overfitting, requires careful initialization. |

| [117] | Localized routing | A routing algorithm that uses a localized attention mechanism to route information from lower-level capsules to nearby higher-level capsules. | Better performance than global routing, more robust to input transformations and viewpoint changes. | Object recognition, speech recognition, natural language processing. | Higher computational complexity, requires additional parameters to compute the attention. |

| [118] | Attention-based routing | A routing algorithm that uses an attention mechanism to weigh the contributions of each capsule in the lower layer to each capsule in the layer above. | Improved performance compared to other routing algorithms, can capture complex relationships between capsules. | Object recognition, speech recognition, natural language processing. | Higher computational complexity, requires additional parameters to compute the attention. |

| [119] | Graph-based routing | A routing algorithm that models the relationships between capsules in the lower layer and the layer above as a graph and performs message passing between the nodes in the graph to compute the coupling coefficients. | Better performance in capturing hierarchical relationships between objects, more robust to input transformations and viewpoint changes. | Object recognition, natural language processing. | Higher computational complexity, requires additional parameters to model the graph structure. |

| [120] | Dynamic routing with routing signals | A routing algorithm that uses a dynamic routing approach combined with routing signals to adjust the coupling coefficients during the inference stage. | Improved performance compared to other routing algorithms, can capture more complex relationships between capsules. | Object recognition, speech recognition, natural language processing. | Higher computational complexity, requires additional parameters to compute the routing signals. |
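
The dynamic routing row above describes coupling weights that grow when a lower-level capsule's prediction matches the output of the layer above. The following is a minimal pure-Python sketch of that loop, assuming the usual squash nonlinearity and a softmax over routing logits as in [18]. The names `squash`, `softmax`, and `dynamic_routing`, and the toy prediction vectors `u_hat`, are illustrative assumptions rather than code from the cited work.

```python
import math


def squash(s):
    """Shrink vector s so its length lies in [0, 1) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(b - peak) for b in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, iterations=3):
    """Route predictions u_hat[i][j] (lower capsule i -> upper capsule j).

    Returns the upper-capsule outputs v and the coupling coefficients c
    that were used to compute them in the final iteration.
    """
    n_lower, n_upper, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_upper for _ in range(n_lower)]  # routing logits
    for _ in range(iterations):
        # Each lower capsule distributes its output over the upper capsules.
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_upper):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Agreement step: raise the logit when prediction and output align.
        for i in range(n_lower):
            for j in range(n_upper):
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v, c


# Toy input: all three lower capsules agree on upper capsule 0,
# but make conflicting predictions for upper capsule 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
v, c = dynamic_routing(u_hat)
```

In this toy case the routing shifts coupling toward upper capsule 0, whose output vector ends up longer than that of capsule 1. The nested loops also show where the computational cost listed in the table comes from: every iteration touches every lower-upper capsule pair.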

| Routing Algorithm | Advantages | Limitations | Best Aspect | Worst Aspect |

|---|---|---|---|---|

| Dynamic routing | Allows dynamic learning of activations | Computationally expensive with long sequences | General performance | Computational complexity |

| EM routing | Improved convergence and stability | Can still be computationally expensive | Convergence stability | Potential local optima |

| MaxMin routing | Reduces uniform probabilities | Parameter tuning for optimal balance | Probability distribution | Parameter tuning |

| Routing by agreement | Simplifies routing process | Suboptimal results without correct iteration choice | Simplicity | Suboptimal iteration choice |

| Orthogonal routing | Improved stability and generalization | Additional regularization for orthogonality | Stability and invariance | Computational overhead |

| Group equivariant capsules | Equivariance and invariance guarantees | Complex implementation and group choice | Equivariance and invariance | Complex implementation |

| Sparse routing | Efficient utilization of computation | Complex selection process for active capsules | Computation efficiency | Selection complexity |

| Deterministic routing | Deterministic routing for stable output | Restricted to deterministic assignment of capsules | Deterministic outputs | Limited capsule interaction |

| K-means routing | Efficient use of clustering | May not handle complex data distributions | Efficient clustering | Limited data distributions |

| Routing with temporal dynamics | Considers temporal information | Increased complexity in modeling temporal relationships | Temporal information | Increased model complexity |

| Graph-based routing | Captures spatial relationships | Computational overhead in graph-based operations | Spatial relationships | Computational overhead |

| Attention-based routing | Focuses on informative capsules | May require careful tuning and be sensitive to hyperparameters | Attention mechanisms | Hyperparameter sensitivity |
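
As an illustration of the selection step that the sparse routing row refers to, the sketch below keeps only the most active capsules and discards the rest before routing. It assumes that a capsule's activation is the length of its output vector, as in dynamic-routing CapsNets, and that a fixed budget `k` of capsules is kept. The function name `select_active_capsules` is hypothetical, and this is not the selection rule of any specific cited method.

```python
import math


def select_active_capsules(capsules, k):
    """Return (index, vector) pairs for the k capsules with the largest length.

    Assumption: activation is measured by the Euclidean length of the
    capsule's output vector. Original ordering of the kept capsules is preserved.
    """
    lengths = [math.sqrt(sum(x * x for x in v)) for v in capsules]
    ranked = sorted(range(len(capsules)), key=lambda i: lengths[i], reverse=True)
    keep = sorted(ranked[:k])
    return [(i, capsules[i]) for i in keep]


# Four lower-level capsules; only the two longest are passed on.
capsules = [[0.1, 0.0], [0.6, 0.8], [0.3, 0.4], [0.0, 0.05]]
active = select_active_capsules(capsules, k=2)
kept_indices = [i for i, _ in active]
```

Routing cost then scales with `k` rather than with the full number of lower-level capsules, at the price of the information loss noted for sparse routing.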

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Haq, M.U.; Sethi, M.A.J.; Rehman, A.U. Capsule Network with Its Limitation, Modification, and Applications—A Survey. Mach. Learn. Knowl. Extr. 2023, 5, 891-921. https://doi.org/10.3390/make5030047
