Using Machine Learning with Eye-Tracking Data to Predict if a Recruiter Will Approve a Resume

Abstract

1. Introduction

2. Prior Work

2.1. Evaluating Resumes

2.1.1. Academic Qualifications

2.1.2. Work Experience

2.1.3. Extracurriculars

2.2. Eye-Tracking

2.3. Machine Learning

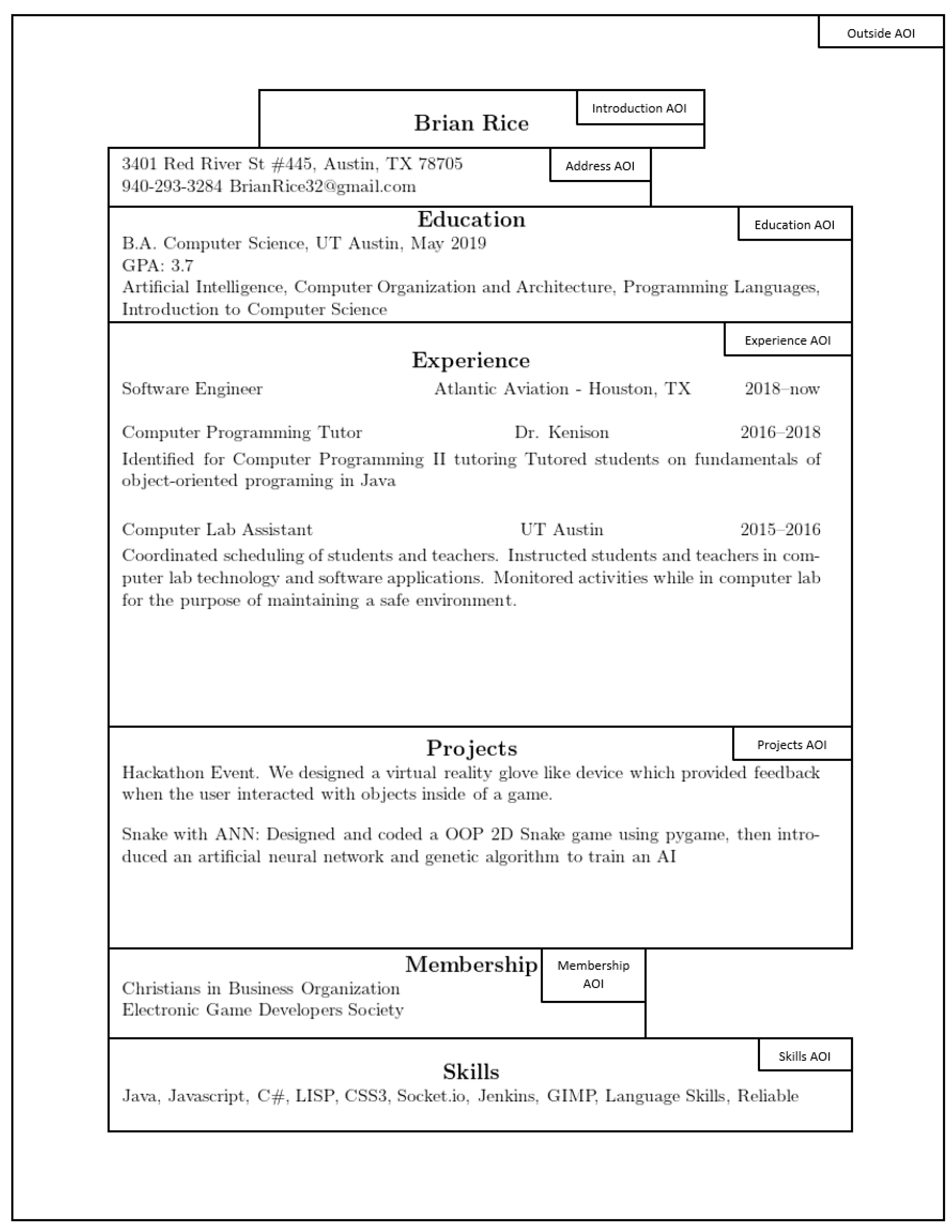

3. Research Methods

3.1. Study Recruitment

3.2. Experiment Process

3.3. Data Labeling

3.4. Machine Learning

4. Results

5. Discussion

6. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Feature Name | Definition |
|---|---|
| Gaze Points: X | Gaze samples recorded by the eye tracker (in screen coordinates) within AOI X |
| Number of Fixations: X | Number of fixations on AOI X, where a fixation is a period of 100–300 ms during which consecutive gaze points stay close together |
| Number of Dwells: X | Number of dwells on AOI X, where a dwell is a run of consecutive fixations on one AOI that ends when a fixation lands on a different AOI |
| Dwell Duration: X | Total time spent in dwells on AOI X |
| Dwell Rate: X | Number of dwells on AOI X divided by the time spent looking at AOI X |
| Dwell Duration Average: X | Average duration of a dwell on AOI X |
| X From Y | Number of transitions from AOI Y to AOI X |
| Fractal Dimension | Complexity of the eye-movement pattern over a resume |
| Stimulus Duration | Total time spent looking at the resume |

X and Y each denote an area of interest (AOI) on the resume; AOI names used in the feature-importance tables include Education, Experience, and Outside.
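
The dwell and transition definitions can be made concrete with a short sketch. It assumes each fixation has already been assigned an AOI label and a start and end time in milliseconds (the `Fixation` tuple and both functions are hypothetical, not the authors' code). It treats a dwell's duration as the span from its first fixation's onset to its last fixation's offset, and it counts transitions between consecutive fixations rather than between dwells, so that a self-transition such as Outside From Outside can be nonzero.

```python
from collections import Counter, defaultdict
from typing import NamedTuple


class Fixation(NamedTuple):
    aoi: str         # hypothetical AOI label, e.g. "Education", "Experience", "Outside"
    start_ms: float  # fixation onset in milliseconds
    end_ms: float    # fixation offset in milliseconds


def dwell_features(fixations):
    """Per-AOI fixation and dwell features from time-ordered fixations."""
    # Merge runs of consecutive fixations on the same AOI into dwells.
    dwells = []  # entries are (aoi, start_ms, end_ms)
    for fix in fixations:
        if dwells and dwells[-1][0] == fix.aoi:
            aoi, start, _ = dwells[-1]
            dwells[-1] = (aoi, start, fix.end_ms)  # extend the current dwell
        else:
            dwells.append((fix.aoi, fix.start_ms, fix.end_ms))  # start a new dwell

    stats = defaultdict(lambda: {"fixations": 0, "dwells": 0, "dwell_ms": 0.0})
    for fix in fixations:
        stats[fix.aoi]["fixations"] += 1
    for aoi, start, end in dwells:
        stats[aoi]["dwells"] += 1
        stats[aoi]["dwell_ms"] += end - start

    for s in stats.values():
        s["dwell_avg_ms"] = s["dwell_ms"] / s["dwells"]
        # Dwell rate: dwells per second of time spent on the AOI.
        s["dwell_rate_hz"] = s["dwells"] / (s["dwell_ms"] / 1000) if s["dwell_ms"] else 0.0
    return dict(stats)


def transition_counts(fixations):
    """Count 'X From Y' transitions between consecutive fixations, keyed as (X, Y)."""
    return Counter((cur.aoi, prev.aoi) for prev, cur in zip(fixations, fixations[1:]))
```

For two consecutive Education fixations followed by an Experience fixation, `dwell_features` reports a single Education dwell, and `transition_counts` records one Education From Education and one Experience From Education transition.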

| Classifier | Accuracy | F1 | Precision | Recall | AUC |
|---|---|---|---|---|---|
| Random Forest | 0.629 ± 0.182 | 0.692 ± 0.177 | 0.667 ± 0.217 | 0.595 ± 0.181 | 0.595 ± 0.181 |
| Gradient Boosting | 0.613 ± 0.161 | 0.681 ± 0.177 | 0.649 ± 0.214 | 0.571 ± 0.175 | 0.571 ± 0.175 |
| AdaBoost | 0.605 ± 0.154 | 0.667 ± 0.181 | 0.644 ± 0.212 | 0.562 ± 0.159 | 0.562 ± 0.159 |
| Decision Tree | 0.567 ± 0.168 | 0.610 ± 0.191 | 0.638 ± 0.236 | 0.560 ± 0.178 | 0.560 ± 0.178 |
| Naive Bayes | 0.542 ± 0.180 | 0.511 ± 0.241 | 0.655 ± 0.291 | 0.554 ± 0.176 | 0.554 ± 0.176 |
| K-Nearest Neighbors | 0.568 ± 0.164 | 0.633 ± 0.187 | 0.611 ± 0.223 | 0.529 ± 0.166 | 0.529 ± 0.166 |
| Multilayer Perceptron | 0.541 ± 0.189 | 0.553 ± 0.255 | 0.599 ± 0.288 | 0.525 ± 0.182 | 0.525 ± 0.182 |
| SVM | 0.617 ± 0.232 | 0.734 ± 0.203 | 0.617 ± 0.232 | 0.500 ± 0.000 | 0.500 ± 0.000 |
| Majority | 0.617 ± 0.232 | 0.734 ± 0.203 | 0.617 ± 0.232 | 0.500 ± 0.000 | 0.500 ± 0.000 |
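
In the table above, the SVM row matches the Majority row exactly, which is consistent with the SVM predicting the majority label on every fold. For a classifier that always predicts the positive class, accuracy and precision both equal the share p of positive labels, positive-class recall is 1, and F1 on a fold is 2p/(1 + p); a Recall value of 0.500 ± 0.000 therefore looks like a class-averaged (macro) recall, whose negative-class half is 0. The pure-Python sketch below, assuming 0/1 labels with 1 as the majority class and using a hypothetical `binary_metrics` helper, reproduces this pattern on a toy fold.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, F1, positive-class recall, and macro recall for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "f1": f1,
        "recall_pos": recall,
        "macro_recall": (recall + specificity) / 2,  # mean of per-class recall
    }


# Toy fold in which 5 of 8 resumes carry the positive label (1).
y_true = [1, 1, 1, 1, 1, 0, 0, 0]
majority = [1] * len(y_true)  # always predict the most frequent label
print(binary_metrics(y_true, majority))
```

Because F1 is a nonlinear function of p, its mean over folds need not equal 2p/(1 + p) evaluated at the mean accuracy.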

| Classifier | Accuracy | F1 | Precision | Recall | AUC |
|---|---|---|---|---|---|
| Random Forest | 0.775 ± 0.161 | 0.798 ± 0.168 | 0.778 ± 0.207 | 0.767 ± 0.175 | 0.767 ± 0.175 |
| Gradient Boosting | 0.777 ± 0.161 | 0.802 ± 0.164 | 0.778 ± 0.204 | 0.764 ± 0.180 | 0.764 ± 0.180 |
| AdaBoost | 0.767 ± 0.161 | 0.783 ± 0.177 | 0.779 ± 0.209 | 0.757 ± 0.168 | 0.757 ± 0.168 |
| SVM | 0.709 ± 0.184 | 0.741 ± 0.200 | 0.714 ± 0.229 | 0.715 ± 0.165 | 0.715 ± 0.165 |
| Decision Tree | 0.671 ± 0.177 | 0.696 ± 0.193 | 0.720 ± 0.220 | 0.650 ± 0.203 | 0.650 ± 0.203 |
| Multilayer Perceptron | 0.649 ± 0.190 | 0.667 ± 0.227 | 0.721 ± 0.244 | 0.645 ± 0.191 | 0.645 ± 0.191 |
| K-Nearest Neighbors | 0.666 ± 0.164 | 0.722 ± 0.165 | 0.687 ± 0.213 | 0.642 ± 0.176 | 0.642 ± 0.176 |
| Naive Bayes | 0.600 ± 0.182 | 0.573 ± 0.236 | 0.722 ± 0.262 | 0.605 ± 0.175 | 0.605 ± 0.175 |
| Majority | 0.617 ± 0.232 | 0.734 ± 0.203 | 0.617 ± 0.231 | 0.500 ± 0.000 | 0.500 ± 0.000 |
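
In both results tables, the Recall and AUC columns agree on every row. That is the pattern expected when AUC is computed from hard 0/1 predictions instead of predicted probabilities: the ROC curve then has a single interior point (FPR, TPR), and the area under it is ½·TPR·FPR + ½(1 − FPR)(1 + TPR) = ½(1 + TPR − FPR) = (TPR + TNR)/2, which is balanced accuracy, or equivalently macro-averaged recall. The text does not state how AUC was computed, so this is one plausible explanation rather than a description of the authors' pipeline. The sketch below checks the identity numerically with a rank-based (Mann–Whitney) AUC that counts ties as half a win; both function names are illustrative.

```python
def auc_mann_whitney(y_true, scores):
    """ROC AUC as the probability that a random positive outscores a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall (TPR and TNR) for binary 0/1 labels."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    n_pos = sum(t == 1 for t in y_true)
    n_neg = len(y_true) - n_pos
    return (tp / n_pos + tn / n_neg) / 2


y_true = [1, 1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 1, 0]  # hard labels used as "scores"
print(auc_mann_whitney(y_true, y_pred), balanced_accuracy(y_true, y_pred))
```

Here TPR = 3/4 and TNR = 2/3, so both functions return 17/24 (about 0.708). Passing predicted probabilities instead of hard labels to `auc_mann_whitney` would generally give a different, threshold-free AUC.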

| Range | AUC | Precision | Recall | Specificity |
|---|---|---|---|---|
| Full Range | 0.816 ± 0.201 | 0.768 ± 0.178 | 0.816 ± 0.201 | 0.816 ± 0.201 |
| High Risk (Low ) | 0.796 ± 0.257 | 0.822 ± 0.267 | 0.651 ± 0.330 | 0.906 ± 0.221 |
| Medium Risk (Medium ) | 0.814 ± 0.217 | 0.688 ± 0.177 | 0.855 ± 0.214 | 0.245 ± 0.272 |
| Low Risk (High ) | 0.876 ± 0.249 | 0.620 ± 0.181 | 0.940 ± 0.145 | 0.016 ± 0.056 |
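
Across the ranges above, recall and specificity move in opposite directions: the high-risk range has recall 0.651 and specificity 0.906, while the low-risk range has recall 0.940 and specificity 0.016. That trade-off is what moving a decision threshold on a predicted probability produces. The sketch below is not the range analysis used to build this table; it only shows, on made-up scores and with a hypothetical `recall_specificity` helper, how raising the threshold increases specificity and lowers recall.

```python
def recall_specificity(y_true, scores, threshold):
    """Recall (TPR) and specificity (TNR) when predicting 1 for score >= threshold."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    return tp / n_pos, tn / n_neg


# Made-up predicted probabilities, for illustration only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.4, 0.7, 0.3, 0.2, 0.1]
for threshold in (0.75, 0.5, 0.25):
    rec, spec = recall_specificity(y_true, scores, threshold)
    print(f"threshold={threshold:.2f}  recall={rec:.2f}  specificity={spec:.2f}")
```

On these toy scores, lowering the threshold from 0.75 to 0.25 raises recall from 0.50 to 1.00 and drops specificity from 1.00 to 0.50.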

| Feature Name | Importance Percentage |
|---|---|
| Outside From Outside | 8.258 |
| Gaze Points: Outside | 7.557 |
| Number of Fixations: Outside | 4.965 |
| Dwell Duration Average: Outside | 4.624 |
| Dwell Duration: Outside | 4.618 |
| Stimulus Duration | 2.290 |
| Fractal Dimension Average | 2.190 |
| Number of Fixations: Experience | 2.084 |
| Dwell Rate: Education | 2.068 |
| Dwell Duration Average: Experience | 1.914 |
| Fractal Dimension | 1.873 |
| Dwell Rate: Experience | 1.760 |
| Dwell Duration: Experience | 1.738 |
| Dwell Rate: Outside | 1.736 |
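
Importance percentages like these can be produced from any non-negative per-feature importance scores by normalizing them to sum to 100. With tree ensembles in scikit-learn, for example, the impurity-based `feature_importances_` attribute of `RandomForestClassifier` already sums to 1. Which model and which importance measure produced this table is not stated here, so the helper below is a hypothetical sketch: it takes raw scores keyed by feature name and returns the top k as percentages of the total.

```python
def top_importance_percentages(importances, k):
    """Return the k largest importances as (name, percent-of-total) pairs, descending."""
    total = sum(importances.values())
    if total <= 0:
        raise ValueError("importances must have a positive sum")
    percentages = {name: 100.0 * value / total for name, value in importances.items()}
    return sorted(percentages.items(), key=lambda item: item[1], reverse=True)[:k]


# Illustrative raw scores, not values from the study.
raw = {"Gaze Points: Outside": 3, "Stimulus Duration": 1, "Fractal Dimension": 6}
print(top_importance_percentages(raw, 2))
```

Truncating to the top k, as this helper does, is why a listed subset of features can sum to well under 100.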

| Feature Name | Importance Percentage |
|---|---|
| Gaze Points: Outside | 28.630 |
| Outside From Outside | 18.812 |
| Dwell Duration: Outside | 18.151 |
| Stimulus Duration | 18.110 |
| Dwell Duration Average: Experience | 16.297 |
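
As a consistency check on the two importance tables: the five percentages in the second table sum to 100, consistent with a model whose total importance is spread over just those five features, whereas the fourteen rows of the first table sum to 47.675, leaving 52.325 percentage points to features not listed there.

$$28.630 + 18.812 + 18.151 + 18.110 + 16.297 = 100.000$$

$$8.258 + 7.557 + 4.965 + 4.624 + 4.618 + 2.290 + 2.190 + 2.084 + 2.068 + 1.914 + 1.873 + 1.760 + 1.738 + 1.736 = 47.675$$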

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pina, A.; Petersheim, C.; Cherian, J.; Lahey, J.N.; Alexander, G.; Hammond, T. Using Machine Learning with Eye-Tracking Data to Predict if a Recruiter Will Approve a Resume. Mach. Learn. Knowl. Extr. 2023, 5, 713-724. https://doi.org/10.3390/make5030038
