Abstract

Generally, when developing classification models using supervised learning methods (e.g., support vector machines, neural networks, and decision trees), feature selection, as a pre-processing step, is essential to reduce calculation costs and improve generalization scores. The minimum reference set (MRS), a feature selection algorithm, can be used for this purpose. The original MRS regards a feature subset as effective if the 1-nearest neighbor algorithm, using only a small number of reference samples, classifies all samples correctly. However, the original MRS is applicable only to numerical features, and it does not take the distances between different classes into account. Therefore, herein, we propose a novel feature subset evaluation algorithm, referred to as the “E2H distance-weighted MRS,” which can be used for a mixture of numerical and categorical features and considers the distances between different classes in the evaluation. Moreover, we propose a Bayesian swap feature selection algorithm for identifying an effective feature subset. The effectiveness of the proposed methods is verified through experiments on artificially generated data comprising a mixture of numerical and categorical features.

1. Introduction

Generally, classification tasks are executed using supervised learning models, such as support vector machines, neural networks, and decision trees. When developing such models, it is essential to use only a selected subset of effective features for classification. This process is called feature selection, and it is known to reduce the calculation time and improve the estimation accuracy [,]. Accordingly, several feature selection algorithms have been proposed in the field of machine learning, including out-of-bag error [], the inter–intra class distance ratio [,], genetic algorithms [,], bagging [,], CART [,], Lasso [,], BoLasso [], ReliefF [,], and atom search [].

In this study, we consider the minimum reference set (MRS) [], another feature selection algorithm, for developing a high-quality classification model. In the MRS, we select a feature subset from the feature set and calculate the Euclidean distances for all pairs of samples belonging to different classes. Next, starting from the pairs with the smallest distances, we add paired samples to a reference set and test whether the 1-nearest neighbor (1NN) algorithm based on this reference set classifies all samples correctly. If all samples are classified correctly with a small reference set, we regard the corresponding feature subset as desirable. In other words, the size of the reference set at which no classification error occurs is used as the evaluation value of the feature subset. Additional details on the MRS can be found in []. We consider the MRS to be reliable because it has been adopted for feature selection in previous studies [,,,]. However, the MRS has the following two limitations:

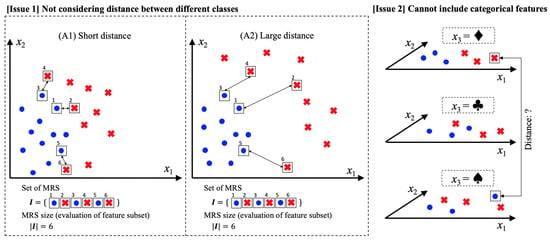

One of these limitations is indicated as “Issue 1” in Figure 1. Here, (A1) and (A2) denote feature spaces with values and , and the blue circles and red crosses represent observation samples of two different classes. In both cases, all samples can be classified correctly by 1NN using only the samples enclosed in the square boxes as references. Notably, large distances between different classes in a feature space are often desirable for achieving a high generalization score. Therefore, feature space (A2) is better than (A1). However, the original MRS [] assigns the same evaluation (i.e., six) to both (A1) and (A2), even though (A2) is the more desirable feature space. We consider this an issue because the MRS is intended to identify a desirable feature subset for the classification problem.

Figure 1.

Issues related to the original minimum reference set (MRS) [] feature selection algorithm.

The second issue is indicated as “Issue 2” in Figure 1. The figure presents a three-dimensional feature space consisting of two numerical features and a categorical feature . Here, when , the samples are correctly classified by the numerical features and . In certain cases, categorical features such as are also effective for classification. However, the original MRS [] can evaluate only numerical features because it is based on the Euclidean distance. Although categorical values can be transformed into numerical values via one-hot encoding, categorical features with many categories often greatly increase the number of dimensions []. When the sample size and the number of dimensions are a and r, respectively, the time complexity of the 1NN algorithm based on brute-force search is []. Moreover, although the k-d tree [] is a fast algorithm, its efficiency degrades as the dimensionality increases []. Even with an algorithm whose time complexity is [], the computational time increases with the number of dimensions r. Therefore, we consider it undesirable to apply one-hot encoding to categorical features because it increases the dimensionality.

Therefore, herein, we propose a novel feature subset evaluation algorithm, called the E2H distance-weighted MRS (E2H MRS), to address the two aforementioned issues. Here, “E2” and “H” denote the squared Euclidean distance and the Hamming distance, respectively. By using the mixture distance “E2H,” we can measure the distances between samples in a feature space comprising both numerical and categorical values. Moreover, we propose a Bayesian swap feature selection algorithm (BS-FS) to identify a subset of desirable features for classification. In this paper, we present the details of the E2H MRS and BS-FS algorithms.

2. Proposed Method

Herein, we explain the mathematical representation of feature subset selection and the proposed methods. The variables used are summarized in Appendix A.1 and Appendix A.2.

2.1. Mathematical Representation of Feature Subset Selection

Let us denote a set consisting of numerical features and a set consisting of categorical features as follows:

For instance, , , , , and so on. Because the data contain both types of features, the set of all features can be represented as

The proposed algorithm determines a feature subset consisting of m effective features from among all features to estimate the class , that is,

where

Notably, the E2H MRS adopts a function L for evaluating the feature subset . Because the feature subset consists of a mixture of numerical and categorical features, let us denote the feature vector of class as

where

Here, is a vector consisting of numerical features, and is a vector consisting of categorical features. As an example, consider four features, “Sex”, “Embarked” (port of embarkation), “Age”, and “Fare”, used for Titanic survival prediction [,]. In this case, the feature subset is . Because “Age” and “Fare” are numerical features and “Sex” and “Embarked” are categorical features, the numerical part of the feature vector consists of “Age” and “Fare”, and the categorical part consists of “Sex” and “Embarked”; both parts are two-dimensional. The feature vector consists of these values.

When the feature vector consists of only categorical or only numerical features, the numerical or the categorical part, respectively, has dimension zero. The feature vector of the i-th observation sample is defined as .

2.2. E2H Distance-Weighted MRS Algorithm

The E2H MRS algorithm for evaluating the feature subset is summarized in Algorithm 1. The algorithm takes the feature subset as input and outputs its evaluation value. After initialization, all pairwise distances between samples of classes and are computed (line 5). Next, the set of distances is sorted in ascending order (line 6). Although these processes are also included in the original MRS [], the original MRS can evaluate only numerical features because it is based on the Euclidean distance. Therefore, we use a different distance function to apply the MRS to feature subsets comprising a mixture of numerical and categorical features. This distance function is defined in Section 2.3.

| Algorithm 1 E2H MRS feature evaluation algorithm |


Next, the two samples of different classes that are nearest to each other (i.e., ) are added to the set . We then test , which denotes the classification error of the 1NN algorithm based on the set . The proposed distance function is used in the 1NN algorithm because the feature subset consists of a mixture of numerical and categorical features. If the error rate is not zero, that is, , the pair of samples associated with the next distance in the sorted set is added to , and the error rate is rechecked. The evaluation value of the feature subset is calculated once the error rate becomes zero, that is, . The original MRS [] uses , the size of the set , as the evaluation value; the smaller this size, the better the evaluation of the feature subset. Although this criterion is valid, it does not consider the distances between different classes. To account for these distances, we propose a novel feature subset evaluation function using , the average distance of , which is obtained during the growth of the set . Details of are given in Section 2.4.
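The growth loop described above can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the authors' implementation: two classes, brute-force 1NN, one between-class pair added per step in ascending order of distance, and ties broken by the order in which samples entered the reference set. The distance is passed in as a callable, so the squared Euclidean placeholder `sq_euclidean` can be replaced by an E2H-style distance; all function names here are hypothetical.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
Distance = Callable[[Vector, Vector], float]


def sq_euclidean(u: Vector, v: Vector) -> float:
    """Squared Euclidean distance (placeholder for an E2H-style distance)."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def nn1_errors(samples: Sequence[Vector], labels: Sequence[object],
               ref: List[int], dist: Distance) -> int:
    """Count samples misclassified by 1NN that uses only the indices in `ref`."""
    errors = 0
    for i, x in enumerate(samples):
        nearest = min(ref, key=lambda j: dist(x, samples[j]))
        if labels[nearest] != labels[i]:
            errors += 1
    return errors


def mrs_grow(samples: Sequence[Vector], labels: Sequence[object],
             dist: Distance) -> Tuple[List[int], List[float]]:
    """Add the closest between-class pairs to S until 1NN on S makes no error.

    Returns the sample indices in S and the distances of the pairs that were
    added (their mean corresponds to the average distance used in Section 2.4).
    """
    classes = list(dict.fromkeys(labels))  # distinct labels, first-seen order
    if len(classes) != 2:
        raise ValueError("this sketch assumes exactly two classes")
    idx_a = [i for i, lab in enumerate(labels) if lab == classes[0]]
    idx_b = [i for i, lab in enumerate(labels) if lab == classes[1]]
    # Every between-class pair, in ascending order of distance
    pairs = sorted((dist(samples[i], samples[j]), i, j)
                   for i, j in product(idx_a, idx_b))
    ref: List[int] = []
    added: List[float] = []
    for d, i, j in pairs:
        for k in (i, j):
            if k not in ref:
                ref.append(k)
        added.append(d)
        if nn1_errors(samples, labels, ref, dist) == 0:
            break  # zero error: len(ref) is the MRS size |S|
    return ref, added


# Toy usage: four 1-D samples from two classes
X = [(0.0,), (0.1,), (0.9,), (1.0,)]
y = ["A", "A", "B", "B"]
S, pair_dists = mrs_grow(X, y, sq_euclidean)
print(sorted(S), len(pair_dists))  # -> [1, 2] 1
```

Recomputing 1NN over all samples after every added pair keeps the sketch close to the description, at the cost of speed; a practical version would update the error count incrementally.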

2.3. Distance Function

Let us now denote the distance between and , consisting of a mixture of numerical and categorical values, as

The first and second terms represent the squared Euclidean distance and the Hamming distance, respectively, that is,

where

In general, the Hamming distance between two strings of equal length is defined as the minimum number of substitutions required to change one string into the other; in other words, it is the number of positions at which the two strings differ. Therefore, by regarding the -dimensional categorical feature vector as a string of length , the number of mismatches between the categorical feature vectors of two samples can be represented by the Hamming distance. This number is calculated with Equations (9) and (10).

Moreover, we refer to as the “Hamming weight” because it is a weight parameter for categorical features. This parameter is set manually by users; when users hypothesize that categorical features are important for classification, they set it to a large value. When the Hamming weight is set to zero, categorical features have no effect on the distance. The distance function defined by Equation (7) is similar to that used in the k-prototypes algorithm [].
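The following Python sketch shows one way to compute a mixed distance of the form “squared Euclidean term plus Hamming weight times Hamming term” for samples split into numerical and categorical parts, as in the Titanic example of Section 2.1. The normalization (dividing by the number of numerical dimensions plus the Hamming weight times the number of categorical dimensions) is our assumption, chosen so that the value lies in [0, 1] whenever numerical features are scaled to [0, 1], as Theorem 1 requires; it may differ from Equation (7). The function names and example values are hypothetical.

```python
from typing import Sequence


def sq_euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Squared Euclidean distance between the numerical parts."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def hamming(u: Sequence[object], v: Sequence[object]) -> int:
    """Number of positions where categorical values differ (delta = 0 if equal, else 1)."""
    return sum(0 if a == b else 1 for a, b in zip(u, v))


def e2h_distance(x_num: Sequence[float], x_cat: Sequence[object],
                 y_num: Sequence[float], y_cat: Sequence[object],
                 w_h: float = 1.0) -> float:
    """E2H-style distance: squared Euclidean + w_h * Hamming.

    ASSUMPTION: the sum is divided by len(x_num) + w_h * len(x_cat) so that the
    result stays in [0, 1] when numerical features are scaled to [0, 1].
    """
    if w_h < 0:
        raise ValueError("the Hamming weight w_h must be non-negative")
    denom = len(x_num) + w_h * len(x_cat)
    if denom == 0:
        return 0.0  # nothing contributes (e.g., only categorical features and w_h = 0)
    return (sq_euclidean(x_num, y_num) + w_h * hamming(x_cat, y_cat)) / denom


# Titanic-like example: numerical part = (Age, Fare) scaled to [0, 1],
# categorical part = (Sex, Embarked); Embarked has three states (S, C, Q).
a_num, a_cat = (0.5, 0.2), ("male", "S")
b_num, b_cat = (0.3, 0.1), ("female", "C")
print(e2h_distance(a_num, a_cat, b_num, b_cat, w_h=1.0))  # approx. 0.5125
print(e2h_distance(a_num, a_cat, b_num, b_cat, w_h=0.0))  # approx. 0.025, categories ignored
```

With `w_h=0.0` the categorical part drops out, which mirrors the behavior of the Hamming weight described above. Because the Hamming term only checks equality, categorical features with any number of states are handled without one-hot encoding.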

The following theorem is satisfied for the proposed distance function :

Theorem 1.

Proof.

□

In other words, the range of extends from zero to one when the condition is satisfied. The standardization of numerical features to the range from zero to one is performed in line 1 of Algorithm 1.
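Theorem 1 relies on the numerical features being scaled to the range from zero to one, which line 1 of Algorithm 1 performs. A minimal sketch of column-wise min-max scaling is shown below; mapping a constant column to zeros is our own convention, because the text does not say how such columns are handled, and the function names are hypothetical.

```python
from typing import List, Sequence


def min_max_scale(column: Sequence[float]) -> List[float]:
    """Map values linearly onto [0, 1] using the column minimum and maximum."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant column: our convention
    return [(v - lo) / (hi - lo) for v in column]


def min_max_scale_rows(rows: Sequence[Sequence[float]]) -> List[List[float]]:
    """Scale each numerical column of a row-major table independently."""
    scaled_columns = [min_max_scale(col) for col in zip(*rows)]
    return [list(row) for row in zip(*scaled_columns)]


# Illustrative values for two numerical features (three samples)
table = [[10.0, 1.0], [20.0, 3.0], [40.0, 2.0]]
print(min_max_scale_rows(table))  # approx. [[0.0, 0.0], [0.333, 1.0], [1.0, 0.5]]
```

After this scaling, each squared difference between two samples is at most one, which is what bounds the squared Euclidean part of the distance.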

2.4. Evaluation Function of a Feature Subset

Here, we explain the evaluation function of the feature subset . In the original MRS [], the size of the set at which all samples are classified correctly (no error) is adopted as the evaluation function of a feature subset. By incorporating the distances between different classes into the original MRS, we propose

as a novel feature subset evaluation function (line 17 in Algorithm 1). For the proposed evaluation function , the following theorem is satisfied:

Theorem 2.

Proof.

□

Note that the evaluation value ranges from zero to if the numerical features range from zero to one. Here, is the average distance of the set and is obtained from the between-class distances computed in lines 12 and 16 of Algorithm 1. Because it is an average of distances that each range from zero to one by Theorem 1, it also ranges from zero to one. Therefore, Theorem 2 is satisfied. Moreover, Equation (11) shows that is a damping coefficient for . is referred to as the “distance weight” because it adjusts the damping coefficient according to the distance . In a typical classification problem, the distance between different classes in a feature space should be large to decrease classification errors, and previous studies have used feature spaces with large distances between different classes for classification [,]. Therefore, we included the parameter to weight the distance between different classes in the proposed method. This parameter is set manually by users. Setting it to a large value emphasizes the distance between different classes, whereas setting it to a small value emphasizes the size of the set . The value of the proposed evaluation function approaches zero when the distance between different classes is large and the size of is small. The smaller the value of , the more effective the subset ; hence, we define

to evaluate . This function is expressed in Equation (4).

Note that when setting , the proposed evaluation function and the original MRS [] have the same form, owing to

In contrast, when setting , the evaluation value is

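In most cases, approaches zero because (i.e., the average distance between classes and is zero) does not hold. Thus, when the distance weight is too large, the evaluation value approaches zero for almost every feature subset regardless of the size of the set, so the proposed evaluation function can no longer discriminate between subsets.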
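To make the role of the distance weight concrete, the sketch below assumes that Equation (11) has the form L = |S| · (1 − D̄)^w_D, where D̄ is the mean of the between-class distances collected while the set S grows. This form is our reading of the properties stated in this section (a damping coefficient between zero and one, reduction to the original MRS when the distance weight is zero, and collapse toward zero for very large weights); it should be checked against Equation (11) before use, and the function name is hypothetical.

```python
from typing import Sequence


def e2h_mrs_score(ref_size: int, pair_distances: Sequence[float], w_d: float) -> float:
    """ASSUMED form of the evaluation value: |S| * (1 - mean distance) ** w_d.

    ref_size:        size of the reference set S when 1NN makes no error
    pair_distances:  between-class distances collected while S was grown, in [0, 1]
    w_d:             distance weight (w_d = 0 gives the original MRS value |S|)
    """
    if not pair_distances:
        raise ValueError("at least one pair distance is required")
    d_bar = sum(pair_distances) / len(pair_distances)
    if not 0.0 <= d_bar <= 1.0:
        raise ValueError("distances are assumed to lie in [0, 1] (Theorem 1)")
    damping = (1.0 - d_bar) ** w_d  # damping coefficient in [0, 1]
    return ref_size * damping


# Issue 1 in Figure 1: both spaces need |S| = 6, but (A2) separates the classes more.
print(e2h_mrs_score(6, [0.05, 0.10, 0.15], w_d=0))  # 6.0, same as the original MRS
print(e2h_mrs_score(6, [0.05, 0.10, 0.15], w_d=2))  # (A1)-like: small distances
print(e2h_mrs_score(6, [0.40, 0.50, 0.60], w_d=2))  # (A2)-like: smaller, i.e., better
```

Under this assumed form, the two spaces of Issue 1 tie when the distance weight is zero and are separated once it is positive, while a very large weight drives every score toward zero.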

2.5. Bayesian Swap Feature Selection Algorithm

In the brute-force search method, the number of calculations required to solve the optimization problem presented in Equation (4), that is, the number of evaluations required to identify a feature subset that minimizes , is

$$\binom{n}{m} = \frac{n!}{m!\,(n-m)!},$$

where n denotes the size of , and m is the size of . Therefore, when n is large, obtaining an optimal solution is difficult in terms of calculation cost. The original MRS [] adopts an approach for finding an approximate solution. Specifically, the first step randomly chooses m features from among all features , and the second step gradually improves the evaluation value by swapping features. Additional details on this method are given in []. The number of calculations required by this method is

Thus, this algorithm significantly reduces the calculation cost compared with the brute-force search. However, the final feature subset depends on the initially selected feature subset. Therefore, we adopt an approach in which the initial feature subset is obtained via Bayesian optimization. The Bayesian optimization algorithm used is the tree-structured Parzen estimator (TPE) [], as implemented in the optimization framework “optuna” (v2.0.0) [].

A feature selection algorithm based on this approach is outlined in Algorithm 2. The inputs are the set of all features , the dimension number m, and the number of iterations b of the Bayesian optimization. The output is an approximate solution . We use this notation because the evaluation of the approximate solution is expected to be close to , the evaluation of the optimal solution .

| Algorithm 2 Bayesian swap feature subset selection algorithm (BS-FS) |


Lines 1–5 in Algorithm 2 describe the Bayesian optimization process used to search for the initial feature subset. We choose and determine its evaluation value, . Here, t denotes the iteration index of the Bayesian optimization. This process is repeated b times, and the feature subset with the minimum evaluation value is selected as the initial subset (line 5). Subsequently, the evaluation value is improved by swapping each feature in the initial subset with the features in the remaining subset . These processes are shown in lines 6–14 of Algorithm 2. We refer to this algorithm as the “Bayesian swap feature selection algorithm (BS-FS)” because it combines Bayesian optimization and feature swapping.
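The two-stage structure of BS-FS can be sketched in Python as follows. To keep the sketch dependency-free, the first stage draws b random m-subsets and keeps the best one; this random search is only a stand-in for the TPE sampler that the method runs through optuna (e.g., `optuna.samplers.TPESampler`), which would replace it in practice. The second stage repeats swaps between selected and unselected features until no swap lowers the evaluation value; whether Algorithm 2 makes a single pass or iterates to convergence is our assumption. The callable `evaluate` stands for the E2H MRS evaluation, and the toy objective in the usage example is hypothetical.

```python
import random
from typing import Callable, FrozenSet, List, Sequence, Tuple

Subset = FrozenSet[str]


def bs_fs(features: Sequence[str], m: int,
          evaluate: Callable[[Subset], float],
          b: int, seed: int = 0) -> Tuple[Subset, float]:
    """Two-stage search: best of b initial subsets, then feature swapping."""
    if not 1 <= m <= len(features) or b < 1:
        raise ValueError("need 1 <= m <= n and b >= 1")
    rng = random.Random(seed)
    pool = list(features)

    # Stage 1 (random-search stand-in for TPE): best of b sampled m-subsets.
    current: List[str] = []
    best_val = float("inf")
    for _ in range(b):
        candidate = rng.sample(pool, m)
        val = evaluate(frozenset(candidate))
        if val < best_val:
            current, best_val = candidate, val

    # Stage 2: swap a selected feature with an unselected one whenever it helps.
    improved = True
    while improved:
        improved = False
        for i in range(m):
            for f in pool:
                if f in current:
                    continue
                trial = current.copy()
                trial[i] = f
                val = evaluate(frozenset(trial))
                if val < best_val:
                    current, best_val = trial, val
                    improved = True
    return frozenset(current), best_val


# Toy objective (hypothetical): number of selected features outside a known target.
target = {"x1", "x2", "c1", "c2"}
all_features = sorted(target) + [f"noise{k}" for k in range(11)]  # n = 15
best, score = bs_fs(all_features, 4, lambda s: len(s - target), b=5, seed=1)
print(sorted(best), score)  # -> ['c1', 'c2', 'x1', 'x2'] 0
```

With this toy objective, any subset that still contains a wrong feature can be improved by one swap, so the loop only stops at the target subset; with a real E2H MRS objective, the swap stage can stop at a local optimum, which is why the quality of the initial subset matters.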

Using Algorithm 2, the number of evaluation function calls in BS-FS is

In general, the parameter relationship is satisfied. Therefore, the time complexity of Algorithm 2 is . This algorithm is faster than the brute-force search method. However, if the maximum number of iterations b is too large, BS-FS requires more calculations than the brute-force search method (i.e., ). The boundary point is

In other words, the maximum number of iterations of the Bayesian optimization, b, must be less than .
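As a quick check of scale, the brute-force count is the binomial coefficient “n choose m”, which Python's standard `math.comb` evaluates directly. The n = 15, m = 4 case reproduces the 1365 candidate subsets used in Section 5. The count m(n − m) is shown only for comparison: it is the number of evaluations in one full pass over all selected/unselected swap pairs, not a reconstruction of the BS-FS formula above. The function names are hypothetical.

```python
from math import comb


def brute_force_evaluations(n: int, m: int) -> int:
    """Number of m-feature subsets of n features (evaluations for exhaustive search)."""
    return comb(n, m)


def one_swap_pass(n: int, m: int) -> int:
    """Evaluations in one pass that tries every selected/unselected swap."""
    return m * (n - m)


for n, m in [(15, 4), (30, 10)]:
    print(n, m, brute_force_evaluations(n, m), one_swap_pass(n, m))
# 15 4 1365 44
# 30 10 30045015 200
```

Doubling n from 15 to 30 (with m scaled accordingly) multiplies the exhaustive count by more than 20,000, while a swap pass grows only quadratically, which is why the budget b for the initial search matters relative to the brute-force count.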

3. Artificial Dataset for the Verification of the Proposed Methods

We verified the effectiveness of the proposed methods using an artificial dataset. The effective feature subset for classification is defined as

where , and . In other words, the combination of two numerical features and two categorical features forms an effective feature subset for classification. Let us denote the values of these features as , , , and . In particular, because and are categorical features,

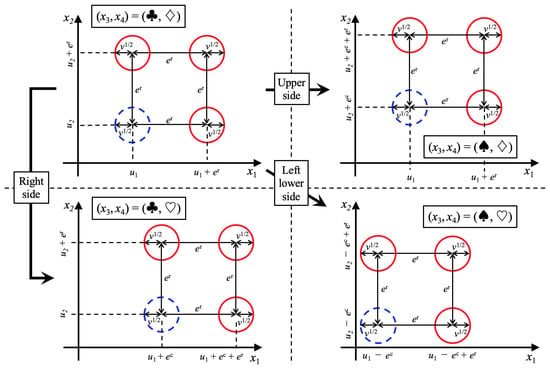

Although we use binary categorical features for simplicity, a categorical feature can have any number of states because we adopt the Hamming distance . In this study, we generated the feature vectors of the feature subset from probability distributions, as outlined in Figure 2. The samples and of class (blue circles) are generated from a Gaussian distribution , defined as

Figure 2.

Overview of the probability distributions defined by Equations (21) and (23) for generating artificial data. The blue and red circles represent the distributions used for generating samples belonging to and , respectively. Each panel contains one blue circle because samples of class are generated from a single Gaussian distribution, and three red circles because samples of class are generated from a Gaussian mixture distribution. The radius of each circle represents the standard deviation. The panels show that the means of these distributions change with and , the values of the categorical features. Therefore, categorical features should be used to correctly classify classes and . We used artificial samples generated from these distributions to verify the effectiveness of the proposed methods, E2H MRS and BS-FS; the results are described in Sections 4 and 5.

To determine the effects of categorical features, the mean vector is defined as follows:

That is, the mean vector is shifted by depending on the categorical features: only in the case of is the mean vector shifted by .

The samples , of class (red circles) are generated based on a Gaussian mixture distribution, defined as

To determine the effect of the categorical features, the mean vectors , and are defined as

In other words, the samples of class are shifted by compared with the samples of class . The variance–covariance matrix is defined as follows:

The distribution has the following parameters: and . When the samples are generated with large values of , the two classes are easy to classify using the numerical features and because the distances between different classes are large. When the samples are generated with large values of , the categorical features and must be used for classification. Therefore, represents a “numerical effect,” and represents a “categorical effect.”

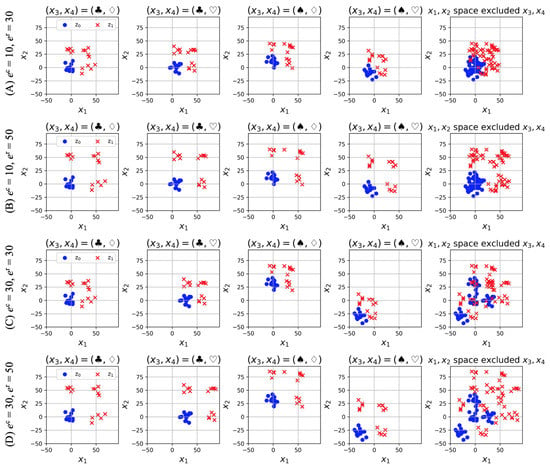

The generated four-dimensional feature spaces (, , ) are presented in Figure 3: (A) , (B) , (C) , and (D) . The values of the categorical features and change from left to right. The rightmost panel is a scatter plot of only the numerical features and , that is, it ignores the categorical features and . Comparing (A) and (B), we can see that the distance between different classes increases with the numerical effect . Moreover, because the categorical effect is small, the samples can be classified even without the categorical features and . In contrast, (C) and (D) represent spaces with large categorical effects , in which the categorical features and are required for correct classification. Because the difficulty of classification can be controlled with the parameters and , the artificial data generation method described in this section is appropriate for verifying the proposed algorithms. Note that the generated values of the numerical features and are standardized to the range from zero to one to satisfy Theorems 1 and 2.

Figure 3.

Artificial data generated using Equations (21) and (23) (see Figure 2). The cases (A)–(D) differ in the parameters and . The values of the categorical features and change from left to right. The rightmost panel presents a scatter plot of only the numerical features , i.e., no categorical features are considered.

Numerical examples for feature spaces (A)–(D), calculated using Algorithm 1 and Equation (11), are provided in Table 1. , , and represent the evaluation value, the size of the MRS, and the damping coefficient, respectively. The lower the value of , the better the feature space for classification. For Algorithm 1, we adopted as the Hamming weight and distance weight ; these parameters are set manually by users. indicates the original MRS, and and represent the proposed E2H MRS. In the case of , although (B) and (D) are visually the most desirable feature spaces for classification, the best space according to the evaluation value is (A), and (D) was not identified as the best feature space. This can be attributed to the Hamming weight , i.e., this setting ignores the values of the categorical features and . Similarly, in the case of , the score of (B) was worse than that of (A), which can be attributed to the distance weight , i.e., this setting ignores the distance between different classes. In contrast, when adopting , the proposed method identified (B) and (D) as desirable feature spaces for classification because both the effects of categorical features and the distance between different classes are considered. Moreover, when adopting , the effect of the damping coefficient on the evaluation value increased. Therefore, we consider the proposed E2H MRS to be better than the original MRS for evaluating feature subsets.

Table 1.

Evaluation scores of the feature spaces (A)–(D) shown in Figure 3. represents the original MRS, and and represent the E2H MRS. Note that the total sample size of each feature space is 120 (class : 60, class : 60).

4. Experiment 1: Relationship between the Distance between Different Classes and the E2H MRS Evaluation

4.1. Objective and Outline

In the original MRS [], the distance between different classes is not considered because the evaluation value of a feature subset is the size of the set . Therefore, we proposed a novel evaluation function that includes both the distance and the set size. To verify its effectiveness, we generate a feature subset comprising two numerical and two categorical features and calculate the evaluation value .

Notably, we adopt and as the parameters for generating artificial feature subsets. Moreover, is adopted for the sensitivity analysis of the distance weight. When , the evaluation functions of the original MRS [] and E2H MRS have the same form. In other words, the results of represent E2H MRS but not the original MRS. The number of generated samples is

where represents the number of samples of class . As stated above, all numerical features are standardized to the range from zero to one to satisfy Theorems 1 and 2. Moreover, because the generated data depend on randomness, we perform the experiments with 100 random seeds to obtain stable results.

4.2. Result and Discussion

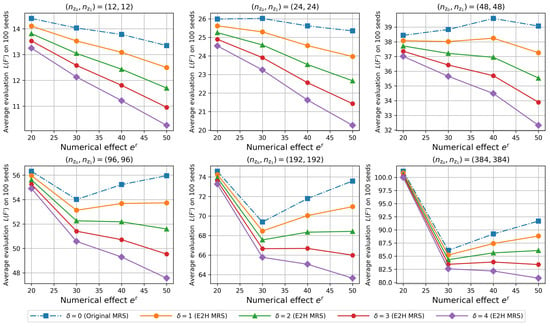

The results are summarized in Figure 4. The vertical axis represents the evaluation value averaged over 100 seeds, and the horizontal axis represents the numerical effect ; the greater its value, the greater the distance between different classes in the feature subset. The dashed line indicates the result of the original MRS (), and the solid lines indicate the results of the E2H MRS ().

Figure 4.

Effect of the distance weight on the evaluation .

The greater the distance between different classes, the more effective the feature subset for classification. Therefore, when is large, the evaluation value should ideally be small. From this viewpoint, the results of the original MRS () are inappropriate when the sample size is greater than 48, because the original MRS cannot consider the distance between different classes. In contrast, for the E2H MRS (), the evaluation values are small when the distance between different classes is large. Therefore, the E2H MRS is effective in identifying feature subsets with large distances between different classes.

5. Experiment 2: Effectiveness of BS-FS in Finding Desirable Feature Subsets

5.1. Objective and Outline

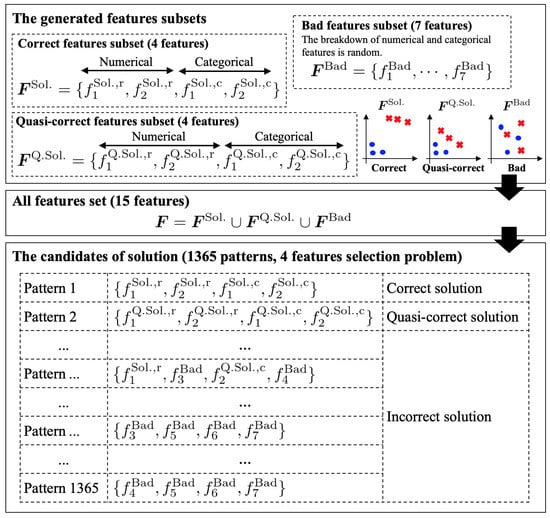

In this section, we examine whether the combination of the E2H MRS (Algorithm 1) and BS-FS (Algorithm 2) can identify an effective feature subset for classification. To this end, the following features are generated:

Among these, is the most effective feature subset, comprising two numerical and two categorical features (four features in total) generated from the distribution of . Further, is a quasi-correct feature subset, also consisting of two numerical and two categorical features (four features in total), generated from the distribution of . Next, consists of seven randomly generated features (the split between categorical and numerical features is also random); therefore, is not an effective feature subset for classification. The full feature set is the union of these feature subsets, and the total number of features is 15. We apply the proposed E2H MRS and BS-FS to identify four effective features among all 15 features. The total number of candidate solutions is $\binom{15}{4} = 1365$, as shown in Figure 5; that is, the chance of obtaining the optimal solution in a single random trial is $1/1365$.

Figure 5.

The generated feature subsets for Experiment 2 and the candidate solutions.

Although both and are effective feature subsets for correct classification, is better than owing to the adopted parameters, . That is, is the best solution, and is the second-best solution. In either case, identifying these subsets is difficult because each appears only once among all 1365 feature subsets, as shown in Figure 5. When the number of generated samples is extremely small, the evaluation value of the best subset may not be the minimum owing to randomness. In this case, the proposed algorithms may identify either the best or the second-best subset depending on the number of generated samples. Therefore, we tested the various sample sizes defined by Equation (28). Moreover, we adopted to examine the effects of the Bayesian optimization and the Hamming weight on the results. The experiment was conducted using 100 random seeds to measure the correct detection rates of and .

5.2. Result and Discussion

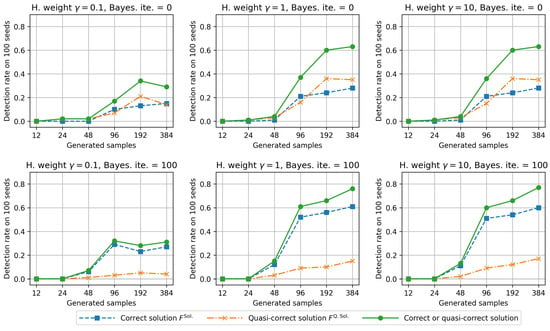

The correct detection rates of and among a total of 1365 candidate solutions using the proposed algorithms are shown in Figure 6. The left, center, and right panels present the results for different Hamming weights. The top and bottom rows show the results without and with Bayesian optimization, respectively. The horizontal axis represents the number of generated samples, and the vertical axis represents the correct detection rate over 100 seeds.

Figure 6.

Detection rate of and for the E2H MRS and BS-FS (Bayesian optimization iteration , and Hamming weight ).

First, when the Hamming weight is too small (), the correct detection rate is lower than that for and . The Hamming weight is the weight of the categorical features (see Equation (7)). Therefore, for , we consider that the detection rates decrease because the proposed method fails to detect the correct categorical features. The results for and show that the correct detection rates improve with a larger Hamming weight. However, because the results for and are almost the same, there may be an upper limit to this effect.

Next, we discuss the effects of Bayesian optimization. When Bayesian optimization was not adopted (top row in Figure 6), the detection rates of and were almost the same. In contrast, when the initial feature subset was searched using Bayesian optimization (bottom row in Figure 6), the detection rate of was approximately three times that of . For example, when the number of generated samples was 384, the detection rates of and were approximately 60% and 20%, respectively. Therefore, searching for the initial feature subset using Bayesian optimization may be effective in identifying the best subset . Moreover, BS-FS is effective in detecting either of the subsets and because their total detection rate increases.

However, for small sample sizes, the detection rates of and are also low. When the sample size is too small, spurious random bias may cause the E2H MRS to evaluate some features belonging to the bad feature subset as effective. Therefore, with very small samples, it is difficult for E2H MRS and BS-FS to select one of only two patterns (the correct and quasi-correct solutions) from a total of 1365 candidates. In real data, the number of collected samples is sometimes not large, and in such cases, selecting all of the correct features becomes unrealistic. Therefore, it is important to check how many correct features are among the four features selected by the proposed method.

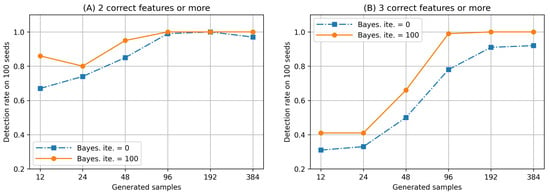

Further, we checked the detection rates of two or three correct features among the four features selected by the E2H MRS and BS-FS. Here, correct features are defined as features belonging to or . The results are presented in Figure 7, where (A) and (B) denote the detection rates when the number of correct features is two, and three or more, respectively. As can be observed, some correct features are selected even when the sample size is small. Moreover, the detection rates increase when the initial feature subset is searched using Bayesian optimization. Therefore, we consider that the proposed methods, E2H MRS and BS-FS, are effective in identifying desirable feature subsets for classification tasks, even when the sample size is small.

Figure 7.

Detection rate of two or three correct features (Hamming weight and Bayesian iteration ).

6. Conclusions

In this paper, we proposed an improved form of the original MRS [], a feature subset evaluation and selection algorithm. The improved algorithm is referred to as the E2H MRS. In particular, the E2H MRS (Algorithm 1) can evaluate feature subsets containing a mixture of numerical and categorical features and considers the distance between different classes. Moreover, we proposed a subset selection algorithm with time complexity , referred to as BS-FS (Algorithm 2). The proposed methods were validated in Experiments 1 and 2 using artificial data.

In this study, we verified the effectiveness of the proposed methods, E2H MRS and BS-FS, using sample sizes of several tens to several hundreds. However, large datasets with several million samples have recently become common, and we did not verify the effectiveness of the methods on such datasets. Moreover, we adopted a 2:2 ratio of numerical to categorical features in the experiment described in Section 5; other ratios should also be verified. We plan to perform these experiments in future work.

Author Contributions

Conceptualization, Y.O. and M.M.; methodology, Y.O. and M.M.; software, Y.O. and M.M.; validation, Y.O. and M.M.; formal analysis, Y.O. and M.M.; investigation, Y.O. and M.M.; resources, Y.O. and M.M.; data curation, Y.O. and M.M.; writing—original draft preparation, Y.O. and M.M.; writing—review and editing, Y.O. and M.M.; visualization, Y.O. and M.M.; supervision, Y.O.; project administration, Y.O.; funding acquisition, Y.O.; All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by JSPS Grant-in-Aid for Scientific Research (C) (Grant No. 21K04535), and JSPS Grant-in-Aid for Young Scientists (Grant No. 19K20062).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Table of Variables and Their Meanings

Appendix A.1. Variables for Representing the Problem Description

| Variables | Meanings |
|---|---|
|  | The set of all features collected by users who want to find a desirable feature subset. |
|  | The set of all numerical features in . |
|  | The set of all categorical features in . |
|  | The size of , i.e., . |
|  | The size of , i.e., . |
| n | The size of , i.e., . |
|  | The i-th element of , i.e., one of the numerical features. |
|  | The i-th element of , i.e., one of the categorical features. |
|  | A feature subset of . |
| m | The size of . |
|  | The evaluation function for the feature subset . |
|  | The optimal feature subset, i.e., the one that minimizes . |
| z | Either class or . |
|  | The feature vector of class . |
|  | The part of the feature vector that consists of numerical values. |
|  | The part of the feature vector that consists of categorical values. |
|  | The dimension of . |
|  | The dimension of . |
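The table frames feature selection as finding the subset of size m that minimizes the evaluation function. Exhaustive search requires C(n, m) evaluations, which grows quickly with n, so a cheaper search is needed. The sketch below contrasts exhaustive search with a generic single-swap local search. It is not the authors' BS-FS (Algorithm 2), which additionally uses Bayesian optimization to find the initial subset; the swap rule, the toy evaluation function, and all names here are assumptions for illustration.

```python
import itertools
from typing import Callable, FrozenSet, Sequence

Evaluate = Callable[[FrozenSet[str]], float]


def exhaustive_search(features: Sequence[str], m: int, evaluate: Evaluate) -> FrozenSet[str]:
    """Exact minimizer of the evaluation over all size-m subsets (C(n, m) evaluations)."""
    return min((frozenset(c) for c in itertools.combinations(features, m)), key=evaluate)


def swap_search(features: Sequence[str], initial: Sequence[str], evaluate: Evaluate) -> FrozenSet[str]:
    """Single-swap local search; lower evaluation values are better."""
    current = frozenset(initial)
    best = evaluate(current)
    improved = True
    while improved:
        improved = False
        # Try replacing one selected feature with one unselected feature.
        for out_f, in_f in itertools.product(sorted(current), features):
            if in_f in current:
                continue
            candidate = (current - {out_f}) | {in_f}
            score = evaluate(candidate)
            if score < best:  # accept the first strict improvement, then rescan
                current, best = candidate, score
                improved = True
                break
    return current


# Hypothetical toy evaluation: number of selected features outside a target subset.
target = frozenset({"x1", "c1"})
features = ["x1", "x2", "c1", "c2"]


def toy_evaluate(subset: FrozenSet[str]) -> float:
    return float(len(subset - target))


print(sorted(exhaustive_search(features, 2, toy_evaluate)))       # ['c1', 'x1']
print(sorted(swap_search(features, ["x2", "c2"], toy_evaluate)))  # ['c1', 'x1']
```

Because each accepted swap strictly lowers the evaluation value, the local search always terminates, but in general it reaches only a local minimum; this is why a good initial subset matters.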

Appendix A.2. Variables for Representing the Proposed Methods

| Variables | Type 1 | Meanings |
|---|---|---|
|  | Calculation | The mixture distance between two feature vectors and . |
|  | Calculation | The squared Euclidean distance between two numerical feature vectors and . |
|  | Calculation | The Hamming distance between two categorical feature vectors and . |
|  | Calculation | The function that checks whether and are the same: it outputs 0 if they are the same and 1 otherwise. This function is used in the Hamming distance . Note that and are the i-th elements of the categorical feature vectors and , respectively. |
|  | Manually | The weight of the Hamming distance . When users hypothesize that categorical features are important for classification, they set a large value. When users set , categorical features no longer affect the distance. The range is . |
|  | Calculation | The minimum reference set (MRS), i.e., the reference set that leads to the correct classification (no error) of all samples using the feature subset . MRS was proposed in the original study []. |
|  | Calculation | The average distance between different classes in the set . Appears in Algorithm 1. |
|  | Calculation | The evaluation function of the feature subset , which considers both the MRS size and the distance . The lower the value, the better the feature space for classification. This is equivalent to . |
|  | Manually | The effect of the distance between different classes on the evaluation function. This parameter is set manually by the users. When they want to emphasize the distance between different classes relative to the MRS size, they set a large value. The range is . |
| b | Manually | The number of iterations of the Bayesian optimization. Appears in Algorithm 2. This parameter is set manually by the users. Setting a large value is expected to improve the accuracy of the obtained solution; the computational cost depends strongly on this value. |
|  | Calculation | The feature subset for classification obtained by Algorithm 2. Its evaluation is expected to be close to that of the optimal solution . |

1 “Manually” means that users of the proposed methods must set the value. “Calculation” means that the value is calculated automatically.
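The table describes the mixture distance as built from a squared Euclidean part on the numerical features and a Hamming part, counted with the indicator function, on the categorical features, with the Hamming part scaled by a user-set weight. Because the symbols were lost from the table, the minimal sketch below assumes the additive form squared Euclidean + w × Hamming, which is consistent with the statement that setting the weight to zero removes the effect of categorical features. The function names and the example values are hypothetical.

```python
from typing import Sequence


def delta(u: object, v: object) -> int:
    """Categorical mismatch indicator: 0 if the two values are the same, 1 otherwise."""
    return 0 if u == v else 1


def hamming(a_cat: Sequence[object], b_cat: Sequence[object]) -> int:
    """Hamming distance: number of categorical positions whose values differ."""
    if len(a_cat) != len(b_cat):
        raise ValueError("categorical vectors must have the same length")
    return sum(delta(u, v) for u, v in zip(a_cat, b_cat))


def squared_euclidean(a_num: Sequence[float], b_num: Sequence[float]) -> float:
    """Squared Euclidean distance between the numerical parts."""
    if len(a_num) != len(b_num):
        raise ValueError("numerical vectors must have the same length")
    return sum((x - z) ** 2 for x, z in zip(a_num, b_num))


def mixture_distance(a_num: Sequence[float], a_cat: Sequence[object],
                     b_num: Sequence[float], b_cat: Sequence[object], w: float) -> float:
    """Assumed additive form: squared Euclidean part plus w times the Hamming part."""
    return squared_euclidean(a_num, b_num) + w * hamming(a_cat, b_cat)


a_num, a_cat = [0.0, 1.0], ["red", "S"]
b_num, b_cat = [1.0, 3.0], ["red", "M"]
print(mixture_distance(a_num, a_cat, b_num, b_cat, w=0.5))  # 5.5
print(mixture_distance(a_num, a_cat, b_num, b_cat, w=0.0))  # 5.0
```

With the weight set to zero, only the numerical part remains, so a larger weight is the lever for emphasizing categorical features.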

References

- Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Gopika, N.; Kowshalaya, M. Correlation Based Feature Selection Algorithm for Machine Learning. In Proceedings of the 3rd International Conference on Communication and Electronics Systems, Coimbatore, Tamil Nadu, India, 15–16 October 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 692–695.

- Yao, R.; Li, J.; Hui, M.; Bai, L.; Wu, Q. Feature Selection Based on Random Forest for Partial Discharges Characteristic Set. IEEE Access 2020, 8, 159151–159161.

- Yun, C.; Yang, J. Experimental comparison of feature subset selection methods. In Proceedings of the Seventh IEEE International Conference on Data Mining Workshops (ICDMW 2007), Omaha, NE, USA, 28–31 October 2007; pp. 367–372.

- Lin, W.C. Experimental Study of Information Measure and Inter-Intra Class Distance Ratios on Feature Selection and Orderings. IEEE Trans. Syst. Man Cybern. 1973, 3, 172–181.

- Huang, C.L.; Wang, C.J. A GA-based feature selection and parameters optimization for support vector machines. Expert Syst. Appl. 2006, 31, 231–240.

- Stefano, C.D.; Fontanella, F.; Marrocco, C.; Freca, A.S.D. A GA-based feature selection approach with an application to handwritten character recognition. Pattern Recognit. Lett. 2014, 35, 130–141.

- Dahiya, S.; Handa, S.S.; Singh, N.P. A feature selection enabled hybrid-bagging algorithm for credit risk evaluation. Expert Syst. 2017, 34, e12217.

- Li, G.Z.; Meng, H.H.; Lu, W.C.; Yang, J.Y.; Yang, M.Q. Asymmetric bagging and feature selection for activities prediction of drug molecules. BMC Bioinform. 2008, 9, S7.

- Loh, W.Y. Fifty Years of Classification and Regression Trees. Int. Stat. Rev. 2014, 82, 329–348.

- Loh, W.Y. Classification and regression trees. Data Min. Knowl. Discov. 2011, 1, 14–23.

- Roth, V. The generalized LASSO. IEEE Trans. Neural Networks 2004, 15, 16–28.

- Osborne, M.R.; Presnell, B.; Turlach, B.A. On the LASSO and its Dual. J. Comput. Graph. Stat. 2000, 9, 319–337.

- Bach, F.R. Bolasso: Model Consistent Lasso Estimation through the Bootstrap. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008.

- Palma-Mendoza, R.J.; Rodriguez, D.; de Marcos, L. Distributed ReliefF-based feature selection in Spark. Knowl. Inf. Syst. 2018, 57, 1–20.

- Huang, Y.; McCullagh, P.J.; Black, N.D. An optimization of ReliefF for classification in large datasets. Data Knowl. Eng. 2009, 68, 1348–1356.

- Too, J.; Abdullah, A.R. Binary atom search optimisation approaches for feature selection. Connect. Sci. 2020, 32, 406–430.

- Chen, X.W.; Jeong, J.C. Minimum reference set based feature selection for small sample classifications. ACM Int. Conf. Proc. Ser. 2007, 227, 153–160.

- Mori, M.; Omae, Y.; Akiduki, T.; Takahashi, H. Consideration of Human Motion’s Individual Differences-Based Feature Space Evaluation Function for Anomaly Detection. Int. J. Innov. Comput. Inf. Control. 2019, 15, 783–791.

- Zhao, Y.; He, L.; Xie, Q.; Li, G.; Liu, B.; Wang, J.; Zhang, X.; Zhang, X.; Luo, L.; Li, K.; et al. A Novel Classification Method for Syndrome Differentiation of Patients with AIDS. Evid.-Based Complement. Altern. Med. 2015, 2015, 936290.

- Mori, M.; Flores, R.G.; Suzuki, Y.; Nukazawa, K.; Hiraoka, T.; Nonaka, H. Prediction of Microcystis Occurrences and Analysis Using Machine Learning in High-Dimension, Low-Sample-Size and Imbalanced Water Quality Data. Harmful Algae 2022, 117, 102273.

- Zhao, Y.; Zhao, Y.; Zhu, Z.; Pan, J.S. MRS-MIL: Minimum reference set based multiple instance learning for automatic image annotation. In Proceedings of the International Conference on Image Processing, San Diego, CA, USA, 12–15 October 2008; pp. 2160–2163.

- Cerda, P.; Varoquaux, G. Encoding High-Cardinality String Categorical Variables. IEEE Trans. Knowl. Data Eng. 2022, 34, 1164–1176.

- Beliakov, G.; Li, G. Improving the speed and stability of the k-nearest neighbors method. Pattern Recognit. Lett. 2012, 33, 1296–1301.

- Bentley, J.L. Multidimensional binary search trees used for associative searching. Commun. ACM 1975, 18, 509–517.

- Ram, P.; Sinha, K. Revisiting kd-tree for nearest neighbor search. In Proceedings of the ACM International Conference on Knowledge Discovery and Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1378–1388.

- Ekinci, E.; Omurca, S.I.; Acun, N. A comparative study on machine learning techniques using Titanic dataset. In Proceedings of the 7th International Conference on Advanced Technologies, Hammamet, Tunisia, 26–28 December 2018; pp. 411–416.

- Kakde, Y.; Agrawal, S. Predicting survival on Titanic by applying exploratory data analytics and machine learning techniques. Int. J. Comput. Appl. 2018, 179, 32–38.

- Huang, Z. Extensions to the k-Means Algorithm for Clustering Large Data Sets with Categorical Values. Data Min. Knowl. Discov. 1998, 2, 283–304.

- Wen, T.; Zhang, Z. Effective and extensible feature extraction method using genetic algorithm-based frequency-domain feature search for epileptic EEG multiclassification. Medicine 2017, 96.

- Song, J.; Zhu, A.; Tu, Y.; Wang, Y.; Arif, M.A.; Shen, H.; Shen, Z.; Zhang, X.; Cao, G. Human Body Mixed Motion Pattern Recognition Method Based on Multi-Source Feature Parameter Fusion. Sensors 2020, 20, 537.

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. Adv. Neural Inf. Process. Syst. 2011, 42.

- Optuna: A Hyperparameter Optimization Framework. Available online: https://optuna.readthedocs.io/en/stable/ (accessed on 1 November 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).