1. Introduction

Ring-shaped structural components are widely used in critical fields such as nuclear power, aerospace, and pressure vessels, where weld quality directly affects overall structural safety and service life [1]. As high-end equipment manufacturing demands increasingly stringent welding quality and automation levels, welding robots are progressively replacing manual welding, particularly in scenarios with complex trajectories such as spatial closed curves. In such applications, precise identification and trajectory extraction of closed-curve welds are the primary prerequisite for high-quality robotic welding and a crucial foundation for subsequent path planning and adaptive control [2].

However, traditional weld trajectory generation methods, whether manual teaching or offline programming before welding, produce fixed initial trajectories [3]. Their fundamental limitation is that they cannot adapt to dynamic changes during welding, such as thermal deformation and stress release caused by high temperatures [4]. Once welding begins, the preset path often fails to follow the actual positional changes of the weld, degrading weld quality. Truly high-precision automated welding therefore requires a shift from “pre-welding planning” to “guidance during welding”, that is, perceiving the true shape of the weld in real time during the welding process.

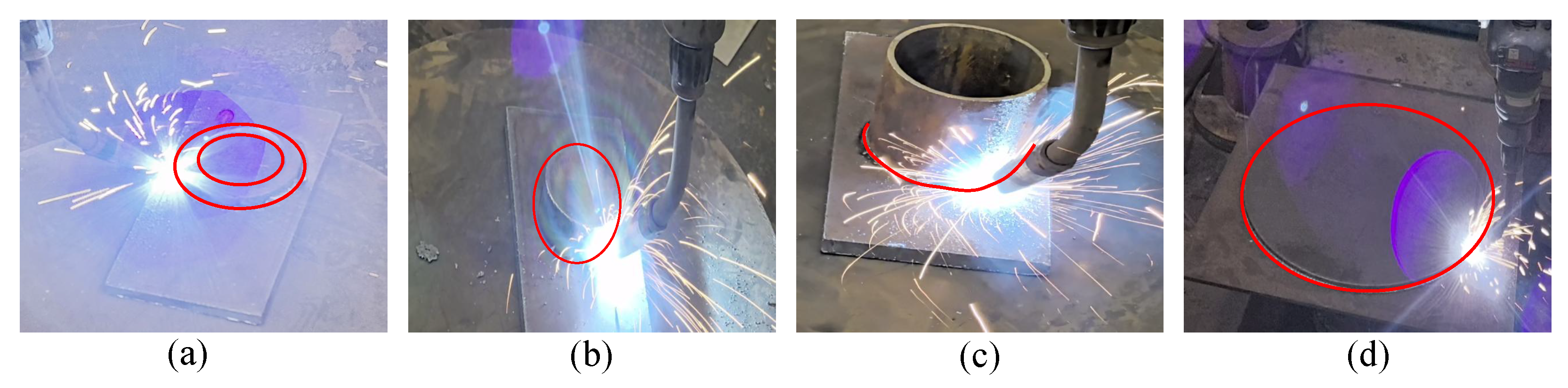

In practice, when using arc welding processes such as GMAW, the online sensing system faces severe challenges from multiple dynamics [

5,

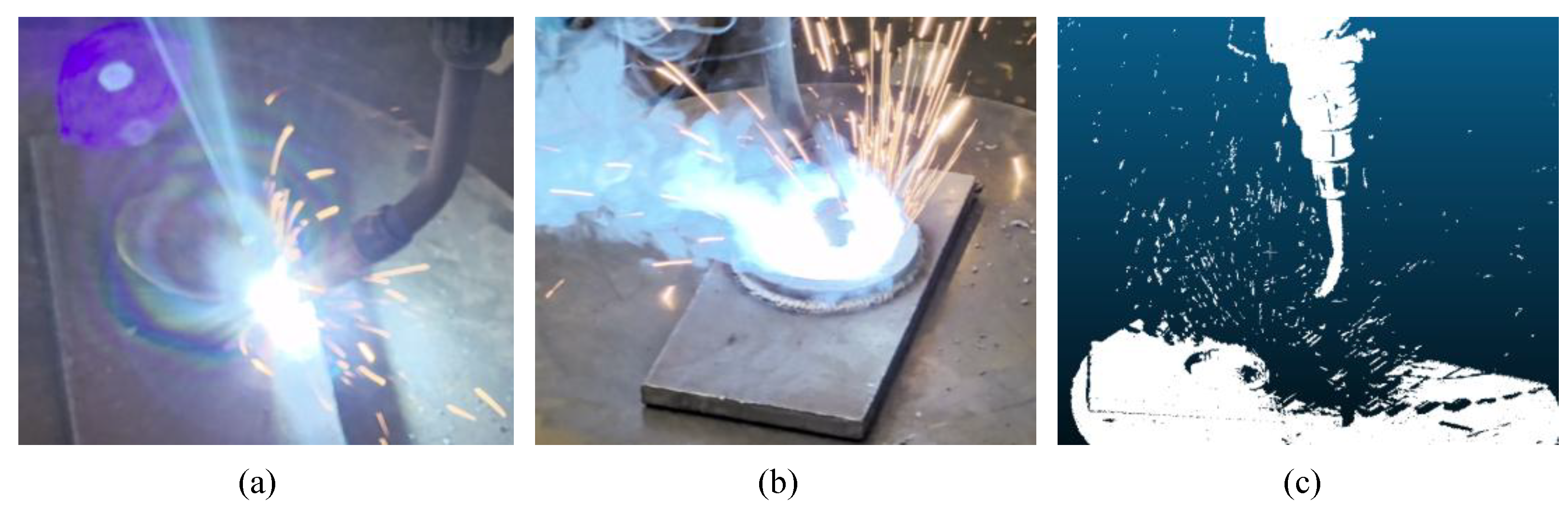

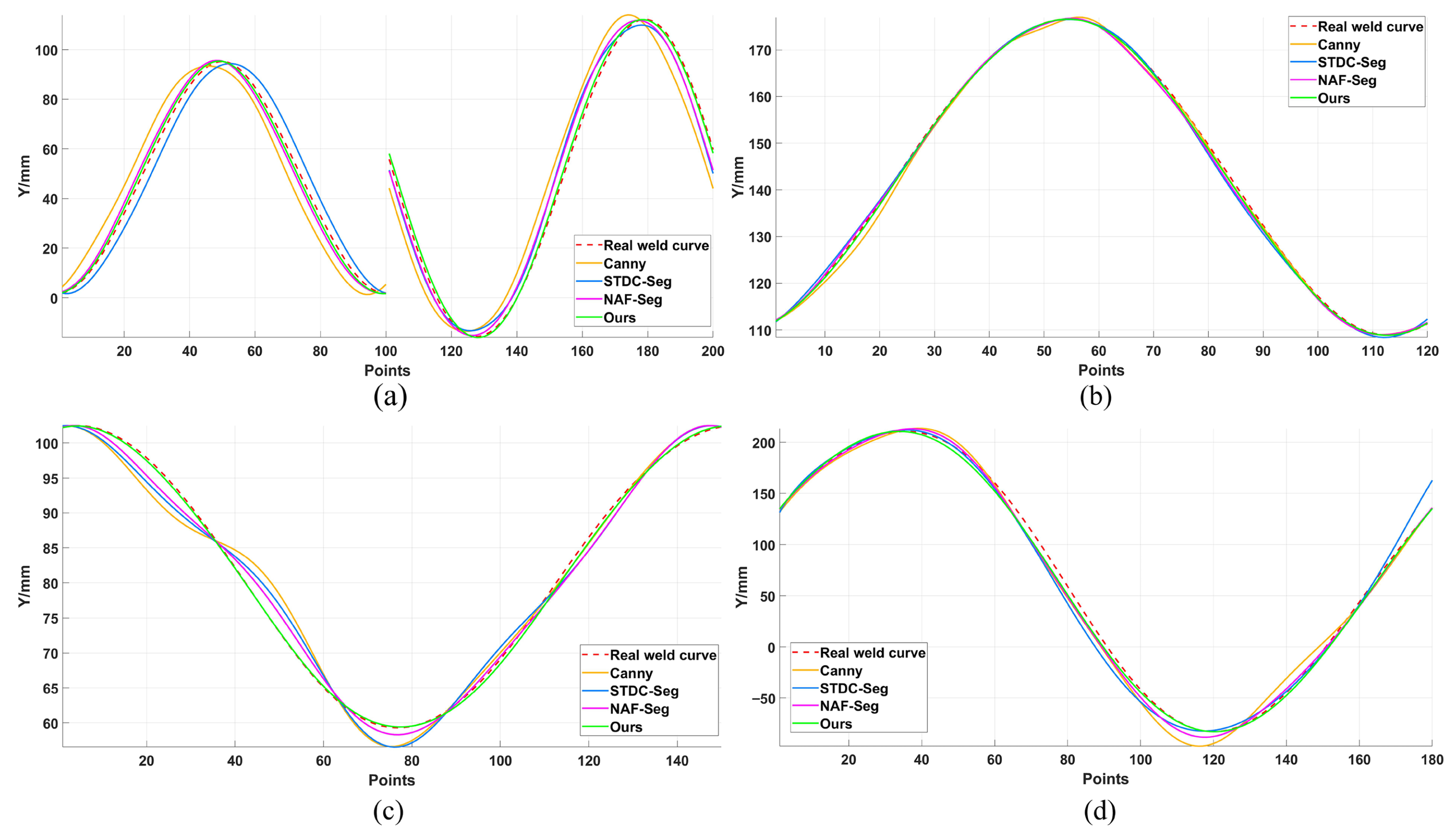

6]. As shown in

Figure 1, intense arc light causes local overexposure and edge blurring in RGB images [

7]. Dense fumes not only obscure visual information but also severely disrupt 3D point cloud acquisition, leading to missing points and noise. These interferences collectively weaken weld seam visual features and cause spatial information loss, significantly limiting the accuracy and robustness of the “weld-in-progress guidance” mode.

To achieve robotic automated welding, precise and robust weld seam trajectory extraction is a core prerequisite. In recent years, scholars worldwide have conducted extensive research on this issue. Based on the type of sensor data relied upon, mainstream approaches can be categorized into three technical pathways: methods based on 2D images, methods based on 3D point clouds, and methods based on multimodal fusion.

In robotic welding, 2D vision-based seam perception is one of the most extensively researched and mature approaches. Early studies relied primarily on conventional image processing algorithms, which located the weld seam by analyzing hand-crafted features such as edges and textures in the weld zone [8,9]. However, such methods are extremely sensitive to the strong arc light, metal reflections, and fumes common in the welding process, which seriously limits their robustness and generalization. To overcome these limitations, deep learning techniques centered on convolutional neural networks (CNNs) emerged and rapidly became the dominant paradigm. For semantic segmentation, U-Net and its variants have been widely adopted because of their efficient encoder–decoder architecture [10]. Architectures such as DeepLabv3+ achieve high-precision detection by using dilated (atrous) convolutions to capture multi-scale contextual information [11]. To distinguish individual weld seam instances, two-stage instance segmentation networks such as Mask R-CNN are employed for more refined contour extraction [12]. To meet the real-time requirements of robotic welding, single-stage instance segmentation methods such as YOLACT and YOLO-seg [13,14] achieve faster inference while maintaining high accuracy.

To address the lack of depth information in pure 2D vision, weld seam extraction methods based on 3D point clouds have emerged. This technology directly captures the 3D geometry of the workpiece surface, is inherently robust to lighting variations, and can accurately reconstruct the spatial pose of weld seams; consequently, it has attracted significant attention in automated welding. Existing 3D methods center on identifying the distinctive geometric features of weld seams and can be divided into traditional and deep learning approaches. Traditional approaches rely primarily on prior knowledge, such as the local geometric properties of point clouds. For instance, Zhang et al. [15] proposed a feature descriptor based on local oriented bounding boxes (LOBBs), combined with K-means clustering, to robustly extract general geometric features such as workpiece edges and corners, providing a basis for preliminary weld region localization. Fang et al. [16] employed a stereo vision system that enhances imaging contrast in narrow weld regions through gray-level expectation optimization and then reconstructed high-precision weld point clouds by triangulation. Deep learning-based methods, by contrast, learn abstract geometric features of welds from data to handle more complex scenarios. For instance, Wang et al. [17] proposed the WeldNet architecture, which performs end-to-end circular weld detection by voxelizing point clouds and applying sparse convolutions with a region proposal network (RPN), maintaining high recognition rates even in highly noisy environments. Kim et al. [18] designed a multi-view fusion framework to address occlusion: by registering local weld point clouds captured from different perspectives, they reconstruct complete, continuous weld trajectories. However, although 3D point clouds precisely represent spatial structure, they remain susceptible to noise, occlusion, and sparsity in highly disturbed environments, which limits their ability to capture fine detail.

To overcome the inherent limitations of single-modal perception and exploit both the rich semantic information of 2D images and the precise spatial geometry of 3D point clouds, multimodal fusion has emerged as a critical pathway toward robust perception in complex environments. In domains such as autonomous driving, fusing LiDAR and camera data has become a mature solution for enhancing environmental understanding [19,20]. Multimodal fusion shows similar potential in welding. For instance, to handle the complex surfaces of irregularly structured workpieces, Zhang et al. [21] proposed a weld seam extraction method that tightly integrates semantic segmentation with point cloud features to extract weld seam feature points precisely and then reconstructs the seam by curve fitting, achieving high-precision extraction even on complex geometries. Similarly, Wu et al. [22] developed an extraction algorithm combining 2D depth images and 3D point clouds for automated bicycle frame welding. Jiang et al. [23] introduced SIMNet, a multimodal fusion network that integrates molten pool images with welding acoustic signals to predict weld back width quantitatively in real time.

Despite these advancements, most existing research has failed to systematically address the extreme challenges posed by the concurrent presence of arc light and fumes in welding processes like GMAW. In such scenarios, 2D images suffer from information distortion due to arc light-induced overexposure, while 3D point clouds are rendered incomplete by fume occlusion. The data quality from both modalities degrades simultaneously and dynamically across different spatial regions, which places exceptionally high demands on the robustness of any fusion algorithm.

To address the aforementioned challenges, this paper proposes a multimodal fusion method for weld seam extraction under arc light and fume interference, enabling high-precision, fully automated trajectory generation without reliance on manual teach-in programming. The primary contributions of this work include the following:

A collaborative fusion module for weld seam edge feature extraction (WSEF) is proposed. Its multi-task learning framework couples arc light suppression with semantic segmentation, so that the workpiece and substrate masks are accurately segmented from disturbed images and pixel-level weld seam edges are robustly located from the intersection region of the masks.

A local point cloud feature extraction module guided by image–point cloud mapping (LPCF) is designed. It uses 2D semantic masks to guide and constrain the processing region of the 3D point cloud and, combined with an enhanced PointNet++ network, makes the feature representation more robust to point cloud noise and occlusion.

A cross-modal attention-driven multimodal feature fusion module (MFF) is constructed. Its cross-modal attention mechanism adaptively fuses 2D contour features with 3D point cloud structural features, generating a spatially consistent, detail-rich fused point cloud representation.

A hierarchical trajectory reconstruction and smoothing method is proposed. Through unsupervised clustering, principal component analysis (PCA)-based ordering, and Fourier series fitting, it reconstructs the topology of discrete point sets and generates continuous, smooth weld trajectories that robots can execute directly.

2. Methodology

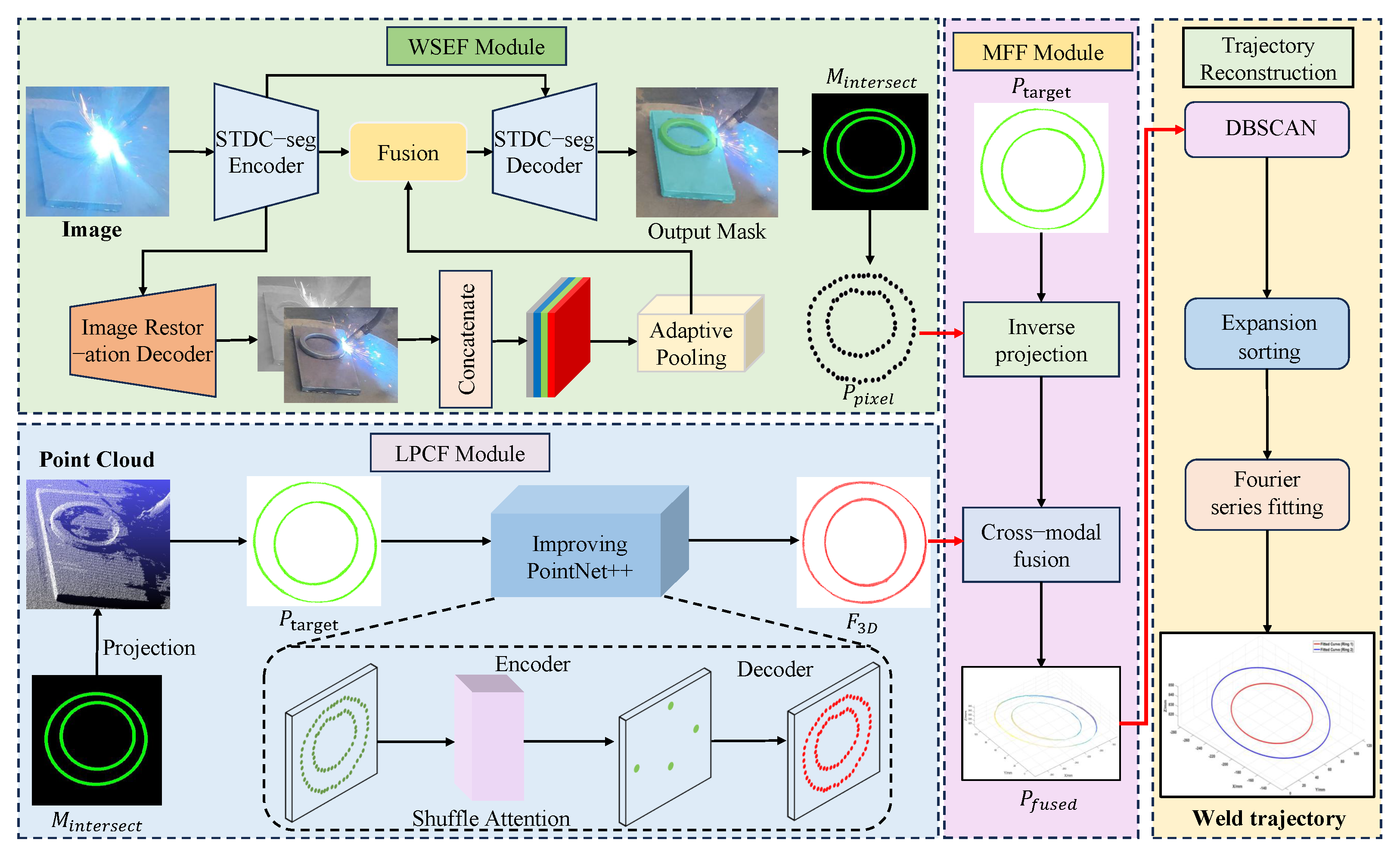

To achieve high-precision, robust extraction of circular closed-curve welds in arc light and fume environments, this paper proposes a generalized multimodal fusion perception and reconstruction method, taking a typical circular workpiece as the starting point. By integrating 2D visual detail with 3D structural information, the method overcomes perception uncertainties caused by welding disturbances such as arc light and fumes and provides an end-to-end process from raw sensor data to robot-executable trajectories. As shown in Figure 2, the overall framework comprises four core components: the WSEF module, the LPCF module, the MFF module, and the weld seam trajectory reconstruction and smoothing method. First, the WSEF module performs instance segmentation on the workpiece and substrate to extract precise 2D weld seam contours. Next, the LPCF module uses the 2D mask to locate the weld region within the raw point cloud and applies an enhanced PointNet++ architecture to extract robust 3D local features. The MFF module then fuses the 2D edges and 3D features through a cross-modal attention mechanism, forming a unified, rich spatial feature representation. Finally, the trajectory is reconstructed as a smooth, high-order continuous curve by Fourier series fitting, yielding a weld trajectory directly usable for robotic motion planning. The following subsections detail the structure and implementation of each module.

2.1. Weld Seam Edge Feature Extraction Module for Collaborative Fusion Networks

During welding, intense arc light is the primary cause of degraded RGB image quality, producing localized overexposure and blurred features in the edge regions of circular workpiece welds. Diffuse fumes additionally reduce overall image contrast, further complicating edge extraction. To address these challenges, this paper proposes a WSEF module. Its guiding idea is a multi-task learning framework that couples the arc light removal task with the semantic segmentation task so that each enhances the other.

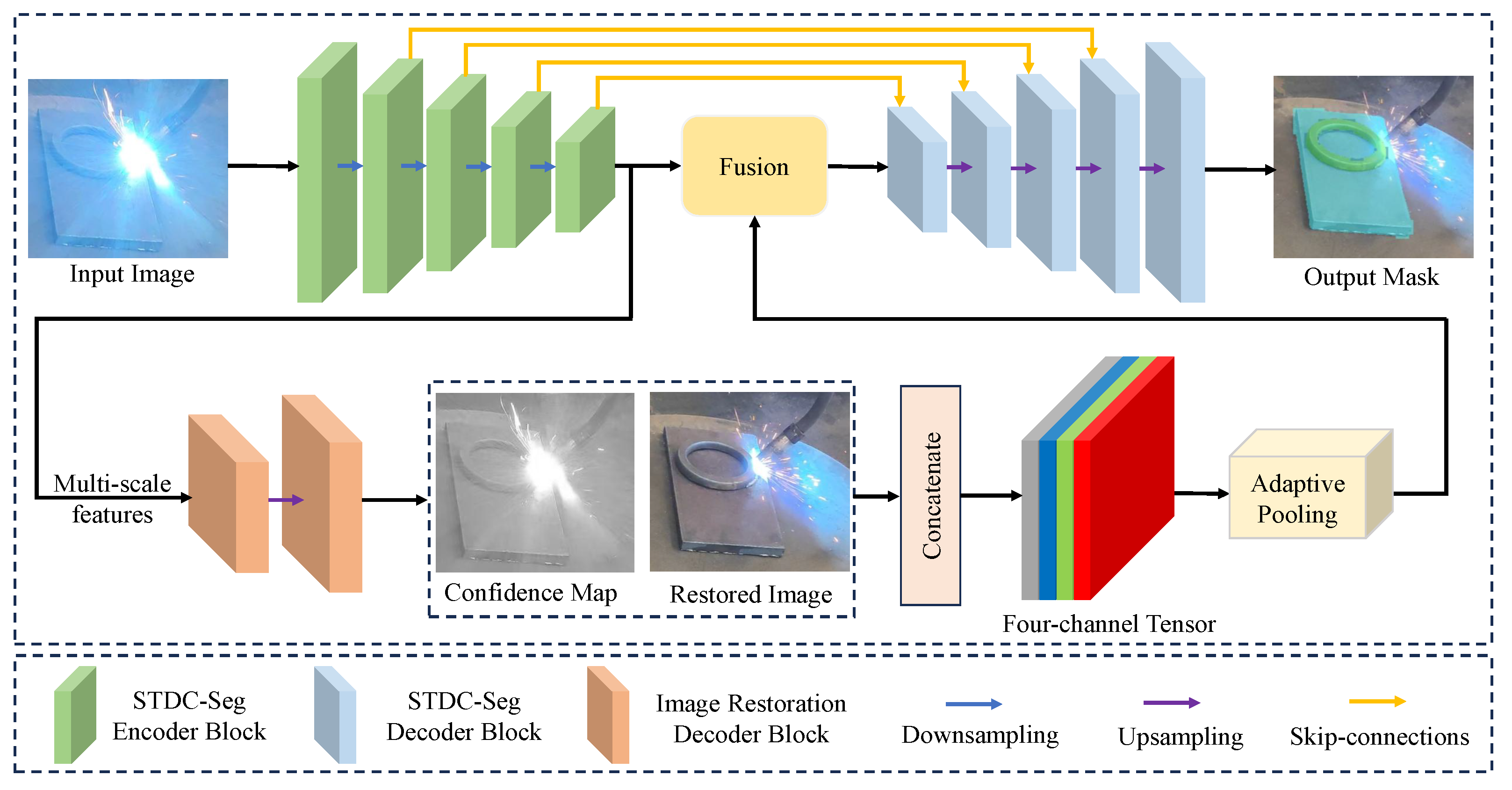

The overall architecture, shown in Figure 3, comprises a shared encoder backbone, an arc light suppression branch, and a segmentation branch. The shared encoder backbone adopts the STDC-Seg network [24], which offers a good balance between speed and accuracy. The core principle is that, to segment with high precision, the network must first remove overexposure and restore the underlying structural details. The segmentation task, in turn, provides a strong supervisory signal that forces the restoration branch to produce feature representations that are not merely visually plausible but also useful for accurately locating weld seam edges.

The implementation of this principle relies on the network structure and a joint loss function. The arc light removal branch, inspired by AOD-Net [25], outputs two results: a restored three-channel RGB image and a single-channel arc confidence map. The map is produced by a lightweight convolutional head that is trained implicitly through the joint optimization of the segmentation and restoration tasks. It serves as a spatial attention prior for the segmentation network by explicitly indicating which image regions are unreliable because of overexposure.
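AOD-Net, which the arc light removal branch draws on, rewrites the atmospheric scattering model so that a single predicted map $K(x)$ recovers the clean image as $J(x) = K(x)I(x) - K(x) + b$, with a constant bias $b$ (1 by default). The NumPy sketch below applies that output equation to a given $K$ and, purely as a hypothetical illustration of a confidence map acting as a spatial attention prior, scales feature maps by $(1 - c)$ so that pixels flagged as overexposed contribute less. The function names and the gating rule are assumptions, not the authors' implementation; in the actual network both maps would be predicted by convolutional heads.

```python
import numpy as np


def aod_style_restore(image, k_map, b=1.0):
    """Apply the AOD-Net output equation J = K * I - K + b.

    image: (H, W, 3) input scaled to [0, 1].
    k_map: (H, W, 1) or (H, W, 3) map that a network head would predict
           (supplied directly here; hypothetical input).
    """
    restored = k_map * image - k_map + b
    return np.clip(restored, 0.0, 1.0)  # keep a valid intensity range


def gate_features(features, arc_confidence):
    """Hypothetical gating: scale features by (1 - c) at each pixel.

    features: (C, H, W) feature maps.
    arc_confidence: (H, W) values in [0, 1]; 1 means "overexposed, unreliable".
    """
    return features * (1.0 - arc_confidence)[np.newaxis, :, :]
```

With $K \equiv 1$ the equation returns the input unchanged, which is a convenient sanity check when wiring such a head into a network.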

This collaborative mechanism is optimized through end-to-end joint training. The overall objective function of the WSEF module, $\mathcal{L}_{\mathrm{WSEF}}$, is a weighted sum of a segmentation loss $\mathcal{L}_{\mathrm{seg}}$ and an image restoration loss $\mathcal{L}_{\mathrm{res}}$:

$$\mathcal{L}_{\mathrm{WSEF}} = \lambda_{\mathrm{seg}}\,\mathcal{L}_{\mathrm{seg}} + \lambda_{\mathrm{res}}\,\mathcal{L}_{\mathrm{res}}$$

where $\lambda_{\mathrm{seg}}$ and $\lambda_{\mathrm{res}}$ are weighting coefficients that balance the two tasks. The segmentation loss $\mathcal{L}_{\mathrm{seg}}$ is the standard cross-entropy loss for multi-class pixel classification. The restoration loss $\mathcal{L}_{\mathrm{res}}$ is the L1 loss, which is effective at preserving edge details in the restored image. Because a clear restored image (small $\mathcal{L}_{\mathrm{res}}$) is a prerequisite for an accurate segmentation result (small $\mathcal{L}_{\mathrm{seg}}$), the joint objective drives the network to remove overexposure.
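As a worked illustration of the joint objective, the NumPy sketch below computes the mean pixel-wise cross-entropy, the mean L1 restoration error, and their weighted sum. The weights `lam_seg` and `lam_res` default to 1.0 only as placeholders, since their values are not given here, and a three-class labeling (e.g., background, annular workpiece, substrate) is an assumption.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean pixel-wise cross-entropy. logits: (N, K) class scores; labels: (N,) ints."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def l1_loss(restored, target):
    """Mean absolute error between the restored image and its reference."""
    return np.abs(restored - target).mean()


def wsef_loss(logits, labels, restored, target, lam_seg=1.0, lam_res=1.0):
    """lam_seg * L_seg + lam_res * L_res (weights are placeholder values)."""
    return lam_seg * cross_entropy(logits, labels) + lam_res * l1_loss(restored, target)
```

For uniform logits over three classes the cross-entropy is $\ln 3 \approx 1.099$, so with an L1 error of 0.1 the weighted sum is about 1.199.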

After obtaining high-precision masks of the annular workpiece and the medium-thickness plate substrate, this paper extracts pixel-level edge point sets from the mask intersection region to locate the weld seam at pixel level. Specifically, from the substrate mask $M_s$ and the annular workpiece mask $M_w$, the mask intersection region $M_I$ is extracted:

$$M_I = (M_s \oplus B) \cap (M_w \oplus B)$$

where $\oplus$ denotes morphological dilation and $B$ is a 3 × 3 rectangular structuring element used to compensate for segmentation boundary errors.

A pixel in the mask intersection region $M_I$ is classified as an edge pixel when both $M_s$ and $M_w$ labels are present within its 3 × 3 neighborhood. The final output is the pixel-level edge point set $E = \{(u_i, v_i)\}$.
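The mask-intersection and edge-pixel steps can be sketched with plain NumPy. The sketch assumes $M_I = (M_s \oplus B) \cap (M_w \oplus B)$, i.e., both masks are dilated with the same square element; `dilate` and `weld_edge_pixels` are hypothetical helpers, and `radius=1` corresponds to the 3 × 3 element in the text.

```python
import numpy as np


def dilate(mask, radius=1):
    """Binary dilation with a (2r+1) x (2r+1) square element; outside pixels count as 0."""
    h, w = mask.shape
    padded = np.pad(mask.astype(bool), radius)
    out = np.zeros((h, w), dtype=bool)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def weld_edge_pixels(m_sub, m_work, radius=1):
    """Return M_I and the (u, v) edge pixels.

    m_sub, m_work: boolean substrate and workpiece masks of equal shape.
    radius: half-size of the dilation element B (radius=1 -> 3 x 3).
    """
    m_inter = dilate(m_sub, radius) & dilate(m_work, radius)
    # "label present in the 3x3 neighbourhood" is exactly a 3x3 dilation of that label
    edge = m_inter & dilate(m_sub, 1) & dilate(m_work, 1)
    v, u = np.nonzero(edge)  # row index = v, column index = u
    return m_inter, np.stack([u, v], axis=1)
```

On a toy 6 × 6 image split into a substrate half and a workpiece half, the 3 × 3 case returns the two columns on either side of the boundary. With a 3 × 3 element in both steps, the neighborhood test keeps every pixel of $M_I$; it only thins the band when a larger element is used, e.g. `radius=2`.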

2.2. Image–Point Cloud Mapping-Guided Local Point Cloud Feature Extraction Module

When 3D point clouds are acquired, welding fumes are the primary source of interference, causing significant data loss and outliers. At the same time, intense arc light can produce high reflectivity on certain material surfaces, disrupting depth camera measurements and introducing abnormal depth values. To overcome these 3D perception challenges, this paper proposes an LPCF module guided by image-to-point cloud mapping. Its core strategy uses the precise, interference-resistant 2D weld edge mask obtained in Section 2.1 to guide target region localization in 3D space. This cross-modal guidance filters out background noise and outliers caused by suspended fumes. Furthermore, the module’s depth value screening mechanism (retaining the point with the minimum depth value) not only eliminates suspended dust particles but also excludes anomalous depth points above the workpiece surface caused by arc light reflections. This significantly improves the purity and geometric integrity of the target point cloud.

First, the 2D image and 3D space are aligned using the camera’s intrinsic and extrinsic parameters, based on the weld edge mask $M_E$ generated in Section 2.1 and the original 3D point cloud $P = \{P_i\}$ of the workpiece. Using the intrinsic matrix $K$ and the extrinsic parameters $[R \mid t]$, each point $P_i = (X_i, Y_i, Z_i)$ is projected onto the image plane:

$$d_i \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix} = K \,[R \mid t] \begin{bmatrix} X_i \\ Y_i \\ Z_i \\ 1 \end{bmatrix}$$

where $(u_i, v_i)$ are the pixel coordinates of the projected point and $d_i$ is its depth value.

If a projected point $(u_i, v_i)$ falls within the valid region of $M_E$, the corresponding 3D point $P_i$ is marked as a weld candidate point, forming the candidate point set $P_c$:

$$P_c = \{P_i \in P \mid M_E(u_i, v_i) = 1\}$$

To eliminate mapping ambiguities caused by fume particle occlusion, in which multiple 3D points (genuine workpiece points and fume particle points) project onto the same pixel coordinate, the module incorporates a depth value filtering mechanism: for each pixel, only the point with the smallest depth value is retained. Although unsuitable for complex geometries with deep grooves, this strategy is effective for the surface-level welds in this study, because fume particles typically float between the workpiece and the camera, resulting in larger depth values. This step yields the final target point cloud, filtered for fume interference, denoted as $P_t$:

$$P_t = \left\{P_i \in P_c \;\middle|\; d_i = \min_{P_j \in P_c,\ (u_j, v_j) = (u_i, v_i)} d_j \right\}$$
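The projection, mask test, and per-pixel depth filter can be combined in a short NumPy sketch. It assumes points are given in the world frame, that $[R \mid t]$ maps world to camera coordinates, that pixels are indexed as `mask[v, u]` after rounding, and it takes the camera-frame $Z$ coordinate as the depth value; the function name is hypothetical. The keep-the-minimum rule is applied literally as stated in the text, so which spurious points it removes in practice depends on how the depth value is defined in the actual system.

```python
import numpy as np


def select_target_points(points, K, R, t, edge_mask):
    """Return indices of points that project into the edge mask, one per pixel.

    points: (N, 3) world coordinates; K: (3, 3) intrinsics;
    R: (3, 3), t: (3,) world-to-camera extrinsics; edge_mask: (H, W) bool.
    """
    cam = points @ R.T + t                   # world -> camera frame
    depth = cam[:, 2]                        # depth value taken as camera-frame Z (assumption)
    in_front = depth > 1e-9                  # ignore points behind the camera
    safe = np.where(in_front, depth, 1.0)    # avoid division by zero
    pix = cam @ K.T                          # homogeneous pixel coordinates times depth
    u = np.round(pix[:, 0] / safe).astype(int)
    v = np.round(pix[:, 1] / safe).astype(int)
    h, w = edge_mask.shape
    inside = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    cand = np.flatnonzero(inside)
    cand = cand[edge_mask[v[cand], u[cand]]]  # candidate set P_c

    best = {}                                # pixel -> index of smallest-depth point
    for i in cand:
        key = (int(u[i]), int(v[i]))
        if key not in best or depth[i] < depth[best[key]]:
            best[key] = i
    return np.array(sorted(best.values()), dtype=int)
```

In a toy setup with two points on the same pixel ray, the one with the smaller depth value is kept, and points projecting outside the mask or lying behind the camera are dropped.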

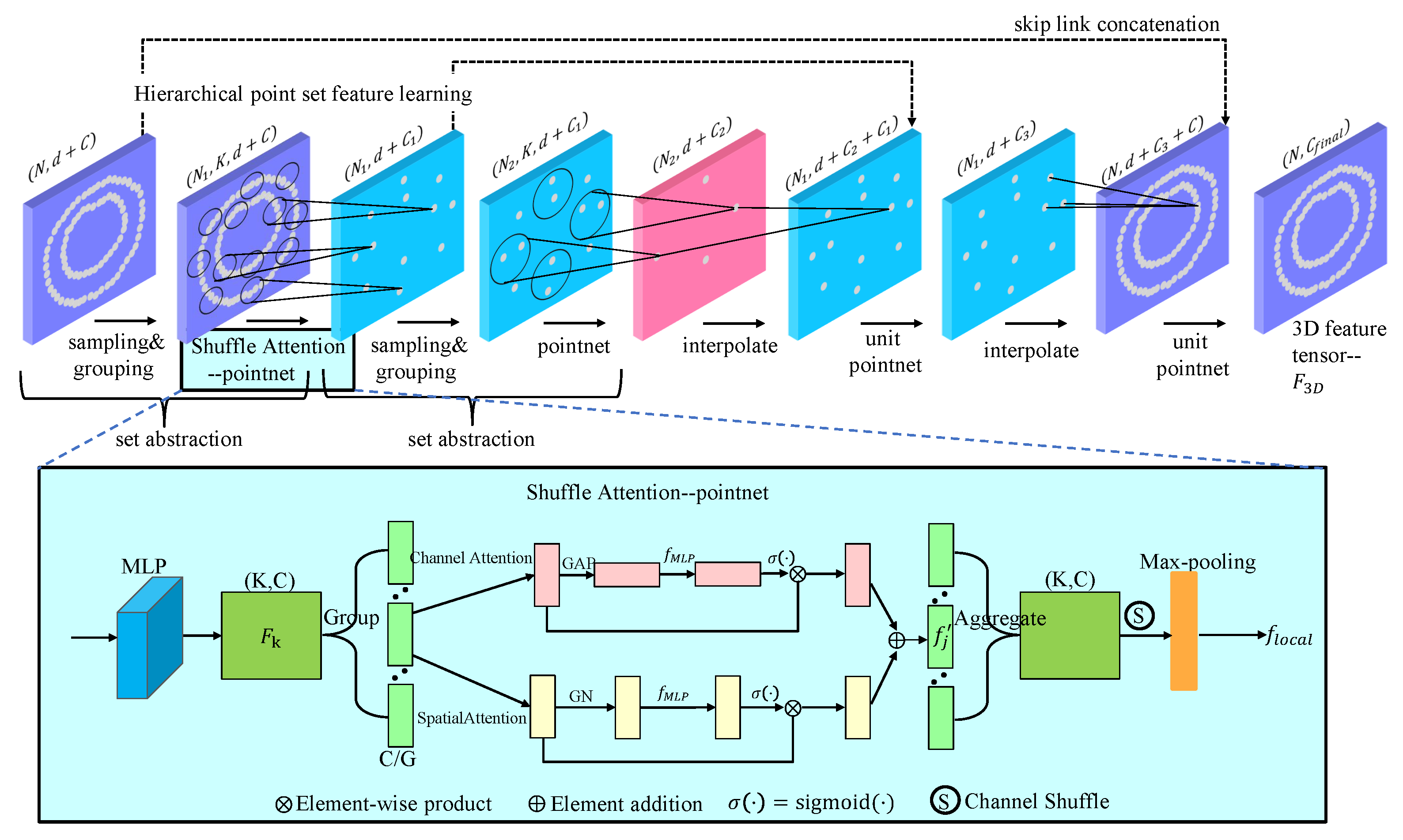

After the target point cloud is obtained, LPCF extracts its local features with an improved hierarchical PointNet++ architecture [26]. The original PointNet++ aggregates neighborhood features by max pooling, which treats all points within a neighborhood equally and is therefore highly sensitive to noise points left by dust residue. To make feature extraction more robust, this paper embeds a Shuffle Attention (SA) module [27] into the first Set Abstraction layer of PointNet++. This attention mechanism adaptively weights the points within each neighborhood, so the network focuses on critical geometric features while suppressing the influence of outlier noise points, which is crucial for sparse, uneven point clouds contaminated by fume residue. The specific structure is illustrated in Figure 4.

For a local neighborhood point set defined by farthest point sampling (FPS) and ball query, the feature encoding process is as follows.

First, a shared-weight multi-layer perceptron (MLP), denoted as $h(\cdot)$, lifts the raw features (e.g., coordinates and normals) of each point within the neighborhood into a higher-dimensional feature space, yielding the feature set $F = \{f_j\}$. This MLP consists of three fully connected layers with output dimensions of 64, 128, and 128, each followed by batch normalization and a ReLU activation. The architecture is adapted from the original PointNet++ framework and was confirmed in preliminary experiments to be effective for extracting geometric features from the weld seam data. This MLP is not trained in isolation: it is an integral component of the LPCF module and is trained end-to-end with the entire model. The optimizer and parameters used for overall training are detailed in Section 3.4.
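The neighborhood construction and shared MLP can be sketched as follows. Farthest point sampling and ball query follow the usual PointNet++ scheme (greedy FPS; the first `k` points within the radius, padded by repeating the first one); the MLP uses the 64-128-128 widths given above, with batch normalization simplified to per-batch statistics without learned scale and shift. Weight initialization, radius, and `k` are illustrative assumptions.

```python
import numpy as np


def farthest_point_sampling(xyz, n_samples, start=0):
    """Greedy FPS: repeatedly pick the point farthest from all chosen centers."""
    chosen = [start]
    dist = np.linalg.norm(xyz - xyz[start], axis=1)  # distance to nearest chosen center
    for _ in range(1, n_samples):
        nxt = int(np.argmax(dist))
        chosen.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(xyz - xyz[nxt], axis=1))
    return np.array(chosen)


def ball_query(xyz, centers, radius, k):
    """Indices of up to k points within `radius` of each center, shape (S, k).

    As in the common PointNet++ implementation, the first k hits in index order
    are kept and short groups are padded with the first hit. Centers are assumed
    to be points of `xyz`, so every group contains at least its center.
    """
    groups = []
    for c in centers:
        hits = np.flatnonzero(np.linalg.norm(xyz - c, axis=1) <= radius)[:k]
        pad = np.full(k - len(hits), hits[0])
        groups.append(np.concatenate([hits, pad]))
    return np.stack(groups)


def shared_mlp(features, weights, eps=1e-5):
    """Apply the same Linear -> BatchNorm -> ReLU stack to every point.

    features: (M, d_in); weights: list of (W, b) pairs, e.g. widths 64, 128, 128.
    BatchNorm is simplified to batch statistics without learned scale/shift.
    """
    x = features
    for W, b in weights:
        x = x @ W + b
        x = (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)
        x = np.maximum(x, 0.0)
    return x
```

With 200 random points, 16 FPS centers, `k = 32`, and per-point inputs of center-relative coordinates plus normals (6 values), the MLP output reshapes to (16, 32, 128): one 128-dimensional feature per neighbor, ready for the attention and pooling step.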

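Subsequently, the feature set $F$ is input into the Shuffle Attention module, which first divides the $C$-dimensional feature channels into $G$ groups. For each group, the channel attention weights $a_c^{g}$ and spatial attention weights $a_s^{g}$ are computed in parallel:

$$a_c^{g} = \sigma\big(W_1 \cdot \mathrm{GAP}(X_g) + b_1\big), \qquad a_s^{g} = \sigma\big(W_2 \cdot \mathrm{GN}(X_g) + b_2\big)$$

where $X_g$ denotes the features of group $g$, GAP represents global average pooling, GN stands for group normalization, $W_1$, $b_1$, $W_2$, $b_2$ are learnable scale and shift parameters, and $\sigma$ is the sigmoid activation function. After attention weighting, the updated feature $X'_g$ is

$$X'_g = (X_g \otimes a_c^{g}) \oplus (X_g \otimes a_s^{g})$$

where $\otimes$ denotes element-wise multiplication and $\oplus$ denotes element-wise addition.

After the attention-weighted features of all groups are computed, a channel shuffle operation is performed to allow information to flow across groups. Finally, the weighted features within the neighborhood are aggregated by max pooling to generate the final robust feature descriptor $f_{\mathrm{local}}$ for this local region:

$$f_{\mathrm{local}} = \max_{j \in \mathcal{N}} \tilde{x}_j$$

where $\tilde{x}_j$ is the shuffled, attention-weighted feature of point $j$, $\mathcal{N}$ is the set of points in the neighborhood, and the maximum is taken channel-wise.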
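A NumPy sketch of the group-wise attention, channel shuffle, and max pooling for one neighborhood is given below. It follows this section's description, applying both channel and spatial weights to the whole group and summing the two products; the original Shuffle Attention design instead splits each group into a channel half and a spatial half and concatenates them. The per-channel parameters `w1`, `b1`, `w2`, `b2` shared across groups, and the per-channel normalization used for GN, are simplifying assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_shuffle(x, groups):
    """Interleave channels across groups: (k, G*c) -> (k, c*G)."""
    k, C = x.shape
    return x.reshape(k, groups, C // groups).transpose(0, 2, 1).reshape(k, C)


def shuffle_attention_pool(x, groups, params, eps=1e-5):
    """Attention-weight one neighbourhood and max-pool it into a descriptor.

    x: (k, C) features of the k points in the neighbourhood.
    params: dict with per-channel vectors "w1", "b1", "w2", "b2" of shape
            (C // groups,), shared by every group (simplifying assumption).
    Returns a (C,) descriptor.
    """
    k, C = x.shape
    if C % groups:
        raise ValueError("C must be divisible by groups")
    weighted = []
    for xg in np.split(x, groups, axis=1):                 # xg: (k, C/G)
        gap = xg.mean(axis=0)                              # global average pooling over points
        a_c = sigmoid(params["w1"] * gap + params["b1"])   # channel weights, (C/G,)
        gn = (xg - xg.mean(axis=0)) / np.sqrt(xg.var(axis=0) + eps)  # normalise each channel over points
        a_s = sigmoid(params["w2"] * gn + params["b2"])    # spatial (per-point) weights, (k, C/G)
        weighted.append(xg * a_c + xg * a_s)               # element-wise products, summed
    y = channel_shuffle(np.concatenate(weighted, axis=1), groups)
    return y.max(axis=0)                                   # max pooling over the k points
```

With all parameters set to zero, both weights equal $\sigma(0) = 0.5$, so the attention step returns its input and the descriptor reduces to max pooling over the shuffled channels, which is a handy sanity check.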

LPCF then uses the PointNet++ decoder for feature propagation. Through interpolation and skip connections, abstract features of the sparse point sets are upsampled and fused back to the resolution of the original target point cloud. After all feature propagation layers, a dense, point-wise 3D feature tensor $F_{3D}$ is generated.

Each feature vector in $F_{3D}$ encodes robust 3D geometric information about its corresponding point and its neighborhood, laying the foundation for high-precision multimodal fusion with 2D edge features, as described in Section 2.3.
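The interpolation step of feature propagation can be sketched in NumPy. Following the PointNet++ paper's defaults, each dense point receives the inverse-squared-distance weighted average of the features of its three nearest sparse points, and skip-connection features are concatenated afterwards; the unit MLP that PointNet++ applies after concatenation is omitted, and the function name is hypothetical.

```python
import numpy as np


def propagate_features(dense_xyz, sparse_xyz, sparse_feat, skip_feat=None, k=3, eps=1e-8):
    """PointNet++-style feature propagation (interpolation + skip concatenation).

    dense_xyz: (N, 3) points that receive features; sparse_xyz: (M, 3);
    sparse_feat: (M, C); skip_feat: optional (N, C_skip) encoder features.
    """
    d2 = ((dense_xyz[:, None, :] - sparse_xyz[None, :, :]) ** 2).sum(axis=2)  # (N, M)
    k = min(k, len(sparse_xyz))
    nn = np.argsort(d2, axis=1)[:, :k]                        # k nearest sparse points
    w = 1.0 / (np.take_along_axis(d2, nn, axis=1) + eps)      # inverse squared distance
    w /= w.sum(axis=1, keepdims=True)
    interp = (w[:, :, None] * sparse_feat[nn]).sum(axis=1)    # (N, C)
    if skip_feat is not None:
        interp = np.concatenate([interp, skip_feat], axis=1)  # skip connection
    return interp
```

For a dense point midway between two sparse points carrying features 1 and 2, with a third point carrying 3 at squared distance 1.25, the weights are proportional to 4, 4, and 0.8, giving $(4 + 8 + 2.4)/8.8 \approx 1.636$.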

Note that the LPCF module, as a feature extractor, has no separate, explicit loss function. Its design goal is to produce robust geometric features from noisy point clouds, a goal served in particular by the integrated Shuffle Attention mechanism. The parameters of the improved PointNet++ network within this module are optimized implicitly through end-to-end training of the entire model, guided by the trajectory reconstruction accuracy at the end of the pipeline.

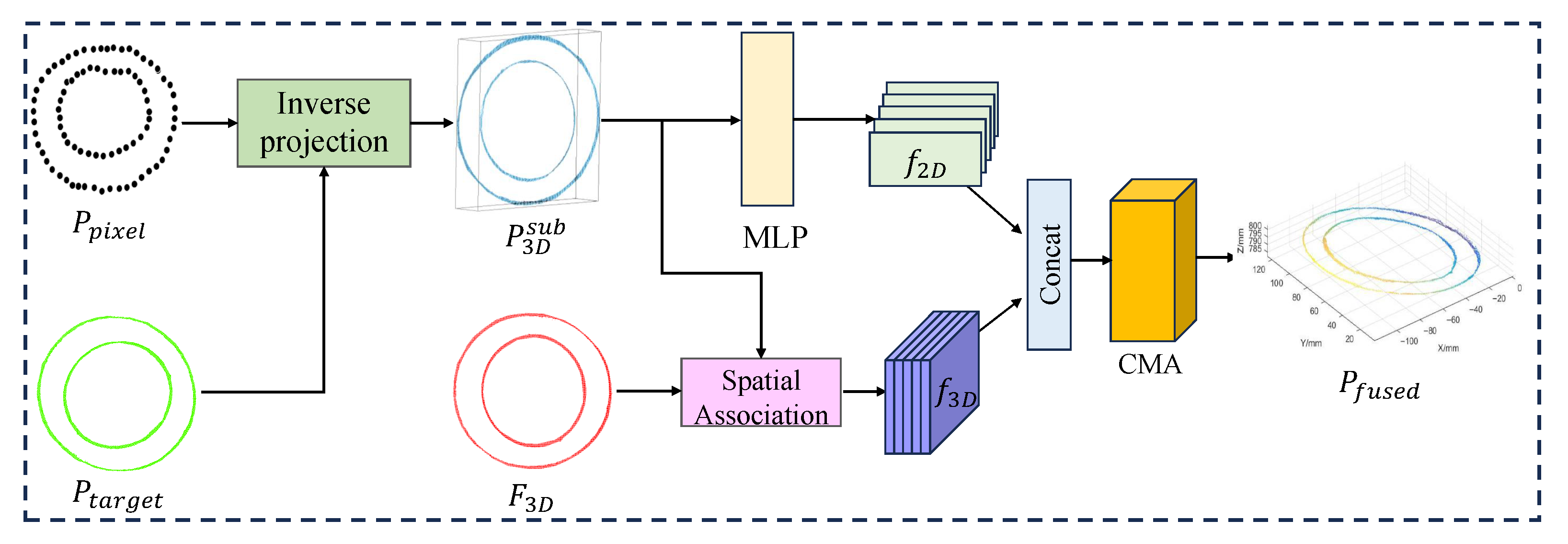

2.3. Cross-Modal Attention-Driven Multimodal Feature Fusion Module

Arc light and welding fumes severely compromise both 2D and 3D perception during welding. To address this, the WSEF and LPCF modules suppress arc light interference in images and fume contamination in point clouds, respectively, generating a high-precision 2D edge point set $E$ and robust 3D features $F_{3D}$. The MFF module fuses these complementary features. Through cross-modal attention (CMA), it adaptively integrates the geometric precision of 2D edges with the structural authenticity of 3D point clouds, forming a fused point cloud $P_f$ that resists mixed interference and is rich in detail, which provides a robust data foundation for trajectory reconstruction. The overall workflow of this module is shown in Figure 5.

First, the edge point set $E$ in the 2D image coordinate system is back-projected into 3D space using the camera projection model, constructing the corresponding 3D point set $P_E$. Specifically, the 3D coordinates of each pixel point $e_i \in E$ are obtained by inverse projection, using its depth information derived from the target point cloud $P_t$ in Section 2.2:

$$\begin{bmatrix} X_i \\ Y_i \\ Z_i \end{bmatrix} = d_i \, K^{-1} \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix}$$

where $(u_i, v_i)$ are the image coordinates of the pixel point, $d_i$ is the minimum depth value at the corresponding position, and $K$ is the camera intrinsic parameter matrix.

Subsequently, the back-projected point set $P_E$ is spatially associated with the local point cloud features $F_{3D}$ extracted in Section 2.2. For each back-projected point, the $k$ nearest point cloud feature points within its neighborhood are found, and its 3D feature vector $f_i^{3D}$ is obtained from them by interpolation.
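Back-projection and the k-nearest-neighbor feature lookup can be sketched as below. The back-projection implements $d_i K^{-1}[u_i, v_i, 1]^T$, which gives camera-frame coordinates; the section does not specify the interpolation weights, so inverse-squared-distance weighting (as in PointNet++ feature propagation) is an assumption, as are the function names.

```python
import numpy as np


def back_project(uv, depth, K):
    """Pixels (N, 2) plus depths (N,) -> camera-frame points d * K^{-1} [u, v, 1]^T."""
    homog = np.column_stack([uv, np.ones(len(uv))])  # (N, 3) homogeneous pixels
    rays = homog @ np.linalg.inv(K).T                # applies K^{-1} to every row
    return rays * depth[:, None]


def knn_features(query_xyz, feat_xyz, feats, k=3, eps=1e-8):
    """Inverse-squared-distance average of the k nearest point features (assumed scheme)."""
    d2 = ((query_xyz[:, None, :] - feat_xyz[None, :, :]) ** 2).sum(axis=2)
    nn = np.argsort(d2, axis=1)[:, :k]
    w = 1.0 / (np.take_along_axis(d2, nn, axis=1) + eps)
    w /= w.sum(axis=1, keepdims=True)
    return (w[:, :, None] * feats[nn]).sum(axis=1)
```

With $f_x = f_y = 100$ and principal point $(2, 2)$, pixel $(102, 2)$ at depth 2 back-projects to $(2, 0, 2)$; projecting that point with $K$ returns the original pixel.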

To fully integrate the geometric precision of 2D edges with the structural information of 3D point clouds, this paper employs an attention-based feature fusion network [28]. For each associated point, its 2D pixel coordinate features are encoded into a 2D feature vector $f_i^{2D}$ by a lightweight MLP. This vector is concatenated with the corresponding 3D feature vector $f_i^{3D}$ to form a preliminary cross-modal feature $f_i^{\mathrm{cat}} = [f_i^{2D}; f_i^{3D}]$.

Subsequently, $f_i^{\mathrm{cat}}$ is fed into the CMA module, which adaptively learns importance weights for the two modalities and generates the final fused feature $f_i^{\mathrm{fuse}}$.

Each fused feature vector corresponds to a 3D position, and together they form the fused point set $P_f$. This point set has pixel-level accuracy and also carries rich 3D geometric structure information. The MFF module is designed to achieve this fusion adaptively. The parameters of the CMA mechanism, which determine the fusion weights, are learned during end-to-end training rather than through a standalone loss: the final trajectory reconstruction error, defined in Section 2.5, serves as the supervisory signal that optimizes them, allowing the module to learn the most effective fusion strategy. This keeps the fusion consistent and robust even in environments with arc light and fumes, providing an accurate, dense data foundation for trajectory reconstruction.
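The section does not state the CMA equations, so the sketch below shows only one common way to realize modality weighting, as a hypothetical stand-in: both features are projected into a shared space, a linear layer on the concatenated feature produces two scores, a softmax turns them into weights $\alpha^{2D}$ and $\alpha^{3D}$, and the fused feature is their weighted sum. All weight matrices and function names are illustrative assumptions.

```python
import numpy as np


def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_modal_fuse(f2d, f3d, W2, W3, Wa):
    """Hypothetical modality-weighting fusion.

    f2d: (N, d2) encoded pixel features; f3d: (N, d3) interpolated 3D features.
    W2: (d2, D) and W3: (d3, D) project both modalities into a shared space.
    Wa: (d2 + d3, 2) scores the concatenated feature for the two modalities.
    Returns the fused (N, D) features and the (N, 2) modality weights.
    """
    f_cat = np.concatenate([f2d, f3d], axis=1)  # preliminary cross-modal feature
    alpha = softmax(f_cat @ Wa, axis=1)         # per-point weights, rows sum to 1
    fused = alpha[:, :1] * (f2d @ W2) + alpha[:, 1:] * (f3d @ W3)
    return fused, alpha
```

With a zero scoring matrix, both modalities receive weight 0.5 and the fusion reduces to averaging the projected features; non-zero scores make the weighting vary from point to point.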

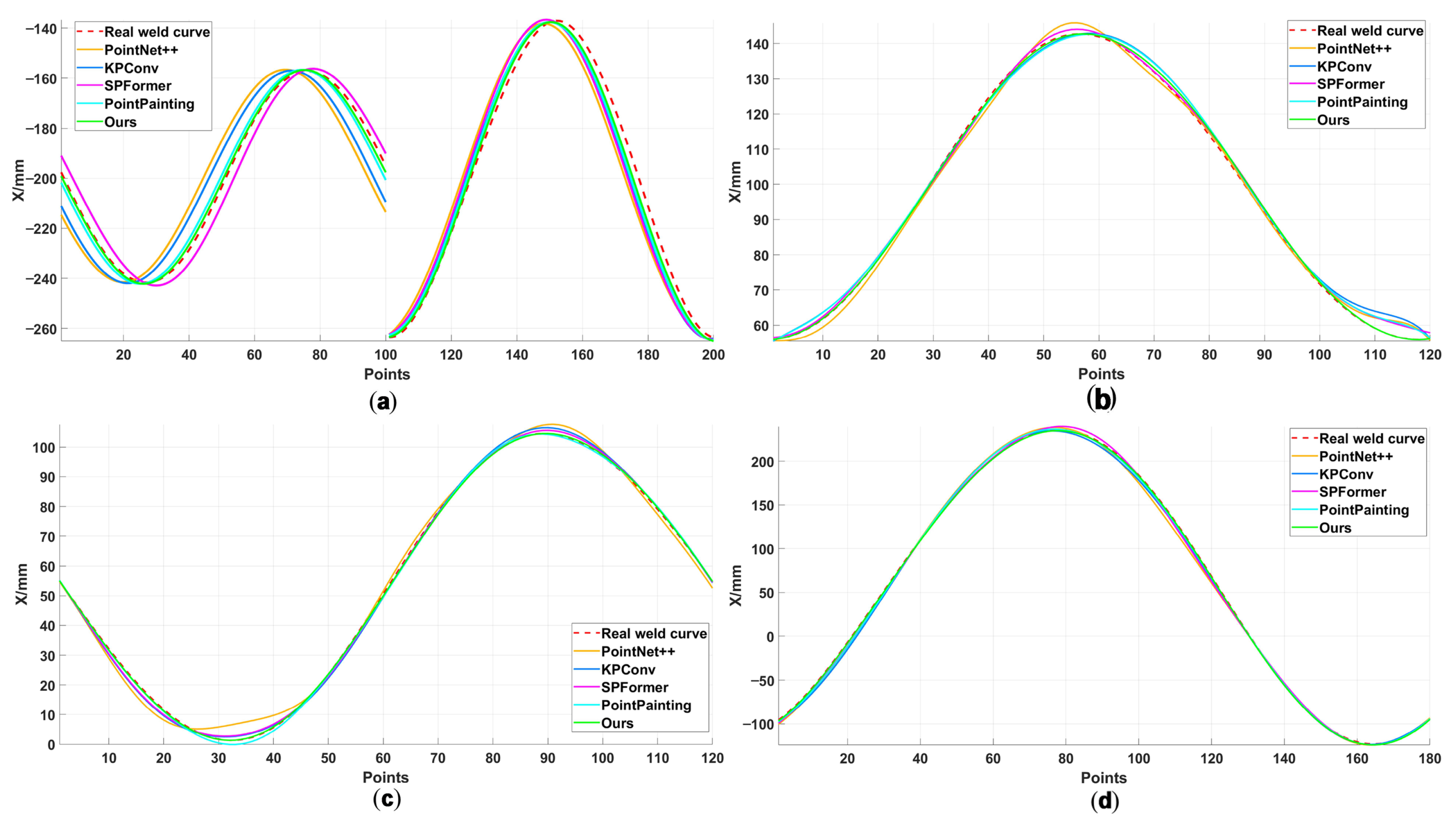

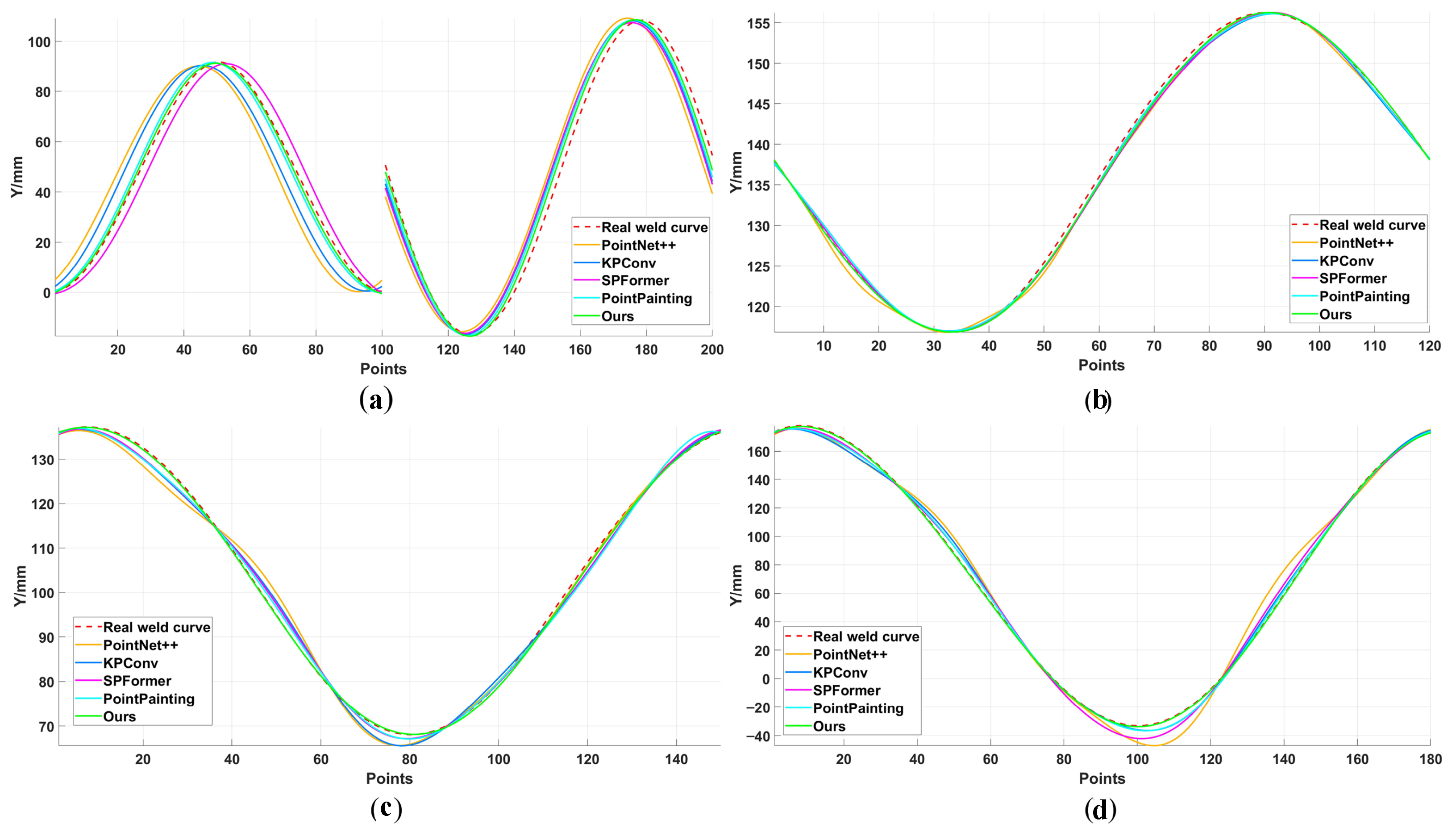

2.4. Weld Path Reconstruction and Smoothing

Multimodal feature fusion yields a fused point cloud that combines pixel-level 2D accuracy with rich 3D structural information. However, this point cloud is spatially discrete, may contain multiple independent weld structures (e.g., inner and outer double rings), and is affected by sensor noise and fusion errors, so it cannot be applied directly to robotic trajectory planning. To address this, this paper proposes a general hierarchical trajectory reconstruction and smoothing method that sequentially performs 3D spatial clustering, topological sorting in a local coordinate system, and parametric Fourier series fitting to reconstruct high-precision, smooth, and topologically correct weld seam trajectories.

First, to achieve effective segmentation of annular welds in arbitrary spatial poses, this paper employs the unsupervised clustering algorithm DBSCAN (Density-Based Spatial Clustering of Applications with Noise). This algorithm clusters points directly in 3D space based on density distribution, robustly separating independent weld point sets (e.g., inner and outer rings) without requiring prior knowledge of weld quantity. Compared to traditional methods reliant on specific planar projections, DBSCAN exhibits greater robustness and geometric universality when handling workpieces in arbitrary orientations, as it is insensitive to the placement posture of the workpiece.
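For reference, a minimal DBSCAN in NumPy is sketched below (labels start at 0; noise is −1). It uses an O(n²) distance matrix, which is adequate for a few thousand fused points; a library implementation such as scikit-learn's `DBSCAN` would normally be preferred. The `eps` and `min_samples` values in the usage note are illustrative, not the paper's settings.

```python
from collections import deque

import numpy as np


def dbscan(points, eps, min_samples):
    """Plain DBSCAN on (n, d) points. Returns integer labels; -1 marks noise."""
    n = len(points)
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)  # O(n^2) memory
    neighbours = [np.flatnonzero(d[i] <= eps) for i in range(n)]        # includes the point itself
    core = np.array([len(nb) >= min_samples for nb in neighbours])
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not core[i]:
            continue                      # already assigned, or cannot seed a cluster
        labels[i] = cluster
        queue = deque([i])
        while queue:                      # breadth-first expansion from core points
            j = queue.popleft()
            if not core[j]:
                continue                  # border points join but do not expand the cluster
            for nb in neighbours[j]:
                if labels[nb] == -1:
                    labels[nb] = cluster
                    queue.append(nb)
        cluster += 1
    return labels
```

Two coplanar rings of radius 20 and 40 sampled at 200 points each, plus one distant outlier, are separated into two clusters with the outlier labeled −1 when `eps=3.0` and `min_samples=3`; rotating the whole set does not change the result, since only pairwise distances are used.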

Subsequently, to establish an accurate topological order within each independent point cluster, a global ordering strategy based on principal component analysis (PCA) [29] was employed. The principal plane of the point set was extracted by PCA, and the 3D points were projected onto this plane to obtain a 2D projected point set. The polar angle $\theta_i$ of each point was calculated with the 2D centroid as the pole, and the points were sorted by this angle. The order was then mapped back to the 3D point set, yielding the ordered point set $P_o$. This method exploits the global geometric structure of the point set, avoiding the noise sensitivity of local nearest-neighbor methods and ensuring robust, accurate sorting.
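The PCA-based ordering can be sketched with NumPy's SVD. The first two right-singular vectors of the centered cluster span the principal plane; because the data are centered, the centroid of the projected points is the origin, which serves as the pole. The function name is hypothetical.

```python
import numpy as np


def pca_polar_order(points):
    """Order a closed-loop cluster by polar angle in its PCA principal plane.

    points: (n, 3). Returns (indices in sorted order, sorted polar angles).
    """
    centred = points - points.mean(axis=0)
    # rows of vt are principal directions, sorted by decreasing variance
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    uv = centred @ vt[:2].T                 # 2D coordinates; their centroid is the origin
    theta = np.arctan2(uv[:, 1], uv[:, 0])  # polar angle in (-pi, pi]
    order = np.argsort(theta)
    return order, theta[order]
```

For a shuffled, arbitrarily rotated and translated circle of radius 10 sampled at 100 points, the returned order places neighboring points consecutively, with steps equal to the chord length of about 0.63.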

Based on the ordered point set, a parametric Fourier series is used for closed trajectory fitting [30]. With the polar angle $\theta$ as the natural parameter, each 3D coordinate is modeled as a Fourier series in $\theta$; for $x$,

$$x(\theta) = a_0^{x} + \sum_{k=1}^{p} \left[ a_k^{x} \cos(k\theta) + b_k^{x} \sin(k\theta) \right]$$

and analogously for $y(\theta)$ and $z(\theta)$.

The coefficients were fitted by least squares, with a low order ($p = 2$) selected to suppress noise and prevent overfitting. The inherent periodicity of the Fourier series ensures that the fitted curve is closed and has high-order continuity (a truncated Fourier series is $C^{\infty}$).
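The least-squares Fourier fit reduces to a linear system whose design matrix has columns $1, \cos k\theta, \sin k\theta$ for $k = 1, \ldots, p$, solved once for all three coordinates. The sketch below uses `numpy.linalg.lstsq`; the helper names are hypothetical.

```python
import numpy as np


def fourier_design(theta, p):
    """Design matrix with columns [1, cos(k*theta), sin(k*theta)] for k = 1..p."""
    cols = [np.ones_like(theta)]
    for k in range(1, p + 1):
        cols += [np.cos(k * theta), np.sin(k * theta)]
    return np.column_stack(cols)


def fit_closed_curve(theta, points, p=2):
    """Least-squares coefficients for x, y, z at once; returns a (2p+1, 3) array."""
    coeffs, *_ = np.linalg.lstsq(fourier_design(theta, p), points, rcond=None)
    return coeffs


def evaluate_curve(coeffs, theta):
    """Evaluate the fitted closed curve at the given parameter values."""
    p = (coeffs.shape[0] - 1) // 2
    return fourier_design(theta, p) @ coeffs
```

Because every basis function is $2\pi$-periodic, the evaluated curve coincides at $\theta = -\pi$ and $\theta = \pi$, which is the closed-loop property noted above. A curve whose coordinates contain only harmonics up to order 2 is reproduced exactly with $p = 2$.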

The reconstructed trajectory not only accurately restores the three-dimensional geometry of the weld seam but also exhibits excellent smoothness and dynamic properties. This ensures smooth variations in velocity and acceleration during robotic motion, significantly reducing jitter and impact while enhancing process stability and weld quality. The trajectory can be directly converted into robotic control commands to drive the end-effector for high-precision automated welding operations.

2.5. Loss Function

The entire model is trained in an end-to-end fashion, guided by a total loss function that combines an intermediate supervision loss from the WSEF module with a final trajectory reconstruction loss.

The WSEF module is guided by the auxiliary loss $\mathcal{L}_{\mathrm{WSEF}}$ defined in Section 2.1. This intermediate loss helps the shared encoder learn robust features early in training.

The primary end-to-end loss of the framework is the trajectory reconstruction loss $\mathcal{L}_{\mathrm{traj}}$, which measures the difference between the final reconstructed trajectory $\hat{T}$, generated by the hierarchical reconstruction and smoothing process, and the ground truth trajectory $T^{gt}$. It is defined as the mean squared error (MSE) of corresponding 3D points:

$$\mathcal{L}_{\mathrm{traj}} = \frac{1}{N} \sum_{i=1}^{N} \left\lVert \hat{P}_i - P_i^{gt} \right\rVert_2^2$$

where $N$ is the number of points sampled from the trajectory, and $\hat{P}_i \in \hat{T}$ and $P_i^{gt} \in T^{gt}$ are corresponding points. This loss is the final supervisory signal backpropagated through the entire network. It optimizes the parameters of the intermediate modules, including the LPCF module (Section 2.2) and the MFF module (Section 2.3), by guiding them to produce features that minimize the final trajectory error.

The total loss for training the entire framework is a weighted sum of the final and intermediate losses:

$$\mathcal{L}_{\mathrm{total}} = \mathcal{L}_{\mathrm{traj}} + \lambda \, \mathcal{L}_{\mathrm{WSEF}}$$

where $\lambda$ is a hyperparameter that balances the contribution of the auxiliary WSEF task to the total training objective. This combined objective ensures that the network learns the final task of accurate trajectory reconstruction while also benefiting from the detailed feature extraction encouraged by the intermediate image restoration and segmentation tasks.
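A worked example of the two loss terms, computed in NumPy on already-corresponding points; the weight `lam = 0.5` is a placeholder, since its value is not given here, and in training the same expressions would be evaluated on framework tensors so that gradients can flow.

```python
import numpy as np


def trajectory_loss(pred, gt):
    """L_traj: mean over N points of the squared Euclidean distance ||pred_i - gt_i||^2."""
    return np.mean(np.sum((pred - gt) ** 2, axis=1))


def total_loss(pred, gt, l_wsef, lam=0.5):
    """L_total = L_traj + lam * L_WSEF, with lam a placeholder value."""
    return trajectory_loss(pred, gt) + lam * l_wsef
```

For two points with errors of 1 mm and 0 mm, $\mathcal{L}_{\mathrm{traj}} = (1 + 0)/2 = 0.5$; with $\mathcal{L}_{\mathrm{WSEF}} = 2$ and $\lambda = 0.5$, the total is 1.5.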

3. Materials and Experimental Setup

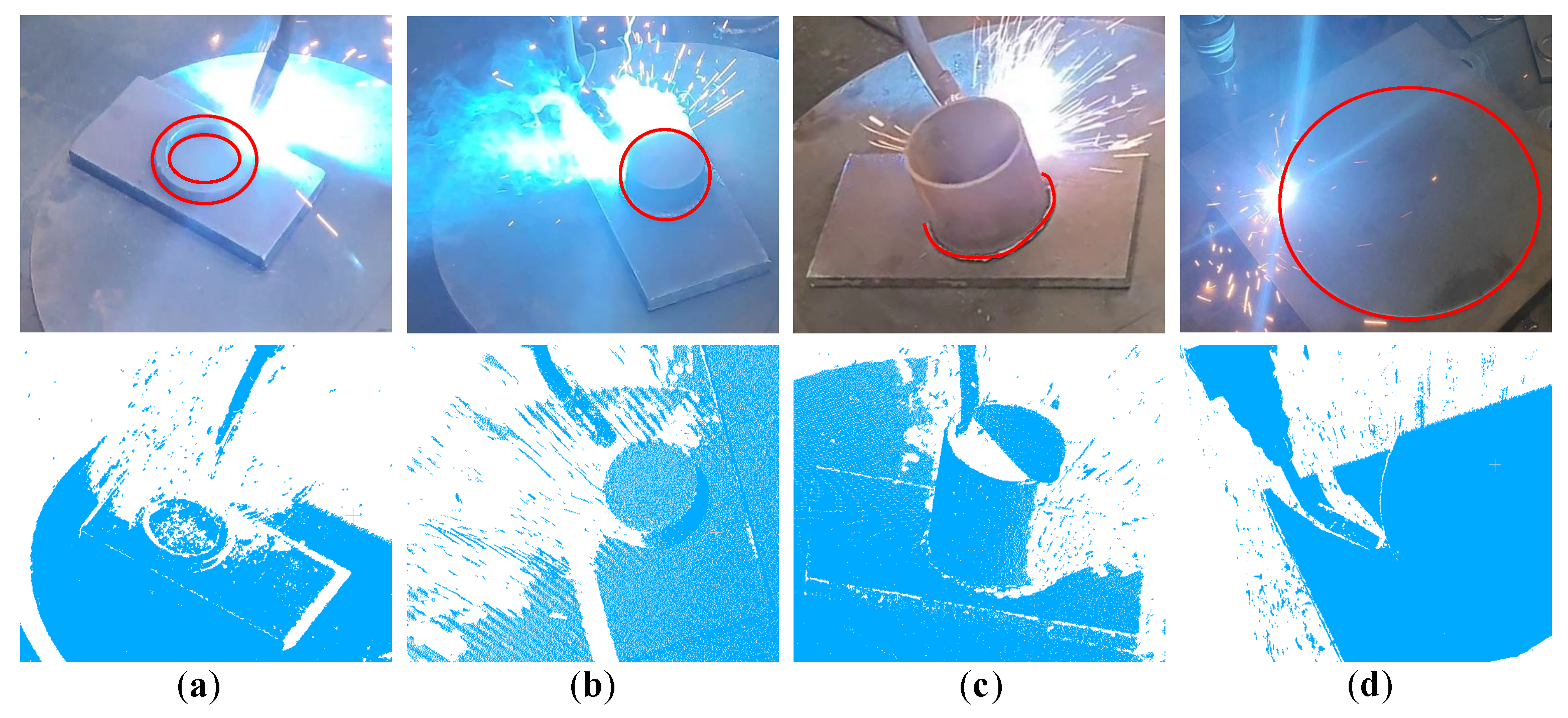

3.1. Hardware Platform and Materials

The experimental system was built on an integrated robotic welding workstation, as shown in Figure 6. The primary hardware components include a YASKAWA MOTOMAN-MA2010 (YASKAWA, Shanghai, China) six-axis industrial robot with its robot controller, a GMAW power source, and a welding torch. A Photoneo PhoXi 3D scanner (Model M; Photoneo, Bratislava, Slovakia) based on structured light was also used. A computer with an Intel Core i7-14650HX CPU and an NVIDIA GeForce RTX 4060 GPU handled deep learning model training and real-time inference.

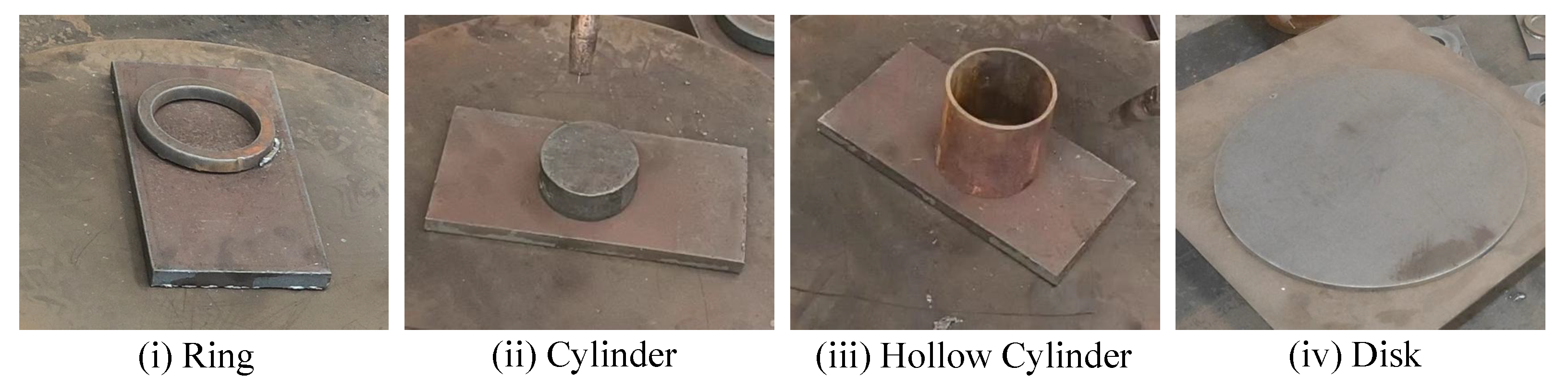

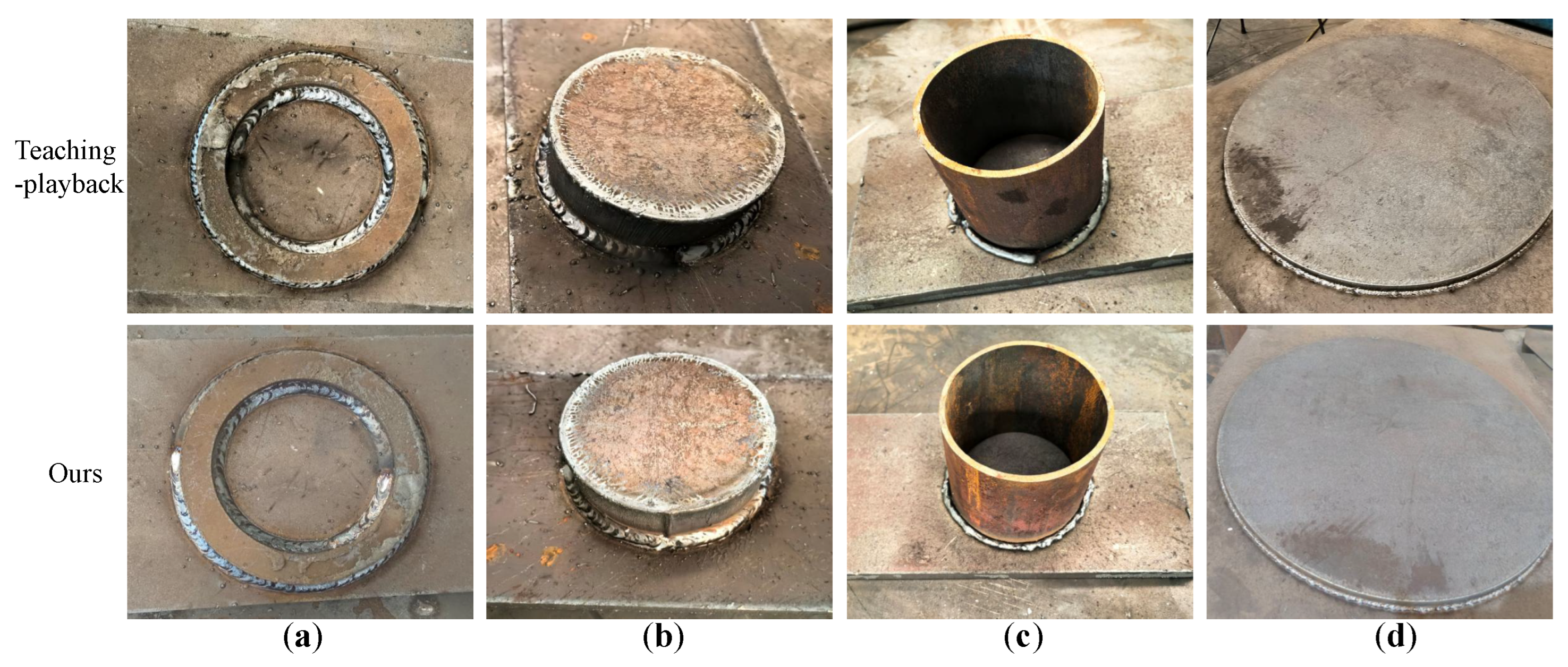

To comprehensively validate the method’s generalization capability, four types of workpieces featuring annular closed-curve welds were used, as shown in Figure 7: (i) a ring with an outer diameter of 125 mm, inner diameter of 90 mm, and height of 12 mm; (ii) a cylinder with a diameter of 90 mm and height of 50 mm; (iii) a hollow cylinder with an outer diameter of 100 mm, inner diameter of 95 mm, and height of 100 mm; and (iv) a disk with a diameter of 390 mm and height of 25 mm. All workpieces, along with the 16 mm-thick base plate, were made of Q235 carbon structural steel.

3.2. Dataset Construction

Because no publicly available multimodal dataset covers welding scenarios with strong interference, a dedicated dataset for weld seam semantic segmentation and trajectory extraction was constructed for this study. During data collection, welding parameters (current, voltage, and speed) and environmental interference intensity (arc light and fumes) were systematically controlled to ensure the diversity and difficulty of the dataset. The GMAW process parameters were as follows: welding current 190–210 A, arc voltage 24–26 V, robot travel speed 5–7 mm/s, and a shielding gas mixture of 80% Ar + 20% CO₂ at a flow rate of 20 L/min. A total of 1200 synchronized image–point cloud pairs were collected, covering four typical annular workpiece geometries (rings, cylinders, hollow cylinders, and disks) with 300 pairs per category. The dataset was randomly split into training, validation, and test sets at a 7:2:1 ratio (840, 240, and 120 pairs, respectively) to ensure fair evaluation.

For semantic annotation, all images were manually annotated at the pixel level with a fine polygon tool. The categories were “annular workpiece” (ring/cylinder/hollow cylinder/disk) and “medium-thickness plate substrate,” yielding high-precision semantic masks. To obtain ground truth trajectories, each workpiece was scanned with the high-precision PhoXi 3D scanner under ideal conditions free from arc light and fume interference. This yielded 3D models with sub-millimeter accuracy (point cloud accuracy of 0.01 mm), from which the centerlines of the inner and outer welds were extracted as the trajectory ground truth. To mitigate systematic errors from possible setup misalignment, a rigid registration was then performed to align the experimental data finely with the ground truth model before error calculation.

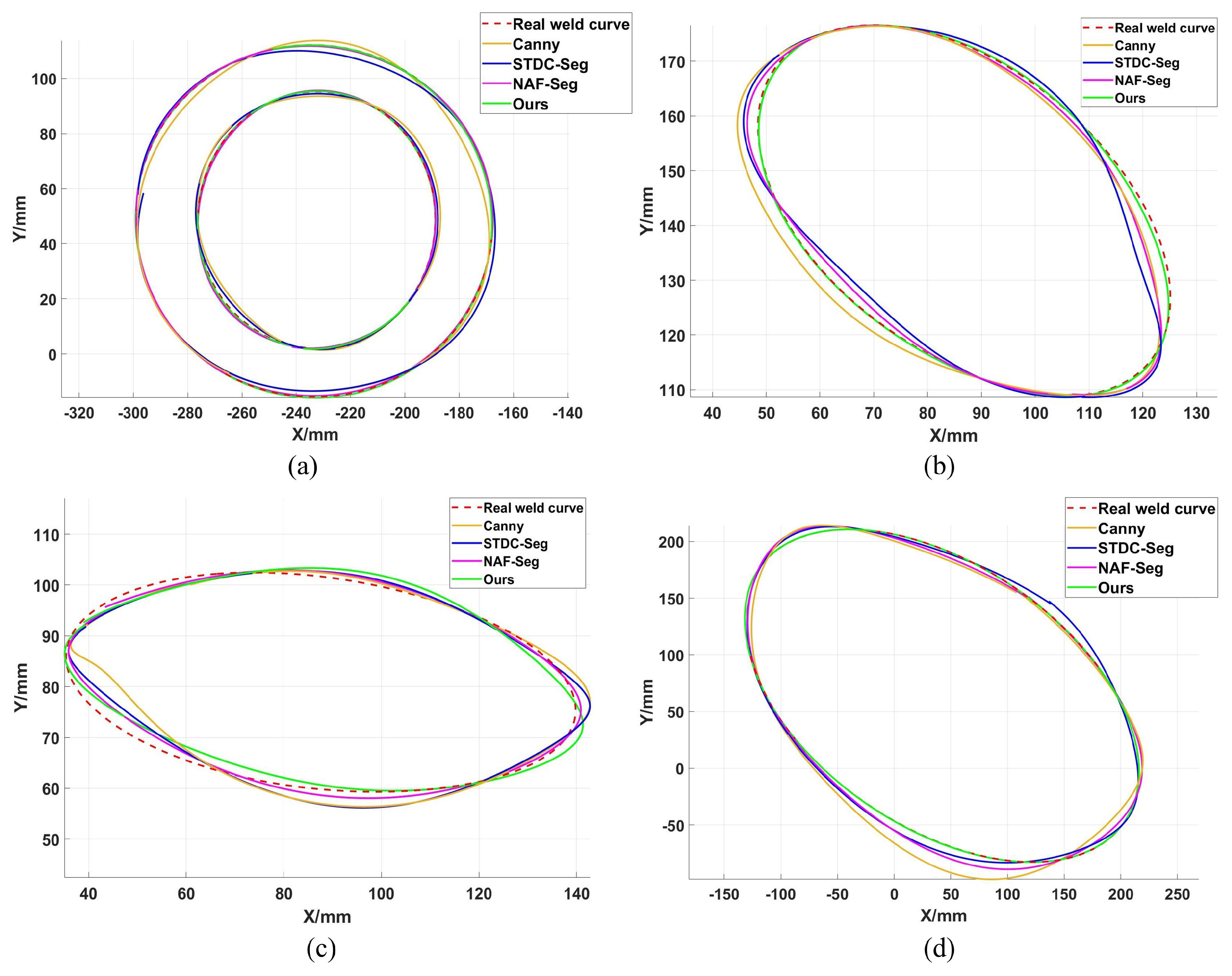

3.3. Evaluation Indicators

To comprehensively and quantitatively evaluate the overall performance of the proposed method, three core metrics were employed: Maximum Error (ME), Root Mean Square Error (RMSE), and processing time. These metrics were chosen to assess the algorithm from three critical perspectives essential for robotic welding applications: worst-case trajectory deviation, overall reconstruction accuracy and stability, and computational efficiency for real-time deployment.

ME measures the greatest Euclidean distance between any point on the algorithm-reconstructed weld seam trajectory and its corresponding point on the ground truth trajectory. This metric directly reflects the deviation level under worst-case conditions, making it crucial for evaluating trajectory stability and safety. Its calculation formula is

$$\mathrm{ME} = \max_{1 \le i \le N} \left\| P_i - P_i^{\mathrm{gt}} \right\|_2$$

where $P_i$ denotes the $i$-th point on the reconstructed trajectory, $P_i^{\mathrm{gt}}$ represents the corresponding point on the ground truth trajectory, and $N$ is the number of evaluated points. For two-dimensional planar trajectory evaluation, $P$ is a two-dimensional coordinate vector $P = (x, y)^{\mathrm{T}}$. For three-dimensional spatial trajectory evaluation, $P$ is a three-dimensional coordinate vector $P = (x, y, z)^{\mathrm{T}}$.

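RMSE is the square root of the mean of the squared Euclidean distances between the reconstructed trajectory points and the corresponding ground truth points. Because each distance is squared before averaging, RMSE penalizes large deviations more heavily than a simple mean distance; it therefore reflects both the overall reconstruction accuracy and the influence of outliers, and thus the smoothness and stability of the trajectory. Its formula is

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left\| P_i - P_i^{\mathrm{gt}} \right\|_2^{2}}$$

where $N$ is the number of evaluated trajectory points and $P_i$ and $P_i^{\mathrm{gt}}$ are corresponding reconstructed and ground truth points.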
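Both accuracy metrics can be computed directly from paired trajectory points, as in the following sketch. It assumes that the i-th reconstructed point has already been matched to the i-th ground truth point (the text does not specify how correspondences are established; nearest-neighbor matching after registration is one option), and the function name `trajectory_errors` is hypothetical. The same code handles 2D (x, y) and 3D (x, y, z) points because `math.dist` accepts coordinate sequences of any equal length.

```python
import math

def trajectory_errors(reconstructed, ground_truth):
    """Return (ME, RMSE) for two equally long lists of paired points.

    Points may be 2D (x, y) or 3D (x, y, z); the i-th reconstructed point
    is assumed to correspond to the i-th ground truth point.
    """
    if not reconstructed or len(reconstructed) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    # Euclidean distance for each corresponding pair of points
    dists = [math.dist(p, q) for p, q in zip(reconstructed, ground_truth)]
    me = max(dists)                                           # worst-case deviation
    rmse = math.sqrt(sum(d * d for d in dists) / len(dists))  # root mean square error
    return me, rmse

recon = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (1.0, 1.0, 1.0)]
truth = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
me, rmse = trajectory_errors(recon, truth)
print(me, round(rmse, 3))  # 5.0 2.887
```

The toy example also shows the difference between the two metrics: a single 5 mm outlier sets ME to 5 mm, while RMSE spreads its squared contribution over all points.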

Processing time is the total time taken from the input of a single image frame and its raw point cloud to the output of the final smoothed weld seam trajectory. This metric evaluates the computational efficiency of the algorithm and whether it meets the real-time requirements of industrial automated welding. It is reported in milliseconds (ms).
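The timing protocol is not described beyond the start and end points of the measurement. The sketch below shows one reasonable way to measure per-frame latency: a few warm-up calls to exclude one-off start-up costs, then the median over repeated runs. The warm-up count, run count, median statistic, and the `pipeline` callable are all assumptions for illustration.

```python
import statistics
import time

def measure_latency_ms(pipeline, frame, n_warmup=5, n_runs=50):
    """Median end-to-end latency of pipeline(frame) in milliseconds.

    `pipeline` is a hypothetical callable that takes one synchronized
    (image, point cloud) frame and returns the fitted weld trajectory.
    """
    for _ in range(n_warmup):  # exclude one-off start-up costs (caches, lazy init)
        pipeline(frame)
    samples = []
    for _ in range(n_runs):
        start = time.perf_counter()
        pipeline(frame)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)

def toy_pipeline(frame):
    # stand-in workload; a real pipeline would segment, fuse, and fit
    return sum(x * x for x in frame)

latency = measure_latency_ms(toy_pipeline, list(range(10_000)))
print(f"median latency: {latency:.3f} ms")
```

If the pipeline runs on a CUDA device, it should call `torch.cuda.synchronize()` before returning, because CUDA kernels are launched asynchronously and the timer would otherwise stop before the GPU work has finished.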

3.4. Implementation and Training Details

To ensure effective training and a fair comparison of the proposed model, this section details the training settings and hyperparameters of each module. All experiments were conducted with the PyTorch 2.0 framework on a workstation equipped with an NVIDIA RTX 4060 GPU.

A self-built multimodal dataset for circumferential seam welding was employed, comprising 1200 sets of synchronously acquired RGB images and corresponding 3D point cloud data. Images were uniformly resized to 640 × 640 resolution and standardized. Point cloud data underwent preprocessing via voxel grid downsampling (voxel size = 2 mm) to reduce computational complexity while preserving geometric features.
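The voxel grid downsampling step can be sketched as follows with NumPy. The text specifies only the 2 mm voxel size; replacing each occupied voxel by the centroid of its points (the behaviour of common libraries such as Open3D's `voxel_down_sample`) is an assumption here, as is the function name `voxel_downsample`.

```python
import numpy as np

def voxel_downsample(points, voxel_size=2.0):
    """Voxel grid downsampling: one centroid per occupied voxel.

    points: (N, 3) array in millimetres; voxel_size uses the same unit.
    """
    # integer voxel index of every point
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # some NumPy 2.x releases return a non-1D inverse here
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]

pts = np.array([[0.1, 0.1, 0.1],
                [0.5, 0.9, 1.9],   # same 2 mm voxel as the first point
                [2.5, 0.0, 0.0]])  # neighbouring voxel along x
down = voxel_downsample(pts, voxel_size=2.0)
print(down)
```

Averaging within a voxel also smooths isolated measurement noise slightly, whereas keeping one arbitrary point per voxel would not.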

The entire model was trained end to end, with all modules optimized jointly. Training was driven by the total loss function defined in Section 2.5, which combines the primary trajectory reconstruction loss with the auxiliary loss from the WSEF module. The encoder backbone of the WSEF module (the STDC-Seg network) was initialized with pretrained weights. For the overall training, the Adam optimizer was used with an initial learning rate of 0.001, and the learning rate was adjusted with a cosine annealing schedule. The model was trained for 300 epochs with a batch size of 16. Data augmentation consisted of random rotation (±5°) and brightness/contrast perturbations to enhance the model’s robustness.

For the improved PointNet++ model in the LPCF module, the number of points sampled by Farthest Point Sampling (FPS) in the three Set Abstraction layers was set to 1024, 256, and 64, respectively. The number of groups G in the Shuffle Attention module was set to 4, a value selected in preliminary experiments as the best trade-off between feature granularity and representation robustness.
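To make the sampling hierarchy concrete, the sketch below implements greedy farthest point sampling in NumPy and applies it with the stated counts of 1024, 256, and 64 centroids, each layer sampling from the previous layer's output. The function name, the fixed start index, and the 4096-point stand-in cloud are assumptions; the ball-query grouping and shared MLPs of each Set Abstraction layer are omitted.

```python
import numpy as np

def farthest_point_sampling(points, n_samples, start_index=0):
    """Greedy FPS: indices of n_samples points that are mutually far apart.

    points: (N, D) array with N >= n_samples.
    """
    n = points.shape[0]
    if n_samples > n:
        raise ValueError("n_samples exceeds the number of points")
    selected = np.empty(n_samples, dtype=np.int64)
    selected[0] = start_index
    # distance from every point to its nearest already-selected point
    min_dist = np.linalg.norm(points - points[start_index], axis=1)
    for i in range(1, n_samples):
        selected[i] = int(np.argmax(min_dist))  # farthest from the current set
        new_dist = np.linalg.norm(points - points[selected[i]], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return selected

# Stand-in cloud; each SA layer samples centroids from the previous layer's output.
rng = np.random.default_rng(0)
level = rng.uniform(0.0, 100.0, size=(4096, 3))
for n_centroids in (1024, 256, 64):
    level = level[farthest_point_sampling(level, n_centroids)]
    print(level.shape)  # (1024, 3), then (256, 3), then (64, 3)
```

Compared with uniform random sampling, FPS covers the whole seam region evenly, which matters for thin, elongated structures such as circumferential welds.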

For trajectory fitting, the order p of the Fourier series was set to 2. Experiments showed that this order filters noise effectively while preserving the geometric details of the weld, avoiding both overfitting and underfitting.
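The following sketch illustrates an order-2 Fourier series fit of a closed seam by linear least squares, fitting each coordinate independently as $c(t) = a_0 + \sum_{k=1}^{p} (a_k \cos kt + b_k \sin kt)$. It assumes the seam points are already parameterized by $t \in [0, 2\pi)$ (for example, the polar angle about the seam centroid); the parameterization used in the paper is defined elsewhere, and the function names and synthetic circle are illustrative.

```python
import numpy as np

def fourier_basis(t, order):
    """Design matrix with columns [1, cos t, sin t, ..., cos(p t), sin(p t)]."""
    cols = [np.ones_like(t)]
    for k in range(1, order + 1):
        cols.extend([np.cos(k * t), np.sin(k * t)])
    return np.stack(cols, axis=1)

def fit_fourier(t, coords, order=2):
    """Least-squares Fourier coefficients for each coordinate column of `coords`."""
    coeffs, *_ = np.linalg.lstsq(fourier_basis(t, order), coords, rcond=None)
    return coeffs  # shape (2 * order + 1, n_dims)

def eval_fourier(coeffs, t, order=2):
    """Evaluate the fitted closed curve at parameter values t."""
    return fourier_basis(t, order) @ coeffs

def rms_dist(a, b):
    """Root mean square point-to-point distance between two (N, D) arrays."""
    return float(np.sqrt(np.mean(np.sum((a - b) ** 2, axis=1))))

# Stand-in closed seam: a circle of radius 62.5 mm at z = 12 mm plus noise.
rng = np.random.default_rng(1)
t = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
clean = np.stack([62.5 * np.cos(t), 62.5 * np.sin(t), np.full_like(t, 12.0)], axis=1)
noisy = clean + rng.normal(scale=0.5, size=clean.shape)

coeffs = fit_fourier(t, noisy, order=2)
smooth = eval_fourier(coeffs, t, order=2)
print(rms_dist(noisy, clean), rms_dist(smooth, clean))
```

With p = 2, each coordinate is described by only five coefficients, so the fit cannot follow point-to-point noise, yet it can still represent ellipse-like distortions of a nominally circular seam, which is the trade-off the chosen order reflects.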