A Lightweight Feature Enhancement Model for UAV Detection in Real-World Scenarios

Highlights

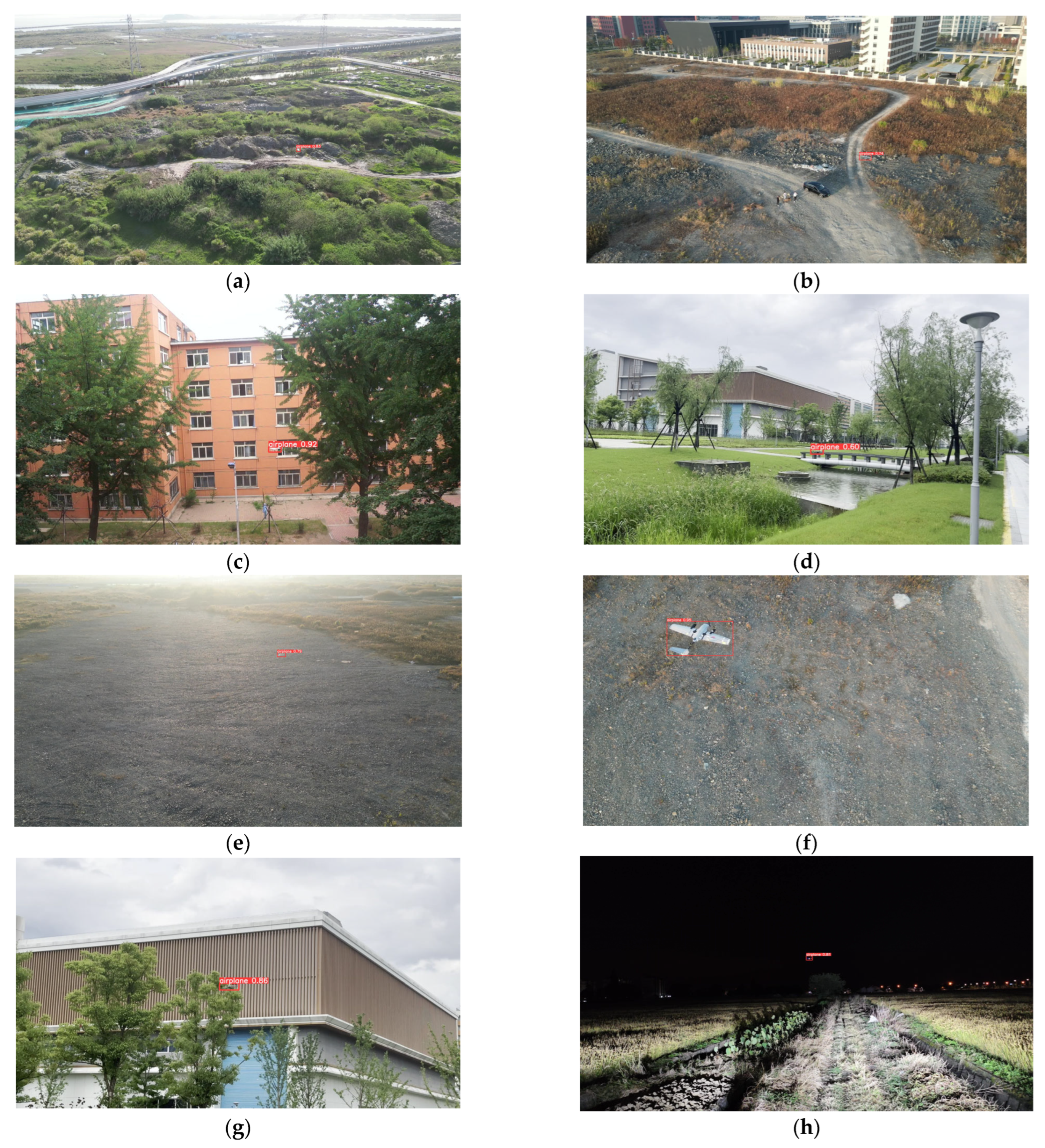

- We release RWSD, a large-scale UAV detection dataset of 14,592 real-world images covering diverse backgrounds, UAV sizes, and viewpoints to benchmark robust detectors.

- We present a lightweight feature enhancement model (LFEM) tailored for UAV detection, and extensive experimental results demonstrate the effectiveness and efficiency of our approach.

- RWSD provides the community with a challenging, openly available benchmark for evaluating UAV detectors under realistic, complex conditions.

- LFEM offers an accurate yet compact solution with the potential to be deployed on edge or mobile platforms for real-time aerial surveillance, narrowing the gap between research and field-ready anti-UAV systems.

Abstract

1. Introduction

- (1) We introduce RWSD, a new, large-scale, publicly available dataset for UAV detection. It is designed to address real-world challenges: it comprises 14,592 images split into training, validation, and testing sets, and features diverse UAV types, sizes, and complex environmental conditions.

- (2) We present a lightweight feature enhancement model (LFEM) tailored for UAV detection. Compared with the original YOLOv5 baseline, LFEM raises mAP50 from 90.62% to 93.04% in our experiments while lowering the computational cost from 15.9 to 13.4 GFLOPs.

- (3) We benchmark state-of-the-art object detection methods on the proposed RWSD dataset and analyze how the various detectors perform for UAV detection in real-world scenarios.

2. Related Work

2.1. UAV Dataset

2.2. Object Detection Methodology

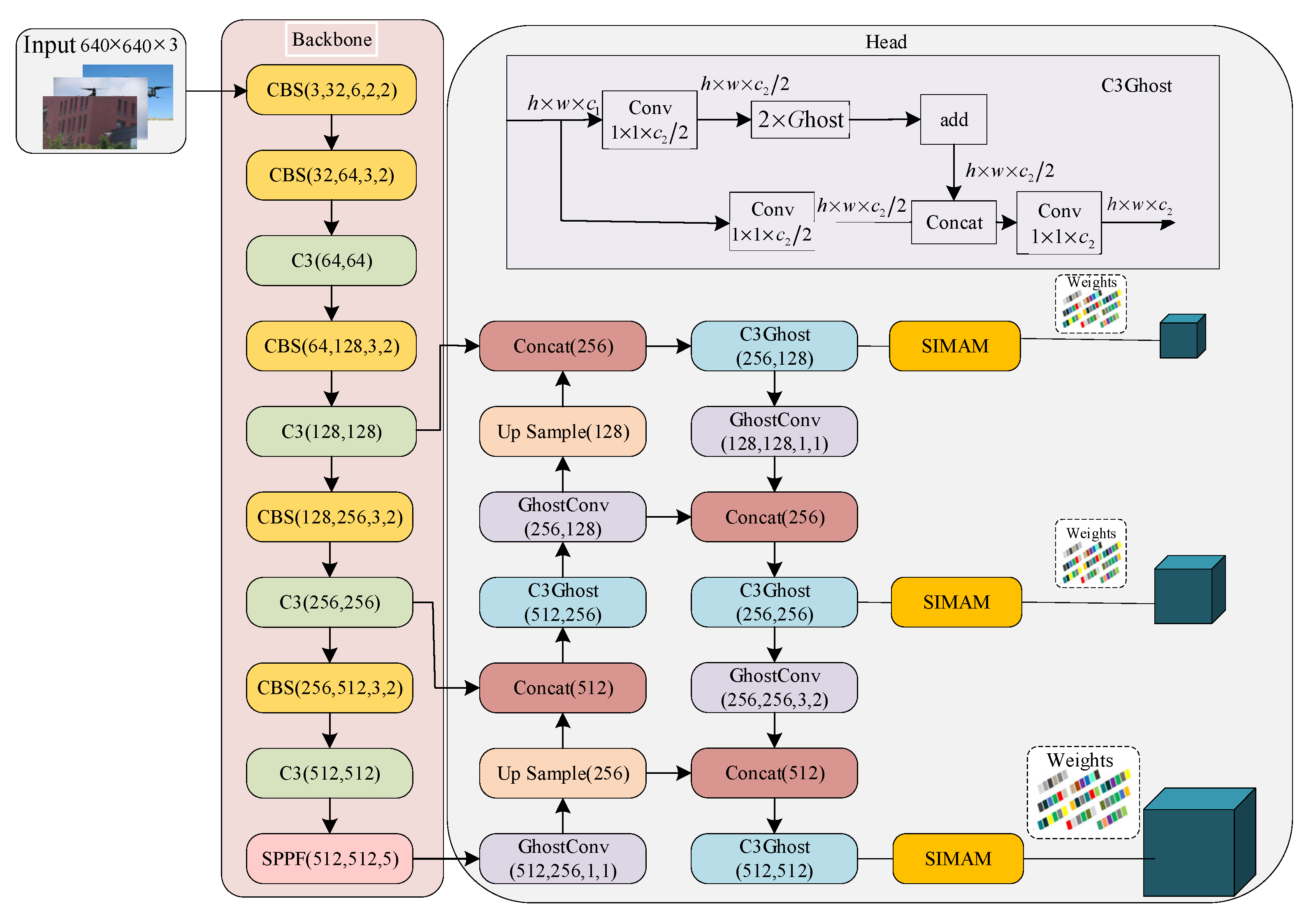

3. Methods

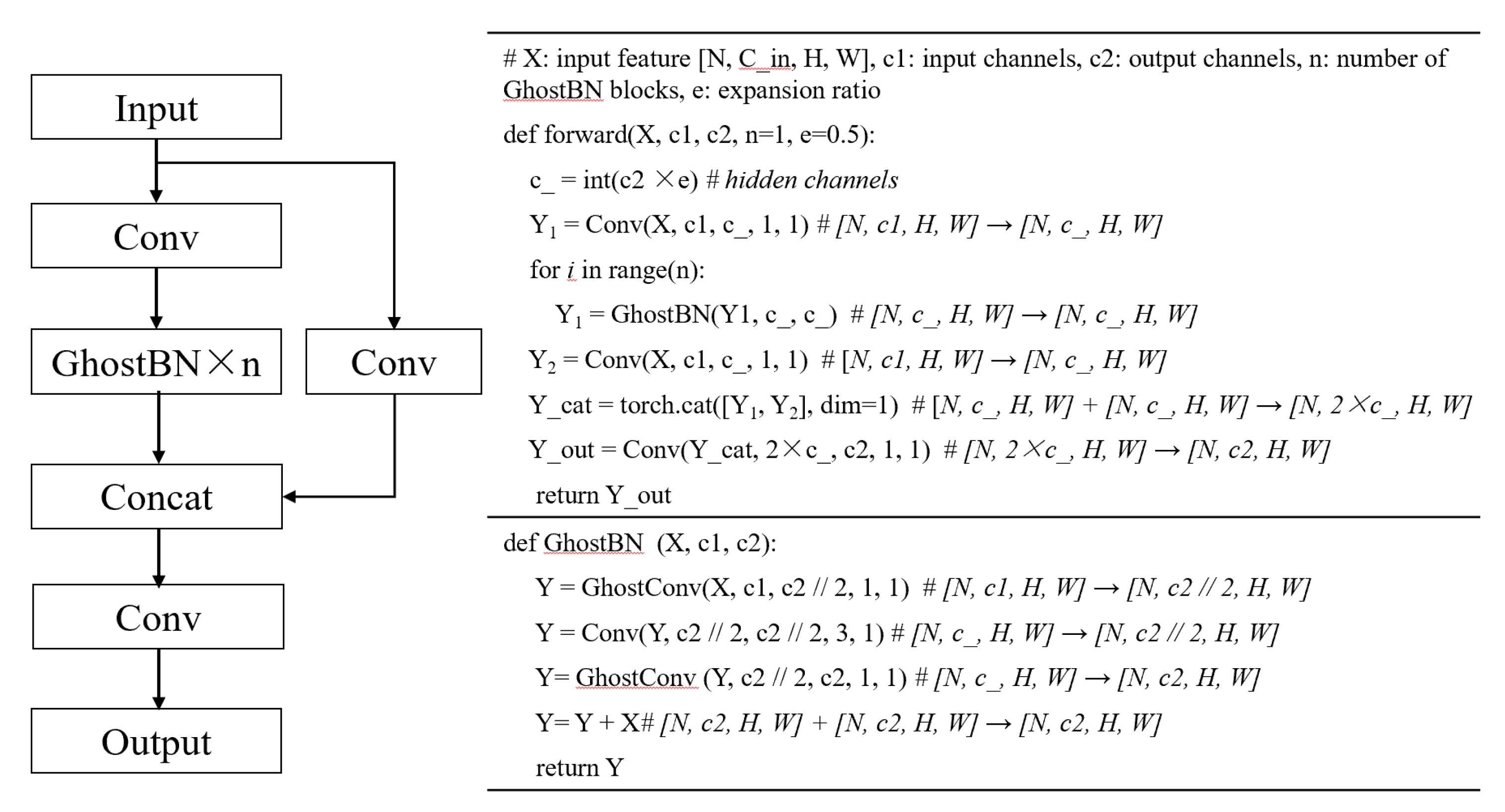

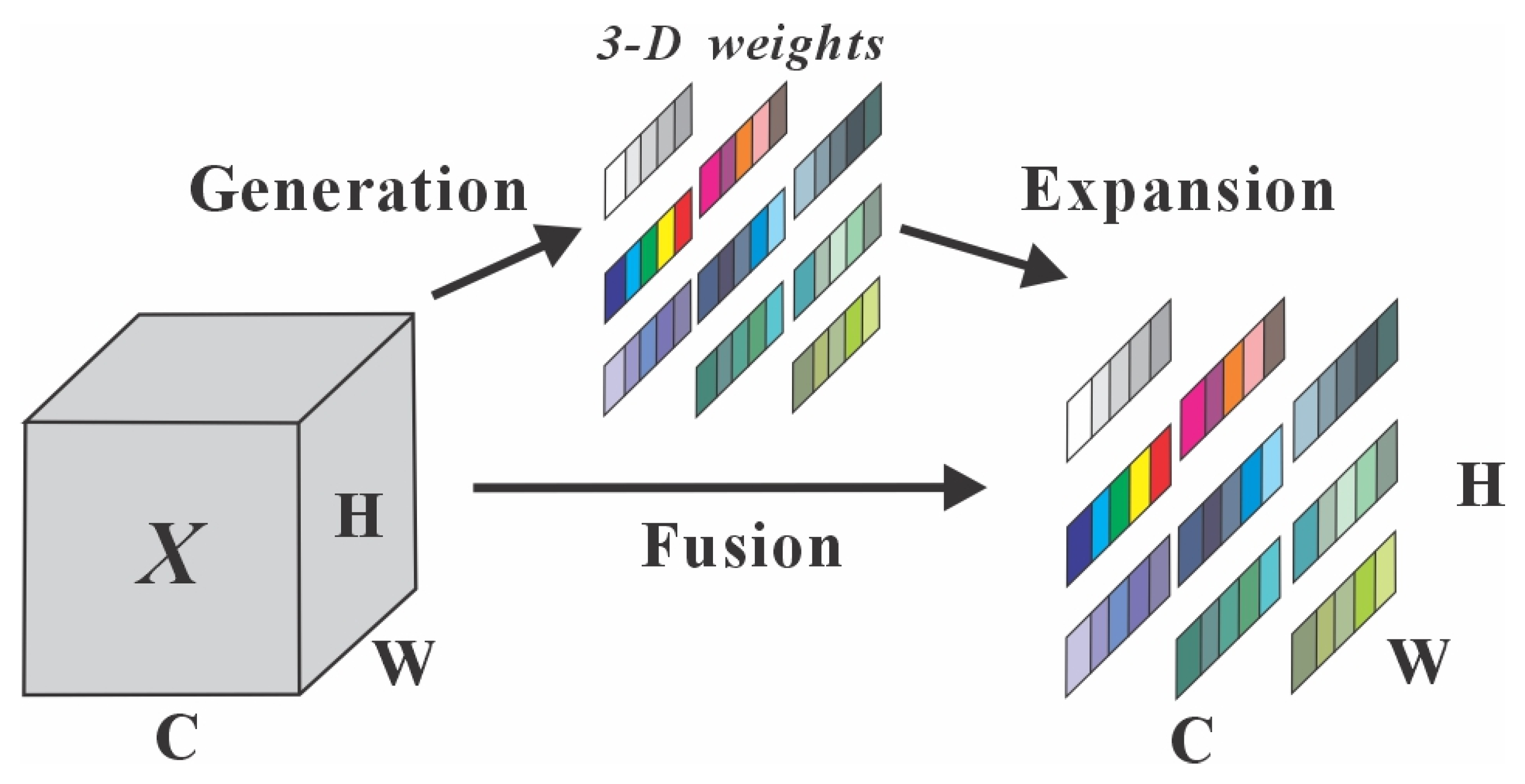

3.1. Ghost Lightweight Module
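
As a rough illustration of why a Ghost-style layer is lighter than a plain convolution, the sketch below counts the weights of both. It follows the general GhostNet idea (a primary convolution produces a fraction of the output maps, and cheap depthwise filters generate the rest). The layer sizes, the `ratio` of 2, and the 3 × 3 cheap kernel are illustrative assumptions, not values taken from LFEM, and the function names are hypothetical.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a plain k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in: int, c_out: int, k: int,
                      ratio: int = 2, cheap_k: int = 3) -> int:
    """Weights of a Ghost-style replacement for the same layer (bias ignored).

    A primary convolution produces c_out / ratio intrinsic maps; cheap
    depthwise cheap_k x cheap_k filters derive the remaining maps from them.
    ratio and cheap_k are assumptions made for this sketch.
    """
    if c_out % ratio != 0:
        raise ValueError("c_out must be divisible by ratio in this sketch")
    intrinsic = c_out // ratio
    primary = c_in * intrinsic * k * k
    # One depthwise filter per generated ("ghost") map.
    cheap = intrinsic * (ratio - 1) * cheap_k * cheap_k
    return primary + cheap


# Hypothetical layer: 128 input channels, 256 output channels, 3 x 3 kernel.
plain = standard_conv_params(128, 256, 3)
ghost = ghost_conv_params(128, 256, 3)
print(f"plain: {plain}, ghost: {ghost}, compression: {plain / ghost:.2f}x")
```

With `ratio = 2`, the weight count of this single layer drops by a factor close to 2. The saving for a whole detector is smaller, because only some layers are replaced.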

3.2. The Feature Enhancement Module
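
The benchmark table lists SIMAM as the feature enhancement component of LFEM. The following is a minimal, dependency-free sketch of SimAM-style weighting on a single channel, based on the publicly described SimAM formulation (each activation is scaled by a sigmoid of its inverse energy). The function name `simam_2d`, the default `e_lambda`, and the toy input are assumptions for illustration; where the module sits inside LFEM is not covered here.

```python
import math


def simam_2d(feature_map, e_lambda=1e-4):
    """Apply SimAM-style weighting to one channel given as a list of rows.

    No learnable parameters are involved: the weight of each activation
    depends only on how far it deviates from the channel mean.
    """
    values = [v for row in feature_map for v in row]
    n = len(values) - 1  # number of "other" neurons in the channel
    if n < 1:
        raise ValueError("the channel needs at least two activations")
    mean = sum(values) / len(values)
    sq_dev = [[(v - mean) ** 2 for v in row] for row in feature_map]
    variance = sum(d for row in sq_dev for d in row) / n

    out = []
    for row, dev_row in zip(feature_map, sq_dev):
        out_row = []
        for v, d in zip(row, dev_row):
            inv_energy = d / (4.0 * (variance + e_lambda)) + 0.5
            weight = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
            out_row.append(v * weight)
        out.append(out_row)
    return out


# Toy channel: a single distinctive activation on a flat background.
channel = [[1.0, 1.0, 1.0],
           [1.0, 5.0, 1.0],
           [1.0, 1.0, 1.0]]
enhanced = simam_2d(channel)
center_weight = enhanced[1][1] / channel[1][1]
background_weight = enhanced[0][0] / channel[0][0]
print(center_weight > background_weight)
```

In the toy input the distinctive center activation receives a larger weight than the background. This is the behavior one would want for small UAV targets against cluttered scenes.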

4. Proposed Dataset

5. Experiments and Results

5.1. Implementation Details

5.2. Benchmarks

| Model Name | Architectures | Key Features | Tasks |
|---|---|---|---|
| Faster-RCNN | CNN, Region Proposal Network, RoI Pooling, Classification & Bounding Box Regression | Two-stage detector, Anchor-based | Object Detection, Instance Segmentation |
| RetinaNet | ResNet + FPN, Classification Subnet, BBox Subnet, Anchor | One-stage detector, Focal Loss for class imbalance, Multi-scale feature fusion | Object Detection, Keypoint Estimation |
| YOLOv5 | CSPDarknet53, Path Aggregation Network, Decoupled Detection Head | Anchor-free detection, SWISH activation, PANet | Object Detection, Basic Instance Segmentation |
| YOLOv8 | CSPDarknet (C2f), Path Aggregation Network, Anchor-Free Decoupled Head | GANs, anchor-free detection | Object Detection, Instance Segmentation, Panoptic Segmentation, Keypoint Estimation |
| YOLOv10 | Enhanced CSPNet, Path Aggregation Network | Anchor-free detection, SWISH activation, PANet | Object Detection |
| LFEM | CSPDarknet53, Path Aggregation Network, Decoupled Detection Head, SIMAM, Ghost module | SIMAM feature enhancement; Ghost lightweight module | Object Detection |

5.3. Experimental Results

| Method | Param. (M) | mAP50 (%) | mAP50-95 (%) |
|---|---|---|---|
| Faster-RCNN | 41.70 | 73.39 ± 0.82 | 46.49 ± 0.73 |
| RetinaNet | 36.70 | 76.20 ± 0.91 | 43.44 ± 1.11 |
| YOLOv5 | 8.10 | 90.62 ± 0.11 | 56.33 ± 0.28 |
| YOLOv8 | 11.10 | 84.96 ± 0.41 | 53.98 ± 0.32 |
| YOLOv10 | 7.02 | 88.70 ± 0.66 | 56.50 ± 0.50 |
| LFEM | 5.62 | 93.04 ± 0.54 | 58.95 ± 0.43 |
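
To make the two accuracy columns concrete, the sketch below computes the intersection over union (IoU) of a predicted and a ground-truth box and checks which IoU thresholds the prediction satisfies. It assumes the usual COCO-style convention, in which mAP50 uses a single threshold of 0.50 and mAP50-95 averages over thresholds from 0.50 to 0.95 in steps of 0.05. The box coordinates and helper names are hypothetical, and this is not the evaluation code used for the table.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95 (assumed COCO-style convention).
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred, gt):
    """Thresholds at which pred counts as a correct detection of gt."""
    overlap = iou(pred, gt)
    return [t for t in THRESHOLDS if overlap >= t]


# Hypothetical boxes: the prediction is shifted one pixel to the right.
ground_truth = (0.0, 0.0, 10.0, 10.0)
prediction = (1.0, 0.0, 11.0, 10.0)
print(f"IoU = {iou(prediction, ground_truth):.3f}")
print(matched_thresholds(prediction, ground_truth))
```

A one-pixel shift on a 10 × 10 box already fails the strictest thresholds, which is why mAP50-95 is consistently lower than mAP50 and is more sensitive to localization quality on small UAV targets.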

| Method | Param. (M) | UAVDT mAP50 (%) | UAVDT Recall (%) | DOTAv1.0 mAP50 (%) | DOTAv1.0 Recall (%) |
|---|---|---|---|---|---|
| YOLOv10 | 7.02 | 53.99 ± 1.90 | 50.68 ± 0.11 | 72.36 ± 0.27 | 67.63 ± 0.53 |
| YOLOv8 | 11.10 | 49.75 ± 1.33 | 48.75 ± 0.80 | 71.88 ± 0.21 | 69.19 ± 0.13 |
| YOLOv5 | 8.10 | 52.50 ± 0.77 | 48.41 ± 1.97 | 69.61 ± 0.37 | 67.61 ± 0.17 |
| LFEM | 5.62 | 54.79 ± 0.40 | 52.64 ± 1.28 | 73.10 ± 0.16 | 69.45 ± 0.40 |

| Method | Param. (M) | GFLOPs | Inf. Time (ms) | Precision (%) | Recall (%) | mAP50 (%) | mAP50-95 (%) |
|---|---|---|---|---|---|---|---|
| YOLOv5 (Baseline) | 7.02 | 15.9 | 4.80 | 95.37 ± 0.70 | 85.74 ± 0.42 | 90.62 ± 0.11 | 56.33 ± 0.28 |
| +SIMAM | 7.02 | 15.9 | 5.20 | 96.61 ± 0.20 | 86.99 ± 0.60 | 92.01 ± 0.22 | 58.5 ± 0.37 |
| +Ghost | 5.62 | 13.4 | 4.10 | 95.07 ± 0.71 | 85.58 ± 0.55 | 90.33 ± 0.29 | 56.01 ± 0.31 |
| LFEM | 5.62 | 13.4 | 4.30 | 96.57 ± 0.41 | 87.49 ± 0.74 | 93.04 ± 0.54 | 58.95 ± 0.43 |
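
The trade-off summarized by the ablation can be made explicit with a short calculation. The sketch below copies the baseline and LFEM rows from the ablation table (mean values only, ignoring the reported deviations) and derives the relative cost reductions and the absolute accuracy gains. The dictionary keys and the helper name are hypothetical.

```python
# Mean values copied from the baseline and LFEM rows of the ablation table.
ABLATION = {
    "baseline": {"params_m": 7.02, "gflops": 15.9, "time_ms": 4.80,
                 "map50": 90.62, "map50_95": 56.33},
    "lfem": {"params_m": 5.62, "gflops": 13.4, "time_ms": 4.30,
             "map50": 93.04, "map50_95": 58.95},
}


def relative_change(new: float, old: float) -> float:
    """Percentage change of new with respect to old."""
    return 100.0 * (new - old) / old


base, lfem = ABLATION["baseline"], ABLATION["lfem"]

# Cost metrics: relative change in percent (negative means cheaper).
cost_change = {
    key: relative_change(lfem[key], base[key])
    for key in ("params_m", "gflops", "time_ms")
}

# Accuracy metrics: absolute gain in percentage points.
accuracy_gain = {
    key: lfem[key] - base[key] for key in ("map50", "map50_95")
}

for key, value in cost_change.items():
    print(f"{key}: {value:+.1f}%")
for key, value in accuracy_gain.items():
    print(f"{key}: {value:+.2f} points")
```

Under these table values, LFEM uses roughly 19.9% fewer parameters, 15.7% fewer GFLOPs, and 10.4% less inference time than the baseline, while gaining about 2.4 points of mAP50 and 2.6 points of mAP50-95.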

6. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, Y.; Yan, X.; Li, Y.; Li, D.; Liu, X.; Huang, H.; Bie, D. A Lightweight Feature Enhancement Model for UAV Detection in Real-World Scenarios. Drones 2025, 9, 874. https://doi.org/10.3390/drones9120874
