2.1. Preliminaries

In cooperative UAV navigation, the primary challenge addressed in this study is the degradation of inter-agent range and range-rate measurements caused by communication delays, Doppler-induced distortions, and asynchronous message arrivals. These impairments directly affect the innovation sequence and often violate the Gaussian and consistency assumptions required for reliable Kalman filtering, leading to significant reductions in estimation accuracy. Existing innovation-based gating or thresholding approaches are insufficient under such time-varying fault conditions, because they rely on simplified scalar metrics and cannot capture the nonlinear characteristics of communication-induced errors. Therefore, a more robust, feature-rich, and data-driven measurement validation mechanism is required to ensure reliable state estimation in mesh-networked multi-UAV systems.

The proposed system architecture focuses on achieving sustainable cooperative navigation in environments where GNSS signals are weak, jammed, or unavailable. In this context, the simulation framework was designed to model both the diversity of non-GNSS measurement sources and the degradation scenarios that may affect them.

In this paper, the following notation is adopted. Scalar quantities are denoted by regular lowercase letters (e.g., $x$), vectors by bold lowercase letters (e.g., $\mathbf{x}$), and matrices by bold uppercase letters (e.g., $\mathbf{P}$). All vectors are column vectors unless otherwise stated. This notation is used consistently throughout the system model, measurement equations, innovation definitions, and neural-network feature representations.

The multi-agent structure consists of several unmanned aerial vehicles (UAVs) flying cooperatively along predefined trajectories. Each UAV performs coordinated maneuvers at scheduled time intervals to maintain or modify its relative position within the formation. Even in the absence of GNSS signals, the agents generate a joint navigation solution by combining inter-agent communication-based measurements—such as range (Time of Arrival, ToA) and range-rate (Doppler shift)—with their own inertial sensor data.

A learning-based measurement filtering module is integrated into this framework to detect and reject degraded measurements before they enter the Kalman update. This integration ensures that the filter is updated only with reliable data, improving estimation robustness and accuracy.

Details of the simulation setup, algorithmic architecture, network-based measurement framework, error modeling, and training configuration used in this study are presented in the following subsections.

2.1.1. Simulation Environment

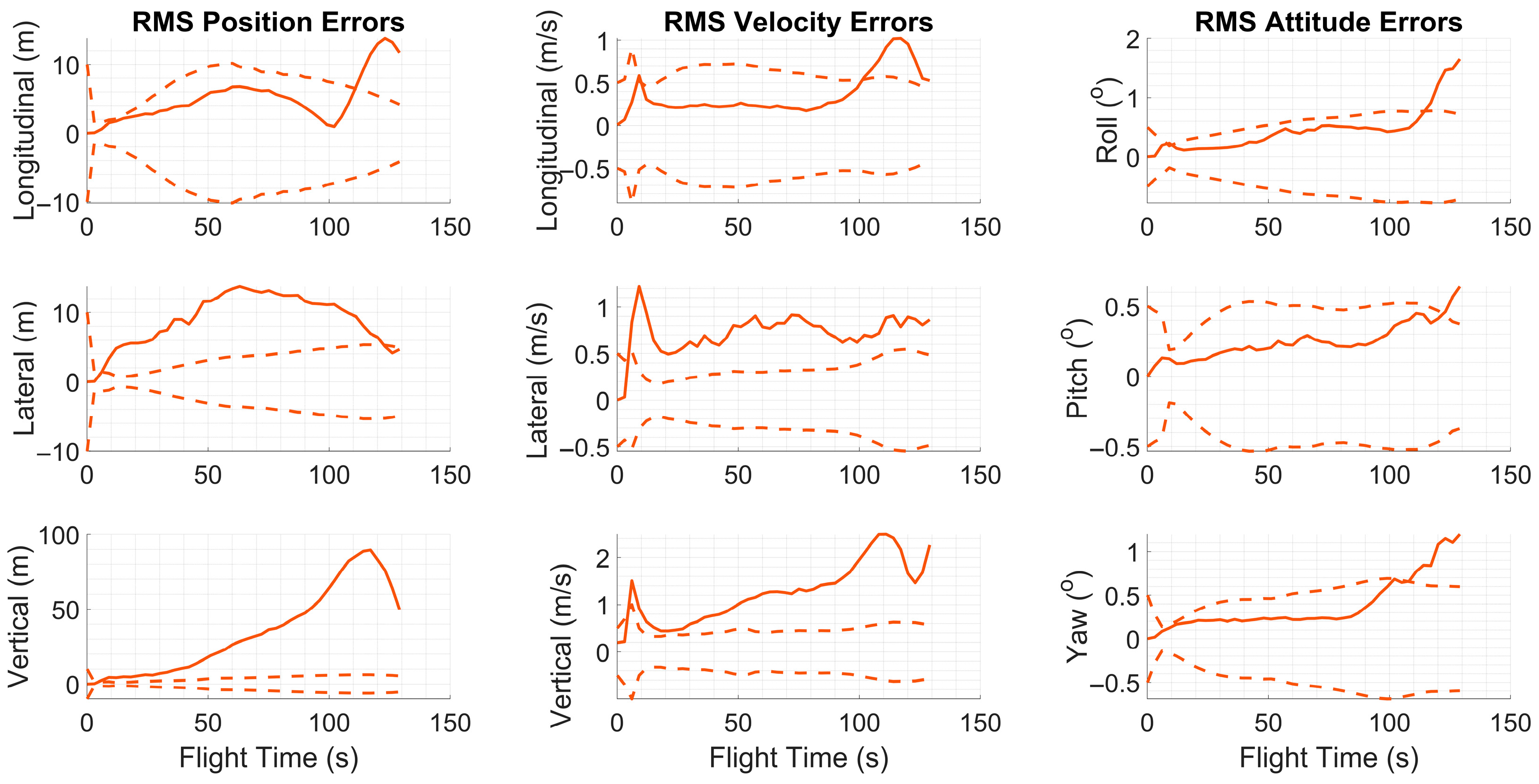

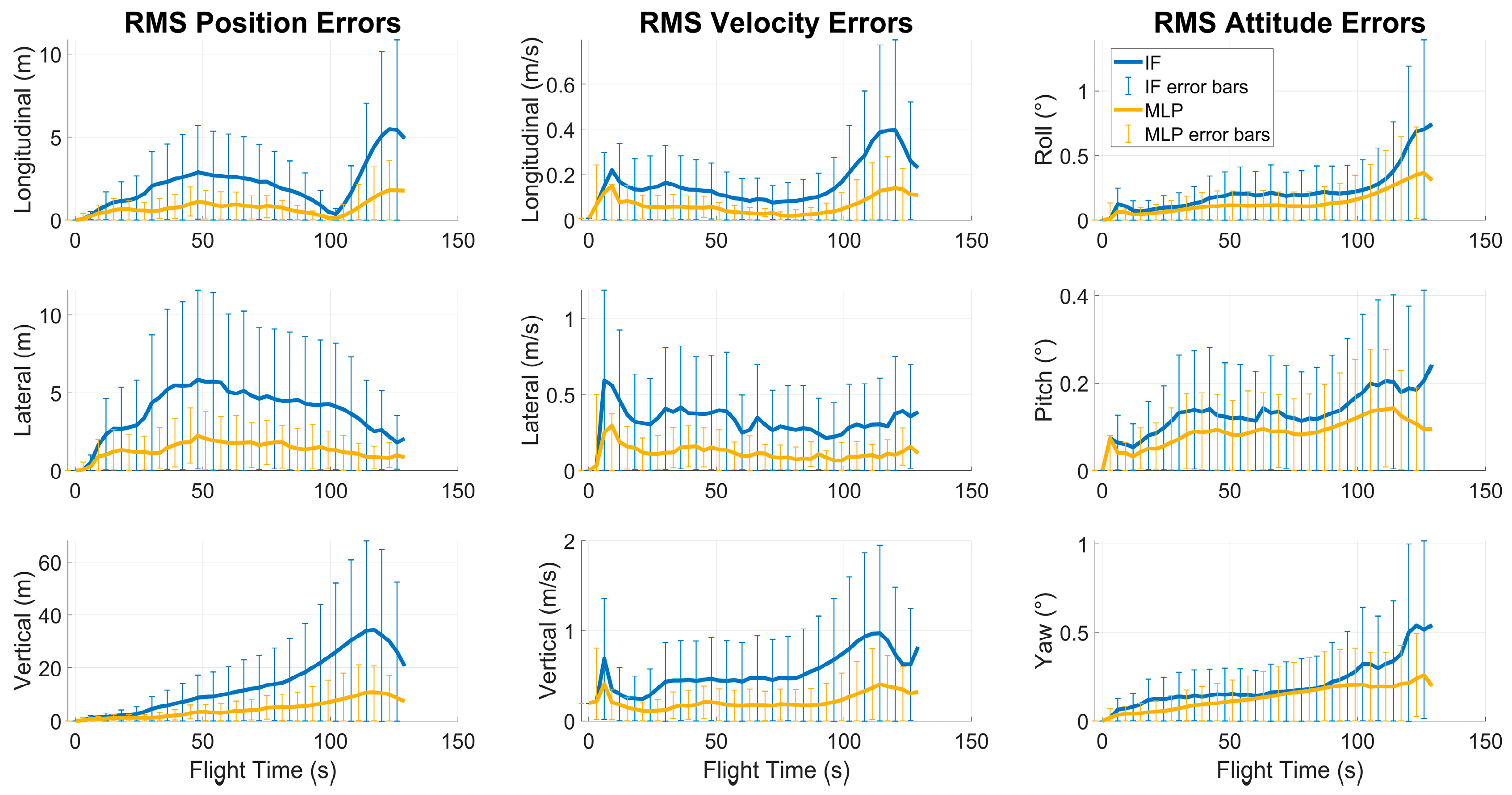

Monte Carlo (MC)-based simulations were performed to evaluate the performance of the proposed framework under a variety of stochastic measurement and communication conditions.

All simulations were implemented in MATLAB R2022b and Simulink. The complete multi-UAV navigation model—including sensor, communication, and filtering subsystems—was built in Simulink, while a MATLAB MC controller script called the model for each run. This structure ensured that every algorithm was executed with identical random-seed realizations of sensor and link errors, allowing fair statistical comparison across 256 runs. UAV trajectories and mission geometries were parameterized in MATLAB to allow consistent regeneration for each run.

The Simulink model consisted of modular sensor, communication, and filtering blocks, including a strapdown inertial navigation algorithm, time-of-arrival and Doppler-based inter-agent measurement models, and a Kalman filter augmented with the proposed learning-based decision module. A fixed-step discrete solver with a 30 ms update period was used to match the real-time processing constraints of UAV onboard computers. The IMU, GNSS-denied conditions, and link-impairment models were implemented using parameter sets derived from standard references and manufacturer specifications. This block-structured architecture allowed consistent timing behavior across agents and ensured reproducible evaluation of measurement delays, dropouts, and distortions within the Monte Carlo framework.

A kinematic flight model was developed for fixed-wing unmanned aerial vehicles (UAVs) to evaluate the proposed method. Each UAV represents an agent within a cooperative navigation network. Initially, all agents are positioned in a predefined formation and perform scheduled roll maneuvers to dynamically alter the formation geometry during the simulation.

The model produces reference trajectories containing position, velocity, and attitude data for each UAV. These reference data are generated using the ‘Kinematic_ECEF.m’ function provided in [36], with a sampling interval of 30 ms. The outputs of this function represent the ground-truth reference values and are summarized in Table 1. To ensure consistency and reproducibility, the kinematic and strapdown navigation models employed in this study were implemented using the verified reference codes provided in the appendices of [36]. These components follow established formulations widely adopted in the literature and were used without modification, because they serve primarily as a standardized simulation basis for evaluating the proposed approach.

Using these reference trajectories, synthetic inertial sensor measurements are generated for each agent. The overall simulation framework is illustrated in Figure 1. In the figure, green labels and arrows represent ideal, error-free reference values, while red labels and arrows denote corrupted sensor measurements and algorithm (estimation) outputs. Light-brown labels correspond to inter-agent range and range-rate measurements transmitted via the network, and dashed lines indicate data exchanged between agents within communication messages.

In the adopted notation, the subscript “e” denotes the ECEF frame, “b” represents the body frame of the UAV, and “p” refers to the peer agent with which communication occurs. The subscript “i” indicates the inertial reference frame, assumed to be fixed with respect to distant stars. The vector notation and frame representations adopted in this study are defined below for clarity:

In this notation, the four vector symbols denote the position, velocity, attitude, and acceleration vectors, respectively. Two of the frame indices attached to each vector identify the reference and target frames, and the third indicates the coordinate frame in which the vector is expressed.

The position, velocity, and attitude-angle vectors of the UAV are defined with respect to the Earth and are all expressed in the ECEF reference frame; each is a function of time.

The specific force and angular rate of the UAV relative to the inertial frame, expressed in the body frame, are the ideal reference values expected to be measured by the inertial measurement unit (IMU). The corrupted sensor measurements used in the simulation are the error-contaminated counterparts of these two quantities, and their error characteristics are defined by the models presented in Equations (2) and (3).

The modeling details are presented in [37]. Each inertial sensor error model comprises a constant bias vector, a misalignment matrix, and a random-walk component.
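The structure of such an inertial error model can be sketched as follows. This is a minimal illustration, not the model of Equations (2) and (3): the function name `corrupt_imu`, the numerical values, and the choice to treat the random-walk component as an accumulated state scaled by the square root of the sampling period are all assumptions made here.

```python
import numpy as np

def corrupt_imu(true_vec, bias, misalignment, rw_state, rw_sigma, dt, rng):
    """Apply bias, misalignment, and a random-walk term to one ideal IMU sample."""
    # Random walk (assumed form): accumulate a Gaussian increment scaled by sqrt(dt).
    rw_state = rw_state + rw_sigma * np.sqrt(dt) * rng.standard_normal(3)
    # Measured value = (I + M) * ideal value + constant bias + random-walk state.
    measured = (np.eye(3) + misalignment) @ true_vec + bias + rw_state
    return measured, rw_state

rng = np.random.default_rng(0)
f_true = np.array([0.0, 0.0, -9.81])    # ideal specific force, body frame (m/s^2)
b_a = np.array([0.01, -0.02, 0.005])    # hypothetical constant bias (m/s^2)
M_a = np.zeros((3, 3))                  # hypothetical misalignment matrix
f_meas, rw = corrupt_imu(f_true, b_a, M_a, np.zeros(3), 1e-3, 0.03, rng)
```

The same function can be reused for the gyroscope channel by passing the ideal angular rate and the gyroscope parameters.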

When modeling the inertial measurement unit (IMU), the sensor performance level was selected by considering the complexity of the UAV systems addressed in this study, their expected navigation accuracy, mission profiles, and sensor-cost budgets. Accordingly, a tactical-grade IMU was modeled as the most appropriate option. This level allows observable error dynamics while maintaining realistic bias and noise characteristics. A higher-grade IMU was intentionally avoided, since for MC analyses a moderate-quality sensor provides larger stochastic variations and more informative error distributions between runs. The adopted noise and bias characteristics were selected to represent typical tactical-grade IMUs used in UAV navigation, based on literature and manufacturer datasheets (e.g., InertialLab IMU-p, Honeywell HG1700 class), and the corresponding parameter set is summarized in Table 2.

Communication-based computations use the position and velocity vectors of each agent together with those of its peer agent. From these quantities, the inter-agent range and range-rate reference values are calculated, and the measurement error model is then applied to generate the corrupted range and range-rate measurements.

Through the communication link, each agent also receives its peer’s estimated navigation states, including position, velocity, and the corresponding covariance matrix, which represents the uncertainty of these estimates.

Each agent outputs estimates of its own position, velocity, and attitude, along with the associated covariance matrices. These estimated states are compared with the reference trajectories generated by the simulation to evaluate the accuracy and reliability of the algorithm.

For clarity and reproducibility, the complete modeling procedure used in this study follows a step-wise structure in which each subsystem is explicitly associated with its governing equations. The kinematic and strapdown inertial navigation elements (Equations (1)–(3)) define the motion dynamics and inertial sensor behavior, while the IMU stochastic error components (Table 2, Equations (2) and (3)) specify bias, noise, and instability characteristics. The cooperative range and range-rate observations are modeled through the two-way ToA and Doppler equations (Equations (4)–(6)), and the communication-induced degradations are introduced using the stochastic link-fault models in Equations (7)–(9). The INS error-state propagation and its discrete-time formulation (Equations (10)–(14)) define the state prediction step of the Kalman filter, whereas the measurement-update stage relies on the residual definitions, Jacobians, and partial-update mechanisms summarized in Equations (15)–(23). Together, these steps constitute the end-to-end modeling pipeline implemented in the simulation environment and correspond directly to the block-level structure illustrated in Figure 1.

2.1.2. Network-Based Measurement Architecture

The cooperative simulation setup employs a wireless mesh network (WMN) architecture for inter-UAV measurements.

The primary objective of the designed WMN structure is to enable each agent to share its local navigation solution and the corresponding uncertainty estimates with the other agents in the formation. During this exchange, inter-agent range and range-rate information is also communicated over the data link. By leveraging these shared measurements, each UAV can improve its own navigation accuracy based on the geometric configuration of the formation.

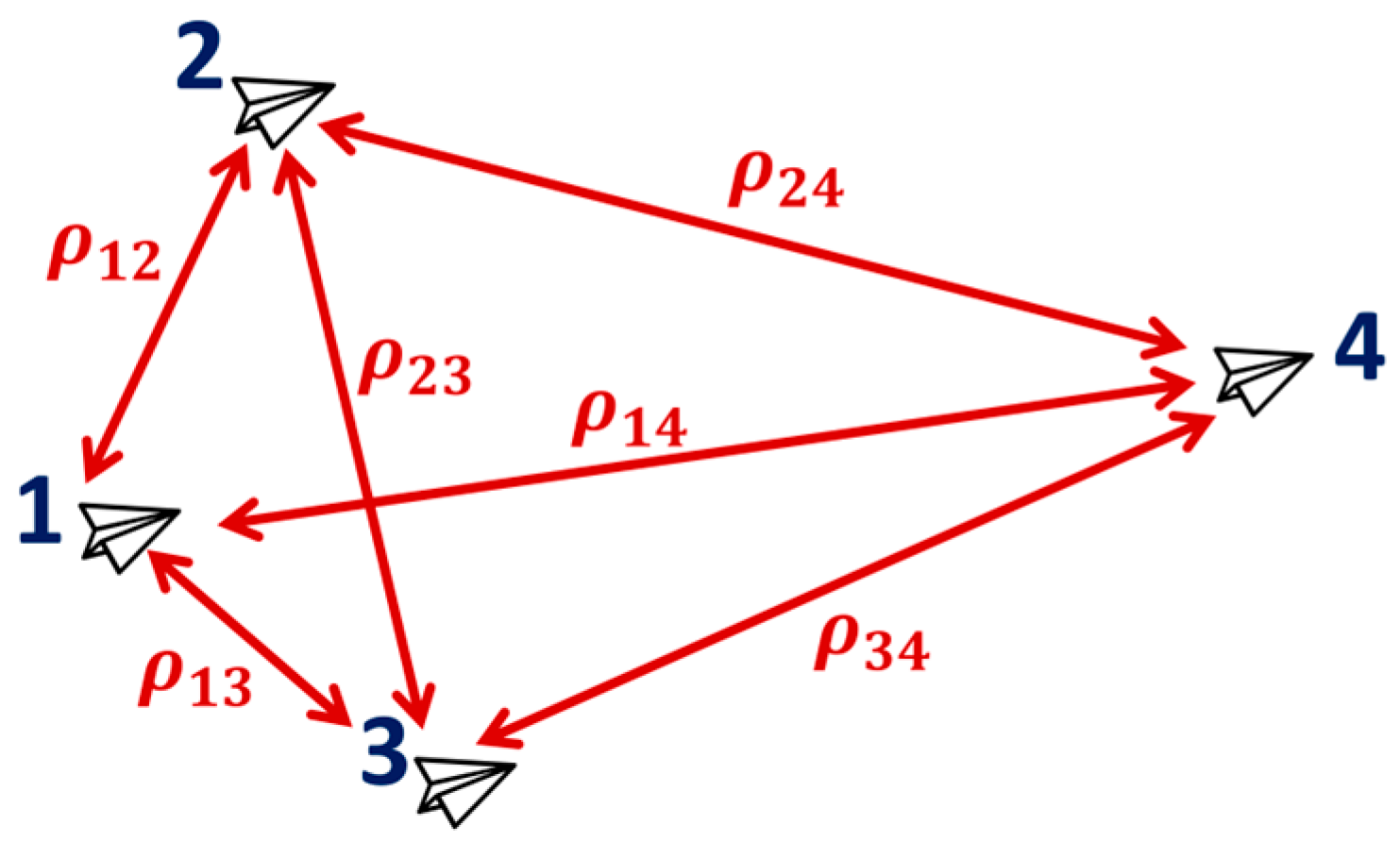

Communication among the agents follows a time-division multiple access (TDMA) protocol. As shown in Figure 2, a four-agent network allows six possible pairwise communication combinations. At each time slot, only one agent pair is active, and the active link alternates cyclically according to the TDMA schedule. During an active communication period, both agents exchange their estimated positions and associated uncertainty data. This information is then used to enhance estimation accuracy along the line-of-sight direction between the communicating pair.
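The pair count and the cyclic slot assignment can be illustrated with a short sketch. The agent numbering (1 to 4) and the order in which pairs are visited are assumptions for illustration; the actual slot order follows the TDMA schedule in Figure 2.

```python
from itertools import combinations, cycle, islice

def tdma_schedule(n_agents, n_slots):
    """Return the active agent pair for each of the first n_slots time slots."""
    # All unordered agent pairs; for four agents this yields six links.
    pairs = list(combinations(range(1, n_agents + 1), 2))
    # Exactly one pair is active per slot, and the sequence repeats cyclically.
    return list(islice(cycle(pairs), n_slots))

pairs_per_cycle = len(list(combinations(range(1, 5), 2)))
schedule = tdma_schedule(4, 8)
```

With four agents, the seventh slot returns to the first pair, so each link is revisited once every six slots.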

In a multi-agent network, the inter-agent range measurement between a pair of communicating agents is modeled as the Euclidean distance between the ECEF position vectors of the local and peer agents plus a zero-mean Gaussian noise term. In the absence of channel degradation, this noise term has a fixed nominal standard deviation.

Similarly, the inter-agent range-rate measurement is defined as the relative ECEF velocity of the two agents projected onto the unit line-of-sight vector between them, plus a Gaussian noise term associated with the range-rate measurement. The unit line-of-sight vector is the difference between the two agents’ position vectors divided by its Euclidean norm.

In nominal communication conditions, the range-rate noise likewise has a fixed nominal standard deviation. The degradation behavior of these measurements is captured through dedicated stochastic error models.
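The nominal range and range-rate model can be sketched numerically as follows. The function names, the sign convention of the line-of-sight vector (pointing from the local agent to the peer), and the example values are assumptions made for illustration.

```python
import numpy as np

def ideal_range_and_rate(r_local, v_local, r_peer, v_peer):
    """Noise-free inter-agent range, range-rate, and unit line-of-sight vector (ECEF)."""
    delta_r = r_peer - r_local
    range_true = np.linalg.norm(delta_r)
    u_los = delta_r / range_true                  # assumed direction: local -> peer
    rate_true = float(u_los @ (v_peer - v_local))  # relative velocity along the LOS
    return range_true, rate_true, u_los

def noisy_measurement(range_true, rate_true, sigma_range, sigma_rate, gen):
    """Add zero-mean Gaussian noise with the nominal standard deviations."""
    return (range_true + sigma_range * gen.standard_normal(),
            rate_true + sigma_rate * gen.standard_normal())

rho, rho_dot, u = ideal_range_and_rate(
    np.zeros(3), np.zeros(3), np.array([3.0, 4.0, 0.0]), np.array([1.0, 0.0, 0.0]))
rho_meas, rho_dot_meas = noisy_measurement(rho, rho_dot, 1.0, 0.1,
                                           np.random.default_rng(0))
```

With the opposite line-of-sight convention, both the vector and the relative velocity change sign, so the range-rate is unaffected.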

2.1.3. WMN Measurement Error Model

In the mesh network configuration, each pair of communicating agents is subject to temporary and systematic measurement degradations that occur randomly over time. A stochastic error model is employed to simulate such degradations for each communication channel. The model checks at every measurement epoch whether a degradation event occurs; if it does, a fixed bias error and a time-varying instability component are introduced and maintained for a randomly determined duration. This structure allows the model to represent both transient and time-dependent error behaviors, as summarized in Equation (7).

In Equation (7), the fixed bias is a constant term drawn from a uniform distribution over a bounded interval. The instability component is a time-varying drift obtained by accumulating small Gaussian perturbations at each sampling step, as defined in Equation (8). The nominal and degraded measurement noises are modeled as zero-mean Gaussian processes with separate variances, and the model is evaluated once per simulation sampling period.

The degradation duration is randomly determined for each event.

At each sampling step, the transition of the channel into the degraded state is governed by a Bernoulli process with a fixed probability. When a degradation event occurs, the total error added to the measurement consists of the fixed bias, its time-varying instability, and the white-noise component, all acting simultaneously throughout the degradation period. After this period, the channel returns to its nominal state, characterized by white noise only, and the same link may subsequently re-enter a degraded state.
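The two-state behavior described above can be sketched as a small generator class. This is an illustrative reading of the text, not the implementation behind Equations (7)–(9): the class name, the symmetric bias interval, the square-root-of-time scaling of the drift, and the uniform integer duration are assumptions.

```python
import numpy as np

class LinkFaultModel:
    """Nominal/degraded error generator for one link and one measurement type."""

    def __init__(self, p_fault, bias_max, drift_sigma, sigma_nom, sigma_deg,
                 dur_min, dur_max, dt, seed=0):
        self.p_fault, self.bias_max, self.drift_sigma = p_fault, bias_max, drift_sigma
        self.sigma_nom, self.sigma_deg = sigma_nom, sigma_deg
        self.dur_min, self.dur_max, self.dt = dur_min, dur_max, dt
        self.gen = np.random.default_rng(seed)
        self.remaining = 0   # samples left in the current degradation event
        self.bias = 0.0
        self.drift = 0.0

    def step(self):
        """Return (error, degraded_flag) for the current measurement epoch."""
        # Bernoulli trial: only a nominal channel can enter the degraded state.
        if self.remaining == 0 and self.gen.random() < self.p_fault:
            self.bias = self.gen.uniform(-self.bias_max, self.bias_max)
            self.drift = 0.0
            # Assumed duration law: uniform integer number of samples.
            self.remaining = int(self.gen.integers(self.dur_min, self.dur_max + 1))
        if self.remaining > 0:
            self.remaining -= 1
            # Drift: accumulate a small Gaussian perturbation at every sample.
            self.drift += self.drift_sigma * np.sqrt(self.dt) * self.gen.standard_normal()
            noise = self.sigma_deg * self.gen.standard_normal()
            return self.bias + self.drift + noise, True
        return self.sigma_nom * self.gen.standard_normal(), False

link = LinkFaultModel(p_fault=0.01, bias_max=5.0, drift_sigma=0.5, sigma_nom=1.0,
                      sigma_deg=2.0, dur_min=30, dur_max=300, dt=0.03, seed=42)
errors = [link.step() for _ in range(1000)]
```

One instance per link and per measurement type keeps the range and range-rate degradations independent; all parameter values shown are placeholders rather than the entries of Table 3.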

This modeling approach allows the simulated measurement data to more accurately reflect the transient error behaviors encountered in real-world conditions, such as communication interference and multipath effects. The overall flow of the error model, which is applied to both range and range-rate measurements, is illustrated in Figure 3, where decision transitions are shown in yellow and error-computation steps are shown in green.

The parameters used in the error model are summarized in Table 3.

2.1.4. Algorithm Framework

The integration of measurements obtained from the mesh network and the outputs of the inertial navigation system (INS) is performed within a linearized Kalman filter framework. The INS error models are defined in the ECEF frame, and all computations are carried out with respect to this reference frame. The filter estimates the error states contained in the error vector defined in Equation (10), which includes three-dimensional vectors representing position, velocity, and attitude errors, as well as the constant accelerometer and gyroscope biases associated with the inertial sensors.

In the simulation, constant biases were identified as the primary error sources significantly affecting the analysis; therefore, only these components were included in the error-state vector. The effects of other error types were represented implicitly through bias instability terms.

This configuration reduces the filter dimension while preserving realism, providing an efficient error model that focuses on the dominant INS error sources.

The error-state vector comprises the position error vector in the ECEF frame, the velocity error vector in the same frame, and the attitude error vector expressed in Euler angles with respect to the ECEF frame, followed by the constant bias components of the accelerometer and gyroscope, respectively.

The body-frame subscript identifies the UAV and takes a distinct value for each agent in the network. These estimated error states are applied in a closed-loop configuration to correct navigation errors in real time.

The state variables defined by the position, velocity, and attitude errors are represented in continuous time by a linearized state-space model. The system matrix of this model contains three terms that require definition. The first is the skew-symmetric form of the Earth’s rotation vector expressed in the ECEF frame. The second is the negative of the skew-symmetric matrix of the accelerometer measurements resolved in the ECEF frame. The third depends on the gravity vector, computed using the WGS-84 gravity model and expressed in the ECEF frame, and on the geocentric radius, which varies with the estimated latitude.
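As a worked example of the first of these terms, the Earth’s rotation vector resolved in the ECEF frame has only a z-component, so its skew-symmetric form has a single pair of non-zero entries. The symbol names below follow a common inertial-navigation convention and are assumptions here; the numerical rate is the WGS-84 value.

```latex
\boldsymbol{\omega}_{ie}^{e} =
\begin{bmatrix} 0 \\ 0 \\ \omega_{ie} \end{bmatrix},
\qquad
\boldsymbol{\Omega}_{ie}^{e} =
\begin{bmatrix}
0 & -\omega_{ie} & 0 \\
\omega_{ie} & 0 & 0 \\
0 & 0 & 0
\end{bmatrix},
\qquad
\omega_{ie} \approx 7.292115 \times 10^{-5}\ \mathrm{rad/s}.
```

For any vector $\mathbf{a}$, the product $\boldsymbol{\Omega}_{ie}^{e}\,\mathbf{a}$ equals the cross product $\boldsymbol{\omega}_{ie}^{e} \times \mathbf{a}$, which is how the term enters the error dynamics.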

The state transition matrix is obtained by linearizing the continuous-time model described above, as detailed in [37], and then discretizing it for numerical implementation.

The system noise covariance matrix is derived following the same procedure.

In the proposed approach, the measurement update step of the Kalman filter is performed exclusively using the available and validated measurements. The range and range-rate observations can be incorporated either jointly or independently. When both measurements are verified as reliable, they are applied simultaneously during the update process. If a degradation is detected in either observation, the filter update is carried out using only the valid measurement. The following formulation describes the update step in the case where only range measurements are utilized.

In the mesh network configuration, the range measurement received from a peer agent is compared with the corresponding predicted value obtained from the nonlinear system model. The measurement residual used in the innovation computation during the Kalman filter update step is the difference between the measured range and the predicted range.

The predicted range is the Euclidean norm of the difference between two position vectors: the ECEF position estimate of the local agent, obtained from the integrated navigation filter, and the position estimate of the peer agent, received through inter-agent communication. The measurement matrix for the range update is formed from the unit line-of-sight vector between the communicating agents, expressed in the ECEF frame and computed from the same two position estimates.

Range-rate measurements are incorporated into the filter in a similar manner and are used only when their integrity is verified. In this case, the measurement residual is the difference between the measured and predicted range-rate, where the predicted range-rate is the inner product of the unit line-of-sight vector with the difference between the estimated velocity vectors of the local and peer agents. The measurement matrix for range-rate updates is defined analogously.

When both range and range-rate measurements are validated simultaneously, the Kalman update step is performed using both observations jointly. In this case, the measurement residual combines the range and range-rate residuals, and the combined measurement matrix is built from the two individual measurement matrices.

The decision table for the measurement update modes is presented in Table 4.
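The partial-update logic can be sketched as a selection of residual components and measurement-matrix rows. Only mode 3 (both measurements used) is stated explicitly in the text; the numbering of the remaining modes, the function names, the 15-state layout, and the example measurement rows are assumptions for illustration.

```python
import numpy as np

def select_update_mode(range_valid, rate_valid):
    """Map validity flags to an update mode (numbering other than 3 is assumed)."""
    if range_valid and rate_valid:
        return 3            # joint range and range-rate update
    if range_valid:
        return 1            # assumed: range-only update
    if rate_valid:
        return 2            # assumed: range-rate-only update
    return 0                # assumed: no measurement update

def build_update(range_valid, rate_valid, res_range, res_rate, h_range, h_rate):
    """Keep only the residual components and H rows of the valid measurements."""
    keep = np.array([range_valid, rate_valid], dtype=bool)
    residual = np.array([res_range, res_rate])[keep]
    H = np.vstack([h_range, h_rate])[keep]
    return select_update_mode(range_valid, rate_valid), residual, H

u_los = np.array([0.6, 0.8, 0.0])
h_rho = np.concatenate([u_los, np.zeros(12)])                   # hypothetical range row
h_rate = np.concatenate([np.zeros(3), u_los, np.zeros(9)])      # hypothetical range-rate row
mode, z, H = build_update(True, False, 2.5, -0.1, h_rho, h_rate)
```

When neither measurement is valid, the function returns an empty residual and a measurement matrix with zero rows, so the caller can skip the update step.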

Several mechanisms are implemented to dynamically manage the measurement update mode when faults occur, preventing degraded observations from adversely affecting the filter performance.

The uncertainty associated with the range measurements is modeled as the combination of two contributions. The first uses the first three diagonal elements of the covariance matrix associated with the peer agent’s position estimates; the scalar product of these terms with the unit line-of-sight vector quantifies the position-estimate uncertainty along the communication direction. The second is the measurement noise variance, previously defined in Equation (5).

A similar uncertainty model is applied for the range-rate measurements. This formulation follows the same principle as the range-based model, directly reflecting the estimation uncertainty along the measurement direction and thereby improving the overall filter performance.

When both measurements are available and validated, the two covariance values are combined into a diagonal measurement covariance matrix.

This configuration integrates both range and range-rate measurement uncertainties into the filter, enhancing update consistency by accounting for estimation uncertainty along the measurement direction.
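A minimal numerical sketch of this measurement covariance construction is given below. Weighting each variance by the squared line-of-sight component (the projection of a diagonal covariance onto the line of sight) is an assumption, as are the function names and the example values.

```python
import numpy as np

def projected_variance(u_los, peer_cov_diag, sigma_meas):
    """Peer uncertainty projected on the line of sight plus sensor noise variance."""
    # Assumed weighting: squared LOS components applied to the diagonal variances.
    return float(np.sum(u_los**2 * peer_cov_diag) + sigma_meas**2)

def joint_covariance(var_range, var_rate):
    """Diagonal measurement covariance for a joint range and range-rate update."""
    return np.diag([var_range, var_rate])

u_los = np.array([1.0, 0.0, 0.0])
var_range = projected_variance(u_los, np.array([4.0, 9.0, 16.0]), 1.0)
var_rate = projected_variance(u_los, np.array([0.04, 0.09, 0.16]), 0.1)
R = joint_covariance(var_range, var_rate)
```

In this example the line of sight lies along the first axis, so only the first variance of the peer contributes: the range variance is 4 + 1 = 5.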

The Kalman filter is implemented with a closed-loop feedback structure, which dynamically evaluates uncertainty along the measurement direction to ensure more consistent updates. Further methodological details are provided in [36].

2.1.5. Simulation Scenario

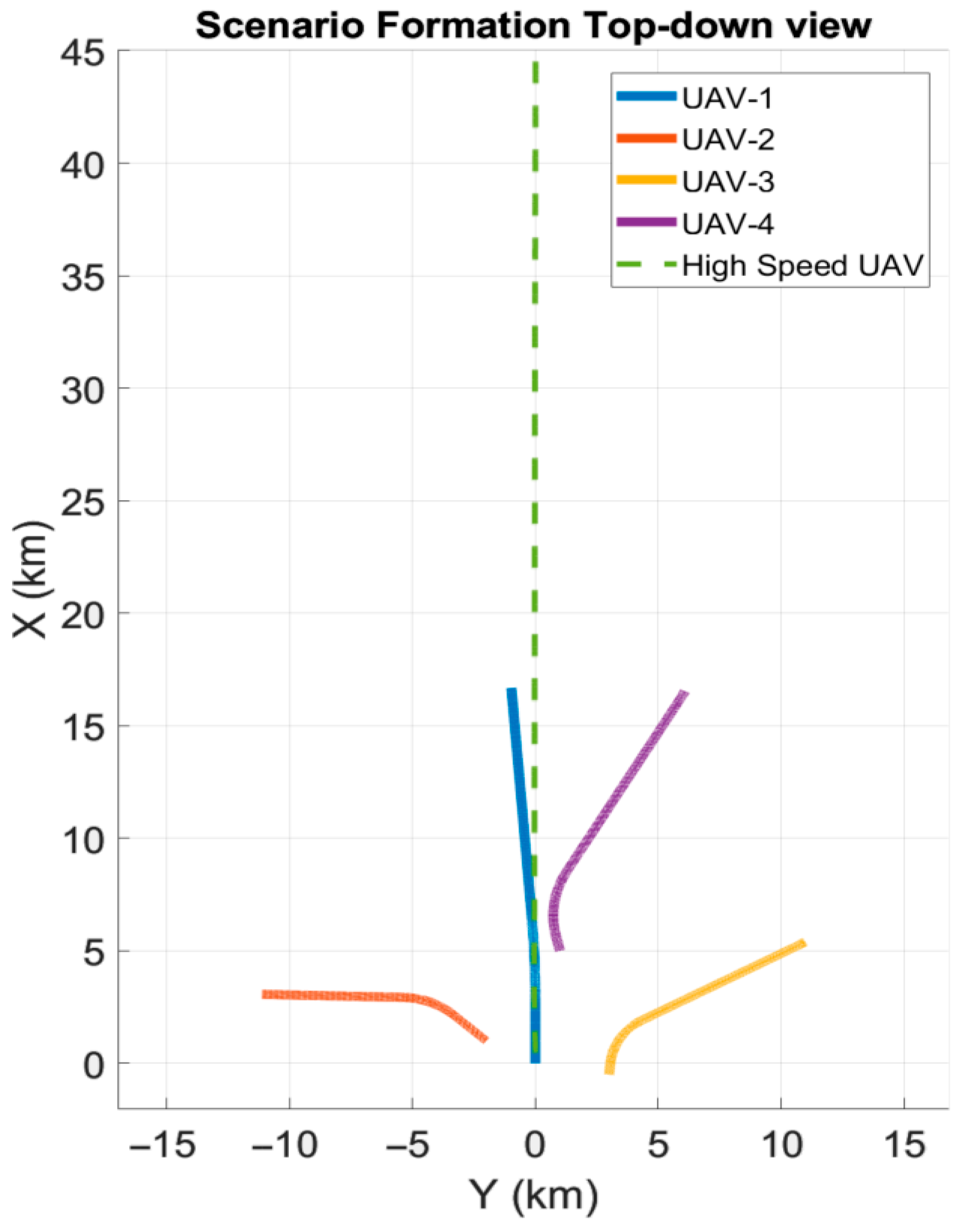

In the simulation environment, a scenario was designed to enable a fair comparison of the navigation performance of different methods. In this setup, a formation of four UAVs initially performs a coordinated flight in a predefined configuration. At the start of the simulation, one of the UAVs in the formation releases a separate high-speed UAV, which accelerates and flies approximately 45 km ahead to execute an assigned mission.

The total simulation duration is set to 130 s. During this period, the remaining UAVs in the formation perform coordinated roll maneuvers, turning alternately to the right and left. This behavior creates a more favorable geometric distribution that enhances the navigation accuracy of the high-speed UAV. A top-down view of the formation and the flight trajectories of all agents are illustrated in Figure 4. This scenario provides a dynamic and application-oriented test environment for evaluating data-sharing-based enhancement processes within the mesh network architecture.

Within this scenario, Monte Carlo (MC) simulations were conducted in which both inertial sensor and communication errors varied stochastically. To ensure a fair comparison among different measurement-mode selection strategies, all random variables were generated deterministically from the run index, so that a given run index produced the same error realizations for every method. Consequently, although the individual error realizations differed between runs, all methods were evaluated under identical stochastic conditions.

Due to the randomness of the inertial sensor errors, the formation geometry shown in Figure 4 exhibited slight variations between runs within a predefined tolerance range. At the beginning of each run, the inertial sensor error parameters specific to that trial were randomly drawn from zero-mean normal distributions and kept constant throughout the simulation. The standard deviations of these distributions were determined based on the nominal error values provided in Table 2, ensuring a statistically consistent and controlled error model.
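The idea of tying every random draw to the run index can be sketched as follows. The study itself was implemented in MATLAB/Simulink; this Python fragment, the function name `draw_run_errors`, and the standard deviations shown are illustrative assumptions only.

```python
import numpy as np

def draw_run_errors(run_index, nominal_sigma):
    """Draw the constant per-run error parameters from a run-indexed generator."""
    gen = np.random.default_rng(run_index)   # seed depends only on the run index
    # Zero-mean normal draw, one value per entry of nominal_sigma.
    return gen.normal(0.0, nominal_sigma)

sigma = np.array([0.01, 0.01, 0.01])               # hypothetical nominal sigmas
errors_for_method_a = draw_run_errors(7, sigma)    # run 7, first method
errors_for_method_b = draw_run_errors(7, sigma)    # run 7, second method
errors_other_run = draw_run_errors(8, sigma)       # a different run
```

Both methods see exactly the same realization for run 7, while run 8 yields a different one, which is the property the comparison relies on.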

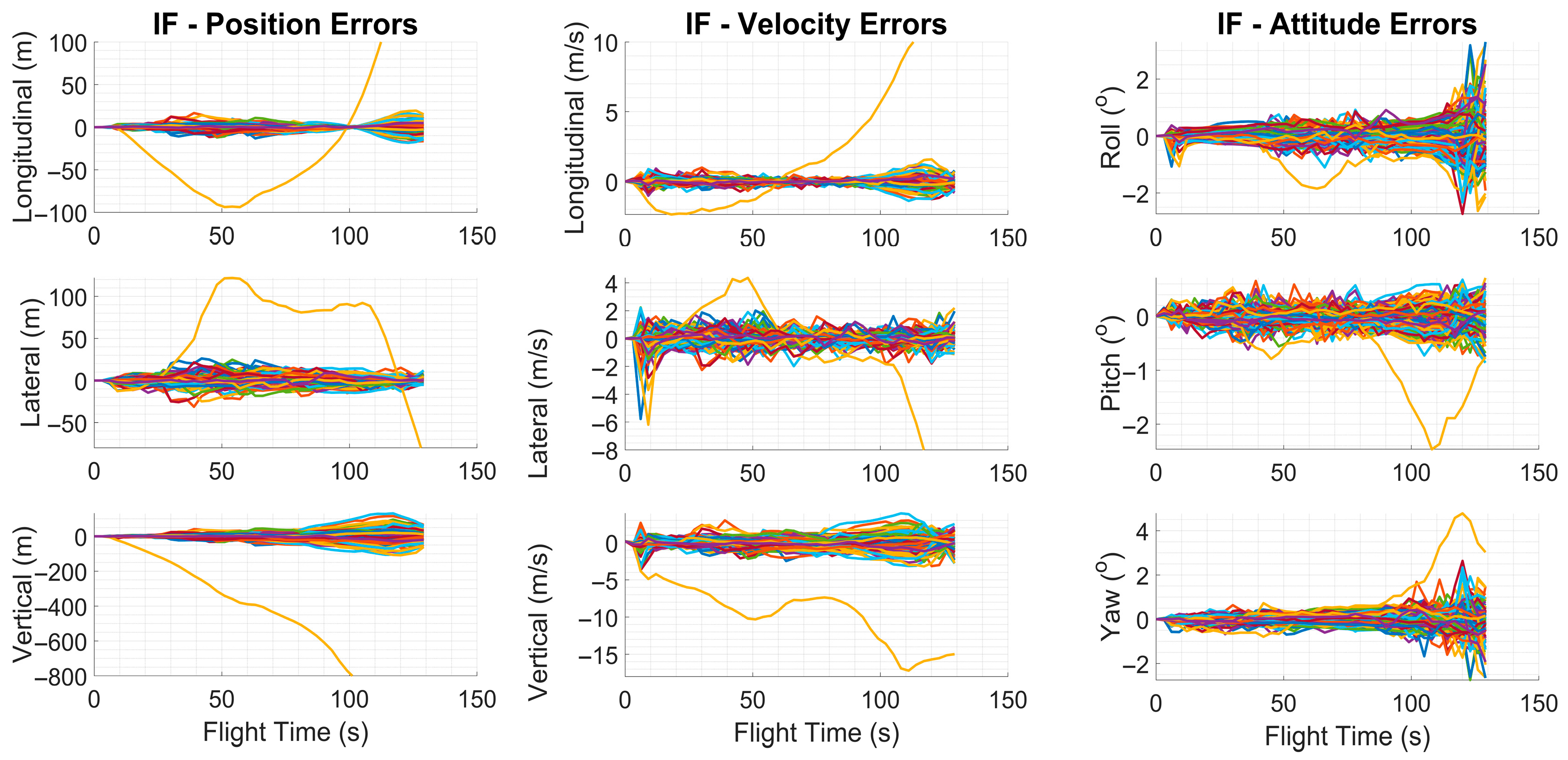

Under this configuration, 256 MC runs were performed for each method. For the high-speed UAV, position, velocity, and attitude errors were recorded, along with the algorithmic decisions regarding the measurement update mode and the corresponding communication degradations. This allowed direct observation of each algorithm’s decision accuracy and its influence on navigation performance under identical simulation conditions.

2.1.6. Training Dataset Configuration

To enable the deep learning-based algorithms to make accurate decisions regarding the presence of measurement faults, suitable input parameters were defined for both the algorithm and the simulation environment. In particular, for reinforcement learning (RL)-based architectures, several indirect features were introduced as additional inputs to allow the algorithm to learn the direct impact of potential measurement faults on navigation performance based on the current state. This configuration was designed to improve the decision accuracy and effectiveness of the developed models in achieving the intended outputs. The list of the 29 input parameters selected as features for the network is presented in Table 5.

The 29-dimensional feature vector was selected after a series of ablation experiments conducted during network development. Early prototypes that used only raw range and range-rate measurements achieved poor discrimination performance, especially under intermittent link errors and Doppler-induced disturbances. Features related to innovation statistics, covariance-weighted residuals, and Doppler consistency proved essential: removing any one of these groups caused the MLP validation accuracy to drop by 10–15%. Additional tests confirmed that reducing the feature set below 20 elements consistently degraded classification accuracy, particularly in scenarios with asynchronous message arrivals or rapidly varying relative geometries. Because the decision mechanism is instantaneous rather than temporal, the network requires a sufficiently rich snapshot of the current measurement and its reliability indicators. The resulting 29-feature configuration provided the most reliable balance between representational richness and computational efficiency.

The last four input features, represented by the “MacOneHot” vector, were introduced to prevent LSTM- or CNN-based deep learning models from incorrectly capturing sequential patterns in the TDMA-based communication structure. Such models are capable of learning temporal dependencies; however, the periodic but alternating nature of the TDMA pattern may lead to false correlations. These features indicate which peer the high-speed UAV is communicating with at the current time step. Instead of a numerical (1–4) encoding, a separate bit is assigned to each agent through one-hot encoding, allowing the time-series models to make more accurate predictions without being affected by systematic biases arising from the communication sequence.
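The encoding can be illustrated with a short sketch. The function name `mac_one_hot` and the peer numbering starting at 1 are assumptions; only the four-element one-hot structure comes from the text.

```python
def mac_one_hot(peer_id, n_peers=4):
    """Return a list with a single 1 at the position of the active peer."""
    if not 1 <= peer_id <= n_peers:
        raise ValueError("peer_id out of range")
    # One bit per peer instead of a single ordinal value from 1 to n_peers.
    return [1 if k == peer_id else 0 for k in range(1, n_peers + 1)]

features_tail = mac_one_hot(3)
```

Unlike a numerical 1–4 code, this representation imposes no ordering or distance between peers, so the network cannot interpret peer 4 as "larger" than peer 1.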

A Monte Carlo simulation consisting of 1024 runs was conducted to generate label data corresponding to communication degradations along with the selected input features. A different random number generator library was used for this simulation compared to that employed in the algorithm testing phase. This ensured statistical independence between the training and test datasets. In addition, a subset of 10 randomly selected runs from the training data was reserved as the validation set.

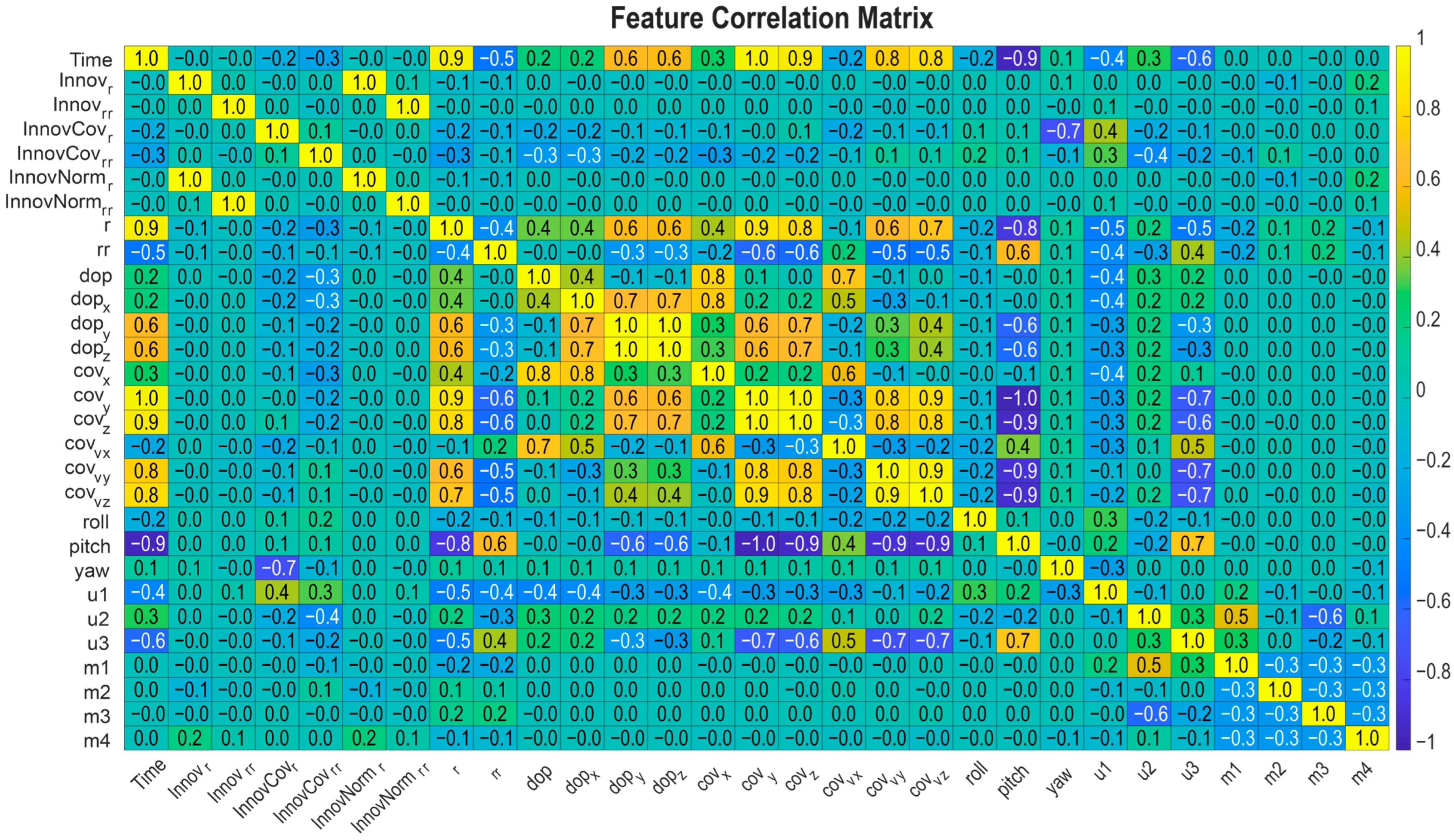

To assess the quality of the generated dataset, a correlation matrix was computed for all input features, and analyses were carried out based on this matrix. These analyses revealed linear relationships among features and allowed the identification of redundant or multicollinear inputs that could potentially affect model performance.

Although relatively high linear correlations were observed among some input parameters, these relationships were not found to have any significant adverse effect on the training process or the generalization capability of the model. To preserve feature diversity and to exploit potential nonlinear dependencies, all parameters were retained in the training phase without any feature elimination. The correlation matrix is shown in Figure A1 in Appendix A.

2.2. Methods

Several algorithms are introduced to determine which measurements can be used while preserving Kalman filter performance under degraded conditions. Each algorithm is described in terms of how it adaptively manages the measurement update process and is evaluated with respect to its influence on overall navigation performance.

The four algorithms, namely the Innovation Filter (IF), Deep Q-Network (DQN), Multi-Layer Perceptron (MLP), and Long Short-Term Memory (LSTM), were implemented in MATLAB/Simulink and executed on a standard workstation. Preliminary timing tests showed that the framework operates faster than real time, indicating that the proposed architecture does not impose significant computational demand. Additional implementation and hardware details are provided in Appendix B.

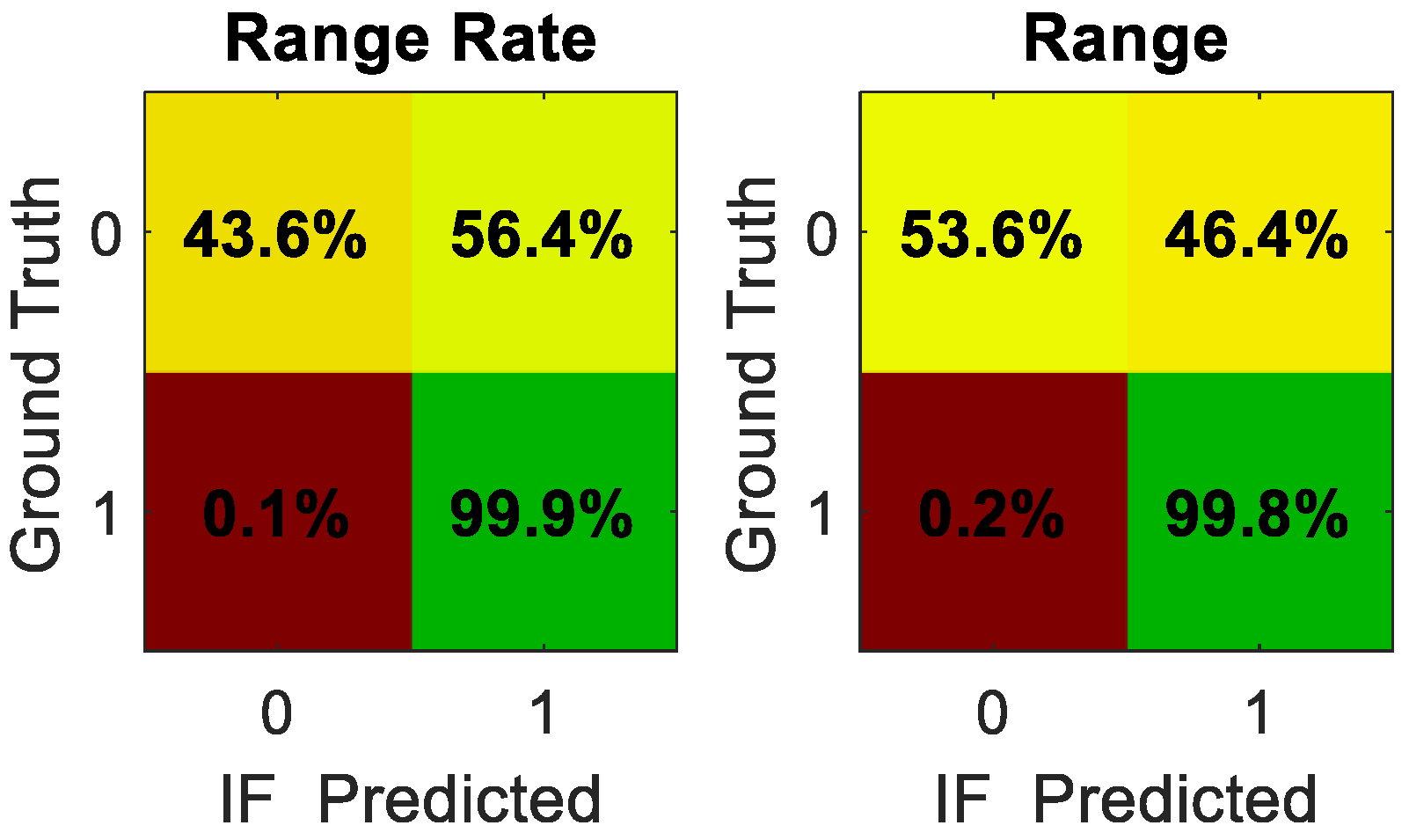

2.2.1. Innovation Filter (IF)

In conventional Kalman filter implementations, the Innovation Filter is frequently employed to assess measurement inconsistencies. As a reference approach, a threshold-based innovation algorithm was first examined. The inputs used in this approach include the innovation, its covariance, and the innovation norm, which are features also utilized by the other algorithms, as listed in Table 5.

The innovation vector is calculated as the difference between the received measurements and their predicted values. It is computed using both measurement components; however, during the Kalman update, only the parts corresponding to valid measurements are applied, depending on the algorithmic decision.

Unlike scalar gating methods that compress multidimensional residuals into a single threshold value, the proposed vectorized innovation norm preserves component-wise information associated with range and range-rate measurements. This distinction is essential because the objective of the proposed approach is not only to reject severely corrupted observations but also to exploit partially valid measurement components whenever possible. For example, in cases where the range measurement is degraded while the Doppler-based range-rate remains reliable, using a scalar innovation norm would force both components to be discarded simultaneously. By evaluating the full innovation vector, the system can selectively retain the undistorted components, enabling partial measurement updates as defined in Table 4. This allows the learning-based module to capture subtle inconsistencies across different measurement channels and ensures that useful information is not lost due to localized faults.

The innovation covariance is computed alongside the innovation, and the vectorized innovation norm used to evaluate the reliability of each measurement component is obtained by normalizing each innovation component individually using the innovation covariance.

Since the vectorized innovation norm is normalized component-wise, it allows each measurement element to be assessed individually in proportion to its own reliability, in addition to the commonly used scalar innovation norm. The absolute value of each component is compared against a predefined threshold to evaluate the consistency of the corresponding measurement.

A scalar innovation norm was not considered as a baseline because it compresses all residual components into a single value and is therefore incompatible with the partial-update mechanism summarized in Table 4, which requires preserving component-level innovation information.

The resulting consistency information is used as an input to the decision logic summarized in Table 4, determining the measurement update mode applied in the Kalman filter.
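A component-wise gating step of this kind can be sketched as follows. Normalizing each innovation component by the square root of the corresponding diagonal element of the innovation covariance is an assumption about the exact form of the norm, and the mode numbering other than mode 3 is likewise assumed; the threshold of 4 is the value used in the MC simulations.

```python
import numpy as np

def innovation_filter(innovation, innovation_cov, threshold=4.0):
    """Gate range and range-rate separately using a component-wise normalized norm."""
    innovation = np.asarray(innovation, dtype=float)
    sigma = np.sqrt(np.diag(innovation_cov))    # per-component standard deviation
    norm = np.abs(innovation) / sigma           # vectorized innovation norm (assumed form)
    range_ok, rate_ok = (bool(flag) for flag in norm <= threshold)
    # Assumed numbering: 3 = both, 1 = range only, 2 = range-rate only, 0 = none.
    mode = 3 if (range_ok and rate_ok) else 1 if range_ok else 2 if rate_ok else 0
    return norm, mode

norm, mode = innovation_filter([10.0, 0.5], np.diag([4.0, 1.0]))
```

In this example the range component is five standard deviations from its prediction and is rejected, while the range-rate component passes, so only the range-rate update is applied. A scalar norm would have discarded both.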

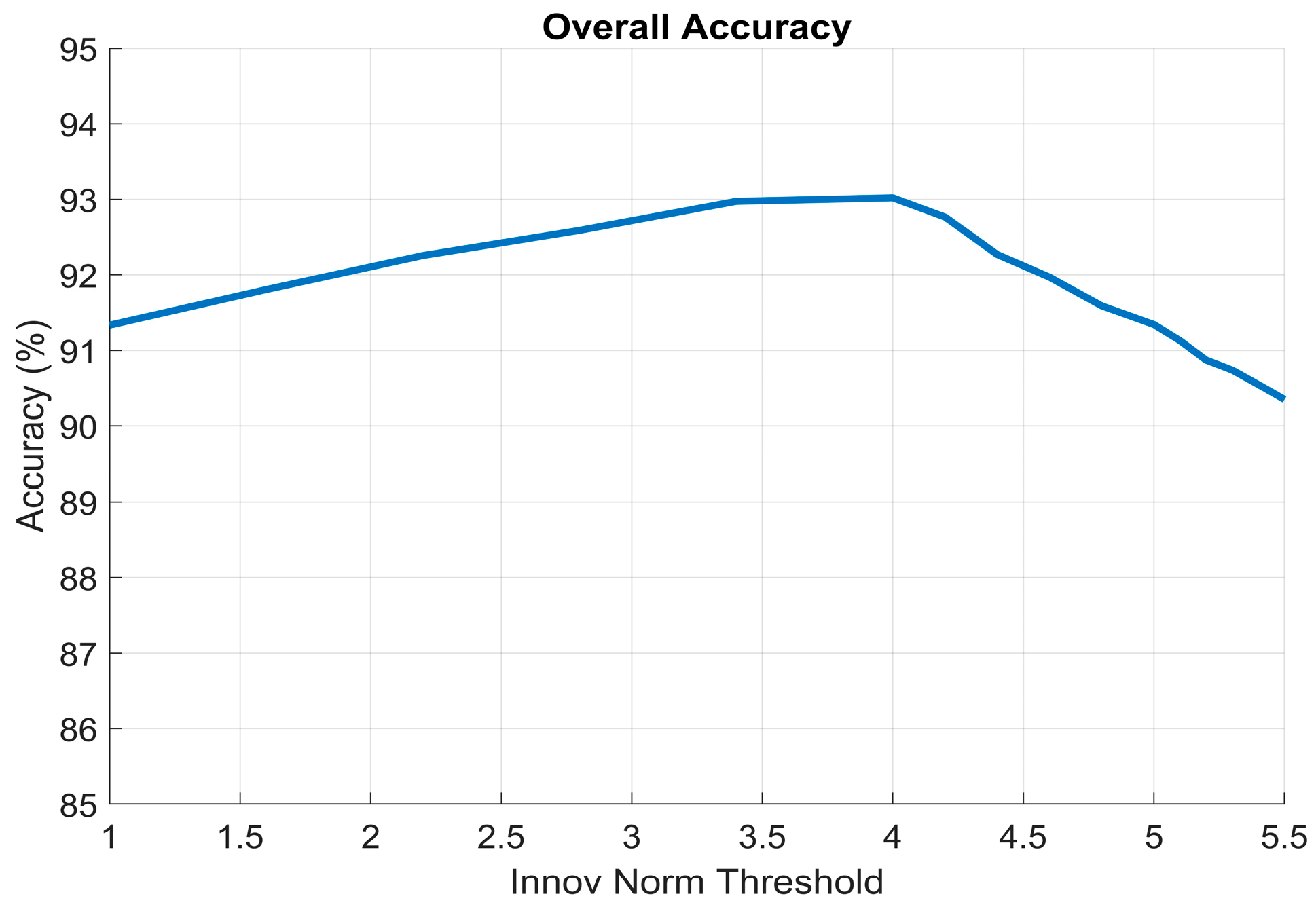

An ablation study was conducted to evaluate the sensitivity of the Innovation Filter to the choice of innovation-norm threshold. Sixteen threshold values between 1 and 5.5 were tested, and the resulting detection accuracy curve is shown in Figure 5. The results indicate that thresholds below 4 are overly aggressive, causing unnecessary rejection of partially valid measurements (increased false positives) and reducing overall accuracy through decreased measurement utilization. Conversely, thresholds above 4 become too permissive, allowing a larger fraction of degraded observations to pass through the update step (increased false negatives), which in turn deteriorates navigation consistency. The threshold value of 4 provided the best balance between rejecting corrupted measurements and retaining reliable components, and was therefore selected as the nominal operating point for all experiments, including the MC simulations.

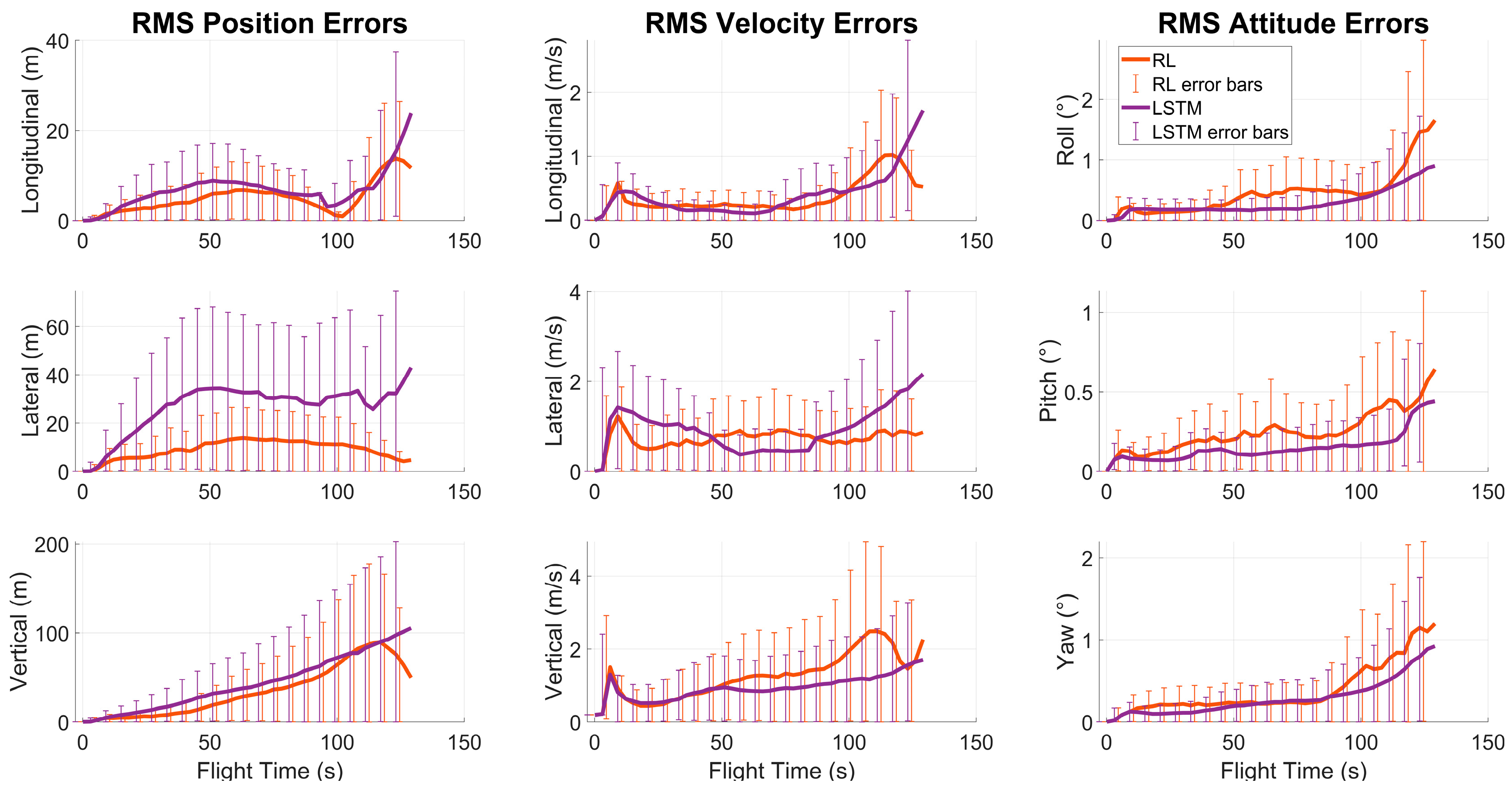

2.2.2. Reinforcement Learning (RL)

The simulation environment was configured so that the RL agent could observe the consequences of its decisions and receive rewards or penalties accordingly. The reward and penalty mechanism was defined based on the changes in navigation errors during simulation time steps where a WMN update occurred. If the position and velocity errors decreased after an update, a reward was given to the agent; conversely, an increase in these errors resulted in a penalty.

To prevent the RL agent from favoring a single type of update (e.g., only range or only range-rate), an additional bonus reward was introduced when both measurements were used simultaneously (measurement mode 3) and the reward condition was satisfied.

The total reward applied at each step consisted of two main components, a position-accuracy-based term and a velocity-accuracy-based term, defined as follows:

The position- and velocity-based components, together with the bonus reward applied when the measurement mode equals three, are combined to yield the total reward value at each time step:

where

.
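The reward logic described in this subsection can be sketched as below. This is an illustration under stated assumptions, not the reward definition used in the study: the magnitudes `unit` and `bonus` are placeholders, and the "reward condition" for the bonus is read here as both the position and velocity errors having decreased.

```python
def step_reward(pos_err_before, pos_err_after,
                vel_err_before, vel_err_after,
                mode, unit=1.0, bonus=0.5):
    """Total reward for one WMN update step (magnitudes are placeholders)."""
    def term(before, after):
        if after < before:
            return unit      # error decreased: reward
        if after > before:
            return -unit     # error increased: penalty
        return 0.0           # unchanged: neutral

    r_pos = term(pos_err_before, pos_err_after)
    r_vel = term(vel_err_before, vel_err_after)
    # Assumed reading of the "reward condition": both errors decreased.
    r_bonus = bonus if (mode == 3 and r_pos > 0 and r_vel > 0) else 0.0
    return r_pos + r_vel + r_bonus
```

The bonus is only granted on top of a successful update, so using both measurements is encouraged without rewarding mode 3 when it degrades the solution.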

The network input features listed in Table 5 were used as the observable states for the RL agent. Training was conducted by continuously varying the stochastic parameters in the simulation environment, ensuring a generalizable learning process distinct from the test dataset used in the MC simulations.

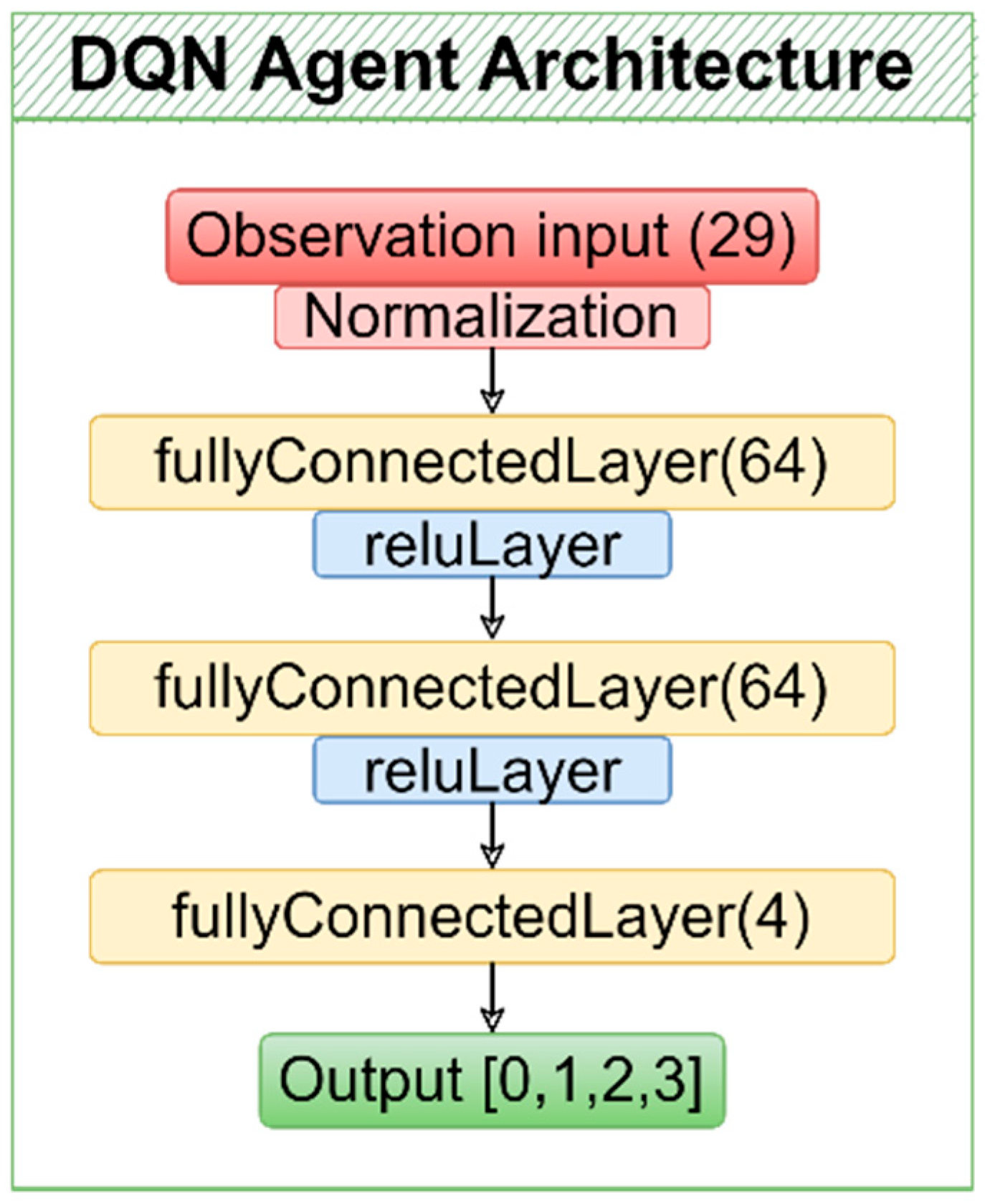

A Deep Q-Network (DQN)-based reinforcement learning agent was employed. The DQN structure is appropriate for this problem because it processes continuous feature vectors while operating over a discrete action space. The learning process was further stabilized through a target network and an experience replay buffer. For these reasons, the DQN agent was considered an effective and suitable approach for the measurement selection problem. The architecture designed for the DQN agent is illustrated in Figure 6.
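The two stabilizing mechanisms mentioned above, experience replay and a target network, can be sketched in a framework-free way. The class and function names are hypothetical, the Q-values are plain lists standing in for network outputs, and the four-element action vector is an assumption. The hyperparameters actually used are those reported in Appendix C.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) tuples."""
    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped

    def add(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size, rng=random):
        # Uniform sampling breaks the temporal correlation between updates.
        return rng.sample(list(self.buffer), batch_size)

def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)

def td_target(reward, next_q_values_target, done, gamma=0.99):
    """Bootstrapped target computed from the frozen target network's Q-values."""
    return reward if done else reward + gamma * max(next_q_values_target)
```

Computing the target from a periodically updated copy of the network keeps the regression target from shifting at every gradient step.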

During training, multiple hyperparameter variations (including changes in exploration rates, reward coefficients, discount factors, and target-network update frequency) were tested to stabilize convergence and improve decision consistency. Although certain configurations yielded smoother training trajectories, the overall learning performance remained constrained by the limited temporal structure imposed by the TDMA-based measurement sequence, which prevented the DQN agent from reliably exploiting long-term dependencies. The final parameter set reported in Appendix C and Table A2 represents the most stable and consistent configuration obtained.

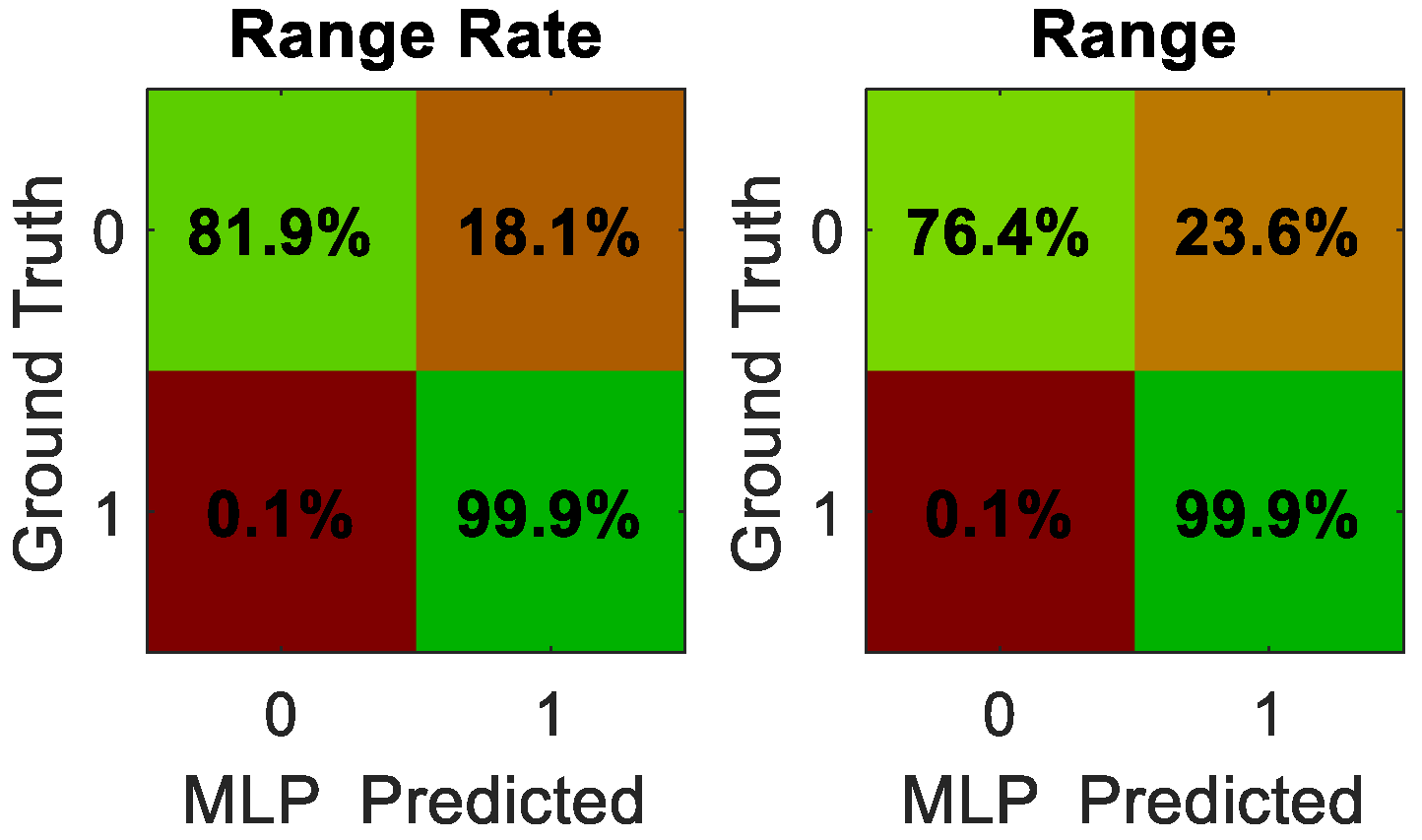

2.2.3. Multi-Layer Perceptron (MLP)

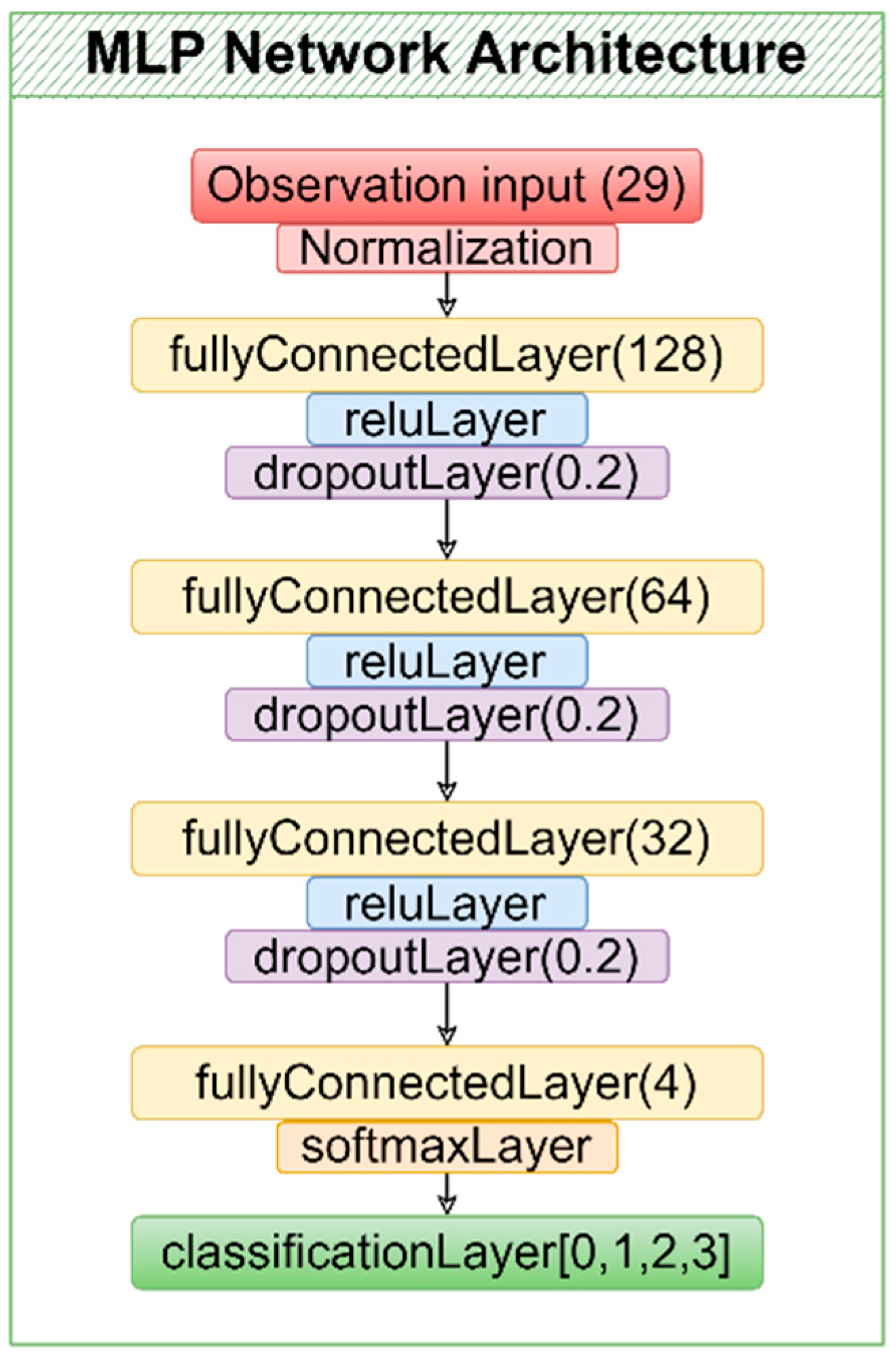

Multi-layer perceptrons are widely used neural-network models due to their capability to learn nonlinear decision boundaries, making them suitable for classification problems. An MLP-based structure was designed to make decisions solely from instantaneous observation data, without considering the temporal continuity of measurement errors. The designed network was trained to determine the appropriate measurement update mode, and its performance was evaluated through MC simulations after the training process was completed.

Training experiments demonstrated that network stability was highly sensitive to learning rate and mini-batch selection. High learning rates (1 × 10⁻³ to 5 × 10⁻⁴) converged quickly but consistently yielded lower validation accuracy (≈0.86), whereas reducing the learning rate to 1 × 10⁻⁵ and increasing the batch size improved generalization. Furthermore, the application of z-score normalization and class-balanced weighting significantly improved classification robustness, increasing validation accuracy to nearly 0.97. The final training configuration summarized in Table A3 reflects the most stable and generalizable setup identified after extensive experimentation with multiple architectural and hyperparameter combinations.
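The two preprocessing steps credited with the accuracy gain can be sketched as follows. The helper names are hypothetical, and the inverse-frequency rule used for the class weights is a common heuristic assumed here rather than a rule confirmed by the text.

```python
from collections import Counter
from statistics import mean, pstdev

def fit_zscore(columns):
    """Per-feature (mean, std) estimated on the training set only.

    A zero standard deviation is replaced by 1.0 to avoid division by zero.
    """
    return [(mean(col), pstdev(col) or 1.0) for col in columns]

def apply_zscore(sample, stats):
    """Standardize one feature vector with previously fitted statistics."""
    return [(x - mu) / sigma for x, (mu, sigma) in zip(sample, stats)]

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_per_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

# Toy data: two feature columns and an imbalanced label set.
stats = fit_zscore([[1.0, 2.0, 3.0], [10.0, 10.0, 10.0]])
scaled = apply_zscore([2.0, 10.0], stats)
weights = balanced_class_weights([0, 0, 0, 1])
```

Rare update modes receive larger weights in the loss, which counteracts the tendency of the classifier to favor the majority class.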

Several MLP architectures were evaluated during development, including both shallow (64-32-4) and deeper networks (64-64-16-4). Increasing network depth or width did not improve validation accuracy beyond approximately 0.86 and often introduced mild overfitting, especially in the presence of class imbalance. Conversely, smaller networks lacked sufficient capacity and produced unstable gating decisions. The best balance between accuracy, robustness, and computational efficiency was achieved with a compact 3-layer architecture (64-32-4) trained using z-score normalization and class-balanced weights, which increased validation accuracy to nearly 0.97. This architecture also provided the lowest inference latency, making it suitable for real-time deployment in resource-constrained UAV platforms.
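For illustration, a forward pass through a 64-32-4 network can be written in a few lines. The ReLU activations, the softmax output, the random initialization, and the input size `n_features` are assumptions made for this sketch. The actual inputs are those listed in Table 5 and the trained parameters are not reproduced here.

```python
import math
import random

def dense(x, weights, biases):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def relu(v):
    return [max(0.0, a) for a in v]

def softmax(v):
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def mlp_forward(features, layers):
    h = features
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(h, weights, biases))

rng = random.Random(0)
n_features = 8  # hypothetical input size
sizes = [n_features, 64, 32, 4]
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
probs = mlp_forward([rng.gauss(0.0, 1.0) for _ in range(n_features)], layers)
mode = max(range(len(probs)), key=probs.__getitem__)  # index of the selected mode
```

With only a few thousand multiply-accumulate operations per decision, a network of this size is consistent with the low inference latency noted above.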

The chosen architecture of the proposed MLP model is illustrated in Figure 7. The optimized network parameters, which yielded the best training performance for the MLP, are summarized in Appendix C and Table A3.

2.2.4. Long Short-Term Memory (LSTM)

LSTM networks, known for their ability to learn temporal dependencies, provide effective solutions for capturing sequential patterns in time-series data. To account for the temporal continuity of measurement degradations, an LSTM-based architecture was developed. The objective was to enable the selection of the measurement update mode based not only on the instantaneous state but also on the temporal patterns observed in previous time steps, thereby achieving more consistent decision making. The architecture of the proposed LSTM model is illustrated in Figure 8.
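Unlike the MLP, a sequence model needs its inputs arranged as windows of consecutive time steps. A minimal sketch of that arrangement is given below. The function name and the window length are hypothetical, and labelling each window with the update mode of its most recent step is an assumption.

```python
def sliding_windows(feature_steps, labels, window):
    """Pair each label with the `window` most recent feature vectors.

    feature_steps : list of per-step feature vectors, in time order
    labels        : update-mode label for each time step
    window        : number of consecutive steps fed to the sequence model
    """
    sequences, targets = [], []
    for end in range(window, len(feature_steps) + 1):
        sequences.append(feature_steps[end - window:end])
        targets.append(labels[end - 1])  # label of the newest step in the window
    return sequences, targets

# Toy stream: five time steps with one feature each.
steps = [[0.0], [1.0], [2.0], [3.0], [4.0]]
modes = [0, 1, 2, 3, 3]
sequences, targets = sliding_windows(steps, modes, window=3)
```

The first `window - 1` steps produce no training example, since no complete history exists for them.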

Several architectural and training variations were tested during model development, including different hidden-layer sizes, dropout configurations, minibatch settings, and class-weight adjustments. Although class-weighted models improved validation accuracy (reaching values above 95%), the limited temporal structure imposed by the TDMA-based measurement sequence prevented the LSTM from fully exploiting its long-term memory capability, ultimately constraining its decision-making performance compared to the MLP. The optimized network parameters, which yielded the best training performance for the LSTM, are summarized in Appendix C and Table A3.