A Low-Cost Experimental Quadcopter Drone Design for Autonomous Search-and-Rescue Missions in GNSS-Denied Environments

Abstract

1. Introduction

2. Related Works

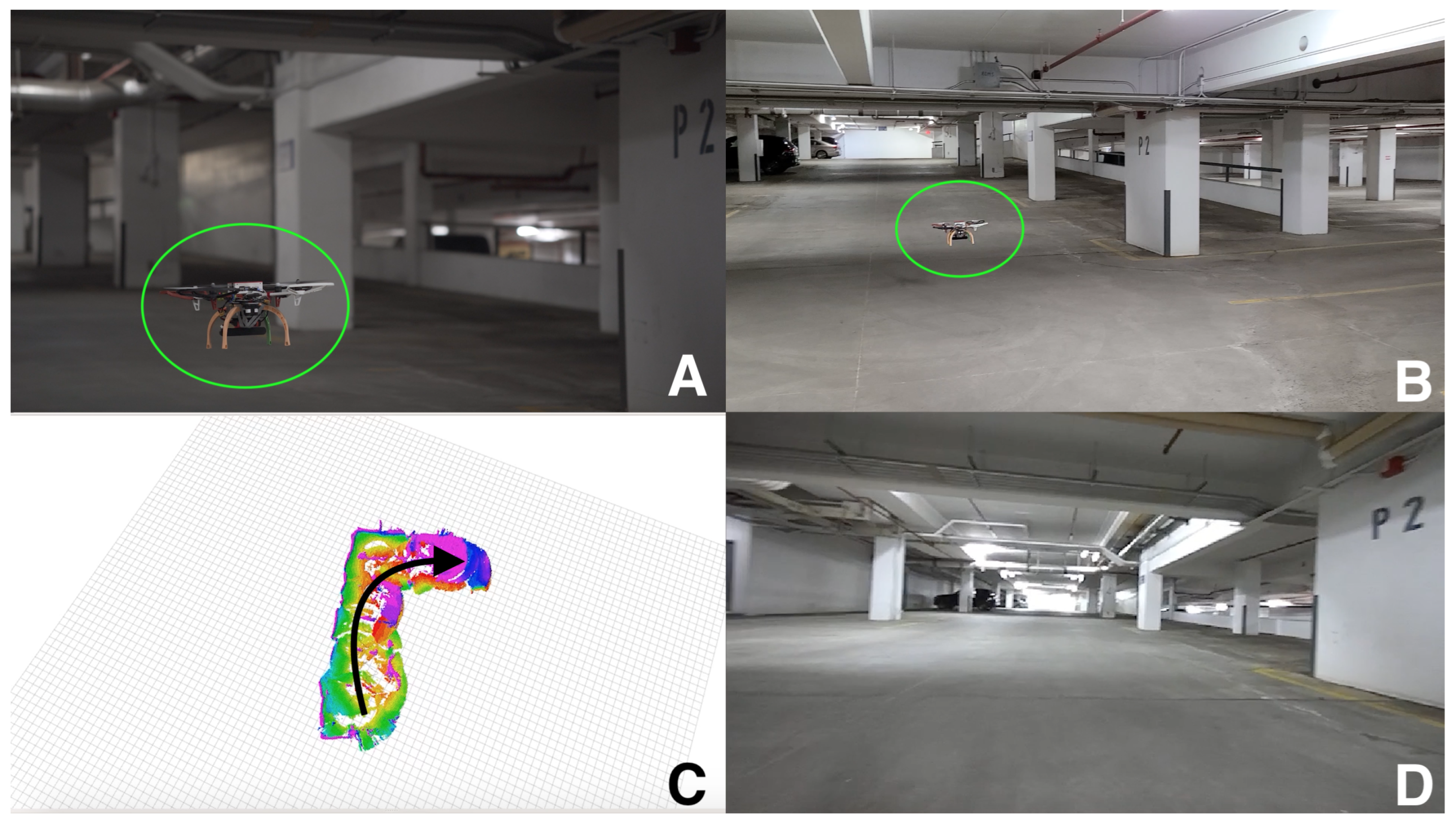

3. Description of Design

3.1. Hardware

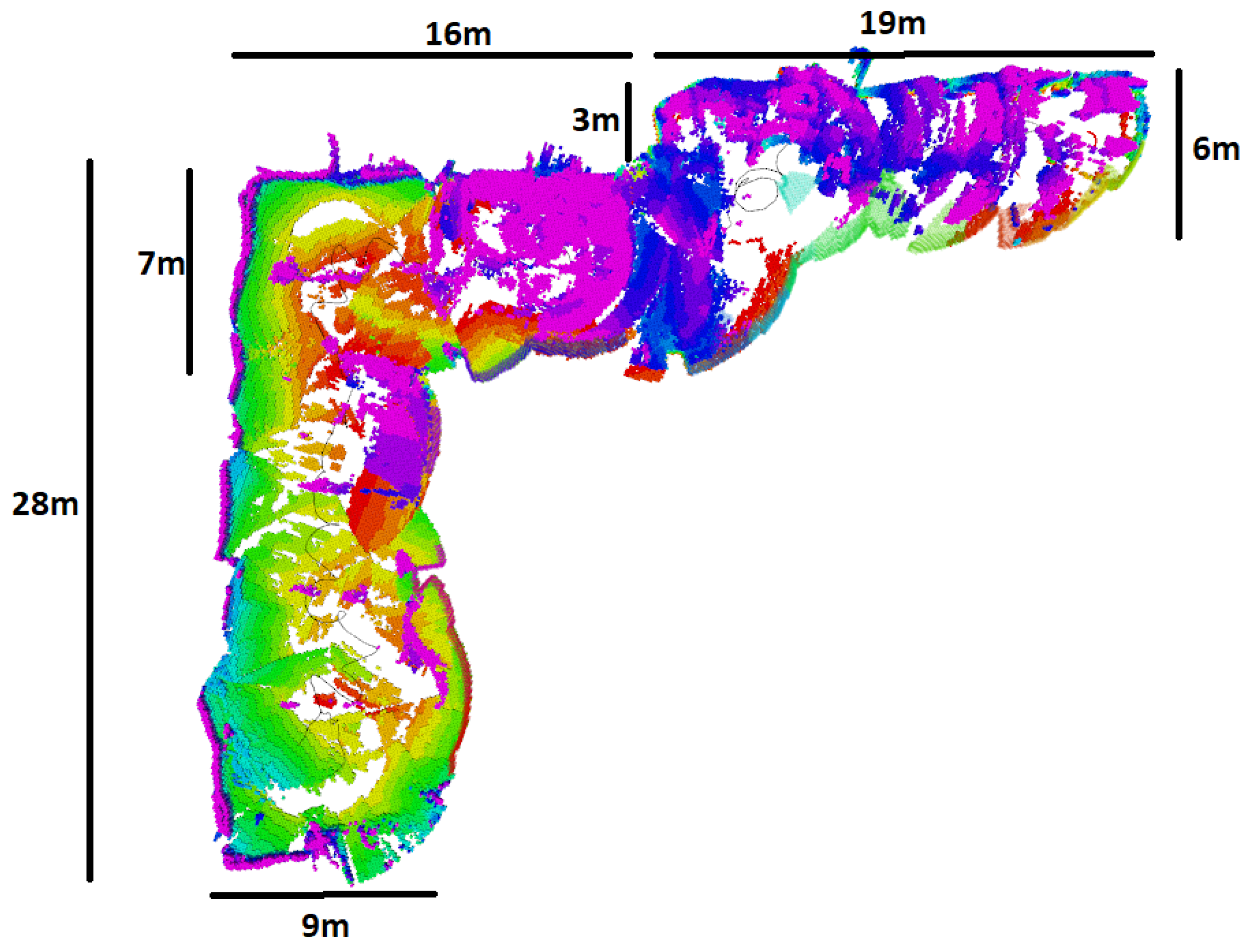

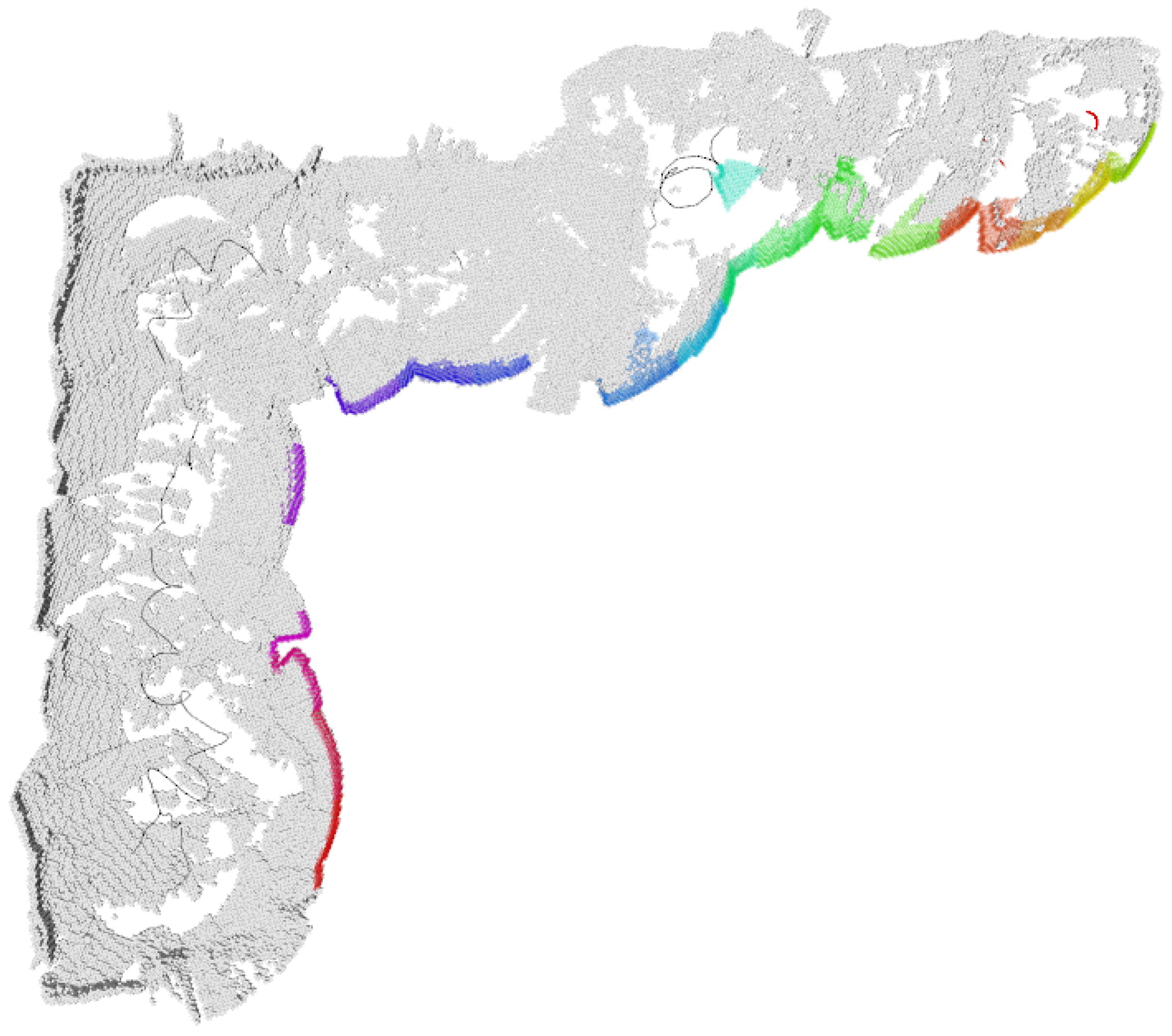

3.2. Environmental Perception

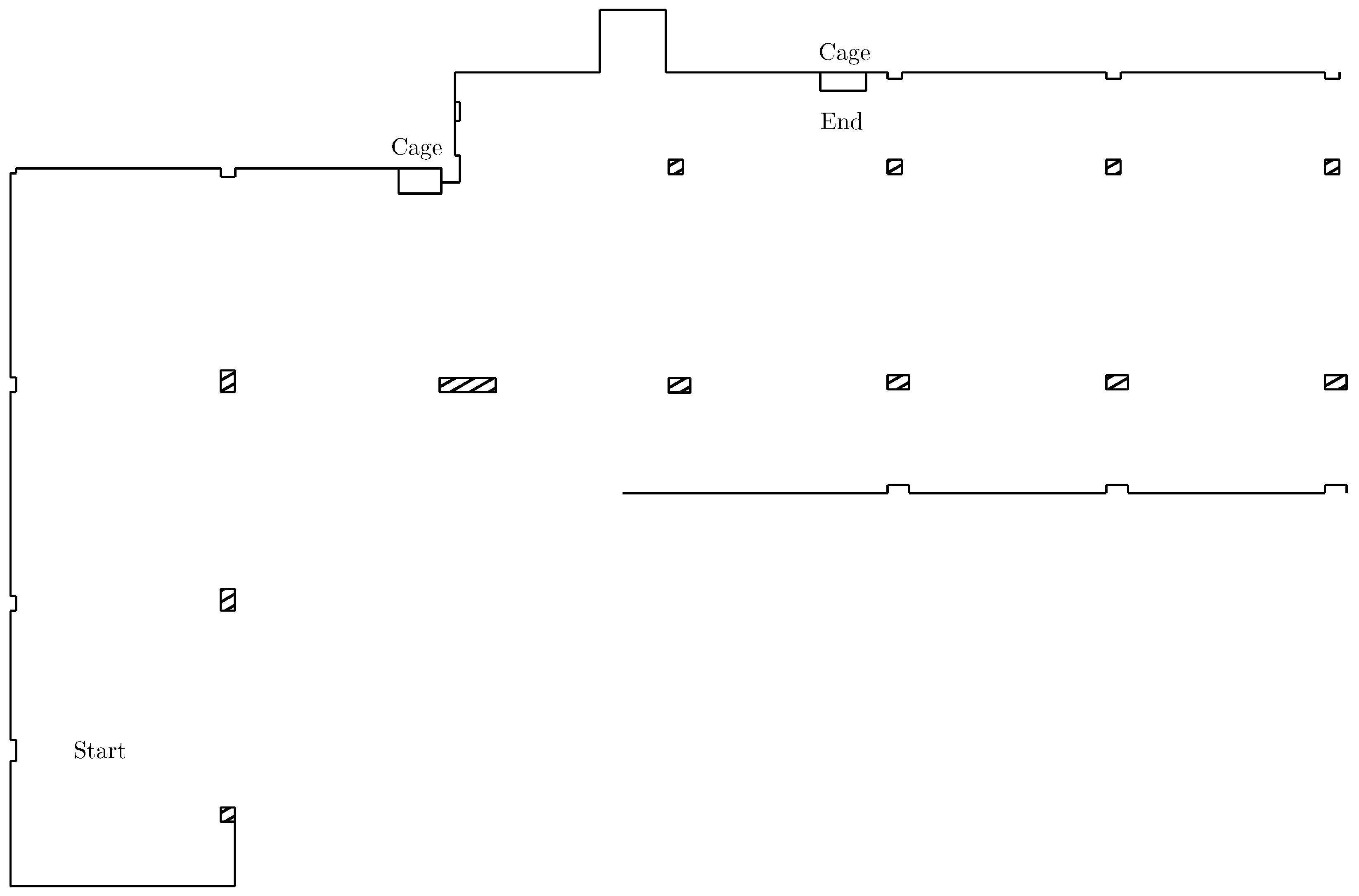

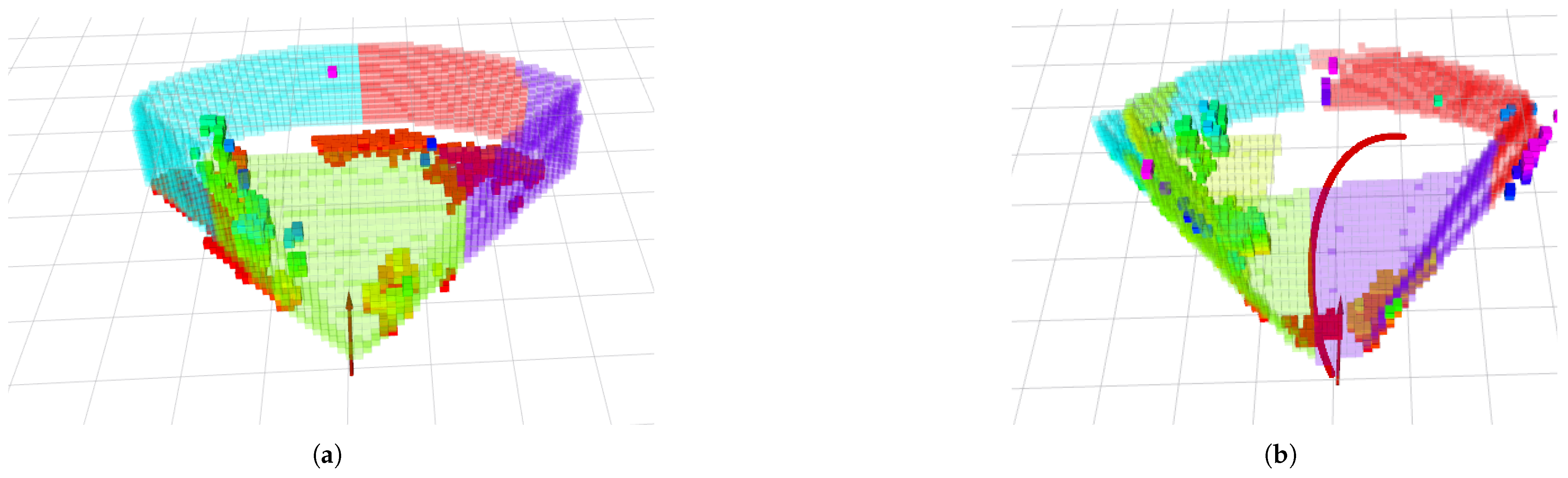

3.3. Path Planning

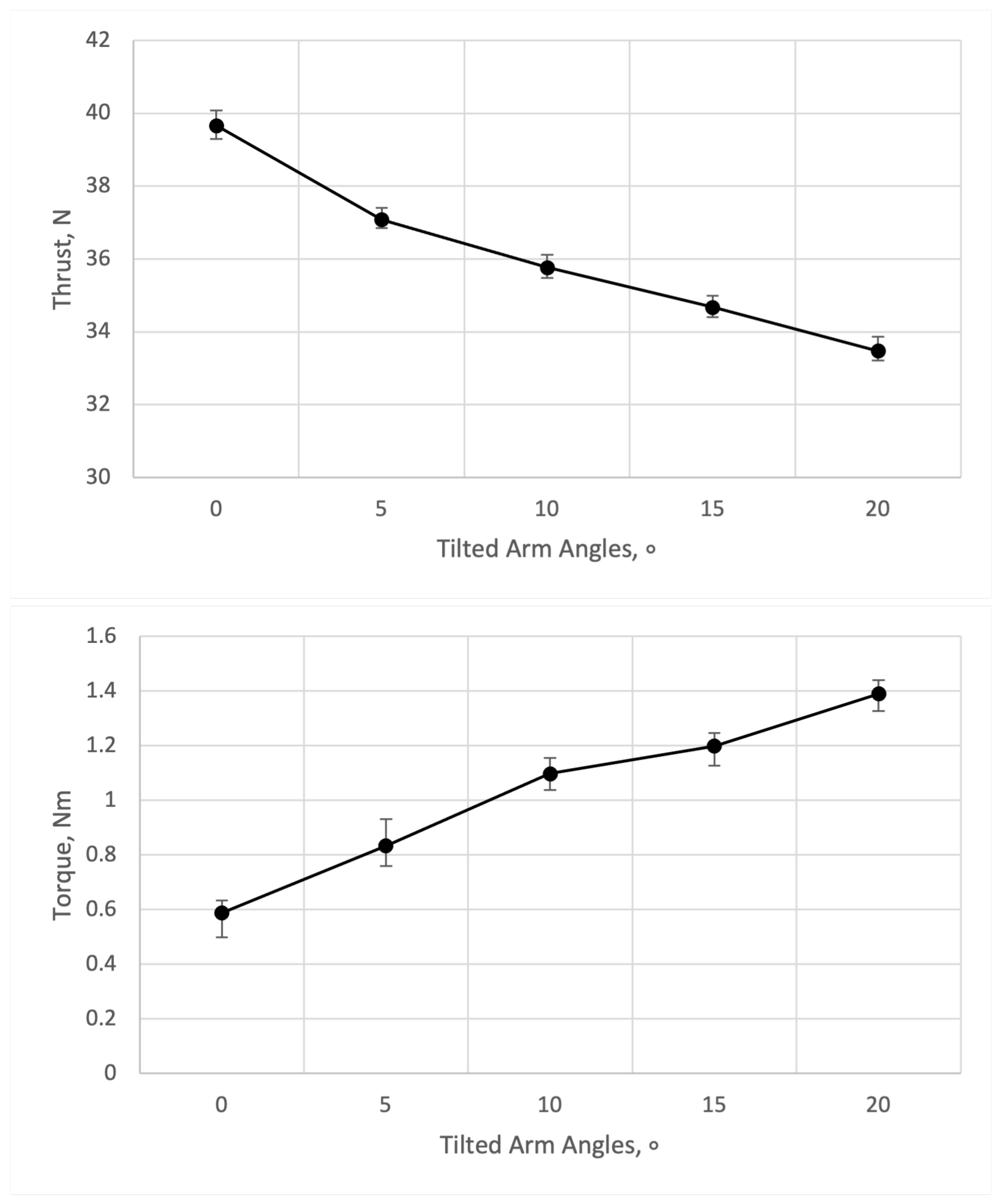

3.4. Rotor Arm Redesign

4. Results

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Component | Manufacturer (Location) and Model |
|---|---|
| Airframe | DJI (Shenzhen, China) FlameWheel 450 |
| Altitude Sensor | Garmin (Olathe, KS, USA) LIDAR-Lite v3 |
| Flight Controller | Holybro (Shenzhen, China) Pixhawk 5X |
| Onboard Computer | NVIDIA (Santa Clara, CA, USA) Jetson Xavier NX |
| Depth Sensor | Stereolabs (Paris, France) ZED 2 Camera |
| Electronic Speed Controllers | DJI (Shenzhen, China) 430 Lite |
| Propeller Motors | DJI (Shenzhen, China) 2312E |
| Computer Storage | Samsung (Seoul, Korea) 970 EVO Plus SSD |
| WiFi Module | Waveshare (Shenzhen, China) AW-CB375NF |
| Propellers | DJI (Shenzhen, China) Z-Blade 9450 |
| Primary Battery | Venom (Rathdrum, ID, USA) 14.8 V 5000 mAh 50C LiPo |
| Secondary Battery | Venom (Rathdrum, ID, USA) 11.1 V 2200 mAh 50C LiPo |
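The two battery ratings in the table can be unpacked with standard LiPo arithmetic. The sketch below is illustrative only and assumes the usual hobby-battery conventions (3.7 V nominal per cell; rated continuous current equals the C-rating times the capacity in Ah). The function name `pack_summary` is hypothetical and does not come from the paper.

```python
# Illustrative sketch: derive nominal pack figures from the battery ratings
# listed in the table. Assumes standard LiPo conventions (not stated in the
# source): 3.7 V nominal per cell, and rated continuous current (A) equals
# C-rating x capacity (Ah).

NOMINAL_CELL_VOLTAGE = 3.7  # volts per LiPo cell (nominal, assumed)


def pack_summary(voltage_v: float, capacity_mah: float, c_rating: float) -> dict:
    """Return cell count, stored energy (Wh) and rated continuous current (A)."""
    capacity_ah = capacity_mah / 1000.0
    return {
        # Series cell count implied by the nominal pack voltage.
        "cells": round(voltage_v / NOMINAL_CELL_VOLTAGE),
        # Stored energy = nominal voltage x capacity, rounded to 0.01 Wh.
        "energy_wh": round(voltage_v * capacity_ah, 2),
        # Nameplate continuous discharge limit, rounded to 0.01 A.
        "max_continuous_a": round(c_rating * capacity_ah, 2),
    }


primary = pack_summary(14.8, 5000, 50)    # primary battery from the table
secondary = pack_summary(11.1, 2200, 50)  # secondary battery from the table
print("primary:", primary)
print("secondary:", secondary)
```

Under these assumptions the primary pack is a 4S pack storing about 74 Wh with a nameplate continuous rating of 250 A, and the secondary pack is a 3S pack storing about 24.42 Wh rated for 110 A. These are nameplate upper bounds, not measured flight currents or endurance figures.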

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Allan, S.; Barczyk, M. A Low-Cost Experimental Quadcopter Drone Design for Autonomous Search-and-Rescue Missions in GNSS-Denied Environments. Drones 2025, 9, 523. https://doi.org/10.3390/drones9080523

Allan S, Barczyk M. A Low-Cost Experimental Quadcopter Drone Design for Autonomous Search-and-Rescue Missions in GNSS-Denied Environments. Drones. 2025; 9(8):523. https://doi.org/10.3390/drones9080523

Chicago/Turabian Style: Allan, Shane, and Martin Barczyk. 2025. "A Low-Cost Experimental Quadcopter Drone Design for Autonomous Search-and-Rescue Missions in GNSS-Denied Environments" Drones 9, no. 8: 523. https://doi.org/10.3390/drones9080523

APA Style: Allan, S., & Barczyk, M. (2025). A Low-Cost Experimental Quadcopter Drone Design for Autonomous Search-and-Rescue Missions in GNSS-Denied Environments. Drones, 9(8), 523. https://doi.org/10.3390/drones9080523