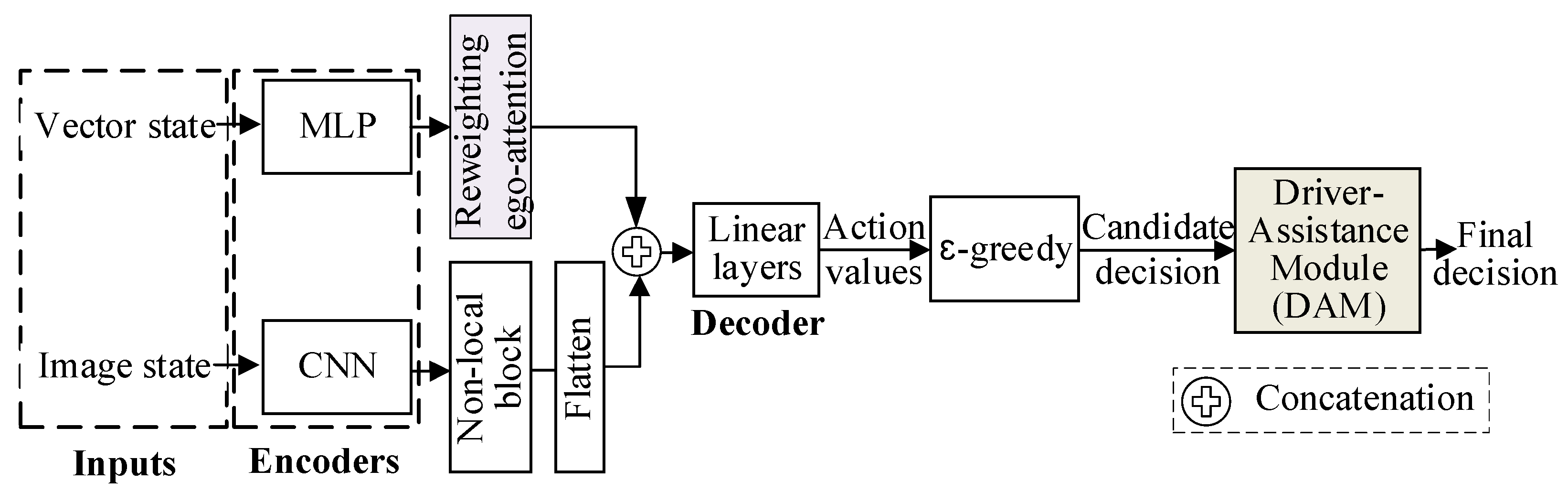

As shown in Figure 3, we improved the dual-input decision-making model by reweighting ego-attention and appending a driver-assistance module (DAM). Images provide a more intuitive representation of the relative positions of vehicles. As a result, the non-local block in the image input branch, which extends ego-attention to image data, already captures vehicle-to-ego interactions effectively [34, 38]. We therefore first enhanced ego-attention in the vector state input branch so that it captures more vehicle-to-ego dependencies and thereby improves decision-making performance. The additional DAM is a key safety feature: it applies candidate safety-checking rules to detect dangerous decision actions and substitute them with safe ones. The whole model is trained with double deep Q-learning (DDQN) [39], a DRL algorithm, using a modified reward function.
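For readers less familiar with DDQN, the sketch below computes the standard Double DQN regression target from [39] in NumPy. The online network selects the next action and the target network evaluates it. The function name, array shapes, and discount factor are illustrative assumptions. The paper's network architecture and modified reward are not reproduced here.

```python
import numpy as np


def ddqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.99):
    """Double DQN regression targets for a batch of transitions.

    next_q_online / next_q_target: arrays of shape (batch, n_actions) holding
    Q(s', .) from the online and target networks, respectively.
    """
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    next_q_online = np.asarray(next_q_online, dtype=float)
    next_q_target = np.asarray(next_q_target, dtype=float)
    # 1) The online network selects the greedy next action.
    best_actions = np.argmax(next_q_online, axis=1)
    # 2) The target network evaluates that action (decoupling reduces overestimation).
    next_values = next_q_target[np.arange(len(best_actions)), best_actions]
    # 3) Terminal transitions (e.g., a collision) are not bootstrapped.
    return rewards + gamma * (1.0 - dones) * next_values


targets = ddqn_targets(
    rewards=[1.0, 0.5],
    next_q_online=[[1.0, 3.0], [2.0, 0.0]],
    next_q_target=[[10.0, 4.0], [6.0, 8.0]],
    dones=[0, 1],
    gamma=0.9,
)
print(targets)
```

For the first transition, a vanilla DQN target would bootstrap from the target network's maximum (10.0). Double DQN instead uses the value of the action chosen by the online network (4.0).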

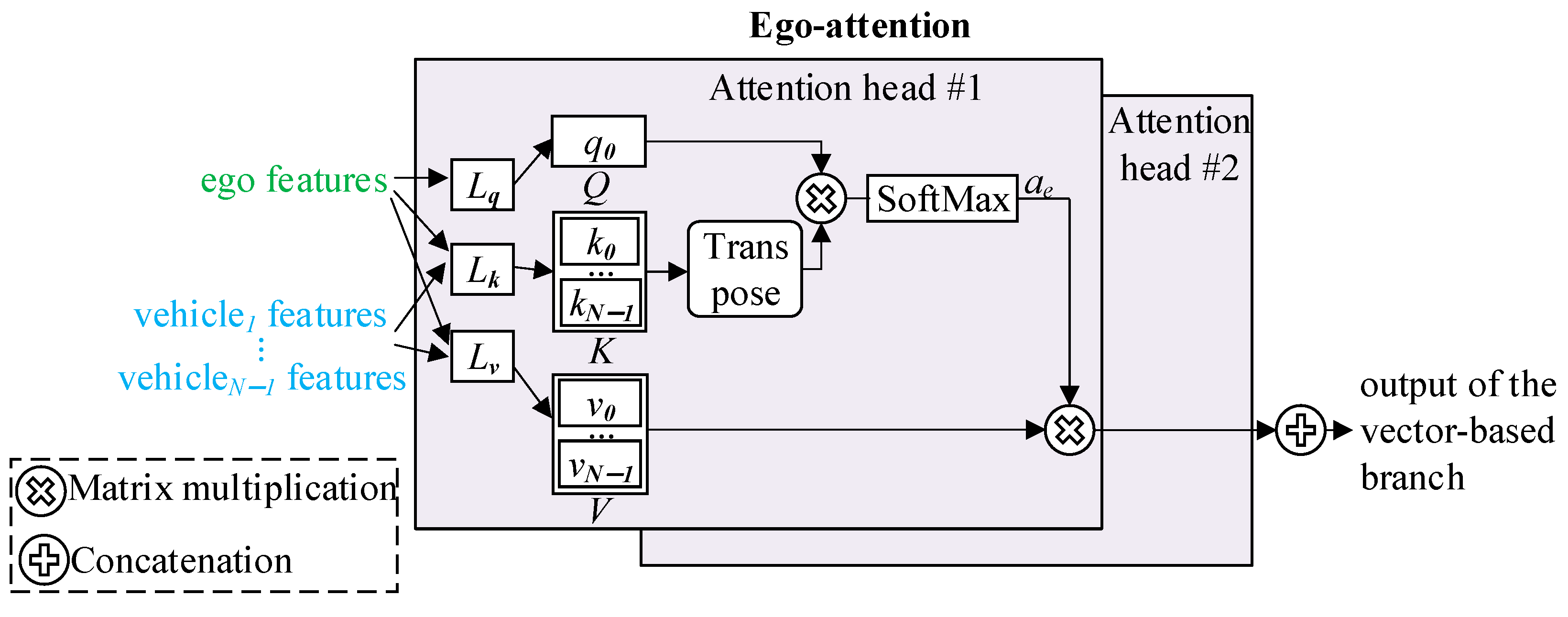

3.1. Reweighting Ego-Attention

As mentioned in Section 2.2, conventional dot-product attention struggles to fully capture the structured and asymmetric interactions among vehicles, particularly in highway environments where spatial relationships are critical. To address this, prior work [40, 41] introduced Gaussian-weighted multi-head self-attention, which enhances attention expressiveness by incorporating spatial priors.

Specifically, these methods construct a Gaussian weight matrix in which each element depends solely on the longitudinal distance between the corresponding pair of vehicles. This matrix is then used to modulate the attention score matrix in (2) via element-wise multiplication.
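For reference, one common way to write this modulation is shown below. It assumes that (2) is the scaled dot-product score $Q \times K^{T}/\sqrt{d_K}$ and uses a Gaussian kernel with a hypothetical bandwidth $\sigma$:

$$
g_{ij} = \exp\!\left(-\frac{(\Delta x_{ij})^{2}}{2\sigma^{2}}\right), \qquad
\tilde{S} = G \cdot \frac{Q \times K^{T}}{\sqrt{d_K}},
$$

where $G = [g_{ij}]$, $\Delta x_{ij}$ is the longitudinal distance between vehicles $i$ and $j$, $\times$ is matrix multiplication, and $\cdot$ is the element-wise product. The symbols $G$, $g_{ij}$, $\Delta x_{ij}$, $\sigma$, and $\tilde{S}$ are illustrative names, and the exact kernel used in [40, 41] may differ.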

This mechanism effectively emphasizes spatially closer agents, refining attention distribution to focus on nearby tokens. However, we identify two critical limitations when applying this technique to AD decision making:

First, in prior Gaussian-weighted methods, attention scores are modulated based on the relative longitudinal distance between the ego vehicle and surrounding vehicles. In multi-lane highway scenarios, however, such distance-only weighting can misallocate attention. For example, suppose the ego vehicle faces two leading vehicles: one in the same lane ($\mathrm{veh}_{\mathrm{same}}$) and another in an adjacent lane ($\mathrm{veh}_{\mathrm{adj}}$), with relative distances $\Delta x_1$ and $\Delta x_2$, respectively. Even if $\mathrm{veh}_{\mathrm{same}}$ is farther ahead ($\Delta x_1 > \Delta x_2$), it has a greater impact on the ego vehicle's driving decision because it occupies the same lane. Gaussian weighting, however, assigns more attention to $\mathrm{veh}_{\mathrm{adj}}$ merely because of its shorter longitudinal distance, neglecting lateral context. This often results in unnecessarily conservative behaviors, such as premature braking or lane-keeping.
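The misallocation can be made concrete with a small numeric sketch. It uses the distance-only Gaussian weight from the formula above. The bandwidth and the two distances are hypothetical values chosen only so that $\Delta x_1 > \Delta x_2$.

```python
import math


def gaussian_weight(dx: float, sigma: float) -> float:
    """Distance-only Gaussian weight: depends on the longitudinal gap dx alone."""
    return math.exp(-dx ** 2 / (2.0 * sigma ** 2))


SIGMA = 20.0                  # hypothetical kernel bandwidth (m)
dx_same, dx_adj = 30.0, 15.0  # veh_same is farther ahead: dx_1 > dx_2

w_same = gaussian_weight(dx_same, SIGMA)  # same-lane leader
w_adj = gaussian_weight(dx_adj, SIGMA)    # adjacent-lane leader

# Lane membership never enters the weight, so the adjacent vehicle wins.
print(f"w_same = {w_same:.3f}, w_adj = {w_adj:.3f}")
```

With these numbers, the adjacent-lane vehicle receives more than twice the weight of the same-lane leader, even though only the latter directly constrains the ego vehicle's speed.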

Second, Gaussian weighting typically applies object-level scaling: each vehicle as a whole receives a single attention modulation factor. This approach cannot account for the differing significance of individual features (e.g., velocity) of the same vehicle. In decision-critical contexts, such coarse-grained modulation is insufficient, because the policy requires fine-grained, feature-level discrimination to balance safety and efficiency.
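The difference between object-level and feature-level scaling can be checked on a single query–key pair. The sketch uses hypothetical 3-feature encodings (longitudinal gap, lateral offset, speed) and hypothetical weights. A single scalar per vehicle rescales the whole dot-product score, but it leaves each feature's share of that score unchanged. Per-feature weights can change those shares.

```python
import numpy as np

# Hypothetical 3-feature encodings: (longitudinal gap, lateral offset, speed).
q = np.array([1.0, 2.0, 0.5])   # ego query
k = np.array([0.8, 0.1, 1.5])   # key of one surrounding vehicle


def feature_terms(q, k, w):
    """Per-feature terms q_f * w_f * k_f; their sum is the (unscaled) score."""
    return q * w * k


def shares(terms):
    """Fraction of the score contributed by each feature."""
    return terms / terms.sum()


base = feature_terms(q, k, np.ones(3))
object_level = feature_terms(q, k, np.full(3, 0.4))             # one scalar per vehicle
feature_level = feature_terms(q, k, np.array([0.2, 1.0, 2.0]))  # one weight per feature

print("base    ", shares(base))
print("object  ", shares(object_level))
print("feature ", shares(feature_level))
```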

To address these limitations of Gaussian-weighted methods in AD decision making, we propose a novel feature-wise attention mechanism inspired by [37, 42]. Concretely, we introduce a learnable matrix $W$ that reweights $K^{T}$ in the attention score. This learnable matrix enables the attention mechanism to adaptively capture the vehicle features that are critical for decision making, such as driving speed and relative lateral distance, rather than focusing solely on relative longitudinal distance. Consequently, the model can autonomously extract and utilize a broader range of informative state features.

Specifically, the attention score of the proposed reweighting ego-attention is given in (6).
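Based on the symbol definitions that follow, and assuming the normalization of (2) is applied afterwards, (6) can be sketched as follows. Here $\tilde{S}$ is an illustrative name for the reweighted score:

$$
\tilde{S} = \frac{Q \times \left(W \cdot K^{T}\right)}{\sqrt{d_K}}
$$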

where the left-hand side is the proposed attention score, and $Q$, $K$, $K^{T}$, and $d_K$ are the same as in (2). $\times$ and $\cdot$ denote matrix multiplication and the Hadamard (element-wise) product, respectively.

$W$ is the learnable weight matrix and has the same dimensions as $K^{T}$. Each element of $W$ represents the importance of the corresponding encoded feature in $K$. Note that (6) adopts the Hadamard product because of its strong feature-expressing capability [43] and low computational cost.
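A minimal NumPy sketch of the reweighted score follows. It applies $W$ to $K^{T}$ by element-wise product and then multiplies by $Q$. It assumes that (2) normalizes scores with a softmax and that attention weights are applied to a value matrix `V`, as in standard ego-attention. The random `W` is only a placeholder for a trained parameter, and all names are hypothetical.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def reweighted_ego_attention(Q, K, V, W):
    """Sketch of reweighted ego-attention.

    Q: (n_q, d_K) queries (n_q = 1 for the ego vehicle)
    K, V: (n_v, d_K) keys and values of all vehicles
    W: (d_K, n_v) weights with the same shape as K^T
    """
    d_k = K.shape[1]
    if W.shape != K.T.shape:
        raise ValueError("W must have the same shape as K^T")
    # Hadamard reweighting of K^T, then matrix multiplication with Q.
    scores = Q @ (W * K.T) / np.sqrt(d_k)
    attn = softmax(scores, axis=-1)  # softmax normalization assumed, as in (2)
    return attn @ V, attn


rng = np.random.default_rng(0)
n_vehicles, d_k = 5, 4
Q = rng.normal(size=(1, d_k))
K = rng.normal(size=(n_vehicles, d_k))
V = rng.normal(size=(n_vehicles, d_k))

# Random W stands in for a learned matrix (in training it would be a parameter).
W = rng.uniform(0.5, 1.5, size=(d_k, n_vehicles))
out, attn = reweighted_ego_attention(Q, K, V, W)

# With W = 1 everywhere, the mechanism reduces to plain scaled dot-product attention.
_, attn_plain = reweighted_ego_attention(Q, K, V, np.ones((d_k, n_vehicles)))
print(attn)
```

The all-ones case is a useful sanity check: any deviation from standard attention comes only from what $W$ learns.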

3.2. DAM

An inadequately designed reward function may induce the model to exhibit unsafe behaviors. For example, underweighting the safety component could cause the ego vehicle to overemphasize efficiency, resulting in overly aggressive decisions and a heightened risk of hazardous scenarios. Although a carefully balanced reward function can theoretically address such issues, its design typically demands considerable domain expertise and incurs substantial retraining costs due to the need for extensive parameter tuning.

Therefore, it is essential to provide the model with explicit guidance, such as restricting unsafe actions, to prevent undesirable behaviors. Such guidance is a practical and efficient complement to reward design, especially when the reward function alone fails to enforce critical safety constraints. Moreover, the intrinsic self-exploration of DRL, together with errors from policy approximation [26], may produce dangerous actions during training and deployment. These unsafe behaviors significantly compromise the safety of autonomous driving systems.

To address these safety challenges, we propose DAM, a rule-based safety module that checks the actions produced by the decision-making model and replaces any dangerous action with a safe one.

3.2.1. The Dangerous Decision Analysis Without DAM

We identified three representative types of dangerous scenarios. In each, no collision actually occurs, but the likelihood of collision would be extremely high without human intervention. These scenarios served as the foundation for the design of our DAM, which is detailed in Section 3.2.2.

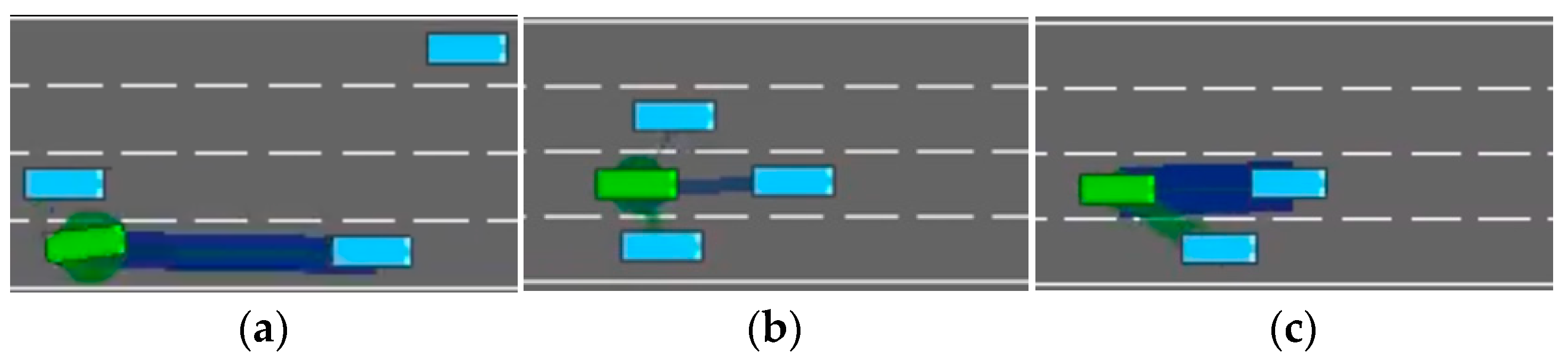

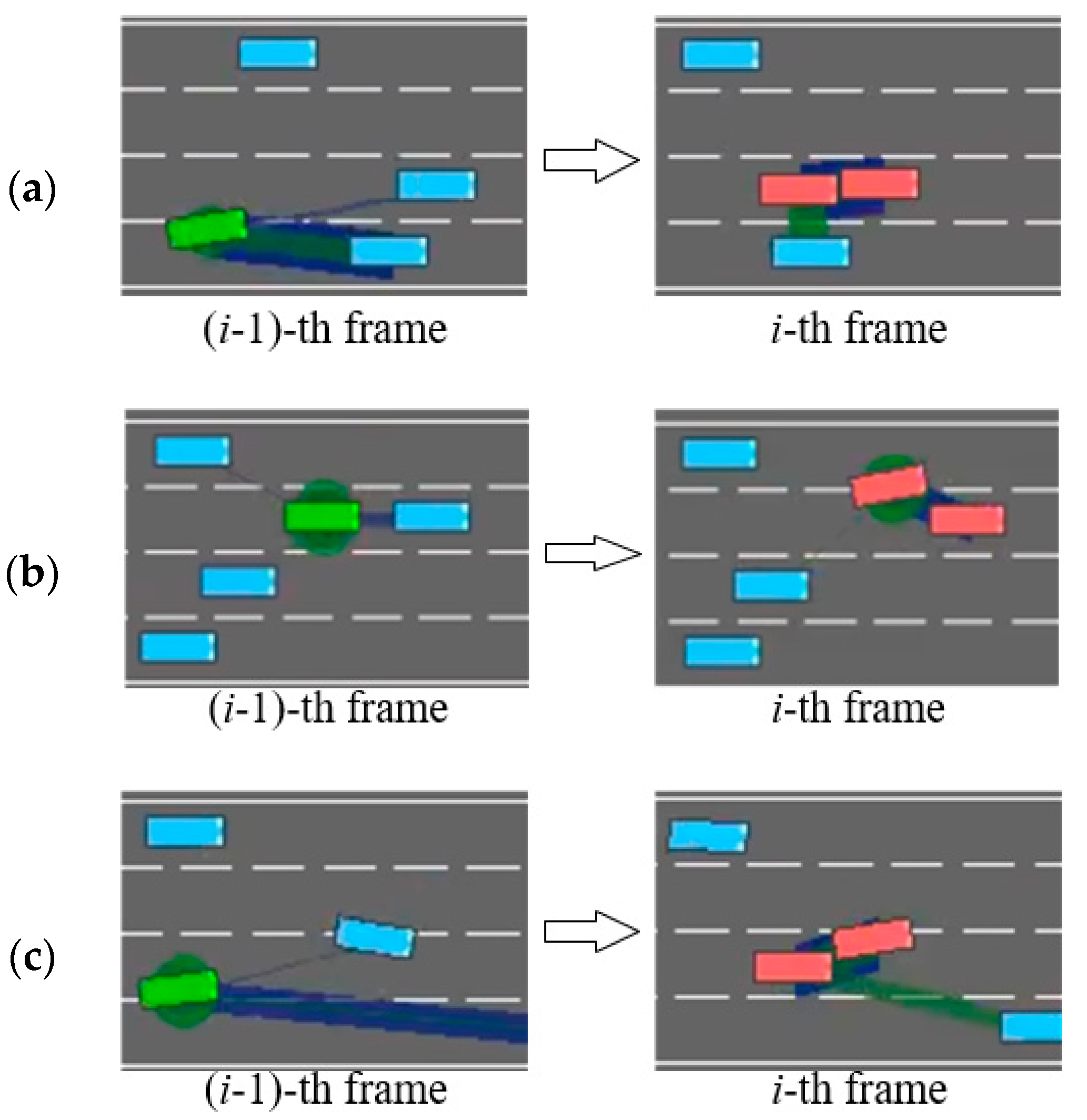

The first type of scenario, illustrated in Figure 4a, occurs when a leading vehicle in the ego vehicle's lane decelerates. To maintain driving efficiency, the model may execute a risky lane change instead of the safer option of slowing down. If the vehicle in the target lane does not yield, this behavior can result in a collision. The second scenario, shown in Figure 4b, involves the ego vehicle traveling at a speed similar to that of vehicles in the adjacent lanes when the preceding vehicle suddenly decelerates. If the leading vehicle continues to decelerate, the ego vehicle cannot avoid a collision simply by slowing down, because vehicles on both sides block any lane change. We configure the minimum speed of non-ego vehicles to be lower than that of the ego vehicle to effectively simulate overtaking scenarios. The root cause of such scenarios is that earlier decisions made by the DRL model can gradually place the ego vehicle in danger. In pursuit of driving efficiency, the model may behave myopically and miss the right moment to decelerate, thereby falling into these driving traps. In the third scenario, illustrated in Figure 4c, the ego vehicle is too close to the leading vehicle in the same lane. If the model fails to respond promptly with a lane change or deceleration, a collision may ultimately occur.

These three scenarios also offer practical insights for the design of Level-2 driver-assistance systems aimed at enhancing driving safety in real-world settings. For example, in the situation of Figure 4a, a driver may initiate a lane change without checking the rear-view mirror or may miss a vehicle in a mirror blind spot. In Figure 4b, when driving in the leftmost lane of a highway (with guardrails on the left and traffic flow on the right), maintaining insufficient following distance poses significant risks. Should the lead vehicle suddenly decelerate or change lanes due to emergent conditions, the following vehicle may lack adequate reaction time, substantially increasing collision risk. In Figure 4c, distraction may prevent the driver from promptly detecting an imminent hazard, such as a pedestrian or another road user suddenly appearing in the vehicle's path. In all of these cases, the proposed DAM can issue timely risk alerts to help prevent accidents.

3.2.2. Design of DAM

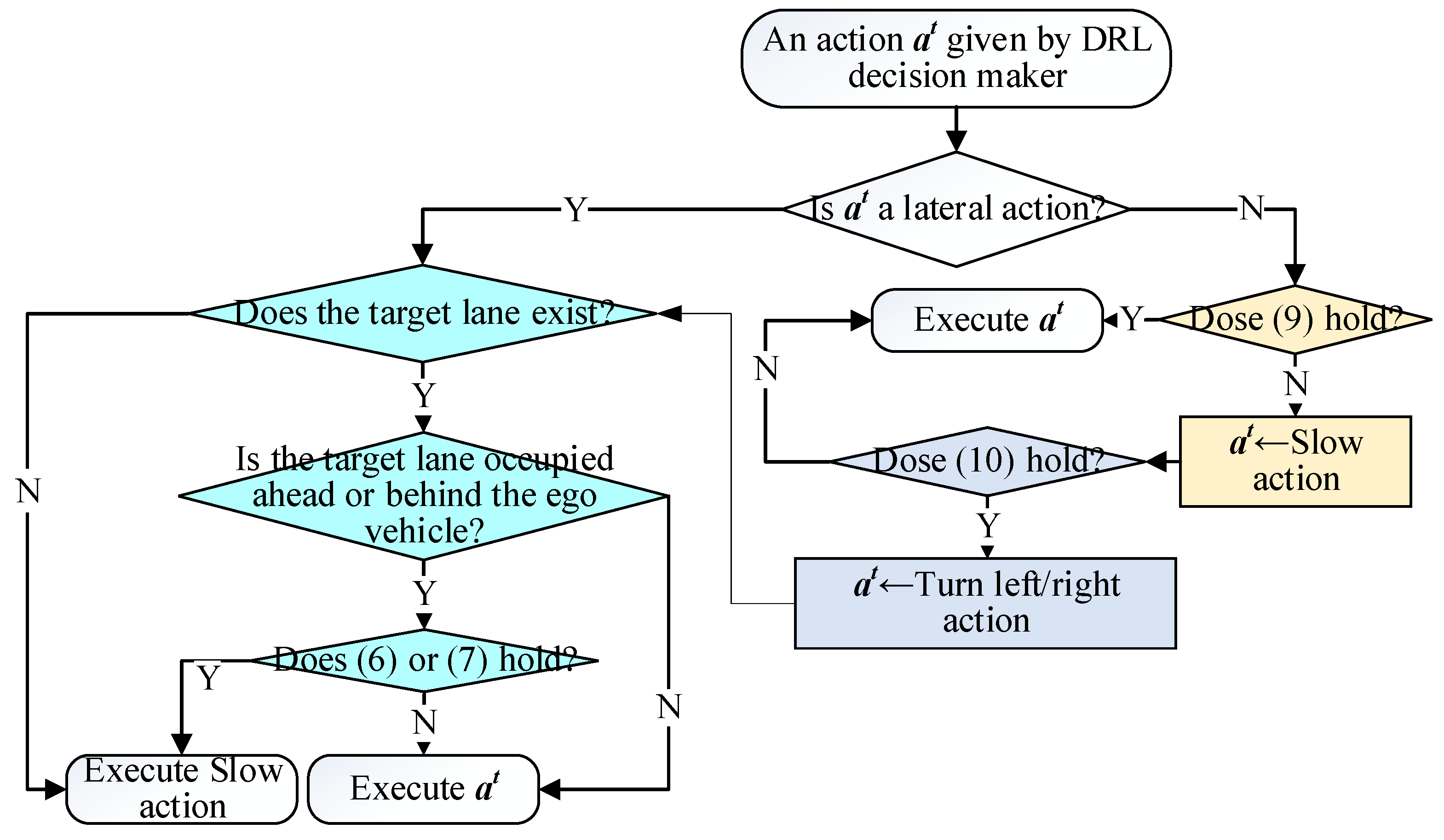

We designed a rule-based DAM, as shown in Figure 5. To keep DAM interventions from pushing the policy into a local optimum, we took two measures. (1) We carefully calibrated the DAM parameters through extensive experiments, following the principle in [33], so that they guarantee safety while still giving the DRL agent sufficient flexibility to explore. (2) We adopted a soft penalty mechanism that incorporates DAM interventions into the reward function, instead of terminating episodes under a hard constraint. This design encourages the DRL model to weigh the benefit of exploring better policies against incurring minor penalties, rather than avoiding such regions entirely.
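The contrast between the soft penalty and a hard constraint can be sketched as follows. This section does not give the modified reward function. The penalty size, the hard-termination reward, and the action names are therefore hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    executed_action: str
    reward: float
    terminated: bool


def dam_step(proposed_action: str, dam_action: str, env_reward: float,
             penalty: float = 0.1, hard: bool = False,
             hard_terminal_reward: float = -1.0) -> StepOutcome:
    """Combine a DAM decision with the reward signal.

    proposed_action: action from the DRL policy
    dam_action: action after DAM checking (equal to proposed_action if safe)
    """
    intervened = dam_action != proposed_action
    if not intervened:
        return StepOutcome(proposed_action, env_reward, terminated=False)
    if hard:
        # Hard constraint: an intervention ends the episode outright.
        return StepOutcome(dam_action, hard_terminal_reward, terminated=True)
    # Soft penalty: the safe action is executed, a small cost is charged,
    # and the episode continues so the agent can keep exploring nearby states.
    return StepOutcome(dam_action, env_reward - penalty, terminated=False)


soft = dam_step("lane_left", "slower", env_reward=0.8)
print(soft)
```

Under the soft variant, the agent still observes the consequences of the safe action. It can therefore learn that the penalized region is merely costly rather than forbidden.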

As illustrated in Figure 5, DAM operates downstream of the DRL-based decision-making framework. Upon receiving an action generated by the DRL model, DAM executes distinct logic depending on whether the action is a lateral or a longitudinal control command.

To prevent the model from pursuing overly aggressive lane changes that could lead to dangerous scenarios such as that in Figure 4a, DAM implements the following safety protocol for lateral decisions. When the DRL model outputs a lateral control command, DAM first assesses the target lane. If the requested lane change is infeasible (e.g., the ego vehicle already occupies the rightmost/leftmost lane but attempts a rightward/leftward lane change), DAM immediately triggers a deceleration intervention. Otherwise, DAM examines the relative distances to both the preceding and following vehicles in the target lane, as in (7) and (8). If conditions (7) and (8) hold, indicating an insufficient gap for lane changing, DAM overrides the DRL-generated action with a brake action. Otherwise, it executes the lateral action given by the DRL model.

where the quantities involved are the next-time-step locations of the front and rear vehicles in the target lane and the location of the ego vehicle after executing the action, the latter given by (9). We assume that all vehicles have the same length.

Assuming the speed remains constant during the maneuver, the lateral dynamic model of a vehicle is given in (9) [44]. The inputs to this model are the vehicle's current location in its lane and its current speed, and the simulation time step is 0.5 s.
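The lateral branch can be sketched as below. (9) is read as a constant-speed one-step prediction, $x' = x + v\,\Delta t$ with $\Delta t = 0.5$ s. The gap tests stand in for (7) and (8): the sketch flags the gap as insufficient when either predicted gap is shorter than one vehicle length. The paper's exact inequalities may combine differently. The action names, lane indexing (lane 0 is leftmost), and vehicle length are hypothetical.

```python
from enum import Enum
from typing import Optional, Tuple

DT = 0.5              # simulation time step from the text (s)
VEHICLE_LENGTH = 5.0  # hypothetical common vehicle length (m)

State = Tuple[float, float]  # (longitudinal position x in m, speed v in m/s)


class Action(Enum):
    LANE_LEFT = "lane_left"
    LANE_RIGHT = "lane_right"
    IDLE = "idle"
    FASTER = "faster"
    SLOWER = "slower"


def predict_position(x: float, v: float, dt: float = DT) -> float:
    """Constant-speed one-step prediction (our reading of (9))."""
    return x + v * dt


def check_lateral(action: Action, lane: int, n_lanes: int, ego: State,
                  front: Optional[State], rear: Optional[State]) -> Action:
    """Return the action to execute for a lateral command (lane 0 = leftmost)."""
    target = lane - 1 if action is Action.LANE_LEFT else lane + 1
    if not 0 <= target < n_lanes:
        return Action.SLOWER  # infeasible lane change -> decelerate
    x_ego = predict_position(*ego)
    # Hypothetical gap tests standing in for (7) and (8).
    front_too_close = (
        front is not None and predict_position(*front) - x_ego < VEHICLE_LENGTH
    )
    rear_too_close = (
        rear is not None and x_ego - predict_position(*rear) < VEHICLE_LENGTH
    )
    if front_too_close or rear_too_close:
        return Action.SLOWER  # insufficient gap -> brake
    return action


# Ego at x = 0 m, 30 m/s, changing from lane 1 to lane 0 with comfortable gaps.
print(check_lateral(Action.LANE_LEFT, 1, 3, (0.0, 30.0), (20.0, 25.0), (-10.0, 35.0)))
```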

When the DRL model outputs a longitudinal control action rather than a lateral maneuver, DAM verifies compliance with (10) to ensure that the ego vehicle maintains a safe following distance from the leading vehicle. This safety constraint prevents the accumulation of myopic control decisions that could result in hazardous situations, such as the dangerous close-following scenario depicted in Figure 4b. If condition (10) holds, the ego vehicle executes the longitudinal action given by the DRL model. Otherwise, DAM checks (11).

where $\mathrm{TTC}(t)$ is the time to collision (TTC) at time instant $t$, and $x_{\mathrm{preceding}}$ and $x_{\mathrm{ego}}$ are the locations of the preceding vehicle and the ego vehicle, whose speeds are $v_{\mathrm{preceding}}$ and $v_{\mathrm{ego}}$, respectively.
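Using the variables defined above, TTC under constant speeds is the gap divided by the closing speed, $(x_{\mathrm{preceding}} - x_{\mathrm{ego}})/(v_{\mathrm{ego}} - v_{\mathrm{preceding}})$, and is unbounded when the ego vehicle is not closing in. The sketch reads (10) as a TTC threshold test. The threshold value `TTC_MIN` is a hypothetical placeholder.

```python
import math


def time_to_collision(x_preceding: float, x_ego: float,
                      v_preceding: float, v_ego: float) -> float:
    """TTC under constant speeds; infinite when the ego is not closing in."""
    closing_speed = v_ego - v_preceding
    if closing_speed <= 0.0:
        return math.inf
    return (x_preceding - x_ego) / closing_speed


TTC_MIN = 3.0  # hypothetical threshold for (10), in seconds


def following_is_safe(x_preceding, x_ego, v_preceding, v_ego, ttc_min=TTC_MIN):
    """Our reading of (10): keep the DRL action while TTC stays above ttc_min."""
    return time_to_collision(x_preceding, x_ego, v_preceding, v_ego) > ttc_min


# 30 m gap, ego 10 m/s faster than the preceding vehicle.
print(time_to_collision(50.0, 20.0, 20.0, 30.0))
```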

DAM checks (11) to address the situation depicted in Figure 4c. As demonstrated in [45], human drivers facing imminent hazards during high-speed driving typically initiate steering maneuvers rather than relying solely on braking. To imitate this behavior, DAM activates an emergency collision-avoidance maneuver when (11) is satisfied. Otherwise, it defaults to a slow-down action.

where $x_{\mathrm{preceding}}$ and $x_{\mathrm{ego}}$ are the positions of the preceding vehicle and the ego vehicle, respectively; $x_{\mathrm{min}}$ is the minimum acceptable car-following distance; and $v_{\mathrm{ego}}$ and $v_{\mathrm{preceding}}$ are the current speeds of the ego vehicle and the preceding vehicle.
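The sketch below chains the longitudinal checks in the order described above. It reads (10) as a TTC threshold. It reads (11) as a minimum-gap test on $x_{\mathrm{min}}$ combined with a closing-speed condition on $v_{\mathrm{ego}}$ and $v_{\mathrm{preceding}}$. Both thresholds (`TTC_MIN`, `X_MIN`) and this form of (11) are illustrative assumptions, and the paper's inequalities may differ.

```python
import math
from enum import Enum

TTC_MIN = 3.0  # hypothetical threshold for (10), s
X_MIN = 10.0   # hypothetical minimal acceptable following distance x_min, m


class Decision(Enum):
    EXECUTE_DRL = "execute the DRL longitudinal action"
    EMERGENCY_AVOIDANCE = "emergency collision-avoidance (steering) maneuver"
    SLOW_DOWN = "slow down"


def ttc(x_preceding, x_ego, v_preceding, v_ego):
    """Constant-speed time to collision; infinite if the gap is not closing."""
    closing = v_ego - v_preceding
    return math.inf if closing <= 0 else (x_preceding - x_ego) / closing


def check_longitudinal(x_preceding, x_ego, v_preceding, v_ego):
    """Longitudinal branch of the DAM sketch: (10) first, then (11)."""
    if ttc(x_preceding, x_ego, v_preceding, v_ego) > TTC_MIN:
        return Decision.EXECUTE_DRL
    # Hypothetical stand-in for (11): the gap has already shrunk below x_min
    # while the ego is still faster than the preceding vehicle.
    if x_preceding - x_ego < X_MIN and v_ego > v_preceding:
        return Decision.EMERGENCY_AVOIDANCE
    return Decision.SLOW_DOWN


print(check_longitudinal(8.0, 0.0, 20.0, 30.0))
```

Checking (10) first means the DRL action is kept whenever the following situation is comfortable. Only when TTC is low does DAM choose between steering away (very short gap, as in Figure 4c) and decelerating.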