Three-Dimensional Defect Measurement and Analysis of Wind Turbine Blades Using Unmanned Aerial Vehicles

Abstract

1. Introduction

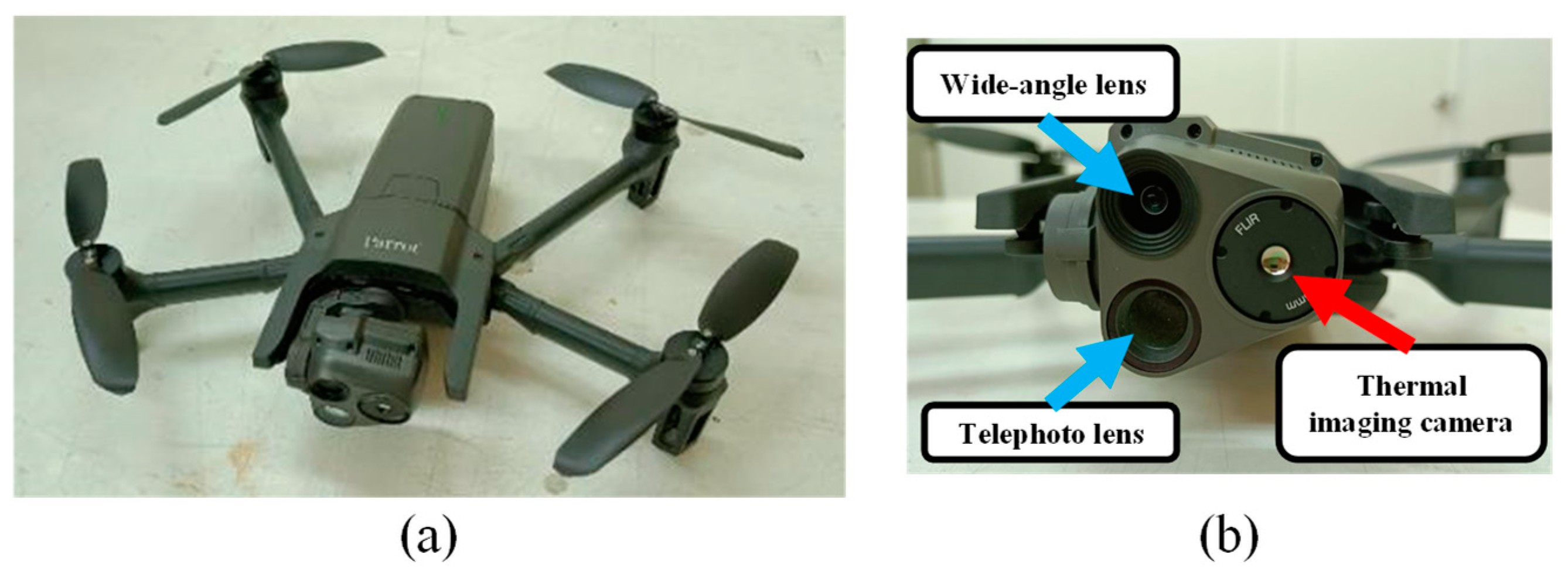

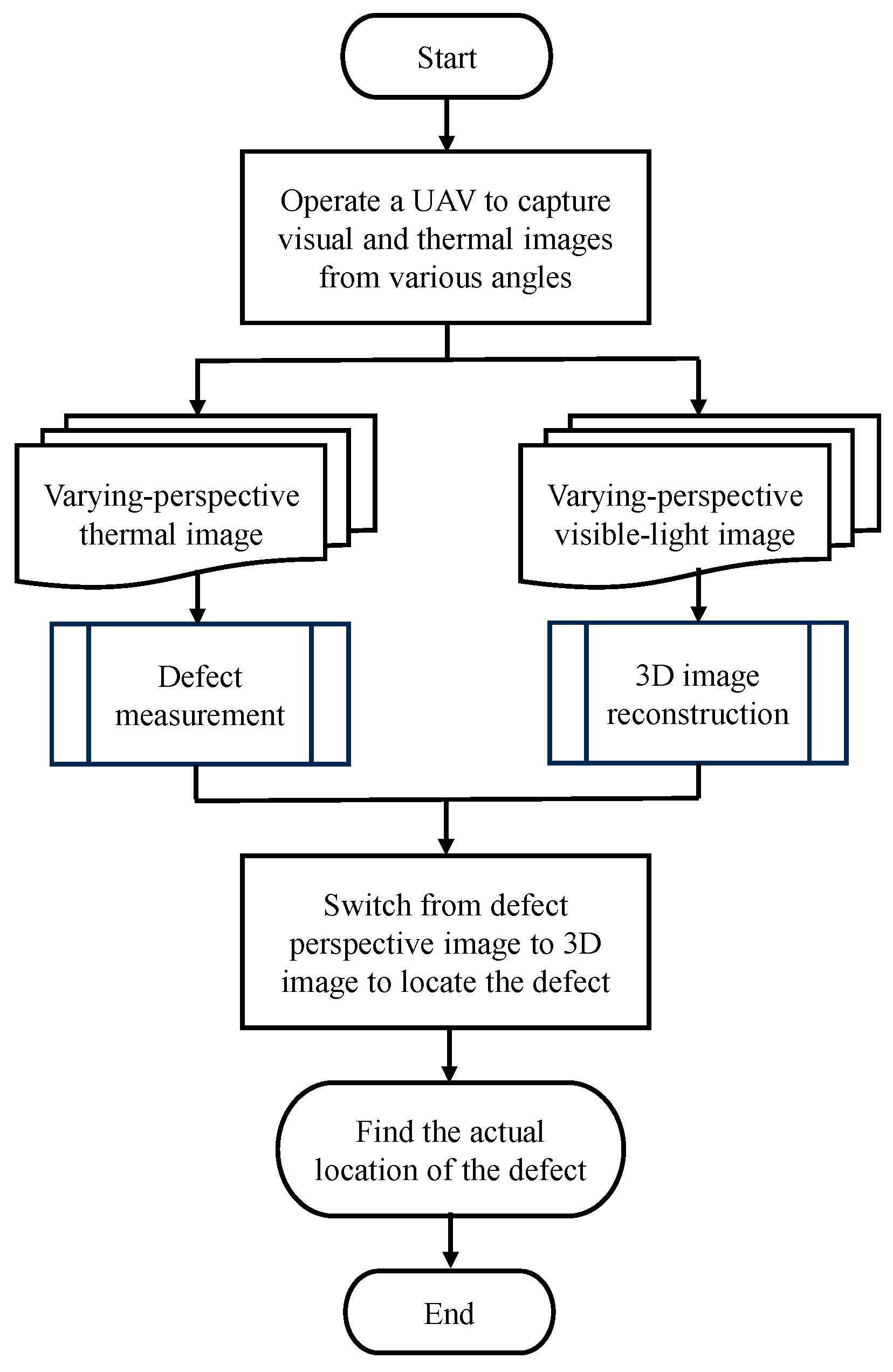

2. Sensing Method for Wind Turbine Blades

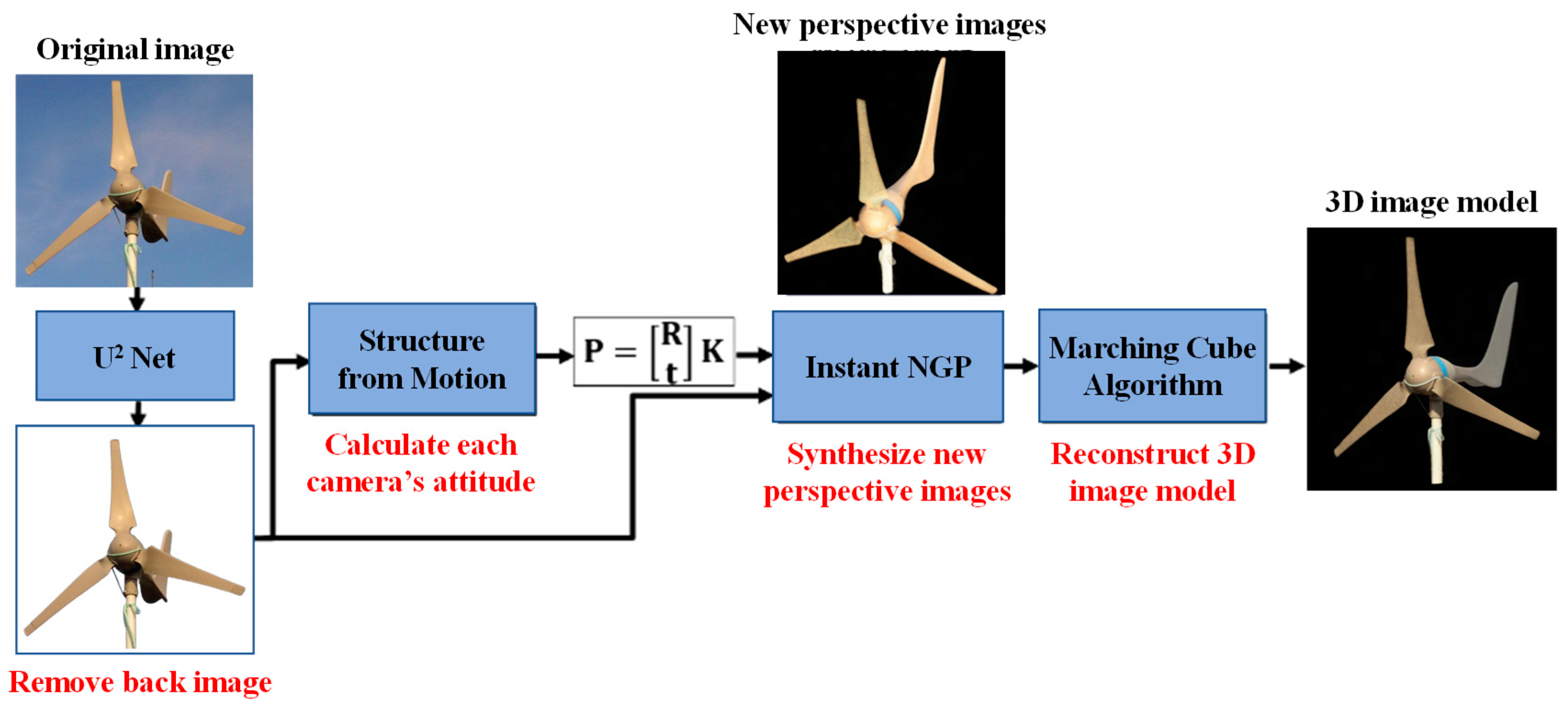

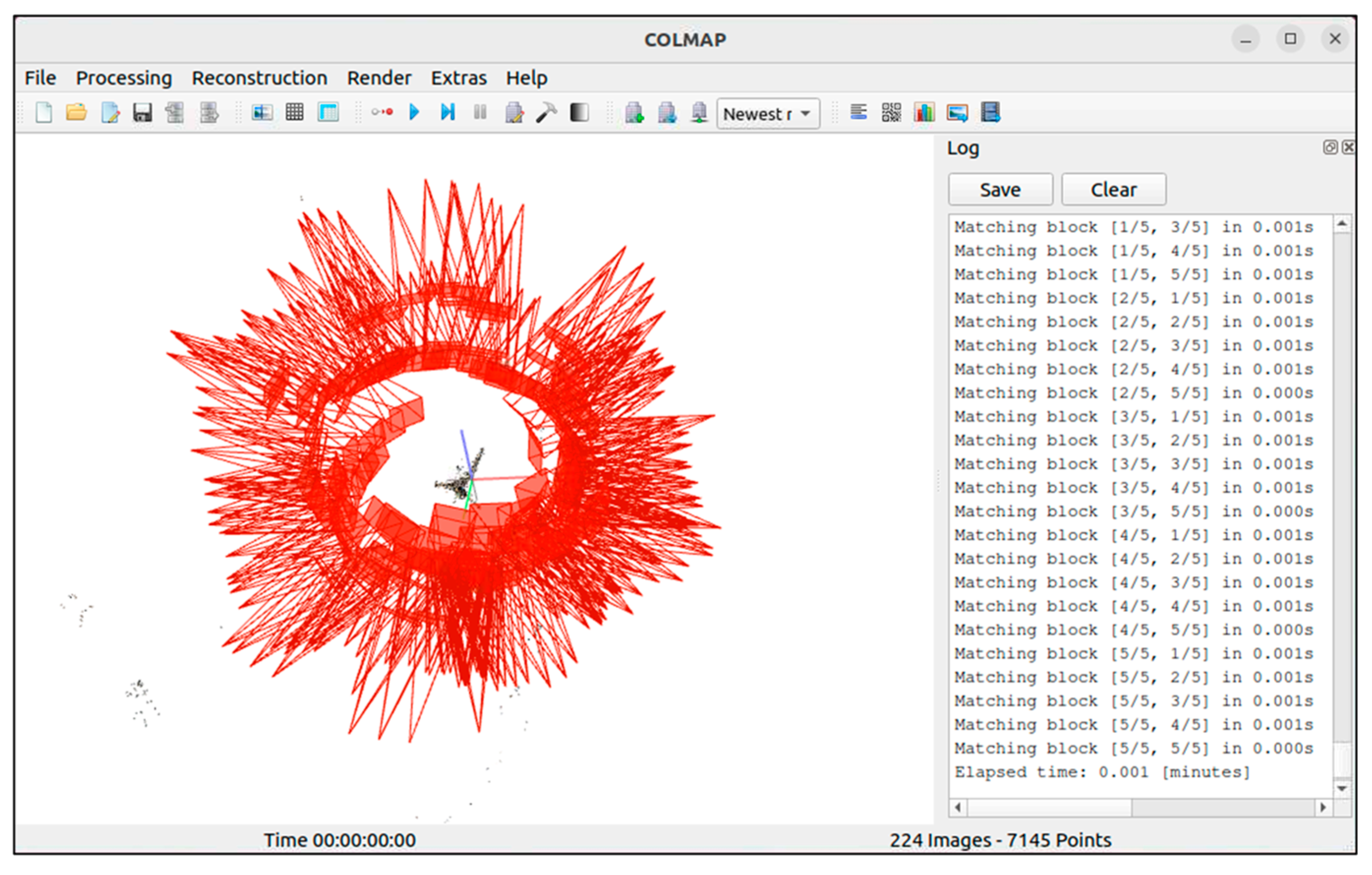

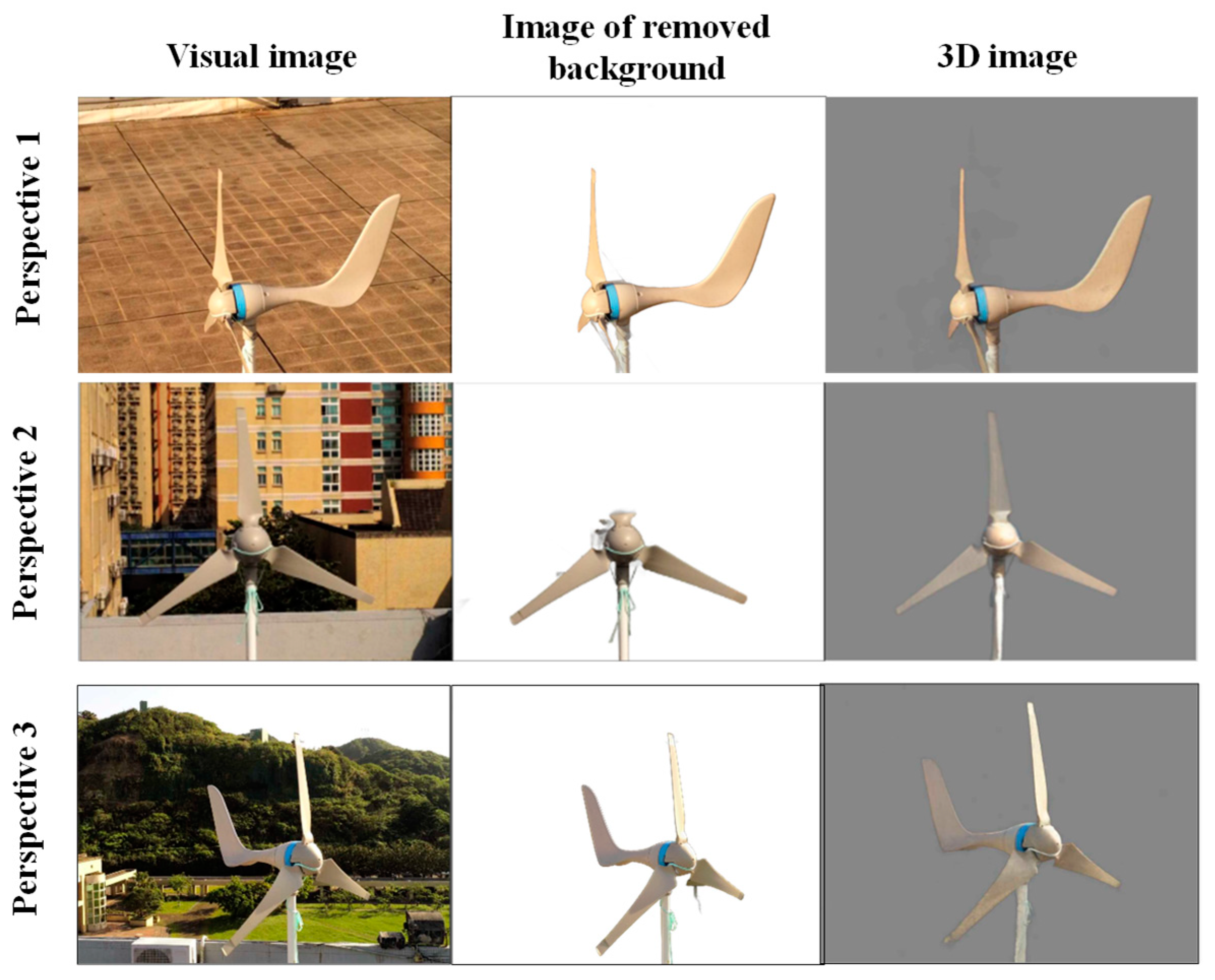

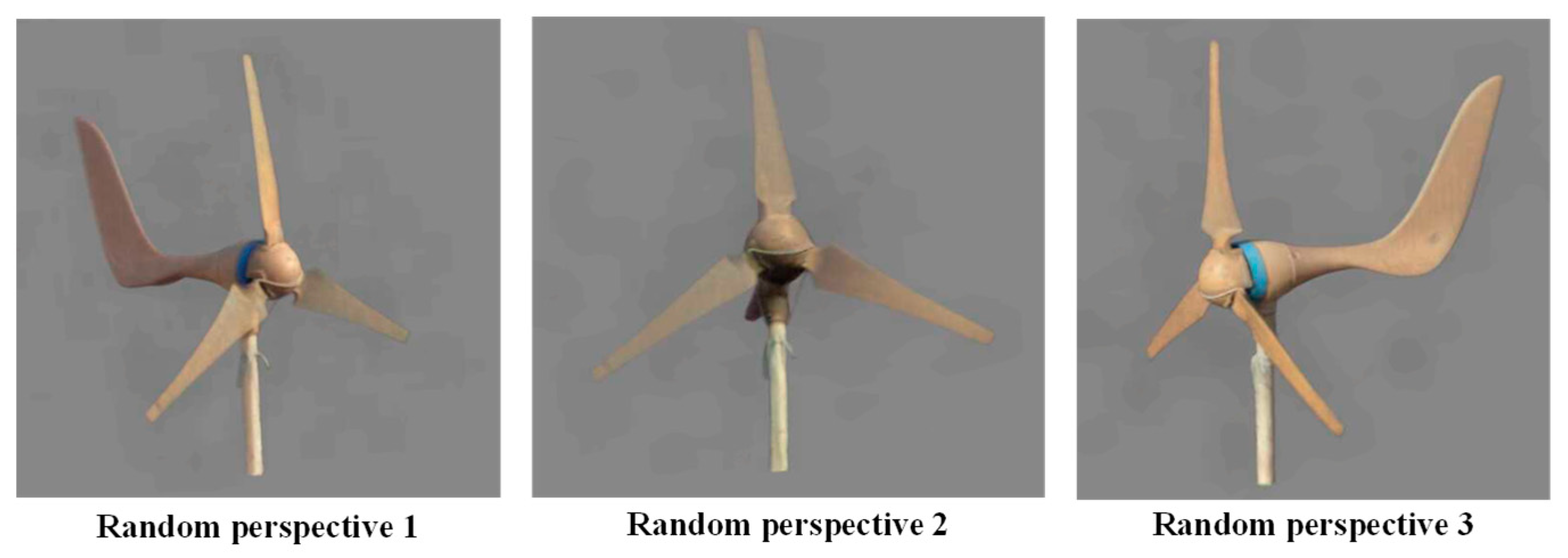

2.1. Three-Dimensional Reconstruction

2.1.1. Removing Background Using the U2-Net Method

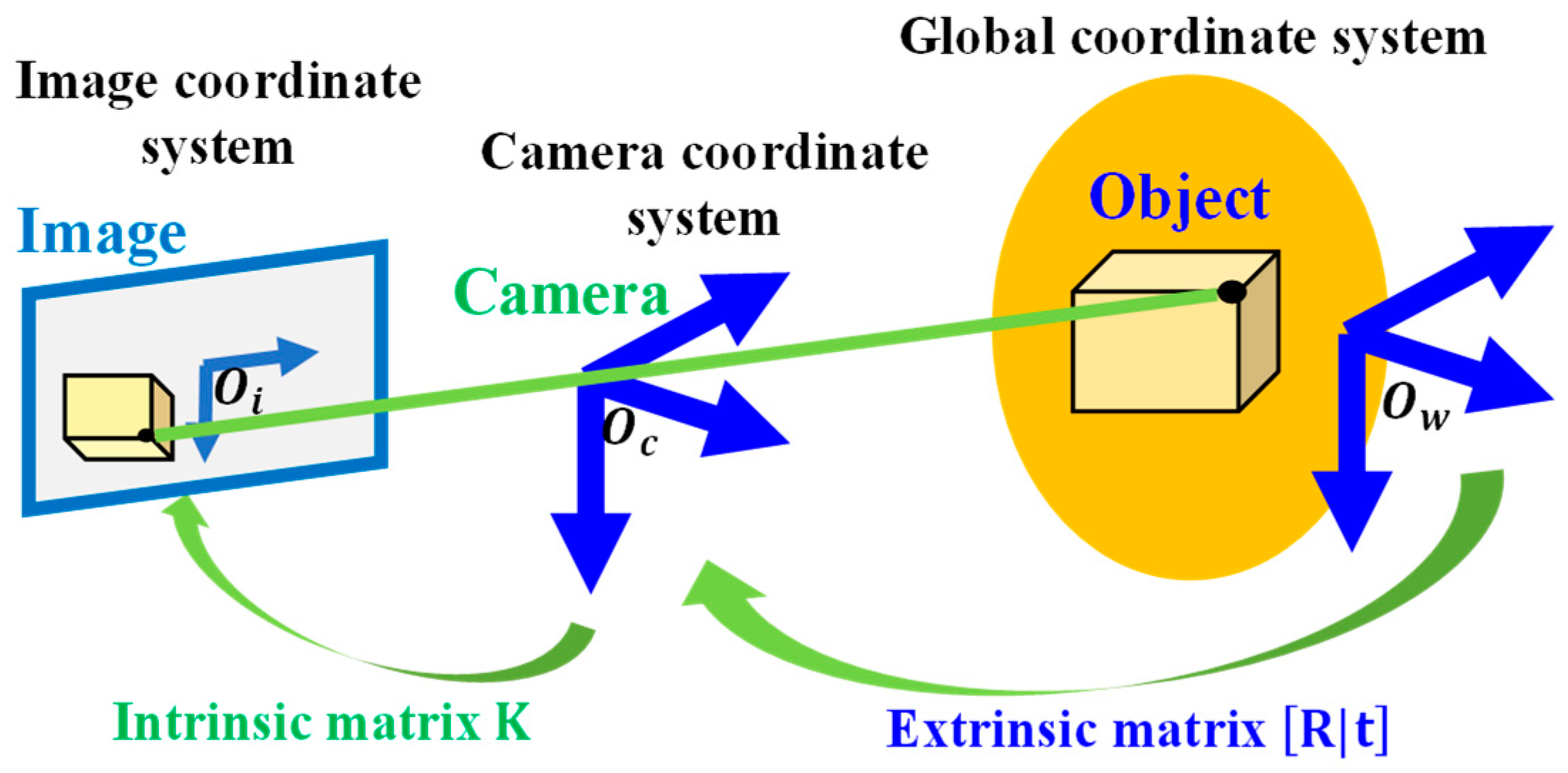

2.1.2. Coordinate Conversion of Camera Posture

Intrinsic Matrix

Extrinsic Matrix

Camera Matrix

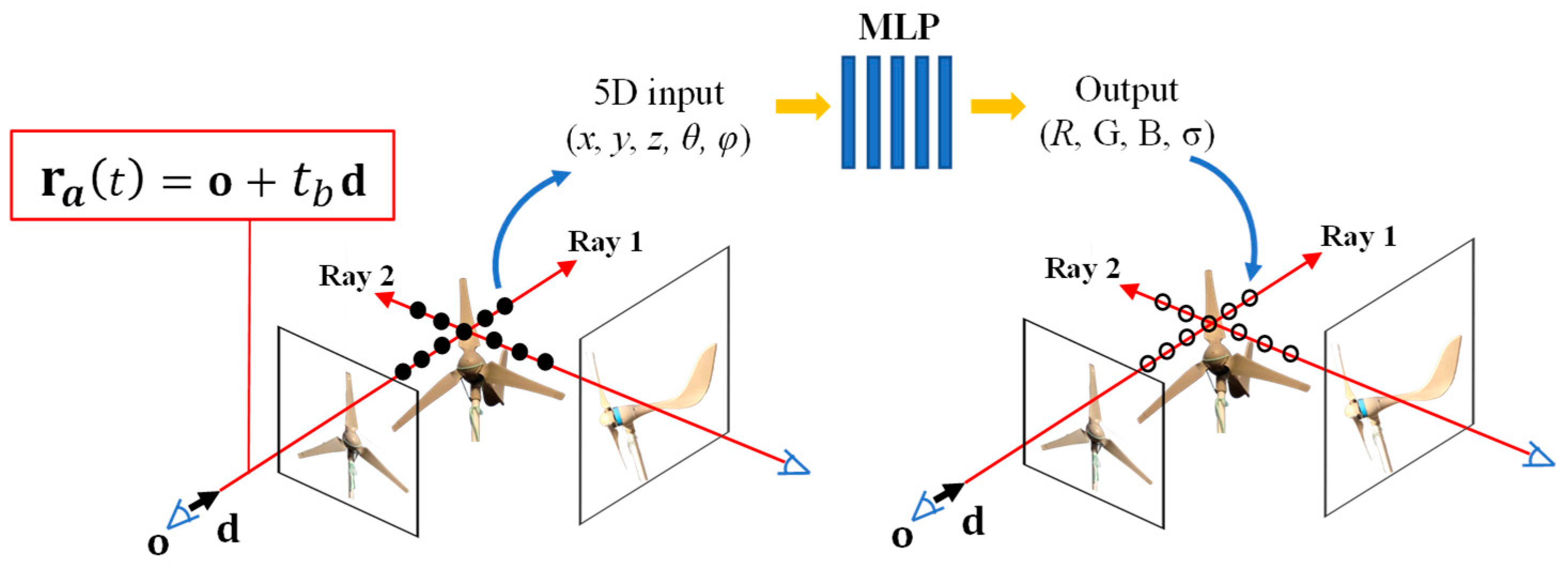

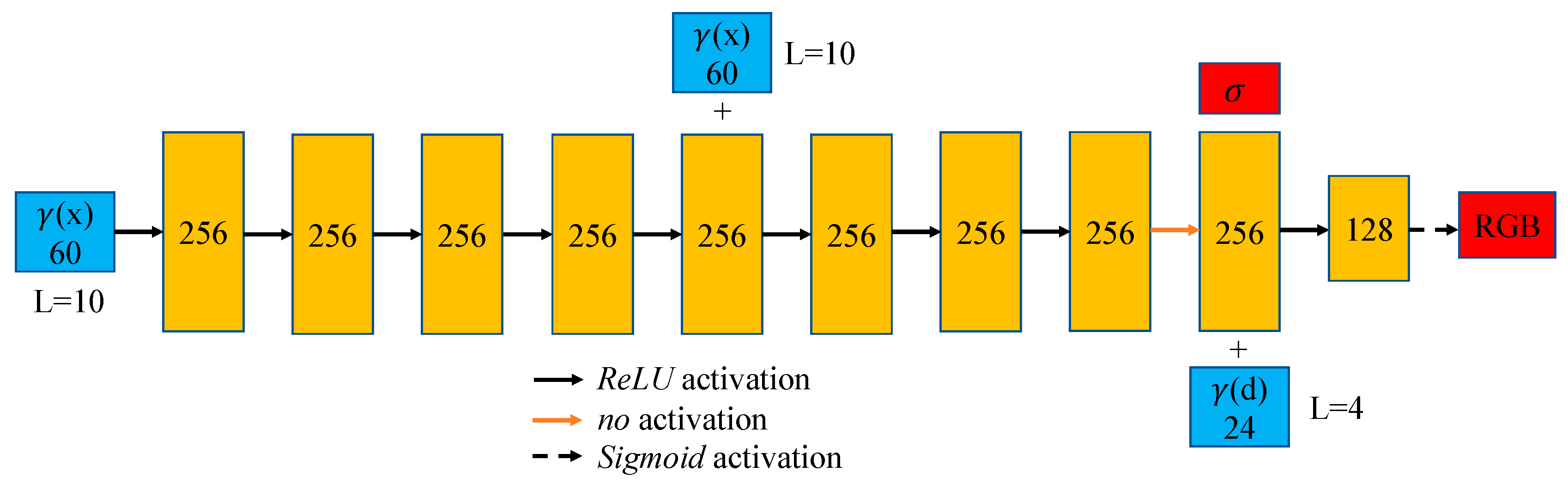

2.1.3. Image Processing of Scene Radiance and Density Fields Using NeRF

2.1.4. Optimizing the NeRF Network Structure Using the Instant NGP Method

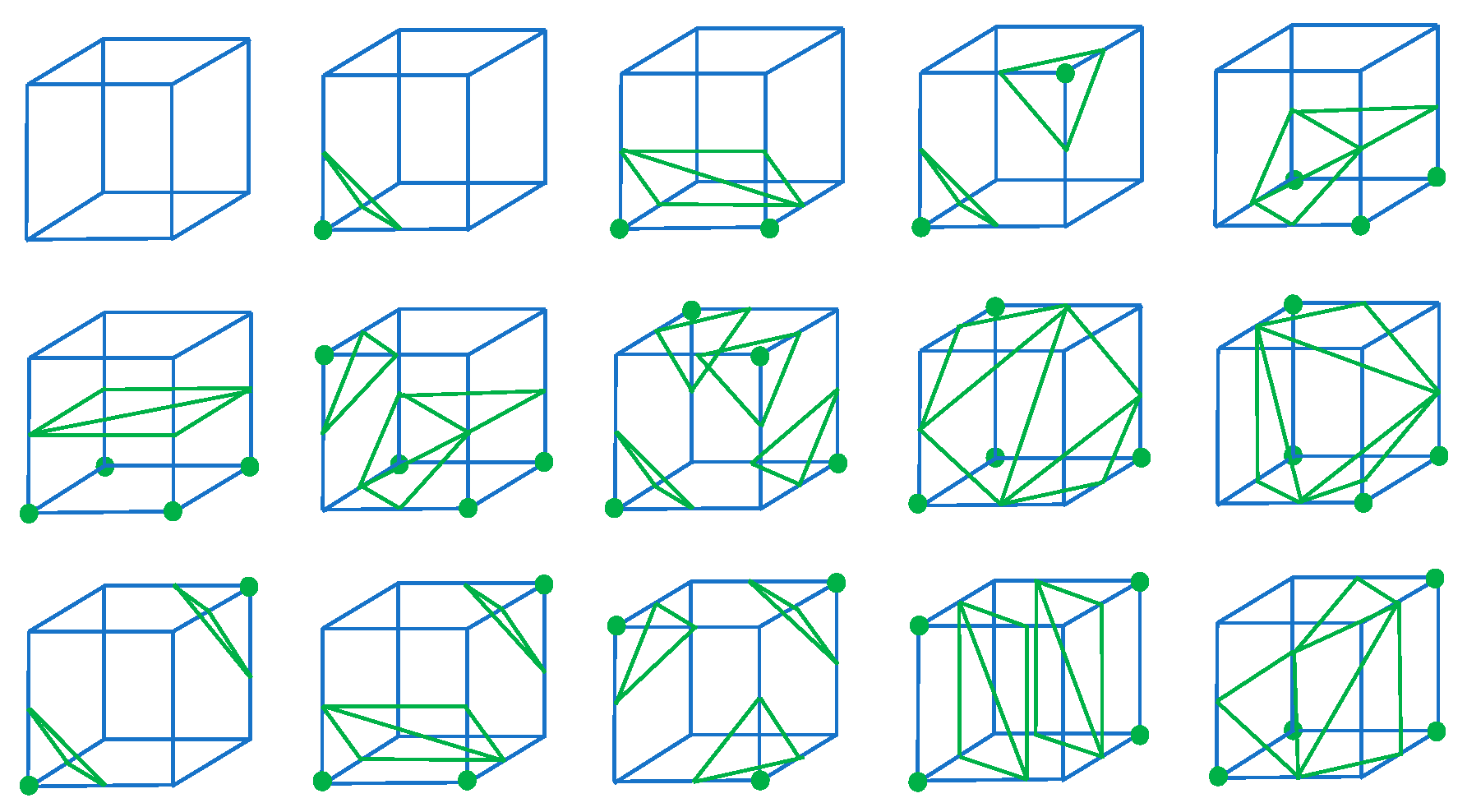

2.1.5. Three-Dimensional Grid Model Generation Using the Marching Cubes Algorithm

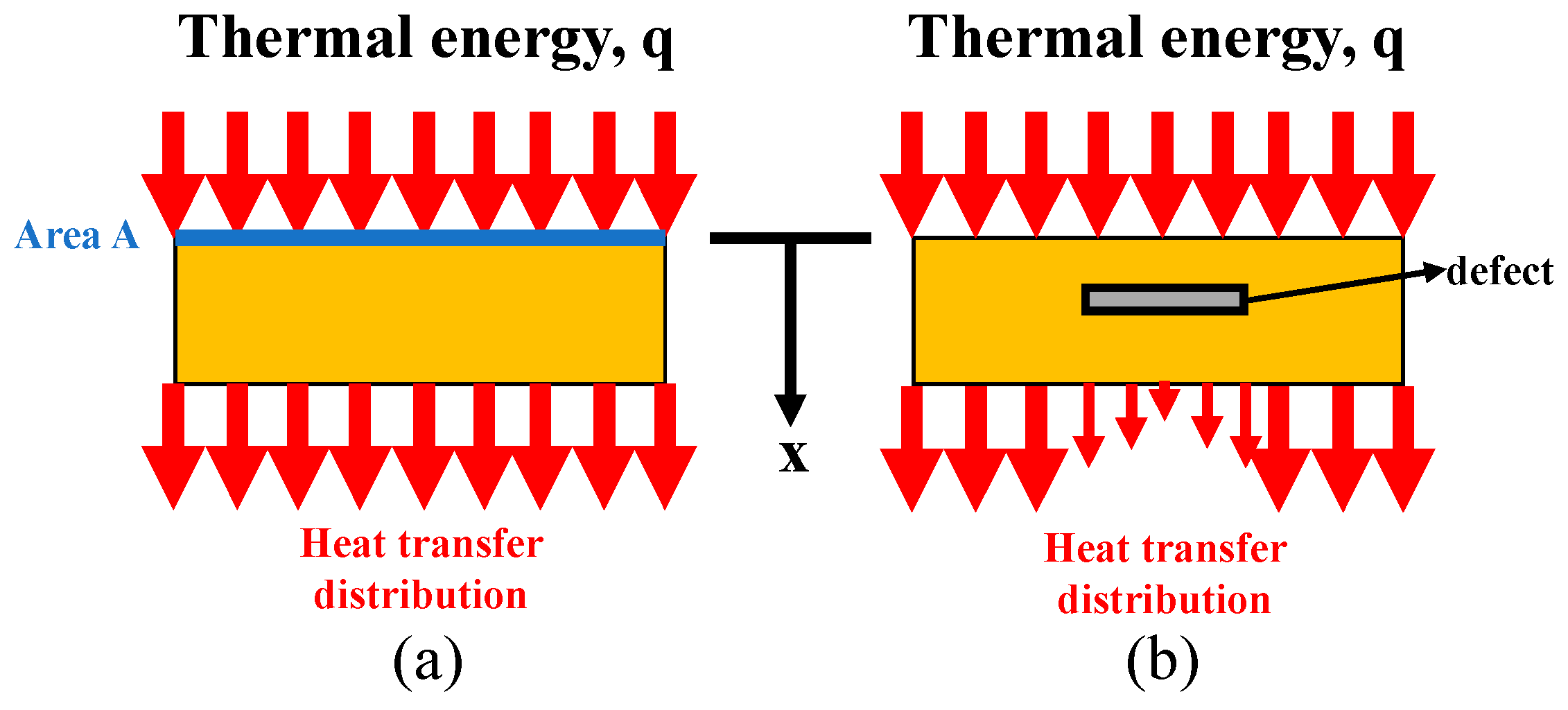

2.2. Heat Conduction Sensing

2.2.1. Thermal Image Analysis

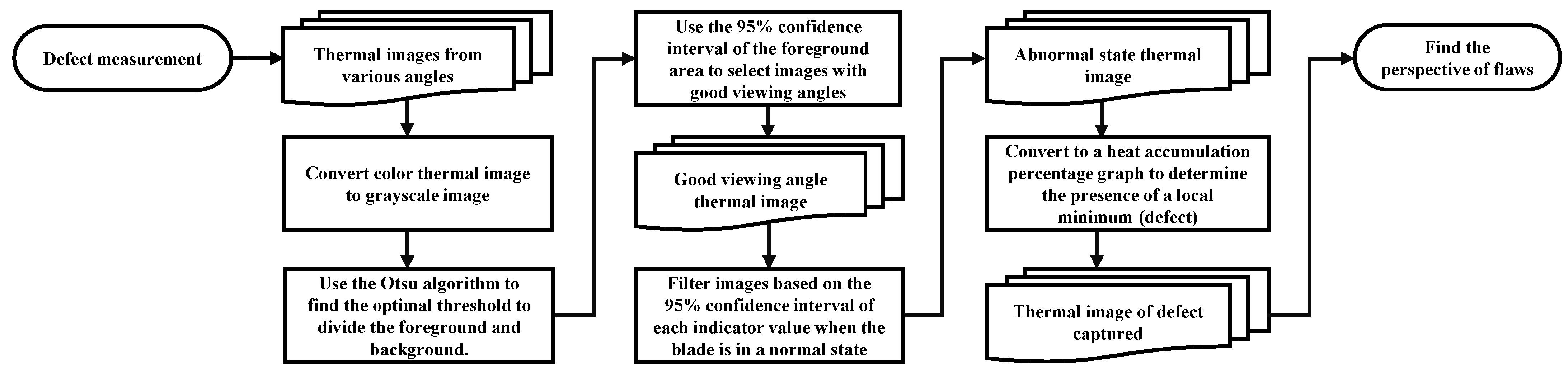

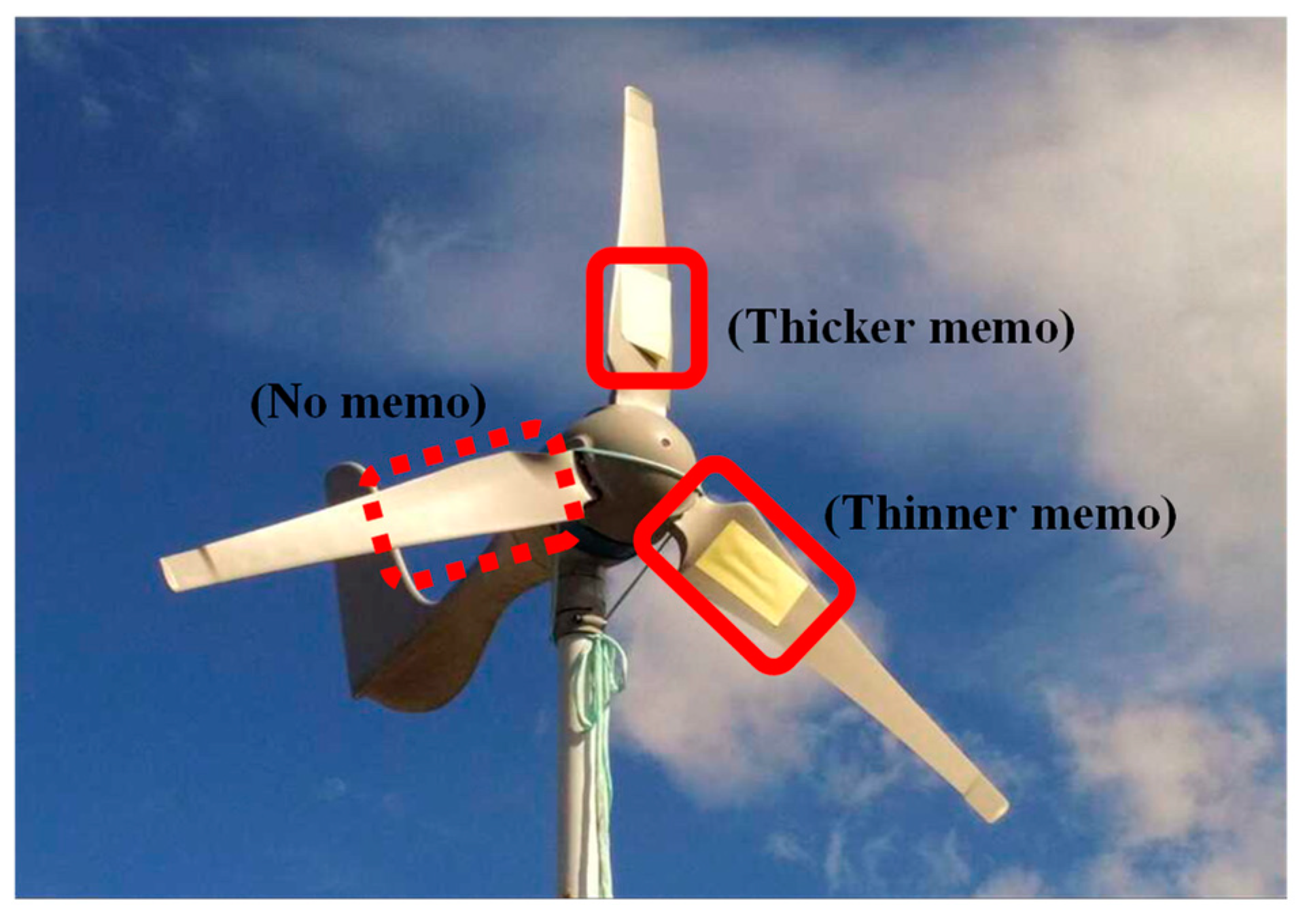

2.2.2. Defect Measurement of Wind Turbines

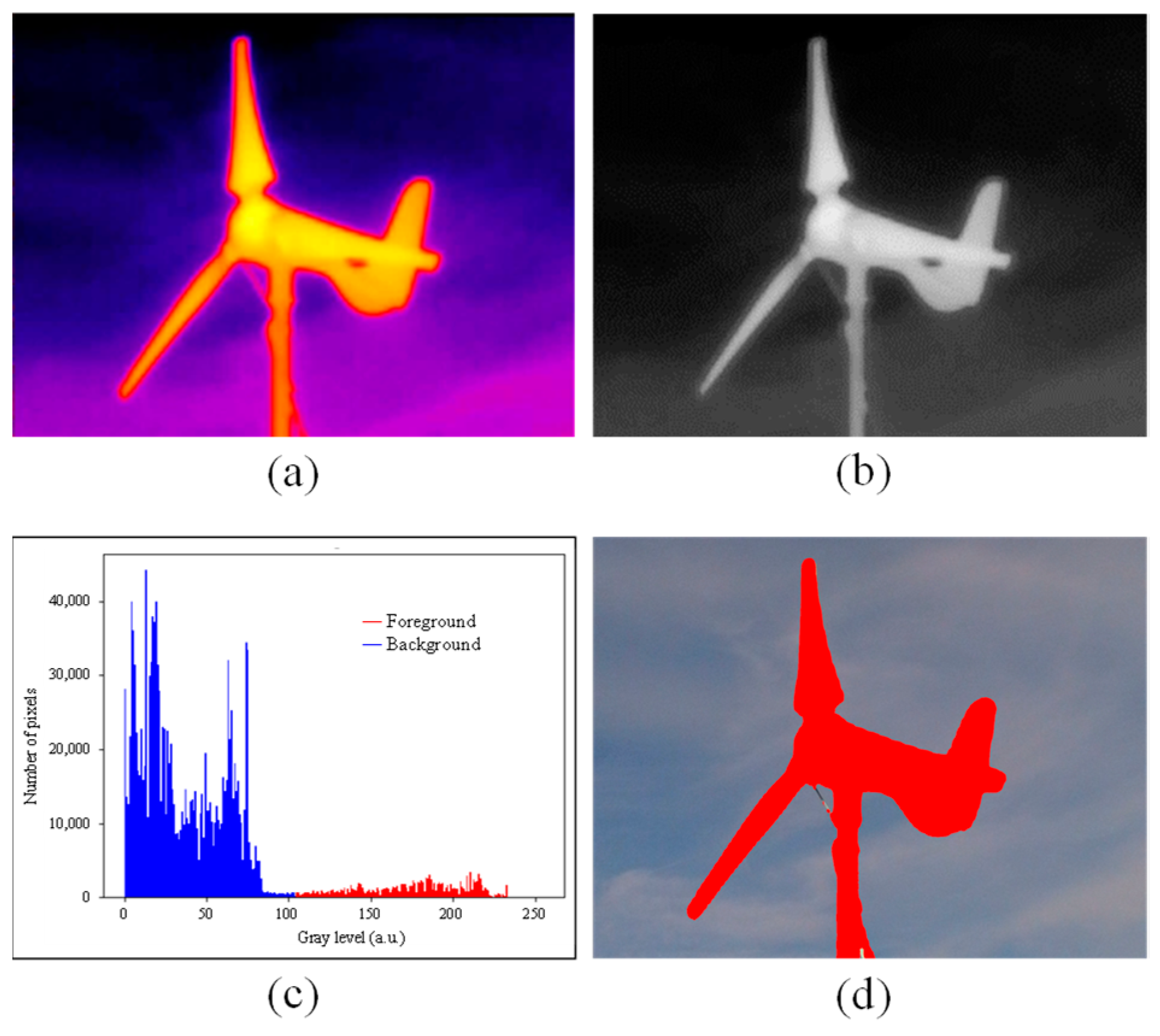

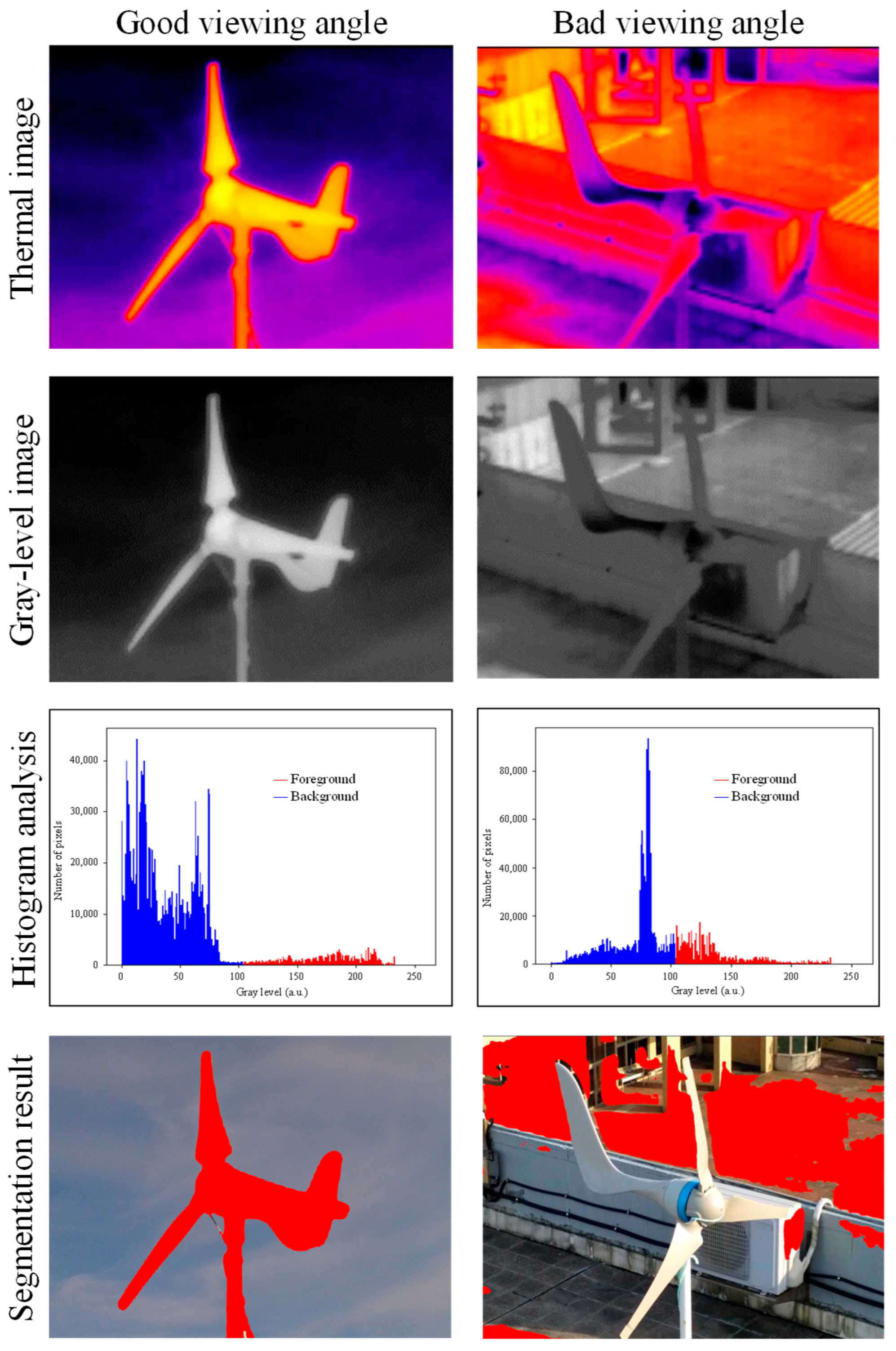

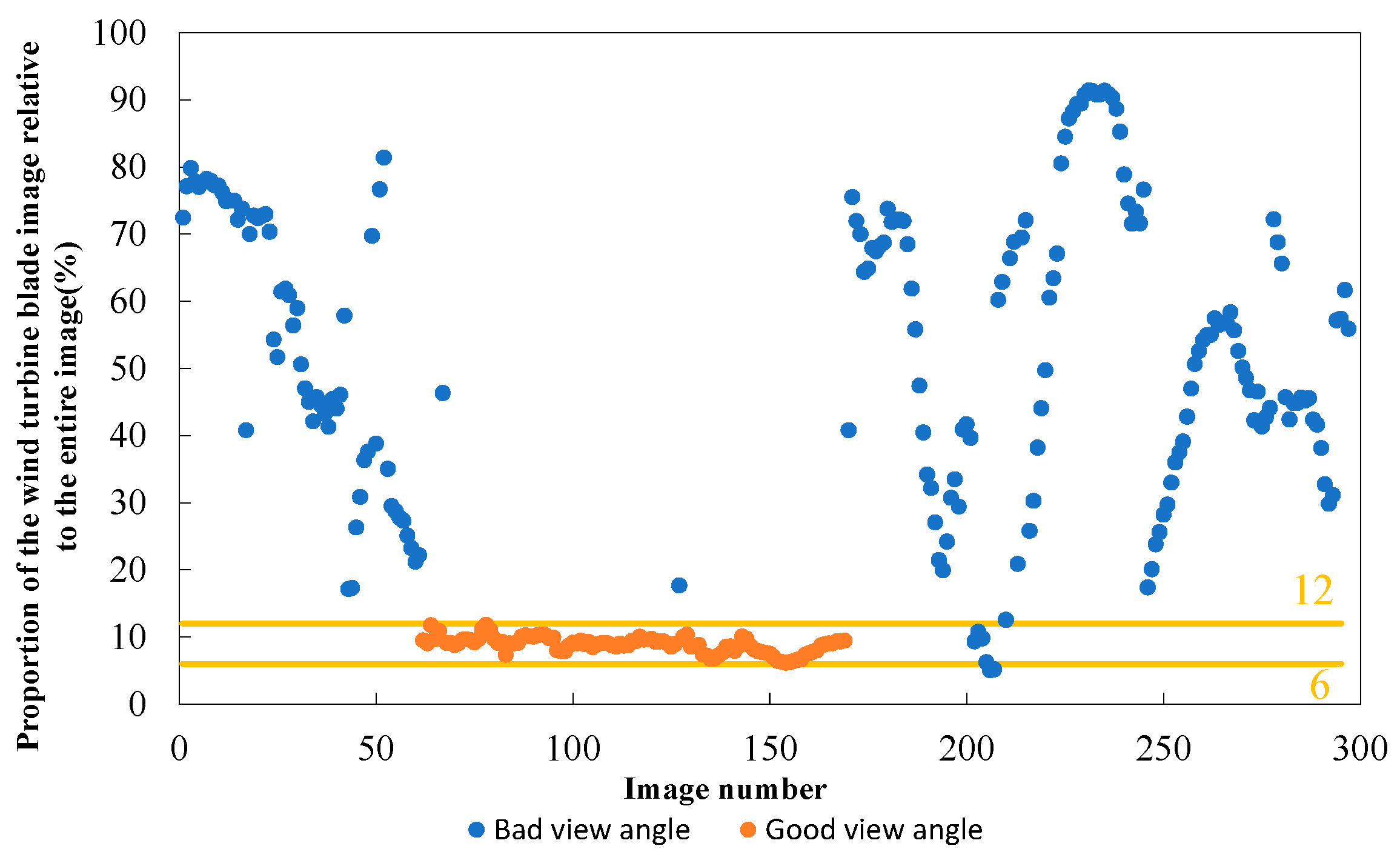

Good Viewing-Angle Image Screening

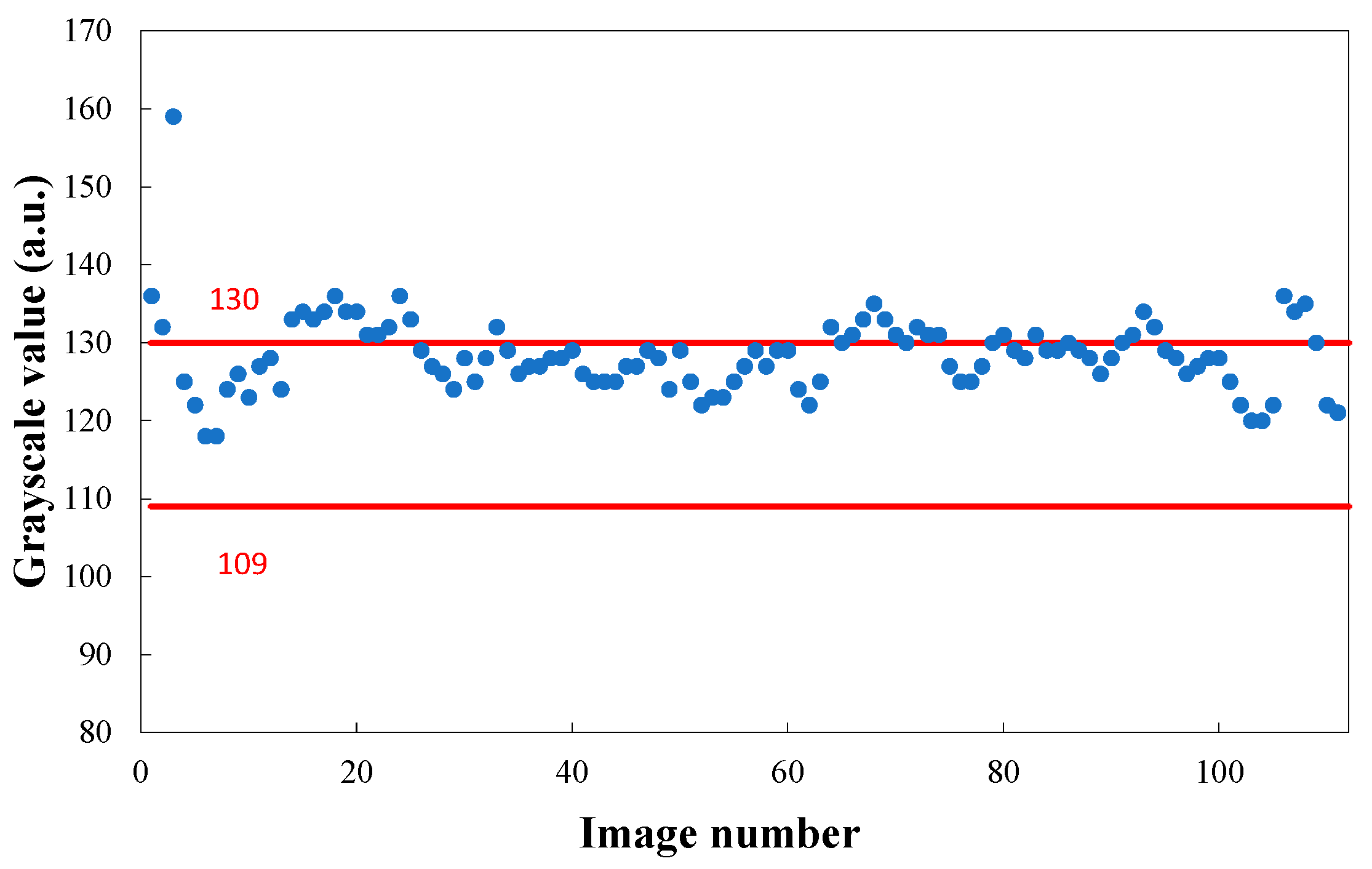

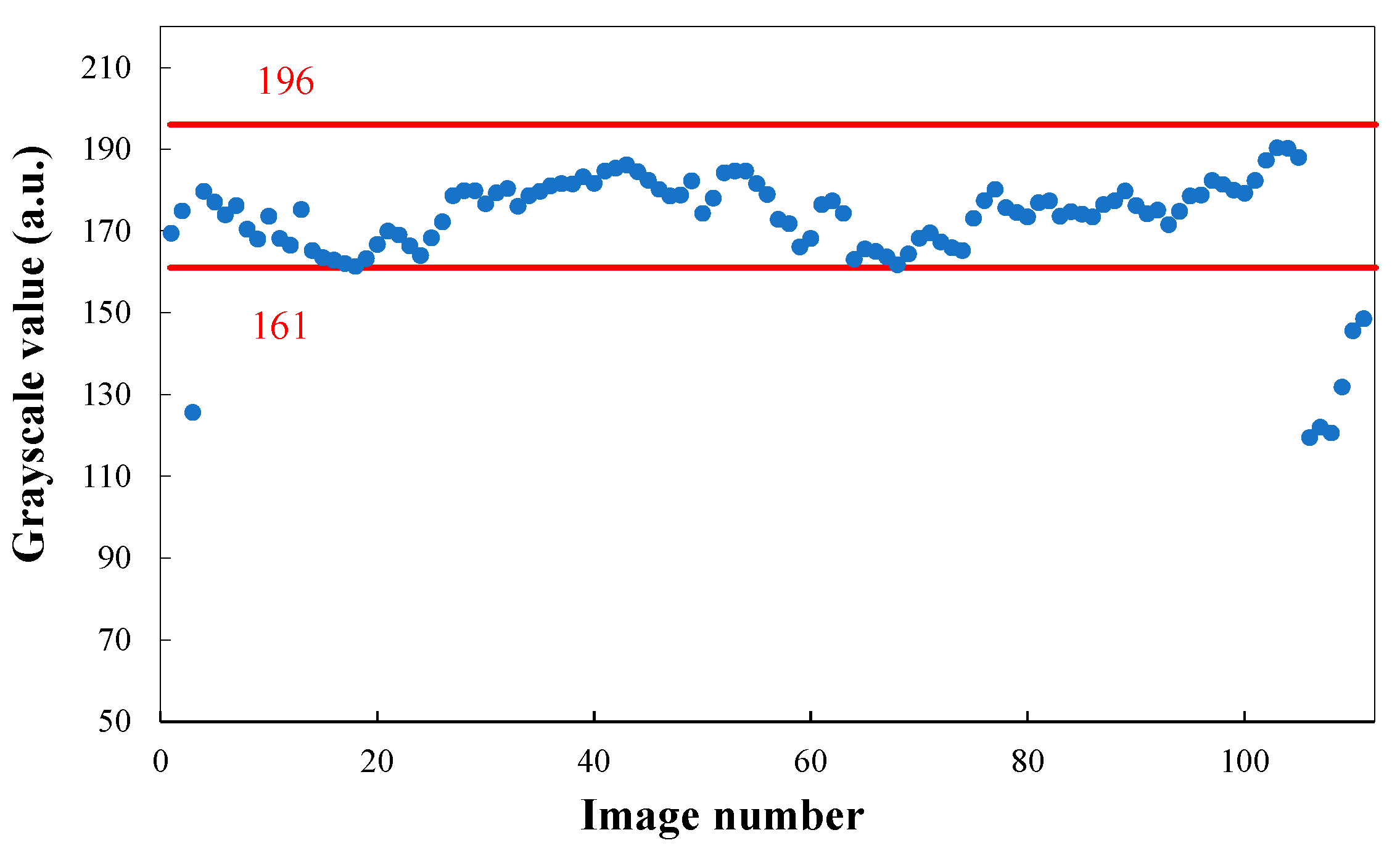

Abnormal Thermal Image Screening

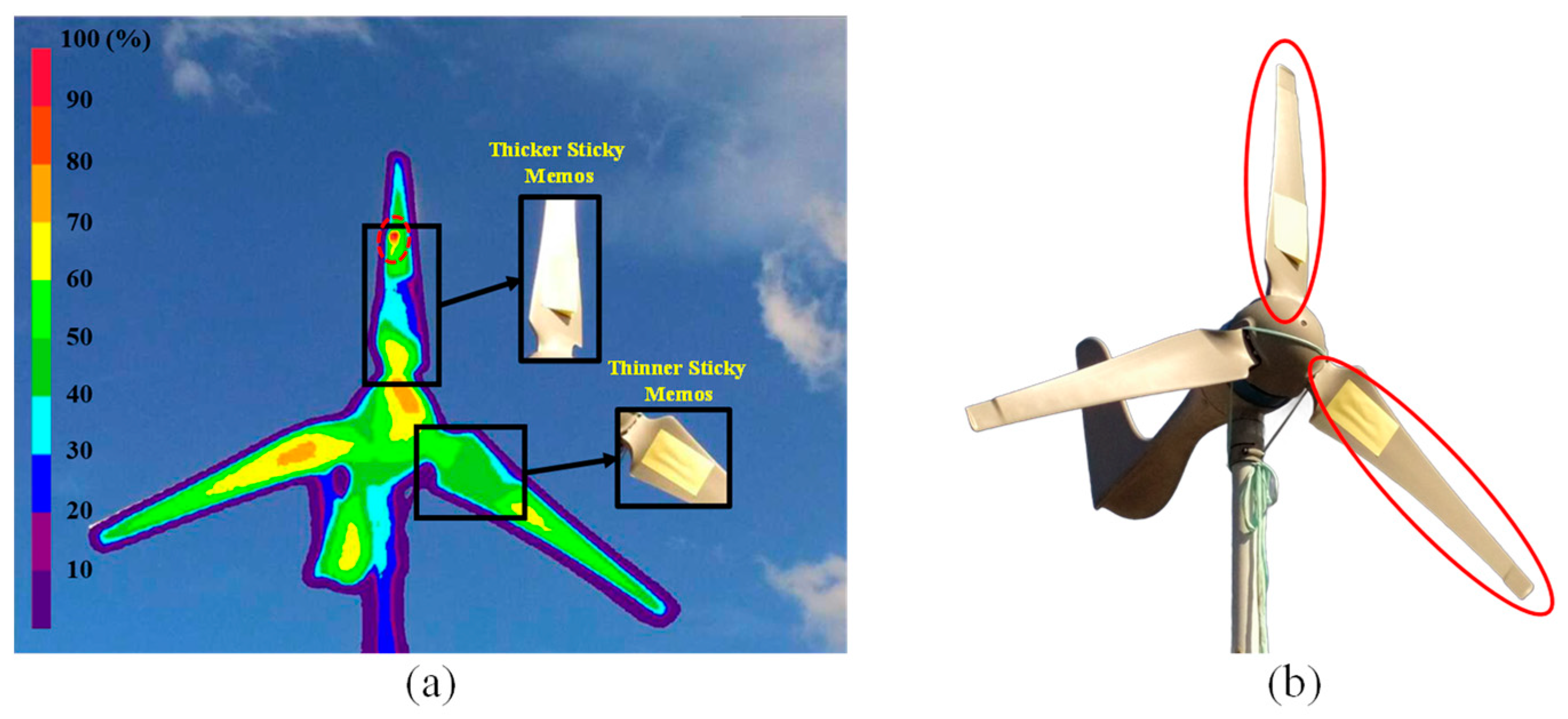

Heat Accumulation Percentage Analysis for Defect Measurement

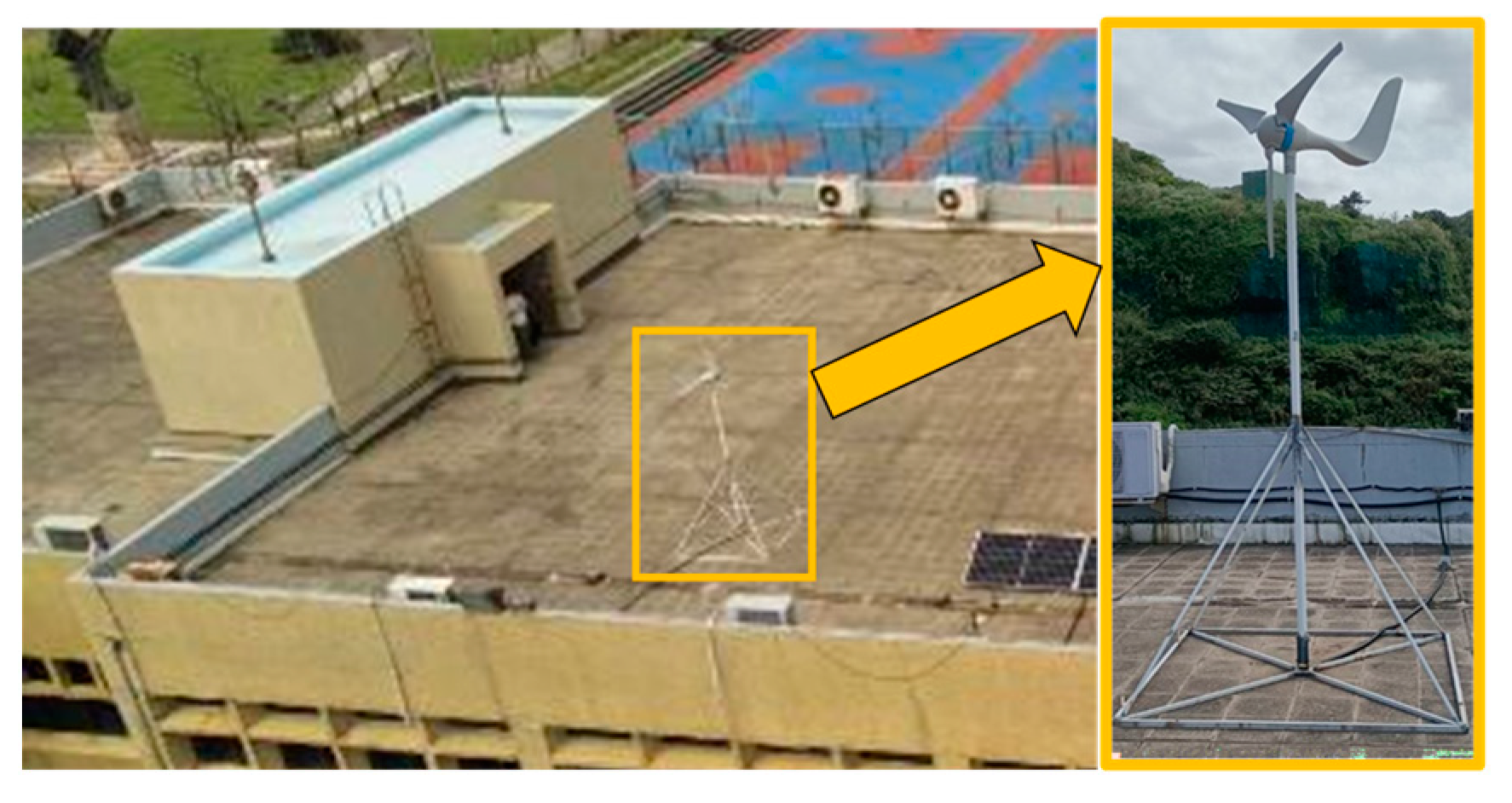

3. Measurement Experiment

4. Measurement Results and Discussion

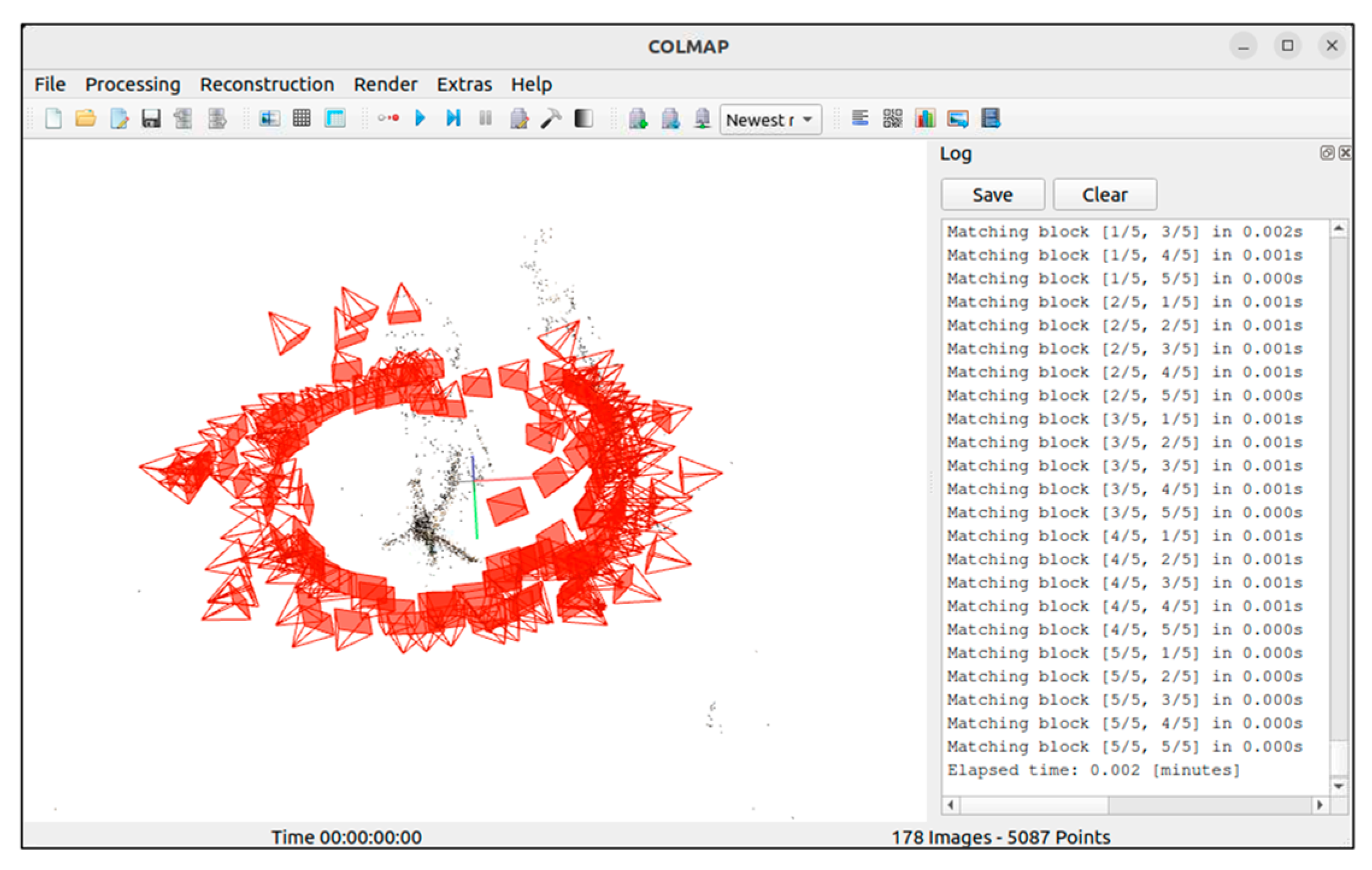

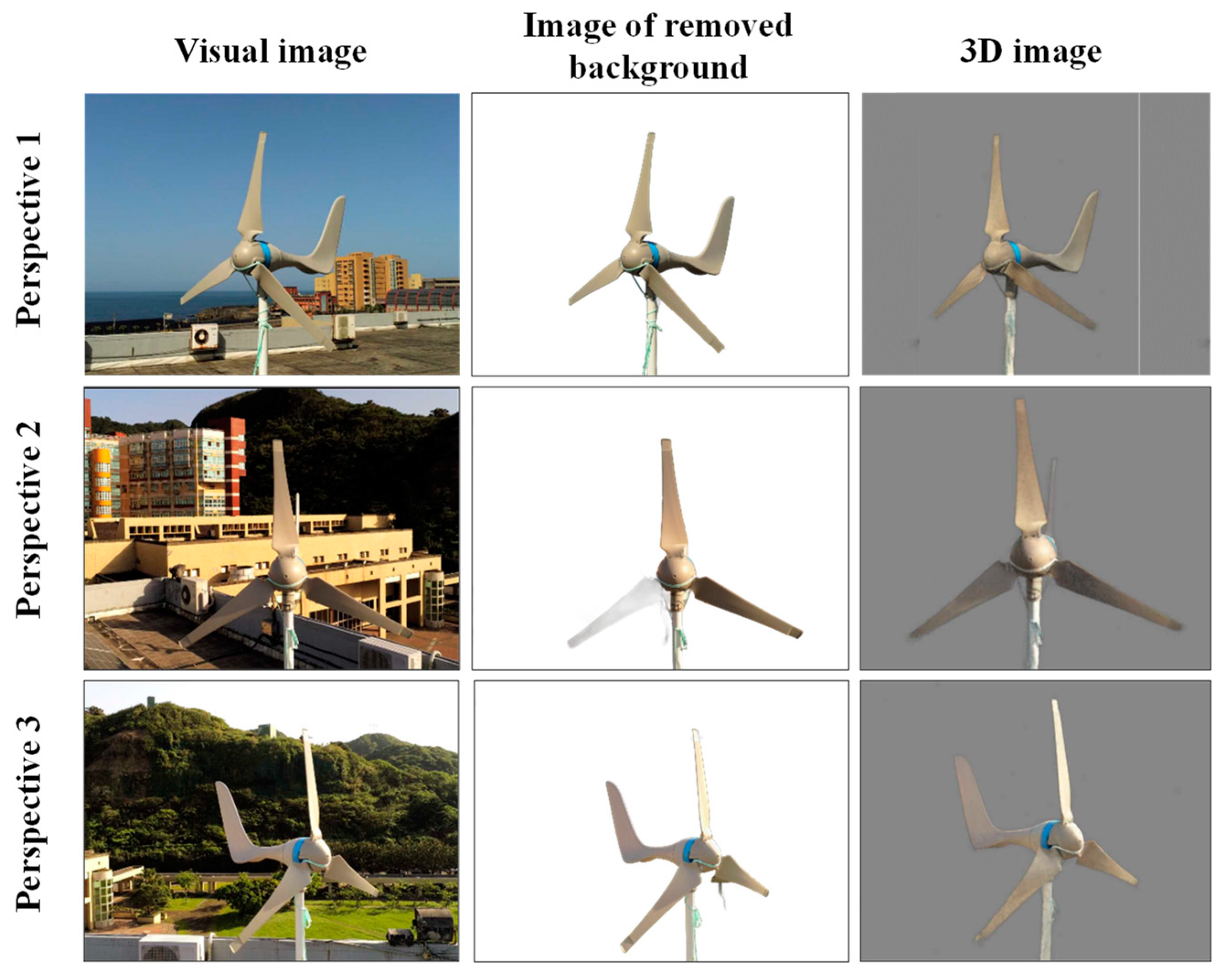

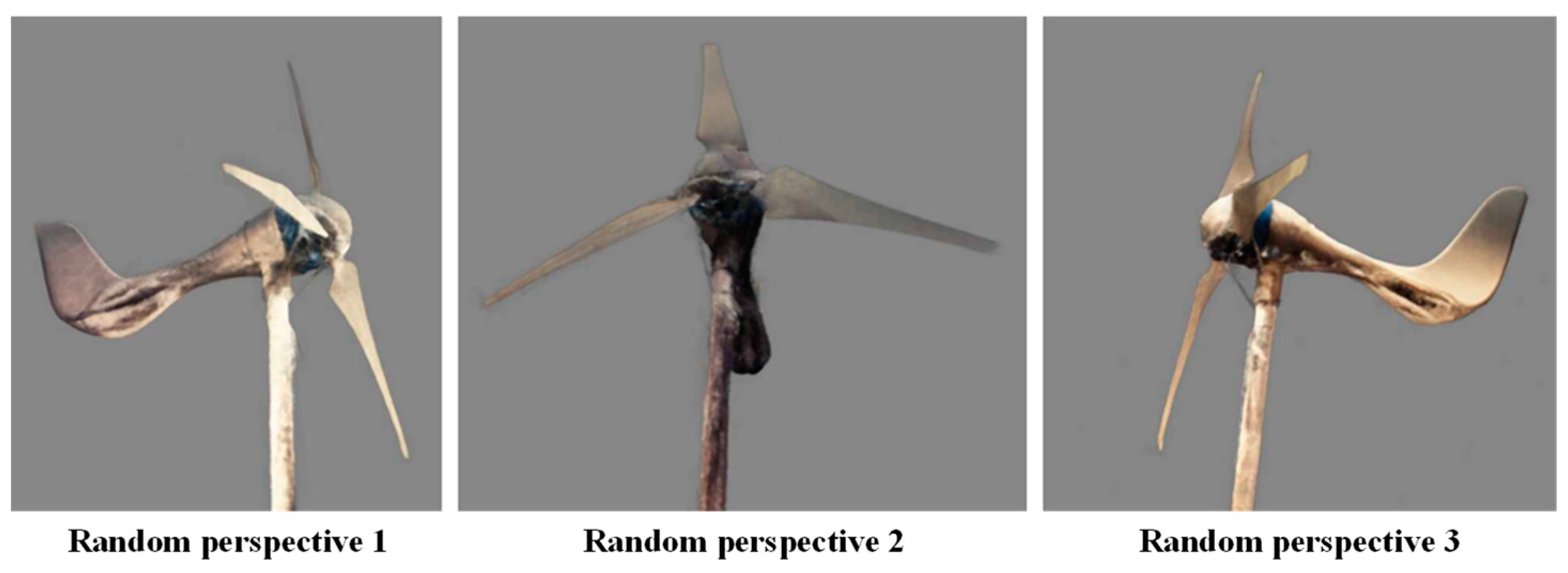

4.1. Three-Dimensional Image Reconstruction

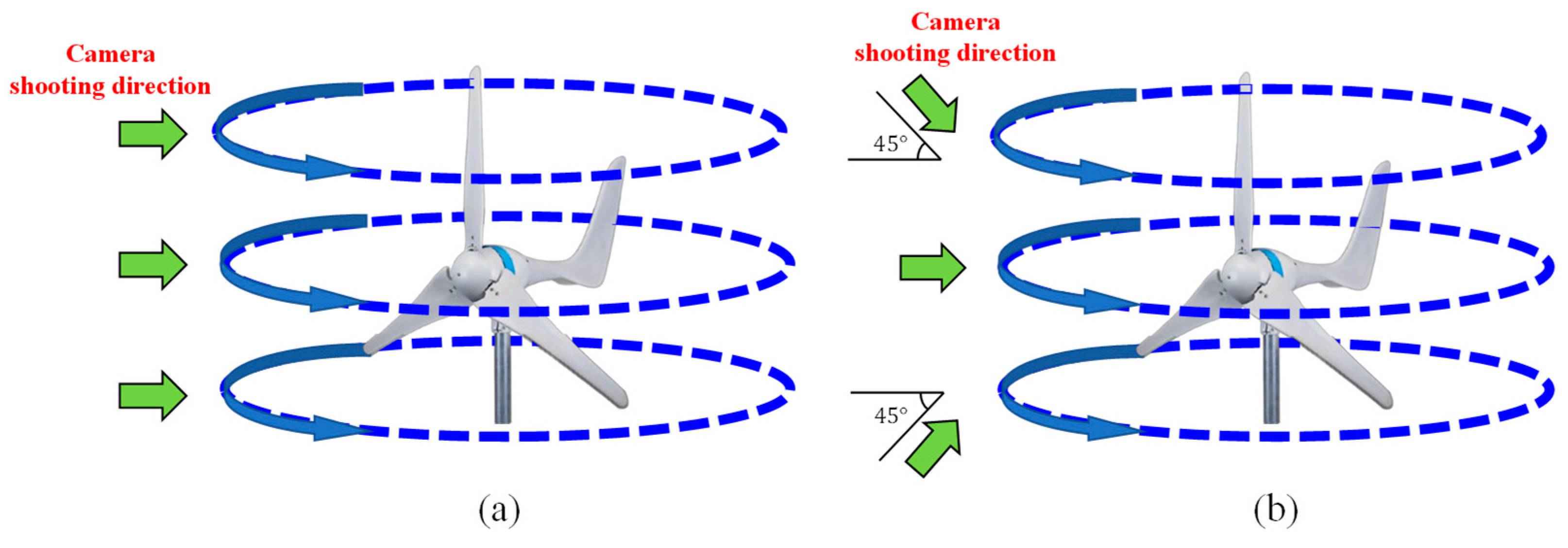

4.1.1. Orthophoto Reconstruction Scheme

4.1.2. Reconstruction Scheme of Tilt and Elevation Images

4.2. Defect Measurement

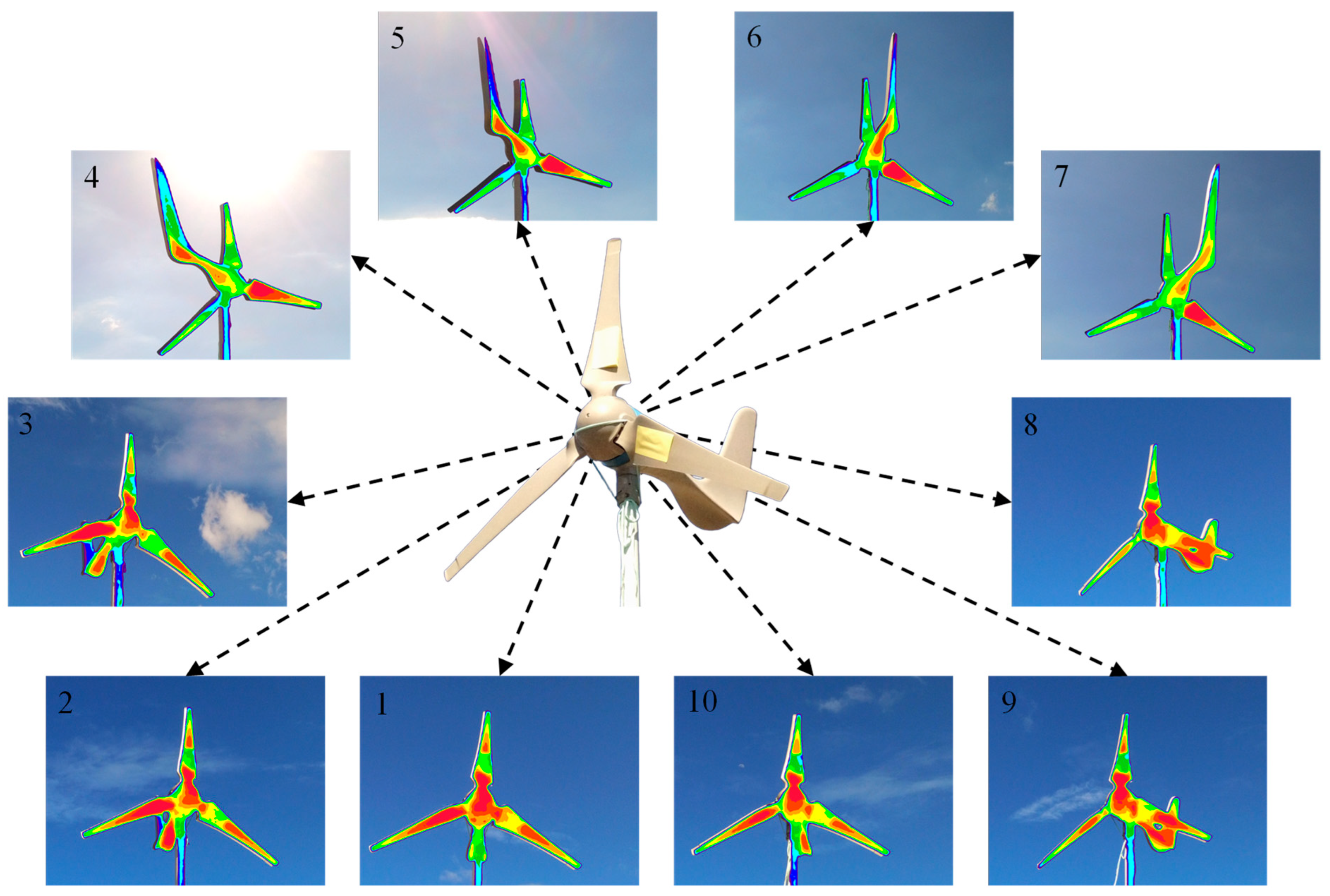

4.2.1. Good Viewing-Angle Image Measurement

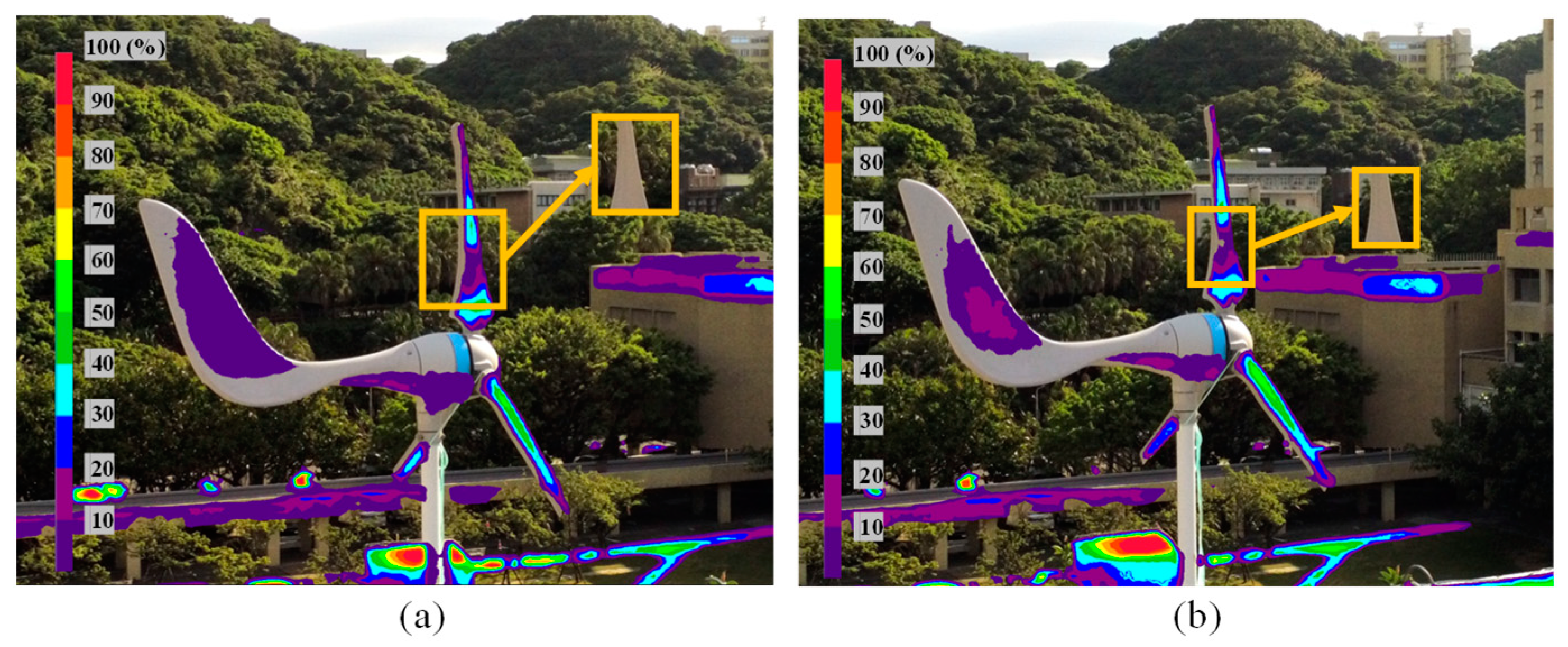

4.2.2. Abnormal Thermal Image Measurement

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Full Range | Average Value | Standard Deviation |
|---|---|---|---|
| Average value | 119 | 179 | 30 |
| Standard deviation | 5 | 9 | 2 |

| Measurement | Thicker defect: Area (mm²) | Thicker defect: TDEF (%) | Thinner defect: Area (mm²) | Thinner defect: TDEF (%) |
|---|---|---|---|---|
| Real area | 9525 | | 9525 | |
| 1 | 9399 | 27 | 8901 | 16 |
| 2 | 9200 | 27 | 8944 | 15 |
| 3 | 9087 | 24 | 8947 | 16 |
| 4 | 9045 | 23 | 8895 | 19 |
| 5 | 9034 | 21 | 9065 | 17 |
| 6 | 9213 | 20 | 8840 | 15 |
| 7 | 9346 | 20 | 8725 | 17 |
| 8 | 9415 | 21 | 8690 | 16 |
| 9 | 9386 | 28 | 9001 | 20 |
| 10 | 9335 | 24 | 8890 | 16 |
| Average | 9246.0 | 23.5 | 8889.8 | 16.7 |
| Standard deviation | 150.30 | 3.03 | 114.98 | 1.64 |
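The average and standard deviation entries in the table above can be checked directly from the ten repeated measurements of each defect. The following is a minimal sketch, assuming the sample (n - 1) standard deviation was used; the names `measurements` and `summarize` are illustrative and do not come from the paper.

```python
from statistics import mean, stdev

# Ten repeated measurements per defect, copied from the table above.
measurements = {
    "thicker": {
        "area_mm2": [9399, 9200, 9087, 9045, 9034, 9213, 9346, 9415, 9386, 9335],
        "tdef_pct": [27, 27, 24, 23, 21, 20, 20, 21, 28, 24],
    },
    "thinner": {
        "area_mm2": [8901, 8944, 8947, 8895, 9065, 8840, 8725, 8690, 9001, 8890],
        "tdef_pct": [16, 15, 16, 19, 17, 15, 17, 16, 20, 16],
    },
}


def summarize(values):
    """Return (mean, sample standard deviation) for a list of measurements."""
    # Assumption: stdev() divides by n - 1 (sample standard deviation).
    # Use statistics.pstdev() instead for the population form (divide by n).
    return mean(values), stdev(values)


# Nested dict: defect -> quantity -> (mean, standard deviation)
summary = {
    defect: {name: summarize(vals) for name, vals in columns.items()}
    for defect, columns in measurements.items()
}

for defect, columns in summary.items():
    for name, (avg, sd) in columns.items():
        print(f"{defect:8s} {name:9s} mean={avg:.1f} std={sd:.2f}")
```

Under the n - 1 assumption, the computed values appear to match the tabulated averages and standard deviations to the printed precision, which suggests the table reports sample rather than population standard deviations.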

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hung, C.-Y.; Chu, H.-Y.; Wang, Y.-M.; Wen, B.-J. Three-Dimensional Defect Measurement and Analysis of Wind Turbine Blades Using Unmanned Aerial Vehicles. Drones 2025, 9, 342. https://doi.org/10.3390/drones9050342
