AI-Driven UAV and IoT Traffic Optimization: Large Language Models for Congestion and Emission Reduction in Smart Cities

Abstract

1. Introduction

- Wide-area coverage: Unlike ground-based sensors, UAVs can monitor multiple road segments simultaneously.

- Real-time congestion assessment: UAVs capture dynamic traffic patterns, including bottlenecks and queue formations.

- Enhanced AI input: Aerial imagery provides additional traffic state variables (e.g., lane density, vehicle trajectory prediction), which can be fused with IoT sensor data in the LLM prompt.

2. Related Work

- Image Processing Complexity: Extracting congestion metrics from UAV imagery requires object detection and computer-vision techniques.

- Measurement Error and Environmental Constraints: UAV observations may be affected by occlusion (e.g., buildings obstructing vehicle visibility), lighting conditions, and camera resolution.

- Real-time Data Transmission: Unlike induction loops that provide direct occupancy values, UAVs require high-bandwidth data links for real-time image processing.

- Battery and Flight Time Limitations: Most UAVs have limited operational endurance, requiring frequent battery replacements or recharging.

Contributions of This Study

- A novel AI-driven traffic optimization framework integrating Large Language Models (LLMs) with IoT-based monitoring (induction loops and drones).

- Implementation of real-time AI intervention in SUMO, where the LLM dynamically adjusts vehicle speeds based on congestion levels.

- Experimental evaluation across three urban scenarios: Pacific Beach and Coronado (San Diego) and Argüelles (Madrid).

- Demonstration of significant congestion and CO2 emission reductions, highlighting the potential of AI-enhanced urban mobility systems.

3. Methodology

3.1. Traffic Model and IoT Sensor Representation

- V is the set of intersections (nodes) in the network.

- E is the set of roads (edges) connecting intersections.

- Segment length: the length of road segment e.

- Lane capacity: the maximum number of vehicles that can be accommodated per unit length.

- Mean segment speed: the mean vehicle speed at time t over all vehicles traveling along segment e. Unless stated otherwise, this average is the harmonic mean speed, defined as $\bar{v}_e(t) = \dfrac{N_e(t)}{\sum_{o=1}^{N_e(t)} 1/v_o(t)}$, where $N_e(t)$ is the number of vehicles on segment e at time t and $v_o(t)$ is the instantaneous speed of the o-th vehicle. The harmonic mean is chosen because it represents traffic flow conditions more accurately, particularly in congested scenarios.

- UAV-measured mean velocity: the mean vehicle velocity measured at the UAV’s observation point or over a designated section u of given length.

- UAV-observed density: the vehicle density per lane, calculated as $k_u(t) = n_u(t)/L_u$, where $n_u(t)$ is the number of vehicles observed within the UAV’s coverage area at time t and $L_u$ is the length of the observed road section.

- UAV camera parameters: the UAV’s camera angle and resolution, both of which influence the precision of vehicle detection.

- Detected vehicles: the number of vehicles detected at a given sensor at time t.

- Sensor capacity: the road capacity at the sensor location, defined as the maximum number of vehicles that can be simultaneously present in the sensor’s detection zone.
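The per-segment and per-sensor quantities above can be computed from raw observations as in the following minimal Python sketch. The function names are hypothetical, speeds are assumed to be in m/s, and the induction-loop congestion ratio (detected vehicles divided by sensor capacity, capped at 1) is an assumption about how those two quantities combine rather than a formula stated in this section.

```python
from typing import Sequence


def harmonic_mean_speed(speeds: Sequence[float]) -> float:
    """Harmonic mean of instantaneous vehicle speeds (m/s) on one segment.

    Returns 0.0 for an empty segment, and also when any vehicle is stopped,
    because the harmonic mean tends to zero as one speed approaches zero.
    """
    if not speeds or any(v <= 0.0 for v in speeds):
        return 0.0
    return len(speeds) / sum(1.0 / v for v in speeds)


def uav_density(vehicles_observed: int, section_length_m: float) -> float:
    """Vehicles per metre over the UAV-observed section (count / length)."""
    if section_length_m <= 0.0:
        raise ValueError("section length must be positive")
    return vehicles_observed / section_length_m


def loop_congestion_ratio(vehicles_detected: int, sensor_capacity: int) -> float:
    """Hypothetical congestion level: detections over capacity, capped at 1.0."""
    if sensor_capacity <= 0:
        raise ValueError("sensor capacity must be positive")
    return min(vehicles_detected / sensor_capacity, 1.0)


# Two vehicles at 10 m/s and 5 m/s.
print(round(harmonic_mean_speed([10.0, 5.0]), 2))  # 6.67
```

For speeds of 10 m/s and 5 m/s the harmonic mean is about 6.67 m/s, below the arithmetic mean of 7.5 m/s, which illustrates why the harmonic mean is more sensitive to slow vehicles in congestion.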

- Real-world data sources: Traffic flow data from OpenStreetMap (OSM) and empirical studies were used to define major road usage patterns.

- Randomized route assignment: Vehicles were assigned routes stochastically based on probability distributions that reflect expected traffic conditions in each scenario. The source of randomness arises from multiple factors:

  - User perception of route costs: Drivers may select routes based on subjective assessments of travel time, congestion risk, or familiarity.

  - Variability in congestion conditions: Since congestion evolves dynamically, vehicles entering the network at different times may experience different levels of congestion, leading to probabilistic route selection.

  - Heterogeneous driver behavior: Individual vehicles may have different routing preferences, including shortest-path, fastest-path, or traffic-avoidance strategies.

  - Traffic assignment models: The simulation incorporates stochastic traffic assignment, where vehicles probabilistically choose between multiple feasible routes rather than always selecting the single shortest or fastest path.

- Dynamic demand variations: The total number of vehicles per simulation step was adjusted dynamically to simulate peak and off-peak conditions, ensuring that the network reflects real-world traffic fluctuations.

- Simulation-level classification: In SUMO, vehicles are explicitly assigned categories based on predefined vehicle type definitions. These include parameters such as length, maximum speed, acceleration profile, and emission characteristics. The assignment of vehicle types follows real-world proportions, ensuring a realistic traffic composition.

- Sensor-based classification: The IoT sensors (induction loops or UAV-based surveillance) provide indirect classification by measuring vehicle length, speed, and occupancy patterns. Induction loops can differentiate between light and heavy vehicles based on their presence duration in the sensor area, while UAV imagery could enhance classification accuracy using computer-vision techniques that detect vehicle dimensions and shapes in real time. Future extensions of this work could integrate deep-learning-based vehicle classification models for improved accuracy in drone-assisted monitoring.
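Probabilistic choice among feasible routes is commonly modelled with a multinomial logit, in which cheaper routes are more likely, but not certain, to be chosen. The sketch below uses that model only as an illustration; the section does not state which choice model the simulation uses, and the route names, perceived costs and dispersion parameter `theta` are hypothetical.

```python
import math
import random
from typing import Dict


def logit_route_probabilities(costs: Dict[str, float], theta: float = 0.1) -> Dict[str, float]:
    """Multinomial logit choice: P(route) is proportional to exp(-theta * perceived_cost)."""
    c_min = min(costs.values())  # shift costs so the largest weight is exp(0) = 1
    weights = {r: math.exp(-theta * (c - c_min)) for r, c in costs.items()}
    total = sum(weights.values())
    return {r: w / total for r, w in weights.items()}


def sample_route(costs: Dict[str, float], rng: random.Random, theta: float = 0.1) -> str:
    """Draw one route for one vehicle from the logit probabilities."""
    probs = logit_route_probabilities(costs, theta)
    routes = list(probs)
    return rng.choices(routes, weights=[probs[r] for r in routes], k=1)[0]


rng = random.Random(42)
perceived = {"coastal": 300.0, "inland": 320.0}  # hypothetical perceived travel times (s)
picks = [sample_route(perceived, rng) for _ in range(1000)]
print(picks.count("coastal") / len(picks))  # close to 0.88 for theta = 0.1
```

Smaller values of `theta` make choices more random, which is one way to represent heterogeneous drivers or uncertain cost perception; very large values approach all-or-nothing shortest-path assignment.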

3.2. Scenario Selection and Network Editing in SUMO

- Location Selection: We identified high-traffic areas in each city where congestion is a known issue.

- Data Extraction: Using SUMO’s osmWebWizard (see Figure 2), we downloaded road network data from OpenStreetMap. This tool lets users select a geographic region and automatically converts the OSM data into a SUMO-compatible network.

- Conversion to SUMO Format: The extracted OSM data are converted into an XML-based SUMO network file (.net.xml), which includes information on road types, intersections, lane configurations, and traffic signals.

- Intersection Editing: Adjusting traffic light placements and modifying lane configurations to reflect real-world conditions.

- Road Modifications: Fine-tuning speed limits, lane connections, and vehicle flow dynamics to match observed traffic behavior.

- Sensor Placement: Adding induction-loop detectors and defining areas for drone-based monitoring to collect real-time traffic data.
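Induction-loop detectors are declared in a SUMO additional file. The sketch below generates E1 (`inductionLoop`) definitions with Python's standard library; the detector IDs, lane IDs, positions, the 60 s aggregation period and the output file name are hypothetical placeholders, and real lane IDs must be taken from the generated `.net.xml`.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple


def build_loop_additional(loops: Iterable[Tuple[str, str, float]],
                          period_s: float = 60.0,
                          output_file: str = "loops_out.xml") -> str:
    """Return SUMO additional-file XML with one <inductionLoop> per (id, lane, pos) entry.

    `pos` is the detector position in metres from the start of the lane.
    """
    root = ET.Element("additional")
    for det_id, lane_id, pos_m in loops:
        ET.SubElement(root, "inductionLoop", {
            "id": det_id,
            "lane": lane_id,            # SUMO lane IDs look like "<edgeID>_<laneIndex>"
            "pos": f"{pos_m:.2f}",
            "period": f"{period_s:g}",  # aggregation interval of the detector output (s)
            "file": output_file,
        })
    return ET.tostring(root, encoding="unicode")


# Two loops on the same lane capture speed changes along one stretch of road.
print(build_loop_additional([("loop_a", "edge1_0", 15.0), ("loop_b", "edge1_0", 80.0)]))
```

The resulting XML can be saved to a file and passed to SUMO with the `--additional-files` (`-a`) option.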

3.3. AI-Driven Traffic Control

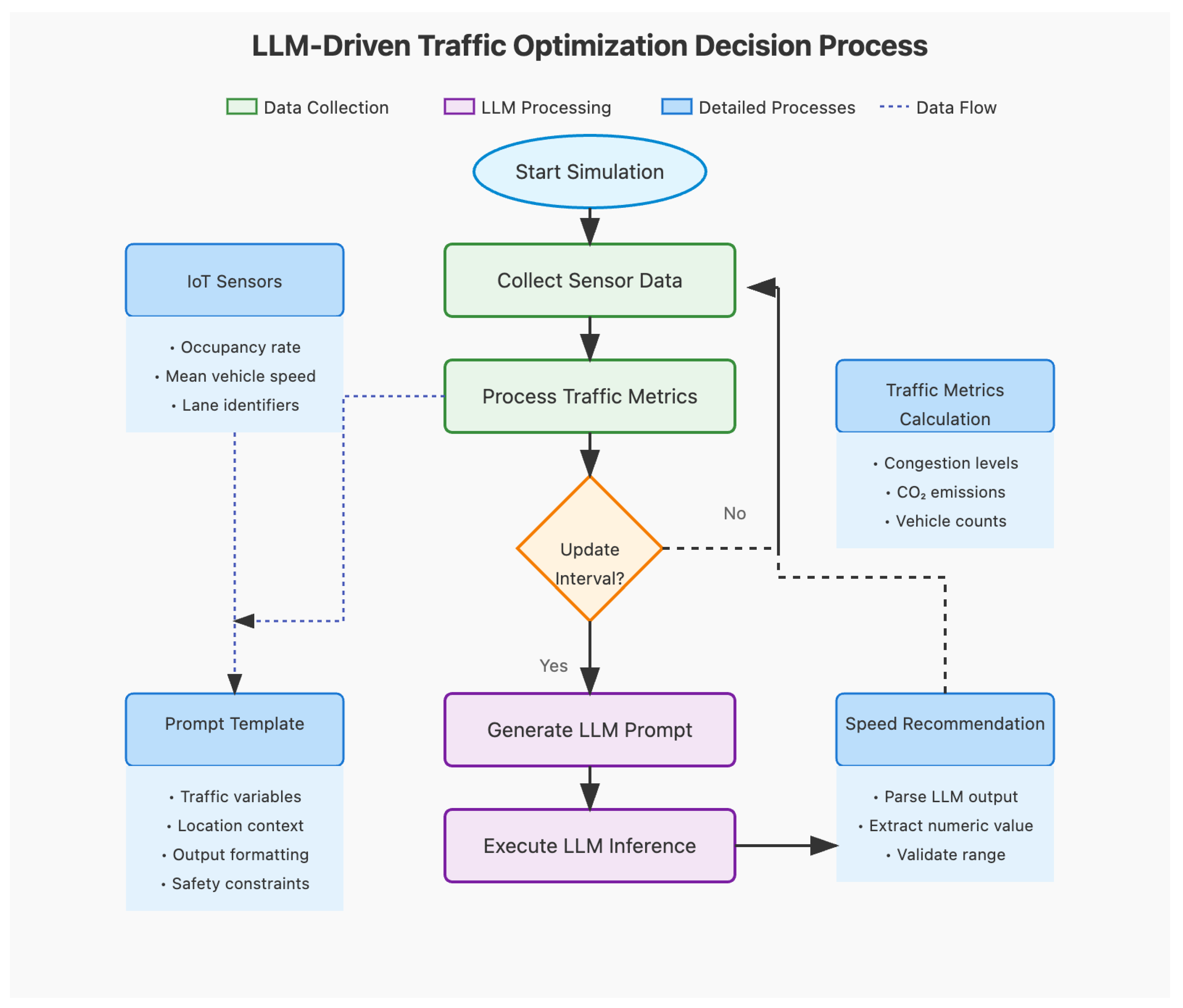

- Real-time congestion data are collected from loop detectors every simulation step.

- The traffic state vector is constructed, aggregating congestion and speed measurements.

- The LLM processes the traffic state vector and generates recommended speed adjustments for the monitored road segments.

- The recommended speeds are applied to the respective road segments, adjusting vehicle flow dynamically.

- The process repeats at fixed intervals, ensuring real-time adaptation to changing traffic conditions.

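To keep speed changes smooth between consecutive segments, the speed of the next segment is adjusted using the following quantities:

- Adjusted speed: the speed applied to the next segment.

- Recommended speed: the LLM’s recommended speed for the current segment.

- Original speed: the speed of the next segment before adjustment.

- Continuity factor: a weight (typically between 0.7 and 0.9) that controls the balance between maintaining previous speeds and applying the new AI-driven recommendation.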
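The control cycle and the speed-blending rule can be sketched as follows. Two assumptions are made explicit: the continuity factor `alpha` is taken to weight the next segment's original speed (the text does not state which term it multiplies), and state readings are collected every step and handed to the recommender in batches of `interval` steps. All function names are hypothetical, and `recommend` stands in for the LLM call.

```python
from typing import Callable, Dict, List


def blend_next_segment_speed(recommended_current: float,
                             original_next: float,
                             alpha: float = 0.8) -> float:
    """Adjusted speed for the next segment.

    Assumption: alpha is the continuity weight on the next segment's original
    speed, i.e. adjusted = alpha * original_next + (1 - alpha) * recommended_current.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * original_next + (1.0 - alpha) * recommended_current


def run_control_loop(n_steps: int, interval: int,
                     read_state: Callable[[int], Dict[str, float]],
                     recommend: Callable[[List[Dict[str, float]]], Dict[str, float]],
                     apply: Callable[[Dict[str, float]], None]) -> List[int]:
    """Collect state every step; every `interval` steps, query and apply a recommendation.

    Returns the steps at which a recommendation was requested and applied.
    """
    window: List[Dict[str, float]] = []
    queried: List[int] = []
    for step in range(n_steps):
        window.append(read_state(step))      # collected every simulation step
        if step % interval == interval - 1:  # e.g. after steps 4, 9, 14, ... for interval 5
            apply(recommend(window))         # the recommender sees the aggregated window
            window = []
            queried.append(step)
    return queried
```

With `alpha = 0.8`, a recommended 10 m/s on the current segment moves a 20 m/s downstream segment only to 18 m/s, so speed changes propagate gradually.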

3.4. LLM Prompt Example

3.5. Decision Process Flow

3.6. Simulation Setup

- Urban scenarios: Pacific Beach (San Diego), Coronado (San Diego), and Argüelles (Madrid).

- Simulation duration: 1500 steps (≈25 min).

- Vehicle count: Dynamically generated based on real-world traffic conditions.

- IoT sensors: Induction loop detectors/drones positioned at key intersections.

- AI intervention: Speed adjustments computed every 5 steps using Gemini-2.0-Flash LLM.

- Base Traffic Demand: The simulation begins with an estimated traffic demand based on historical data for each location. This includes vehicle entry rates and expected peak congestion times.

- Stochastic Variation: Vehicle arrivals are modeled as a Poisson process, ensuring a realistic distribution of cars entering the network over time.

- Route Assignment: Each vehicle is assigned a route using SUMO’s duarouter tool, which computes routes for origin–destination pairs derived from real-world road usage patterns.

- Dynamic Adaptation: The vehicle count dynamically adjusts based on congestion levels observed in the simulation. This ensures that bottlenecks and free-flow conditions emerge naturally rather than being pre-programmed.
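A Poisson arrival process can be generated by drawing exponentially distributed gaps between consecutive vehicles. This is a minimal sketch assuming a constant, hypothetical arrival rate; the 1500 s horizon matches the 1500-step simulation if SUMO's default 1 s step length is used.

```python
import random
from typing import List


def poisson_arrival_times(rate_per_s: float, horizon_s: float,
                          rng: random.Random) -> List[float]:
    """Arrival times (s) of a homogeneous Poisson process on [0, horizon_s).

    Inter-arrival gaps are independent and exponentially distributed with
    mean 1 / rate_per_s, which is what makes the arrival counts Poisson.
    """
    if rate_per_s <= 0.0:
        return []
    times: List[float] = []
    t = rng.expovariate(rate_per_s)
    while t < horizon_s:
        times.append(t)
        t += rng.expovariate(rate_per_s)
    return times


# Hypothetical demand of 0.5 veh/s over 1500 s.
arrivals = poisson_arrival_times(0.5, 1500.0, random.Random(7))
print(len(arrivals), "vehicles; expected about", 0.5 * 1500.0)
```

Peak and off-peak periods could be approximated by generating arrivals interval by interval with different rates and offsetting the times, a simple variant not described in the text.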

- Baseline (No AI intervention): Vehicles follow standard SUMO rules.

- AI-driven control: The LLM adjusts vehicle speeds based on congestion predictions.

3.7. Performance Metrics

- C represents the mean congestion level across all road segments.

- Final congestion level: the congestion level at road segment e at the end of the simulation.

- Number of segments: the total number of road segments in the network.

- T is the total simulation time.

- Total CO2 emissions: the CO2 emitted over the entire simulation duration.

- Per-vehicle emissions: the CO2 emitted (in grams) by vehicle v at time step t.

- V is the set of all vehicles in the network.

- T is the total simulation time.

- Vehicle category (e.g., passenger car, heavy-duty truck, bus).

- Instantaneous speed.

- Acceleration profile.

- Engine type and fuel consumption characteristics.

- Passenger vehicles (diesel/petrol/electric).

- Light commercial vehicles.

- Heavy-duty trucks.

- Buses.
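The two metrics and the relative reductions reported in the results can be computed as below. This is a sketch under stated assumptions: mean congestion is the average of the end-of-simulation congestion levels over all segments, total emissions are summed over time steps and vehicles, and a reduction is the relative change from the baseline run. The function names are hypothetical.

```python
from typing import Dict, Sequence


def mean_congestion(final_levels: Sequence[float]) -> float:
    """Mean congestion C: average end-of-simulation congestion level over all segments."""
    if not final_levels:
        raise ValueError("need at least one road segment")
    return sum(final_levels) / len(final_levels)


def total_co2_g(per_step: Sequence[Dict[str, float]]) -> float:
    """Total CO2 (g): for each time step, sum the grams emitted by every vehicle."""
    return sum(sum(step.values()) for step in per_step)


def reduction_pct(baseline: float, controlled: float) -> float:
    """Relative reduction of a metric versus the baseline run, in percent."""
    return 100.0 * (baseline - controlled) / baseline


print(reduction_pct(4800, 1200))  # 75.0
```

For Pacific Beach, a baseline of 4800 g and an AI-controlled total of 1200 g give a 75.0% reduction, matching the reported figure.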

3.8. Summary of Methodology

- IoT sensors collect real-time congestion and speed data.

- The LLM processes the data and generates optimal speed recommendations.

- Vehicles adjust their speeds based on AI outputs.

- Performance metrics (congestion, emissions) are recorded.

- Acceleration (a) and deceleration (b): Each vehicle follows predefined acceleration and braking parameters that determine how it responds to traffic ahead.

- Maximum speed: Each vehicle type has a maximum speed, typically set according to road regulations (e.g., 50 km/h for urban roads).

- Headway distance: Vehicles maintain a safe following distance, adapting their speeds based on surrounding traffic.

- Lane-changing behavior: The model allows vehicles to switch lanes if gaps are available, optimizing throughput based on SUMO’s built-in decision-making heuristics.

Differences Between Conventional and AI-Based Control

- Conventional Model: Vehicles operate under fixed-speed limits and react only to nearby traffic conditions. Speed adjustments occur naturally based on SUMO’s default rules, but there is no global optimization.

- AI-Based Model: The LLM dynamically suggests speed adjustments based on real-time congestion data, preventing bottlenecks and optimizing flow efficiency across multiple intersections.

4. Experimental Results

4.1. LLM-SUMO Integration Methodology

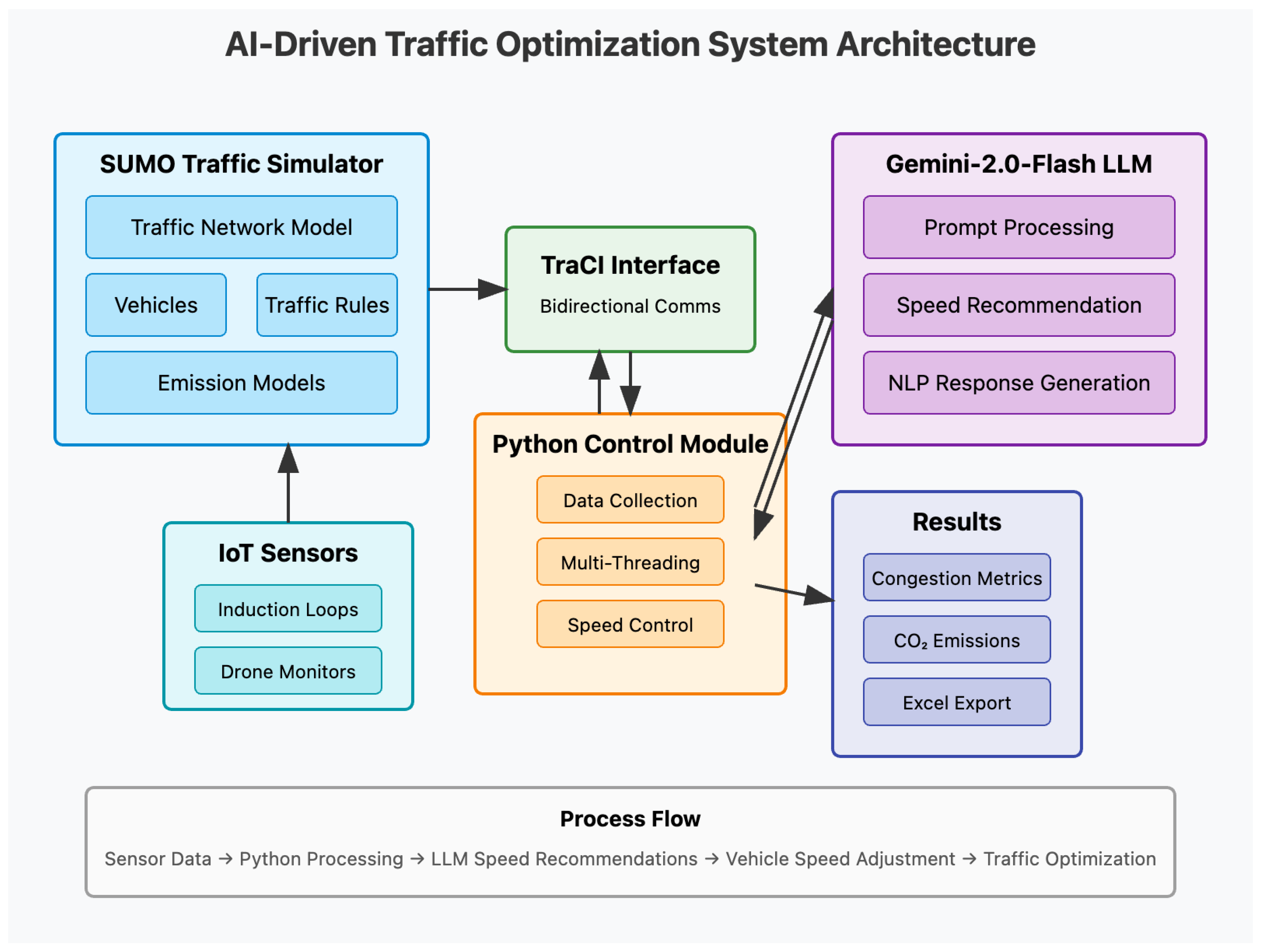

4.1.1. System Architecture

- Real-time data extraction from traffic sensors.

- Dynamic vehicle speed adjustments based on LLM recommendations.

- Continuous monitoring of congestion and emissions metrics.

4.1.2. Traffic Sensor Implementation

- Multiple sensors are positioned not only at critical intersections but also along key avenues, where they provide continuous vehicle flow data. In some cases, multiple loops are placed along a single stretch of road to capture speed variations and congestion propagation.

- Each sensor records real-time vehicle counts, mean speeds, and occupancy rates, providing a continuous rather than point-based assessment of congestion.

- A validation system ensures that only functional and properly calibrated sensors contribute data to the AI-driven optimization process.

4.1.3. LLM Configuration and Prompt Engineering

- Temperature setting of 1.0: A hyperparameter that controls the randomness of generated responses. Lower values (e.g., 0.1) make the model nearly deterministic and repetitive, while higher values (e.g., 1.5) increase variability. A value of 1.0 was chosen to balance predictable, interpretable speed adjustments against the ability to explore alternative solutions.

- Top-p (0.95) and top-k (40) parameters: These control the sampling strategy to ensure diverse yet relevant recommendations.

- Prompt engineering: The structured prompts provided to the LLM explicitly focus on congestion reduction based on real-time traffic metrics.

- Rationale for the temperature setting:

  - If set too low (e.g., 0.2), the AI would consistently repeat the most probable speed adjustment values, limiting adaptability to varying traffic patterns.

  - If set too high (e.g., 1.5), the AI might generate highly variable or inconsistent responses, reducing stability in speed control.

  - A value of 1.0 keeps speed adjustments structured while still giving the model some flexibility to explore adaptive solutions in different traffic states.
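The effect of these sampling parameters can be illustrated on a toy distribution over candidate speed values. The sketch applies a temperature-scaled softmax, then top-k and top-p (nucleus) filtering; the logits are invented, and hosted LLM APIs perform these steps internally with implementation details (such as the filtering order) that may differ.

```python
import math
from typing import Dict, List, Tuple


def sampling_distribution(logits: Dict[str, float], temperature: float = 1.0,
                          top_k: int = 40, top_p: float = 0.95) -> Dict[str, float]:
    """Token distribution after temperature scaling, top-k and top-p filtering."""
    if temperature <= 0.0 or top_k < 1:
        raise ValueError("temperature must be positive and top_k at least 1")
    # Temperature-scaled softmax; subtracting the max logit avoids overflow.
    m = max(logits.values())
    scaled = {tok: math.exp((x - m) / temperature) for tok, x in logits.items()}
    z = sum(scaled.values())
    ranked = sorted(((tok, w / z) for tok, w in scaled.items()),
                    key=lambda kv: kv[1], reverse=True)
    # Top-k: keep only the k most probable tokens, then renormalize.
    ranked = ranked[:top_k]
    zk = sum(p for _, p in ranked)
    ranked = [(tok, p / zk) for tok, p in ranked]
    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept: List[Tuple[str, float]] = []
    cum = 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cum += p
        if cum >= top_p:
            break
    zp = sum(p for _, p in kept)
    return {tok: p / zp for tok, p in kept}


# Hypothetical next-token logits for three candidate speeds (km/h).
toy = {"30": 2.0, "40": 1.0, "50": 0.0}
for t in (0.2, 1.0, 1.5):
    print(t, {k: round(v, 3) for k, v in sampling_distribution(toy, t).items()})
```

At temperature 0.2 the filters keep only the most likely value, mirroring the repetitive behaviour described above, while at 1.5 probability mass spreads more evenly than at 1.0.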

4.1.4. Multi-Threaded Real-Time Processing

- Traffic metrics are collected at regular intervals (every 5 simulation steps).

- LLM queries are processed in separate threads to avoid blocking the main simulation.

- Regular expression parsing extracts numerical speed recommendations from natural language responses.

- Speed adjustments are applied at both lane and individual vehicle levels.
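A minimal sketch of non-blocking LLM queries combined with regular-expression parsing. It assumes, hypothetically, that replies contain fragments such as `edge_12: 36 km/h` and converts km/h to m/s (the unit TraCI uses); `query_llm` stands in for the actual API call, and `AsyncAdvisor` and `parse_speeds` are illustrative names.

```python
import re
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict, Optional

# Hypothetical reply format: fragments such as "edge_12: 36 km/h".
SPEED_PATTERN = re.compile(
    r"(?P<edge>[\w#.-]+)\s*:\s*(?P<speed>\d+(?:\.\d+)?)\s*km/h", re.IGNORECASE)


def parse_speeds(reply: str) -> Dict[str, float]:
    """Extract {segment_id: speed in m/s} from a natural-language LLM reply."""
    return {m["edge"]: float(m["speed"]) / 3.6 for m in SPEED_PATTERN.finditer(reply)}


class AsyncAdvisor:
    """Runs LLM queries on a worker thread so the simulation loop never blocks."""

    def __init__(self, query_llm: Callable[[str], str]) -> None:
        self._pool = ThreadPoolExecutor(max_workers=1)
        self._query = query_llm
        self._pending: Optional[Future] = None

    def submit(self, prompt: str) -> Optional[Future]:
        """Start a query unless one is still running; return its Future, or None if busy.

        A finished reply that has not been polled yet is discarded.
        """
        if self._pending is not None and not self._pending.done():
            return None
        self._pending = self._pool.submit(self._query, prompt)
        return self._pending

    def poll(self) -> Optional[Dict[str, float]]:
        """Non-blocking: parsed speeds if the last query has finished, else None."""
        if self._pending is None or not self._pending.done():
            return None
        reply, self._pending = self._pending.result(), None
        return parse_speeds(reply)

    def close(self) -> None:
        """Release the worker thread."""
        self._pool.shutdown(wait=False)
```

In a simulation loop, `submit` would be called every 5 steps and `poll` every step, so the simulation keeps advancing while a query is in flight.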

4.1.5. Speed Adjustment Mechanism

- Extracting recommended speed values from the LLM’s natural language responses.

- Setting maximum lane speeds in affected road segments.

- Adjusting individual vehicle speeds to conform to optimized values.

- Validating vehicles before modification to prevent simulation errors.
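The lane- and vehicle-level adjustment with validation can be sketched with the TraCI Python client. The planning logic is kept separate from the TraCI calls so that it runs without SUMO; the function names are hypothetical, while `traci.edge.getIDList`, `traci.edge.getLaneNumber`, `traci.vehicle.getIDList`, `traci.vehicle.getRoadID`, `traci.lane.setMaxSpeed` and `traci.vehicle.setSpeed` are TraCI calls. Speeds are assumed to be already converted to m/s.

```python
from typing import Dict, List, Tuple


def plan_speed_updates(recommended: Dict[str, float],
                       lane_counts: Dict[str, int],
                       vehicle_edges: Dict[str, str],
                       ) -> Tuple[List[Tuple[str, float]], List[Tuple[str, float]]]:
    """Turn {edge_id: speed in m/s} into lane-level and vehicle-level updates.

    Validation: only edges known to the network (keys of `lane_counts`) and
    vehicles currently in the simulation (keys of `vehicle_edges`) are touched,
    and non-positive speeds are discarded.
    """
    valid = {e: s for e, s in recommended.items() if e in lane_counts and s > 0.0}
    lane_updates = [(f"{e}_{i}", s) for e, s in valid.items() for i in range(lane_counts[e])]
    vehicle_updates = [(v, valid[e]) for v, e in vehicle_edges.items() if e in valid]
    return lane_updates, vehicle_updates


def apply_with_traci(recommended: Dict[str, float]) -> int:
    """Apply the plan to a running SUMO instance; return the number of vehicles updated."""
    import traci  # requires SUMO's Python tools; imported here so the planner runs without SUMO

    network_edges = set(traci.edge.getIDList())
    lane_counts = {e: traci.edge.getLaneNumber(e) for e in recommended if e in network_edges}
    vehicle_edges = {v: traci.vehicle.getRoadID(v) for v in traci.vehicle.getIDList()}
    lane_updates, vehicle_updates = plan_speed_updates(recommended, lane_counts, vehicle_edges)
    for lane_id, speed in lane_updates:
        traci.lane.setMaxSpeed(lane_id, speed)
    for veh_id, speed in vehicle_updates:
        traci.vehicle.setSpeed(veh_id, speed)
    return len(vehicle_updates)
```

Note that `traci.vehicle.setSpeed` keeps a vehicle at the given speed until control is handed back to SUMO by calling it with -1, whereas a lane maximum speed only caps the speeds chosen by the car-following model.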

4.1.6. Data Collection for Analysis

- Per-step congestion metrics across all sensors.

- Cumulative CO2 emissions calculated from individual vehicle outputs.

- Vehicle count and flow rates at key intersections.

- LLM response patterns and recommendation effectiveness.
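Per-step collection of detector readings and emissions can be sketched with TraCI as follows. `collect_step_metrics` and `step_co2_grams` are hypothetical names; the unit handling assumes current SUMO behaviour, in which `traci.vehicle.getCO2Emission` returns mg/s and `traci.simulation.getDeltaT` returns the step length in seconds.

```python
from typing import Dict, Iterable


def step_co2_grams(rates_mg_per_s: Iterable[float], step_length_s: float = 1.0) -> float:
    """CO2 (g) emitted during one step, from per-vehicle rates in mg/s."""
    return sum(rates_mg_per_s) * step_length_s / 1000.0


def collect_step_metrics() -> Dict[str, object]:
    """Snapshot of detector readings and this step's CO2 from a running SUMO instance."""
    import traci  # requires SUMO's Python tools

    loops = {
        loop_id: {
            "vehicles": traci.inductionloop.getLastStepVehicleNumber(loop_id),
            "mean_speed_mps": traci.inductionloop.getLastStepMeanSpeed(loop_id),
            "occupancy_pct": traci.inductionloop.getLastStepOccupancy(loop_id),
        }
        for loop_id in traci.inductionloop.getIDList()
    }
    rates = [traci.vehicle.getCO2Emission(v) for v in traci.vehicle.getIDList()]
    return {"loops": loops, "co2_g": step_co2_grams(rates, traci.simulation.getDeltaT())}
```

Summing `co2_g` over all steps gives the cumulative emissions, while the loop readings feed the congestion metrics.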

4.2. Traffic Congestion Analysis

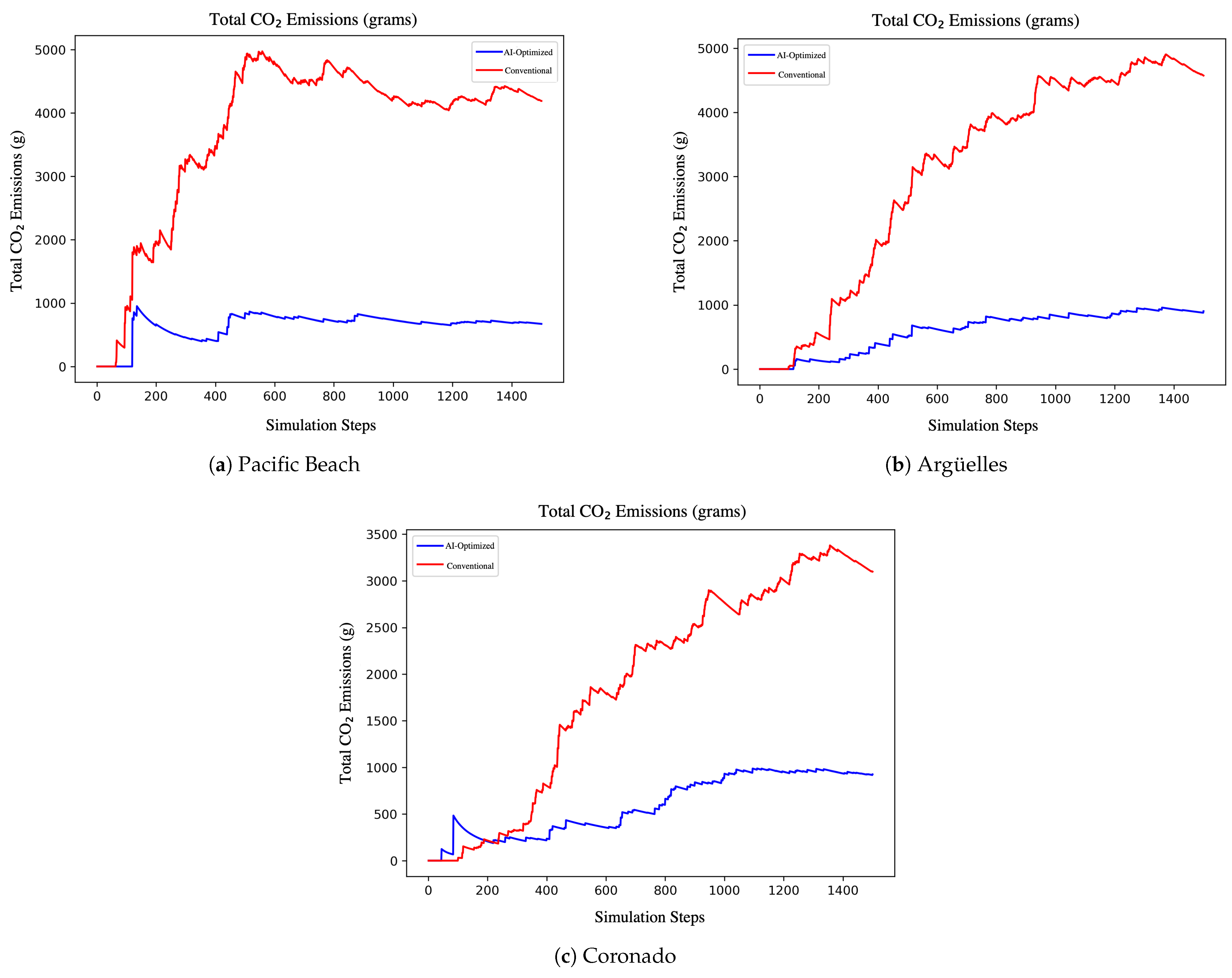

4.3. CO2 Emission Analysis

4.4. Summary of Findings

- Traffic congestion reduction: AI-driven traffic control reduces congestion by up to 82.2%.

- CO2 emission reduction: AI-controlled optimization achieves a maximum reduction of 75.0% in emissions.

- All three urban scenarios benefit significantly from real-time AI traffic optimization.

5. Computational Costs and Scalability Analysis

5.1. Computational Complexity of LLM Inference

- Input sequence length: the number of tokens in the prompt.

- Hidden dimension: the hidden dimension size of the model.

- P is the number of model parameters.
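The complexity expression itself is not reproduced in this section, so the sketch below uses a widely used approximation as an assumption: about 2P FLOPs per prompt token for the weight matrices, plus an attention term that grows with the square of the sequence length n and with the hidden dimension d. The layer count `n_layers` is an extra, hypothetical parameter, and the model sizes in the example are illustrative rather than Gemini-2.0-Flash's actual configuration.

```python
def prefill_flops(n_tokens: int, hidden_dim: int, n_params: float, n_layers: int) -> float:
    """Approximate forward-pass FLOPs for processing a prompt of n_tokens.

    dense:     ~2 FLOPs per parameter per token (one multiply and one add).
    attention: ~2 * n_layers * hidden_dim FLOPs per token per context token,
               which summed over the prompt grows with n_tokens ** 2.
    """
    dense = 2.0 * n_params * n_tokens
    attention = 2.0 * n_layers * hidden_dim * n_tokens ** 2
    return dense + attention


# Hypothetical: 10B parameters, 40 layers, hidden size 4096, 500-token prompt.
total = prefill_flops(500, 4096, 10e9, 40)
print(f"{total:.3e} FLOPs, attention share {2.0 * 40 * 4096 * 500**2 / total:.1%}")
```

For short prompts the dense term dominates, so under this approximation inference cost grows roughly linearly with prompt length.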

5.2. Memory Requirements

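- Model parameters: Stored in GPU VRAM or RAM as 16-bit floating point (FP16), i.e., 2 bytes per parameter. For a 10-billion-parameter model, this amounts to $10^{10} \times 2\ \text{bytes} = 20\ \text{GB}$.

- Intermediate activations: Stored during inference; their memory grows with the number of prompt tokens and the hidden dimension size, which together determine the activation memory required per query.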
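Weight memory follows directly from parameter count and precision. For per-query memory, the sketch uses the key/value cache as a stand-in, which is an assumption since the exact activation expression is not reproduced here; it further assumes standard multi-head attention and illustrative model dimensions. The function names are hypothetical.

```python
def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Memory for model weights in GB (10**9 bytes)."""
    return n_params * bytes_per_param / 1e9


def kv_cache_gb(n_tokens: int, hidden_dim: int, n_layers: int,
                bytes_per_value: float = 2.0) -> float:
    """Keys and values cached per query: 2 tensors x layers x tokens x hidden size."""
    return 2 * n_layers * n_tokens * hidden_dim * bytes_per_value / 1e9


print(weight_memory_gb(10e9, 2))   # FP16
print(weight_memory_gb(10e9, 1))   # INT8: half of the FP16 figure
print(kv_cache_gb(500, 4096, 40))  # hypothetical prompt length, width and depth
```

The 10-billion-parameter example gives 20 GB (about 18.6 GiB) in FP16 and 10 GB in INT8.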

5.3. Inference Latency and Processing Time

5.4. Computational Scalability

- SUMO baseline term: accounts for SUMO’s baseline computational cost.

- Vehicle update term: captures vehicle state updates.

- Inference term: the AI model’s inference cost.

5.5. Comparison to Alternative Methods

5.6. Optimizations for Deployment

- Quantization: Converting model weights from FP16 to INT8 halves the memory required for parameters (a 50% reduction).

- Knowledge distillation: Compressing the LLM into a smaller, task-specific model decreases inference time.

- Edge computing: Deploying inference on traffic control edge devices minimizes latency.

5.7. Inference via API: Cloud-Based Computation

- API Response Latency: Time taken for request-response cycles.

- Network Transmission Overhead: Latency due to sending traffic data and receiving responses.

6. Discussion

6.1. Implications for Urban Traffic Management

- A reduction in traffic congestion by up to 82.2% (Argüelles).

- A decrease in CO2 emissions by up to 75.0% (Pacific Beach).

6.2. Comparison with Traditional Traffic Control Methods

- Fixed-timing traffic signals, which are unable to respond to real-time congestion.

- Rule-based adaptive control, which requires extensive manual configuration.

- Reinforcement-learning (RL)-based optimization, which often lacks generalizability across different urban environments.

6.3. Scalability and Real-World Deployment Challenges

- Latency in real-time AI inference: The computational overhead of LLM inference must be optimized for deployment in edge computing environments.

- Sensor reliability and accuracy: IoT sensors and drones must consistently provide accurate congestion and velocity data to maintain intervention effectiveness.

- Traffic rule compliance: Adjusting vehicle speeds must align with local traffic regulations to ensure road safety.

6.4. Generalization Across Urban Environments

- High-density metropolitan areas, where congestion patterns are more unpredictable.

- Highway traffic optimization, where speed recommendations must account for lane-changing behavior.

- Multi-modal transportation, integrating pedestrian and public transit flow into AI-based optimization.

6.5. Potential of UAV-Assisted Traffic Optimization

- High-resolution, aerial congestion mapping beyond fixed sensor locations.

- Enhanced real-time traffic modeling by tracking vehicle trajectories at a network level.

- Improved AI decision-making through additional data features, including lane-wise vehicle distributions and intersection queue lengths.

6.6. Ethical and Environmental Considerations

- Algorithmic bias: The AI model must ensure fair traffic optimization across all neighborhoods, avoiding disproportionate advantages or disadvantages to specific areas.

- Privacy concerns: The integration of drone-based traffic monitoring necessitates robust data protection measures to prevent misuse of real-time traffic surveillance.

- Energy consumption of AI models: While reducing congestion lowers fuel consumption, LLM inference itself has a computational footprint that must be optimized for sustainability.

6.7. Future Research Directions

- Hybrid AI models: Combining LLM-based decision-making with Reinforcement Learning (RL) could enhance adaptability in highly dynamic environments.

- Autonomous vehicle coordination: Extending AI-driven speed recommendations to autonomous vehicle fleets to create a fully AI-coordinated traffic network.

- Human-in-the-loop AI optimization: Allowing traffic engineers to interact with the AI system, refining speed recommendations based on human expertise.

- Real-world pilot studies: Deploying a scaled version of the proposed framework in live traffic settings to validate its feasibility beyond simulations.

7. Conclusions

- Novel AI-driven traffic control framework: A real-time traffic optimization system combining LLMs, IoT sensors, and drone-based monitoring.

- Significant reduction in congestion and emissions: The proposed method achieves up to 82.2% congestion reduction and 75.0% CO2 emission reduction.

- Dynamic speed adjustment mechanism: AI-generated recommendations optimize vehicle speeds in response to real-time congestion patterns.

- Scalability and generalization analysis: The framework is evaluated in three diverse urban scenarios, demonstrating its potential for adaptation in different environments.

- Discussion of ethical and deployment challenges: The study highlights potential biases, privacy concerns, and computational constraints that must be addressed for real-world implementation.

- Cities can reduce traffic congestion and emissions without expensive infrastructure modifications.

- AI-driven decision-making enables real-time, adaptive interventions that traditional traffic control systems lack.

- Drone-based monitoring could facilitate scalable, autonomous traffic management across metropolitan areas.

- Real-world deployment feasibility: Ensuring low-latency AI inference and reliable sensor data collection.

- Integration with autonomous vehicles: Extending AI-driven traffic control to coordinate with autonomous vehicle fleets.

- Multi-modal transportation optimization: Incorporating pedestrian flow, public transit, and emergency vehicles into AI-based traffic management.

- AI interpretability and safety: Ensuring that AI-generated speed recommendations align with real-world traffic regulations and human driver behavior.

- Pilot implementation studies: Deploying the proposed framework in real-world urban environments to validate its scalability and effectiveness.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| LLMs | Large Language Models |
| VLMs | Visual Language Models |
| SUMO | Simulation of Urban MObility |
| UAVs | Unmanned Aerial Vehicles |
| CAVs | Connected and Autonomous Vehicles |
| CO2 | Carbon Dioxide |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| RL | Reinforcement Learning |
| ITS | Intelligent Transportation Systems |
| IoT | Internet of Things |
| AIoT | Artificial Intelligence of Things |
| OSM | OpenStreetMap |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| TraCI | Traffic Control Interface |
| OD | Origin–Destination |
| V2X | Vehicle-to-Everything |


| Scenario | Baseline congestion (%) | AI-controlled congestion (%) | Reduction (%) |
|---|---|---|---|
| Pacific Beach | 10.8 | 2.1 | 80.6 |
| Argüelles | 12.4 | 2.2 | 82.2 |
| Coronado | 9.8 | 3.7 | 62.2 |

| Scenario | Baseline CO2 (g) | AI-controlled CO2 (g) | Reduction (%) |
|---|---|---|---|
| Pacific Beach | 4800 | 1200 | 75.0 |
| Argüelles | 5000 | 1300 | 74.0 |
| Coronado | 3400 | 1450 | 57.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moraga, Á.; de Curtò, J.; de Zarzà, I.; Calafate, C.T. AI-Driven UAV and IoT Traffic Optimization: Large Language Models for Congestion and Emission Reduction in Smart Cities. Drones 2025, 9, 248. https://doi.org/10.3390/drones9040248
