Infrared and Visible Image Fusion Techniques for UAVs: A Comprehensive Review

Highlights

- Identifies UAV-specific gaps in current IR–VIS fusion, including issues with small/fast targets, misalignment, and data bias.

- Advocates for task-specific metrics and lightweight, alignment-aware fusion methods for resource-constrained UAV platforms.

- Promotes task-guided fusion approaches to improve detection, segmentation, and tracking robustness in real-world UAV applications.

- Underscores the need for UAV-specific benchmarks and cross-modal robustness to advance the field.

Abstract

1. Introduction

- UAV-Centric Fused-Map Taxonomy: To the best of our knowledge, this is the first UAV-oriented survey of IR–VIS fusion. We introduce a fused-map perspective and a unified taxonomy that link data compatibility, fusion mechanisms, and task adaptivity across tasks including detection and segmentation.

- Systematic Analysis: We provide a structured synthesis of learning-based fused-map methods across AE/CNN/GAN/Transformer families, comparing architectural primitives, cross-spectral interaction strategies, and loss designs.

- Research Directions: We offer evaluation guidance (datasets, metrics) and deployment recommendations under power, bandwidth, and latency constraints, and outline concrete directions including misregistration-aware and hardware-efficient fusion, robustness to cross-modality perturbations, scalable UAV benchmarks beyond urban scenes, and task-aligned metrics.

1.1. Scope

1.2. Organization

2. Methods for IVIF on UAV Platforms

2.1. Visual Enhancement Oriented Fusion

2.1.1. AE-Based Approaches

2.1.2. CNN-Based Approaches

2.1.3. GAN-Based Approaches

2.1.4. Transformer-Based Approaches

2.1.5. Other Approaches

2.2. Task-Driven Fusion for UAV Perception

2.3. Summary and Discussion

2.3.1. Architectures

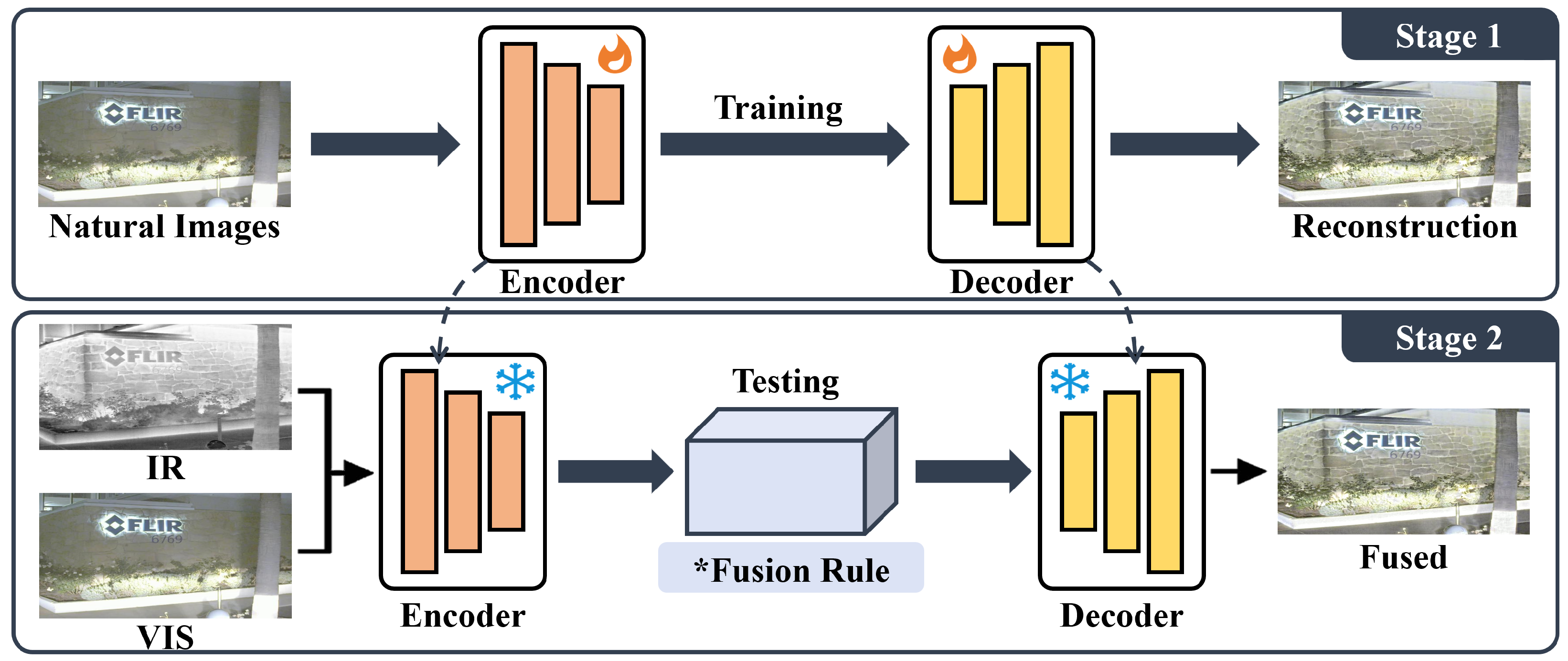

- Autoencoder-based Encoder–Decoder Models: Early works [23,24,25] adopt a three-stage encoder–fusion–decoder framework. Features are extracted via CNN encoders, fused through simple addition or attention strategies, and reconstructed by decoders. These models are straightforward but limited in capturing long-range dependencies.
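To make the fusion stage of this encoder–fusion–decoder pipeline concrete, the sketch below shows two fusion rules that would sit between a (not shown) encoder and decoder. The first is element-wise addition. The second is an activity-level weighting in which each modality's contribution at a pixel is proportional to the L1 norm of its feature vector across channels. This is a minimal illustration under stated assumptions, not the implementation of any cited method: feature maps are plain nested lists, the weighting omits the block-based averaging of activity maps used in DenseFuse's l1-norm strategy [23], and `add_fusion`, `activity_map`, and `l1_weighted_fusion` are hypothetical names.

```python
from typing import List

# A feature map is stored as channels x height x width nested lists.
FeatureMap = List[List[List[float]]]


def add_fusion(f_ir: FeatureMap, f_vis: FeatureMap) -> FeatureMap:
    """Element-wise addition of the two modalities' encoder features."""
    return [
        [[a + b for a, b in zip(row_ir, row_vis)] for row_ir, row_vis in zip(ch_ir, ch_vis)]
        for ch_ir, ch_vis in zip(f_ir, f_vis)
    ]


def activity_map(f: FeatureMap) -> List[List[float]]:
    """Per-pixel activity level: L1 norm of the feature vector across channels."""
    height, width = len(f[0]), len(f[0][0])
    return [[sum(abs(ch[y][x]) for ch in f) for x in range(width)] for y in range(height)]


def l1_weighted_fusion(f_ir: FeatureMap, f_vis: FeatureMap, eps: float = 1e-8) -> FeatureMap:
    """Weight each modality per pixel by its share of the total activity."""
    a_ir, a_vis = activity_map(f_ir), activity_map(f_vis)
    fused = []
    for ch_ir, ch_vis in zip(f_ir, f_vis):
        channel = []
        for y, (row_ir, row_vis) in enumerate(zip(ch_ir, ch_vis)):
            row = []
            for x, (v_ir, v_vis) in enumerate(zip(row_ir, row_vis)):
                total = a_ir[y][x] + a_vis[y][x] + eps  # eps avoids 0/0 on all-zero pixels
                row.append((a_ir[y][x] * v_ir + a_vis[y][x] * v_vis) / total)
            channel.append(row)
        fused.append(channel)
    return fused
```

With `f_ir = [[[3.0]]]` and `f_vis = [[[1.0]]]`, addition yields 4.0, while the activity-weighted rule yields approximately 2.5 because the IR feature receives weight 0.75. Addition preserves total activation; activity weighting keeps the fused response within the range of the inputs.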

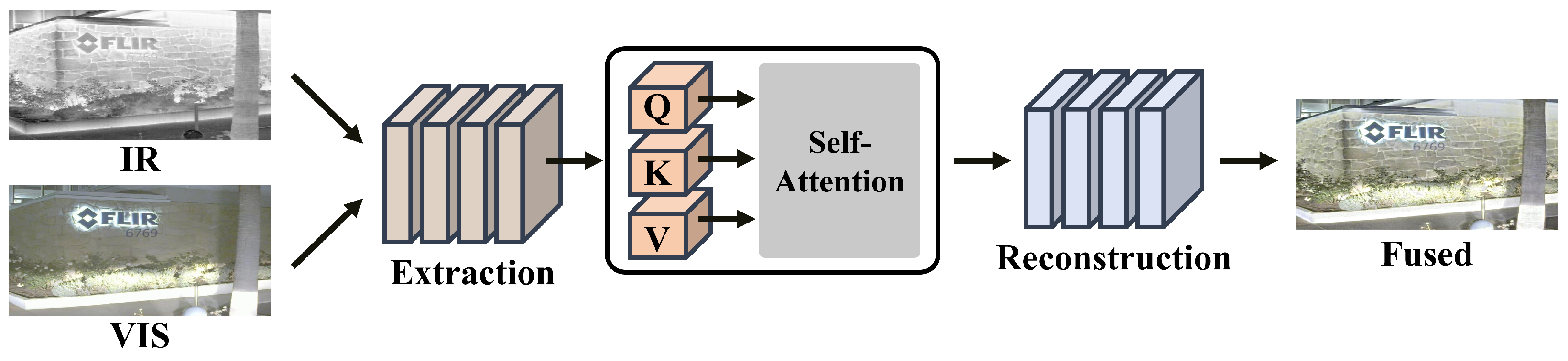

- Transformer-driven Fusion Networks: Recent advances leverage Transformers for global modeling. CDDFuse [54] combines Lite Transformer blocks with an invertible neural network (INN) to decompose features into low- and high-frequency components; SwinFusion [51] and IFT [50] adopt shifted-window attention and spatio-transformer modules, respectively, to capture both local and global context; YDTR [52] builds on dynamic Transformer units, while CrossFuse [57] exploits cross-modal attention. These methods improve semantic consistency and long-range interaction and have become a mainstream paradigm.

- Semantics- and Language-guided Fusion: Emerging methods integrate high-level semantics or textual prompts. PromptFusion [58] and Text-IF [56] use CLIP-based semantic prompts to guide fusion; SMR-Net [44] introduces saliency detection as auxiliary supervision; MDDPFuse [59] injects semantic priors and HaIVFusion [43] adds a haze-recovery module, both aimed at improving robustness in complex scenes such as those encountered by UAVs. This reflects a broader shift in IVIF from pure visual enhancement toward semantics-driven integration.

- Cross-modal Registration and Task-driven Fusion: Beyond generic fusion, some UAV-focused methods integrate registration or downstream tasks. MulFS-CAP [108] performs cross-modal alignment before fusion, the Collaborative Fusion and Registration framework [109] jointly optimizes registration and fusion, and COMO [64] and Fusion-Mamba [65] embed fusion directly into detection pipelines. These works highlight the necessity of coupling fusion with UAV-specific applications.
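Several of the Transformer-based and detection-oriented designs above rely on cross-modal attention, in which tokens from one modality query the other. The following is a minimal single-head sketch, assuming spatially aligned IR and VIS token sequences of equal length. Learned query/key/value projections, multi-head splitting, and positional encodings, which practical Transformer blocks include, are deliberately omitted, so this is not the implementation of CrossFuse [57] or any other cited network; `cross_attention` and `bidirectional_cross_fusion` are hypothetical helpers.

```python
import math
from typing import List

Tokens = List[List[float]]  # N tokens, each a d-dimensional feature vector


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Tokens, keys: Tokens, values: Tokens) -> Tokens:
    """Single-head scaled dot-product attention: each query token aggregates the
    other modality's value tokens, weighted by query-key similarity."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        weights = softmax([scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def bidirectional_cross_fusion(ir: Tokens, vis: Tokens) -> Tokens:
    """IR queries VIS and VIS queries IR; each stream keeps a residual connection,
    and the two enriched streams are averaged token by token."""
    ir_ctx = cross_attention(ir, vis, vis)
    vis_ctx = cross_attention(vis, ir, ir)
    fused = []
    for t_ir, c_ir, t_vis, c_vis in zip(ir, ir_ctx, vis, vis_ctx):
        fused.append([0.5 * ((a + b) + (c + d)) for a, b, c, d in zip(t_ir, c_ir, t_vis, c_vis)])
    return fused
```

Because the softmax weights sum to one, a query attending to a single key simply copies that key's value; with several keys the output is a similarity-weighted average of the other modality's tokens. The residual connections keep each modality's own information in the fused token.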

2.3.2. Loss Function

- Reconstruction and Similarity Losses: Most autoencoder-based methods, such as DenseFuse [23], RFN-Nest [26], and SEDRFuse [25], combine pixel-level losses (L2/L1) with a structural similarity (SSIM) term. DIDFuse [24] additionally introduces gradient-consistency constraints to enhance edge preservation.

- Frequency- and Gradient-based Constraints: To better retain fine details, some methods add gradient or frequency terms to the loss. Examples include the texture (gradient) loss in SwinFusion [51] and the wavelet/frequency consistency constraints in FISCNet [40]. These designs improve detail sharpness and texture fidelity.

- Semantic- and Task-guided Losses: Semantic-driven approaches often incorporate semantic segmentation or saliency supervision. For example, MDDPFuse [59] introduces semantic injection losses, SMR-Net [44] adopts saliency detection losses, and Text-IF [56] uses semantic modulation losses. Such designs aim to preserve visual quality while improving performance on downstream tasks.
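The loss families above are usually combined as a weighted sum. The sketch below illustrates one such combination on small grayscale images stored as nested lists: an autoencoder reconstruction objective (pixel MSE plus 1 - SSIM), a texture term that pulls the fused Sobel gradients toward the element-wise maximum of the source gradients (modeled on the texture loss described for SwinFusion [51]), an intensity term, and an optional per-pixel cross-entropy standing in for a downstream segmentation loss. Several choices are assumptions rather than any cited method's exact formulation: SSIM is computed over a single global window instead of sliding Gaussian windows, the intensity target is assumed to be the per-pixel maximum of the two sources, and the weights `w_text` and `w_task` as well as all function names are illustrative placeholders.

```python
import math
from typing import List

Image = List[List[float]]  # single-channel image, intensities in [0, 1]


def _mean(img: Image) -> float:
    return sum(sum(row) for row in img) / (len(img) * len(img[0]))


def global_ssim(a: Image, b: Image, c1: float = 0.01 ** 2, c2: float = 0.03 ** 2) -> float:
    """SSIM evaluated over one global window (a simplification of windowed SSIM)."""
    mu_a, mu_b = _mean(a), _mean(b)
    pairs = [(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    n = len(pairs)
    var_a = sum((x - mu_a) ** 2 for x, _ in pairs) / n
    var_b = sum((y - mu_b) ** 2 for _, y in pairs) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in pairs) / n
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))


def reconstruction_loss(output: Image, target: Image, lam: float = 1.0) -> float:
    """Autoencoder objective: pixel MSE + lam * (1 - SSIM)."""
    mse = _mean([[(o - t) ** 2 for o, t in zip(ro, rt)] for ro, rt in zip(output, target)])
    return mse + lam * (1.0 - global_ssim(output, target))


def sobel_magnitude(img: Image) -> Image:
    """|Gx| + |Gy| with 3x3 Sobel kernels and replicate padding at the borders."""
    h, w = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            gx = (px(y - 1, x + 1) + 2 * px(y, x + 1) + px(y + 1, x + 1)) - (
                px(y - 1, x - 1) + 2 * px(y, x - 1) + px(y + 1, x - 1))
            gy = (px(y + 1, x - 1) + 2 * px(y + 1, x) + px(y + 1, x + 1)) - (
                px(y - 1, x - 1) + 2 * px(y - 1, x) + px(y - 1, x + 1))
            row.append(abs(gx) + abs(gy))
        out.append(row)
    return out


def texture_loss(fused: Image, ir: Image, vis: Image) -> float:
    """L1 distance between fused gradients and the element-wise max of source gradients."""
    gf, gi, gv = sobel_magnitude(fused), sobel_magnitude(ir), sobel_magnitude(vis)
    return _mean([[abs(f - max(i, v)) for f, i, v in zip(rf, ri, rv)]
                  for rf, ri, rv in zip(gf, gi, gv)])


def pixel_cross_entropy(logits: List[List[List[float]]], labels: List[List[int]]) -> float:
    """Mean per-pixel cross-entropy; logits[y][x] holds one score per class."""
    losses = []
    for lrow, trow in zip(logits, labels):
        for scores, cls in zip(lrow, trow):
            m = max(scores)
            log_z = m + math.log(sum(math.exp(s - m) for s in scores))
            losses.append(log_z - scores[cls])
    return sum(losses) / len(losses)


def fusion_loss(fused, ir, vis, seg_logits=None, seg_labels=None,
                w_text: float = 1.0, w_task: float = 0.1) -> float:
    """Weighted sum of intensity, texture, and (optional) task terms."""
    target = [[max(i, v) for i, v in zip(ri, rv)] for ri, rv in zip(ir, vis)]
    l_int = _mean([[abs(f - t) for f, t in zip(rf, rt)] for rf, rt in zip(fused, target)])
    total = l_int + w_text * texture_loss(fused, ir, vis)
    if seg_logits is not None:
        total += w_task * pixel_cross_entropy(seg_logits, seg_labels)
    return total
```

In practice these terms are computed on tensors in a deep-learning framework so that they are differentiable end to end; the pure-Python version only exposes the arithmetic behind each term.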

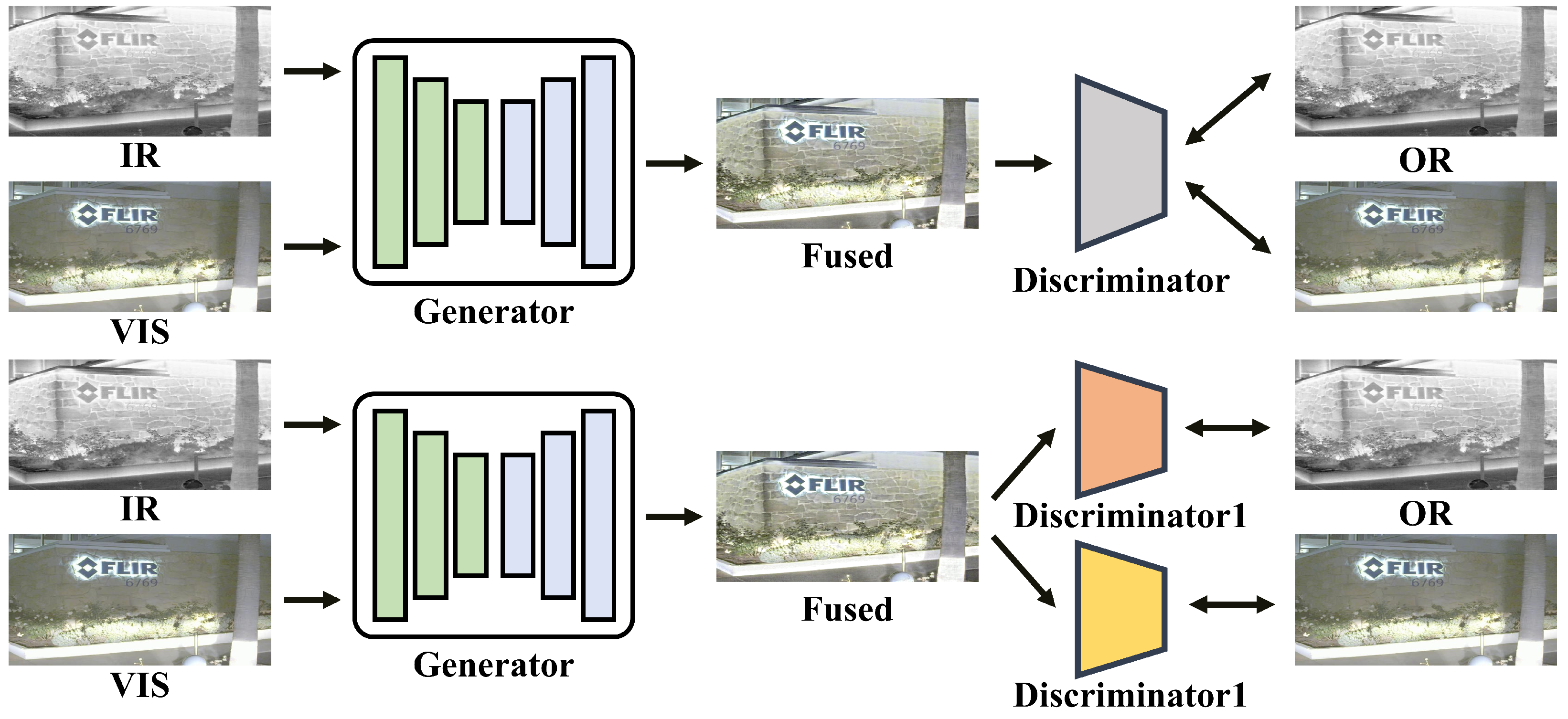

- Adversarial and Feature-consistency Losses: GAN-based methods typically include adversarial objectives. For instance, TGFuse [53] employs feature differences from discriminators, while IG-GAN [49], AT-GAN [48], and SDDGAN [46] leverage dual discriminators or region-specific supervision. These objectives are often combined with content, edge, or compatibility constraints so that fused images match the distributions of both modalities while remaining visually sharp.

- Alignment and Correlation Losses: To address cross-modal alignment, some works introduce explicit correlation losses. For example, CDDFuse [54] applies correlation-driven decomposition to enforce low-frequency sharing and high-frequency decorrelation; MulFS-CAP [108] employs relative/absolute local correlation losses; and Collaborative Fusion and Registration [109] incorporates cyclic consistency and smoothness regularization in the registration stage. These designs improve spatial alignment and modality disentanglement in fused results.
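Two of the objectives above can be written compactly. The sketch shows (i) a correlation-driven decomposition loss in the spirit of CDDFuse [54], which is small when the high-frequency (detail) features of the two modalities are decorrelated and their low-frequency (base) features are correlated, and (ii) a generator-side adversarial term for a dual-discriminator GAN. Assumptions: features are flattened to 1-D lists; the decomposition loss takes the form CC_detail^2 / (CC_base + offset), with an offset of 1.01 chosen here so the denominator stays positive for any correlation in [-1, 1]; and the adversarial term uses a least-squares formulation, whereas the cited GAN methods differ in discriminator inputs, targets, and weighting. All function names are hypothetical.

```python
import math
from typing import Sequence


def pearson_cc(a: Sequence[float], b: Sequence[float], eps: float = 1e-12) -> float:
    """Pearson correlation coefficient between two flattened feature maps."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (math.sqrt(var_a * var_b) + eps)


def decomposition_loss(base_ir, base_vis, detail_ir, detail_vis, offset: float = 1.01) -> float:
    """CC_detail^2 / (CC_base + offset): low when detail features are decorrelated
    across modalities and base features are correlated."""
    cc_detail = pearson_cc(detail_ir, detail_vis)
    cc_base = pearson_cc(base_ir, base_vis)
    return cc_detail ** 2 / (cc_base + offset)


def lsgan_generator_loss(d_ir_scores: Sequence[float], d_vis_scores: Sequence[float]) -> float:
    """Generator term for two least-squares discriminators: push both
    discriminators' scores on the fused image toward the 'real' label 1."""
    l_ir = sum((s - 1.0) ** 2 for s in d_ir_scores) / len(d_ir_scores)
    l_vis = sum((s - 1.0) ** 2 for s in d_vis_scores) / len(d_vis_scores)
    return l_ir + l_vis
```

Identical detail features (correlation 1) paired with perfectly correlated base features give a decomposition loss of about 1/2.01, roughly 0.50, whereas uncorrelated detail features drive it to zero regardless of the base term.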

3. Data Compatibility

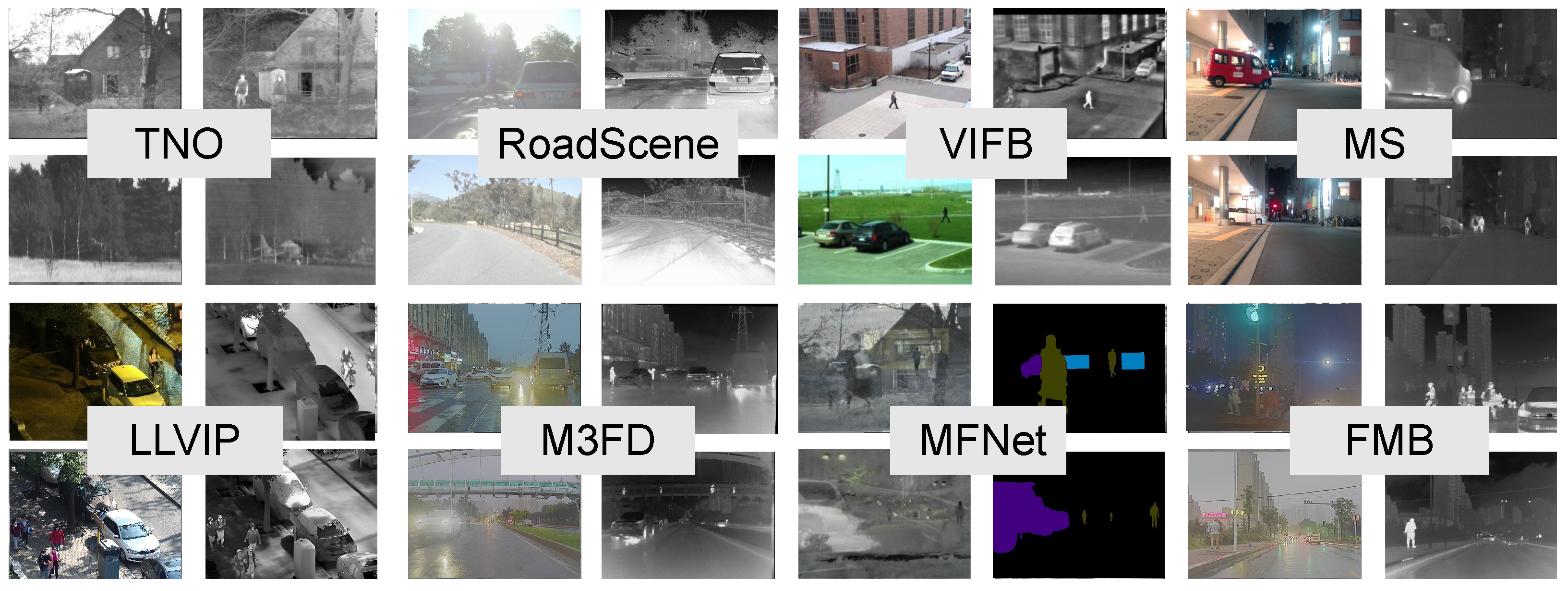

| Dataset | Image Pairs | Resolution | Camera Angle | Nighttime Pairs | Challenge Scenes |
|---|---|---|---|---|---|
| TNO [113] | 261 | | horizontal | 65 | ✓ |
| RoadScene [17] | 221 | Various | driving | 122 | × |
| VIFB [114] | 21 | Various | multiple | 10 | × |
| MS [115] | 2999 | | driving | 1139 | × |
| LLVIP [116] | 16,836 | | surveillance | all | × |
| M3FD [117] | 4200 | | multiple | 1671 | ✓ |
| MFNet [118] | 1569 | | driving | 749 | × |
| FMB [119] | 1500 | | multiple | 826 | × |
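Because the datasets in the table differ in size by nearly three orders of magnitude, raw nighttime counts are hard to compare directly. Normalizing the nighttime entries by the number of image pairs gives a rough measure of low-light coverage. The sketch below assumes that the nighttime column counts image pairs and reads LLVIP's "all" as 100%; the dictionary simply transcribes the table, and `nighttime_fraction` and `rank_by_nighttime` are hypothetical helpers.

```python
from typing import Dict, List, Optional, Tuple

# (image pairs, nighttime pairs) per dataset, transcribed from the table;
# None marks LLVIP, whose nighttime entry is "all".
DATASETS: Dict[str, Tuple[int, Optional[int]]] = {
    "TNO": (261, 65),
    "RoadScene": (221, 122),
    "VIFB": (21, 10),
    "MS": (2999, 1139),
    "LLVIP": (16836, None),
    "M3FD": (4200, 1671),
    "MFNet": (1569, 749),
    "FMB": (1500, 826),
}


def nighttime_fraction(pairs: int, night: Optional[int]) -> float:
    """Share of nighttime pairs; None is read as 'every pair is nighttime'."""
    return 1.0 if night is None else night / pairs


def rank_by_nighttime(datasets: Dict[str, Tuple[int, Optional[int]]]) -> List[Tuple[str, float]]:
    """Return (name, fraction) tuples sorted from most to least nighttime coverage."""
    rows = [(name, nighttime_fraction(p, n)) for name, (p, n) in datasets.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for name, frac in rank_by_nighttime(DATASETS):
        print(f"{name:<10} {frac:6.1%}")
```

Under this reading, RoadScene and FMB both have slightly more than 55% nighttime pairs after LLVIP, while TNO has the lowest share at about 25%.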

3.1. Registration-Free

3.2. General

4. Benchmark & Evaluation Metric

4.1. Benchmark

4.2. Evaluation Metric

4.3. Limitations of Existing Metrics for UAV-Oriented IVIF

5. Performance Summary and Analysis

5.1. Qualitative Evaluation

5.2. Quantitative Evaluation

6. Future Perspectives and Open Problems

6.1. Developing Benchmarks

- Constructing UAV-specific datasets with explicit annotations of cross-modal registration errors to simulate sensor misalignment and dynamic in-flight variations;

- Expanding scene diversity to include extreme weather (fog, rain, snow), challenging illumination (backlight, low-light), and multi-task UAV missions (search and rescue, inspection, environmental monitoring);

- Incorporating high-level task labels to promote IVIF applications in detection, segmentation, and multi-object tracking;

- Establishing a unified, open, and extensible evaluation platform that integrates both subjective and objective measures, enabling more representative and practical benchmarks for UAV fusion research across academia and industry.
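A simple way to obtain registration-error annotations is to perturb well-aligned pairs synthetically and record the applied offset as ground truth. The sketch below applies a random integer translation to the IR image and returns the offset alongside it. It is a deliberately minimal starting point built on an assumption: real in-flight misalignment also involves rotation, scale, parallax, and temporal offsets between sensors. `translate` and `simulate_misregistration` are hypothetical helpers.

```python
import random
from typing import Dict, List, Tuple

Image = List[List[float]]


def translate(img: Image, dx: int, dy: int, fill: float = 0.0) -> Image:
    """Shift an image right by dx and down by dy pixels; uncovered pixels get `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx  # source pixel that lands at (y, x)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def simulate_misregistration(ir: Image, max_shift: int, seed: int) -> Tuple[Image, Dict[str, int]]:
    """Return a translated copy of the IR image plus the ground-truth offset annotation."""
    rng = random.Random(seed)  # seeded so the perturbation is reproducible
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    return translate(ir, dx, dy), {"dx": dx, "dy": dy}
```

Fixing the seed makes each perturbation reproducible, so a benchmark can publish both the perturbed pairs and the exact offsets used to evaluate misregistration-aware fusion.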

6.2. Better Evaluation Metrics

- Developing task-driven metrics, such as evaluating fusion quality based on detection accuracy or segmentation performance, to better reflect UAV mission requirements.

- Integrating human perception models, leveraging deep-learning-based perceptual quality assessment methods (e.g., LPIPS, DISTS) to align more closely with subjective human judgments.

- Promoting multi-dimensional evaluation frameworks that jointly consider low-level quality, perceptual similarity, consistency, and task performance.

- Designing robust metrics for misaligned data, accommodating the non-strict registration and dynamic scenes commonly encountered in UAV operations.
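One way to make a metric task-driven is to score a fused image by how much it helps a fixed downstream detector relative to the better single modality. The sketch below computes recall at an IoU threshold with greedy one-to-one matching and reports the fused image's recall gain over the best single-modality input. The detector outputs are hypothetical inputs, predictions are assumed to be sorted by descending confidence, and the gain definition is an illustrative choice rather than an established metric; mAP-based variants would follow the same pattern.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_iou(preds: List[Box], gts: List[Box], thr: float = 0.5) -> float:
    """Fraction of ground-truth boxes matched one-to-one by a prediction with IoU >= thr."""
    unmatched = list(gts)
    hits = 0
    for p in preds:
        best_idx, best_iou = -1, thr
        for i, g in enumerate(unmatched):
            v = iou(p, g)
            if v >= best_iou:
                best_idx, best_iou = i, v
        if best_idx >= 0:
            unmatched.pop(best_idx)  # each ground truth can be matched only once
            hits += 1
    return hits / len(gts) if gts else 1.0


def fusion_task_gain(fused_preds, ir_preds, vis_preds, gts, thr: float = 0.5) -> float:
    """Detector recall on the fused image minus its best single-modality recall."""
    best_single = max(recall_at_iou(ir_preds, gts, thr), recall_at_iou(vis_preds, gts, thr))
    return recall_at_iou(fused_preds, gts, thr) - best_single
```

A positive gain indicates that fusion exposed targets that neither modality alone made detectable; a negative gain flags fusion that suppresses targets, for example small thermal signatures washed out by visible-band texture.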

6.3. Lightweight Design

- Developing lightweight Transformers or efficient cross-modal attention mechanisms that balance representational power with reduced computational cost.

- Leveraging dynamic inference and adaptive computation, selectively activating modules based on scene complexity and mission requirements.

- Advancing software–hardware co-design, tailoring model architectures to UAV hardware characteristics.

- Establishing energy-aware evaluation metrics that jointly consider fusion quality, inference latency, power consumption, and endurance, thereby enabling practical, real-time UAV deployment.
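Energy-aware reporting largely reduces to simple arithmetic once latency and power have been measured on the target board: energy per frame is average power multiplied by latency, and dividing an energy budget by that value bounds the number of frames that can be processed. The sketch below combines this with a real-time check and a quality-per-joule criterion for choosing a deployment candidate. The `DeploymentProfile` fields, the selection criterion, and the example numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeploymentProfile:
    name: str
    quality: float    # fusion or task score, higher is better (e.g. detection mAP)
    latency_s: float  # measured per-frame inference latency, seconds
    power_w: float    # average power draw during inference, watts


def energy_per_frame_j(p: DeploymentProfile) -> float:
    """Energy (J) = power (W) x latency (s)."""
    return p.power_w * p.latency_s


def frames_per_wh(p: DeploymentProfile) -> float:
    """Frames processed per watt-hour of energy (1 Wh = 3600 J)."""
    return 3600.0 / energy_per_frame_j(p)


def meets_realtime(p: DeploymentProfile, target_fps: float) -> bool:
    return p.latency_s <= 1.0 / target_fps


def pick_model(profiles: List[DeploymentProfile], target_fps: float) -> Optional[DeploymentProfile]:
    """Among real-time-capable models, choose the best quality per joule."""
    feasible = [p for p in profiles if meets_realtime(p, target_fps)]
    if not feasible:
        return None
    return max(feasible, key=lambda p: p.quality / energy_per_frame_j(p))


if __name__ == "__main__":
    candidates = [
        DeploymentProfile("heavy_transformer", quality=0.62, latency_s=0.080, power_w=15.0),
        DeploymentProfile("light_cnn", quality=0.58, latency_s=0.020, power_w=8.0),
    ]
    for c in candidates:
        print(c.name, f"{energy_per_frame_j(c):.2f} J/frame", f"{frames_per_wh(c):.0f} frames/Wh")
    best = pick_model(candidates, target_fps=25.0)
    print("selected:", best.name if best else "none")
```

With these illustrative numbers, the heavier model is excluded at 25 FPS, and the lighter one spends 0.16 J per frame, or roughly 22,500 frames per watt-hour of battery.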

6.4. Combination with Various Tasks

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Miclea, V.C.; Nedevschi, S. Monocular depth estimation with improved long-range accuracy for UAV environment perception. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5602215.

- Slater, J.C. Microwave electronics. Rev. Mod. Phys. 1946, 18, 441.

- Bearden, J.A. X-ray wavelengths. Rev. Mod. Phys. 1967, 39, 78.

- Marpaung, D.; Yao, J.; Capmany, J. Integrated microwave photonics. Nat. Photonics 2019, 13, 80–90.

- Simon, C.J.; Dupuy, D.E.; Mayo-Smith, W.W. Microwave ablation: Principles and applications. Radiographics 2005, 25, S69–S83.

- Simone, G.; Farina, A.; Morabito, F.C.; Serpico, S.B.; Bruzzone, L. Image fusion techniques for remote sensing applications. Inf. Fusion 2002, 3, 3–15.

- Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and multispectral data fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

- Xiao, H.; Liu, S.; Zuo, K.; Xu, H.; Cai, Y.; Liu, T.; Yang, Z. Multiple adverse weather image restoration: A review. Neurocomputing 2025, 618, 129044.

- Gallagher, J.E.; Oughton, E.J. Assessing thermal imagery integration into object detection methods on air-based collection platforms. Sci. Rep. 2023, 13, 8491.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

- Meher, B.; Agrawal, S.; Panda, R.; Abraham, A. A survey on region based image fusion methods. Inf. Fusion 2019, 48, 119–132.

- Tang, K.; Ma, Y.; Miao, D.; Song, P.; Gu, Z.; Tian, Z.; Wang, W. Decision fusion networks for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 36, 3890–3903.

- Liu, Y.; Liu, S.; Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

- Wang, H.; Liu, J.; Dong, H.; Shao, Z. A Survey of the Multi-Sensor Fusion Object Detection Task in Autonomous Driving. Sensors 2025, 25, 2794.

- Zhang, H.; Xu, H.; Tian, X.; Jiang, J.; Ma, J. Image fusion meets deep learning: A survey and perspective. Inf. Fusion 2021, 76, 323–336.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 502–518.

- Luo, Y.; Luo, Z. Infrared and visible image fusion: Methods, datasets, applications, and prospects. Appl. Sci. 2023, 13, 10891.

- Ma, J.; Liang, P.; Yu, W.; Chen, C.; Guo, X.; Wu, J.; Jiang, J. Infrared and visible image fusion via detail preserving adversarial learning. Inf. Fusion 2020, 54, 85–98.

- Liu, J.; Wu, G.; Liu, Z.; Wang, D.; Jiang, Z.; Ma, L.; Zhong, W.; Fan, X. Infrared and visible image fusion: From data compatibility to task adaption. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 2349–2369.

- Zhang, X.; Demiris, Y. Visible and infrared image fusion using deep learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10535–10554.

- Karim, S.; Tong, G.; Li, J.; Qadir, A.; Farooq, U.; Yu, Y. Current advances and future perspectives of image fusion: A comprehensive review. Inf. Fusion 2023, 90, 185–217.

- Li, H.; Wu, X.J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Zhao, Z.; Xu, S.; Zhang, C.; Liu, J.; Li, P.; Zhang, J. DIDFuse: Deep image decomposition for infrared and visible image fusion. arXiv 2020, arXiv:2003.09210.

- Jian, L.; Yang, X.; Liu, Z.; Jeon, G.; Gao, M.; Chisholm, D. SEDRFuse: A symmetric encoder–decoder with residual block network for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 5002215.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Wang, Z.; Wu, Y.; Wang, J.; Xu, J.; Shao, W. Res2Fusion: Infrared and visible image fusion based on dense Res2net and double nonlocal attention models. IEEE Trans. Instrum. Meas. 2022, 71, 5005012.

- Luo, X.; Wang, J.; Zhang, Z.; Wu, X.J. A full-scale hierarchical encoder-decoder network with cascading edge-prior for infrared and visible image fusion. Pattern Recognit. 2024, 148, 110192.

- Liu, H.; Mao, Q.; Dong, M.; Zhan, Y. Infrared-visible image fusion using dual-branch auto-encoder with invertible high-frequency encoding. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 2675–2688.

- Zhang, J.; Qin, P.; Zeng, J.; Zhao, L. DMRO-Fusion: Infrared and Visible Image Fusion Based on Recurrent-Octave Auto-Encoder via Two-Level Modulation. IEEE Trans. Instrum. Meas. 2025, 74, 5038617.

- Li, J.; Jiang, J.; Liang, P.; Ma, J.; Nie, L. MaeFuse: Transferring omni features with pretrained masked autoencoders for infrared and visible image fusion via guided training. IEEE Trans. Image Process. 2025, 34, 1340–1353.

- Wang, W.; Zhao, W.; Wang, H.; He, Y. Weakly-supervised Cross Mixer for Infrared and Visible Image Fusion. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5629413.

- Zhao, Z.; Xu, S.; Zhang, J.; Liang, C.; Zhang, C.; Liu, J. Efficient and model-based infrared and visible image fusion via algorithm unrolling. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1186–1196.

- Li, H.; Cen, Y.; Liu, Y.; Chen, X.; Yu, Z. Different input resolutions and arbitrary output resolution: A meta learning-based deep framework for infrared and visible image fusion. IEEE Trans. Image Process. 2021, 30, 4070–4083.

- Raza, A.; Liu, J.; Liu, Y.; Liu, J.; Li, Z.; Chen, X.; Huo, H.; Fang, T. IR-MSDNet: Infrared and visible image fusion based on infrared features and multiscale dense network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3426–3437.

- Ma, J.; Tang, L.; Xu, M.; Zhang, H.; Xiao, G. STDFusionNet: An infrared and visible image fusion network based on salient target detection. IEEE Trans. Instrum. Meas. 2021, 70, 5009513.

- Xu, D.; Zhang, N.; Zhang, Y.; Li, Z.; Zhao, Z.; Wang, Y. Multi-scale unsupervised network for infrared and visible image fusion based on joint attention mechanism. Infrared Phys. Technol. 2022, 125, 104242.

- Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83, 79–92.

- Li, H.; Xu, T.; Wu, X.J.; Lu, J.; Kittler, J. LRRNet: A novel representation learning guided fusion network for infrared and visible images. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11040–11052.

- Zheng, N.; Zhou, M.; Huang, J.; Zhao, F. Frequency integration and spatial compensation network for infrared and visible image fusion. Inf. Fusion 2024, 109, 102359.

- Wu, B.; Nie, J.; Wei, W.; Zhang, L.; Zhang, Y. Adjustable Visible and Infrared Image Fusion. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 13463–13477.

- Liu, X.; Huo, H.; Yang, X.; Li, J. A three-dimensional feature-based fusion strategy for infrared and visible image fusion. Pattern Recognit. 2025, 157, 110885.

- Gao, X.; Gao, Y.; Dong, A.; Cheng, J.; Lv, G. HaIVFusion: Haze-free Infrared and Visible Image Fusion. IEEE/CAA J. Autom. Sin. 2025, 12, 2040–2055.

- Xiao, G.; Liu, X.; Lin, Z.; Ming, R. SMR-Net: Semantic-Guided Mutually Reinforcing Network for Cross-Modal Image Fusion and Salient Object Detection. Proc. AAAI Conf. Artif. Intell. 2025, 39, 8637–8645.

- Yang, Y.; Liu, J.; Huang, S.; Wan, W.; Wen, W.; Guan, J. Infrared and visible image fusion via texture conditional generative adversarial network. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4771–4783.

- Zhou, H.; Wu, W.; Zhang, Y.; Ma, J.; Ling, H. Semantic-supervised infrared and visible image fusion via a dual-discriminator generative adversarial network. IEEE Trans. Multimed. 2021, 25, 635–648.

- Gao, Y.; Ma, S.; Liu, J. DCDR-GAN: A densely connected disentangled representation generative adversarial network for infrared and visible image fusion. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 549–561.

- Rao, Y.; Wu, D.; Han, M.; Wang, T.; Yang, Y.; Lei, T.; Zhou, C.; Bai, H.; Xing, L. AT-GAN: A generative adversarial network with attention and transition for infrared and visible image fusion. Inf. Fusion 2023, 92, 336–349.

- Sui, C.; Yang, G.; Hong, D.; Wang, H.; Yao, J.; Atkinson, P.M.; Ghamisi, P. IG-GAN: Interactive guided generative adversarial networks for multimodal image fusion. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5634719.

- Vs, V.; Valanarasu, J.M.J.; Oza, P.; Patel, V.M. Image fusion transformer. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 3566–3570.

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.; Ma, Y. SwinFusion: Cross-domain long-range learning for general image fusion via swin transformer. IEEE/CAA J. Autom. Sin. 2022, 9, 1200–1217.

- Tang, W.; He, F.; Liu, Y. YDTR: Infrared and visible image fusion via Y-shape dynamic transformer. IEEE Trans. Multimed. 2022, 25, 5413–5428.

- Rao, D.; Xu, T.; Wu, X.J. TGFuse: An infrared and visible image fusion approach based on transformer and generative adversarial network. IEEE Trans. Image Process. 2023; online ahead of print.

- Zhao, Z.; Bai, H.; Zhang, J.; Zhang, Y.; Xu, S.; Lin, Z.; Timofte, R.; Van Gool, L. CDDFuse: Correlation-driven dual-branch feature decomposition for multi-modality image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 5906–5916.

- Park, S.; Vien, A.G.; Lee, C. Cross-modal transformers for infrared and visible image fusion. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 770–785.

- Yi, X.; Xu, H.; Zhang, H.; Tang, L.; Ma, J. Text-IF: Leveraging semantic text guidance for degradation-aware and interactive image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 27026–27035.

- Li, H.; Wu, X.J. CrossFuse: A novel cross attention mechanism based infrared and visible image fusion approach. Inf. Fusion 2024, 103, 102147.

- Liu, J.; Li, X.; Wang, Z.; Jiang, Z.; Zhong, W.; Fan, W.; Xu, B. PromptFusion: Harmonized semantic prompt learning for infrared and visible image fusion. IEEE/CAA J. Autom. Sin. 2024, 12, 502–515.

- Wang, M.; Pan, Y.; Zhao, Z.; Li, Z.; Yao, S. MDDPFuse: Multi-driven dynamic perception network for infrared and visible image fusion via data guidance and semantic injection. Knowl.-Based Syst. 2025, 327, 114027.

- Cao, Z.H.; Liang, Y.J.; Deng, L.J.; Vivone, G. An Efficient Image Fusion Network Exploiting Unifying Language and Mask Guidance. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 9845–9862.

- Shi, Y.; Shi, C.; Weng, Z.; Tian, Y.; Xian, X.; Lin, L. CrossFuse: Learning infrared and visible image fusion by cross-sensor top-k vision alignment and beyond. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 7579–7591.

- Yue, J.; Fang, L.; Xia, S.; Deng, Y.; Ma, J. Dif-Fusion: Toward high color fidelity in infrared and visible image fusion with diffusion models. IEEE Trans. Image Process. 2023, 32, 5705–5720.

- Yi, X.; Tang, L.; Zhang, H.; Xu, H.; Ma, J. Diff-IF: Multi-modality image fusion via diffusion model with fusion knowledge prior. Inf. Fusion 2024, 110, 102450.

- Liu, C.; Ma, X.; Yang, X.; Zhang, Y.; Dong, Y. COMO: Cross-Mamba Interaction and Offset-Guided Fusion for Multimodal Object Detection. Inf. Fusion 2024, 125, 103414.

- Dong, W.; Zhu, H.; Lin, S.; Luo, X.; Shen, Y.; Guo, G.; Zhang, B. Fusion-Mamba for cross-modality object detection. IEEE Trans. Multimed. 2025, 27, 7392–7406.

- Zhu, J.; Dou, Q.; Jian, L.; Liu, K.; Hussain, F.; Yang, X. Multiscale channel attention network for infrared and visible image fusion. Concurr. Comput. Pract. Exp. 2021, 33, e6155.

- Zhao, F.; Zhao, W.; Yao, L.; Liu, Y. Self-supervised feature adaption for infrared and visible image fusion. Inf. Fusion 2021, 76, 189–203.

- Liu, J.; Wu, Y.; Huang, Z.; Liu, R.; Fan, X. SMoA: Searching a modality-oriented architecture for infrared and visible image fusion. IEEE Signal Process. Lett. 2021, 28, 1818–1822.

- Wang, J.; Xi, X.; Li, D.; Li, F. FusionGRAM: An infrared and visible image fusion framework based on gradient residual and attention mechanism. IEEE Trans. Instrum. Meas. 2023, 72, 5005412.

- Chen, Y.; Fan, H.; Xu, B.; Yan, Z.; Kalantidis, Y.; Rohrbach, M.; Yan, S.; Feng, J. Drop an octave: Reducing spatial redundancy in convolutional neural networks with octave convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3435–3444.

- Yang, Z.; Zhang, Y.; Li, H.; Liu, Y. Instruction-driven fusion of Infrared–visible images: Tailoring for diverse downstream tasks. Inf. Fusion 2025, 121, 103148.

- Tang, W.; He, F.; Liu, Y. ITFuse: An interactive transformer for infrared and visible image fusion. Pattern Recognit. 2024, 156, 110822.

- Cohen, T.; Welling, M. Group equivariant convolutional networks. In Proceedings of the International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016; PMLR: New York, NY, USA, 2016; pp. 2990–2999.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Long, Y.; Jia, H.; Zhong, Y.; Jiang, Y.; Jia, Y. RXDNFuse: A aggregated residual dense network for infrared and visible image fusion. Inf. Fusion 2021, 69, 128–141.

- Li, H.; Wu, X.J.; Durrani, T. NestFuse: An Infrared and Visible Image Fusion Architecture Based on Nest Connection and Spatial/Channel Attention Models. IEEE Trans. Instrum. Meas. 2020, 69, 9645–9656.

- Wang, Z.; Wang, J.; Wu, Y.; Xu, J.; Zhang, X. UNFusion: A unified multi-scale densely connected network for infrared and visible image fusion. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 3360–3374.

- Zhang, Y.; Liu, Y.; Sun, P.; Yan, H.; Zhao, X.; Zhang, L. IFCNN: A general image fusion framework based on convolutional neural network. Inf. Fusion 2020, 54, 99–118.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

- Wang, Z.; She, Q.; Ward, T.E. Generative adversarial networks in computer vision: A survey and taxonomy. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.

- Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A review on generative adversarial networks: Algorithms, theory, and applications. IEEE Trans. Knowl. Data Eng. 2021, 35, 3313–3332.

- Shamsolmoali, P.; Zareapoor, M.; Granger, E.; Zhou, H.; Wang, R.; Celebi, M.E.; Yang, J. Image synthesis with adversarial networks: A comprehensive survey and case studies. Inf. Fusion 2021, 72, 126–146.

- Yi, X.; Walia, E.; Babyn, P. Generative adversarial network in medical imaging: A review. Med. Image Anal. 2019, 58, 101552.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Dosovitskiy, A. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Touvron, H.; Cord, M.; Jégou, H. DeiT III: Revenge of the ViT. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 516–533.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 10012–10022.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 568–578.

- Tu, Z.; Talebi, H.; Zhang, H.; Yang, F.; Milanfar, P.; Bovik, A.; Li, Y. MaxViT: Multi-axis vision transformer. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 459–479.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Dao, T.; Fu, D.; Ermon, S.; Rudra, A.; Ré, C. FlashAttention: Fast and memory-efficient exact attention with IO-awareness. Adv. Neural Inf. Process. Syst. 2022, 35, 16344–16359.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-based generative modeling through stochastic differential equations. arXiv 2020, arXiv:2011.13456.

- Song, J.; Meng, C.; Ermon, S. Denoising diffusion implicit models. arXiv 2020, arXiv:2010.02502.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 10684–10695.

- Tang, L.; Yuan, J.; Ma, J. Image fusion in the loop of high-level vision tasks: A semantic-aware real-time infrared and visible image fusion network. Inf. Fusion 2022, 82, 28–42.

- Li, H.; Zhao, J.; Li, J.; Yu, Z.; Lu, G. Feature dynamic alignment and refinement for infrared–visible image fusion: Translation robust fusion. Inf. Fusion 2023, 95, 26–41.

- Zhang, X.; Zhai, H.; Liu, J.; Wang, Z.; Sun, H. Real-time infrared and visible image fusion network using adaptive pixel weighting strategy. Inf. Fusion 2023, 99, 101863.

- Sun, Y.; Cao, B.; Zhu, P.; Hu, Q. DetFusion: A detection-driven infrared and visible image fusion network. In Proceedings of the 30th ACM International Conference on Multimedia (MM 2022), Lisbon, Portugal, 10–14 October 2022; ACM: New York, NY, USA, 2022; pp. 4003–4011.

- Zhang, Y.; Xu, C.; Yang, W.; He, G.; Yu, H.; Yu, L.; Xia, G.S. Drone-based RGBT tiny person detection. ISPRS J. Photogramm. Remote Sens. 2023, 204, 61–76.

- Fu, H.; Yuan, J.; Zhong, G.; He, X.; Lin, J.; Li, Z. CF-Deformable DETR: An end-to-end alignment-free model for weakly aligned visible-infrared object detection. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence (IJCAI 2024), Jeju, Republic of Korea, 3–9 August 2024; pp. 758–766.

- Jiang, C.; Liu, X.; Zheng, B.; Bai, L.; Li, J. HSFusion: A high-level vision task-driven infrared and visible image fusion network via semantic and geometric domain transformation. arXiv 2024, arXiv:2407.10047.

- Dong, S.; Zhou, W.; Xu, C.; Yan, W. EGFNet: Edge-aware guidance fusion network for RGB–thermal urban scene parsing. IEEE Trans. Intell. Transp. Syst. 2023, 25, 657–669.

- Wang, Y.; Li, G.; Liu, Z. SGFNet: Semantic-guided fusion network for RGB-thermal semantic segmentation. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7737–7748.

- Li, G.; Qian, X.; Qu, X. SOSMaskFuse: An infrared and visible image fusion architecture based on salient object segmentation mask. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10118–10137.

- Zhang, T.; Guo, H.; Jiao, Q.; Zhang, Q.; Han, J. Efficient RGB-T tracking via cross-modality distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 5404–5413.

- Li, H.; Yang, Z.; Zhang, Y.; Jia, W.; Yu, Z.; Liu, Y. MulFS-CAP: Multimodal Fusion-Supervised Cross-Modality Alignment Perception for Unregistered Infrared-Visible Image Fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 3673–3690.

- Yang, X.; Xing, H.; Xu, L.; Wu, L.; Zhang, H.; Zhang, W.; Yang, C.; Zhang, Y.; Zhang, J.; Yang, Z. A Collaborative Fusion and Registration Framework for Multimodal Image Fusion. IEEE Internet Things J. 2025, 12, 29584–29600.

- Rizzoli, G.; Barbato, F.; Caligiuri, M.; Zanuttigh, P. SynDrone: Multi-modal UAV dataset for urban scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Paris, France, 2–6 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 2210–2220.

- Perera, A.G.; Wei Law, Y.; Chahl, J. UAV-GESTURE: A dataset for UAV control and gesture recognition. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 117–128.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle detection from UAV imagery with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6047–6067.

- Toet, A. The TNO multiband image data collection. Data Brief 2017, 15, 249.

- Zhang, X.; Ye, P.; Xiao, G. VIFB: A visible and infrared image fusion benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 468–478.

- Takumi, K.; Watanabe, K.; Ha, Q.; Tejero-De-Pablos, A.; Ushiku, Y.; Harada, T. Multispectral object detection for autonomous vehicles. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 35–43.

- Jia, X.; Zhu, C.; Li, M.; Tang, W.; Zhou, W. LLVIP: A visible-infrared paired dataset for low-light vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3496–3504. [Google Scholar]

- Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 5802–5811. [Google Scholar]

- Ha, Q.; Watanabe, K.; Karasawa, T.; Ushiku, Y.; Harada, T. MFNet: Towards real-time semantic segmentation for autonomous vehicles with multi-spectral scenes. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 5108–5115. [Google Scholar]

- Liu, J.; Liu, Z.; Wu, G.; Ma, L.; Liu, R.; Zhong, W.; Luo, Z.; Fan, X. Multi-interactive feature learning and a full-time multi-modality benchmark for image fusion and segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 8115–8124. [Google Scholar]

- Jiang, X.; Ma, J.; Xiao, G.; Shao, Z.; Guo, X. A review of multimodal image matching: Methods and applications. Inf. Fusion 2021, 73, 22–71.

- Li, L.; Han, L.; Ye, Y.; Xiang, Y.; Zhang, T. Deep learning in remote sensing image matching: A survey. ISPRS J. Photogramm. Remote Sens. 2025, 225, 88–112.

- Geng, Z.; Liu, H.; Duan, P.; Wei, X.; Li, S. Feature-based multimodal remote sensing image matching: Benchmark and state-of-the-art. ISPRS J. Photogramm. Remote Sens. 2025, 229, 285–302.

- Wang, D.; Liu, J.; Fan, X.; Liu, R. Unsupervised misaligned infrared and visible image fusion via cross-modality image generation and registration. arXiv 2022, arXiv:2205.11876.

- Huang, Z.; Liu, J.; Fan, X.; Liu, R.; Zhong, W.; Luo, Z. ReCoNet: Recurrent correction network for fast and efficient multi-modality image fusion. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 539–555.

- Tang, L.; Deng, Y.; Ma, Y.; Huang, J.; Ma, J. SuperFusion: A versatile image registration and fusion network with semantic awareness. IEEE/CAA J. Autom. Sin. 2022, 9, 2121–2137.

- Xu, H.; Yuan, J.; Ma, J. MURF: Mutually reinforcing multi-modal image registration and fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12148–12166.

- Wang, D.; Liu, J.; Ma, L.; Liu, R.; Fan, X. Improving misaligned multi-modality image fusion with one-stage progressive dense registration. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 10944–10958.

- Li, H.; Liu, J.; Zhang, Y.; Liu, Y. A deep learning framework for infrared and visible image fusion without strict registration. Int. J. Comput. Vis. 2024, 132, 1625–1644.

- Zheng, K.; Huang, J.; Yu, H.; Zhao, F. Efficient multi-exposure image fusion via filter-dominated fusion and gradient-driven unsupervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Vancouver, BC, Canada, 18–22 June 2023; pp. 2805–2814.

- Zhang, H.; Xu, H.; Xiao, Y.; Guo, X.; Ma, J. Rethinking the Image Fusion: A Fast Unified Image Fusion Network based on Proportional Maintenance of Gradient and Intensity. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12797–12804.

- Ma, J.; Yu, W.; Liang, P.; Li, C.; Jiang, J. FusionGAN: A generative adversarial network for infrared and visible image fusion. Inf. Fusion 2019, 48, 11–26.

- Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A generative adversarial network with multiclassification constraints for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 5005014.

- Cheng, C.; Xu, T.; Wu, X.J. MUFusion: A general unsupervised image fusion network based on memory unit. Inf. Fusion 2023, 92, 80–92.

- Zhang, Z.; Li, H.; Xu, T.; Wu, X.J.; Kittler, J. DDBFusion: An unified image decomposition and fusion framework based on dual decomposition and Bézier curves. Inf. Fusion 2025, 114, 102655.

- Liu, J.; Li, S.; Liu, H.; Dian, R.; Wei, X. A lightweight pixel-level unified image fusion network. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 18120–18132.

- He, Q.; Zhang, J.; Peng, J.; He, H.; Li, X.; Wang, Y.; Wang, C. PointRWKV: Efficient RWKV-like model for hierarchical point cloud learning. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 3410–3418.

- Fei, Z.; Fan, M.; Yu, C.; Li, D.; Huang, J. Diffusion-RWKV: Scaling RWKV-like architectures for diffusion models. arXiv 2024, arXiv:2404.04478.

- Qu, G.; Zhang, D.; Yan, P. Information measure for performance of image fusion. Electron. Lett. 2002, 38, 313–315.

- Han, Y.; Cai, Y.; Cao, Y.; Xu, X. A new image fusion performance metric based on visual information fidelity. Inf. Fusion 2013, 14, 127–135.

- Aslantas, V.; Bendes, E. A new image quality metric for image fusion: The sum of the correlations of differences. AEU-Int. J. Electron. Commun. 2015, 69, 1890–1896.

- Roberts, J.W.; Van Aardt, J.A.; Ahmed, F.B. Assessment of image fusion procedures using entropy, image quality, and multispectral classification. J. Appl. Remote Sens. 2008, 2, 023522.

- Jagalingam, P.; Hegde, A.V. A review of quality metrics for fused image. Aquat. Procedia 2015, 4, 133–142.

- Li, S.; Kwok, J.T.; Wang, Y. Combination of images with diverse focuses using the spatial frequency. Inf. Fusion 2001, 2, 169–176.

- Xydeas, C.S.; Petrovic, V. Objective image fusion performance measure. Electron. Lett. 2000, 36, 308–309.

- Guan, D.; Wu, Y.; Liu, T.; Kot, A.C.; Gu, Y. Rethinking the Evaluation of Visible and Infrared Image Fusion. arXiv 2024, arXiv:2410.06811.

- Liu, Y.; Qi, Z.; Cheng, J.; Chen, X. Rethinking the effectiveness of objective evaluation metrics in multi-focus image fusion: A statistic-based approach. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5806–5819.

- Chen, H.; Varshney, P.K. A human perception inspired quality metric for image fusion based on regional information. Inf. Fusion 2007, 8, 193–207.

- Liu, Z.; Liu, J.; Zhang, B.; Ma, L.; Fan, X.; Liu, R. PAIF: Perception-aware infrared-visible image fusion for attack-tolerant semantic segmentation. In Proceedings of the 31st ACM International Conference on Multimedia (MM ’23), Ottawa, ON, Canada, 29 October–3 November 2023; pp. 3706–3714.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural network. In Proceedings of the 29th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 2704–2713.

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv 2021, arXiv:2110.02178.

- Wu, K.; Zhang, J.; Peng, H.; Liu, M.; Xiao, B.; Fu, J.; Yuan, L. TinyViT: Fast pretraining distillation for small vision transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 68–85.

| Aspects | Methods | Venue | Year | Core Ideas |
|---|---|---|---|---|
| Auto-Encoder | DenseFuse [23] | TIP | 2018 | Dense-connection feature extraction with an ℓ1-norm fusion rule. |
| | DIDFuse [24] | IJCAI | 2020 | Learns a deep background–detail decomposition to fuse effectively. |
| | SEDRFuse [25] | TIM | 2020 | Symmetric encoder–decoder with residual blocks, attention fusion, and choose-max. |
| | RFNNest [26] | IF | 2021 | Residual fusion network with Nest connections replaces hand-crafted rules, preserving saliency. |
| | Res2Fusion [27] | TIM | 2022 | Res2Net with non-local attention for multiscale long-range dependencies. |
| | FSFuse [28] | PR | 2024 | Full-scale encoder–decoder with non-local attention and a cascading edge prior. |
| | IHFDBAE [29] | TCSVT | 2024 | Dual-branch autoencoder fusing invertible-wavelet HF and Transformer LF features. |
| | DMROFuse [30] | TIM | 2025 | Two-level modulation with a recurrent-octave auto-encoder. |
| | MaeFuse [31] | TIP | 2025 | MAE encoder with guided two-stage fusion aligns domains to integrate omni features. |
| | WSCM [32] | TGRS | 2025 | Segmentation-guided cross mixer with a shared adaptive decoder for loss-free IVIF. |
| CNN | IVIF-Net [33] | TCSVT | 2021 | Unrolls two-scale optimization into a trainable encoder–decoder. |
| | MetaFusion [34] | TIP | 2021 | Meta-learning IR–visible fusion with different input and arbitrary output resolutions. |
| | MSDNet [35] | JSTARS | 2021 | IR–visible fusion with an encoder–decoder and channel attention. |
| | STDFusion [36] | TIM | 2021 | Saliency-guided fusion via a pseudo-siamese CNN with region-weighted pixel losses. |
| | MAFusion [37] | TIM | 2022 | IR–visible fusion with skip connections and a feature-preserving loss. |
| | PIAFusion [38] | IF | 2022 | Illumination-aware IR–visible fusion with intensity and texture losses. |
| | LRR-Net [39] | TPAMI | 2023 | IR–visible fusion via LLRR and a detail-to-semantic loss. |
| | FISCNet [40] | IF | 2024 | Frequency-phase IR–visible fusion with spatial compensation. |
| | AdFusion [41] | TCSVT | 2024 | Global control coefficients and semantics-aware pixel modulation. |
| | D3Fuse [42] | PR | 2025 | Introduces a scene-common third modality, building a 3D space. |
| | HalVFusion [43] | JAS | 2025 | Texture restoration plus denoised, color-corrected haze fusion. |
| | SMR-Net [44] | AAAI | 2025 | Semantic coupling of fusion (PCI) and SOD (BPS) via a fused-image third modality. |
| GAN | TC-GAN [45] | TCSVT | 2021 | Visible-texture-conditioned guidance for adaptive-filter-based fusion. |
| | DDGAN [46] | TMM | 2021 | Semantics-guided IR–visible fusion with modality-specific dual discriminators. |
| | DCDRGAN [47] | TCSVT | 2022 | Content–modality decoupling: fuse content, inject modality via AdaIN. |
| | AT-GAN [48] | IF | 2023 | Adversarial fusion with intensity-aware attention and semantic transfer. |
| | IG-GAN [49] | TGRS | 2024 | Strong-modality–guided dual-stream GAN for unsupervised alignment and fusion. |
| Transformer | IFT [50] | ICIP | 2022 | Combines CNNs with Transformers to enhance fusion. |
| | SwinFuse [51] | JAS | 2022 | Models intra- and cross-domain dependencies to enhance fusion. |
| | YDTR [52] | TMM | 2022 | Y-shaped structure with dynamic Transformers fuses infrared and visible features. |
| | TGFuse [53] | TIP | 2023 | Transformers with adversarial learning enhance fusion quality. |
| | CDDFuse [54] | CVPR | 2023 | Transformers model global context and INNs preserve details to enhance fusion. |
| | CMTFuse [55] | TCSVT | 2023 | Cross-modal Transformers enhance infrared–visible fusion. |
| | Text-IF [56] | CVPR | 2024 | Transformers with textual semantic guidance enable interactive infrared–visible fusion. |
| | CrossFuse [57] | IF | 2024 | Self- and cross-attention boost infrared–visible fusion. |
| | PromptFuse [58] | JAS | 2024 | Transformers with frequency and prompt learning enhance fusion. |
| | MDDPFuse [59] | KBS | 2025 | Dynamic weighting and semantics boost IR–visible fusion. |
| | RWKVFuse [60] | TPAM | 2025 | Linear attention with semantic guidance improves fusion. |
| | Crossfuse [61] | TCSVT | 2025 | Multi-view learning with Transformers/CNNs boosts robustness. |
| Other | DiFFusion [62] | TIP | 2023 | Builds latent diffusion over RGB+IR to preserve color fidelity. |
| | Diff-IF [63] | IF | 2024 | Trains conditional diffusion with a fusion prior, generating fused images without ground truth. |
| | COMO [64] | IF | 2024 | Cross-Mamba interactions handle offset misalignment in detection. |
| | FusMamba [65] | TM | 2025 | Uses a Mamba state-space model with cross-modal fusion to capture long-range context. |
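To make the ℓ1-norm fusion rule listed for DenseFuse [23] more concrete, the sketch below weights each source's encoder features by a smoothed ℓ1 activity map. It is a minimal NumPy sketch under stated assumptions, not the authors' implementation. The encoder and decoder are omitted, and feature maps are assumed to be `(C, H, W)` arrays. The helper names `box_mean` and `l1_norm_fusion`, the window radius `r = 1`, and the edge padding are hypothetical choices made for illustration.

```python
import numpy as np


def box_mean(a: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window; edge padding keeps the (H, W) shape."""
    k = 2 * r + 1
    h, w = a.shape
    padded = np.pad(a.astype(np.float64), r, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def l1_norm_fusion(feats: list, r: int = 1, eps: float = 1e-12) -> np.ndarray:
    """Fuse per-source feature maps of shape (C, H, W) with an l1-norm activity rule.

    Hypothetical helper for illustration; the encoder producing `feats` is not shown.
    """
    # Initial activity map of each source: l1 norm across channels at every pixel.
    activity = [np.abs(f).sum(axis=0) for f in feats]
    # Block-based averaging makes the activity maps less sensitive to isolated responses.
    activity = [box_mean(a, r) for a in activity]
    total = np.sum(activity, axis=0) + eps
    # Per-pixel weights sum to (almost) 1 across sources and broadcast over channels.
    return sum((a / total)[None, :, :] * f for a, f in zip(activity, feats))
```

In a full autoencoder pipeline, `l1_norm_fusion([ir_feats, vis_feats])` would sit between a shared encoder and the decoder. Replacing the weights by 1 gives a plain additive rule.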

| Methods | EN (↑) | SD (↑) | SF (↑) | MI (↑) | SCD (↑) | VIF (↑) | Qabf (↑) |
|---|---|---|---|---|---|---|---|
| MaeFuse [31] | 7.19 | 44.28 | 12.24 | 1.71 | 1.71 | 0.56 | 0.46 |
| FSFusion [28] | 7.07 | 48.18 | 12.51 | 2.34 | 1.67 | 0.64 | 0.46 |
| FISCNet [40] | 7.09 | 43.85 | 16.62 | 2.20 | 1.26 | 0.59 | 0.55 |
| PIAFusion [38] | 6.98 | 42.70 | 12.13 | 2.47 | 1.47 | 0.68 | 0.44 |
| CDDFuse [54] | 7.43 | 54.66 | 16.36 | 2.30 | 1.81 | 0.69 | 0.52 |
| TGFuse [53] | 7.17 | 43.14 | 14.03 | 1.85 | 1.42 | 0.61 | 0.53 |
| PromptFusion [58] | 7.39 | 53.15 | 16.24 | 2.38 | 1.92 | 0.68 | 0.50 |
| Text-IF [56] | 7.37 | 49.67 | 14.80 | 2.08 | 1.85 | 0.70 | 0.59 |
| Diff-IF [63] | 7.11 | 43.73 | 14.61 | 2.06 | 1.21 | 0.66 | 0.51 |
| Dif-Fusion [62] | 7.17 | 42.33 | 15.51 | 1.99 | 1.32 | 0.55 | 0.51 |
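The sketch below shows how five of the reference-free metrics in the table (EN, SD, SF, MI, SCD) are commonly computed, to clarify what the columns measure. It is a minimal NumPy sketch and is not the evaluation code behind the reported numbers. Qabf and VIF are omitted because they need longer edge-strength and wavelet/Gaussian-scale-mixture machinery. Several conventions here are assumptions:

- inputs are 8-bit grey images with 256 histogram bins;
- logarithms are base 2;
- SF averages over the available first differences;
- MI is summed over the IR–fused and VIS–fused pairs.

Toolkits differ on these choices, so the values need not reproduce the table. All function names are hypothetical.

```python
import numpy as np


def entropy(img: np.ndarray, bins: int = 256) -> float:
    """EN: Shannon entropy (bits) of the grey-level histogram of an 8-bit image."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]  # skip empty bins (0 * log 0 is taken as 0)
    return float(-(p * np.log2(p)).sum())


def std_dev(img: np.ndarray) -> float:
    """SD: standard deviation of the fused intensities (a contrast proxy)."""
    return float(np.asarray(img, dtype=np.float64).std())


def spatial_frequency(img: np.ndarray) -> float:
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    f = np.asarray(img, dtype=np.float64)
    rf = np.sqrt(np.mean(np.diff(f, axis=1) ** 2))  # row frequency
    cf = np.sqrt(np.mean(np.diff(f, axis=0) ** 2))  # column frequency
    return float(np.sqrt(rf ** 2 + cf ** 2))


def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 256) -> float:
    """MI (bits) between two 8-bit images, estimated from their joint histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)  # marginal of b, shape (1, bins)
    nz = pxy > 0
    return float((pxy[nz] * np.log2(pxy[nz] / (px * py)[nz])).sum())


def fusion_mi(ir: np.ndarray, vis: np.ndarray, fused: np.ndarray) -> float:
    """Fusion MI: information the fused image shares with each source, summed."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)


def _corr(x: np.ndarray, y: np.ndarray) -> float:
    """Pearson correlation coefficient (assumes neither input is constant)."""
    x = x - x.mean()
    y = y - y.mean()
    return float((x * y).sum() / np.sqrt((x ** 2).sum() * (y ** 2).sum()))


def scd(ir: np.ndarray, vis: np.ndarray, fused: np.ndarray) -> float:
    """SCD: corr(F - VIS, IR) + corr(F - IR, VIS)."""
    ir, vis, fused = (np.asarray(a, dtype=np.float64) for a in (ir, vis, fused))
    return _corr(fused - vis, ir) + _corr(fused - ir, vis)
```

For example, a fused image equal to `ir + vis` gives an SCD of 2, because each difference image reproduces the other source exactly.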

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Fan, C.; Ou, C.; Zhang, H. Infrared and Visible Image Fusion Techniques for UAVs: A Comprehensive Review. Drones 2025, 9, 811. https://doi.org/10.3390/drones9120811
