Highlights

What are the main findings?

- The proposed UAV control system entailing a 4G LTE network and a cloud server was capable of maintaining a stable connection over a long distance of approximately 4200 km (Operator → Ground Control Station → UAV).

- The system provided seamless control and clear video streaming with an average latency of less than 150 ms during flight tests conducted under normal LTE capacity and internet load conditions.

What are the implications of the main findings?

- This study shows that commercial 4G LTE networks can be used for long-range UAV flight operations without needing special or expensive equipment.

- This setup can be even more effective in UAV applications when operated with 5G/6G networks or satellite connections.

Abstract

The operational range and reliability of most commercially available UAVs employed in surveillance, agriculture, and infrastructure inspection missions are limited due to the use of short-range radio frequency connections. To alleviate this issue, the present work investigates the possibility of real-time long-distance UAV control using a commercial 4G LTE network. The proposed system setup consists of a Raspberry Pi 4B as the onboard computer, connected to a Pixhawk 2.4 flight controller mounted on an F450 quadcopter platform. Flight tests were carried out in open-field conditions at altitudes up to 50 m above ground level (AGL). Communication between the UAV and the ground control station is established using TCP and UDP protocols. The flight tests demonstrated stable remote control operation, maintaining an average control delay of under 150 ms and a video resolution of 640×480, while the LTE bandwidth ranged from 3 Mbps to 55 Mbps. The farthest recorded test distance of around 4200 km from the UAV to the operator also indicates the capability of LTE systems for beyond-visual-line-of-sight operations. The results show that 4G LTE offers an effective method for extending UAV range at a reasonable cost, but there are limitations in terms of network performance, flight time and regulatory compliance. This study establishes essential groundwork for future UAV operations that will utilize 5G/6G and satellite communication systems.

1. Introduction

Unmanned aerial vehicles (UAVs) serve as cost-effective and versatile solutions in agriculture [1,2], surveillance [3,4], and infrastructure monitoring [5,6]. While their use continues to expand, most UAVs still rely on classic short-range radio frequency (RF) communications, generally at 2.4 or 5.8 GHz [7]. Although these systems are simple and have low latency, they are inherently limited to a range of a few kilometers and require line of sight for connectivity. Consequently, such systems restrict beyond-visual-line-of-sight (BVLOS) operations, limiting UAV deployment in large-scale missions or remote environments.

Recent advances in mobile communication have introduced 4G LTE and emerging 5G networks as promising enablers for long-range UAV control [8,9,10]. The wide coverage of LTE networks makes UAV service provision commercially feasible. Examples of 4G LTE technology use in UAVs include enhanced real-time data transmission, improved remote control capabilities, and efficient aerial surveillance operations [11]. Early attempts to integrate cellular communication into UAV systems date back to [12], where GPRS technology was utilized to transfer flight data and imagery between a mobile device and a UAV helicopter. Later research built upon these developments: In [13], an Android application was developed for UAV telemetry data acquisition, while a cloud-based communication system was proposed in [14], which used a single-board computer with a USB 4G modem for UAV–ground station communication. In [15], flight logs acquired via 4G networks were analyzed on the cloud server, and the IoT data were transmitted to the mobile devices of the users for monitoring and evaluation. Ref. [16] proposed StratoTrans, a 4G communication framework for UAVs that is applied to monitor road traffic and infrastructure. The system used a virtual private network (VPN) to protect the transfer of high-resolution video and imagery between the fixed-wing UAV and its ground control station. The upcoming 5G and 6G communication technologies are expected to improve UAV command and control systems. Common architectures, implementation requirements, and application examples, as well as the technical challenges of 5G and IoT technologies, are explained in detail in [17]. Ref. [18] presents a 6G-enabled framework for autonomous UAV traffic management, and Taleb et al. highlight, in [19], the potential of ultra-reliable low-latency communication for remote UAV missions.

Beyond their role as network users, UAVs have also been explored as active components of cellular networks. Considerable research over the past decade has investigated how UAVs can serve as aerial base stations to extend coverage and increase capacity in underserved regions [20]. Fotouhi et al. [21] examined UAV-assisted cellular architectures that improve spectral efficiency, particularly in scenarios where terrestrial infrastructure is insufficient. Releases 15 to 17 of the Third-Generation Partnership Project (3GPP) have standardized the integration of UAVs into cellular networks. These standards enable efficient and reliable airborne communication using existing LTE and 5G mobile infrastructure [22]. Empirical studies indicate that UAV video and telemetry streaming through LTE networks maintains acceptable bandwidth levels, but network traffic density and geographic factors determine the extent of latency and reliability [23,24].

Although LTE provides a wide coverage area, various technical challenges arise when deploying terrestrial cellular systems to aerial platforms. For example, in [25], the latency of 4G networks at 2.6 GHz was measured, and an average latency of 200–300 ms at an altitude of 50–100 m was determined. This latency increases to 2.5–3 s at a 300 m altitude, exceeding the safety standards for remote piloting. Similarly, unstable connectivity and variable latency are reported in [24] for LTE-based BVLOS operations. In parallel, ref. [26] reports that signal quality, signal strength, and downlink data rate decrease with altitude, while latency increases. In [27], it is demonstrated that LTE infrastructures can provide “good” or “moderate” connectivity at low-to-medium flight altitudes and at close distances to the base station, while the quality of the connection deteriorates as altitude and distance increase.

The research community holds different opinions about LTE network capacity for UAV command and payload data transmission: some experts believe it fulfills requirements, while others point out safety and performance problems that require actual field testing. To address this challenge, the present study proposes and tests a cost-effective architecture for real-time UAV control over 4G LTE. The system combines a Raspberry Pi 4B onboard computer with a Pixhawk 2.4 flight controller, which communicates through Transmission Control Protocol (TCP) for telemetry and User Datagram Protocol (UDP) for manual control and video streaming. The flight tests show that acceptable performance could be attained with the proposed architecture, providing both low latency and stable video transmission. The results further demonstrate the applicability of existing LTE networks for UAV operations without specialized infrastructure, while also highlighting challenges such as bandwidth fluctuations and occasional loss of control.

The main contributions of this study can be summarized as follows:

- An experimental setup demonstrating cloud-mediated control over 4G LTE in long-range UAV operations;

- Evaluation of latency, throughput, and signal quality under varying conditions;

- Analysis of trade-offs, bottlenecks, and recommendations for achieving real-time control via LTE in practice.

2. Related Work

A comprehensive literature survey was conducted, with emphasis placed on studies that provide experimental results related to UAV communication over 4G LTE networks. This requirement considerably narrowed the body of work applicable for comparison, as many studies focus primarily on theoretical analyses or simulations rather than operational implementations. To the best knowledge of the authors, a scheme that utilizes telemetry and video streaming as well as real-time command-and-control (C2) through 4G LTE has not been investigated in the literature previously.

A significant portion of the experimental work on 4G LTE-based UAV communication focuses on the characterization of the 4G/5G networks themselves, rather than employing them for C2 applications. Studies such as [9,23,27,28,29,30,31] all fall into this category. These studies primarily use drones equipped with smartphones or measurement tools to gather network metrics like RSRP, RSRQ, SINR, and throughput at various altitudes. The present work differs fundamentally in its objective: its primary focus is the implementation of a functional, end-to-end C2 and video-streaming system that operates over commercial LTE networks, rather than network measurement.

Several other studies employ cloud- or server-based architectures; however, their data types and system objectives differ from those presented here. In [32], a cloud service is used to relay MAVLink messages from a Pixhawk flight controller, but no video transmission component is included. In contrast, the proposed architecture simultaneously supports reliable TCP-based telemetry transfer and a high-bandwidth UDP-based H.264 video stream. The study [14] also utilizes AWS for video and telemetry transmission, but it is designed for a fixed-wing platform, which exhibits substantially different C2 requirements compared to the rotary-wing UAV considered in this work. Additional studies, such as [33,34], use cloud servers to transmit low-bandwidth sensor data (e.g., radionuclide or atmospheric measurements) over protocols such as MQTT, representing application scenarios fundamentally different from systems requiring concurrent streaming of live video and real-time C2 commands.

Other research efforts implement complete C2 and video systems but employ architectures distinct from the one proposed here. For example, Ref. [16] adopts a protocol split similar to that used in the present work—TCP for telemetry and UDP (via RTSP) for video—but relies on a Virtual Private Network (VPN), differing from the scalable, service-oriented public-cloud architecture enabled by AWS. The system described in [35] appears to utilize a direct 4G link between the UAV and ground control station (GCS), without a cloud intermediary. By contrast, the proposed approach decouples the UAV from the GCS through the use of AWS, eliminating the need for static or public IP addresses and allowing both entities to operate behind separate firewalls. The system in [36] employs an inverted protocol selection, relying on TCP for video streaming and UDP for AT command transmission, and uses a 3G modem. The design choices reported in [36] differ substantially from those in the present work, which prioritizes low-latency UDP for both video and command channels while reserving TCP for non–flight-critical telemetry data.

Additional studies have deployed cellular-connected UAV platforms for specialized, high-level applications. For instance, Ref. [37] investigates offloading video-processing tasks (e.g., face recognition) to a Mobile Edge Computing (MEC) node, while [38,39] propose advanced frameworks for automated unmanned traffic management (UTM) and immersive virtual-reality control. These works address domain-specific application scenarios that differ from the manual, GCS-based C2 and video-monitoring framework described in this manuscript. Similarly, Refs. [40,41] present application-specific architectures for AI-based anomaly detection along railways and riverbanks, whereas the system proposed in this study provides a more general and application-agnostic communication backbone.

Overall, the reviewed literature indicates that existing experimental studies on UAV communication over LTE either focus on network characterization, address specialized application domains, or employ architectural choices that differ substantially from the system proposed here. The design presented in this manuscript distinguishes itself by providing an integrated, cloud-mediated architecture that supports simultaneous real-time C2, telemetry, and H.264 video streaming over commercial 4G LTE networks.

3. Materials and Methods

3.1. System Architecture

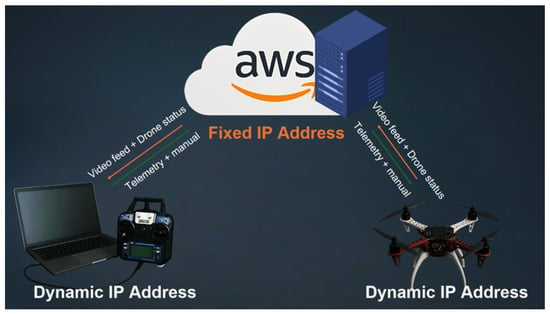

The system was designed to enable long-range UAV operation using commercially available 4G LTE (Sixfab Inc., Allen, TX, USA) infrastructure. The architecture consisted of three main components: (i) UAV platform: an F450 quadcopter (DJI Da-Jiang Innovations, Shenzhen, China) integrating the Pixhawk 2.4 flight controller (3D Robotics 3DR, Berkeley, CA, USA) and Radiolink SE100 M8N GPS (Radiolink Electronic Limited, Shenzhen, China) for navigation and stabilization. (ii) Onboard processing unit: a Raspberry Pi 4B (Raspberry Pi Trading Ltd., Cambridge, UK) with 4G LTE connectivity that performs three tasks. It communicates with the flight controller and updates the telemetry parameters. It receives manual joystick inputs and outputs them as pulse width modulated (PWM) signals, which a converter module turns into pulse position modulated (PPM) signals for the flight controller. It also processes and encodes the video feed from the onboard camera. (iii) Ground control station: a cloud-hosted server on Amazon Web Services (AWS) acting as an intermediary between the UAV and the operator’s device. The server has a static IP address that allows the UAV and the operator’s devices to communicate with each other through it. Communication is maintained through persistent TCP and UDP sockets: the server collects pilot commands and forwards them to the UAV over the LTE link. Simultaneously, it receives telemetry and video streams from the onboard unit, processes them, and relays the data back to the operator for real-time visualization. The AWS server, acting as a central hub, provides secure communication, session management, and synchronization between control inputs, telemetry updates, and live video streams. As shown in Figure 1, the UAV sends data to the AWS relay through LTE while the operator uses the web interface to send commands and view telemetry data and the live video feed.

Figure 1.

System architecture for UAV control over 4G LTE via Raspberry Pi and AWS relay.

3.2. UAV Hardware Platform

An F450 quadcopter frame was selected as the test bed due to its robust structure and availability. This frame with four mounting arms offers sufficient internal space for both the flight controller and the onboard processing unit. A universal high landing skid gear was also assembled to extend the internal space, so the area under the drone was used to mount the onboard processing unit, making the whole setup experimentally effective for testing and validation.

The propulsion system consists of four A2212 1400 KV brushless DC motors (JH Global Trading, Shenzhen, China) with four 40A electronic speed controllers (ESCs) (JH Global Trading, Shenzhen, China) that control the motors with PWM signal inputs. These components were selected to enhance the thrust-to-weight ratio of the airframe while also providing stable hovering and maneuvering. During flight tests, the quadcopter was powered by a 3S 3300 mAh 40C lithium polymer (LiPo) battery, achieving an average flight time of three to five minutes. This battery was chosen for its light weight and its size, which fits into the frame alongside the other components. Power was distributed to the propulsion and other systems through a central power distribution board located within the frame itself.

A Pixhawk 2.4 flight controller managed low-level navigation and stabilization. It was paired with a Radiolink SE100 M8N GPS module for outdoor operations, providing precise data about its position and orientation. An external 8 A universal battery eliminator circuit (UBEC) maintained the output at 5.2 V for the companion computer and communication peripherals. This was crucial to prevent sensitive components from receiving excessive power.

A Raspberry Pi 4B with 8 GB of RAM served as the companion computer; it was responsible for all communication between the drone and the server. It was firmly fixed to the frame using a custom 3D-printed holder to reduce vibration. This unit managed communication with the flight controller, transmitted telemetry data, and processed/encoded video. A Quectel EC25 modem (Quectel Wireless Solutions, Shanghai, China) was connected to the Raspberry Pi via a Sixfab 4G/3G LTE Base HAT. The modem facilitated 4G connectivity through a Taoglas Maximus Ultra Wide Band Flex Antenna (Taoglas Inc., San Diego, CA, USA) covering 700 MHz to 6 GHz. After inserting a SIM card into the Sixfab Base HAT, the 4G connection was established. This configuration allowed data exchange between the UAV and the ground station over commercial LTE networks.

For imaging, a Raspberry Pi Global Shutter Camera (Raspberry Pi Trading Ltd., Cambridge, UK) with a 6 mm fixed-focus lens was fixed to the top plate of the quadcopter using a customized 3D-printed holder. The global shutter sensor was selected to reduce distortions from motion and the “jello effect” caused by frame vibrations, resulting in clearer real-time video streaming.

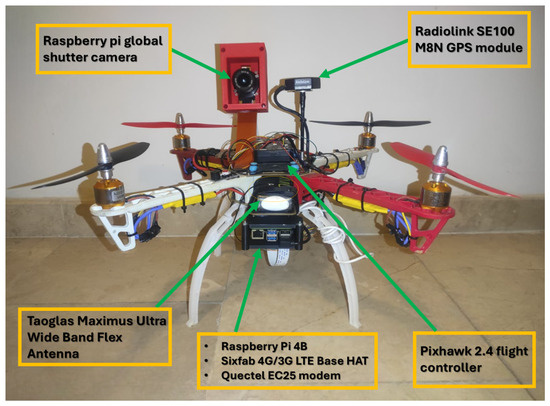

The hardware setup was designed to strike a balance between cost, the availability of off-the-shelf components, and performance requirements. The Raspberry Pi 4B’s LTE connectivity was a significant feature that enabled operation over extended distances, bypassing the range limitations of conventional short-range RF links. The UAV platform and its main components are illustrated in Figure 2.

Figure 2.

UAV platform based on the frame of the F450 quadcopter. Raspberry Pi 4B with the Sixfab 4G/3G LTE Base HAT and Quectel EC25 modem. Taoglas Maximus Ultra Wide Band Flex Antenna for LTE connectivity. A Pixhawk 2.4 flight controller handles the flight stability. A Radiolink SE100 M8N GPS module aids in navigation. A Raspberry Pi global shutter camera is used for live video stream.

3.3. Onboard Processing Unit

As previously mentioned, a Raspberry Pi 4B served as the companion computer installed on the UAV platform. We used an 8 GB model for the first setup, but tests showed that 4 GB of RAM is sufficient to execute telemetry, control, and video processing activities all at the same time. The Raspberry Pi was the main communication link between the Pixhawk flight controller, the camera, and the ground control station.

The LTE hardware described in Section 3.2 made cellular connectivity possible, enabling the Raspberry Pi to establish a reliable data connection with the ground control station hosted on AWS. The Raspberry Pi executed custom Python 3.11.2 scripts that managed three crucial jobs over this link. First, it communicated with the Pixhawk using PyMAVLink to gather telemetry data and send operator commands. Joystick commands received from the ground station were transformed into PWM signals, which were subsequently processed through a PWM-to-PPM encoder. This encoder converts the PWM signals into a single PPM stream because the Pixhawk does not receive PWM signals as an input, so using the encoder ensured compatibility with the flight controller. Second, the onboard video system supported two encoding modes: (i) JPEG compression using OpenCV, which allowed per-frame overlay of delay and frame rate information; and (ii) H.264 encoding via FFmpeg, tuned for low latency using the baseline profile, ultrafast preset, and Annex-B streaming format.
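The H.264 settings named above (baseline profile, ultrafast preset, Annex-B output) can be expressed as an FFmpeg argument list. The sketch below is an illustration only, not the scripts used in the study: the function name `build_h264_command`, the raw `bgr24` input piped from OpenCV, and the use of stdin/stdout pipes are all assumptions.

```python
def build_h264_command(width: int, height: int, fps: int) -> list[str]:
    """Return an FFmpeg argument list for low-latency H.264 encoding.

    Assumption: raw BGR frames (OpenCV's channel order) arrive on stdin,
    and the encoded Annex-B byte stream is read back from stdout.
    """
    return [
        "ffmpeg",
        "-f", "rawvideo",            # input is uncompressed video
        "-pix_fmt", "bgr24",         # assumed input pixel format
        "-s", f"{width}x{height}",   # input frame size
        "-r", str(fps),              # input frame rate
        "-i", "pipe:0",              # read frames from stdin
        "-c:v", "libx264",           # software encoder preferred in the text
        "-profile:v", "baseline",    # baseline profile: no B-frames
        "-preset", "ultrafast",      # fastest libx264 preset
        "-pix_fmt", "yuv420p",       # baseline profile requires 4:2:0
        "-f", "h264",                # raw Annex-B elementary stream
        "pipe:1",                    # write encoded bytes to stdout
    ]


# Example for the 640x480 resolution reported in the abstract.
command = build_h264_command(640, 480, 30)
```

The list can be passed to `subprocess.Popen` with `stdin` and `stdout` set to `subprocess.PIPE`; keeping the encoder in a separate process is one way to let the capture loop keep running while frames are encoded.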

Python load (capture, telemetry, control, and monitoring scripts) was approximately 50–60% CPU on the Raspberry Pi 4B, as measured with htop. For the H.264 encoder, libx264 (software, CPU-based) used 16–25% CPU, whereas h264_v4l2m2m (hardware-accelerated via the GPU/V4L2 M2M) used 8–10% CPU. Although GPU encoding reduced CPU usage, it introduced color and frame-distortion artifacts during rapid camera motion, so the software encoder (libx264) was preferred for its more stable image quality and consistent performance.

The active mode could be switched in real time through server-issued setting commands, giving the operator flexibility to balance bandwidth, latency, and video clarity depending on link quality.

Finally, to make communication more robust, automatic reconnection routines were implemented in the code to monitor socket health and restore connections during temporary LTE interruptions. A dedicated settings thread continuously synchronized the Raspberry Pi with the ground station, updating parameters like frame rate, JPEG quality, recording state, and video encoding mode. This allowed the onboard system to apply operator-selected settings during flight without restarting the onboard scripts.
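A common way to implement automatic reconnection is a retry loop with exponential backoff. The following is a minimal sketch under that assumption; the paper does not state which retry policy was used, and the function name, attempt limit, and delay values are hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_retry(
    connect: Callable[[], T],
    max_attempts: int = 5,          # hypothetical limit
    base_delay: float = 0.5,        # hypothetical first wait, in seconds
    max_delay: float = 8.0,         # hypothetical cap on the wait
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call connect() until it succeeds, doubling the wait after each failure.

    connect is any callable that returns a connected object (for example a
    socket) or raises OSError. The last OSError is re-raised once
    max_attempts is exhausted.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return connect()
        except OSError:
            if attempt == max_attempts:
                raise
            sleep(delay)
            delay = min(delay * 2, max_delay)
    raise AssertionError("unreachable")
```

In practice `connect` could wrap `socket.create_connection((host, port), timeout=...)`. Injecting `sleep` keeps the loop testable without real waiting.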

3.4. Ground Control Station

The ground control station ran as a cloud server on Amazon Web Services (AWS) with a static IP, ensuring stable access for both the UAV and operator devices. The server served as the main relay hub, ensuring persistent bidirectional communication and handling data exchange between the UAV and the pilot interface.

The link was built around two main communication protocols. TCP sockets were used for telemetry data and for updating video and system settings, as their reliability ensured that no critical information was lost. UDP carried the latency-sensitive streams (live video and joystick control), where low latency mattered more than guaranteed delivery. In practice, this meant that the operator’s inputs were transmitted with minimal delay, while telemetry updates and configuration changes arrived consistently and in order. By routing all of this traffic through the AWS server, the UAV and operator avoided direct peer-to-peer links, which not only improved security but also made the system more resilient and easier to manage. For example, through this system, the user can manage a UAV from any internet-connected device that is authorized to access the cloud server control dashboard.
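Because UDP neither retransmits nor reorders, a control channel of this kind typically carries a sequence number so the receiver can discard late datagrams instead of applying stale stick positions. The sketch below illustrates that idea; the 12-byte packet layout, the field order, and the `StaleFilter` class are assumptions and do not describe the wire format used in the study.

```python
import struct

# Hypothetical layout: uint32 sequence number followed by four uint16
# channel values in microseconds (roll, pitch, throttle, yaw), network
# byte order.
CONTROL_FORMAT = "!IHHHH"


def pack_control(seq: int, roll: int, pitch: int, throttle: int, yaw: int) -> bytes:
    """Serialize one joystick sample into a fixed-size datagram payload."""
    return struct.pack(CONTROL_FORMAT, seq, roll, pitch, throttle, yaw)


def unpack_control(payload: bytes) -> tuple[int, tuple[int, ...]]:
    """Return (sequence number, channel values) from a datagram payload."""
    seq, *channels = struct.unpack(CONTROL_FORMAT, payload)
    return seq, tuple(channels)


class StaleFilter:
    """Accept only datagrams newer than the last one applied."""

    def __init__(self) -> None:
        self.last_seq = -1

    def accept(self, seq: int) -> bool:
        if seq <= self.last_seq:
            return False  # duplicate or out-of-order packet: drop it
        self.last_seq = seq
        return True
```

Telemetry and settings do not need this guard when sent over TCP, which already delivers bytes reliably and in order.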

3.4.1. Web-Based Ground Control Application

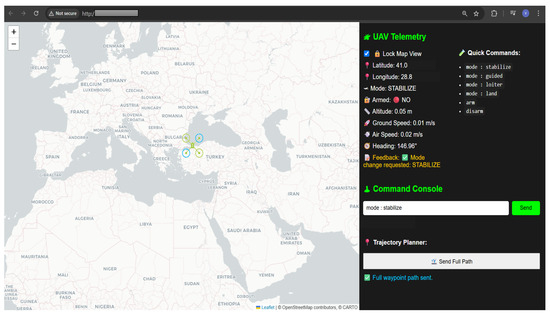

The AWS server hosted a web application that provided the operator with an integrated interface for telemetry monitoring and video streaming. The interface shown in Figure 3 presents the live video feed from the UAV in real time, along with the corresponding frame rate and frame processing delay. Operators could also adjust parameters such as frame rate, JPEG quality, encoding mode, and recording state directly through the interface, with changes instantly relayed to the UAV via TCP sockets. In addition to video streaming, a telemetry web-based dashboard, as illustrated in Figure 4, displays flight data such as GPS coordinates, altitude, heading, ground speed, and flight mode. The map view enables real-time tracking of the UAV’s position, while quick command shortcuts allow operators to switch flight modes or arm/disarm the system with a single click. A command console was included for sending MAVLink-compatible text commands, as well as a waypoint planner that supported uploading full flight paths to the UAV. This web application was platform-independent, requiring only a browser and network access, which made it possible to monitor and control flights from any authorized device connected to the internet.

Figure 3.

Web-based video streaming interface displaying live UAV feed with real-time annotations of frame rate and processing delay. Operators could adjust FPS, JPEG quality, encoding mode, and recording state directly from this dashboard.

Figure 4.

Telemetry dashboard showing UAV GPS coordinates, altitude, heading, speed, and flight mode. Includes real-time map tracking, quick command shortcuts, MAVLink console, and waypoint planner.

3.4.2. Windows-Based Joystick and H.264 Control Interface

For scenarios where both low-latency video monitoring and precise manual piloting were required, a Windows-based executable application was developed in Python and compiled with PyInstaller. Unlike the browser dashboard, which was optimized for MJPEG streaming due to its broad compatibility with web technologies, this standalone program was specifically designed to handle H.264 video decoding. H.264 provided superior compression efficiency and smoother playback at lower bitrates, but because most commercial web browsers do not have native support for H.264 streams, a dedicated desktop client was necessary.

In addition to H.264 video, the same executable integrated a joystick control module. A FlySky 6-channel transmitter connected via USB to the operator’s laptop was supported, and the program captured raw input values (throttle, yaw, pitch, and roll channels) at 50 Hz. These values were displayed on screen, along with trim adjustment and reversing options for each axis. The inputs were forwarded via UDP sockets to the AWS server, which relayed them to the UAV and ensured compatibility with the Pixhawk through the onboard PWM-to-PPM encoder.
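The per-axis trim and reversing options can be illustrated with a small mapping from a normalized joystick reading to a pulse width. This is a sketch under stated assumptions: the input is normalized to [-1, 1], the output uses the conventional 1000–2000 µs RC range, and the name `axis_to_pwm` and the order of operations (reverse, then trim, then clamp) are hypothetical rather than taken from the application described here.

```python
SEND_RATE_HZ = 50                    # sampling rate stated in the text
SEND_PERIOD_S = 1.0 / SEND_RATE_HZ   # 0.02 s between samples


def axis_to_pwm(
    value: float,
    trim: float = 0.0,
    reverse: bool = False,
    pwm_min: int = 1000,             # assumed lower pulse width, in µs
    pwm_max: int = 2000,             # assumed upper pulse width, in µs
) -> int:
    """Map a normalized axis reading in [-1, 1] to a pulse width in µs."""
    if reverse:
        value = -value
    # Apply trim, then clamp so the output never leaves the valid range.
    value = max(-1.0, min(1.0, value + trim))
    center = (pwm_min + pwm_max) / 2
    half_range = (pwm_max - pwm_min) / 2
    return round(center + value * half_range)
```

For example, a centered stick maps to 1500 µs, and a half deflection on a reversed axis maps to 1250 µs.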

By combining H.264 video decoding with low-latency joystick input, this Windows application complemented the browser-based dashboard. Operators could choose between the lightweight web interface (MJPEG stream + telemetry) for general monitoring, or the Windows executable for higher-efficiency video streaming and precise manual flight control when required.

3.5. Failsafe Configuration and Link-Loss Handling

Failsafes. The Pixhawk flight controller supports built-in failsafes (lost-link, low-battery, geofence/altitude). In all tests, these were enabled: lost-link action set to Return-to-Launch (RTL) or Land (per test), and the low-battery threshold was set to .

Application-layer behavior (manual control). The companion computer continuously outputs PWM to the Pixhawk. If the connection to the AWS/operator endpoint is lost, the application immediately neutralizes all four channels (roll, pitch, yaw, throttle ) for while the vehicle holds its altitude and position in Loiter mode. If the link does not recover within that window, the application stops PWM output, allowing the Pixhawk’s failsafe to take over (RTL or Land).

Application-layer behavior (telemetry). The companion also monitors the telemetry/command link to the AWS/operator. If this link remains unavailable for , and the local connection to the Pixhawk is intact, the companion commands a mode change from Guided to RTL.
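The application-layer behavior for the manual control link can be summarized as a three-state decision. The sketch below is illustrative only: the function and enum names are hypothetical, and both timing parameters must be supplied by the caller because no specific values are assumed here.

```python
from enum import Enum


class LinkAction(Enum):
    PASS_THROUGH = "forward operator input"
    NEUTRALIZE = "output neutral sticks while the vehicle loiters"
    STOP_OUTPUT = "stop PWM so the Pixhawk failsafe (RTL or Land) takes over"


def control_link_action(
    seconds_since_last_packet: float,
    loss_threshold_s: float,   # silence after which the link counts as lost
    hold_window_s: float,      # how long neutral output is held after loss
) -> LinkAction:
    """Decide what the companion computer should output on the PWM channels."""
    if seconds_since_last_packet < loss_threshold_s:
        return LinkAction.PASS_THROUGH
    if seconds_since_last_packet < loss_threshold_s + hold_window_s:
        return LinkAction.NEUTRALIZE
    return LinkAction.STOP_OUTPUT
```

Stopping the PWM output, rather than commanding a landing from the companion computer, hands the final decision to the flight controller's own failsafe, which keeps working even if the companion computer itself fails.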

4. Results

4.1. Flight Test Setup

Flight tests were conducted in an open outdoor field located in Istanbul, Turkey, which was partially surrounded by buildings. The site was not selected to minimize obstacles, meaning that the UAV operated under realistic LTE coverage conditions.

The F450 test platform, powered by a 3S 3300 mAh 40C LiPo battery, provided an endurance of approximately 7 min. In practice, flight sessions were limited to 4–5 min per trial to maintain safe margins for recovery and analysis. The maximum flight altitude reached during testing was 50 m, while the average operating altitudes ranged from 7 to 10 m. This altitude range is not optimal, but government restrictions and permit requirements made it difficult to fly the drone higher. Lateral displacement of the UAV from the operator remained minimal; however, the control chain included an intercontinental round-trip link of approximately 4200 km (operator laptop in Istanbul → AWS server in Frankfurt → UAV in Istanbul).

Network connectivity was provided via a Turkcell LTE SIM card installed in the onboard modem. Measured bandwidth for the selected hardware ranged from 3 Mbps to 55 Mbps, with the upper limit consistently peaking near 55 Mbps. End-to-end latency, measured as internet control message protocol (ICMP) ping times between the UAV and the AWS server, averaged between 68 ms and 92 ms. Tests were repeated under both strong and weak LTE coverage to evaluate performance across realistic conditions. The low measured latency was partly due to the server operating under light traffic load, and the relatively short geographic distance also contributed to reduced delay.

The ground control infrastructure was hosted on an AWS instance in Frankfurt, Germany, with a static public IP address ensuring stable connectivity. The operator accessed the system from Istanbul exclusively, using a browser-based interface for telemetry and MJPEG-based video streaming, while a separate Windows executable handled H.264 video playback and low-latency joystick control. This configuration allowed analysis of performance trade-offs between web-based monitoring and dedicated executable applications.

4.2. Control Latency Results

Control latency was experimentally quantified by directly comparing short-range RC transmission against the 4G LTE communication chain. A FlySky 6-channel transmitter was simultaneously connected to (i) the UAV’s RC receiver over a standard 2.4 GHz link at a distance of 1–2 m, and (ii) the operator’s laptop via USB, where the same input values were forwarded to the UAV through the 4G pipeline (operator laptop → AWS server in Frankfurt → Raspberry Pi onboard the UAV in Istanbul).

The PWM outputs of both the RC receiver and the Raspberry Pi (through the PWM-to-PPM encoder) were fed into an Arduino Nano, which measured the temporal offset between the two signals. This approach enabled precise quantification of the delay introduced by the 4G system relative to a near-zero-latency RC baseline.

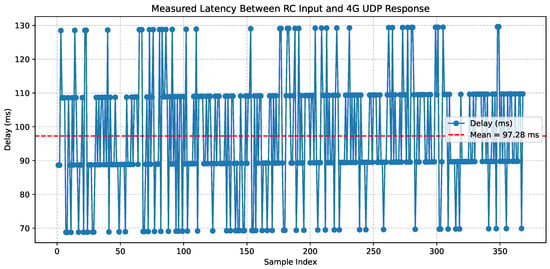

The statistical results, based on 368 samples, are summarized in Table 1. The measured mean delay was approximately 97 ms, with jitter (standard deviation) of 17.4 ms. Minimum delays were as low as 68 ms, while maximum peaks reached 129 ms.

Table 1.

Measured control latency between short-range RC and 4G LTE communication paths.

A few discrete peaks around 70, 90, 110, and 130 ms in Figure 5 arise from the LTE network’s fixed scheduling intervals and queuing in the LTE–AWS path: small additional delays push packets into the next 10–20 ms slot, so the latency appears in steps rather than as a perfectly smooth curve. This clustering is, therefore, an expected property of LTE-based links.

Figure 5.

Measured latency between RC and 4G inputs.

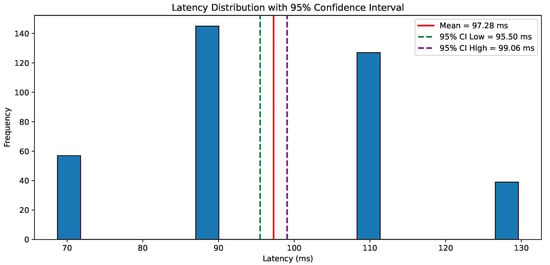

The distribution of the measured latency values is illustrated in Figure 6, which includes the 95% confidence interval to highlight the stability of the control channel.

Figure 6.

Latency distribution of 368 samples, showing the mean (97.3 ms) and indicating the 95% confidence interval (95.5–99.1 ms).

Our measured one-way control latency was 97.3 ms (95% CI 95.5–99.1 ms), which is comparable to LTE field measurements of 94 ms reported in [27] and 100 ms one-way interpreted from 200 ms round-trip LTE C2 links [42], and below the 160–170 ms joystick-to-actuator latency observed in a remote test-pilot setup [43].
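The reported confidence interval is consistent with the summary statistics given above. As a check, and assuming the interval was computed with the normal approximation (z = 1.96), which is not stated explicitly, the half-width is z·s/√n:

```python
import math


def mean_confidence_interval(
    mean: float, std: float, n: int, z: float = 1.96
) -> tuple[float, float]:
    """Return the (lower, upper) bounds of a normal-approximation CI for the mean."""
    half_width = z * std / math.sqrt(n)
    return mean - half_width, mean + half_width


# Summary statistics reported for the control latency measurements.
low, high = mean_confidence_interval(mean=97.3, std=17.4, n=368)
print(f"{low:.1f} to {high:.1f} ms")  # prints "95.5 to 99.1 ms"
```

The interval is narrow because it describes the uncertainty of the mean over 368 samples. Individual packets still vary by the full jitter of 17.4 ms, so the interval should not be read as a bound on per-packet delay.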

The 368 latency samples were collected across four field sessions on three different days at open-field test sites in Istanbul (see Section 5.5 for a discussion of network variability limits).

The results confirm that the UAV could be manually piloted in real time through the LTE link, with delays remaining within acceptable limits for stable flight.

4.3. Video Streaming Results

Two encoding modes were tested for transmitting live video from the UAV: Motion JPEG (MJPEG) using OpenCV, and H.264 using FFmpeg. Both modes were dynamically switchable during flight through server-issued settings commands, allowing the operator to balance latency, frame quality, and bandwidth efficiency.

The MJPEG stream achieved frame rates up to 50 FPS, with an average of about 30 FPS under stable LTE conditions. At this frame rate, the observed video quality averaged around 70 (on a 0–100 scale) and could peak at 85 in favorable lighting conditions. Individual MJPEG frames were typically 25–30 KB in size, particularly when the scene contained high detail or strong lighting. Processing time for each frame was approximately 7.5 ms, and the total end-to-end latency stayed below 300 ms. Although MJPEG required more bandwidth, it was more reliable and provided a near-real-time experience, which made it the better choice for manual piloting and for streaming to a web browser.

On the other hand, the H.264 encoder was limited to 30 FPS by the Raspberry Pi’s processing capability. Compression efficiency was significantly higher, about 50% better than the JPEG compression used in the MJPEG stream, with average frame sizes between 15 and 18 KB. At equivalent bitrates, H.264 produced clearer and sharper video than MJPEG; however, it introduced 0.7–0.8 s of additional delay due to encoding and buffering. This made it less suitable for instant pilot feedback, but more suitable for scenarios requiring higher clarity or lower bandwidth consumption, such as post-flight analysis or operation under constrained network conditions.
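The frame sizes and frame rates reported above imply rough stream bitrates. The following sketch does that arithmetic under stated assumptions: 1 KB is taken as 1000 bytes, every frame is assumed to have the same size, and protocol overhead is ignored. The function name is illustrative.

```python
def stream_bitrate_mbps(frame_kb, fps, bytes_per_kb=1000):
    """Approximate bitrate in Mbit/s for a stream of equally sized frames."""
    return frame_kb * bytes_per_kb * 8 * fps / 1e6

# MJPEG: 25-30 KB per frame at the average 30 FPS.
mjpeg = (stream_bitrate_mbps(25, 30), stream_bitrate_mbps(30, 30))
# H.264: 15-18 KB per frame at its 30 FPS limit.
h264 = (stream_bitrate_mbps(15, 30), stream_bitrate_mbps(18, 30))

print(f"MJPEG: {mjpeg[0]:.1f}-{mjpeg[1]:.1f} Mbit/s")  # -> MJPEG: 6.0-7.2 Mbit/s
print(f"H.264: {h264[0]:.1f}-{h264[1]:.1f} Mbit/s")    # -> H.264: 3.6-4.3 Mbit/s
```

These are order-of-magnitude estimates only; real streams vary frame by frame, and lowering the frame rate or JPEG quality reduces the figures proportionally.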

Table 2 summarizes the comparison between both streaming modes.

Table 2.

Comparison of video streaming modes tested on the UAV.

4.4. Telemetry Reliability Results

Telemetry performance was evaluated in parallel with video and control testing. The telemetry stream included GPS coordinates, altitude, heading, ground speed, and flight mode, and was visualized on the web-based dashboard in both numerical and map views, as shown previously in Figure 4. In addition to monitoring, the interface allowed operators to issue high-level commands such as arming/disarming, changing flight modes, initiating takeoff/landing, directional maneuvers, and uploading waypoints by selecting positions directly on the map.

Telemetry was transmitted at 1–2 Hz by design. These state variables (GPS, altitude, heading, flight mode) vary slowly and are used for situational awareness and command acknowledgment, so a 1–2 Hz update rate avoids congestion and added delay under weak or fluctuating coverage. Real-time responsiveness was provided by the 50 Hz UDP joystick channel together with the low-latency video feed.

Reliability tests showed that telemetry over TCP was generally stable, with all messages eventually delivered even under fluctuating LTE coverage. While the update flow was not perfectly smooth, occasional delays or jitter did not compromise overall usability, as telemetry exchange is more tolerant of minor latency than manual piloting inputs. Under weak LTE conditions, the update rate decreased and acknowledgments were delayed, but system performance remained acceptable: one command arriving reliably was sufficient to trigger UAV behavior (e.g., a waypoint update or flight mode change).

Table 3 summarizes the relative robustness of telemetry compared to manual joystick control.

Table 3.

Comparison between telemetry and manual joystick communication over LTE.

4.5. System Robustness and Limitations

The system exhibited clear operating characteristics that varied with network conditions. In cases of weak or unstable LTE coverage, telemetry exchange over TCP remained dependable: commands such as arming, disarming, changing flight modes, or uploading waypoints were reliably delivered, even if delays were apparent. This robustness ensured that the UAV could always be efficiently commanded, making autonomous, semi-autonomous, and waypoint-based flights possible regardless of LTE link quality.

Manual piloting in real time through the joystick interface was straightforward when LTE connectivity was good. Command transmission over UDP at 50 Hz provided responsive control with delays consistently under 150 ms, showing that the UAV could be flown smoothly over extended distances when bandwidth and latency were favorable.

This flexibility showed the strength of the architecture: the operator could rely on telemetry-driven commands in difficult network situations and switch to manual piloting when the connection allowed it. The main problems were the higher latency of the H.264 video stream, which made it less useful for live piloting, and the short flight time (4–5 min with the 3S 3300 mAh battery). We chose the F450 (DJI-class) airframe because it is a low-cost, well-documented platform widely used for hobby and R&D work. The battery was selected to match the frame’s electrical limits and our added onboard electronics (Raspberry Pi 4 with LTE HAT and modem), while fitting the available space and keeping the mass centered so that all four motors shared the load evenly.

This study focused on demonstrating reliable long-range 4G control and measuring latency/robustness, not on payload delivery or maximum endurance. Individual test flights were, therefore, kept to 4–5 min to gather data and retain a safe landing reserve; overall testing time was mainly constrained by battery recharging between flights, not by an inability to fly longer in a single run, as 4–5 min was enough to obtain the required data for the analysis. For industrial missions, there are multirotor and hybrid platforms purpose-built for long endurance (larger energy stores, more efficient propulsion, or hybrid powertrains) [44].

Finally, the communication stack (Raspberry Pi 4 + LTE HAT) consumes relatively little power compared with the brushless motors, so flight time is governed primarily by propulsive power rather than networking. Overall, the system worked well across a range of LTE coverage conditions, suggesting that it can support both autonomous and manual UAV operations over commercial cellular networks, although this also depends on the quality of service available in each country.

4.6. Failsafe Observations and Timings

In some flight sessions we observed transient LTE dropouts and, on one occasion, a persistent loss of the AWS/operator link. Transient events were absorbed by the 10 s neutral–PWM window without loss of control; the persistent loss triggered the Pixhawk failsafe as configured (RTL or Land). No uncontrolled states were observed. Root-cause analysis indicated that the persistent loss resulted from an improperly seated USB cable feeding the Sixfab base HAT, which briefly powered off the LTE modem; the connector was remounted and secured, after which no further persistent losses occurred. Failsafe behavior (neutral PWM → PWM halt → RTL/Land) was also validated in bench tests (props off) by intentionally disabling the link. All observed link conditions and system responses are given in Table 4.

Table 4.

Observed link conditions and system response.

Because manual commands were transported over UDP (not delivery-guaranteed), occasional packet drops did not affect handling: at a 50 Hz command rate, even when there were short periods of loss, there were still several valid updates every second, and the vehicle continued to track the most recently received command. Under heavy traffic, packets continued to arrive but with noticeable delay (≳1 s); in those cases the pilot either switched to telemetry (Guided mode) monitoring or explicitly commanded a safe flight mode (RTL/Land). This behavior follows the intended chain: (i) tolerate brief loss with smooth control, (ii) degrade gracefully under delay, and (iii) failsafe on sustained outage.
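The three-stage chain above can be sketched as a small decision function on the companion computer. This is a minimal illustration, not the authors' implementation: the 10 s neutral-PWM window comes from the text, while `HOLD_LAST_S`, the enum names, and the choice to measure both thresholds from the last received packet are assumptions.

```python
from enum import Enum

class LinkAction(Enum):
    TRACK_LAST_COMMAND = "track_last_command"  # brief loss: keep flying the last sticks
    NEUTRAL_PWM = "neutral_pwm"                # longer gap: send neutral stick values
    HALT_PWM = "halt_pwm"                      # sustained outage: stop overriding so the
                                               # flight controller failsafe (RTL/Land) acts

HOLD_LAST_S = 0.5        # hypothetical: how long the last command is still trusted
NEUTRAL_WINDOW_S = 10.0  # from the text: transient dropouts absorbed for 10 s

def link_action(seconds_since_last_packet):
    """Choose the companion-computer behavior from the age of the last valid packet."""
    if seconds_since_last_packet < HOLD_LAST_S:
        return LinkAction.TRACK_LAST_COMMAND
    if seconds_since_last_packet < NEUTRAL_WINDOW_S:
        return LinkAction.NEUTRAL_PWM
    return LinkAction.HALT_PWM

# At 50 Hz a packet is expected every 20 ms, so a few lost packets stay in stage one.
print(link_action(0.06).value)  # -> track_last_command
print(link_action(3.0).value)   # -> neutral_pwm
print(link_action(12.0).value)  # -> halt_pwm
```

Keeping the decision in one pure function makes the thresholds easy to bench-test with props off before any flight.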

5. Discussion

This work demonstrates that a low-cost companion computer (Raspberry Pi 4B) coupled to a Pixhawk 2.4 and a commercial 4G LTE modem can sustain real-time remote operation of a multirotor. Across multiple flights, the end-to-end control delay stayed below ≈150 ms, telemetry remained reliable over TCP even under fluctuating coverage, and video streaming at 640 × 480 was maintained while the available LTE bandwidth varied between 3 Mbps and 55 Mbps. A control chain spanning ∼4200 km (operator–server–UAV) was achievable without specialized infrastructure at the operator site. Below, we discuss what these results imply, how they compare with related studies, and where the approach is most useful.

5.1. Interpretation of Key Findings

The measured mean control delay of 97.3 ± 17.4 ms is within the range typically cited as acceptable for manual piloting of small multirotors performing non-aggressive maneuvers. In practice, pilots perceived the joystick loop as responsive when LTE was stable. When coverage degraded, which happened rarely and only in areas outside or at the edge of LTE coverage, the UDP command stream exhibited jitter, while the TCP telemetry stream continued to deliver reliable (if bursty) updates. Telemetry tolerates such conditions because the flight controller needs only one successfully delivered command to act and take full control of the UAV. The contrast between MJPEG and H.264 confirmed a classic trade-off: MJPEG provided a “snappier” feel at the cost of bandwidth, whereas H.264 offered better visual quality and lower bitrate at the cost of ∼0.7–0.8 s extra buffering/encoding delay, which makes it less suitable for closed-loop piloting but more attractive for monitoring and recording.

5.2. Comparison with Prior Work

Extensive surveys report the breadth of UAV applications in agriculture, environmental monitoring, and civil infrastructure. Our results align with the growing body of work that explores using public cellular networks for beyond-visual-line-of-sight (BVLOS) telemetry and video, where practical feasibility hinges on latency stability and coverage variability rather than peak throughput. In line with these observations, we found that mission effectiveness depended more on jitter and short outages than on average bandwidth. Designing the link so that safety-critical functions (arming/disarming, mode changes, RTL) ride on reliable channels (TCP with acknowledgments), while time-critical but loss-tolerant streams (video, stick inputs) use UDP, provided a robust compromise. Table 5 compares short-range RC links and 4G/LTE IP links.

Table 5.

Comparison of short-range RC links and 4G/LTE IP links for UAV command and video.

5.3. Implications for System Design

Three design lessons emerged:

- Protocol separation improves robustness. Running telemetry/settings over TCP and piloting/video over UDP allowed the system to remain controllable when the network became bursty; a single successful TCP command was sufficient to switch modes or trigger failsafe actions, even if multiple UDP packets were dropped.

- Adaptive video is essential. Allowing the operator to switch at runtime between MJPEG and H.264, and to tune FPS and JPEG quality, helped match the stream to current link conditions. For web delivery and manual piloting, MJPEG at 20–30 FPS was generally preferable; for bandwidth-limited links or documentation, H.264 was superior.

- Resilience requires automated recovery. A lightweight supervisory Python script continuously monitored link health and the state of the control, telemetry, and video services. It automatically restarted affected processes and re-established sockets when heartbeats disappeared or I/O stopped, which kept the UAV under control during unexpected LTE disruptions and software faults. This autonomic recovery layer was very important for flight safety: it reduced pilot workload, prevented the core scripts from failing completely, and turned short network dropouts into recoverable glitches instead of mission-ending events. Startup scripts were equally important, so that the core scripts launch as soon as the system is powered on, without the pilot having to start them manually.
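The supervisory idea in the third lesson can be sketched as a heartbeat tracker that restarts any service that goes silent. This is a hedged illustration rather than the authors' script: the class name, the 3 s timeout, and the injected `restart` callback are all assumptions.

```python
import time

class ServiceSupervisor:
    """Tracks per-service heartbeats and restarts services that go silent."""

    def __init__(self, restart, timeout_s=3.0, clock=time.monotonic):
        self._restart = restart      # callable that takes a service name
        self._timeout_s = timeout_s  # hypothetical heartbeat timeout
        self._clock = clock          # injectable clock, useful for testing
        self._last_seen = {}

    def heartbeat(self, service):
        """Record that a service reported in just now."""
        self._last_seen[service] = self._clock()

    def check(self):
        """Restart every service whose last heartbeat is too old."""
        now = self._clock()
        restarted = []
        for service, seen in list(self._last_seen.items()):
            if now - seen > self._timeout_s:
                self._restart(service)
                self._last_seen[service] = now  # fresh window after the restart
                restarted.append(service)
        return restarted

# Usage with a fake clock: the video service stops reporting and gets restarted.
fake_now = [0.0]
restarts = []
supervisor = ServiceSupervisor(restarts.append, clock=lambda: fake_now[0])
supervisor.heartbeat("control")
supervisor.heartbeat("video")
fake_now[0] = 2.0
supervisor.heartbeat("control")
fake_now[0] = 4.0
print(supervisor.check())  # -> ['video']
```

On a real companion computer, the `restart` callback might relaunch a process or a system service; that wiring depends on how the scripts are deployed and is left out here.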

5.4. Operational Envelope and Use Cases

Given the observed delays, the system is well suited to the following: (i) supervised autonomy where the vehicle executes waypoints while the operator monitors and can intervene; (ii) low-speed inspection, mapping, and agricultural scouting where precise stick timing is less critical; and (iii) training, testing, and R&D scenarios that benefit from anywhere-to-anywhere access via a relay server. A further benefit is that a UAV can be launched and controlled from a single operator device using the telemetry control feature. In a package delivery application, for example, a command can be sent to the drone to fly to a given waypoint, drop a package, and return to the home location.

5.5. Limitations and Threats to Validity

The main limitations are the following: (i) Endurance—the test airframe’s 3S 3300 mAh battery yielded ∼4–7 min flights; larger packs or higher-efficiency propulsion will be needed for practical missions. (ii) Coverage and network variability—our results reflect the specific operator, frequency bands, and backhaul used in Istanbul, which ranks 91st of 151 cities in the Speedtest Global Index—Mobile [45]. Prior measurements on live LTE networks show that latency and data speed can vary widely by city and operator because of cell load, band assignment, and backhaul design [46]. Therefore, regions with stronger networks or lighter load may see lower control-loop latency, while heavier traffic or weaker infrastructure can lead to higher delay and jitter. Future work will replicate the tests across multiple regions to quantify this variability. (iii) Video pipeline latency—the current H.264 path added ∼0.7–0.8 s, driven by software encoding and buffering. (iv) Regulatory constraints—the experiments were conducted at low altitude with local restrictions; BVLOS operations on public networks remain subject to national regulations, spectrum rules, and specific authorizations.

5.6. Safety, Security, and Regulatory Considerations

Using the public internet raises issues with safety and cybersecurity. We recommend (i) encrypting and authenticating all control and telemetry channels at the transport layer; (ii) using application-level heartbeats and explicit link-loss handling that triggers autonomous return-to-launch (RTL); (iii) enforcing geofencing and altitude/keep-out limits on the flight controller; and (iv) logging operator actions for post-flight review. From a regulatory point of view, the architecture provides risk protections that civil aviation authorities typically require, such as lost-link behavior, logging, and remote identification [47,48,49]. These features align with international frameworks for beyond-visual-line-of-sight (BVLOS) and unmanned traffic management (UTM), which emphasize reliable communication, remote ID, and data traceability [50].

In practical terms, the proposed 4G LTE control architecture satisfies several foundational elements of BVLOS compliance: the system maintains continuous telemetry exchange and server-side logging which enable traceability in accordance with ASTM F3411 tracking requirements; the automatic return-to-launch (RTL) and geofencing mechanisms correspond to lost-link and containment criteria defined by EASA and FAA guidance; and time-stamped command and telemetry records provide auditability consistent with U-Space service levels U2–U3.

To progress toward formal certification, the architecture could interface with a UTM network through API-based remote ID broadcasting and airspace authorization services, allowing real-time coordination and deconfliction in shared airspace. However, BVLOS approvals will still depend on the specific concept of operation and airspace classification defined by each national authority.

For example, in Turkey, UAV operations are controlled by the Directorate General of Civil Aviation (SHGM) through the İHA Kayıt Sistemi (UAV Registration System), which requires that each UAV is registered with technical specifications such as maximum take-off weight, battery capacity, communication link type, GPS, IMU, and FPV camera capability, before flight authorization is given [51]. This allows traceability and operational accountability aligned with national and international UAV safety standards.

5.7. Practical Guidance for Replication

For practitioners building a similar stack, we suggest the following: (i) select LTE antennas and modem firmware matched to local bands; (ii) pin CPU clock on the Raspberry Pi to reduce encoder jitter; (iii) timestamp video frames and stick packets at the source to measure per-hop delays; and (iv) configure independent failsafes—geofence plus auto–RTL/land on link loss—and, during tests, add link redundancy (dual SIM/carriers) for control continuity.
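Suggestion (iii) can be sketched with a fixed binary layout that carries a sequence number and a send timestamp alongside the stick channels. The wire format below is hypothetical (it is not the packet format used in this study), and a meaningful one-way delay requires the sender and receiver clocks to be synchronized, for example via NTP or GPS time.

```python
import struct
import time

# Hypothetical layout, network byte order: uint32 sequence number,
# uint64 send time in nanoseconds, four uint16 stick channels (PWM microseconds).
STICK_PACKET = struct.Struct("!IQ4H")

def pack_stick(seq, channels, now_ns=None):
    """Serialize one stick sample, stamping it with the send time."""
    sent_ns = time.time_ns() if now_ns is None else now_ns
    return STICK_PACKET.pack(seq, sent_ns, *channels)

def unpack_stick(payload, now_ns=None):
    """Return (sequence, channels, one-way delay in ms) for a received packet."""
    seq, sent_ns, *channels = STICK_PACKET.unpack(payload)
    recv_ns = time.time_ns() if now_ns is None else now_ns
    delay_ms = (recv_ns - sent_ns) / 1e6
    return seq, channels, delay_ms

# Example with explicit timestamps so the result is deterministic.
payload = pack_stick(7, [1500, 1500, 1000, 1500], now_ns=1_000_000_000)
seq, channels, delay_ms = unpack_stick(payload, now_ns=1_097_000_000)
print(len(payload), seq, channels, delay_ms)
# -> 20 7 [1500, 1500, 1000, 1500] 97.0
```

The sequence number also lets the receiver discard stale or reordered UDP packets, and stamping at each hop (operator, relay server, UAV) would break the total delay down per segment.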

5.8. Future Work

Future extensions include the following: (i) replacing software H.264 with hardware-accelerated encoding on a more capable SoC/encoder and migrating to H.265/HEVC for greater compression efficiency—HEVC typically yields ∼40–50% bitrate reduction at comparable quality—while noting that the current platform cannot sustain real-time HEVC; (ii) 5G evaluation with network slicing, uplink QoS, and carrier aggregation to study latency/jitter improvements over LTE; (iii) redundant communication (LTE + secondary carrier or satellite) with seamless handover; and (iv) formal flight-test campaigns across times of day, cells, and loads to statistically model outage distributions and quantify risk for BVLOS safety cases.

6. Conclusions

This work demonstrates that a commercially available 4G LTE network can support real-time, long-range control of a multirotor UAV using an inexpensive companion computer (Raspberry Pi 4B), a Pixhawk 2.4 flight controller, and a cloud relay (AWS). Across repeated outdoor trials, the end-to-end control delay remained suitable for manual piloting (mean ≈ 97 ms; consistently < 150 ms), while browser-based MJPEG streaming delivered ∼30 fps with <300 ms video latency. H.264 provided higher visual clarity at lower bitrates but introduced a 0.7–0.8 s delay on the tested platform. Telemetry over TCP was reliable even under fluctuating LTE coverage, enabling autonomous and waypoint-based operation when continuous low-latency manual control was not available. The architecture demonstrated the viability of LTE for BVLOS flights without specialized infrastructure by maintaining a control chain of ∼4200 km along the path operator device (Istanbul) → server (Frankfurt) → UAV (Istanbul).

The main limitations observed were short endurance with the test battery (4–5 min flight windows within a 7 min envelope), bandwidth variability inherent to public cellular networks, and the latency of software-based H.264 for live piloting. Nevertheless, the system’s resilience benefited from supervisory process management on the companion computer, which automatically detected faults and restarted critical services, reducing pilot workload and accelerating recovery after transient link disruptions.

Implications. Off-the-shelf LTE is a viable, low-cost backhaul for BVLOS UAS when paired with (i) UDP for time-critical control/video, (ii) TCP for guaranteed-delivery telemetry/configuration, and (iii) onboard supervision to maintain service continuity. This lowers barriers to scalable remote operations in applications such as inspection, monitoring, and precision agriculture.

Operational recommendations. For those who are seeking to replicate or scale this approach, we suggest the following: (i) separate transport for time-critical versus reliable traffic (UDP for sticks/video; TCP for telemetry/configuration); (ii) always-on process supervision on the companion computer to restart failed services and preserve session state; (iii) careful encoder choice matched to mission needs (MJPEG for ultra-low interaction delay in browsers; H.264/H.265 for efficiency when added latency is acceptable); (iv) conservative battery reserves and per-phase energy logging to manage short endurance envelopes; and (v) layered failsafes (geofences, autonomous RTL, and lost-link timers) aligned with applicable regulations.

Overall, the results validate cellular connectivity as a practical path to safe, reliable long-range UAV operations and provide a baseline against which emerging 5G/6G and hybrid satellite–cellular solutions can be compared.

Author Contributions

Conceptualization, M.A.M.M. and Y.O.; methodology, M.A.M.M. and Y.O.; software, M.A.M.M.; validation, M.A.M.M. and Y.O.; formal analysis, M.A.M.M.; investigation, M.A.M.M.; resources, M.A.M.M. and Y.O.; data curation, M.A.M.M.; writing—original draft preparation, M.A.M.M.; writing—review and editing, M.A.M.M. and Y.O.; visualization, M.A.M.M.; supervision, Y.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to express their sincere appreciation to those who provided assistance throughout the course of this research.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| 3GPP | Third-Generation Partnership Project |

| AWS | Amazon Web Services |

| BVLOS | Beyond Visual Line of Sight |

| ESC | Electronic Speed Controller |

| FPS | Frames per Second |

| GPRS | General Packet Radio Service |

| GPS | Global Positioning System |

| HAT | Hardware Attached Top |

| ICMP | Internet Control Message Protocol |

| IP | Internet Protocol |

| LiPo | Lithium Polymer |

| LTE | Long-Term Evolution |

| PPM | Pulse Position Modulation |

| PWM | Pulse Width Modulation |

| RC | Radio Control |

| RF | Radio Frequency |

| RTL | Return to Launch |

| TCP | Transmission Control Protocol |

| UAV | Unmanned Aerial Vehicle |

| UBEC | Universal Battery Eliminator Circuit |

| UDP | User Datagram Protocol |

| VPN | Virtual Private Network |

References

- Rejeb, A.; Abdollahi, A.; Rejeb, K.; Treiblmaier, H. Drones in agriculture: A review and bibliometric analysis. Comput. Electron. Agric. 2022, 198, 107017. [Google Scholar] [CrossRef]

- Phang, S.K.; Chiang, T.H.A.; Happonen, A.; Chang, M.M.L. From satellite to UAV-based remote sensing: A review on precision agriculture. IEEE Access 2023, 11, 127057–127076. [Google Scholar] [CrossRef]

- Yun, W.J.; Park, S.; Kim, J.; Shin, M.; Jung, S.; Mohaisen, D.A.; Kim, J.H. Cooperative multiagent deep reinforcement learning for reliable surveillance via autonomous multi-UAV control. IEEE Trans. Ind. Inform. 2022, 18, 7086–7096. [Google Scholar] [CrossRef]

- Fang, Z.; Savkin, A.V. Strategies for optimized uav surveillance in various tasks and scenarios: A review. Drones 2024, 8, 193. [Google Scholar] [CrossRef]

- Daponte, P.; Paladi, F. Monitoring and Protection of Critical Infrastructure by Unmanned Systems; IOS Press: Amsterdam, The Netherlands, 2023; Volume 63. [Google Scholar]

- Munawar, H.S.; Ullah, F.; Heravi, A.; Thaheem, M.J.; Maqsoom, A. Inspecting Buildings Using Drones and Computer Vision: A Machine Learning Approach to Detect Cracks and Damages. Drones 2021, 6, 5. [Google Scholar] [CrossRef]

- Khan, M.A.; Menouar, H.; Eldeeb, A.; Abu-Dayya, A.; Salim, F.D. On the Detection of Unauthorized Drones—Techniques and Future Perspectives: A Review. IEEE Sens. J. 2022, 22, 11439–11455. [Google Scholar] [CrossRef]

- Rasheed, I.; Asif, M.; Ihsan, A.; Khan, W.U.; Ahmed, M.; Rabie, K.M. LSTM-Based Distributed Conditional Generative Adversarial Network for Data-Driven 5g-Enabled Maritime UAV Communications. IEEE Trans. Intell. Transp. Syst. 2023, 24, 2431–2446. [Google Scholar] [CrossRef]

- Homayouni, S.; Paier, M.; Benischek, C.; Pernjak, G.; Leinwather, M.; Reichelt, M.; Fuchsjäger, C. On the feasibility of cellular-connected drones in existing 4G/5G networks: Field trials. In Proceedings of the 2021 IEEE 4th 5G World Forum (5GWF), Montreal, QC, Canada, 13–15 October 2021; pp. 287–292. [Google Scholar]

- Zeng, Y.; Zhang, R.; Lim, T.J. Wireless communications with unmanned aerial vehicles: Opportunities and challenges. IEEE Commun. Mag. 2016, 54, 36–42. [Google Scholar] [CrossRef]

- Jawad, A.M.; Abu-AlShaeer, M.J. Revolutionizing Communication: How 5G, UAVs, and Cloud Technologies Are Shaping the New Telecommunications Landscape. Pidvodni Tehnol. 2024, 1, 75–84. [Google Scholar] [CrossRef]

- Wzorek, M.; Landen, D.; Doherty, P. GSM technology as a communication media for an autonomous unmanned aerial vehicle. In Proceedings of the 21st Bristol International Conference on UAV Systems, Bristol, UK, 12 May 2006; pp. 1–15. [Google Scholar]

- Chen, L.; Huang, Z.; Liu, Z.; Liu, D.; Huang, X. 4G network for air-ground data transmission: A drone based experiment. In Proceedings of the 2018 IEEE International Conference on Industrial Internet (ICII), Seattle, WA, USA, 21–23 October 2018; pp. 167–168. [Google Scholar]

- Burke, P.J. A Safe, Open Source, 4G Connected Self-Flying Plane With 1 Hour Flight Time and All Up Weight (AUW) <300 g: Towards a New Class of Internet Enabled UAVs. IEEE Access 2019, 7, 67833–67855. [Google Scholar] [CrossRef]

- Zhang, Y.; Yuan, Z. Cloud-based UAV data delivery over 4G network. In Proceedings of the 2017 Tenth International Conference on Mobile Computing and Ubiquitous Network (ICMU), Toyama, Japan, 3–5 October 2017; pp. 1–2. [Google Scholar]

- Guirado, R.; Padro, J.C.; Zoroa, A.; Olivert, J.; Bukva, A.; Cavestany, P. StratoTrans: Unmanned Aerial System (UAS) 4G Communication Framework Applied on the Monitoring of Road Traffic and Linear Infrastructure. Drones 2021, 5, 10. [Google Scholar] [CrossRef]

- Pons, M.; Valenzuela, E.; Rodríguez, B.; Nolazco-Flores, J.A.; Del-Valle-Soto, C. Utilization of 5G Technologies in IoT Applications: Current Limitations by Interference and Network Optimization Difficulties—A Review. Sensors 2023, 23, 3876. [Google Scholar] [CrossRef] [PubMed]

- Shrestha, R.; Bajracharya, R.; Kim, S. 6G Enabled Unmanned Aerial Vehicle Traffic Management: A Perspective. IEEE Access 2021. [Google Scholar] [CrossRef]

- Taleb, T.; Ksentini, A.; Hellaoui, H.; Bekkouche, O. On Supporting UAV Based Services in 5G and Beyond Mobile Systems. IEEE Netw. 2021, 35, 220–227. [Google Scholar] [CrossRef]

- Sharma, A.; Vanjani, P.; Paliwal, N.; Basnayaka, C.M.; Jayakody, D.N.K.; Wang, H.C.; Muthuchidambaranathan, P. Communication and networking technologies for UAVs: A survey. J. Netw. Comput. Appl. 2020, 168, 102739. [Google Scholar] [CrossRef]

- Fotouhi, A.; Qiang, H.; Ding, M.; Hassan, M.; Giordano, L.G.; Garcia-Rodriguez, A.; Yuan, J. Survey on UAV Cellular Communications: Practical Aspects, Standardization Advancements, Regulation, and Security Challenges. IEEE Commun. Surv. Tutor. 2019, 21, 3417–3442. [Google Scholar] [CrossRef]

- Muruganathan, S.D.; Lin, X.; Määttänen, H.L.; Sedin, J.; Zou, Z.; Hapsari, W.A.; Yasukawa, S. An overview of 3GPP release-15 study on enhanced LTE support for connected drones. IEEE Commun. Stand. Mag. 2022, 5, 140–146. [Google Scholar] [CrossRef]

- Lin, X.; Wiren, R.; Euler, S.; Sadam, A.; Maattanen, H.L.; Muruganathan, S.; Gao, S.; Wang, Y.P.E.; Kauppi, J.; Zou, Z.; et al. Mobile Network-Connected Drones: Field Trials, Simulations, and Design Insights. IEEE Veh. Technol. Mag. 2019, 14, 115–125. [Google Scholar] [CrossRef]

- Ivancic, W.D.; Kerczewski, R.J.; Murawski, R.W.; Matheou, K.; Downey, A.N. Flying Drones Beyond Visual Line of Sight Using 4g LTE: Issues and Concerns. In Proceedings of the 2019 Integrated Communications, Navigation and Surveillance Conference (ICNS), Herndon, VA, USA, 9–11 April 2019; pp. 1–13. [Google Scholar] [CrossRef]

- Yang, G.; Lin, X.; Li, Y.; Cui, H.; Xu, M.; Wu, D.; Rydén, H.; Redhwan, S.B. A telecom perspective on the internet of drones: From LTE-advanced to 5G. arXiv 2018, arXiv:1803.11048. [Google Scholar] [CrossRef]

- Zulkifley, M.A.; Behjati, M.; Nordin, R.; Zakaria, M.S. Mobile network performance and technical feasibility of LTE-powered unmanned aerial vehicle. Sensors 2021, 21, 2848. [Google Scholar] [CrossRef]

- Behjati, M.; Zulkifley, M.A.; Alobaidy, H.A.; Nordin, R.; Abdullah, N.F. Reliable aerial mobile communications with RSRP & RSRQ prediction models for the Internet of Drones: A machine learning approach. Sensors 2022, 22, 5522. [Google Scholar] [CrossRef]

- Raffelsberger, C.; Muzaffar, R.; Bettstetter, C. A performance evaluation tool for drone communications in 4G cellular networks. In Proceedings of the 2019 16th International Symposium on Wireless Communication Systems (ISWCS), Oulu, Finland, 27–30 August 2019; pp. 218–221. [Google Scholar]

- Athanasiadou, G.E.; Tsoulos, G.V.; Zarbouti, D.; Tsakalidis, S.; Tsoulos, V.; Christopoulos, N. Empirical Evaluation of SINR and Throughput in 5G/4G Networks: A Drone-Assisted Measurement Approach. In Proceedings of the 2024 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Madrid, Spain, 8–11 July 2024; pp. 377–382. [Google Scholar] [CrossRef]

- Nuari, F.A.I.; Usman, U.K.; Hanuranto, A. Penerapan Unmanned Aerial Vehicle (UAV) untuk Pengukuran Kuat Sinyal (Drive Test) pada Jaringan 4G LTE. Aviat. Electron. Inf. Technol. Telecommun. Electr. Controls (AVITEC) 2021, 3, 69–82. [Google Scholar] [CrossRef]

- Qualcomm Technologies Inc. LTE Unmanned Aircraft Systems: Trial Report; Version 1.0.1; Technical Report; Qualcomm Technologies Inc.: San Diego, CA, USA, 2017. [Google Scholar]

- Jotham, A.F.; Dirgantara, F.M.; Ruriawan, M.F. Implementation of Cellular-Based Drone Module using Cloud Services. J. Electr. Electron. Information, Commun. Technol. (Jotham) 2023, 5, 56–59. [Google Scholar] [CrossRef]

- Liu, X.; Wen, H.; Li, A.; Xu, D.; Hou, Z. Research of multi-rotor UAV atmospheric environment monitoring system based on 4G network. In E3S Web of Conferences; EDP Sciences: Les Ulis, France, 2020; Volume 165, p. 02029. [Google Scholar]

- Royo, P.; Vargas, A.; Guillot, T.; Saiz, D.; Pichel, J.; Rabago, D.; Duch, M.A.; Grossi, C.; Luchkov, M.; Dangendorf, V.; et al. The Mapping of Alpha-Emitting Radionuclides in the Environment Using an Unmanned Aircraft System. Remote Sens. 2024, 16, 848. [Google Scholar] [CrossRef]

- Guo, J.; Li, X.; Zhigang, L.; Liangliang, L. Design of Real-time Video Transmission System for Drone Reliability. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2020; Volume 790, p. 012004. [Google Scholar] [CrossRef]

- Morales, J.; Rodriguez, G.; Huang, G.; Akopian, D. Toward UAV Control via Cellular Networks: Delay Profiles, Delay Modeling, and a Case Study Within the 5-mile Range. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 4132–4151. [Google Scholar] [CrossRef]

- Motlagh, N.H.; Bagaa, M.; Taleb, T. UAV-Based IoT Platform: A Crowd Surveillance Use Case. IEEE Commun. Mag. 2017, 55, 128–134. [Google Scholar] [CrossRef]

- Lappas, V.; Zoumponos, G.; Kostopoulos, V.; Lee, H.I.; Shin, H.S.; Tsourdos, A.; Tantardini, M.; Shomko, D.; Munoz, J.; Amoratis, E.; et al. EuroDRONE, a European Unmanned Traffic Management Testbed for U-Space. Drones 2022, 6, 53. [Google Scholar] [CrossRef]

- Sehad, N.; Nadir, Z.; Song, J.; Taleb, T. VR-Based Immersive Service Management in B5G Mobile Systems: A UAV Command and Control Use Case. IEEE Internet Things J. 2022, 9, 17616–17631. [Google Scholar] [CrossRef]

- R, N.C. Intelligent aerial surveillance for safer railways using machine learning. Int. J. Innov. Res. Sci. Stud. 2025, 8, 1160–1166. [Google Scholar] [CrossRef]

- Chiang, C.H.; Juang, J.G. Application of UAVs and Image Processing for Riverbank Inspection. Machines 2023, 11, 876. [Google Scholar] [CrossRef]

- Makropoulos, G.; Koumaras, H.; Kolometsos, S.; Gogos, A.; Sarlas, T.; Järvet, T.; Srinivasan, G.; Setaki, F. Field Trial of UAV flight with Communication and Control through 5G cellular network. In Proceedings of the 2021 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Athens, Greece, 7–10 September 2021; pp. 330–335. [Google Scholar] [CrossRef]

- Laubner, M.; Benders, S.; Goormann, L.; Lorenz, S.; Pruter, I.; Rudolph, M. A Remote Test Pilot Control Station for Unmanned Research Aircraft; Technical Report; DLR: Dresden, Germany, 2022.

- Huang, X.; Li, Y.; Ma, H.; Huang, P.; Zheng, J.; Song, K. Fuel cells for multirotor unmanned aerial vehicles: A comparative study of energy storage and performance analysis. J. Power Sources 2024, 613, 234860.

- Ookla. Speedtest Global Index—Mobile (City Rankings): Istanbul. 2025. Available online: https://www.speedtest.net/global-index (accessed on 8 November 2025).

- Sezgin, G.; Coskun, Y.; Basar, E.; Karabulut Kurt, G. Performance Evaluation of a Live Multi-Site LTE Network. IEEE Access 2018, 6, 49690–49704.

- ASTM F3411-22a; Standard Specification for Remote ID and Tracking. ASTM International: West Conshohocken, PA, USA, 2022.

- European Union Aviation Safety Agency. Easy Access Rules for Unmanned Aircraft Systems (U-Space Concept); European Union Aviation Safety Agency: Cologne, Germany, 2023.

- Federal Aviation Administration. Beyond Visual Line of Sight (BVLOS) Operations Rulemaking and Guidance; Federal Aviation Administration: Washington, DC, USA, 2024.

- International Civil Aviation Organization. UAS Traffic Management (UTM) Framework, 4th ed.; International Civil Aviation Organization: Montreal, QC, Canada, 2022.

- Directorate General of Civil Aviation (SHGM). İnsansız Hava Aracı (İHA) Kayıt Sistemi—UAV Registration System; Directorate General of Civil Aviation (SHGM): Istanbul, Türkiye, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).