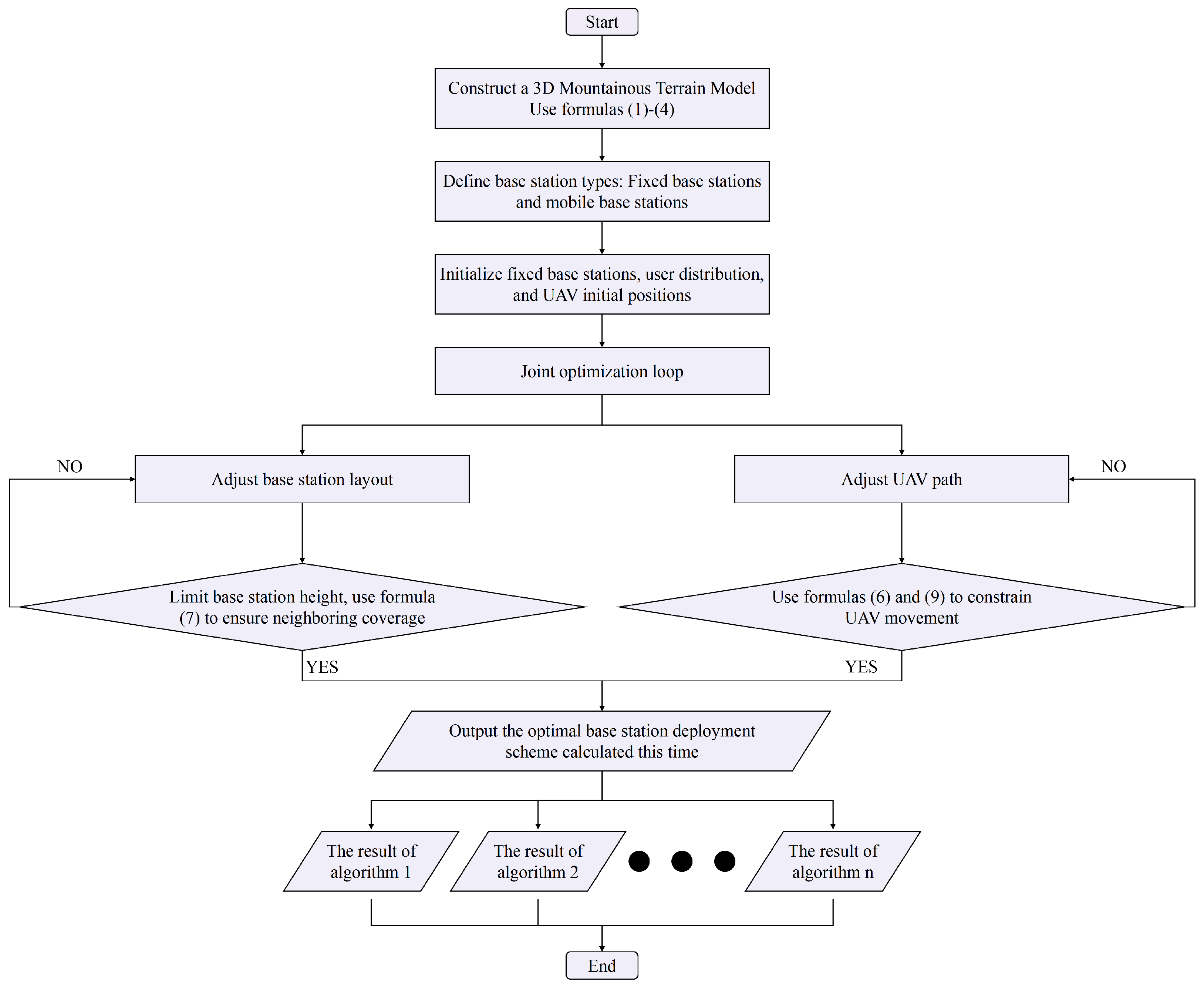

To further verify the feasibility and effectiveness of the AMB-TD3 algorithm for base station deployment, this section evaluates it on three-dimensional deployment problems with randomly generated terrain and user locations, comparing it with recent base station layout algorithms and conducting ablation experiments.

6.4. Comparative Experiments

To evaluate the performance and novelty of the proposed AMB-TD3 framework from multiple dimensions, we selected a broad suite of reinforcement learning algorithms for comparison. The baselines include foundational RL methods (e.g., Q-learning [52]) and established deep RL approaches (e.g., Deep Q-Network, DQN [56]), which serve as essential benchmarks for quantifying fundamental performance gains. The comparison further extends to advanced policy optimization techniques such as Phasic Policy Gradient (PPG) [57], enabling a critical assessment of our integrated actor–critic architecture coupled with evolutionary fine-tuning. Moreover, we include several state-of-the-art algorithms tailored specifically to base station deployment optimization: DRL-based Energy-efficient Control for Coverage and Connectivity (DRL-EC3) [58] is a direct competitor addressing the critical trade-off between coverage and energy efficiency; Simulated Annealing with Q-learning (SA-Q) [59] and Double DQN with State Splitting Q Network (DDQN-SSQN) [60] are used to contrast our hybrid framework with alternative algorithmic fusion paradigms and advanced network architectures; and Decentralized Reinforcement Learning with Adaptive Exploration and Value-based Action Selection (DecRL-AE & VAS) [53] provides a contrasting perspective on multi-agent coordination strategies. Finally, the hybrid learning-evolutionary framework DERL [61] is included to position AMB-TD3 among contemporary approaches that combine reinforcement learning with evolutionary algorithms. The parameter configurations of all algorithms are listed in Table 3.

To ensure the fairness of the evaluation and clearly demonstrate the advantages and limitations of AMB-TD3 compared to other methods, we adopted the following standardized evaluation protocol:

All algorithms were tested under identical environmental settings, including consistent terrain models, user distributions, and signal propagation characteristics. For all reinforcement learning methods, we used exactly the same state space (Equation (18)) and action space (Equation (19)) as AMB-TD3, so that all methods operate within the same decision-making structure and environmental constraints, eliminating performance variations caused by interface inconsistencies.

All algorithms employed the unified reward function (Equation (24)), with coverage efficiency, energy consumption, and deployment cost as common optimization objectives. This ensures that results are directly comparable across methods.

To avoid biasing the comparison toward algorithms with particular convergence characteristics, we adopted a fixed computational budget: every algorithm was allocated the same number of training episodes (200). Regardless of each algorithm's inherent complexity or convergence speed, optimization therefore takes place under equal resource conditions, which keeps the evaluation fair.

Each algorithm was run for 200 iterations and executed independently 20 times. For each algorithm we report the best value (Best), the worst value (Worst), and the standard deviation (Std) over these runs; values for which AMB-TD3 achieves the best result are shown in bold. To assess whether the differences are statistically significant, a one-tailed Wilcoxon signed-rank test was applied to the 20 results of each algorithm at a significance level of 0.05. A p-value below 0.05 indicates a significant difference between the two algorithms; otherwise, the difference is not significant. A direction marker is attached to each p-value: (-) indicates that AMB-TD3 performs significantly better than the compared algorithm. The detailed deployment optimization results are shown in Table 4.
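The Best/Worst/Std summary and the one-tailed Wilcoxon signed-rank test described above can be sketched in plain Python as follows. This is an illustrative implementation, not the authors' code: it assumes Std is the sample standard deviation, pairs the i-th run of each algorithm (the signed-rank test requires paired samples), drops zero differences, gives tied absolute differences their average rank, and computes the exact null distribution by counting sign assignments. For coverage (higher is better), `x` would hold AMB-TD3's 20 results and `y` those of a baseline; for energy consumption the arguments would be swapped.

```python
import statistics
from typing import Sequence, Tuple


def summarize_runs(values: Sequence[float]) -> Tuple[float, float, float]:
    """Best, Worst and Std of one algorithm's per-run results (higher is better).

    Assumption: Std is the sample standard deviation.
    """
    return max(values), min(values), statistics.stdev(values)


def wilcoxon_one_tailed(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Exact one-tailed Wilcoxon signed-rank test, H1: x tends to exceed y.

    Returns (W+, p). Zero differences are dropped; tied |d| get average ranks.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    # Doubled ranks keep averaged ties integral, so the null distribution can be counted.
    ranks2 = [0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks2[order[k]] = i + j + 2  # 2 * mean of ranks i+1 .. j+1
        i = j + 1
    w_plus2 = sum(r for r, di in zip(ranks2, d) if di > 0)
    total = sum(ranks2)
    # counts[s] = number of sign assignments whose doubled W+ equals s (subset-sum DP).
    counts = [0] * (total + 1)
    counts[0] = 1
    for r in ranks2:
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]
    p_value = sum(counts[w_plus2:]) / 2 ** n
    return w_plus2 / 2, p_value
```

SciPy's `scipy.stats.wilcoxon(x, y, alternative="greater")` also offers a one-tailed signed-rank test; the hand-written version above simply makes the counting argument explicit.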

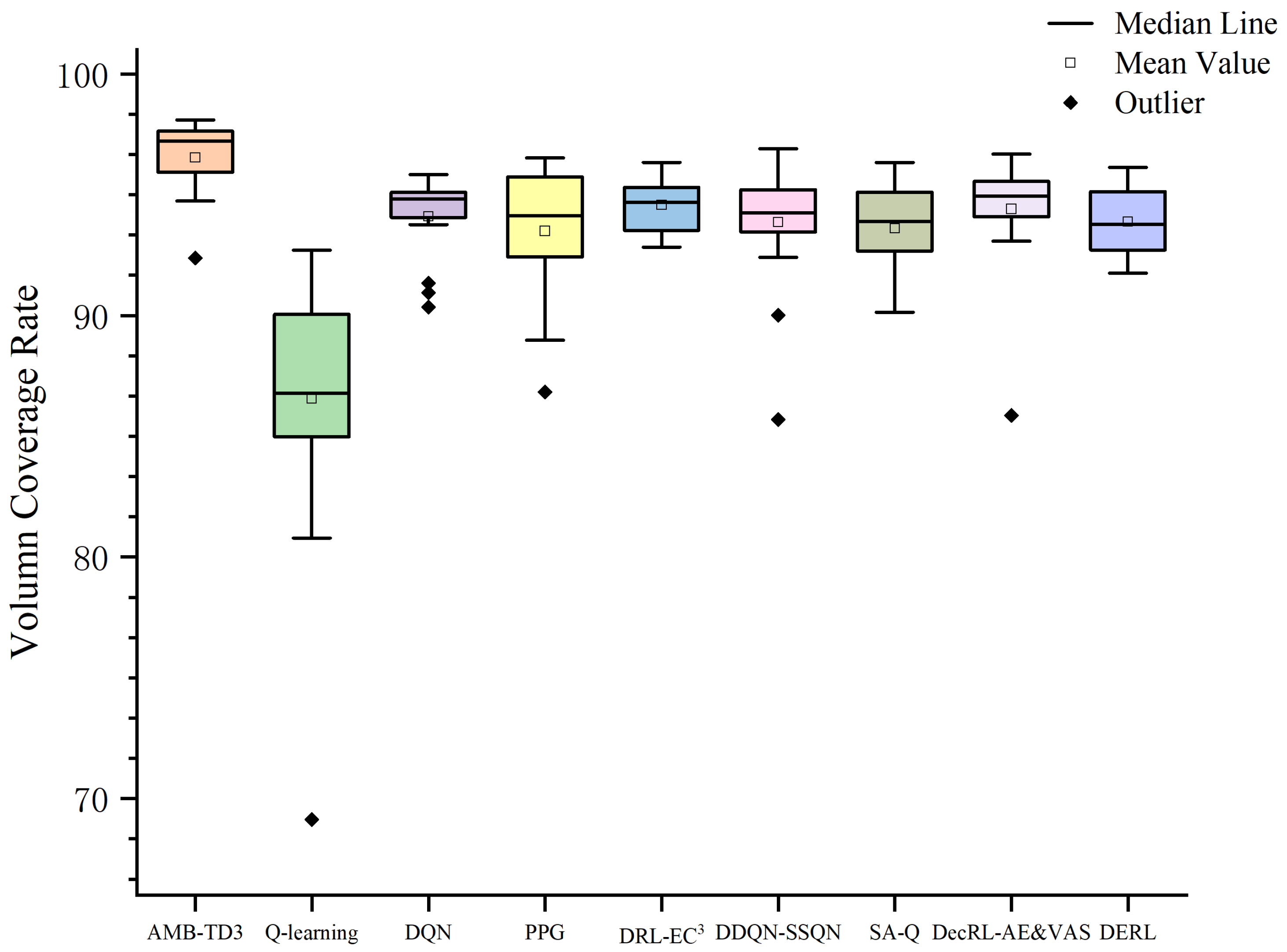

Table 4 and Figure 14 show that AMB-TD3 performs best in base station layout optimization. Its best coverage rate clearly exceeds those of the other algorithms, and it is the only algorithm to surpass 98%; its worst coverage rate of 92.379% is second only to that of DRL-EC3. Although PPG and DecRL-AE&VAS also achieve best coverage rates above 95%, their worst coverage rates are only around 85%, indicating greater variability. In terms of stability, AMB-TD3 has a small standard deviation and consistently yields high-quality solutions across different scenarios. DQN also has a small standard deviation of only 1.706%, but its best and worst coverage rates are comparatively low.

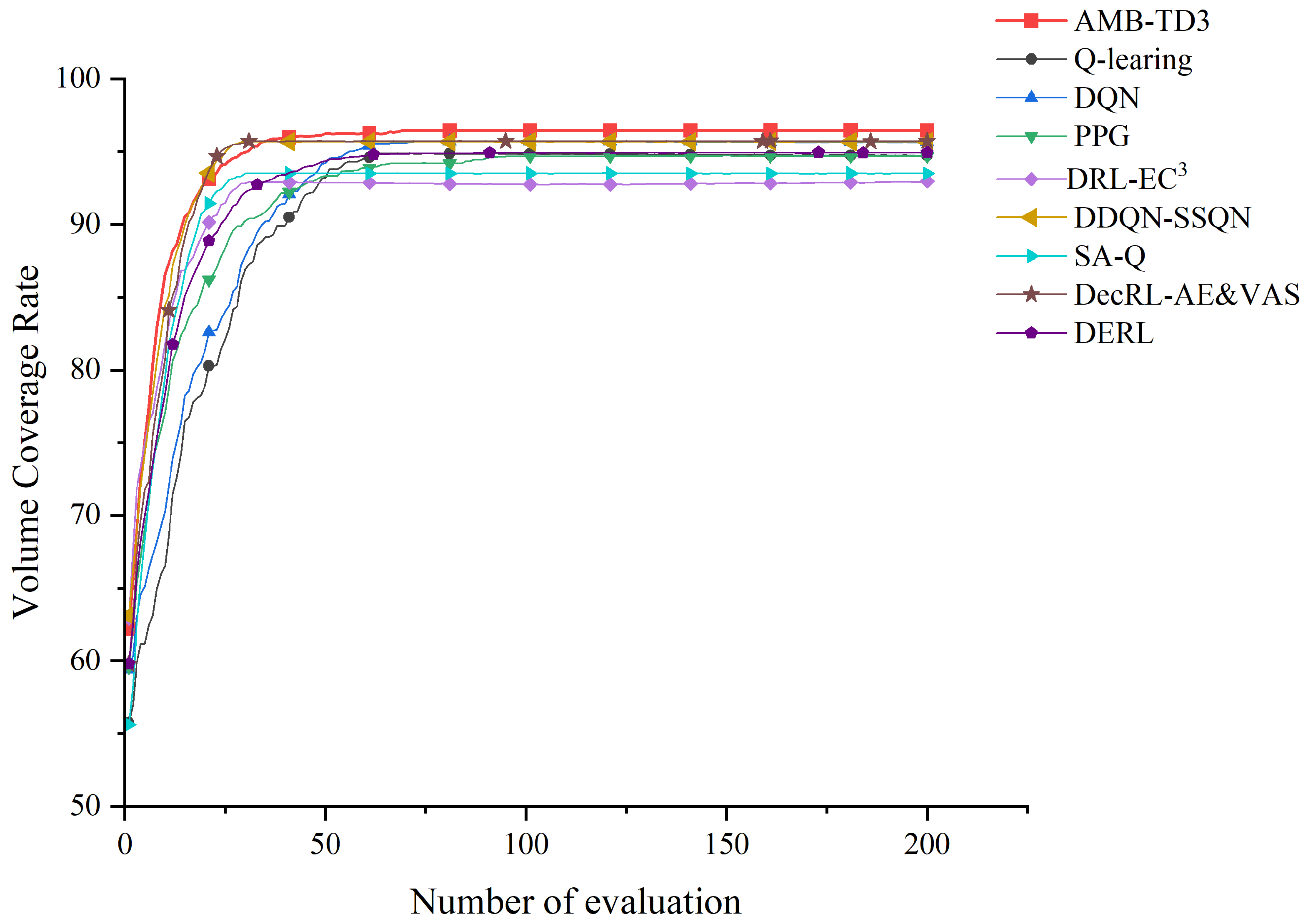

As Figure 15 shows, the convergence curve of AMB-TD3 (red squares) lies clearly above those of the other algorithms. AMB-TD3 also converges quickly, reaching its final solution within about 50 iterations. Some algorithms, such as DecRL-AE&VAS and DDQN-SSQN, converge even earlier than AMB-TD3, but to noticeably lower final values.
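Reading a convergence point such as "about 50 iterations" off a curve implicitly uses some convergence criterion, which this section does not define. One simple, hypothetical rule is the first iteration after which the best-so-far coverage stays within a tolerance `tol` of its final value; the function name and default tolerance below are illustrative assumptions.

```python
from typing import Sequence


def convergence_iteration(curve: Sequence[float], tol: float = 1e-3) -> int:
    """Return the 1-based iteration from which `curve` stays within `tol` of its final value.

    `curve[t]` is the best-so-far (or mean best) coverage after iteration t + 1.
    """
    final = curve[-1]
    t = len(curve)
    # Walk backwards while the previous point is still inside the tolerance band.
    while t > 1 and abs(curve[t - 2] - final) <= tol:
        t -= 1
    return t
```

Applied to each algorithm's averaged curve with a common `tol`, this gives comparable convergence iterations; a looser tolerance reports earlier convergence, so the same value should be used for all methods.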

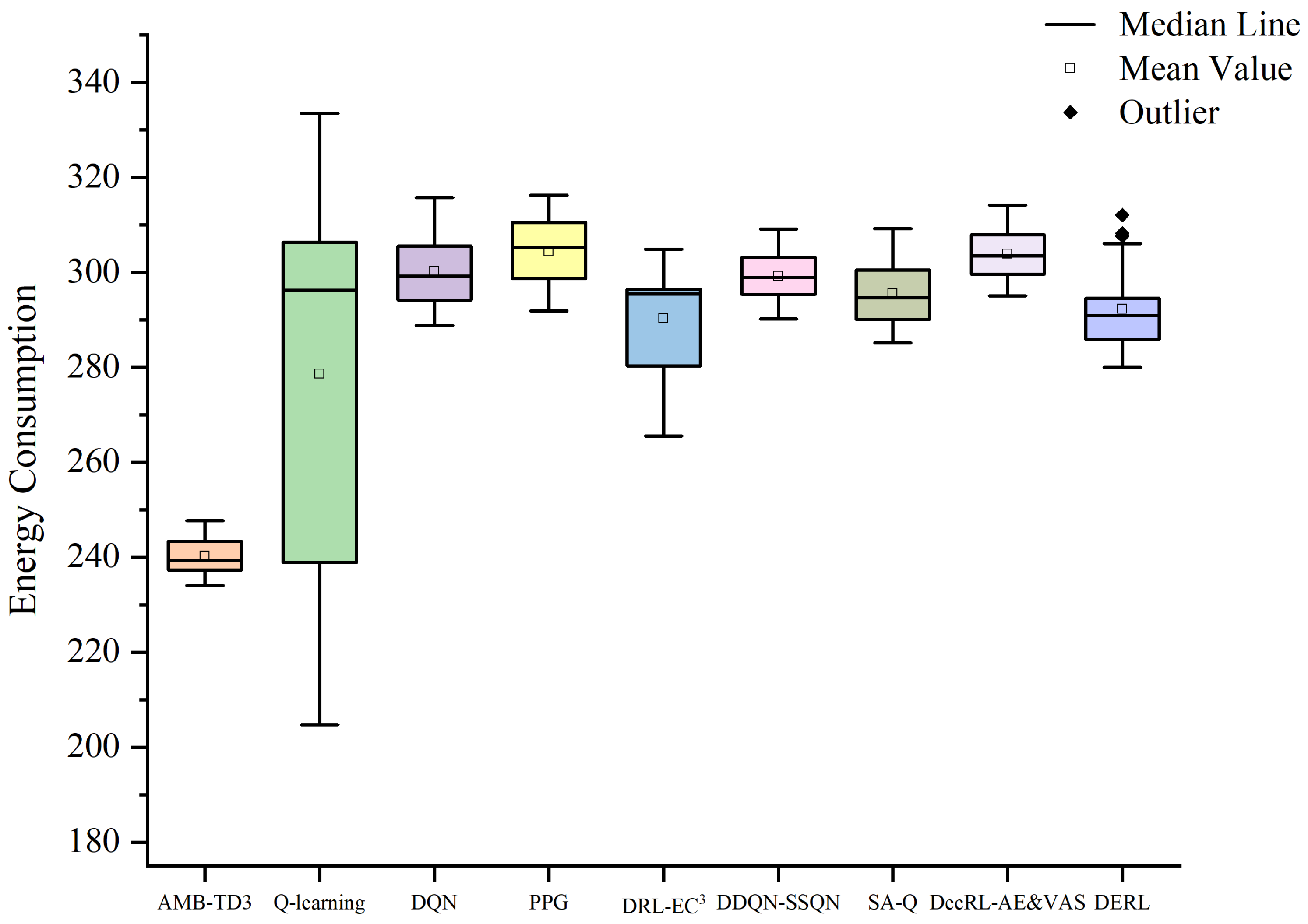

Figure 16 compares the energy consumption of the different algorithms. The box plot shows that the box for AMB-TD3 lies well below those of the other algorithms, indicating that AMB-TD3 plans more efficient UAV paths and therefore consumes less energy. Although the minimum energy consumption of Q-learning is lower than that of AMB-TD3, its box is longer, indicating greater instability, and its mean is notably higher than that of AMB-TD3. Other algorithms, such as PPG and DDQN-SSQN, exhibit relatively stable energy consumption.
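The reading of the box plots relies on standard box plot statistics: the box spans the first to the third quartile, so its length is the interquartile range (IQR), and points lying more than 1.5 × IQR beyond the box are drawn as outliers. A minimal sketch, assuming the figures follow this common Tukey convention (matplotlib's default, for instance) with linearly interpolated quartiles:

```python
import statistics
from typing import Dict, Sequence


def box_stats(values: Sequence[float]) -> Dict[str, object]:
    """Quartiles, IQR (box length) and Tukey outliers of one algorithm's runs.

    method="inclusive" interpolates linearly between order statistics, matching
    NumPy's default percentile rule.
    """
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1  # a longer box means a wider spread of the middle 50% of runs
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in values if v < low or v > high]
    return {"q1": q1, "median": median, "q3": q3, "iqr": iqr, "outliers": outliers}
```

A long box (large IQR) therefore signals run-to-run instability even when the single best run, such as Q-learning's minimum energy consumption, is good.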

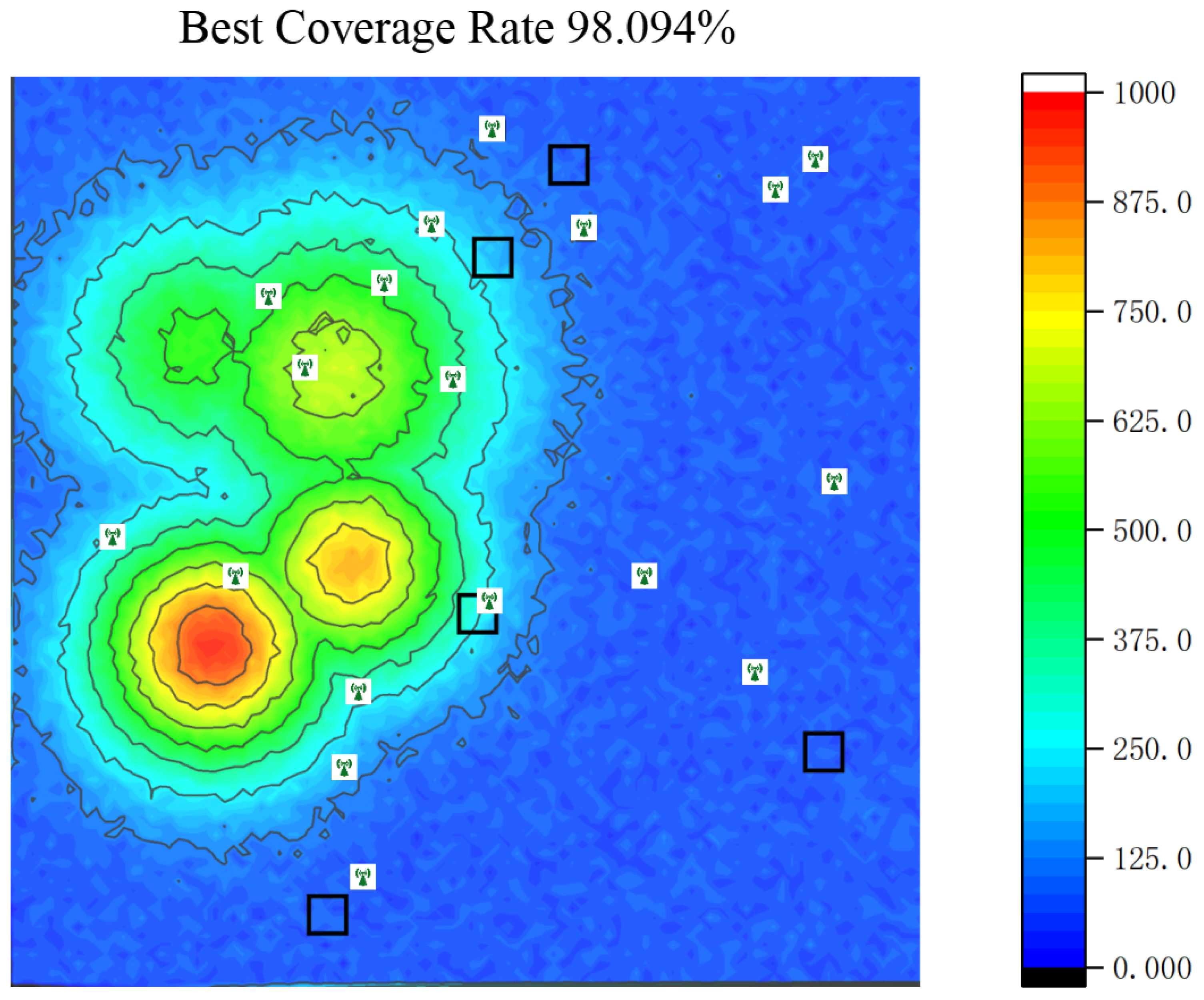

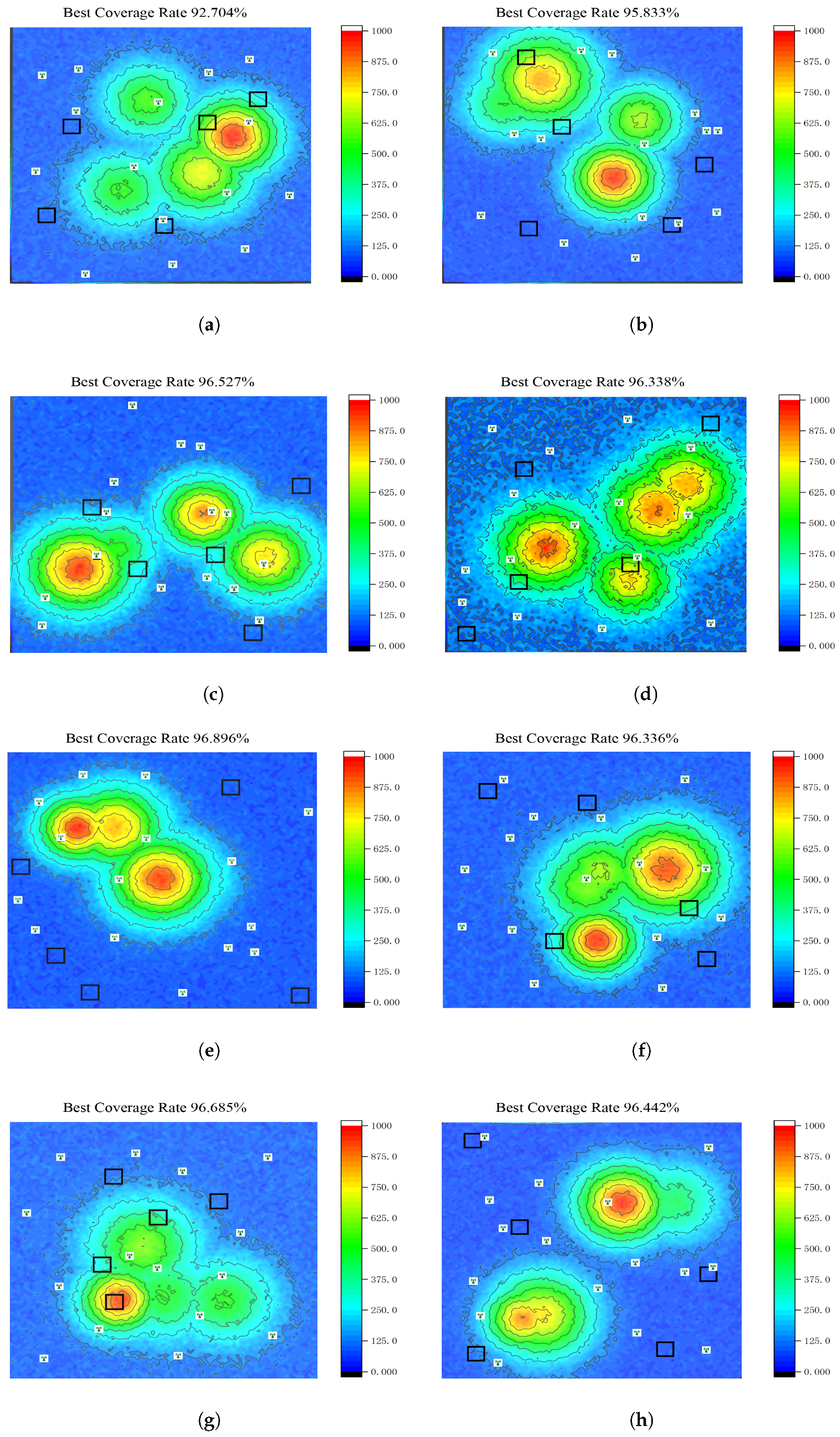

In terms of best coverage rate, coverage stability, UAV energy consumption, and energy-consumption stability, the proposed AMB-TD3 algorithm performs well, confirming its advantages in base station layout optimization and its practical value in such application scenarios. The best solution of each algorithm is visualized in Figure 17.

The experimental results show that the proposed AMB-TD3 algorithm clearly outperforms the compared methods on the joint optimization of base station deployment and UAV path planning in mountainous areas.

Among the fundamental reinforcement learning methods, the performance of Q-learning and DQN is constrained by their inherent architectures. Both algorithms rely on discrete action spaces, which hinders fine-grained continuous control of UAV trajectories and high-precision placement of base stations in complex three-dimensional environments. As shown in Table 4, the best coverage rates achieved by Q-learning and DQN are 92.704% and 95.833%, respectively, both markedly lower than the 98.094% of AMB-TD3. Furthermore, Q-learning has a high standard deviation of 5.208%, indicating considerable performance instability; this appears in the box plot of Figure 14 as an exceptionally long box with numerous outliers. Although DQN is more stable, its post-convergence coverage rate, as depicted in Figure 15, remains substantially lower.
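To make the discretization argument concrete: if, hypothetically, a discrete action set can only place a base station or UAV on a regular 3D grid with spacing Δ, a target position can be missed by up to half the cell diagonal, √3·Δ/2. The sketch below checks this bound numerically; the grid spacing and region size are illustrative assumptions, not values from the paper.

```python
import math
import random
from typing import Tuple

Point = Tuple[float, float, float]


def snap_to_grid(p: Point, step: float) -> Point:
    """Nearest point on a regular 3D grid with spacing `step` (a stand-in for a discrete action set)."""
    return tuple(round(c / step) * step for c in p)


def quantization_error(p: Point, step: float) -> float:
    """Euclidean distance between a continuous target position and its grid approximation."""
    return math.dist(p, snap_to_grid(p, step))


def worst_case_error(step: float) -> float:
    """Half the diagonal of a grid cell: the largest possible snapping error in 3D."""
    return math.sqrt(3) * step / 2


if __name__ == "__main__":
    rng = random.Random(0)
    step = 10.0  # hypothetical grid spacing in metres
    errors = [quantization_error((rng.uniform(0, 1000), rng.uniform(0, 1000), rng.uniform(0, 200)), step)
              for _ in range(10_000)]
    print(f"max observed {max(errors):.2f} m, bound {worst_case_error(step):.2f} m")
```

A continuous-action method such as TD3 has no such floor on placement error, which is the advantage the comparison above attributes to it.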

The PPG algorithm operates in a continuous policy space and thus avoids discretization errors. However, its performance is limited by its single-timescale optimization strategy and the lack of effective integration with heuristic search, which makes it difficult to adapt to the dynamically changing coverage demands of mountainous terrain. The large gap between its best coverage rate (96.527%) and worst coverage rate (86.834%) in Table 4 confirms its sensitivity to environmental fluctuations.

The decentralized framework of DecRL-AE&VAS offers good scalability. However, because each agent decides based solely on local information without a global view, agent behavior becomes myopic and system-level coordination is poor. This coordination failure is visible in the base station distribution map in Figure 17g, where the layout fails to form an effective cooperative coverage network and shows obvious coverage gaps and resource overlap. This ultimately caps its performance, with a best coverage rate of 96.685%.

The DDQN-SSQN algorithm introduces a more sophisticated value estimation mechanism through double Q-learning and state splitting. Nevertheless, it remains a value-based method, and its performance is bounded by the difficulty of discretizing a continuous action space in complex control tasks. Although its best coverage rate (96.896%) is comparable to those of PPG and DecRL-AE&VAS, its worst coverage rate (85.693%) is among the lowest of all compared algorithms, indicating insufficient stability.

Methods such as SA-Q and DRL-EC3 demonstrate the potential of combining reinforcement learning with heuristic search or energy-aware components. However, their hybrid architectures are essentially unidirectional. In SA-Q, for instance, simulated annealing merely acts as a passive optimizer of the reinforcement learning agent's output, forming an open-loop system. Without bidirectional feedback, the reinforcement learning policy cannot learn from the expert knowledge produced by the heuristic algorithm, which limits further performance gains. While DRL-EC3 emphasizes energy efficiency, its coverage performance (best 96.338%) still lags behind that of AMB-TD3.

6.5. Ablation Experiments

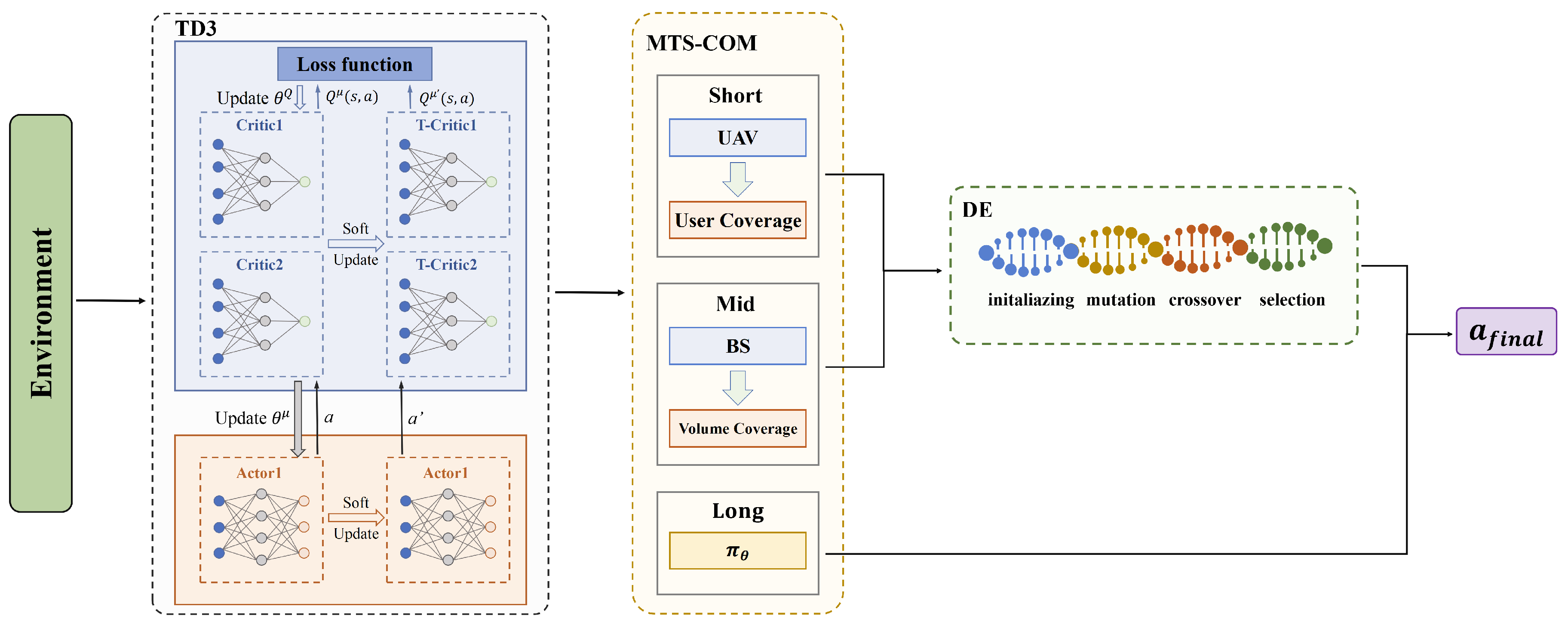

To investigate the individual roles and necessity of the three core modules of AMB-TD3, namely the Dynamic Weight Adaptation Mechanism (DWAM), the Multi-Timescale Collaborative Optimization Method (MTS-COM), and the Bidirectional Information Exchange Channel (BIEC), we designed systematic ablation studies. These modules do not operate in isolation but form an integrated, synergistic optimization framework: DWAM performs dynamic strategy selection at the algorithmic level, MTS-COM decouples complex optimization tasks along the temporal dimension, and BIEC integrates the two optimization paradigms at the knowledge level. Together, they address core challenges that traditional hybrid algorithms face in dynamic, complex environments, such as rigid decision-making, a single optimization focus, and knowledge isolation.

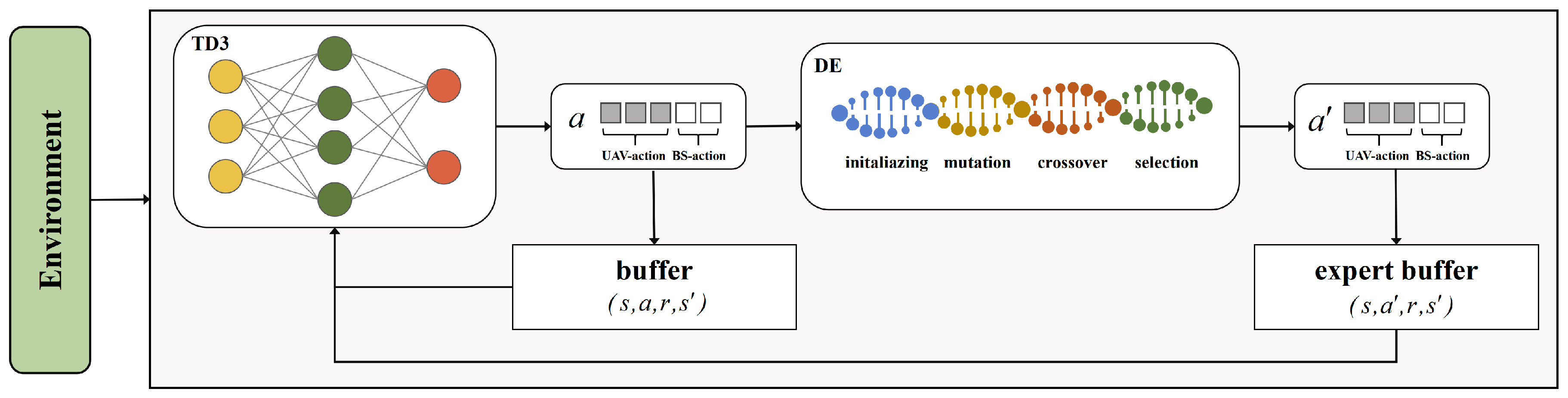

To quantify the contribution of each module, we constructed three variant algorithms, each removing one key component, for comparative analysis. (1) TD3-1 removes the Multi-Timescale Collaborative Optimization Method (MTS-COM). The algorithm thus loses hierarchical optimization across short-, medium-, and long-term timescales and reverts to a single-timescale optimization framework. This variant validates the role of MTS-COM in coordinating immediate responses with long-term planning. (2) TD3-2 removes the Bidirectional Information Exchange Channel (BIEC), severing the bidirectional knowledge transfer between TD3 and the Differential Evolution (DE) algorithm. The optimization experience of DE can no longer feed back into the policy learning of TD3, and the algorithm regresses to a traditional unidirectional hybrid architecture. This variant assesses the value of BIEC in enabling co-evolution of the two algorithms. (3) TD3-3 removes the Dynamic Weight Adaptation Mechanism (DWAM) and fixes the decision weights of TD3 and DE, so the algorithm can no longer adapt its dominant strategy to environmental dynamics. This variant examines the necessity of DWAM for the environmental adaptability of the algorithm.
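The one-module-at-a-time design of the ablation can be expressed as a set of feature toggles. The flag names below are hypothetical and only mirror the three variants described above:

```python
from dataclasses import dataclass
from typing import List

MODULES = ("dwam", "mts_com", "biec")


@dataclass(frozen=True)
class AblationConfig:
    """Feature toggles for one ablation variant (hypothetical flag names)."""
    name: str
    dwam: bool = True     # Dynamic Weight Adaptation Mechanism
    mts_com: bool = True  # Multi-Timescale Collaborative Optimization Method
    biec: bool = True     # Bidirectional Information Exchange Channel

    def disabled(self) -> List[str]:
        return [m for m in MODULES if not getattr(self, m)]


VARIANTS = [
    AblationConfig("AMB-TD3"),
    AblationConfig("TD3-1", mts_com=False),  # single-timescale optimization only
    AblationConfig("TD3-2", biec=False),     # DE experience no longer fed back to TD3
    AblationConfig("TD3-3", dwam=False),     # fixed TD3/DE decision weights
]

for v in VARIANTS:
    print(f"{v.name}: disabled = {v.disabled() or 'none'}")
```

Because each variant disables exactly one module, the comparison isolates individual contributions but does not measure interactions between pairs of modules.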

By comparing these variants against the complete AMB-TD3 algorithm under identical experimental conditions, we can clearly isolate the specific impact of each innovative module on the final performance, thereby confirming their indispensability in the overall algorithm design.

Each of these algorithms was independently executed 20 times, and the optimization results are presented in Table 5.

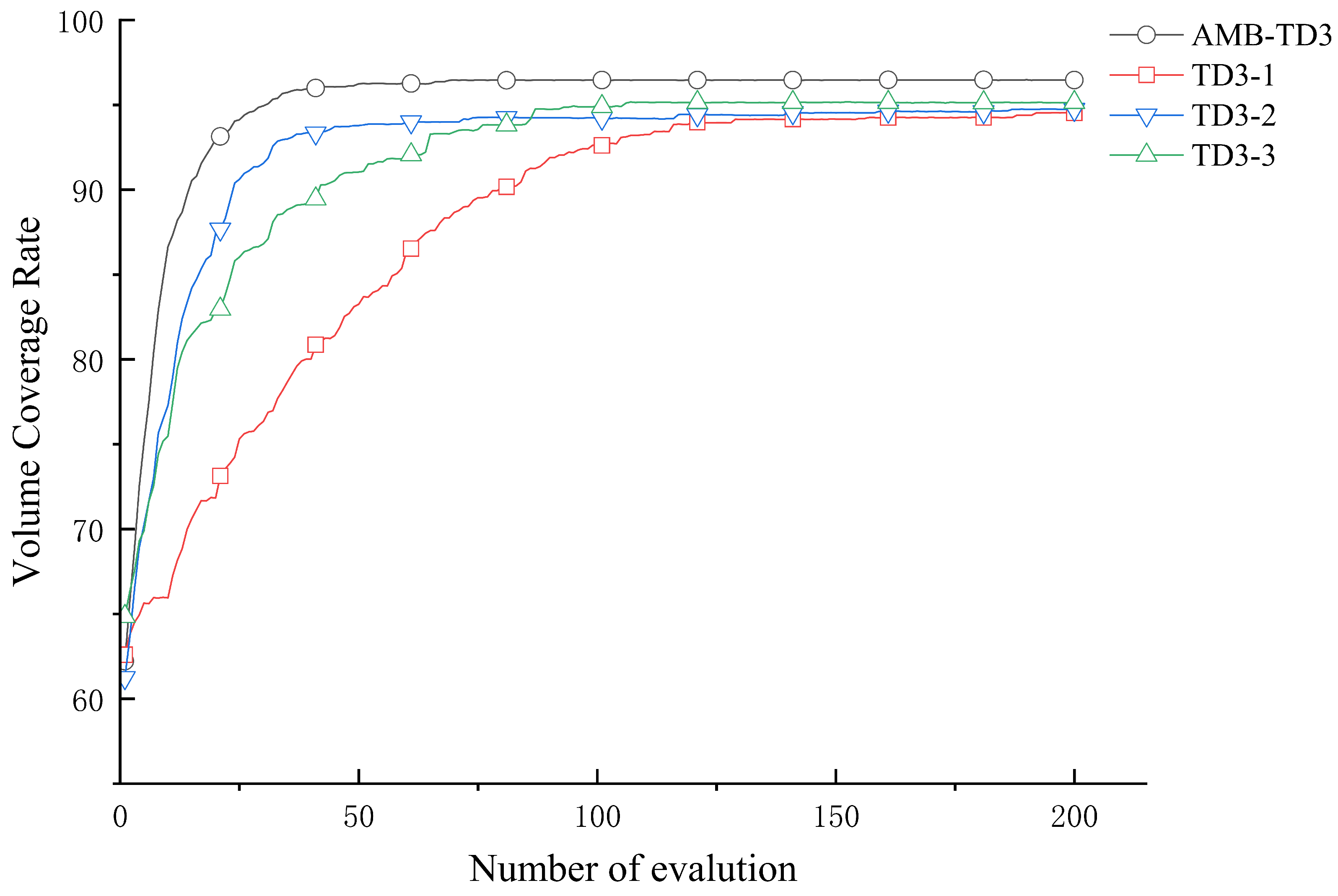

Table 5 shows that AMB-TD3 achieves the highest best and worst coverage rates, followed by TD3-2, which lacks the expert buffer, and TD3-3, which lacks the adaptive weight mechanism. The weakest variant is TD3-1, from which the multi-timescale mechanism has been removed. This suggests that all three innovations improve performance over the original TD3, with the multi-timescale optimization mechanism contributing the most. In terms of runtime, AMB-TD3, TD3-2, and TD3-3 all run significantly longer than TD3-1, indicating that the multi-timescale optimization mechanism is the most time-consuming component as well as the core of the optimization.

The box plot of the best coverage rate over the 20 runs and the convergence curves are shown in Figure 18 and Figure 19, respectively. Figure 18 shows that AMB-TD3 consistently achieves the highest coverage rate, and its relatively short box indicates that it is more stable, yielding high-quality solutions in every run. TD3-3, the variant without the adaptive weight mechanism, has a longer box and markedly lower best and worst coverage rates than TD3-1 and TD3-2, showing the importance of the adaptive weight mechanism for the stability of the original TD3 algorithm. The convergence curves of the ablation study in Figure 19 also show that AMB-TD3 (gray line) converges earliest, at around 50 iterations, followed by TD3-1 and TD3-2 at around 80 iterations, while TD3-3 converges slowest, at around 125 iterations. Moreover, the final average best coverage rate is highest for AMB-TD3, followed by TD3-1, TD3-2, and TD3-3, indicating that the adaptive weight mechanism greatly improves the convergence speed and solution quality of the original TD3 algorithm and better suits it to dynamic environments. Overall, AMB-TD3 performs strongly, confirming the effectiveness of combining the two optimization strategies, TD3 and DE.

6.6. Computational Complexity and Scalability Analysis

Computational complexity is a key metric for assessing whether an algorithm is applicable to large-scale mountain communication scenarios. This section analyzes the time complexity of AMB-TD3, which comprises the costs of its foundational components, TD3 and DE, and of its three additional mechanisms: the Dynamic Weight Adaptation Mechanism (DWAM), the Multi-Timescale Collaborative Optimization Method (MTS-COM), and the Bidirectional Information Exchange Channel (BIEC).

The computational core of TD3 involves parameter updates for the actor policy network and the twin critic value networks. The actor network, with an input dimension of

and an output dimension of

, has a time complexity for forward propagation and backward gradient calculation of

. Each of the twin critic networks contains

hidden layers; the forward and backward computation complexity for a single network is

, resulting in a total overhead of

for both networks. Combined with the experience replay mechanism, where

B experience samples are drawn per round, the time complexity of TD3 per iteration can be expressed as:

The computation of DE focuses on mutation, crossover, and selection operations, all related to the population size

and the decision variable dimension

. The mutation operation requires traversing all individuals in the population to generate mutation vectors, with a complexity of

. The crossover operation involves stochastic crossover decisions for each of the

decision variables per individual, with a complexity of

. The selection operation requires calculating the fitness of each individual, which relies on user SNR evaluation and coverage range calculation, with a complexity of

, leading to a total overhead of

. The time complexity of DE per iteration is thus:

The Dynamic Weight Adaptive Mechanism requires calculating the Environmental Static Complexity (ESC) and the Environmental Volatility Index (EVI). ESC is based on the elevation standard deviation of terrain grid points, with a computational complexity of . EVI is based on the position changes and SNR standard deviation of users, with a complexity of . Since the computational magnitudes of and are significantly lower than the iterative overhead of the foundational components, the additional overhead of this mechanism can be approximated as , having no significant impact on the overall complexity.
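Both DWAM indicators reduce to single passes over the data, which is why their cost is negligible next to TD3 and DE. The sketch below computes the ingredients named above, namely the elevation standard deviation of the terrain grid for ESC and the mean user displacement and SNR standard deviation for EVI. The use of population standard deviations and any rule for combining the two EVI ingredients into a single index are assumptions, since the exact formulas are not reproduced in this section.

```python
import math
import statistics
from typing import Sequence, Tuple

Position = Tuple[float, ...]


def esc(elevations: Sequence[float]) -> float:
    """ESC ingredient: population std of terrain grid-point elevations (one pass, O(G))."""
    return statistics.pstdev(elevations)


def evi_components(prev_pos: Sequence[Position], curr_pos: Sequence[Position],
                   snr_db: Sequence[float]) -> Tuple[float, float]:
    """EVI ingredients: mean user displacement and SNR std (one pass each, O(U))."""
    moves = [math.dist(a, b) for a, b in zip(prev_pos, curr_pos)]
    return statistics.fmean(moves), statistics.pstdev(snr_db)
```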

In the Multi-Time Scale Coupling mechanism, short-term optimization invokes DE for fine-tuning UAV positions. Medium-term optimization is only triggered when coverage blind spots persist beyond a threshold (where , e.g., set to in experiments). Long-term optimization relies on the global strategy of TD3. The average per-round overhead of medium-term optimization can be expressed as . Given that is large, this cost can be integrated into the average per-round overhead of DE.
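Short-term optimization relies on DE, whose per-generation cost was broken down above into mutation, crossover, and selection. A minimal sketch of one generation of the classic DE/rand/1/bin scheme makes those three stages and their costs visible. The specific DE variant, `F`, `CR`, and the objective used by AMB-TD3 are assumptions here; for coverage maximization the objective could be, for example, the negative coverage of a candidate vector of UAV positions.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def de_generation(pop: List[Vector], fitness: List[float],
                  objective: Callable[[Vector], float], rng: random.Random,
                  F: float = 0.5, CR: float = 0.9) -> Tuple[List[Vector], List[float]]:
    """One DE/rand/1/bin generation for minimisation; returns the next population and fitness.

    Mutation and crossover touch each of the D variables of each of the NP
    individuals (O(NP * D)); selection costs NP objective evaluations, which
    dominate when the objective (e.g. an SNR/coverage evaluation) is expensive.
    """
    NP, D = len(pop), len(pop[0])
    if NP < 4:
        raise ValueError("DE/rand/1 needs three donors distinct from the target")
    new_pop, new_fit = list(pop), list(fitness)
    for i in range(NP):
        r1, r2, r3 = rng.sample([k for k in range(NP) if k != i], 3)
        j_rand = rng.randrange(D)  # at least one gene always comes from the mutant
        trial = [
            pop[r1][j] + F * (pop[r2][j] - pop[r3][j])  # mutation
            if rng.random() < CR or j == j_rand          # binomial crossover
            else pop[i][j]
            for j in range(D)
        ]
        trial_fit = objective(trial)
        if trial_fit <= fitness[i]:  # greedy one-to-one selection
            new_pop[i], new_fit[i] = trial, trial_fit
    return new_pop, new_fit
```

Because selection is greedy, no individual's fitness ever gets worse from one generation to the next, which is what makes DE suitable as a local refiner of the TD3 policy's proposals.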

The Bidirectional Information Exchange Channel facilitates knowledge transfer between TD3 and DE via an expert buffer. Its core overhead involves priority calculation for high-value experiences (based on the optimization gain of B experiences), with a complexity of , which is on the same order of magnitude as the batch size B in TD3’s experience replay and can thus be incorporated into the OTD3 calculation.
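The priority step of the expert buffer can be illustrated by ranking a batch of experiences by how much DE improved them and keeping the top `k`. In this hypothetical sketch the gain is simply the fitness after DE minus the fitness before (higher is better), and `heapq.nlargest` keeps the top `k` in O(B log k) time; the paper's actual priority formula and buffer capacity are not reproduced here.

```python
import heapq
from typing import Any, List, Sequence, Tuple


def select_expert_experiences(batch: Sequence[Tuple[Any, float, float]],
                              k: int) -> List[Tuple[float, Any]]:
    """Keep the k experiences with the largest DE optimization gain.

    Each item is (experience, fitness_before_DE, fitness_after_DE); the
    returned list holds (gain, experience) pairs, largest gain first.
    """
    scored = [(after - before, exp) for exp, before, after in batch]
    improved = [s for s in scored if s[0] > 0]  # only experiences DE actually improved
    return heapq.nlargest(k, improved, key=lambda s: s[0])
```

Passing `key` avoids comparing the experience objects themselves when two gains tie.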

In summary, the total time complexity of AMB-TD3 over

iterations can be expressed as:

Substituting the specific expressions for

and

, and considering

(so

), this can be further simplified to:

Due to the integration of DE’s global search capability, AMB-TD3 has a slightly higher complexity than the standalone TD3 algorithm. However, it is significantly lower than traditional heuristic algorithms, such as the Genetic Algorithm, which has a complexity of . The actual runtime cost of AMB-TD3 is balanced by its performance improvements, validating its applicability in complex mountain environments.
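To compare the two per-iteration terms concretely, a rough operation count can be computed for each component. The sketch assumes fully connected networks with hypothetical layer widths, a backward pass costing about twice the forward pass (a common rule of thumb), and a per-individual cost `fitness_cost` standing in for the SNR and coverage evaluation; none of these values come from the paper.

```python
from typing import Sequence


def mlp_macs(widths: Sequence[int]) -> int:
    """Multiply-accumulates for one forward pass of a dense network with the given layer widths."""
    return sum(a * b for a, b in zip(widths, widths[1:]))


def td3_update_cost(batch: int, actor: Sequence[int], critic: Sequence[int]) -> int:
    """Rough per-iteration TD3 cost: `batch` samples through the actor and two critics.

    Assumes backward ~ 2x forward, hence the factor 3 (forward + backward).
    """
    return 3 * batch * (mlp_macs(actor) + 2 * mlp_macs(critic))


def de_generation_cost(pop_size: int, dim: int, fitness_cost: int) -> int:
    """Rough per-generation DE cost: mutation and crossover over NP*D genes plus NP fitness evaluations."""
    return 2 * pop_size * dim + pop_size * fitness_cost


if __name__ == "__main__":
    # Hypothetical sizes: 30-dim state, 6-dim action, two hidden layers of 256 units.
    s, a, h = 30, 6, 256
    td3 = td3_update_cost(batch=256, actor=[s, h, h, a], critic=[s + a, h, h, 1])
    de = de_generation_cost(pop_size=50, dim=a, fitness_cost=500)
    print(f"TD3 ~{td3:,} MACs per iteration, DE ~{de:,} operations per generation")
```

Under such assumptions the DE term enters additively on top of the TD3 update, so its relative weight depends mainly on the population size and the cost of one fitness evaluation.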