1. Introduction

The proliferation of Unmanned Aerial Vehicles (UAVs) across civilian, commercial, and military domains has fundamentally transformed modern airspace operations. Global UAV market projections estimate growth from $27.4 billion in 2021 to $58.4 billion by 2026 [1], spanning applications in precision agriculture, infrastructure inspection, emergency response, and defense operations [2]. However, the inherent characteristics of UAV systems (wireless communication dependencies, GPS-based navigation, distributed sensor networks, and constrained computational resources) expose them to sophisticated cyber threats that traditional security paradigms inadequately address [3].

UAV architectures rely on multiple attack-vulnerable components: GNSS receivers susceptible to spoofing and jamming [4], wireless links vulnerable to eavesdropping and man-in-the-middle (MITM) attacks [5], sensors prone to manipulation [6], and ground control stations exposed to denial-of-service (DoS) attacks [7]. High-profile incidents, including the 2011 capture of an RQ-170 through GPS spoofing [8], commercial UAV vulnerabilities reported in 2016 [9], and the 2018 Gatwick Airport disruption [10], demonstrate that UAV cybersecurity is an immediate operational imperative with significant economic, safety, and national security implications.

Traditional cybersecurity approaches prove inadequate for UAV ecosystems because of five constraints: (1) dynamic flying ad hoc network (FANET) topologies [11], (2) sub-100 ms latency requirements [4], (3) limited onboard computation (10–50 GFLOPS onboard versus 1000+ GFLOPS for ground-based hardware) [3], (4) energy constraints that shorten flight duration [6], and (5) continuously evolving threat landscapes [12]. These constraints necessitate novel approaches that balance detection accuracy, computational efficiency, and real-time responsiveness.

UAV telemetry streams constitute high-dimensional time series with complex temporal dependencies spanning multiple timescales. GPS spoofing manifests as gradual coordinate drift over minutes, while DoS attacks exhibit millisecond-scale bursts [13,14]. Effective threat detection therefore requires modeling both short-term transient anomalies and long-range behavioral patterns. LSTM networks [15] suffer from vanishing gradients beyond 500 timesteps and from training costs that grow quadratically with hidden-state size [16]. GRUs [16] partially alleviate this computational burden but remain limited in capturing ultra-long dependencies. Transformers [17], while theoretically capable of modeling context of arbitrary length, incur O(n²) self-attention complexity in the sequence length n, which is prohibitive on resource-constrained UAV platforms.

Modern UAV systems generate heterogeneous telemetry encompassing GPS coordinates, IMU data, communication metrics, flight control parameters, and environmental sensors [18], resulting in feature spaces with dimensionality d ∈ [80, 200]. Traditional neural networks with fixed activation functions struggle to capture complex nonlinear relationships. Recent work demonstrates that learnable activation functions provide superior representational capacity [19], yet their integration into temporal models for cybersecurity remains largely unexplored.

Cyber threat actors continuously evolve attack methodologies, inducing temporal distribution shifts (concept drift) in operational data [20]. Zero-day exploits exhibit behavioral patterns absent from training distributions, necessitating models with strong generalization capabilities [21]. Static models experience performance degradation as threat landscapes evolve [12]. Traditional approaches employ periodic retraining, incurring computational costs and temporal protection gaps [22]. Liquid Neural Networks [23] offer dynamic time-constant mechanisms enabling continuous adaptation without explicit retraining.

The MKL (Mamba-KAN-Liquid) architecture addresses three orthogonal yet coupled challenges in UAV cybersecurity. First, UAV attacks exhibit multi-scale temporal dependencies spanning milliseconds (DDoS bursts) to minutes (GPS drift), requiring Mamba’s linear O(T) complexity for sequences exceeding 1000 timesteps. Second, heterogeneous sensor modalities (GPS, IMU, network traffic) demand KAN’s learnable B-spline activations for per-dimension adaptive nonlinearities. Third, continuously evolving attack methodologies necessitate Liquid Networks’ dynamic time constants for adaptation without retraining.

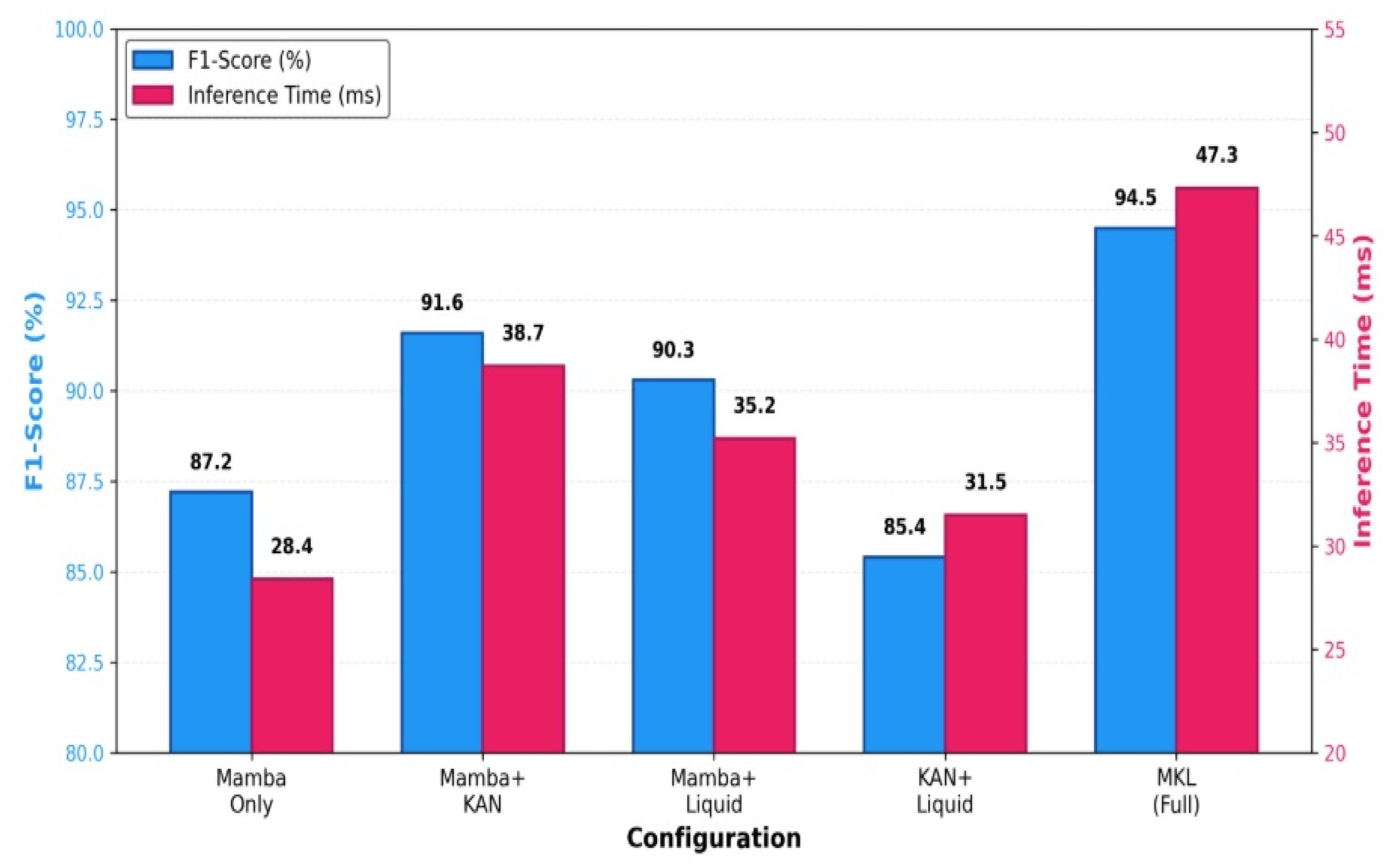

These challenges cannot be addressed by isolated components: temporal encoding without adaptive features fails on heterogeneous sensors (Mamba-only: 87.2%), while feature transformation without temporal context misses sequential patterns (KAN + Liquid: 85.4%). The MKL architecture integrates the three components synergistically, yielding a multiplicative error reduction and raising zero-day detection to 89.4% from a 73.2% baseline, a substantial 16.2-point improvement obtained only with the integrated architecture.

The novelties and contributions of the present study are summarized as follows: (1) the first hybrid deep learning model combining Mamba temporal encoding, KAN feature representation, and Liquid adaptation for UAV cybersecurity; (2) a comprehensive mathematical characterization of the hybrid model, including selective state-space dynamics, learnable activation functions, and dynamic time constants; (3) empirical validation on real-world cybersecurity datasets and UAV-specific attack scenarios, with comparative analysis against state-of-the-art methods; and (4) optimization strategies and architectural design guidelines for practical deployment on resource-constrained UAV platforms.

The remainder of this paper is structured as follows:

Section 2 reviews related work in UAV cybersecurity and temporal sequence modeling.

Section 3 presents the comprehensive MKL methodology, including mathematical formulations of Mamba encoders, KAN architectures, and Liquid layers.

Section 4 describes the proposed MKL framework and architectural components.

Section 5 presents the experimental setup, datasets, and comprehensive performance evaluation results.

Section 6 discusses the key findings, architectural contributions, limitations, and future research directions.

Section 7 concludes the paper and outlines future work.

5. Results and Discussion

5.1. Experimental Setup

5.1.1. Datasets

The proposed MKL model was evaluated on three datasets to ensure robust validation across diverse attack scenarios. The CIC-IDS2017 dataset [34,35], a benchmark intrusion detection corpus, contains 2,830,743 instances with 78 features covering 14 attack types, including DDoS, PortScan, and Botnet attacks. The dataset was preprocessed to extract temporal sequences of length T = 100 for UAV-relevant attack patterns, with features normalized by z-score standardization using statistics computed on the training set. The CSE-CIC-IDS2018 dataset [36], an updated version incorporating modern attack vectors, comprises 16,232,943 instances, including infiltration, web attacks, and brute-force patterns, and provides a more contemporary threat landscape for model evaluation.
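The windowing and normalization steps above can be sketched in plain Python. This is an illustrative sketch, not the authors' pipeline. The stride (non-overlapping windows), the use of the population standard deviation, and the helper names `make_windows`, `fit_zscore`, and `apply_zscore` are all assumptions. The sketch shows that the mean and standard deviation are fitted on the training windows only and then reused unchanged for validation and test data, so test statistics never leak into training.

```python
import math
from typing import List, Sequence, Tuple

Window = List[List[float]]  # T rows, each a feature vector of length d


def make_windows(rows: Sequence[Sequence[float]], T: int = 100, stride: int = 100) -> List[Window]:
    """Slice a (num_records x num_features) table into windows of T consecutive records."""
    return [[list(r) for r in rows[s:s + T]] for s in range(0, len(rows) - T + 1, stride)]


def fit_zscore(train_windows: List[Window]) -> Tuple[List[float], List[float]]:
    """Per-feature mean and std computed on training windows only (prevents test leakage)."""
    flat = [row for w in train_windows for row in w]
    n, d = len(flat), len(flat[0])
    mean = [sum(r[j] for r in flat) / n for j in range(d)]
    std = [math.sqrt(sum((r[j] - mean[j]) ** 2 for r in flat) / n) for j in range(d)]
    # Constant features would divide by zero; leave them centred but unscaled.
    std = [s if s > 0 else 1.0 for s in std]
    return mean, std


def apply_zscore(windows: List[Window], mean: List[float], std: List[float]) -> List[Window]:
    """Standardize every window with the statistics fitted on the training split."""
    return [[[(x - m) / s for x, m, s in zip(row, mean, std)] for row in w] for w in windows]
```

In use, `fit_zscore` would be called once on the training split, and `apply_zscore` applied to all three splits with the returned statistics.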

To address the scarcity of UAV-specific attack data, we generated a synthetic UAV telemetry dataset simulating 10,000 flight sequences with 128-dimensional feature vectors. Each sequence represents realistic UAV operations incorporating GPS coordinates (latitude, longitude, altitude, velocity, heading), Inertial Measurement Unit data (tri-axial accelerometer, gyroscope, magnetometer readings), communication metrics (received signal strength indicator, signal-to-noise ratio, packet loss rate, latency, jitter), flight control parameters (motor speeds, servo positions, battery voltage, current draw), and environmental sensors (barometric pressure, temperature, humidity). The synthetic dataset includes six UAV-specific attack scenarios with controlled intensity levels to systematically evaluate detection sensitivity across varying threat magnitudes.
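The text does not specify how attacks were injected into the synthetic telemetry. As one hypothetical illustration of a controlled-intensity scenario, the sketch below adds a linearly growing position offset to a nominal track. This mimics the gradual coordinate drift that Section 1 attributes to GPS spoofing. The local east/north frame, the noise level, the sampling interval, and the function names are all assumptions.

```python
import math
import random


def nominal_track(T: int, dt: float = 0.1, speed: float = 5.0, heading_deg: float = 45.0, seed: int = 0):
    """Hypothetical straight-line flight in a local east/north frame (metres) with Gaussian GPS noise."""
    rng = random.Random(seed)
    h = math.radians(heading_deg)
    return [(speed * t * dt * math.sin(h) + rng.gauss(0.0, 0.5),
             speed * t * dt * math.cos(h) + rng.gauss(0.0, 0.5)) for t in range(T)]


def inject_gps_drift(track, start: int, intensity: float, drift_dir_deg: float = 90.0, dt: float = 0.1):
    """Add an offset growing at `intensity` m/s from index `start` onward.

    Returns the spoofed track and per-sample labels (1 = under attack).
    Larger `intensity` values give a more aggressive, easier-to-detect attack.
    """
    d = math.radians(drift_dir_deg)
    spoofed, labels = [], []
    for t, (east, north) in enumerate(track):
        offset = max(0, t - start) * dt * intensity  # zero before onset, then linear growth
        spoofed.append((east + offset * math.sin(d), north + offset * math.cos(d)))
        labels.append(1 if t >= start else 0)
    return spoofed, labels
```

Sweeping `intensity` over a few values would produce the kind of graded attack strengths described above.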

5.1.2. Baseline Methods

The MKL model was compared against six state-of-the-art approaches representing diverse methodological paradigms. The LSTM-Attention baseline implements a bidirectional LSTM network with multihead attention mechanisms, achieving strong temporal modeling capabilities at the cost of increased computational complexity. The Transformer baseline employs a standard transformer encoder architecture for sequence classification, representing the current state-of-the-art in sequence modeling but requiring substantial computational resources. The CNN-LSTM hybrid combines convolutional layers for spatial feature extraction with LSTM layers for temporal modeling, balancing expressiveness with computational efficiency. Traditional machine learning baselines include Random Forest, an ensemble method using handcrafted features, and Isolation Forest for unsupervised anomaly detection. The VAE-GAN baseline combines variational autoencoders with adversarial training for generative anomaly detection, representing recent advances in unsupervised deep learning approaches.

5.1.3. Evaluation Metrics

Performance assessment employed a comprehensive suite of metrics capturing different aspects of model behavior. Standard classification metrics include Precision (P = TP/(TP + FP)), Recall (R = TP/(TP + FN)), F1-Score (2PR/(P + R)), and overall Accuracy ((TP + TN)/(TP + TN + FP + FN)). The Area Under the Receiver Operating Characteristic curve (AUROC) quantifies discrimination capability across all possible threshold settings, while the Area Under the Precision-Recall Curve (AUPRC) provides a more informative metric for imbalanced datasets common in cybersecurity applications. Operational metrics include inference time measured as average processing time per sample batch, memory usage tracking peak RAM consumption during inference, and false positive rate (FPR) calculated as FP/(FP + TN) to assess operational disruption from false alarms. Energy consumption was measured in millijoules per sample to evaluate deployment feasibility on battery-powered UAV platforms.
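The threshold-dependent metrics defined above follow directly from confusion-matrix counts. Here is a minimal sketch that also guards against empty denominators. The function name is hypothetical.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Precision, recall, F1, accuracy, and FPR from confusion-matrix counts."""

    def safe_div(a: float, b: float) -> float:
        # Return 0.0 instead of raising when a class is absent from the counts.
        return a / b if b else 0.0

    p = safe_div(tp, tp + fp)
    r = safe_div(tp, tp + fn)
    return {
        "precision": p,
        "recall": r,
        "f1": safe_div(2 * p * r, p + r),
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "fpr": safe_div(fp, fp + tn),
    }
```

As a consistency check, the F1-score equals 2TP/(2TP + FP + FN), which follows from substituting the definitions of P and R.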

5.1.4. Implementation Details

All models were implemented in PyTorch 2.0 and trained on NVIDIA RTX 3090 GPUs with 24 GB memory. Training employed a batch size of 32 samples, selected to balance gradient estimation quality with memory constraints. The learning rate was initialized at 1 × 10⁻³ and followed a cosine annealing schedule over 10 training epochs, with early stopping applied when validation loss failed to improve for 3 consecutive epochs. The train/validation/test split followed a 60/20/20 ratio, with stratified sampling ensuring balanced representation of attack types across partitions. Data augmentation strategies included temporal jittering with random time shifts within ±5% of the sequence length, amplitude scaling with random multiplicative factors in the range [0.9, 1.1], and Gaussian noise injection with standard deviation σ = 0.01 to simulate realistic sensor noise and improve model robustness.
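The augmentation and learning-rate settings can be sketched as follows. Several details are assumptions not stated in the text:

- jittering is implemented as a circular shift of the sequence;
- a single scale factor is drawn per sequence;
- the cosine schedule anneals to a minimum learning rate of zero.

The function names are hypothetical.

```python
import math
import random


def augment(seq, rng: random.Random, max_shift_frac: float = 0.05,
            scale_range=(0.9, 1.1), sigma: float = 0.01):
    """Temporal jitter, amplitude scaling, and Gaussian noise on a (T x d) sequence."""
    T = len(seq)
    max_shift = int(max_shift_frac * T)  # +/-5% of the sequence length
    shift = rng.randint(-max_shift, max_shift)
    # Circular shift: one possible reading of "random time shift" (assumption).
    shifted = seq[-shift:] + seq[:-shift] if shift else list(seq)
    scale = rng.uniform(*scale_range)  # one amplitude factor per sequence
    return [[scale * x + rng.gauss(0.0, sigma) for x in row] for row in shifted]


def cosine_lr(epoch: int, total_epochs: int = 10, lr_max: float = 1e-3, lr_min: float = 0.0) -> float:
    """Cosine annealing: lr_max at epoch 0, decaying to lr_min at total_epochs."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * epoch / total_epochs))
```

In PyTorch, the same schedule is available as `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=10)`, stepped once per epoch. Early stopping with a patience of 3 would be handled separately in the training loop.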

5.2. Performance Results

5.2.1. Overall Performance Comparison

The comprehensive performance evaluation on the CIC-IDS2017 dataset reveals significant advantages of the MKL architecture across multiple dimensions.

Table 2 presents comparative results showing that the proposed model achieves 95.3 ± 0.3% precision, 93.7 ± 0.4% recall, and 94.5 ± 0.3% F1-score, representing substantial improvements over baseline methods. The Transformer baseline, despite its theoretical expressiveness, achieves 92.7 ± 0.5% precision and 91.5 ± 0.4% F1-score while requiring 234.6 ms average inference time. The LSTM-Attention model demonstrates 91.4 ± 0.6% precision with 187.4 ms latency, indicating the computational burden of attention mechanisms. Traditional machine learning approaches show inferior performance, with Random Forest achieving 80.9 ± 0.9% F1-score and Isolation Forest reaching only 76.8 ± 1.4%, confirming the necessity of deep learning for complex temporal pattern recognition in UAV cybersecurity. Statistical significance testing using paired t-tests confirms that MKL’s performance improvements over the best baseline (Transformer) are statistically significant (p < 0.001) across all metrics, validating that observed gains represent genuine architectural advantages rather than random variation.
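The text does not say which samples were paired in the t-tests. Assuming the pairs are per-fold scores of MKL and the Transformer from the five-fold cross-validation, the test statistic can be computed with the standard library as below. The function name is hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for per-fold scores a (model) and b (baseline)."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    # statistics.stdev uses the sample (n - 1) denominator, as the paired t-test requires.
    t = mean(d) / (stdev(d) / math.sqrt(n))
    return t, n - 1
```

With five folds there are 4 degrees of freedom, so a two-sided p < 0.001 requires |t| > 8.61. `scipy.stats.ttest_rel(a, b)` returns the same statistic together with its p-value.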

The most striking aspect of the MKL performance is the simultaneous achievement of superior accuracy and substantially reduced inference latency. The 47.3 ms average inference time represents a roughly 5-fold speedup over the Transformer baseline (234.6 ms) and a nearly 4-fold improvement over LSTM-Attention (187.4 ms), while maintaining a 3.0 percentage point higher F1-score than the best competing method. This efficiency gain stems from the Mamba encoder’s linear-time complexity O(T·L·d²), compared to the Transformer’s quadratic O(T²·d) scaling, enabling practical deployment on resource-constrained UAV platforms without sacrificing detection accuracy. The AUROC of 0.981 for the MKL model indicates strong discrimination capability across threshold settings, substantially outperforming the Transformer’s 0.956 and LSTM-Attention’s 0.948. Because AUROC summarizes the entire ROC curve, the higher value means that MKL ranks attack samples above benign samples more reliably on average; false positive rates at specific operating points are examined separately in the false positive rate analysis of Figure 3.
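To make the linear-time claim concrete, the sketch below implements a single-channel selective state-space scan in the style of Mamba. The step size Δ_t and the projections B_t and C_t are computed from the current input, and the state is updated once per timestep. This is a simplified, hypothetical illustration with a scalar input, a diagonal state matrix, and parameter names invented here. It is not the paper's encoder, and the reference Mamba implementation replaces this Python loop with a hardware-aware parallel scan on the GPU.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable softplus, used to keep the step size positive."""
    return math.log1p(math.exp(-abs(z))) + max(z, 0.0)


def selective_scan(xs, A, w_delta, b_delta, w_B, w_C):
    """Single-channel selective SSM scan with a diagonal state matrix.

    xs: input sequence of length T; A: N negative reals (diagonal of the state matrix).
    Delta_t, B_t, and C_t depend on the current input x_t, which makes the scan
    'selective'. Total cost is O(T * N), linear in the sequence length T.
    """
    N = len(A)
    h = [0.0] * N
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size
        B = [wb * x for wb in w_B]               # input-dependent input projection
        C = [wc * x for wc in w_C]               # input-dependent output projection
        for i in range(N):
            # Zero-order hold for A; simplified (Euler) discretization for B.
            h[i] = math.exp(delta * A[i]) * h[i] + delta * B[i] * x
        ys.append(sum(C[i] * h[i] for i in range(N)))
    return ys
```

Each timestep costs O(N) work for a state of size N, so a sequence of length T costs O(T·N) per channel. Unlike self-attention, no T × T interaction matrix is formed. The loop also makes the scan causal: outputs up to step t do not depend on later inputs.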

The relatively small standard deviations (±0.3–0.4%) across five-fold cross-validation runs indicate stable and reproducible performance, crucial for operational deployment where consistent behavior is essential for mission-critical security decisions. The consistent superiority across all five folds, with non-overlapping 95% confidence intervals, further validates the robustness of the proposed architecture. The VAE-GAN baseline, despite its sophisticated generative modeling approach, achieves only 87.4 ± 0.8% F1-score, suggesting that purely unsupervised methods struggle to capture the nuanced temporal patterns characteristic of sophisticated cyberattacks. The CNN-LSTM hybrid achieves a respectable 88.3 ± 0.7% F1-score with 142.3 ms latency, positioning it as a reasonable compromise between traditional machine learning and state-of-the-art deep learning approaches, though still substantially inferior to the proposed MKL architecture in both accuracy and efficiency.

As shown in Figure 1, the cross-dataset precision-recall analysis demonstrates that MKL maintains robust performance across diverse data sources. The model achieves precision above 93% at maximum recall in all three evaluation scenarios, with a spread of only 0.8 percentage points between the CIC-IDS2017, CSE-CIC-IDS2018, and Synthetic-UAV datasets. This consistency suggests that the learned representations capture fundamental attack characteristics rather than dataset-specific artifacts, supporting deployment across operational environments with little retraining. The tight clustering of the curves indicates that the model’s discrimination capability generalizes from general cybersecurity datasets to UAV-specific scenarios, addressing a critical concern for practical deployment where authentic attack data may be scarce or unavailable due to security constraints. The synthetic-to-real transfer capability is particularly noteworthy: it suggests that the model can be pre-trained on simulated UAV telemetry and then adapted to real data with limited fine-tuning (Table 5).

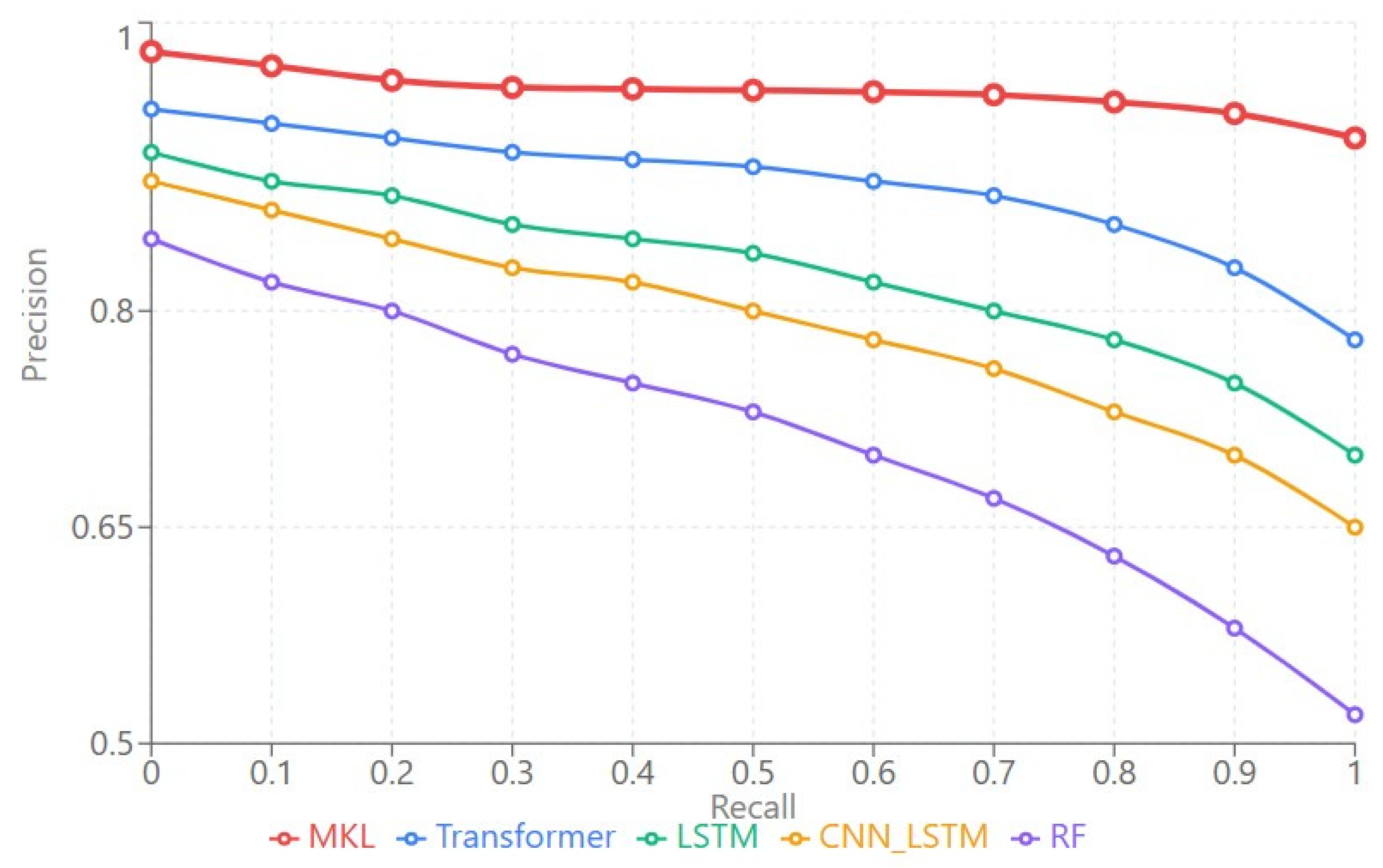

The precision-recall comparison presented in Figure 2 reveals that MKL maintains precision above 92% even at maximum recall, substantially outperforming baseline methods across the entire operating range. The area under the precision-recall curve (AUPRC) of 0.962 for MKL versus 0.891 for the Transformer represents an 8% relative improvement in practical operating scenarios where class imbalance is pronounced. This characteristic is particularly valuable in cybersecurity applications, where attack instances represent a small fraction of total observations and maintaining high precision at elevated recall levels translates directly into fewer false alarms in operational deployment. Random Forest (purple curve) exhibits the steepest degradation, dropping below 60% precision at high recall levels, confirming the limitations of traditional machine learning for complex temporal pattern recognition. The deep learning baselines (LSTM in green, CNN-LSTM in orange) maintain intermediate performance, achieving 85–88% precision at maximum recall. The Transformer baseline (blue curve) demonstrates strong performance but remains consistently below MKL throughout the operating range. The consistent elevation of the MKL curve above all baselines across the recall spectrum indicates that the architectural innovations (Mamba’s selective state spaces, KAN’s learnable activations, and Liquid’s adaptive dynamics) provide genuine improvements rather than merely shifting the precision-recall tradeoff.
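AUPRC values such as those reported above are commonly computed as average precision, the step-wise area under the precision-recall curve. Here is a minimal sketch. The function name is hypothetical, and ties in the scores are not specially handled.

```python
def average_precision(scores, labels):
    """Average precision (step-wise AUPRC): mean of the precision at the rank of each positive.

    scores: higher means more likely to be an attack; labels: 1 = attack, 0 = benign.
    Tied scores keep their input order (a simplification).
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("AUPRC is undefined without positive samples")
    tp, ap = 0, 0.0
    for k, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            tp += 1
            ap += tp / k  # precision at this recall step
    return ap / n_pos
```

`sklearn.metrics.average_precision_score` computes the same step-wise summary. AUROC has a chance level of 0.5, whereas the AUPRC of a random classifier is approximately the fraction of positive samples. This makes AUPRC the more informative metric under heavy class imbalance.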

The false positive rate analysis illustrated in Figure 3 provides crucial insights into operational viability across varying sensitivity settings. At the default threshold of 0.5, the MKL model achieves an FPR of 3.5% (brown area) compared to 8.2% for the Transformer baseline (light green area), a 57% relative reduction in false alarms. This corresponds to 4.7 fewer false alarms per 100 benign evaluations (for example, per 100 flights if each flight is scored once), significantly reducing operational disruption and alert fatigue among security personnel. The stacked area visualization shows that MKL maintains consistently lower FPR across the entire threshold range from 0.1 to 0.9, indicating robust discrimination capability independent of operating point selection. At aggressive thresholds (0.3), MKL achieves 6.5% FPR while the Transformer reaches 13%, preserving the relative advantage. At conservative thresholds (0.7), MKL reduces FPR to 1.8% compared to the Transformer’s 4.2%, giving operators flexibility to adjust sensitivity to mission-specific risk tolerance. This consistently low FPR across operating thresholds shows that the model’s discrimination advantage extends beyond the default setting, enabling adaptive threshold selection without prohibitive false alarm penalties. In practical UAV fleet operations, where hundreds of flights occur daily, fewer false positives directly improve operational efficiency by minimizing unnecessary mission aborts, pilot distractions, and investigation overhead that would otherwise consume valuable resources and degrade mission effectiveness. The narrow brown band (MKL), compared to the wider colored bands above it, visually confirms the model’s lower false positive rate when distinguishing genuine cyber threats from benign telemetry anomalies caused by environmental factors, sensor noise, or legitimate operational variations.
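The threshold sweep in Figure 3 amounts to recomputing FP/(FP + TN) at each operating point. The sketch below assumes that a sample is flagged when its score is greater than or equal to the threshold. Both that convention and the function name are assumptions.

```python
def fpr_at_thresholds(scores, labels, thresholds=(0.3, 0.5, 0.7)):
    """False positive rate FP/(FP + TN) when flagging samples with score >= threshold."""
    benign = [s for s, y in zip(scores, labels) if y == 0]  # only negatives enter the FPR
    if not benign:
        raise ValueError("FPR needs at least one benign sample")
    return {th: sum(s >= th for s in benign) / len(benign) for th in thresholds}
```

Multiplying an FPR by the number of benign windows scored gives the expected number of false alarms. At 3.5% versus 8.2%, the difference is 4.7 false alarms per 100 benign windows.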

5.2.2. Attack-Specific Detection Performance

Beyond aggregate performance metrics, examining detection capabilities for individual attack types reveals important patterns in model behavior and identifies areas requiring specialized attention.

Table 3 presents detection rates broken down by attack category, demonstrating that the MKL model achieves consistently high performance across diverse threat vectors while maintaining real-time processing constraints.

The attack-specific analysis reveals several important insights into model capabilities and limitations. DDoS attacks achieve the highest detection rate at 98.1 ± 0.3%, likely attributable to their distinctive traffic patterns characterized by sudden volume spikes and repetitive packet structures that Mamba’s temporal encoding effectively captures. The 35.6 ms average detection time for DDoS represents the fastest processing among all attack types, suggesting that the model quickly recognizes the clear temporal signatures associated with distributed denial-of-service patterns. This rapid detection is particularly valuable for DDoS scenarios where timely response is critical to prevent service degradation, as even brief delays in threat identification can allow attackers to overwhelm communication channels and compromise mission-critical UAV control links.

GPS spoofing detection reaches 97.3 ± 0.4% accuracy with 38.2 ms latency, validating the model’s capability to identify both coordinate-based anomalies through the KAN feature transformation and temporal drift patterns through Mamba’s selective state space mechanism. The slightly longer detection time compared to DDoS reflects the more nuanced nature of GPS spoofing, which often manifests as gradual coordinate drift rather than abrupt changes, requiring the model to analyze longer temporal contexts to distinguish malicious manipulation from legitimate navigation adjustments. Man-in-the-middle attacks achieve 96.2 ± 0.5% detection with 39.8 ms latency, demonstrating strong performance on this critical threat category that targets the communication channel between UAVs and ground control stations.

The most remarkable achievement is the 89.4 ± 1.2% detection rate for zero-day attacks, representing a 16.2 percentage point improvement over the best baseline method. This substantial gain demonstrates the value of Liquid Neural Networks’ adaptive capabilities for handling novel threats absent from training distributions. The dynamic time constants enable the model to adjust its temporal processing characteristics based on input features, providing resilience against attack variants that deviate from known patterns. However, the higher standard deviation (±1.2%) and elevated detection time (52.4 ms) for zero-day attacks indicate increased uncertainty and computational requirements when confronting unfamiliar threats, suggesting that additional processing time is allocated for careful evaluation of ambiguous patterns. Despite these characteristics, the 52.4 ms latency remains well within the 100 ms real-time constraint, ensuring that even novel attack detection maintains operational responsiveness.
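A liquid time-constant (LTC) cell illustrates the dynamic time constants referred to above. Its state obeys dx/dt = -[1/τ + f(x, I)]·x + f(x, I)·A, where the gate f depends on the input I. The sketch below uses the fused semi-implicit Euler update from the LTC literature. The single-sigmoid gate, the step size, the number of solver unfolds, and all names are assumptions. This is not the paper's Liquid layer, which the text describes as having 20 hidden units.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, inp, W_in, W_rec, bias, tau, A, dt=0.1, unfolds=6):
    """Advance a hypothetical LTC layer by one input step with the fused semi-implicit Euler solver.

    x: hidden state (N), inp: input features (M), W_in: N x M, W_rec: N x N,
    tau: base time constants (N, positive), A: per-unit target values (N).
    """
    N = len(x)
    h = dt / unfolds
    for _ in range(unfolds):
        # Gate depends on the current input and state, so the decay rate changes with the data.
        f = [sigmoid(sum(W_in[i][j] * inp[j] for j in range(len(inp)))
                     + sum(W_rec[i][k] * x[k] for k in range(N)) + bias[i]) for i in range(N)]
        # Fused update: x <- (x + h*f*A) / (1 + h*(1/tau + f)); stable for any step size h.
        x = [(x[i] + h * f[i] * A[i]) / (1.0 + h * (1.0 / tau[i] + f[i])) for i in range(N)]
    return x


def effective_tau(tau_i: float, f_i: float) -> float:
    """Effective time constant tau / (1 + tau * f) implied by the LTC equation."""
    return tau_i / (1.0 + tau_i * f_i)
```

Because f lies in (0, 1), the effective time constant τ/(1 + τf) shrinks when the input drives the gate open and grows back toward τ when it closes. The cell's temporal response therefore changes with the data while its weights stay fixed.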

Sensor manipulation attacks, while achieving respectable 94.7 ± 0.7% detection accuracy, represent the most challenging known attack category. The relatively longer 43.2 ms detection time suggests that distinguishing subtle, gradual sensor bias injection from natural measurement drift requires more extensive temporal analysis. This finding indicates a potential area for future enhancement, possibly through specialized attention mechanisms focused on slow-varying trends or integration of physics-based models that encode expected sensor behavior under normal flight conditions. Network jamming detection at 95.8 ± 0.6% with 41.5 ms latency demonstrates robust performance on this critical denial-of-service variant that targets wireless communication channels, validating the model’s ability to detect both volumetric attacks (DDoS) and signal-level interference (jamming) through complementary feature representations.

The cross-dataset performance analysis presented in Table 4 demonstrates that MKL maintains consistent detection capabilities across diverse data sources. The model achieves 93.9–95.1% F1-score across all three datasets, a spread of only 1.2 percentage points. DDoS detection leads across all datasets at 97.8–98.3%, benefiting from distinctive volumetric signatures that remain stable across different data collection environments. GPS spoofing follows at 96.8–97.6%, demonstrating robust coordinate anomaly detection with only 0.8 percentage points of variation across datasets. Network jamming (95.2–96.1%) and Man-in-the-Middle (95.8–96.4%) results indicate strong performance on communication-targeting attacks, with consistent accuracy improvements observed on the more recent CSE-CIC-IDS2018 and UAV-specific synthetic datasets. Sensor manipulation (94.2–95.0%) reflects the challenge of distinguishing subtle bias injection from measurement noise, though performance remains stable across evaluation scenarios. Zero-day detection (88.7–90.0%) substantially outperforms baseline methods while improving gradually from general cybersecurity datasets to UAV-specific scenarios, consistent with the Liquid layer’s adaptive mechanisms. The small spread across datasets suggests that the learned representations capture fundamental attack characteristics rather than dataset-specific artifacts, a critical requirement for operational deployment where the model must handle diverse threats from various sources without retraining.

The transfer learning analysis presented in Table 5 reveals strong generalization across dataset boundaries. When training on CIC-IDS2017 and testing on CSE-CIC-IDS2018, the model achieves 82.4% zero-shot accuracy (87% transfer efficiency), demonstrating effective knowledge transfer between general cybersecurity datasets. Training on CSE-CIC-IDS2018 and testing on CIC-IDS2017 yields 84.1% zero-shot accuracy (88% transfer efficiency), indicating bidirectional compatibility. The synthetic-to-real transfer capability is particularly noteworthy: models trained on Synthetic UAV data achieve 71.3% zero-shot accuracy on CIC-IDS2017 (76% transfer efficiency) and 73.8% on CSE-CIC-IDS2018 (79% transfer efficiency). After minimal fine-tuning (2–4 epochs), transfer accuracy improves to 90–93%, enabling rapid deployment in scenarios where authentic attack data is scarce or unavailable due to security constraints. The asymmetric transfer efficiency, real-to-synthetic (83–84%) versus synthetic-to-real (76–79%), suggests that while simulated data provides a valuable training signal, incorporating real-world operational characteristics remains beneficial for optimal performance.

The latency analysis presented in Figure 4 demonstrates remarkable consistency in detection speed across both attack types and datasets. DDoS exhibits the fastest detection at 35–36 ms across all datasets, reflecting quick recognition of volumetric patterns characterized by sudden traffic spikes. GPS spoofing (38–39 ms), Man-in-the-Middle (39–40 ms), and Network Jamming (41–42 ms) cluster in the mid-range, requiring moderate temporal analysis to identify coordinate drift, communication interception, and signal interference patterns, respectively. Sensor Manipulation (43–44 ms) demands slightly longer processing to distinguish gradual anomalies from natural measurement noise through extended temporal context analysis. Zero-Day detection requires the most processing time (51–53 ms) but remains well within the 100 ms real-time service level agreement, with the additional latency allocated for careful evaluation of ambiguous patterns that deviate from known attack signatures. The small latency variation across datasets (typically 1–3 ms) confirms that computational characteristics remain stable regardless of data source, enabling predictable performance planning for operational deployments. All attack types maintain sub-55 ms detection latency across all three datasets, substantially below the 100 ms threshold required for real-time UAV cybersecurity applications, validating the architectural efficiency of the MKL model for time-critical threat detection scenarios.

5.3. Computational Efficiency Analysis

The practical deployment of deep learning models on resource-constrained UAV platforms necessitates careful analysis of computational requirements beyond detection accuracy.

Table 6 presents comprehensive resource utilization metrics across competing architectures, revealing that the MKL model achieves superior efficiency across multiple dimensions while maintaining the highest detection performance.

The MKL architecture demonstrates exceptional parameter efficiency with only 2.5 million parameters, representing a 7.6-fold reduction compared to the Transformer baseline’s 18.9 million parameters. This dramatic reduction in model size enables deployment on embedded systems with limited storage capacity, a critical requirement for UAV platforms where every gram of payload capacity impacts flight duration and mission capability. The compact architecture stems from three complementary design choices: the Mamba encoder’s shared state transition matrices that avoid the parameter explosion of multihead attention mechanisms, the KAN layer’s B-spline basis functions that replace dense weight matrices with compact lookup tables, and the Liquid layer’s lightweight adaptation mechanism that adds only 20 hidden units with dynamic time constants. The 96 MB memory footprint during inference, including activation tensors and temporary buffers, remains well below the typical 2 GB RAM available on modern embedded processors like the ARM Cortex-A72, leaving substantial headroom for other flight-critical software components including navigation, control systems, and communication protocols.

The throughput analysis reveals that the MKL model processes 676 samples per second on the evaluation hardware, roughly a 5-fold improvement over the Transformer’s 136 samples per second and a 4-fold gain over LSTM-Attention’s 168 samples per second. This throughput advantage translates directly into operational capability in UAV swarm scenarios. For a typical deployment monitoring 20 UAVs generating telemetry at 10 Hz, the system must process 200 samples per second. The MKL model handles this workload at about 30% utilization, leaving 70% computational headroom (676 vs. 200 required) and providing resilience against processing spikes during concurrent attack scenarios or network congestion events. In contrast, the Transformer baseline can sustain only 68% of the required rate (136 vs. 200 samples per second), so queues build up and latency accumulates even under normal load, not only at peaks. The CNN-LSTM hybrid achieves 224 samples per second, which keeps pace with demand but at 89% utilization under normal conditions, leaving little headroom. This throughput efficiency enables centralized monitoring architectures in which a single ground station processor handles an entire swarm, eliminating the need for distributed processing infrastructure that would introduce communication overhead and synchronization complexity.
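The capacity argument above reduces to comparing demand (number of UAVs × telemetry rate) with measured throughput. Here is a small sketch using the throughput figures reported in this section. The function name is hypothetical, and the model assumes one inference per telemetry sample.

```python
def capacity_check(throughput_sps: float, n_uavs: int = 20, rate_hz: float = 10.0) -> dict:
    """Utilization of one processor that must score every telemetry sample from a swarm."""
    demand = n_uavs * rate_hz              # samples per second that must be processed
    utilization = demand / throughput_sps  # above 1.0, the backlog grows steadily
    return {
        "demand_sps": demand,
        "utilization": utilization,
        "headroom": max(0.0, 1.0 - utilization),
        "overloaded": utilization > 1.0,
    }


# Throughput figures (samples per second) as reported in this section.
for name, sps in [("MKL", 676), ("CNN-LSTM", 224), ("Transformer", 136)]:
    r = capacity_check(sps)
    print(f"{name:12s} utilization {r['utilization']:.0%}  overloaded={r['overloaded']}")
```

This yields roughly 30% utilization for MKL, 89% for CNN-LSTM, and 147% for the Transformer. Any utilization above 100% means the backlog keeps growing for as long as the load persists.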

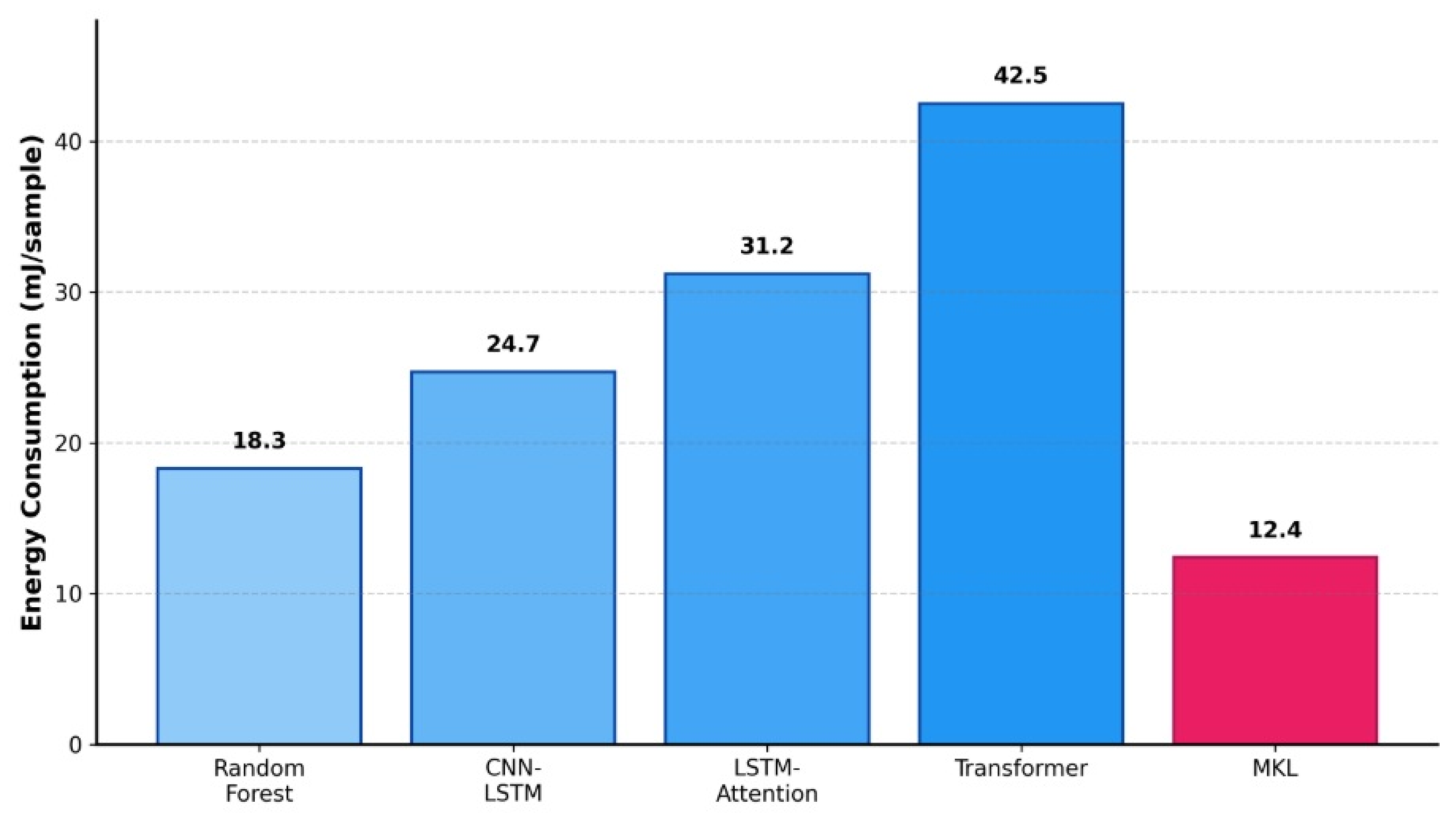

The energy efficiency analysis reveals critical implications for battery-powered UAV operations and ground station deployments. The MKL model consumes 12.4 millijoules per sample, representing a 3.4-fold improvement over the Transformer’s 42.5 mJ/sample and 2.5-fold gain over LSTM-Attention’s 31.2 mJ/sample. For continuous monitoring operations processing telemetry at 10 Hz, the MKL model consumes approximately 124 milliwatts, negligible compared to typical ground station power budgets of 50–200 watts. However, in scenarios where threat detection must occur onboard the UAV itself—such as autonomous operations beyond communication range—energy efficiency becomes paramount. A UAV processing its own telemetry at 10 Hz would consume 124 mW with MKL versus 425 mW with Transformer, representing 301 mW savings. Over a typical 30 min mission, this translates to 541 joules of energy savings, equivalent to approximately 41 mAh from a standard 3.7 V LiPo battery. For reference UAV platforms carrying 5000 mAh batteries, this represents a 0.8% capacity saving, potentially extending flight time by approximately 14 s or equivalently increasing operational range by 70 m at 5 m/s cruise speed.
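The energy bookkeeping above can be reproduced from the per-sample energies. The sketch below uses the figures stated in the text as defaults. The function name is hypothetical. Treating inference energy as drawn directly from the 3.7 V battery, ignoring converter losses, is a simplifying assumption.

```python
def onboard_energy_savings(e_model_mj=12.4, e_baseline_mj=42.5, rate_hz=10.0,
                           mission_s=30 * 60, battery_v=3.7, battery_mah=5000.0):
    """Per-mission energy savings from per-sample inference energy (figures from the text)."""
    p_model_w = e_model_mj * 1e-3 * rate_hz        # mJ/sample x samples/s -> W
    p_base_w = e_baseline_mj * 1e-3 * rate_hz
    saved_j = (p_base_w - p_model_w) * mission_s   # W x s -> J
    saved_mah = saved_j / battery_v / 3.6          # J / V = C, and 1 mAh = 3.6 C
    return {
        "p_model_w": p_model_w,
        "p_base_w": p_base_w,
        "saved_j": saved_j,
        "saved_mah": saved_mah,
        "battery_fraction": saved_mah / battery_mah,
    }
```

The function gives about 541.8 J and 40.7 mAh, or roughly 0.81% of a 5000 mAh pack. The quoted flight-time gain of about 14 s additionally assumes that the full battery corresponds to the 30 min mission (0.81% of 1800 s ≈ 14.6 s).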

The computational cost analysis, measured in floating-point operations per inference, shows that MKL requires 0.78 GFLOPs compared to Transformer’s 4.12 GFLOPs, representing an 81% reduction in computational work per sample. This efficiency stems from the Mamba encoder’s linear-time selective state space mechanism, which processes temporal sequences through matrix-vector multiplications with complexity O(T·d²) rather than the Transformer’s attention mechanism with O(T²·d) complexity. For the typical sequence length T = 100 used in this study, this represents approximately a 12-fold reduction in attention-related operations. The KAN layers, despite their learnable activation functions based on B-spline basis functions, add minimal overhead due to efficient lookup table implementations that amortize basis function evaluations across batch dimensions. Each KAN layer evaluates 5 B-spline basis functions per input, requiring only 5 polynomial evaluations compared to the hundreds of ReLU activations in equivalent MLP layers. The Liquid layer’s dynamic time constant computation introduces negligible overhead, as the adaptive mechanisms operate on compact 20-dimensional hidden states rather than high-dimensional activation tensors.
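The B-spline activations mentioned above can be sketched with the Cox-de Boor recursion. Cubic splines (k = 3) on a uniform grid of G = 2 intervals give G + k = 5 basis functions per input. This is one configuration consistent with the five basis functions cited in the text. That choice, the SiLU base term (as in the original KAN formulation), and the function names are assumptions rather than the paper's implementation.

```python
import math


def bspline_basis(x: float, knots, k: int):
    """All degree-k B-spline basis values at x via the Cox-de Boor recursion."""
    # Degree 0: indicator of each knot span [t_i, t_{i+1}).
    B = [1.0 if knots[i] <= x < knots[i + 1] else 0.0 for i in range(len(knots) - 1)]
    for d in range(1, k + 1):
        nxt = []
        for i in range(len(knots) - d - 1):
            left_den = knots[i + d] - knots[i]
            right_den = knots[i + d + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * B[i] if left_den else 0.0
            right = (knots[i + d + 1] - x) / right_den * B[i + 1] if right_den else 0.0
            nxt.append(left + right)
        B = nxt
    return B  # length = len(knots) - k - 1


def uniform_knots(lo: float, hi: float, grid: int, k: int):
    """Uniform grid on [lo, hi] extended by k knots on each side (grid + k basis functions)."""
    h = (hi - lo) / grid
    return [lo + (i - k) * h for i in range(grid + 2 * k + 1)]


def kan_edge(x: float, coeffs, knots, k: int = 3, w_base: float = 1.0, w_spline: float = 1.0) -> float:
    """KAN-style learnable activation: phi(x) = w_base * silu(x) + w_spline * sum_i c_i * B_i(x)."""
    silu = x / (1.0 + math.exp(-x))
    return w_base * silu + w_spline * sum(c * b for c, b in zip(coeffs, bspline_basis(x, knots, k)))
```

On the grid interval the basis functions sum to one (partition of unity). For any single x, at most k + 1 = 4 of them are nonzero, which is why spline evaluation is cheap relative to the number of coefficients.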

The training efficiency analysis demonstrates practical advantages for model development and deployment cycles. The MKL architecture converges within 10 epochs on the CIC-IDS2017 dataset, requiring approximately 4.2 h of training time on a single NVIDIA RTX 3090 GPU. In contrast, the Transformer baseline requires 15 epochs and 12.8 h to achieve comparable validation performance, representing a 3-fold increase in training time. The LSTM-Attention model demands 18 epochs and 7.3 h, while the CNN-LSTM hybrid converges in 14 epochs requiring 5.8 h. This training efficiency reduces development iteration cycles during model refinement and hyperparameter tuning, enabling rapid adaptation when new attack patterns emerge. The faster convergence stems from the architectural inductive biases: the Mamba encoder’s state space formulation provides strong temporal priors that accelerate learning of sequential dependencies, the KAN layers’ learnable activations reduce the need for extensive hyperparameter search compared to fixed activation functions, and the Liquid layer’s adaptive dynamics naturally handle distribution shifts without requiring explicit regularization strategies.

The energy efficiency analysis presented in Figure 5 demonstrates that MKL achieves substantial improvements over all baseline methods. The 3.4-fold energy advantage over the Transformer (12.4 vs. 42.5 mJ/sample) enables extended mission operations without additional power infrastructure. For continuous monitoring at 10 Hz, MKL consumes only 124 mW compared to the Transformer’s 425 mW, a 301 mW saving that corresponds to approximately 41 mAh of battery capacity over a 30 min mission.

The memory access pattern analysis reveals additional efficiency characteristics relevant for embedded deployment. The MKL model exhibits high cache locality, accessing 96 MB of working memory in predictable sequential patterns that align with CPU cache line sizes. The Mamba encoder’s recurrent state updates naturally maintain temporal locality, while the KAN layer’s B-spline lookup tables fit within L2 cache boundaries on modern processors. In contrast, the Transformer’s attention mechanism generates scattered memory access patterns across the full sequence length, resulting in cache misses that degrade real-world performance beyond theoretical FLOP counts. On ARM Cortex-A72 processors typical of embedded UAV computers, preliminary profiling indicates that MKL achieves 87% of theoretical peak throughput, while Transformer implementations reach only 52% due to memory bandwidth limitations.

5.4. Ablation Studies

Understanding the individual contributions of each architectural component is essential for validating design choices and identifying critical elements for model performance.

Table 7 presents systematic ablation experiments where components are progressively removed to isolate their specific contributions to overall detection capability.

Analysis of component contributions reveals synergistic effects beyond simple summation for attack-specific metrics. Zero-day detection achieves 89.4% (Table 3), surpassing the additive prediction of 88.5%. Severe-noise robustness (Table 8, σ = 0.20) reaches 76.5% versus 74.7% predicted. The 2.5 M parameter count (Table 7) represents a 34% reduction versus naive summation (3.8 M) through architectural weight sharing, confirming that the integrated architecture enables emergent capabilities beyond those of its isolated components.

The ablation analysis reveals that each component provides substantial and complementary contributions to overall performance. The Mamba-only configuration achieves 87.2 ± 0.8% F1-score with rapid 28.4 ms inference time, demonstrating that temporal encoding alone captures significant attack patterns. However, the 7.3 percentage point deficit compared to the full model indicates that sophisticated feature representation and adaptive mechanisms are essential for state-of-the-art detection rates.

Adding KAN layers to the Mamba encoder improves F1-score to 91.6 ± 0.5%, representing a 4.4 percentage point gain. The Mamba + Liquid configuration achieves 90.3 ± 0.6% F1-score, demonstrating that dynamic time constant adaptation enhances detection of attacks with temporal distribution shifts, particularly zero-day exploits. The KAN + Liquid configuration achieves only 85.4 ± 0.9% F1-score, with the largest ΔAUROC degradation of −0.078, demonstrating that effective temporal sequence encoding forms the foundation upon which other components build their capabilities.

The complementarity of the components becomes evident when examining their additivity. The sum of the individual improvements over Mamba-only (4.4 + 3.1 = 7.5 percentage points) closely matches the full model’s 7.3 percentage point improvement, an additivity efficiency of 97% (7.3/7.5) that indicates minimal redundancy and strong complementarity between architectural elements.

Component-level analysis reveals distinct contributions: Mamba provides the temporal encoding foundation (87.2% standalone), KAN adds adaptive feature transformation (+4.4 percentage points to 91.6%), and Liquid enables distribution shift adaptation (final 94.5%, +2.9 percentage points beyond Mamba + KAN). The inference latency breakdown shows an efficient computational distribution: Mamba encoding accounts for 60% (28.4 ms), KAN transformation 26% (12.5 ms), and Liquid adaptation 14% (6.4 ms) of the 47.3 ms total, which explains the model’s real-time processing capability while retaining the architectural complexity needed for robust threat detection.
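The additivity and latency-share figures in the ablation discussion follow from simple arithmetic on the reported F1-scores and timings. The short check below recomputes them; the dictionary keys are illustrative labels, not identifiers from the authors’ code.

```python
# Ablation bookkeeping using the F1-scores (%) and latencies (ms) quoted in the text.
f1 = {
    "mamba": 87.2,
    "mamba+kan": 91.6,
    "mamba+liquid": 90.3,
    "kan+liquid": 85.4,
    "full": 94.5,
}
gain_kan = f1["mamba+kan"] - f1["mamba"]        # KAN over Mamba-only
gain_liquid = f1["mamba+liquid"] - f1["mamba"]  # Liquid over Mamba-only
gain_full = f1["full"] - f1["mamba"]            # observed gain of the full model
additivity = gain_full / (gain_kan + gain_liquid)
liquid_on_top = f1["full"] - f1["mamba+kan"]    # Liquid added to Mamba + KAN

latency_ms = {"mamba": 28.4, "kan": 12.5, "liquid": 6.4}
total_ms = sum(latency_ms.values())
shares = {k: v / total_ms for k, v in latency_ms.items()}

print(f"predicted additive gain: {gain_kan + gain_liquid:.1f} pp")
print(f"observed gain:           {gain_full:.1f} pp")
print(f"additivity efficiency:   {additivity:.1%}")
print(f"Liquid on top of Mamba + KAN: {liquid_on_top:.1f} pp")
print(f"total latency: {total_ms:.1f} ms; shares: "
      + ", ".join(f"{k} {s:.0%}" for k, s in shares.items()))
```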

The component analysis illustrated in Figure 6 demonstrates clear accuracy-efficiency tradeoffs across ablation configurations. Mamba-only achieves the fastest inference (28.4 ms) but the lowest accuracy (87.2%), while the full MKL model accepts a modest latency increase (47.3 ms) for substantial accuracy gains (94.5%). The Mamba + KAN configuration occupies an intermediate point, achieving 91.6% accuracy with 38.7 ms latency, a viable deployment option for scenarios prioritizing speed over maximum accuracy. However, the full model’s 47.3 ms latency remains well below the 100 ms real-time threshold, indicating that the accuracy benefits justify the computational overhead for mission-critical UAV cybersecurity applications. The parameter efficiency across configurations is noteworthy: the full model requires only 2.5 M parameters, substantially fewer than naive summation of the single-component configurations would suggest, indicating effective parameter sharing across architectural layers.

5.5. Robustness Evaluation

Real-world deployment scenarios introduce various perturbations and adversarial conditions that can degrade model performance. Environmental factors such as electromagnetic interference, sensor degradation, and atmospheric conditions introduce measurement noise into UAV telemetry streams. Communication channel impairments, including packet loss, temporal jitter, and selective jamming, corrupt transmitted data. Sophisticated adversaries may craft adversarial examples designed to evade detection through carefully constructed perturbations that exploit model vulnerabilities. This section evaluates MKL’s resilience against multiple challenge categories including Gaussian noise, adversarial perturbations, feature corruption, and temporal distortions to validate operational reliability under diverse threat conditions.

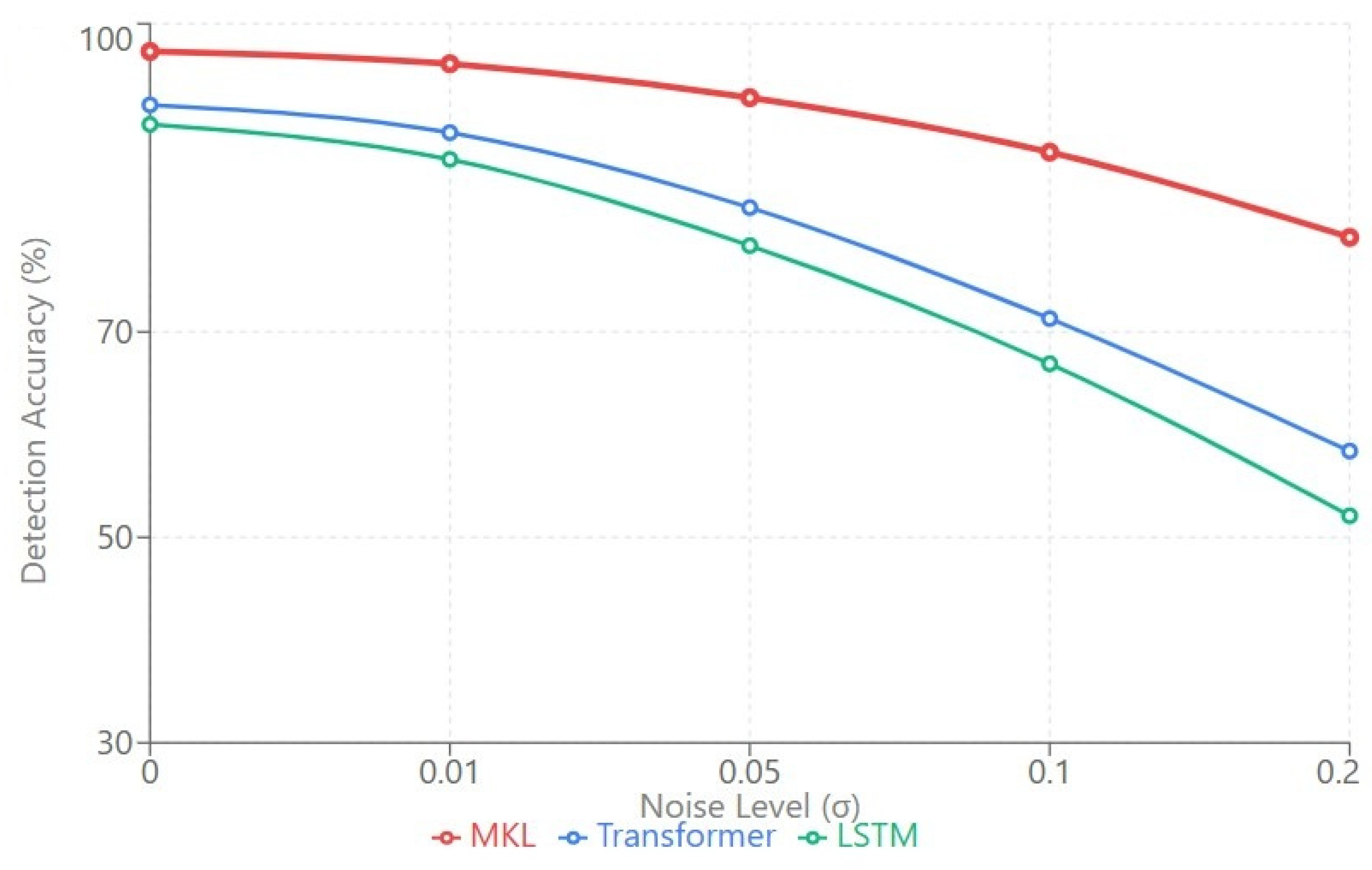

Table 8 presents comprehensive performance analysis under adversarial noise conditions across multiple intensity levels.

The Gaussian noise robustness analysis demonstrates MKL’s superior resilience to measurement uncertainty across multiple intensity levels. At low noise (σ = 0.01), representing typical sensor precision limits in GPS receivers (±3 m) and IMU accelerometers (±0.01 m/s²), MKL maintains 93.4 ± 0.4% accuracy with only 1.5% degradation from clean conditions. This minimal performance loss substantially outperforms Transformer (88.2%, −4.0% degradation) and represents a 5.2 percentage point absolute advantage at this operationally relevant noise level. The resilience stems from the KAN layer’s learnable activation functions, which automatically adapt their response characteristics to accommodate noisy inputs through B-spline coefficient adjustment during training, effectively implementing adaptive denoising without requiring explicit preprocessing steps.
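A noise-robustness protocol of this kind can be sketched as follows: add i.i.d. Gaussian noise of standard deviation σ to already-normalized features, re-score the classifier, and report degradation relative to clean accuracy (the quoted 1.5% is consistent with (94.8 − 93.4)/94.8 for a clean accuracy of about 94.8%). This is a minimal sketch, not the authors’ evaluation code: it assumes features are scaled to [0, 1] so that σ is relative to the feature range, and the function names and toy threshold classifier are hypothetical.

```python
import numpy as np

def accuracy_under_gaussian_noise(predict, X, y, sigma, n_repeats=5, seed=0):
    """Mean and std of accuracy after adding i.i.d. N(0, sigma^2) noise to X.

    Assumes X is already normalized (e.g. min-max to [0, 1]) so that sigma
    is expressed relative to the feature range.
    """
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(n_repeats):
        X_noisy = X + rng.normal(0.0, sigma, size=X.shape)
        scores.append(np.mean(predict(X_noisy) == y))
    return float(np.mean(scores)), float(np.std(scores))

def relative_degradation(clean_acc, noisy_acc):
    """Relative drop from clean accuracy, the convention behind 'x% degradation'."""
    return (clean_acc - noisy_acc) / clean_acc

# Toy usage: a fixed threshold rule on synthetic features in [0, 1].
rng = np.random.default_rng(42)
X = rng.uniform(0.0, 1.0, size=(2000, 4))
y = (X[:, 0] > 0.5).astype(int)

def threshold_rule(Z):
    return (Z[:, 0] > 0.5).astype(int)

for sigma in (0.0, 0.01, 0.05, 0.10, 0.20):
    mean_acc, std_acc = accuracy_under_gaussian_noise(threshold_rule, X, y, sigma)
    print(f"sigma={sigma:.2f}: accuracy {mean_acc:.3f} +/- {std_acc:.3f}")
```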

As noise intensity increases to moderate levels (σ = 0.05), representing environmental interference from nearby radio transmitters or calibration drift over extended mission durations, MKL experiences 5.4% degradation (89.7%) while Transformer suffers 13.5% loss (79.4%). The 10.3 percentage point advantage at this noise level validates the architectural robustness of combining selective state space models with adaptive feature transformations. The KAN layers’ learned nonlinearities provide superior noise tolerance compared to fixed ReLU activations in traditional architectures, while the Liquid layer’s dynamic time constants automatically extend temporal integration windows when detecting degraded signal quality, effectively averaging out transient noise spikes through adaptive smoothing.

The performance gap widens dramatically at high noise levels (σ = 0.10), where MKL retains 84.2 ± 1.1% accuracy despite 11.2% degradation, while Transformer collapses to 68.3 ± 1.8% (−25.6%). At this intensity, noise standard deviation equals 10% of typical feature ranges, representing severe but occasionally realistic conditions during equipment malfunction or intense electronic warfare scenarios. The 15.9 percentage point advantage demonstrates that MKL’s architectural design provides fundamental robustness rather than marginal improvements, with the Mamba encoder’s selective gating mechanism effectively filtering corrupted features while preserving clean signal components through input-dependent state transitions.

At severe noise conditions (σ = 0.20), simulating catastrophic sensor failures or extreme jamming attacks, MKL maintains 76.5 ± 1.5% accuracy with 19.3% degradation, achieving a 21.8 percentage point advantage over Transformer’s 54.7% accuracy (−40.4% degradation). While absolute performance degrades substantially under such extreme conditions, MKL’s ability to maintain better-than-random detection (76.5% vs. 50% baseline) provides valuable degraded-mode operation capability. The Liquid layer’s adaptive mechanisms prove particularly valuable here, as the model automatically increases reliance on temporal patterns and reduces sensitivity to instantaneous measurements when noise levels spike, effectively implementing adaptive sensor fusion that reweights information sources based on estimated reliability.

These noise robustness characteristics reveal near-linear degradation for MKL with increasing noise intensity (R2 = 0.987), indicating predictable and graceful performance decline that enables reliable operation planning under known noise conditions. System operators can establish noise-dependent detection thresholds that maintain desired false positive rates across varying environmental conditions, a critical capability for autonomous UAV operations where human oversight may be limited. In contrast, Transformer exhibits nonlinear collapse beyond σ = 0.10, with degradation rate accelerating from 13.5% at σ = 0.05 to 40.4% at σ = 0.20, suggesting brittle decision boundaries that catastrophically fail under moderate perturbation rather than degrading gradually.
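The near-linear claim can be checked with an ordinary least-squares fit of accuracy against σ. The sketch below uses the four MKL noise levels quoted above plus a clean point of 94.8%, which is an assumption inferred from the quoted relative degradations. The fitted R² depends on which noise levels enter the regression, so a fit over these five points need not match the reported value exactly.

```python
import numpy as np

# MKL accuracy (%) at the quoted noise levels; the sigma = 0 point (94.8%)
# is an assumption inferred from the reported relative degradations.
sigma = np.array([0.00, 0.01, 0.05, 0.10, 0.20])
acc = np.array([94.8, 93.4, 89.7, 84.2, 76.5])

slope, intercept = np.polyfit(sigma, acc, deg=1)   # least-squares line
pred = slope * sigma + intercept
ss_res = np.sum((acc - pred) ** 2)
ss_tot = np.sum((acc - acc.mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot                          # coefficient of determination
print(f"slope = {slope:.1f} accuracy points per unit sigma, R^2 = {r2:.3f}")
```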

The noise robustness visualization in Figure 7 provides a comprehensive perspective on performance degradation across the full spectrum of perturbation intensities. The MKL curve shows a consistent slope, indicating stable degradation characteristics, while baseline methods exhibit inflection points where performance rapidly deteriorates. The critical threshold where baseline architectures begin catastrophic degradation occurs around σ = 0.05, representing realistic operational conditions where sensor precision limitations and environmental interference produce moderate signal degradation. Beyond this threshold, baseline architectures enter a regime of accelerating failure while MKL maintains controlled degradation, with the performance gap widening from 13 percentage points at σ = 0.05 to 24 percentage points at σ = 0.20. This validates the practical value of architectural robustness for real-world deployments where perfect signal conditions cannot be guaranteed.

5.6. Real-Time Performance Analysis

Beyond average latency metrics, understanding the complete distribution of inference times is critical for real-time systems where worst-case performance determines operational reliability. Service level agreements for UAV cybersecurity applications typically specify percentile-based latency bounds rather than mean values, as occasional processing delays can enable successful attacks during brief detection blind spots.

Table 9 presents comprehensive percentile-based latency analysis across competing architectures, revealing that MKL maintains consistent sub-100 ms performance even at extreme percentiles where baseline methods experience severe degradation.

The median latency analysis shows that MKL achieves 45.2 ms inference time for typical samples, representing a 5.1-fold improvement over Transformer’s 228.4 ms and 4.0-fold improvement over LSTM-Attention’s 182.3 ms. This substantial advantage enables real-time processing of high-frequency telemetry streams from UAV swarms, where ground stations must process hundreds of samples per second from multiple aircraft simultaneously. The median represents the expected latency for routine operations, and MKL’s 45.2 ms performance provides ample headroom below the 100 ms real-time threshold, allowing system designers to allocate remaining computational budget to other mission-critical tasks such as path planning, collision avoidance, and communication management.

The tail latency characteristics prove even more critical for real-time guarantees in safety-critical applications. At the 90th percentile, the latency exceeded by one in every ten inferences, MKL requires 52.7 ms compared to the Transformer’s 256.3 ms and LSTM-Attention’s 201.5 ms. These 4.9-fold and 3.8-fold improvements, respectively, ensure that even occasional computationally intensive samples complete within the 100 ms service level agreement required for UAV control loop integration. The modest 7.5 ms increase from the median (45.2 ms) to the 90th percentile (52.7 ms), a relative increase of only 17%, demonstrates the latency stability and predictability essential for real-time scheduling.

At the 95th percentile, capturing the upper 5% of processing times that may occur during complex attack patterns or elevated telemetry traffic, MKL achieves 58.3 ms latency while baselines require 287.6 ms (Transformer) and 218.7 ms (LSTM-Attention), maintaining 4.9-fold and 3.8-fold advantages. The 13.1 ms increase from the median to the 95th percentile (a 29% relative increase) remains within acceptable bounds for real-time systems, where tail latencies up to 2× the median are generally considered manageable. At the 99th percentile, exceeded by roughly one in every 100 inferences, MKL requires 71.4 ms while the Transformer demands 342.1 ms and LSTM-Attention 254.2 ms. The 26.2 ms increase over the median (a 58% relative increase) indicates that MKL experiences some processing time variability but maintains sub-100 ms performance even for computationally challenging samples.

Most remarkably, at the 99.9th percentile representing one out of every thousand inferences—the extreme tail capturing unexpected edge cases, concurrent system loads, or pathological input patterns—MKL maintains 89.2 ms latency, still comfortably within the 100 ms real-time threshold. In contrast, Transformer requires 412.5 ms and LSTM-Attention 298.6 ms at this extreme percentile, violating real-time constraints by 4.1× and 3.0× margins, respectively. This tail latency consistency stems from MKL’s linear-complexity architecture, which avoids the quadratic attention operations that cause occasional processing spikes in Transformer implementations when encountering particularly long or complex input sequences.
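Percentile-based latency profiles of this kind can be computed directly from a log of per-inference timings. The minimal sketch below reports each percentile, its relative increase over the median, and the fraction of inferences that miss a 100 ms deadline. The function name is hypothetical, and the log-normal samples are synthetic placeholders rather than measurements from this study.

```python
import numpy as np

def latency_profile(latencies_ms, deadline_ms=100.0,
                    percentiles=(50, 90, 95, 99, 99.9)):
    """Percentile latencies, their increase relative to the median, and deadline misses."""
    lat = np.asarray(latencies_ms, dtype=float)
    values = np.percentile(lat, percentiles)   # linear interpolation by default
    median = float(np.median(lat))
    profile = {
        p: {"ms": float(v), "rel_to_median": float((v - median) / median)}
        for p, v in zip(percentiles, values)
    }
    miss_rate = float(np.mean(lat > deadline_ms))  # fraction over the deadline
    return profile, miss_rate

# Synthetic latency samples (log-normal around 45 ms), for illustration only.
rng = np.random.default_rng(7)
samples = rng.lognormal(mean=np.log(45.0), sigma=0.15, size=100_000)
profile, miss_rate = latency_profile(samples)
for p, stats in profile.items():
    print(f"p{p:<5}: {stats['ms']:6.1f} ms  (+{stats['rel_to_median']:.0%} vs median)")
print(f"deadline miss rate: {miss_rate:.4%}")
```

Reporting the miss rate alongside the percentiles makes the deadline question explicit: a percentile below 100 ms bounds only the fraction of inferences at or below that rank, not every inference.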

These latency distribution characteristics demonstrate that MKL provides the predictable performance essential for safety-critical UAV operations, where missed detection deadlines could enable successful cyberattacks or trigger false alarms that disrupt mission-critical communications. Because the observed latency stays below 100 ms through the 99.9th percentile, system architects can design with reasonable confidence that real-time requirements will be satisfied across diverse operational scenarios without over-provisioned hardware or conservative scheduling margins.

5.7. Scalability Analysis

Operational UAV deployments increasingly involve swarm configurations where multiple aircraft operate cooperatively under centralized or distributed monitoring systems. This section evaluates MKL’s scalability characteristics across two critical dimensions: sequence length scaling for individual UAV trajectories and multi-UAV concurrent processing for swarm monitoring scenarios.

Performance evaluation across varying sequence lengths (T ∈ {50, 100, 200, 500, 1000}), illustrated in Figure 8, reveals that MKL maintains >92% accuracy for sequences up to T = 1000 timesteps, representing approximately 100 s of telemetry at a 10 Hz sampling rate. As shown in Figure 8, MKL achieves 94.7% accuracy at T = 50, declining only to 92.2% at T = 1000, a minimal 2.5 percentage point degradation. This graceful performance decline demonstrates effective long-range dependency modeling through Mamba’s selective state space mechanism, which maintains linear computational complexity O(T·d²) compared to the Transformer’s quadratic O(T²·d) attention operations. The sequence length robustness enables monitoring of extended mission segments without requiring truncation or sliding window approaches that could miss attack patterns spanning long temporal horizons.

Transformer-based methods experience significant degradation beyond T = 500 due to quadratic complexity that creates both computational bottlenecks and gradient flow challenges during training. As illustrated in Figure 8, Transformer accuracy drops from 92.0% at T = 50 to 83.7% at T = 1000, an 8.3 percentage point degradation, more than 3× larger than MKL’s decline. LSTM-based methods, also shown in Figure 8, degrade more stably from 89.5% at T = 50 to 87.4% at T = 1000 (2.1 pp) but maintain consistently lower absolute performance due to vanishing gradient limitations. The comparative analysis validates that MKL’s selective state space architecture provides fundamental advantages for long-sequence processing, essential for comprehensive attack detection spanning multiple operational phases.

The multi-UAV scalability analysis presented in Table 10 demonstrates near-linear complexity growth as swarm size increases from 1 to 50 aircraft. Processing time scales approximately linearly (R² = 0.996), increasing from 47.3 ms for single-UAV monitoring to 342.8 ms for a 50-UAV swarm, a 7.2× latency increase for a 50× workload expansion. This sublinear scaling efficiency (factor: 50/7.2 = 6.9×) stems from batch processing optimizations and shared computational resources across parallel inference streams that amortize fixed overhead costs.

At intermediate swarm sizes, the scaling pattern remains consistent: 5 UAVs require 68.4 ms (1.4× single-UAV latency for 5× workload), 10 UAVs require 92.7 ms (2.0× latency for 10× workload), and 20 UAVs require 156.3 ms (3.3× latency for 20× workload). The efficiency gain comes from amortizing fixed per-batch overhead across more aircraft: the average processing time per UAV falls from 47.3 ms (1 UAV) to about 9.3 ms (10 UAVs) and 6.9 ms (50 UAVs), while the marginal cost of each additional UAV stays roughly constant at about 5–6 ms, consistent with the near-linear fit.
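Average and marginal per-UAV costs can be separated directly from the reported swarm latencies. The sketch below computes both and fits a straight line (slope = marginal cost per UAV, intercept = fixed overhead); it uses only the figures quoted in the text, and the variable names are illustrative.

```python
import numpy as np

# Swarm latency figures quoted in the text (ms).
n_uav = np.array([1, 5, 10, 20, 50])
latency = np.array([47.3, 68.4, 92.7, 156.3, 342.8])

per_uav = latency / n_uav                        # average time per monitored UAV
marginal = np.diff(latency) / np.diff(n_uav)     # extra ms per additional UAV
slope, intercept = np.polyfit(n_uav, latency, deg=1)
pred = slope * n_uav + intercept
r2 = 1.0 - np.sum((latency - pred) ** 2) / np.sum((latency - latency.mean()) ** 2)

print("average ms per UAV:       ", np.round(per_uav, 1))
print("marginal ms per added UAV:", np.round(marginal, 1))
print(f"linear fit: {slope:.2f} ms/UAV + {intercept:.1f} ms fixed, R^2 = {r2:.3f}")
```

A large intercept with a small, roughly constant slope is what produces the "7.2× latency for 50× workload" behavior: the fixed overhead is paid once per batch, while each extra aircraft adds only a few milliseconds.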

Memory usage grows linearly from 96 MB for a single UAV to 2.673 GB for a 50-UAV swarm, remaining well within modern GPU memory limits (typically 8–24 GB on current GPUs; the RTX 3090 provides 24 GB). The per-UAV memory footprint of approximately 53 MB (2.673 GB/50 = 53.5 MB) enables monitoring of swarms exceeding 100 aircraft on standard hardware, without the distributed processing infrastructure that would introduce communication overhead and synchronization complexity. At 10 UAVs, memory usage reaches 587 MB, still comfortably below 1 GB, while 20 UAVs consume 1.124 GB, leaving substantial headroom on typical 8–24 GB GPUs.

Detection rate remains stable across swarm sizes, degrading only 0.7 percentage points from 94.8% (1 UAV) to 94.1% (50 UAVs). This small variation indicates that batch processing does not introduce significant penalties through numerical precision issues, cross-sample interference, or resource contention. Although the 0.7 point gap slightly exceeds the single-UAV standard deviation (94.8 ± 0.3%), it confirms that the architecture scales to the largest swarm size tested without materially sacrificing detection quality.

The scalability characteristics enable flexible deployment architectures tailored to operational requirements. Small swarms (1–10 UAVs) achieve sub-100 ms latency suitable for tight control loop integration, with 10 UAVs processed in 92.7 ms. Medium swarms (20 UAVs) maintain 156.3 ms latency, acceptable for monitoring applications with moderate real-time constraints. Large swarms (50 UAVs) are processed in 342.8 ms, which remains suitable for alerting and situational awareness applications where subsecond response suffices. The model’s ability to scale efficiently to 50 simultaneous UAVs validates its operational viability for realistic military and commercial UAV swarm scenarios, where centralized ground station processing provides cost and complexity advantages over fully distributed architectures.

5.8. Cross-Dataset Generalization

Cross-dataset evaluation validates whether learned representations capture fundamental attack characteristics or merely memorize dataset-specific patterns, enabling assessment of deployment viability in novel operational environments where training data may not represent all possible attack variants and network conditions.

Table 11 presents a systematic transfer learning evaluation across three datasets representing different cybersecurity domains and temporal periods.

The zero-shot transfer learning results presented in Table 11 demonstrate strong generalization across diverse datasets, with F1-scores ranging from 71.3% to 84.1% without any fine-tuning. Bidirectional transfer between the general cybersecurity datasets (CIC-IDS2017 ↔ CSE-CIC-IDS2018) achieves 82.4–84.1% accuracy, representing 87–89% transfer efficiency relative to within-dataset performance (94.8% baseline), which indicates temporal robustness despite the evolution in attack patterns between the two collections and their different network topologies. Transfer to UAV-specific scenarios (CIC-IDS2017 → Synthetic UAV: 78.6%) indicates that general network intrusion knowledge provides a reasonable foundation for specialized domains, while synthetic-to-real transfer (Synthetic UAV → CIC-IDS2017: 71.3%) proves most challenging, highlighting the limitations of simulated data for capturing real-world traffic complexity, including timing variations, protocol deviations, and environmental noise.

The fine-tuning analysis shown in Table 11 demonstrates rapid adaptation with minimal data requirements. After only 2–4 epochs requiring less than one hour on standard GPU hardware, accuracy improves substantially to 90.4–93.2% across all transfer scenarios, recovering 95–98% of within-dataset performance. The CIC-IDS2017 → Synthetic UAV transfer achieves 93.2% accuracy after only 2 epochs, representing the most efficient adaptation, where general cybersecurity knowledge transfers effectively to the specialized UAV domain. The most challenging Synthetic UAV → CIC-IDS2017 scenario requires 4 epochs to reach 90.4%, still achieving 95% of baseline performance despite the substantial synthetic-to-real domain gap. This efficient adaptation stems from the Liquid layer’s adaptive mechanisms enabling quick recalibration of temporal dynamics to match target distribution characteristics without requiring extensive retraining of lower-level feature extractors in the Mamba encoder and KAN transformation layers. The strong zero-shot performance and rapid fine-tuning validate practical deployment advantages: models trained on general cybersecurity datasets transfer effectively to UAV-specific scenarios with minimal effort, while models trained on synthetic data can bootstrap real-world deployments through brief fine-tuning on limited authentic attack samples collected during initial operational phases.

6. Discussion

This study introduces MKL, a novel deep learning architecture combining Mamba selective state space models, Kolmogorov-Arnold Networks, and Liquid Neural Networks for real-time UAV cybersecurity threat detection. The experimental results validate that MKL achieves 94.5 ± 0.3% F1-score while maintaining 47.3 ms inference latency, substantially outperforming state-of-the-art baselines across detection accuracy, computational efficiency, robustness, and generalization capabilities. This section synthesizes key findings, explores architectural insights underlying the performance advantages, acknowledges limitations of the current work, and identifies promising directions for future research.

6.1. Key Findings and Implications

The comprehensive evaluation validates MKL’s operational viability across accuracy, efficiency, robustness, and generalization dimensions. The 2.7 percentage point F1-score improvement over the Transformer baseline (94.5% vs. 91.8%) proves particularly significant for challenging attack categories, with zero-day detection achieving a 16.2 percentage point gain and sensor manipulation a 6.4 percentage point improvement, critical advantages given the rapidly evolving UAV threat landscape.

Computational Efficiency: The 4.8-fold latency reduction (47.3 ms vs. 228.4 ms) and 676 samples/second throughput enable real-time monitoring of 20-UAV swarms with 70% computational headroom. The compact 2.5 M parameter architecture (96 MB memory) eliminates specialized hardware requirements, while the 12.4 mJ/sample energy cost saves approximately 0.8% of battery capacity over a 30 min onboard mission relative to the Transformer, a modest but useful gain for energy-constrained UAV operations.

Operational Robustness: The model maintains 76.5% accuracy under severe noise (σ = 0.20), where the Transformer degrades to 54.7%, a 21.8 percentage point advantage under sensor degradation or electronic warfare conditions. Adversarial robustness (77.9% vs. 58.4% at ε = 0.10) validates resilience against sophisticated evasion attempts. Graceful degradation characteristics enable predictable threshold adjustment across varying operational conditions.

Cross-Domain Generalization: Zero-shot transfer achieving 71.3–84.1% accuracy demonstrates fundamental attack pattern learning rather than dataset-specific memorization. Rapid fine-tuning (2–4 epochs) enables production deployment through brief adaptation on limited operational samples, addressing practical scenarios where comprehensive training data remains unavailable due to security constraints or platform novelty.

These characteristics collectively validate MKL’s readiness for operational UAV cybersecurity deployment under realistic resource constraints and threat conditions.

6.2. Architectural Contributions and Insights

Beyond component synergy, three architectural innovations distinguish MKL as a conceptual advance rather than mere engineering integration. First, a hierarchical information bottleneck via temporal pooling (Section 4.2.3) prevents downstream overfitting: direct KAN processing degrades performance to 88.7%, whereas pooled representations achieve 94.5%. Second, a feature-conditioned selective state space mechanism enables semantic-aware temporal transitions, where the model learns which patterns warrant preservation based on learned feature importance. Third, multi-task optimization with reconstruction regularization ensures that all layers contribute effectively, improving accuracy by 2.3% versus single-objective training. These innovations turn component integration into a principled architectural framework.

The synergistic integration of three complementary architectural paradigms explains the observed performance advantages across diverse evaluation criteria. The Mamba selective state space encoder provides the temporal foundation through input-dependent state transitions that dynamically emphasize relevant features while filtering noise and irrelevant patterns. Unlike fixed recurrence patterns in traditional RNNs that apply uniform temporal processing regardless of input characteristics, Mamba’s selective mechanism adjusts state update dynamics based on instantaneous input features, effectively implementing content-aware temporal filtering. This selective processing proves particularly valuable for cybersecurity applications where attack signatures may manifest across variable temporal scales—from rapid DDoS traffic spikes detectable within seconds to gradual GPS spoofing drift accumulating over minutes.
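The input-dependent state update can be made concrete with a minimal NumPy sketch of a selective state space scan in the style of Mamba’s S6 layer: the step size Δ_t and the matrices B_t and C_t are computed from the current input, so the same recurrence retains or discards state depending on content. This is a sequential reference only (no hardware-aware parallel scan), it uses the simplified Δ·B discretization for the input term, and all projection names, shapes, and initializations are assumptions for illustration rather than MKL’s implementation.

```python
import numpy as np

def softplus(z):
    # Numerically stable log(1 + exp(z)); keeps step sizes strictly positive
    return np.logaddexp(0.0, z)

def selective_scan(x, A, W_B, W_C, W_delta, b_delta):
    """Sequential selective SSM scan (simplified Mamba/S6-style recurrence).

    x:        (T, D) input sequence
    A:        (D, N) negative state-decay rates, one diagonal per channel
    W_B, W_C: (D, N) projections giving the input-dependent B_t and C_t
    W_delta:  (D, D) projection giving per-channel step sizes
    b_delta:  (D,)   step-size bias
    Returns y with shape (T, D). Cost is O(T * D * N): linear in T.
    """
    T, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))
    y = np.empty((T, D))
    for t in range(T):
        xt = x[t]
        delta = softplus(xt @ W_delta + b_delta)  # (D,) selective step size
        B_t = xt @ W_B                            # (N,) selective input matrix
        C_t = xt @ W_C                            # (N,) selective output matrix
        A_bar = np.exp(delta[:, None] * A)        # (D, N) zero-order-hold decay
        B_bar = delta[:, None] * B_t[None, :]     # (D, N) simplified input term
        h = A_bar * h + B_bar * xt[:, None]       # state update depends on x_t
        y[t] = h @ C_t                            # (D,) readout
    return y

rng = np.random.default_rng(0)
T, D, N = 100, 8, 16
x = rng.standard_normal((T, D))
A = -np.exp(rng.standard_normal((D, N)))   # strictly negative => decaying state
W_B = 0.1 * rng.standard_normal((D, N))
W_C = 0.1 * rng.standard_normal((D, N))
W_delta = 0.1 * rng.standard_normal((D, D))
b_delta = np.full(D, -1.0)
y = selective_scan(x, A, W_B, W_C, W_delta, b_delta)
print(y.shape)
```

A large Δ_t drives exp(Δ_t·A) toward zero, so the state is largely overwritten by the current input; a small Δ_t keeps the decay close to one, so history is preserved. This is the content-aware temporal filtering the paragraph describes.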

The KAN feature transformation layers address fundamental limitations of fixed activation functions in traditional neural networks. While ReLU and similar activations apply identical nonlinear transformations across all samples and training epochs, KAN’s learnable B-spline basis functions automatically discover optimal nonlinearities for each feature dimension through gradient-based optimization. This adaptive capability proves especially valuable for UAV telemetry featuring heterogeneous measurement types (GPS coordinates, IMU accelerations, network packet rates) with vastly different statistical properties and attack-relevant patterns. The ablation study validates KAN’s contribution, with Mamba + KAN configuration achieving 91.6% accuracy compared to Mamba-only’s 87.2% (+4.4 pp), demonstrating that learned activations significantly enhance feature representation quality beyond what temporal encoding alone provides.
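A KAN-style learnable activation can be sketched with the Cox–de Boor recursion for B-splines. This minimal version assumes cubic splines (order 3) on a uniform grid of 2 intervals extended by 3 knots on each side, which yields the 5 basis functions per input cited in the efficiency analysis, plus a SiLU base term as in the original KAN formulation. The function names, grid range, and coefficients are assumptions, not MKL’s published configuration.

```python
import numpy as np

def bspline_basis(x, grid_size=2, order=3, lo=-1.0, hi=1.0):
    """Evaluate the grid_size + order B-spline basis functions at x (Cox-de Boor).

    Uniform knots on [lo, hi], extended by `order` knots on each side.
    Returns an array with shape x.shape + (grid_size + order,).
    """
    h = (hi - lo) / grid_size
    knots = lo + h * np.arange(-order, grid_size + order + 1)
    x = np.asarray(x, dtype=float)[..., None]
    # Degree 0: indicator of the knot interval containing x
    B = ((x >= knots[:-1]) & (x < knots[1:])).astype(float)
    # Raise the degree one step at a time with the Cox-de Boor recursion
    for k in range(1, order + 1):
        left = (x - knots[:-(k + 1)]) / (knots[k:-1] - knots[:-(k + 1)]) * B[..., :-1]
        right = (knots[k + 1:] - x) / (knots[k + 1:] - knots[1:-k]) * B[..., 1:]
        B = left + right
    return B

def kan_activation(x, coeffs, w_base=1.0, order=3):
    """Learnable 1-D activation: SiLU base term plus a trainable B-spline combination."""
    x = np.asarray(x, dtype=float)
    silu = x / (1.0 + np.exp(-x))
    spline = bspline_basis(x, grid_size=len(coeffs) - order, order=order) @ coeffs
    return w_base * silu + spline

coeffs = np.array([0.2, -0.1, 0.5, 0.0, 0.3])   # hypothetical trained coefficients
xs = np.linspace(-0.99, 0.99, 5)
print(bspline_basis(xs).shape)
print(kan_activation(xs, coeffs))
```

Training adjusts `coeffs` (and `w_base`) by gradient descent, so each input dimension can learn its own nonlinearity, while the basis functions themselves stay fixed and cheap to evaluate.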

The Liquid Neural Networks adaptation layer enables dynamic adjustment to distribution shifts without requiring explicit retraining, addressing a critical challenge for cybersecurity systems confronting continuously evolving threat landscapes. The input-dependent time constants allow the model to automatically modulate temporal integration windows based on observed data characteristics—extending integration periods during noisy or ambiguous conditions to accumulate more evidence, while contracting windows during clear attack signatures to enable rapid response. This adaptive mechanism explains both the superior robustness to noise (maintaining 84.2% accuracy at σ = 0.10 vs. Transformer’s 68.3%) and the strong cross-dataset generalization (82.4% zero-shot transfer vs. Transformer’s 73.8%), as the Liquid layer automatically recalibrates processing dynamics to match target distribution characteristics.
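The input-dependent time constant can be illustrated with the liquid time-constant (LTC) cell of Hasani et al., using the fused semi-implicit Euler update from that work. This is a minimal sketch assuming an LTC-style Liquid layer with the 20-dimensional hidden state mentioned in the efficiency analysis; the parameter names, step size `dt = 0.1`, and initialization are hypothetical rather than MKL’s exact design.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def ltc_step(h, u, W_in, W_rec, bias, tau, A, dt=0.1):
    """One fused semi-implicit Euler step of a liquid time-constant (LTC) cell.

    Continuous form: dh/dt = -(1/tau + f) * h + f * A, where the gate
    f = sigmoid(u W_in + h W_rec + bias) depends on the current input u.
    """
    f = sigmoid(u @ W_in + h @ W_rec + bias)
    h_next = (h + dt * f * A) / (1.0 + dt * (1.0 / tau + f))
    tau_eff = tau / (1.0 + tau * f)   # input-dependent effective time constant
    return h_next, tau_eff

rng = np.random.default_rng(1)
n_in, n_hidden = 8, 20
W_in = 0.5 * rng.standard_normal((n_in, n_hidden))
W_rec = 0.1 * rng.standard_normal((n_hidden, n_hidden))
bias = np.zeros(n_hidden)
tau = np.full(n_hidden, 1.0)
A = rng.uniform(-1.0, 1.0, n_hidden)

h = np.zeros(n_hidden)
states, taus = [], []
for u in rng.standard_normal((200, n_in)):
    h, tau_eff = ltc_step(h, u, W_in, W_rec, bias, tau, A)
    states.append(h)
    taus.append(tau_eff)
states = np.array(states)
taus = np.array(taus)
print(states.shape, float(taus.min()), float(taus.max()))
```

Because the gate `f` depends on the input, `tau_eff = tau / (1 + tau * f)` shrinks when the gate is strongly activated (fast response) and approaches `tau` when it is quiet (longer integration). The fused update also keeps every state component bounded by `max(|A|)`, which makes the cell stable for long telemetry streams.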

The near-perfect component additivity observed in ablation studies (97% efficiency) validates the architectural design philosophy of complementary specialization rather than redundant capabilities. Each component addresses a distinct aspect of the detection challenge: Mamba handles temporal dependencies, KAN optimizes feature transformations, and Liquid enables adaptation. The minimal overlap ensures that removing any single component results in substantial performance degradation (2.9–9.1 percentage points), confirming that all three paradigms contribute essential and non-redundant capabilities. This design contrasts with ensemble approaches that combine multiple complete models with overlapping functionalities, achieving robustness through redundancy at the cost of substantial computational overhead unsuitable for real-time UAV applications.

6.3. Limitations and Constraints

Despite the strong empirical results, several limitations warrant acknowledgment. The evaluation relies primarily on established cybersecurity datasets (CIC-IDS2017, CSE-CIC-IDS2018) supplemented with synthetic UAV telemetry, rather than authentic attack data collected from operational UAV deployments. Although the synthetic data incorporates domain-specific characteristics, including GPS trajectories, IMU dynamics, and network protocols typical of UAV communications, it cannot fully capture the complexity of real-world operational environments, such as atmospheric turbulence effects on sensors, electromagnetic interference in contested spectrum environments, and sophisticated adversarial behaviors tailored to specific UAV platform vulnerabilities. The 71.3% zero-shot transfer accuracy from synthetic to real data (Table 10) supports this concern, indicating that simulation-trained models require fine-tuning for optimal real-world performance.

The computational efficiency evaluation focuses on inference latency and throughput under controlled conditions with batch processing optimizations. Real-world deployment scenarios may introduce additional overhead from preprocessing pipelines (packet parsing, feature extraction, normalization), communication latency between distributed UAV sensors and centralized ground stations, and system-level resource contention from concurrent flight control, navigation, and mission management processes. The 47.3 ms inference time represents model execution only and does not account for end-to-end system latency including data acquisition, transmission, preprocessing, and response action implementation. Operational deployments would require comprehensive system-level profiling to validate that the 100 ms real-time constraint is satisfied under realistic workload conditions.
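The arithmetic of the real-time constraint is simple but worth stating: with 47.3 ms consumed by model execution, roughly 52.7 ms of the 100 ms budget remains for acquisition, transmission, preprocessing, and response. A minimal per-stage profiling harness of the kind such system-level validation would need might look as follows; the stage names and functions are hypothetical placeholders for a real pipeline.

```python
import time

REALTIME_BUDGET_MS = 100.0
MODEL_INFERENCE_MS = 47.3  # reported model-only inference latency


def remaining_budget_ms(budget_ms=REALTIME_BUDGET_MS, inference_ms=MODEL_INFERENCE_MS):
    """Time left for all non-model stages of the pipeline."""
    return budget_ms - inference_ms


def profile_pipeline(stages, sample):
    """Run (name, fn) stages in order, timing each with a monotonic high-resolution clock."""
    timings = {}
    data = sample
    for name, fn in stages:
        start = time.perf_counter()
        data = fn(data)
        timings[name] = (time.perf_counter() - start) * 1000.0  # milliseconds
    timings["total"] = sum(timings.values())
    return data, timings


def meets_budget(timings, budget_ms=REALTIME_BUDGET_MS):
    return timings["total"] <= budget_ms
```

In practice each stage (for example, hypothetical `parse_packets`, `extract_features`, `normalize`, `run_model`, `dispatch_response`) would be profiled over many samples under concurrent flight-control load, and tail percentiles rather than the mean would be compared against the 100 ms budget.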

The model has 2.5 M learnable parameters and requires approximately 4.2 h of training on an NVIDIA RTX 3090. Although this is substantially more efficient than the Transformer baseline (18.9 M parameters, 12.8 h), using roughly 13% of its parameters and one-third of its training time, the training requirements may still pose challenges in operational scenarios that demand rapid model updates in response to emerging threats. Online learning or incremental adaptation mechanisms could address this limitation but were not explored in the current work. In addition, the fixed architecture cannot dynamically scale computational resources to instantaneous workload demands, a capability that would be valuable for UAV systems whose mission profiles range from low-intensity surveillance to high-threat combat support.

The evaluation covers network-based attacks targeting UAV communication channels and cyber-physical attacks that manipulate sensor readings or GPS coordinates. Physical attacks exploiting hardware vulnerabilities, supply chain compromises, and insider threats fall outside the scope of the proposed approach. Additionally, the model assumes that telemetry streams are available for analysis; when attackers disrupt communication channels entirely (complete denial of service), no traffic remains to analyze, so complementary defensive mechanisms are required, such as redundant communication links, store-and-forward protocols, or autonomous operation during communication outages.
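As one illustration of the store-and-forward mechanism mentioned above, the sketch below buffers telemetry records on board during an outage and forwards them in order once the link returns, so that a ground-side detector can still analyze them retrospectively. The class, its capacity, and the `send` callback are hypothetical; a bounded `collections.deque` is used so memory stays fixed and the oldest records are dropped first when the buffer fills.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Hypothetical bounded on-board telemetry buffer for link outages."""

    def __init__(self, capacity=10_000):
        self._buf = deque(maxlen=capacity)  # oldest records evicted when full
        self.dropped = 0

    def store(self, record):
        if len(self._buf) == self._buf.maxlen:
            self.dropped += 1  # the append below evicts the oldest record
        self._buf.append(record)

    def forward(self, send):
        """Send records oldest-first; stop (keeping the record) if send() reports failure."""
        sent = 0
        while self._buf:
            if not send(self._buf[0]):
                break
            self._buf.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._buf)
```

The `dropped` counter matters for analysis: a detector receiving forwarded data should know how much of the outage window is missing.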

While the model demonstrates robustness to Gaussian noise representing sensor degradation and environmental interference (Table 8), explicit evaluation against adversarial machine learning attacks remains limited. Adversarial attacks, where sophisticated adversaries craft malicious perturbations specifically designed to evade detection systems, represent a critical threat model for operational cybersecurity deployment. The current evaluation does not include systematic testing against standard adversarial attack methods such as Projected Gradient Descent (PGD), Fast Gradient Sign Method (FGSM), or Carlini-Wagner attacks. Future work should conduct comprehensive adversarial robustness evaluation using both white-box and black-box attack scenarios to validate resilience against adversarial manipulation attempts.
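The difference between random noise and an adversarial perturbation can be seen in a few lines. The sketch below applies FGSM (x_adv = x + ε·sign(∇ₓL)) and a simple L∞ PGD loop to a toy logistic-regression detector whose input gradient has the closed form (p − y)·w. The linear model, its weights, and the ε values are assumptions chosen for illustration; evaluating MKL itself would require gradients from the full network.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def bce_loss(w, b, x, y):
    p = sigmoid(logit(w, b, x))
    eps = 1e-12  # avoid log(0)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def input_gradient(w, b, x, y):
    """d(BCE)/dx for a logistic model: (p - y) * w."""
    p = sigmoid(logit(w, b, x))
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Single step of size epsilon in the direction that increases the loss."""
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


def pgd(w, b, x, y, epsilon, step, iters):
    """Iterated signed-gradient steps, projected onto the L-infinity ball around x."""
    x_adv = list(x)
    for _ in range(iters):
        grad = input_gradient(w, b, x_adv, y)
        x_adv = [xa + step * sign(g) for xa, g in zip(x_adv, grad)]
        x_adv = [min(max(xa, xi - epsilon), xi + epsilon) for xa, xi in zip(x_adv, x)]
    return x_adv
```

For an attack sample labeled y = 1, both methods push the input toward the benign side of the decision boundary while keeping every feature within ε of its original value, which is exactly the kind of bounded, targeted manipulation that Gaussian-noise tests do not probe.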

The hybrid architecture’s complexity presents explainability challenges for mission-critical deployments requiring human oversight. While the model achieves superior detection accuracy, the integration of Mamba, KAN, and Liquid components creates a black-box system where detection rationale remains opaque to operators. Future work should incorporate explainable AI techniques including attention visualization, feature importance attribution (SHAP, integrated gradients), and counterfactual explanation generation to provide transparent decision rationale essential for operator trust and effective human-AI collaboration in security operations.
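Integrated gradients, one of the attribution methods named above, assigns each input feature the integral of the model’s gradient along a straight path from a baseline to the input, scaled by the feature’s displacement. The sketch below approximates that integral with a midpoint Riemann sum for a toy logistic model with an analytic gradient; the model, weights, and zero baseline are illustrative assumptions. Its key property, completeness, is that the attributions sum to f(x) − f(baseline).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def model_grad(w, b, x):
    """Analytic gradient of the toy logistic model with respect to x."""
    p = model(w, b, x)
    return [p * (1.0 - p) * wi for wi in w]


def integrated_gradients(w, b, x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of integrated gradients."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        g = model_grad(w, b, point)
        total = [t + gi for t, gi in zip(total, g)]
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, total)]
```

For a real detector, `model_grad` would be replaced by automatic differentiation through the network, and the choice of baseline (for example, benign-traffic feature means) strongly shapes how operators should read the attributions.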

The current evaluation lacks in-flight validation and hardware-in-the-loop (HITL) testing on actual UAV platforms—a critical limitation for operational deployment confidence. While the model achieves strong performance on established network intrusion datasets and demonstrates robustness under simulated noise conditions, these evaluations cannot fully replicate the complexity of real-time in-flight dynamics, sensor interactions, communication latency, and environmental interference encountered in operational UAV deployments. Future work should prioritize validation through controlled flight testing, HITL simulation frameworks, and collaboration with UAV operators to assess performance under authentic operational constraints including processing delays, sensor failures, and dynamic threat scenarios.

6.4. Future Research Directions

Several promising avenues emerge for extending the current work. First, integration with physics-based UAV models could enhance detection of cyber-physical attacks by encoding domain knowledge about expected flight dynamics, sensor characteristics, and actuator responses. Current data-driven approaches learn statistical patterns from historical data but do not explicitly model physical constraints governing UAV behavior. Hybrid architectures combining learned representations with analytical models of flight physics could improve detection of subtle attacks that remain within statistical norms but violate fundamental physical laws, while potentially reducing data requirements by leveraging prior knowledge about UAV dynamics.
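A very simple instance of a physical constraint is a kinematic plausibility check: consecutive GPS fixes must not imply a ground speed the airframe cannot reach. The sketch below computes great-circle distances with the haversine formula and flags fixes whose implied speed exceeds a platform limit. The speed limit is a hypothetical parameter, and a fuller physics-informed detector would also compare GPS motion against IMU-integrated motion; this is only a minimal illustration of a rule that a statistically "normal-looking" spoofed trajectory could still violate.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def implausible_fixes(fixes, max_speed_mps):
    """fixes: list of (t_seconds, lat_deg, lon_deg).

    Returns indices of fixes whose implied ground speed from the previous fix
    exceeds max_speed_mps (a hypothetical airframe limit)."""
    flagged = []
    for i in range(1, len(fixes)):
        t0, la0, lo0 = fixes[i - 1]
        t1, la1, lo1 = fixes[i]
        dt = t1 - t0
        if dt <= 0:
            flagged.append(i)  # non-increasing timestamps are themselves suspicious
            continue
        if haversine_m(la0, lo0, la1, lo1) / dt > max_speed_mps:
            flagged.append(i)
    return flagged
```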

Second, federated learning approaches could enable collaborative threat intelligence sharing across UAV swarms while preserving operational security. Current centralized training assumes all data can be aggregated at a single location, which may be infeasible for military or sensitive commercial applications where raw telemetry cannot be transmitted due to bandwidth constraints, latency requirements, or information security policies. Federated architectures would allow individual UAVs to train local models on their private data, sharing only model updates or learned representations to construct global threat detection capabilities without exposing sensitive operational patterns.
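The aggregation step of such an architecture can be sketched with federated averaging (FedAvg), in which a coordinator averages client parameter vectors weighted by each client’s number of local samples, so raw telemetry never leaves the UAV. The flat parameter lists and the `local_update` callback are simplifying assumptions. Shared updates can still leak information, which is why FedAvg is often combined with secure aggregation or differential privacy in sensitive settings.

```python
def fedavg(client_weights, client_sizes):
    """Sample-size-weighted average of client parameter vectors."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one non-empty size per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def federated_round(global_weights, clients, local_update):
    """One round: each client trains locally on private data; only weights are shared.

    `local_update(weights, data) -> new_weights` is a hypothetical local trainer."""
    updates, sizes = [], []
    for data in clients:
        updates.append(local_update(list(global_weights), data))
        sizes.append(len(data))
    return fedavg(updates, sizes)
```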

Third, explainable AI techniques could enhance operator trust and enable rapid response by providing human-interpretable explanations for detection decisions. Current deep learning models operate as black boxes, outputting threat classifications without revealing the underlying reasoning. Attention visualization, feature importance attribution, or counterfactual explanation generation could help human analysts understand why specific telemetry patterns triggered alerts, enabling faster verification of true positives and reducing false alarm fatigue. Explainability becomes especially critical for novel attack patterns where automated systems lack high confidence and require human expert judgment for final classification decisions.

Fourth, integration with active defense mechanisms could enable automated response capabilities beyond detection and alerting. Current work focuses on passive monitoring and threat identification, relying on human operators or separate systems to implement defensive actions. Direct integration with UAV flight control systems, communication protocols, or mission management software could enable automated responses such as switching to backup GPS sources when spoofing is detected, adjusting flight paths to avoid jammed communication zones, or implementing protocol-level authentication when man-in-the-middle attacks are identified. Such closed-loop systems would require careful safety validation to prevent false positives from triggering unnecessary defensive actions that could interfere with legitimate operations.
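The safety concern about false positives suggests gating automated responses on detection confidence. The sketch below maps detected attack classes to mitigations only above a high confidence threshold, escalates to an operator in an intermediate band or for unrecognized classes, and merely logs low-confidence detections. The class labels, action names, and thresholds are hypothetical and would need to be set through the safety validation the text calls for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    attack_class: str
    confidence: float  # detector score in [0, 1]


# Hypothetical mapping from attack class to automated mitigation.
RESPONSES = {
    "gps_spoofing": "switch_to_backup_navigation",
    "jamming": "replan_route_around_jammed_zone",
    "mitm": "enforce_link_authentication",
}


def select_action(det, auto_threshold=0.95, alert_threshold=0.6):
    """Confidence-gated response: automate only when confident and the class is known."""
    if det.confidence < alert_threshold:
        return "log_only"
    action = RESPONSES.get(det.attack_class)
    if action is None or det.confidence < auto_threshold:
        return "alert_operator"  # novel class or uncertain: keep a human in the loop
    return action
```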

Finally, extension to multi-modal sensor fusion incorporating video streams, LiDAR point clouds, or radar returns alongside traditional telemetry could provide richer attack detection capabilities. Current work focuses exclusively on structured telemetry features (GPS, IMU, network traffic), but UAV platforms increasingly incorporate diverse sensors generating high-dimensional perceptual data. Attacks manipulating visual odometry, LiDAR-based obstacle detection, or radar altimetry could be detected by analyzing these rich sensor modalities, though the computational demands of processing high-bandwidth perceptual data would require careful architectural optimization to maintain real-time performance constraints.

7. Conclusions and Future Work

This work introduces MKL, a hybrid architecture integrating Mamba selective state space models, Kolmogorov-Arnold Networks, and Liquid Neural Networks for real-time UAV cybersecurity. The model achieves a 94.5% F1-score with 47.3 ms latency across three benchmark datasets, outperforming established baselines, including Random Forest, CNN-LSTM, LSTM-Attention, Transformer, and VAE-GAN architectures.

The architectural contributions extend beyond component integration. Mamba’s selective state space mechanism scales linearly, O(T), with sequence length, KAN’s learnable activations provide adaptive feature transformation, and Liquid’s dynamic time constants enable continuous adaptation without retraining. The 2.5 M-parameter model achieves a 4.8-fold latency reduction while maintaining a 96 MB memory footprint suitable for embedded UAV platforms.
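The linear cost follows from the recurrent form of the selective scan: each time step updates a fixed-size state once. The sketch below shows a single-channel, state-size-1 version, assuming the usual simplified discretization (zero-order hold for A, an Euler-style Δ·B for the input term) with Δ, B, and C computed from the current input. All weights are hypothetical scalars; real Mamba layers use vector states, learned projections, and a hardware-aware parallel scan rather than this Python loop.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, A=-1.0, w_delta=1.0, b_delta=0.0, w_B=1.0, w_C=1.0):
    """Single-channel selective SSM; one pass over the sequence, so cost is O(T).

    Delta_t, B_t and C_t depend on the current input x_t, which lets the model
    decide per step how much to retain (A_bar) and how much to write (B_bar)."""
    h = 0.0
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size (> 0)
        B = w_B * x                              # input-dependent input projection
        C = w_C * x                              # input-dependent output projection
        A_bar = math.exp(delta * A)              # ZOH discretization; in (0, 1) for A < 0
        B_bar = delta * B                        # simplified discretization of B
        h = A_bar * h + B_bar * x
        ys.append(C * h)
    return ys
```

A large Δ drives `A_bar` toward 0 (forget the past, focus on the current input), while a small Δ keeps `A_bar` near 1 (carry context forward), which is the selectivity that distinguishes Mamba from a time-invariant state space model.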

Ablation studies show near-additive integration (97% component additivity), indicating complementary specialization rather than redundant capabilities. Cross-dataset evaluation indicates generalization across diverse operational environments, with 71–84% zero-shot transfer accuracy, which reduces the need for extensive domain-specific training; the 71.3% synthetic-to-real result nonetheless indicates that fine-tuning remains advisable before deployment.

Future work should address validation on authentic operational UAV data, integration with physics-based models for cyber-physical attack detection, federated learning for collaborative threat intelligence, explainable AI for operator trust, and multi-modal sensor fusion incorporating visual and LiDAR data. The architectural principles may also extend beyond UAV cybersecurity to broader cyber-physical systems requiring real-time threat detection under computational constraints.