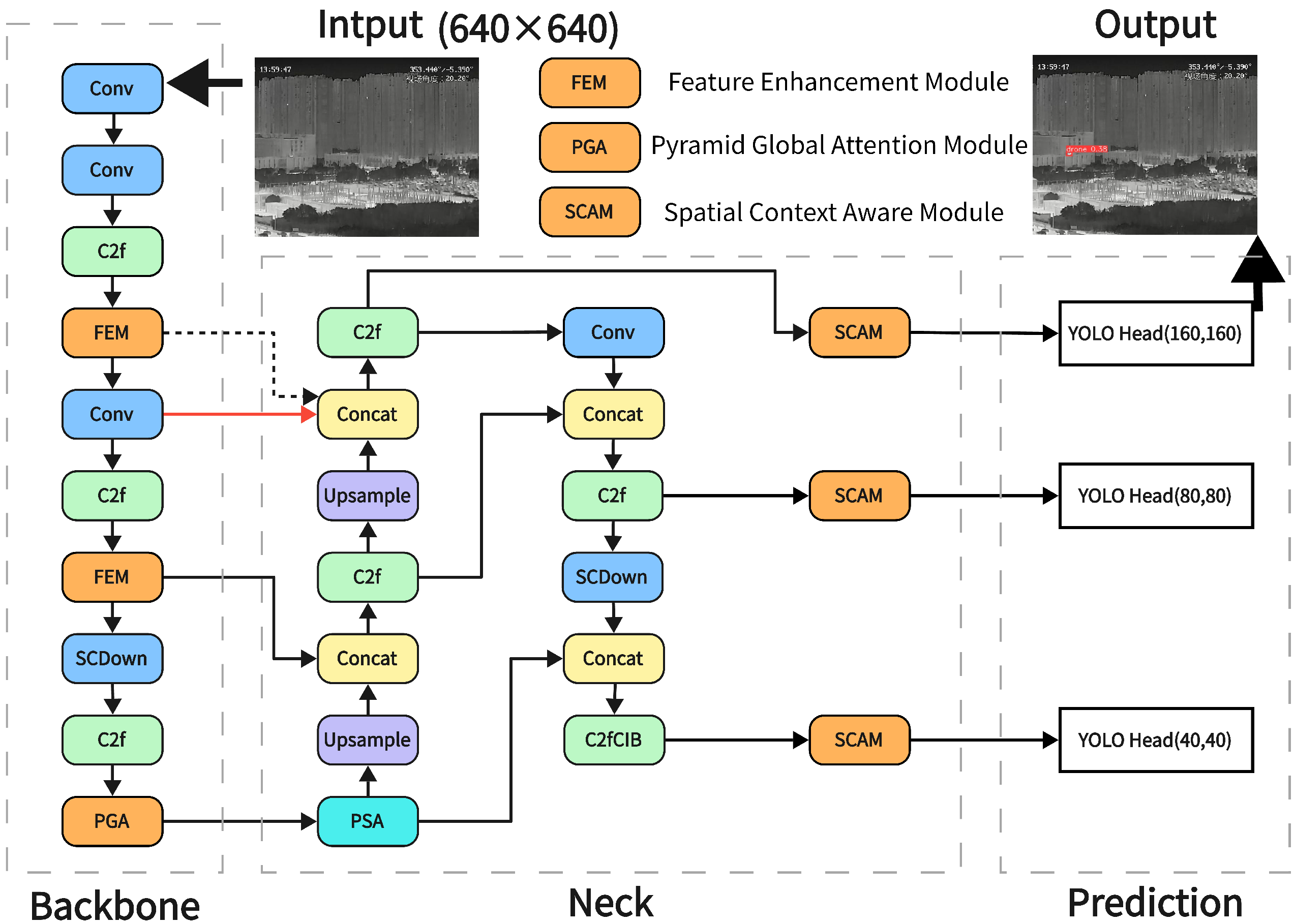

Global and Local Context-Aware Detection for Infrared Small UAV Targets

Highlights

- The feature extraction backbone is redesigned for infrared unmanned aerial vehicle (UAV) targets.

- The proposed algorithm achieves superior performance on two datasets with different target scales.

- The method is suited to detecting targets of different scales, including small UAVs.

- A single-stage framework is proposed for infrared small target detection.

Abstract

1. Introduction

2. Related Work

2.1. Segmentation-Based Infrared Small Target Detection Algorithms

2.2. Bounding Box-Based Infrared Small Target Detection Algorithms

3. Proposed Methods

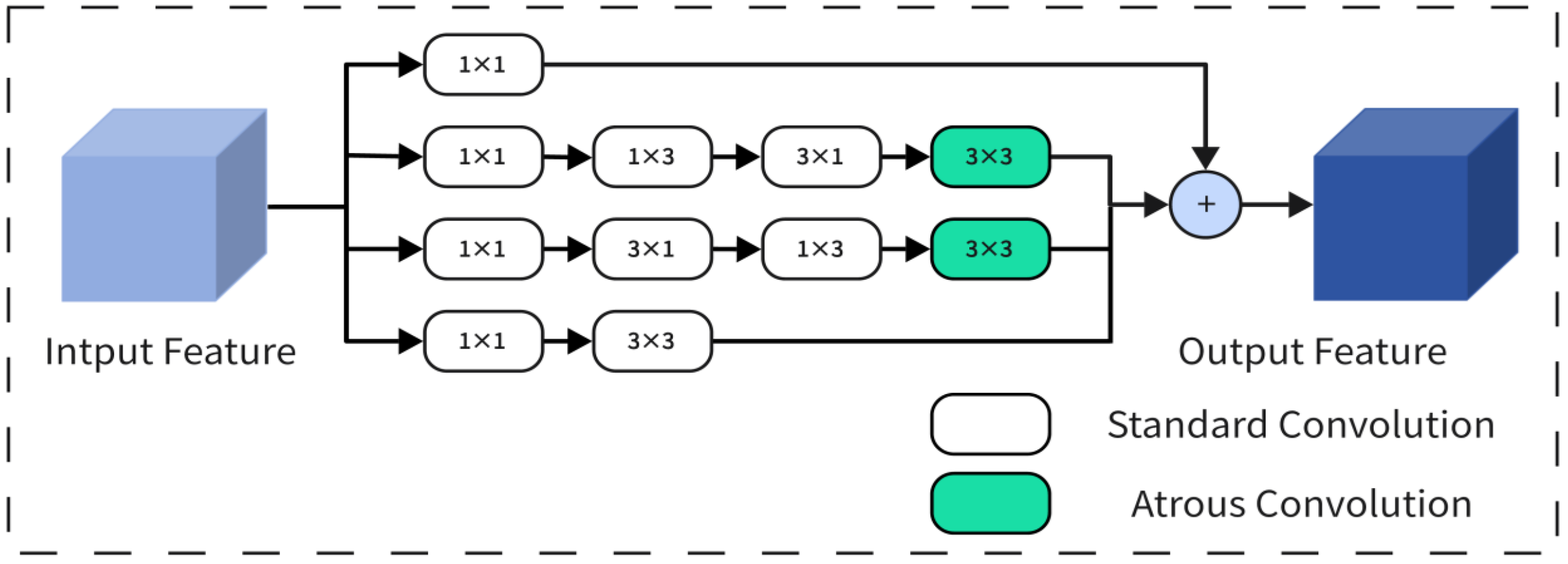

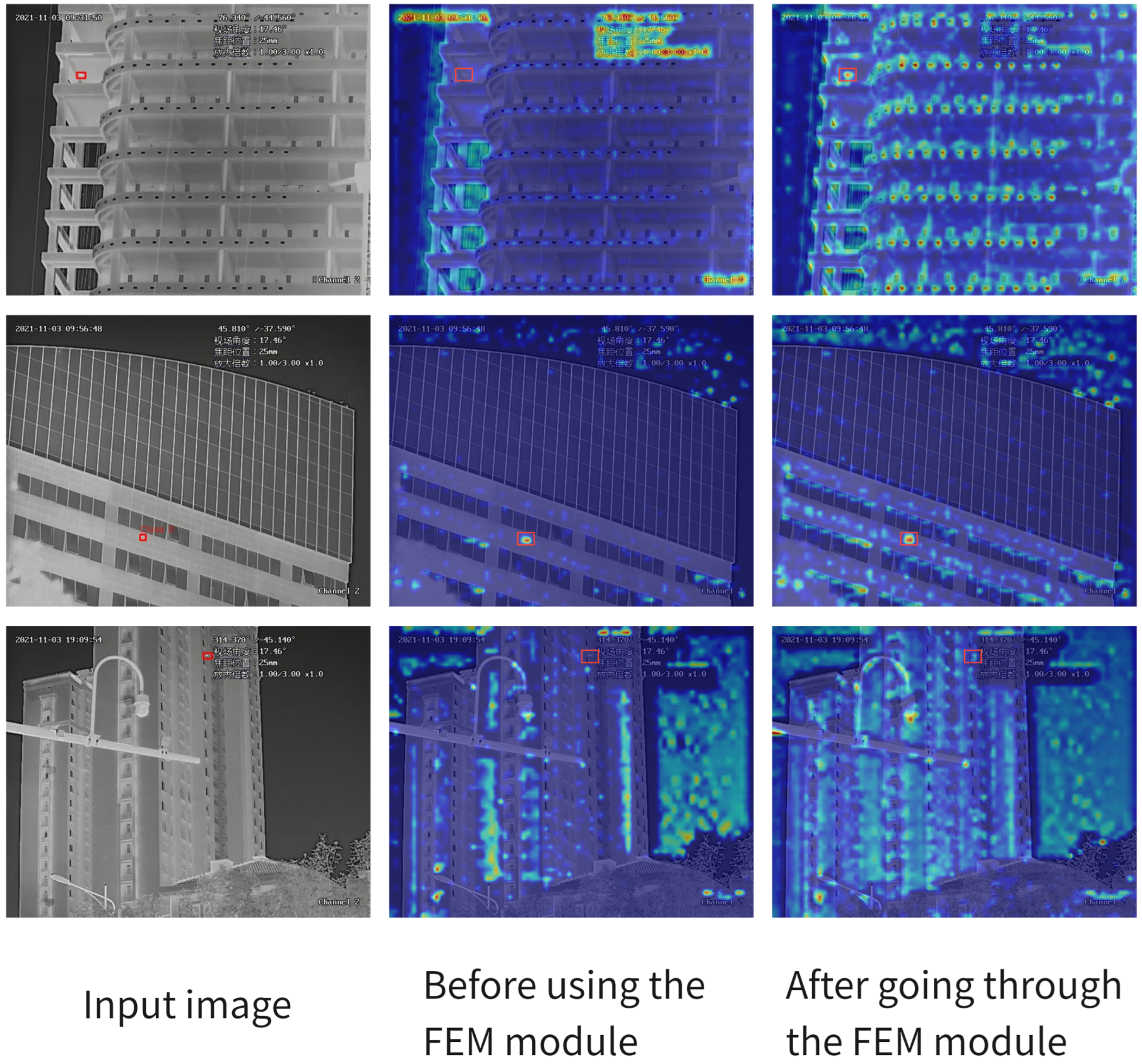

3.1. Feature Enhancement Module

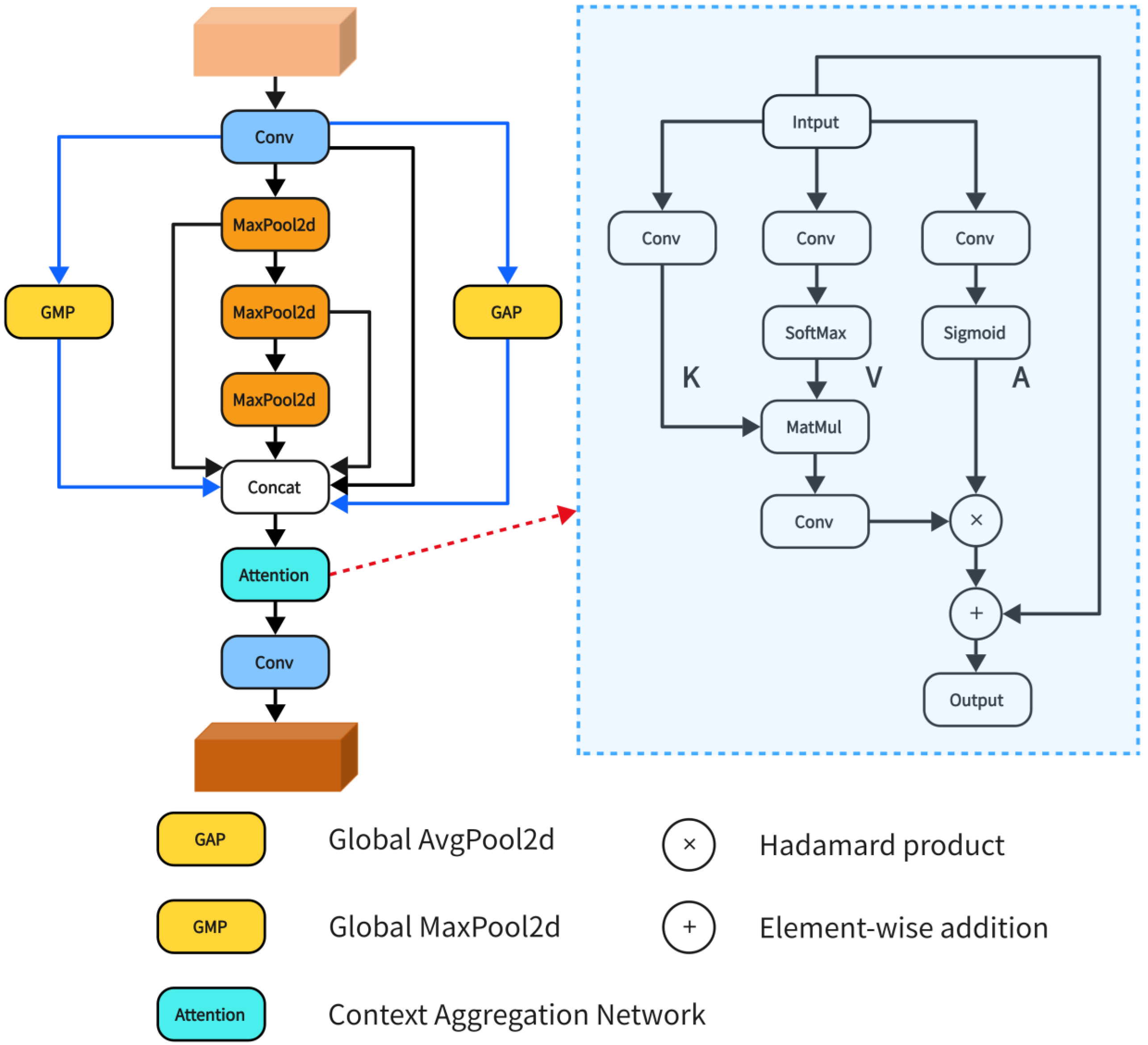

3.2. Pyramid Global Attention Module

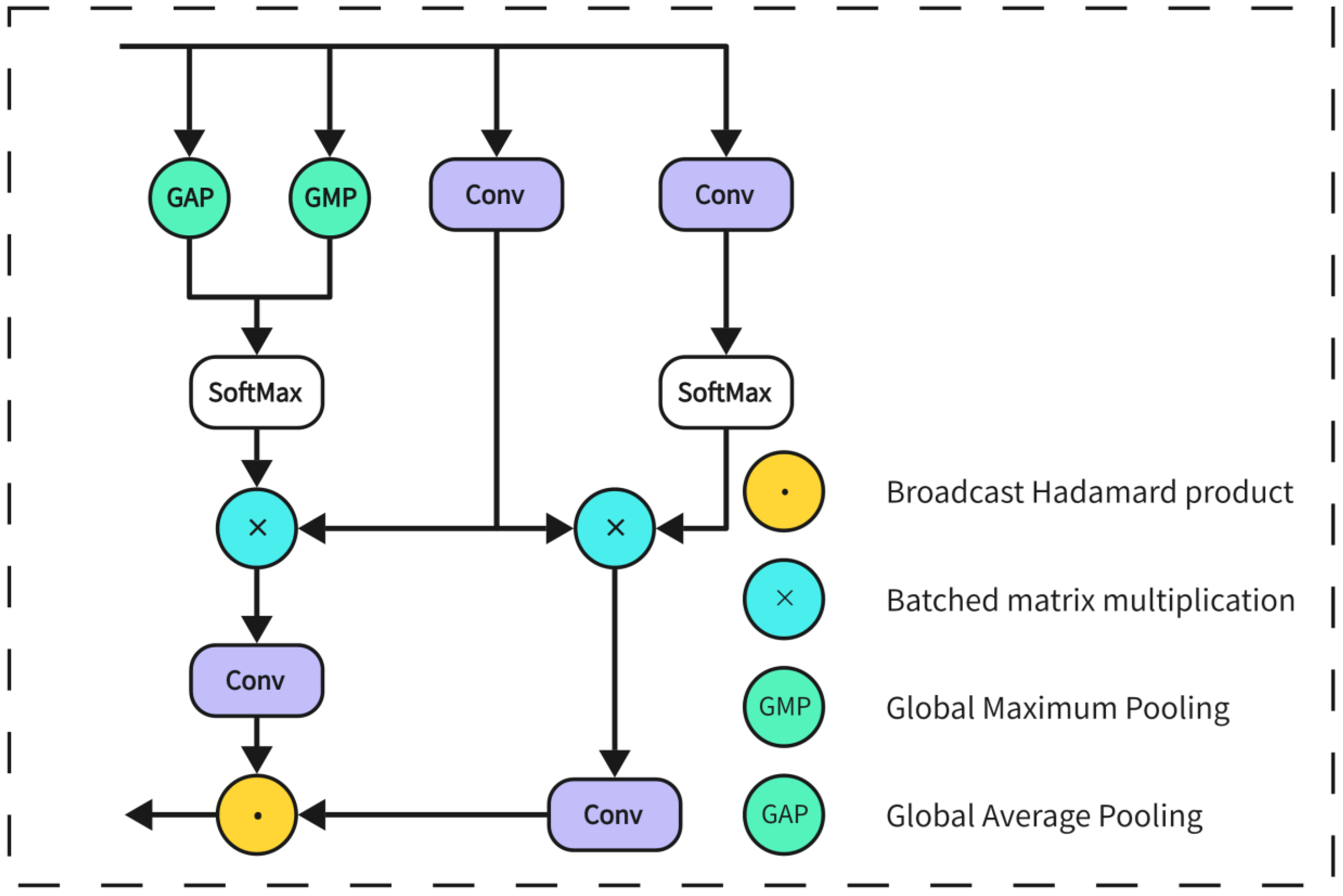

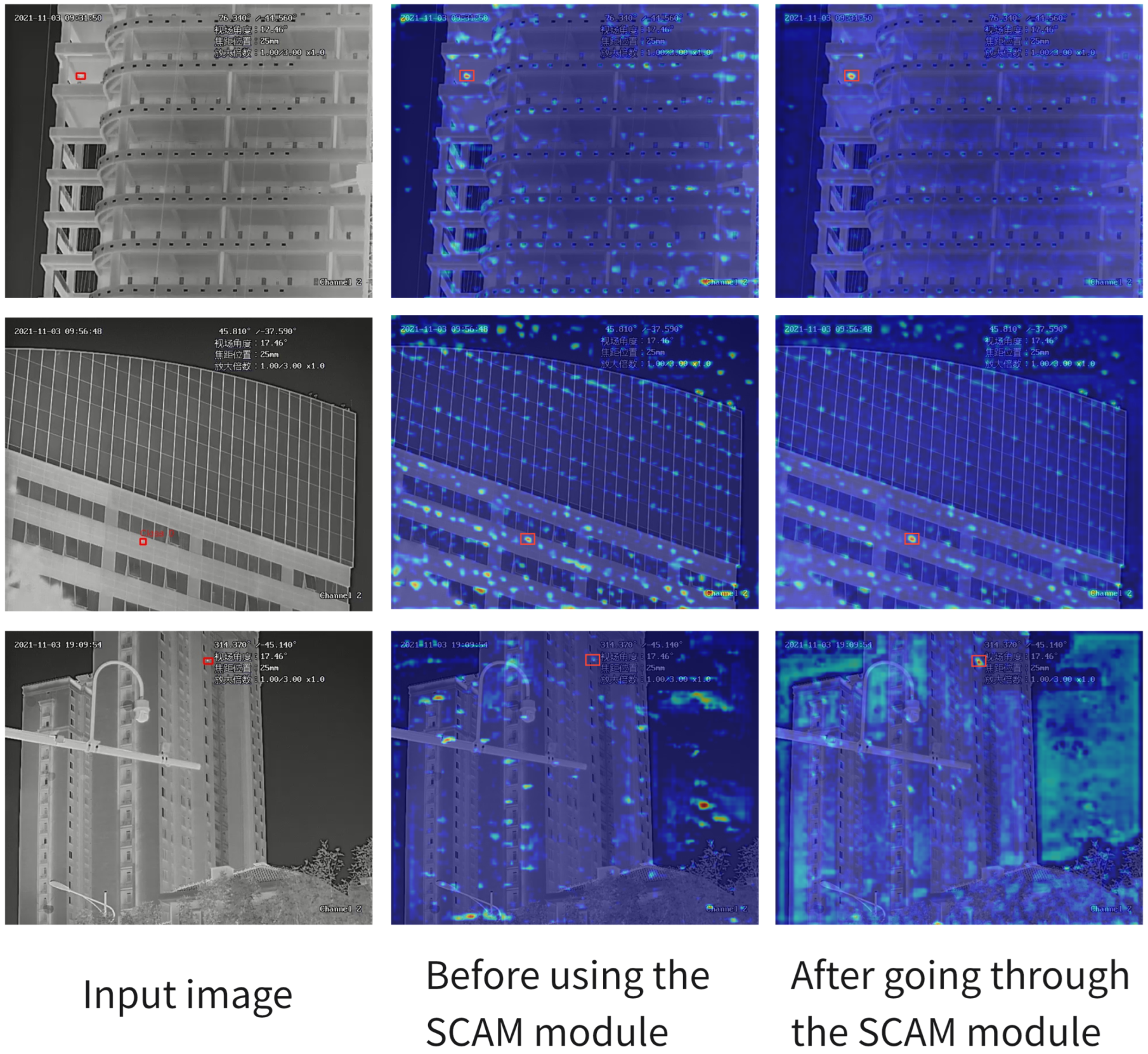

3.3. Spatial Context-Aware Module

4. Results of the Experiments

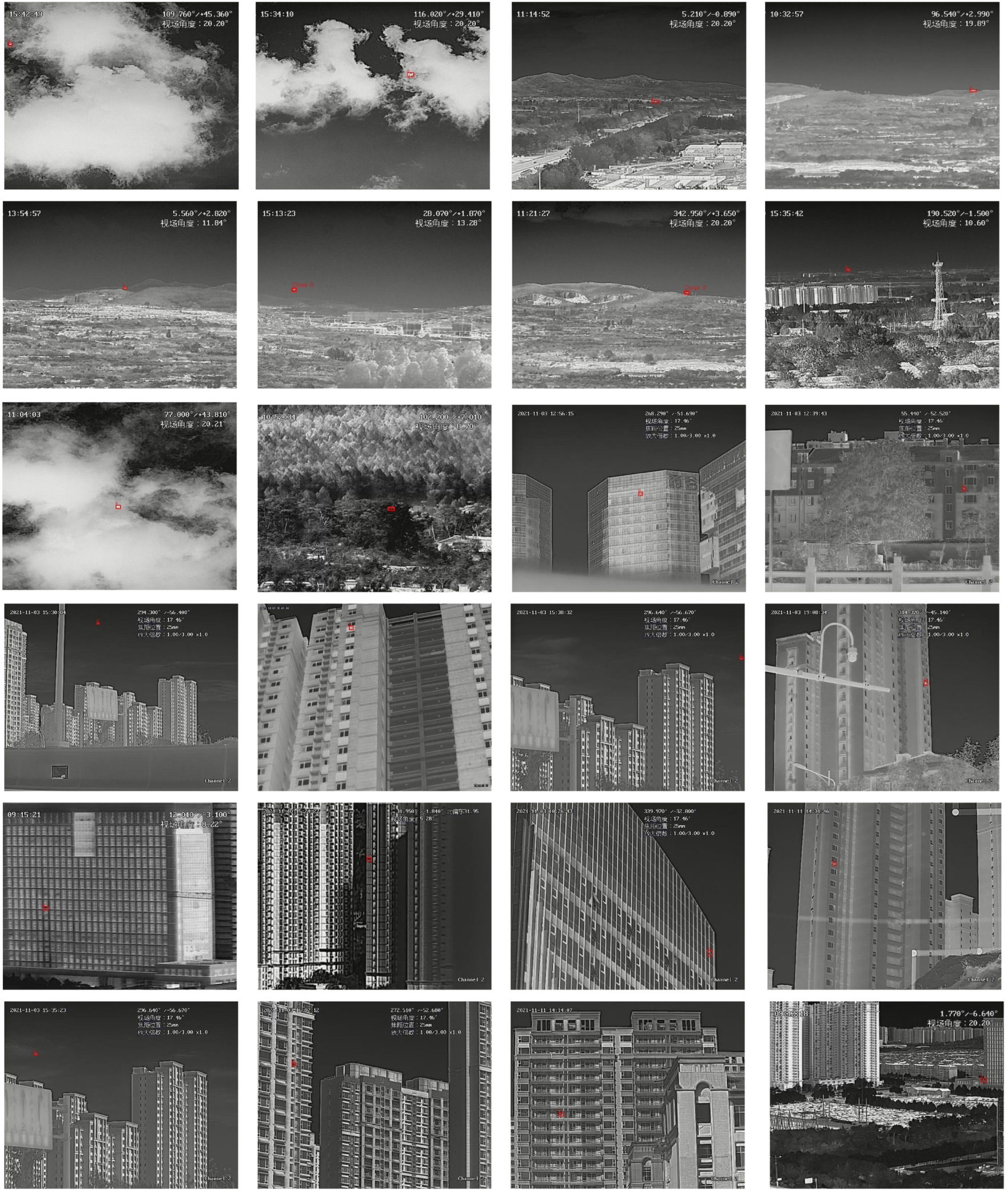

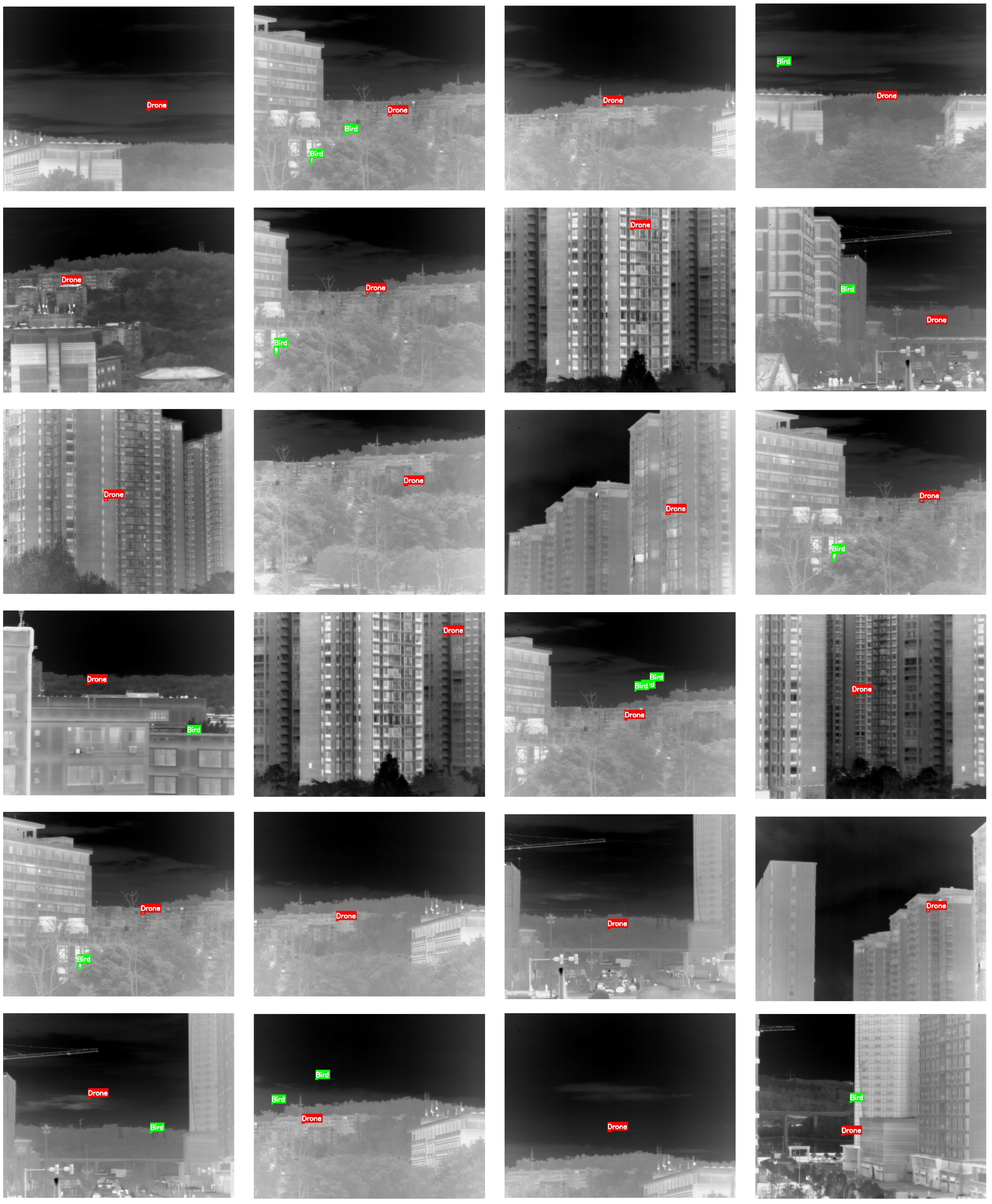

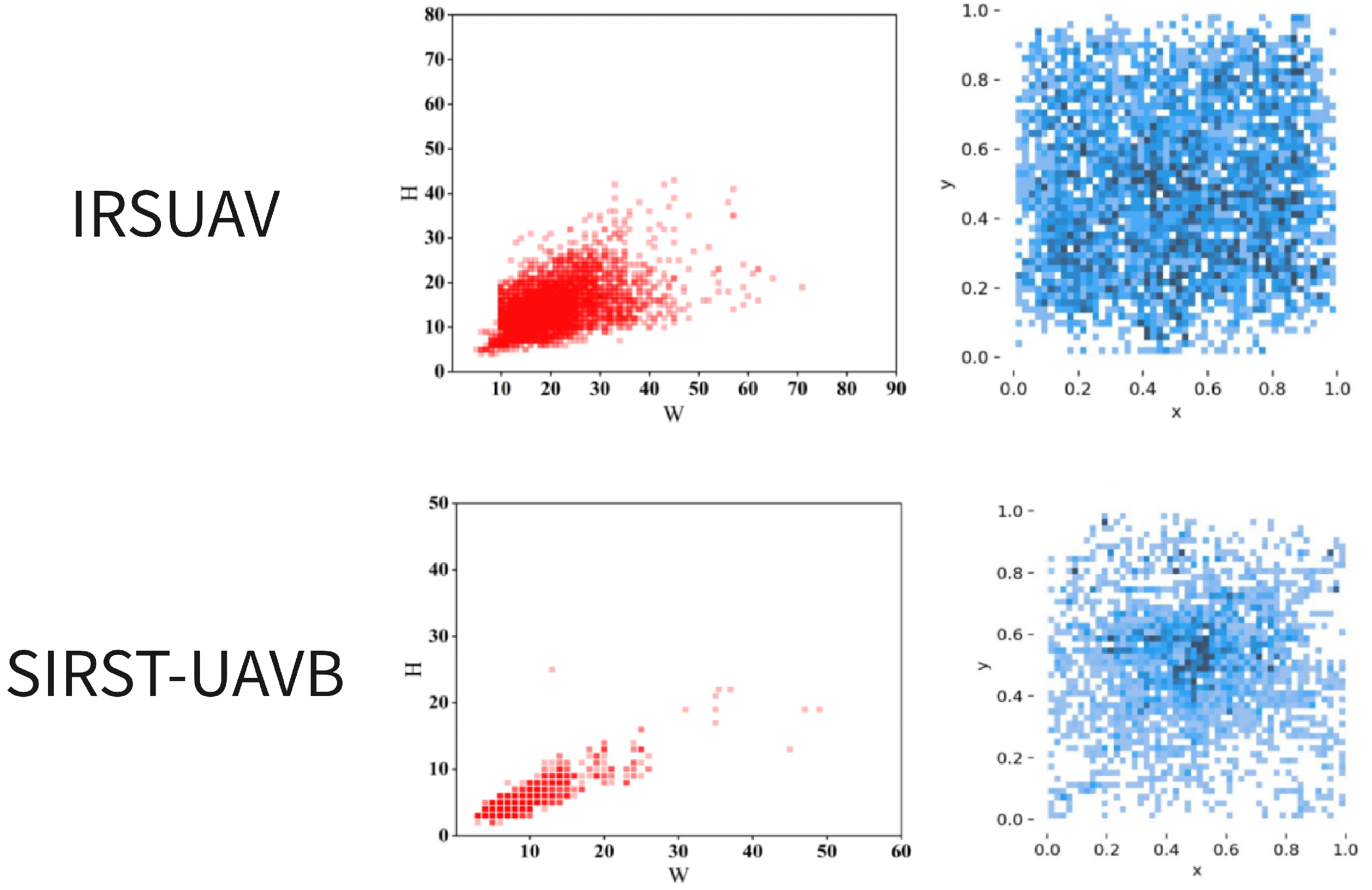

4.1. Data Preparation

4.2. Evaluation Metrics

4.3. Parameter Settings

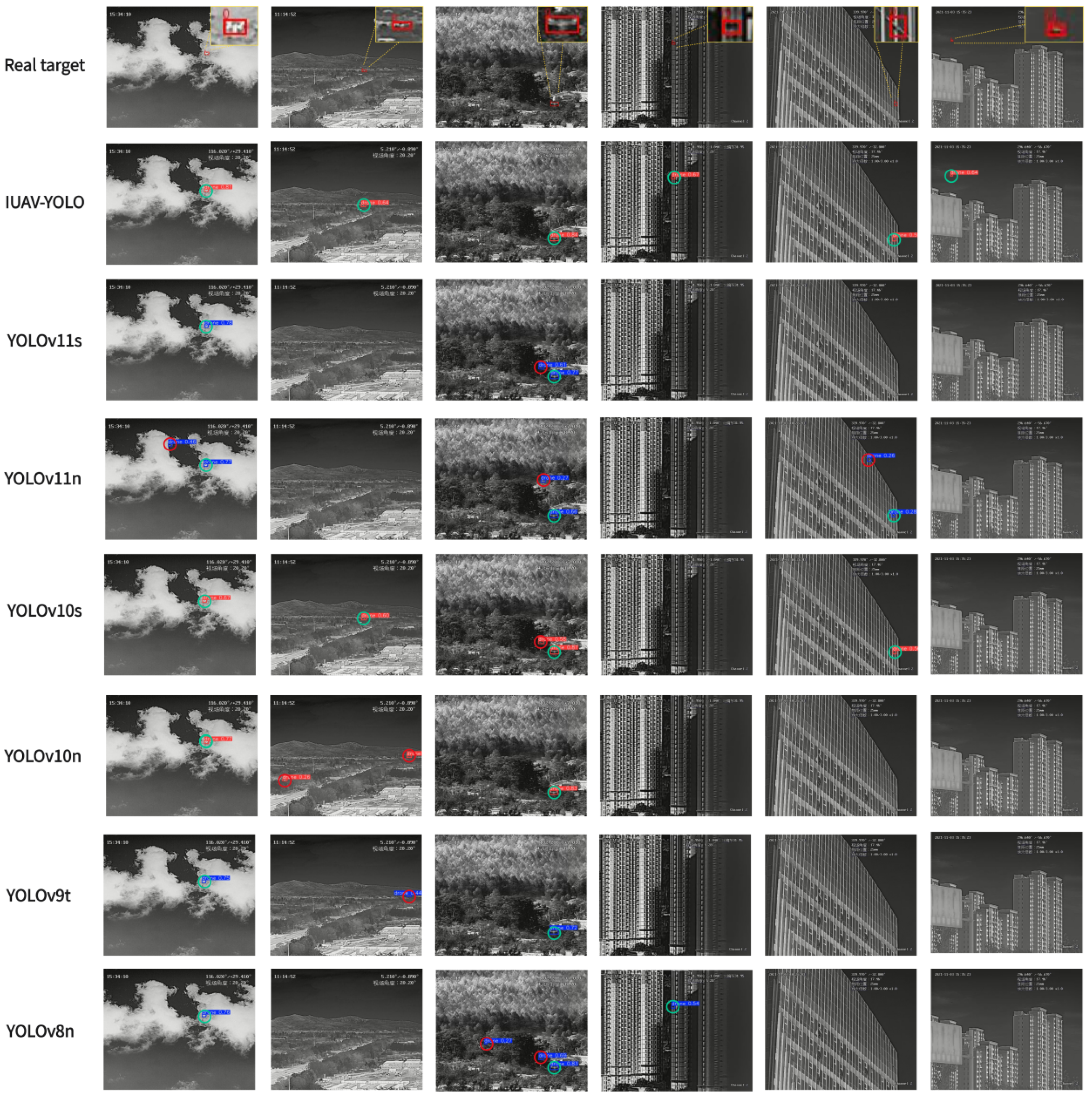

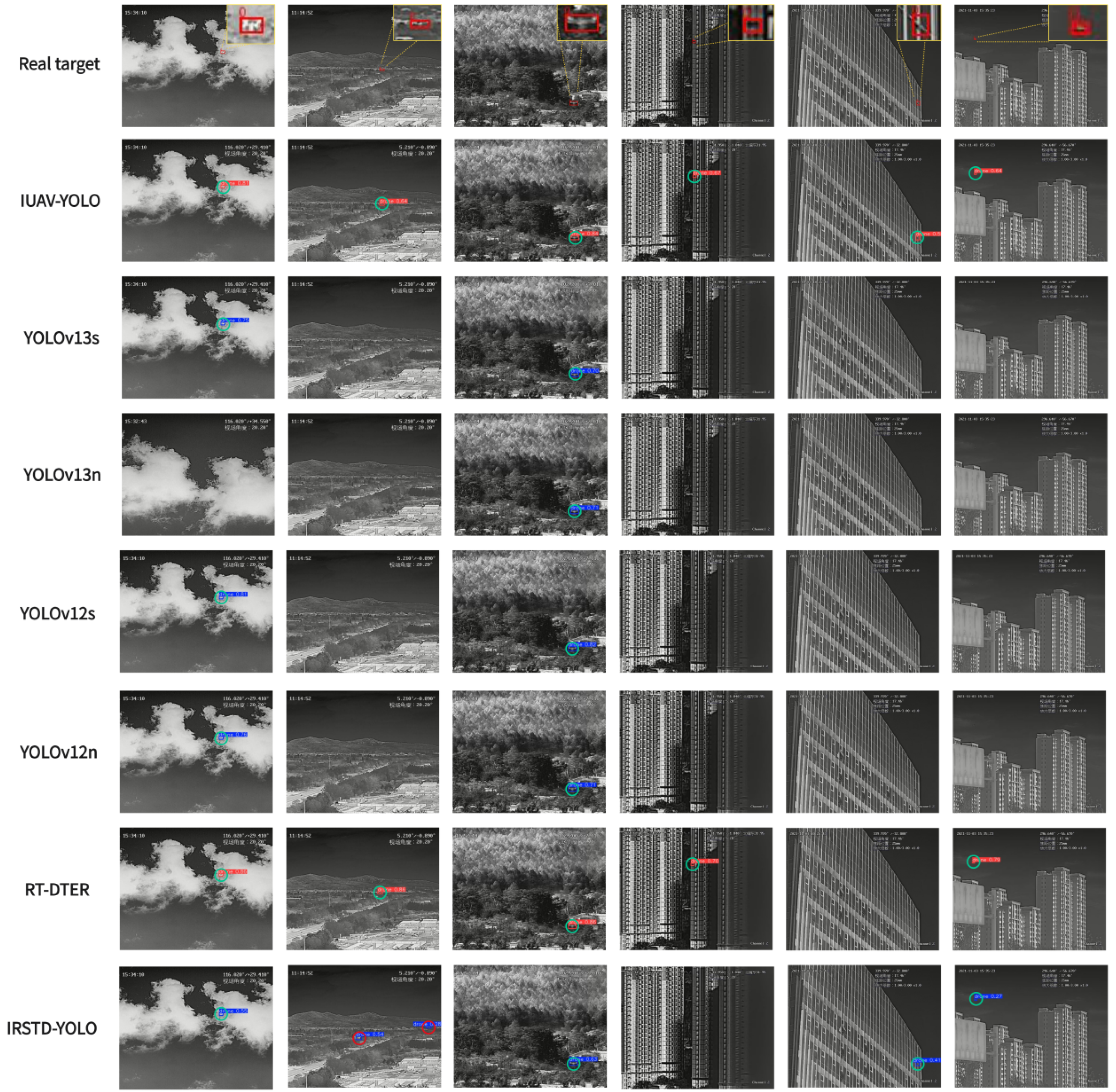

4.4. Comparative Experiment

4.4.1. IRSUAV Dataset

4.4.2. SIRST-UAVB Dataset

4.5. Ablation Experiment

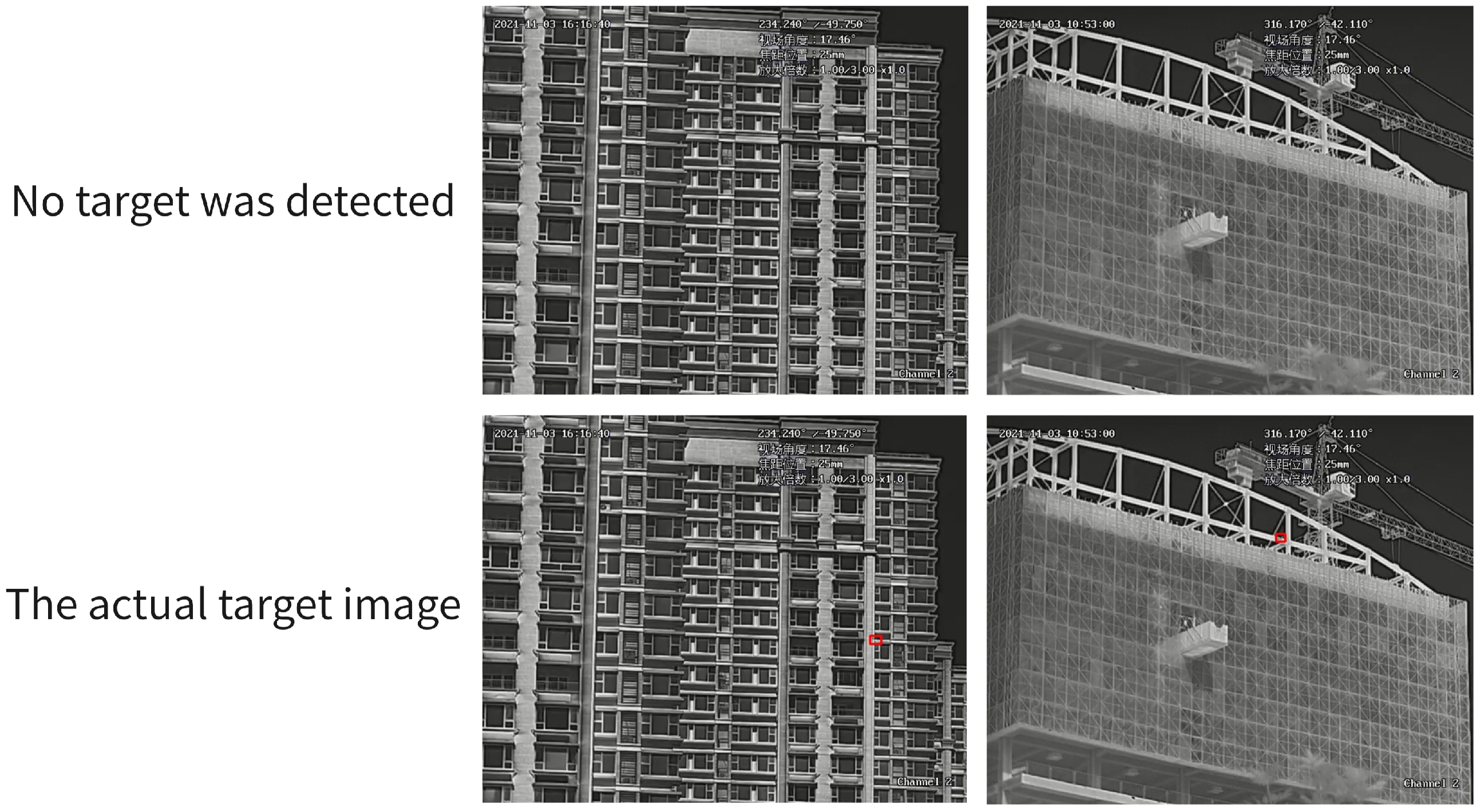

5. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | Description |
|---|---|
| Precision (P) | Ratio of correct positive predictions to all detections. |
| Recall (R) | Ratio of correctly detected positives to all ground-truth positives. |
| mAP50 | Mean Average Precision at an IoU threshold of 0.5. |
| mAP50–95 | Mean Average Precision averaged over IoU thresholds from 0.5 to 0.95. |
| Parameters (Params) | Number of model parameters. |
| Frames Per Second (FPS) | Number of images that can be processed per second. |
| Giga Floating-point Operations (GFLOPs) | Number of floating-point operations, in billions, required to run the model; reflects the model's computational complexity. |
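The precision and recall definitions in the table can be illustrated with a minimal sketch. It assumes boxes in `(x1, y1, x2, y2)` corner format, predictions already sorted by descending confidence, and a greedy one-to-one matching rule; the helper names `iou` and `precision_recall` are hypothetical and are not taken from the paper's code.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    preds: List[Box], gts: List[Box], iou_thr: float = 0.5
) -> Tuple[float, float]:
    """Greedy matching: each ground-truth box can be claimed by one prediction."""
    matched = set()
    tp = 0
    for p in preds:  # assumed sorted by descending confidence
        best_j, best_iou = -1, iou_thr
        for j, g in enumerate(gts):
            if j in matched:
                continue
            v = iou(p, g)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


# The ten IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Toy example: one prediction overlaps a target, the other is a false alarm.
gts = [(10, 10, 20, 20), (40, 40, 48, 48)]
preds = [(11, 11, 21, 21), (100, 100, 108, 108)]
p, r = precision_recall(preds, gts)
print(p, r)  # 0.5 0.5
```

For small targets a shift of a single pixel lowers the IoU noticeably (here a 10×10 box shifted by one pixel in each direction gives 81/119 ≈ 0.68), which is one reason the stricter thresholds in mAP50–95 are harder to satisfy than mAP50.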

| Algorithm | AP0.5 | AP0.5:0.95 | Precision | Recall | Params | FPS | GFLOPs |
|---|---|---|---|---|---|---|---|
| RT-DETR (res18) | 0.836 | 0.420 | 0.910 | 0.808 | 40.5 M | - | 57.2 |
| Cascade R-CNN (res50) | 0.804 | - | - | - | 69.1 M | - | 105.5 |
| Faster R-CNN (res50) | 0.776 | - | - | - | 41.3 M | - | 75.5 |
| YOLOv13s | 0.815 | 0.380 | 0.893 | 0.769 | 18.6 M | - | 20.7 |
| YOLOv13n | 0.795 | 0.368 | 0.883 | 0.721 | 5.4 M | - | 6.2 |
| YOLOv12s | 0.841 | 0.395 | 0.904 | 0.777 | 19.0 M | - | 21.2 |
| YOLOv12n | 0.799 | 0.372 | 0.886 | 0.720 | 5.5 M | - | 6.5 |
| YOLOv11s | 0.845 | 0.384 | 0.916 | 0.794 | 19.2 M | - | 21.3 |
| YOLOv11n | 0.835 | 0.378 | 0.911 | 0.777 | 5.5 M | - | 6.3 |
| YOLOv10s | 0.840 | 0.393 | 0.881 | 0.749 | 16.5 M | - | 24.4 |
| YOLOv10n | 0.810 | 0.374 | 0.877 | 0.738 | 5.8 M | - | 8.2 |
| YOLOv9t | 0.824 | 0.384 | 0.917 | 0.744 | 4.2 M | - | 6.4 |
| YOLOv8s | 0.839 | 0.393 | 0.881 | 0.778 | 20.0 M | - | 23.6 |
| YOLOv8n | 0.833 | 0.383 | 0.902 | 0.765 | 6.3 M | - | 8.1 |
| IRSTD-YOLO | 0.837 | 0.401 | 0.897 | 0.777 | 22.3 M | - | 43.2 |
| IUAV-YOLO (Ours) | 0.853 | 0.400 | 0.909 | 0.794 | 5.1 M | 88.7 | 24.2 |

| Algorithm | Category | AP0.5 | AP0.5:0.95 | Precision | Recall | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|
| YOLOv13s | All | 0.617 | 0.326 | 0.896 | 0.486 | 18.7 | 20.7 |
| | UAV | 0.719 | 0.389 | 0.910 | 0.581 | – | – |
| | Bird | 0.514 | 0.263 | 0.883 | 0.392 | – | – |
| YOLOv13n | All | 0.604 | 0.311 | 0.846 | 0.480 | 5.4 | 6.2 |
| | UAV | 0.711 | 0.374 | 0.888 | 0.587 | – | – |
| | Bird | 0.496 | 0.248 | 0.803 | 0.373 | – | – |
| YOLOv12s | All | 0.640 | 0.346 | 0.861 | 0.512 | 19.0 | 21.2 |
| | UAV | 0.751 | 0.413 | 0.904 | 0.613 | – | – |
| | Bird | 0.529 | 0.278 | 0.818 | 0.402 | – | – |
| YOLOv12n | All | 0.564 | 0.287 | 0.836 | 0.472 | 5.6 | 6.5 |
| | UAV | 0.705 | 0.362 | 0.883 | 0.585 | – | – |
| | Bird | 0.423 | 0.211 | 0.789 | 0.359 | – | – |
| YOLOv11s | All | 0.662 | 0.363 | 0.898 | 0.528 | 19.2 | 21.3 |
| | UAV | 0.764 | 0.430 | 0.938 | 0.611 | – | – |
| | Bird | 0.561 | 0.297 | 0.859 | 0.444 | – | – |
| YOLOv11n | All | 0.601 | 0.315 | 0.841 | 0.494 | 5.5 | 6.3 |
| | UAV | 0.700 | 0.373 | 0.866 | 0.582 | – | – |
| | Bird | 0.502 | 0.257 | 0.815 | 0.405 | – | – |
| YOLOv10s | All | 0.637 | 0.335 | 0.863 | 0.522 | 16.6 | 24.4 |
| | UAV | 0.723 | 0.388 | 0.895 | 0.599 | – | – |
| | Bird | 0.551 | 0.283 | 0.831 | 0.444 | – | – |
| YOLOv10n | All | 0.618 | 0.333 | 0.849 | 0.502 | 5.8 | 8.2 |
| | UAV | 0.725 | 0.393 | 0.896 | 0.586 | – | – |
| | Bird | 0.511 | 0.272 | 0.802 | 0.418 | – | – |
| YOLOv8n | All | 0.618 | 0.325 | 0.867 | 0.490 | 5.7 | 8.1 |
| | UAV | 0.726 | 0.389 | 0.902 | 0.576 | – | – |
| | Bird | 0.510 | 0.261 | 0.832 | 0.405 | – | – |
| IUAV-YOLO (Ours) | All | 0.915 | 0.496 | 0.914 | 0.868 | 5.2 | 24.2 |
| | UAV | 0.956 | 0.555 | 0.957 | 0.912 | – | – |
| | Bird | 0.873 | 0.437 | 0.872 | 0.824 | – | – |
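As a reading aid for the per-category table, the short sketch below checks how the "All" rows relate to the per-class rows. The AP0.5 values are copied from the table; the interpretation that "All" is the unweighted mean over the UAV and Bird classes is an observation from the numbers, not a statement made in the source, and the helper `class_mean` is hypothetical.

```python
# (All, UAV, Bird) AP0.5 values copied from the per-category results table.
rows = {
    "YOLOv13s": (0.617, 0.719, 0.514),
    "YOLOv12s": (0.640, 0.751, 0.529),
    "YOLOv11s": (0.662, 0.764, 0.561),
    "IUAV-YOLO": (0.915, 0.956, 0.873),
}


def class_mean(uav_ap: float, bird_ap: float) -> float:
    """Unweighted mean of the two per-class AP values."""
    return (uav_ap + bird_ap) / 2


for name, (all_ap, uav_ap, bird_ap) in rows.items():
    mean_ap = class_mean(uav_ap, bird_ap)
    # Reported values are rounded to three decimals, so allow ~0.001 slack.
    agrees = abs(mean_ap - all_ap) < 0.0011
    print(f"{name}: class mean = {mean_ap:.4f}, reported All = {all_ap:.3f}, agrees = {agrees}")
```

Under this reading, the weaker Bird class pulls the "All" score down for every detector, so the gap between the UAV and Bird rows is worth checking alongside the aggregate figure.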

| Algorithm | AP0.5 | AP0.5:0.95 | Precision | Recall | Params |
|---|---|---|---|---|---|
| IUAV-YOLO | 0.853 | 0.399 | 0.912 | 0.783 | 5.1 M |
| IUAV-YOLO (w/o FEM) | 0.805 | 0.376 | 0.887 | 0.730 | 8.7 M |
| IUAV-YOLO (w/o PGA) | 0.834 | 0.391 | 0.871 | 0.770 | 4.5 M |
| IUAV-YOLO (w/o SCAM) | 0.834 | 0.387 | 0.892 | 0.773 | 4.7 M |
| YOLOv10n (Baseline) | 0.810 | 0.374 | 0.877 | 0.738 | 5.8 M |

| Algorithm | AP0.5 | AP0.5:0.95 | Precision | Recall | Params |
|---|---|---|---|---|---|
| YOLOv10n (Baseline) | 0.810 | 0.374 | 0.877 | 0.738 | 5.8 M |
| YOLOv10n + FEM | 0.816 | 0.386 | 0.845 | 0.750 | 4.1 M |
| YOLOv10n + FEM + PGA | 0.834 | 0.387 | 0.892 | 0.773 | 4.7 M |
| YOLOv10n + FEM + PGA + SCAM (IUAV-YOLO) | 0.853 | 0.399 | 0.912 | 0.783 | 5.1 M |
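The incremental ablation can be summarized as changes relative to the YOLOv10n baseline. The sketch below is only arithmetic on the AP0.5 and parameter counts reported in the table; the function name `deltas` and the dictionary keys are hypothetical.

```python
# Baseline and incremental configurations, values copied from the ablation table.
baseline = {"ap50": 0.810, "params_m": 5.8}
steps = [
    ("+ FEM", 0.816, 4.1),
    ("+ FEM + PGA", 0.834, 4.7),
    ("+ FEM + PGA + SCAM", 0.853, 5.1),
]


def deltas(ap50: float, params_m: float) -> tuple:
    """Return (absolute AP0.5 gain, parameter change in percent) vs. the baseline."""
    d_ap = ap50 - baseline["ap50"]
    d_params_pct = 100 * (params_m - baseline["params_m"]) / baseline["params_m"]
    return round(d_ap, 3), round(d_params_pct, 1)


for name, ap50, params_m in steps:
    d_ap, d_params = deltas(ap50, params_m)
    print(f"{name}: AP0.5 {d_ap:+.3f}, params {d_params:+.1f}%")
# + FEM: AP0.5 +0.006, params -29.3%
# + FEM + PGA: AP0.5 +0.024, params -19.0%
# + FEM + PGA + SCAM: AP0.5 +0.043, params -12.1%
```

Read this way, the full configuration gains 0.043 AP0.5 over the baseline while still using about 12% fewer parameters.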

| Algorithm | AP0.5 | AP0.5:0.95 | Precision | Recall | Params |
|---|---|---|---|---|---|
| PGA | 0.853 | 0.399 | 0.912 | 0.783 | 5.1 M |
| SPPFimprov | 0.837 | 0.392 | 0.887 | 0.788 | 4.5 M |
| SPPFattention | 0.838 | 0.394 | 0.892 | 0.776 | 4.7 M |

| Algorithm | AP0.5 | AP0.5:0.95 | Precision | Recall | Params |
|---|---|---|---|---|---|
| Solid red line | 0.853 | 0.399 | 0.912 | 0.783 | 5.1 M |
| Black dashed line | 0.849 | 0.424 | 0.779 | 0.849 | 5.1 M |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, L.; Zhang, Y.; Li, Y.; Zhong, H. Global and Local Context-Aware Detection for Infrared Small UAV Targets. Drones 2025, 9, 804. https://doi.org/10.3390/drones9110804
