Highlights

What are the main findings?

- We integrate three technical paradigms of AUV cluster cooperative path planning, clarifying their evolutionary logic, applicability boundaries, and cross-disciplinary integration pathways.

- We identify the core bottleneck of “lack of realism in training environments,” which restricts algorithm migration from simulation to real-sea applications, and summarize the key challenges of the past 20 years.

What is the implication of the main finding?

- It provides a panoramic reference for researchers by synthesizing multiple core studies over two decades, bridging the gap between theoretical fragmentation and engineering practice in the field.

- It guides future directions for practical deployment, including high-fidelity marine environment modeling, lightweight algorithm design, and dynamic coupling mechanisms of hybrid paradigms to enhance real-world adaptability of AUV clusters.

Abstract

Cooperative path planning is recognized as a critical technology for Autonomous Underwater Vehicle (AUV) clusters to execute complex marine operations. Through multi-AUV cooperative decision-making, the perception limitations of individual robots can be mitigated, thereby significantly enhancing the efficiency of tasks such as deep-sea resource exploration and submarine infrastructure maintenance. However, the underwater environment is characterized by severe disturbances and limited communication, making cooperative path planning for AUV clusters particularly challenging. The field is still at an early research stage, and there is an urgent need to integrate scattered technical achievements to provide theoretical references and directional guidance for researchers. Based on representative studies published in recent years, this paper reviews research progress in three major technical domains: heuristic optimization, reinforcement and deep learning, and graph neural networks integrated with distributed control. The advantages and limitations of the different technical approaches are elucidated. Beyond the individual algorithms, the evolutionary logic and applicable scenarios of each technical school are analyzed. Furthermore, the lack of realism in algorithm training environments is identified as a major bottleneck in cooperative path planning for AUV clusters, significantly limiting the transferability of algorithms from simulation-based validation to real-sea applications. This paper aims to comprehensively outline the current research status and development context of AUV cluster cooperative path planning and to propose potential future research directions.

1. Introduction

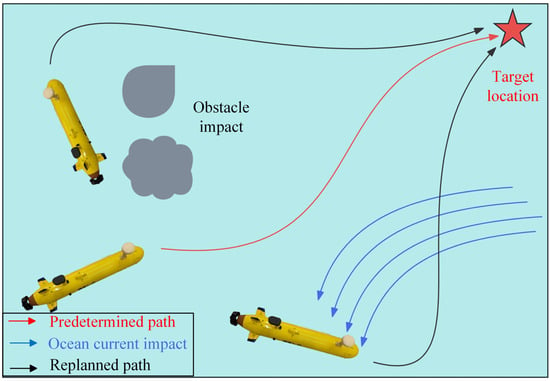

Autonomous Underwater Vehicles (AUVs) are unmanned systems that integrate environmental perception, autonomous decision-making, and dynamic control. They play a vital role in deep-sea resource exploration, underwater rescue, and marine environmental monitoring [1]. However, single-AUV path planning is constrained by limited perception range and insufficient dynamic obstacle avoidance capability, making it difficult to meet the demands of large-scale and multi-objective underwater missions. To address these limitations, AUV cluster systems have been proposed as a promising research direction. Through cooperative control algorithms, these systems enable multi-agent task allocation, conflict avoidance, and coordinated path planning. Such cooperation significantly improves task efficiency in dynamic and uncertain marine environments, including those affected by ocean currents and sudden obstacles [2]. As the core technology of multi-AUV mission execution, cooperative path planning directly affects environmental adaptability, operational efficiency, and energy utilization, and it has therefore become a research focus in cooperative control for underwater equipment.

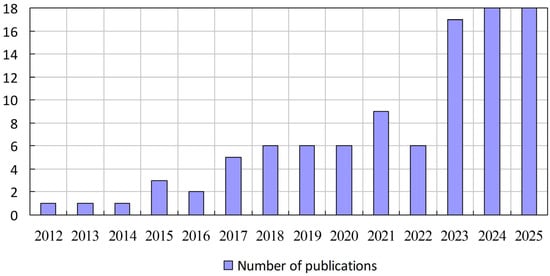

According to statistics from the Web of Science (WOS) database, a total of 99 papers related to “multi-AUV” and “path planning” were published between 2012 and October 2025. Figure 1 presents the number and trend of publications. Annual output rose from one paper in 2012 to eighteen in 2025 (as of October), reflecting the rapid development of this research field. Despite this progress, several challenges remain. First, migrating algorithms from simulation to real AUVs remains difficult: many algorithms perform well in idealized simulation environments but degrade in real marine conditions characterized by high noise and strong interference. Second, multi-objective optimization still faces the problem of weight quantification. Objective criteria for the relative importance of factors such as path length, energy consumption, and obstacle avoidance safety are lacking, so parameters are adjusted manually for different mission scenarios. Third, environmental modeling techniques cannot accurately represent real ocean conditions. Most studies rely on simplified models of flow fields and terrain, with insufficient attention to complex features such as high pressure, low temperature, and varying salinity in deep-sea or polar environments. Finally, heterogeneous AUV clusters exhibit diverse performance requirements, which further increases the complexity of designing cooperative path planning algorithms.

Figure 1.

Number and trend of papers related to AUV cluster path planning indexed by WOS since the database’s inception (as of October 2025). Source: WOS.

Several review studies [3,4,5] have summarized the progress of multi-AUV cooperative path planning and formation control in recent years. However, current reviews mainly focus on isolated algorithmic categories and lack a unified comparison of how different paradigms perform under real-sea conditions. Most existing reviews also overlook the relationship between algorithmic principles and their real-world applicability, and few have examined how different paradigms adapt to dynamic currents and communication constraints. Therefore, a clear research gap exists in understanding the transferability and robustness of cooperative path planning algorithms across environments. This review aims to fill this gap by providing a cross-paradigm synthesis that connects algorithmic evolution with real-sea adaptability. A unified analytical framework is introduced to evaluate heuristic optimization, reinforcement learning, and graph neural network methods under consistent criteria of complexity, robustness, and energy efficiency.

This paper takes AUV cluster cooperative path planning algorithms as its core research object and traces the technological evolution and research paradigms of this field over the past 20 years. Based on differences in algorithmic principles and implementation approaches, it focuses on the three current mainstream technical schools: heuristic optimization algorithms, reinforcement learning and deep learning methods, and graph neural networks with distributed control strategies. The comparison framework is built on three core dimensions: algorithmic complexity, environmental robustness, and energy efficiency. These dimensions are applied consistently in later sections to ensure a standardized and transparent evaluation across algorithmic paradigms.

To ensure methodological transparency, a semi-systematic review approach was employed. Literature data were collected from four major databases: Web of Science, IEEE Xplore, ScienceDirect, and SpringerLink. The search keywords “multi-AUV” and “path planning” were used. The retrieved papers were screened through a three-step procedure. First, titles were reviewed to exclude irrelevant publications. Second, abstracts were examined to remove studies unrelated to algorithmic methods. Finally, full texts were evaluated to include only those works that addressed both multi-AUV systems and path planning, including relevant review papers.

A total of 103 papers were finally identified and analyzed. Among them, representative and highly cited studies were selected for explicit citation to maintain conciseness, while all retrieved works were incorporated into the overall analytical synthesis to ensure comprehensiveness and representativeness.

Although underwater and aerial environments differ substantially in communication latency, sensor types, and dynamic disturbances, research on Unmanned Aerial Vehicle (UAV) swarm cooperative path planning provides important cross-domain references for AUV clusters. In particular, at the level of multi-agent cooperative control algorithms, the core logic of distributed decision-making and dynamic path optimization developed for UAV swarms can be transferred to AUV cluster scenarios. For example, the multi-agent deep reinforcement learning framework proposed for multi-UAV systems achieves individual trajectory optimization and global coordination through distributed policy gradients, providing new insights into real-time path adjustment for AUV clusters in communication-constrained environments [6]. In addition, the improved artificial potential field method applied in multi-UAV formation control balances target attraction and obstacle repulsion through dynamic potential field functions, and its handling of multi-agent motion constraints in complex environments can inform path planning for underwater clusters [7]. These UAV swarm studies indicate that the cooperative control logic of both learning-based algorithms and traditional heuristic methods transfers across scenarios; however, parameter adjustments and model modifications are required to account for the specific characteristics of underwater environments.
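The attraction-repulsion balance mentioned for [7] can be illustrated with the classic artificial potential field rather than the improved dynamic variant used in that work. The following is a minimal sketch, assuming point obstacles and illustrative gains `k_att` and `k_rep`, an influence radius `rho0`, and a fixed step length; the function name `apf_step` is hypothetical.

```python
import numpy as np


def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=100.0, rho0=5.0, step=0.1):
    """One fixed-length step along the total force of a classic artificial potential field.

    pos, goal: 2D or 3D positions; obstacles: iterable of point obstacles.
    k_att, k_rep, rho0 (repulsion influence radius) and step are illustrative values.
    """
    pos = np.asarray(pos, dtype=float)
    # Attractive force: negative gradient of 0.5 * k_att * |pos - goal|^2
    force = -k_att * (pos - np.asarray(goal, dtype=float))
    for obs in obstacles:
        diff = pos - np.asarray(obs, dtype=float)
        rho = np.linalg.norm(diff)
        if 0.0 < rho < rho0:
            # Repulsive force: negative gradient of 0.5 * k_rep * (1/rho - 1/rho0)^2
            force += k_rep * (1.0 / rho - 1.0 / rho0) * diff / rho**3
    norm = np.linalg.norm(force)
    # Fixed-length step along the total force direction (no move if forces cancel)
    return pos if norm == 0.0 else pos + step * force / norm
```

Calling `apf_step` repeatedly until the vehicle is within a tolerance of the goal yields a trajectory. The fixed step length and the local minima of the classic field are precisely the weaknesses that improved potential-field designs aim to address.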

This paper analyzes the underlying causes of the gap between theoretical exploration and engineering practice in AUV cluster path planning, identifying key opportunities and technical challenges. The main contributions are summarized as follows:

- To the best of our knowledge, this is the first study that achieves cross-dimensional integration of research from three algorithmic paradigms over the past two decades. By tracing the evolution of representative algorithms, a panoramic view of the field is provided.

- By focusing on algorithmic principles, environmental adaptability, and cooperative mechanisms, this paper compares the strengths and weaknesses of different paradigms under dynamic ocean current interference and communication topology variations, emphasizing breakthroughs in highly cited works from the last five years.

- Based on technological evolution and application demands, this study outlines current research opportunities and challenges for multi-AUV cooperative path planning.

The main innovation of this study lies in establishing a unified comparative framework that integrates heuristic optimization, reinforcement learning, and graph neural networks under consistent evaluation criteria. This framework provides a structured foundation for understanding the transition from simulation-level validation to real-sea deployment, underscoring the critical role of environmental modeling and communication-aware coordination.

The remainder of this paper is structured as follows: Section 2 reviews heuristic optimization algorithms; Section 3 discusses advances in reinforcement and deep learning methods; Section 4 analyzes graph neural networks and distributed control strategies; Section 5 outlines future research directions; and Section 6 concludes the paper.

2. Heuristic Optimization Algorithms

Heuristic optimization algorithms have long been regarded as a fundamental paradigm for path planning in AUV clusters. Many of these methods are inspired by biological swarm intelligence and use probabilistic search mechanisms to find near-optimal paths under uncertain and complex marine conditions. They have been widely applied in tasks such as pipeline inspection, seabed exploration, and underwater rescue, where nonlinear constraints and unpredictable environmental disturbances are common.

Compared with traditional single-vehicle planning techniques, heuristic algorithms offer several notable advantages. First, stochastic exploration enables rapid approximation of the global optimum without exhaustive search, which makes these algorithms suitable for large-scale mission environments. Second, distributed parallel computation can be achieved naturally, allowing multiple AUVs to perform local optimization simultaneously and thereby improving overall planning efficiency. Third, adaptive parameter adjustment allows real-time responses to environmental variations, helping to maintain trajectory stability under ocean current disturbances.

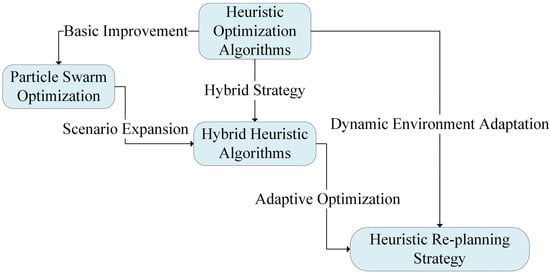

Recent research has emphasized balancing four optimization objectives: path accuracy, energy efficiency, temporal constraints, and robustness. Together, these objectives define the optimization focus of heuristic approaches. Building on these principles, three major algorithmic frameworks have been developed: swarm intelligence algorithms, hybrid evolutionary algorithms, and heuristic re-planning strategies, as illustrated in Figure 2. Their improvement mechanisms, applicable conditions, and performance limitations are analyzed in the following subsections.

Figure 2.

Relationships of Heuristic Optimization Algorithms.
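The four objectives are often combined into a single scalar fitness that a heuristic optimizer minimizes. The sketch below assumes a weighted-sum scalarization, a uniform current, a constant through-water speed, a drag-based energy proxy (power proportional to speed cubed), path length as a stand-in for path quality, and waypoint-to-obstacle clearance as the robustness term; `path_cost` and all of its parameters are hypothetical. The `weights` tuple is where the manual weight tuning noted in the Introduction enters.

```python
import numpy as np


def path_cost(waypoints, current, speed, deadline, obstacles, d_safe,
              weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted-sum fitness of one candidate path (lower is better).

    Assumes consecutive waypoints are distinct, a uniform current vector,
    and a constant through-water speed `speed`.
    """
    pts = np.asarray(waypoints, dtype=float)
    seg = np.diff(pts, axis=0)
    seg_len = np.linalg.norm(seg, axis=1)
    length = seg_len.sum()                                  # path-length term
    # Ground speed: through-water speed plus the current component along each
    # segment (cross-current ignored), floored so travel time stays finite
    along = (seg / seg_len[:, None]) @ np.asarray(current, dtype=float)
    ground = np.maximum(speed + along, 0.1 * speed)
    travel_time = (seg_len / ground).sum()
    energy = speed**3 * travel_time      # drag power ~ speed^3, integrated over time
    lateness = max(0.0, travel_time - deadline)             # temporal term
    risk = 0.0                                              # safety/robustness term
    if len(obstacles):
        obs = np.asarray(obstacles, dtype=float)
        # Distance from every waypoint to every obstacle; penalize intrusions into d_safe
        d = np.linalg.norm(pts[:, None, :] - obs[None, :, :], axis=2)
        risk = np.maximum(0.0, d_safe - d.min(axis=1)).sum()
    terms = np.array([length, energy, lateness, risk])
    return float(np.dot(weights, terms)), terms
```

Because travel time and energy both derive from the ground speed, a favorable current lowers both terms. With a constant through-water speed, energy here is simply proportional to travel time; a more detailed vehicle model would decouple them.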

2.1. Improvements and Extensions of Particle Swarm Optimization Algorithms

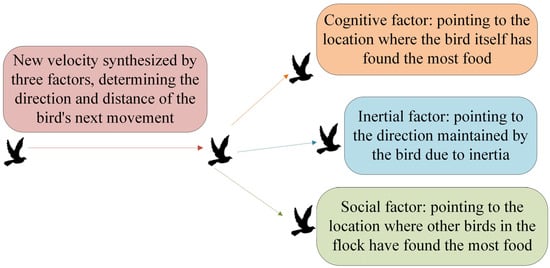

The Particle Swarm Optimization (PSO) algorithm performs parallel path optimization by simulating the foraging behavior of bird flocks, as illustrated in Figure 3. Owing to its strong global search capability and flexible parameter adjustment, PSO has become one of the core algorithms for AUV cluster path planning in large-scale and complex marine environments. However, the traditional PSO algorithm is prone to premature convergence and entrapment in local optima. These issues have motivated further studies focused on maintaining population diversity and improving dynamic adaptability.

Figure 3.

Particle Swarm Optimization Algorithm Simulating Bird Flock Foraging Behavior.

In the standard PSO process, a population of particles is initialized at random. Each particle iteratively updates its velocity and position under the influence of inertia, cognitive, and social components. The fitness of each particle is evaluated at every iteration, and the personal-best and global-best positions are updated until the swarm converges toward a near-optimal path.
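The update loop described above can be sketched as a standard global-best PSO with constriction-style coefficients (w ≈ 0.729, c1 = c2 ≈ 1.494, a common textbook choice rather than values from the cited works). The function `pso` is a hypothetical, self-contained sketch that minimizes any objective over a box; for path planning, the decision vector would hold the flattened coordinates of intermediate waypoints and the objective would be a path cost.

```python
import numpy as np


def pso(objective, lower, upper, n_particles=30, n_iter=200,
        w=0.729, c1=1.494, c2=1.494, seed=0):
    """Minimize `objective` over the box [lower, upper] with global-best PSO."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    # Random initial positions, zero initial velocities
    x = rng.uniform(lower, upper, size=(n_particles, dim))
    v = np.zeros_like(x)
    pbest = x.copy()
    pbest_val = np.array([objective(p) for p in x])
    g = pbest[np.argmin(pbest_val)].copy()
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Inertia + cognitive (own best) + social (global best) components
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, lower, upper)
        vals = np.array([objective(p) for p in x])
        # Update personal bests, then the global best
        improved = vals < pbest_val
        pbest[improved] = x[improved]
        pbest_val[improved] = vals[improved]
        g = pbest[np.argmin(pbest_val)].copy()
    return g, float(pbest_val.min())
```

The random factors `r1` and `r2` are drawn independently per particle and dimension, and positions are clipped to the search box. Multi-population variants would run several such swarms and periodically exchange particles between them.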

Recent studies have introduced various improvements to enhance PSO’s convergence performance and adaptability to dynamic marine environments. Zhi et al. [8] proposed an Adaptive Multi-Population PSO (AMP-PSO) that employs a hierarchical evolution mechanism with leader–follower subpopulations and periodic particle exchange to maintain diversity and avoid premature convergence. This approach significantly reduced path length in 3D simulations. Building on this idea, Wang et al. [9] developed an Adaptive Hybrid PSO that integrates environmental feedback to dynamically adjust learning coefficients, thereby achieving greater stability under unsteady ocean current conditions.

Sun et al. [10] presented an Improved Multi-Objective PSO (IMOPSO) for 3D vortex environments, combining a sine double-sequence initialization with an adaptive differential evolution operator to improve cooperative behavior and stability in obstacle-rich regions. Liu et al. [11] proposed a Multi-Cluster Cooperative Optimization (MCO) algorithm, enabling cross-population communication via dynamic particle sharing, which improved task completion rates in complex 3D simulations. Furthermore, Zhang et al. [12] introduced a Multi-Objective PSO with an adaptive energy-consumption model, demonstrating lower energy loss during cooperative maneuvers under varying flow velocities.

Overall, these improvements provide complementary advantages: AMP-PSO and IMOPSO effectively enhance convergence and solution diversity, while the Hybrid PSO and energy-aware PSO exhibit better robustness in unsteady flow fields. The MCO algorithm improves coordination efficiency through inter-population communication, although its performance degrades under communication loss. Despite these advances, most methods remain validated only under controlled simulation conditions, lacking real-sea testing and comprehensive robustness assessments involving sensor noise or communication uncertainty.

In summary, the major limitation of current PSO-based approaches lies in their dependency on manually tuned parameters and limited adaptability to real-time environmental variations. Without adaptive parameter mechanisms that respond to environmental feedback, these algorithms exhibit reduced scalability and resilience in real marine operations.

2.2. Hybrid Heuristic Algorithms

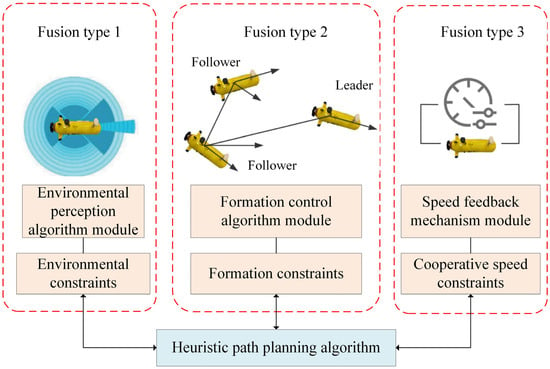

Hybrid heuristic algorithms integrate the core mechanisms of different optimization methods to achieve complementary advantages. They are designed to handle multi-objective optimization problems in complex marine environments. Existing studies have mainly proposed three integration types, as illustrated in Figure 4.

Figure 4.

Main integration forms of hybrid heuristic algorithms.

The first type is the integration of environmental perception and path planning. Mu et al. [13] proposed a three-level integration framework combining an improved Dijkstra algorithm, PSO, and the ELKAI solver, which couples marine acoustic models with path optimization to address multi-AUV coverage path planning (CPP). The detection range is calculated using the sonar equation to generate coverage sampling points. An adjacency matrix that accounts for ocean currents is then built using the improved Dijkstra algorithm. Task assignment is performed by PSO, and the ELKAI solver generates the final paths. Simulation results in thermocline environments show improved coverage compared with traditional grid methods. However, the simplified modeling of seabed topography causes larger detection errors in reef-dense areas.
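The current-aware adjacency step can be illustrated with Dijkstra's algorithm on an 8-connected grid whose edge weights are travel times rather than distances. This is a generic sketch, not the improved Dijkstra of [13]: it assumes the vehicle holds a constant through-water speed, crabs into cross-currents to stay on each edge, and experiences the current of the cell it departs from. `current_aware_dijkstra` is a hypothetical name.

```python
import heapq
import math


def current_aware_dijkstra(grid, current, speed, start, goal):
    """Minimum-travel-time path on a 2D occupancy grid under a current field.

    grid[r][c] == 1 marks an obstacle; current[r][c] = (cu, cv) is the current
    velocity (cells/s; cu along columns, cv along rows) in the departure cell.
    """
    rows, cols = len(grid), len(grid[0])
    moves = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    best = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        t, node = heapq.heappop(heap)
        if node == goal:
            break
        if t > best[node]:
            continue                          # stale heap entry
        r, c = node
        cu, cv = current[r][c]
        for dr, dc in moves:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue
            length = math.hypot(dr, dc)
            ex, ey = dc / length, dr / length     # unit direction of the edge
            c_par = cu * ex + cv * ey             # current component along the edge
            c_perp_sq = max(0.0, cu * cu + cv * cv - c_par * c_par)
            if speed * speed <= c_perp_sq:
                continue                          # cross-current too strong to hold track
            ground = c_par + math.sqrt(speed * speed - c_perp_sq)
            if ground <= 1e-9:
                continue                          # no headway against the current
            nt = t + length / ground
            if nt < best.get((nr, nc), math.inf):
                best[(nr, nc)] = nt
                prev[(nr, nc)] = node
                heapq.heappush(heap, (nt, (nr, nc)))
    if goal not in best:
        return None, math.inf
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], best[goal]
```

An edge becomes unusable when the cross-current exceeds the vehicle speed or the along-track current cancels all headway, so the same grid can yield different routes as the current field changes.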

Recent studies have expanded these hybrid frameworks by combining evolutionary computation with classical geometric planners. Genetic-based and ant-colony-based hybrid models combined with A-star (A*) and Dijkstra algorithms have been developed to enhance global path optimality and convergence speed [14,15,16,17,18]. These algorithms use adaptive encoding, pheromone reinforcement, and trajectory-smoothing techniques to produce shorter and smoother paths in dynamic ocean currents. Other studies [19,20,21,22] have merged information theory and graph optimization with heuristic sampling to improve spatial coverage and obstacle perception. By fusing mutual information, entropy-based evaluation, and RRT* or A*-based planning, these approaches have achieved higher exploration efficiency and better safety in uncertain underwater environments.

The second integration form is the cooperative optimization of formation control and path search. Feng et al. [23] developed a Variable-Width A* (VWA*) algorithm that performs joint decision-making for collision-free path planning and formation adjustment by adding a formation structure dimension to the state space. The algorithm dynamically adjusts inter-vehicle distances according to environmental complexity and employs a multi-objective cost function to guide the search. It shows improved formation stability in dynamic obstacle conditions and reduced navigation time. However, its dependence on predefined formation libraries limits its adaptability. When obstacle density is high, formation-switching stability declines.
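Adding a formation dimension to the search state can be illustrated with A* over `(row, col, mode)` states. In this minimal sketch, which is not the VWA* algorithm of [23], a formation mode is allowed only where free-space clearance meets that mode's requirement, narrow formations pay a per-length penalty (for example, for reduced sensor coverage), and switching formation costs a fixed amount. The names `clearance_map` and `formation_astar` and all numeric values are hypothetical.

```python
import heapq
import math


def clearance_map(grid):
    """Chebyshev distance (cells) from each free cell to the nearest obstacle or map edge."""
    rows, cols = len(grid), len(grid[0])
    obstacles = [(r, c) for r in range(rows) for c in range(cols) if grid[r][c]]
    clear = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c]:
                continue
            edge = min(r + 1, c + 1, rows - r, cols - c)
            nearest = min((max(abs(r - orow), abs(c - ocol)) for orow, ocol in obstacles),
                          default=edge)
            clear[r][c] = min(edge, nearest)
    return clear


def formation_astar(grid, start, goal, need=(1, 2), mode_penalty=(0.5, 0.0), switch_cost=1.0):
    """A* over (row, col, formation_mode) states (mode 0 = narrow, mode 1 = wide).

    need[k]: clearance required by mode k; mode_penalty[k]: extra cost per unit
    length in mode k; switch_cost: cost of changing formation in place.
    """
    clear = clearance_map(grid)
    rows, cols = len(grid), len(grid[0])
    moves = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]

    def feasible(r, c, k):
        return 0 <= r < rows and 0 <= c < cols and not grid[r][c] and clear[r][c] >= need[k]

    def h(r, c):
        # Euclidean distance: admissible because every move costs at least its length
        return math.hypot(goal[0] - r, goal[1] - c)

    g, prev, heap = {}, {}, []
    for k in range(len(need)):
        if feasible(start[0], start[1], k):
            s = (start[0], start[1], k)
            g[s] = 0.0
            heapq.heappush(heap, (h(*start), 0.0, s))
    while heap:
        _, cost, s = heapq.heappop(heap)
        if cost > g[s]:
            continue                              # stale heap entry
        r, c, k = s
        if (r, c) == tuple(goal):
            path = [s]
            while path[-1] in prev:
                path.append(prev[path[-1]])
            return path[::-1], cost
        # Successors: move while keeping the formation, or switch formation in place
        succ = [((r + dr, c + dc, k), math.hypot(dr, dc) * (1.0 + mode_penalty[k]))
                for dr, dc in moves]
        succ += [((r, c, j), switch_cost) for j in range(len(need)) if j != k]
        for ns, step in succ:
            if not feasible(*ns):
                continue
            new_cost = cost + step
            if new_cost < g.get(ns, math.inf):
                g[ns] = new_cost
                prev[ns] = s
                heapq.heappush(heap, (new_cost + h(ns[0], ns[1]), new_cost, ns))
    return None, math.inf
```

On a map with a single narrow gap, this search keeps the wide formation in open water, contracts it only around the gap, and expands again afterwards, because two switches cost less than traversing the whole route in the penalized narrow mode.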

Beyond formation coordination, several multi-heuristic cooperative optimization algorithms have been developed to balance objectives such as energy efficiency, safety, and mission time. Representative studies [24,25,26,27] have combined simulated annealing, tabu search, fuzzy logic, immune optimization, and differential evolution. These methods adopt adaptive neighborhood structures and multi-criteria selection rules, which improve global convergence and reduce computational cost in large-scale multi-AUV mission planning. In addition, adaptive hybrid algorithms [28,29,30,31,32,33] have been proposed for dynamic marine environments. By fusing heuristic search with adaptive control or neuro-fuzzy regulation, these algorithms enable online re-planning under variable currents and moving obstacles, thereby enhancing robustness and response speed.
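The acceptance rule of simulated annealing, one of the components listed above, can be illustrated on a simple sub-problem: ordering the task points that a single AUV must visit. This is a generic sketch with an assumed 2-opt-style reversal neighborhood and geometric cooling; `anneal_order`, `tour_length`, and their parameters are hypothetical, and the sketch requires at least three points.

```python
import math
import random


def tour_length(points, order):
    """Length of the open path that visits points in the given order."""
    return sum(math.dist(points[order[i]], points[order[i + 1]]) for i in range(len(order) - 1))


def anneal_order(points, t0=10.0, alpha=0.995, n_iter=5000, seed=0):
    """Simulated annealing over the visiting order, with points[0] as the fixed start."""
    rng = random.Random(seed)
    order = list(range(len(points)))
    cost = tour_length(points, order)
    best, best_cost = order[:], cost
    t = t0
    for _ in range(n_iter):
        # Neighbour: reverse a segment, keeping the start point fixed
        i, j = sorted(rng.sample(range(1, len(points)), 2))
        cand = order[:i] + order[i:j + 1][::-1] + order[j + 1:]
        c = tour_length(points, cand)
        # Metropolis rule: always accept improvements, accept worse moves with prob exp(-delta/T)
        if c < cost or rng.random() < math.exp(-(c - cost) / t):
            order, cost = cand, c
            if cost < best_cost:
                best, best_cost = order[:], cost
        t = max(t * alpha, 1e-6)              # geometric cooling with a floor
    return best, best_cost
```

Accepting worse orders with probability exp(-Δ/T) lets the search escape local optima early on; as T decays, the method becomes nearly greedy.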

The third integration form is the fusion of swarm intelligence and speed regulation mechanisms. Yin et al. [34] combined ant colony optimization with an equal-time waypoint method to generate candidate path sets using random sampling and introduced a dynamic speed adjustment module to improve conflict avoidance. In multi-AUV conflict scenarios, this approach outperforms traditional particle swarm optimization in energy efficiency and conflict reduction. However, its fixed waypoint intervals limit adaptability to complex terrain and may lead to local optima.
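The pheromone mechanism applied to randomly sampled candidate paths can be illustrated as ant-colony selection over a fixed candidate set. This minimal sketch assumes each candidate has already been scored with a scalar cost and omits the equal-time waypoint construction and speed adjustment module of [34]; `aco_select` and its parameters are hypothetical.

```python
import numpy as np


def aco_select(costs, n_ants=10, n_iter=100, alpha=1.0, beta=2.0, rho=0.1, q=1.0, seed=0):
    """Ant-colony selection among pre-sampled candidate paths (lower cost is better)."""
    rng = np.random.default_rng(seed)
    costs = np.asarray(costs, dtype=float)
    tau = np.ones_like(costs)          # pheromone level per candidate path
    eta = 1.0 / costs                  # heuristic desirability
    for _ in range(n_iter):
        # Selection probability proportional to tau^alpha * eta^beta
        p = tau**alpha * eta**beta
        p /= p.sum()
        choices = rng.choice(len(costs), size=n_ants, p=p)
        tau *= (1.0 - rho)             # evaporation
        # Deposit: each ant adds q / cost on its chosen path, so cheaper paths gain more
        np.add.at(tau, choices, q / costs[choices])
    return int(np.argmax(tau)), tau
```

Evaporation keeps early random choices from locking in permanently, while the cost-scaled deposit creates the positive feedback that concentrates pheromone on the cheapest candidates.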

Biologically inspired neural dynamic approaches [35,36,37] have also been integrated into hybrid frameworks to model target attraction and obstacle repulsion in unknown 3D underwater environments. These neurodynamic models simulate continuous activity landscapes that guide AUV movement and provide self-adaptive obstacle avoidance without pre-defined potential functions. When combined with evolutionary or fuzzy controllers, they achieve smoother trajectories and better adaptability in disturbed flow conditions.
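The activity landscape of such neurodynamic models can be illustrated with the classic shunting equation used in bio-inspired path planning, dx_i/dt = -A x_i + (B - x_i)([I_i]^+ + Σ_j w_ij [x_j]^+) - (D + x_i)[I_i]^-, where the target receives a large positive input and obstacles a large negative one. The sketch below is a 2D simplification with forward-Euler integration, lateral weights of μ divided by the neighbor distance, and illustrative constants; it is not the specific model of [35,36,37], and `shunting_activity` and `follow_gradient` are hypothetical names.

```python
import numpy as np


def shunting_activity(grid, target, A=10.0, B=1.0, D=1.0, E=100.0, mu=1.0, dt=0.005, steps=2000):
    """Forward-Euler integration of a shunting neural activity landscape on a 2D grid."""
    grid = np.asarray(grid)
    rows, cols = grid.shape
    I = np.zeros((rows, cols))
    I[grid == 1] = -E                 # obstacles: strong inhibitory input
    I[target] = E                     # target: strong excitatory input
    x = np.zeros((rows, cols))
    offsets = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]
    for _ in range(steps):
        # Only positive activity propagates to neighbours (zero outside the map)
        pad = np.pad(np.maximum(x, 0.0), 1)
        lateral = np.zeros_like(x)
        for dr, dc in offsets:
            lateral += mu / np.hypot(dr, dc) * pad[1 + dr:1 + dr + rows, 1 + dc:1 + dc + cols]
        excit = np.maximum(I, 0.0) + lateral
        inhib = np.maximum(-I, 0.0)
        x += dt * (-A * x + (B - x) * excit - (D + x) * inhib)
    return x


def follow_gradient(x, start, max_steps=100):
    """Move to the neighbouring cell with the highest activity until no neighbour is higher."""
    rows, cols = x.shape
    path = [start]
    r, c = start
    for _ in range(max_steps):
        nbrs = [(r + dr, c + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols]
        best = max(nbrs, key=lambda p: x[p])
        if x[best] <= x[r, c]:
            break
        r, c = best
        path.append(best)
    return path
```

Because only positive activity propagates, the target's influence spreads through free space while obstacles remain locally inhibitory, and the vehicle reaches the target by repeatedly moving to the most active neighboring cell, with no explicitly designed potential function.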

Despite these advances, several limitations remain. Most hybrid algorithms rely on environmental models that are too simplified for practical deployment: simplified sonar models and weak terrain coupling often reduce their applicability in complex sea areas. Predefined dynamic response mechanisms can handle only a limited range of environmental changes. For instance, the predefined formation library in the VWA* algorithm and the fixed waypoint intervals in Yin et al.’s method cannot adapt to complex dynamic environments. In addition, module-level collaboration efficiency remains low: the serial execution of PSO task allocation and ELKAI solver path generation in Mu et al.’s framework causes computation time to increase linearly with the number of AUVs.

From a broader perspective, numerous hybrid studies have confirmed that hybridization has become a major trend in addressing multi-objective challenges for AUV path planning. However, a unified integration framework is still missing. Each hybrid design remains highly task-specific and lacks scalability.

Overall, the key challenge for hybrid heuristic algorithms lies in the trade-off between scenario-specific optimization and generalization capability. Most current approaches are tailored to particular missions and cannot be easily transferred to other scenarios. For instance, Mu et al.’s three-level framework cannot be generalized to formation control, and the formation optimization logic of VWA* does not extend effectively to coverage path planning. The absence of a unified integration framework thus necessitates extensive re-configuration and limits adaptability in complex deep-sea multi-task operations.

2.3. Heuristic Re-Planning Strategies in Dynamic Environments

Heuristic re-planning strategies in dynamic environments represent a key research direction in AUV cluster path planning. Their main goal is to maintain a balance between global optimality and real-time adaptability through continuous environmental feedback and online decision adjustment. Static pre-planning approaches cannot adapt to the dynamic and uncertain nature of marine environments, where time-varying currents, communication delays, and sensor noise often cause path deviations. As a result, research has increasingly focused on heuristic re-planning frameworks that integrate real-time perception, distributed decision-making, and adaptive optimization to enhance robustness against dynamic disturbances, as illustrated in Figure 5.

Figure 5.

Heuristic re-planning in dynamic environments.

Early efforts were based on region segmentation and local re-planning to reduce environmental complexity through spatial decomposition. Chang et al. [38] first applied Morse decomposition theory to maritime search and rescue, achieving balanced sub-region allocation and dynamic target prioritization through the SAR-A algorithm. Subsequent studies [39,40] incorporated ocean current models and probabilistic occupancy maps to adaptively reshape sub-regions when environmental conditions changed. These methods effectively reduced computational cost and improved coverage performance, but their simplified assumptions about flow dynamics led to reduced accuracy under turbulent or rapidly changing currents, reflecting the inherent limitations of spatial segmentation models.
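For the simplest case of an obstacle-free rectangular search area, a cellular decomposition collapses to a single cell, and balanced allocation reduces to equal-width strips, each covered with a boustrophedon (lawnmower) pattern. The sketch below illustrates only that special case; it is not the Morse-decomposition-based SAR-A algorithm of [38], it ignores currents and target priorities, and `lawnmower_allocation` is a hypothetical name.

```python
import math


def lawnmower_allocation(width, height, n_auv, swath):
    """Split an obstacle-free width x height rectangle into equal strips (one per AUV)
    and return boustrophedon (lawnmower) waypoints for each strip."""
    strip = width / n_auv
    n_tracks = max(1, math.ceil(strip / swath))   # tracks needed to cover one strip
    spacing = strip / n_tracks                    # actual track spacing (<= swath)
    plans = []
    for k in range(n_auv):
        x0 = k * strip
        waypoints = []
        for i in range(n_tracks):
            x = x0 + (i + 0.5) * spacing          # track centred in its lane
            # Alternate the sweep direction on every track
            y_from, y_to = (0.0, height) if i % 2 == 0 else (height, 0.0)
            waypoints += [(x, y_from), (x, y_to)]
        plans.append(waypoints)
    return plans
```

Equal-area strips balance the workload only when every AUV has the same speed and sensor swath; heterogeneous clusters or current-dependent travel times would require unequal partitions.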

To address these issues, hierarchical and multi-layer decision architectures were developed to achieve dynamic responses across different time scales. These systems generally include pre-planning, online adjustment, and feedback execution layers that cooperate through continuous information exchange. Zhang et al. [41] proposed a five-layer routing planner that integrates Bayesian networks for flow-field prediction. In simulations of random multi-node current fields, this framework reduced task completion time and maintained high access rates even under node failure conditions. Other studies [42,43,44,45,46,47] introduced probabilistic graphical models, hierarchical reinforcement mechanisms, and distributed Markov decision processes to improve robustness under uncertain environmental conditions. These approaches achieved faster convergence and better real-time adaptability compared with centralized optimization. However, they still rely heavily on accurate prior knowledge of the ocean environment, and their performance degrades significantly when environmental priors are incomplete or outdated.

The capability to dynamically adjust path strategies in response to environmental changes has become the core of heuristic re-planning. Liu et al. [48] proposed a co-evolutionary computation algorithm that enhances dynamic responsiveness through population adaptation and multi-objective optimization. Although it improved flexibility, its computational complexity increased exponentially with the number of AUVs, limiting its application to large-scale clusters. Recent studies [49,50,51,52] have extended this concept by combining heuristic search with reinforcement learning, fuzzy control, and metaheuristic adaptation. These adaptive methods utilize sensor feedback to update cost functions or redefine control parameters online, thereby enabling continuous trajectory correction and improved environmental adaptability. Reinforcement-assisted planners have demonstrated smoother trajectories and higher energy efficiency under dynamic currents, while neuro-fuzzy frameworks enhanced coordination stability among agents.

Despite these advancements, several persistent challenges remain. Environmental modeling is often oversimplified, and the coupling effects of terrain interaction, turbulence, and ocean vortices are rarely considered. As a result, the robustness of these algorithms decreases in real-world applications. In many cases, real-time perception and decision-making are not synchronized: sensor latency and computational delay frequently lead to decision lag. Distributed coordination also produces conflicts when the communication topology changes, leading to non-convex coupled optimization problems and inconsistent local behaviors. In addition, defining adaptive trigger thresholds for re-planning remains problematic. Loose thresholds lead to large path deviations, whereas tight thresholds cause excessive oscillations. Although several studies have introduced online learning mechanisms for threshold adjustment, these models still rely heavily on prior environmental knowledge and cannot completely remove parameter sensitivity.
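The threshold dilemma can be softened, though not eliminated, by separating the trigger and re-arm levels (hysteresis) and enforcing a minimum interval between re-plans. The following is a minimal sketch of such a trigger driven by cross-track deviation; the class `ReplanTrigger`, the helper `cross_track_error`, and the default thresholds are hypothetical and would need tuning for a real vehicle.

```python
import math
from dataclasses import dataclass


def cross_track_error(p, a, b):
    """Distance from position p to the planned 2D segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg2 = dx * dx + dy * dy
    # Projection parameter clamped to the segment
    s = 0.0 if seg2 == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg2))
    return math.hypot(px - (ax + s * dx), py - (ay + s * dy))


@dataclass
class ReplanTrigger:
    """Deviation-based re-planning trigger with hysteresis and a cooldown."""
    high: float = 5.0        # metres: deviation that triggers re-planning
    low: float = 2.0         # metres: deviation below which the trigger re-arms
    cooldown: float = 10.0   # seconds: minimum interval between re-plans
    armed: bool = True
    last_replan: float = -math.inf

    def update(self, t: float, deviation: float) -> bool:
        # Re-arm only once the vehicle is back close to its planned path
        if not self.armed and deviation < self.low:
            self.armed = True
        if self.armed and deviation > self.high and t - self.last_replan >= self.cooldown:
            self.armed = False
            self.last_replan = t
            return True
        return False
```

The gap between `high` and `low` suppresses repeated triggering while the deviation hovers near a single threshold, and the cooldown bounds the re-planning rate; both values still have to be chosen, which is the parameter sensitivity noted above.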

Overall, heuristic re-planning strategies have evolved from static decomposition frameworks to adaptive and learning-based systems. The integration of real-time perception, probabilistic prediction, and reinforcement-driven optimization has substantially enhanced AUV autonomy and robustness in dynamic marine conditions. However, achieving a unified framework that ensures both real-time responsiveness and computational efficiency remains an open challenge for future research in this field. These representative heuristic optimization approaches are summarized and compared in Table 1.

Table 1.

Comparative summary of representative heuristic optimization algorithms for AUV cluster path planning.

Among the three heuristic paradigms, PSO-based extensions primarily enhance convergence speed and global optimization capability through population diversity maintenance. Hybrid heuristic algorithms focus on balancing multiple objectives, demonstrating superior adaptability to dynamic environments due to the integration of adaptive control and environmental feedback. In contrast, heuristic re-planning strategies emphasize real-time adaptability and online decision-making, which improve robustness under time-varying disturbances but often at the cost of higher computational complexity. Collectively, these approaches reveal a clear trade-off between convergence efficiency and environmental adaptability, suggesting the need for unified adaptive frameworks that can achieve both.

3. Reinforcement Learning and Deep Learning Methods

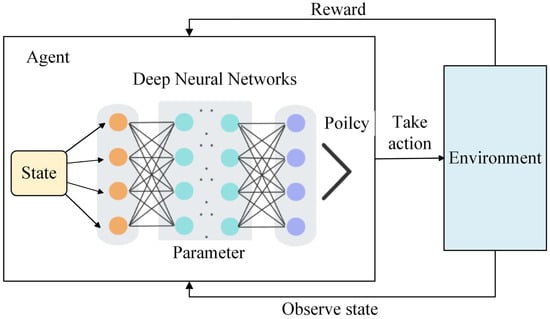

Reinforcement learning (RL) and deep learning (DL) have transformed research on AUV cluster path planning, marking a shift from model-driven to data-driven paradigms. In RL, decision policies are optimized through continuous interaction between an agent and its environment, while DL enables hierarchical extraction of high-dimensional features from complex sensory data. The integration of these two approaches forms Deep Reinforcement Learning (DRL), which combines the adaptability of RL with the representational capability of DL. Through this combination, autonomous decision-making can be achieved directly from raw sensor inputs. Since the introduction of the Deep Q-Network (DQN) by DeepMind in 2013, DRL has exhibited superior performance in robotic control and autonomous navigation. In the context of AUV clusters, it has been recognized as an effective approach for addressing dynamic, multi-task environments characterized by uncertainty, communication delay, and real-time decision constraints. The general structure of a DRL-based AUV path planning framework is shown in Figure 6.

Figure 6.

DRL structure diagram.

Technological advances in DRL-based AUV planning have been concentrated in three main areas:

- State-space compression, achieved through convolutional and recurrent neural networks to reduce the complexity of unstructured sonar and flow-field data while preserving key spatial–temporal features;

- Dynamic reward design, in which adaptive weighting mechanisms are introduced for multi-objective optimization, allowing real-time balancing of energy, distance, and safety factors;

- Multi-agent cooperation, in which attention-based distributed policy gradient methods are applied to enable autonomous role allocation and negotiation among heterogeneous AUVs, thereby improving coordination efficiency.
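The dynamic reward design in the second item can be illustrated with a per-step reward whose weights depend on the current context. This is an illustrative scheme, not one taken from the cited studies: the safety weight grows as the nearest obstacle enters a safety radius, and the energy weight grows as the battery drains. `composite_reward`, its arguments, and the scaling factor of 4 are hypothetical.

```python
def composite_reward(prev_dist, dist, energy_used, obstacle_dist, battery_frac,
                     d_safe=5.0, base_weights=(1.0, 0.1, 1.0)):
    """Per-step reward with context-dependent weights (illustrative scheme).

    prev_dist, dist: distance to goal before/after the step; energy_used: energy
    spent in the step; obstacle_dist: distance to the nearest obstacle;
    battery_frac: remaining battery in [0, 1].
    """
    w_dist, w_energy, w_safe = base_weights
    # Safety weight grows as the nearest obstacle comes inside the safety radius
    closeness = max(0.0, 1.0 - obstacle_dist / d_safe)
    w_safe *= 1.0 + 4.0 * closeness
    # Energy weight grows as the battery drains
    w_energy *= 1.0 + 4.0 * (1.0 - battery_frac)
    progress = prev_dist - dist          # positive when moving toward the goal
    risk = closeness**2                  # zero outside the safety radius
    return w_dist * progress - w_energy * energy_used - w_safe * risk
```

With fixed weights, the same step would earn the same reward in open water and next to a reef; making the weights depend on state is one simple way to shift the agent's priorities without retraining separate policies.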

3.1. Deep Reinforcement Learning Algorithms

DRL has shown strong potential for multi-AUV cluster path planning by combining deep learning’s capability for high-dimensional perception with reinforcement learning’s mechanism for sequential decision optimization. However, its real-world deployment still faces major challenges. One of the main difficulties lies in coupling high-dimensional environmental perception with continuous control. AUVs must process large-scale unstructured data such as sonar point clouds and flow-field velocities while simultaneously generating continuous control actions, including propeller speeds and rudder angles. DRL pipelines that rely on handcrafted feature extraction may lose information, whereas end-to-end models face the curse of dimensionality, leading to reduced decision accuracy and high computational load.

Another key challenge is poor sample efficiency. DRL depends on large numbers of interactions with the environment to learn effective policies, yet underwater experiments are expensive and risky. Failed exploratory actions in strong currents can damage hardware, making it impractical to collect the millions of training samples required by standard DRL methods. Recent studies [53,54] have attempted to address this issue through transfer learning and co-evolutionary mechanisms, in which policies are pre-trained in simulated environments and then fine-tuned on real-world data. These methods improved sample efficiency and narrowed the gap between simulation and real-world performance, yet they remain limited by the quality and representativeness of synthetic data.

In addition, high computational demand restricts real-time deployment. Complex DRL networks require powerful processors, while AUVs are constrained by limited onboard resources. This mismatch often leads to control oscillations and excessive energy consumption during field operations. Lightweight network designs and embedded optimization frameworks [55,56,57] have been developed to reduce the number of network parameters and improve inference speed without significant performance loss. These lightweight models demonstrated better stability and lower energy usage in resource-constrained simulations, but real-sea validation is still rare. From a technological perspective, DRL research for AUV path planning has followed three main directions: value function approximation, policy gradient optimization, and cross-domain theoretical integration.

Value function-based algorithms optimize decision-making by estimating expected returns for each action. They perform well in discrete control spaces. For example, Chu et al. [58] designed an improved Double Deep Q-Network (DDQN) framework that uses a dual-channel convolutional neural network to fuse sonar data with ocean current features. A dynamic composite reward function was designed to balance distance, obstacle avoidance, and energy efficiency. Although effective in discrete domains, this approach still suffers from reduced precision when applied to continuous control, as action discretization limits adaptability and requires large training datasets.
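The step that distinguishes DDQN from DQN is how the bootstrap target is formed: the online network selects the next action and the target network evaluates it, which reduces the overestimation bias of the max operator. The NumPy sketch below shows only this standard target computation; it does not reproduce the dual-channel CNN or the composite reward of Chu et al. [58], and the function name and array shapes are illustrative assumptions.

```python
import numpy as np


def double_dqn_targets(rewards, dones, q_online_next, q_target_next, gamma=0.99):
    """Standard Double DQN bootstrap targets for a batch of transitions.

    q_online_next, q_target_next: arrays of shape (batch, n_actions) holding
    Q-values for the next states from the online and target networks.
    """
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    # The online network selects the greedy next action...
    best_actions = np.argmax(q_online_next, axis=1)
    # ...and the target network evaluates that action.
    q_target_next = np.asarray(q_target_next, dtype=float)
    evaluated = q_target_next[np.arange(len(best_actions)), best_actions]
    # Terminal transitions (done = 1) do not bootstrap.
    return rewards + gamma * (1.0 - dones) * evaluated


targets = double_dqn_targets(
    rewards=[1.0, 0.0],
    dones=[0.0, 1.0],
    q_online_next=np.array([[1.0, 2.0], [3.0, 0.0]]),
    q_target_next=np.array([[10.0, 5.0], [7.0, 8.0]]),
    gamma=0.5,
)
print(targets)  # [3.5 0. ]
```

A standard DQN target would take the maximum of the target row instead (10 for the first transition, giving 6.0), which shows how decoupling action selection from evaluation changes the learning signal.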

Policy gradient-based algorithms optimize the policy function directly, which yields smoother decisions. Chu et al. [59] developed an enhanced TD3 algorithm that suppresses extreme action outputs through mean-function regularization and introduces an action memory mechanism for adaptive weight adjustment. These modifications were reported to accelerate convergence and improve stability. However, the fixed weighting of the reward function limits adaptability across multi-task conditions. Other work [60] has extended policy gradient approaches to multi-agent scenarios, in which distributed AUVs learn cooperative policies through shared reward mechanisms. These approaches improve coordination under communication delay but also increase system complexity and synchronization overhead.
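For orientation, enhanced TD3 variants build on the baseline TD3 target, which combines target policy smoothing (clipped noise on the target action) with a clipped double-Q minimum over two target critics. The sketch shows only this baseline; the mean-function regularization and action memory of [59] are not reproduced. The callables stand in for trained target networks, and the noise settings (0.2 and 0.5) are commonly used defaults assumed here.

```python
import numpy as np


def td3_target(reward, done, next_state, actor_target, critic1_target, critic2_target,
               gamma=0.99, policy_noise=0.2, noise_clip=0.5, max_action=1.0, rng=None):
    """Baseline TD3 bootstrap target for one transition (critics return scalars)."""
    rng = np.random.default_rng() if rng is None else rng
    action = np.asarray(actor_target(next_state), dtype=float)
    # Target policy smoothing: add clipped Gaussian noise to the target action.
    noise = np.clip(rng.normal(0.0, policy_noise, size=action.shape), -noise_clip, noise_clip)
    smoothed = np.clip(action + noise, -max_action, max_action)
    # Clipped double-Q: use the smaller of the two target critic estimates.
    q_min = min(critic1_target(next_state, smoothed), critic2_target(next_state, smoothed))
    return reward + gamma * (1.0 - done) * q_min


# Toy stand-ins for trained target networks (hypothetical).
def actor(state):
    return np.array([0.5])


def critic_a(state, action):
    return 2.0


def critic_b(state, action):
    return 3.0


y = td3_target(1.0, 0.0, None, actor, critic_a, critic_b, gamma=0.9, policy_noise=0.0)
print(y)  # about 2.8: the pessimistic critic (2.0) is used
```

Taking the minimum of two critics counteracts value overestimation, while the smoothing noise discourages the policy from exploiting narrow peaks in the learned Q-function.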

Cross-domain theoretical integration has also been explored as an effective means to improve robustness and interpretability. Wang et al. [61] incorporated the Value of Information concept into reinforcement learning and proposed the LG-DQN algorithm to enhance data collection efficiency in underwater wireless sensor networks. Hierarchical decision structures were used to manage information priorities and communication costs. Other approaches combined deep reinforcement learning with control theory or fuzzy adaptation to improve stability under uncertain current disturbances. These hybrid methods extend DRL beyond purely data-driven optimization; however, they remain dependent on prior models and accurate environmental representations.

In comparison, value function–based methods (such as DDQN) are computationally efficient but less suitable for continuous control. Policy gradient methods provide smoother control and faster convergence, but require extensive training samples. Cross-domain and hybrid strategies improve robustness and adaptability but involve higher model complexity. Overall, deep reinforcement learning algorithms still face unresolved challenges, including high training cost, limited transferability, and constrained real-time performance in practical marine environments. Advances in lightweight network design, adaptive reward shaping, and distributed learning mechanisms may help promote their transition from simulation to real-sea deployment.

3.2. Multi-Agent Reinforcement Learning and Cooperative Mechanisms

Research on multi-agent reinforcement learning (MARL) and cooperative mechanisms has moved from simulation-based verification toward practical applications. The central aim is to handle three key issues: dynamic task allocation in distributed decision-making, communication resource limitations, and consistency of group behavior. Current studies follow two main directions. One focuses on improving MARL algorithms themselves through attention mechanisms and credit assignment strategies. The other explores integration with traditional control theory. This section discusses the progress and challenges of the former in cooperative mechanism design.

Dynamic task priority adjustment represents a typical MARL application in multi-target tracking and cooperative surveillance tasks. The general MARL framework includes environmental perception, strategy interaction, and reward feedback. Indirect cooperation among AUVs is achieved through differentiated reward functions, allowing dynamic adjustment of task weights without explicit communication. For instance, the DSBM algorithm proposed by Wang et al. [62] constructs a hierarchical self-organizing network, where local observations are transformed into global strategies using a dynamic attention-weight switching mechanism. Estimation degradation in high-noise conditions is mitigated by particle-filter-based resampling. Significant improvement in tracking accuracy was achieved in simulation tests; however, dependence on centralized experience sharing remains. In fully distributed environments, asynchronous policy updates often cause task overlap or omission, highlighting the gap between idealized no-communication assumptions and real-world underwater conditions.
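Particle-filter resampling of the kind mentioned for DSBM [62] can be illustrated with systematic resampling, a common low-variance scheme, triggered when the effective sample size (ESS) drops below a threshold. Which resampling variant and trigger [62] actually uses is not stated in this review, so both the scheme and the N/2 trigger below are assumptions.

```python
import numpy as np


def effective_sample_size(weights):
    """ESS = 1 / sum(w_i^2) for normalized weights; equals N when weights are uniform."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return 1.0 / np.sum(w ** 2)


def systematic_resample(weights, rng=None):
    """Return indices of the particles kept after systematic resampling."""
    rng = np.random.default_rng() if rng is None else rng
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    n = len(w)
    # One random offset, then n evenly spaced pointers in [0, 1).
    positions = (rng.random() + np.arange(n)) / n
    cumulative = np.cumsum(w)
    cumulative[-1] = 1.0  # guard against floating-point round-off
    # side="right" picks the first particle whose cumulative weight exceeds the
    # pointer, so zero-weight particles are never selected.
    return np.searchsorted(cumulative, positions, side="right")


weights = np.array([0.05, 0.05, 0.8, 0.1])
if effective_sample_size(weights) < len(weights) / 2:  # assumed trigger
    indices = systematic_resample(weights, rng=np.random.default_rng(0))
    print(indices)
```

Resampling concentrates particles on high-likelihood target hypotheses when noise has made most weights negligible, which is the degradation the DSBM description refers to.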

Distributed value function estimation has become the mainstream solution to the credit assignment problem in multi-AUV encirclement and formation tasks. This approach achieves decentralized control by decoupling local Q-value networks from the global value function. The MADA algorithm proposed by Hou et al. [63] exemplifies this method: each AUV maintains an independent policy network, and global coherence is maintained through a virtual leader mechanism. Curriculum learning is further introduced to accelerate convergence, progressing from static to dynamic target scenarios. However, the robustness of this approach depends heavily on stable communication links. When delays occur, errors in the virtual leader's state estimate can destabilize the entire formation.
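The link between local and global values can be made concrete with the simplest value-decomposition scheme, VDN, in which the joint action value is the sum of per-agent values, Q_tot = Σ_i Q_i(o_i, a_i). Because the sum is monotone in each term, every AUV choosing the greedy action of its own Q_i also maximizes Q_tot, which is what permits decentralized execution. VDN is used here only as a familiar stand-in; the review does not state that MADA [63] uses this exact decomposition, and the per-agent values below are made-up numbers.

```python
import itertools

import numpy as np


def joint_q(local_qs, actions):
    """VDN-style joint value: sum of each agent's local Q for its own action."""
    return sum(q[a] for q, a in zip(local_qs, actions))


def decentralized_greedy(local_qs):
    """Each agent independently picks the argmax of its local Q-values."""
    return tuple(int(np.argmax(q)) for q in local_qs)


# Hypothetical local Q-values for two AUVs with 2 and 3 actions.
local_qs = [np.array([1.0, 3.0]), np.array([2.0, 0.5, 4.0])]
greedy = decentralized_greedy(local_qs)
# Brute-force search over all joint actions (only feasible for tiny examples).
best = max(itertools.product(*(range(len(q)) for q in local_qs)),
           key=lambda a: joint_q(local_qs, a))
print(greedy, best)  # (1, 2) (1, 2)
```

The brute-force search grows exponentially with the number of agents, whereas the decentralized greedy choice stays linear; this is the practical payoff of decomposing the global value function.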

Recent studies have sought to address these challenges by enhancing coordination robustness and communication efficiency. Zhu et al. [64] proposed a distributed MARL framework that incorporates communication-weight adaptation and uncertainty modeling to maintain cooperative stability under packet loss. This design enables real-time coordination among AUVs with limited bandwidth and achieves more stable task allocation in dynamic ocean environments. Similarly, Wibisono et al. [65] integrated policy gradient regularization into MARL to suppress extreme action fluctuations and improve convergence stability across agents. These improvements help mitigate the instability observed in asynchronous policy updates and partially bridge the gap between simulation and real-world deployment.

In addition, graph-based reinforcement learning approaches [66] have been introduced to model complex inter-agent relationships in AUV clusters. In these frameworks, AUVs are represented as nodes in a dynamic graph, and local communication and observation data are propagated through graph neural network (GNN) layers. This integration enhances cooperative perception and task coordination by explicitly encoding spatial dependencies and interaction topology. Simulation experiments have demonstrated improved scalability and robustness when handling large-scale multi-AUV missions, although computational cost remains a key limitation.
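The node-and-edge view described above can be sketched as a communication graph built from pairwise distances, followed by one mean-aggregation message-passing layer, h_i' = ReLU(W_self h_i + W_neigh · mean over neighbors j of h_j). The communication-range rule, the mean aggregator, and the weight matrices are illustrative assumptions, not the architecture of [66].

```python
import numpy as np


def comm_graph(positions, comm_range):
    """Adjacency matrix: AUVs i and j are linked if within comm_range (no self-loops)."""
    positions = np.asarray(positions, dtype=float)
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    adj = (dist <= comm_range).astype(float)
    np.fill_diagonal(adj, 0.0)
    return adj


def message_passing_layer(h, adj, w_self, w_neigh):
    """One GNN layer: combine each node's own features with the mean of its neighbors'."""
    h = np.asarray(h, dtype=float)
    deg = adj.sum(axis=1, keepdims=True)
    # Mean over neighbors; isolated nodes receive a zero message.
    neigh_mean = np.divide(adj @ h, deg, out=np.zeros_like(h), where=deg > 0)
    return np.maximum(0.0, h @ w_self.T + neigh_mean @ w_neigh.T)


positions = [[0.0, 0.0], [1.0, 0.0], [10.0, 0.0]]  # third AUV is out of range
adj = comm_graph(positions, comm_range=2.0)
h = np.array([[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]])  # toy node features
out = message_passing_layer(h, adj, np.eye(2), np.eye(2))
print(out)
```

Because each node only reads its neighbors' features, the same layer applies unchanged as AUVs join, leave, or move out of range, which underlies the scalability claims for graph-based approaches.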

Despite these advances, most MARL-based algorithms still rely on idealized simulation environments. In real marine conditions, strategy performance degrades significantly due to sensor noise, communication loss, and hardware constraints. The core challenge remains insufficient environmental realism in MARL models. Future research is expected to focus on bridging the gap between distributed coordination theory and real-world constraints through adaptive communication, model compression, and hybrid MARL–control co-design strategies.

3.3. Hybrid Reinforcement Learning Frameworks and Applications

Hybrid reinforcement learning frameworks have been proposed to overcome the limitations of pure DRL in multi-AUV cluster path planning. These frameworks combine reinforcement learning with heuristic optimization, control theory, or bio-inspired intelligence to improve adaptability and convergence performance. In recent studies, two major hybridization approaches have been explored. The first involves integrating bio-inspired or imitation-based heuristics to enhance learning efficiency. The second focuses on dynamic optimization to improve adaptability in time-varying environments. Although these hybrid approaches have shown promising results in simulations, the coupling of heterogeneous algorithms still lacks a unified theoretical foundation.

The first direction focuses on hybridizing neural networks with heuristic or biologically inspired algorithms. This approach combines the global search ability of swarm intelligence with the local perception capability of neural networks. Ma et al. [67] proposed a Self-Organizing Map (SOM)–neural wave hybrid model that performs three-dimensional mapping through a self-organizing activity matrix and neural wave diffusion, achieving high task completion rates in dynamic obstacle environments. Similarly, a Knowledge-Guided Reinforcement Learning approach integrated with an Artificial Potential Field (APF) was introduced for multi-AUV cooperative scenarios [68]. This method enhances sample efficiency and operational safety, particularly in addressing complex control challenges under variable ocean currents. The concept has been further developed in subsequent studies [69,70,71], where imitation learning and adaptive actor–critic modules were employed to reduce the amount of required training data and improve generalization to unseen environments. These bio-inspired hybrids have demonstrated shorter convergence times and more energy-efficient trajectories, but remain limited by simplified environmental modeling and inconsistent physical constraints.
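For reference, the classical artificial potential field combines an attractive term toward the goal with a repulsive term that acts only within an influence radius d0 of each obstacle. The sketch implements that textbook formulation; how [68] injects APF knowledge into learning is not described here, and the gains `k_att`, `k_rep` and radius `d0` are arbitrary illustrative values.

```python
import numpy as np


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, d0=5.0):
    """Classical APF: attraction to the goal plus repulsion from nearby obstacles."""
    pos = np.asarray(pos, dtype=float)
    goal = np.asarray(goal, dtype=float)
    # Attractive force: negative gradient of 0.5 * k_att * |pos - goal|^2.
    force = k_att * (goal - pos)
    for obstacle in obstacles:
        diff = pos - np.asarray(obstacle, dtype=float)
        d = np.linalg.norm(diff)
        if 0.0 < d < d0:
            # Repulsive force: negative gradient of 0.5 * k_rep * (1/d - 1/d0)^2.
            force += k_rep * (1.0 / d - 1.0 / d0) / d**2 * (diff / d)
    return force


force = apf_force([0.0, 0.0], [10.0, 0.0], obstacles=[[1.0, 0.0]])
print(force)  # the close obstacle outweighs the goal attraction
```

A well-known weakness of this formulation is that attractive and repulsive terms can cancel (local minima) or alternate (oscillation near obstacles), which is one motivation for pairing APF priors with learned policies.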

The second direction emphasizes hybrid control and dynamic optimization in non-stationary ocean environments. Liu et al. [72] proposed a cooperative path planning algorithm that employs distributed dynamic programming based on time difference of arrival to minimize synchronization errors among AUVs. Li et al. [73] further improved multi-AUV task assignment by combining an improved SOM neural network with a Dubins path generator, addressing kinematic constraints through workload-balanced task allocation and feasible path planning. Distributed learning frameworks such as [74,75] introduced adaptive feedback loops and policy-sharing mechanisms among AUVs to achieve faster convergence and greater robustness against communication delays. However, most of these frameworks still depend on prior flow field data and simplified assumptions, making real-time adaptation in unknown marine conditions difficult.

Table 2 provides a comparative summary of representative hybrid reinforcement learning algorithms for AUV cluster path planning. Overall, current hybrid reinforcement learning studies present diverse algorithmic integrations but lack a cohesive theoretical framework for deep coupling. Most works remain at the level of module stacking rather than systematic fusion, often triggering new coordination conflicts in practice. Future progress depends on building unified frameworks that integrate lightweight network architectures, transfer learning, and distributed training mechanisms. Reducing training costs through knowledge transfer and sample reuse can enhance generalization, while incorporating real-sea data into hardware-in-the-loop validation will be key to bridging the gap between simulation and deployment.

Table 2.

Representative Hybrid Reinforcement Learning Algorithms for AUV Cluster Path Planning.

The main technical breakthroughs of reinforcement learning and deep learning in AUV cluster path planning can be summarized as follows. First, deep reinforcement learning reduces the reliance of traditional algorithms on accurate mathematical models by adaptively extracting environmental features and dynamically optimizing policies. Second, multi-agent reinforcement learning enhances the robustness of cluster operations through distributed decision-making and cooperative policy learning. Finally, the combined development of these two approaches has facilitated the gradual transition of AUV path planning from simulation-based verification to real-sea application. However, current progress remains limited by low sample efficiency, insufficient generalization in complex marine environments, and the challenge of maintaining real-time performance while ensuring stability in distributed coordination.

Future research on reinforcement learning–based methods should focus on two directions. First, the development of a cooperative framework that integrates lightweight network architectures, transfer learning, and distributed training paradigms should be prioritized. Such a framework would reduce training costs through knowledge transfer and sample reuse, while improving adaptability in dynamic marine environments. Second, an integrated development process should be established, linking theoretical modeling, hardware-in-the-loop testing, and data-driven validation from real-sea experiments. This process will help bridge the gap between theoretical design and engineering implementation, accelerating the practical deployment of reinforcement learning algorithms in AUV clusters.

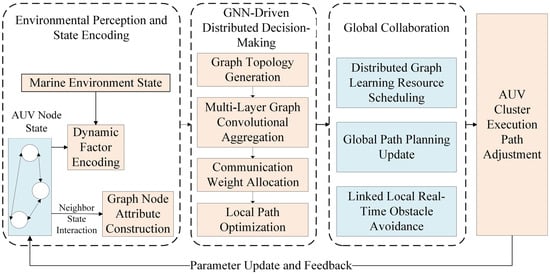

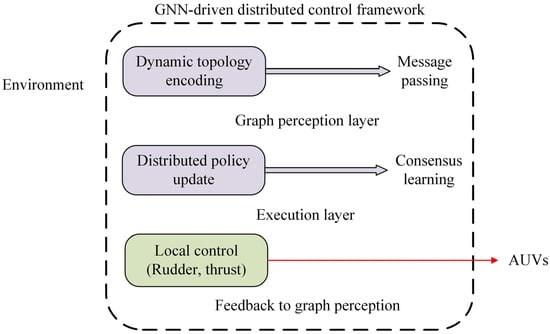

4. Graph Neural Networks and Distributed Control Strategies

GNNs and distributed control strategies have recently emerged as the third major paradigm in AUV cluster path planning, addressing the scalability and adaptability limitations of traditional centralized frameworks. GNNs provide a powerful means for topological representation learning, enabling each AUV node to process local information while maintaining global coordination through message passing across network edges. By integrating GNNs with distributed control theory, dynamic topology perception and cooperative decision optimization can be achieved under real-time communication constraints. This combination significantly enhances the robustness and scalability of AUV clusters operating in complex marine environments. Figure 7 depicts the general framework of a GNN-driven distributed AUV cluster planning system. Figure 8 conceptually illustrates how graph learning mechanisms mitigate communication constraints and support dynamic topology adaptation.

Figure 7.

Architecture of AUV cluster path planning based on distributed graph neural networks.

Figure 8.

GNN-driven distributed control conceptual framework for AUV swarms.

Compared with earlier heuristic and DRL-based approaches, GNN-driven frameworks are better suited to modeling dynamic interaction networks and heterogeneous communication quality. Node and edge attributes, such as relative motion states, energy levels, and link stability, can be encoded into graph representations, which allows attention mechanisms to learn adaptive weights. In addition, federated graph learning architectures make efficient use of heterogeneous onboard computing resources while reducing redundant communication, which improves real-time coordination between global path optimization and local obstacle avoidance.

4.1. Topological Modeling and Information Interaction of Graph Neural Networks

Unlike conventional distributed control systems that operate on static or predefined communication graphs, graph neural network-based frameworks introduce adaptive topology learning through message passing and attention-weight mechanisms. This approach enables each AUV node to infer implicit coordination strategies from local interactions, eliminating the need for explicit centralized commands. This helps bridge the gap between dynamic topology perception and cooperative control optimization.

By representing AUVs and their communication links as graph structures, GNNs facilitate dynamic topology adaptation and distributed decision optimization. Through iterative message propagation and weight aggregation, global coordination is achieved in a fully decentralized manner. Current research efforts in this area mainly focus on three directions: dynamic weight allocation, adaptive topology evolution, and scalability in large-scale clusters. These research fronts aim to enhance information exchange efficiency, improve robustness under structural variations, and ensure real-time cooperative control. Despite these advances, persistent challenges remain in achieving high responsiveness under fast-changing ocean dynamics, coordinating heterogeneous AUV nodes, and maintaining robustness in dense and uncertain marine environments.

For dynamic weight allocation, Li et al. [76] proposed a Message-Aware Graph Attention Network that incorporates task urgency into the attention weight computation. Information priority is adjusted dynamically through a key–value mechanism, so that task demand is matched to the available communication bandwidth. However, the model does not fully account for the high-frequency graph updates required by rapidly moving AUVs. Similar ideas were explored in [77,78,79], where node-level reinforcement mechanisms were introduced to adjust attention weights based on environmental variation and communication reliability. These studies improved message consistency and reduced packet loss in dynamic topologies but still relied heavily on predefined graph update intervals.
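One way to picture attention weights that account for task urgency is to add an urgency bias to the usual scaled dot-product score before the softmax. This additive form, the parameter `beta`, and the function name are hypothetical; the exact formulation of the Message-Aware Graph Attention Network in [76] is not given in this review.

```python
import numpy as np


def urgency_attention(query, keys, values, urgency, beta=1.0):
    """Aggregate neighbor messages with softmax weights biased by task urgency.

    query: (d,), keys: (n, d), values: (n, m), urgency: (n,) per-neighbor urgency.
    Returns the aggregated message (m,) and the attention weights (n,).
    """
    query = np.asarray(query, dtype=float)
    keys = np.asarray(keys, dtype=float)
    values = np.asarray(values, dtype=float)
    scores = keys @ query / np.sqrt(query.shape[-1]) + beta * np.asarray(urgency, dtype=float)
    scores -= scores.max()  # subtract the max for numerical stability
    alpha = np.exp(scores)
    alpha /= alpha.sum()
    return alpha @ values, alpha


keys = np.ones((2, 4))  # two neighbors with identical keys
values = np.array([[1.0, 0.0], [0.0, 1.0]])
msg, alpha = urgency_attention(np.ones(4), keys, values, urgency=[0.0, 2.0])
print(alpha)  # the more urgent neighbor receives the larger weight
```

With identical keys, the weight ratio between the two neighbors is exp(beta × urgency difference), so `beta` directly controls how strongly urgency can override content similarity when bandwidth is scarce.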

For topological adaptability, Wang et al. [80] developed a dynamic formation strategy based on a distributed auction mechanism. Virtual nodes were introduced to emulate temporary communication links, thereby mitigating dependence on static topology. In related works [81,82], dynamic link-prediction and temporal graph learning frameworks were proposed, which update graph structures through environmental feedback rather than fixed schedules. These methods enhanced resilience against link failures and improved stability under communication delays. However, parameter sensitivity in their adaptive modules may still cause oscillations in path coordination accuracy.

In terms of large-scale scalability, Ma et al. [83] proposed a distributed obstacle-avoidance model using local communication and cooperative rules to achieve global conflict resolution. This reduced communication overhead compared to centralized methods. Zhu et al. [84] explored topological modeling for multi-AUV path planning using an improved neural network framework, which emphasizes information interaction. By integrating initial orientation and ocean current constraints into the topological structure, this model enables AUVs to exchange path-related information, thereby optimizing the coordination of multi-agent systems in complex marine environments. These architectures integrated sensory fusion with graph-based inference to enhance perception in complex marine terrains. Nevertheless, scalability in extremely dense AUV swarms and heterogeneous computing environments remains a major challenge.

Overall, phased progress has been achieved in AUV cluster path planning using GNN-based topological modeling. Yet, most current studies lack an integrated cooperative optimization framework that jointly considers dynamic perception, heterogeneous resources, and environmental uncertainty. Imbalances between topology update frequency and computational cost often lead to decision delays in fast-changing ocean currents. In addition, differences in computing power among AUV nodes cause asynchronous convergence during distributed training, lowering the generalization capability of learned obstacle avoidance policies. Future work should emphasize the construction of hierarchical graph-learning architectures that integrate environmental semantics, communication stability, and adaptive attention to achieve robust real-time collaboration in large-scale underwater networks.

The integration of graph learning and distributed control establishes a hierarchical feedback mechanism. GNNs perceive and encode the evolving network structure, whereas distributed control translates this perception into synchronized actions among AUVs. This bidirectional interaction allows real-time topology reconfiguration and ensures consistent cooperative behavior across heterogeneous agents. Compared with traditional approaches, this architecture provides a scalable foundation for robust underwater swarm coordination.

4.2. Event-Triggered Control and Communication Optimization

Event-triggered control has become a core technology for communication optimization in AUV clusters. It balances control performance and system resource consumption by dynamically adjusting the frequency of information exchange. Coupled with distributed topological control, this mechanism has been widely studied to enhance real-time responsiveness in cooperative path planning. Current approaches can be categorized into three main groups: threshold-triggered cooperative strategies, hybrid formation control frameworks, and task-driven communication resource allocation models. These strategies aim to reduce redundant transmissions and energy waste caused by traditional periodic communication schemes.

For threshold-triggered cooperative control, several studies have introduced dynamic triggering mechanisms to improve communication efficiency under ocean disturbances. Wang et al. [85] proposed a fixed-time event-triggered obstacle avoidance algorithm that integrates an improved artificial potential field with a leader–follower structure. Communication is activated only when the formation error exceeds a predefined threshold, and convergence is guaranteed within a fixed time bound that does not depend on initial conditions. However, the nonlinear damping parameters must be tuned empirically, which limits real-world deployment. Similarly, refs. [86,87,88,89] explored adaptive threshold mechanisms and predictive event scheduling to achieve better trade-offs between stability and bandwidth consumption. These models adjust triggering frequencies according to environmental fluctuations and network delay conditions, effectively preventing over-triggering in dynamic flow fields. Despite their progress, the selection of optimal thresholds remains scenario-dependent and lacks unified theoretical guidance.
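The basic triggering logic, in which an agent communicates only when its state has drifted sufficiently from the value its neighbors last received, can be sketched as follows. This is a generic state-deviation trigger with a fixed threshold, assumed for illustration; it does not implement the fixed-time controller or the improved potential field of [85], and the scalar error signal is a toy stand-in.

```python
import math


def event_triggered_broadcasts(states, threshold):
    """Return the time steps at which a broadcast is triggered.

    A broadcast is sent at step 0 and whenever the current state deviates from
    the most recently broadcast state by more than `threshold`.
    """
    sent = [0]
    last = states[0]
    for k, x in enumerate(states[1:], start=1):
        if abs(x - last) > threshold:
            sent.append(k)
            last = x  # neighbors now hold this value
    return sent


# Slowly varying formation error over 200 control steps (toy signal).
errors = [0.1 * math.sin(0.05 * k) for k in range(200)]
steps = event_triggered_broadcasts(errors, threshold=0.02)
print(f"{len(steps)} broadcasts instead of {len(errors)} periodic ones")
```

A larger threshold saves more bandwidth but lets neighbors act on staler information; choosing it is exactly the scenario-dependent tuning problem noted above.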

For distributed hybrid formation control, the Leader–Follower and Virtual Structure framework proposed by Zhang et al. [90] combines local path tracking with global geometric constraints. Formation error feedback is embedded in the potential field design, enabling AUVs to maintain formation topology during obstacle avoidance. This method mitigates oscillations in traditional APF-based navigation. Nonetheless, its reliance on global coordinates restricts large-scale deployment. To address this issue, hybrid formation models based on distributed cooperative learning [91,92,93,94] have been proposed. These frameworks integrate reinforcement learning or adaptive consensus into event-triggered systems, allowing local controllers to learn triggering policies that minimize communication load while maintaining formation stability. Simulation results show significant improvement in robustness under communication delays and uncertain currents, yet convergence speed remains sensitive to parameter initialization.
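In a leader–follower scheme with a virtual structure, each follower's desired position is the leader's position plus a fixed offset defined in the leader's body frame and rotated by the leader's heading. The planar sketch below computes that reference and the resulting formation error. The offsets and the restriction to two dimensions are simplifying assumptions, and the potential-field coupling of [90] is not included. Note that the computation needs the leader's position in a shared world frame, which is the dependence on global coordinates discussed in the paragraph above.

```python
import numpy as np


def follower_reference(leader_pos, leader_heading, offset_body):
    """Desired follower position: leader position + body-frame offset rotated to world frame."""
    c, s = np.cos(leader_heading), np.sin(leader_heading)
    rotation = np.array([[c, -s], [s, c]])
    return np.asarray(leader_pos, dtype=float) + rotation @ np.asarray(offset_body, dtype=float)


def formation_error(follower_pos, leader_pos, leader_heading, offset_body):
    """Euclidean distance between the follower and its formation slot."""
    target = follower_reference(leader_pos, leader_heading, offset_body)
    return float(np.linalg.norm(np.asarray(follower_pos, dtype=float) - target))


# Leader heading along +y (pi/2); body frame is x forward, y left.
# The follower slot is 2 m behind and 1 m to the left of the leader.
slot = follower_reference([0.0, 0.0], np.pi / 2, [-2.0, 1.0])
print(slot)
```

The formation error returned here is the quantity that a threshold-triggered scheme would compare against its threshold before requesting communication.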

For task-driven communication resource allocation, distributed negotiation and auction-based approaches have received increasing attention. An integrated framework was proposed by Chen et al. [95], in which a distributed auction algorithm was combined with the fast-marching method. Dynamic team formation was achieved through local communication and negotiation. Building on this concept, a game-theoretic event-triggered communication strategy was later developed [96]. In this method, bandwidth was allocated adaptively according to task urgency and the residual energy of each AUV. The approach effectively reduced communication congestion and ensured fair channel access during large-scale missions. However, when targets moved at high velocity, the reallocation process became insufficiently responsive, leading to a decline in cooperative efficiency.
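The auction idea can be illustrated with a simple greedy sequential auction: in each round, every AUV bids its estimated completion time for each unassigned task, and the lowest bid wins. This is a generic simulation of the bidding logic, run in a single loop and assuming straight-line travel at constant speed; it omits the fast-marching travel-time computation of [95] and the energy- and urgency-aware allocation of [96], and all names are illustrative.

```python
import numpy as np


def sequential_auction(auv_positions, task_positions, speeds):
    """Greedy sequential auction; returns {task_index: auv_index}.

    Each bid is the time at which the AUV could reach the task after finishing
    the tasks it has already won (straight-line travel, constant speed).
    """
    auv_pos = [np.asarray(p, dtype=float) for p in auv_positions]
    ready_time = [0.0] * len(auv_pos)
    remaining = list(range(len(task_positions)))
    assignment = {}
    while remaining:
        best = None
        for i, p in enumerate(auv_pos):
            for t in remaining:
                travel = np.linalg.norm(np.asarray(task_positions[t], dtype=float) - p) / speeds[i]
                bid = ready_time[i] + travel
                if best is None or bid < best[0]:
                    best = (bid, i, t)
        bid, i, t = best
        # The winner commits to the task and continues from the task location.
        assignment[t] = i
        ready_time[i] = bid
        auv_pos[i] = np.asarray(task_positions[t], dtype=float)
        remaining.remove(t)
    return assignment


print(sequential_auction([[0, 0], [10, 0]], [[1, 0], [9, 0]], speeds=[1.0, 1.0]))
```

Because each bid includes the time already committed, work tends to spread across vehicles; however, a fast-moving target invalidates bids as soon as they are placed, which is the responsiveness problem noted in the text.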

Overall, event-triggered control methods have enabled localized optimization of communication efficiency and formation performance. Nevertheless, their adaptability to dynamic topology switching and multi-source interference remains limited. Most existing algorithms lack a unified control logic that links triggering thresholds to environmental disturbance levels in real time. Future research is expected to focus on self-optimizing event-triggered frameworks that dynamically couple environmental perception with control decisions. The integration of reinforcement learning and adaptive compensation mechanisms may further achieve cooperative optimization of triggering policies and interference mitigation, thereby enhancing the robustness and responsiveness of multi-AUV systems in complex marine environments.

4.3. Heterogeneous Cluster Cooperative Path Planning

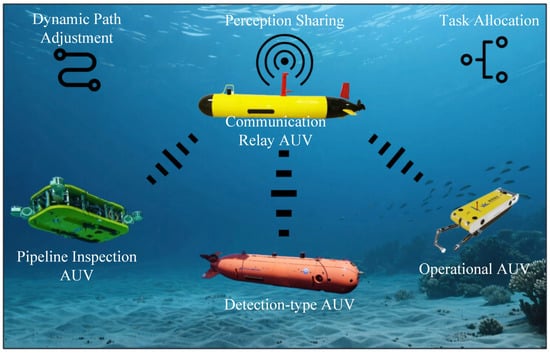

The primary challenge in heterogeneous AUV cluster path planning lies in enabling underwater robots with different dynamic, sensing, and communication capabilities to collaborate effectively under complex marine conditions. Current studies mainly focus on three aspects: task allocation, environmental information sharing, and dynamic path adaptation. Although progress has been achieved in improving path coverage and adaptive adjustment, major challenges remain in coordinating diverse kinematic constraints and achieving real-time decision-making in uncertain underwater environments. The concept of collaboration among heterogeneous AUVs in complex marine environments is depicted in Figure 9.

Figure 9.

Cooperative path planning of heterogeneous AUVs.

For task allocation and route segmentation, the ER-MCPP algorithm proposed by Li et al. [97] partitions the mission area using grid and region segmentation, dynamically adjusting sampling point density to improve coverage efficiency. Although this method enhances performance by refining the lawnmower algorithm, it neglects motion differences among heterogeneous AUVs, causing delayed task completion for slower units. To address this limitation, recent studies [98,99,100] have introduced multi-criteria optimization models that jointly consider propulsion limits, energy constraints, and sensor ranges. These models employ graph partitioning or multi-agent bidding strategies to assign sub-regions according to individual vehicle capabilities. Such adaptive allocation frameworks significantly reduce idle time and improve overall mission balance, though they increase computational cost in large-scale deployments.
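A minimal sketch of capability-aware allocation, assuming lawnmower coverage time is roughly proportional to area divided by coverage rate (speed times sensor swath width): split a rectangular region into strips whose widths are proportional to each AUV's coverage rate, so that all vehicles finish at about the same time. The fleet parameters are hypothetical, and the sketch ignores turning costs, energy limits, and currents; it is not the ER-MCPP algorithm of [97] or the multi-criteria models of [98,99,100].

```python
def proportional_strips(x_min, x_max, speeds, swaths):
    """Split [x_min, x_max] into strips proportional to each AUV's coverage rate."""
    rates = [v * w for v, w in zip(speeds, swaths)]
    total = sum(rates)
    strips, x = [], x_min
    for rate in rates:
        width = (x_max - x_min) * rate / total
        strips.append((x, x + width))
        x += width
    return strips


def completion_times(strips, speeds, swaths, height):
    """Approximate coverage time of each strip: area / (speed * swath)."""
    return [(b - a) * height / (v * w) for (a, b), v, w in zip(strips, speeds, swaths)]


speeds = [1.0, 2.0, 1.5]     # m/s (hypothetical fleet)
swaths = [20.0, 20.0, 40.0]  # m
strips = proportional_strips(0.0, 1000.0, speeds, swaths)
print(completion_times(strips, speeds, swaths, height=500.0))  # roughly equal
```

An equal-width split would leave the slowest, narrowest-swath vehicle finishing last while the others idle, which is the imbalance that capability-aware allocation is meant to remove.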

For environmental information fusion and cross-robot knowledge sharing, the physics information fusion framework proposed by Yan et al. [101] enables shared environmental models between exploration and operational robots through federated learning. This improves terrain matching and reduces redundancy in data processing. However, latency in model exchange can delay real-time path adjustment during obstacle avoidance. In related work, Zhao et al. [102] employed a Markov decision process to prioritize path segments under incomplete environmental perception, achieving stable performance under communication uncertainty. Building upon this idea, studies such as refs. [103,104] integrated graph-based representation learning with underwater wireless sensor networks, enhancing multi-robot environmental perception through distributed data aggregation. These frameworks improve robustness against sensor failure and noise interference, but their scalability remains limited in high-density communication networks.
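Federated sharing of an environmental model usually ends in an aggregation step. The sketch below uses federated averaging (FedAvg), a standard choice in which each vehicle's parameters are weighted by its number of local training samples. Whether [101] uses FedAvg is not stated in this review; the parameter layout (a list of NumPy arrays per vehicle) and the example values are assumptions.

```python
import numpy as np


def fedavg(client_params, client_sizes):
    """Sample-weighted average of per-client parameter lists.

    client_params: one list of NumPy arrays per AUV, all with the same shapes.
    client_sizes: number of local training samples held by each AUV.
    """
    total = float(sum(client_sizes))
    n_layers = len(client_params[0])
    return [
        sum((n / total) * params[layer] for params, n in zip(client_params, client_sizes))
        for layer in range(n_layers)
    ]


# Two vehicles: an explorer with few samples and a worker with many.
explorer = [np.array([1.0, 1.0]), np.array([[0.0]])]
worker = [np.array([3.0, 5.0]), np.array([[4.0]])]
global_model = fedavg([explorer, worker], client_sizes=[1, 3])
print(global_model[0])  # [2.5 4. ]
```

Only parameters cross the acoustic link, not raw sonar or terrain data, but each aggregation round still costs a model exchange, which is the latency issue raised above.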

For dynamic path adjustment and adaptive decision-making, reinforcement and imitation learning frameworks have been widely used to adapt route selection to changing environmental conditions. Reference [105] proposed an adaptive reinforcement-planning model that adjusts local path curvature and velocity profiles in response to current changes, enabling smoother trajectory transitions. Similarly, hybrid control approaches combining rule-based safety constraints and learning-based prediction have shown improved energy efficiency and robustness in simulations of heterogeneous clusters. Nevertheless, most of these models still rely on pre-defined environmental priors and have not been validated in real-sea experiments.

In summary, existing heterogeneous AUV path planning approaches face three main limitations. First, real-time feedback between environmental perception and path generation remains weak, leading to delayed adaptation when environmental changes occur. Second, heterogeneous task coordination often lacks a unified multi-objective optimization framework, resulting in inefficient cooperation under energy and communication constraints. Third, current models depend heavily on pre-trained environmental knowledge and struggle to generalize to unseen conditions. Future work should focus on developing cross-domain adaptive learning frameworks that couple physics-based modeling with graph and reinforcement learning, achieving self-adaptive collaboration among heterogeneous AUVs in dynamic and uncertain marine environments.

5. Future Research Directions

Despite significant progress in cooperative path planning algorithms for Autonomous Underwater Vehicle clusters, a considerable gap persists between theoretical exploration and engineering applications. As analyzed earlier, current studies rely largely on idealized simulation environments and simplified assumptions, with notable limitations in modeling complex marine dynamics, enhancing the robustness of heterogeneous platform collaboration, and constructing real-vehicle verification systems. In light of these technical bottlenecks and practical marine engineering requirements, future research may focus on the following directions:

- Dynamic optimization mechanisms based on multi-algorithm fusion are recognized as crucial for improving adaptability to complex environments. Existing algorithms exhibit distinct advantages in single-objective optimization, but weight allocation in multi-objective cooperative optimization remains dependent on manual parameter tuning. To achieve dynamic coupling between global path planning and local obstacle avoidance strategies and reduce reliance on prior knowledge, the development of an environment-adaptive framework based on multi-algorithm fusion is necessary.

- High-fidelity dynamic marine environment modeling is identified as the core foundation for algorithm training and verification. Most existing simulation environments employ static flow fields and simplified terrain models, which cannot reproduce the spatiotemporal evolution of strong flow fields, terrain scattering effects, and multi-physical field coupling characteristics of real marine environments. A multidisciplinary integrated modeling framework must be constructed. High-precision simulation of multi-scale dynamic characteristics of the marine environment should be achieved through physical constraints and data-driven methods, to provide a virtual test environment that closely approximates real operating conditions for algorithm training and verification.

- Insufficient online environmental perception and real-time decision-making capabilities severely limit path planning efficiency. Most existing methods depend on offline environmental data, which leaves them unable to handle dynamic obstacles and unknown terrain. Lightweight deep reinforcement learning models therefore require in-depth research. Target recognition accuracy in turbid waters should be improved through the fusion of sonar and optical data, and online path optimizers with low computational cost should be developed to achieve millisecond-level responses on embedded hardware.
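One way to picture the environment-adaptive fusion described in the first direction is a heading command that blends tracking of a precomputed global path with local obstacle repulsion. In this scheme, the blending weight follows the distance to the nearest obstacle rather than a hand-tuned constant. The Python sketch below is a minimal illustration under assumed 2-D kinematics. The function `blended_heading`, the linear weighting ramp, and the parameters `d_safe` and `d_influence` are hypothetical choices, not a method taken from the reviewed studies.

```python
import math


def blended_heading(pos, waypoint, obstacles, d_safe=5.0, d_influence=20.0):
    """Return (heading in rad, avoidance weight) mixing global tracking and local avoidance.

    Hypothetical rule: the avoidance weight rises linearly from 0 at
    d_influence to 1 at d_safe, based on the nearest obstacle distance.
    """
    # Unit vector toward the next waypoint of the global path.
    gx, gy = waypoint[0] - pos[0], waypoint[1] - pos[1]
    g_norm = math.hypot(gx, gy) or 1.0
    gx, gy = gx / g_norm, gy / g_norm

    # Repulsive direction: unit vectors pointing away from obstacles inside
    # the influence radius, each scaled by how close the obstacle is.
    rx = ry = 0.0
    d_min = math.inf
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        d_min = min(d_min, d)
        if 0.0 < d < d_influence:
            scale = (d_influence - d) / d_influence
            rx += scale * dx / d
            ry += scale * dy / d

    # Environment-dependent weight: 0 far from obstacles, 1 inside d_safe.
    if d_min <= d_safe:
        w = 1.0
    elif d_min >= d_influence:
        w = 0.0
    else:
        w = (d_influence - d_min) / (d_influence - d_safe)

    r_norm = math.hypot(rx, ry)
    if r_norm > 0.0:
        rx, ry = rx / r_norm, ry / r_norm
    hx = (1.0 - w) * gx + w * rx
    hy = (1.0 - w) * gy + w * ry
    return math.atan2(hy, hx), w
```

Here the ramp itself is still fixed by hand. In the framework envisioned above, that mapping would instead be adapted online, for example by an optimizer or a learned policy; this sketch leaves that part open.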
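To make the contrast with static flow fields concrete, the sketch below evaluates the analytic double-gyre flow, a time-periodic, divergence-free test field widely used in the Lagrangian coherent structures literature. It then compares a vehicle track under the evolving current with one under the same current frozen at t = 0. This is only a toy stand-in for the multi-scale, physics- and data-driven models called for in the second direction. The parameters `A`, `eps`, and `omega`, the nondimensional domain [0, 2] × [0, 1], and the helper `simulate_track` are illustrative assumptions.

```python
import math


def double_gyre_velocity(x, y, t, A=0.1, eps=0.25, omega=2.0 * math.pi / 10.0):
    """Velocity (u, v) of the time-periodic double-gyre flow at (x, y, t).

    Stream function: psi = A * sin(pi * f(x, t)) * sin(pi * y), with
    f(x, t) = a(t) * x**2 + b(t) * x,
    a(t) = eps * sin(omega * t), b(t) = 1 - 2 * eps * sin(omega * t).
    """
    a = eps * math.sin(omega * t)
    b = 1.0 - 2.0 * eps * math.sin(omega * t)
    f = a * x * x + b * x
    dfdx = 2.0 * a * x + b
    # u = -d(psi)/dy and v = d(psi)/dx, so the field is divergence-free.
    u = -math.pi * A * math.sin(math.pi * f) * math.cos(math.pi * y)
    v = math.pi * A * math.cos(math.pi * f) * math.sin(math.pi * y) * dfdx
    return u, v


def simulate_track(x, y, heading, speed, t_end, dt=0.1, static=False):
    """Forward-Euler track of a vehicle holding a fixed heading and water-relative speed.

    With static=True the current is frozen at t = 0, mimicking a static flow field.
    """
    for k in range(int(round(t_end / dt))):
        t = 0.0 if static else k * dt
        u, v = double_gyre_velocity(x, y, t)
        # Ground velocity = commanded through-water velocity + local current.
        x += (speed * math.cos(heading) + u) * dt
        y += (speed * math.sin(heading) + v) * dt
    return x, y
```

Even this simple periodic field makes the end point depend on when the vehicle departs, which a planner trained only on frozen snapshots never experiences.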
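For the third direction, the dynamic window approach (DWA) is a useful reference point. It appears among the local planners in the reviewed studies and is a widely used low-cost reactive planner. At each control step, DWA samples a small set of speed and turn-rate commands reachable under acceleration limits, rolls each one out over a short horizon, and scores the results. The minimal 2-D sketch below assumes a unicycle kinematic model and point obstacles with a shared clearance radius. It also uses hand-picked score weights. All names and numbers are hypothetical, and a real AUV planner would also need 3-D dynamics, currents, and sensor uncertainty.

```python
import math


def dwa_step(state, goal, obstacles, v_max=2.0, w_max=0.5, a_v=0.5, a_w=0.3,
             dt=0.1, horizon=2.0, radius=1.0, n_samples=7):
    """Pick (v, w) for one control step with a minimal dynamic window approach.

    state = (x, y, theta, v, w); obstacles are points with a shared clearance radius.
    Score weights (1.0, 0.5, 0.2) are illustrative, not tuned values.
    """
    x0, y0, th0, v0, w0 = state
    # Dynamic window: commands reachable within one step, clipped to limits.
    v_lo, v_hi = max(0.0, v0 - a_v * dt), min(v_max, v0 + a_v * dt)
    w_lo, w_hi = max(-w_max, w0 - a_w * dt), min(w_max, w0 + a_w * dt)
    denom = max(n_samples - 1, 1)
    steps = int(round(horizon / dt))
    # Fallback if every sampled command collides: stop. This simplification
    # ignores the deceleration limit encoded in the window.
    best, best_score = (0.0, 0.0), -math.inf
    for i in range(n_samples):
        v = v_lo + (v_hi - v_lo) * i / denom
        for j in range(n_samples):
            w = w_lo + (w_hi - w_lo) * j / denom
            # Roll out the constant command with forward-Euler kinematics.
            x, y, th = x0, y0, th0
            clearance = math.inf
            for _ in range(steps):
                th += w * dt
                x += v * math.cos(th) * dt
                y += v * math.sin(th) * dt
                for ox, oy in obstacles:
                    clearance = min(clearance, math.hypot(x - ox, y - oy) - radius)
            if clearance <= 0.0:
                continue  # rollout collides: reject this command
            goal_dist = math.hypot(goal[0] - x, goal[1] - y)
            # Reward progress toward the goal, capped clearance, and speed.
            score = -1.0 * goal_dist + 0.5 * min(clearance, 3.0) + 0.2 * v
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

Each call costs roughly n_samples² × (horizon / dt) × (number of obstacles) distance evaluations, about a thousand per obstacle with the defaults. That bounded, predictable workload suits embedded hardware, although whether it meets a millisecond budget depends on the platform.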

In summary, a significant gap exists between theoretical research and practical application in AUV cluster path planning. Key issues, including dynamic environment modeling, multi-algorithm fusion, lightweight algorithm models, and real-time control under communication constraints, require further investigation.

6. Conclusions

Over the past two decades, research on AUV cluster cooperative path planning has evolved through three major paradigms: heuristic optimization, deep reinforcement learning, and graph neural network-based distributed control. Each paradigm addresses specific aspects of the path planning problem but also has characteristic limitations. Heuristic algorithms achieve high accuracy in static optimization tasks yet lack adaptability to dynamic marine environments. Reinforcement learning enhances environmental responsiveness and decision autonomy but remains constrained by limited data efficiency and limited transferability to real-world conditions. Graph neural networks and distributed control frameworks improve communication efficiency and scalability, but their robustness in large-scale, high-speed scenarios remains insufficient.

Compared with previous reviews, this paper provides a comprehensive analytical framework that links algorithmic evolution with engineering constraints. It offers an integrated understanding of how heuristic, learning-based, and graph-driven approaches collectively shape the development of AUV cooperative path planning. By highlighting the interactions among these paradigms, this review shifts the focus from isolated algorithmic progress to system-level adaptability and scalability. Future efforts are expected to focus on three goals: establishing unified theoretical foundations for heterogeneous AUV coordination, developing lightweight and adaptive learning architectures suitable for embedded systems, and validating algorithms through hardware-in-the-loop and real-sea experiments. These directions are essential for bridging the gap between simulation-based validation and practical deployment in autonomous underwater operations.

Author Contributions

Conceptualization, J.W.; methodology, J.W.; validation, D.Y. and V.F.; formal analysis, J.W. and C.L.; investigation, J.W. and R.C.; resources, C.L. and V.F.; data curation, J.W. and A.Z. (Ao Zheng); writing—original draft preparation, J.W. and C.L.; writing—review and editing, V.F., D.Y. and A.Z. (Alexander Zuev); visualization, R.C. and A.Z. (Ao Zheng); supervision, C.L., V.F. and A.Z. (Alexander Zuev); project administration, C.L.; funding acquisition, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Guangdong Provincial Science and Technology Plan, Regional Innovation Capacity and Support System Construction Special Project (grant number: MS202500035); Guangdong Ocean University, 2025 “Chong Bu Qiang” Provincial Financial Special Project (grant number: 080507202501).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

We thank the anonymous reviewers for their helpful comments on the manuscript. An AI-based tool was used to assist with language refinement; all final revisions were made by the authors.

Conflicts of Interest

The authors declare no conflicts of interest.
