Applications of Machine Learning in Assessing Cognitive Load of Uncrewed Aerial System Operators and in Enhancing Training: A Systematic Review

Highlights

- Thirty-eight studies on machine learning (ML)-based assessment of cognitive load (CL) and on training for UAS operators were reviewed.

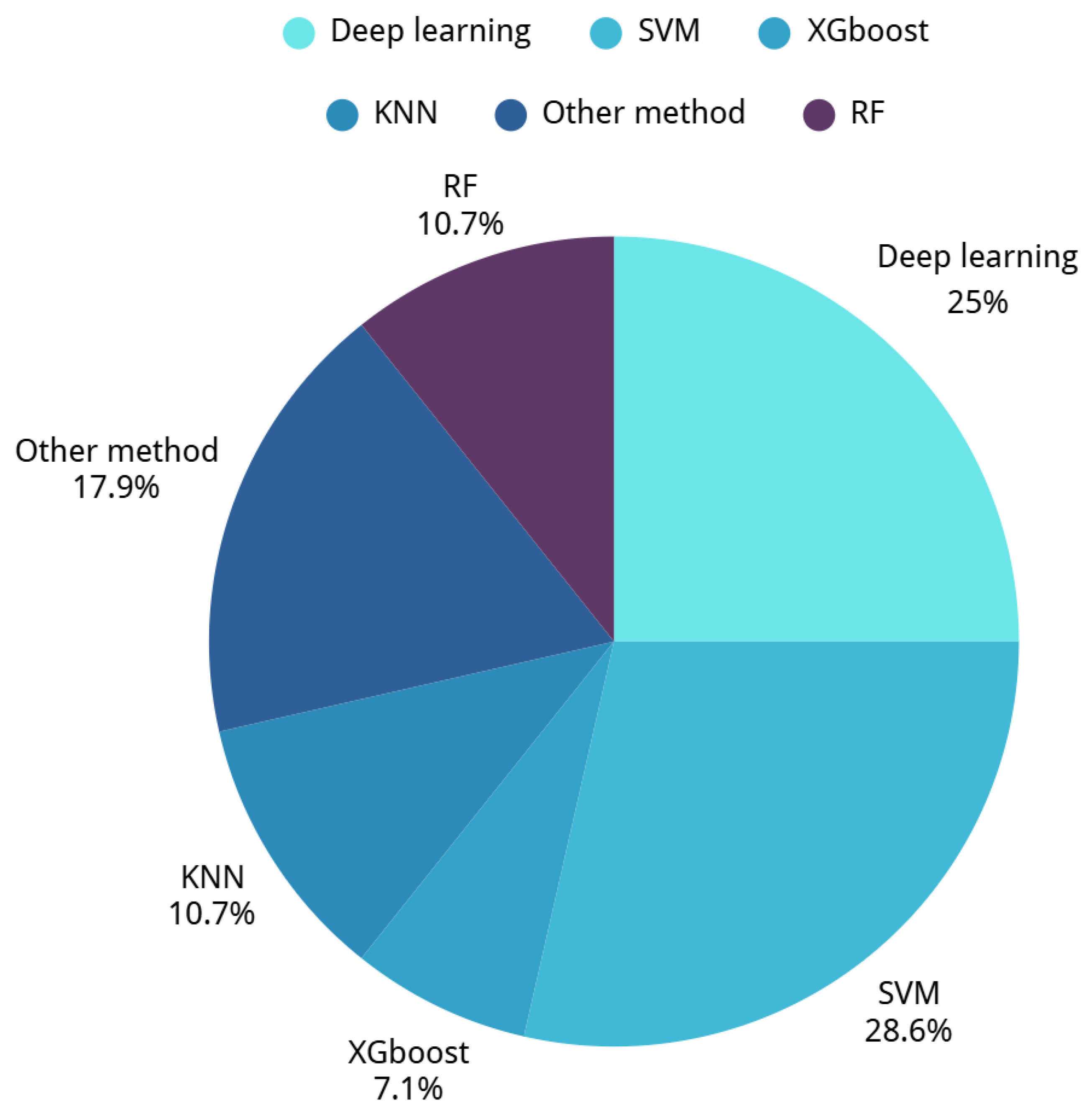

- Support Vector Machine (SVM) was the most frequently used model, achieving up to 90% accuracy for UAS operators’ CL assessment.

- ML approaches can make UAS operator training real-time, adaptive, and more effective than traditional training.

- The findings provide a methodological foundation for developing applicable ML-based adaptive training systems in UAS contexts.

Abstract

1. Introduction

1. What ML models have been effective in assessing CL for UAS operators?

2. Which ML methodological factors influence the effectiveness of ML-based CL assessment?

3. How can ML be used to enhance the training of UAS operators?

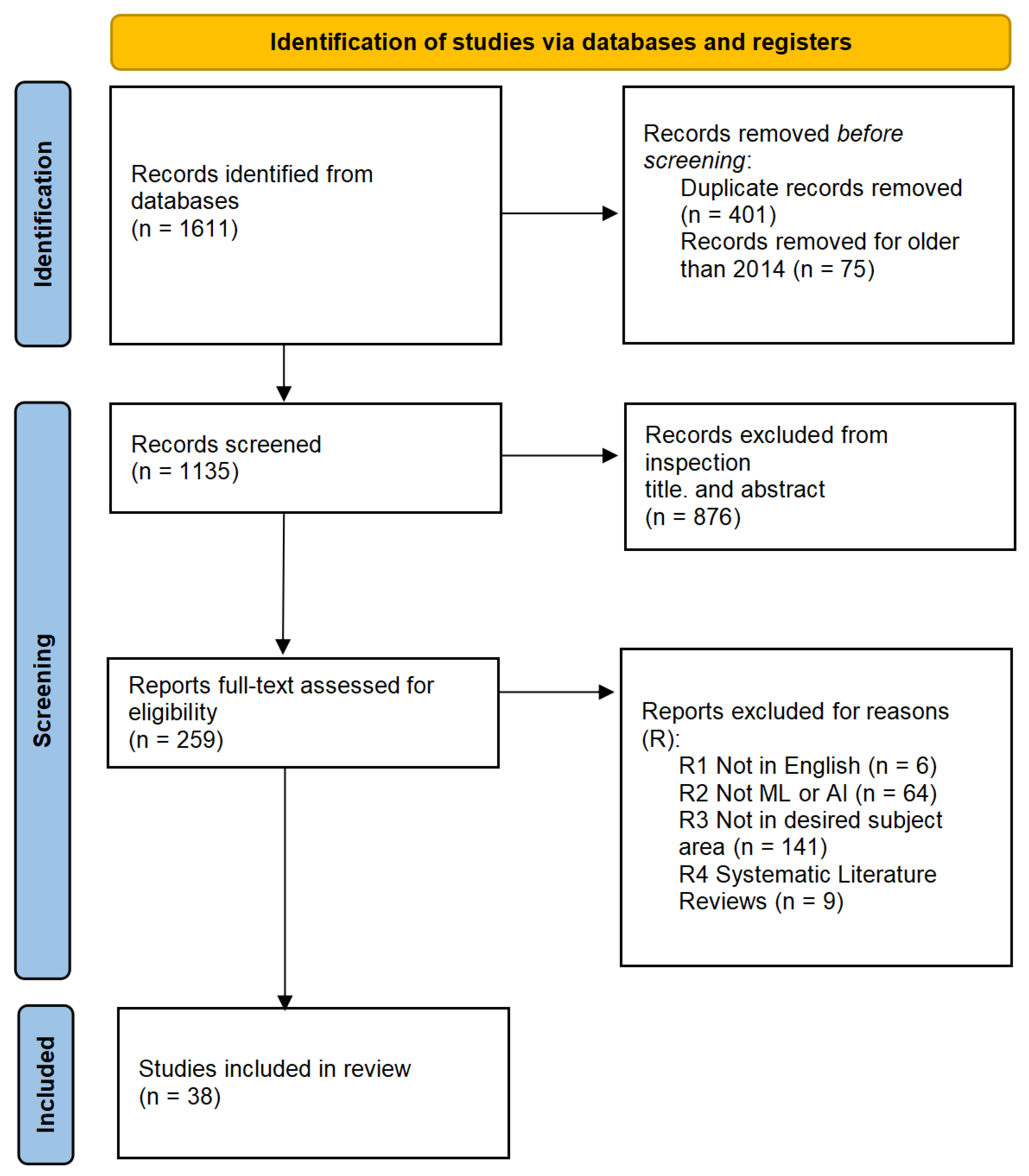

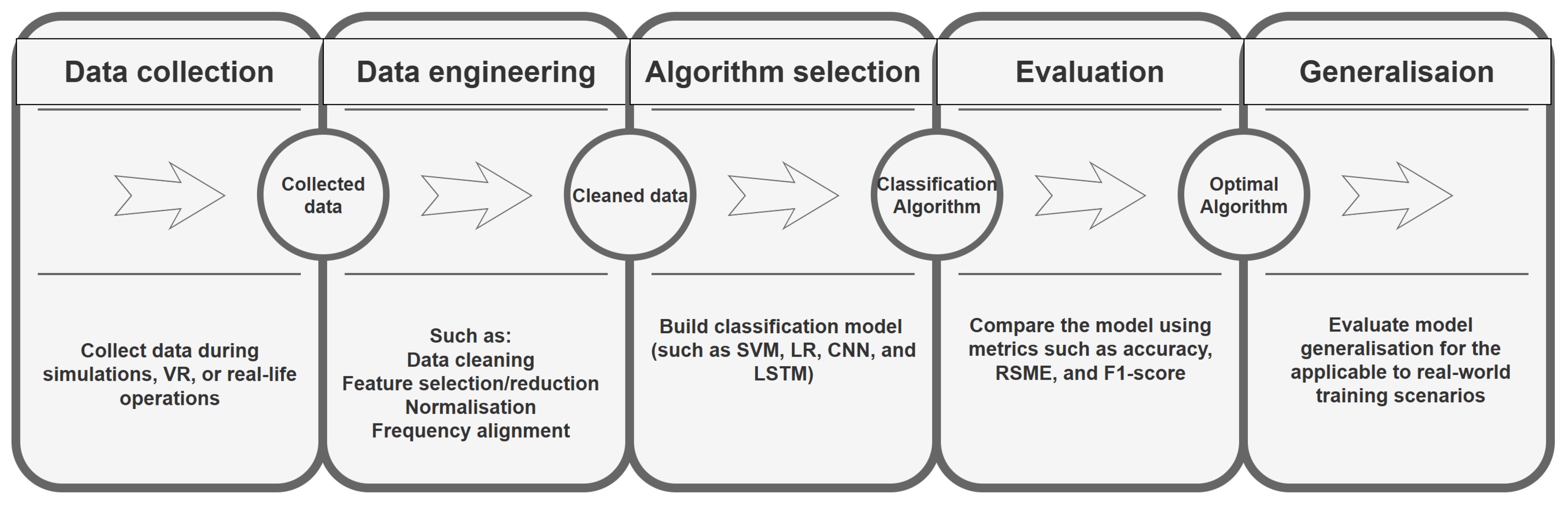

2. Materials and Methods

Inclusion criteria:

- ML-based approaches used to assess CL.

- ML-based approaches used to assess operator-related data in enhancing training.

- Datasets related to UAS operators and sensor operators under simulated or sensor-driven environments.

- Articles published between 1 January 2014 and 31 December 2024.

Exclusion criteria:

- All articles not published in English.

- All articles that are not peer reviewed.

- All articles that are review studies.

- All articles that do not include ML approaches.

- All articles that do not use data related to training variables or CL.

- All articles that are not in the area of aviation or UAS.

3. Results

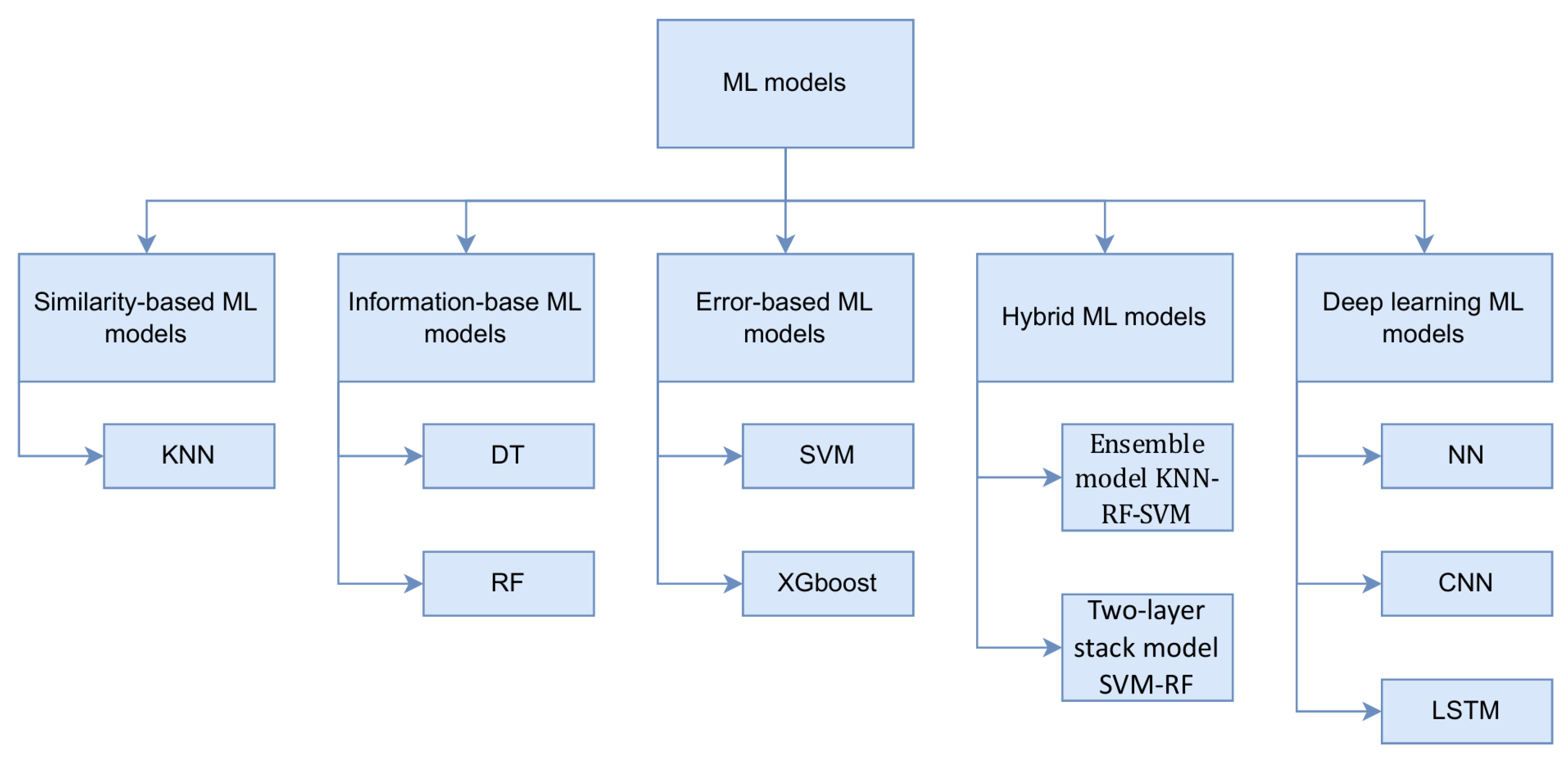

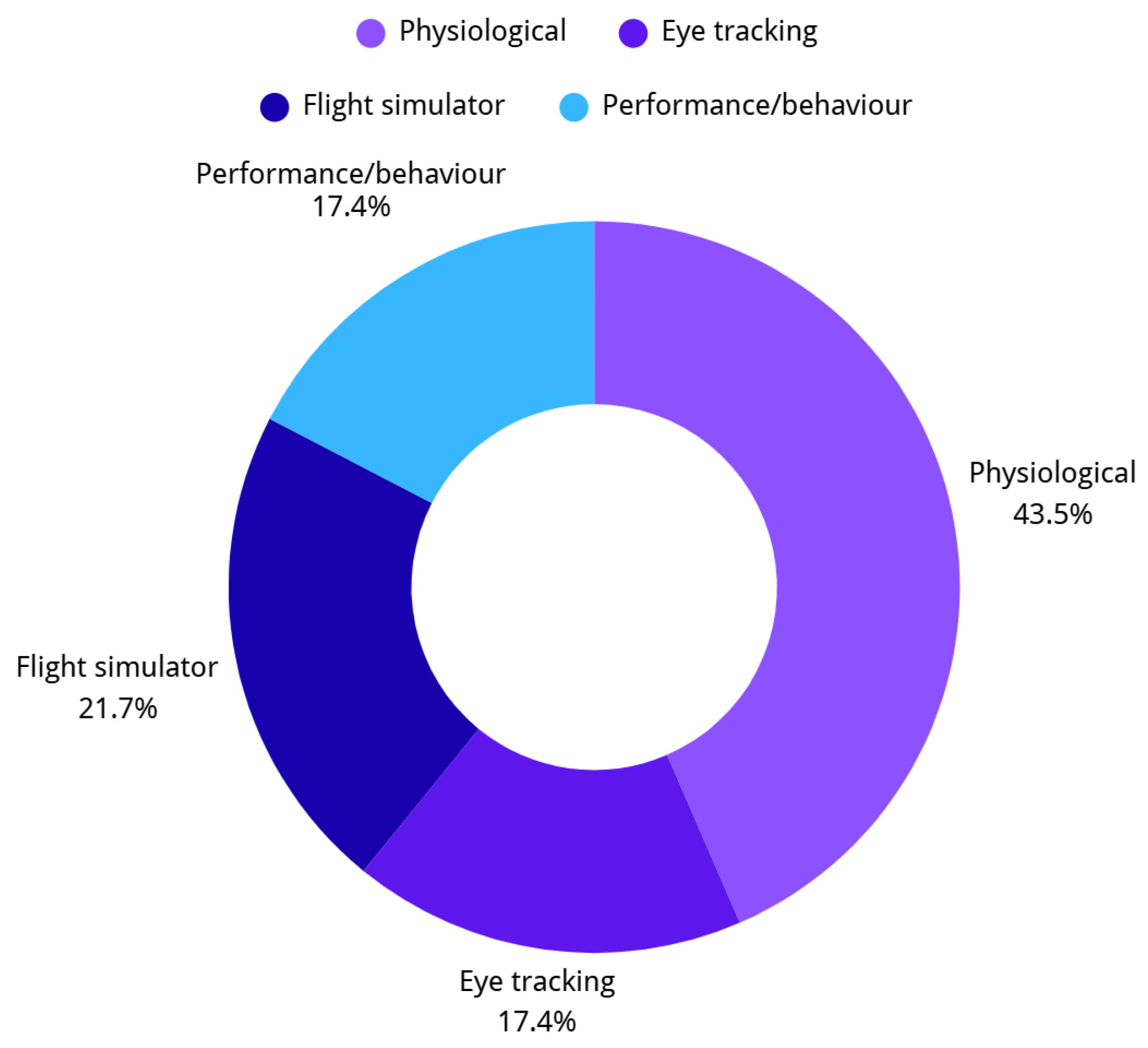

3.1. Machine Learning Methods for Assessing Cognitive Load

3.1.1. Similarity-Based ML Models

3.1.2. Information-Based ML Models

3.1.3. Error-Based ML Models

3.1.4. Hybrid/Ensemble ML Models

3.1.5. Deep Learning ML Models

3.2. Machine Learning Methods to Enhance UAS Operator Adaptive Training

3.2.1. ML Methods Supporting Biometric-Referenced Adaptive Training

3.2.2. ML Models Supporting Automated Training Evaluation

3.3. Machine Learning Evaluation Metrics

4. Discussion

4.1. ML Approaches for Cognitive Load Assessment

4.1.1. ML Models Applied to Cognitive Load Assessment

4.1.2. ML-Based Real-Time Monitoring and Prediction of Cognitive Load

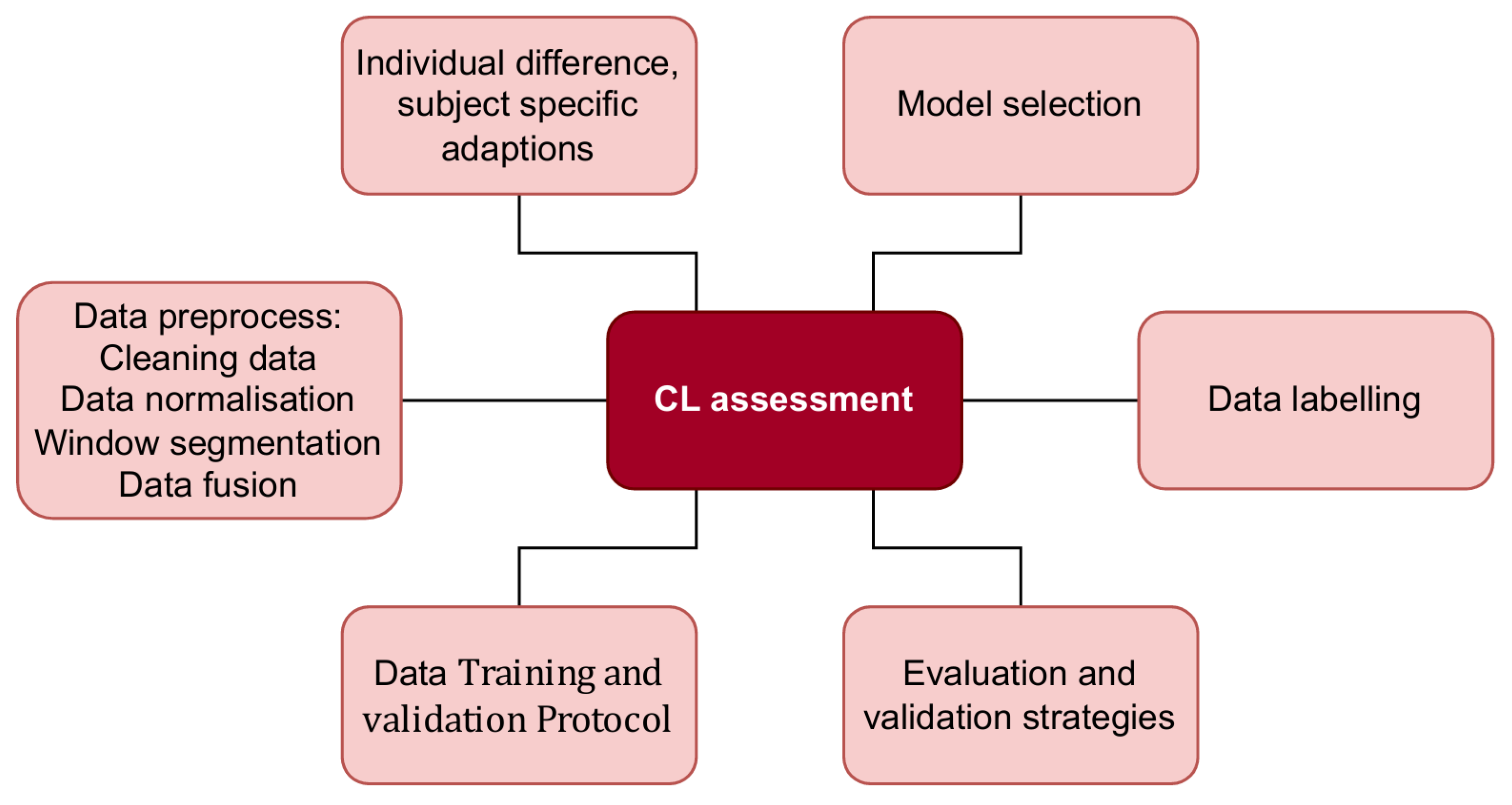

4.2. Key Methodological Factors in ML-Based CL Assessment

4.2.1. Data and Data Preprocessing

4.2.2. Addressing Individual Differences

4.2.3. Data Labelling Approaches

4.2.4. Validation and Evaluation Protocols

4.3. ML Enhancement of UAS Operator Training

4.3.1. ML Potential for Enhancing UAS Operator Training

4.3.2. Deployment Considerations

4.3.3. Ethical Considerations

4.3.4. Cost Constraints

4.4. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ATCs | Air Traffic Controllers |
| BVP | Blood Volume Pulse |
| CL | Cognitive Load |
| CNN | Convolutional Neural Network |
| CV | Cross-Validation |
| DL | Deep Learning |
| DT | Decision Tree |
| EDA | Electrodermal Activity (Galvanic Skin Response, GSR) |
| ECG | Electrocardiogram |
| EEG | Electroencephalogram |
| EDS | Electrodermal Signals |
| EMG | Electromyography |
| fNIRS | Functional Near-Infrared Spectroscopy |
| HR | Heart Rate |
| HRV | Heart Rate Variability |
| ICA | Independent Component Analysis |
| KNN | K-Nearest Neighbours |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| NASA-TLX | NASA Task Load Index |
| NN | Neural Network |
| PCA | Principal Component Analysis |
| PPG | Photoplethysmography |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RBF | Radial Basis Function |
| RFE | Recursive Feature Elimination |
| RNN | Recurrent Neural Network |
| RMSE | Root Mean Squared Error |
| RSP | Respiration |
| SHAP | SHapley Additive exPlanations |
| SKT | Skin Temperature |
| SVM | Support Vector Machine |
| UAS | Uncrewed Aerial System |
| UAV | Uncrewed Aerial Vehicle |
| VR | Virtual Reality |
| XGBoost | Extreme Gradient Boosting |

References

- Rabiu, L.; Ahmad, A.; Gohari, A. Advancements of Unmanned Aerial Vehicle Technology in the Realm of Applied Sciences and Engineering: A Review. J. Adv. Res. Appl. Sci. Eng. Technol. 2024, 40, 74–95.

- Wanner, D.; Hashim, H.A.; Srivastava, S.; Steinhauer, A. UAV avionics safety, certification, accidents, redundancy, integrity, and reliability: A comprehensive review and future trends. Drone Syst. Appl. 2024, 12, 1–23.

- Grindley, B.; Phillips, K.; Parnell, K.J.; Cherrett, T.; Scanlan, J.; Plant, K.L. Over a decade of UAV incidents: A human factors analysis of causal factors. Appl. Ergon. 2024, 121, 104355.

- Orlady, L.M. Airline pilot training today and tomorrow. In Crew Resource Management; Elsevier: Amsterdam, The Netherlands, 2010; pp. 469–491.

- Qi, S.; Wang, F.; Jing, L. Unmanned aircraft system pilot/operator qualification requirements and training study. In MATEC Web of Conferences, Proceedings of the 2018 2nd International Conference on Mechanical, Material and Aerospace Engineering (2MAE 2018), Wuhan, China, 10–13 May 2018; EDP Sciences: Les Ulis, France, 2018; Volume 179, p. 03006.

- Stulberg, A.N. Managing the unmanned revolution in the US Air Force. Orbis 2007, 51, 251–265.

- Wojciechowski, P.; Wojtowicz, K.; Błaszczyk, J. AI-driven method for UAV Pilot Training Process Optimization in a Virtual Environment. In Proceedings of the 2023 IEEE International Workshop on Technologies for Defense and Security (TechDefense), Rome, Italy, 20–22 November 2023; IEEE: New York, NY, USA, 2023; pp. 240–244.

- Gopher, D.; Donchin, E. Workload: An examination of the concept. In Handbook of Perception and Human Performance, Vol. 2. Cognitive Processes and Performance; John Wiley & Sons: Hoboken, NJ, USA, 1986.

- Sweller, J. Cognitive load during problem solving: Effects on learning. Cogn. Sci. 1988, 12, 257–285.

- Mattys, S.L.; Wiget, L. Effects of cognitive load on speech recognition. J. Mem. Lang. 2011, 65, 145–160.

- Le Cunff, A.L.; Giampietro, V.; Dommett, E. Neurodiversity and cognitive load in online learning: A systematic review with narrative synthesis. Educ. Res. Rev. 2024, 43, 100604.

- Cummings, M.; Huang, L.; Zhu, H.; Finkelstein, D.; Wei, R. The impact of increasing autonomy on training requirements in a UAV supervisory control task. J. Cogn. Eng. Decis. Mak. 2019, 13, 295–309.

- Luo, S.; Zhang, C.; Zhu, W.; Chen, H.; Yuan, J.; Li, Q.; Wang, T.; Jiang, C. Noncontact perception for assessing pilot mental workload during the approach and landing under various weather conditions. Signal Image Video Process. 2025, 19, 98.

- Nguyen, T.; Lim, C.P.; Nguyen, N.D.; Gordon-Brown, L.; Nahavandi, S. A review of situation awareness assessment approaches in aviation environments. IEEE Syst. J. 2019, 13, 3590–3603.

- Buchner, J.; Buntins, K.; Kerres, M. The impact of augmented reality on cognitive load and performance: A systematic review. J. Comput. Assist. Learn. 2022, 38, 285–303.

- Heitmann, S.; Grund, A.; Fries, S.; Berthold, K.; Roelle, J. The quizzing effect depends on hope of success and can be optimized by cognitive load-based adaptation. Learn. Instr. 2022, 77, 101526.

- Tugtekin, U.; Odabasi, H.F. Do interactive learning environments have an effect on learning outcomes, cognitive load and metacognitive judgments? Educ. Inf. Technol. 2022, 27, 7019–7058.

- Kapustina, L.; Izakova, N.; Makovkina, E.; Khmelkov, M. The global drone market: Main development trends. In SHS Web of Conferences, Proceedings of the 21st International Scientific Conference Globalization and its Socio-Economic Consequences 2021, Online, 13–14 October 2021; EDP Sciences: Les Ulis, France, 2021; Volume 129, p. 11004.

- Borghini, G.; Astolfi, L.; Vecchiato, G.; Mattia, D.; Babiloni, F. Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 2014, 44, 58–75.

- Rastgoftar, H. Safe Human-UAS Collaboration Abstraction. arXiv 2024, arXiv:2402.05277.

- Williams, K.W.; Mofle, T.C.; Hu, P.T. UAS Air Carrier Operations Survey: Training Requirements; Technical Report; U.S. Department of Transportation: Washington, DC, USA, 2023.

- European Commission. Commission Implementing Regulation (EU) 2019/945 of 12 March 2019 on the Requirements for the Design and Manufacture of Unmanned Aircraft Systems Intended to be Operated Under the Rules and Conditions Laid Down in Regulation (EU) 2019/947. 2019. Official Journal of the European Union, L 152, 11.6.2019, pp. 1–40. Available online: https://regulatorylibrary.caa.co.uk/2019-945-pdf/PDF.pdf (accessed on 23 October 2025).

- Lin, J.; Matthews, G.; Wohleber, R.W.; Funke, G.J.; Calhoun, G.L.; Ruff, H.A.; Szalma, J.; Chiu, P. Overload and automation-dependence in a multi-UAS simulation: Task demand and individual difference factors. J. Exp. Psychol. Appl. 2020, 26, 218.

- McCarley, J.S.; Wickens, C.D. Human Factors Concerns in UAV Flight. Institute of Aviation, Aviation Human Factors Division, University of Illinois at Urbana-Champaign: Urbana-Champaign, IL, USA, 2004; Available online: https://www.researchgate.net/profile/Christopher-Wickens/publication/241595724_HUMAN_FACTORS_CONCERNS_IN_UAV_FLIGHT/links/00b7d53b850921e188000000/HUMAN-FACTORS-CONCERNS-IN-UAV-FLIGHT.pdf (accessed on 23 October 2025).

- Chen, J.Y.; Durlach, P.J.; Sloan, J.A.; Bowens, L.D. Human–robot interaction in the context of simulated route reconnaissance missions. Mil. Psychol. 2008, 20, 135–149.

- Planke, L.J.; Lim, Y.; Gardi, A.; Sabatini, R.; Kistan, T.; Ezer, N. A cyber-physical-human system for one-to-many UAS operations: Cognitive load analysis. Sensors 2020, 20, 5467.

- Marinescu, A.; Sharples, S.; Ritchie, A.; López, T.S.; McDowell, M.; Morvan, H. Exploring the relationship between mental workload, variation in performance and physiological parameters. IFAC-PapersOnLine 2016, 49, 591–596.

- Alreshidi, I.; Moulitsas, I.; Jenkins, K.W. Advancing aviation safety through machine learning and psychophysiological data: A systematic review. IEEE Access 2024, 12, 5132–5150.

- Zhang, C.; Yuan, J.; Jiao, Y.; Liu, H.; Fu, L.; Jiang, C.; Wen, C. Variation of Pilots’ Mental Workload Under Emergency Flight Conditions Induced by Different Equipment Failures: A Flight Simulator Study. Transp. Res. Rec. 2024, 2678, 365–377.

- Paas, F.; Tuovinen, J.E.; Tabbers, H.; Van Gerven, P.W. Cognitive load measurement as a means to advance cognitive load theory. In Cognitive Load Theory; Routledge: London, UK, 2016; pp. 63–71.

- Momeni, N.; Dell’Agnola, F.; Arza, A.; Atienza, D. Real-time cognitive workload monitoring based on machine learning using physiological signals in rescue missions. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; IEEE: New York, NY, USA, 2019; pp. 3779–3785.

- Fernandez Rojas, R.; Debie, E.; Fidock, J.; Barlow, M.; Kasmarik, K.; Anavatti, S.; Garratt, M.; Abbass, H. Electroencephalographic workload indicators during teleoperation of an unmanned aerial vehicle shepherding a swarm of unmanned ground vehicles in contested environments. Front. Neurosci. 2020, 14, 40.

- Haapalainen, E.; Kim, S.; Forlizzi, J.F.; Dey, A.K. Psycho-physiological measures for assessing cognitive load. In Proceedings of the 12th ACM International Conference on Ubiquitous Computing, Copenhagen, Denmark, 26–29 September 2010; pp. 301–310.

- Wang, P.; Houghton, R.; Majumdar, A. Detecting and Predicting Pilot Mental Workload Using Heart Rate Variability: A Systematic Review. Sensors 2024, 24, 3723.

- Hamann, A.; Carstengerdes, N. Assessing the development of mental fatigue during simulated flights with concurrent EEG-fNIRS measurement. Sci. Rep. 2023, 13, 4738.

- Borghini, G.; Aricò, P.; Di Flumeri, G.; Ronca, V.; Giorgi, A.; Sciaraffa, N.; Conca, C.; Stefani, S.; Verde, P.; Landolfi, A.; et al. Air Force Pilot Expertise Assessment during Unusual Attitude Recovery Flight. Safety 2022, 8, 38.

- Jorna, P.G. Spectral analysis of heart rate and psychological state: A review of its validity as a workload index. Biol. Psychol. 1992, 34, 237–257.

- Dell’Agnola, F.; Jao, P.K.; Arza, A.; Chavarriaga, R.; Millán, J.d.R.; Floreano, D.; Atienza, D. Machine-learning based monitoring of cognitive workload in rescue missions with drones. IEEE J. Biomed. Health Inform. 2022, 26, 4751–4762.

- Debie, E.; Rojas, R.F.; Fidock, J.; Barlow, M.; Kasmarik, K.; Anavatti, S.; Garratt, M.; Abbass, H.A. Multimodal fusion for objective assessment of cognitive workload: A review. IEEE Trans. Cybern. 2019, 51, 1542–1555.

- Charles, R.L.; Nixon, J. Measuring mental workload using physiological measures: A systematic review. Appl. Ergon. 2019, 74, 221–232.

- Cheng, L.; Yu, T. A new generation of AI: A review and perspective on machine learning technologies applied to smart energy and electric power systems. Int. J. Energy Res. 2019, 43, 1928–1973.

- Wang, S.; Gwizdka, J.; Chaovalitwongse, W.A. Using wireless EEG signals to assess memory workload in the n-back task. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 424–435.

- Ma, H.L.; Sun, Y.; Chung, S.H.; Chan, H.K. Tackling uncertainties in aircraft maintenance routing: A review of emerging technologies. Transp. Res. Part E Logist. Transp. Rev. 2022, 164, 102805.

- Sandström, V.; Luotsinen, L.; Oskarsson, D. Fighter pilot behavior cloning. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, 21–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 686–695.

- Georgila, K.; Core, M.G.; Nye, B.D.; Karumbaiah, S.; Auerbach, D.; Ram, M. Using reinforcement learning to optimize the policies of an intelligent tutoring system for interpersonal skills training. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, Montreal, QC, Canada, 13–17 May 2019; pp. 737–745.

- Wang, F. Reinforcement learning in a POMDP based intelligent tutoring system for optimizing teaching strategies. Int. J. Inf. Educ. Technol. 2018, 8, 553–558.

- Madeira, T.; Melício, R.; Valério, D.; Santos, L. Machine learning and natural language processing for prediction of human factors in aviation incident reports. Aerospace 2021, 8, 47.

- Guevarra, M.; Das, S.; Wayllace, C.; Epp, C.D.; Taylor, M.; Tay, A. Augmenting flight training with AI to efficiently train pilots. AAAI Conf. Artif. Intell. 2023, 37, 16437–16439.

- Jahanpour, E.S.; Berberian, B.; Imbert, J.P.; Roy, R.N. Cognitive fatigue assessment in operational settings: A review and UAS implications. IFAC-PapersOnLine 2020, 53, 330–337.

- Peißl, S.; Wickens, C.D.; Baruah, R. Eye-tracking measures in aviation: A selective literature review. Int. J. Aerosp. Psychol. 2018, 28, 98–112.

- Masi, G.; Amprimo, G.; Ferraris, C.; Priano, L. Stress and workload assessment in aviation—A narrative review. Sensors 2023, 23, 3556.

- Suárez, M.Z.; Valdés, R.M.A.; Moreno, F.P.; Jurado, R.D.A.; de Frutos, P.M.L.; Comendador, V.F.G. Understanding the research on air traffic controller workload and its implications for safety: A science mapping-based analysis. Saf. Sci. 2024, 176, 106545.

- Shaker, M.H.; Al-Alawi, A.I. Application of big data and artificial intelligence in pilot training: A systematic literature review. In Proceedings of the 2023 International Conference on Cyber Management and Engineering (CyMaEn), Bangkok, Thailand, 26–27 January 2023; IEEE: New York, NY, USA, 2023; pp. 205–209.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Arico, P.; Borghini, G.; Di Flumeri, G.; Colosimo, A.; Graziani, I.; Imbert, J.P.; Granger, G.; Benhacene, R.; Terenzi, M.; Pozzi, S.; et al. Reliability over time of EEG-based mental workload evaluation during Air Traffic Management (ATM) tasks. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; IEEE: New York, NY, USA, 2015; pp. 7242–7245.

- Wang, Y.; Zhang, C.; Liu, C.; Liu, K.; Xu, F.; Yuan, J.; Jiang, C.; Liu, C.; Cao, W. Analysis on pulse rate variability for pilot workload assessment based on wearable sensor. Hum. Factors Ergon. Manuf. Serv. Ind. 2024, 34, 635–648.

- Shao, Q.; Jiang, K.; Li, R. A numerical evaluation of real-time workloads for ramp controller through optimization of multi-type feature combinations derived from eye tracker, respiratory, and fatigue patterns. PLoS ONE 2024, 19, e0313565.

- Qin, H.; Zhou, X.; Ou, X.; Liu, Y.; Xue, C. Detection of mental fatigue state using heart rate variability and eye metrics during simulated flight. Hum. Factors Ergon. Manuf. Serv. Ind. 2021, 31, 637–651.

- Sakib, M.N.; Chaspari, T.; Behzadan, A.H. Physiological data models to understand the effectiveness of drone operation training in immersive virtual reality. J. Comput. Civ. Eng. 2021, 35, 04020053.

- Massé, E.; Bartheye, O.; Fabre, L. Classification of electrophysiological signatures with explainable artificial intelligence: The case of alarm detection in flight simulator. Front. Neuroinform. 2022, 16, 904301.

- Yiu, C.Y.; Ng, K.K.; Li, X.; Zhang, X.; Li, Q.; Lam, H.S.; Chong, M.H. Towards safe and collaborative aerodrome operations: Assessing shared situational awareness for adverse weather detection with EEG-enabled Bayesian neural networks. Adv. Eng. Inform. 2022, 53, 101698.

- Jiang, G.; Chen, H.; Wang, C.; Xue, P. Mental workload artificial intelligence assessment of pilots’ EEG based on multi-dimensional data fusion and LSTM with attention mechanism model. Int. J. Pattern Recognit. Artif. Intell. 2022, 36, 2259035.

- Nittala, S.K.; Elkin, C.P.; Kiker, J.M.; Meyer, R.; Curro, J.; Reiter, A.K.; Xu, K.S.; Devabhaktuni, V.K. Pilot skill level and workload prediction for sliding-scale autonomy. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; IEEE: New York, NY, USA, 2018; pp. 1166–1173.

- Xi, P.; Law, A.; Goubran, R.; Shu, C. Pilot workload prediction from ECG using deep convolutional neural networks. In Proceedings of the 2019 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Istanbul, Turkey, 26–28 June 2019; IEEE: New York, NY, USA, 2019; pp. 1–6.

- Qiu, J.; Han, T. Integrated Model for Workload Assessment Based on Multiple Physiological Parameters Measurement. In Engineering Psychology and Cognitive Ergonomics, Proceedings of the 13th International Conference, EPCE 2016, Held as Part of HCI International 2016, Toronto, ON, Canada, 17–22 July 2016, Proceedings 13; Springer: Berlin/Heidelberg, Germany, 2016; pp. 19–28.

- Laskowski, J.; Pytka, J.; Laskowska, A.; Tomilo, P.; Skowron, Ł.; Kozlowski, E.; Piatek, R.; Mamcarz, P. AI-Based Method of Air Traffic Controller Workload Assessment. In Proceedings of the 2024 11th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Lublin, Poland, 3–5 June 2024; IEEE: New York, NY, USA, 2024; pp. 46–51.

- Jao, P.K.; Chavarriaga, R.; Dell’Agnola, F.; Arza, A.; Atienza, D.; Millán, J.d.R. EEG correlates of difficulty levels in dynamical transitions of simulated flying and mapping tasks. IEEE Trans. Hum.-Mach. Syst. 2020, 51, 99–108.

- Aricò, P.; Borghini, G.; Di Flumeri, G.; Colosimo, A.; Bonelli, S.; Golfetti, A.; Pozzi, S.; Imbert, J.P.; Granger, G.; Benhacene, R.; et al. Adaptive automation triggered by EEG-based mental workload index: A passive brain-computer interface application in realistic air traffic control environment. Front. Hum. Neurosci. 2016, 10, 539.

- Zhou, W.g.; Yu, P.p.; Wu, L.h.; Cao, Y.f.; Zhou, Y.; Yuan, J.j. Pilot turning behavior cognitive load analysis in simulated flight. Front. Neurosci. 2024, 18, 1450416.

- Zak, Y.; Parmet, Y.; Oron-Gilad, T. Subjective Workload assessment technique (SWAT) in real time: Affordable methodology to continuously assess human operators’ workload. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; IEEE: New York, NY, USA, 2020; pp. 2687–2694.

- Lochner, M.; Duenser, A.; Sarker, S. Trust and Cognitive Load in semi-automated UAV operation. In Proceedings of the 31st Australian Conference on Human-Computer-Interaction, Fremantle, Australia, 2–5 December 2019; pp. 437–441.

- Gianazza, D. Learning air traffic controller workload from past sector operations. In Proceedings of the ATM Seminar, 12th USA/Europe Air Traffic Management R&D Seminar, Seattle, WA, USA, 26–30 June 2017.

- Shao, Q.; Li, H.; Sun, Z. Air Traffic Controller Workload Detection Based on EEG Signals. Sensors 2024, 24, 5301.

- Salvan, L.; Paul, T.S.; Marois, A. Dry EEG-based Mental Workload Prediction for Aviation. In Proceedings of the 2023 IEEE/AIAA 42nd Digital Avionics Systems Conference (DASC), Barcelona, Spain, 1–5 October 2023; IEEE: New York, NY, USA, 2023; pp. 1–8.

- Monfort, S.S.; Sibley, C.M.; Coyne, J.T. Using machine learning and real-time workload assessment in a high-fidelity UAV simulation environment. In Next-Generation Analyst IV; SPIE: Bellingham, WA, USA, 2016; Volume 9851, pp. 93–102.

- Aricò, P.; Reynal, M.; Di Flumeri, G.; Borghini, G.; Sciaraffa, N.; Imbert, J.P.; Hurter, C.; Terenzi, M.; Ferreira, A.; Pozzi, S.; et al. How neurophysiological measures can be used to enhance the evaluation of remote tower solutions. Front. Hum. Neurosci. 2019, 13, 303.

- Dell’Agnola, F.; Momeni, N.; Arza, A.; Atienza, D. Cognitive workload monitoring in virtual reality based rescue missions with drones. In Virtual, Augmented and Mixed Reality. Design and Interaction, Proceedings of the 12th International Conference, VAMR 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020, Proceedings, Part I; Springer: Cham, Switzerland, 2020; pp. 397–409.

- Kelleher, J.D.; Mac Namee, B.; D’arcy, A. Fundamentals of Machine Learning for Predictive Data Analytics: Algorithms, Worked Examples, and Case Studies; MIT Press: Cambridge, MA, USA, 2020.

- Rodríguez-Fernández, V.; Menéndez, H.D.; Camacho, D. Automatic profile generation for UAV operators using a simulation-based training environment. Prog. Artif. Intell. 2016, 5, 37–46.

- Yang, S.; Yu, K.; Lammers, T.; Chen, F. Artificial intelligence in pilot training and education–towards a machine learning aided instructor assistant for flight simulators. In HCI International 2021—Posters, Proceedings of the 23rd HCI International Conference, HCII 2021, Virtual Event, 24–29 July 2021, Proceedings, Part II; Springer: Cham, Switzerland, 2021; pp. 581–587.

- Yuan, J.; Ke, X.; Zhang, C.; Zhang, Q.; Jiang, C.; Cao, W. Recognition of Different Turning Behaviors of Pilots Based on Flight Simulator and fNIRS Data. IEEE Access 2024, 12, 32881–32893.

- Caballero, W.N.; Gaw, N.; Jenkins, P.R.; Johnstone, C. Toward automated instructor pilots in legacy air force systems: Physiology-based flight difficulty classification via machine learning. Expert Syst. Appl. 2023, 231, 120711.

- Li, Q.; Ng, K.K.; Yiu, C.Y.; Yuan, X.; So, C.K.; Ho, C.C. Securing air transportation safety through identifying pilot’s risky VFR flying behaviours: An EEG-based neurophysiological modelling using machine learning algorithms. Reliab. Eng. Syst. Saf. 2023, 238, 109449.

- Yin, W.; An, R.; Zhao, Q. Intelligent Recognition of UAV Pilot Training Actions Based on Dynamic Bayesian Network. J. Phys. Conf. Ser. 2022, 2281, 012014.

- Paces, P.; Insaurralde, C.C. Artificially intelligent assistance for pilot performance assessment. In Proceedings of the 2021 IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 3–7 October 2021; IEEE: New York, NY, USA, 2021; pp. 1–7.

- Pietracupa, M.; Ben Abdessalem, H.; Frasson, C. Detection of Pre-error States in Aircraft Pilots Through Machine Learning. In Generative Intelligence and Intelligent Tutoring Systems, Proceedings of the 20th International Conference, ITS 2024, Thessaloniki, Greece, 10–13 June 2024, Proceedings, Part II; Springer: Cham, Switzerland, 2024; pp. 124–136.

- Binias, B.; Myszor, D.; Cyran, K.A. A machine learning approach to the detection of pilot’s reaction to unexpected events based on EEG signals. Comput. Intell. Neurosci. 2018, 2018, 2703513.

- Alkadi, R.; Al-Ameri, S.; Shoufan, A.; Damiani, E. Identifying drone operator by deep learning and ensemble learning of IMU and control data. IEEE Trans. Hum.-Mach. Syst. 2021, 51, 451–462.

- Taheri Gorji, H.; Wilson, N.; VanBree, J.; Hoffmann, B.; Petros, T.; Tavakolian, K. Using machine learning methods and EEG to discriminate aircraft pilot cognitive workload during flight. Sci. Rep. 2023, 13, 2507.

- Watkins, D.; Gallardo, G.; Chau, S. Pilot support system: A machine learning approach. In Proceedings of the 2018 IEEE 12th International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 31 January–2 February 2018; IEEE: New York, NY, USA, 2018; pp. 325–328.

- Jain, N.; Gambhir, A.; Pandey, M. Unmanned aerial networks—UAVs and AI. In Recent Trends in Artificial Intelligence Towards a Smart World: Applications in Industries and Sectors; Springer: Singapore, 2024; pp. 321–351.

- Zhu, W.; Zhang, C.; Liu, C.; Xu, F.; Jiang, C.; Cao, W. Assessment of Pilot Mental Workload Based on Physiological Signals: A Real Helicopter Cross-country Flight Study. In Proceedings of the 2023 IEEE 5th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 11–13 October 2023; IEEE: New York, NY, USA, 2023; pp. 638–643.

- Troussas, C.; Krouska, A.; Mylonas, P.; Sgouropoulou, C. Reinforcement learning-based dynamic fuzzy weight adjustment for adaptive user interfaces in educational software. Future Internet 2025, 17, 166.

- Sun, Q.; Xue, Y.; Song, Z. Adaptive user interface generation through reinforcement learning: A data-driven approach to personalization and optimization. In Proceedings of the 2024 6th International Conference on Frontier Technologies of Information and Computer (ICFTIC), Qingdao, China, 13–15 December 2024; IEEE: New York, NY, USA, 2024; pp. 1386–1391.

- Amaliah, N.R.; Tjahjono, B.; Palade, V. Human-in-the-Loop XAI for Predictive Maintenance: A Systematic Review of Interactive Systems and Their Effectiveness in Maintenance Decision-Making. Electronics 2025, 14, 3384.

- Emami, Y.; Almeida, L.; Li, K.; Ni, W.; Han, Z. Human-in-the-loop machine learning for safe and ethical autonomous vehicles: Principles, challenges, and opportunities. arXiv 2024, arXiv:2408.12548.

| Refs. | Input of ML Model (Data) | Output of ML Model | ML Model and Results |

|---|---|---|---|

| Arico et al. [55] | Electroencephalogram (EEG) signal data (collected from 12 subjects). | High, medium, or low workload. | Step-Wise Linear Discriminant Analysis (SWLDA). |

| Wang et al. [56] | Photoplethysmography (PPG) signal data and NASA-TLX (collected from 21 subjects). | High, medium, or low mental workload. | K-Nearest Neighbors (KNN) achieved the best performance with 88.9% accuracy. |

| Shao et al. [57] | Fatigue status, eye-tracking, and respiratory data (collected from 8 subjects). | High or low workload level. | KNN showed the best performance with 98.6% accuracy in classifying workload. |

| Luo et al. [13] | Facial expression via FaceReader software and NASA-TLX score (collected from 21 subjects). | High, medium, and low workload. | Convolutional Neural Network (CNN) showed the best accuracy of 99.87%. |

| Qin et al. [58] | Visual analogue scale (subjective measure), heart rate variability (HRV), and eye-tracking data (collected from 11 subjects). | Mental fatigue (low/medium/high). | Support Vector Machine (SVM) achieved the best performance of 91.8% in determining levels of mental fatigue. |

| Sakib et al. [59] | NASA-TLX, CARMA for stress level, heart rate (HR), heart rate variability (HRV), skin electrical activity (EDA), skin temperature (SKT), and behavioural data (e.g., reaction time), (collected from 25 subjects). | Cognitive load, performance, and stress levels: low, medium low, medium, medium high, and high. | SVM showed the best performance in classifying cognitive load. |

| Massé et al. [60] | Subjective level of fatigue behavioural data, and EEG signal data (collected from 24 subjects). | Attention (alarm) detected or not. | SVM model performed best with an average individual accuracy rate of 76.4%. |

| Yiu et al. [61] | EEG and NASA-TLX score (collected from 30 subjects). | Classification of weather conditions: good visibility conditions or poor visibility condition. | Bayesian Neural Network (BNN) model performed the best in the task of classifying, with accuracy rate of 66.5%. |

| Jiang et al. [62] | EEG signal, flight operation, eye-tracking, and flight record data (collected from 6 subjects). | Classification of mental workload into 5 levels: low to high. | Long Short-Term Memory (LSTM) with attention achieved average 94% accuracy. |

| Nittala et al. [63] | HR, flight control data, flight sensor data, environment feature (day, night, clear, and cloud), and NASA-TLX score (collected from 15 subjects). | Skill level (novice or expert), and workload (high or low). | SVM achieved AUC of 0.99. |

| Xi et al. [64] | ECG and NASA-TLX. | Mental workload (high/medium/low). | CNN with transfer learning was able to classify workload levels. |

| Qiu and Han [65] | HR, range of motion (ROM), HRV, respiration, and NASA-TLX (collected from 6 subjects). | Operator workload level. | Four features selected by PCA showed significant inverse trends with increasing workload. |

| Laskowski et al. [66] | Air traffic data and task characteristics. | Operator workload levels. | Delphi method (expert) with Neural Network model achieved 98% accuracy. |

| Jao et al. [67] | EEG and NASA-TLX (collected from 24 subjects). | Cognitive load (high/low). | Linear regression showed success in assessing cognitive load. |

| Aricò et al. [68] | EEG and NASA-TLX (collected from 12 subjects). | High or low cognitive load. | Classification used automatic stop Stepwise Linear Discriminant Analysis (asSWLDA). |

| Zhou et al. [69] | HRV data (collected from 28 subjects). | Cognitive load (low/medium/high). | LSTM showed the best performance, with an F1-score of 0.9491 in identifying the cognitive load levels. |

| Dell’Agnola et al. [38] | Respiration (RSP), ECG, PPG, SKT, and NASA-TLX (collected from 24 subjects). | High/low CL. | SVM with subject-specific weights achieved the best accuracy. |

| Momeni et al. [31] | ECG, RSP, PPG, SKT signal data, and NASA-TLX score (collected from 24 subjects). | CL (high/low). | XGBoost achieved the best accuracy of 86% on unseen data. |

| Planke et al. [26] | EEG, eye-tracking data, task performance data, and NASA-TLX (collected from 5 subjects). | CL (high/medium/low). | Supervised ML model. |

| Zak et al. [70] | Joystick movement and NASA-TLX score (collected from 6 subjects). | Operator workload (high/low). | Lasso regression, NN, RF, and XGBoost were all able to assess workload. |

| Zhang et al. [29] | fNIRS signals using change of oxyhemoglobin as features, and NASA-TLX score (collected from 25 subjects). | Mental workload (high/medium/low). | Hierarchical SVM achieved high accuracy (88.74%). |

| Lochner et al. [71] | GSR signal, acceleration signal, HR, NASA-TLX score, and system trust scale score (collected from 43 subjects). | Trust state (high/low). | Decision Tree showed an accuracy of 80%. |

| Gianazza [72] | Radar trajectory data and sector records. | Workload (low/normal/high). | NN showed the best performance, with 81.9% accuracy in classifying workload. |

| Shao et al. [73] | EEG signal data and NASA-TLX score (collected from 16 subjects). | Cognitive state (rest/low/medium/high). | SVM with gamma wave metrics was effective in capturing cognitive state variations. |

| Salvan et al. [74] | EEG and NASA-TLX score (collected from 48 subjects). | Mental workload (high/medium/low). | RF showed the best accuracy of 76% in predicting mental workload. |

| Monfort et al. [75] | Eye-tracking and behavioural data (such as reaction time, accuracy, and error rates) (collected from 20 subjects). | Mental workload (low/high). | Ensemble of KNN, RF, and SVM achieved 78% accuracy in predicting UAV operator workload. |

| Aricò et al. [76] | ECG, GSR, EEG, blink rate, reaction time, and NASA-TLX score (collected from 16 subjects). | Workload (low/high). | Automatic stop Stepwise Linear Discriminant Analysis (asSWLDA) showed success in assessing workload. |

| Dell’Agnola et al. [77] | ECG, RSP, SKT, PPG, EDA, and NASA-TLX score (collected from 24 subjects). | Cognitive load (2 or 3 levels). | XGBoost showed the best performance, with 80.2% accuracy in classifying 2 levels of CL. |
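The studies summarised above report different metrics (accuracy, F1-score, AUC), so their results are not directly comparable. As a minimal sketch of why the choice matters, the following pure-Python example computes accuracy and macro-averaged F1 on a small imbalanced label set. The labels and the "always predict low" model are hypothetical and are not taken from any reviewed study; the function names are illustrative only.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_per_class(y_true, y_pred):
    """Return {label: F1}, treating each label one-vs-rest."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted label p was wrong
            fn[t] += 1  # true label t was missed
    scores = {}
    for label in labels:
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


# Hypothetical imbalanced data: 8 "low" and 2 "high" workload windows.
y_true = ["low"] * 8 + ["high"] * 2
# Hypothetical degenerate model that always predicts "low".
y_pred = ["low"] * 10

acc = accuracy(y_true, y_pred)
mf1 = macro_f1(y_true, y_pred)
print(f"accuracy={acc:.3f}, macro F1={mf1:.3f}")
```

In this toy case the accuracy is 0.8 although the model never detects the "high" class, whereas the macro F1 drops to about 0.44. This is one reason accuracy figures from studies with different class balances should be compared with caution.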

| Refs. | Input of ML Model (Data) | Output of ML Model | ML Model and Results |
|---|---|---|---|
| Wojciechowski et al. [7] | Electroencephalography (EEG), electrocardiography (ECG), blood pressure, skin temperature, facial expression, eye-tracking (ET), and Simulator Sickness Questionnaire (SSQ). | UAV operator’s pressure level (classification of operator state). | Recurrent Neural Network (RNN) assessed operator pressure levels. |
| Guevarra et al. [48] | Operator operation trajectory data (i.e., action and reaction time) and operator control inputs. | Detection of operator performance errors. | Imitation learning (behavioral cloning) applied for autonomous pilot error state detection. |
| Rodríguez-Fernández et al. [79] | UAV operator operation records including features of score, cooperation, aggressiveness, and initial plan complexity. | Classification of operator skill level (low, medium, high). | Unsupervised clustering (K-means, agglomerative, divisive, and partition around medoids) and fuzzy logic to evaluate operator training skills. |
| Yang et al. [80] | Flight simulator data, including aircraft state variables (e.g., attitude, altitude, airspeed) and operator control actions. | Classification of trainee performance alignment with expert “standard operation.” | ML approach provided real-time, objective, and quantitative assessment of operator performance. |
| Yuan et al. [81] | Functional near-infrared spectroscopy (fNIRS) signal (collected from 25 subjects). | Classification of operator behaviour (left vs. right turning). | SVM-RBF achieved 92.6% accuracy using 60% of selected features. |
| Caballero et al. [82] | Electromyography (EMG), forearm acceleration (ACC), EDA, ECG, respiration (RES), eye-tracking, and PPG signal data (collected from 21 subjects). | Classification of pilot task difficulty (low or high). | AdaBoost achieved the best performance with 87.14% accuracy. |
| Li et al. [83] | EEG signals (collected from 20 subjects). | Classification of unsafe vs. safe behaviour. | SVM-Linear achieved 80.8% accuracy. |
| Yin et al. [84] | Operator movement data including altitude, altitude change rate, heading angle, heading change rate, and flight speed. | Recognition of 15 classes of training actions. | Dynamic Bayesian Network successfully recognised current training actions. |
| Paces and Insaurralde [85] | Flight parameters including position, navigation data, and flight attitude. | Classification of safe vs. unsafe flying behaviour. | AI algorithm effectively evaluated pilot flight performance. |
| Pietracupa et al. [86] | EEG signal data. | Classification of pre-error vs. non-error states. | Transformer model achieved the best F1-score of 0.578. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Q.; Molloy, O.; El-Fiqi, H.; Eves, G. Applications of Machine Learning in Assessing Cognitive Load of Uncrewed Aerial System Operators and in Enhancing Training: A Systematic Review. Drones 2025, 9, 760. https://doi.org/10.3390/drones9110760
