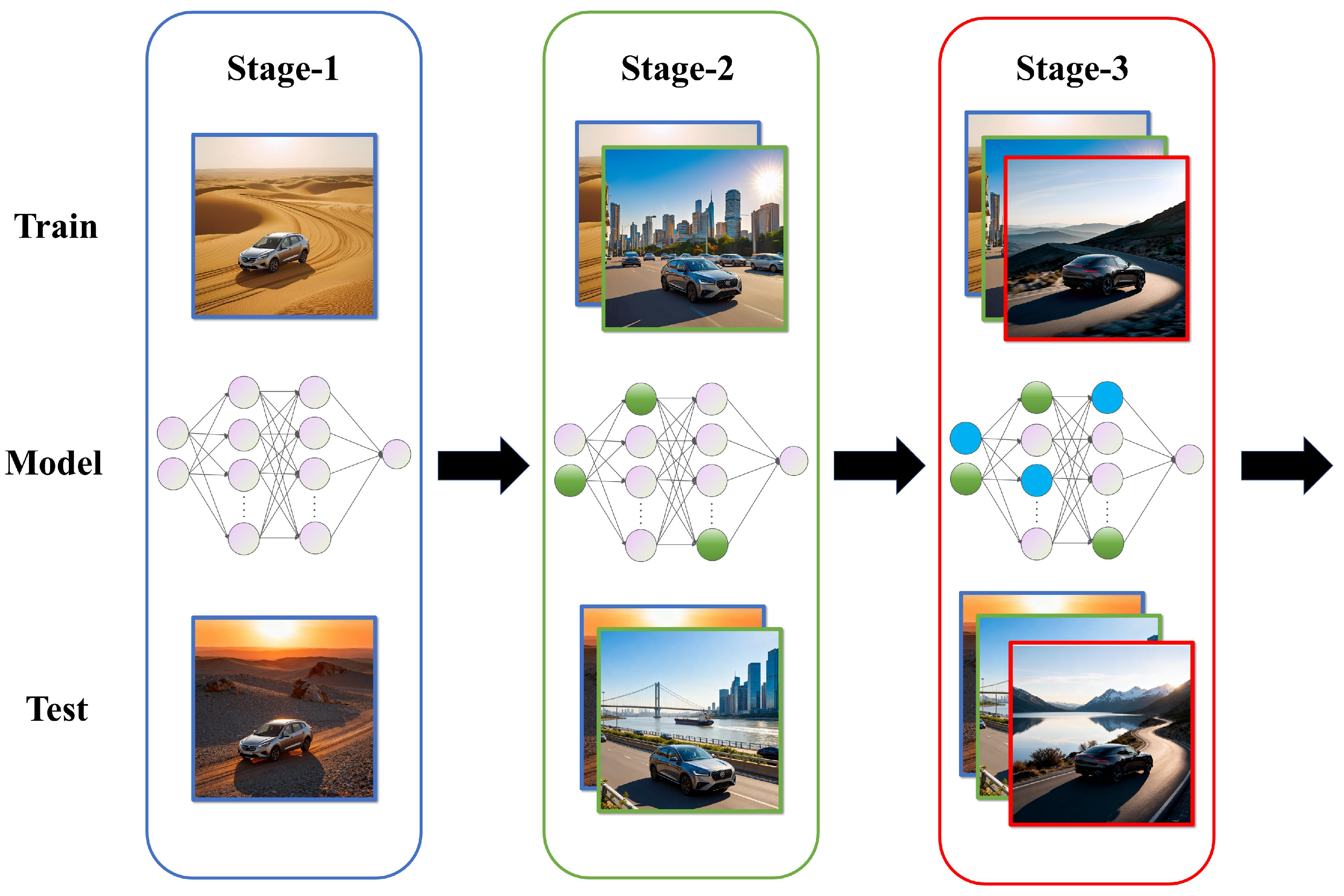

To continually improve data-driven autonomous guidance models using multi-scenario guidance data, we adopt a continual learning architecture based on feature generation and replay. On this basis, we design a structural loss to enhance the realism of the generated features. Finally, to expand the knowledge capacity of the model itself, we design a dynamic knowledge expansion module based on MoE.

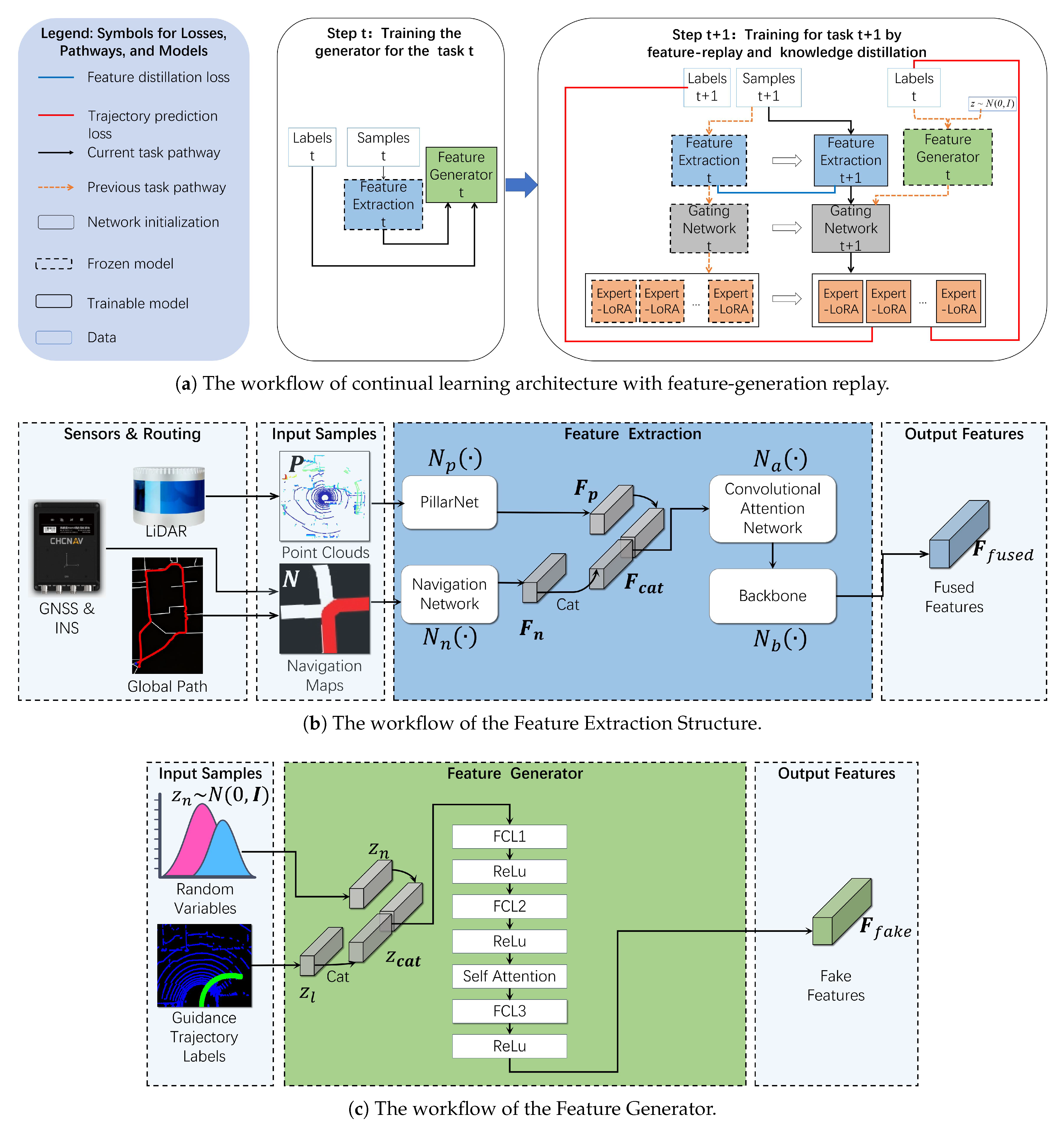

3.1. Continual Learning Architecture with Feature-Generation-Replay

The core of the continual learning architecture based on feature generation and replay is the combination of feature replay, knowledge distillation of features, and transfer learning for weight initialization of the generative network. As shown in Figure 3a, the continual learning process for the current task is divided into two key elements:

(a) Feature replay of the generator: the generator of task $t$ is trained to generate features for task $t$, which are used when training task $t+1$ instead of the original data of task $t$. By replaying the features of previous tasks, the model can review the knowledge of previous tasks while learning the current task, which effectively alleviates catastrophic forgetting. Compared with sample replay, this method does not need to store training samples, which reduces the storage cost.

(b) Knowledge distillation of features: knowledge distillation preserves the knowledge of the old task by constraining the output features of the new and old models to be consistent. As shown in the figure, we force the feature extractor of the current task model to stay close to the feature extractor of the old task through an $L_2$ loss (Euclidean distance), which keeps the current model's feature representation of previous tasks stable. The detailed calculation formula is presented in Equation (15).

Additionally, transfer learning is used for parameter initialization: when training the model for task $t+1$, we take the weights of the model for task $t$ as the initial weights of the new model. The model of task $t$ has been fully trained, and its weights already contain rich general feature representations. Using these weights as the initial values for the target task accelerates convergence and improves the performance of the model.
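The two elements above can be illustrated with a minimal sketch. It assumes features are plain lists of floats; `toy_generator`, `build_training_set`, and `l2_distillation_loss` are hypothetical names introduced here for illustration and are not taken from the paper, whose exact loss is given in Equation (15).

```python
import math

def l2_distillation_loss(new_features, old_features):
    """Euclidean distance between features of the current and the old extractor."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(new_features, old_features)))

def build_training_set(current_task_features, old_generator, old_labels):
    """Mix real features of the current task with replayed features of the old task."""
    replayed = [(old_generator(label), label) for label in old_labels]
    return list(current_task_features) + replayed

# Hypothetical stand-in for a trained generator: maps a label to a fake feature.
def toy_generator(label):
    return [float(label), 0.0]

current = [([0.5, 1.5], 2)]  # (feature, label) pairs of the new task
train_set = build_training_set(current, toy_generator, old_labels=[0, 1])
distill = l2_distillation_loss([3.0, 0.0], [0.0, 4.0])
```

No stored samples of the old task are needed here: only the old generator and the old labels enter `build_training_set`, which is the storage advantage described above.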

3.2. Structure-Enhanced Feature Generation Method

We adopt a structure-enhanced feature generation method. On the one hand, the feature generator is built on a Multi-Layer Perceptron (MLP) integrated with a self-attention module [30], as shown in Figure 3c. The feature generator has two inputs: a random vector that follows a Gaussian distribution and a guidance trajectory label. These two variables are concatenated into a single input variable. The output of the feature generator is a fake feature, which is used to simulate the real feature generated by the feature extractor.

Conventional MLP models fail to characterize the correlation information across different dimensions and positions in features. To address this limitation, we incorporate a self-attention module to capture cross-dimensional and positional dependencies, enabling the generator to focus on the relationships between different elements in the feature vector.

The core of the self-attention module is the matrix operation on the query ($Q$), key ($K$), and value ($V$), which lets each position in the sequence attend to the information at all other positions and ultimately outputs the weighted features. The complete calculation can be decomposed into the following four key steps.

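Firstly, the input feature $X$ is passed through three independent linear layers $W_Q$, $W_K$, and $W_V$, which respectively generate the query matrix $Q$, the key matrix $K$, and the value matrix $V$:

$$Q = XW_Q, \quad K = XW_K, \quad V = XW_V \tag{1}$$

where $Q$, $K$, and $V$ all have size $n \times d_k$, with $n$ the number of positions and $d_k$ the projected dimension. The purpose of this step is to map the input to a space that is more suitable for calculating attention correlation.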

Secondly, the attention score $S$ measures the similarity between $Q$ and $K$. The higher the score, the more attention is paid to the $V$ at the corresponding position. The attention score is calculated as

$$S = \frac{QK^{\top}}{\sqrt{d_k}} \tag{2}$$

where multiplying $Q$ by the transpose of $K$ yields a similarity matrix $QK^{\top}$ of size $n \times n$. The element at position $(i, j)$ of $QK^{\top}$ is the dot product of the $Q$ at the $i$-th position and the $K$ at the $j$-th position.

$QK^{\top}$ is divided by $\sqrt{d_k}$ because, when $d_k$ is relatively large, the dot products of $Q$ and $K$ become extremely large in magnitude, pushing the input of the Softmax function into its saturation zone, where the gradient is close to zero and the model can hardly update its parameters. Furthermore, when the components of $Q$ and $K$ are independent and identically distributed (i.i.d.) standard normal variables, the expected value of their dot product is 0 and its variance is $d_k$. To keep the variance of the dot product constant, the result is scaled by $1/\sqrt{d_k}$ (i.e., divided by $\sqrt{d_k}$). This ensures that, as $d_k$ increases, the variance of the dot product does not grow linearly but remains at a constant level.
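The variance argument can be written out in a few lines. The notation below ($q$ and $k$ for a single query row and key row, with components $q_m$ and $k_m$) is introduced here for illustration, under the stated assumption that all components are independent with zero mean and unit variance.

```latex
\begin{aligned}
\mathbb{E}[q \cdot k] &= \sum_{m=1}^{d_k} \mathbb{E}[q_m]\,\mathbb{E}[k_m] = 0, \\
\operatorname{Var}(q \cdot k) &= \sum_{m=1}^{d_k} \operatorname{Var}(q_m k_m)
  = \sum_{m=1}^{d_k} \mathbb{E}[q_m^2]\,\mathbb{E}[k_m^2] = d_k, \\
\operatorname{Var}\!\left(\frac{q \cdot k}{\sqrt{d_k}}\right) &= \frac{d_k}{d_k} = 1 .
\end{aligned}
```

The second line uses the fact that the products $q_m k_m$ are independent and have zero mean, so the variance of their sum is the sum of their variances.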

Thirdly, the attention scores $S$ are converted into probability weights $A$ through the Softmax function, applied row-wise so that the weights in each row sum to 1 and are distributed in reasonable proportions. The attention probability weights are

$$A = \mathrm{Softmax}(S) \tag{3}$$

where $A$ has size $n \times n$. The element at position $(i, j)$ of $A$ is the attention weight of the $i$-th position towards the $j$-th position. The higher the weight, the greater the influence of the $j$-th position on the output at the $i$-th position, i.e., more of the information at the $j$-th position of $V$ is incorporated there.

Finally, a weighted sum of the $V$ matrix is computed using the probability weights $A$, yielding the final output $O$ for each position. The output at each position incorporates the $V$ information from all positions. The final output is given by

$$O = AV \tag{4}$$

where $O$ denotes the final output matrix of the self-attention module. Its $i$-th row is the feature of the $i$-th position after integrating information from all positions.
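The four steps can be traced in a short, dependency-free sketch. It uses nested lists as matrices and identity projection weights in the usage example. The function names and the toy input are assumptions made for illustration, not the generator's actual implementation.

```python
import math

def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    # Step 1: linear projections to query, key, and value.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Step 2: scaled similarity scores Q K^T / sqrt(d_k).
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    # Step 3: row-wise softmax turns scores into probability weights.
    weights = [softmax(row) for row in scores]
    # Step 4: weighted sum of the value rows.
    return matmul(weights, v), weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 positions, 2 dimensions
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
```

With identity projections, the first position scores equally against itself and the third position, so those two entries of the first weight row are equal and larger than the entry for the second position.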

On the other hand, we construct the structural loss of features in terms of sparsity patterns and statistical distribution, as shown in Equation (5). The adversarial loss of a traditional Generative Adversarial Network (GAN) focuses only on matching the overall distribution and ignores the fine-grained structure inside the features. The true features are structurally sparse, whereas features trained with the adversarial loss alone may be randomly sparse.

$$L_{struct} = \lambda_{1} L_{sparse} + \lambda_{2} L_{stat} \tag{5}$$

where $\lambda_{1}$ and $\lambda_{2}$ denote the weights of the sparsity-pattern loss $L_{sparse}$ and the statistical-distribution loss $L_{stat}$, respectively.

Firstly, in terms of sparsity pattern, we model which positions of the feature vector should be non-zero and the spatial correlation of the non-zero positions. The Jaccard distance [31] measures the dissimilarity of two sets; it is scale-invariant and insensitive to specific values. We represent each feature vector as a binary mask (1 for non-zero elements and 0 for zero elements) and compute the Jaccard distance between the masks of the generated features and the true features (ranging from 0 to 1), as shown in Equation (6). A Jaccard distance closer to 0 indicates that the sparsity patterns of the two features are more similar, while a distance closer to 1 indicates the opposite.

$$L_{sparse} = 1 - \frac{|M_g \cap M_r|}{|M_g \cup M_r|} \tag{6}$$

where $M_g$ and $M_r$ represent the non-zero masks of the generated features and the true features, respectively.
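As a small illustration of the mask-based Jaccard distance, the sketch below works on plain lists. The helper names and the `eps` threshold for deciding what counts as non-zero are assumptions introduced here. The convention of returning 0 when both masks are empty is also a choice made for this example.

```python
def nonzero_mask(features, eps=0.0):
    """Binary mask: 1 where the feature magnitude exceeds eps, else 0."""
    return [1 if abs(v) > eps else 0 for v in features]

def jaccard_distance(mask_a, mask_b):
    """1 - |intersection| / |union| of the non-zero positions."""
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    union = sum(1 for a, b in zip(mask_a, mask_b) if a or b)
    if union == 0:
        return 0.0  # both masks empty: treat the patterns as identical
    return 1.0 - intersection / union

generated = [0.0, 1.2, 0.0, 0.7]
real = [0.0, 0.9, 0.3, 0.0]
distance = jaccard_distance(nonzero_mask(generated), nonzero_mask(real))
same = jaccard_distance(nonzero_mask(real), nonzero_mask(real))
```

Here the masks share one non-zero position out of three in their union, so the distance is 2/3, while identical masks give a distance of 0. The actual values 1.2 versus 0.9 play no role, which is the insensitivity to specific values mentioned above.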

Secondly, in terms of statistical distribution, we mainly constrain the distribution of the non-zero values of the feature vectors. As shown in Equation (7), the mean of the pointwise differences between the non-zero values of the generated and true features is computed, together with the KL divergence between the generated distribution and the true distribution, so that the non-zero value distribution of the generated features is brought in line with the true distribution.

Here, the two value sets are the non-zero values of the generated and real features, respectively, and the probability distributions of these non-zero values are estimated by Gaussian kernel density estimation. KL denotes the Kullback–Leibler divergence, which measures the difference between distributions, and its contribution is weighted by a coefficient. The sparsity pattern addresses the question of which values are non-zero, while the statistical distribution addresses the question of what values should reside in the non-zero positions. Together, these two components form the structural loss, which drives the generated features to be more aligned with the true features.
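The KL term can be sketched as follows, covering only that part of the statistical loss. The fixed bandwidth, the evaluation grid, the discretised KL, and all function names are assumptions made for this example; the paper's Equation (7) may differ in these details and in the direction of the divergence.

```python
import math

def nonzero_values(features):
    """Keep only the non-zero entries of a feature vector."""
    return [v for v in features if v != 0.0]

def gaussian_kde(samples, bandwidth=0.2):
    """Return a density function estimated with Gaussian kernels."""
    norm = len(samples) * bandwidth * math.sqrt(2.0 * math.pi)
    def density(x):
        return sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples) / norm
    return density

def kl_divergence(p, q, grid, eps=1e-12):
    """Discretised KL(p || q) on a shared grid, after renormalising both."""
    p_vals = [p(x) + eps for x in grid]
    q_vals = [q(x) + eps for x in grid]
    p_sum, q_sum = sum(p_vals), sum(q_vals)
    return sum((a / p_sum) * math.log((a / p_sum) / (b / q_sum))
               for a, b in zip(p_vals, q_vals))

generated = nonzero_values([0.0, 0.9, 0.0, 1.4, 0.2])
real = nonzero_values([0.0, 1.0, 0.3, 0.0, 1.2])
grid = [i * 0.05 for i in range(-20, 61)]  # covers -1.0 to 3.0
kl_gen_real = kl_divergence(gaussian_kde(generated), gaussian_kde(real), grid)
kl_same = kl_divergence(gaussian_kde(real), gaussian_kde(real), grid)
```

The divergence is zero when the two estimated densities coincide and positive otherwise, so minimising it pulls the generated non-zero values towards the real value distribution.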

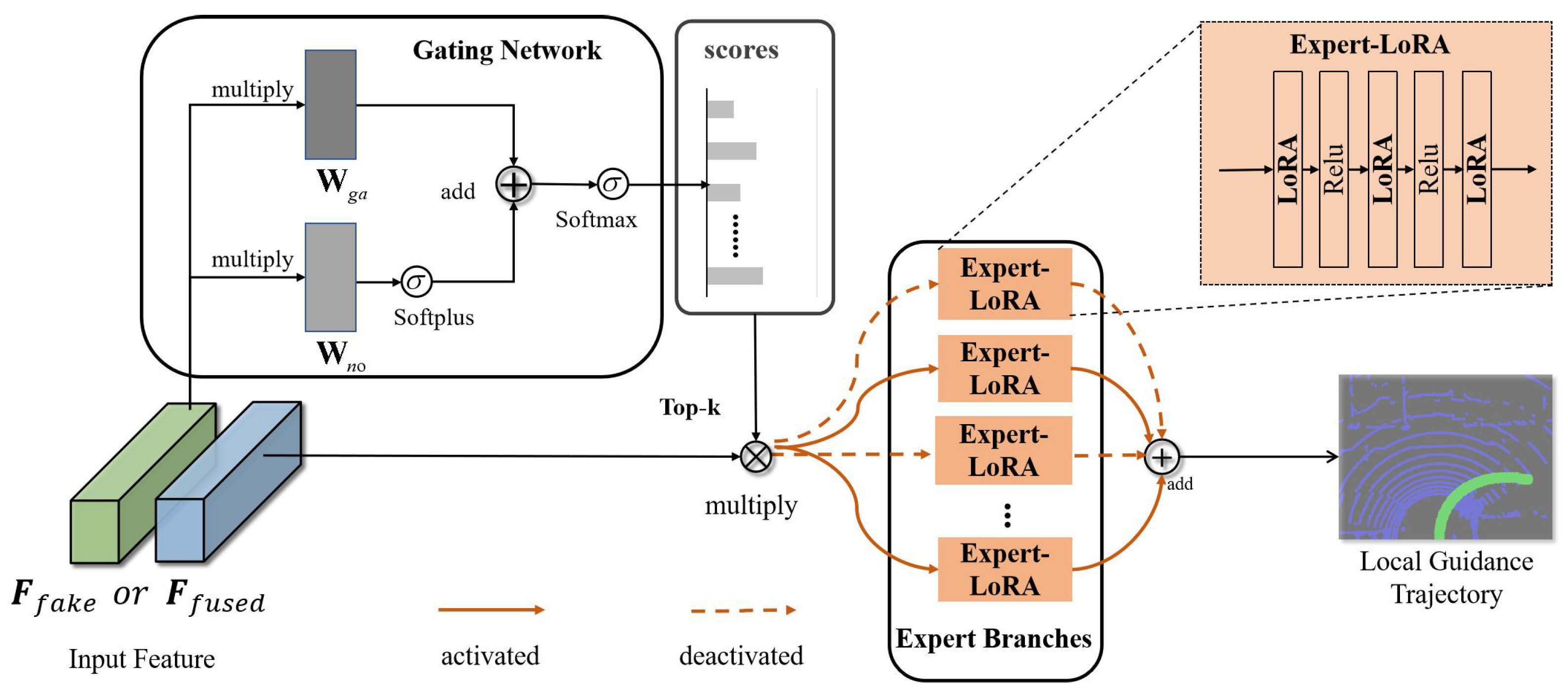

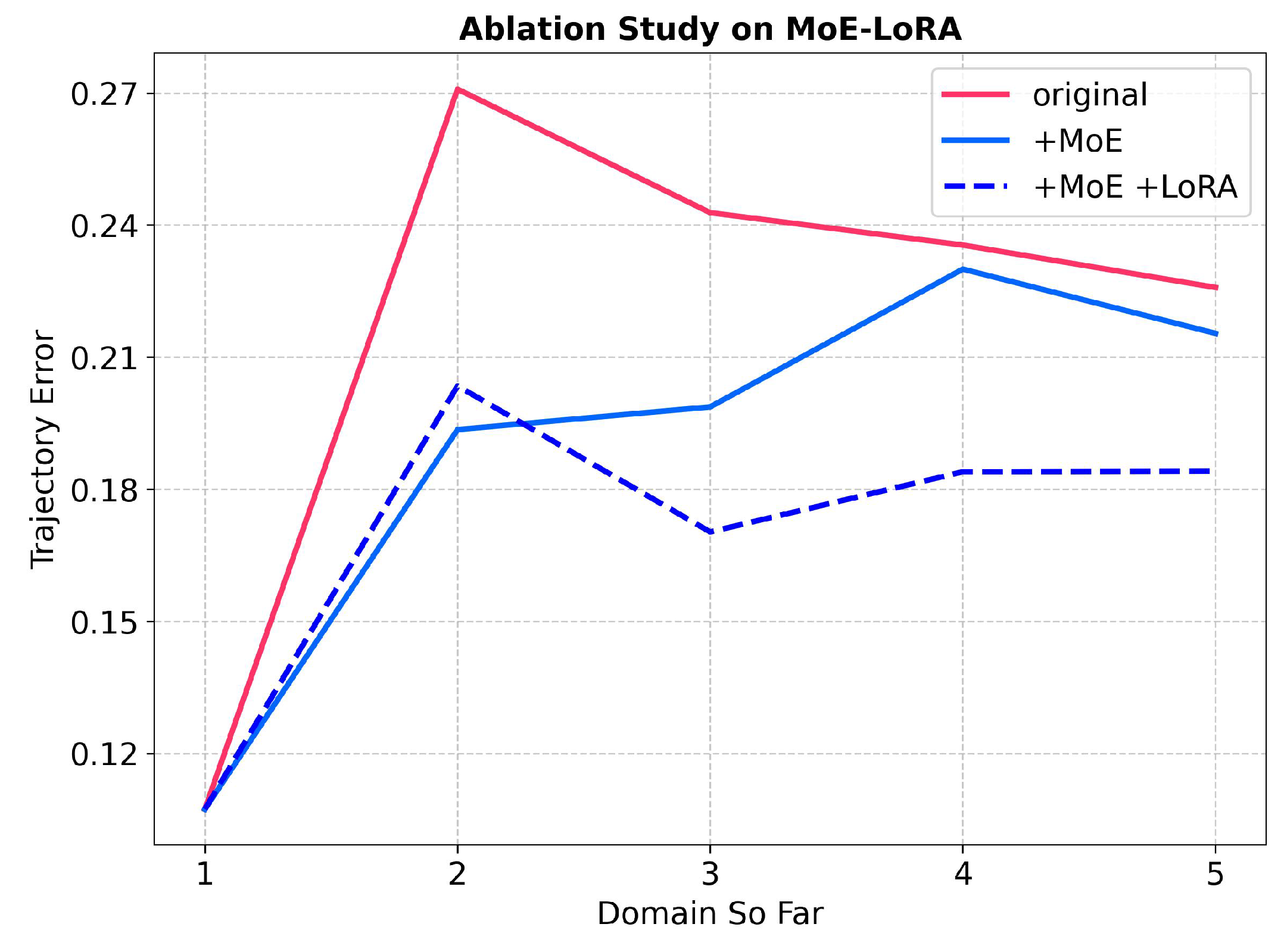

3.3. Dynamic Knowledge Expansion Model Based on MoE-LoRA

MoE [23] is a dynamic neural network architecture that consists of a feature extractor, a gating structure, and expert branches, as shown in Figure 4. The knowledge capacity of the network can be increased by adding expert branches, while the gating structure adaptively activates only a subset of them to reduce the amount of computation. LoRA [32] performs task-specific fine-tuning by learning a low-rank weight update expressed as the product of two smaller matrices. When training a new task, we add LoRA layers to the expert branches of MoE to form the MoE-LoRA structure, which increases the knowledge capacity of the model and improves its continual learning performance.

Let $G(x)$ and $E_i(x)$ denote the output of the gating network and the output of the $i$-th expert branch, respectively. Given the input feature $x$, the output $y$ of MoE can be expressed as follows:

$$y = \sum_{i=1}^{N} G(x)_i \, E_i(x) \tag{8}$$

where $N$ denotes the number of expert model branches. Whenever $G(x)_i = 0$, $E_i(x)$ will not be computed. Leveraging the sparsity of $G(x)$, the computational cost is significantly reduced. The formulas for the input feature, the gating output $G(x)$, and the expert output $E_i(x)$ are provided in Equations (9), (10) and (11), respectively.
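The saving from sparse gating can be made concrete with a minimal sketch in which experts are ordinary callables. The toy experts, the `calls` log, and the function names are hypothetical and only serve to show that a zero gate skips the corresponding expert entirely.

```python
def moe_output(x, gate_weights, experts):
    """Gate-weighted sum of expert outputs; experts with a zero gate are skipped."""
    output = None
    for gate, expert in zip(gate_weights, experts):
        if gate == 0.0:
            continue  # this expert branch is never evaluated
        expert_out = expert(x)
        if output is None:
            output = [0.0] * len(expert_out)
        output = [acc + gate * val for acc, val in zip(output, expert_out)]
    return output

calls = []  # records which experts were actually evaluated

def make_expert(name, factor):
    def expert(x):
        calls.append(name)
        return [factor * v for v in x]
    return expert

experts = [make_expert("e0", 1.0), make_expert("e1", 10.0), make_expert("e2", 2.0)]
y = moe_output([1.0, 2.0], [0.25, 0.0, 0.75], experts)
```

After the call, `calls` contains only `e0` and `e2`, and `y` is the weighted combination of those two outputs.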

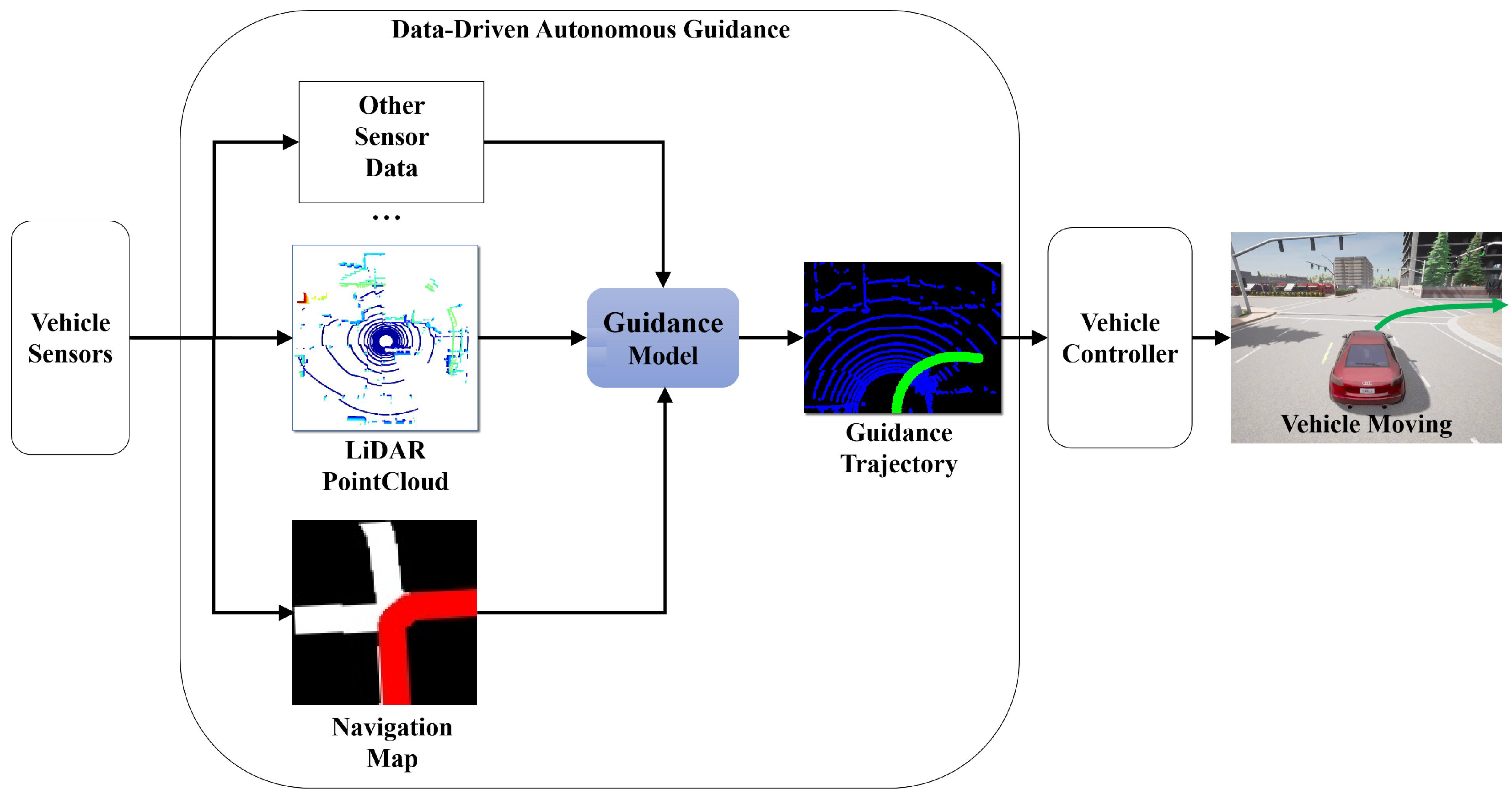

3.3.1. Feature Extraction Structure

In our previous work [5], we validated the effectiveness of this feature extractor structure for the data-driven autonomous guidance problem, so this study continues to adopt it. Below, we briefly introduce the main components and workflow of the Feature Extraction Structure; its specific design details and calculation formulas are not reiterated.

Figure 3b illustrates the workflow of the Feature Extraction Structure of the guidance model. Point clouds and navigation maps are obtained from LiDAR, GNSS&INS, and other sensors and serve as the input samples of the feature extractor. The point cloud is described by the total number of points within a single frame and the per-point dimension, which contains the point coordinates and reflectivity; the navigation map is described by its dimension, width, and height. After feature extraction by PillarNet, the Navigation Network, the Convolutional Attention Network, and the Backbone, the output feature is obtained.

Within the Feature Extraction Structure, a convolutional neural network is primarily utilized to extract features from both the LiDAR point cloud and the navigation map. Subsequently, the extracted features from these two sources are concatenated, and the channel and spatial attention modules are integrated to facilitate adaptive feature fusion. After applying a deep convolutional neural network to the fused features, high-dimensional features are generated.

Given the input LiDAR point cloud and the input navigation map described above, the output feature of the Feature Extraction Structure (Equation (9)) is calculated by applying, in order, the pointpillar network and the navigation network to their respective inputs, the convolutional attention network to the concatenated features, and the backbone network to the fused result.

3.3.2. Gating Network

The gating network is a neural network that takes a single data element as input and outputs a weight for each expert. Each weight indicates the extent to which the corresponding expert contributes to the processing of the input data. The top-$k$ experts are typically selected by modeling the probability distribution via softmax.

The gating network consists of two main components: sparsity, implemented by the activation module, and noise, implemented by the noise module. It is parameterized by gating weights and noise weights. Two further quantities enter the formulation: the number of expert model branches and the dimension of the feature map from the backbone network in Equation (9). The calculation of the gating network is formulated in Equation (10), which involves the softplus activation function, the softmax activation function, a noise regularization term, the noise module, and the activation module.
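One common way to combine these ingredients is noisy top-k gating, sketched below. This is an assumption about the general form, not a reproduction of Equation (10): the names `w_gate`, `w_noise`, `k`, and `rng` are introduced here, and the noise is taken to be standard normal noise scaled by a softplus of a learned projection.

```python
import math
import random

def softplus(v):
    return math.log1p(math.exp(v))

def noisy_top_k_gating(x, w_gate, w_noise, k, rng=None):
    """Return one gate weight per expert; all but the top-k are exactly zero."""
    n_experts = len(w_gate[0])
    dims = range(len(x))
    logits = [sum(x[d] * w_gate[d][i] for d in dims) for i in range(n_experts)]
    if rng is not None:  # noise module (assumed form), used during training
        for i in range(n_experts):
            scale = softplus(sum(x[d] * w_noise[d][i] for d in dims))
            logits[i] += rng.gauss(0.0, 1.0) * scale
    # Activation module (assumed form): keep the top-k logits, softmax over them.
    top = sorted(range(n_experts), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - peak) for i in top}
    total = sum(exps.values())
    return [exps[i] / total if i in exps else 0.0 for i in range(n_experts)]

x = [1.0, 2.0]
w_gate = [[1.0, 0.0, -1.0, 0.5], [0.0, 1.0, 0.0, 0.5]]  # feature dim x experts
w_noise = [[0.0] * 4, [0.0] * 4]
gates = noisy_top_k_gating(x, w_gate, w_noise, k=2)
noisy_gates = noisy_top_k_gating(x, w_gate, w_noise, k=2, rng=random.Random(0))
```

Without noise, the clean logits are 1.0, 2.0, -1.0, and 1.5, so only the second and fourth experts receive non-zero weights. With noise, the selected experts may change between calls, which is what spreads the load across experts during training.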

3.3.3. Expert Branches with LoRA

Each expert is a multilayer perceptron (MLP) with LoRA. Each MLP consists of three fully connected layers with LoRA and a ReLU activation function; that is, a LoRA structure is added to every fully connected layer of the expert branch. The output of the $j$-th fully connected layer of the $i$-th expert branch is given in Equation (11), which is expressed in terms of the original weights of the $j$-th fully connected layer of the $i$-th expert branch, the LoRA layer matrices of the $i$-th expert, and the number of fully connected layers.
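A single fully connected layer with a LoRA update can be sketched as follows. The sketch assumes the standard LoRA form, in which a frozen weight matrix is augmented by the product of two low-rank matrices; the names `w0`, `a`, `b`, and `scale`, the omission of a bias, and the placement of ReLU are assumptions for illustration rather than the exact form of Equation (11).

```python
def matvec(matrix, vector):
    """Matrix-vector product for a nested-list matrix."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def relu(vector):
    return [max(0.0, v) for v in vector]

def lora_linear(x, w0, a, b, scale=1.0):
    """Frozen layer w0 plus a trainable low-rank update b @ a (rank = len(a))."""
    base = matvec(w0, x)                # original weights, kept fixed
    low_rank = matvec(b, matvec(a, x))  # down-project with a, up-project with b
    return [p + scale * q for p, q in zip(base, low_rank)]

w0 = [[1.0, 0.0], [0.0, 1.0]]  # original 2x2 weights
a = [[1.0, 1.0]]               # rank-1 down-projection (1x2)
b = [[0.5], [0.0]]             # rank-1 up-projection (2x1)
x = [2.0, 3.0]

h = relu(lora_linear(x, w0, a, b))
h_untrained = lora_linear(x, w0, a, [[0.0], [0.0]])
```

With `b` set to zero the layer reproduces the original output, so adding LoRA to an expert does not disturb its behavior before task-specific training begins. Only `a` and `b` would be trained for a new task, which keeps the number of added parameters small.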