A Workflow for Urban Heritage Digitization: From UAV Photogrammetry to Immersive VR Interaction with Multi-Layer Evaluation

Abstract

Highlights

- An end-to-end workflow integrates UAV photogrammetry, LiDAR, and VR for urban heritage digitization.

- A three-layer evaluation (seeing, acting, understanding) reveals focused visual attention, edge-anchored movement, and clearer cultural understanding.

- The model completeness enabled by UAV capture improves both geometric fidelity and the user experience in VR.

- The workflow is affordable and transferable, supporting under-resourced heritage sites.


1. Introduction

1.1. UAVs as an Enabler for Urban-Heritage Digitization

1.2. Digital Interaction for Heritage: From Maps to Immersive Technology

1.3. Research Gaps and Research Questions

2. Case Study

3. Materials and Methods

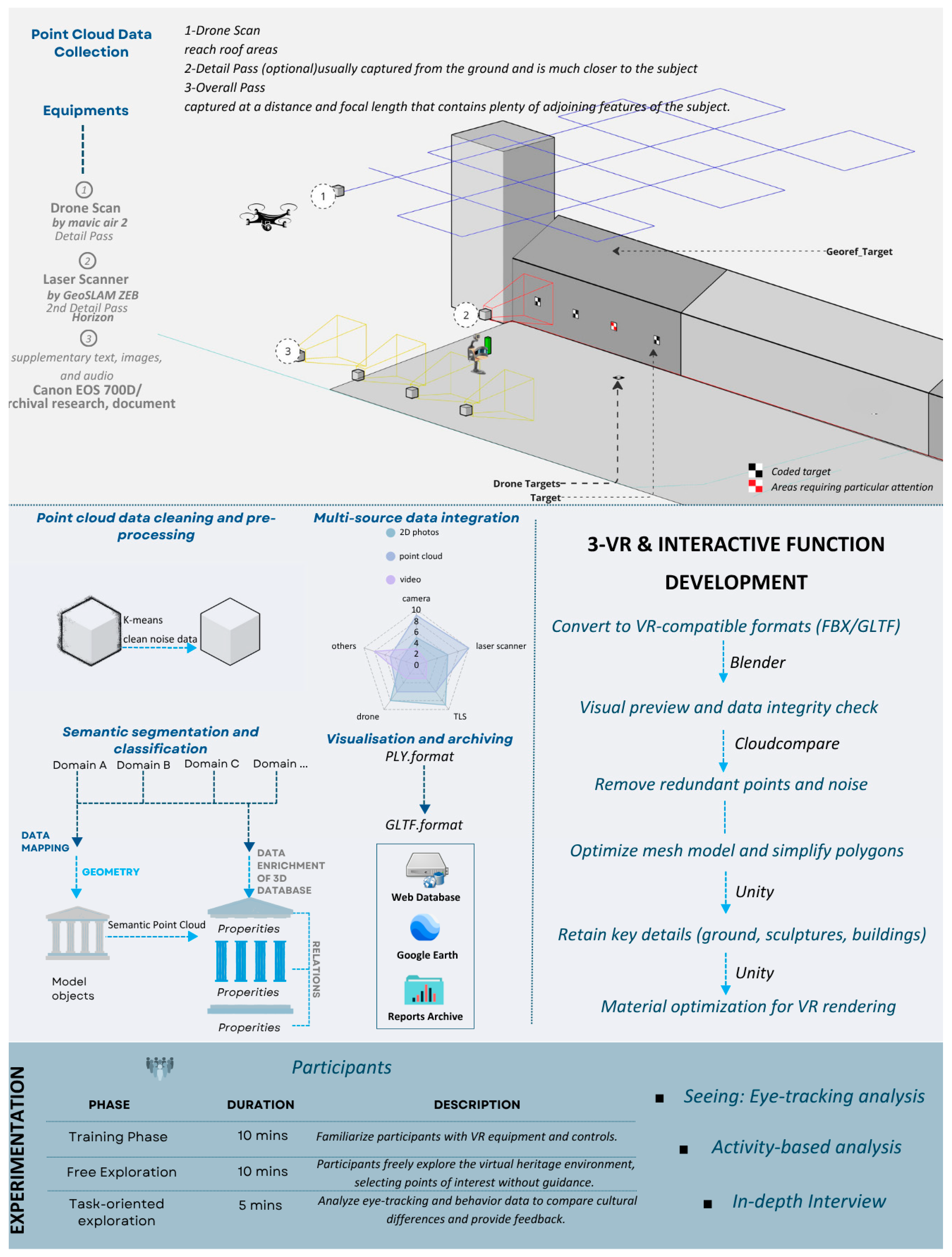

3.1. Data Collection

3.2. Development of the Immersive Virtual Environment

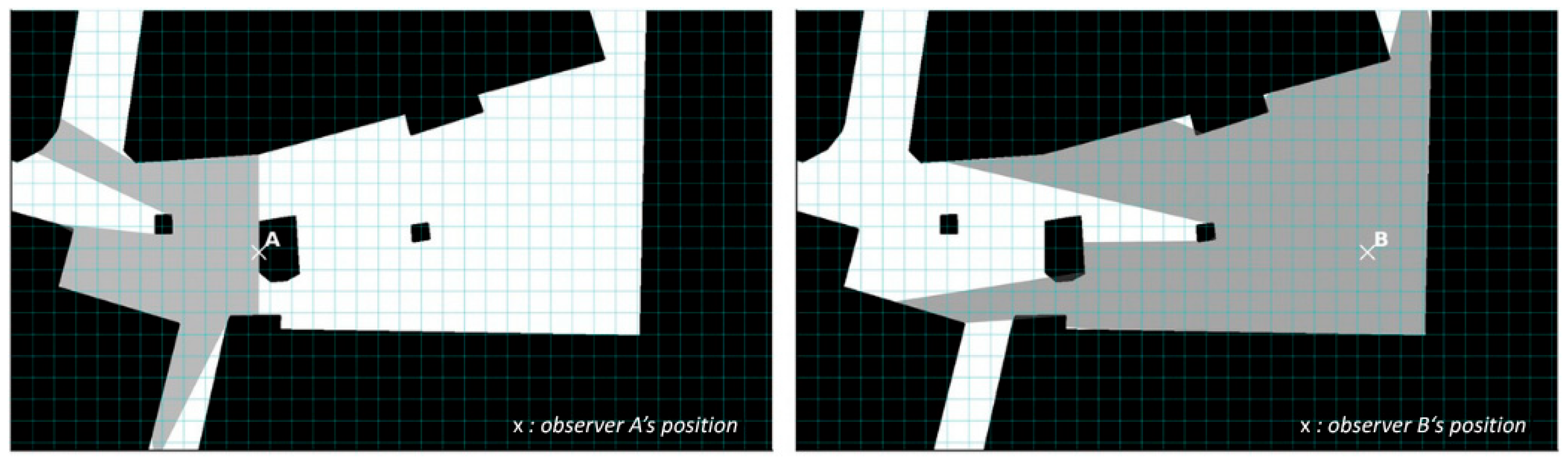

3.3. Spatial Analysis for the Virtual Environment

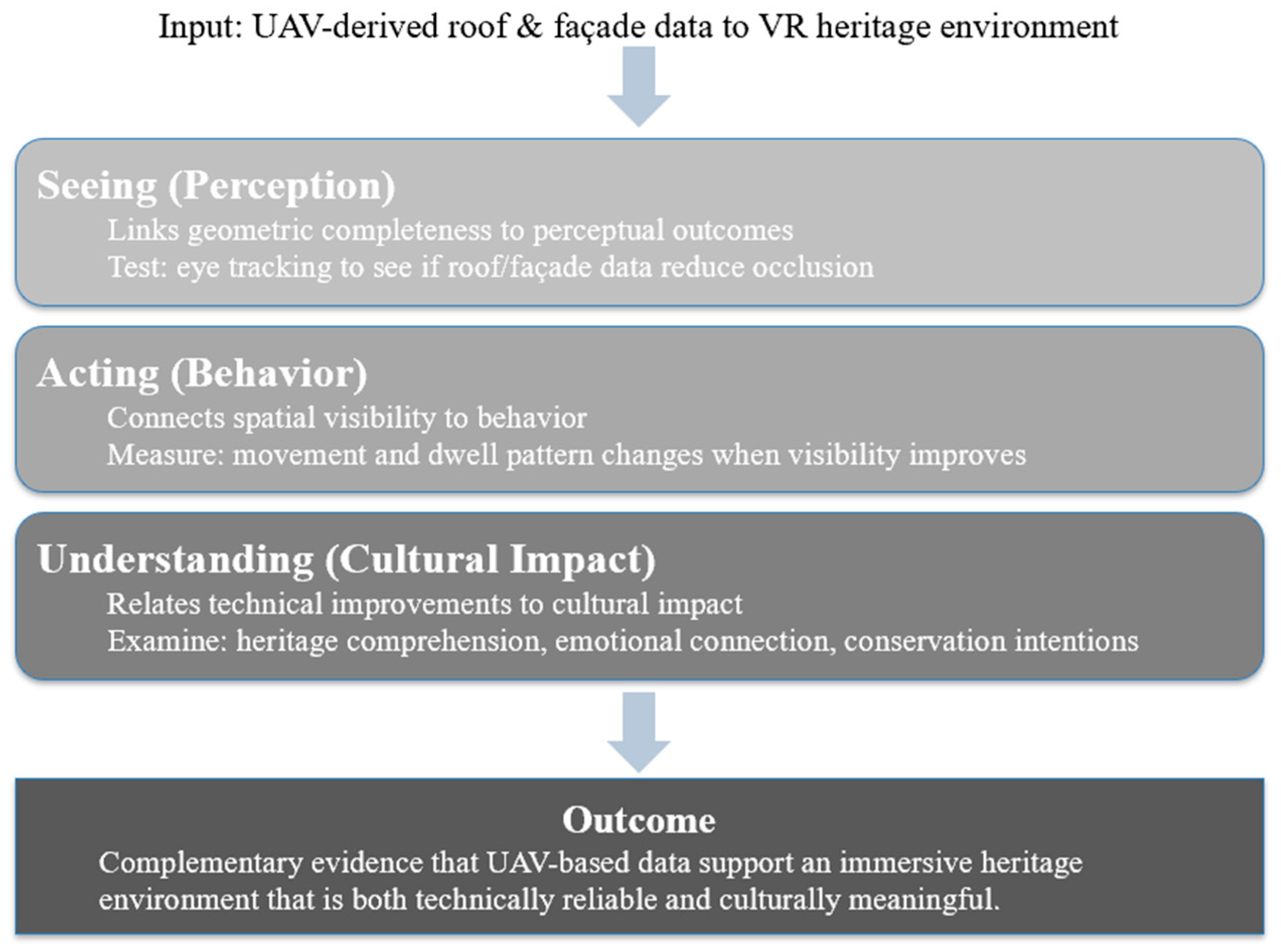

3.4. Evaluation Methods

3.4.1. VR-Based Experiment Process

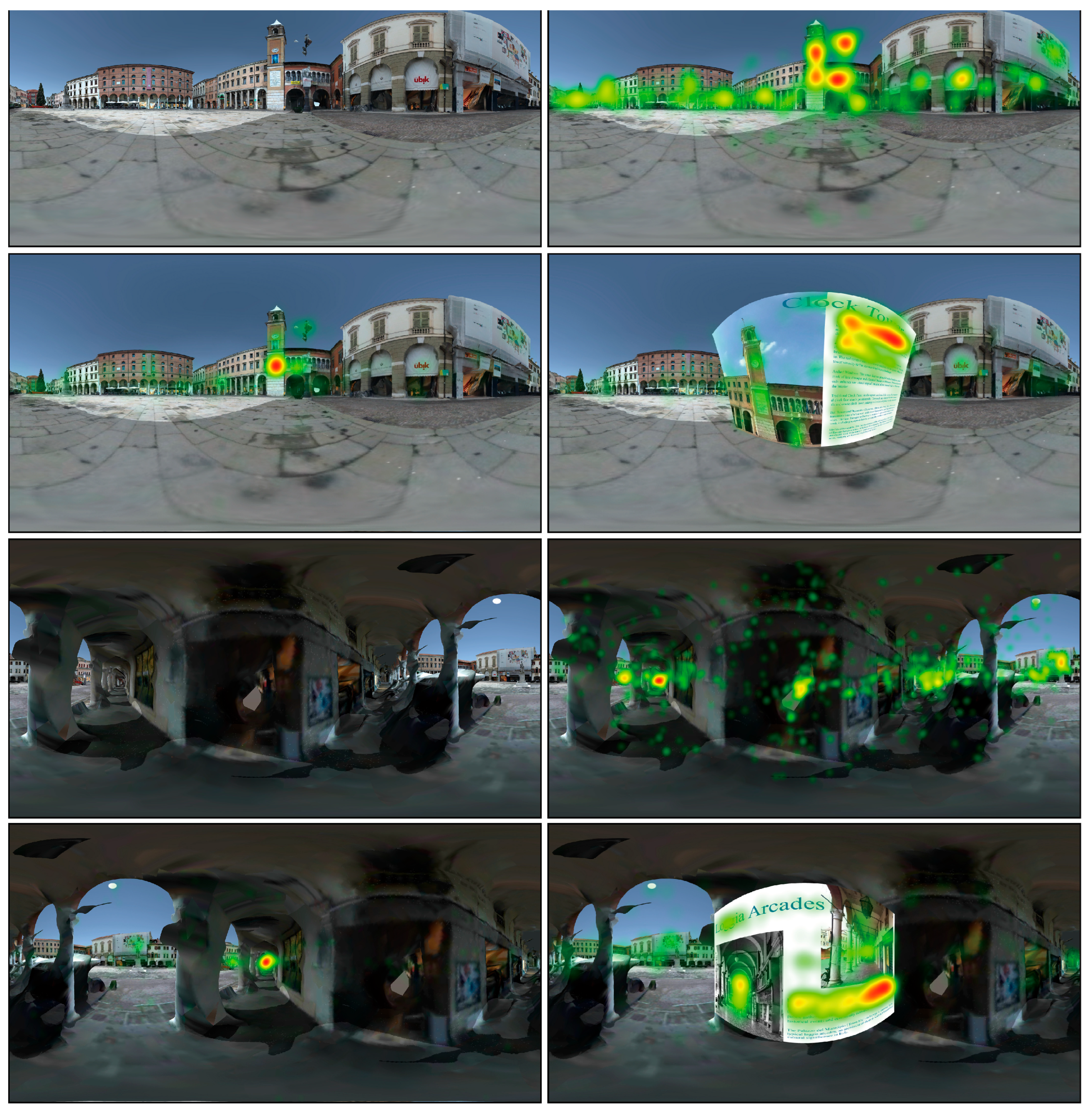

3.4.2. Seeing: Eye-Tracking Analysis

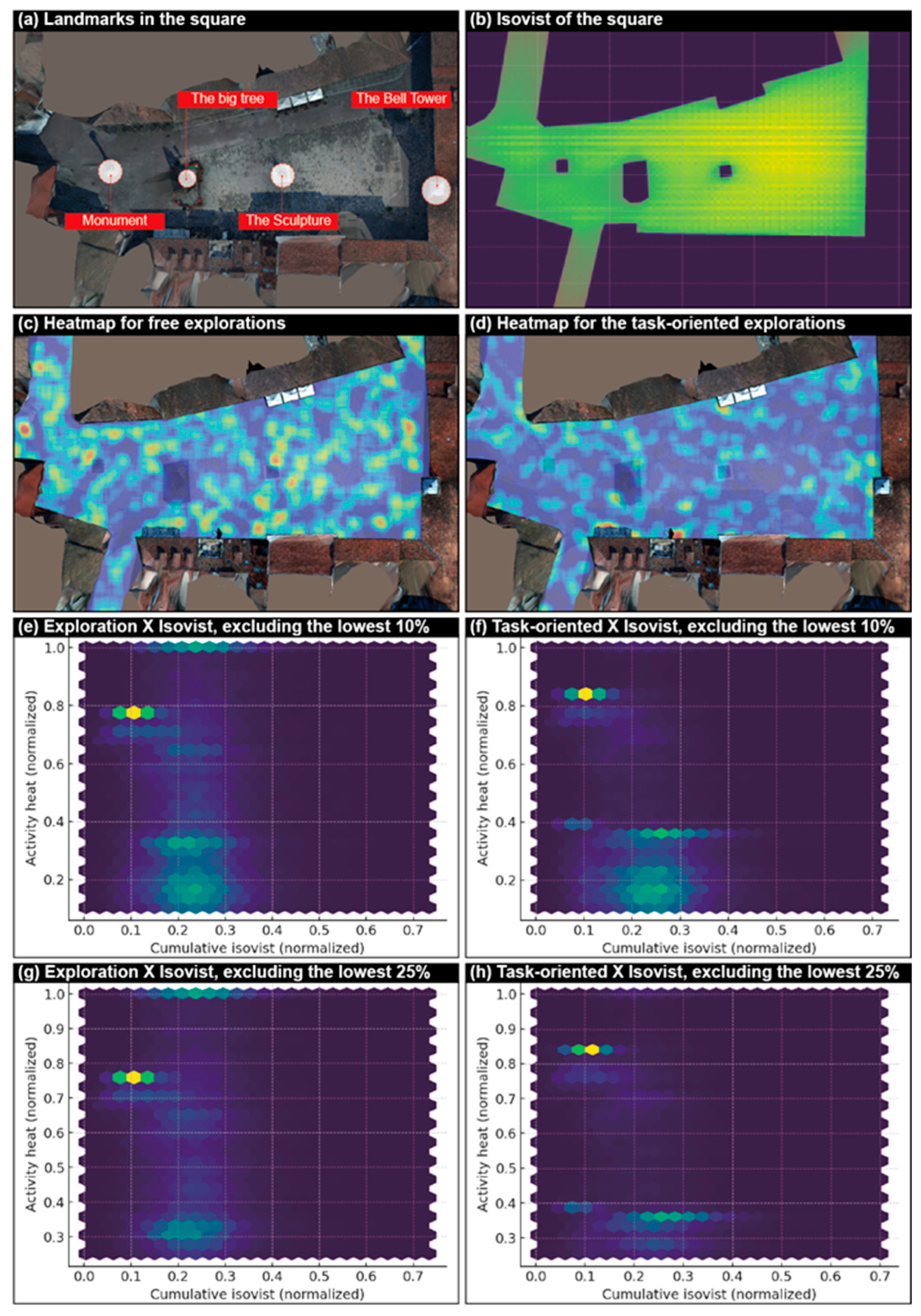

3.4.3. Acting: Activity-Based Analysis

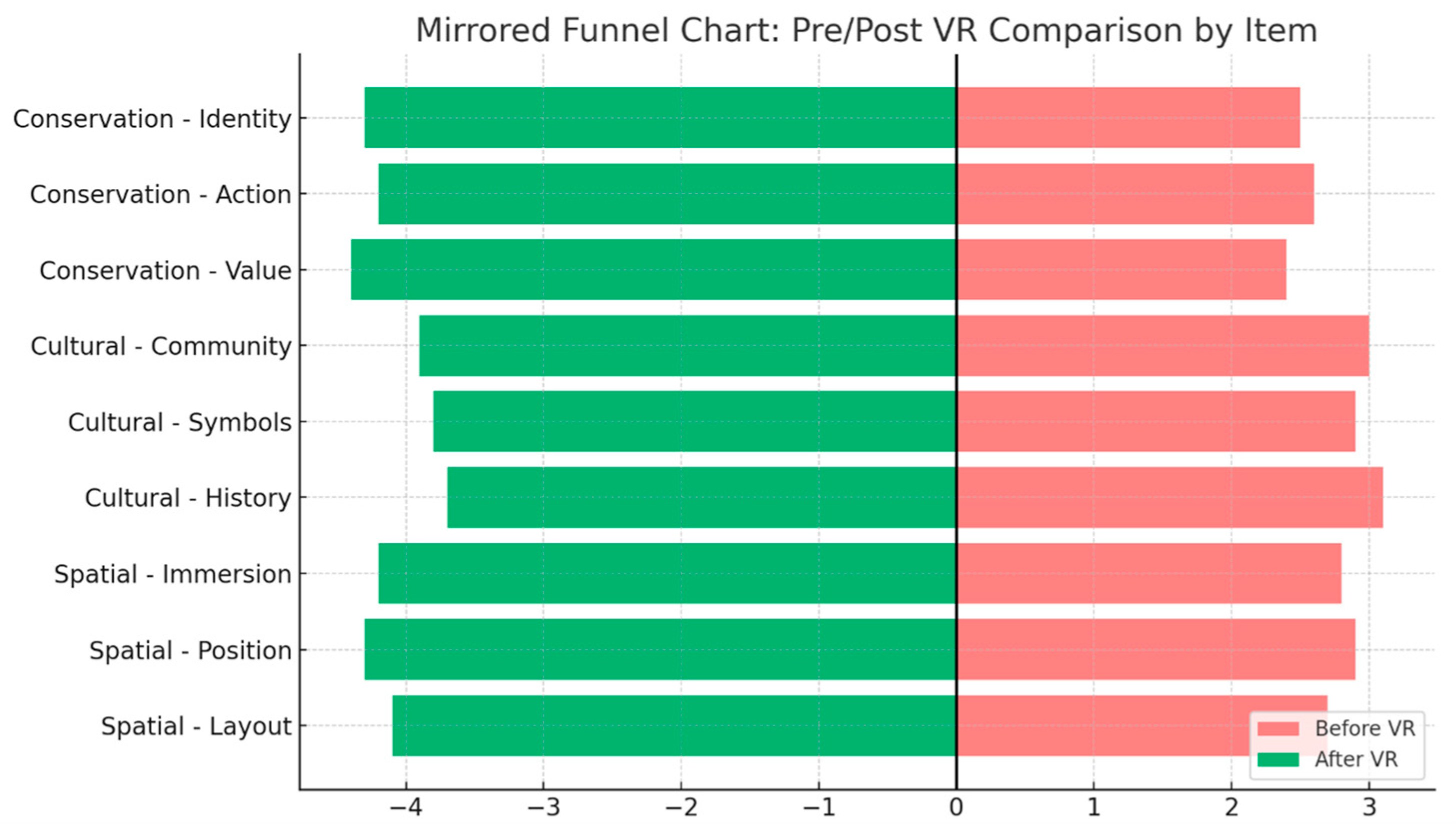

3.4.4. Understanding: Feedback Interview

3.4.5. Comprehensive Evaluation

4. Results

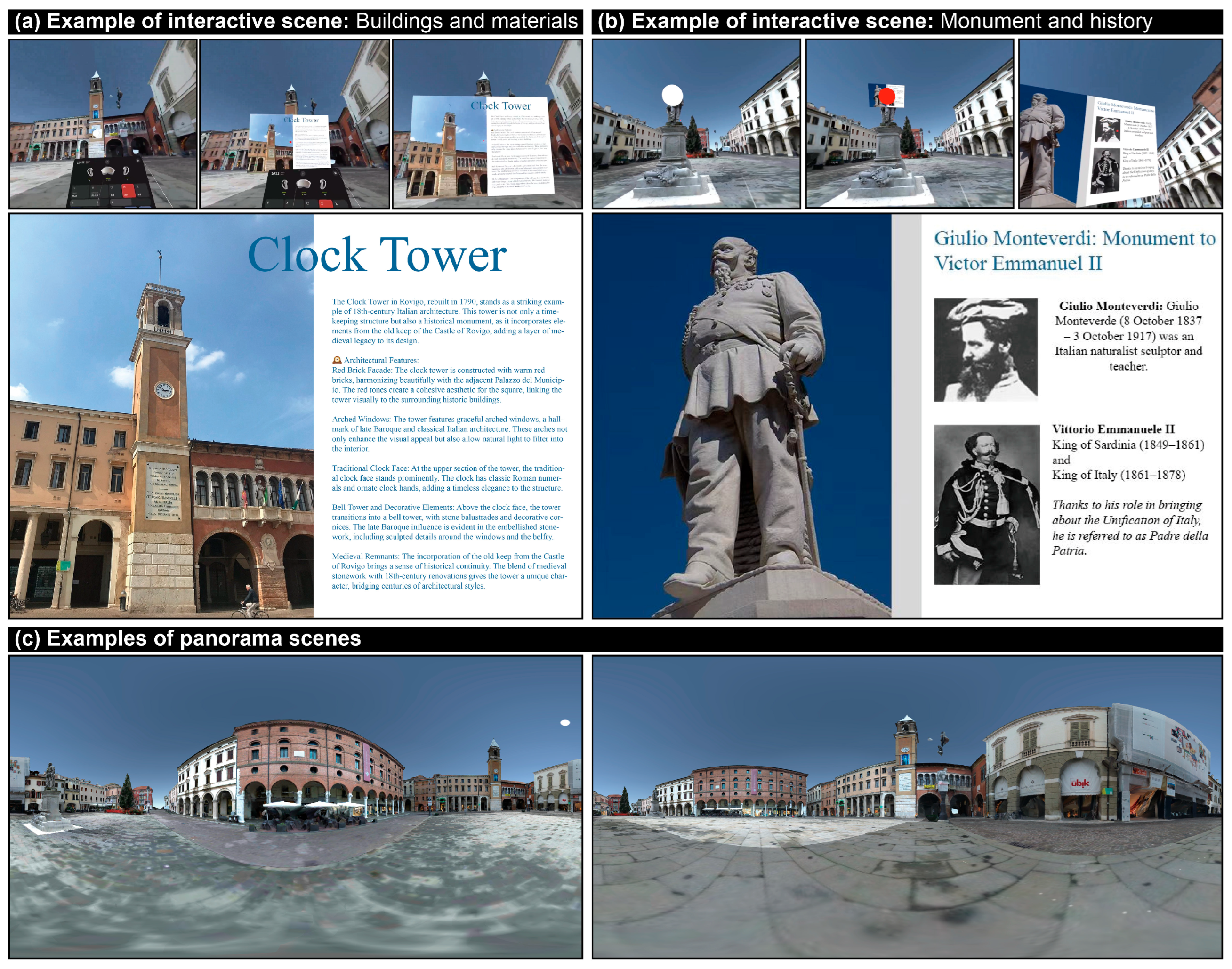

4.1. Results of Virtual Environment Construction

4.1.1. Model Representation and Environmental Effects

4.1.2. Interactive Design

4.2. Seeing: Visual Attention in the Virtual Environment

4.3. Acting: Exploration and Task-Oriented Behaviors

4.4. Understanding: Enhancement of Heritage Cognition and Protection Intentions

4.5. Results of Comprehensive Evaluation

5. Discussion

5.1. The Advantages of Using UAVs

5.2. Significance and Extensibility of the Pipeline

5.3. Three-Layer Evaluation and Differences from Real-World Behavior

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Area/Scope | Tool Used | Approx. Resolution | Acquisition Duration | Comments |
|---|---|---|---|---|
| Roof and surrounding environment | DJI Mini 3 UAV | Medium (≈3–6 cm) | 30–45 min | Effective for aerial views and roof details |
| Entire area, streets, and facades | GeoSLAM ZEB Horizon RT (3D laser scanner) | Medium–high (≈1–3 cm) | 45–60 min | Ideal for large-scale mapping and street views |
| Sculptural details and features | Canon EOS 700D / archival research | High (≈0.5–1.5 mm) | 1–2 h | Used for intricate details and texture accuracy |
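The table implies a short field campaign. As a rough sketch, and assuming (the source does not say) that the three acquisitions were run one after another rather than in parallel, summing the per-tool ranges gives the total on-site time. The dictionary keys and the `total_range` helper are illustrative names, not part of the study's workflow; note that the Canon row also covers archival research, so its time is not purely photographic.

```python
# Approximate acquisition durations from the data-collection table, in minutes.
# Assumption (not stated in the source): the campaigns run sequentially,
# so the overall range is the sum of the per-tool ranges.
DURATIONS_MIN: dict[str, tuple[int, int]] = {
    "DJI Mini 3 UAV": (30, 45),
    "GeoSLAM ZEB Horizon RT": (45, 60),
    "Canon EOS 700D / archival research": (60, 120),
}


def total_range(durations: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum lower and upper bounds separately to get the overall (min, max)."""
    low = sum(lo for lo, _ in durations.values())
    high = sum(hi for _, hi in durations.values())
    return low, high


if __name__ == "__main__":
    low, high = total_range(DURATIONS_MIN)
    print(f"{low}-{high} min ({low / 60:.2f}-{high / 60:.2f} h)")
```

Under that sequential assumption the campaign totals 135–225 min, roughly 2.25–3.75 h; parallel capture would shorten it.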

| Variable | Category | Total (n) | Total (%) |
|---|---|---|---|
| Age | 18–25 | 2 | 4.9 |
| | 26–35 | 24 | 58.5 |
| | 36–45 | 12 | 29.3 |
| | 46–55 | 3 | 7.3 |
| | 56+ | 0 | 0.0 |
| Gender | Male | 20 | 48.8 |
| | Female | 21 | 51.2 |
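The percentages in the participant table can be recomputed from the counts as a consistency check. A minimal Python sketch, assuming the counts shown are complete for each variable; `AGE_COUNTS`, `GENDER_COUNTS`, and `percentages` are hypothetical names introduced here for illustration.

```python
# Participant counts copied from the demographics table.
AGE_COUNTS: dict[str, int] = {
    "18–25": 2,
    "26–35": 24,
    "36–45": 12,
    "46–55": 3,
    "56+": 0,
}
GENDER_COUNTS: dict[str, int] = {"Male": 20, "Female": 21}


def percentages(counts: dict[str, int]) -> dict[str, float]:
    """Return each category's share of the total in percent, rounded to 0.1."""
    total = sum(counts.values())
    return {category: round(100 * n / total, 1) for category, n in counts.items()}


if __name__ == "__main__":
    for name, counts in (("Age", AGE_COUNTS), ("Gender", GENDER_COUNTS)):
        print(name, sum(counts.values()), percentages(counts))
```

Both variables sum to n = 41, and the recomputed shares match the table to one decimal place.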

| Theme | Key Findings | Example Quotes |
|---|---|---|
| Knowledge Enhancement | Participants gained deeper knowledge of materials, craftsmanship, and local traditions. | “I never knew about these traditional materials before, but now I understand why they are important.” |
| Emotional Connection | VR created a stronger emotional link to cultural heritage, making history feel ‘alive’ rather than distant. | “I felt like I was actually in the past, experiencing the site rather than just reading about it.” |
| Comparison with Traditional Media | VR was described as more immersive and engaging than text, images, or videos. | “Books tell you about history, but VR lets you feel it.” |
| Increased Motivation for Heritage Preservation | Participants felt more inclined to protect and promote cultural heritage after experiencing it in VR. | “I want to visit the real place now and learn more.” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Lin, G.; Peng, Y.; Yu, Y. A Workflow for Urban Heritage Digitization: From UAV Photogrammetry to Immersive VR Interaction with Multi-Layer Evaluation. Drones 2025, 9, 716. https://doi.org/10.3390/drones9100716
