Design of a Reinforcement Learning-Based Speed Compensator for Unmanned Aerial Vehicle in Complex Environments

Abstract

1. Introduction

2. UAV Modelling and Landing Environment Modelling

3. Reinforcement Learning-Based Design of Rotational Speed Compensator

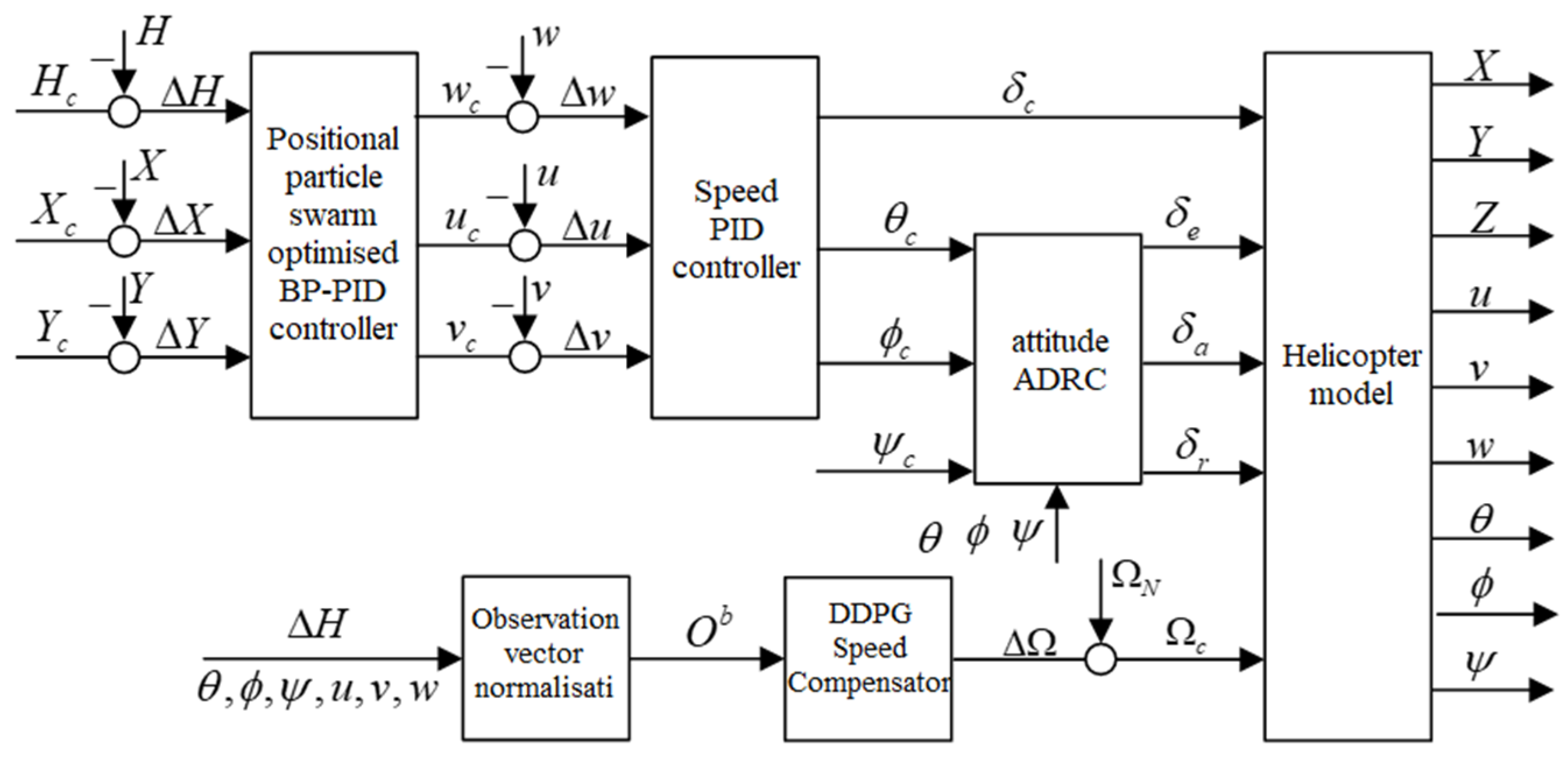

3.1. Design of Landing Control Structure at Variable Speed

3.2. Intensive Study of Relevant Theories

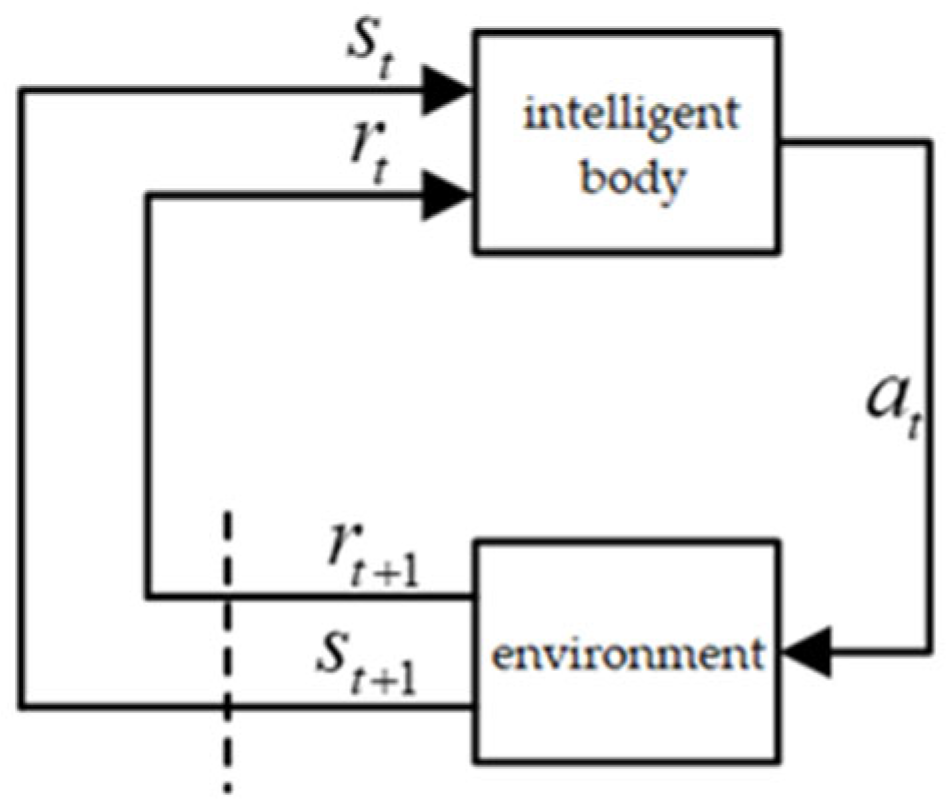

3.2.1. Concepts of Reinforcement Learning

3.2.2. Components of Reinforcement Learning

- Reward. Reward is an important measure of how effective an agent's actions are in a given environment. Reinforcement learning assumes that any decision-making problem can be framed as maximising cumulative reward: through interaction with the environment, the agent must learn which actions yield the greatest long-term reward. The agent learns by trial and error from the reward signals returned by the environment and gradually improves its decisions. Rewards therefore play a key role in reinforcement learning, since they provide the feedback on which the agent's behaviour and learning are based.

- Agent and environment. These are the two core concepts of reinforcement learning, and their interaction forms its basic framework. At each time step $t$, the agent first observes the environment state $S_t$, which is its perception of the environment and carries information about it. Based on this state, the agent selects an action $A_t$, which is its response to the environment and changes the environment's state. After the action is executed, the environment returns a reward signal $R_{t+1}$ that evaluates the action. This interaction is continuous: the agent acts on what it observes and adjusts its behaviour according to the environment's feedback, aiming to maximise the cumulative reward over the long run. This is the core idea of reinforcement learning: the agent learns optimal behaviour by interacting with the environment.

- State. States exist at three levels: the environment state, the agent state and the information state. The environment state is a concrete description of the environment in which the agent operates, including the data to be observed at the next moment and the data used to generate the reward signal. The agent does not always have full access to the environment state, and the environment state may also contain information that is redundant for the task. The agent state describes the agent's internal structure and parameters, including the data used to determine future actions, such as network parameters and policy information. The information state, also known as the Markov state, contains all useful information from the history; it summarises the experience accumulated from past learning and decision making and is therefore an important basis for future decisions. In short, the state is an essential component of a reinforcement learning system: it drives behaviour and decision making and underlies the evaluation of learning performance.

- Policy. A policy is the rule by which an agent chooses an action in a given state, and it determines how the agent behaves. A policy is usually represented as a mapping from states to actions and can take the form of a simple rule, a function or a complex algorithm. Policies are classified as deterministic or stochastic, depending on whether they contain randomness. A deterministic policy always outputs the same action in a given state, whereas a stochastic policy samples actions from a probability distribution. A stochastic policy helps the agent maintain diversity when exploring unknown environments and avoid converging to a local optimum.

- Reward function. The reward function generates the reward signal from the state of the environment (and the action taken), reflecting the effect of the agent's action and the degree to which the goal has been achieved. It is the main reference when the policy is adjusted. The reward signal measures how good the chosen action is and is usually a scalar, with larger values indicating greater reward. For training stability, the reward signal is typically normalised to the range [−1, 1]. Reinforcement learning maximises the cumulative reward by responding to the reward signal, so the agent must continuously adjust its policy according to the rewards it receives in real time. A reward function that is reasonable, objective and suited to the current task therefore provides an effective reference and guides the agent towards the optimal solution.

- Value function. Unlike the reward function, which directly evaluates the new state reached after an action, the value function evaluates the current policy from a long-term perspective; for this reason it is also called the evaluation function. The value of a state $s$ is the expected cumulative reward the agent receives when it starts in $s$ and then performs a series of actions according to its policy, denoted $V_\pi(s)$.

- Environment model. The environment model is an optional component of reinforcement learning that describes how the environment behaves. It consists of two parts: the reward function and the state transition function. The reward function determines how good or bad it is to take a given action in a given state, while the state transition function determines the next state from the current state and action.

- Markov decision process. In reinforcement learning, the Markov decision process (MDP) is the mathematical model that describes the interaction between the agent and the environment. An MDP assumes that the environment has the Markov property: the next state depends only on the current state and not on earlier states. Equivalently, generating the next state from the full history gives the same result as generating it from the current state alone, as expressed in Equation (4), where conditioning on the whole history can be replaced by conditioning on the current state only. This assumption simplifies the problem and allows reinforcement learning algorithms to solve it more efficiently.
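For reference, the standard textbook forms of the Markov property, the discounted return and the value functions are written out below. The notation ($S_t$, $A_t$, $R_{t+1}$, discount factor $\gamma \in [0, 1)$) is the common convention and is an assumption here; the symbols used in the paper's Equation (4) may differ.

$$
P\left(S_{t+1} \mid S_t\right) = P\left(S_{t+1} \mid S_1, S_2, \ldots, S_t\right)
$$

$$
G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}
$$

$$
V_\pi(s) = \mathbb{E}_\pi\left[G_t \mid S_t = s\right], \qquad
Q_\pi(s, a) = \mathbb{E}_\pi\left[G_t \mid S_t = s, A_t = a\right]
$$

The first line is the Markov property; the last line gives the state value used in the value-function definition and the action value (Q-value) that the Critic networks in DDPG approximate.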

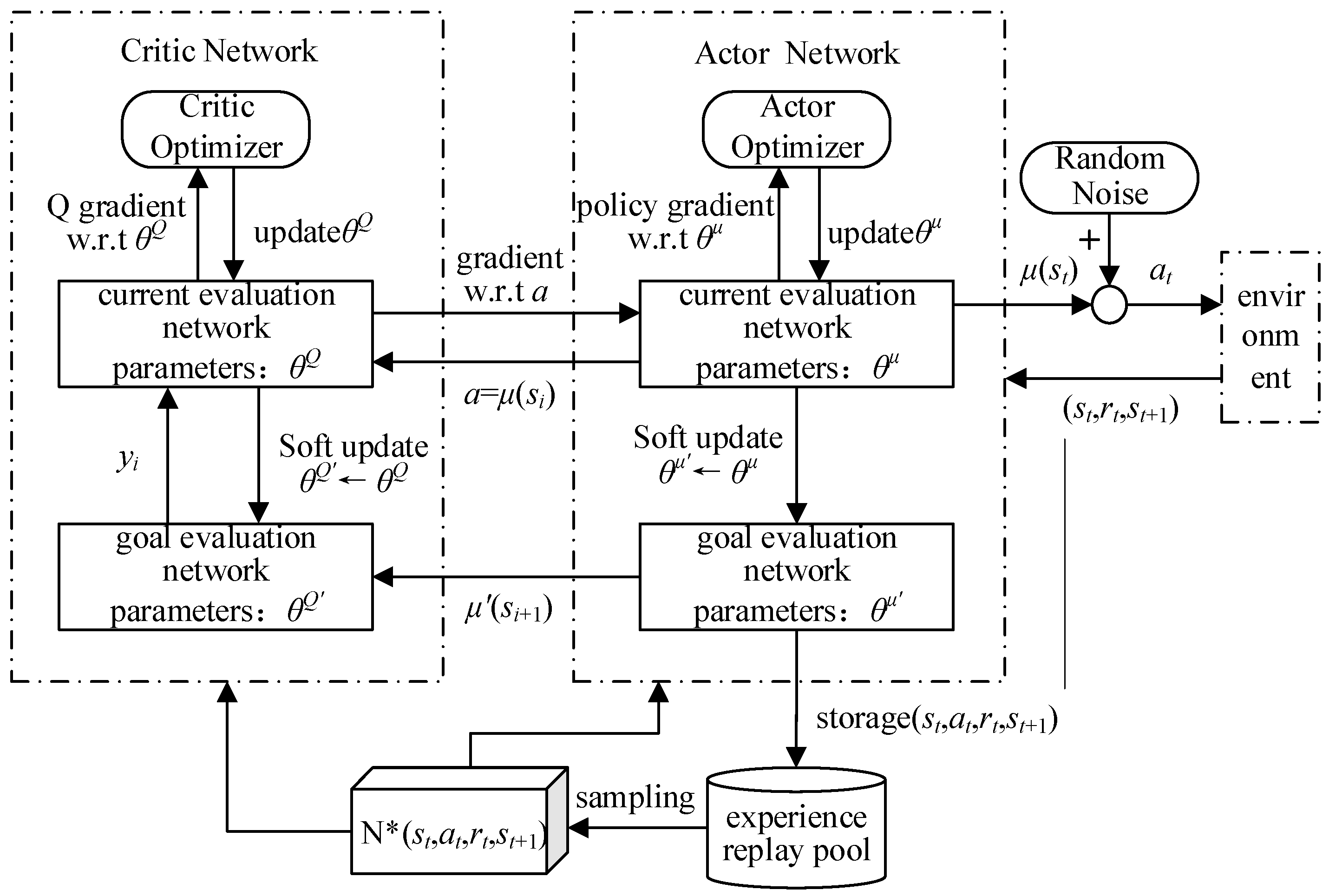
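The components above can be tied together in a small, self-contained Python sketch. It uses a hypothetical five-cell corridor MDP that has nothing to do with the UAV landing task: `transition` and `reward` form the environment model, `deterministic_policy` and `stochastic_policy` are the two kinds of policy, `run_episode` is the agent-environment loop that accumulates the discounted return, and `estimate_value` approximates the value of a state by averaging returns (Monte Carlo estimation). All names and numbers are illustrative assumptions.

```python
import random

# Hypothetical toy MDP (a five-cell corridor), NOT the UAV model of this paper.
# States are integers 0..4; the goal is state 4; actions move left (-1) or right (+1).
N_STATES = 5
GOAL = 4


def transition(state: int, action: int) -> int:
    """State transition function: the next state depends only on (state, action)."""
    return min(max(state + action, 0), N_STATES - 1)


def reward(next_state: int) -> float:
    """Reward function with values kept in [-1, 1]: +1 at the goal, -0.1 per other step."""
    return 1.0 if next_state == GOAL else -0.1


def deterministic_policy(state: int) -> int:
    """Deterministic policy: always the same action (move right) in a given state."""
    return +1


def stochastic_policy(state: int, rng: random.Random, p_right: float = 0.8) -> int:
    """Stochastic policy: 'right' with probability p_right, otherwise 'left'."""
    return +1 if rng.random() < p_right else -1


def run_episode(policy, start: int, gamma: float = 0.9, max_steps: int = 50) -> float:
    """Agent-environment loop; returns the discounted return collected from `start`."""
    state, ret, discount = start, 0.0, 1.0
    for _ in range(max_steps):
        action = policy(state)                  # agent observes the state and acts
        next_state = transition(state, action)  # environment changes its state
        ret += discount * reward(next_state)    # environment returns a reward
        discount *= gamma
        state = next_state
        if state == GOAL:
            break
    return ret


def estimate_value(policy, start: int, episodes: int = 1000, gamma: float = 0.9) -> float:
    """Monte Carlo estimate of V(start): the average discounted return over episodes."""
    return sum(run_episode(policy, start, gamma) for _ in range(episodes)) / episodes
```

For example, `run_episode(deterministic_policy, start=0)` returns about 0.458 (three step penalties followed by the discounted goal reward). An estimate such as `estimate_value(lambda s: stochastic_policy(s, random.Random(0)), start=0)` is never higher, because every extra step only adds a penalty and further discounting.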

3.3. Deep Deterministic Policy Gradient

- Actor current network: the policy network, which generates an action from the current state. It takes the state as input and outputs a deterministic action through a neural network. It is trained to maximise the expected cumulative reward (as estimated by the Critic), and so learns and improves the policy through interaction with the environment.

- Actor target network: a copy of the Actor current network, used to stabilise learning. Its weights are not trained by gradient descent; they are updated from the Actor current network at intervals (in the original DDPG formulation, through a slow soft update at every step), so they change much more slowly. The Actor target network therefore provides a stable target for training and helps reduce oscillations in the learning process.

- Critic current network: the value network, which assesses the value of the action taken in the current state. It takes the state and action as inputs and outputs a Q-value through a neural network. By learning from the agent's interaction with the environment, it refines its value function so that it assesses actions more accurately.

- Critic target network: a copy of the Critic current network, also used to stabilise learning. Like the Actor target network, its weights are not trained directly but are updated periodically from the Critic current network. It provides a stable target Q-value for training, which helps reduce fluctuations in the value estimates.
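The interplay of the four networks can be illustrated with a deliberately small Python sketch of one DDPG update. To stay dependency-free it replaces the neural networks with linear approximators (an assumption made purely for illustration): the Actor is $\mu(s) = \tanh(w^\top s)$ and the Critic is linear in the state and action. The discount factor and learning rates match the values in the hyperparameter table, while the soft-update rate `tau = 0.01` and all function names are hypothetical. The Critic target `y` is built from both target networks, the Actor follows the Critic's action gradient, and the target weights then move a small step towards the current weights.

```python
import math
from dataclasses import dataclass

# Minimal DDPG update sketch with LINEAR approximators (hypothetical simplification;
# the paper uses neural networks). States are lists of floats; actions are scalars.


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


@dataclass
class Actor:
    w: list  # policy weights: mu(s) = tanh(w . s), so actions lie in [-1, 1]

    def act(self, s):
        return math.tanh(dot(self.w, s))


@dataclass
class Critic:
    v: list  # value weights: Q(s, a) = v . [s..., a, 1]

    def q(self, s, a):
        return dot(self.v, list(s) + [a, 1.0])


def soft_update(target, online, tau):
    """Soft target update: theta_target <- tau * theta + (1 - tau) * theta_target."""
    return [tau * o + (1.0 - tau) * t for o, t in zip(online, target)]


def ddpg_step(actor, critic, actor_t, critic_t, batch,
              gamma=0.99, lr_actor=1e-3, lr_critic=1e-3, tau=0.01):
    """One update from a mini-batch of (s, a, r, s_next, done) transitions."""
    n = len(batch)
    # 1) Critic current network: regress Q(s, a) onto y = r + gamma * Q'(s', mu'(s')),
    #    where Q' and mu' are the Critic and Actor TARGET networks.
    grad_v = [0.0] * len(critic.v)
    for s, a, r, s_next, done in batch:
        y = r + gamma * (1.0 - done) * critic_t.q(s_next, actor_t.act(s_next))
        err = critic.q(s, a) - y
        for i, f in enumerate(list(s) + [a, 1.0]):
            grad_v[i] += 2.0 * err * f / n  # gradient of the mean squared TD error
    critic.v = [vi - lr_critic * g for vi, g in zip(critic.v, grad_v)]

    # 2) Actor current network: deterministic policy gradient dQ/da * dmu/dw.
    dq_da = critic.v[len(batch[0][0])]  # weight on `a` in the linear critic
    grad_w = [0.0] * len(actor.w)
    for s, *_ in batch:
        a = actor.act(s)
        for i, si in enumerate(s):
            grad_w[i] += dq_da * (1.0 - a * a) * si / n  # d tanh(z)/dz = 1 - tanh(z)^2
    actor.w = [wi + lr_actor * g for wi, g in zip(actor.w, grad_w)]  # gradient ascent

    # 3) Target networks slowly track the current networks.
    actor_t.w = soft_update(actor_t.w, actor.w, tau)
    critic_t.v = soft_update(critic_t.v, critic.v, tau)
```

With a linear Critic, `dQ/da` is a single weight, so this sketch cannot represent the curved Q-function a neural Critic would learn; it only shows the order and direction of the updates.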

3.4. Design of UAV Speed Controller Based on DDPG

3.4.1. Observation Vector Design

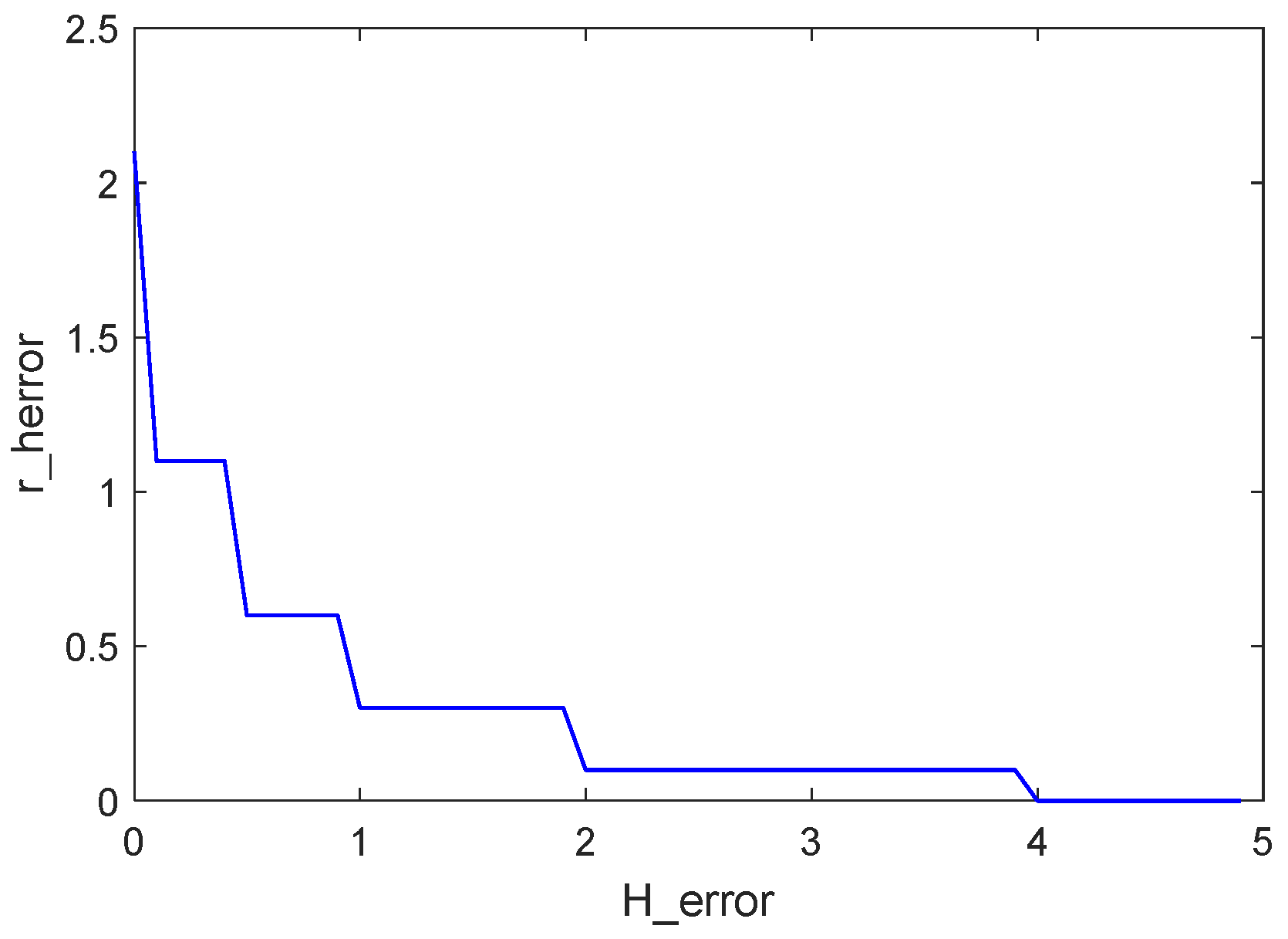

3.4.2. Reward Function Design

1. Improved speed for height tracking
2. Improved smoothness of the height tracking process
3. Improved smoothness of speed changes
4. State constraint
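The four design goals above are only listed by name here, so the following Python sketch shows one hypothetical way to combine them into a single reward. The term shapes, the weights `w_track`, `w_smooth` and `w_rate`, and the argument names are assumptions, not the paper's formulation or the coefficients listed in its parameter tables; the final clipping to [−1, 1] follows the normalisation practice described for the reward function in Section 3.2.2.

```python
def shaped_reward(h_err, h_err_prev, d_omega, state, limits,
                  w_track=0.5, w_smooth=0.2, w_rate=0.1):
    """Hypothetical four-term reward; returns (reward, episode_done).

    h_err, h_err_prev : altitude tracking error at the current / previous step
    d_omega           : change of the rotor-speed compensation command between steps
    state, limits     : monitored state values and their symmetric bounds
    """
    # 4) State constraint: leaving the admissible range ends the episode at -1.
    if any(abs(x) > lim for x, lim in zip(state, limits)):
        return -1.0, True
    r = (-w_track * abs(h_err)                   # 1) faster height tracking
         - w_smooth * abs(h_err - h_err_prev)    # 2) smoother tracking process
         - w_rate * abs(d_omega))                # 3) smoother speed changes
    return max(-1.0, min(1.0, r)), False         # keep the signal in [-1, 1]
```

Making the constraint violation terminal, rather than just another penalty term, is a common way to keep the agent from trading a brief excursion outside the safe envelope for better tracking elsewhere.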

3.5. Motion Output

3.6. Cognitive Training

1. Critic and Actor Network Design
2. Parameterisation
3. Deployment training

4. UAV Landing Comprehensive Simulation Experiment

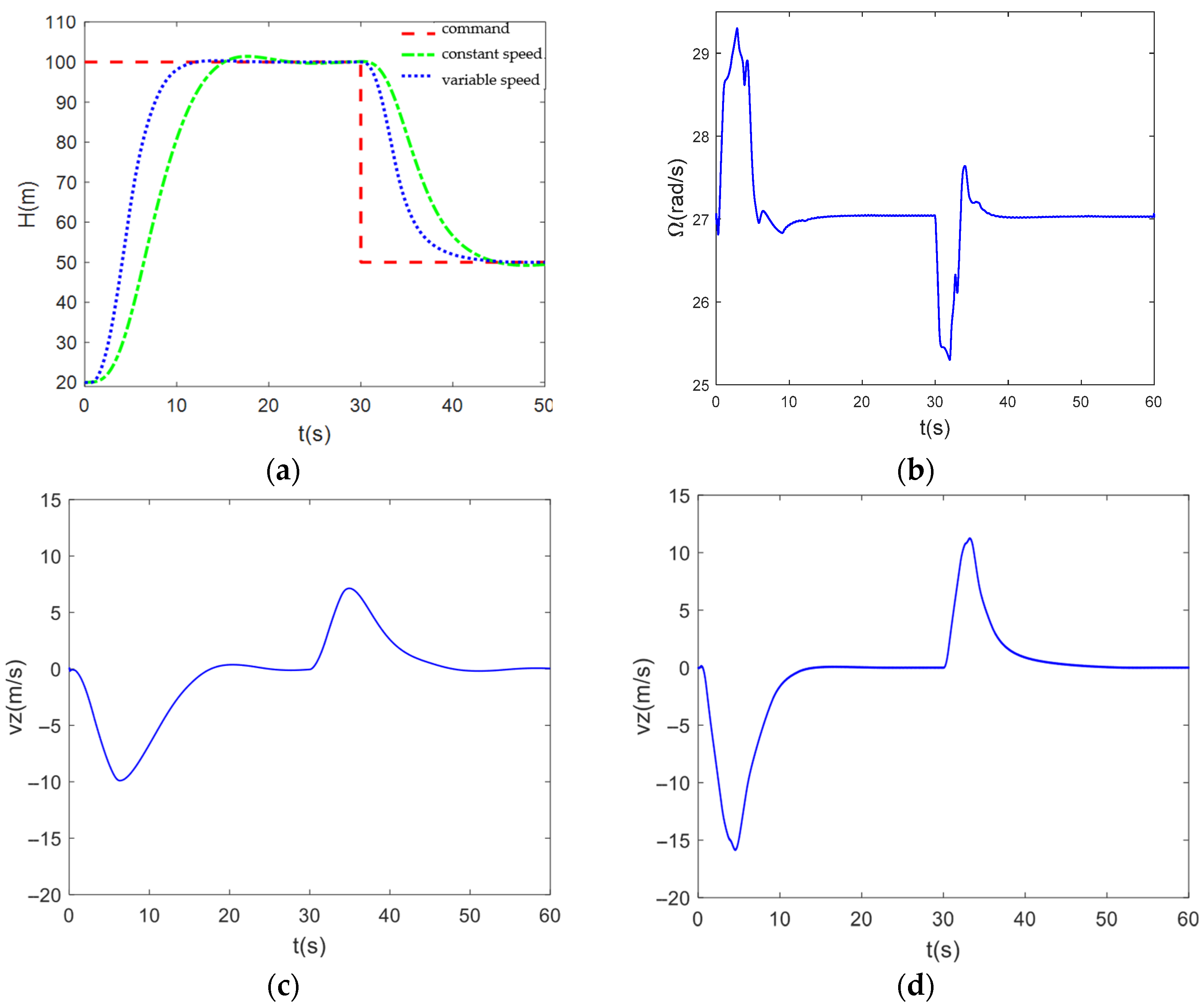

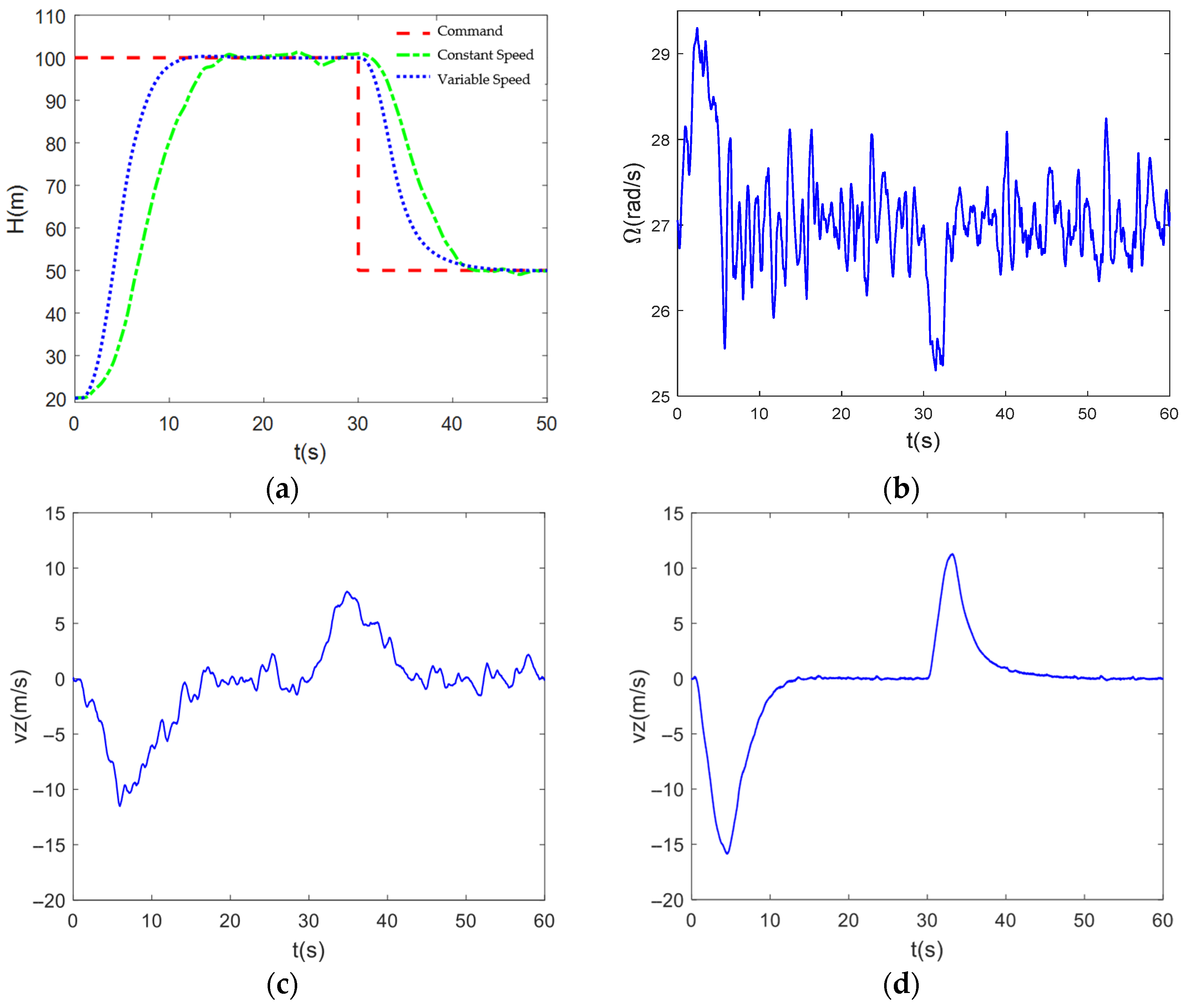

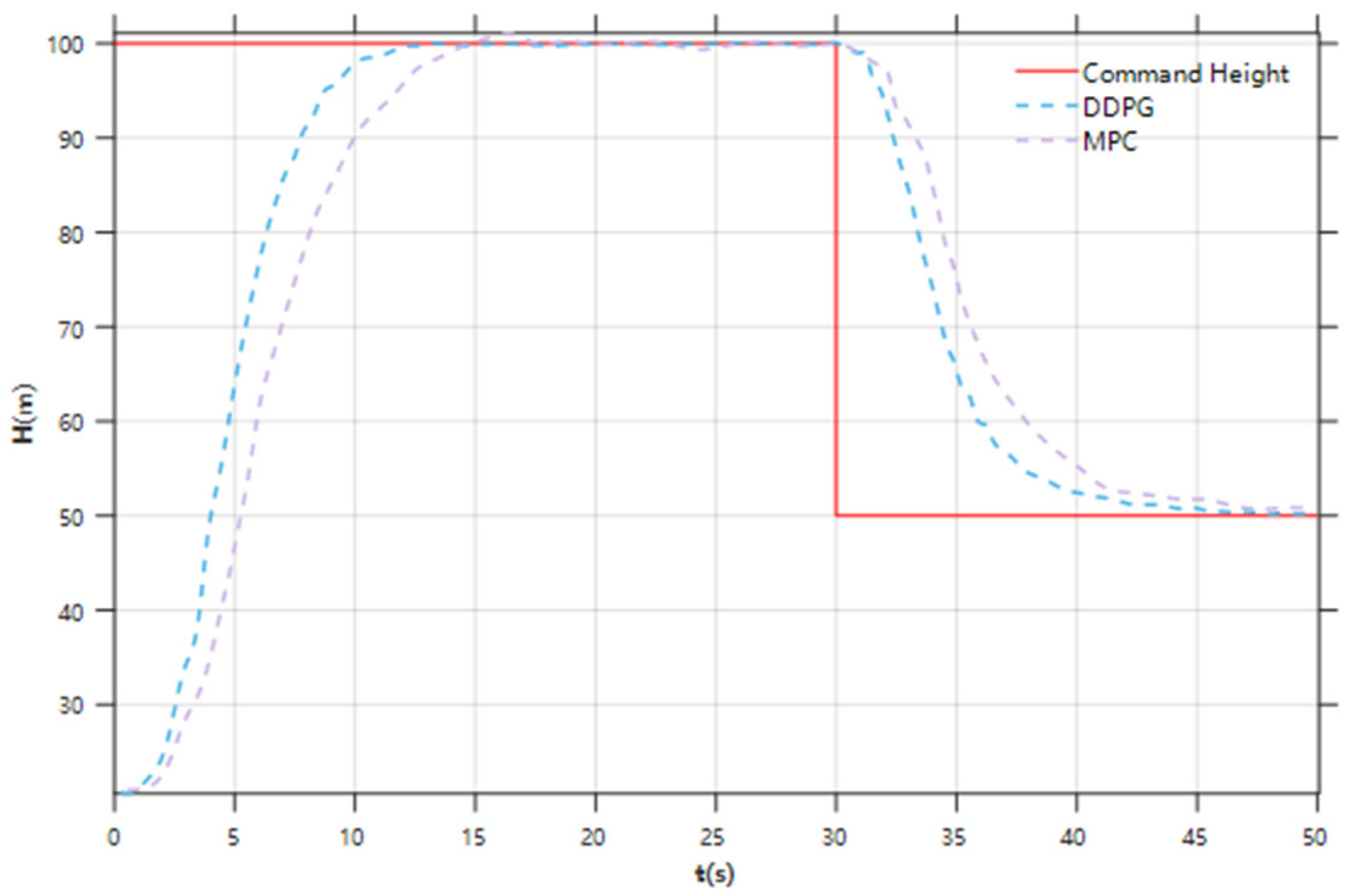

4.1. Altitude Control Simulation Without Interference

4.2. Simulation of Altitude Control Under Air Turbulence

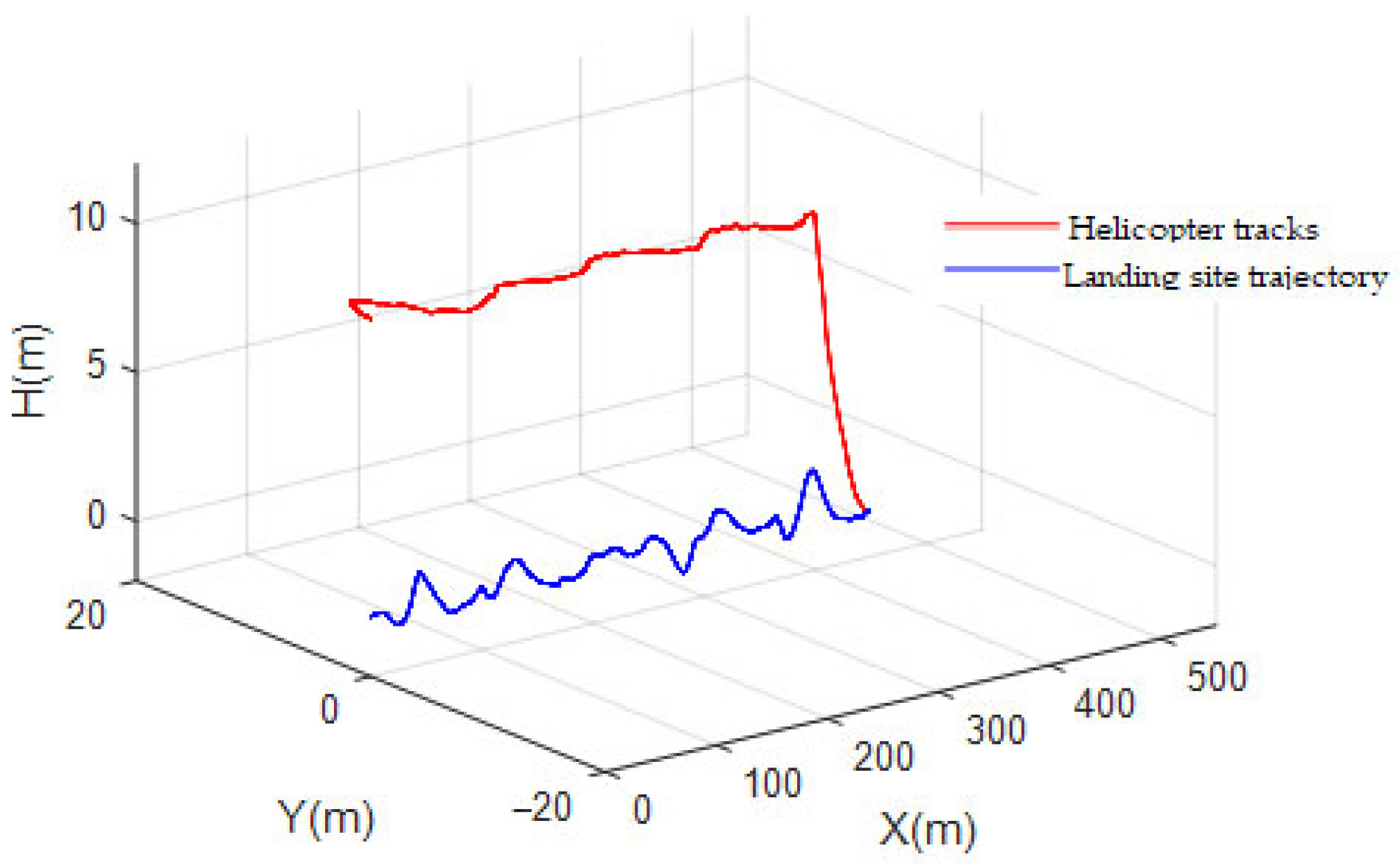

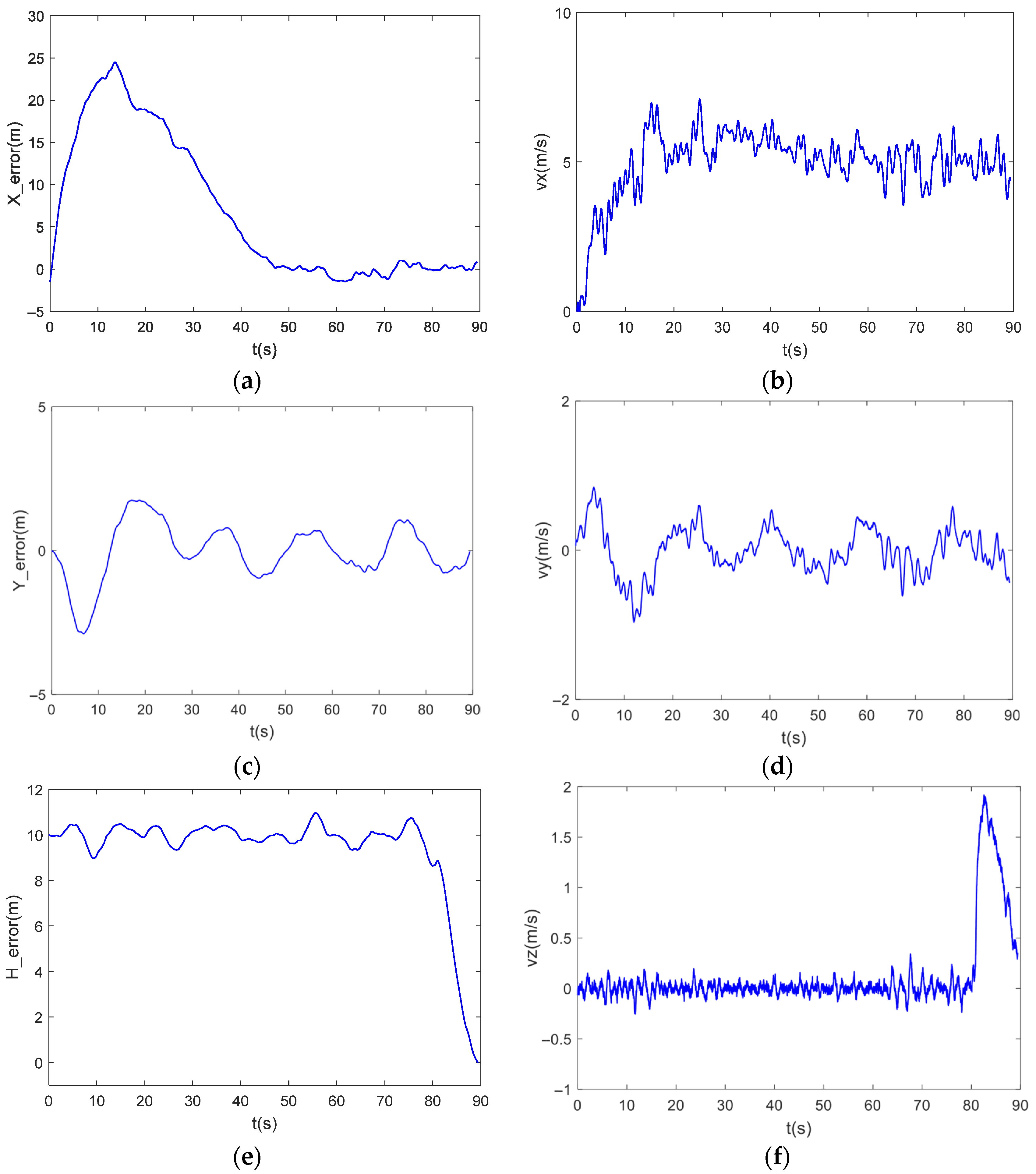

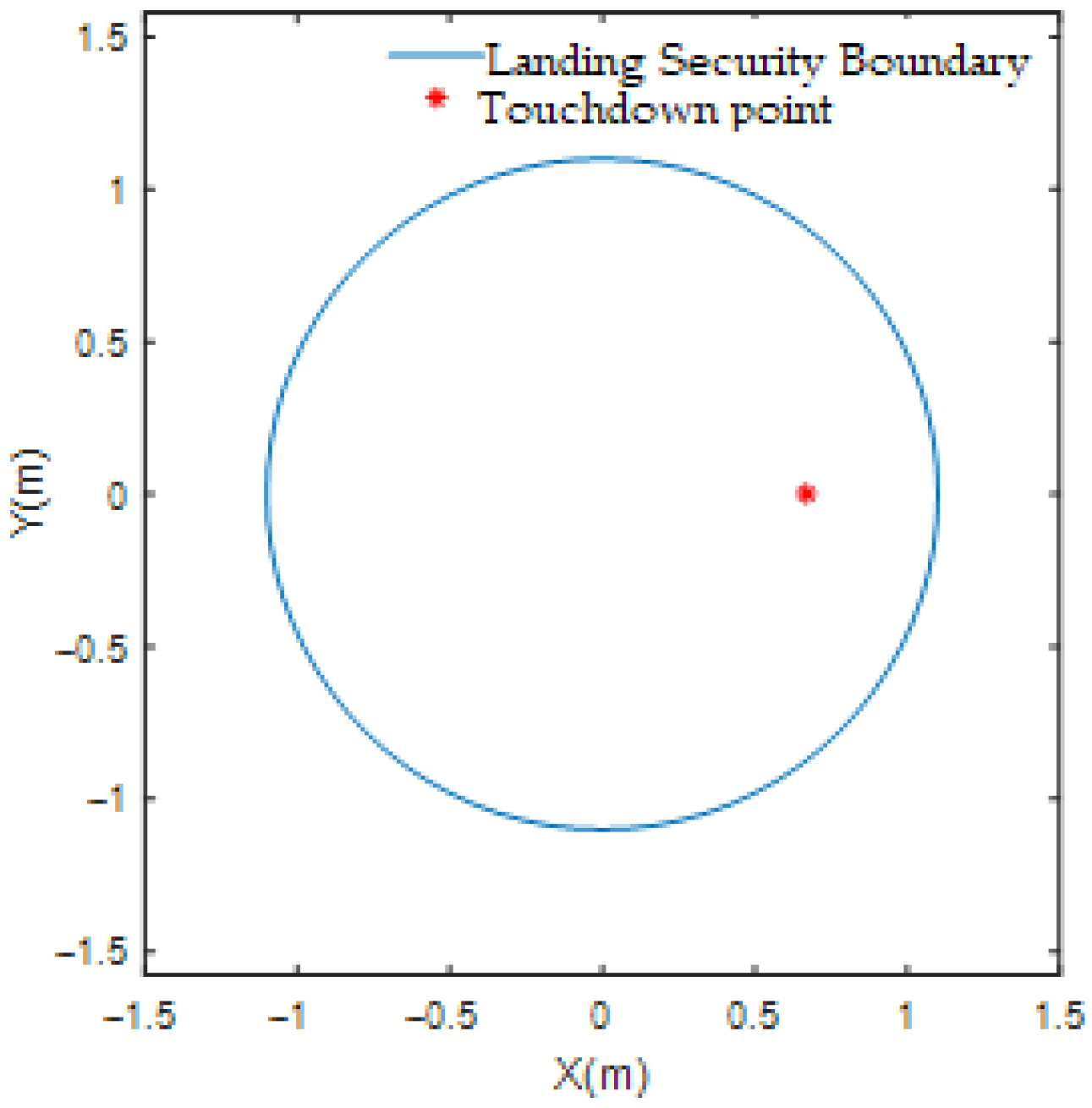

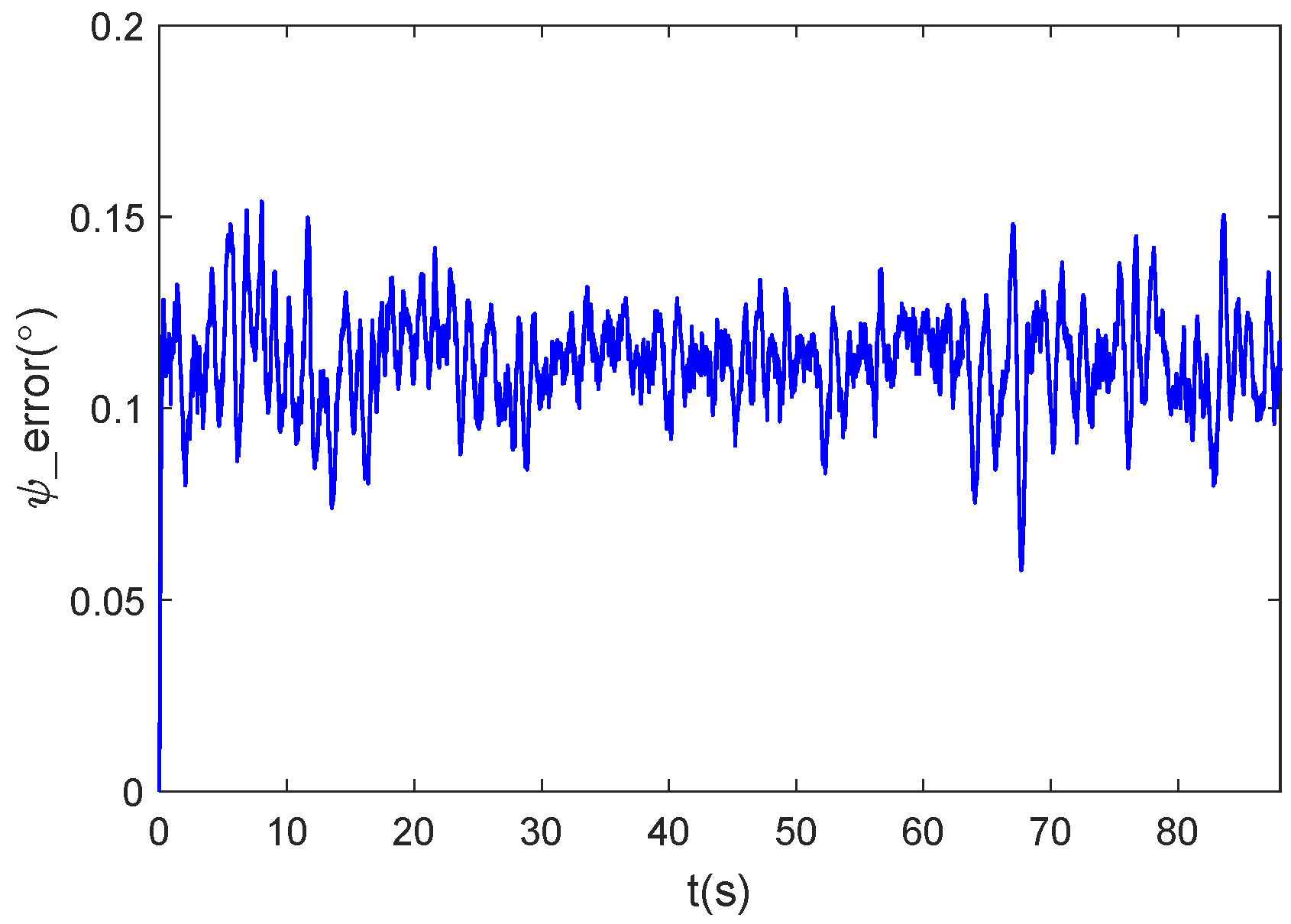

4.3. Linear Trajectory Tracking Experiment

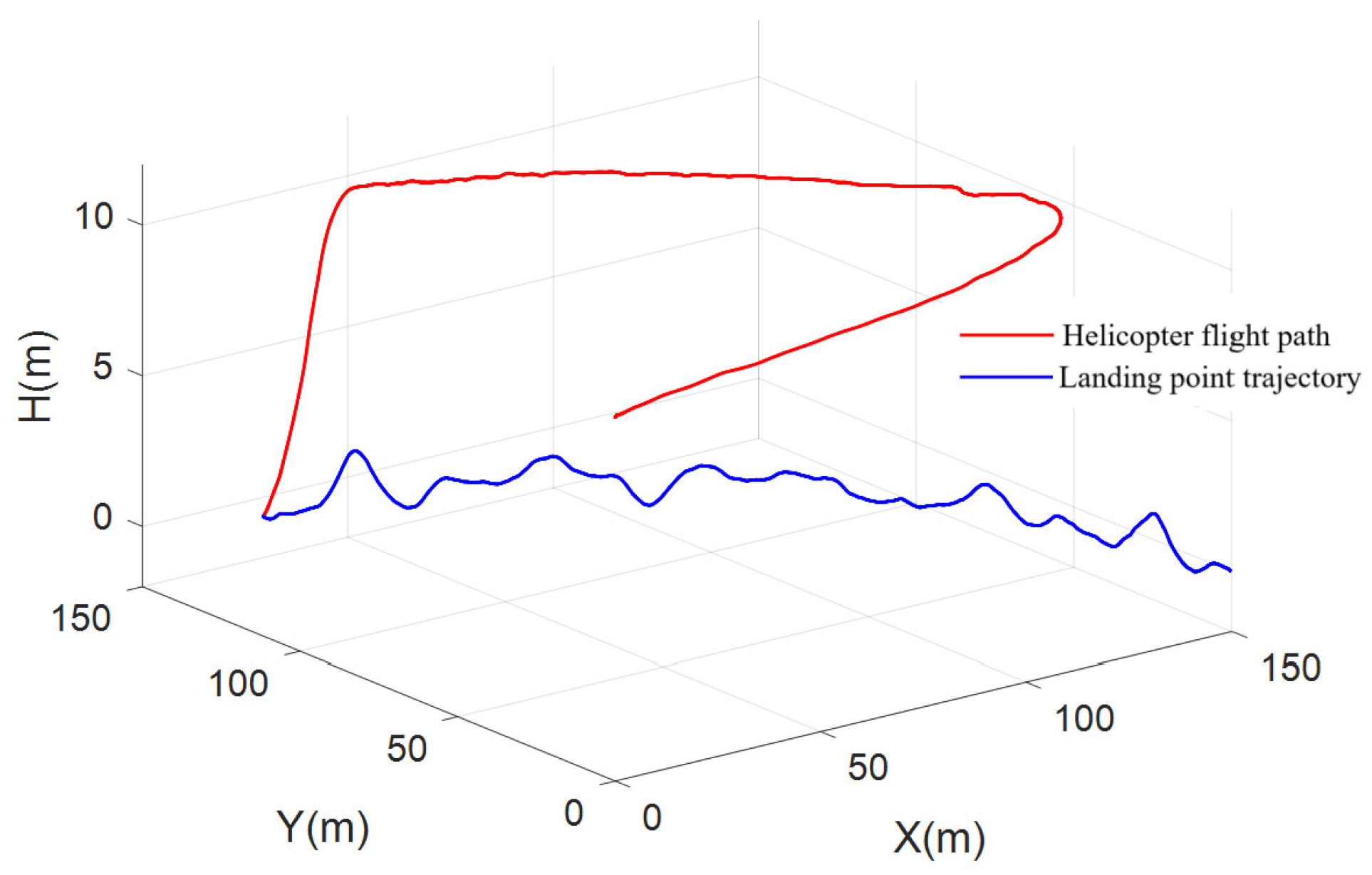

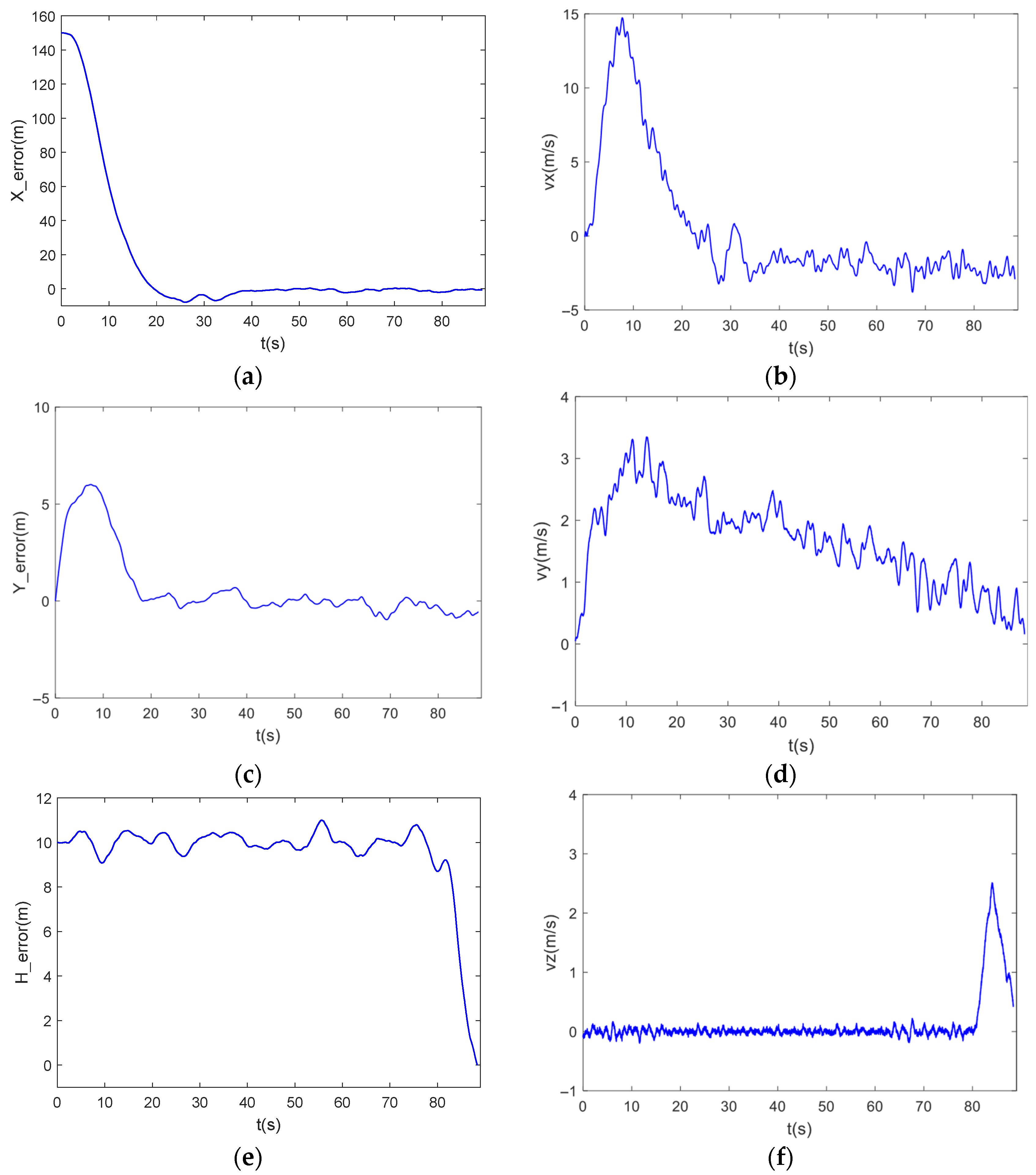

4.4. Circular Trajectory Tracking Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value | Unit |
|---|---|---|
| | 60 | deg |
| | −60 | deg |
| | 60 | deg |
| | −60 | deg |
| | 60 | deg |
| | −60 | deg |
| | 20 | deg/s |
| | −20 | deg/s |
| | 20 | deg/s |
| | −20 | deg/s |
| | 20 | deg/s |
| | −20 | deg/s |
| | 20 | m/s |
| | −20 | m/s |
| | 100 | m |
| | −100 | m |

| Parameter | Value |
|---|---|
| | −0.0016 |
| | 0.1 |
| | 0.2 |
| | 0.3 |
| | 0.5 |
| | 1 |
| | −0.0006 |
| | −0.22 |
| | −0.02 |
| | −1.6 |
| | 15 |
| | 0.01 |

| Parameter | Value | Unit |
|---|---|---|
| | 30 | rad/s |
| | 24 | rad/s |

| Parameter | Value |
|---|---|
| Target network attenuation factor | 0.99 |
| Experience replay buffer size | |
| Mini-batch size (samples drawn per update) | 256 |
| Reward discount factor | 0.99 |
| Current network action noise variance | 0.01 |
| Target network action noise | 0.02 |
| Target network action output upper limit | 1 |
| Target network action output lower limit | −1 |
| Value function (Critic) network learning rate | 0.001 |
| Policy (Actor) network learning rate | 0.001 |
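The DDPG hyperparameters in the table above can be gathered into one configuration object, which keeps a training script and the reported settings in sync. A minimal Python sketch follows; the field names are hypothetical, the experience-buffer size is left as `None` because its value is missing from the extracted table, and reading the two output limits as a clipping range for target-network actions is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DDPGConfig:
    """DDPG settings transcribed from the table; field names are hypothetical."""
    target_attenuation: float = 0.99       # "Target network attenuation factor"
    buffer_size: Optional[int] = None      # value missing in the extracted table
    batch_size: int = 256                  # samples drawn per update
    gamma: float = 0.99                    # reward discount factor
    action_noise_variance: float = 0.01    # exploration noise, current actor
    target_action_noise: float = 0.02      # noise on target-network actions
    target_action_max: float = 1.0
    target_action_min: float = -1.0
    critic_lr: float = 1e-3                # value function network learning rate
    actor_lr: float = 1e-3                 # policy network learning rate

    def clip_target_action(self, a: float) -> float:
        """Clamp a (possibly noisy) target-network action to the output limits."""
        return max(self.target_action_min, min(self.target_action_max, a))
```

For instance, `DDPGConfig().clip_target_action(1.7)` returns `1.0`. Freezing the dataclass prevents settings from being changed accidentally partway through training.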


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, G.; Feng, P.; Wang, X. Design of a Reinforcement Learning-Based Speed Compensator for Unmanned Aerial Vehicle in Complex Environments. Drones 2025, 9, 705. https://doi.org/10.3390/drones9100705