Highlights

What are the main findings?

- A structured prompting framework, guided by HFACS with evidence binding and cross-level checks, enables LLMs to extract operator, precondition, supervisory, and organizational factors from UAV accident narratives, with improved inference on UAV-specific factors.

- A human-factor-annotated UAV accident dataset was developed from ASRS reports and used to benchmark the method, showing performance comparable to expert assessment, particularly on UAV-specific categories.

What is the implication of the main finding?

- LLM-assisted investigation provides a rapid, systematic triage layer that surfaces human-related and organization-related cues early from sparse, unstructured occurrence reports.

- The approach complements safety management and regulatory oversight in low-altitude operations by preserving causal reasoning and supporting human-in-the-loop verification across the reporting-to-classification workflow.

Abstract

UAV accident investigation is essential for safeguarding the fast-growing low-altitude airspace. Although incidents are reported almost daily, few are analyzed in depth, as current inquiries remain expert-dependent and time-consuming. Because most jurisdictions mandate formal reporting only for serious injury or substantial property damage, a large proportion of minor occurrences receive no systematic investigation, resulting in persistent data gaps and hindering proactive risk management. This study explores the potential of large language models (LLMs) to expedite UAV accident investigations by extracting human-factor insights from unstructured narrative incident reports. Despite their promise, off-the-shelf LLMs still struggle with domain-specific reasoning in the UAV context. To address this, we developed a human factors analysis and classification system (HFACS)-guided analytical framework that blends structured prompting with lightweight post-processing. This framework systematically guides the model through a two-stage procedure to infer operators’ unsafe acts, their latent preconditions, and the associated organizational influences and regulatory risk factors. An HFACS-labelled UAV accident corpus comprising 200 abnormal event reports with 3600 coded instances was compiled to support evaluation. Across seven LLMs and 18 HFACS categories, macro-F1 ranged from 0.58 to 0.76; our best configuration achieved a macro-F1 of 0.76 (precision 0.71, recall 0.82), with representative category accuracies above 93%. Comparative assessments indicate that the prompted LLM can match, and in certain tasks surpass, human experts. The findings highlight the promise of automated human-factor analysis for rapid and systematic UAV accident investigation.

1. Introduction

Unmanned aircraft systems (UAS) play a critical role in disaster response, infrastructure inspection, environmental monitoring, precision agriculture, and emerging low-altitude logistics in the developing low-altitude economy [1,2,3]. As operational demand accelerates, incidents are reported almost daily, yet they are rarely subjected to systematic causal analysis because formal reporting in most jurisdictions is triggered only by serious injury or substantial third-party property damage [4,5,6]. Investigating these occurrences is crucial to preventing similar accidents in the future [7]. Under the current U.S. regulatory framework, for example, a remote pilot must notify the Federal Aviation Administration (FAA) only when a small UAS occurrence involves serious injury or more than $500 in third-party property damage [8]. Consequently, the vast majority of low-consequence events never enter any authoritative database. Even when a report is filed, the follow-up investigation adheres to the conventional manned-aviation model: the U.S. National Transportation Safety Board notes that a typical accident inquiry requires 12–24 months for the expert-driven process to reach a probable cause determination [9]. Although this study focuses on the U.S. regulatory context, other jurisdictions adopt similarly limited mandatory reporting thresholds. In Australia, most small remotely piloted aircraft (RPA) operations require reporting only in cases of death or serious injury [10]. In the European Union, reporting under Regulation (EU) 376/2014 [11] applies to UAS operators pursuant to Article 19(2) of Regulation (EU) 2019/947 [12], with mandatory reporting for certified or declared UAS operations and for occurrences involving manned aircraft or resulting in serious injury.

Deriving pre-investigation human-factor cues from initial occurrence reports is a pragmatic strategy in UAS accident work, because human error remains the predominant contributor to mishaps in unmanned-aircraft operations as well as in the broader civil-aviation accident record [13,14]. Such early extraction narrows the search space before scarce investigative resources are committed. For drones, these reports (typically the remote pilot’s mandatory notification or voluntary safety submission) often constitute the only consistently available evidence [4]. Even brief narrative descriptions enable the timely identification of operator-centric errors stemming from stress, diminished situational awareness, or flawed judgment. This directs limited analytical resources toward the most probable causal pathways and gives accident investigators an early, evidence-based starting point.

Initial UAV accident reports are typically submitted as unstructured text, offering a distinctive opportunity to apply large language models (LLMs) to aviation safety analytics. Prior studies have shown that coupling LLMs with legacy, labor-intensive investigation pipelines can yield markedly more effective analysis workflows [15]. Yet these reports are highly fragmented, frequently incomplete, and dense with specialized aeronautical terminology; omissions and errors introduced by reporters further erode data quality and must be repaired with domain expertise. Bringing such semi-structured data into fixed analytical frameworks stretches basic natural language processing (NLP) to its limits, and deploying LLMs in a trustworthy, system-level manner for domain-specific tasks remains challenging [16]. The difficulty extends to agentic architectures, where LLMs must acquire explicit reasoning skills, tool-use proficiency, and embedded aviation knowledge to execute complex analytic workflows [17]. Designing modular pipelines that coordinate specialized components while preserving interpretability and reliability is therefore a central hurdle [18]. From a deployment perspective, work on UAV-assisted mobile-edge computing has explored joint optimization of computation offloading and flight paths under tight latency constraints; a recent KNN-DDPG approach reports lower end-to-end delay, which motivates near-real-time, edge-side HFACS-LLM deployment [19]. Recent studies also show that injecting domain knowledge into LLM-driven agents significantly improves reasoning accuracy and task performance [20]. Complementary to these LLM-centered advances, digital-twin-based drone forensics reconstructs incidents in ROS 2/Gazebo to recover physical trajectories and system states; by contrast, our study targets narrative-driven HFACS–LLM attribution from initial reports [21].
Nevertheless, a true end-to-end multi-agent framework for curating and analyzing UAV Aviation Safety Reporting System (ASRS) data [22] is still lacking. To close this gap, we introduce a human factors analysis and classification system (HFACS)-guided, human-in-the-loop multi-agent architecture that interactively helps investigators fill gaps in incomplete reports, verifies data integrity before analysis, and then classifies human errors across the four HFACS tiers: unsafe acts, preconditions, unsafe supervision, and organizational influence [23,24] (see Figure 1). This integration leverages AI for systematic safety assessment while retaining essential expert oversight throughout the reporting-to-classification lifecycle.

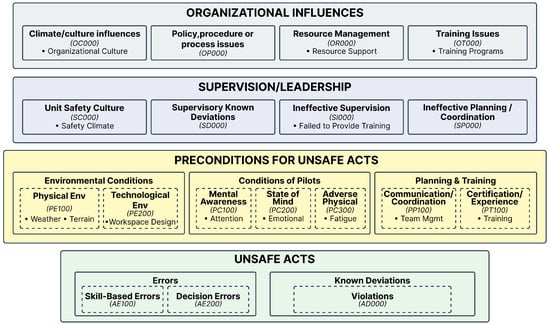

Figure 1.

Main structure of HFACS 8.0.

Notably, the unmodified HFACS 8.0—though well established for crewed aviation—does not fully express the techno-human particularities of UAV operations, including remote piloting, heavy automation dependence, data-link fragility, and distinctive ground-station interfaces [4,5,6]. We therefore introduce HFACS-UAV, which preserves the four canonical tiers but refines selected definitions and nanocodes for UAV contexts and augments narrative analysis with structured inputs from the ASRS. Concretely, HFACS-UAV treats free-text narratives as the evidential core. The search space is conditioned through a field-to-factor mapping. For example, phase-of-flight descriptors cue likely error modes, while atmospheric and lighting conditions indicate physical-environment factors and perceptual risks. Automation usage highlights technological-environmental influences and out-of-the-loop states. Information on crew roles and handoffs signals personnel factors and supervisory issues. Finally, callback notes often surface latent organizational contributors [22,25]. The resulting procedure proceeds in three stages. Stage 1 classifies the operator’s unsafe acts by determining, in sequence, whether they represent a skill-based error, a judgment or decision-making error, a perceptual error, or a deliberate violation, with each classification explicitly grounded in cited narrative evidence. Stage 2 infers the enabling preconditions—most notably UAV-centric technological and physical environment factors and the operator’s state and readiness—anchored to the report text and conditioned, though not dictated, by structured fields. Stage 3 extends the analysis to higher tiers by identifying contributory supervisory and organizational influences where the narrative permits, thereby completing the multi-level HFACS reasoning process. To strengthen reasoning, we employ level-wise prompting with explicit evidence binding and lightweight, programmatic consistency checks across levels. 
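The evidence-binding and cross-level checks described above lend themselves to a small programmatic validator applied to the model's structured output. The following is a minimal sketch under stated assumptions, not the authors' implementation: the `Finding` record, `check_findings`, the category labels (an illustrative subset drawn from this section, not the full HFACS 8.0 taxonomy), and the cross-level rule that higher-tier factors require at least one Level 1 unsafe act are all hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative subset of labels mapped to HFACS tiers
# (1 = unsafe acts, 2 = preconditions, 3 = supervision, 4 = organization).
LEVEL_OF = {
    "skill_based_error": 1,
    "decision_error": 1,
    "perceptual_error": 1,
    "violation": 1,
    "technological_environment": 2,
    "physical_environment": 2,
    "operator_state": 2,
    "inadequate_supervision": 3,
    "organizational_process": 4,
}


@dataclass
class Finding:
    category: str
    level: int
    evidence: str  # quote the model cites from the narrative


def _norm(text: str) -> str:
    # Lower-case and collapse whitespace so line breaks do not defeat matching.
    return re.sub(r"\s+", " ", text.lower()).strip()


def check_findings(narrative: str, findings: list[Finding]) -> list[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    text = _norm(narrative)
    for f in findings:
        expected = LEVEL_OF.get(f.category)
        if expected is None:
            problems.append(f"unknown category: {f.category}")
        elif expected != f.level:
            problems.append(f"{f.category}: tagged L{f.level}, expected L{expected}")
        # Evidence binding: the cited quote must occur verbatim (after normalization).
        quote = _norm(f.evidence)
        if not quote or quote not in text:
            problems.append(f"{f.category}: evidence not found in narrative")
    # Cross-level check (hypothetical rule): latent factors need an unsafe act to explain.
    if any(f.level > 1 for f in findings) and not any(f.level == 1 for f in findings):
        problems.append("higher-tier factors asserted without a Level 1 unsafe act")
    return problems


narrative = """The remote pilot lost visual line of sight behind trees.
The C2 link dropped during the return leg."""
ok = [
    Finding("decision_error", 1, "lost visual line of sight"),
    Finding("technological_environment", 2, "the C2 link  dropped"),
]
print(check_findings(narrative, ok))  # []
```

A non-empty problem list would then be returned to the model for revision or surfaced to the human reviewer rather than silently discarded; exact-substring matching is a deliberately strict choice that rejects paraphrased evidence.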
Experiments on our self-compiled UAV-ASRS-HFACS corpus with a modern LLM indicate that this configuration outperforms generic HFACS prompts and rule-based baselines, with the largest gains on UAV-salient categories such as technological environment and organizational process; ablations confirm that both the refined UAV definitions and the field-to-factor conditioning contribute materially to the improvement.
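The field-to-factor mapping can be pictured as a lookup that turns non-empty structured fields into candidate categories, which are then phrased as soft hints in the prompt so that they condition, but do not dictate, the narrative-grounded labels. In this sketch the field keys (`flight_phase`, `weather`, `light`, `automation`, `crew_roles`, `callback`), the category labels, and the hint wording are hypothetical stand-ins for the ASRS fields and HFACS-UAV categories discussed above.

```python
# Hypothetical structured-field keys; real ASRS exports may name fields differently.
FIELD_TO_FACTORS = {
    "flight_phase": ["skill_based_error", "decision_error"],        # likely error modes
    "weather": ["physical_environment", "perceptual_error"],        # atmospheric conditions
    "light": ["physical_environment", "perceptual_error"],          # lighting conditions
    "automation": ["technological_environment", "operator_state"],  # incl. out-of-the-loop
    "crew_roles": ["personnel_factors", "inadequate_supervision"],  # roles and handoffs
    "callback": ["organizational_process"],                         # latent organizational cues
}


def candidate_factors(record: dict) -> list[str]:
    """HFACS categories cued by non-empty structured fields, in first-seen order."""
    cues: list[str] = []
    for field, factors in FIELD_TO_FACTORS.items():
        value = record.get(field)
        if value is None or not str(value).strip():
            continue  # missing or blank field: no cue
        for factor in factors:
            if factor not in cues:
                cues.append(factor)
    return cues


def conditioning_hint(record: dict) -> str:
    """Prompt fragment that conditions, but does not dictate, the model's labels."""
    cues = candidate_factors(record)
    if not cues:
        return "No structured-field cues; rely on the narrative alone."
    return ("Consider, but do not assume: " + ", ".join(cues)
            + ". Label a factor only if you can quote supporting narrative text.")


record = {"flight_phase": "Cruise", "light": "Night", "automation": "", "callback": None}
print(candidate_factors(record))
# ['skill_based_error', 'decision_error', 'physical_environment', 'perceptual_error']
```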

In summary, the key contributions of this work include the following:

- We present an end-to-end LLM-assisted pipeline that integrates intake, human-in-the-loop supplementation, data validation, analytic triage, and HFACS reasoning, with a publicly available prototype (access at https://uav-accident-forensics.streamlit.app/, accessed on 7 October 2025).

- We propose HFACS-UAV, an adaptation of the four-tier HFACS framework with UAV-specific nanocodes and ASRS field mapping, implemented through a three-stage prompt design with evidence binding and consistency checks.

- We provide a curated UAV-ASRS-HFACS-2010–2025(05) corpus, covering Part 107, Section 44809, and Public UAS operations. Each record links narratives with structured fields; the dataset will be released under ASRS terms.

- We demonstrate through comparative evaluation that GPT-5 models match, and in some UAV-specific categories surpass, human experts in HFACS inference, highlighting the potential of LLMs in UAV accident investigation.

The rest of this paper is organized as follows. Section 2 reviews related work and identifies research gaps. Section 3 introduces the HFACS-UAV framework and our incident analysis pipeline. Section 4 describes our annotated UAV incident corpus. Section 5 presents experimental results and ablation studies. Section 6 discusses implications and concludes the research.

2. Related Works

2.1. UAV Accident Investigation: Current Practices and Challenges

The rapid proliferation of unmanned aerial vehicles (UAVs) across commercial, recreational, and governmental sectors, together with the emerging “low-altitude economy”, has fundamentally transformed the practice of accident investigation. Traditional methods, developed for manned aviation through decades of formal mishap analysis and logical-model research [7] and codified in ICAO Annex 13 [26], provide an essential foundation, but they only partially address UAV-specific failure modes such as command-and-control (C2) link loss, on-board autonomy malfunctions, and the distinctive workload and situational-awareness challenges faced by remote pilots.

Empirical evidence confirms the gap: Wild et al. [27] analyzed 152 civil-drone accident and incident reports and showed that technical system malfunctions, not pilot factors, were the single largest causal category. Human error nonetheless remains pervasive: an HFACS (see Figure 1) review of 221 U.S. military UAV mishaps found that 60% contained at least one unsafe act by a remote pilot or supervisor [28]. These findings underscore the need for investigation frameworks that integrate both engineered-system and human-system perspectives. Regulation adds another layer of complexity. In the United States, the Small UAS Rule (14 CFR Part 107) requires formal reporting only when an operation results in serious injury or property damage greater than $500, creating a substantial under-reporting bias [29]. The European Union’s Implementing Regulation (EU) 2019/947 establishes comparable thresholds, leading to a similar “iceberg” of unreported occurrences [12]. Without systematic collection of lower-severity events, valuable leading indicators of emerging hazards remain hidden.
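To make the under-reporting mechanism concrete, the Part 107 trigger cited above (serious injury, or more than $500 of damage to property other than the UAS) can be written as a simple predicate. This is a simplified sketch covering only those two triggers, not a complete encoding of the regulation; the `Occurrence` record, `faa_report_required`, and the example occurrences are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Occurrence:
    serious_injury: bool
    third_party_damage_usd: float  # damage to property other than the UAS itself


def faa_report_required(occ: Occurrence, threshold_usd: float = 500.0) -> bool:
    """Simplified reading of the two Part 107 triggers described in the text."""
    return occ.serious_injury or occ.third_party_damage_usd > threshold_usd


# Hypothetical occurrences, for illustration only.
events = [
    Occurrence(False, 0.0),     # fly-away into an empty field
    Occurrence(False, 350.0),   # minor fence damage
    Occurrence(False, 1200.0),  # vehicle windshield
    Occurrence(True, 0.0),      # injury to a bystander
]
reported = sum(faa_report_required(e) for e in events)
print(f"{reported} of {len(events)} occurrences trigger mandatory FAA notification")
# 2 of 4 occurrences trigger mandatory FAA notification
```

Every occurrence that returns `False` here is exactly the kind of low-consequence event that never reaches an authoritative database.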

Finally, data availability remains a critical bottleneck. A recent FAA ASSURE study showed that fewer than 5% of small-UAS platforms in the United States carry any form of crash-survivable flight-data recorder, and that where logging exists, parameter sets and sampling rates vary widely across manufacturers [30]. The absence of cockpit-voice or datalink-voice recordings forces investigators to rely on partial telemetry and post-event interviews, complicating causal reconstruction. These technological, regulatory, and data-management challenges highlight the need for investigation tools and evidence-collection strategies that are specifically tailored to the socio-technical realities of UAV operations.

2.2. HFACS: Origins, Structure, and Aviation Applications

The human factors analysis and classification system (HFACS) is based on Reason’s theory of organizational accidents, which explains how unsafe acts are enabled by latent conditions within supervision and organizations rather than being solely the result of frontline operator error [31,32,33]. Building on this conceptual basis, Wiegmann and Shappell formalized HFACS as a four-level framework comprising (L1) Unsafe Acts, (L2) Preconditions for Unsafe Acts, (L3) Unsafe Supervision, and (L4) Organizational Influences, with defined causal categories supporting systematic analysis of human and organizational contributory factors in aviation mishaps (see Figure 1) [23,34,35]. Over the past two decades, HFACS has been widely adopted in accident investigation, safety audits, and safety management systems (SMS), providing a common taxonomy that improves comparability, traceability, and learning across events [35]. The U.S. Department of Defense maintains an updated HFACS 8.0 implementation that clarifies definitions and nanocodes for consistent application across domains [24].

In UAV operations, recent studies both reinforce and refine HFACS. Analyses of medium/large-UAV mishaps coded with HFACS report that Level 1 Decision Errors commonly predominate and are statistically associated with Level 2 Technological Environment and Adverse Mental State; contributory factors also appear at supervisory and organizational tiers, informing regulator- and SMS-oriented interventions [13]. Focusing on loss of control in flight (LOC-I), El Safany and Bromfield examined a corpus of UAV accidents and identified design/manufacturing issues as significant factors; they further observed that recovery attempts are often missing in reported cases. Comparing HFACS, AcciMap, and an Accident Route Matrix, they proposed an adapted ARM-UAV to better maintain event sequences and illustrate recovery for LOC-I cases [36]. Complementing taxonomy-based analysis, Alharasees and Kale integrated HFACS with OODA and SHELL using an analytic hierarchy process and combined this with real-time operator physiology (HR/HRV/RR) across automation levels; novices exhibited higher stress responses, emphasizing operator-centered interventions within training [37].

2.3. Large Language Models in Aviation Safety Analysis

Aviation safety text mining has advanced rapidly from rule-based keyword heuristics and shallow classifiers to transformer encoders purpose-built for specialist corpora. Early studies such as Tanguy et al. [38] framed Directorate-General of Civil Aviation narratives as bag-of-words vectors and obtained F1 scores around 0.80 with support-vector machines, yet they faltered when confronted with the syntactic complexity and dense jargon of accident prose. The arrival of BERT-style encoders narrowed this gap: fine-tuning generic DistilBERT lifted exact-match accuracy for question answering on the ASRS corpus to ∼70%, but the decisive improvement came from domain pre-training. AviationBERT, trained on roughly 2.3 million ASRS and NTSB narratives, outperformed BERT-Base by 4–10 percentage points on topic classification and semantic similarity tasks, while NASA’s SafeAeroBERT achieved equivalent gains on causal-factor labeling [39,40]. These results establish a clear pattern: contextual encoders benefit disproportionately from sector-specific corpora that capture operational phraseology, regulatory references, and domain abbreviations. The generative turn has further expanded analytical possibilities. Large models such as GPT-3 and GPT-4 can now draft coherent accident summaries and infer causal chains from few-shot prompts alone [16]. Crucially, Liu et al. [15] embedded HFACS into a stepwise chain-of-thought scaffold, raising adjusted average F1 by 27.5% over zero-shot GPT-4 and surpassing human raters on several unsafe-act sub-tasks. This success has catalyzed a new class of bespoke generative models: AviationGPT extends LLaMA-2 with continual training on flight-operations data, delivering over 40% gains on in-domain QA and summarization benchmarks while foreshadowing multimodal LLMs that will fuse cockpit-voice, flight-data-recorder, and textual evidence within a unified narrative engine [41].
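Because F1 is the headline metric both in the studies above and in the macro-F1 figures reported in the Abstract, a short sketch of how macro-F1 is computed may help. It assumes a multi-label setting in which each report is coded as a set of HFACS category labels; that framing, the zero-score convention for categories absent from both gold and predictions, and the example labels are assumptions rather than the paper's stated protocol.

```python
def per_category_f1(gold: list[set[str]], pred: list[set[str]],
                    categories: list[str]) -> dict[str, float]:
    """F1 = 2TP / (2TP + FP + FN) per category; 0.0 if a category never occurs."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must cover the same reports")
    scores = {}
    for c in categories:
        tp = sum(1 for g, p in zip(gold, pred) if c in g and c in p)
        fp = sum(1 for g, p in zip(gold, pred) if c not in g and c in p)
        fn = sum(1 for g, p in zip(gold, pred) if c in g and c not in p)
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(gold: list[set[str]], pred: list[set[str]], categories: list[str]) -> float:
    """Unweighted mean of per-category F1: rare and common categories count equally."""
    scores = per_category_f1(gold, pred, categories)
    return sum(scores.values()) / len(scores)


# Hypothetical labels for three reports.
gold = [{"decision_error"}, {"decision_error", "technological_environment"}, set()]
pred = [{"decision_error"}, {"technological_environment"}, {"technological_environment"}]
cats = ["decision_error", "technological_environment"]
print(round(macro_f1(gold, pred, cats), 3))  # 0.667
```

Macro-averaging gives each category equal weight, so sparse UAV-specific categories influence the score as much as frequent ones; micro-averaging would instead pool counts across categories.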

Yet these advances remain overwhelmingly concentrated in manned aviation. Recent systematic reviews reveal that fewer than 2% of aviation safety NLP papers address UAV incidents [42,43]. This neglect is striking given U.S. Air Force safety data: between 2006 and 2011, the MQ-9 Reaper accumulated a lifetime Class-A mishap rate of 4.6 per 100,000 flight-hours, whereas mature manned fleets recorded rates below 1 per 100,000 flight-hours during 2010–2018 [44,45]. The few UAV-specific efforts demonstrate both promise and fundamental limitations. Zibaei and Borth [46] developed ArduCorpus, containing 935,000 sentences from UAV technical resources, and achieved 32% higher precision in cause–effect extraction than pure machine learning approaches. Similarly, Editya et al. [47] fine-tuned multimodal models for drone accident forensics. Yet these isolated efforts lack the comprehensive framework necessary for systematic UAV safety analysis.

The technical challenges of adapting LLMs to UAV contexts extend beyond data scarcity. UAV operations introduce unique failure modes absent in manned aviation: data-link interruptions, ground control station interface failures, and autonomous system malfunctions that existing aviation taxonomies fail to capture adequately [48]. Moreover, the heterogeneity of UAV platforms, from consumer quadcopters to military-grade fixed-wing systems, defies the relatively standardized frameworks developed for commercial aviation. Context-length constraints further compound these challenges, as comprehensive UAV accident analysis requires simultaneous processing of telemetry logs, sensor streams, environmental data, and operator narratives that frequently exceed current model capacities.

Regulatory and validation challenges present additional barriers. The absence of AI-specific standards in aviation has prevented the adoption of LLM-based safety analysis in operational contexts [49]. Traditional deterministic validation approaches prove inadequate for probabilistic language models, while the “black box” nature of transformer architectures conflicts with aviation’s requirements for transparent, auditable decision-making. Recent audits indicate that explainability dashboards serve technical developers rather than frontline investigators, leaving a critical transparency gap that undermines trust and adoption [50].

The overwhelming focus on post-incident analysis also neglects real-time safety monitoring. While manned aviation benefits from comprehensive Flight Data Monitoring (FDM) programs integrated with safety management systems, UAV operations lack equivalent infrastructure for continuous safety assessment. Real-time UAV decision support, such as collision avoidance, often demands responses within milliseconds, orders of magnitude faster than current LLM inference allows, which confines applications to retrospective analysis rather than proactive risk mitigation.

Overall, although LLMs have already delivered substantive gains in manned-aviation safety analysis—through specialized models such as SafeAeroBERT and AviationGPT—their application to UAV safety remains nascent and fragmented. The unique operational characteristics of unmanned systems—remote piloting paradigms, heterogeneous platforms, elevated accident rates, and distinct failure modes—demand purpose-built approaches rather than adapted manned aviation solutions. This gap motivates our development of HFACS-UAV and associated prompting methodologies, specifically designed to address the technical, regulatory, and operational challenges inherent in UAV accident investigation while leveraging the demonstrated capabilities of modern language models.

2.4. Prompt Engineering and Multi-Agent Systems for Domain-Specific Reasoning

The application of LLMs to complex reasoning tasks has revealed a fundamental tension between generative capabilities and propensity for hallucination in specialized domains [51]. Chain-of-Thought (CoT) prompting has emerged as a transformative approach, enabling LLMs to decompose complex problems into tractable reasoning steps [52]. This paradigm has evolved into sophisticated variants: Tree of Thoughts (ToT) explores multiple reasoning paths simultaneously [17], while self-consistency sampling aggregates diverse reasoning chains to improve reliability [53]. The distinction between manual and automatic CoT generation reveals critical insights for domain adaptation—while zero-shot CoT using “let’s think step by step” shows promise [54], domain-specific tasks require carefully crafted examples that encode expert knowledge [55]. Recent work demonstrates that integrating domain expertise directly into prompt structures yields substantial improvements: Singhal et al. [56] achieved physician-level performance by embedding clinical guidelines into medical reasoning prompts, while Liu et al. [15] integrated HFACS into aviation safety analysis, surpassing human expert accuracy on several classification tasks. The emergence of multi-agent systems further expands these capabilities [57]. By orchestrating specialized agents that maintain distinct knowledge bases and reasoning strategies, complex analytical tasks can be decomposed across multiple experts [58]. Hong et al. [59] demonstrated that structured multi-agent collaboration could achieve 85.9% success rates on complex software engineering tasks, suggesting similar potential for multi-faceted accident investigation.
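Self-consistency, as described above, reduces to sampling several reasoning chains and voting on their final answers. The sketch below covers only the voting step, assuming the final category label has already been parsed from each sampled chain; `self_consistency_vote` and the sample labels are hypothetical, and the sampling itself (which requires an LLM) is not shown.

```python
from collections import Counter


def self_consistency_vote(answers: list[str]) -> tuple[str, float]:
    """Majority vote over final answers from independently sampled reasoning chains.

    Returns the winning answer and its vote share; ties go to the answer seen
    first, because Counter.most_common orders equal counts by first occurrence.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


# Final labels parsed from five hypothetical sampled chains for one report.
samples = ["decision_error", "skill_based_error", "decision_error",
           "decision_error", "perceptual_error"]
print(self_consistency_vote(samples))  # ('decision_error', 0.6)
```

The vote share can double as a rough confidence signal, for example to route low-agreement reports to human review.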

Complementary to CoT and multi-agent prompting, recent retrieval-augmented generation (RAG) methods reduce hallucinations by explicitly coupling reasoning with targeted retrieval. ReAct interleaves chain-of-thought with tool calls so the model plans, retrieves, and verifies iteratively [60]; Self-RAG learns when to retrieve and how to critique drafts via reflection tokens, improving factuality and citation faithfulness [61]; Active RAG (FLARE) decides when/what to retrieve during generation rather than only once up front [62]; GraphRAG builds corpus-level knowledge graphs to support multi-hop evidence aggregation for complex queries [63].

Yet, significant gaps remain in applying these techniques to UAV accident analysis. Existing prompt engineering assumes stable domain knowledge, while UAV operations span rapidly evolving technologies with shifting failure modes—from early loss-of-link scenarios to contemporary autonomy conflicts [1]. Current multi-agent frameworks lack the rigor required for safety-critical analysis, providing neither evidence traceability nor deterministic guarantees demanded by aviation standards [64]. Most critically, the heterogeneous nature of UAV accident data—spanning narrative reports, telemetry logs, maintenance records, and regulatory notices—exceeds current integration capabilities. While retrieval-augmented generation shows promise [65], existing approaches focus on isolated tasks rather than the end-to-end investigative workflow from initial report intake through final safety recommendations. The fragmentation of UAV incident data, with most minor occurrences never entering formal databases, demands systems capable of active data collection, intelligent report completion, and progressive refinement through human-in-the-loop interaction. These limitations motivate our development of an integrated multi-agent framework that combines HFACS-guided prompting with comprehensive investigation capabilities, addressing the complete lifecycle of UAV accident analysis while maintaining the rigor demanded by safety-critical applications.

3. Methodology

3.1. UAV-Specific Enhancement-Pattern Development

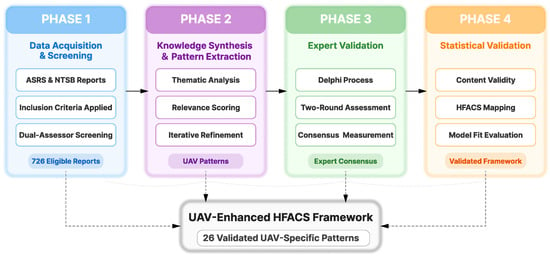

This study adopted a four-phase, evidence-driven procedure (Figure 2) to extend HFACS 8.0 to unmanned-aviation contexts. The design mirrors best practice in taxonomy engineering for aviation mishap research [7,15,23], ensuring both methodological rigor and theoretical fidelity.

Figure 2.

Systematic four-phase methodology for developing UAV-specific HFACS enhancement patterns.

3.1.1. Phase 1—Data Acquisition and Screening

We harvested 847 small-UAS occurrence reports from the NASA Aviation Safety Reporting System (ASRS) [22] and the National Transportation Safety Board (NTSB) accident database [66], covering the period January 2010–May 2025. This window brackets the introduction of FAA Part 107 [4] and captures sUAS integration and oversight efforts [29,67]. Reports were retained when they satisfied the inclusion rule:

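$$\text{Include}(i) = H_i \land \left(D_i \geq \tau_D\right) \land C_i,$$

where $H_i$ denotes human-operator involvement in report $i$, $D_i \in \{1, \dots, 5\}$ a five-point narrative-detail score with minimum threshold $\tau_D$, and $C_i$ the presence of causal statements. Dual-assessor screening yielded 726 eligible reports (85.7% retention; agreement assessed with Cohen’s $\kappa$), providing the empirical substrate for pattern discovery.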
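The inclusion rule is a conjunction of three screening criteria: human-operator involvement, a sufficiently high narrative-detail score, and the presence of a causal statement. A minimal sketch follows; the minimum detail score of 3 is a hypothetical value chosen for illustration, since the actual threshold is not stated here, and `Report`, `eligible`, and `retention_pct` are illustrative names. The last line checks the reported retention figure of 726 out of 847 reports.

```python
from dataclasses import dataclass


@dataclass
class Report:
    human_operator: bool    # a human operator was involved
    detail_score: int       # narrative-detail score on a 1-5 scale
    causal_statement: bool  # the narrative contains a causal statement


def eligible(r: Report, min_detail: int = 3) -> bool:
    """Conjunctive inclusion rule; min_detail = 3 is a hypothetical threshold."""
    return r.human_operator and r.detail_score >= min_detail and r.causal_statement


def retention_pct(kept: int, total: int) -> float:
    """Share of screened reports retained, as a percentage to one decimal."""
    return round(100 * kept / total, 1)


print(eligible(Report(True, 4, True)), eligible(Report(True, 2, True)))  # True False
print(retention_pct(726, 847))  # 85.7
```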

3.1.2. Phase 2—Knowledge Synthesis and Pattern Extraction

In the subsequent knowledge-synthesis phase, thematic analysis was conducted following the established methodology of Braun and Clarke [68]. Recent large-scale UAV human-factor audits [6,13,27] informed the inductive coding stage and ensured construct coverage. The analysis employed both inductive and deductive coding approaches, with initial codes derived from existing HFACS categories and emergent codes identified through a systematic review of incident narratives. Patterns were extracted and ranked using a frequency-weighted relevance-scoring method:

$$R_j = \frac{1}{N} \sum_{i=1}^{N} x_{ij}\, w_i,$$

where $R_j$ is the normalized relevance score of pattern $j$; $N$ is the total number of reports (726); $x_{ij} \in \{0, 1\}$ is the binary occurrence of pattern $j$ in report $i$; and $w_i$ denotes the severity weight based on outcome severity (1 = minor incident, 3 = substantial-damage accident). Severity weights were assigned in accordance with the accident-outcome scale in the FAA Aeronautical Information Manual (AIM § 11-8-4, 2025), § 8 [4]. Initially, 35 candidate patterns exceeded the empirically determined threshold ($R_j \geq \tau_R$); these were subsequently refined through iterative team review, yielding a consolidated list of 26 patterns that demonstrated both theoretical coherence and practical significance.
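The frequency-weighted relevance score can be computed directly from a report-by-pattern occurrence matrix and per-report severity weights. This sketch assumes the normalized score averages the severity-weighted occurrences over the N reports; `relevance_scores`, the example matrix, the weights, and the retention threshold of 1.5 are hypothetical, not values from the study.

```python
def relevance_scores(occurs: list[list[int]], weights: list[float]) -> list[float]:
    """Severity-weighted mean occurrence, (1/N) * sum_i x_ij * w_i, for every pattern j.

    occurs[i][j] is 1 if pattern j appears in report i, else 0;
    weights[i] is the severity weight of report i (1 = minor, 3 = substantial damage).
    """
    n = len(occurs)
    if n == 0 or n != len(weights):
        raise ValueError("need a non-empty matrix and one weight per report")
    m = len(occurs[0])
    return [sum(occurs[i][j] * weights[i] for i in range(n)) / n for j in range(m)]


# Three hypothetical reports x two patterns.
x = [[1, 0],
     [1, 1],
     [0, 1]]
w = [1, 3, 3]
scores = relevance_scores(x, w)
tau = 1.5  # hypothetical retention threshold
print([round(s, 3) for s in scores], [j for j, s in enumerate(scores) if s >= tau])
# [1.333, 2.0] [1]
```

Pattern 1 outranks pattern 0 despite equal frequency because it occurs in the more severe reports, which is the intended effect of severity weighting.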

As depicted in Figure 2, the methodology progressed through four distinct phases, each supported by specific quality-control measures. Phase 1 established the empirical foundation through systematic data collection and screening, achieving high inter-reviewer reliability (Cohen’s $\kappa$) for inclusion decisions. Phase 2 employed established thematic-analysis techniques to extract and consolidate patterns, with a frequency-weighted scoring method ensuring that patterns captured both prevalence and severity.

3.1.3. Phase 3—Expert Validation

Phase 3 incorporated expert domain knowledge through a structured Delphi process [69,70], achieving substantial consensus across all patterns. Phase 4 then delivered statistical validation of pattern structure and content validity, confirming the theoretical coherence of the enhanced framework. Expert validation used a two-round Delphi technique, engaging five domain specialists with 7–15 years of UAV and human-factors experience. The panel comprised two certified remote pilots, one aviation safety analyst, one automation researcher, and one regulatory-compliance engineer.

In Round 1, each expert rated every pattern on three criteria—theoretical relevance, practical significance, and definitional clarity—using a five-point Likert scale. The composite score for pattern p was as follows:

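$$S_p = \frac{1}{E} \sum_{e=1}^{E} r_{ep},$$

where $E$ is the number of experts and $r_{ep}$ is expert $e$’s mean rating for pattern $p$. Patterns with $S_p$ at or above the retention cutoff advanced to Round 2, reflecting the 75-percentile retention rule recommended by [70]. Inter-rater reliability across all patterns was quantified with Fleiss’ kappa,

$$\kappa = \frac{\bar{P} - \bar{P}_e}{1 - \bar{P}_e},$$

where $\bar{P}$ is the mean observed pairwise agreement across rated items and $\bar{P}_e$ is the agreement expected by chance,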

yielding a $\kappa$ with a 95% CI of 0.65–0.92, interpreted as substantial agreement under the Landis–Koch scale [71].
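Fleiss' kappa generalizes chance-corrected agreement to more than two raters. A minimal sketch computed from an items-by-categories count table follows; in this study's setting, items would be the 26 patterns and categories the Likert points, though that table layout is an assumption. The example is a classic 10-item, 14-rater worked example whose kappa is about 0.21, not data from this panel.

```python
def fleiss_kappa(table: list[list[int]]) -> float:
    """Fleiss' kappa from an items x categories table of rating counts.

    table[i][j] = number of raters who assigned item i to category j;
    every row must sum to the same number of raters n >= 2.
    """
    if not table:
        raise ValueError("table must contain at least one item")
    n_items = len(table)
    n = sum(table[0])
    if n < 2 or any(sum(row) != n for row in table):
        raise ValueError("each item needs the same number (>= 2) of raters")
    k = len(table[0])
    # Share of all assignments falling in each category.
    p = [sum(row[j] for row in table) / (n_items * n) for j in range(k)]
    # Observed pairwise agreement for each item.
    p_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in table]
    p_bar = sum(p_i) / n_items
    p_e = sum(pj * pj for pj in p)
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (p_bar - p_e) / (1 - p_e)


# Classic worked example: 10 items, 14 raters, 5 categories.
TABLE = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]
print(round(fleiss_kappa(TABLE), 3))  # 0.21
```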

Round 2 targeted items with initial disagreement (low agreement on the individual pattern). After reviewing anonymized feedback, experts re-rated those items. Consensus was measured with the inter-quartile range (IQR) index,

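$$C_p = 1 - \frac{\mathrm{IQR}_p}{W},$$

where $C_p$ is the consensus index for pattern $p$, $\mathrm{IQR}_p$ the inter-quartile range of re-ratings, and $W$ the effective width of the 1–5 scale. A higher $C_p$ indicates stronger consensus. Following [70], consensus was declared when $C_p$ reached the prescribed cutoff $c^{*}$ (equivalently, $\mathrm{IQR}_p \leq W(1 - c^{*})$). All 26 patterns exceeded this benchmark, with a standard deviation of $C_p$ across patterns of 0.08.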
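The IQR-based consensus index can be sketched for a single pattern's re-ratings as follows, assuming $C_p = 1 - \mathrm{IQR}_p / W$ with $W = 4$ for a 1–5 scale (both assumptions about the exact form). IQR conventions differ across software; this sketch uses the standard library's `statistics.quantiles` with `method="inclusive"`, which may not match the convention used in [70], and `consensus_index` is an illustrative name.

```python
import statistics


def consensus_index(ratings: list[int], width: float = 4.0) -> float:
    """1 - IQR / W for one pattern's re-ratings (needs at least two ratings).

    width = 4.0 assumes W = 5 - 1 for a 1-5 Likert scale; quartiles follow
    statistics.quantiles(method="inclusive"), one of several IQR conventions.
    """
    q1, _, q3 = statistics.quantiles(ratings, n=4, method="inclusive")
    return 1 - (q3 - q1) / width


print(consensus_index([4, 4, 5, 5, 5]))  # 0.75
print(consensus_index([5, 5, 5, 5, 5]))  # 1.0
```

With five raters, a one-point spread in the middle half of ratings already lowers the index to 0.75, so the cutoff choice strongly determines which patterns count as consensual.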

Table A1 presents the complete set of 26 UAV-specific enhancement patterns with their operational definitions and representative case examples derived from the ASRS incident database. Each pattern is mapped to specific HFACS categories while addressing unique operational characteristics of UAV systems. The organizational-level patterns emphasize regulatory compliance challenges specific to UAV operations, including Part 107 requirements and LAANC authorization processes that have no direct equivalent in traditional manned aviation. The preconditions level shows the highest pattern concentration, reflecting the critical role of technological dependencies such as command-and-control link reliability and telemetry accuracy in UAV safety outcomes.

3.1.4. Phase 4—Statistical Validation

Content validity was assessed through expert evaluation using Lawshe’s Content Validity Ratio (CVR) [72]:

$$\mathrm{CVR}_p = \frac{n_{e,p} - N/2}{N/2},$$

where $n_{e,p}$ is the number of experts rating pattern $p$ as essential (a rating at or above the essential cutoff on the 5-point scale), and $N$ is the total number of experts. With five experts, Lawshe’s CVR can take only the values {−1, −0.6, −0.2, 0.2, 0.6, 1.0}. Individual CVR values ranged from 0.60 to 1.00, and 21 of 26 patterns (80.8%) met the critical cutoff of 0.60 [72]. The mean CVR was 0.72.
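Lawshe's CVR depends only on how many experts rate a pattern as essential, which is why a five-member panel admits just six distinct values. The sketch below computes the ratio and enumerates those values; `lawshe_cvr` is an illustrative name.

```python
def lawshe_cvr(n_essential: int, n_experts: int) -> float:
    """Lawshe's content validity ratio (n_e - N/2) / (N/2), ranging from -1 to 1."""
    if n_experts <= 0:
        raise ValueError("n_experts must be positive")
    if not 0 <= n_essential <= n_experts:
        raise ValueError("n_essential must lie between 0 and n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


# With N = 5 experts only six CVR values are attainable.
print([round(lawshe_cvr(k, 5), 1) for k in range(6)])  # [-1.0, -0.6, -0.2, 0.2, 0.6, 1.0]
```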

Given the limited size of our expert panel (5 experts rating 26 patterns), conventional factor analysis was not feasible. We therefore used theoretical mapping validation instead: each pattern was systematically mapped to its corresponding HFACS level. This mapping achieved 96% expert consensus and provides structural validity evidence appropriate to the sample size.

Validity was further summarized using the following indices derived from the expert rating data:

- Pattern-level agreement: Mean (range: 0.71–0.94).

- Content validity index: 88.5% (23/26 patterns meeting the CVR criterion).

- Theoretical alignment: 96% expert consensus on HFACS mapping.

- Practical relevance: Mean rating 4.2/5.0 (SD = 0.6).

Taken together, these metrics suggest that the 26 UAV-specific enhancement patterns have adequate content validity and extend the HFACS framework while maintaining theoretical coherence and practical applicability.

The resulting framework addresses methodological gaps in UAV safety analysis. Its systematic, statistically validated development yields an HFACS extension tailored to UAV operations. This extension remains aligned with existing aviation safety frameworks and provides a foundation for incident investigation and prevention in the rapidly evolving unmanned aviation sector. Detailed definitions of all extracted UAV enhancement patterns are provided in Table A1.

3.2. HFACS-UAV Framework Development

Development of the HFACS-UAV framework proceeded through three sequential stages: architectural design, pattern encoding, and LLM integration.

3.2.1. Stage 1—Architectural Design

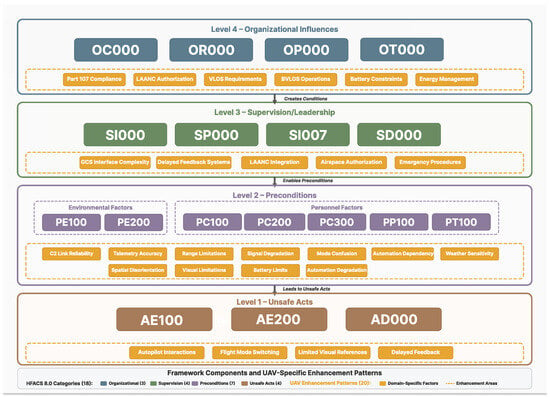

The framework extends the four-level HFACS hierarchy [23] via a dual-taxonomy architecture that embeds UAV-specific patterns while preserving the original causal structure (Figure 3). The HFACS 8.0 taxonomy is preserved in full (four levels, 18 categories) with no deletions or renamings; UAV-specific factors are introduced solely as additive extensions mapped to their respective HFACS parent categories. Each pattern is linked to its parent HFACS category, enabling the following:

- (a) Selective activation: patterns are invoked only when the incident context demands them;

- (b) Backward compatibility with classic HFACS analyses;

- (c) Parallel causal reasoning across mixed manned- and unmanned-aviation chains [73].

Together, these properties ensure both flexibility and interoperability of the HFACS-UAV framework. For clarity and analytical consistency, we organize the taxonomy into four hierarchical levels (L1–L4).

Figure 3.

HFACS–UAV architecture linking 26 UAV enhancement patterns to HFACS 8.0. UPPER-CASE codes (e.g., AE100, PE200, SI000, OC000) indicate original HFACS 8.0 categories; the titled pattern nodes (e.g., C2 Link Reliability, Battery Constraints) are UAV-specific extensions mapped to their HFACS parents.

3.2.2. Stage 2—Pattern Encoding

Each enhancement pattern is defined as the tuple

$$P = \langle D, I, M, R, C \rangle,$$

where D = definition, I = empirical indicators, M = HFACS mappings, R = inter-pattern relations, and C = activation constraints [26]. Directed-graph relations enable causal-chain reconstruction using do-calculus reasoning [74].
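One way to hold the five-part pattern definition in code is a small immutable record plus a walk over the R relations. This is a sketch under stated assumptions. The field names, the parent code assigned to each pattern, and the "Telemetry Accuracy" relation are all hypothetical. The walk only lists downstream patterns and does not perform do-calculus inference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnhancementPattern:
    """P = <D, I, M, R, C> for one UAV enhancement pattern."""
    name: str
    definition: str                    # D: definition
    indicators: tuple[str, ...]        # I: empirical indicators
    hfacs_parents: tuple[str, ...]     # M: HFACS 8.0 parent codes
    relations: tuple[str, ...] = ()    # R: names of downstream patterns
    constraints: tuple[str, ...] = ()  # C: activation constraints


def causal_chain(patterns: dict[str, EnhancementPattern], start: str) -> list[str]:
    """Depth-first list of patterns reachable from `start` via R edges."""
    order: list[str] = []
    seen: set[str] = set()
    stack = [start]
    while stack:
        name = stack.pop()
        if name in seen or name not in patterns:
            continue  # skip revisits (cycles) and unknown names
        seen.add(name)
        order.append(name)
        # Push in reverse so the first listed relation is visited first.
        stack.extend(reversed(patterns[name].relations))
    return order


# Illustrative entries only; parent codes and relations are hypothetical.
library = {
    "C2 Link Reliability": EnhancementPattern(
        name="C2 Link Reliability",
        definition="Loss or degradation of the command-and-control link",
        indicators=("lost link", "signal loss"),
        hfacs_parents=("PE200",),
        relations=("Telemetry Accuracy",),
    ),
    "Telemetry Accuracy": EnhancementPattern(
        name="Telemetry Accuracy",
        definition="Displayed telemetry diverges from the aircraft state",
        indicators=("altitude reading", "gps drift"),
        hfacs_parents=("PE200",),
        relations=("C2 Link Reliability",),  # a cycle, handled by `seen`
    ),
}
print(causal_chain(library, "C2 Link Reliability"))
# ['C2 Link Reliability', 'Telemetry Accuracy']
```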

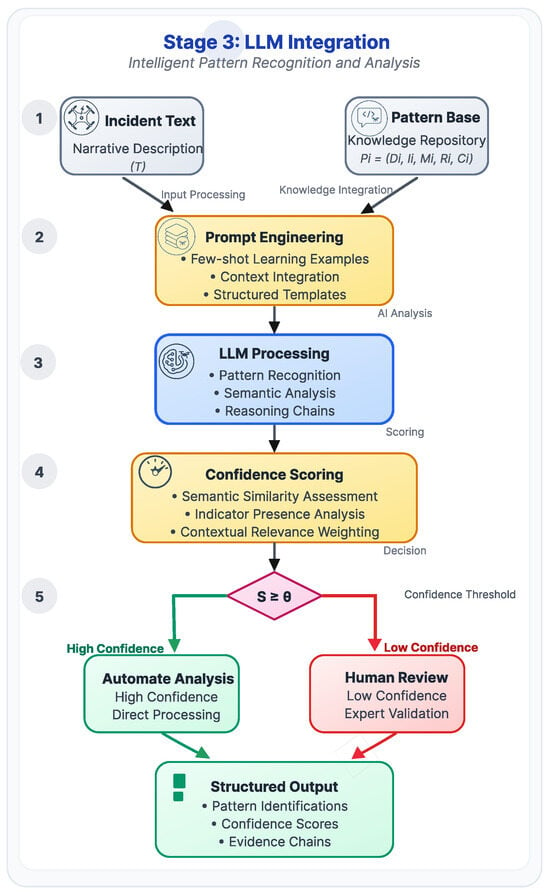

3.2.3. Stage 3—LLM Integration

The framework’s integration with large language models employs structured prompt engineering to leverage encoded pattern representations for automated incident analysis (Figure 4). Incident narratives T are processed through a confidence-weighted pattern-matching algorithm:

$$\mathrm{Conf}(P, T) = \alpha\,\mathrm{Sim}(T, D) + \beta\,\mathrm{Ind}(T, I) + \gamma\,\mathrm{Ctx}(T, C),$$

where α, β, and γ are weighting parameters for semantic similarity, indicator presence, and contextual relevance, respectively. The functions Sim, Ind, and Ctx compute semantic similarity, indicator matching, and contextual relevance between the incident narrative and the corresponding pattern components. Conf(P, T) ranges from 0 to 1, with higher values indicating stronger pattern activation. This bound holds when each function returns a value in [0, 1] and the non-negative weights sum to one.
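The weighted score can be sketched as follows. Every concrete choice here is an assumption. Token-set Jaccard overlap stands in for the semantic-similarity and contextual-relevance functions; an embedding model would be a more realistic choice. Indicator matching is a case-insensitive substring test, and the default weights (0.5, 0.3, 0.2) are hypothetical.

```python
import re
from typing import Sequence


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Token-set overlap in [0, 1]; a crude stand-in for semantic similarity."""
    ta, tb = _tokens(a), _tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def indicator_match(narrative: str, indicators: Sequence[str]) -> float:
    """Fraction of indicator phrases that occur in the narrative."""
    if not indicators:
        return 0.0
    text = narrative.lower()
    return sum(phrase.lower() in text for phrase in indicators) / len(indicators)


def pattern_confidence(
    narrative: str,
    definition: str,
    indicators: Sequence[str],
    context: str,
    weights: tuple[float, float, float] = (0.5, 0.3, 0.2),  # hypothetical
) -> float:
    """Conf(P, T) = alpha*Sim(T, D) + beta*Ind(T, I) + gamma*Ctx(T, C)."""
    alpha, beta, gamma = weights
    if min(weights) < 0 or abs(alpha + beta + gamma - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    return (
        alpha * jaccard(narrative, definition)
        + beta * indicator_match(narrative, indicators)
        + gamma * jaccard(narrative, context)
    )
```

Each term lies in [0, 1] and the weights form a convex combination, so the score stays in [0, 1], matching the range stated above.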

Figure 4.

End-to-end LLM workflow from incident narratives and pattern schemas to confidence-scored, structured outputs.

The prompt engineering methodology supplies the complete pattern knowledge base as context, giving the LLM access to pattern definitions, indicators, and inter-pattern relationships during analysis. Few-shot examples derived from expert-analyzed incidents show how patterns are applied, which promotes consistent interpretation across incident types [52].

The integration uses a structured JSON output format containing pattern identifications, confidence scores, supporting evidence excerpts, and reasoning chains that trace each identified pattern back to textual evidence. When pattern-matching confidence falls below predetermined thresholds, the system invokes human-in-the-loop mechanisms that flag incidents for expert review [1]. This hybrid approach preserves analytical quality while enabling scalable processing of large incident datasets, and it remains compatible with the dual-taxonomy architecture.

3.3. End-to-End Incident Processing Pipeline

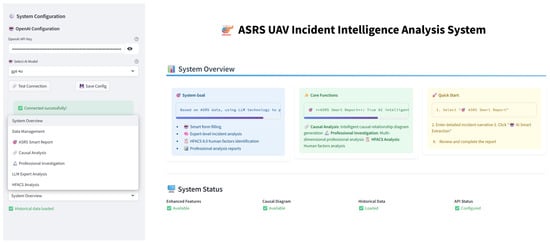

The theoretical framework culminates in our end-to-end incident processing pipeline that integrates automated report collection, intelligent completion, and evidence-grounded analytical workflows. This comprehensive system transforms the HFACS-UAV methodology into a practical tool capable of processing real-world incident data while maintaining analytical rigor and transparency. Figure 5 presents the complete system interface, demonstrating the integration of multiple analytical modules within a unified platform.

Figure 5.

End-to-end platform integrating system configuration, data management, LLM-based expert analysis, and an HFACS coding interface.

3.3.1. Intelligent Data Preprocessing and Completion System

The system incorporates a structured pipeline that addresses the inherent incompleteness and variability of real-world incident reports. The preprocessing begins with automated ASRS data parsing and UAV-specific incident identification, followed by systematic completeness assessment and intelligent information completion.

Data Completeness Assessment: The system evaluates data sufficiency through a structured assessment of critical information fields. Based on an analysis of ASRS reporting requirements and HFACS analytical needs, we identified 23 critical fields across four categories: temporal–spatial context (e.g., date, time, location, airspace), aircraft specifications (e.g., make, model, weight, propulsion type), operational parameters (e.g., flight phase, control method, mission type), and incident characteristics (e.g., primary problem, contributing factors, environmental conditions). The LLM performs the completeness assessment through structured evaluation prompts that check both field presence and information quality.
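The paper’s assessment is performed by the LLM and also judges information quality. The sketch below covers only the field-presence half, as a deterministic pre-check. It uses the 14 example fields named above as a stand-in for the full list of 23, and the snake_case field names are hypothetical.

```python
CRITICAL_FIELDS: dict[str, tuple[str, ...]] = {
    "temporal_spatial": ("date", "time", "location", "airspace"),
    "aircraft": ("make", "model", "weight", "propulsion_type"),
    "operational": ("flight_phase", "control_method", "mission_type"),
    "incident": ("primary_problem", "contributing_factors", "environmental_conditions"),
}


def completeness(record: dict) -> dict[str, float]:
    """Share of critical fields that are present and non-blank, per category."""
    scores: dict[str, float] = {}
    present_total = field_total = 0
    for category, names in CRITICAL_FIELDS.items():
        # A field counts as present only if it is not None and not whitespace.
        present = sum(1 for name in names if str(record.get(name) or "").strip())
        scores[category] = present / len(names)
        present_total += present
        field_total += len(names)
    scores["overall"] = present_total / field_total
    return scores
```

Records whose overall score falls short would then be routed to the LLM-driven completion step described next.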

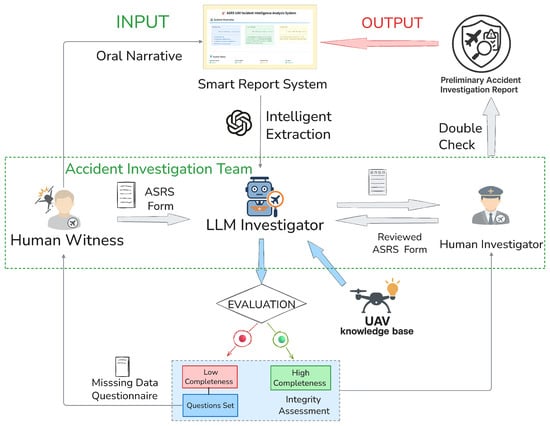

Intelligent Information Completion (human-in-the-loop): After a witness submits an oral narrative via the smart reporting interface, the system performs LLM-based extraction, assigns a confidence to each field, and tracks the completeness of the ASRS form. For records with insufficient completeness, the system uses LLM-driven contextual reasoning, informed by the UAV knowledge base, to infer missing information and generate targeted completion questions. These questions are returned to the witness for clarification, while a human investigator reviews the preliminary extraction and the evolving ASRS form. All inferred items carry explicit confidence tags and remain distinguishable from explicitly reported information. The LLM investigator and the human investigator operate as a joint team. The witness answers or edits, the system integrates the new inputs and re-scores the record, and the human investigator performs a final confirmation before the record proceeds to HFACS 8.0 analysis (see Figure 6).

Figure 6.

Human-in-the-loop information-completion workflow linking witness Q&A, LLM-based extraction and completeness-guided question generation, and investigator final confirmation.

3.3.2. LLM Integration and Intelligent Analysis Engine

The framework operationalizes the HFACS-UAV architecture through an LLM integration strategy that turns its reasoning framework into executable analytical workflows. The implementation combines structured prompt engineering, multi-dimensional confidence assessment, and quality validation mechanisms.

Structured Prompt Engineering: The system generates prompts dynamically and systematically incorporates the four components of the HFACS-UAV architecture: domain knowledge injection, task decomposition, evidence binding, and confidence quantification. Each analysis session begins by injecting relevant UAV operational knowledge selected from a knowledge base that contains 600+ technical terms, 28 UAV-specific risk factors, and regulatory frameworks. The task decomposition function breaks HFACS classification into sequential reasoning steps, guiding the LLM through a systematic evaluation of each candidate category with explicit reasoning chains.
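The sketch below shows one way the four prompt components might be assembled into a single prompt string. Several details are assumptions for illustration: the glossary-matching rule (keep terms that appear in the narrative), the section wording, and the function name. The confidence bands are the ones given under Confidence Calibration and Evidence Binding.

```python
from typing import Mapping, Sequence

CONFIDENCE_RUBRIC = (
    "0.9-1.0 explicit statement; 0.7-0.8 strong indirect evidence; "
    "0.5-0.6 moderate implication; 0.3-0.4 weak indicator; "
    "0.1-0.2 very weak or speculative"
)


def build_prompt(
    narrative: str, glossary: Mapping[str, str], categories: Sequence[str]
) -> str:
    text = narrative.lower()
    # 1. Domain knowledge injection: only glossary terms found in the narrative.
    relevant = sorted(term for term in glossary if term.lower() in text)
    knowledge = "\n".join(f"- {term}: {glossary[term]}" for term in relevant)
    # 2. Task decomposition: one explicit reasoning step per HFACS category.
    steps = "\n".join(
        f"Step {i}: Decide whether {code} applies and explain why."
        for i, code in enumerate(categories, start=1)
    )
    # 3. Evidence binding and 4. confidence quantification.
    rules = (
        "For every category you mark as present, quote the supporting "
        "narrative text verbatim.\n"
        f"Assign a confidence using this rubric: {CONFIDENCE_RUBRIC}."
    )
    return "\n\n".join(
        [
            "UAV domain knowledge:\n" + (knowledge or "- (no matching terms)"),
            "Incident narrative:\n" + narrative,
            "Analysis steps:\n" + steps,
            "Rules:\n" + rules,
        ]
    )
```

Filtering the glossary per narrative keeps the injected context short. A production system would more likely use retrieval than substring matching.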

Multi-Dimensional Quality Assessment: The system evaluates classification reliability across multiple dimensions. For each HFACS classification, the quality score is computed as follows:

where the four component scores capture confidence appropriateness (whether confidence levels align with evidence strength), evidence quality (the specificity and relevance of textual citations), reasoning quality (logical coherence and references to the narrative), and category-layer consistency (alignment with the HFACS hierarchical structure).
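The aggregation formula is not reproduced here, so the sketch below assumes an equally weighted mean of the four component scores, each in [0, 1]. The weights are a hypothetical default, not the authors’ values, and the function name is illustrative.

```python
from typing import Sequence


def quality_score(
    components: Sequence[float],
    weights: Sequence[float] = (0.25, 0.25, 0.25, 0.25),  # assumed equal weights
) -> float:
    """Weighted mean of (confidence appropriateness, evidence quality,
    reasoning quality, category-layer consistency)."""
    if len(components) != 4 or len(weights) != 4:
        raise ValueError("expected four component scores and four weights")
    if any(not 0.0 <= c <= 1.0 for c in components):
        raise ValueError("component scores must lie in [0, 1]")
    # Dividing by the weight sum keeps the result in [0, 1] for any positive weights.
    return sum(w * c for w, c in zip(weights, components)) / sum(weights)
```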

Confidence Calibration and Evidence Binding: The evidence binding function applies a structured assessment protocol with explicit confidence scoring: 0.9–1.0 for explicit textual statements, 0.7–0.8 for strong indirect evidence, 0.5–0.6 for moderate implications, 0.3–0.4 for weak indicators, and 0.1–0.2 for very weak or speculative evidence. This rubric makes the analysis transparent and allows classification decisions to be validated systematically through traceable evidence chains.
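The rubric can be enforced mechanically by clamping a model-reported confidence into the band permitted for its evidence level. Clamping is an assumed enforcement strategy and the level names are hypothetical. The band limits are those listed above.

```python
EVIDENCE_BANDS: dict[str, tuple[float, float]] = {
    "explicit": (0.9, 1.0),         # explicit textual statement
    "strong_indirect": (0.7, 0.8),  # strong indirect evidence
    "moderate": (0.5, 0.6),         # moderate implication
    "weak": (0.3, 0.4),             # weak indicator
    "very_weak": (0.1, 0.2),        # very weak or speculative evidence
}


def bind_confidence(evidence_level: str, reported: float) -> float:
    """Clamp a reported confidence into the band for its evidence level.

    Raises KeyError for an unknown evidence level.
    """
    low, high = EVIDENCE_BANDS[evidence_level]
    return min(max(reported, low), high)
```

For example, an over-confident 0.85 attached to a weak indicator is pulled down to 0.4, while 0.95 for an explicit statement passes through unchanged.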

3.3.3. Interactive Analysis Platform and Quality Assurance

The complete system is implemented as a modular web-based application using the Streamlit framework, providing an intuitive interface for both individual incident analysis and batch processing of large datasets. The platform incorporates real-time progress monitoring, interactive visualization of HFACS classification results, and comprehensive quality assurance mechanisms including human-in-the-loop validation workflows.

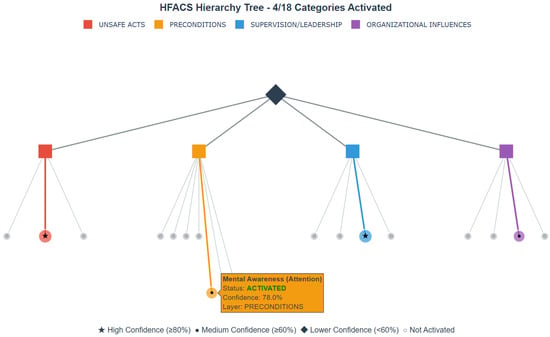

As shown in Figure 7, the platform renders the structured JSON outputs as interactive hierarchical trees. These trees display the four-layer HFACS structure with color-coded confidence levels and detailed classification metrics.

Figure 7.

Interactive HFACS hierarchy highlighting four selected categories across the four tiers.

When classification confidence falls below predetermined thresholds (typically 0.5 for an individual classification or 0.6 for overall analysis confidence), the incident is flagged for expert review. Expert feedback from these reviews also supports continuous refinement of the analytical models and knowledge bases as new UAV operational patterns emerge. The complete system has been deployed as a publicly accessible prototype at https://uav-accident-forensics.streamlit.app/ (accessed on 7 October 2025) for evaluation and testing.
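The review trigger can be sketched against a parsed JSON result. The schema keys (`classifications`, `code`, `confidence`, `overall_confidence`) are hypothetical. The thresholds are the typical values stated above, and an incident is flagged if either condition holds.

```python
import json

ITEM_THRESHOLD = 0.5     # typical per-classification threshold (from the text)
OVERALL_THRESHOLD = 0.6  # typical overall-analysis threshold (from the text)


def review_decision(llm_output: str) -> tuple[bool, list[str]]:
    """Return (flag_for_expert_review, low-confidence HFACS codes)."""
    result = json.loads(llm_output)
    low = [
        item["code"]
        for item in result["classifications"]
        if item["confidence"] < ITEM_THRESHOLD
    ]
    flagged = bool(low) or result["overall_confidence"] < OVERALL_THRESHOLD
    return flagged, low


example = json.dumps({
    "classifications": [
        {"code": "PE200", "confidence": 0.92, "evidence": "lost link at 200 ft"},
        {"code": "PC300", "confidence": 0.35, "evidence": "felt tired"},
    ],
    "overall_confidence": 0.71,
})
print(review_decision(example))  # (True, ['PC300'])
```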

4. Dataset Construction and Annotation

4.1. Data Source and Selection

To address the shortage of HFACS-coded UAV accident data, we developed a dedicated UAV-HFACS dataset using the Aviation Safety Reporting System (ASRS) [22], the primary U.S. voluntary safety reporting program operated by NASA. From the complete ASRS repository, we extracted all Unmanned Aircraft System (UAS) reports between 2010 and May 2025, covering three operational categories: Public Aircraft Operations, Recreational Operations/Section 44809, and Part 107 commercial operations. Reports were retained only if they explicitly involved UAV operations, contained sufficiently detailed narratives for human factors analysis, and had complete structured metadata. The selection also ensured diversity across flight phases, control modes, and environmental conditions. Applying these criteria yielded an initial collection of 586 high-quality UAV accident reports.

Each retained record preserved the following ASRS fields essential for HFACS analysis:

- ACN: Unique record identifier for traceability.

- Narrative: Primary analysis text containing detailed event descriptions.

- Synopsis: Auxiliary summary providing context.

- Anomaly: Preliminary classification indicators.

- Flight Phase: Operational phase during the event.

- Control Mode (UAS): Manual, autopilot, or assisted operation.

- Human Factors: Pre-tagged human factor indicators.

- Weather/Environment: Conditions affecting operations.

- Authorization Status: Regulatory compliance and waiver information.

- Visual Observer Configuration: Crew resource management factors.
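The retention criteria and retained fields above can be sketched as a record type with a filter. The text does not say how "explicitly involved UAV operations", "sufficiently detailed", or "complete structured metadata" were operationalized. The UAV term list, the 50-word cut-off, and the set of mandatory metadata fields below are therefore hypothetical, as are the snake_case field names.

```python
import re
from dataclasses import dataclass

UAV_TERMS = {"uas", "uav", "drone", "unmanned", "suas"}  # hypothetical term list
MIN_NARRATIVE_WORDS = 50  # hypothetical cut-off for a "sufficiently detailed" narrative


@dataclass
class AsrsUavRecord:
    acn: str
    narrative: str
    synopsis: str = ""
    anomaly: str = ""
    flight_phase: str = ""
    control_mode: str = ""  # manual, autopilot, or assisted
    human_factors: str = ""
    weather_environment: str = ""
    authorization_status: str = ""
    visual_observer: str = ""


# Hypothetical subset of metadata treated as mandatory.
REQUIRED_METADATA = ("acn", "anomaly", "flight_phase", "control_mode")


def keep_record(rec: AsrsUavRecord) -> bool:
    """Apply the three retention criteria (hypothetical operationalization)."""
    words = re.findall(r"[a-z]+", (rec.narrative + " " + rec.synopsis).lower())
    involves_uav = not UAV_TERMS.isdisjoint(words)
    detailed = len(rec.narrative.split()) >= MIN_NARRATIVE_WORDS
    complete = all(getattr(rec, name).strip() for name in REQUIRED_METADATA)
    return involves_uav and detailed and complete
```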

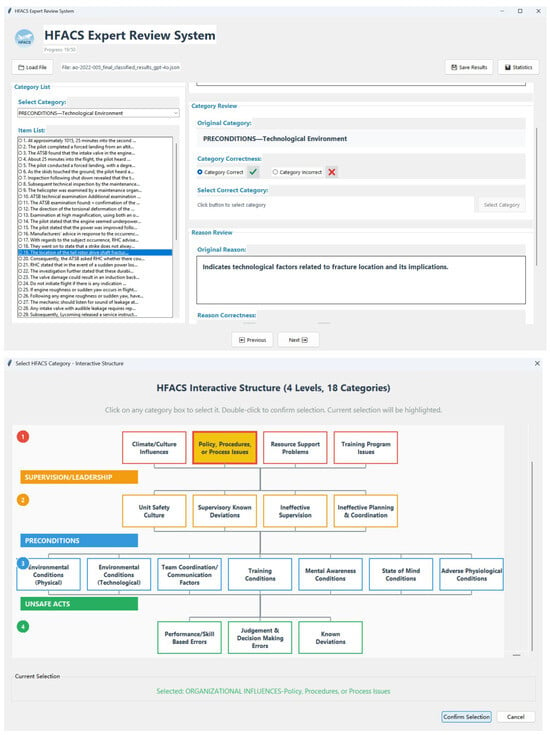

4.2. Annotation and HFACS Coding

Annotation was conducted using an in-house HFACS Expert System (Figure 8), which provided structured workflows, automated consistency checks, and consensus-resolution mechanisms. The annotation team consisted of five domain experts with complementary backgrounds in aviation safety engineering, human factors, UAV operations, accident investigation, and aviation psychology. Each record was independently coded according to the HFACS 8.0 framework, systematically covering four hierarchical levels and 18 categories:

- Level 1 (Unsafe Acts): performance/skill-based errors (AE100), judgment and decision-making errors (AE200), and known deviations (AD000).

- Level 2 (Preconditions): mental awareness conditions (PC100), state of mind conditions (PC200), adverse physiological conditions (PC300), the physical environment (PE100), the technological environment (PE200), team coordination/communication factors (PP100), and training conditions (PT100).

- Level 3 (Unsafe Supervision): supervisory climate/unit safety culture (SC000), supervisory known deviations (SD000), ineffective supervision (SI000), and ineffective planning and coordination (SP000).

- Level 4 (Organizational Influences): organizational climate/culture (OC000), policy, procedures, or process issues (OP000), resource support problems (OR000), and training program problems (OT000).

The corresponding codes can be found in Figure 3. Following annotation, the UAV-HFACS dataset comprises 586 de-identified ASRS UAS reports, each containing the original ACN identifier, normalized narrative text, structured metadata, and HFACS 8.0 labels. The complete dataset contains 10,548 coded data points (586 reports × 18 HFACS categories), and 583 reports (99.5%) contain at least one identified human factor. From this collection, a stratified subset of 200 reports (34.1% of the total) was selected for experimental validation. The subset was chosen to cover diverse operational contexts representatively and to provide a sufficient basis for evaluating model performance.

Figure 8.

HFACS Expert System interface for UAV accident annotation.

To facilitate reproducibility, ACNs are retained to allow matching with supplementary ASRS fields or external datasets. The dataset, along with the HFACS coding guidelines and preprocessing scripts, will be released under a research-only license, enabling its use in safety analysis, human factors research, and AI-assisted accident investigation.

4.3. Quality Assurance and Dataset Characteristics

Quality assurance relied on independent coding by all experts, with inter-rater reliability exceeding 85%, and discrepancies were resolved in structured consensus meetings. Each classification was also assigned a confidence score on a 0–1 scale, and all HFACS categories were checked for completeness and consistency. From the initial pool, a final experimental subset of 200 records was selected for analysis, prioritizing completeness, temporal continuity, and narrative clarity. The resulting dataset contains 3600 coded data points (200 records × 18 categories), including 1522 positive instances (an average of 7.6 factors per record), and covers all HFACS categories. The category distribution reflects the complexity of real UAV accidents. High-frequency factors (≥50%) are dominated by decision-making errors, cognitive state, and policies/procedures. Medium-frequency factors (20–50%) span multiple levels (nine categories), and low-frequency factors (<20%) represent rare but critical risks (two categories). Detailed statistics are provided in Table 1. Overall, the UAV-HFACS dataset is a quality-assured, HFACS-coded resource that reflects the operational realities of UAV safety events and provides a foundation for the analytical experiments that follow.
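The summary statistics above reduce to simple counts over a binary record-by-category label matrix. The sketch below assumes labels are stored as 0/1 lists in a fixed category order; the function names are illustrative. The band edges follow the text: high ≥50%, medium 20–50%, and low <20%.

```python
from typing import Sequence


def frequency_band(share: float) -> str:
    """Band edges from the text: high >= 50%, medium 20-50%, low < 20%."""
    if share >= 0.5:
        return "high"
    if share >= 0.2:
        return "medium"
    return "low"


def summarize(labels: Sequence[Sequence[int]], codes: Sequence[str]) -> dict:
    """labels[i][j] is 1 if record i was coded with category codes[j]."""
    n_records = len(labels)
    positives = {code: sum(row[j] for row in labels) for j, code in enumerate(codes)}
    total_positive = sum(positives.values())
    return {
        "coded_points": n_records * len(codes),
        "positives": total_positive,
        "mean_factors_per_record": total_positive / n_records,
        "bands": {code: frequency_band(k / n_records) for code, k in positives.items()},
    }
```

Applied to the experimental subset, 200 records × 18 categories gives the 3600 coded points, and 1522 positives / 200 records gives ≈ 7.6 factors per record.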

Table 1.

UAV-HFACS dataset statistics and category distribution.

5. Experiments and Results

5.1. Selected UAV-HFACS Subset

The proposed analysis was conducted on a curated subset of the UAV-HFACS dataset. Considering the sample sizes adopted in comparable human factors reasoning tasks and balancing practical constraints of expert review, a total of 200 accident records were selected. This subset was chosen to prioritize completeness of HFACS category coverage, temporal continuity, and narrative clarity. To ensure representativeness, cases were drawn to preserve the natural distribution of UAV operational contexts and environmental conditions.

The final experimental set contains 3600 coded data points, including 1522 positive instances, corresponding to an average of 7.6 HFACS-coded factors per record. All four HFACS levels and 18 categories are represented, with high-frequency factors (≥50%) dominated by judgment & decision-making errors, ineffective supervision, and policy/procedures issues; medium-frequency factors (20–50%) distributed across nine categories spanning multiple HFACS levels; low-frequency factors (<20%) capturing rare but critical risks including supervisory known deviations and adverse physiological conditions. Inter-rater reliability for the subset exceeded 85%, with all discrepancies resolved via structured consensus meetings. Detailed category-level statistics are provided in Table 1.

5.2. Model and Performance Metrics

5.2.1. Model Configuration

Seven state-of-the-art LLMs, spanning proprietary and open-source architectures, were evaluated for HFACS-UAV multi-label classification. The proprietary models were GPT-4o, GPT-4o-mini, GPT-4.1-nano, GPT-5, and GPT-5-mini, selected for their strong reasoning and natural language understanding (NLU) performance. The open-source baselines, Llama3:8B and Gemma3:4B (run via Ollama), were included to examine the potential for cost-efficient deployment. All models were run under identical inference parameters (temperature, max_tokens, and top-p) to control for configuration-induced variance and enable direct capability comparison.

5.2.2. Domain Knowledge and Few-Shot Enhancement

To address the domain–task transfer gap of general-purpose LLMs, a structured YAML knowledge base was developed containing: (i) definitions of all 18 HFACS categories, (ii) UAV-specific exemplars from real accident cases, (iii) hierarchical L1–L4 mapping, and (iv) operational context guidelines for different mission types. This machine-readable yet human-auditable resource enhanced reproducibility and transparency, allowing models to ground predictions in codified safety taxonomies rather than generic priors (see Figure 3 and Table A1).

A few-shot prompting strategy further supplied curated examples spanning diverse UAV contexts, varying complexity (single- vs. multi-factor), explicit contrasts between categories, and balanced coverage across HFACS layers. Pairing structured domain knowledge with representative demonstrations was intended to sharpen decision boundaries and reduce category ambiguity.
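Two constraints govern exemplar selection: balanced coverage across HFACS layers, and (as stated in the ablation protocol) a pool disjoint from the evaluation set. Both can be sketched as a greedy per-level pick. The record structure and the greedy rule are assumptions; the text does not specify how exemplars were chosen beyond these criteria.

```python
from typing import Iterable, Sequence

LEVELS = ("L1", "L2", "L3", "L4")


def select_exemplars(
    pool: Sequence[dict],
    eval_acns: Iterable[str],
    per_level: int = 1,
) -> list[dict]:
    """Greedy pick of `per_level` exemplars per HFACS level.

    Each pool item is a dict with an "acn" key and a "levels" set (e.g. {"L1", "L2"}).
    Records whose ACN appears in the evaluation set are never selected.
    """
    used = set(eval_acns)
    chosen: list[dict] = []
    for level in LEVELS:
        taken = 0
        for ex in pool:
            if taken == per_level:
                break
            if ex["acn"] in used or level not in ex["levels"]:
                continue
            chosen.append(ex)
            used.add(ex["acn"])  # never reuse an exemplar for another level
            taken += 1
    return chosen
```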

5.2.3. Evaluation Metrics

Performance was assessed using standard multi-label classification metrics (precision, recall, F1-score, and accuracy), calculated per HFACS category and macro-averaged. Missed contributing factors are especially costly in early-stage investigations, so recall and F1 were prioritized over precision as primary indicators. Prediction confidence scores (0–1) were also analyzed to gauge deployment reliability. Ground-truth labels were derived through expert consensus validation of GPT-4.1-mini outputs, providing a balance of reasoning fidelity and computational cost. Statistical robustness was assessed through effect-size analysis with 95% confidence intervals.
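The per-category, macro-averaged metrics can be computed from binary record-by-category matrices as sketched below. Scoring an undefined ratio (no predicted or no true positives) as 0.0 is an assumed convention. Macro-F1 averages per-category F1 values, so it is generally not the harmonic mean of macro precision and macro recall.

```python
from typing import Sequence

Matrix = Sequence[Sequence[int]]


def per_category_metrics(y_true: Matrix, y_pred: Matrix) -> list[dict[str, float]]:
    """Precision, recall, F1, and accuracy for each category column."""
    n_records = len(y_true)
    results = []
    for j in range(len(y_true[0])):
        tp = fp = fn = tn = 0
        for t_row, p_row in zip(y_true, y_pred):
            t, p = t_row[j], p_row[j]
            if t and p:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
        precision = tp / (tp + fp) if tp + fp else 0.0  # assumed zero-division rule
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append({"precision": precision, "recall": recall,
                        "f1": f1, "accuracy": (tp + tn) / n_records})
    return results


def macro_average(per_category: list[dict[str, float]]) -> dict[str, float]:
    """Unweighted mean over categories, so rare categories count equally."""
    keys = ("precision", "recall", "f1", "accuracy")
    return {k: sum(m[k] for m in per_category) / len(per_category) for k in keys}
```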

5.3. Evaluation Results

5.3.1. Overall Evaluation

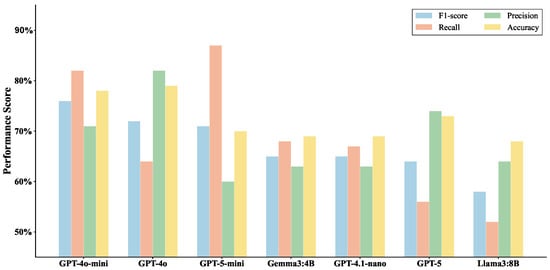

All models were evaluated on the same dataset of 200 expert-annotated UAV accident reports. Owing to technical limitations and API constraints, some models could not process the complete dataset: GPT-5-mini processed 198 records (99.0%), Gemma3:4B 193 records (96.5%), GPT-5 197 records (98.5%), and Llama3:8B 195 records (97.5%). Performance metrics were calculated on the records each model processed successfully, so the evaluation denominators differ slightly across models. Figure 9 compares the models across the four key metrics. Across all evaluated models, macro-F1 ranged from 0.576 to 0.763 (mean = 0.671). GPT-4o-mini showed the best overall balance (F1 = 0.763; precision = 0.712; recall = 0.821), with an average confidence of 0.815. Proprietary models generally outperformed their open-source counterparts in balancing precision and recall.

Figure 9.

HFACS-UAV classification performance across evaluated LLMs.

GPT-4o-mini achieved the highest F1-score through strong recall while maintaining competitive precision. GPT-4o achieved the highest precision (0.817) but lower recall (0.635), resulting in an F1-score of 0.715; this illustrates the precision–recall trade-off inherent in HFACS classification. GPT-5-mini showed high recall (0.872) with moderate precision (0.599), yielding an F1-score of 0.710 and indicating a tendency to over-identify HFACS factors. Confidence scores were only moderately correlated with performance; GPT-4o, for instance, combined high confidence (0.853) with strong F1. Open-source models lagged substantially behind proprietary ones overall. Even so, Gemma3:4B performed broadly comparably to GPT-4.1-nano, suggesting that small open-source models can still deliver consistent and potentially scalable performance on HFACS classification.

5.3.2. Sub-Tasks Evaluation

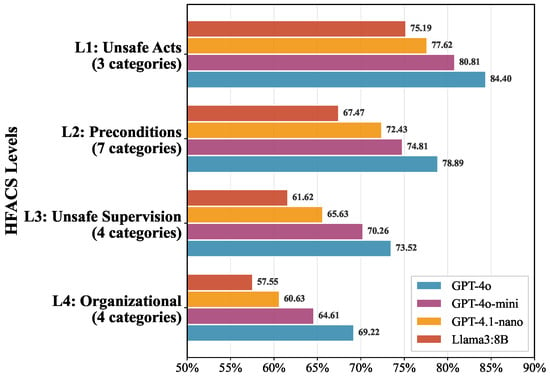

This subsection presents a fine-grained evaluation of seven large language models across the 18 HFACS 8.0 categories, assessed with four standard metrics (F1, recall, precision, and accuracy) on 200 UAV accident reports. Hierarchical-level results are summarized in Figure 10, while category-level envelopes are illustrated in Figure 11. For visual clarity, the figures show only four representative models; complete results for all seven models are provided in Table A2 and Table A3. To ensure comparability, all results were generated under identical protocols and are reported to two decimal places.

Figure 10.

Four-level HFACS radar (L1–L4) F1 Comparison for four models.

Figure 11.

Eighteen-category radar comparison across F1, recall, precision, and accuracy for seven models under HFACS 8.0.

At the hierarchical level, performance declines monotonically from L1 (Unsafe Acts) to L4 (Organizational Influences). This is consistent with the increasing abstraction of causal constructs and the reduced prevalence of explicit textual cues (Figure 10). L1 remains the most stable level across models. At the category level, Figure 11 indicates that detectability is driven by a combination of linguistic salience and empirical frequency. Within L1, AE200 (Decision-Making Error) achieves a peak F1 of 93.87% with GPT-4o, while AE100 (Skill-Based Error) peaks at 81.61% with GPT-4o-mini (Table A2). L2 (Preconditions) shows intermediate performance but higher variance. PE200 benefits from concrete equipment/interface descriptions, reaching F1 = 91.28% with GPT-4o along with high precision and accuracy (93.15% and 93.50%, respectively). By contrast, PC300 is constrained by sparse physiological cues and peaks at only 55.56% (GPT-4o). L3 (Unsafe Supervision) requires broader contextual inference: SI000 and SC000 attain F1 = 80.75% and 77.25%, respectively, on GPT-4o-mini, while SD000 remains particularly challenging, with a best F1 of 46.67% (Table A3). The most difficult categories, PC300, SD000, and PC200, remain in the F1 < 60.00% band across most models. They are constrained by signal sparsity (physiological states), rarity (supervisory deviations), or abstraction (management-level decisions), as reflected in both tables. Proprietary models are more consistent on supervisory and organizational factors, whereas open-source baselines are comparatively competitive on action-level L1 categories but lag on L3/L4.

A cross-metric view of Table A2 and Table A3 reveals distinct model profiles. GPT-4o is precision- and accuracy-oriented: it attains the highest precision in categories such as PE200 and AE100 and leads accuracy in several L2 categories, thereby favoring low false-positive rates. GPT-4o-mini provides the most balanced F1 across layers, reflecting a robust precision–recall trade-off. GPT-5-mini prioritizes recall, achieving perfect recall in PE200 (100%) and elevated recall in supervisory categories (e.g., SP000 = 95.35%). This recall-oriented profile maximizes factor coverage but requires downstream verification to control false positives. Category-level disparities can be substantial, which highlights how sensitive HFACS-specific capability is to model architecture, pre-training distribution, and optimization objectives.

Frequency effects are evident: high-support categories (e.g., AE200, OP000) consistently achieve stable, higher scores, whereas rarer or more abstract categories (PC300, SD000, OT000) remain constrained across all models. This pattern suggests that targeted data augmentation, HFACS-aware prompting, and expert-in-the-loop workflows could preferentially enhance recall and calibration for rare but safety-critical supervisory and organizational factors.

These observations inform deployment strategies that should be aligned with specific operational priorities. For a robust F1 baseline with broad stability, GPT-4o-mini provides the most balanced trade-off. When false positives are costly and overall accuracy is paramount, GPT-4o is better suited for compliance-oriented review. For early-stage safety screening where sensitivity is prioritized, GPT-5-mini maximizes factor capture and can be paired with human verification or cascaded filtering. Open-source models are currently most effective for action-level analyses at L1, while their performance at L3/L4 improves when combined with domain knowledge bases or weakly supervised adaptation strategies. The qualitative envelopes shown in Figure 10 and Figure 11 align closely with the quantitative rankings presented in Table A2 and Table A3.

5.3.3. Error Pattern Analysis

To provide transparency and guide future improvements, we analyzed the error patterns in GPT-4o’s predictions. We identified 304 false positives (hallucinations, 8.57% of all record–category decisions) and 654 false negatives (omissions, 18.44%), giving an overall accuracy of 72.98%. The errors were grouped into distinct patterns (Table 2). The predominance of omissions over hallucinations (a 2.15:1 ratio) indicates a conservative bias. Notably, organizational-level factors (Level 4) were the most frequently missed (37.5% of all omissions). Precondition factors (Level 2) showed the highest hallucination rate, particularly for psychological factors such as PC100 (Mental Awareness Conditions, 48 cases).
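The reported rates follow from the error counts once a denominator is fixed. The text does not state that denominator. The value 3546 record–category decisions used below is an inference: it is the denominator that reproduces all three reported percentages. With it, accuracy is one minus the combined error rate.

```python
FALSE_POSITIVES = 304
FALSE_NEGATIVES = 654
# Assumption: the denominator is not stated in the text; 3546 record-category
# decisions is inferred because it reproduces all reported percentages.
N_DECISIONS = 3546

fp_rate = FALSE_POSITIVES / N_DECISIONS
fn_rate = FALSE_NEGATIVES / N_DECISIONS
accuracy = 1 - (FALSE_POSITIVES + FALSE_NEGATIVES) / N_DECISIONS
omission_ratio = FALSE_NEGATIVES / FALSE_POSITIVES

print(f"FP {fp_rate:.2%}, FN {fn_rate:.2%}, accuracy {accuracy:.2%}, "
      f"omissions:hallucinations {omission_ratio:.2f}:1")
# FP 8.57%, FN 18.44%, accuracy 72.98%, omissions:hallucinations 2.15:1
```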

Table 2.

Error Pattern Analysis for GPT-4o HFACS Classification.

Representative Examples:

- Category Confusion (ACN 1294113): A UAV pilot filed a NOTAM but forgot to contact the Tower by phone before each flight. Ground truth: AE200 (Judgment and Decision-Making Errors). GPT-4o prediction: AE100 (Performance/Skill-Based Errors). The model mistook a cognitive failure (a memory lapse) for a skill deficiency.

- Level Confusion (ACN 1652001): A UAV operator reported miscommunication with ATC during flight. Ground truth: PC100 + PP100 (Level 2, Preconditions). GPT-4o prediction: AE100 (Level 1, Unsafe Acts). The model attributed the error to operator skill rather than to the underlying workload/stress conditions.

- Organizational Blindness (ACN 879418): A pilot allowed a non-qualified person in the pilot seat during a mission. Ground truth included OP000 (Policy, Procedures, or Process Issues) and OR000 (Resource Support Problems). GPT-4o correctly identified the supervisory failures but missed the organizational root causes.

These error patterns suggest targeted improvements including contrastive learning for category distinction, hierarchical reasoning for level attribution, and enhanced attention to organizational factors in complex incidents.

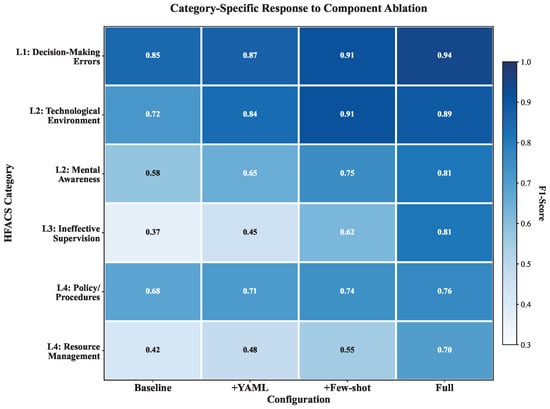

5.4. Ablation Studies

5.4.1. Design and Reporting Protocol

This ablation study systematically evaluates the independent and combined effects of two components, a YAML-based HFACS/UAV knowledge resource and in-context few-shot exemplars, using a controlled factorial design. All experiments use GPT-4o-mini with identical decoding settings, including temperature. Prompts differ only in the presence or absence of the two components. The evaluation set comprises 200 expert-annotated UAV accident reports in a multi-label regime over 18 HFACS 8.0 categories. Macro-F1 is computed as the unweighted average of per-category F1 scores across all 18 categories, giving each category equal weight regardless of frequency.

We compare four configurations: Baseline (No-YAML, zero-shot), +YAML (YAML, zero-shot), +Few-shot (No-YAML, few-shot), and Full (YAML, few-shot). The YAML resource supplies HFACS 8.0 definitions, hierarchical relations, and UAV terminology in a machine-readable format that supports transparency and reproducibility. It excludes any hand-crafted narrative-to-label rules (see Figure 3 and Table A1). Few-shot exemplars are drawn from a pool disjoint from the evaluation set to prevent information leakage, and all other hyperparameters are kept fixed across conditions. We report Macro-F1 and absolute/relative changes versus the Baseline; no additional inferential statistics are introduced.

5.4.2. Results and Practical Implications

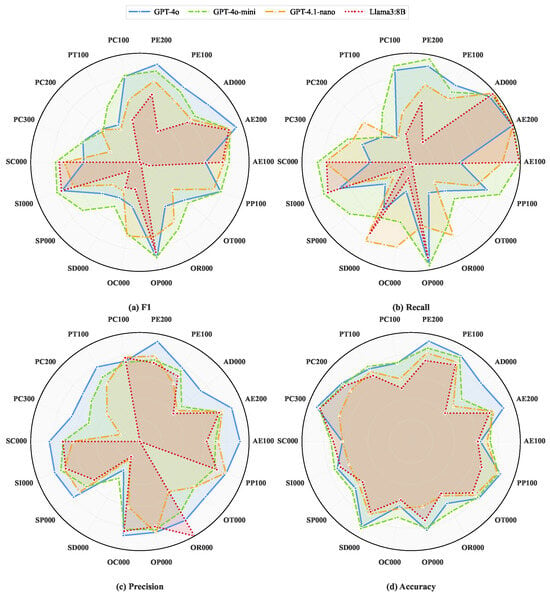

As shown in Figure 12, the Baseline configuration achieves a Macro-F1 of 0.594, indicating non-trivial inherent HFACS reasoning ability in GPT-4o-mini. +YAML improves to 0.644 (absolute +0.050, relative +8.4%), showing that structured domain knowledge improves performance even without exemplars. +Few-shot reaches 0.653 (absolute +0.059, relative +10.0%), suggesting slightly stronger gains from contextual examples than from knowledge alone. The Full configuration reaches a Macro-F1 of 0.705, an absolute gain of +0.111 (+18.7%) over Baseline, demonstrating the complementary benefits of structured knowledge and contextual exemplars.

Figure 12.

Ablation with a 2 × 2 design. (A) Macro-F1 across four configurations, improving from Baseline to Full. (B) Component contributions showing YAML formatting and few-shot learning gains with minimal interaction effects. (C) Level-wise performance (L1–L4) demonstrating hierarchical degradation from concrete unsafe acts to abstract organizational factors. (D) Absolute improvements by level, with larger gains for L3 supervision and L2 precondition factors.
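As a consistency check, the absolute and relative changes can be recomputed from the reported scores. This sketch uses the three-decimal Macro-F1 values quoted in the text. For +Few-shot it gives +9.9% rather than +10.0%, a last-digit difference that presumably comes from rounding of the underlying scores.

```python
BASELINE = 0.594
SCORES = {"+YAML": 0.644, "+Few-shot": 0.653, "Full": 0.705}


def changes_vs_baseline(baseline: float, scores: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Absolute change and relative change (%) of each configuration versus the Baseline."""
    return {
        name: (score - baseline, 100 * (score - baseline) / baseline)
        for name, score in scores.items()
    }


for name, (absolute, relative) in changes_vs_baseline(BASELINE, SCORES).items():
    print(f"{name}: {absolute:+.3f} ({relative:+.1f}%)")
```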

An additive attribution analysis indicates balanced contributions: approximately 53% of the total gain stems from few-shot exemplars, 45% from the YAML knowledge base, and only 2% from interaction effects (i.e., the difference between the Full improvement and the sum of the individual improvements). This near-additive behavior suggests that the two components operate through largely independent mechanisms: the YAML resource contributes systematic domain structure, while exemplars reinforce contextual pattern recognition. Figure 12 summarizes these findings: panel (A) shows the monotonic progression from Baseline to Full, panel (B) visualizes the additive attribution, and panels (C,D) present the level-wise effects.
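The attribution shares follow directly from the four Macro-F1 scores. Each single-component gain is divided by the Full gain. The interaction term is the Full gain minus the sum of the single-component gains, which in the 2 × 2 design equals (Full − YAML) − (Few-shot − Baseline). Below is a minimal sketch with a hypothetical function name.

```python
def additive_attribution(base: float, yaml_only: float, few_only: float, full: float) -> dict[str, float]:
    """Share of the Full gain attributed to each component and to their interaction."""
    gain_yaml = yaml_only - base
    gain_few = few_only - base
    gain_full = full - base
    # Interaction: what Full adds beyond the sum of the single-component gains,
    # algebraically equal to (full - yaml_only) - (few_only - base).
    interaction = gain_full - (gain_yaml + gain_few)
    return {
        "yaml": gain_yaml / gain_full,
        "few_shot": gain_few / gain_full,
        "interaction": interaction / gain_full,
    }


shares = additive_attribution(base=0.594, yaml_only=0.644, few_only=0.653, full=0.705)
print({k: f"{100 * v:.0f}%" for k, v in shares.items()})
```

The three shares sum to one by construction. A near-zero interaction share is what "near-additive" means in the text.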

Category-level responses (Figure 13) align with the ablation trends. High-frequency, linguistically salient categories show strong Baseline performance and modest incremental gains under Full, consistent with ceiling effects (e.g., L1 Decision-Making Errors: 0.85 → 0.94, +0.09, +10.6%; L4 Policy/Procedures: 0.68 → 0.76, +0.08, +11.8%). In contrast, categories requiring more complex inference benefit disproportionately when both components are combined: L2 Mental Awareness: 0.58 → 0.81 (+0.23, +39.7%); L3 Ineffective Supervision: 0.37 → 0.81 (+0.44, +118.9%). Technical factors exhibit a nuanced interaction: L2 Technological Environment peaks under +Few-shot () and slightly declines under Full (), indicating mild interference between definitions and exemplar-driven cues. All remaining categories improve monotonically from Baseline to Full, including L4 Resource Management: 0.42 → 0.70 (+0.28, +66.7%).

Figure 13.

Category-specific responses to component ablation for six representative HFACS categories.

For practical deployment, we advocate a modular integration approach. Under resource constraints, prioritizing few-shot exemplars is advisable, as they typically yield larger marginal gains and are more cost-effective to implement initially. For comprehensive, cross-level coverage—particularly at higher HFACS tiers—the Full configuration is preferable, providing the most balanced hierarchical improvements. Because interactions between the two components are minimal, a phased rollout is natural: begin with exemplars to secure rapid gains, then incorporate the YAML knowledge base to broaden coverage and lock in predictable, incremental improvements. In technical subdomains, lightly calibrating the specificity of YAML definitions helps avoid over-constraining exemplar-driven inference.

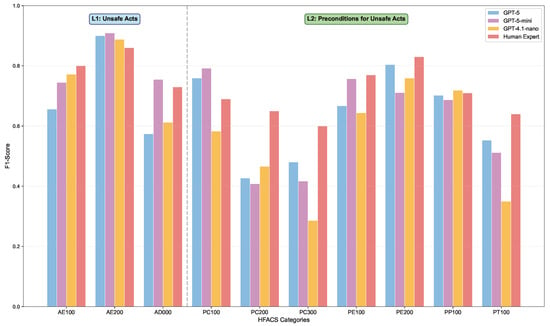

5.5. AI Models vs. Human Expert Evaluation

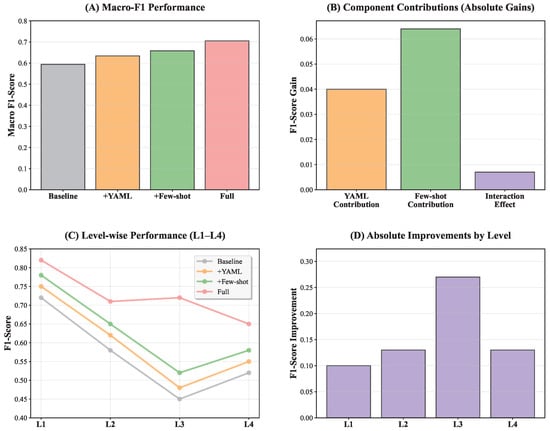

To further evaluate the performance of large language models in UAV accident investigation, we compared three advanced AI models (GPT-5, GPT-5-mini, and GPT-4.1-nano) with human experts on the L1 (Unsafe Acts) and L2 (Preconditions for Unsafe Acts) HFACS categories using standardized coding. The comparison shows the potential and limitations of AI-assisted HFACS analysis for the factors most directly observable in, or inferable from, accident narratives. The evaluation covers ten categories: three L1 categories (AE100 Performance/Skill-Based Error, AE200 Judgment & Decision-Making Error, AD000 Known Deviation) and seven L2 categories (PC100 Mental Awareness Conditions, PC200 State of Mind Conditions, PC300 Adverse Physiological Conditions, PE100 Physical Environment, PE200 Technological Environment, PP100 Team Coordination/Communication Factors, PT100 Training Conditions). Human-expert performance was measured on the same 200 reports using our HFACS Expert System (Figure 8). Scores were computed against the adjudicated consensus labels of the five-expert annotation team, so human and model outputs are judged against a common reference, including on categories that require inferring cognitive and physiological states from narrative descriptions.

The comparison reveals distinct performance patterns across L1 and L2 HFACS categories. Human experts achieve the highest average F1-score (0.728), followed by GPT-5-mini (0.669), GPT-5 (0.652), and GPT-4.1-nano (0.608), with an approximately 8.1% relative gap between human experts and the best-performing AI model. As shown in Figure 14, category-level patterns are clear. In L1, GPT-5-mini surpasses humans in AE200 (Decision-Making Error, 0.909 vs. 0.860) and AD000 (Known Deviation, 0.755 vs. 0.730), whereas humans lead in AE100 (Skill-Based Error, 0.800 vs. 0.772 for the best AI). In L2, GPT-5-mini leads in PC100 (Mental Awareness, 0.792 vs. 0.690) and GPT-4.1-nano leads in PP100 (Team Coordination, 0.719 vs. 0.710). Humans remain ahead in PC200 (State of Mind, 0.650 vs. 0.466 for the best AI), PC300 (Adverse Physiological Conditions, 0.600 vs. 0.480), PE100 (Physical Environment, 0.770 vs. 0.757), PE200 (Technological Environment, 0.830 vs. 0.804), and PT100 (Training Conditions, 0.640 vs. 0.553). Overall, AI models lead in four of the ten categories (GPT-5-mini: AE200, AD000, PC100; GPT-4.1-nano: PP100), and humans lead in the remaining six. The largest human advantage occurs in PC200 (State of Mind), underscoring the difficulty of inferring complex psychological states from narrative text. These findings argue for a complementary division of labor: deploy AI for systematic decision analysis and explicit violation/cognitive cues, and allocate human expertise to psychological-state assessment, skill-based evaluations, and nuanced environmental interpretation, favoring category-specific collaboration over uniform automation.

Figure 14.

LLMs vs. human experts on HFACS L1–L2: per-category F1 across ten standardized categories.
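The category-level tally can be reproduced from the figures quoted in the text. The sketch pairs each category's human F1 with the AI score cited for it. We take that score to be the best of the three models, which is an assumption for the AI-led categories, where the text names only the leading model. The variable names are illustrative.

```python
# (human F1, best-AI F1) per category, using the figures quoted in the text.
# Treating the quoted AI figure as the best of the three models is an assumption.
SCORES = {
    "AE100": (0.800, 0.772), "AE200": (0.860, 0.909), "AD000": (0.730, 0.755),
    "PC100": (0.690, 0.792), "PC200": (0.650, 0.466), "PC300": (0.600, 0.480),
    "PE100": (0.770, 0.757), "PE200": (0.830, 0.804), "PP100": (0.710, 0.719),
    "PT100": (0.640, 0.553),
}
BEST_AI_MEAN = 0.669  # GPT-5-mini average F1 over the ten categories

ai_led = sorted(c for c, (human, ai) in SCORES.items() if ai > human)
human_led = sorted(c for c, (human, ai) in SCORES.items() if human > ai)
largest_human_margin = max(SCORES, key=lambda c: SCORES[c][0] - SCORES[c][1])
human_mean = sum(human for human, _ in SCORES.values()) / len(SCORES)
relative_gap = (human_mean - BEST_AI_MEAN) / human_mean

print("AI-led:", ai_led)
print("Human-led:", human_led)
print("Largest human margin:", largest_human_margin)
print(f"Human mean F1 {human_mean:.3f}, relative gap {100 * relative_gap:.1f}%")
```

The human mean recomputed from the ten per-category values matches the reported 0.728. This suggests that the average F1 scores in the text are unweighted means over the ten categories.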

6. Conclusions

This study developed a UAV accident forensics system that integrates the HFACS 8.0 human factors framework with advanced LLM reasoning, supporting the identification of the underlying human factors in unstructured narrative incident reports. By coupling HFACS 8.0’s structured taxonomy with the inferential power of an LLM, the system can systematically extract contributory factors across all causal tiers—from frontline unsafe acts and preconditions to supervisory and organizational influences—in UAV operations. In our evaluation, the HFACS-LLM approach demonstrated the ability to detect subtle narrative cues and latent error patterns that human analysts may overlook, thereby augmenting traditional investigation methods with greater consistency and depth. Beyond analytical accuracy, this approach can reduce the time, cost, and human effort required for UAV accident reporting and analysis, enabling more timely collection and assessment of incidents that might otherwise go unreported. Such efficiency can help narrow the gap between real-world occurrences and research data availability, creating a more complete foundation for evidence-based safety improvements.

However, several limitations must be acknowledged. First, the system’s performance is constrained by the quality and detail of incident narratives; incomplete or biased reports could diminish the accuracy of its inferences. Second, the models were developed and validated on specific UAV operational data, so their generalizability to other UAV domains or mission profiles may be limited without further adaptation. Third, in high-stakes investigative contexts, human expert validation remains essential to interpret AI-generated conclusions and maintain accountability. Addressing these challenges in future work will involve training on more diverse incident datasets and incorporating additional contextual data (e.g., flight telemetry or environmental conditions) to improve the models’ robustness. Moreover, integrating a more structured human-in-the-loop validation step or an ensemble-based analytical framework could further enhance the reliability and acceptance of AI-driven forensics. By addressing these limitations, the HFACS-LLM reasoning approach may evolve into a trusted component of next-generation UAV safety management, ultimately contributing to stronger regulatory assessments and safer autonomous flight operations.

Author Contributions

Conceptualization, Y.Y.; Methodology, Y.Y. and B.L.; Software, Y.Y.; Data curation, B.L.; Writing—original draft, Y.Y. and B.L.; Writing—review & editing, B.L. and G.L.; Supervision, B.L. and G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the China Scholarship Council (CSC) scholarship (Grant No. 202410320015).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

This research utilized publicly available, anonymized ASRS incident reports released by NASA for safety research purposes. No personally identifiable information was processed or retained. The study was conducted in accordance with institutional research ethics guidelines, and all expert validation participants provided informed consent.

Data Availability Statement

All experimental data, code, and Appendix A are available in the project repository https://github.com/YukeyYan/UAV-Accident-Forensics-HFACS-LLM (accessed on 7 October 2025).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. HFACS-UAV Patterns and Performance Metrics

This appendix presents the 26 UAV-specific enhancement patterns and complete performance metrics for all evaluated models.

Table A1.

UAV-Specific Enhancement Patterns for HFACS.

| Pattern Name | Definition | Representative Case Example |
|---|---|---|
| Level 4: Organizational Influences | | |
| Part 107 Compliance | Organizational challenges in maintaining compliance with FAA Part 107 small UAS regulations | Commercial operator failed to maintain current remote pilot certificates for 40% of flight crew, resulting in unauthorized operations |
| LAANC Authorization | Organizational processes for Low Altitude Authorization and Notification Capability | Delivery company’s automated LAANC requests contained systematic altitude errors, causing 15 unauthorized airspace penetrations |
| VLOS Requirements | Organizational management of Visual Line of Sight operational requirements | Survey company’s policy allowed operations up to 2 miles without visual observers, violating VLOS requirements |
| BVLOS Operations | Organizational challenges in Beyond Visual Line of Sight operations | Research institution conducted BVLOS flights without proper waiver, lacking required detect-and-avoid systems |
| Battery Constraints | Organizational resource management for power system limitations | Fleet operator’s inadequate battery replacement schedule led to 30% capacity degradation and multiple forced landings |
| Energy Management | Organizational strategies for UAV energy and endurance management | Emergency response team lacked standardized battery management protocols, causing mission failures during critical operations |
| Level 3: Unsafe Supervision | | |
| GCS Interface Complexity | Supervisory challenges in managing Ground Control Station interface complexity | Supervisor failed to ensure pilot training on new GCS software (QGroundControl v4.2.8), leading to mode confusion during critical mission |
| Delayed Feedback Systems | Supervisory management of delayed feedback in remote operations | Operations manager did not account for 200 ms video latency in flight planning, causing multiple near-miss incidents |
| LAANC Integration | Supervisory oversight of LAANC system integration and usage | Flight supervisor approved operations without verifying LAANC authorization status, resulting in controlled airspace violation |
| Airspace Authorization | Supervisory management of airspace authorization processes | Chief pilot failed to establish procedures for real-time airspace status monitoring, causing TFR violations |
| Emergency Procedures | Supervisory oversight of UAV-specific emergency procedures | Training supervisor did not require C2 link loss simulation, leaving pilots unprepared for actual communication failure |
| Level 2: Preconditions for Unsafe Acts | | |
| C2 Link Reliability | Command and Control link reliability as precondition for safe operations | Pilot lost C2 link for 45 s due to interference, unable to regain control before UAV entered restricted airspace |
| Telemetry Accuracy | Accuracy and timeliness of telemetry data transmission | GPS altitude readings were 50 ft inaccurate due to multipath interference, causing terrain collision during low-altitude survey |
| Range Limitations | Communication and control range limitations affecting operations | Pilot attempted operation at 3-mile range despite 2-mile equipment limitation, resulting in complete signal loss |
| Signal Degradation | Progressive degradation of communication signals during operations | Gradual signal weakening over 10 min went unnoticed, leading to delayed response during emergency maneuver |
| Mode Confusion | Confusion between different flight modes and automation levels | Pilot believed UAV was in manual mode but was actually in GPS hold, causing unexpected behavior during landing approach |
| Automation Dependency | Over-reliance on automated systems affecting manual skills | When autopilot failed, pilot’s degraded manual flying skills resulted in hard landing and structural damage |
| Weather Sensitivity | UAV sensitivity to weather conditions affecting operations | 15-knot crosswind exceeded small UAV’s capability, but pilot continued operation resulting in loss of control |