UAV Accident Forensics via HFACS-LLM Reasoning: Low-Altitude Safety Insights

Abstract

Highlights

- A structured prompting framework, guided by HFACS with evidence binding and cross-level checks, enables LLMs to extract operator, precondition, supervisory, and organizational factors from UAV accident narratives, with improved inference on UAV-specific factors.

- A human-factor-annotated UAV accident dataset was developed from ASRS reports and used to benchmark the method, showing performance comparable to expert assessment, particularly on UAV-specific categories.

- LLM-assisted investigation provides a rapid, systematic triage layer that surfaces human-related and organization-related cues early from sparse, unstructured occurrence reports.

- The approach complements safety management and regulatory oversight in low-altitude operations by preserving causal reasoning and supporting human-in-the-loop verification across the reporting-to-classification workflow.

Abstract

1. Introduction

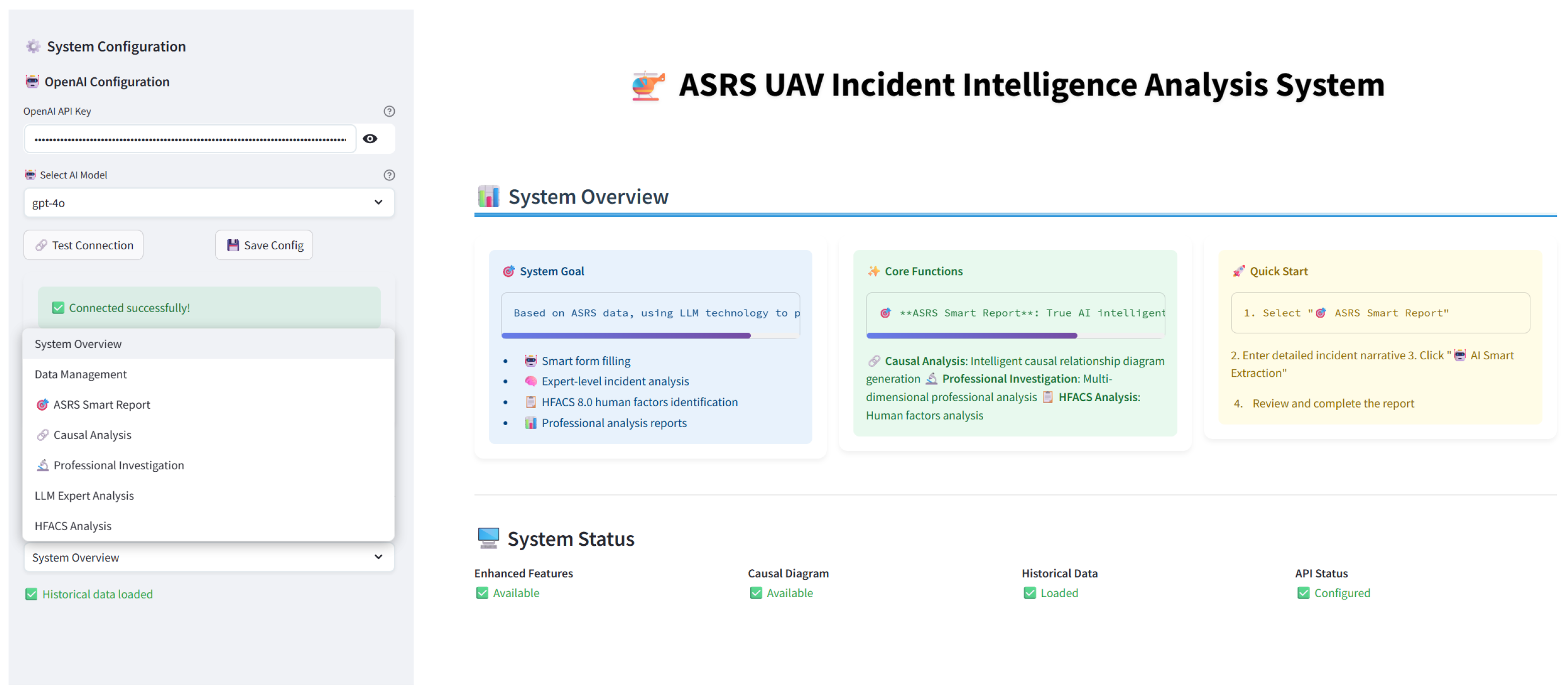

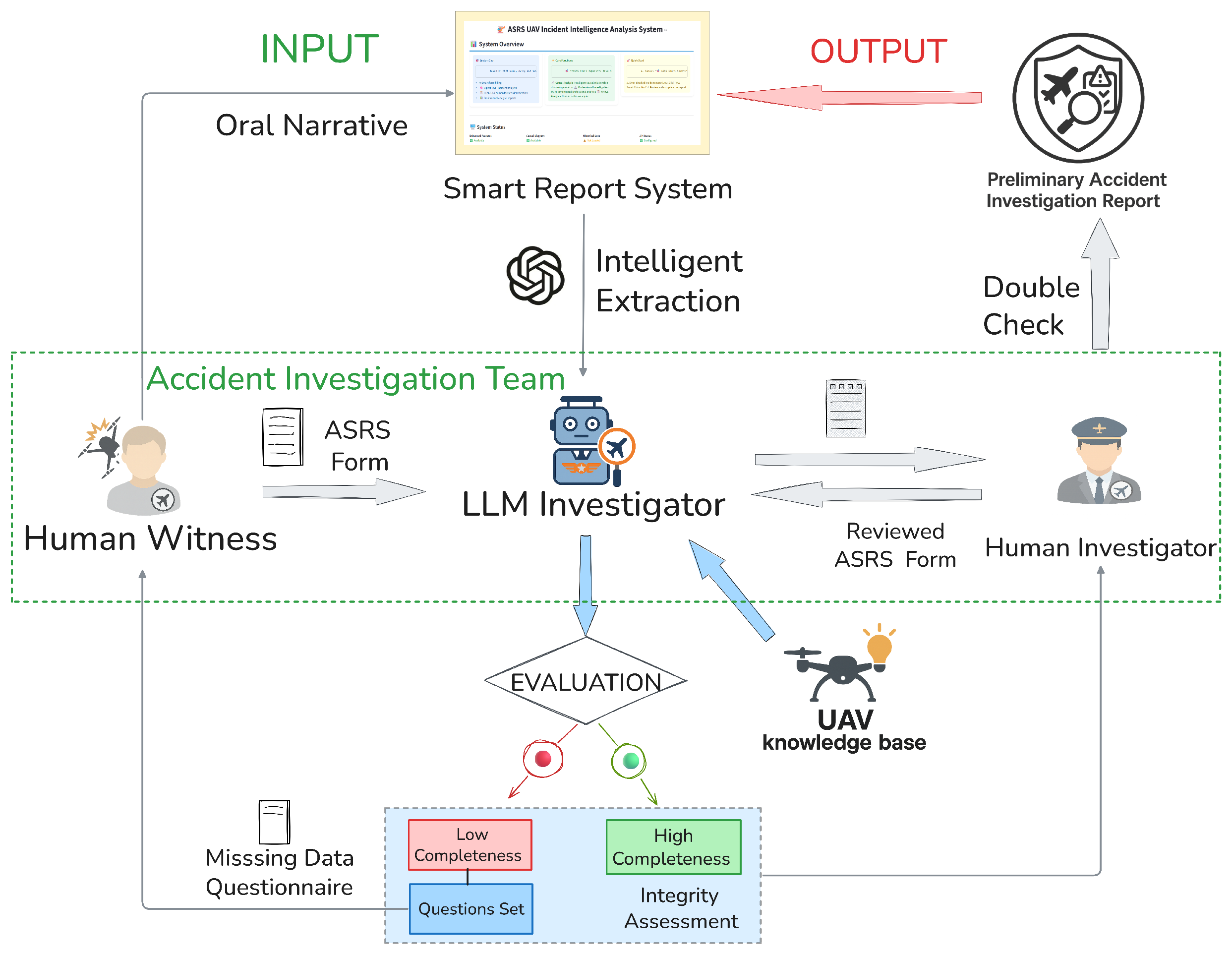

- We present an end-to-end LLM-assisted pipeline that integrates intake, human-in-the-loop supplementation, data validation, analytic triage, and HFACS reasoning, together with a publicly available prototype (available at https://uav-accident-forensics.streamlit.app/, accessed on 7 October 2025).

- We propose HFACS-UAV, an adaptation of the four-tier HFACS framework with UAV-specific nanocodes and ASRS field mapping, implemented through a three-stage prompt design with evidence binding and consistency checks.

- We provide a curated UAV-ASRS-HFACS-2010–2025(05) corpus, covering Part 107, Section 44809, and Public UAS operations. Each record links narratives with structured fields; the dataset will be released under ASRS terms.

- We demonstrate through comparative evaluation that GPT-5 models match, and in some UAV-specific categories surpass, human experts in HFACS inference, highlighting the potential of LLMs in UAV accident investigation.

2. Related Works

2.1. UAV Accident Investigation: Current Practices and Challenges

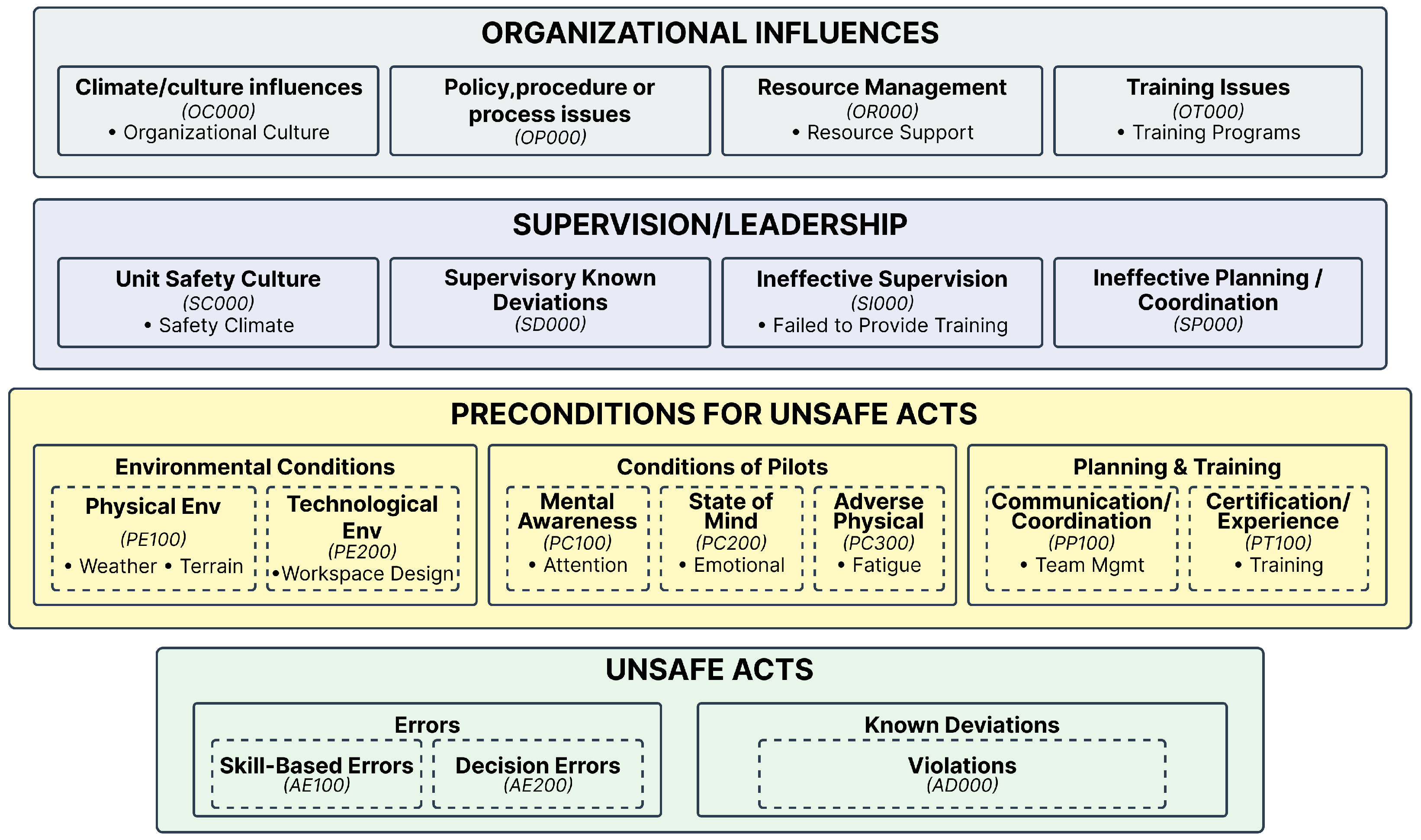

2.2. HFACS: Origins, Structure, and Aviation Applications

2.3. Large Language Models in Aviation Safety Analysis

2.4. Prompt Engineering and Multi-Agent Systems for Domain-Specific Reasoning

3. Methodology

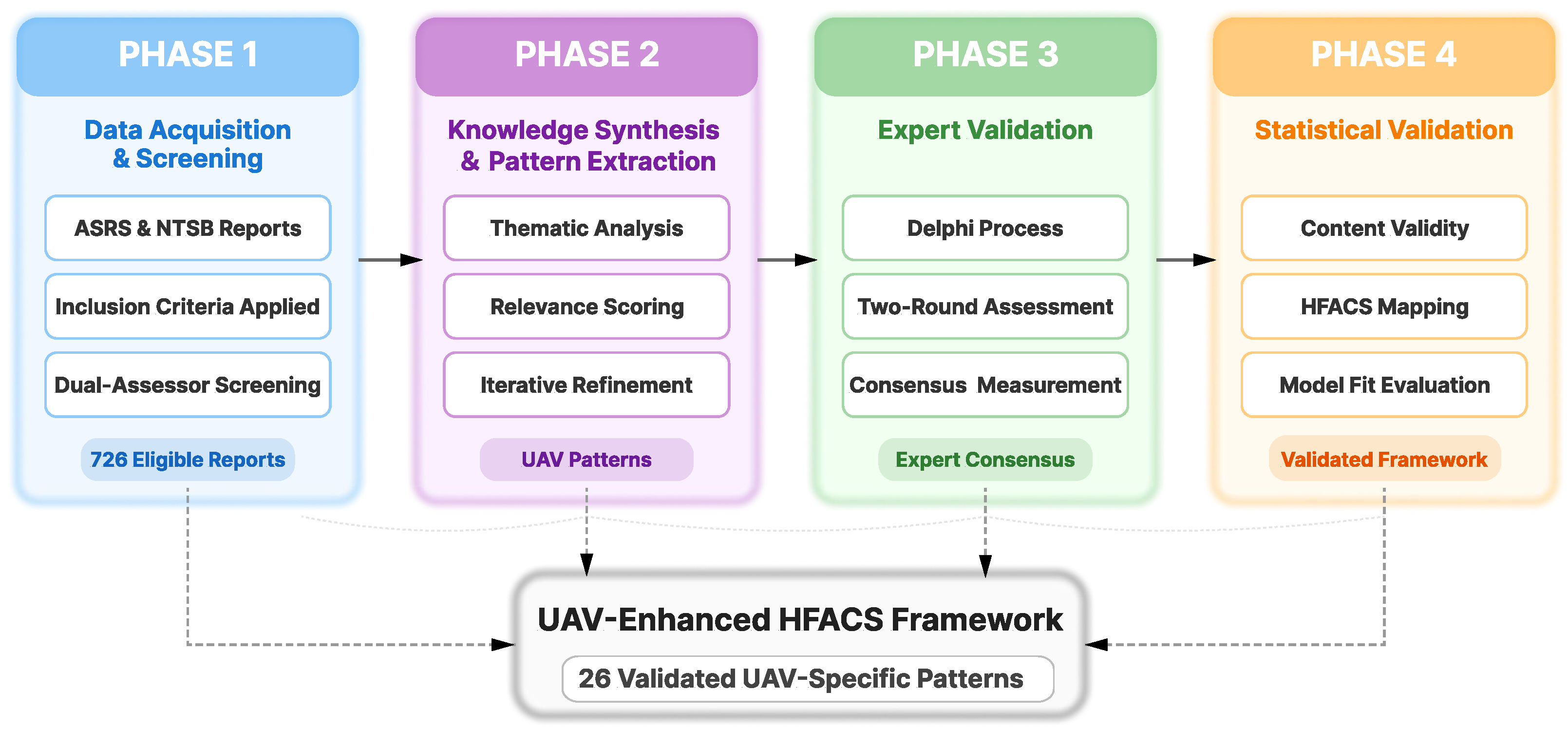

3.1. UAV-Specific Enhancement-Pattern Development

3.1.1. Phase 1—Data Acquisition and Screening

3.1.2. Phase 2—Knowledge Synthesis and Pattern Extraction

3.1.3. Phase 3—Expert Validation

3.1.4. Phase 4—Statistical Validation

- Pattern-level agreement: Mean (range: 0.71–0.94).

- Content validity index: 85% (23/26 patterns with CVR ).

- Theoretical alignment: 96% expert consensus on HFACS mapping.

- Practical relevance: Mean rating 4.2/5.0 (SD = 0.6).
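The Phase 4 statistics rely on standard agreement and content-validity measures. The Python sketch below makes several assumptions:

- Agreement is computed as pairwise Cohen's κ. The text cites Landis and Koch but does not say whether Cohen's or Fleiss' κ was used.
- The content validity figure is derived from Lawshe's content validity ratio (CVR).
- The panel size and acceptance threshold are illustrative placeholders, not values from the study.

```python
from collections import Counter
from typing import Sequence


def content_validity_ratio(n_essential: int, n_panel: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2); ranges from -1 to +1."""
    half = n_panel / 2
    return (n_essential - half) / half


def share_meeting_threshold(cvrs: Sequence[float], threshold: float) -> float:
    """Fraction of patterns whose CVR reaches a chosen (hypothetical) threshold."""
    return sum(c >= threshold for c in cvrs) / len(cvrs)


def cohen_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' marginal counts, summed over categories.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1.0:
        return 1.0  # degenerate case: both raters used the same single category throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical panel of 10 experts, 8 of whom rate a pattern "essential".
    print(content_validity_ratio(8, 10))  # 0.6
    print(cohen_kappa(["y", "y", "y", "n"], ["y", "y", "n", "n"]))  # 0.5
```

Per-pattern κ values computed this way could then be averaged and reported with their range, as in the first bullet above.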

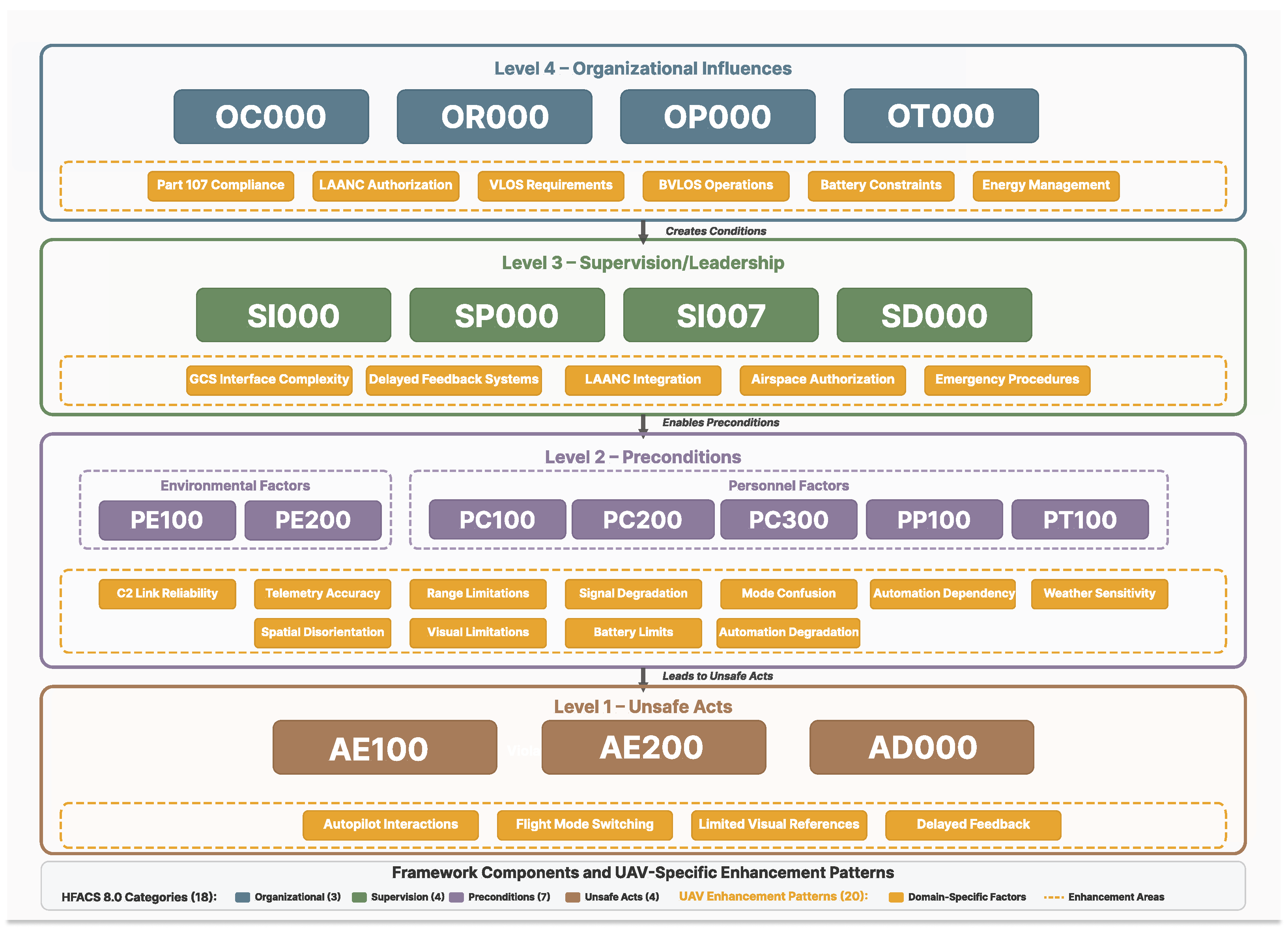

3.2. HFACS-UAV Framework Development

3.2.1. Stage 1—Architectural Design

- (a) Selective activation: patterns are invoked only when the incident context demands them;

- (b) Backward compatibility with classic HFACS analyses;

- (c) Parallel causal reasoning across mixed manned- and unmanned-aviation chains [73].
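Selective activation can be pictured as a filter over a pattern registry. The sketch below is a hypothetical illustration, not the authors' implementation:

- The `UAVPattern` type and `activate_patterns` function are invented names.
- The pattern names come from Appendix A.
- The keyword triggers are illustrative assumptions. The actual system may let the LLM judge relevance rather than match keywords.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UAVPattern:
    level: int                 # HFACS tier: 1 = unsafe acts ... 4 = organizational influences
    name: str                  # pattern name as listed in Appendix A
    triggers: tuple[str, ...]  # hypothetical lower-case cue phrases


# Illustrative subset of the registry; trigger phrases are assumptions, not from the paper.
PATTERNS = (
    UAVPattern(4, "LAANC Authorization", ("laanc",)),
    UAVPattern(2, "C2 Link Reliability", ("c2 link", "lost link", "link loss")),
    UAVPattern(2, "Mode Confusion", ("gps hold", "manual mode", "flight mode")),
    UAVPattern(1, "Delayed Feedback", ("latency", "video delay")),
)


def activate_patterns(narrative: str, patterns=PATTERNS) -> list[UAVPattern]:
    """Return only the UAV patterns whose cue phrases appear in the narrative.

    Only the UAV-specific extensions are filtered; the classic HFACS categories
    are assumed to remain available to every analysis (backward compatibility).
    """
    text = narrative.lower()
    return [p for p in patterns if any(t in text for t in p.triggers)]


if __name__ == "__main__":
    report = "Operator requested LAANC approval, then experienced a lost link over the field."
    print([p.name for p in activate_patterns(report)])
    # ['LAANC Authorization', 'C2 Link Reliability']
```

When no cue matches, the list is empty, and the analysis reduces to a classic HFACS pass.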

3.2.2. Stage 2—Pattern Encoding

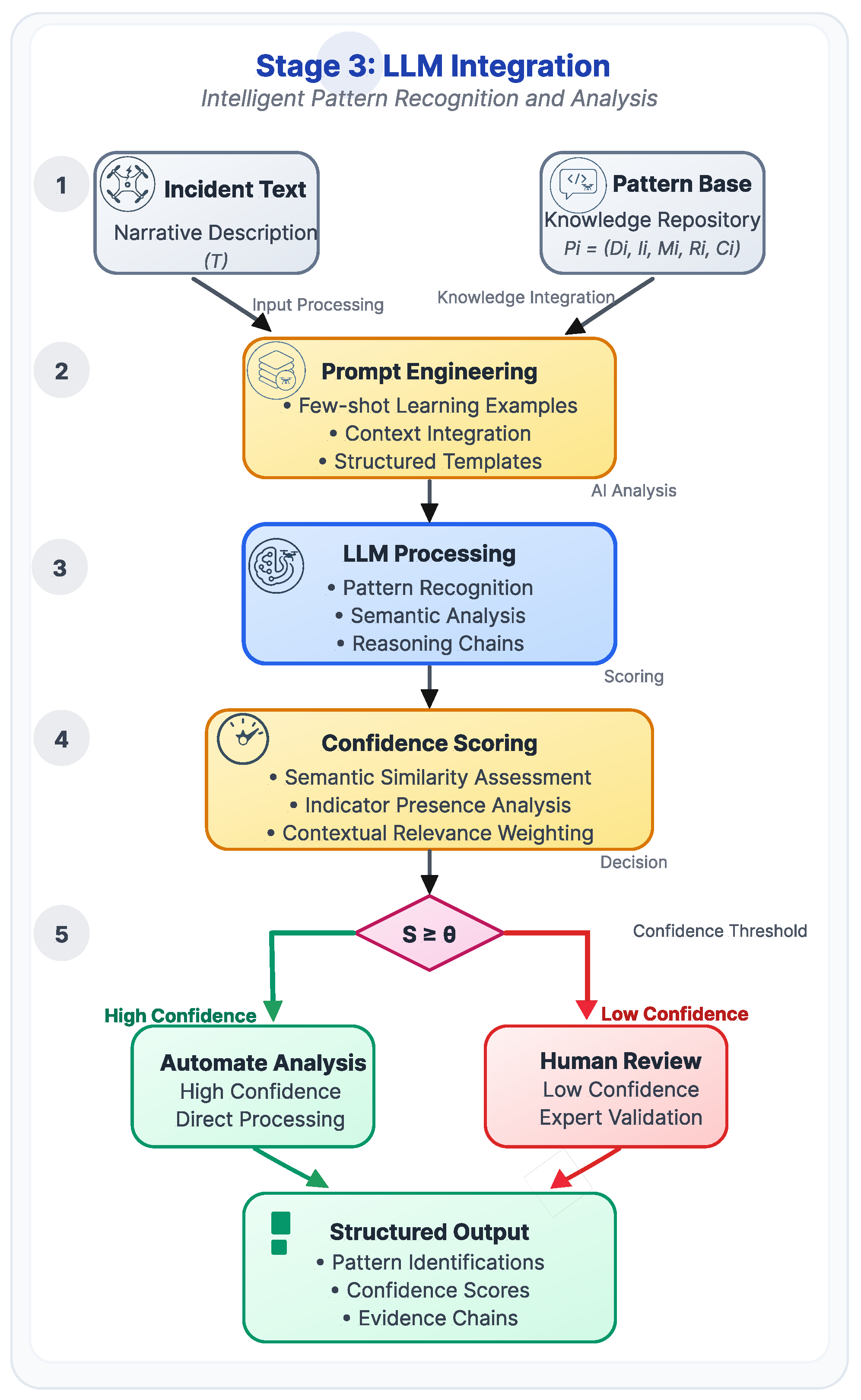

3.2.3. Stage 3—LLM Integration
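Evidence binding and cross-level checks lend themselves to a deterministic post-processing step. This minimal sketch assumes the model returns a JSON list of `{"code": ..., "evidence": ...}` objects, a hypothetical schema. The validator does two things:

- It rejects quotes that do not occur in the narrative.
- It flags Level 3–4 codes that lack any Level 1–2 factor for human review.

The level is inferred from the code's first letter (A, P, S, O), matching the code families used in this paper. The cross-level rule is an illustrative heuristic, not a rule stated by the authors.

```python
import json

# Code prefix -> HFACS tier, following the code families used in this paper.
LEVEL_BY_PREFIX = {"A": 1, "P": 2, "S": 3, "O": 4}


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so quotes match despite line wrapping."""
    return " ".join(text.split()).lower()


def validate_output(raw_json: str, narrative: str) -> list[str]:
    """Return issues found in a model's HFACS output; an empty list means it passed."""
    findings = json.loads(raw_json)
    issues = []
    text = normalize(narrative)
    for finding in findings:
        # Evidence binding: every asserted code must quote the narrative verbatim.
        quote = normalize(finding.get("evidence", ""))
        if not quote or quote not in text:
            issues.append(f"{finding['code']}: evidence not found in narrative")
    levels = {LEVEL_BY_PREFIX[f["code"][0]] for f in findings}
    # Cross-level check (hypothetical heuristic): upper tiers without lower-tier support.
    if levels & {3, 4} and not levels & {1, 2}:
        issues.append("upper-tier codes with no Level 1-2 factor: route to human review")
    return issues
```

Because the check is deterministic, its verdicts can be logged next to the model output for the human-in-the-loop reviewer.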

3.3. End-to-End Incident Processing Pipeline

3.3.1. Intelligent Data Preprocessing and Completion System

3.3.2. LLM Integration and Intelligent Analysis Engine

3.3.3. Interactive Analysis Platform and Quality Assurance
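The five stages named in the contribution list can be chained as plain functions: intake, human-in-the-loop supplementation, data validation, analytic triage, and HFACS reasoning. The sketch below is hypothetical:

- The stage bodies are placeholders.
- The triage keywords are invented.
- `ask_human` and `classify` stand in for the analyst prompt and the LLM call the platform actually makes.

It only illustrates how each stage hands an enriched record to the next.

```python
from functools import reduce
from typing import Callable

Record = dict
Stage = Callable[[Record], Record]


def intake(rec: Record) -> Record:
    # Collapse whitespace in the free-text narrative.
    return {**rec, "narrative": " ".join(rec.get("narrative", "").split())}


def make_supplement(ask_human: Callable[[str], str]) -> Stage:
    # Human-in-the-loop step: request any missing context field from an analyst.
    def supplement(rec: Record) -> Record:
        missing = [k for k in ("flight_phase", "control_mode") if not rec.get(k)]
        return {**rec, **{k: ask_human(k) for k in missing}}
    return supplement


def validate(rec: Record) -> Record:
    if not rec.get("acn") or not rec.get("narrative"):
        raise ValueError("record needs an ACN and a narrative")
    return rec


def triage(rec: Record) -> Record:
    # Hypothetical keyword screen that raises priority for likely serious events.
    urgent = any(w in rec["narrative"].lower() for w in ("collision", "injury", "nmac"))
    return {**rec, "priority": "high" if urgent else "routine"}


def make_reasoner(classify: Callable[[str], list]) -> Stage:
    # HFACS reasoning step; `classify` is a stand-in for the LLM call.
    def reason(rec: Record) -> Record:
        return {**rec, "hfacs": classify(rec["narrative"])}
    return reason


def run_pipeline(rec: Record, stages: list[Stage]) -> Record:
    return reduce(lambda acc, stage: stage(acc), stages, rec)
```

Usage: `run_pipeline({"acn": "0000000", "narrative": "Near collision with a helicopter."}, [intake, make_supplement(lambda f: "unknown"), validate, triage, make_reasoner(lambda t: ["AE100"])])`. The ACN and the stub callables are placeholders. The call returns a record with `priority` set to `"high"`. Passing each stage a copy of the record keeps intermediate states inspectable during quality assurance.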

4. Dataset Construction and Annotation

4.1. Data Source and Selection

- ACN: Unique record identifier for traceability.

- Narrative: Primary analysis text containing detailed event descriptions.

- Synopsis: Auxiliary summary providing context.

- Anomaly: Preliminary classification indicators.

- Flight Phase: Operational phase during the event.

- Control Mode (UAS): Manual, autopilot, or assisted operation.

- Human Factors: Pre-tagged human factor indicators.

- Weather/Environment: Conditions affecting operations.

- Authorization Status: Regulatory compliance and waiver information.

- Visual Observer Configuration: Crew resource management factors.
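One way to carry these fields through the pipeline is a typed record. The sketch below rests on assumptions:

- The snake_case attribute names are hypothetical.
- The input column labels mirror the list above; actual ASRS export headers may differ.
- Treating every field except ACN and Narrative as optional is a design choice, not something the study specifies.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ASRSRecord:
    acn: str                              # unique record identifier
    narrative: str                        # primary analysis text
    synopsis: Optional[str] = None        # auxiliary summary
    anomaly: Optional[str] = None         # preliminary classification indicators
    flight_phase: Optional[str] = None
    control_mode: Optional[str] = None    # manual, autopilot, or assisted
    human_factors: Optional[str] = None   # pre-tagged indicators
    weather: Optional[str] = None
    authorization: Optional[str] = None   # compliance / waiver information
    visual_observer: Optional[str] = None

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, i.e. candidates for analyst supplementation."""
        return [f.name for f in fields(self) if getattr(self, f.name) in (None, "")]


# Hypothetical mapping from export column labels to record attributes.
COLUMN_MAP = {
    "ACN": "acn", "Narrative": "narrative", "Synopsis": "synopsis",
    "Anomaly": "anomaly", "Flight Phase": "flight_phase",
    "Control Mode (UAS)": "control_mode", "Human Factors": "human_factors",
    "Weather/Environment": "weather", "Authorization Status": "authorization",
    "Visual Observer Configuration": "visual_observer",
}


def record_from_row(row: dict) -> ASRSRecord:
    """Build a record from one exported row; absent columns become None."""
    return ASRSRecord(**{attr: row.get(col) for col, attr in COLUMN_MAP.items()})
```

`missing_fields()` gives the human-in-the-loop step a concrete list of gaps to ask about, rather than leaving the model to guess them from the narrative.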

4.2. Annotation and HFACS Coding

4.3. Quality Assurance and Dataset Characteristics

5. Experiments and Results

5.1. Selected UAV-HFACS Subset

5.2. Model and Performance Metrics

5.2.1. Model Configuration

5.2.2. Domain Knowledge and Few-Shot Enhancement
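Few-shot enhancement amounts to prepending domain notes and a handful of coded examples to each query. This minimal sketch assumes a plain-text prompt format. The template wording, the `build_prompt` name, and the example layout are hypothetical, not the prompts used in the study.

```python
from typing import Sequence


def build_prompt(
    narrative: str,
    examples: Sequence[dict],
    k: int = 2,
    domain_notes: str = "",
) -> str:
    """Assemble a few-shot HFACS coding prompt from up to k worked examples."""
    parts = ["Assign HFACS codes to the UAV occurrence narrative. "
             "Quote the narrative text that supports each code."]
    if domain_notes:
        parts.append(f"Domain notes:\n{domain_notes}")
    for ex in examples[:k]:
        # Each example pairs a narrative with its expert-assigned codes.
        parts.append(f"Narrative: {ex['narrative']}\nCodes: {', '.join(ex['codes'])}")
    # The query ends with an open "Codes:" slot for the model to complete.
    parts.append(f"Narrative: {narrative}\nCodes:")
    return "\n\n".join(parts)
```

Keeping `k` and `domain_notes` as explicit parameters makes the same function usable for zero-shot, few-shot, and knowledge-ablation runs.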

5.2.3. Evaluation Metrics

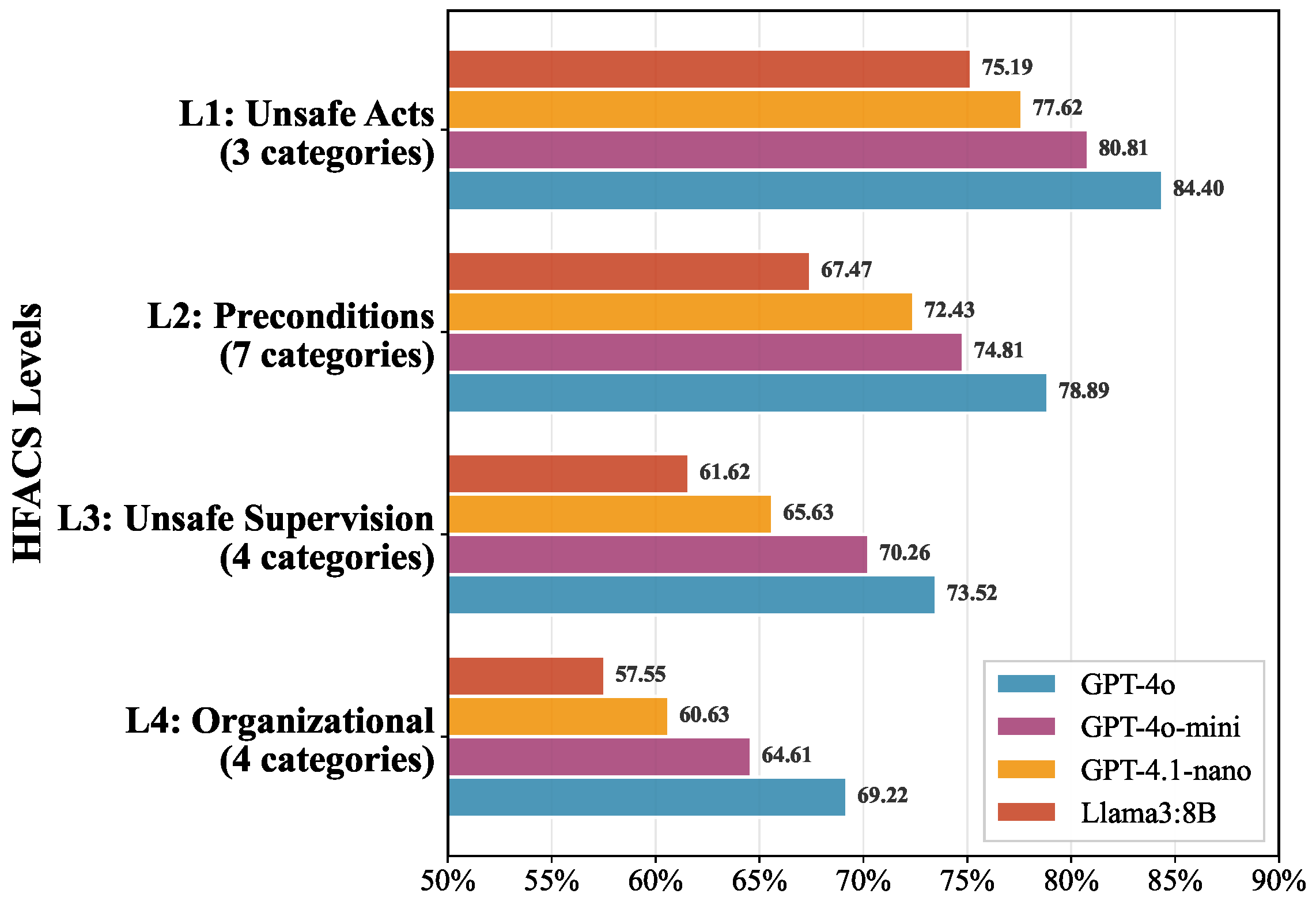

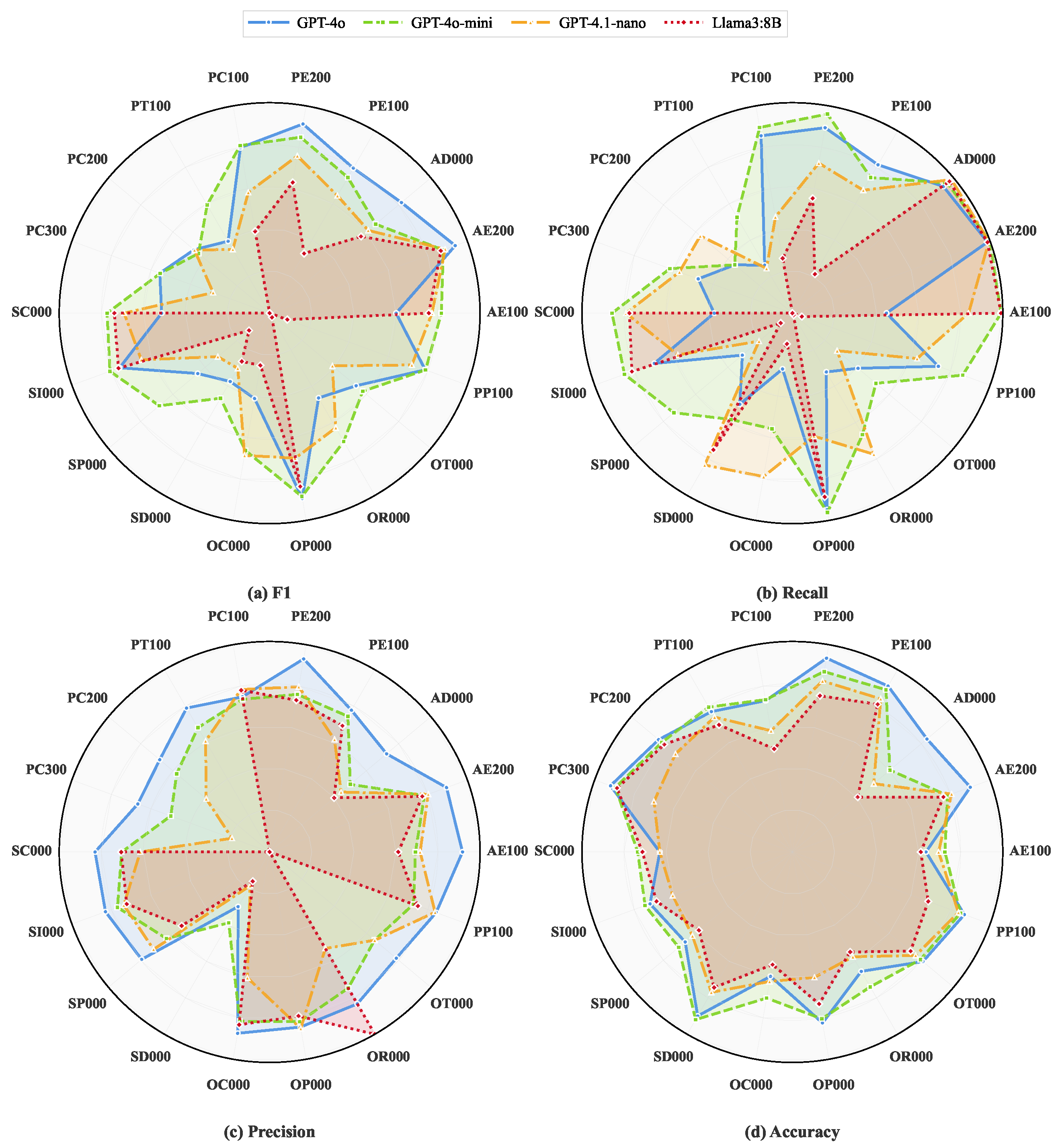

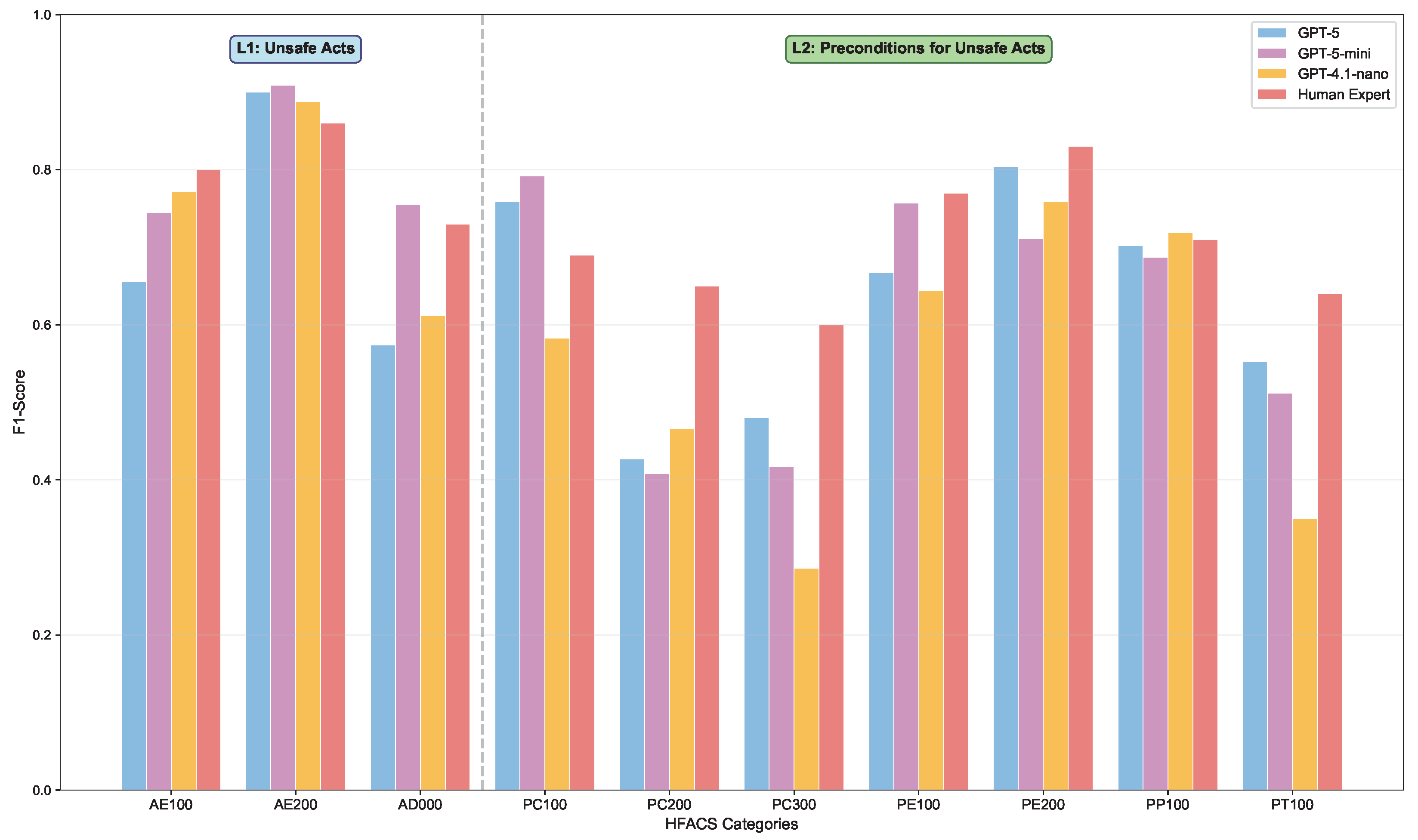
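The per-category scores in Appendix A are consistent with treating each HFACS code as a binary label per report. This minimal sketch assumes that one-vs-rest formulation. It scores 0 when a denominator is empty, which is consistent with the 0.00 entries for Llama3:8B. The function names are hypothetical. As a spot check, `f1_from_pr(69.32, 99.19)` returns about 81.61, the F1 reported for GPT-4o-mini on AE100.

```python
from typing import Sequence


def per_code_metrics(gold: Sequence[set], pred: Sequence[set], code: str) -> dict:
    """One-vs-rest precision, recall, F1, and accuracy for a single HFACS code."""
    if len(gold) != len(pred):
        raise ValueError("gold and predicted label lists must align")
    tp = fp = fn = tn = 0
    for g, p in zip(gold, pred):
        in_gold, in_pred = code in g, code in p
        if in_gold and in_pred:
            tp += 1
        elif in_pred:
            fp += 1
        elif in_gold:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "accuracy": (tp + tn) / len(gold)}


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units in and out, e.g. percent)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0
```

Because accuracy counts true negatives, it stays high for rare codes even when F1 is low. This explains, for example, the combination of 0.00 F1 with roughly 80–89% accuracy for Llama3:8B on PC200 and PC300.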

5.3. Evaluation Results

5.3.1. Overall Evaluation

5.3.2. Sub-Tasks Evaluation

5.3.3. Error Pattern Analysis

- Category Confusion (ACN 1294113): A UAV pilot filed a NOTAM but forgot to contact the Tower by phone before each flight. Ground truth: AE200 (Perceptual Errors: memory lapse). GPT-4o prediction: AE100 (Skill-Based Errors). The model confused a cognitive failure with a skill deficiency.

- Level Confusion (ACN 1652001): A UAV operator reported a miscommunication with ATC during flight. Ground truth: PC100 + PP100 (Level 2: Preconditions). GPT-4o prediction: AE100 (Level 1: Unsafe Acts). The model attributed the error to operator skill rather than to the underlying workload and stress conditions.

- Organizational Blindness (ACN 879418): A pilot allowed a non-qualified person to occupy the pilot seat during a mission. Ground truth included OP000 (Organizational Process) and OR000 (Resource Management). GPT-4o correctly identified the supervisory failures but missed the organizational root causes.
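The three error patterns can be operationalized by comparing gold and predicted code sets tier by tier. This is a minimal sketch with hypothetical rule definitions. The paper describes the patterns qualitatively, and these set-based criteria are one possible reading. The HFACS level is inferred from the code's first letter (A, P, S, O for Levels 1 to 4).

```python
LEVEL_BY_PREFIX = {"A": 1, "P": 2, "S": 3, "O": 4}


def level(code: str) -> int:
    return LEVEL_BY_PREFIX[code[0]]


def diagnose(gold: set[str], pred: set[str]) -> set[str]:
    """Label a prediction with the error patterns it exhibits (hypothetical criteria)."""
    labels = set()
    missed, spurious = gold - pred, pred - gold
    gold_levels = {level(c) for c in gold}
    pred_levels = {level(c) for c in pred}
    # Category confusion: a gold code replaced by a wrong code in the same tier.
    if any(level(m) == level(s) for m in missed for s in spurious):
        labels.add("category confusion")
    # Level confusion: a gold tier is absent, and the prediction uses a tier the gold lacks.
    if gold_levels - pred_levels and pred_levels - gold_levels:
        labels.add("level confusion")
    # Organizational blindness: gold has Level 4 codes but none of them was recovered.
    if 4 in gold_levels and 4 not in {level(c) for c in gold & pred}:
        labels.add("organizational blindness")
    return labels


if __name__ == "__main__":
    print(diagnose({"AE200"}, {"AE100"}))           # {'category confusion'}
    print(diagnose({"PC100", "PP100"}, {"AE100"}))  # {'level confusion'}
    # "SD000" is an illustrative stand-in; the text does not list the supervisory codes.
    print(diagnose({"SD000", "OP000", "OR000"}, {"SD000"}))  # {'organizational blindness'}
```

Applied across a test set, counts of these labels would give a rough distribution of error types per model.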

5.4. Ablation Studies

5.4.1. Design and Reporting Protocol

5.4.2. Results and Practical Implications

5.5. AI Models vs. Human Expert Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. HFACS-UAV Patterns and Performance Metrics

| Pattern Name | Definition | Representative Case Example |
|---|---|---|
| Level 4: Organizational Influences | | |
| Part 107 Compliance | Organizational challenges in maintaining compliance with FAA Part 107 small UAS regulations | Commercial operator failed to maintain current remote pilot certificates for 40% of flight crew, resulting in unauthorized operations |
| LAANC Authorization | Organizational processes for Low Altitude Authorization and Notification Capability | Delivery company’s automated LAANC requests contained systematic altitude errors, causing 15 unauthorized airspace penetrations |
| VLOS Requirements | Organizational management of Visual Line of Sight operational requirements | Survey company’s policy allowed operations up to 2 miles without visual observers, violating VLOS requirements |
| BVLOS Operations | Organizational challenges in Beyond Visual Line of Sight operations | Research institution conducted BVLOS flights without proper waiver, lacking required detect-and-avoid systems |
| Battery Constraints | Organizational resource management for power system limitations | Fleet operator’s inadequate battery replacement schedule led to 30% capacity degradation and multiple forced landings |
| Energy Management | Organizational strategies for UAV energy and endurance management | Emergency response team lacked standardized battery management protocols, causing mission failures during critical operations |
| Level 3: Unsafe Supervision | | |
| GCS Interface Complexity | Supervisory challenges in managing Ground Control Station interface complexity | Supervisor failed to ensure pilot training on new GCS software (QGroundControl v4.2.8), leading to mode confusion during critical mission |
| Delayed Feedback Systems | Supervisory management of delayed feedback in remote operations | Operations manager did not account for 200 ms video latency in flight planning, causing multiple near-miss incidents |
| LAANC Integration | Supervisory oversight of LAANC system integration and usage | Flight supervisor approved operations without verifying LAANC authorization status, resulting in controlled airspace violation |
| Airspace Authorization | Supervisory management of airspace authorization processes | Chief pilot failed to establish procedures for real-time airspace status monitoring, causing TFR violations |
| Emergency Procedures | Supervisory oversight of UAV-specific emergency procedures | Training supervisor did not require C2 link loss simulation, leaving pilots unprepared for actual communication failure |
| Level 2: Preconditions for Unsafe Acts | | |
| C2 Link Reliability | Command and Control link reliability as precondition for safe operations | Pilot lost C2 link for 45 s due to interference, unable to regain control before UAV entered restricted airspace |
| Telemetry Accuracy | Accuracy and timeliness of telemetry data transmission | GPS altitude readings were 50 ft inaccurate due to multipath interference, causing terrain collision during low-altitude survey |
| Range Limitations | Communication and control range limitations affecting operations | Pilot attempted operation at 3-mile range despite 2-mile equipment limitation, resulting in complete signal loss |
| Signal Degradation | Progressive degradation of communication signals during operations | Gradual signal weakening over 10 min went unnoticed, leading to delayed response during emergency maneuver |
| Mode Confusion | Confusion between different flight modes and automation levels | Pilot believed UAV was in manual mode but was actually in GPS hold, causing unexpected behavior during landing approach |
| Automation Dependency | Over-reliance on automated systems affecting manual skills | When autopilot failed, pilot’s degraded manual flying skills resulted in hard landing and structural damage |
| Weather Sensitivity | UAV sensitivity to weather conditions affecting operations | 15-knot crosswind exceeded small UAV’s capability, but pilot continued operation resulting in loss of control |
| Spatial Disorientation | Loss of spatial orientation due to remote operation characteristics | Pilot lost orientation during FPV flight in featureless terrain, unable to determine UAV attitude or position |
| Visual Limitations | Limited visual references and environmental cues in remote operations | Camera’s limited field of view prevented detection of approaching aircraft until collision was unavoidable |
| Battery Limits | Battery capacity and performance limitations affecting operations | Cold weather reduced battery performance by 40%, causing unexpected power loss during return flight |
| Automation Degradation | Degradation of automated system performance over time | Uncalibrated IMU caused gradual drift in autonomous flight path, leading to controlled airspace violation |
| Level 1: Unsafe Acts | | |
| Autopilot Interactions | Errors in interaction with autopilot systems and mode management | Pilot incorrectly programmed waypoint altitude as 400 ft AGL instead of MSL, causing terrain collision |
| Flight Mode Switching | Errors during transitions between different flight modes | Rapid switching between manual and GPS modes during emergency caused control oscillations and crash |
| Limited Visual References | Errors due to limited visual references in remote operations | Pilot misjudged distance to obstacle due to camera perspective, resulting in collision with power line |
| Delayed Feedback | Errors caused by delayed feedback from remote systems | 300 ms video delay caused pilot to overcorrect during landing, resulting in hard impact and damage |

| Metric | Model | AE100 | AE200 | AD000 | PC100 | PC200 | PC300 | PE100 | PE200 | PP100 |
|---|---|---|---|---|---|---|---|---|---|---|
| F1-score | GPT-4o-mini | 81.61 | 87.82 | 65.80 | 80.87 | 44.12 | 55.32 | 74.42 | 84.88 | 78.87 |
| | GPT-4o | 60.11 | 93.87 | 81.77 | 79.85 | 46.88 | 55.56 | 79.55 | 91.28 | 78.69 |
| | GPT-5-mini | 74.46 | 90.91 | 75.51 | 79.22 | 40.82 | 41.67 | 75.73 | 71.09 | 68.67 |
| | Gemma3:4B | 76.92 | 87.46 | 56.51 | 77.67 | 22.22 | 29.27 | 71.05 | 63.30 | 72.34 |
| | GPT-4.1-nano | 77.15 | 88.76 | 61.18 | 58.29 | 46.60 | 28.57 | 64.44 | 75.86 | 71.93 |
| | GPT-5 | 65.59 | 90.00 | 57.38 | 75.91 | 42.70 | 48.00 | 66.67 | 80.45 | 70.18 |
| | Llama3:8B | 75.64 | 86.63 | 56.72 | 39.51 | 0.00 | 0.00 | 32.73 | 63.08 | 8.96 |
| Recall | GPT-4o-mini | 99.19 | 100.00 | 96.20 | 89.60 | 35.71 | 61.90 | 74.42 | 96.05 | 86.15 |
| | GPT-4o | 44.72 | 98.71 | 93.67 | 85.60 | 35.71 | 47.62 | 81.40 | 89.47 | 73.85 |
| | GPT-5-mini | 70.49 | 97.40 | 93.67 | 97.60 | 71.43 | 71.43 | 90.70 | 100.00 | 87.69 |
| | Gemma3:4B | 100.00 | 100.00 | 100.00 | 100.00 | 14.63 | 30.00 | 64.29 | 93.24 | 85.00 |
| | GPT-4.1-nano | 83.74 | 99.35 | 98.73 | 46.40 | 57.14 | 57.14 | 67.44 | 72.37 | 63.08 |
| | GPT-5 | 50.83 | 88.82 | 45.45 | 85.25 | 45.24 | 60.00 | 92.86 | 96.00 | 62.50 |
| | Llama3:8B | 99.16 | 98.68 | 97.44 | 26.45 | 0.00 | 0.00 | 21.43 | 55.41 | 4.76 |
| Precision | GPT-4o-mini | 69.32 | 78.28 | 50.00 | 73.68 | 57.69 | 50.00 | 74.42 | 76.04 | 72.73 |
| | GPT-4o | 91.67 | 89.47 | 72.55 | 74.83 | 68.18 | 66.67 | 77.78 | 93.15 | 84.21 |
| | GPT-5-mini | 78.90 | 85.23 | 63.25 | 66.67 | 28.57 | 29.41 | 65.00 | 55.15 | 56.44 |
| | Gemma3:4B | 62.50 | 77.72 | 39.38 | 63.49 | 46.15 | 28.57 | 79.41 | 47.92 | 62.96 |
| | GPT-4.1-nano | 71.53 | 80.21 | 44.32 | 78.38 | 39.34 | 19.05 | 61.70 | 79.71 | 83.67 |
| | GPT-5 | 92.42 | 91.22 | 77.78 | 68.42 | 40.43 | 40.00 | 52.00 | 69.23 | 80.00 |
| | Llama3:8B | 61.14 | 77.20 | 40.00 | 78.05 | 0.00 | 0.00 | 69.23 | 73.21 | 75.00 |
| Accuracy | GPT-4o-mini | 72.50 | 78.50 | 60.50 | 73.50 | 81.00 | 89.50 | 89.00 | 87.00 | 85.00 |
| | GPT-4o | 63.50 | 90.00 | 83.50 | 73.00 | 83.00 | 92.00 | 91.00 | 93.50 | 87.00 |
| | GPT-5-mini | 70.20 | 84.85 | 75.76 | 67.68 | 56.06 | 78.79 | 87.37 | 69.19 | 73.74 |
| | Gemma3:4B | 62.69 | 77.72 | 39.38 | 64.25 | 78.24 | 84.97 | 88.60 | 58.55 | 79.79 |
| | GPT-4.1-nano | 69.50 | 80.50 | 50.50 | 58.50 | 72.50 | 70.00 | 84.00 | 82.50 | 84.00 |
| | GPT-5 | 67.51 | 84.77 | 73.60 | 66.50 | 74.11 | 86.80 | 80.20 | 82.23 | 82.74 |
| | Llama3:8B | 61.03 | 76.41 | 40.51 | 49.74 | 79.49 | 88.72 | 81.03 | 75.38 | 68.72 |

| Metric | Model | PT100 | SC000 | SD000 | SI000 | SP000 | OC000 | OP000 | OR000 | OT000 |
|---|---|---|---|---|---|---|---|---|---|---|
| F1-score | GPT-4o-mini | 59.41 | 77.25 | 46.67 | 80.75 | 68.45 | 66.29 | 88.43 | 70.45 | 57.73 |
| | GPT-4o | 39.47 | 51.32 | 37.50 | 75.86 | 44.63 | 41.18 | 89.36 | 46.51 | 53.66 |
| | GPT-5-mini | 51.23 | 74.18 | 40.00 | 80.16 | 64.57 | 72.46 | 85.80 | 70.04 | 45.63 |
| | Gemma3:4B | 60.87 | 58.89 | 22.22 | 51.72 | 51.43 | 53.42 | 85.98 | 8.60 | 21.95 |
| | GPT-4.1-nano | 35.00 | 69.17 | 30.30 | 64.89 | 32.14 | 68.62 | 69.96 | 62.88 | 38.96 |
| | GPT-5 | 55.32 | 48.18 | 36.36 | 60.96 | 60.24 | 48.18 | 60.17 | 44.93 | 43.75 |
| | Llama3:8B | 0.00 | 73.83 | 26.47 | 76.45 | 12.77 | 25.21 | 83.65 | 2.22 | 0.00 |
| Recall | GPT-4o-mini | 52.63 | 85.71 | 58.33 | 84.92 | 73.56 | 55.77 | 96.13 | 66.67 | 51.85 |
| | GPT-4o | 26.32 | 37.14 | 50.00 | 69.84 | 31.03 | 26.92 | 94.84 | 32.26 | 40.74 |
| | GPT-5-mini | 94.55 | 76.70 | 66.67 | 79.84 | 95.35 | 73.53 | 90.85 | 91.21 | 90.38 |
| | Gemma3:4B | 63.64 | 53.00 | 16.67 | 36.89 | 43.37 | 43.43 | 92.62 | 4.49 | 17.31 |
| | GPT-4.1-nano | 24.56 | 79.05 | 83.33 | 57.94 | 20.69 | 78.85 | 59.35 | 77.42 | 27.78 |
| | GPT-5 | 45.61 | 32.04 | 33.33 | 45.97 | 58.14 | 32.35 | 46.71 | 33.33 | 38.89 |
| | Llama3:8B | 0.00 | 77.45 | 75.00 | 81.15 | 7.23 | 14.85 | 88.67 | 1.12 | 0.00 |
| Precision | GPT-4o-mini | 68.18 | 70.31 | 38.89 | 76.98 | 64.00 | 81.69 | 81.87 | 74.70 | 65.12 |
| | GPT-4o | 78.95 | 82.98 | 30.00 | 83.02 | 79.41 | 87.50 | 84.48 | 83.33 | 78.57 |
| | GPT-5-mini | 35.14 | 71.82 | 28.57 | 80.49 | 48.81 | 71.43 | 81.29 | 56.85 | 30.52 |
| | Gemma3:4B | 58.33 | 66.25 | 33.33 | 86.54 | 63.16 | 69.35 | 80.23 | 100.00 | 30.00 |
| | GPT-4.1-nano | 60.87 | 61.48 | 18.52 | 73.74 | 72.00 | 60.74 | 85.19 | 52.94 | 65.22 |
| | GPT-5 | 70.27 | 97.06 | 40.00 | 90.48 | 62.50 | 94.29 | 84.52 | 68.89 | 50.00 |
| | Llama3:8B | 0.00 | 70.54 | 16.07 | 72.26 | 54.55 | 83.33 | 79.17 | 100.00 | 0.00 |
| Accuracy | GPT-4o-mini | 79.50 | 73.50 | 92.00 | 74.50 | 70.50 | 70.50 | 80.50 | 74.00 | 79.50 |
| | GPT-4o | 77.00 | 63.00 | 90.00 | 72.00 | 66.50 | 60.00 | 82.50 | 65.50 | 81.00 |
| | GPT-5-mini | 50.00 | 72.22 | 87.88 | 75.25 | 54.55 | 71.21 | 76.77 | 64.14 | 43.43 |
| | Gemma3:4B | 76.68 | 61.66 | 92.75 | 56.48 | 64.77 | 61.14 | 76.68 | 55.96 | 66.84 |
| | GPT-4.1-nano | 74.00 | 63.00 | 77.00 | 60.50 | 62.00 | 62.50 | 60.50 | 57.50 | 76.50 |
| | GPT-5 | 78.68 | 63.96 | 92.89 | 62.94 | 66.50 | 63.96 | 52.28 | 61.42 | 72.59 |
| | Llama3:8B | 69.74 | 71.28 | 74.36 | 68.72 | 57.95 | 54.36 | 73.33 | 54.87 | 73.33 |

References

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Floreano, D.; Wood, R.J. Science, technology and the future of small autonomous drones. Nature 2015, 521, 460–466.

- Sun, J.; Li, B.; Jiang, Y.; Wen, C.y. A Camera-Based Target Detection and Positioning UAV System for Search and Rescue (SAR) Purposes. Sensors 2016, 16, 1778.

- Federal Aviation Administration. Accidents and Incidents: UAS Operator Responsibilities. AIM §11-8-4. 2025. Available online: https://www.faa.gov/air_traffic/publications/atpubs/aim_html/chap11_section_8.html (accessed on 27 July 2025).

- Kasprzyk, P.J.; Konert, A. Reporting and Investigation of Unmanned Aircraft Systems (UAS) Accidents and Serious Incidents—Regulatory Perspective. J. Intell. Robot. Syst. 2021, 103, 3.

- Ghasri, M.; Maghrebi, M. Factors affecting unmanned aerial vehicles’ safety: A post-occurrence exploratory data analysis of drones’ accidents and incidents in Australia. Saf. Sci. 2021, 139, 105273.

- Johnson, C.; Holloway, C. A survey of logic formalisms to support mishap analysis. Reliab. Eng. Syst. Saf. 2003, 80, 271–291.

- Federal Aviation Administration. 14 CFR § 107.9—Safety Event Reporting. 2025. Available online: https://www.ecfr.gov/current/title-14/part-107/section-107.9 (accessed on 27 July 2025).

- National Transportation Safety Board. The Investigative Process. 2025. Available online: https://www.ntsb.gov/investigations/process/Pages/default.aspx (accessed on 27 July 2025).

- Australian Transport Safety Bureau. Reporting Requirements for Remotely Piloted Aircraft (RPA). 2021. Available online: https://www.atsb.gov.au/reporting-requirements-rpa (accessed on 15 August 2025).

- European Parliament and Council of the European Union. Regulation (EU) No 376/2014 on the Reporting, Analysis and Follow-Up of Occurrences in Civil Aviation. 2014. Available online: https://eur-lex.europa.eu/eli/reg/2014/376/oj (accessed on 7 October 2025).

- European Commission. Commission Implementing Regulation (EU) 2019/947 of 24 May 2019 on the Rules and Procedures for the Operation of Unmanned Aircraft. 2019. Available online: https://eur-lex.europa.eu/eli/reg_impl/2019/947/oj (accessed on 7 October 2025).

- Grindley, B.; Phillips, K.; Parnell, K.J.; Cherrett, T.; Scanlan, J.; Plant, K.L. Over a decade of UAV incidents: A human factors analysis of causal factors. Appl. Ergon. 2024, 121, 104355.

- Boyd, D.D. Causes and risk factors for fatal accidents in non-commercial twin engine piston general aviation aircraft. Accid. Anal. Prev. 2015, 77, 113–119.

- Liu, Q.; Li, F.; Ng, K.K.H.; Han, J.; Feng, S. Accident Investigation via LLMs Reasoning: HFACS-guided Chain-of-Thoughts Enhance General Aviation Safety. Expert Syst. Appl. 2025, 269, 126422.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems: 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Virtual, 6–12 December 2020; Volume 33. Available online: https://proceedings.neurips.cc/paper/2020/file/1457c0d6bfcb4967418bfb8ac142f64a-Paper.pdf (accessed on 7 October 2025).

- Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.L.; Cao, Y.; Narasimhan, K. Tree of thoughts: Deliberate problem solving with large language models. In Proceedings of the Advances in Neural Information Processing Systems: 37th Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; Curran Associates, Inc.: Red Hook, NY, USA, 2023; Volume 36.

- Bran, A.M.; Cox, S.; Schilter, O.; Baldassari, C.; White, A.D.; Schwaller, P. Augmenting large language models with chemistry tools. Nat. Mach. Intell. 2024, 6, 525–535.

- Lu, Y.; Xu, C.; Wang, Y. Joint Computation Offloading and Trajectory Optimization for Edge Computing UAV: A KNN-DDPG Algorithm. Drones 2024, 8, 564.

- Chen, M.; Zhang, H.; Wan, C.; Zhao, W.; Xu, Y.; Wang, J.; Gu, X. On the effectiveness of large language models in domain-specific code generation. arXiv 2023, arXiv:2312.01639.

- Almusayli, A.; Zia, T.; Qazi, E.u.H. Drone Forensics: An Innovative Approach to the Forensic Investigation of Drone Accidents Based on Digital Twin Technology. Technologies 2024, 12, 11.

- NASA Aviation Safety Reporting System. ASRS Database Fields. Overview of ASRS Coding form Fields and Their Display/Search Availability. 2024. Available online: https://akama.arc.nasa.gov/ASRSDBOnline/pdf/ASRS_Database_Fields.pdf (accessed on 7 October 2025).

- Wiegmann, D.A.; Shappell, S.A. A Human Error Analysis of Commercial Aviation Accidents Using the Human Factors Analysis and Classification System (HFACS); Technical Report DOT/FAA/AM-01/3; U.S. Department of Transportation, Federal Aviation Administration, Office of Aviation Medicine: Washington, DC, USA, 2001.

- Department of the Air Force. Human Factors Analysis and Classification System (DoD HFACS) Version 8.0 Handbook; Technical Report; Air Force Safety Center, Department of the Air Force: Kirtland AFB, NM, USA, 2023; Available online: https://navalsafetycommand.navy.mil/Portals/100/Documents/DoD%20Human%20Factors%20Analysis%20and%20Classification%20System%20%28HFACS%29%208.0.pdf (accessed on 7 October 2025).

- NASA Aviation Safety Reporting System. ASRS Coding Taxonomy (ASRS Coding Form). Official Field List and Categorical Taxonomy for ASRS Database Online. 2024. Available online: https://asrs.arc.nasa.gov/docs/dbol/ASRS_CodingTaxonomy.pdf (accessed on 7 October 2025).

- International Civil Aviation Organization. Annex 13 to the Convention on International Civil Aviation—Aircraft Accident and Incident Investigation, 13th ed.; International Civil Aviation Organization: Montreal, QC, Canada, 2024.

- Wild, G.; Murray, J.; Baxter, G. Exploring civil drone accidents and incidents to help prevent potential air disasters. Aerospace 2016, 3, 22.

- Tvaryanas, A.P.; Thompson, W.T.; Constable, S.H. U.S. Military Unmanned Aerial Vehicle Mishaps: Assessment of the Role of Human Factors Using Human Factors Analysis and Classification System (HFACS); Technical Report HSW-PE-BR-TR-2005-0001; United States Air Force, 311th Human Systems Wing, Performance Enhancement Directorate, Performance Enhancement Research Division: Brooks City-Base, TX, USA, 2005; Available online: https://archive.org/details/DTIC_ADA435063 (accessed on 7 October 2025).

- Federal Aviation Administration. Small Unmanned Aircraft Systems (UAS) Regulations (Part 107); Federal Aviation Administration: Washington, DC, USA, 2025. Available online: https://www.faa.gov/newsroom/small-unmanned-aircraft-systems-uas-regulations-part-107 (accessed on 15 August 2025).

- FAA Center of Excellence for Unmanned Aircraft Systems (ASSURE). Identify Flight Recorder Requirements for Unmanned Aircraft Systems Integration into the National Airspace System (Project A55)—Final Report; Technical Report; Mississippi State University: Starkville, MS, USA, 2024; Available online: https://assureuas.org/wp-content/uploads/2021/06/A55_Final-Report.pdf (accessed on 7 October 2025).

- Reason, J. Managing the Risks of Organizational Accidents; Ashgate: Aldershot, UK, 1997.

- Reason, J. Human error: Models and management. BMJ 2000, 320, 768–770.

- Reason, J. The contribution of latent human failures to the breakdown of complex systems. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 1990, 327, 475–484.

- Shappell, S.A.; Wiegmann, D.A. The Human Factors Analysis and Classification System (HFACS); Technical Report DOT/FAA/AM-00/7; Federal Aviation Administration, Office of Aviation Medicine: Washington, DC, USA, 2000.

- Wiegmann, D.A.; Shappell, S.A. A Human Error Approach to Aviation Accident Analysis: The Human Factors Analysis and Classification System; Ashgate: Aldershot, UK, 2003.

- El Safany, R.; Bromfield, M.A. A human factors accident analysis framework for UAV loss of control in flight. Aeronaut. J. 2025, 129, 1723–1749.

- Alharasees, O.; Kale, U. Human Factors and AI in UAV Systems: Enhancing Operational Efficiency Through AHP and Real-Time Physiological Monitoring. J. Intell. Robot. Syst. 2025, 111, 5.

- Tanguy, N.; Tulechki, N.; Urieli, A.; Hermann, E.; Raynal, C. Natural Language Processing for Aviation Safety Reports: From Classification to Interactive Analysis. Comput. Ind. 2016, 78, 80–95.

- Chandra, C.; Jing, X.; Bendarkar, M.V.; Sawant, K.; Elias, L.R.; Kirby, M.; Mavris, D.N. AviationBERT: A Preliminary Aviation-Specific Natural Language Model. In Proceedings of the AIAA AVIATION 2023 Forum, San Diego, CA, USA, 12–16 June 2023.

- Andrade, S.R.; Walsh, H.S. SafeAeroBERT: Towards a Safety-Informed Aerospace-Specific Language Model. In Proceedings of the AIAA AVIATION 2023 Forum, San Diego, CA, USA, 12–16 June 2023.

- Wang, L.; Chou, J.; Zhou, X.; Tien, A.; Baumgartner, D.M. AviationGPT: A Large Language Model for the Aviation Domain. arXiv 2023, arXiv:2311.17686.

- Yang, C.; Huang, C. Natural Language Processing (NLP) in Aviation Safety: Systematic Review of Research and Outlook into the Future. Aerospace 2023, 10, 600.

- Nanyonga, A.; Joiner, K.; Turhan, U.; Wild, G. Applications of Natural Language Processing in Aviation Safety: A Review and Qualitative Analysis. arXiv 2025, arXiv:2501.06210.

- Taranto, M.T. A Human Factors Analysis of USAF Remotely Piloted Aircraft Mishaps. Master’s Thesis, Naval Postgraduate School, Monterey, CA, USA, 2013. Available online: https://hdl.handle.net/10945/34751 (accessed on 7 October 2025).

- Light, T.; Hamilton, T.; Pfeifer, S. Trends in U.S. Air Force Aircraft Mishap Rates (1950–2018); Technical Report RRA257-1; RAND Corporation, Santa Monica, CA, USA, 2020. Available online: https://www.rand.org/pubs/research_reports/RRA257-1.html (accessed on 7 October 2025).

- Zibaei, E.; Borth, R. Building Causal Models for Finding Actual Causes of Unmanned Aerial Vehicle Failures. Front. Robot. AI 2024, 11, 1123762.

- Editya, A.S.; Ahmad, T.; Studiawan, H. Visual Instruction Tuning for Drone Accident Forensics. HighTech Innov. J. 2024, 5, 870–884.

- Clothier, R.A.; Williams, B.P.; Fulton, N.L. Structuring the Safety Case for Unmanned Aircraft System Operations in Non-Segregated Airspace. Saf. Sci. 2015, 79, 213–228.

- Bhatt, U.; Xiang, A.; Sharma, S.; Weller, A.; Taly, A.; Jia, Y.; Ghosh, J.; Puri, R.; Moura, J.M.F.; Eckersley, P. Explainable Machine Learning in Deployment. In Proceedings of the ACM Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 648–657.

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? In Proceedings of the ACM Conference on Fairness, Accountability, and Transparency, Virtual, 3–10 March 2021; pp. 610–623.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 248.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. In Proceedings of the Advances in Neural Information Processing Systems: 36th Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 24824–24837. Available online: https://proceedings.neurips.cc/paper_files/paper/2022/hash/9d5609613524ecf4f15af0f7b31abca4-Abstract-Conference.html (accessed on 7 October 2025).

- Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.; Chi, E.; Narang, S.; Chowdhery, A.; Zhou, D. Self-consistency improves chain of thought reasoning in language models. arXiv 2022, arXiv:2203.11171.

- Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large Language Models are Zero-Shot Reasoners. In Proceedings of the Advances in Neural Information Processing Systems: 36th Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 22199–22213.

- Zhang, Z.; Zhang, A.; Li, M.; Smola, A. Automatic Chain of Thought Prompting in Large Language Models. arXiv 2022, arXiv:2210.03493.

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large language models encode clinical knowledge. Nature 2023, 620, 172–180.

- Wu, Q.; Bansal, G.; Zhang, J.; Wu, Y.; Li, B.; Zhu, E.; Jiang, L.; Zhang, X.; Zhang, S.; Liu, J.; et al. Autogen: Enabling next-gen LLM applications via multi-agent conversation. arXiv 2023, arXiv:2308.08155.

- Park, J.S.; O’Brien, J.C.; Cai, C.J.; Morris, M.R.; Liang, P.; Bernstein, M.S. Generative Agents: Interactive Simulacra of Human Behavior. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (UIST’23), San Francisco, CA, USA, 29 October–1 November 2023; ACM: New York, NY, USA, 2023. 22p.

- Hong, S.; Zhuge, M.; Chen, J.; Zheng, X.; Cheng, Y.; Zhang, C.; Wang, J.; Wang, Z.; Yau, S.K.S.; Lin, Z.; et al. MetaGPT: Meta Programming for a Multi-Agent Collaborative Framework. arXiv 2023, arXiv:2308.00352.

- Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.; Cao, Y. ReAct: Synergizing Reasoning and Acting in Language Models. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

- Asai, A.; Wu, Z.; Wang, Y.; Sil, A.; Hajishirzi, H. Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection. arXiv 2023, arXiv:2310.11511.

- Jiang, Z.; Xu, F.; Gao, L.; Sun, Z.; Liu, Q.; Dwivedi-Yu, J.; Yang, Y.; Callan, J.; Neubig, G. Active Retrieval Augmented Generation. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP), Singapore, 6–10 December 2023; pp. 7969–7992.

- Edge, D.; Trinh, H.; Cheng, N.; Bradley, J.; Chao, A.; Mody, A.; Truitt, S.; Metropolitansky, D.; Ness, R.O.; Larson, J. From Local to Global: A Graph RAG Approach to Query-Focused Summarization. arXiv 2024, arXiv:2404.16130.

- Leveson, N.G. An Introduction to System Safety Engineering; MIT Press: Cambridge, MA, USA, 2023.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2020), Virtual, 6–12 December 2020; Volume 33, pp. 9459–9474.

- National Transportation Safety Board. Aviation Accident Database & Synopses. 2025. Available online: https://www.ntsb.gov/Pages/AviationQueryV2.aspx (accessed on 7 October 2025).

- U.S. Government Accountability Office. Drones: FAA Should Improve Its Approach to Integrating Drones into the National Airspace System; Technical Report GAO-23-105189; U.S. Government Accountability Office: Washington, DC, USA, 2023.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Hasson, F.; Keeney, S.; McKenna, H. Research guidelines for the Delphi survey technique. J. Adv. Nurs. 2000, 32, 1008–1015.

- Diamond, I.R.; Grant, R.C.; Feldman, B.M.; Pencharz, P.B.; Ling, S.C.; Moore, A.M.; Wales, P.W. Defining consensus: A systematic review recommends methodologic criteria for reporting of Delphi studies. J. Clin. Epidemiol. 2014, 67, 401–409.

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159–174.

- Lawshe, C.H. A quantitative approach to content validity. Pers. Psychol. 1975, 28, 563–575.

- Leveson, N. Engineering a Safer World: Systems Thinking Applied to Safety; MIT Press: Cambridge, MA, 2011. [Google Scholar]

- Pearl, J. Causality: Models, Reasoning, and Inference, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

| HFACS Category | Positive Cases | Frequency (%) | Avg. Confidence | Level |
|---|---|---|---|---|
| Level 1: Unsafe Acts | | | | |
| Performance/Skill-Based Errors | 123 | 61.5 | 0.875 | L1 |
| Judgment & Decision-Making Errors | 155 | 77.5 | 0.880 | L1 |
| Known Deviations | 79 | 39.5 | 0.920 | L1 |
| Level 2: Preconditions | | | | |
| Mental Awareness Conditions | 125 | 62.5 | 0.844 | L2 |
| State of Mind Conditions | 42 | 21.0 | 0.806 | L2 |
| Adverse Physiological Conditions | 21 | 10.5 | 0.798 | L2 |
| Physical Environment | 43 | 21.5 | 0.858 | L2 |
| Technological Environment | 76 | 38.0 | 0.887 | L2 |
| Team Coordination/Communication Factors | 65 | 32.5 | 0.834 | L2 |
| Training Conditions | 57 | 28.5 | 0.800 | L2 |
| Level 3: Unsafe Supervision | | | | |
| Supervisory Climate/Unit Safety Culture | 105 | 52.5 | 0.807 | L3 |
| Supervisory Known Deviations | 12 | 6.0 | 0.833 | L3 |
| Ineffective Supervision | 126 | 63.0 | 0.825 | L3 |
| Ineffective Planning & Coordination | 87 | 43.5 | 0.793 | L3 |
| Level 4: Organizational Influences | | | | |
| Organizational Climate/Culture | 104 | 52.0 | 0.767 | L4 |
| Policy, Procedures, or Process Issues | 155 | 77.5 | 0.852 | L4 |
| Resource Support Problems | 93 | 46.5 | 0.817 | L4 |
| Training Program Problems | 54 | 27.0 | 0.809 | L4 |
| Total Records | 200 | 100.0 | 0.838 | All |
| Total Positive Instances | 1522 | 42.3 (1522/3600) | 0.838 | All |
| Avg. Factors per Record | 7.6 | – | – | All |
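The summary rows at the foot of the table can be re-derived from the 18 per-category rows. The sketch below is a minimal Python consistency check, not part of the authors' pipeline. It assumes (our reading, not stated in the table) that the 42.3% figure is measured against all 18 × 200 = 3600 category/record pairs and that the 0.838 in the total rows is the mean confidence weighted by positive cases; a plain unweighted mean of the 18 category confidences would give about 0.834 instead. The names `CATEGORIES` and `summarize` are illustrative only.

```python
# Per-category (positive cases, average confidence) transcribed from the table above.
# Dictionary order follows the table: Levels 1 to 4.
CATEGORIES = {
    "Performance/Skill-Based Errors": (123, 0.875),
    "Judgment & Decision-Making Errors": (155, 0.880),
    "Known Deviations": (79, 0.920),
    "Mental Awareness Conditions": (125, 0.844),
    "State of Mind Conditions": (42, 0.806),
    "Adverse Physiological Conditions": (21, 0.798),
    "Physical Environment": (43, 0.858),
    "Technological Environment": (76, 0.887),
    "Team Coordination/Communication Factors": (65, 0.834),
    "Training Conditions": (57, 0.800),
    "Supervisory Climate/Unit Safety Culture": (105, 0.807),
    "Supervisory Known Deviations": (12, 0.833),
    "Ineffective Supervision": (126, 0.825),
    "Ineffective Planning & Coordination": (87, 0.793),
    "Organizational Climate/Culture": (104, 0.767),
    "Policy, Procedures, or Process Issues": (155, 0.852),
    "Resource Support Problems": (93, 0.817),
    "Training Program Problems": (54, 0.809),
}
N_RECORDS = 200


def summarize(categories, n_records):
    """Recompute the summary rows from per-category counts and confidences."""
    total_pos = sum(n for n, _ in categories.values())
    slots = len(categories) * n_records  # 18 categories x 200 records = 3600
    return {
        # Frequency (%) = positive cases / number of records
        "frequency_pct": {k: 100 * n / n_records for k, (n, _) in categories.items()},
        "total_positive": total_pos,
        # Share of all category/record pairs that were flagged positive
        "positive_share_pct": 100 * total_pos / slots,
        "factors_per_record": total_pos / n_records,
        # Assumption: confidence weighted by the number of positive cases
        "weighted_conf": sum(n * c for n, c in categories.values()) / total_pos,
        # For comparison: plain mean over the 18 categories
        "unweighted_conf": sum(c for _, c in categories.values()) / len(categories),
    }


if __name__ == "__main__":
    s = summarize(CATEGORIES, N_RECORDS)
    print(s["total_positive"])                # 1522
    print(round(s["positive_share_pct"], 1))  # 42.3
    print(round(s["factors_per_record"], 1))  # 7.6
    print(round(s["weighted_conf"], 3))       # 0.838
    print(round(s["unweighted_conf"], 3))     # 0.834
```

Only the weighted mean reproduces the reported 0.838, which is why the sketch treats that as the likely aggregation; the per-category Frequency (%) values follow directly from dividing by the 200 records.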

| Error Pattern | Count (%) | Description & Top Categories |
|---|---|---|
| Hallucinations (False Positives) | 304 of 3546 (8.57%) | Percentages in the three rows below are shares of these 304 cases. |
| Category Confusion | 201 (66.1%) | Misidentification within the same HFACS level. Top: PC100 (48), PE100 (36), PE200 (32). |
| Level Confusion | 99 (32.6%) | Attribution to the wrong hierarchical level; most often L1 confused with L2. |
| Spurious Detection | 4 (1.3%) | Detection of non-existent factors. |
| Omissions (False Negatives) | 654 of 3546 (18.44%) | Percentages in the three rows below are shares of these 654 cases. |
| Organizational Blindness | 245 (37.5%) | Missing Level 4 factors. Top: OP000 (81), OC000 (69), OR000 (62). |
| Supervisory Omission | 173 (26.5%) | Missing Level 3 factors. Top: SC000 (70), SI000 (67). |
| Other Omissions | 236 (36.1%) | Missing Level 1–2 factors in complex incidents. |
| Overall Accuracy | 2588 of 3546 (72.98%) | 3546 - 304 - 654 = 2588 correct. |
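The error-pattern figures are internally consistent: false positives and omissions together account for 958 of the 3546 evaluated items, leaving the 2588 correct ones behind the 72.98% accuracy. The Python sketch below reproduces those rates and the within-group shares from the counts. It also includes a hypothetical rule for sorting one false-positive code into the three hallucination types. That rule, the `classify_false_positive` helper, and the assumption that the first letter of a code gives its tier (A, P, S, O for Levels 1 to 4, consistent with the PC/PE, SC/SI, and OC/OP/OR codes listed) are illustrative assumptions, not the procedure used in the study.

```python
# Counts transcribed from the error-pattern table above.
TOTAL_ITEMS = 3546
FALSE_POSITIVES = {"category_confusion": 201, "level_confusion": 99, "spurious": 4}
FALSE_NEGATIVES = {"organizational": 245, "supervisory": 173, "other": 236}


def pct(part, whole):
    return 100 * part / whole


def error_summary():
    """Recompute the error rates, accuracy, and within-group shares."""
    fp = sum(FALSE_POSITIVES.values())
    fn = sum(FALSE_NEGATIVES.values())
    correct = TOTAL_ITEMS - fp - fn
    return {
        "fp": fp,
        "fn": fn,
        "correct": correct,
        "fp_rate": pct(fp, TOTAL_ITEMS),
        "fn_rate": pct(fn, TOTAL_ITEMS),
        "accuracy": pct(correct, TOTAL_ITEMS),
        # Sub-row percentages are shares of their own group, not of TOTAL_ITEMS
        "fp_shares": {k: pct(v, fp) for k, v in FALSE_POSITIVES.items()},
        "fn_shares": {k: pct(v, fn) for k, v in FALSE_NEGATIVES.items()},
    }


# Hypothetical: the first letter of an HFACS code is assumed to give its tier.
TIER = {"A": 1, "P": 2, "S": 3, "O": 4}


def classify_false_positive(predicted_code, gold_codes):
    """Hypothetical rule for labelling a predicted code that is absent from the gold set."""
    if predicted_code in gold_codes:
        raise ValueError("not a false positive: code is in the gold set")
    level = TIER[predicted_code[0]]
    gold_levels = {TIER[code[0]] for code in gold_codes}
    if level in gold_levels:
        return "category_confusion"  # a different code exists at the same tier
    if gold_levels:
        return "level_confusion"  # gold factors exist, but only at other tiers
    return "spurious"  # nothing in the gold set to confuse it with


if __name__ == "__main__":
    s = error_summary()
    print(s["fp"], s["fn"], s["correct"])  # 304 654 2588
    print(round(s["accuracy"], 2))         # 72.98
```

Under this hypothetical rule, a predicted PE200 scored against gold codes PE100 and SI000 would count as category confusion, because a different Level 2 code is present, whereas a predicted PC100 against only SC000 and OP000 would count as level confusion.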

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

MDPI and ACS Style: Yan, Y.; Li, B.; Lodewijks, G. UAV Accident Forensics via HFACS-LLM Reasoning: Low-Altitude Safety Insights. Drones 2025, 9, 704. https://doi.org/10.3390/drones9100704

AMA Style: Yan Y, Li B, Lodewijks G. UAV Accident Forensics via HFACS-LLM Reasoning: Low-Altitude Safety Insights. Drones. 2025; 9(10):704. https://doi.org/10.3390/drones9100704

Chicago/Turabian Style: Yan, Yuqi, Boyang Li, and Gabriel Lodewijks. 2025. "UAV Accident Forensics via HFACS-LLM Reasoning: Low-Altitude Safety Insights" Drones 9, no. 10: 704. https://doi.org/10.3390/drones9100704

APA Style: Yan, Y., Li, B., & Lodewijks, G. (2025). UAV Accident Forensics via HFACS-LLM Reasoning: Low-Altitude Safety Insights. Drones, 9(10), 704. https://doi.org/10.3390/drones9100704