A Survey on Vision-Based Anti Unmanned Aerial Vehicles Methods

Abstract

1. Introduction

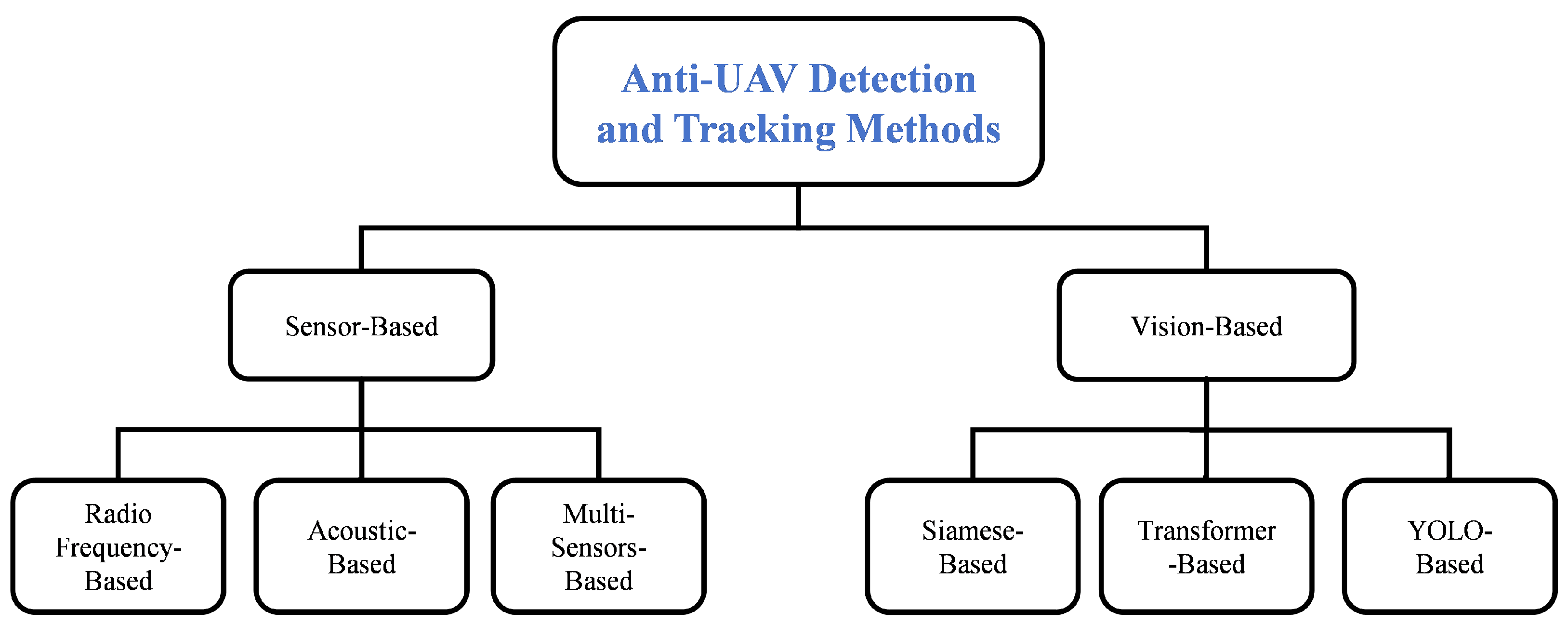

- This paper surveys recent methods for anti-UAV detection. We classify the collected methods according to the type of backbone used in each article and the scenario in which it is applied. The methods are divided into Sensor-Based methods and Vision-Based methods, and typical examples are outlined to illustrate the main ideas.

- We collect and summarize the public anti-UAV datasets, including RGB images, infrared images, and acoustic data. The dataset links are also provided so that readers can access them quickly.

- The advantages and disadvantages of existing anti-UAV methods are analyzed. We discuss in detail the limitations of anti-UAV datasets and methods, and suggest five potential directions for future research.

2. Analysis of Surveyed Literature

2.1. Statistics of Surveyed Literature

2.2. Method Classification of Surveyed Literature

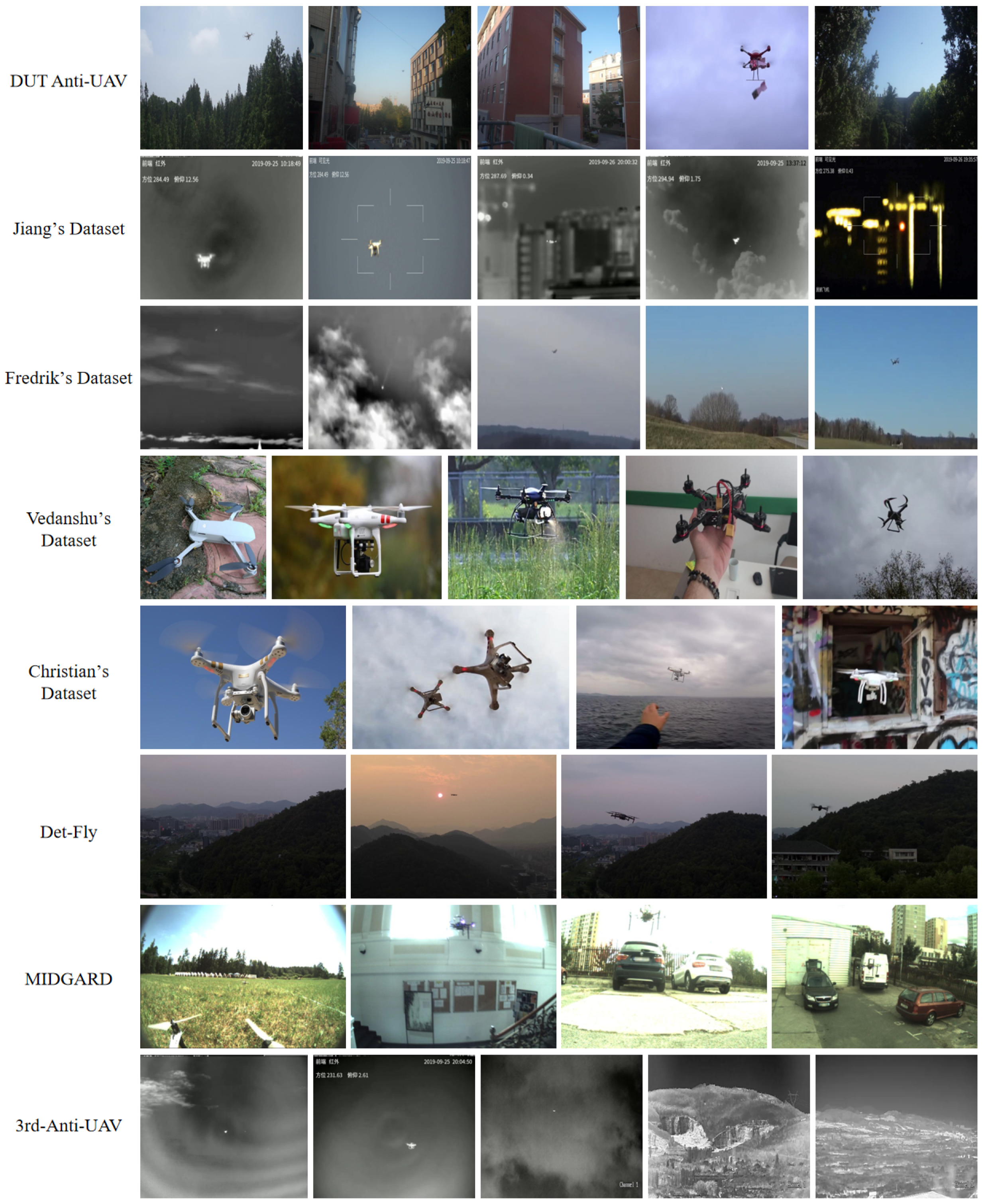

3. Anti-UAV Detection and Tracking Datasets

4. Anti-UAV Detection and Tracking Methods

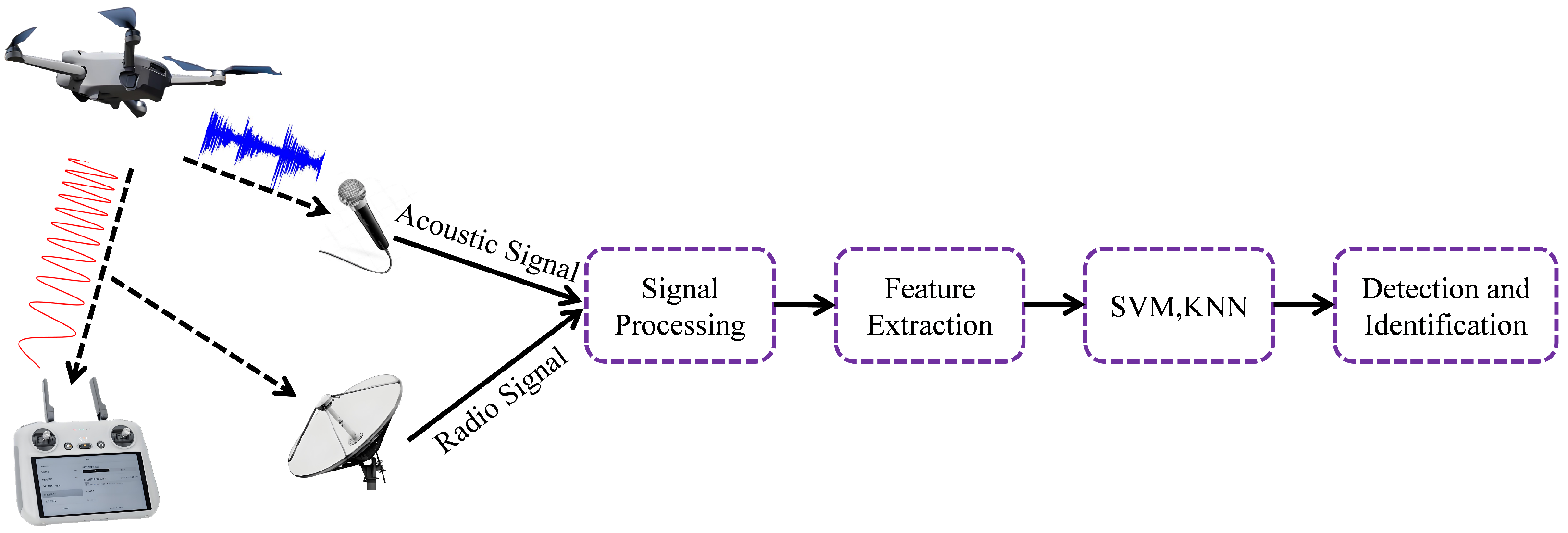

4.1. Sensor-Based Methods

4.1.1. RF-Based

4.1.2. Acoustic-Based

4.1.3. Multi-Sensors-Based

4.2. Vision-Based Methods

4.2.1. Siamese-Based

4.2.2. Transformer-Based

4.2.3. YOLO-Based

4.3. Discussions on the Results of Methods

4.3.1. Evaluation Metrics

4.3.2. Results of the Methods

5. Discussions

5.1. Discussions on the Limitations of Datasets

- Lack of multi-target datasets: Most of the publicly available datasets we collected focus on single-target tracking, with minimal interference from surrounding objects (such as birds, balloons, or other flying objects). Datasets for multi-target tracking in complex scenarios are lacking. This limitation creates a significant gap between research and actual application needs. With the rapid development of small drone technology, scenarios involving multiple drones working in coordination are expected to become increasingly common. However, existing datasets are insufficient to fully support research and development in these complex scenarios.

- Low resolution and quality: Some datasets suffer from low-resolution images and videos, which can hinder the development and evaluation of high-precision detection and tracking algorithms, especially when identifying small or distant drones. Illumination can also affect the appearance of drones.

- Scenarios: Although some datasets contain a large number of images, many of the images show similar scenes and focus on specific conditions or environments, such as urban or rural settings, or day or night scenes. This limits the generalizability of the evaluated techniques in the real world.

- UAV types: Existing datasets may include only a few types of drones, whereas in reality there is a wide variety of drones with different appearances, sizes, and flight characteristics. Recently, many bionic drones have been produced and appear in many scenes. They are harder to spot because of their stronger concealment.
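The limitations listed above can be made measurable by auditing a dataset's annotations before it is used. The following is a minimal sketch and not part of the surveyed work: it assumes a hypothetical annotation layout in which every frame is a list of `(x, y, w, h)` boxes in pixels, and the function name `audit_annotations` is ours. It reports the share of multi-target frames and the median target size relative to the image.

```python
from statistics import median


def audit_annotations(frames, image_width, image_height):
    """Summarise target count and relative target size.

    `frames` is a hypothetical layout: one list of (x, y, w, h) boxes
    per frame, with an empty list when no UAV is annotated.
    """
    image_area = image_width * image_height
    annotated = [boxes for boxes in frames if boxes]
    multi_target = sum(1 for boxes in annotated if len(boxes) > 1)
    relative_areas = [
        (w * h) / image_area for boxes in annotated for (_, _, w, h) in boxes
    ]
    return {
        "frames": len(frames),
        "empty_frames": len(frames) - len(annotated),
        # Share of annotated frames that contain more than one target.
        "multi_target_ratio": multi_target / len(annotated) if annotated else 0.0,
        # Median box area as a fraction of the image area.
        "median_relative_area": median(relative_areas) if relative_areas else 0.0,
    }
```

A low `multi_target_ratio` or a very small `median_relative_area` would flag the single-target and small-target biases discussed above.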

5.2. Discussions on the Limitations of Methods

- Lack of uniform assessment rules: Although many methods use their own metrics for experimental comparison, uniform assessment rules are still lacking. Moreover, because many approaches are designed and implemented under different running environments, it is challenging to evaluate the methods fairly.

- Unreported model size: Quite a few papers do not report the size of their models. Model size matters for real-time applications because lightweight models are more likely to be deployed on embedded devices.

- Difficulty in achieving a trade-off between speed and accuracy: Rapidly flying UAVs must be detected accurately within a short time. However, algorithms that achieve high accuracy usually have high complexity and therefore need more computation resources.

- Insufficient generalization ability: Due to the lack of diversity in the datasets, a network model may overfit to specific scenarios during training, resulting in insufficient generalization ability. This means that performance may decrease when the model is applied to a new and different environment. In continuous day-to-night monitoring scenarios in particular, it is difficult for one model to cover around-the-clock surveillance.

- The detection and tracking of UAV swarms remain underdeveloped: Current technologies face significant challenges in handling multi-target recognition, trajectory prediction, and addressing occlusions and interferences in complex environments. Particularly, during UAV swarm flights, the close distance between individuals, along with varying attitudes and speeds, makes it difficult for traditional detection algorithms to maintain efficient and accurate recognition.
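To illustrate what a shared assessment protocol could look like, the sketch below reports accuracy, model size, and speed side by side for one method. It is an assumption-laden example rather than the protocol of any surveyed paper: boxes are taken to be `(x, y, w, h)` tuples, the overlap threshold of 0.5 is a common but arbitrary choice, and the function and field names (`success_rate`, `report`, `params_millions`) are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_rate(predictions, ground_truth, threshold=0.5):
    """Fraction of frames whose predicted box reaches the IoU threshold."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have equal length")
    if not ground_truth:
        return 0.0
    hits = sum(
        1 for p, g in zip(predictions, ground_truth) if iou(p, g) >= threshold
    )
    return hits / len(ground_truth)


def report(name, predictions, ground_truth, num_parameters, seconds_elapsed):
    """Bundle accuracy, model size, and speed into one comparable record."""
    frames = len(ground_truth)
    return {
        "method": name,
        "success_rate": success_rate(predictions, ground_truth),
        "params_millions": num_parameters / 1e6,
        "fps": frames / seconds_elapsed if seconds_elapsed > 0 else float("inf"),
    }
```

Reporting the three quantities together, measured on the same hardware, would make the speed and accuracy trade-off and the model size directly comparable across methods.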

5.3. Future Research Directions

- Image super-resolution reconstruction: In infrared scenarios, anti-UAV systems often operate at long distances [157], where the image resolution is very low and the images often contain many artifacts. Super-resolution techniques can recover additional details from low-resolution images, making the appearance, shape, and size of UAVs clearer. When a drone moves quickly or away from the camera, super-resolution can help restore lost image details and maintain tracking continuity. However, image super-resolution usually requires significant computing resources, so algorithms need to be optimized to balance computational efficiency and image quality. With this caveat, image super-resolution reconstruction can be considered a critical technology for small UAV target detection and tracking.

- Autonomous learning capability: As UAV technology becomes increasingly intelligent, UAVs can autonomously take countermeasures when they detect interference during flight. For example, they might change communication protocols, switch transmission frequencies to avoid being intercepted, or even dynamically adjust flight strategies. This advancement in intelligence imposes higher demands on anti-UAV detection and tracking algorithms. To address these challenges effectively, such algorithms need autonomous learning capabilities and the ability to make real-time decisions, thereby adapting to and countering the evolving intelligent behaviors of UAVs. This requires algorithms not only to be highly flexible and adaptive but also to maintain effective tracking and countermeasure capabilities in complex environments.

- Integration of multimodal perception techniques: In Section 4, we discussed in detail the advantages and disadvantages of Sensor-Based and Vision-Based methods. These two approaches can play complementary roles in anti-UAV technology. While Sensor-Based methods may be affected by environmental noise, Vision-Based methods can provide additional information to compensate for this interference. Therefore, combining the two approaches can significantly enhance UAV detection and identification capabilities under various environments and conditions. Although Section 4.1.3 discussed Multi-Sensors-Based methods, these approaches have primarily been explored at the experimental stage, indicating considerable room for improvement in practical applications.

- Countering multi-agent collaborative operations: With the continuous advancement of drone technology, the trends of increasing intelligence and falling costs are becoming more evident, leading to more frequent scenarios in which multiple intelligent UAVs work collaboratively. This collaborative operation mode offers significant advantages in complex tasks; however, it also presents new challenges for anti-drone technology. Existing detection and tracking algorithms may perform well against single targets, but when faced with multiple intelligent UAVs operating collaboratively, they may suffer from decreased accuracy, target loss, and other issues. Therefore, developing anti-drone technologies that can effectively counter multi-agent collaborative operations has become a critical direction for current technological development.

- Anti-interference capability: In practical applications, anti-UAV systems need not only to detect and track UAVs but also to possess strong anti-interference capabilities. It is crucial to accurately distinguish UAVs from similar objects such as birds, kites, and balloons, so that the system operates stably in various complex environments.
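The complementary use of sensor and vision cues suggested in the multimodal direction above can be sketched as a simple late fusion of per-modality confidences. This is only one of many possible fusion schemes and is not taken from any surveyed method: the scores are assumed to lie in [0, 1], and the weight of 0.6, the threshold of 0.5, and the function names `fuse_confidences` and `is_uav` are hypothetical.

```python
def fuse_confidences(vision_score, sensor_score, vision_weight=0.6):
    """Weighted late fusion of two detection confidences in [0, 1].

    Either score may be None when that modality produced no reading
    (for example, a camera blinded by fog); the other modality is then
    used on its own.
    """
    if not 0.0 <= vision_weight <= 1.0:
        raise ValueError("vision_weight must lie in [0, 1]")
    if vision_score is None and sensor_score is None:
        return 0.0
    if vision_score is None:
        return sensor_score
    if sensor_score is None:
        return vision_score
    return vision_weight * vision_score + (1.0 - vision_weight) * sensor_score


def is_uav(vision_score, sensor_score, threshold=0.5):
    """Declare a UAV when the fused confidence reaches the threshold."""
    return fuse_confidences(vision_score, sensor_score) >= threshold
```

For example, a confident visual detection (0.9) can outweigh a weak RF or acoustic reading (0.2), while two weak readings (0.3 and 0.4) stay below the threshold. In practice the weight would need to be tuned, or learned, per environment.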

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| RF | Radio Frequency |
| SVM | Support Vector Machine |
| CNN | Convolutional Neural Network |
| KNN | k-Nearest Neighbors |
| SNR | Signal-to-Noise Ratio |
| YOLO | You Only Look Once |
| STFT | Short-Time Fourier Transform |

References

- Fan, J.; Yang, X.; Lu, R.; Xie, X.; Li, W. Design and implementation of intelligent inspection and alarm flight system for epidemic prevention. Drones 2021, 5, 68. [Google Scholar] [CrossRef]

- Filkin, T.; Sliusar, N.; Ritzkowski, M.; Huber-Humer, M. Unmanned aerial vehicles for operational monitoring of landfills. Drones 2021, 5, 125. [Google Scholar] [CrossRef]

- McEnroe, P.; Wang, S.; Liyanage, M. A survey on the convergence of edge computing and AI for UAVs: Opportunities and challenges. IEEE Internet Things J. 2022, 9, 15435–15459. [Google Scholar] [CrossRef]

- Wang, Z.; Cao, Z.; Xie, J.; Zhang, W.; He, Z. RF-based drone detection enhancement via a Generalized denoising and interference-removal framework. IEEE Signal Process. Lett. 2024, 31, 929–933. [Google Scholar] [CrossRef]

- Zhou, T.; Xin, B.; Zheng, J.; Zhang, G.; Wang, B. Vehicle detection based on YOLOv7 for drone aerial visible and infrared images. In Proceedings of the 2024 6th International Conference on Image Processing and Machine Vision (IPMV), Macau, China, 12–14 January 2024; pp. 30–35. [Google Scholar]

- Shen, Y.; Pan, Z.; Liu, N.; You, X. Performance analysis of legitimate UAV surveillance system with suspicious relay and anti-surveillance technology. Digit. Commun. Netw. 2022, 8, 853–863. [Google Scholar] [CrossRef]

- Lin, N.; Tang, H.; Zhao, L.; Wan, S.; Hawbani, A.; Guizani, M. A PDDQNLP algorithm for energy efficient computation offloading in UAV-assisted MEC. IEEE Trans. Wirel. Commun. 2023, 22, 8876–8890. [Google Scholar] [CrossRef]

- Cheng, N.; Wu, S.; Wang, X.; Yin, Z.; Li, C.; Chen, W.; Chen, F. AI for UAV-assisted IoT applications: A comprehensive review. IEEE Internet Things J. 2023, 10, 14438–14461. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhang, H.; Gao, X.; Zhang, S.; Yang, C. UAV target detection for IoT via enhancing ERP component by brain–computer interface system. IEEE Internet Things J. 2023, 10, 17243–17253. [Google Scholar] [CrossRef]

- Wang, B.; Li, C.; Zou, W.; Zheng, Q. Foreign object detection network for transmission lines from unmanned aerial vehicle images. Drones 2024, 8, 361. [Google Scholar] [CrossRef]

- Saha, B.; Kunze, S.; Poeschl, R. Comparative study of deep learning model architectures for drone detection and classification. In Proceedings of the 2024 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Madrid, Spain, 12 August 2024; pp. 167–172. [Google Scholar]

- Khawaja, W.; Semkin, V.; Ratyal, N.I.; Yaqoob, Q.; Gul, J.; Guvenc, I. Threats from and countermeasures for unmanned aerial and underwater vehicles. Sensors 2022, 22, 3896. [Google Scholar] [CrossRef]

- Zamri, F.N.M.; Gunawan, T.S.; Yusoff, S.H.; Alzahrani, A.A.; Bramantoro, A.; Kartiwi, M. Enhanced small drone detection using optimized YOLOv8 with attention mechanisms. IEEE Access 2024, 12, 90629–90643. [Google Scholar] [CrossRef]

- Elsayed, M.; Reda, M.; Mashaly, A.S.; Amein, A.S. LERFNet: An enlarged effective receptive field backbone network for enhancing visual drone detection. Vis. Comput. 2024, 40, 1–14. [Google Scholar] [CrossRef]

- Kunze, S.; Saha, B. Long short-term memory model for drone detection and classification. In Proceedings of the 2024 4th URSI Atlantic Radio Science Meeting (AT-RASC), Meloneras, Spain, 19–24 May 2024; pp. 1–4. [Google Scholar]

- Li, Y.; Fu, M.; Sun, H.; Deng, Z.; Zhang, Y. Radar-based UAV swarm surveillance based on a two-stage wave path difference estimation method. IEEE Sens. J. 2022, 22, 4268–4280. [Google Scholar] [CrossRef]

- Xiao, J.; Chee, J.H.; Feroskhan, M. Real-time multi-drone detection and tracking for pursuit-evasion with parameter search. IEEE Trans. Intell. Veh. 2024, 1–11. [Google Scholar] [CrossRef]

- Deng, A.; Han, G.; Zhang, Z.; Chen, D.; Ma, T.; Liu, Z. Cross-parallel attention and efficient match transformer for aerial tracking. Remote Sens. 2024, 16, 961. [Google Scholar] [CrossRef]

- Nguyen, D.D.; Nguyen, D.T.; Le, M.T.; Nguyen, Q.C. FPGA-SoC implementation of YOLOv4 for flying-object detection. J. Real-Time Image Process. 2024, 21, 63. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, J.; Li, D.; Wang, D. Vision-based anti-uav detection and tracking. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25323–25334. [Google Scholar] [CrossRef]

- Sun, Y.; Li, J.; Wang, L.; Xv, J.; Liu, Y. Deep Learning-based drone acoustic event detection system for microphone arrays. Multimed. Tools Appl. 2024, 83, 47865–47887. [Google Scholar] [CrossRef]

- Yu, Q.; Ma, Y.; He, J.; Yang, D.; Zhang, T. A unified transformer based tracker for anti-uav tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3036–3046. [Google Scholar]

- Jiang, N.; Wang, K.; Peng, X.; Yu, X.; Wang, Q.; Xing, J.; Li, G.; Guo, G.; Ye, Q.; Jiao, J.; et al. Anti-uav: A large-scale benchmark for vision-based uav tracking. IEEE Trans. Multimed. 2021, 25, 486–500. [Google Scholar] [CrossRef]

- Wang, C.; Wang, T.; Wang, E.; Sun, E.; Luo, Z. Flying small target detection for anti-UAV based on a Gaussian mixture model in a compressive sensing domain. Sensors 2019, 19, 2168. [Google Scholar] [CrossRef]

- Sheu, B.H.; Chiu, C.C.; Lu, W.T.; Huang, C.I.; Chen, W.P. Development of UAV tracing and coordinate detection method using a dual-axis rotary platform for an anti-UAV system. Appl. Sci. 2019, 9, 2583. [Google Scholar] [CrossRef]

- Fang, H.; Li, Z.; Wang, X.; Chang, Y.; Yan, L.; Liao, Z. Differentiated attention guided network over hierarchical and aggregated features for intelligent UAV surveillance. IEEE Trans. Ind. Inform. 2023, 19, 9909–9920. [Google Scholar] [CrossRef]

- Zhu, X.F.; Xu, T.; Zhao, J.; Liu, J.W.; Wang, K.; Wang, G.; Li, J.; Zhang, Z.; Wang, Q.; Jin, L.; et al. Evidential detection and tracking collaboration: New problem, benchmark and algorithm for robust anti-uav system. arXiv 2023, arXiv:2306.15767. [Google Scholar]

- Zhao, R.; Li, T.; Li, Y.; Ruan, Y.; Zhang, R. Anchor-free multi-UAV Detection and classification using spectrogram. IEEE Internet Things J. 2023, 11, 5259–5272. [Google Scholar] [CrossRef]

- Zheng, J.; Chen, R.; Yang, T.; Liu, X.; Liu, H.; Su, T.; Wan, L. An efficient strategy for accurate detection and localization of UAV swarms. IEEE Internet Things J. 2021, 8, 15372–15381. [Google Scholar] [CrossRef]

- Yan, X.; Fu, T.; Lin, H.; Xuan, F.; Huang, Y.; Cao, Y.; Hu, H.; Liu, P. UAV detection and tracking in urban environments using passive sensors: A survey. Appl. Sci. 2023, 13, 11320. [Google Scholar] [CrossRef]

- Svanström, F.; Englund, C.; Alonso-Fernandez, F. Real-time drone detection and tracking with visible, thermal and acoustic sensors. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7265–7272. [Google Scholar]

- Wang, C.; Shi, Z.; Meng, L.; Wang, J.; Wang, T.; Gao, Q.; Wang, E. Anti-occlusion UAV tracking algorithm with a low-altitude complex background by integrating attention mechanism. Drones 2022, 6, 149. [Google Scholar] [CrossRef]

- Sun, L.; Zhang, J.; Yang, Z.; Fan, B. A motion-aware siamese framework for unmanned aerial vehicle tracking. Drones 2023, 7, 153. [Google Scholar] [CrossRef]

- Li, S.; Gao, J.; Li, L.; Wang, G.; Wang, Y.; Yang, X. Dual-branch approach for tracking UAVs with the infrared and inverted infrared image. In Proceedings of the 2022 7th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 15–17 April 2022; pp. 1803–1806. [Google Scholar]

- Zhao, J.; Zhang, X.; Zhang, P. A unified approach for tracking UAVs in infrared. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 1213–1222. [Google Scholar]

- Zhang, J.; Lin, Y.; Zhou, X.; Shi, P.; Zhu, X.; Zeng, D. Precision in pursuit: A multi-consistency joint approach for infrared anti-UAV tracking. Vis. Comput. 2024, 40, 1–16. [Google Scholar] [CrossRef]

- Ojdanić, D.; Naverschnigg, C.; Sinn, A.; Zelinskyi, D.; Schitter, G. Parallel architecture for low latency UAV detection and tracking using robotic telescopes. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 5515–5524. [Google Scholar] [CrossRef]

- Soni, V.; Shah, D.; Joshi, J.; Gite, S.; Pradhan, B.; Alamri, A. Introducing AOD 4: A dataset for air borne object detection. Data Brief 2024, 56, 110801. [Google Scholar] [CrossRef] [PubMed]

- Yuan, S.; Yang, Y.; Nguyen, T.H.; Nguyen, T.M.; Yang, J.; Liu, F.; Li, J.; Wang, H.; Xie, L. MMAUD: A comprehensive multi-modal anti-UAV dataset for modern miniature drone threats. arXiv 2024, arXiv:2402.03706. [Google Scholar]

- Svanström, F.; Alonso-Fernandez, F.; Englund, C. A dataset for multi-sensor drone detection. Data Brief 2021, 39, 107521. [Google Scholar] [CrossRef] [PubMed]

- Dewangan, V.; Saxena, A.; Thakur, R.; Tripathi, S. Application of image processing techniques for uav detection using deep learning and distance-wise analysis. Drones 2023, 7, 174. [Google Scholar] [CrossRef]

- Kaggle. Available online: https://www.kaggle.com/datasets/dasmehdixtr/drone-dataset-uav (accessed on 15 March 2024).

- Drone Detection. Available online: https://github.com/creiser/drone-detection (accessed on 11 April 2024).

- Zheng, Y.; Chen, Z.; Lv, D.; Li, Z.; Lan, Z.; Zhao, S. Air-to-air visual detection of micro-uavs: An experimental evaluation of deep learning. IEEE Robot. Autom. Lett. 2021, 6, 1020–1027. [Google Scholar] [CrossRef]

- Walter, V.; Vrba, M.; Saska, M. On training datasets for machine learning-based visual relative localization of micro-scale UAVs. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10674–10680. [Google Scholar]

- 3rd-Anti-UAV. Available online: https://anti-uav.github.io/ (accessed on 15 March 2024).

- Xiao, Y.; Zhang, X. Micro-UAV detection and identification based on radio frequency signature. In Proceedings of the 2019 6th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019; pp. 1056–1062. [Google Scholar]

- Yang, B.; Matson, E.T.; Smith, A.H.; Dietz, J.E.; Gallagher, J.C. UAV detection system with multiple acoustic nodes using machine learning models. In Proceedings of the 2019 Third IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; pp. 493–498. [Google Scholar]

- Rahman, M.H.; Sejan, M.A.S.; Aziz, M.A.; Tabassum, R.; Baik, J.I.; Song, H.K. A comprehensive survey of unmanned aerial vehicles detection and classification using machine learning approach: Challenges, solutions, and future directions. Remote Sens. 2024, 16, 879. [Google Scholar] [CrossRef]

- Moore, E.G. Radar detection, tracking and identification for UAV sense and avoid applications. Master’s Thesis, University of Denver, Denver, CO, USA, 2019; p. 13808039. [Google Scholar]

- Fang, H.; Ding, L.; Wang, L.; Chang, Y.; Yan, L.; Han, J. Infrared small UAV target detection based on depthwise separable residual dense network and multiscale feature fusion. IEEE Trans. Instrum. Meas. 2022, 71, 1–20. [Google Scholar] [CrossRef]

- Yan, B.; Peng, H.; Wu, K.; Wang, D.; Fu, J.; Lu, H. Lighttrack: Finding lightweight neural networks for object tracking via one-shot architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15180–15189. [Google Scholar]

- Blatter, P.; Kanakis, M.; Danelljan, M.; Van Gool, L. Efficient visual tracking with exemplar transformers. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 1571–1581. [Google Scholar]

- Cheng, Q.; Wang, Y.; He, W.; Bai, Y. Lightweight air-to-air unmanned aerial vehicle target detection model. Sci. Rep. 2024, 14, 1–18. [Google Scholar] [CrossRef]

- Zhao, J.; Li, J.; Jin, L.; Chu, J.; Zhang, Z.; Wang, J.; Xia, J.; Wang, K.; Liu, Y.; Gulshad, S.; et al. The 3rd Anti-UAV workshop & challenge: Methods and results. arXiv 2023, arXiv:2305.07290. [Google Scholar]

- Chen, C.P.; Li, H.; Wei, Y.; Xia, T.; Tang, Y.Y. A local contrast method for small infrared target detection. IEEE Trans. Geosci. Remote Sens. 2013, 52, 574–581. [Google Scholar] [CrossRef]

- Huang, L.; Zhao, X.; Huang, K. Globaltrack: A simple and strong baseline for long-term tracking. AAAI Conf. Artif. Intell. 2020, 34, 11037–11044. [Google Scholar] [CrossRef]

- Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10428–10437. [Google Scholar]

- Deng, T.; Zhou, Y.; Wu, W.; Li, M.; Huang, J.; Liu, S.; Song, Y.; Zuo, H.; Wang, Y.; Yue, Y.; et al. Multi-modal UAV detection, classification and tracking algorithm–technical report for CVPR 2024 UG2 challenge. arXiv 2024, arXiv:2405.16464. [Google Scholar]

- Svanström, F.; Alonso-Fernandez, F.; Englund, C. Drone detection and tracking in real-time by fusion of different sensing modalities. Drones 2022, 6, 317. [Google Scholar] [CrossRef]

- You, J.; Ye, Z.; Gu, J.; Pu, J. UAV-Pose: A dual capture network algorithm for low altitude UAV attitude detection and tracking. IEEE Access 2023, 11, 129144–129155. [Google Scholar] [CrossRef]

- Schlack, T.; Pawlowski, L.; Ashok, A. Hybrid event and frame-based system for target detection, tracking, and identification. Unconv. Imaging Sens. Adapt. Opt. 2023, 12693, 303–311. [Google Scholar]

- Song, H.; Wu, Y.; Zhou, G. Design of bio-inspired binocular UAV detection system based on improved STC algorithm of scale transformation and occlusion detection. Int. J. Micro Air Veh. 2021, 13, 17568293211004846. [Google Scholar] [CrossRef]

- Huang, B.; Dou, Z.; Chen, J.; Li, J.; Shen, N.; Wang, Y.; Xu, T. Searching region-free and template-free siamese network for tracking drones in TIR videos. IEEE Trans. Geosci. Remote Sens. 2023, 62, 5000315. [Google Scholar] [CrossRef]

- Wang, C.; Meng, L.; Gao, Q.; Wang, J.; Wang, T.; Liu, X.; Du, F.; Wang, L.; Wang, E. A lightweight UAV swarm detection method integrated attention mechanism. Drones 2022, 7, 13. [Google Scholar] [CrossRef]

- Lee, H.; Cho, S.; Shin, H.; Kim, S.; Shim, D.H. Small airborne object recognition with image processing for feature extraction. Int. J. Aeronaut. Space Sci. 2024, 25, 1–15. [Google Scholar] [CrossRef]

- Lee, E. Drone classification with motion and appearance feature using convolutional neural networks. Master’s Thesis, Purdue University, West Lafayette, IN, USA, 2020; p. 30503377. [Google Scholar]

- Yin, X.; Jin, R.; Lin, D. Efficient air-to-air drone detection with composite multi-dimensional attention. In Proceedings of the 2024 IEEE 18th International Conference on Control & Automation (ICCA), Reykjavík, Iceland, 18–21 June 2024; pp. 725–730. [Google Scholar]

- Ghazlane, Y.; Gmira, M.; Medromi, H. Development Of a vision-based anti-drone identification friend or foe model to recognize birds and drones using deep learning. Appl. Artif. Intell. 2024, 38, 2318672. [Google Scholar] [CrossRef]

- Swinney, C.J.; Woods, J.C. Low-cost raspberry-pi-based UAS detection and classification system using machine learning. Aerospace 2022, 9, 738. [Google Scholar] [CrossRef]

- Lu, S.; Wang, W.; Zhang, M.; Li, B.; Han, Y.; Sun, D. Detect the video recording act of UAV through spectrum recognition. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 24–26 June 2022; pp. 559–564. [Google Scholar]

- He, Z.; Huang, J.; Qian, G. UAV detection and identification based on radio frequency using transfer learning. In Proceedings of the 2022 IEEE 8th International Conference on Computer and Communications (ICCC), Chengdu, China, 9–12 December 2022; pp. 1812–1817. [Google Scholar]

- Cai, Z.; Wang, Y.; Jiang, Q.; Gui, G.; Sha, J. Toward intelligent lightweight and efficient UAV identification with RF fingerprinting. IEEE Internet Things J. 2024, 11, 26329–26339. [Google Scholar] [CrossRef]

- Uddin, Z.; Qamar, A.; Alharbi, A.G.; Orakzai, F.A.; Ahmad, A. Detection of multiple drones in a time-varying scenario using acoustic signals. Sustainability 2022, 14, 4041. [Google Scholar] [CrossRef]

- Guo, J.; Ahmad, I.; Chang, K. Classification, positioning, and tracking of drones by HMM using acoustic circular microphone array beamforming. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 1–19. [Google Scholar] [CrossRef]

- Shi, X.; Yang, C.; Xie, W.; Liang, C.; Shi, Z.; Chen, J. Anti-drone system with multiple surveillance technologies: Architecture, implementation, and challenges. IEEE Commun. Mag. 2018, 56, 68–74. [Google Scholar] [CrossRef]

- Koulouris, C.; Dimitrios, P.; Al-Darraji, I.; Tsaramirsis, G.; Tamimi, H. A comparative study of unauthorized drone detection techniques. In Proceedings of the 2023 9th International Conference on Information Technology Trends (ITT), Dubai, United Arab Emirates, 24–25 May 2023; pp. 32–37. [Google Scholar]

- Xing, Z.; Hu, S.; Ding, R.; Yan, T.; Xiong, X.; Wei, X. Multi-sensor dynamic scheduling for defending UAV swarms with Fresnel zone under complex terrain. ISA Trans. 2024, 153, 57–69. [Google Scholar] [CrossRef]

- Chen, H. A benchmark with multi-sensor fusion for UAV detection and distance estimation. Master’s Thesis, State University of New York at Buffalo, Getzville, NY, USA, 2022. [Google Scholar]

- Li, S. Applying multi agent system to track uav movement. Master’s Thesis, Purdue University, West Lafayette, IN, USA, 2019. [Google Scholar]

- Samaras, S.; Diamantidou, E.; Ataloglou, D.; Sakellariou, N.; Vafeiadis, A.; Magoulianitis, V.; Lalas, A.; Dimou, A.; Zarpalas, D.; Votis, K.; et al. Deep learning on multi sensor data for counter UAV applications—A systematic review. Sensors 2019, 19, 4837. [Google Scholar] [CrossRef]

- Jouaber, S.; Bonnabel, S.; Velasco-Forero, S.; Pilte, M. Nnakf: A neural network adapted kalman filter for target tracking. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 4075–4079. [Google Scholar]

- Xie, W.; Wan, Y.; Wu, G.; Li, Y.; Zhou, F.; Wu, Q. A RF-visual directional fusion framework for precise UAV positioning. IEEE Internet Things J. 2024, 1. [Google Scholar] [CrossRef]

- Cao, B.; Yao, H.; Zhu, P.; Hu, Q. Visible and clear: Finding tiny objects in difference map. arXiv 2024, arXiv:2405.11276. [Google Scholar]

- Singh, P.; Gupta, K.; Jain, A.K.; Jain, A.; Jain, A. Vision-based UAV detection in complex backgrounds and rainy conditions. In Proceedings of the 2024 2nd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 15–16 March 2024; pp. 1097–1102. [Google Scholar]

- Wu, T.; Duan, H.; Zeng, Z. Biological eagle eye-based correlation filter learning for fast UAV tracking. IEEE Trans. Instrum. Meas. 2024, 73, 7506412. [Google Scholar] [CrossRef]

- Zhou, Y.; Jiang, Y.; Yang, Z.; Li, X.; Sun, W.; Zhen, H.; Wang, Y. UAV image detection based on multi-scale spatial attention mechanism with hybrid dilated convolution. In Proceedings of the 2024 3rd International Conference on Image Processing and Media Computing (ICIPMC), Hefei, China, 17–19 May 2024; pp. 279–284. [Google Scholar]

- Wang, G.; Yang, X.; Li, L.; Gao, K.; Gao, J.; Zhang, J.y.; Xing, D.j.; Wang, Y.z. Tiny drone object detection in videos guided by the bio-inspired magnocellular computation model. Appl. Soft Comput. 2024, 163, 111892. [Google Scholar] [CrossRef]

- Munir, A.; Siddiqui, A.J.; Anwar, S. Investigation of UAV detection in images with complex backgrounds and rainy artifacts. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision Workshops (WACVW), Waikoloa, HI, USA, 1–6 January 2024; pp. 232–241. [Google Scholar]

- Unlu, E.; Zenou, E.; Riviere, N.; Dupouy, P.E. Deep learning-based strategies for the detection and tracking of drones using several cameras. IPSJ Trans. Comput. Vis. Appl. 2019, 11, 1–13. [Google Scholar] [CrossRef]

- Al-lQubaydhi, N.; Alenezi, A.; Alanazi, T.; Senyor, A.; Alanezi, N.; Alotaibi, B.; Alotaibi, M.; Razaque, A.; Hariri, S. Deep learning for unmanned aerial vehicles detection: A review. Comput. Sci. Rev. 2024, 51, 100614. [Google Scholar] [CrossRef]

- Zhou, X.; Yang, G.; Chen, Y.; Li, L.; Chen, B.M. VDTNet: A high-performance visual network for detecting and tracking of intruding drones. IEEE Trans. Intell. Transp. Syst. 2024, 25, 9828–9839. [Google Scholar] [CrossRef]

- Kassab, M.; Zitar, R.A.; Barbaresco, F.; Seghrouchni, A.E.F. Drone detection with improved precision in traditional machine learning and less complexity in single shot detectors. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 3847–3859. [Google Scholar] [CrossRef]

- Zhang, Z.; Jin, L.; Li, S.; Xia, J.; Wang, J.; Li, Z.; Zhu, Z.; Yang, W.; Zhang, P.; Zhao, J.; et al. Modality meets long-term tracker: A siamese dual fusion framework for tracking UAV. In Proceedings of the 2023 IEEE International Conference on Image Processing (ICIP), Kuala Lumpur, Malaysia, 8–11 October 2023; pp. 1975–1979. [Google Scholar]

- Xing, D. UAV surveillance with deep learning using visual, thermal and acoustic sensors. Ph.D. Thesis, New York University Tandon School of Engineering, Brooklyn, NY, USA, 2023. [Google Scholar]

- Xie, W.; Zhang, Y.; Hui, T.; Zhang, J.; Lei, J.; Li, Y. FoRA: Low-rank adaptation model beyond multimodal siamese network. arXiv 2024, arXiv:2407.16129. [Google Scholar]

- Elleuch, I.; Pourranjbar, A.; Kaddoum, G. Leveraging transformer models for anti-jamming in heavily attacked UAV environments. IEEE Open J. Commun. Soc. 2024, 5, 5337–5347. [Google Scholar] [CrossRef]

- Rebbapragada, S.V.; Panda, P.; Balasubramanian, V.N. C2FDrone: Coarse-to-fine drone-to-drone detection using vision transformer networks. arXiv 2024, arXiv:2404.19276. [Google Scholar]

- Zeng, H.; Li, J.; Qu, L. Lightweight low-altitude UAV object detection based on improved YOLOv5s. Int. J. Adv. Network, Monit. Control. 2024, 9, 87–99. [Google Scholar] [CrossRef]

- AlDosari, K.; Osman, A.; Elharrouss, O.; Al-Maadeed, S.; Chaari, M.Z. Drone-type-set: Drone types detection benchmark for drone detection and tracking. In Proceedings of the 2024 International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 8–10 May 2024; pp. 1–7. [Google Scholar]

- Bo, C.; Wei, Y.; Wang, X.; Shi, Z.; Xiao, Y. Vision-based anti-UAV detection based on YOLOv7-GS in complex backgrounds. Drones 2024, 8, 331. [Google Scholar] [CrossRef]

- Wang, C.; Meng, L.; Gao, Q.; Wang, T.; Wang, J.; Wang, L. A target sensing and visual tracking method for countering unmanned aerial vehicle swarm. IEEE Sens. J. 2024, 1. [Google Scholar] [CrossRef]

- Sun, S.; Mo, B.; Xu, J.; Li, D.; Zhao, J.; Han, S. Multi-YOLOv8: An infrared moving small object detection model based on YOLOv8 for air vehicle. Neurocomputing 2024, 588, 127685. [Google Scholar] [CrossRef]

- He, X.; Fan, K.; Xu, Z. UAV identification based on improved YOLOv7 under foggy condition. Signal Image Video Process. 2024, 18, 6173–6183. [Google Scholar] [CrossRef]

- Fang, H.; Wu, C.; Wang, X.; Zhou, F.; Chang, Y.; Yan, L. Online infrared UAV target tracking with enhanced context-awareness and pixel-wise attention modulation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5005417. [Google Scholar] [CrossRef]

- Jiang, W.; Pan, H.; Wang, Y.; Li, Y.; Lin, Y.; Bi, F. A multi-level cross-attention image registration method for visible and infrared small unmanned aerial vehicle targets via image style transfer. Remote Sens. 2024, 16, 2880. [Google Scholar] [CrossRef]

- Noor, A.; Li, K.; Tovar, E.; Zhang, P.; Wei, B. Fusion flow-enhanced graph pooling residual networks for unmanned aerial vehicles surveillance in day and night dual visions. Eng. Appl. Artif. Intell. 2024, 136, 108959. [Google Scholar] [CrossRef]

- Nair, A.K.; Sahoo, J.; Raj, E.D. A lightweight FL-based UAV detection model using thermal images. In Proceedings of the 7th International Conference on Networking, Intelligent Systems and Security (NISS), Meknes, Morocco, 18–19 April 2024; pp. 1–5. [Google Scholar]

- Xu, B.; Hou, R.; Bei, J.; Ren, T.; Wu, G. Jointly modeling association and motion cues for robust infrared UAV tracking. Vis. Comput. 2024, 40, 1–12. [Google Scholar] [CrossRef]

- Li, Q.; Mao, Q.; Liu, W.; Wang, J.; Wang, W.; Wang, B. Local information guided global integration for infrared small target detection. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 4425–4429. [Google Scholar]

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291. [Google Scholar]

- Hu, W.; Wang, Q.; Zhang, L.; Bertinetto, L.; Torr, P.H. SiamMask: A framework for fast online object tracking and segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3072–3089. [Google Scholar]

- Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. SiamFC++: Towards robust and accurate visual tracking with target estimation guidelines. AAAI Conf. Artif. Intell. 2020, 34, 12549–12556. [Google Scholar] [CrossRef]

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate tracking by overlap maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4660–4669. [Google Scholar]

- Chen, J.; Huang, B.; Li, J.; Wang, Y.; Ren, M.; Xu, T. Learning spatio-temporal attention based siamese network for tracking UAVs in the wild. Remote Sens. 2022, 14, 1797. [Google Scholar] [CrossRef]

- Yin, W.; Ye, Z.; Peng, Y.; Liu, W. A review of visible single target tracking based on Siamese networks. In Proceedings of the 2023 4th International Conference on Electronic Communication and Artificial Intelligence (ICECAI), Guangzhou, China, 12–14 May 2023; pp. 282–289. [Google Scholar]

- Feng, M.; Su, J. RGBT tracking: A comprehensive review. Inf. Fusion 2024, 110, 102492. [Google Scholar] [CrossRef]

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the Computer Vision—ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–16 October 2016; pp. 850–865. [Google Scholar]

- Huang, B.; Chen, J.; Xu, T.; Wang, Y.; Jiang, S.; Wang, Y.; Wang, L.; Li, J. SiamSTA: Spatio-temporal attention based siamese tracker for tracking UAVs. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 1204–1212. [Google Scholar]

- Fang, H.; Wang, X.; Liao, Z.; Chang, Y.; Yan, L. A real-time anti-distractor infrared UAV tracker with channel feature refinement module. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops, Montreal, QC, Canada, 11–17 October 2021; pp. 1240–1248. [Google Scholar]

- Huang, B.; Li, J.; Chen, J.; Wang, G.; Zhao, J.; Xu, T. Anti-UAV410: A thermal infrared benchmark and customized scheme for tracking drones in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 2852–2865. [Google Scholar] [CrossRef] [PubMed]

- Shi, X.; Zhang, Y.; Shi, Z.; Zhang, Y. GASiam: Graph attention based siamese tracker for infrared anti-UAV. In Proceedings of the 2022 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications (CVIDL & ICCEA), Changchun, China, 20–22 May 2022; pp. 986–993. [Google Scholar]

- Voigtlaender, P.; Luiten, J.; Torr, P.H.; Leibe, B. Siam R-CNN: Visual tracking by re-detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6578–6588. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Cheng, F.; Liang, Z.; Peng, G.; Liu, S.; Li, S.; Ji, M. An anti-UAV long-term tracking method with hybrid attention mechanism and hierarchical discriminator. Sensors 2022, 22, 3701. [Google Scholar] [CrossRef] [PubMed]

- Xie, X.; Xi, J.; Yang, X.; Lu, R.; Xia, W. STFTrack: Spatio-temporal-focused siamese network for infrared UAV tracking. Drones 2023, 7, 296. [Google Scholar] [CrossRef]

- Zhang, Z.; Lu, X.; Cao, G.; Yang, Y.; Jiao, L.; Liu, F. ViT-YOLO: Transformer-based YOLO for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2799–2808. [Google Scholar]

- Gao, S.; Zhou, C.; Ma, C.; Wang, X.; Yuan, J. AiATrack: Attention in attention for transformer visual tracking. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 146–164. [Google Scholar]

- Mayer, C.; Danelljan, M.; Bhat, G.; Paul, M.; Paudel, D.P.; Yu, F.; Van Gool, L. Transforming model prediction for tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 8731–8740. [Google Scholar]

- Lin, L.; Fan, H.; Zhang, Z.; Xu, Y.; Ling, H. SwinTrack: A simple and strong baseline for transformer tracking. Adv. Neural Inf. Process. Syst. 2022, 35, 16743–16754. [Google Scholar]

- Tong, X.; Zuo, Z.; Su, S.; Wei, J.; Sun, X.; Wu, P.; Zhao, Z. ST-Trans: Spatial-temporal transformer for infrared small target detection in sequential images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5001819. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Stefenon, S.F.; Singh, G.; Souza, B.J.; Freire, R.Z.; Yow, K.C. Optimized hybrid YOLOu-Quasi-ProtoPNet for insulators classification. IET Gener. Transm. Distrib. 2023, 17, 3501–3511. [Google Scholar] [CrossRef]

- Souza, B.J.; Stefenon, S.F.; Singh, G.; Freire, R.Z. Hybrid-YOLO for classification of insulators defects in transmission lines based on UAV. Int. J. Electr. Power Energy Syst. 2023, 148, 108982. [Google Scholar] [CrossRef]

- Stefenon, S.F.; Seman, L.O.; Klaar, A.C.R.; Ovejero, R.G.; Leithardt, V.R.Q. Hypertuned-YOLO for interpretable distribution power grid fault location based on EigenCAM. Ain Shams Eng. J. 2024, 15, 102722. [Google Scholar] [CrossRef]

- Xiao, H.; Wang, B.; Zheng, J.; Liu, L.; Chen, C.P. A fine-grained detector of face mask wearing status based on improved YOLOX. IEEE Trans. Artif. Intell. 2023, 5, 1816–1830. [Google Scholar] [CrossRef]

- Ajakwe, S.O.; Ihekoronye, V.U.; Kim, D.S.; Lee, J.M. DRONET: Multi-tasking framework for real-time industrial facility aerial surveillance and safety. Drones 2022, 6, 46. [Google Scholar] [CrossRef]

- Wang, J.; Hongjun, W.; Liu, J.; Zhou, R.; Chen, C.; Liu, C. Fast and accurate detection of UAV objects based on mobile-YOLO network. In Proceedings of the 2022 14th International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 1–3 November 2022; pp. 1–5. [Google Scholar]

- Cheng, Q.; Li, J.; Du, J.; Li, S. Anti-UAV detection method based on local-global feature focusing module. In Proceedings of the 2024 IEEE 7th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 15–17 March 2024; Volume 7, pp. 1413–1418. [Google Scholar]

- Tu, X.; Zhang, C.; Zhuang, H.; Liu, S.; Li, R. Fast drone detection with optimized feature capture and modeling algorithms. IEEE Access 2024, 12, 108374–108388. [Google Scholar] [CrossRef]

- Hu, Y.; Wu, X.; Zheng, G.; Liu, X. Object detection of UAV for anti-UAV based on improved YOLOv3. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 8386–8390. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Dadboud, F.; Patel, V.; Mehta, V.; Bolic, M.; Mantegh, I. Single-stage UAV detection and classification with YOLOv5: Mosaic data augmentation and PANet. In Proceedings of the 2021 17th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Washington, DC, USA, 16–19 November 2021; pp. 1–8. [Google Scholar]

- Jocher, G.; Stoken, A.; Borovec, J.; Chaurasia, A.; Changyu, L.; Hogan, A.; Hajek, J.; Diaconu, L.; Kwon, Y.; Defretin, Y.; et al. ultralytics/yolov5: v5.0 - YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations. Zenodo 2021. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475. [Google Scholar]

- Li, Y.; Yuan, D.; Sun, M.; Wang, H.; Liu, X.; Liu, J. A global-local tracking framework driven by both motion and appearance for infrared anti-UAV. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3026–3035. [Google Scholar]

- Fang, A.; Feng, S.; Liang, B.; Jiang, J. Real-time detection of unauthorized unmanned aerial vehicles using SEB-YOLOv8s. Sensors 2024, 24, 3915. [Google Scholar] [CrossRef]

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616. [Google Scholar]

- Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. arXiv 2024, arXiv:2309.11331. [Google Scholar]

- Liu, Q.; Li, X.; He, Z.; Li, C.; Li, J.; Zhou, Z.; Yuan, D.; Li, J.; Yang, K.; Fan, N.; et al. LSOTB-TIR: A large-scale high-diversity thermal infrared object tracking benchmark. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 3847–3856. [Google Scholar]

- Rozantsev, A.; Lepetit, V.; Fua, P. Detecting flying objects using a single moving camera. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 879–892. [Google Scholar] [CrossRef]

- Coluccia, A.; Fascista, A.; Schumann, A.; Sommer, L.; Dimou, A.; Zarpalas, D.; Méndez, M.; De la Iglesia, D.; González, I.; Mercier, J.P.; et al. Drone vs. bird detection: Deep learning algorithms and results from a grand challenge. Sensors 2021, 21, 2824. [Google Scholar] [CrossRef]

- Coluccia, A.; Fascista, A.; Schumann, A.; Sommer, L.; Dimou, A.; Zarpalas, D.; Akyon, F.C.; Eryuksel, O.; Ozfuttu, K.A.; Altinuc, S.O.; et al. Drone-vs-bird detection challenge at IEEE AVSS2021. In Proceedings of the 2021 17th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Washington, DC, USA, 16–19 November 2021; pp. 1–8. [Google Scholar]

- Xi, Y.; Zhou, Z.; Jiang, Y.; Zhang, L.; Li, Y.; Wang, Z.; Tan, F.; Hou, Q. Infrared moving small target detection based on spatial-temporal local contrast under slow-moving cloud background. Infrared Phys. Technol. 2023, 134, 104877. [Google Scholar] [CrossRef]

| Dataset Name | Main Characteristics | Image or Video Number | Complexity | Multi-Sensors | Scene | UAV Type |
|---|---|---|---|---|---|---|
| DUT Anti-UAV [20] | Images and videos of various scenes from around DUT, such as the sky, dark clouds, jungles, high-rise buildings, residential buildings, farmlands and playgrounds | Image: 10,000; Video: 20 | Medium | No | RGB | Not available |
| Jiang’s Dataset [23] | DJI and Parrot drones are used to capture video from the air. The video records two lighting conditions (day and night), two light modes (infrared and visible light), and a variety of backgrounds (buildings, clouds, trees) | Video_RGB: 318; Video_Infrared: 318 | Large | No | RGB and Infrared | DJI and Parrot drones |
| MMAUD [39] | This dataset is built by integrating multiple sensing inputs including stereo vision, various LiDARs, radar, and audio arrays | Multi-category | Large | Yes | Infrared | Not available |
| Fredrik’s Dataset [31] | This dataset is captured at three airports in Sweden, and three different drones are used for the video shooting | Audio: 90; Video_RGB: 285; Video_Infrared: 365 | Large | Yes | Infrared | DJI Phantom4 Pro, DJI Flame Wheel and Hubsan H107D+ |
| Vedanshu’s Dataset [41] | The majority of the dataset’s images are collected from Kaggle, and the remaining ones are captured using a smartphone camera | Image: 1874 | Medium | No | RGB | Not available |
| Drone Detection [43] | Images of the DJI Phantom 3 quadcopter obtained through Google image search and dozens of screenshots from YouTube videos | Image: 500 | Small | No | RGB | DJI Phantom 3 quadcopter |
| Det-Fly [44] | This dataset uses a flying drone (DJI M210) to photograph another flying target drone (DJI Mavic) | Image: 13,271 | Medium | No | RGB | DJI M210 and DJI Mavic |
| MIDGARD [45] | This dataset is automatically generated using relatively micro-scale unmanned aerial vehicles and a positioning sensor | Image: 8776 | Medium | No | Infrared | Not available |
| 3rd-Anti-UAV [46] | This dataset consists of single-frame infrared images derived from video sequences | Video sequence | Large | No | Infrared | Not available |

| Dataset Name | Available Link | Access Date |
|---|---|---|
| DUT Anti-UAV [20] | https://github.com/wangdongdut/DUT-Anti-UAV | 22 January 2024 |
| Jiang’s Dataset [23] | https://github.com/ucas-vg/Anti-UAV | 22 January 2024 |
| MMAUD [39] | https://github.com/ntu-aris/MMAUD | 22 January 2024 |
| Fredrik’s Dataset [31] | https://github.com/DroneDetectionThesis/Drone-detection-dataset | 15 March 2024 |
| Vedanshu’s Dataset [41] | https://drive.google.com/drive/folders/1FJ09dOOa-VFMy_tM7UoZGzOA8iYpmaHP | 15 March 2024 |
| Drone Detection [43] | https://github.com/creiser/drone-detection | 11 April 2024 |
| Det-Fly [44] | https://github.com/Jake-WU/Det-Fly | 15 March 2024 |
| MIDGARD [45] | https://mrs.felk.cvut.cz/midgard | 21 March 2024 |
| 3rd-Anti-UAV [46] | https://anti-uav.github.io | 28 March 2024 |

| Category | Subcategory | Methods | Datasets | Results | Experimental Environment |
|---|---|---|---|---|---|
| Sensor-Based | RF-Based | Xiao et al. [47] | Mavic Pro, Phantom3 and WiFi signals | With SVM, the more than 0.90 at −3 dB SNR; With KNN, the more than 0.90 at −4 dB SNR | Receiver with 200 MHz on 2.4 GHz ISM frequency |
| | Acoustic-Based | Yang et al. [48] | Audio data recorded by six nodes | With SVM, result of training STFT = 0.787; with SVM, result of training MFCC = 0.779 | C = , where i = 1, 2, …, 14, 15; = , where i = −15, −14, …, −2, −1 |
| | Multi-Sensors-Based | Fredrik et al. [31] | 365 infrared videos, 285 visible light videos and an audio dataset | The average of infrared sensor = 0.7601; the average of visible camera = 0.7849; the audio sensor = 0.9323 | Camera, sound acquisition device, and ADS-B receiver |
| | | Xie et al. [83] | Self-built multiple background condition UAV detection datasets containing visual images and RF signals | = 44.7, = 39 | NVIDIA GeForce GTX 3090, Hikvision pan-tilt-zoom (PTZ) dome camera, and TP-8100 five-element array antenna |
| Vision-Based | Siamese-Based | Huang et al. [119] | 2nd Anti-UAV [46] | = 88.8%, = 65.55%, average overlap accuracy = 67.30% | Not provided |
| | | Fang et al. [120] | 14,700 self-built infrared images | = 97.6%, = 97.6%, = 0.976, acc = 70.3%, = 37.1 | 2.40 GHz Intel Xeon Silver 4210R CPU, 3× NVIDIA RTX3090 GPU and PyTorch 1.8.1 with CUDA 11.1 |
| | | Huang et al. [121] | 410 self-built infrared tracking video sequences | = 68.19% | Not provided |
| | | Shi et al. [122] | Jiang’s dataset [23] and 163 self-built videos | = 94.9%, = 71.5% | 4× NVIDIA GeForce RTX 2080 Super cards, Python 3 |
| | | Cheng et al. [125] | Jiang’s dataset [23] | = 88.4%, = 67.7% | NVIDIA RTX 3090 GPU and PyTorch |
| | | Xie et al. [126] | Jiang’s dataset [23] and LSOTB-TIR [153] | = 92.12%, = 66.66%, = 67.7%, = 12.4 | Intel Core i9-9940X@3.30 GHz, 4× NVIDIA RTX 2080Ti, Python 3.7 and PyTorch 1.10 |
| | Transformer-Based | Tong et al. [131] | Data collected and organized from anti-UAV competitions | = 88.39% | Intel i9-13900K, GeForce RTX 4090 GPU |
| | | Yu et al. [22] | 1st and 2nd Anti-UAV [46] | 1st TestDEV: = 77.9%, = 98.0%; 2nd TestDEV: = 72.4%, = 93.4% | 4× NVIDIA RTX 3090 GPU, Python 3.6 and PyTorch 1.7.1 |
| | YOLO-Based | Hu et al. [141] | 280 self-built testing images | = 89%, = 37.41%, = 56.3 | Intel Xeon E5-2630 v4, NVIDIA GeForce GTX 1080 Ti, 64-bit Ubuntu 16.04 operating system |
| | | Zhou et al. [92] | FL-Drone [154] | = 98% | NVIDIA RTX3090 |
| | | Fardad et al. [143] | 116,608 images from [44,155] | = 98%, = 96% | 4× Tesla V100-SXM2 graphic cards |
| | | Vedanshu et al. [41] | 1874 images from Kaggle [42] and self-built | = 96.7%, = 95%, = 95.6% | Not provided |
| | | Li et al. [149] | 3rd Anti-UAV [46] | = 49.61% | Not provided |
| | | Fang et al. [150] | Det-Fly [44] | = 0.914, = 91.9% | NVIDIA A40 |

| Category | Methods | Advantages | Disadvantages |
|---|---|---|---|
| Sensor-Based | RF-Based | Long-distance monitoring; no need for line of sight | The communication protocol of a UAV may undergo periodic changes; many UAVs can dynamically switch communication frequencies; prone to interference from devices like WiFi and signal towers |
| | Acoustic-Based | Low cost; monitoring with multiple acoustic nodes | Susceptible to interference from environmental noise; short sound propagation distance limits the listening range |
| | Multi-Sensors-Based | High accuracy; good adaptability to complex environments | High model complexity and large computational load; multimodal data fusion is challenging |
| Vision-Based | Siamese-Based | Suitable for real-time single-target tracking; low computational cost; fast inference speed | Brief occlusion can cause Siamese networks to lose track; sensitive to scale changes |
| | Transformer-Based | Excels in processing complex scenes; robust against challenging scenarios | Not sensitive to sparse small UAV features; large computational load and insufficient real-time performance |
| | YOLO-Based | Emphasizes speed and real-time capabilities; suitable for rapid detection or tracking; balances inference speed and accuracy | Sensitive to obstruction by obstacles; easily affected by weather conditions |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, B.; Li, Q.; Mao, Q.; Wang, J.; Chen, C.L.P.; Shangguan, A.; Zhang, H. A Survey on Vision-Based Anti Unmanned Aerial Vehicles Methods. Drones 2024, 8, 518. https://doi.org/10.3390/drones8090518
