A Lightweight Insulator Defect Detection Model Based on Drone Images

Abstract

1. Introduction

- (1) We propose IDD-YOLO, a lightweight and accurate model designed specifically for detecting insulator defects on transmission lines. Compared with existing mainstream insulator defect detection models, IDD-YOLO achieves higher accuracy with fewer parameters. In addition, we construct ID-2024, a dataset covering multiple types of insulator defects, to better meet practical inspection needs.

- (2)

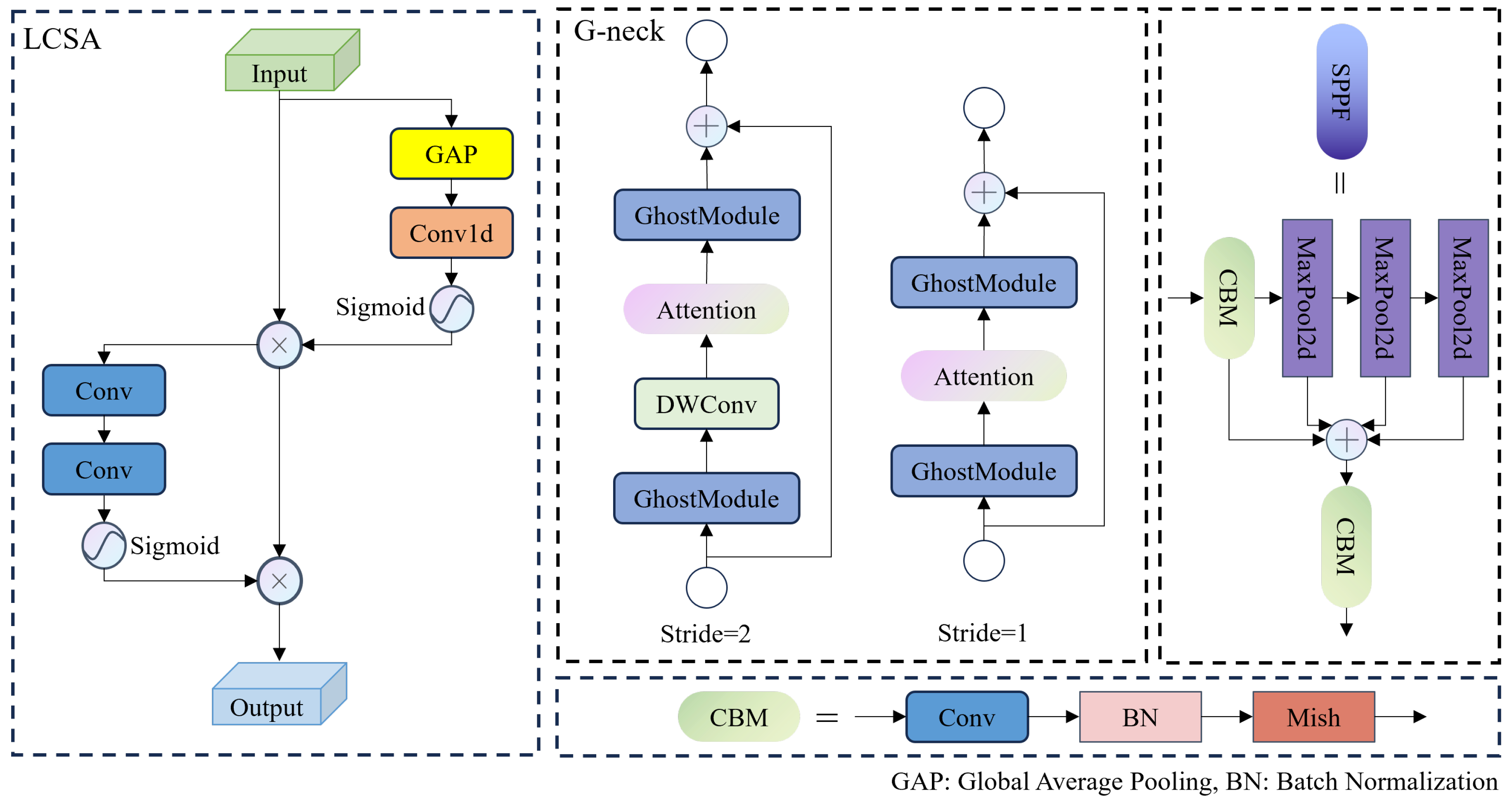

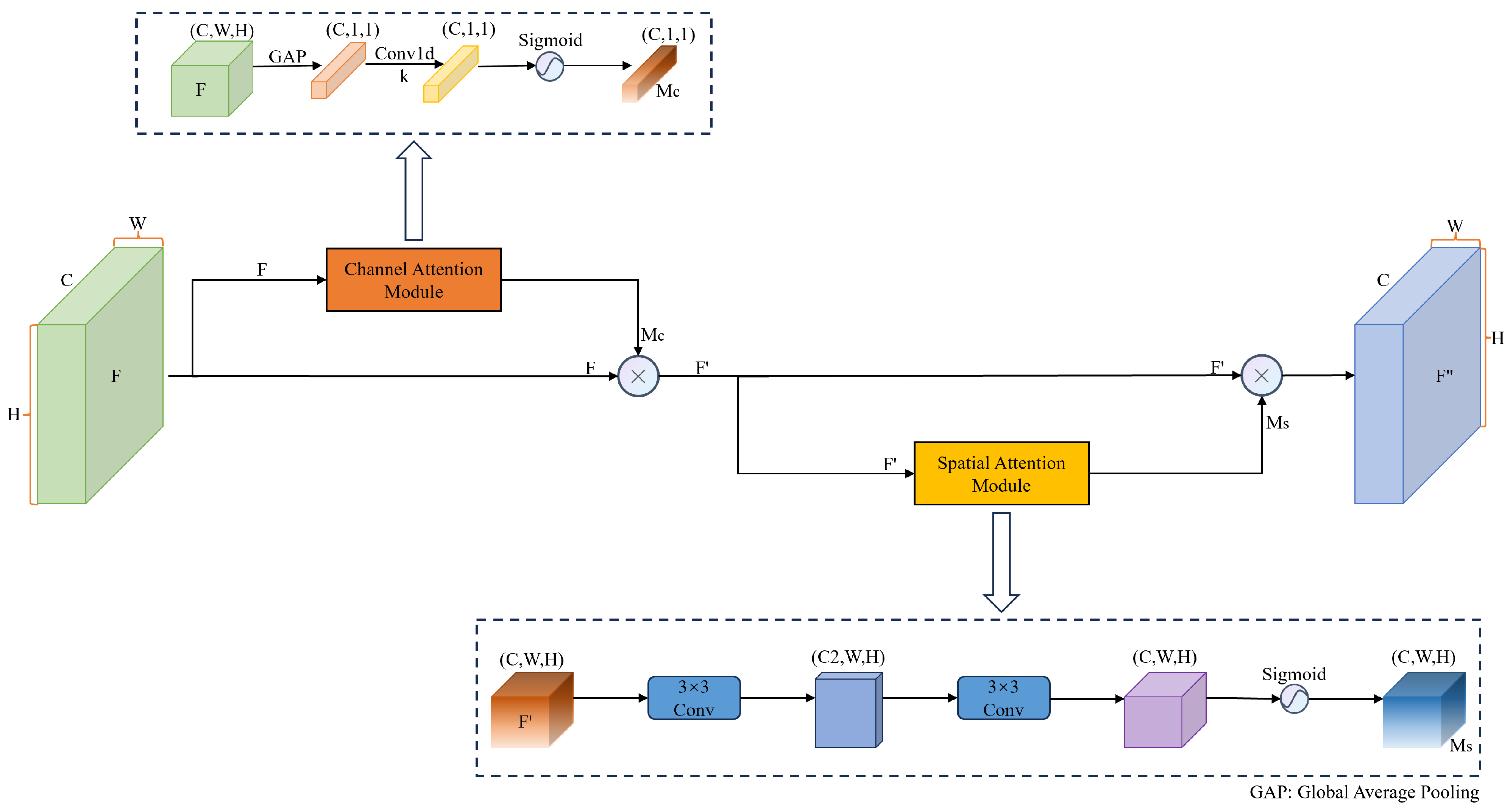

- We propose LCSA, a novel attention mechanism, and integrate it with the GhostNet backbone network, enabling the model to extract features more comprehensively without increasing computational parameters.

- (3)

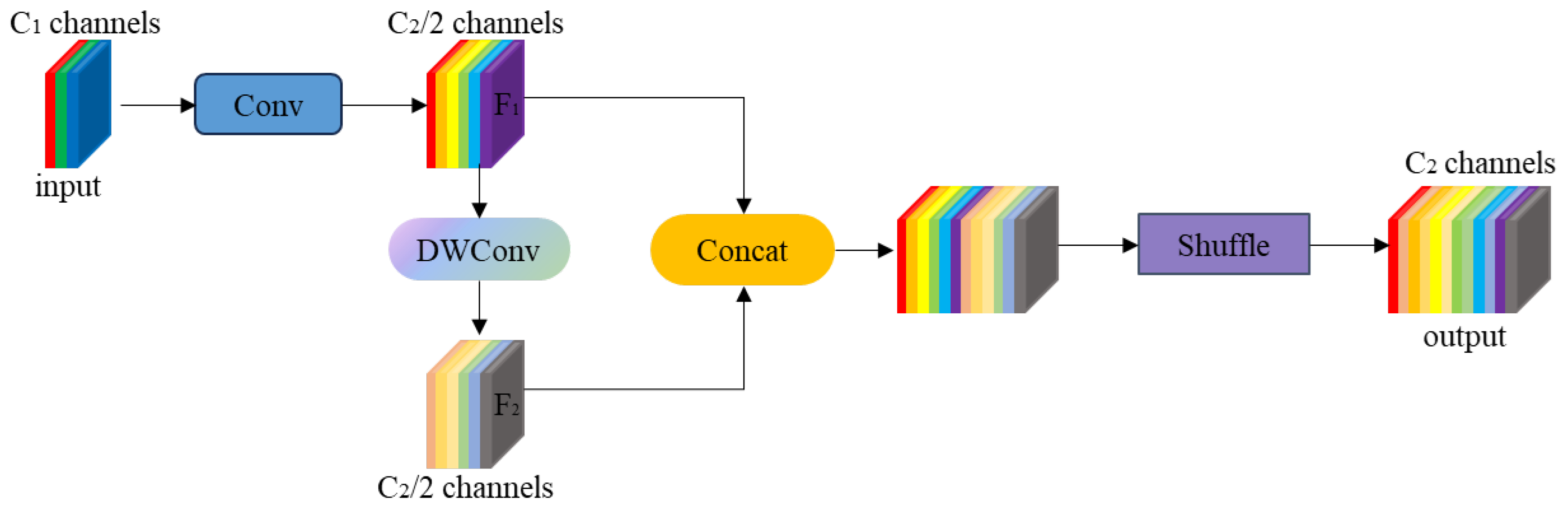

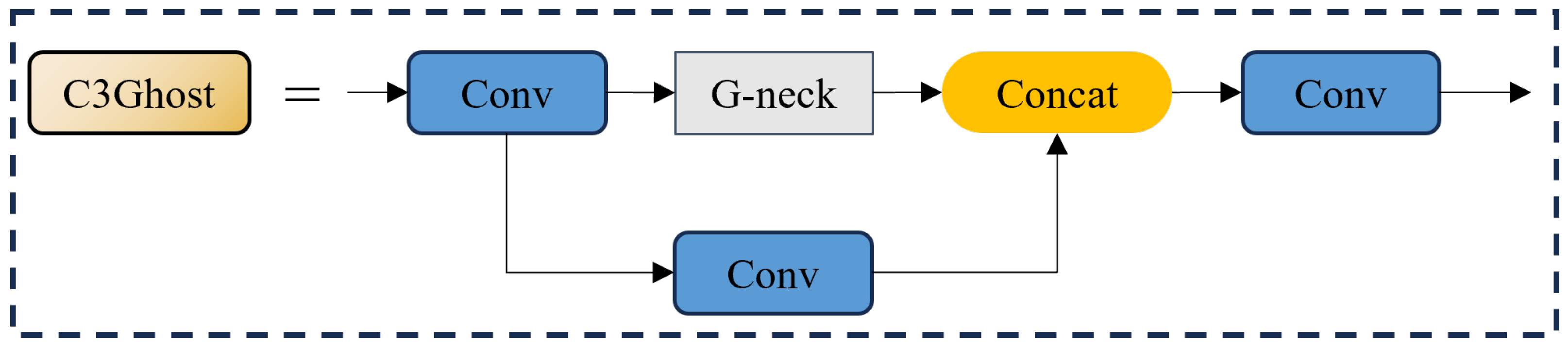

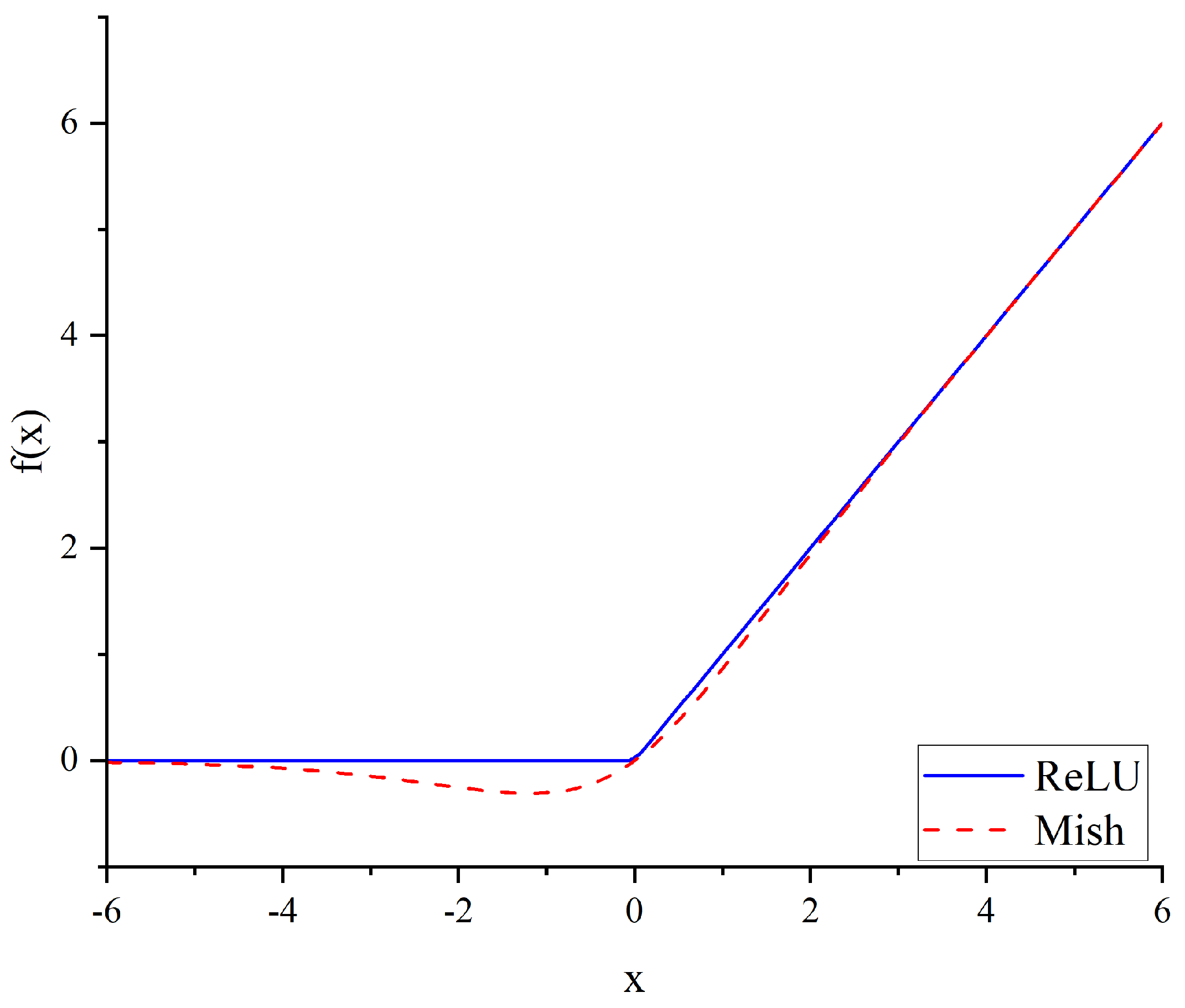

- We incorporate the GSConv and C3Ghost modules into the neck network to reduce the model size. Additionally, the EIOU loss function and Mish activation function are utilized to optimize detection speed and accuracy.

- (4) We use TensorRT to compress and accelerate IDD-YOLO and deploy it on an NVIDIA Jetson TX2 NX embedded device, verifying the model's feasibility in practical application scenarios.

2. Related Work

3. Methods

3.1. IDD-YOLO Object Detection Algorithm

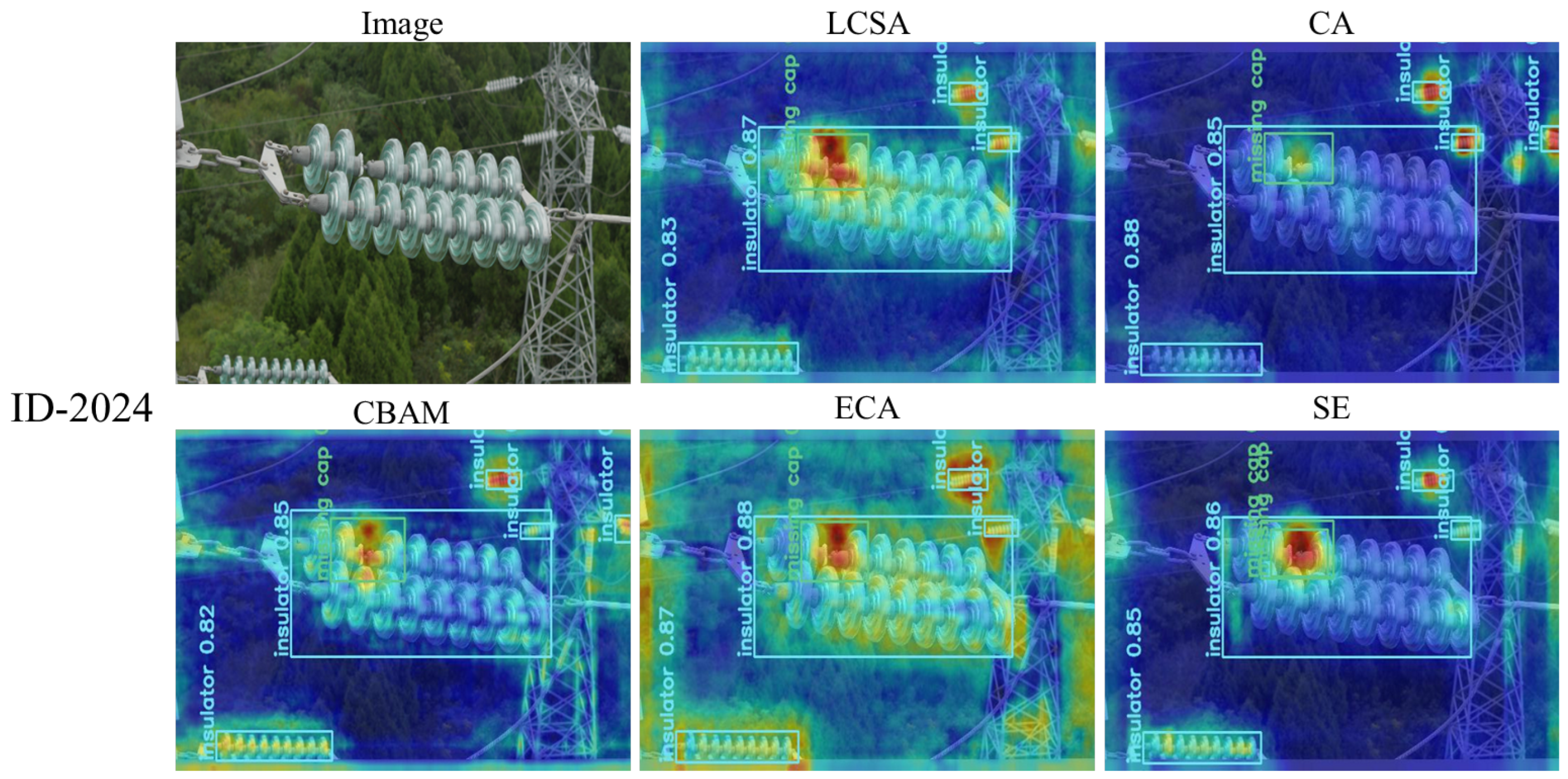

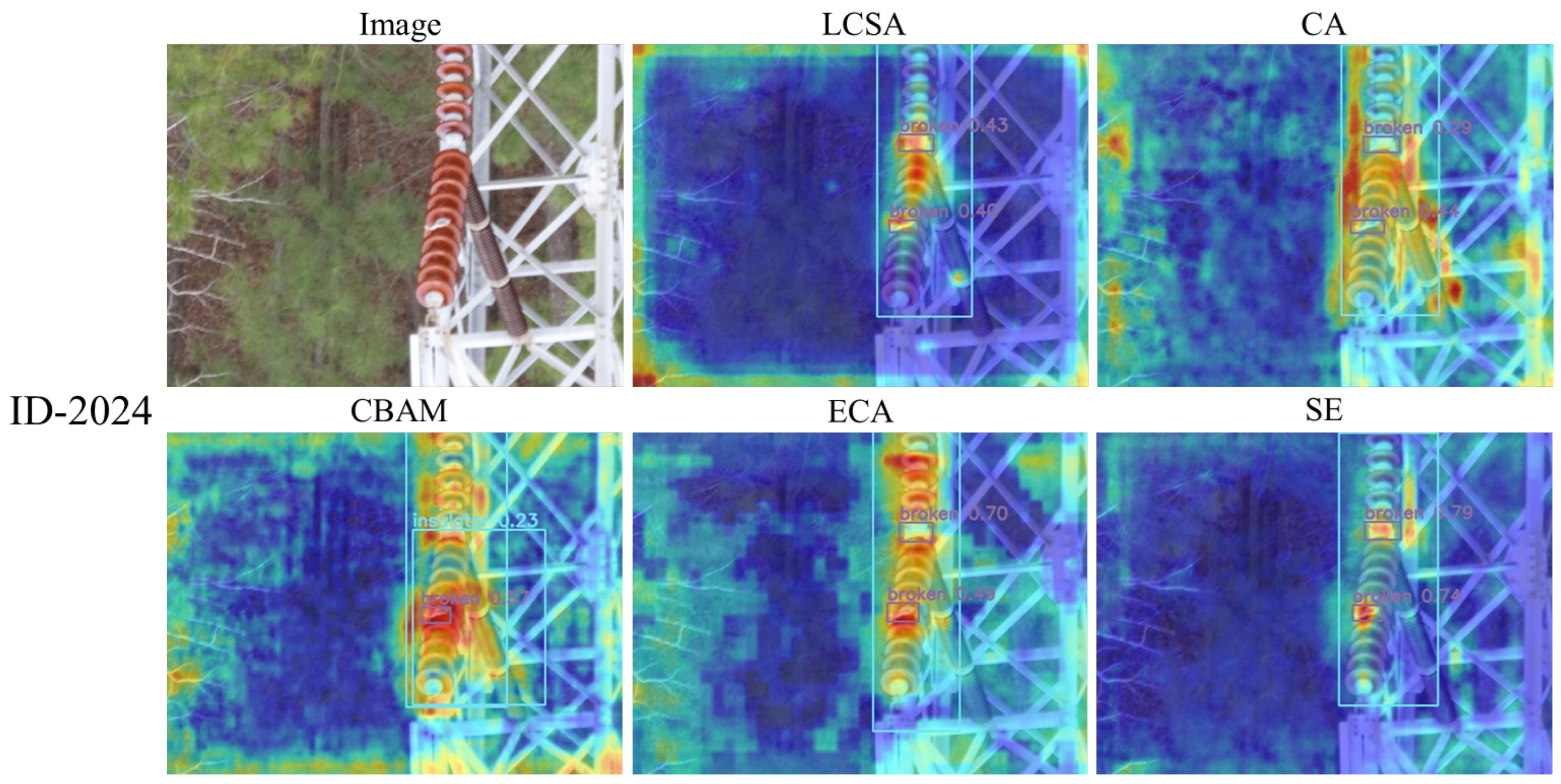

3.2. LCSA Attention Mechanism

3.3. GSConv Module
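GSConv, as described by Li et al. [44], produces half of its output channels with a standard convolution and the other half by applying a depthwise convolution to that result, then mixes the two halves with a channel shuffle. The sketch below counts convolution weights for a standard convolution and for such a block, and shows a two-group channel shuffle. It rests on our own assumptions: a 5×5 depthwise kernel, bias and BatchNorm terms ignored, and ShuffleNet-style interleaving [49], which may differ in detail from the reference GSConv code. All function names are hypothetical.

```python
from typing import List, Sequence


def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution (bias and BatchNorm ignored)."""
    return (c_in // groups) * c_out * k * k


def gsconv_params(c_in: int, c_out: int, k: int = 3, dw_k: int = 5) -> int:
    """GSConv-style block: dense conv to c_out/2 channels, then a depthwise
    conv (one filter per channel) on those c_out/2 channels."""
    half = c_out // 2
    dense = conv_params(c_in, half, k)
    depthwise = conv_params(half, half, dw_k, groups=half)
    return dense + depthwise


def channel_shuffle(channels: Sequence[str], groups: int = 2) -> List[str]:
    """ShuffleNet-style shuffle: view as (groups, n/groups), transpose, flatten.
    With two groups this interleaves the dense and depthwise halves."""
    n = len(channels)
    if n % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


if __name__ == "__main__":
    print(conv_params(256, 256, 3))    # 589824
    print(gsconv_params(256, 256, 3))  # 298112
    # d* = channels from the dense conv, w* = channels from the depthwise conv
    print(channel_shuffle(["d0", "d1", "w0", "w1"]))  # ['d0', 'w0', 'd1', 'w1']
```

For a 256-to-256-channel 3×3 layer, this count gives 589,824 weights for the standard convolution versus 298,112 for the GSConv-style block, roughly half. The shuffle matters because, without it, the dense and depthwise channels would stay in separate blocks and later layers would see them unmixed.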

3.4. C3Ghost Module
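As we understand the YOLOv5 code base, C3Ghost is the C3 block with its bottlenecks replaced by Ghost bottlenecks, so its parameter savings come from the Ghost module of Han et al. [42]. A Ghost module produces $n/s$ intrinsic feature maps with an ordinary $k \times k$ convolution over $c$ input channels and derives the remaining maps with $(s-1)$ cheap $d \times d$ linear operations per intrinsic map. Following the GhostNet analysis, the parameter compression ratio against an ordinary convolution with $n$ outputs is:

$$
r_c=\frac{n\,c\,k^2}{\frac{n}{s}\,c\,k^2+(s-1)\,\frac{n}{s}\,d^2}
\;\overset{d=k}{=}\;\frac{s\,c}{s+c-1}\;\approx\;s \qquad (s \ll c)
$$

For example, with $s = 2$ and $c = 256$ input channels, $r_c = 512/257 \approx 1.99$, so the module needs about half the weights of the convolution it replaces.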

3.5. EIOU Loss Function
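The EIOU loss of Zhang et al. [51] adds three penalties to the IoU loss: the squared distance between box centres normalized by the squared diagonal of the smallest enclosing box, and separate width and height differences normalized by that box's width and height. The following is a minimal pure-Python sketch for a single box pair. The (x1, y1, x2, y2) box format and the `eps` guard are our assumptions, the focal weighting proposed in the same paper is not included, and a training implementation would operate on batched tensors.

```python
from typing import Tuple

# Axis-aligned box as (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (assumed format).
Box = Tuple[float, float, float, float]


def eiou_loss(pred: Box, target: Box, eps: float = 1e-9) -> float:
    """EIOU loss for one predicted/ground-truth box pair.

    L = 1 - IoU + rho^2(b, b_gt) / (Cw^2 + Ch^2)
              + (w - w_gt)^2 / Cw^2 + (h - h_gt)^2 / Ch^2
    where Cw, Ch are the width and height of the smallest enclosing box.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between box centres, with centre = ((x1 + x2) / 2, (y1 + y2) / 2).
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0

    distance_term = rho2 / (cw ** 2 + ch ** 2 + eps)
    width_term = (pw - tw) ** 2 / (cw ** 2 + eps)
    height_term = (ph - th) ** 2 / (ch ** 2 + eps)
    return 1.0 - iou + distance_term + width_term + height_term


if __name__ == "__main__":
    print(eiou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # close to 0.0 for identical boxes
    print(eiou_loss((0, 0, 4, 2), (0, 0, 2, 2)))  # about 0.8: 1 - 0.5 IoU + 0.05 centre + 0.25 width
```

Unlike CIoU [52], which couples width and height through an aspect-ratio term, EIOU penalizes the width and height errors separately, so a prediction that is too wide but has the right height is penalized only on width, as in the second call above.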

3.6. Mish Activation Function
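Mish, proposed by Misra [54], is defined as f(x) = x · tanh(softplus(x)), where softplus(x) = ln(1 + e^x). Unlike ReLU [53], it is smooth everywhere and lets small negative values through instead of zeroing them. Below is a minimal scalar sketch in pure Python. The overflow-safe rewrite of softplus is our own choice; deep learning frameworks ship vectorized versions (for example, PyTorch provides `torch.nn.Mish`).

```python
import math


def softplus(x: float) -> float:
    """ln(1 + e^x), rewritten as max(x, 0) + ln(1 + e^-|x|) so large |x| cannot overflow."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def relu(x: float) -> float:
    """ReLU, for comparison: negative inputs are clipped to zero."""
    return max(x, 0.0)


if __name__ == "__main__":
    for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  mish={mish(x):+.4f}")
```

At x = -1, ReLU outputs 0 while Mish outputs about -0.303, and for large positive inputs Mish approaches the identity, like ReLU.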

4. Experiments

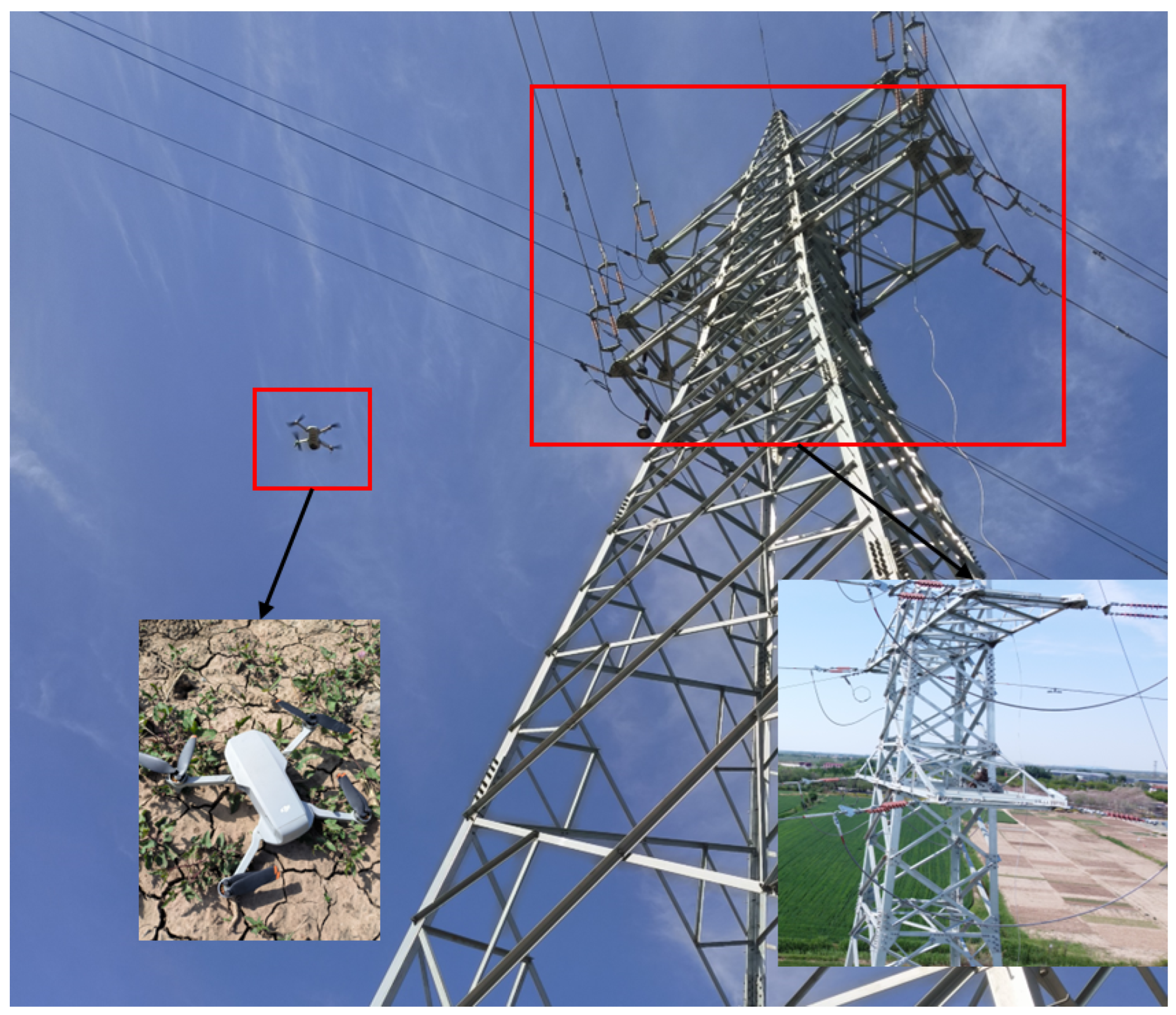

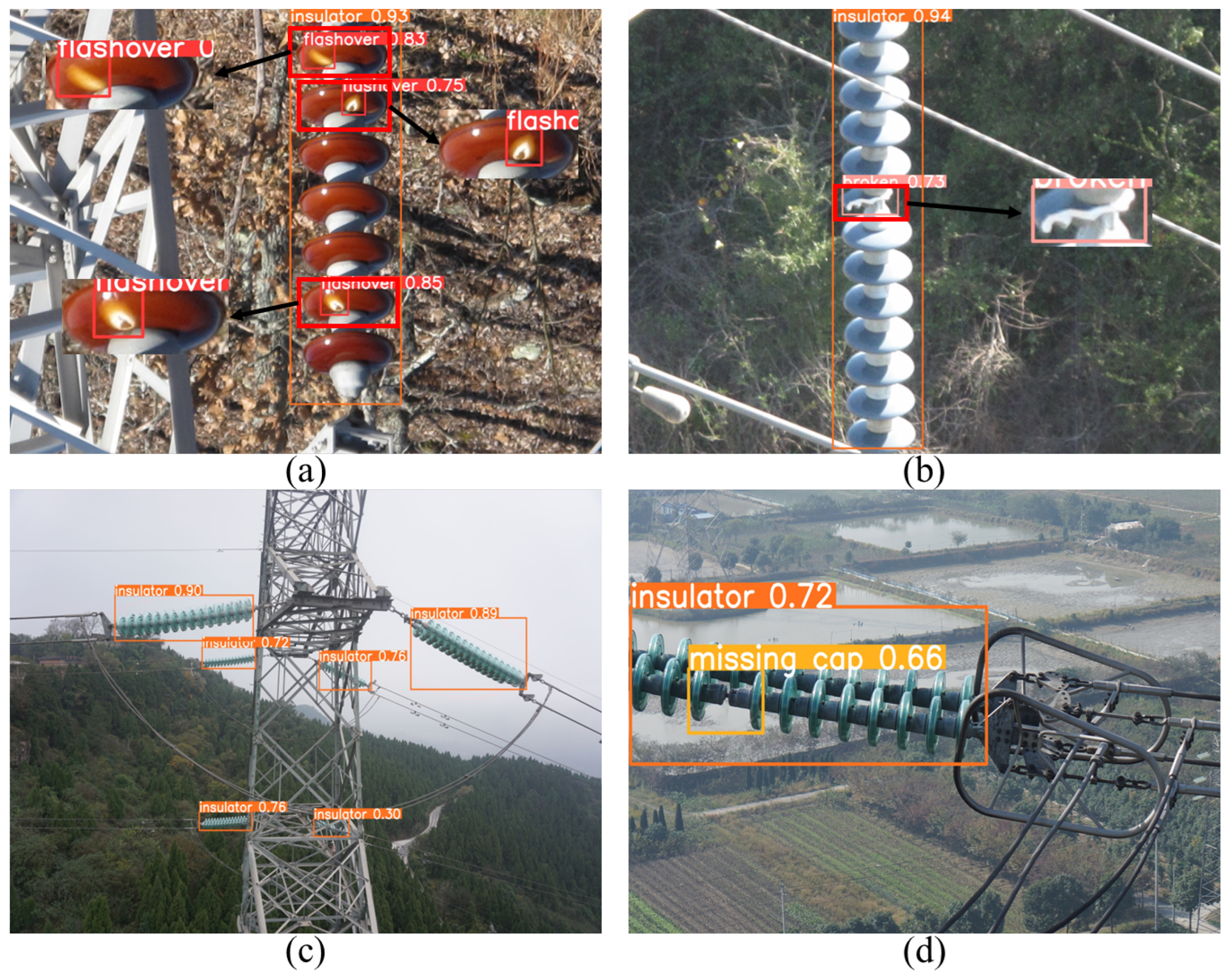

4.1. Dataset

4.2. Experimental Setup

4.3. Evaluation Metrics
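The result tables report precision (P), recall (R), mAP@0.5, and mAP@0.5:0.95. The sketch below uses the standard definitions: P = TP/(TP + FP), R = TP/(TP + FN), AP as the area under the precision–recall curve for one class, mAP as the mean AP over classes, and mAP@0.5:0.95 as the mean over the IoU thresholds 0.50 to 0.95 in steps of 0.05. It uses all-point interpolation. This is an assumption, since the paper's exact evaluation code is not given here, and some YOLO toolkits interpolate at 101 recall points instead, so small numerical differences are expected.

```python
from typing import List, Sequence, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """P = TP / (TP + FP), R = TP / (TP + FN); 0.0 when a denominator is zero."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    is_tp[i] says whether detection i was matched to a ground-truth box at that
    threshold; the box-matching step itself is not shown here.
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    recalls: List[float] = [0.0]
    precisions: List[float] = [1.0]
    tp_cum = fp_cum = 0
    for i in order:  # walk detections from highest to lowest confidence
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / num_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((recalls[k] - recalls[k - 1]) * precisions[k] for k in range(1, len(recalls)))


def iou_thresholds() -> List[float]:
    """The ten IoU thresholds 0.50, 0.55, ..., 0.95 averaged in mAP@0.5:0.95."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean(values: Sequence[float]) -> float:
    """Arithmetic mean, e.g. of per-class AP values (mAP)."""
    return sum(values) / len(values)


if __name__ == "__main__":
    print(precision_recall(8, 2, 2))
    print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
    print(iou_thresholds())
```

In this scheme, mAP@0.5 is `mean` over the per-class AP values computed at IoU 0.5. mAP@0.5:0.95 repeats the matching and AP computation at every threshold in `iou_thresholds()` and averages the results, which is why it is always the stricter of the two numbers in the tables.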

4.4. Comparative Experiments with Mainstream Attention Mechanisms

4.5. Comparison Experiment with Mainstream Lightweight Object Detection Algorithms

4.6. Experimentation on the SFID Dataset

4.7. Acceleration and Deployment on Edge Platforms

4.8. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Liu, J.; Hu, M.; Dong, J.; Lu, X. Summary of insulator defect detection based on deep learning. Electr. Power Syst. Res. 2023, 224, 109688.

2. Liu, Y.; Liu, D.; Huang, X.; Li, C. Insulator defect detection with deep learning: A survey. IET Gener. Transm. Distrib. 2023, 17, 3541–3558.

3. Ahmed, M.F.; Mohanta, J.; Sanyal, A. Inspection and identification of transmission line insulator breakdown based on deep learning using aerial images. Electr. Power Syst. Res. 2022, 211, 108199.

4. Yang, Z.; Xu, Z.; Wang, Y. Bidirection-Fusion-YOLOv3: An Improved Method for Insulator Defect Detection Using UAV Image. IEEE Trans. Instrum. Meas. 2022, 71, 1–8.

5. Wu, J.; Jing, R.; Bai, Y.; Tian, Z.; Chen, W.; Zhang, S.; Richard Yu, F.; Leung, V.C.M. Small Insulator Defects Detection Based on Multiscale Feature Interaction Transformer for UAV-Assisted Power IoVT. IEEE Internet Things J. 2024, 11, 23410–23427.

6. Panigrahy, S.; Karmakar, S. Real-Time Condition Monitoring of Transmission Line Insulators Using the YOLO Object Detection Model With a UAV. IEEE Trans. Instrum. Meas. 2024, 73, 1–9.

7. Wu, Q.; An, J. An active contour model based on texture distribution for extracting inhomogeneous insulators from aerial images. IEEE Trans. Geosci. Remote Sens. 2013, 52, 3613–3626.

8. Han, Y.; Liu, Z.; Lee, D.; Liu, W.; Chen, J.; Han, Z. Computer vision–based automatic rod-insulator defect detection in high-speed railway catenary system. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418773943.

9. Oberweger, M.; Wendel, A.; Bischof, H. Visual recognition and fault detection for power line insulators. In Proceedings of the 19th Computer Vision Winter Workshop, Krtiny, Czech Republic, 3–5 February 2014; pp. 1–8.

10. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

11. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

12. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

13. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

14. Wen, Q.; Luo, Z.; Chen, R.; Yang, Y.; Li, G. Deep learning approaches on defect detection in high resolution aerial images of insulators. Sensors 2021, 21, 1033.

15. Lei, X.; Sui, Z. Intelligent fault detection of high voltage line based on the Faster R-CNN. Measurement 2019, 138, 379–385.

16. Tan, P.; Li, X.f.; Ding, J.; Cui, Z.s.; Ma, J.e.; Sun, Y.l.; Huang, B.q.; Fang, Y.t. Mask R-CNN and multifeature clustering model for catenary insulator recognition and defect detection. J. Zhejiang Univ. Sci. A 2022, 23, 745–756.

17. Zhao, W.; Xu, M.; Cheng, X.; Zhao, Z. An insulator in transmission lines recognition and fault detection model based on improved faster RCNN. IEEE Trans. Instrum. Meas. 2021, 70, 1–8.

18. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

19. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

20. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

21. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

22. Jocher, G. YOLOv5 by Ultralytics; Zenodo: Geneva, Switzerland, 2020.

23. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

24. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

25. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

26. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 17 July 2024).

27. Hao, K.; Chen, G.; Zhao, L.; Li, Z.; Liu, Y.; Wang, C. An insulator defect detection model in aerial images based on multiscale feature pyramid network. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

28. Qu, F.; Lin, Y.; Tian, L.; Du, Q.; Wu, H.; Liao, W. Lightweight Oriented Detector for Insulators in Drone Aerial Images. Drones 2024, 8, 294.

29. Zhang, Z.; Lv, G.; Zhao, G.; Zhai, Y.; Cheng, J. BS-YOLOv5s: Insulator Defect Detection with Attention Mechanism and Multi-Scale Fusion. In Proceedings of the 2023 IEEE International Conference on Image Processing (ICIP), Kuala Lumpur, Malaysia, 8–11 October 2023; pp. 2365–2369.

30. Chen, J.; Fu, Z.; Cheng, X.; Wang, F. An method for power lines insulator defect detection with attention feedback and double spatial pyramid. Electr. Power Syst. Res. 2023, 218, 109175.

31. Song, Z.; Huang, X.; Ji, C.; Zhang, Y. Deformable YOLOX: Detection and rust warning method of transmission line connection fittings based on image processing technology. IEEE Trans. Instrum. Meas. 2023, 72, 1–21.

32. Zhang, Z.D.; Zhang, B.; Lan, Z.C.; Liu, H.C.; Li, D.Y.; Pei, L.; Yu, W.X. FINet: An insulator dataset and detection benchmark based on synthetic fog and improved YOLOv5. IEEE Trans. Instrum. Meas. 2022, 71, 1–8.

33. Bao, W.; Du, X.; Wang, N.; Yuan, M.; Yang, X. A defect detection method based on BC-YOLO for transmission line components in UAV remote sensing images. Remote Sens. 2022, 14, 5176.

34. Zhang, T.; Zhang, Y.; Xin, M.; Liao, J.; Xie, Q. A light-weight network for small insulator and defect detection using UAV imaging based on improved YOLOv5. Sensors 2023, 23, 5249.

35. Sun, S.; Chen, C.; Yang, B.; Yan, Z.; Wang, Z.; He, Y.; Wu, S.; Li, L.; Fu, J. ID-Det: Insulator Burst Defect Detection from UAV Inspection Imagery of Power Transmission Facilities. Drones 2024, 8, 299.

36. Ding, L.; Rao, Z.Q.; Ding, B.; Li, S.J. Research on defect detection method of railway transmission line insulators based on GC-YOLO. IEEE Access 2023, 11, 102635–102642.

37. Luo, B.; Xiao, J.; Zhu, G.; Fang, X.; Wang, J. Occluded Insulator Detection System Based on YOLOX of Multi-Scale Feature Fusion. IEEE Trans. Power Deliv. 2024, 39, 1063–1074.

38. Huang, X.; Jia, M.; Tai, X.; Wang, W.; Hu, Q.; Liu, D.; Guo, P.; Tian, S.; Yan, D.; Han, H. Federated knowledge distillation for enhanced insulator defect detection in resource-constrained environments. IET Comput. Vis. 2024.

39. Li, Y.; Feng, D.; Zhang, Q.; Li, S. HRD-YOLOX based insulator identification and defect detection method for transmission lines. IEEE Access 2024, 12, 22649–22661.

40. Li, D.; Lu, Y.; Gao, Q.; Li, X.; Yu, X.; Song, Y. LiteYOLO-ID: A Lightweight Object Detection Network for Insulator Defect Detection. IEEE Trans. Instrum. Meas. 2024, 73, 1–12.

41. Shuang, F.; Han, S.; Li, Y.; Lu, T. RSIn-Dataset: An UAV-Based Insulator Detection Aerial Images Dataset and Benchmark. Drones 2023, 7, 125.

42. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

43. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

44. Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

45. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717.

46. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

47. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

48. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

49. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

50. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

51. Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

52. Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing geometric factors in model learning and inference for object detection and instance segmentation. IEEE Trans. Cybern. 2021, 52, 8574–8586.

53. Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323.

54. Misra, D. Mish: A self regularized non-monotonic activation function. arXiv 2019, arXiv:1908.08681.

55. McCartney, E.J. Optics of the atmosphere: Scattering by molecules and particles. Phys. Bull. 1976, 28, 521.

56. Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

57. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

58. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

| Models | Detection Accuracy | Advantages | Disadvantages |
|---|---|---|---|
| [7] | Achieved a precision of 85.95% on a self-built insulator dataset | Strong quantitative validation | Complex implementation |
| [8] | Achieved a recall rate of 98.97% on a self-built dataset | High detection accuracy | Sensitivity to initial conditions |
| [9] | Achieved a maximum recall rate of 98% on a self-built dataset | High performance metrics | Manual ground truth creation |
| [15] | Achieved an average precision (AP) of 97.6% on a self-built test set of 112 images | Efficient use of pretrained models | Slow inference speed |
| [16] | Achieved 92.5% accuracy on a self-built dataset | High model accuracy | Complex real-world deployment, high computational resource demand |
| [17] | Achieved a mean Average Precision (mAP) of 90.8% on a self-built dataset | High detection accuracy | Requires significant computational resources, risk of overfitting |
| [27] | Achieved an mAP of 95.63% on the CPLMID dataset | High accuracy and speed | Complex model structure, difficult to deploy in practice |
| [28] | Achieved an AP of 62.48% on a self-built dataset | Efficient on edge devices, high detection speed | Highly dependent on data quality, slightly lower detection accuracy |
| [29] | Achieved an mAP of 90.1% on the WI dataset | High model accuracy | Complex real-world deployment |
| [30] | Achieved an mAP of 97.1% on the UPID dataset | High detection accuracy | Poor real-time applicability |
| [31] | Achieved an mAP of 85% on a self-built dataset | Good robustness | Complex operations, high computational demands |
| [32] | Achieved an F1-score of 96.2% on the SFID dataset | Enhanced, open-source dataset; provides benchmark models | Slow real-time inference speed |
| [33] | Achieved an mAP of 89.1% on the DVID dataset | High detection accuracy | Difficult real-world deployment |
| [34] | Achieved an mAP of 99.4% on a self-built dataset | High accuracy and recall | Complexity and high computational demand |
| [35] | Achieved a precision of 97.38% on the ID dataset | Focus on practical application | Deployment requires substantial computational resources |
| [36] | Achieved an mAP of 94.2% on a self-built dataset | High accuracy in detecting small targets | Long training and inference times |
| [37] | Achieved a precision of 90.71% on a self-built dataset | Robust algorithm performance | High computational load |
| [38] | Achieved an mAP of 85.6% on a dataset collected online | Lightweight model | Dependent on high-quality data |
| [39] | Achieved an mAP of 91.34% on a self-built dataset | Strong real-time detection capabilities | High computational resource needs |
| [40] | Achieved an mAP of 65.1% on the IDID-Plus dataset | Lightweight model, efficient on edge devices | Risk of overfitting |
| [41] | Achieved an mAP of 94.24% on the RSIn-Dataset | High detection accuracy | Difficult real-world deployment |

| Classes | Number |
|---|---|
| Flashover | 5078 |
| Broken | 1862 |
| Insulator | 5445 |
| Missing cap | 563 |
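The class counts above are noticeably imbalanced. The short sketch below computes each class's share of the total and the ratio between the largest and smallest classes. The counts are copied from the table; the table does not say whether they count images or annotated instances, so we simply treat them as label counts. The helper name is hypothetical.

```python
from typing import Dict

# Class counts copied from the dataset table above.
CLASS_COUNTS: Dict[str, int] = {
    "Flashover": 5078,
    "Broken": 1862,
    "Insulator": 5445,
    "Missing cap": 563,
}


def class_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each class's share of the total count, in percent."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


if __name__ == "__main__":
    print(f"total: {sum(CLASS_COUNTS.values())}")  # total: 12948
    for name, share in class_shares(CLASS_COUNTS).items():
        print(f"{name:12s} {share:5.2f}%")
    ratio = max(CLASS_COUNTS.values()) / min(CLASS_COUNTS.values())
    print(f"largest/smallest class ratio: {ratio:.1f}")  # 9.7
```

By this count, Missing cap makes up about 4.3% of the 12,948 labels, while Insulator and Flashover together account for roughly 81%.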

| Models | mAP@0.5 | Params | R | mAP@0.5:0.95 | P | FLOPs (G) |
|---|---|---|---|---|---|---|
| +CA [45] | 64.3% | 2,841,770 | 59.5% | 37.9% | 77.5% | 4.8 |
| +CBAM [46] | 62.4% | 2,918,347 | 61.9% | 35.3% | 74.2% | 5.0 |
| +ECA [48] | 59% | 2,570,988 | 57.5% | 33.7% | 71.5% | 4.5 |
| +SE [47] | 61.9% | 2,582,196 | 62.3% | 35.1% | 71.6% | 4.5 |
| +LCSA | 66.2% | 2,851,872 | 63.6% | 38.5% | 74.9% | 5.1 |

| Models | mAP@0.5 | Params | R | mAP@0.5:0.95 | P | FLOPs (G) |
|---|---|---|---|---|---|---|
| BC-YOLO [33] | 64.4% | 7,247,858 | 61.4% | 37.3% | 73.1% | 16.5 |
| I-YOLOv5 [34] | 61.4% | 3,803,684 | 58.1% | 34.9% | 71.1% | 9.7 |
| GC-YOLO [36] | 54.4% | 9,645,420 | 53.2% | 31.8% | 63.7% | 29.0 |
| YOLOv5s [22] | 62.1% | 7,020,913 | 60.3% | 35.4% | 72.7% | 15.8 |
| YOLOv6n [24] | 56.3% | 4,234,140 | 53.9% | 34.7% | 70.2% | 11.8 |
| YOLOv7t [25] | 65.9% | 6,023,106 | 60.3% | 38.6% | 78.7% | 13.2 |
| YOLOv8n [26] | 59.4% | 3,022,812 | 58.7% | 37.3% | 73.7% | 8.1 |
| YOLOv8s [26] | 62.5% | 11,127,132 | 60.3% | 39.5% | 74.3% | 28.4 |
| IDD-YOLO | 66.2% | 2,851,872 | 63.6% | 38.5% | 74.9% | 5.1 |

| Model | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|
| Mask R-CNN [13] | 98.3% | 82.0% |
| Faster R-CNN [12] | 98.4% | 80.1% |
| YOLOX [23] | 99.4% | 86.0% |
| Swin-Transformer [58] | 99.0% | 86.4% |
| YOLOv5s [22] | 99.3% | 87.0% |
| IDD-YOLO | 99.4% | 87.2% |

| Model | Params | FPS |
|---|---|---|
| BC-YOLO [33] | 7,247,858 | 13.53 |
| I-YOLOv5 [34] | 3,803,684 | 12.23 |
| GC-YOLO [36] | 9,645,420 | 6.73 |
| YOLOv4-tiny [21] | 5,883,356 | 7.28 |
| YOLOv5s [22] | 7,020,913 | 15.67 |
| YOLOv8s [26] | 11,127,132 | 16.82 |
| IDD-YOLO | 2,851,872 | 20.83 |
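Frame rates are often easier to reason about as per-frame latency and relative speed-up. The sketch below copies the FPS column from the table above and converts it. The table does not state batch size or numeric precision, so the ratios are meaningful only under the assumption that all models were measured under the same setup. The helper names are hypothetical.

```python
# FPS values copied from the deployment table above.
FPS = {
    "BC-YOLO": 13.53,
    "I-YOLOv5": 12.23,
    "GC-YOLO": 6.73,
    "YOLOv4-tiny": 7.28,
    "YOLOv5s": 15.67,
    "YOLOv8s": 16.82,
    "IDD-YOLO": 20.83,
}


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


def speedup(model: str, baseline: str) -> float:
    """Throughput of `model` relative to `baseline` (values > 1 mean faster)."""
    return FPS[model] / FPS[baseline]


if __name__ == "__main__":
    for name, fps in sorted(FPS.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:12s} {fps:6.2f} FPS  {latency_ms(fps):6.1f} ms/frame")
    print(f"{speedup('IDD-YOLO', 'YOLOv5s'):.2f}")  # 1.33
    print(f"{latency_ms(FPS['IDD-YOLO']):.1f}")  # 48.0
```

Read this way, IDD-YOLO processes a frame in about 48 ms versus about 64 ms for YOLOv5s, a speed-up of roughly 1.33×.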

| G-LCSA | G-PANet | EIOU&Mish | mAP@0.5 | Params | R | mAP@0.5:0.95 | P | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| - | - | - | 62.1% | 7,020,913 | 60.3% | 35.4% | 72.7% | 15.8 |
| √ | - | - | 63.6% | 4,255,120 | 62.3% | 36.6% | 75.5% | 7.6 |
| - | √ | - | 64.6% | 5,617,665 | 62.6% | 38% | 78.1% | 13.3 |
| - | - | √ | 60.5% | 7,020,913 | 60.8% | 35.9% | 71.8% | 15.8 |
| √ | √ | - | 64.7% | 2,851,872 | 65% | 36.8% | 69.5% | 5.1 |
| √ | - | √ | 65.2% | 4,255,120 | 63.7% | 37% | 75.8% | 7.6 |
| - | √ | √ | 65.6% | 5,617,665 | 62.5% | 37.9% | 76.7% | 13.3 |
| √ | √ | √ | 66.2% | 2,851,872 | 63.6% | 38.5% | 74.9% | 5.1 |
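The ablation can be summarized by comparing its first row (no modules added; its figures match YOLOv5s in the lightweight comparison table) with its last row (all three modules). The arithmetic sketch below copies those two rows; `relative_reduction` is a hypothetical helper.

```python
from typing import NamedTuple


class Row(NamedTuple):
    map50: float   # mAP@0.5, in percent
    params: int    # parameter count
    gflops: float  # FLOPs (G)


# First and last rows of the ablation table above.
BASELINE = Row(62.1, 7_020_913, 15.8)
FULL = Row(66.2, 2_851_872, 5.1)


def relative_reduction(before: float, after: float) -> float:
    """Percentage reduction going from `before` to `after`."""
    return 100.0 * (before - after) / before


if __name__ == "__main__":
    print(f"{relative_reduction(BASELINE.params, FULL.params):.1f}")  # 59.4
    print(f"{relative_reduction(BASELINE.gflops, FULL.gflops):.1f}")  # 67.7
    print(f"{FULL.map50 - BASELINE.map50:.1f}")  # 4.1
```

Relative to the baseline, the full model uses about 59.4% fewer parameters and 67.7% fewer FLOPs while gaining 4.1 points of mAP@0.5. The table also shows that EIOU and Mish leave the parameter count and FLOPs unchanged (compare the first and fourth rows), so the size reduction comes entirely from G-LCSA and G-PANet.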

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, Y.; Li, D.; Li, D.; Li, X.; Gao, Q.; Yu, X. A Lightweight Insulator Defect Detection Model Based on Drone Images. Drones 2024, 8, 431. https://doi.org/10.3390/drones8090431

Lu Y, Li D, Li D, Li X, Gao Q, Yu X. A Lightweight Insulator Defect Detection Model Based on Drone Images. Drones. 2024; 8(9):431. https://doi.org/10.3390/drones8090431

Chicago/Turabian Style: Lu, Yang, Dahua Li, Dong Li, Xuan Li, Qiang Gao, and Xiao Yu. 2024. "A Lightweight Insulator Defect Detection Model Based on Drone Images" Drones 8, no. 9: 431. https://doi.org/10.3390/drones8090431

APA Style: Lu, Y., Li, D., Li, D., Li, X., Gao, Q., & Yu, X. (2024). A Lightweight Insulator Defect Detection Model Based on Drone Images. Drones, 8(9), 431. https://doi.org/10.3390/drones8090431