6.1. Simulation Experiment of the Attitude Correction Algorithm

To test the performance of the proposed attitude correction algorithm, the simulation experiment conditions were set as follows: the cooperative platform, UAV, and target all moved at a constant speed in a straight line, with initial and final position parameters as shown in Table 1. The UAV simultaneously observed the target and the cooperative platform, and the position of the UAV was provided by RTK, which has a standard deviation of (1 m, 1 m, 1 m); the sampling period was 1 s, the total simulation time was 1000 s, and 100 Monte Carlo simulation experiments were carried out.
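The setup above can be sketched in a few lines of Python. This is only an illustrative sketch: the start and end positions are hypothetical placeholders (the values of Table 1 are not reproduced here), and the function names are introduced for this example only.

```python
import numpy as np

def constant_velocity_track(p_start, p_end, n_steps):
    """Positions of a platform moving in a straight line at constant speed."""
    alphas = np.linspace(0.0, 1.0, n_steps)[:, None]
    p_start = np.asarray(p_start, dtype=float)
    p_end = np.asarray(p_end, dtype=float)
    return (1.0 - alphas) * p_start + alphas * p_end

def rtk_positions(true_track, rng, sigma=(1.0, 1.0, 1.0)):
    """Add zero-mean Gaussian RTK noise with per-axis standard deviation sigma."""
    return true_track + rng.normal(0.0, sigma, size=true_track.shape)

total_time, dt, n_runs = 1000.0, 1.0, 100   # values stated in the text
n_steps = int(total_time / dt) + 1

# Hypothetical start/end positions in metres (NOT the values of Table 1).
uav_true = constant_velocity_track([0.0, 0.0, 5000.0], [50000.0, 0.0, 5000.0], n_steps)

rng = np.random.default_rng(0)
# One noisy UAV position track per Monte Carlo run: shape (n_runs, n_steps, 3).
uav_runs = np.stack([rtk_positions(uav_true, rng) for _ in range(n_runs)])
```

Each Monte Carlo run redraws the RTK noise while the true trajectories stay fixed, so statistics computed over the first axis of `uav_runs` reflect only the random errors.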

- (a) Comparative experiment on the attitude correction algorithm and other algorithms

The standard deviations of the random errors (distance, azimuth, elevation) of the UAV’s observations of the target were (5 m, 0.2°, 0.2°), and the corresponding systematic errors were (5 m, 0.02°, 0.02°). For the UAV’s observations of the cooperative platform, the standard deviations of the random errors (distance, azimuth, elevation) were (5 m, 0.01°, 0.01°), and the corresponding systematic errors were (5 m, 0.01°, 0.01°). For simplicity, the systematic errors of the UAV attitude angles (yaw, pitch, roll) were assumed to be constant at (0.05°, 0.05°, 0.05°), with random-error standard deviations of (0.05°, 0.05°, 0.05°).
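To make the role of these error terms concrete, the following Python sketch perturbs one UAV-to-target observation and one attitude reading with the systematic and random errors listed above, and then localizes the target. The frame conventions (Z-Y-X Euler angles, azimuth measured from the body x-axis, elevation positive upward) and all function names are assumptions made for this illustration; they are not taken from the paper.

```python
import numpy as np

DEG = np.pi / 180.0

def rotation_zyx(yaw, pitch, roll):
    """Body-to-navigation rotation matrix for Z-Y-X Euler angles (assumed convention)."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return rz @ ry @ rx

def spherical_to_cartesian(r, az, el):
    return np.array([r * np.cos(el) * np.cos(az),
                     r * np.cos(el) * np.sin(az),
                     r * np.sin(el)])

def cartesian_to_spherical(v):
    r = np.linalg.norm(v)
    return r, np.arctan2(v[1], v[0]), np.arcsin(v[2] / r)

def locate_target(p_uav, attitude, meas):
    """Target position from UAV position, attitude (yaw, pitch, roll), and (r, az, el)."""
    return np.asarray(p_uav, float) + rotation_zyx(*attitude) @ spherical_to_cartesian(*meas)

def simulate_fix(p_uav, p_target, attitude_true, rng):
    """One noisy localization using the error levels of experiment (a)."""
    p_uav = np.asarray(p_uav, float)
    p_target = np.asarray(p_target, float)
    attitude_true = np.asarray(attitude_true, float)
    v_body = rotation_zyx(*attitude_true).T @ (p_target - p_uav)
    meas_true = np.array(cartesian_to_spherical(v_body))
    meas_bias = np.array([5.0, 0.02 * DEG, 0.02 * DEG])    # systematic errors
    meas_sigma = np.array([5.0, 0.2 * DEG, 0.2 * DEG])     # random-error std
    meas = meas_true + meas_bias + rng.normal(0.0, meas_sigma)
    attitude = attitude_true + 0.05 * DEG + rng.normal(0.0, 0.05 * DEG, size=3)
    return locate_target(p_uav, attitude, meas)
```

Because the angular errors are multiplied by the range, even sub-degree attitude or bearing errors translate into position errors of tens to hundreds of metres at ranges of tens of kilometres, which is why the attitude terms matter in the comparison that follows.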

The target tracking positions obtained by the navigation and positioning method (including attitude angle error), static method (navigation and positioning method without attitude angle error), and attitude correction method (this method) were compared, and the results are shown in Figure 5 and Table 2.

The simulation results in Figure 5 show that the target track obtained by the attitude correction algorithm is similar to that of the static method. Furthermore, the quantitative results in Table 2 demonstrate that the root mean square error (RMSE) of the target position observed by the UAV using the navigation positioning method is 246.75 m. The RMSE of the target position observed by the UAV using the static method (without attitude angle error) is 77.65 m. With the attitude correction algorithm based on the cooperative platform, the RMSE of the target position is 65.41 m, indicating that the proposed attitude correction algorithm effectively improves the localization accuracy of the target.

As evident from Table 2, the positioning accuracy of the attitude correction algorithm surpasses that of the static method. This superiority can be attributed to the algorithm’s capacity to mitigate not only the impact of attitude angle errors on target localization but also the effects of observation system errors and UAV position errors on the same task. This can also be explained by analyzing theoretical Formula (16).
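For reference, a position RMSE over Monte Carlo runs can be computed as in the sketch below. The text does not state how the RMSE is aggregated over time and runs, so the averaging used here (over runs for the per-step curve, over runs and steps for the scalar) is an assumption, and the function name is introduced for this example only.

```python
import numpy as np

def position_rmse(estimates, truth):
    """RMSE of 3-D position estimates.

    estimates: array of shape (n_runs, n_steps, 3)
    truth:     array of shape (n_steps, 3)
    Returns the per-step RMSE (n_steps,) and a single overall RMSE.
    """
    sq_err = np.sum((estimates - truth) ** 2, axis=-1)   # squared distance error
    per_step = np.sqrt(sq_err.mean(axis=0))              # average over runs
    overall = np.sqrt(sq_err.mean())                     # average over runs and steps
    return per_step, overall
```

A scalar such as the ones quoted above would correspond to `overall` under this assumed definition, while `per_step` corresponds to an RMSE-versus-time curve.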

- (b) The impact of UAV attitude angle errors on the attitude correction algorithm

This section analyzes the reliability of the proposed method under different attitude angle errors. The systematic errors and random-error standard deviations of the UAV’s attitude angles are listed in Table 3 (yaw, pitch, and roll were set to the same error). The other conditions are the same as in Section 6.1(a), and the simulation results are shown in Table 4.

Table 4 presents the RMSE of the target position obtained under each group of attitude angle errors. Comparing the second and third columns in Table 4, it can be observed that the RMSE of the target position increases with the attitude angle error for both the navigation positioning method and the attitude correction method. The attitude angle error has a greater impact on the navigation positioning method, for which larger attitude angle errors significantly degrade the accuracy of the target positioning. Compared to the navigation positioning method, the proposed attitude correction method effectively reduces the impact of attitude angle errors on the accuracy of target positioning.

From the data in the third and fourth columns of Table 4, it can be seen that the attitude correction method yields a lower target positioning accuracy than the static method only under the first group of attitude angle error conditions; under the second, third, and fourth groups, the attitude correction method is superior to the static method. Therefore, for the situation with a large attitude angle error, the static method is better than the correction method, while for situations with relatively small attitude angle errors, the correction method is superior to the static method.

- (c) The impact of time-varying attitude angles’ deviation on the attitude correction algorithm

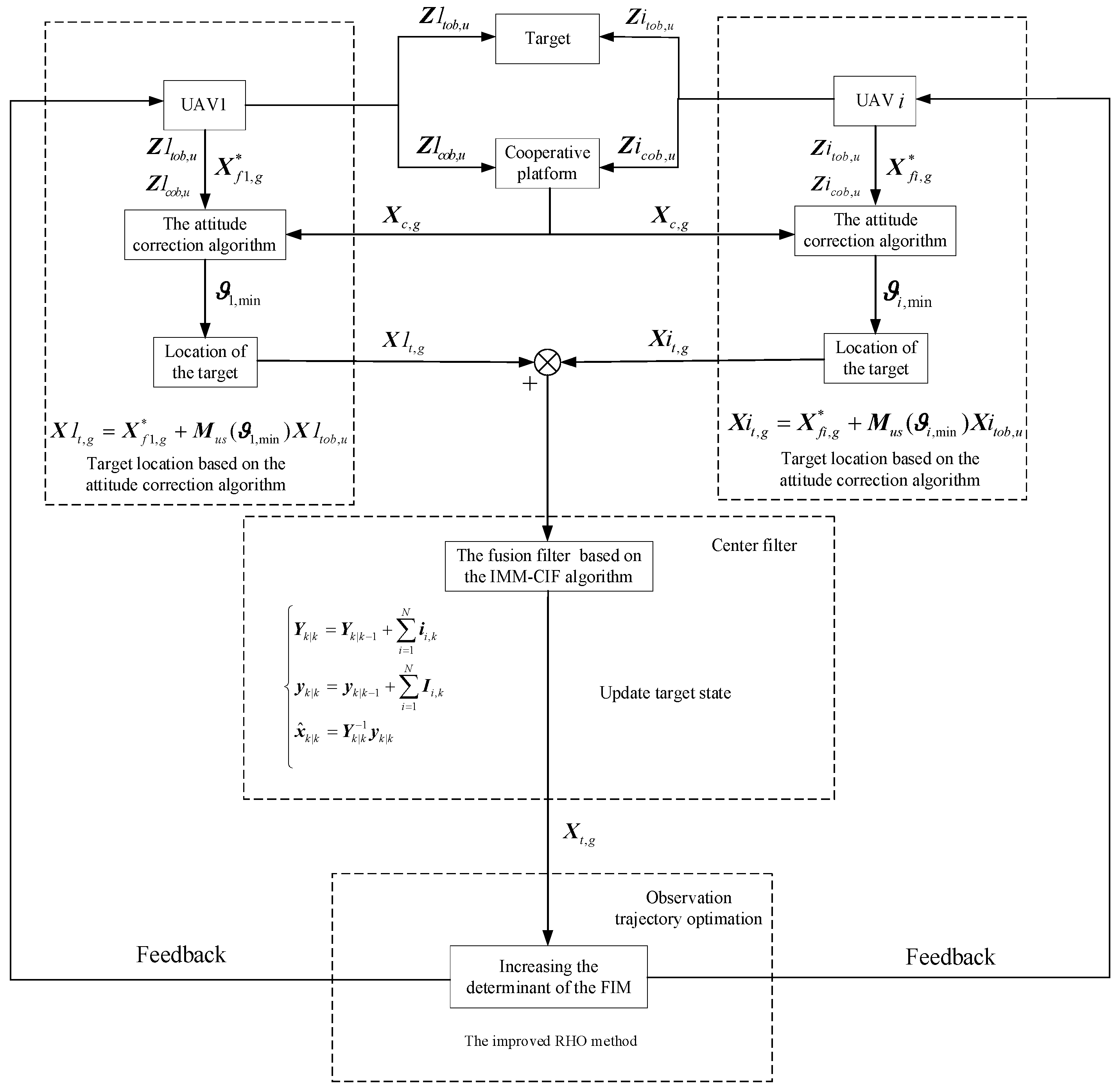

The existing error registration algorithms for inertial platform sensor systems assume that the systematic error of attitude angles does not change with time. However, in reality, the systematic error of attitude angles may experience a sudden jump. Therefore, this section analyzes the adaptive capability of the attitude correction algorithm for time-varying systematic errors of attitude angles.

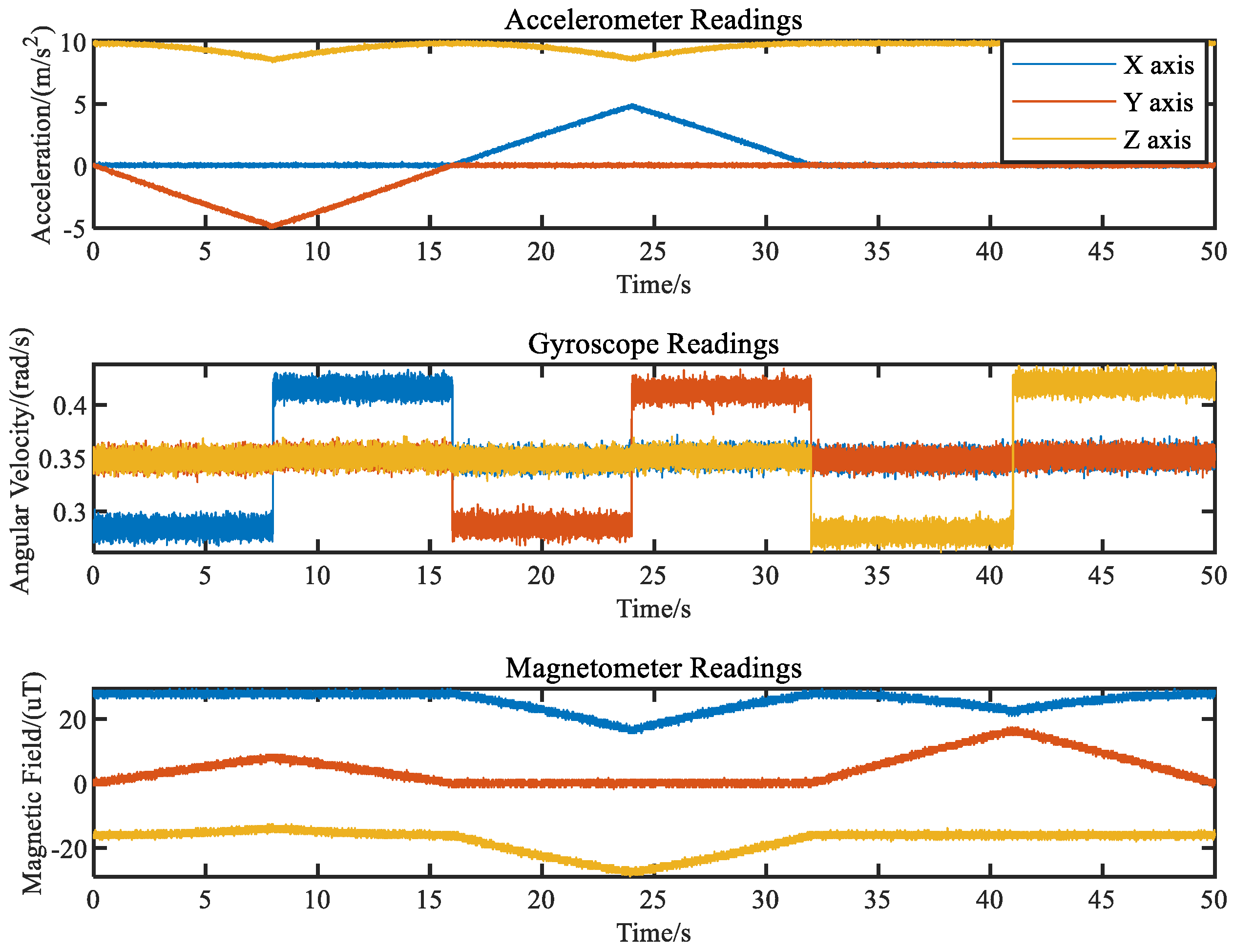

To simulate realistic attitude angle errors, the attitude angles output by the IMU were simulated in the MATLAB simulation environment [40]. In the simulation experiment, the MPU9250, which is a commonly used sensor, was chosen for simulation. Firstly, the sensor characteristics of the MPU9250 were imported into MATLAB according to its sensor data sheet [41]. Then, the MARG data were generated using the imuSensor function in MATLAB 2020a. Finally, the Madgwick algorithm [42] was employed to estimate the attitude angles of the UAV. The sampling frequency was set to 20 Hz, and the simulation diagram of the attitude angle estimation process is shown in Figure 6.
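As a rough illustration of the last step of this pipeline, the sketch below implements one update of the gyroscope-plus-accelerometer form of the Madgwick filter in Python. It is a simplification: the simulation described above uses full MARG data (including the magnetometer) in MATLAB, whereas this sketch omits the magnetometer term, and the gain `beta` and the test orientation are hypothetical values chosen for the example.

```python
import numpy as np

def quat_multiply(p, q):
    """Hamilton product of two quaternions given as [w, x, y, z]."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])

def predicted_gravity(q):
    """Gravity direction in the sensor frame implied by orientation q."""
    q0, q1, q2, q3 = q
    return np.array([
        2.0 * (q1 * q3 - q0 * q2),
        2.0 * (q0 * q1 + q2 * q3),
        2.0 * (0.5 - q1 ** 2 - q2 ** 2),
    ])

def madgwick_imu_step(q, gyro, acc, beta, dt):
    """One filter step: gyro integration corrected by a normalized gradient step."""
    q0, q1, q2, q3 = q
    a = acc / np.linalg.norm(acc)
    f = predicted_gravity(q) - a                      # objective function
    jac = np.array([                                  # Jacobian of f w.r.t. q
        [-2.0 * q2, 2.0 * q3, -2.0 * q0, 2.0 * q1],
        [2.0 * q1, 2.0 * q0, 2.0 * q3, 2.0 * q2],
        [0.0, -4.0 * q1, -4.0 * q2, 0.0],
    ])
    grad = jac.T @ f
    norm = np.linalg.norm(grad)
    if norm > 0.0:
        grad = grad / norm
    q_dot = 0.5 * quat_multiply(q, np.array([0.0, *gyro])) - beta * grad
    q_new = q + q_dot * dt
    return q_new / np.linalg.norm(q_new)

# Stationary, level sensor sampled at 20 Hz; initial estimate has a 0.3 rad roll error.
q_est = np.array([np.cos(0.15), np.sin(0.15), 0.0, 0.0])
for _ in range(400):
    q_est = madgwick_imu_step(q_est, gyro=np.zeros(3), acc=np.array([0.0, 0.0, 1.0]),
                              beta=0.1, dt=0.05)
```

Without a magnetometer the yaw angle is not observable from gravity alone, so this reduced form can only correct roll and pitch drift; the residual, slowly varying estimation error is the kind of time-varying attitude deviation studied in this subsection.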

Assuming the flight trajectory of the UAV is as shown in Figure 7, the MARG data output by the MPU9250 sensor are shown in Figure 8. The attitude angles are calculated using the Madgwick algorithm from the MARG data, and the curve of the attitude angle errors is shown in Figure 9. It can be seen from Figure 9 that the systematic error of the UAV’s attitude angles during flight is not always fixed, but varies with time.

In this simulation, the navigation positioning method utilized estimated attitude angle data of the UAV, whereas the static method employed actual attitude angle data as shown in Figure 9. The total simulation time was 100 s, and the other conditions were the same as those in 6.1(a); the simulation results are shown in Figure 10 and Table 5.

As shown in Figure 10, the estimated target state of the navigation positioning method cannot converge, and it exhibits significant fluctuations. A comparison with the target state estimated by the static method reveals that this is attributable to systematic errors in the time-varying attitude angles. When the systematic errors of the attitude angle are substantial, the navigation positioning method yields less accurate estimations of the target state. Conversely, when these errors are relatively minor, the target state estimation improves.

In addition, the target state estimated by the attitude correction algorithm is similar to that estimated by the static method, and it is closer to the true target state. Further quantitative analysis of the results in Table 5 shows that the target state estimated by the attitude correction method is superior to the static method. The simulation results verify that the attitude correction algorithm proposed in this paper can effectively reduce the impact of time-varying attitude angle deviation on positioning accuracy, and it also rectifies a portion of the systematic error originating from the sensor observation.

In summary, compared with the existing error registration algorithms of inertial platform sensor systems, the proposed attitude correction algorithm can avoid establishing the state equation of attitude angle systematic errors, thus overcoming the influence of time-varying attitude angle systematic errors on the positioning accuracy. Moreover, the proposed algorithm has a simple principle, a small calculation amount, and easy real-time online processing, making it suitable for engineering applications.

- (d) The impact of observation target error on the attitude correction algorithm

As seen in Section 6.1(c), the attitude correction algorithm can reduce a portion of the systematic error originating from the sensor observation and improve the positioning accuracy of the target. Therefore, this section analyzes the impact of the systematic error originating from the sensor observation on the attitude correction algorithm. The parameters of the observation target error by the UAV are displayed in Table 6 (set azimuth and elevation to the same error), and the other conditions were the same as those in 6.1(a); the simulation results are shown in Table 7.

An analysis of the data presented in columns 3 and 4 of Table 7 reveals that when the measurement error of the UAV’s observation of the target is relatively small, the target localization accuracy of the attitude correction method significantly surpasses that of the navigation positioning method. When the measurement error of the UAV’s observation of the target increases to a certain extent, the attitude angle error of the UAV is no longer the predominant factor affecting localization accuracy; nevertheless, the attitude correction method can still correct part of the observation system error of the UAV, resulting in a higher target positioning accuracy compared to the static method. The data of the fourth group show that when there is a substantial systematic error in the UAV’s observation of the target, the improvement in target localization accuracy offered by the attitude correction algorithm is limited.

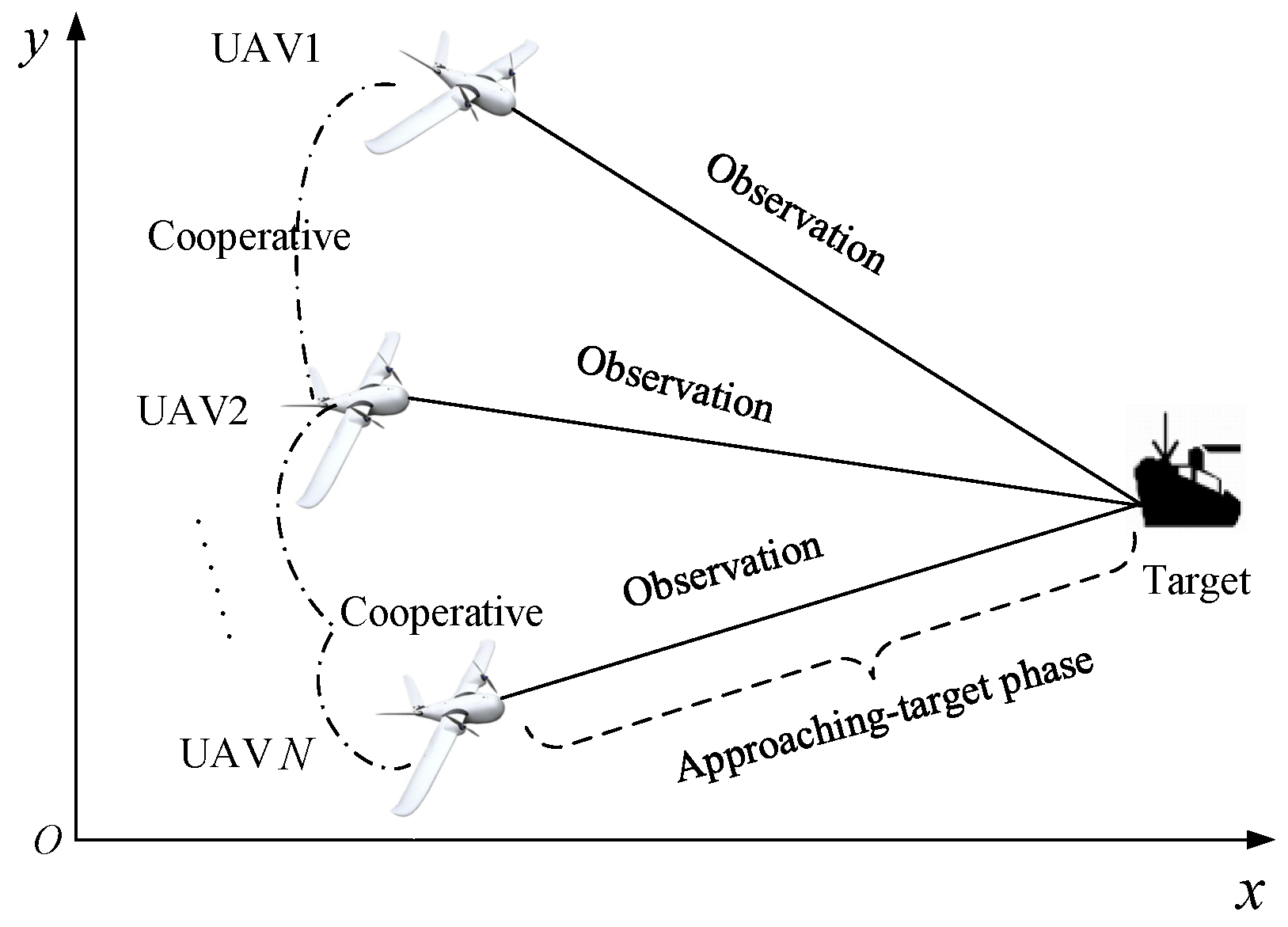

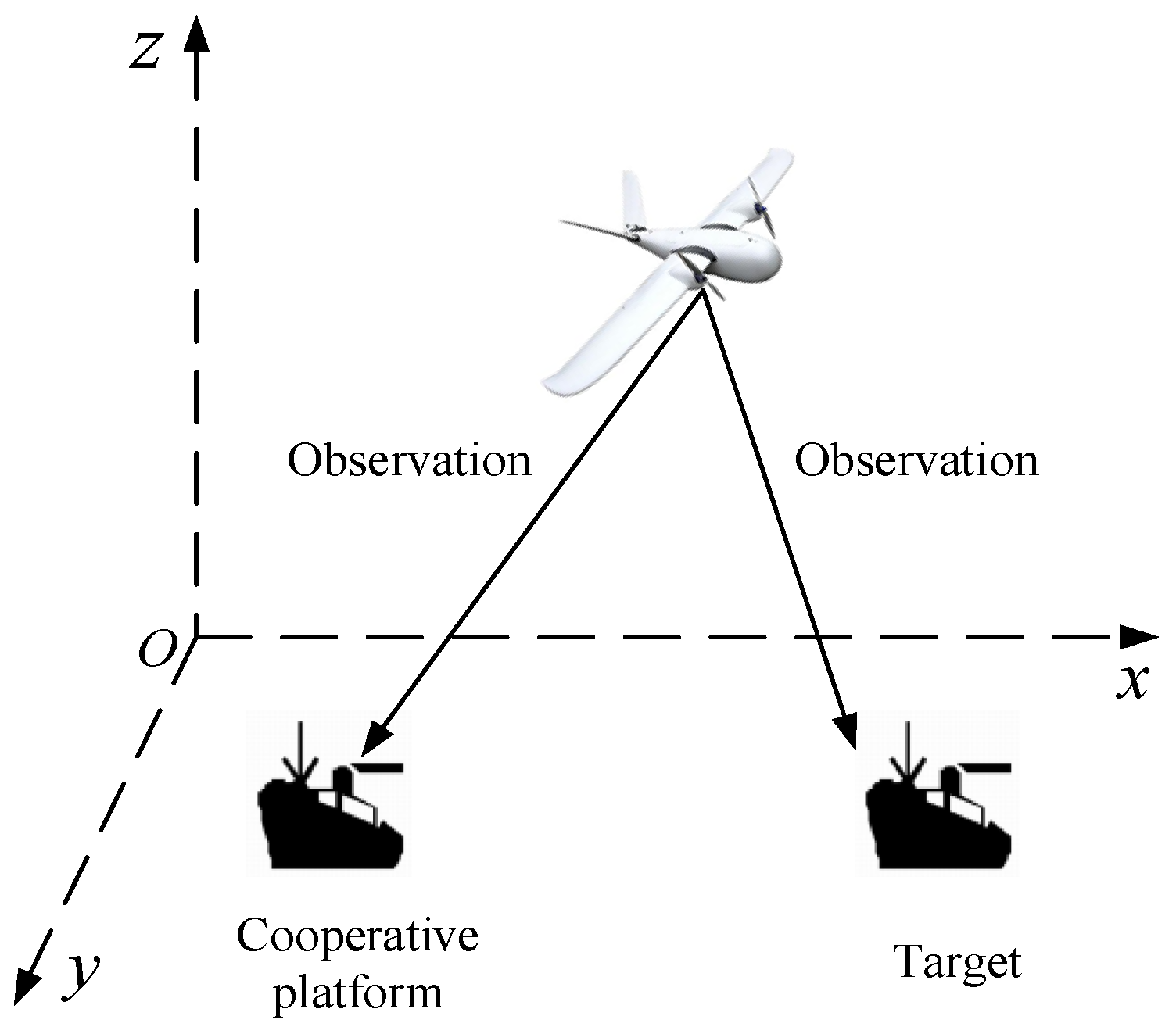

6.2. Multi-UAVs Cooperative Target Tracking and Trajectory Optimization

This section reports the simulation experiments conducted to verify the effectiveness of the proposed algorithm based on the attitude correction method when multiple UAVs track a maneuvering target. The simulation parameters were set as follows: the cooperative platform was located at (0 m, 0 m, 0 m), with the UAVs at a distance of 20 km from the cooperative platform. The UAVs flew at a fixed altitude, and the initial positions and performance parameters of the three UAVs are shown in Table 8. The errors of the UAVs’ observations of the target and the cooperative platform are shown in Table 9. The target with the initial state of

performed a right-turn maneuver of 0.2°/s from 350 s to 650 s, performed a left-turn maneuver of 0.2°/s from 850 s to 1150 s, and maintained uniform motion for the rest of the time. The initial situation of the cooperative platform, target, and UAVs is shown in Figure 11, and the total simulation time is 1500 s with a simulation interval of 0.5 s.
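The maneuver schedule described above can be reproduced with a standard coordinated-turn motion model, as in the following Python sketch. The initial state used here is a hypothetical placeholder (the actual initial state is not reproduced in this text), and the sign convention (right turn taken as a clockwise, negative turn rate in an x-y plane viewed from above) is likewise an assumption.

```python
import numpy as np

def turn_rate(t):
    """Turn rate in rad/s at time t (s); right turn assumed negative (clockwise)."""
    rate = np.deg2rad(0.2)
    if 350.0 <= t < 650.0:
        return -rate          # right-turn maneuver
    if 850.0 <= t < 1150.0:
        return rate           # left-turn maneuver
    return 0.0                # uniform straight-line motion

def propagate(state, omega, dt):
    """Advance [x, y, vx, vy] by dt with a constant turn rate omega."""
    x, y, vx, vy = state
    if abs(omega) < 1e-12:
        return np.array([x + vx * dt, y + vy * dt, vx, vy])
    s, c = np.sin(omega * dt), np.cos(omega * dt)
    return np.array([
        x + (s * vx - (1.0 - c) * vy) / omega,
        y + ((1.0 - c) * vx + s * vy) / omega,
        c * vx - s * vy,
        s * vx + c * vy,
    ])

def simulate_target(state0, total_time=1500.0, dt=0.5):
    n = int(round(total_time / dt))
    traj = np.empty((n + 1, 4))
    traj[0] = state0
    for k in range(n):
        traj[k + 1] = propagate(traj[k], turn_rate(k * dt), dt)
    return traj

# Hypothetical initial state: at the origin, moving along +x at 20 m/s.
traj = simulate_target(np.array([0.0, 0.0, 20.0, 0.0]))
```

With these settings each maneuver rotates the heading by 0.2°/s × 300 s = 60°, so the left turn exactly undoes the right turn and the target ends on a course parallel to its initial one.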

Under the target maneuvering conditions mentioned above, the IMM algorithm uses three models to estimate the state: uniform motion model, acceleration model, and turning model. The initial values of the model probability

, and the state transition matrix is

The initial control sequence for the RHO method is

, and the relevant simulation parameters are shown in

Table 10.
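For readers unfamiliar with the IMM structure, the sketch below shows the two probability computations that tie the three models together: the mixing step before filtering and the model probability update after filtering. The initial model probabilities and the transition matrix in the usage lines are hypothetical placeholders, not the values used in the paper, and the per-model filters themselves are omitted.

```python
import numpy as np

def imm_mixing(mu, transition):
    """Predicted model probabilities and mixing weights.

    mu:         current model probabilities, shape (M,)
    transition: Markov matrix with transition[i, j] = P(model j | model i)
    Returns c (M,) and mix (M, M) with mix[i, j] = P(model i at k-1 | model j at k).
    """
    c = transition.T @ mu
    mix = (transition * mu[:, None]) / c[None, :]
    return c, mix

def imm_update(c, likelihoods):
    """Posterior model probabilities from the per-model measurement likelihoods."""
    posterior = c * likelihoods
    return posterior / posterior.sum()

# Hypothetical values for three models: uniform motion, acceleration, turning.
mu0 = np.array([1.0, 1.0, 1.0]) / 3.0
transition = np.array([[0.90, 0.05, 0.05],
                       [0.05, 0.90, 0.05],
                       [0.05, 0.05, 0.90]])
c, mix = imm_mixing(mu0, transition)
mu1 = imm_update(c, likelihoods=np.array([0.1, 0.1, 1.0]))
```

In the usage lines the third (turning) model is given the highest likelihood, so its posterior probability dominates; during a turn maneuver this is the mechanism by which the IMM estimate shifts toward the turning model.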

- (a) Scenario 1 of cooperative target tracking

This section discusses the situation of the cooperative target tracking by UAV1 and UAV2. The systematic errors and random errors of the UAVs’ attitude angles are both (0.1°, 0.1°, 0.1°). Using the cooperative target tracking and trajectory optimization presented in Section 5, the simulation results are shown in Figures 12-16.

Figure 12 shows the trajectory of the UAVs optimized by the RHO method, and Figure 13 depicts the variation curve of the angle between the lines of sight from the UAVs to the target. It can be seen that, for the maneuvering target, the angle between the lines of sight from the two UAVs to the target approaches 90 degrees, indicating that the UAVs are in an optimized observation position. This is consistent with a previous theoretical analysis [43], demonstrating the effective optimization of the UAVs’ trajectory using the RHO method.
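The quantity discussed here can be computed directly from the platform and target positions, as in this short Python sketch. Whether the paper evaluates the angle in three dimensions or in the horizontal plane is not stated, so the optional horizontal projection and the function name are assumptions made for illustration.

```python
import numpy as np

def los_intersection_angle_deg(p_uav_a, p_uav_b, p_target, horizontal_only=False):
    """Angle (degrees) between the lines of sight from two UAVs to the target."""
    a = np.asarray(p_target, float) - np.asarray(p_uav_a, float)
    b = np.asarray(p_target, float) - np.asarray(p_uav_b, float)
    if horizontal_only:
        a, b = a[:2], b[:2]      # project onto the horizontal plane
    cos_angle = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
```

For example, UAVs at (10 km, 0, 0) and (0, 10 km, 0) observing a target at the origin give an intersection angle of 90 degrees, the geometry toward which the optimized trajectories converge.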

The estimated trajectories of the maneuvering target are presented in Figure 14. It can be seen from Figure 14 that the target trajectory estimated by a single UAV without the attitude correction algorithm deviates greatly from the real target trajectory, while the trajectory estimated by a single UAV with the attitude correction algorithm is relatively close to the real target trajectory. Compared with the single UAV’s observation, the target trajectory of two UAVs’ cooperative estimation based on the attitude correction algorithm is closer to the real trajectory.

Figure 15 and Figure 16 show the RMSE of the target position and velocity after 200 Monte Carlo simulations, respectively. As shown in Figure 15 and Figure 16, the fusion of the estimation algorithms of two UAVs based on the attitude correction algorithm can significantly improve the tracking accuracy. The comparison parameters in Table 11 also confirm this conclusion.

- (b) Scenario 2 of cooperative target tracking

To illustrate the adaptability of the algorithm, this section reports a simulation conducted to examine the effectiveness of the fusion estimation algorithm of two UAVs based on the attitude correction proposed in this paper when the attitude angle error is large. The systematic error and random error of the UAVs’ attitude angles were both (0.2°, 0.2°, 0.2°), and the remaining simulation parameters were the same as those of Section 6.2(a). The simulation results are shown in Figures 17-19 and Table 12.

As shown in Figures 17-19 and Table 12, the attitude correction algorithm proposed in this paper can effectively improve the target localization accuracy in the case of large attitude angle errors. Moreover, compared to the results of the single UAV’s estimated target state based on the attitude correction algorithm, the fusion of the estimation algorithms of the two UAVs based on the attitude correction can significantly improve the target detection accuracy. A comparison of Table 11 and Table 12 shows that the target tracking accuracy obtained by the attitude correction algorithm under different attitude angle errors is almost the same, indicating that the proposed algorithm can effectively eliminate the influence of attitude angle errors on tracking accuracy.

- (c) Scenario 3 of cooperative target tracking

To evaluate the effectiveness of the multi-UAV cooperative tracking algorithm, this section discusses the scenario in which UAV 1, UAV 2, and UAV 3 cooperatively tracked a target; UAV 3 was added based on the framework established in

Section 6.2(b). The attitude angle system error and random error of UAV 3 were both (0.2°, 0.2°, 0.2°). The initial position and performance of UAV 3 are shown in

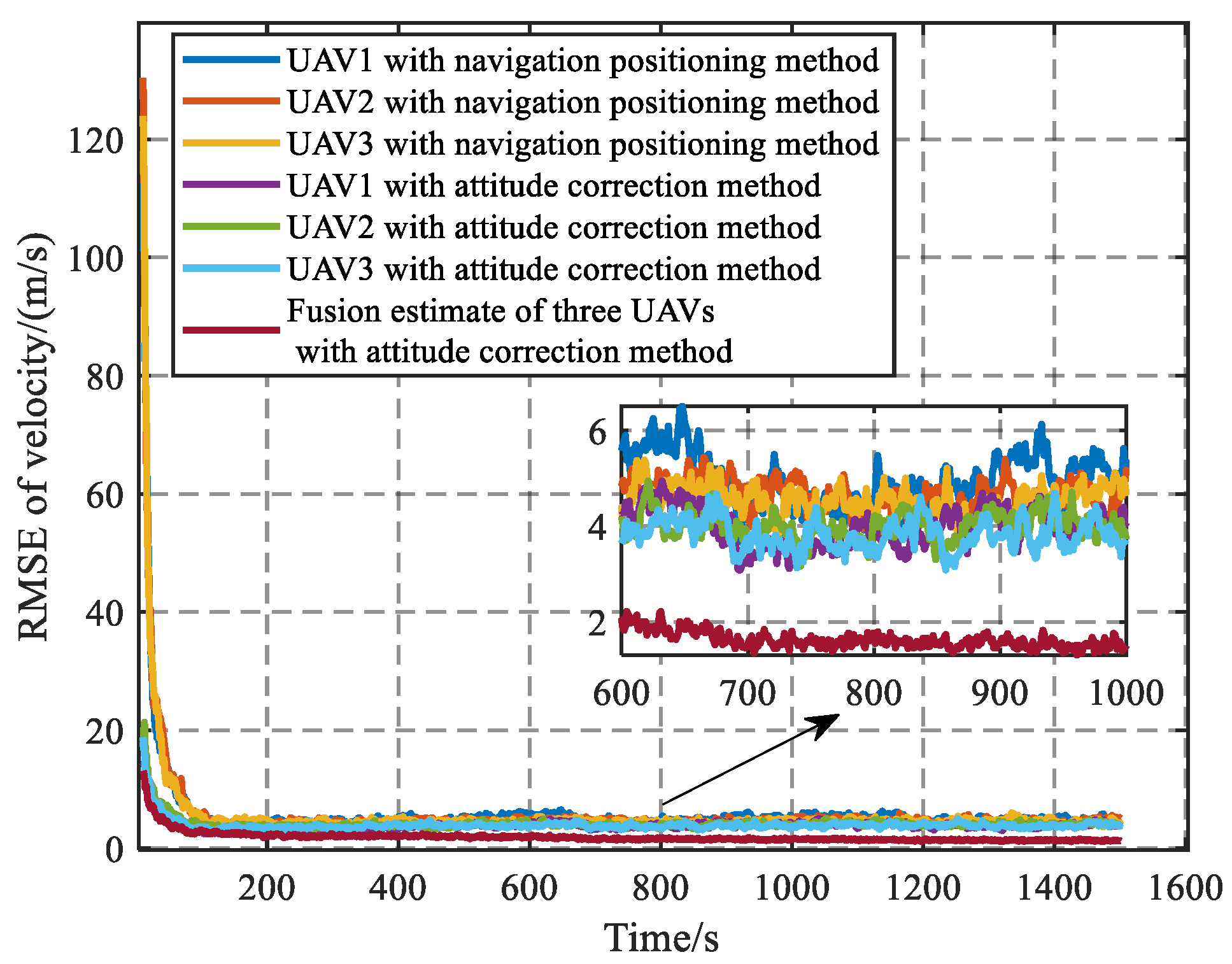

Table 13. The other simulation parameters were consistent with those in 6.2(b), and the simulation results are shown in

Figure 20,

Figure 21,

Figure 22,

Figure 23,

Figure 24 and

Figure 25.

As shown in Figures 20-22, the UAV trajectories optimized by the RHO method allow the UAVs to form an optimized observation configuration while satisfying the angular velocity constraints (as shown in Figure 21), in which the angles between the lines of sight from the three UAVs to the target are maintained at 60 degrees or 120 degrees. This observation configuration is very conducive to cooperative detection.

Figures 23-25 and Table 13 show that the proposed algorithm can effectively improve the detection accuracy. A comparison between Table 12 and Table 13 shows that, compared to the fusion estimation of two UAVs based on the attitude correction algorithm, the fusion estimation of three UAVs improves the position accuracy of the target from 24.1 m to 22.5 m and the velocity accuracy from 2.35 m/s to 1.91 m/s. The detection accuracy is improved to a certain extent, but the improvement is not significant.

In summary, combining Section 6.2(a), Section 6.2(b), and Section 6.2(c) shows that the proposed algorithm can effectively optimize the observation trajectory of the UAV, reduce the influence of UAV attitude angle errors on observation accuracy, and improve the cooperative tracking accuracy of the target. The simulation results verify the effectiveness of the proposed method.