A General Method for Pre-Flight Preparation in Data Collection for Unmanned Aerial Vehicle-Based Bridge Inspection

Abstract

1. Introduction

2. Methodology

2.1. Inspection Purpose and Hardware Selection

2.2. Flight Path Planning

- Insignificant: crack width less than 0.1016 mm (prestressed) or 0.3048 mm (reinforced), or medium-width cracks that have been sealed.

- Medium: crack width from 0.1016 to 0.2286 mm (prestressed) or from 0.3048 to 1.27 mm (reinforced).

- Wide: crack width greater than 0.2286 mm (prestressed) or 1.27 mm (reinforced).
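
The three crack-width classes above reduce to a threshold lookup keyed by member type. The sketch below is a minimal encoding of those bullets; the function and dictionary names are hypothetical, and treating both ends of the medium range as inclusive is an assumption, since the list does not say which class an exact boundary value belongs to.

```python
# Crack-width thresholds (mm) from the classification above, keyed by member
# type: (upper bound of "insignificant", upper bound of "medium").
THRESHOLDS_MM = {
    "prestressed": (0.1016, 0.2286),
    "reinforced": (0.3048, 1.27),
}


def classify_crack(width_mm: float, member: str, sealed: bool = False) -> str:
    """Return 'insignificant', 'medium', or 'wide' for a measured crack width.

    Assumption: both ends of the medium range are inclusive.
    """
    if width_mm < 0:
        raise ValueError("crack width must be non-negative")
    low, high = THRESHOLDS_MM[member]
    if width_mm < low:
        return "insignificant"
    if width_mm <= high:
        # Medium-width cracks that have been sealed count as insignificant.
        return "insignificant" if sealed else "medium"
    return "wide"


if __name__ == "__main__":
    for w in (0.05, 0.15, 0.51, 1.53):
        print(w, classify_crack(w, "prestressed"), classify_crack(w, "reinforced"))
```

The `sealed` flag only affects the medium band, mirroring the wording of the first bullet.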

2.3. Camera Calibration

3. Case Studies and Results

3.1. Inspection Purpose and Hardware Selection

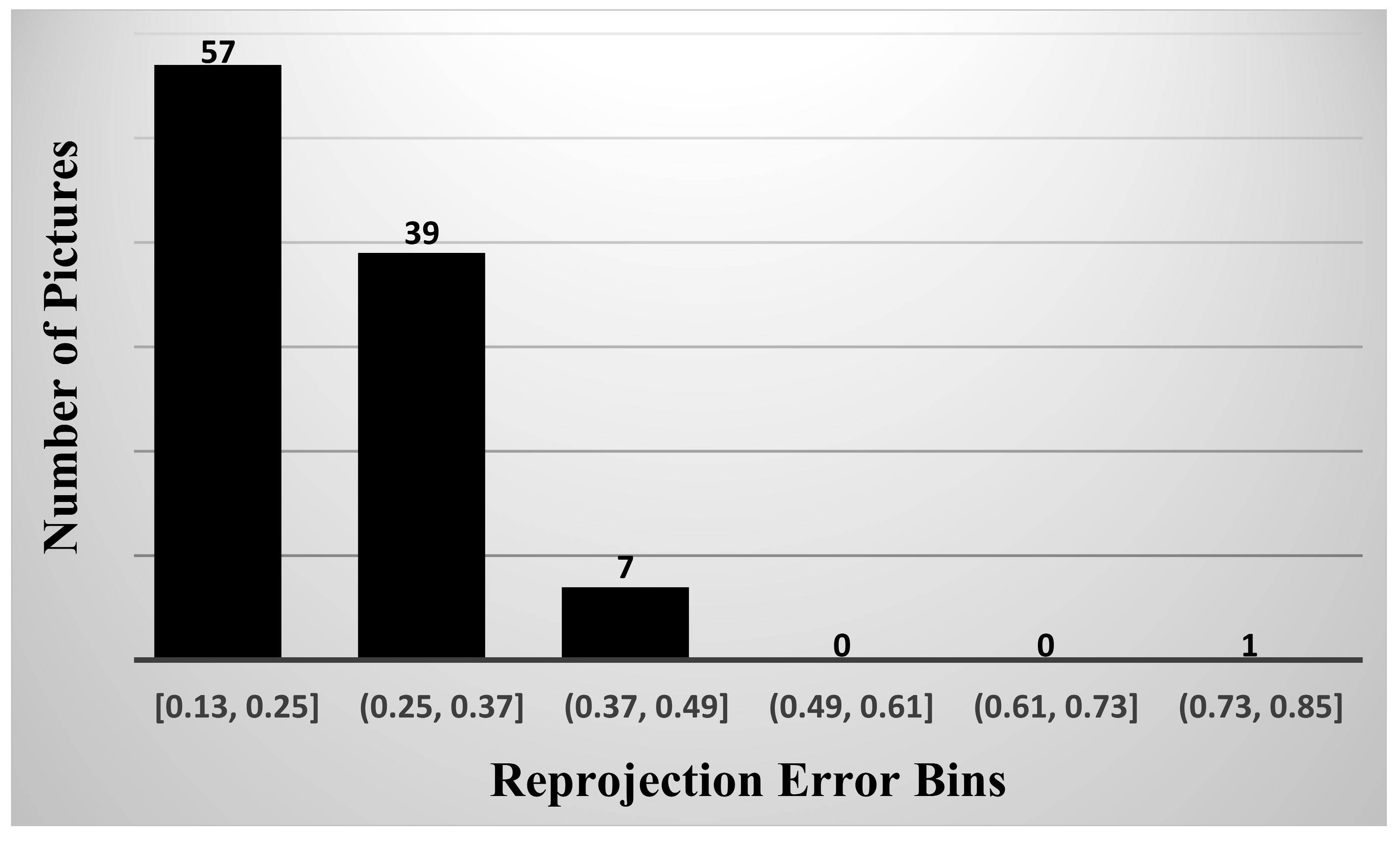

3.2. In-Lab Calibration

3.3. Flight Path Planning

3.4. On-Site Camera Calibration

3.5. Validation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Feroz, S.; Abu Dabous, S. UAV-Based Remote Sensing Applications for Bridge Condition Assessment. Remote Sens. 2021, 13, 1809.

2. Black, A.P. 2022 Bridge Report; American Road & Transportation Builders Association. 2022. Available online: https://artbabridgereport.org/reports/2022-ARTBA-Bridge-Report.pdf (accessed on 1 January 2020).

3. Zhang, Q.; Ro, S.H.; Wan, Z.; Babanajad, S.; Braley, J.; Barri, K.; Alavi, A.H. Automated Unmanned Aerial Vehicle-Based Bridge Deck Delamination Detection and Quantification. Transp. Res. Rec. J. Transp. Res. Board 2023, 2677, 036119812311554.

4. Xiang, T.-Z.; Xia, G.-S.; Zhang, L. Mini-Unmanned Aerial Vehicle-Based Remote Sensing: Techniques, applications, and prospects. IEEE Geosci. Remote Sens. Mag. 2019, 7, 29–63.

5. Duque, L.; Seo, J.; Wacker, J. Bridge Deterioration Quantification Protocol Using UAV. J. Bridge Eng. 2018, 23, 04018080.

6. Aliyari, M.; Droguett, E.L.; Ayele, Y.Z. UAV-Based Bridge Inspection via Transfer Learning. Sustainability 2021, 13, 11359.

7. Mohan, A.; Poobal, S. Crack detection using image processing: A critical review and analysis. Alex. Eng. J. 2018, 57, 787–798.

8. Deng, J.; Singh, A.; Zhou, Y.; Lu, Y.; Lee, V.C.-S. Review on computer vision-based crack detection and quantification methodologies for civil structures. Constr. Build. Mater. 2022, 356, 129238.

9. Li, H.; Wang, W.; Wang, M.; Li, L.; Vimlund, V. A review of deep learning methods for pixel-level crack detection. J. Traffic Transp. Eng. (Engl. Ed.) 2022, 9, 945–968.

10. Sreenath, S.; Malik, H.; Husnu, N.; Kalaichelavan, K. Assessment and Use of Unmanned Aerial Vehicle for Civil Structural Health Monitoring. Procedia Comput. Sci. 2020, 170, 656–663.

11. Dorafshan, S.; Thomas, R.J.; Coopmans, C.; Maguire, M. A Practitioner’s Guide to Small Unmanned Aerial Systems for Bridge Inspection. Infrastructures 2019, 4, 72.

12. Almasi, P.; Premadasa, R.; Rouhbakhsh, S.; Xiao, Y.; Wan, Z.; Zhang, Q. A Review of Developments and Challenges of Preflight Preparation for Data Collection of UAV-based Infrastructure Inspection. CTCSE 2024, 10.

13. Yanagi, H.; Chikatsu, H. Camera Calibration in 3d Modelling for UAV Application. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2015, XL-4/W5, 223–226.

14. Cramer, M.; Przybilla, H.-J.; Zurhorst, A. UAV Cameras: Overview and Geometric Calibration Benchmark. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2017, XLII-2/W6, 85–92.

15. Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091.

16. Ameli, Z.; Aremanda, Y.; Friess, W.A.; Landis, E.N. Impact of UAV Hardware Options on Bridge Inspection Mission Capabilities. Drones 2022, 6, 64.

17. Mahama, E.; Karimoddini, A.; Khan, M.A.; Cavalline, T.L.; Hewlin, R.L.; Smith, E.; Homaifar, A. Testing and Evaluating the Impact of Illumination Levels on UAV-assisted Bridge Inspection. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–8.

18. Nasimi, R.; Moreu, F.; Fricke, G.M. Sensor Equipped UAS for Non-Contact Bridge Inspections: Field Application. Sensors 2023, 23, 470.

19. Kaveh, A.; Almasi, P.; Khodagholi, A. Optimum Design of Castellated Beams Using Four Recently Developed Meta-heuristic Algorithms. Iran J. Sci. Technol. Trans. Civ. Eng. 2023, 47, 713–725.

20. Kaveh, A.; Zaerreza, A. Shuffled Shepherd Optimization Method: A New Meta-Heuristic Algorithm. In Structural Optimization Using Shuffled Shepherd Meta-Heuristic Algorithm; Studies in Systems, Decision and Control; Springer Nature: Cham, Switzerland, 2023; Volume 463, pp. 11–52.

21. Jung, S.; Song, S.; Kim, S.; Park, J.; Her, J.; Roh, K.; Myung, H. Toward Autonomous Bridge Inspection: A framework and experimental results. In Proceedings of the 2019 16th International Conference on Ubiquitous Robots (UR), Jeju, Republic of Korea, 24–27 June 2019; pp. 208–211.

22. Bolourian, N.; Hammad, A. LiDAR-equipped UAV path planning considering potential locations of defects for bridge inspection. Autom. Constr. 2020, 117, 103250.

23. Debus, P.; Rodehorst, V. Multi-scale Flight Path Planning for UAS Building Inspection. In Proceedings of the 18th International Conference on Computing in Civil and Building Engineering; Toledo Santos, E., Scheer, S., Eds.; Lecture Notes in Civil Engineering; Springer International Publishing: Cham, Switzerland, 2021; Volume 98, pp. 1069–1085.

24. Morgenthal, G.; Hallermann, N.; Kersten, J.; Taraben, J.; Debus, P.; Helmrich, M.; Rodehorst, V. Framework for automated UAS-based structural condition assessment of bridges. Autom. Constr. 2019, 97, 77–95.

25. Liu, Y.; Nie, X.; Fan, J.; Liu, X. Image-based crack assessment of bridge piers using unmanned aerial vehicles and three-dimensional scene reconstruction. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 511–529.

26. Li, H.; Chen, Y.; Liu, J.; Zhang, Z.; Zhu, H. Unmanned Aircraft System Applications in Damage Detection and Service Life Prediction for Bridges: A Review. Remote Sens. 2022, 14, 4210.

27. Kim, I.-H.; Yoon, S.; Lee, J.H.; Jung, S.; Cho, S.; Jung, H.-J. A Comparative Study of Bridge Inspection and Condition Assessment between Manpower and a UAS. Drones 2022, 6, 355.

28. Toriumi, F.Y.; Bittencourt, T.N.; Futai, M.M. UAV-based inspection of bridge and tunnel structures: An application review. Rev. IBRACON Estrut. Mater. 2023, 16, e16103.

29. Mohsan, S.A.H.; Othman, N.Q.H.; Li, Y.; Alsharif, M.H.; Khan, M.A. Unmanned aerial vehicles (UAVs): Practical aspects, applications, open challenges, security issues, and future trends. Intel. Serv. Robot. 2023, 16, 109–137.

30. Chan, B.; Guan, H.; Jo, J.; Blumenstein, M. Towards UAV-based bridge inspection systems: A review and an application perspective. Struct. Monit. Maint. 2015, 2, 283–300.

31. Dorafshan, S.; Campbell, L.E.; Maguire, M.; Connor, R.J. Benchmarking Unmanned Aerial Systems-Assisted Inspection of Steel Bridges for Fatigue Cracks. Transp. Res. Rec. 2021, 2675, 154–166.

32. Xu, Y.; Turkan, Y. BrIM and UAS for bridge inspections and management. ECAM 2019, 27, 785–807.

33. Greenwood, W.W.; Lynch, J.P.; Zekkos, D. Applications of UAVs in Civil Infrastructure. J. Infrastruct. Syst. 2019, 25, 04019002.

34. Dorafshan, S.; Maguire, M. Bridge inspection: Human performance, unmanned aerial systems and automation. J. Civil. Struct. Health Monit. 2018, 8, 443–476.

35. Kalaitzakis, M. Uncrewed Aircraft Systems for Autonomous Infrastructure Inspection. Ph.D. Thesis, University of South Carolina, Columbia, SC, USA, 2022.

36. Seo, J.; Duque, L.; Wacker, J. Drone-enabled bridge inspection methodology and application. Autom. Constr. 2018, 94, 112–126.

37. Wells, J.; Lovelace, B.; Collins Engineers, Inc. Unmanned Aircraft System Bridge Inspection Demonstration Project Phase II Final Report; MN/RC 2017-18; June 2017. Available online: https://rosap.ntl.bts.gov/view/dot/32636 (accessed on 2 January 2017).

38. Phung, M.D.; Hoang, V.T.; Dinh, T.H.; Ha, Q. Automatic Crack Detection in Built Infrastructure Using Unmanned Aerial Vehicles. arXiv 2017, arXiv:1707.09715.

39. Dorafshan, S.; Thomas, R.J.; Maguire, M. Fatigue Crack Detection Using Unmanned Aerial Systems in Fracture Critical Inspection of Steel Bridges. J. Bridge Eng. 2018, 23, 04018078.

40. Omar, T.; Nehdi, M.L. Remote sensing of concrete bridge decks using unmanned aerial vehicle infrared thermography. Autom. Constr. 2017, 83, 360–371.

41. Escobar-Wolf, R.; Oommen, T.; Brooks, C.N.; Dobson, R.J.; Ahlborn, T.M. Unmanned Aerial Vehicle (UAV)-Based Assessment of Concrete Bridge Deck Delamination Using Thermal and Visible Camera Sensors: A Preliminary Analysis. Res. Nondestruct. Eval. 2018, 29, 183–198.

42. Canny, J.; Reif, J. New lower bound techniques for robot motion planning problems. In Proceedings of the 28th Annual Symposium on Foundations of Computer Science (sfcs 1987), Los Angeles, CA, USA, 27–29 October 1987; pp. 49–60.

43. Specifications for the National Bridge Inventory. 2022. Available online: https://link.springer.com/chapter/10.1007/978-3-030-00764-5_57 (accessed on 1 January 2020).

44. Yu, S.; Zhu, R.; Yu, L.; Ai, W. Effect of Checkerboard on the Accuracy of Camera Calibration. In Proceedings of the Advances in Multimedia Information Processing–PCM 2018: 19th Pacific-Rim Conference on Multimedia, Hefei, China, 21–22 September 2018.

45. MATLAB. The MathWorks, Inc. (R2023b). Available online: https://www.mathworks.com (accessed on 1 January 2020).

46. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Machine Intell. 2000, 22, 1330–1334.

47. Pix4D. Reprojection Error. Available online: https://support.pix4d.com/hc/en-us/articles/202559369-Reprojection-error (accessed on 1 January 2020).

| UAV Platform | Price ($) | Max Endurance (Minutes) | Payload Capacity (kg) | Related Research |
|---|---|---|---|---|
| DJI Mavic 2 | 2700 | 31 | 1 | [34] |
| Aurelia X6 Standard LE | 5700 | 45 | 5 | [35] |
| DJI Phantom 4 | 3000 | 30 | 1 | [36] |
| senseFly Albris | 2000 | 22 | N/A | [37] |
| 3DR Solo | 1000 | 15 | 1.5 | [38] |
| 3DR Iris | 750 | 22 | 0.4 | [39] |
| DJI Inspire 1 Pro | 3900 | 18 | 3.4 | [40] |
| Bergen hexacopter | 6000 | 30 | 5 | [41] |
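
The platform table compares price, endurance, and payload, the quantities one would screen against when choosing hardware for a given inspection purpose. As a hedged illustration only, the sketch below encodes the table and filters it against three requirement values; the requirement numbers in the usage line are hypothetical, the `shortlist` helper is not a procedure from the source, and treating an "N/A" payload as disqualifying is a conservative assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Platform:
    name: str
    price_usd: int
    endurance_min: int
    payload_kg: Optional[float]  # None where the table lists N/A


# Values transcribed from the platform comparison table.
PLATFORMS = [
    Platform("DJI Mavic 2", 2700, 31, 1.0),
    Platform("Aurelia X6 Standard LE", 5700, 45, 5.0),
    Platform("DJI Phantom 4", 3000, 30, 1.0),
    Platform("senseFly Albris", 2000, 22, None),
    Platform("3DR Solo", 1000, 15, 1.5),
    Platform("3DR Iris", 750, 22, 0.4),
    Platform("DJI Inspire 1 Pro", 3900, 18, 3.4),
    Platform("Bergen hexacopter", 6000, 30, 5.0),
]


def shortlist(min_endurance_min: float, min_payload_kg: float,
              budget_usd: float) -> List[Platform]:
    """Platforms meeting all three requirements, cheapest first.

    Platforms with unknown payload are excluded because the payload
    requirement cannot be confirmed from the table.
    """
    ok = [
        p for p in PLATFORMS
        if p.endurance_min >= min_endurance_min
        and p.payload_kg is not None
        and p.payload_kg >= min_payload_kg
        and p.price_usd <= budget_usd
    ]
    return sorted(ok, key=lambda p: p.price_usd)


if __name__ == "__main__":
    # Hypothetical requirements: 25 min endurance, 1 kg payload, $4000 budget.
    print([p.name for p in shortlist(25, 1.0, 4000)])
```

In practice the thresholds would follow from the inspection purpose (for example, the sensor payload it requires), which is why they are parameters rather than constants.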

| Heights (m) | Angles (°) |
|---|---|
| 0.5, 1, 1.5, 2, 2.5, 3 | 15, 30, 45, 60, 75, 90 |
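
The calibration table lists six heights and six camera angles. Assuming every height is paired with every angle (a full-factorial design, which the table layout suggests but does not state), the capture plan contains 36 poses; the sketch below simply enumerates them. The function name `capture_plan` is hypothetical.

```python
from itertools import product

# Heights (m) and camera angles (degrees) transcribed from the table above.
HEIGHTS_M = (0.5, 1.0, 1.5, 2.0, 2.5, 3.0)
ANGLES_DEG = (15, 30, 45, 60, 75, 90)


def capture_plan(heights=HEIGHTS_M, angles=ANGLES_DEG):
    """Return every (height, angle) pair, assuming a full-factorial design."""
    return list(product(heights, angles))


if __name__ == "__main__":
    plan = capture_plan()
    print(f"{len(plan)} poses, first three: {plan[:3]}")
```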

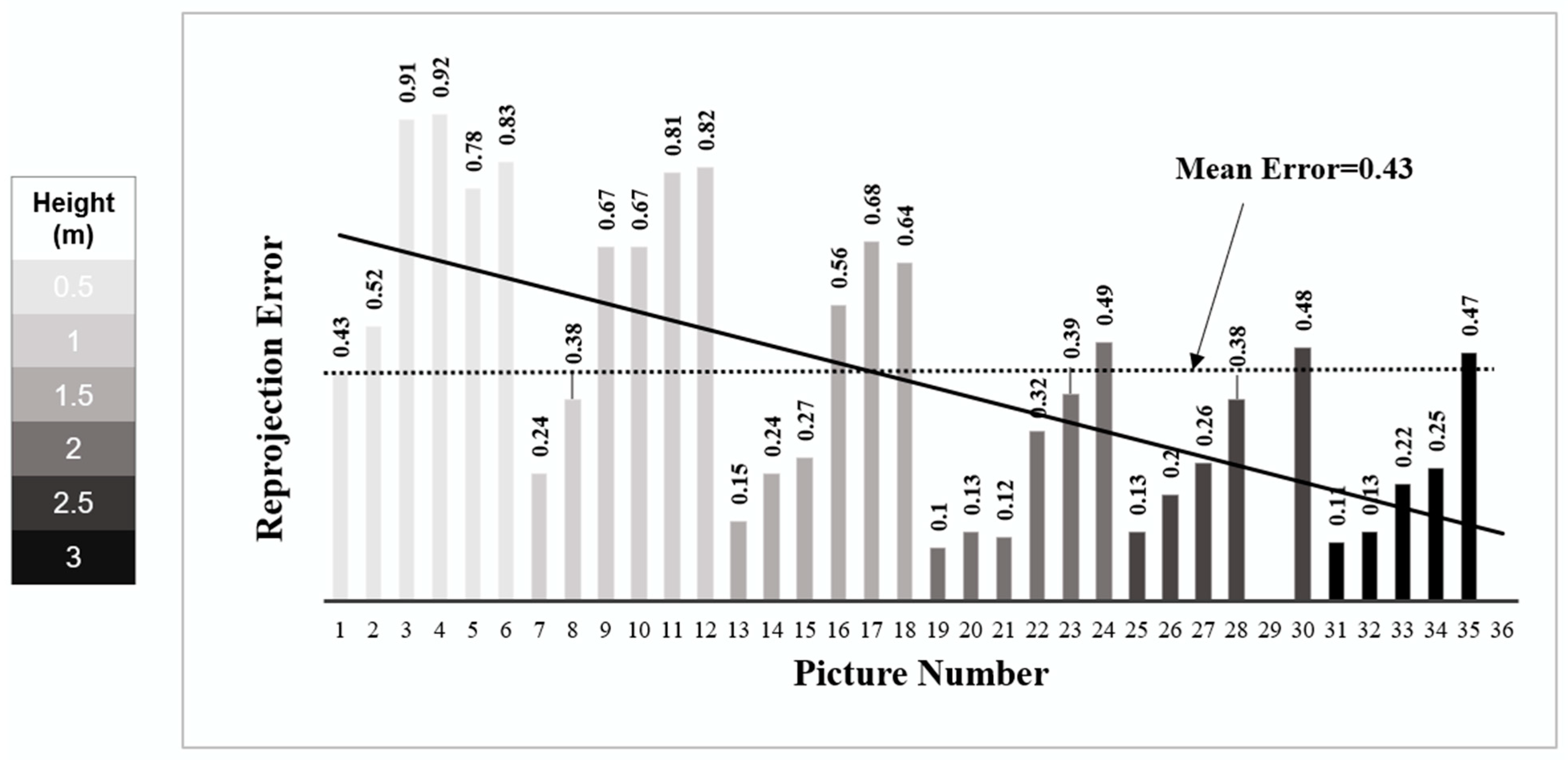

East Direction

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.43 | | | | |

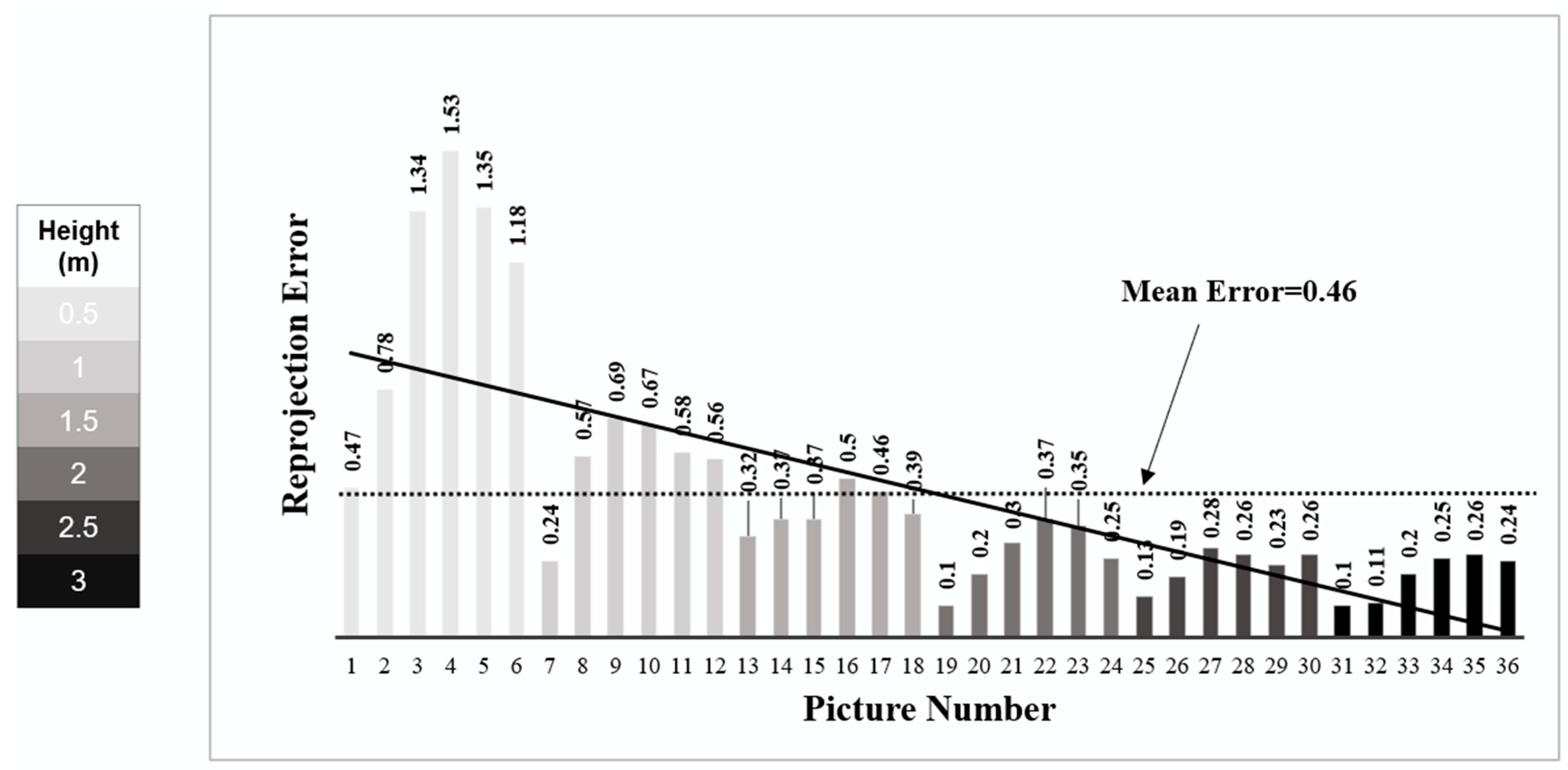

North Direction

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.46 | | | | |

South Direction

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.28 | | | | |
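
The "Overall Mean Error" in the calibration tables is, assuming it follows the usual convention (the Pix4D note in the reference list describes reprojection error this way), the average pixel distance between checkerboard corners detected in the images and the same corners reprojected through the estimated camera model. The sketch below computes that quantity for a pinhole camera with two radial distortion coefficients, the model used in Zhang's calibration method; the function names are hypothetical and this is not the MATLAB toolbox code.

```python
import math
from typing import Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def project(pt_cam: Point3, fx: float, fy: float, cx: float, cy: float,
            k1: float = 0.0, k2: float = 0.0) -> Point2:
    """Project a 3D point given in camera coordinates (Z > 0) to pixels using
    a pinhole model with two-term radial distortion."""
    X, Y, Z = pt_cam
    x, y = X / Z, Y / Z                    # normalized image coordinates
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2       # radial distortion factor
    return fx * x * d + cx, fy * y * d + cy


def mean_reprojection_error(observed: Sequence[Point2],
                            reprojected: Sequence[Point2]) -> float:
    """Average Euclidean distance, in pixels, between detected points and
    their reprojections."""
    if not observed or len(observed) != len(reprojected):
        raise ValueError("need two non-empty point lists of equal length")
    total = sum(math.dist(o, r) for o, r in zip(observed, reprojected))
    return total / len(observed)
```

In a full calibration, the observed points would come from checkerboard corner detection and the camera parameters from the calibration optimizer; here both are supplied by hand to show the computation.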

| Flight Number | Flight Height (m) | Transverse Distance (m) | Camera Angles (°) |
|---|---|---|---|
| 1 | 2 | 1 | 30, 35 |
| 2 | 2 | 2 | 30, 35 |
| 3 | 3 | 1 | 30, 35 |
| 4 | 3 | 2 | 30, 35 |

| Flight Number | Flight Height (m) | Transverse Distance (m) | Camera Angles (°) |
|---|---|---|---|
| 1 | 0 | 2 | 0 |
| 2 | 0 | 3 | 0 |
| 3 | 0 | 4 | 0 |
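
Stand-off distance sets how much surface each pixel covers. For a camera viewing a planar surface head-on (assumed here to correspond to the 0° flights), one pixel spans roughly the distance divided by the focal length in pixels, which links the transverse distances in the flight table to the focal lengths estimated during calibration. The sketch below uses that pinhole approximation; the helper names are hypothetical, and the focal-length value in the example is a placeholder, since the calibrated values are not reproduced here.

```python
def pixel_footprint_mm(distance_m: float, focal_length_px: float) -> float:
    """Approximate object-space size of one pixel (mm/pixel) for a camera
    viewing a planar surface head-on: distance / focal length in pixels."""
    if distance_m <= 0 or focal_length_px <= 0:
        raise ValueError("distance and focal length must be positive")
    return distance_m * 1000.0 / focal_length_px


def min_resolvable_width_mm(distance_m: float, focal_length_px: float,
                            pixels_across: float = 1.0) -> float:
    """Width on the surface that spans `pixels_across` pixels at this distance."""
    return pixels_across * pixel_footprint_mm(distance_m, focal_length_px)


if __name__ == "__main__":
    FOCAL_PX = 3000.0  # hypothetical; substitute the calibrated focal length
    for d in (2, 3, 4):  # transverse distances from the flight table
        print(f"{d} m -> {pixel_footprint_mm(d, FOCAL_PX):.3f} mm/pixel")
```

The footprint scales linearly with stand-off distance, so halving the distance halves the smallest width that a given number of pixels can span.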

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.25 | | | | |

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.20 | | | | |

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.29 | | | | |

| Overall Mean Error (pixels) | x Focal Length (pixels) | y Focal Length (pixels) | x Radial Distortion (pixels) | y Radial Distortion (pixels) |
|---|---|---|---|---|
| 0.14 | | | | |
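
The validation tables report crack widths "after correction". Assuming that correction removes radial lens distortion using the calibrated parameters (the procedure is not spelled out in this section), the sketch below shows one common numerical way to do it: the distortion polynomial has no closed-form inverse, so the undistorted normalized coordinates are found by fixed-point iteration. Parameter names follow the pinhole model `(fx, fy, cx, cy, k1, k2)`; the function names are hypothetical, and the fixed iteration count is an assumption that suits mild distortion, so strongly distorted lenses may need an explicit convergence check.

```python
from typing import Tuple


def undistort_normalized(xd: float, yd: float, k1: float, k2: float,
                         iterations: int = 20) -> Tuple[float, float]:
    """Invert two-term radial distortion by fixed-point iteration.

    (xd, yd) are distorted normalized coordinates, ((u - cx) / fx, (v - cy) / fy).
    """
    x, y = xd, yd  # initial guess: no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        d = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / d, yd / d  # solve x * d(x) = xd for x
    return x, y


def undistort_pixel(u: float, v: float, fx: float, fy: float, cx: float,
                    cy: float, k1: float, k2: float) -> Tuple[float, float]:
    """Map a distorted pixel (u, v) to where an ideal pinhole camera would image it."""
    x, y = undistort_normalized((u - cx) / fx, (v - cy) / fy, k1, k2)
    return fx * x + cx, fy * y + cy
```

Correcting the two crack-edge pixels this way before measuring their separation is one plausible route to corrected widths; the source may instead undistort whole images.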

| Flight | Distance (m) | Measured Width (mm) | Detected Width from Raw Images (mm) | Detected Width after Correction (mm) | Result Accuracy Improvement (%) |
|---|---|---|---|---|---|
| 1 | 2 | 0.51 | 0.71 | 0.66 | 9.80 |
| 2 | 3 | 0.51 | 0.71 | 0.62 | 17.65 |
| 3 | 4 | 0.51 | 1 | 0.9 | 19.61 |

| Flight | Distance (m) | Measured Width (mm) | Detected Width from Raw Images (mm) | Detected Width after Correction (mm) | Result Accuracy Improvement (%) |
|---|---|---|---|---|---|
| 1 | 2 | 1.53 | 1.78 | 1.67 | 7.19 |
| 2 | 3 | 1.53 | 1.77 | 1.55 | 14.38 |
| 3 | 4 | 1.53 | 2.13 | 1.80 | 21.57 |
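
The "Result Accuracy Improvement (%)" column is not defined in this section, but every row is reproduced by the reduction in absolute width error divided by the measured width. For Flight 1 in the first table: ((0.71 − 0.51) − (0.66 − 0.51)) / 0.51 = 0.05 / 0.51 ≈ 9.80%. The sketch below applies that inferred formula to all six rows and compares it with the tabulated values; the function name is hypothetical.

```python
def accuracy_improvement_pct(measured_mm: float, raw_mm: float,
                             corrected_mm: float) -> float:
    """Reduction in absolute width error, as a percentage of the measured
    width (formula inferred from the tabulated values)."""
    if measured_mm <= 0:
        raise ValueError("measured width must be positive")
    raw_error = abs(raw_mm - measured_mm)
    corrected_error = abs(corrected_mm - measured_mm)
    return 100.0 * (raw_error - corrected_error) / measured_mm


# (distance m, measured, raw, corrected, reported %) from the two tables
ROWS = [
    (2, 0.51, 0.71, 0.66, 9.80),
    (3, 0.51, 0.71, 0.62, 17.65),
    (4, 0.51, 1.00, 0.90, 19.61),
    (2, 1.53, 1.78, 1.67, 7.19),
    (3, 1.53, 1.77, 1.55, 14.38),
    (4, 1.53, 2.13, 1.80, 21.57),
]

if __name__ == "__main__":
    for dist, measured, raw, corrected, reported in ROWS:
        computed = accuracy_improvement_pct(measured, raw, corrected)
        print(f"{measured} mm at {dist} m: computed {computed:.2f}%, "
              f"reported {reported:.2f}%")
```

Normalizing by the measured width, rather than by the raw error, makes the percentages comparable across the two crack sizes.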

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Almasi, P.; Xiao, Y.; Premadasa, R.; Boyle, J.; Jauregui, D.; Wan, Z.; Zhang, Q. A General Method for Pre-Flight Preparation in Data Collection for Unmanned Aerial Vehicle-Based Bridge Inspection. Drones 2024, 8, 386. https://doi.org/10.3390/drones8080386

Almasi P, Xiao Y, Premadasa R, Boyle J, Jauregui D, Wan Z, Zhang Q. A General Method for Pre-Flight Preparation in Data Collection for Unmanned Aerial Vehicle-Based Bridge Inspection. Drones. 2024; 8(8):386. https://doi.org/10.3390/drones8080386

Chicago/Turabian Style: Almasi, Pouya, Yangjian Xiao, Roshira Premadasa, Jonathan Boyle, David Jauregui, Zhe Wan, and Qianyun Zhang. 2024. "A General Method for Pre-Flight Preparation in Data Collection for Unmanned Aerial Vehicle-Based Bridge Inspection" Drones 8, no. 8: 386. https://doi.org/10.3390/drones8080386

APA Style: Almasi, P., Xiao, Y., Premadasa, R., Boyle, J., Jauregui, D., Wan, Z., & Zhang, Q. (2024). A General Method for Pre-Flight Preparation in Data Collection for Unmanned Aerial Vehicle-Based Bridge Inspection. Drones, 8(8), 386. https://doi.org/10.3390/drones8080386